Acceleration-Aware Path Planning with Waypoints

Abstract

1. Introduction

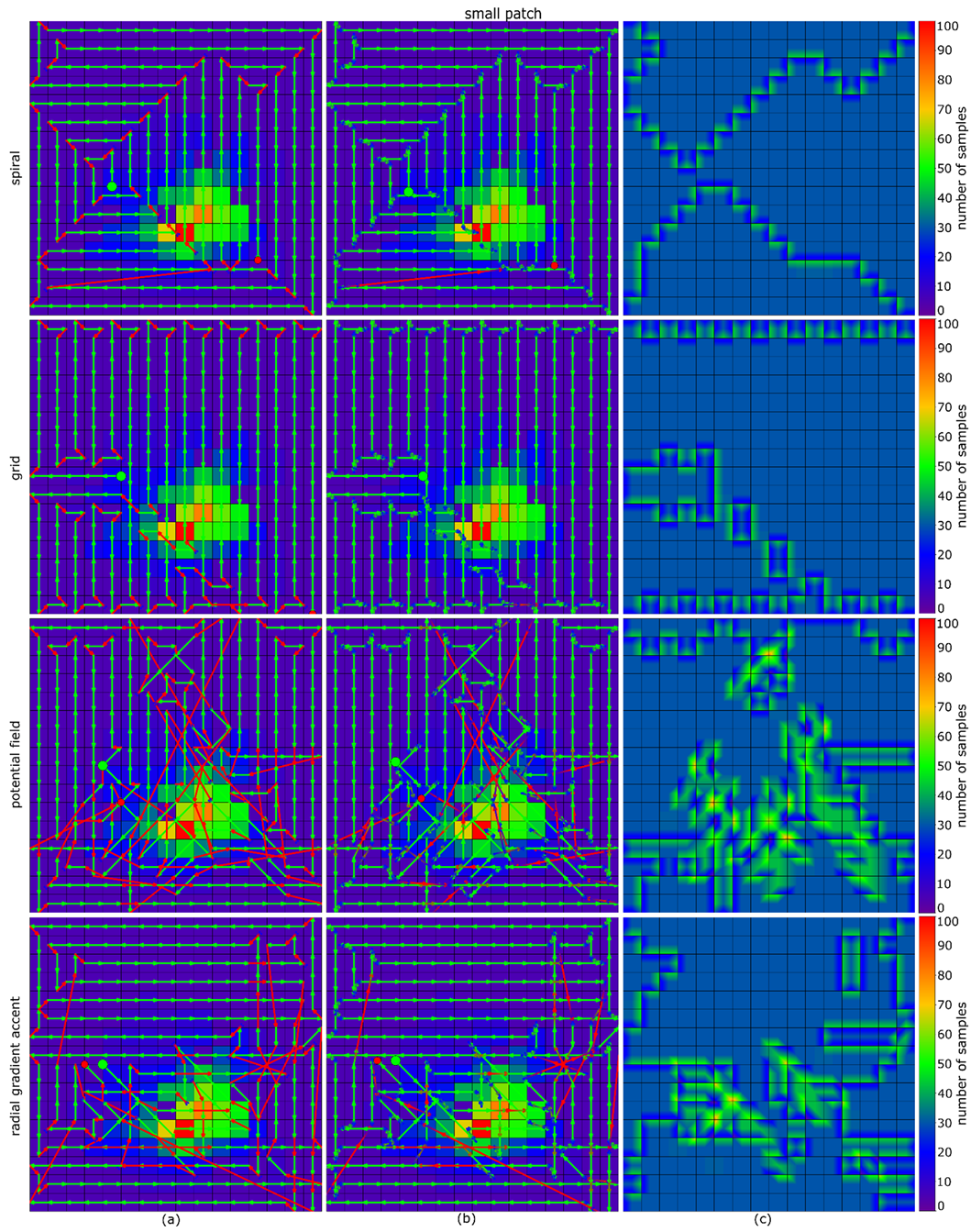

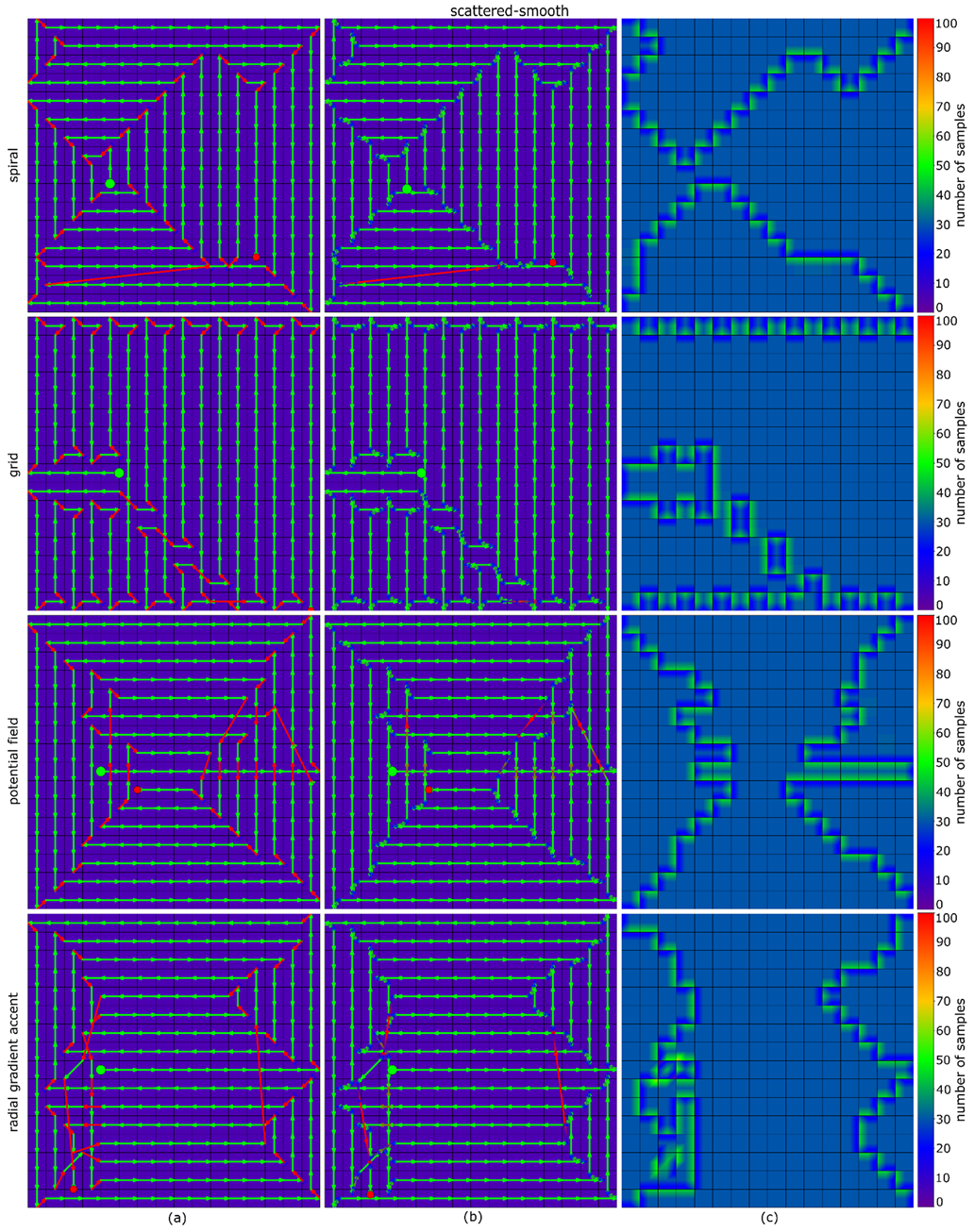

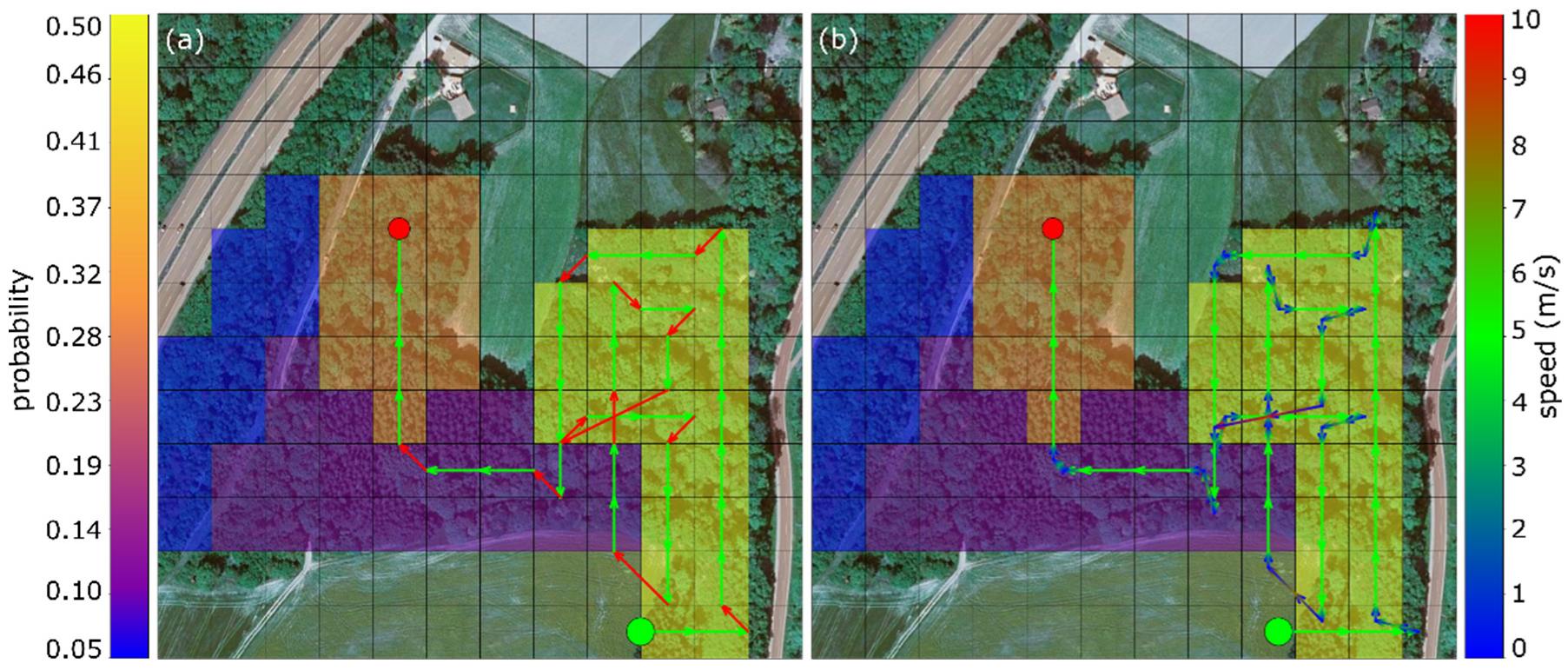

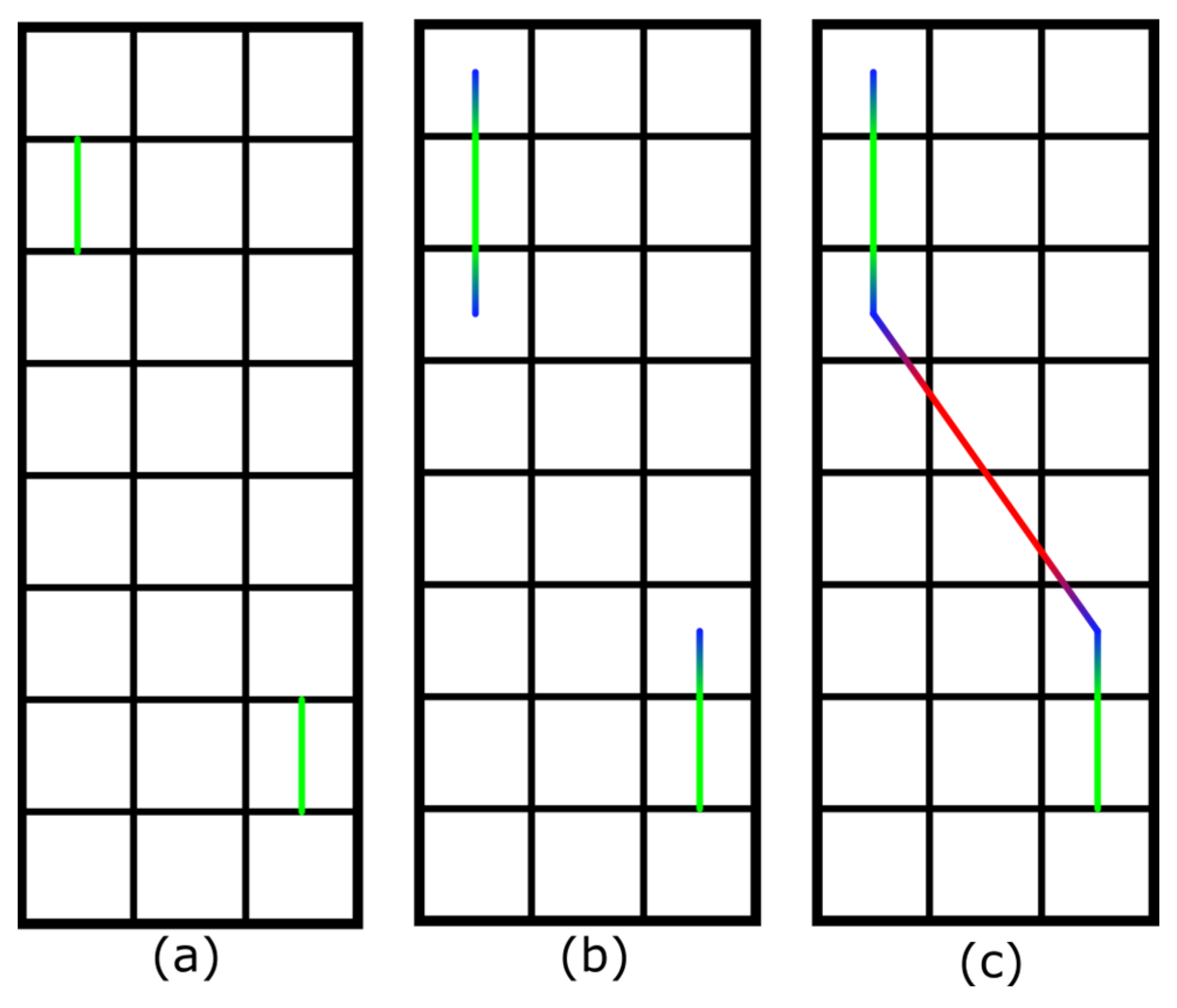

2. Materials and Methods

2.1. Acceleration-Driven Trajectory Planning

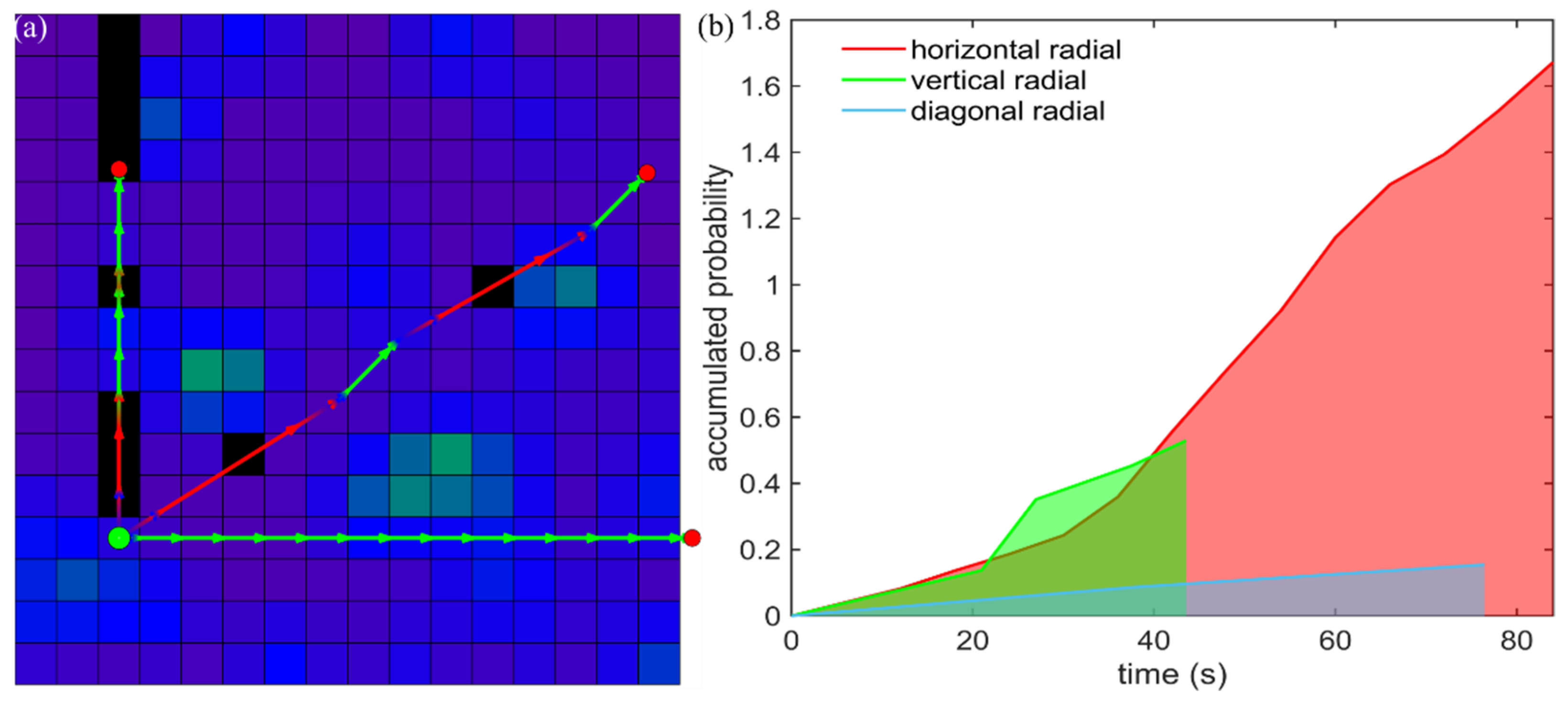
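A drone cannot change speed instantly, so the time needed to fly between two waypoints depends on its acceleration as well as on the distance. The following minimal sketch illustrates that effect. It is not the authors' model and rests on assumptions introduced here: the drone stops at every waypoint, accelerates and brakes at a constant rate `a_max`, and never exceeds a cruise speed `v_max` (a standard trapezoidal velocity profile). The function names `segment_time` and `path_time` and the numeric parameters in the usage lines are hypothetical.

```python
import math


def segment_time(distance: float, v_max: float, a_max: float) -> float:
    """Time (s) to fly a straight segment that starts and ends at rest.

    Assumption (not from the paper): symmetric trapezoidal velocity profile,
    i.e. accelerate at a_max, cruise at v_max, then brake at a_max.
    """
    if distance < 0 or v_max <= 0 or a_max <= 0:
        raise ValueError("need distance >= 0, v_max > 0 and a_max > 0")
    # Distance consumed by speeding up from 0 to v_max plus braking back to 0.
    ramp_distance = v_max ** 2 / a_max
    if distance >= ramp_distance:
        # Trapezoid: two ramps of v_max / a_max seconds plus a cruise phase.
        return 2 * v_max / a_max + (distance - ramp_distance) / v_max
    # Triangle: the segment is too short to reach v_max; the peak speed is
    # sqrt(distance * a_max), reached halfway along the segment.
    return 2 * math.sqrt(distance / a_max)


def path_time(waypoints: list[tuple[float, float]], v_max: float, a_max: float) -> float:
    """Total time for a polyline path, assuming the drone stops at every waypoint."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        total += segment_time(math.hypot(x1 - x0, y1 - y0), v_max, a_max)
    return total


# Hypothetical parameters: 10 m/s cruise speed, 2 m/s^2 acceleration.
straight = [(0.0, 0.0), (100.0, 0.0)]
stop_and_go = [(0.0, 0.0), (25.0, 0.0), (50.0, 0.0), (75.0, 0.0), (100.0, 0.0)]
print(path_time(straight, v_max=10.0, a_max=2.0))               # 15.0
print(round(path_time(stop_and_go, v_max=10.0, a_max=2.0), 2))  # 28.28
```

Under these assumptions, splitting the same 100 m straight line into four legs nearly doubles the flight time (about 28.28 s instead of 15.0 s), because every stop adds an acceleration and a braking phase that distance alone does not capture.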

2.2. Radial Gradient Ascent (RGA)

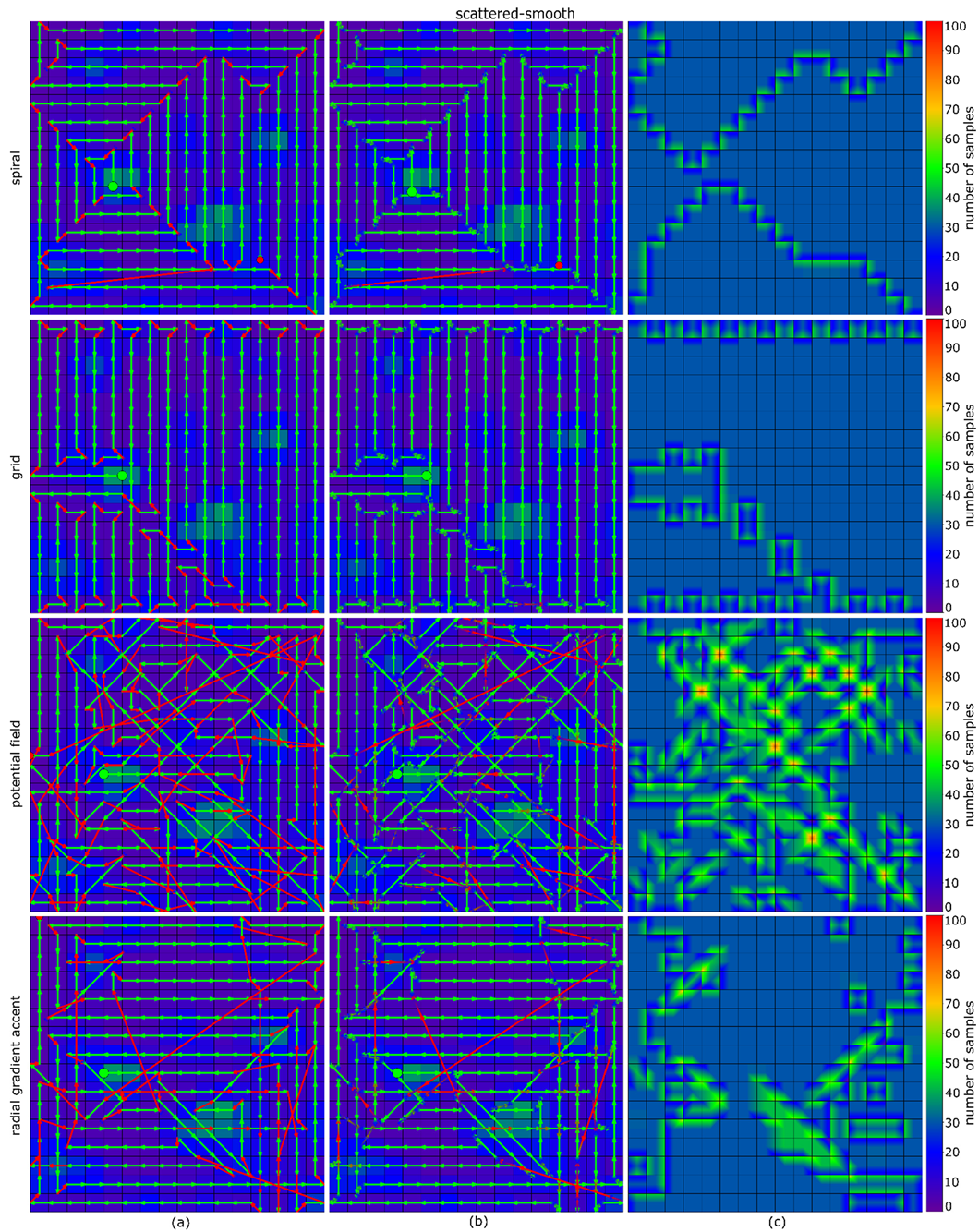

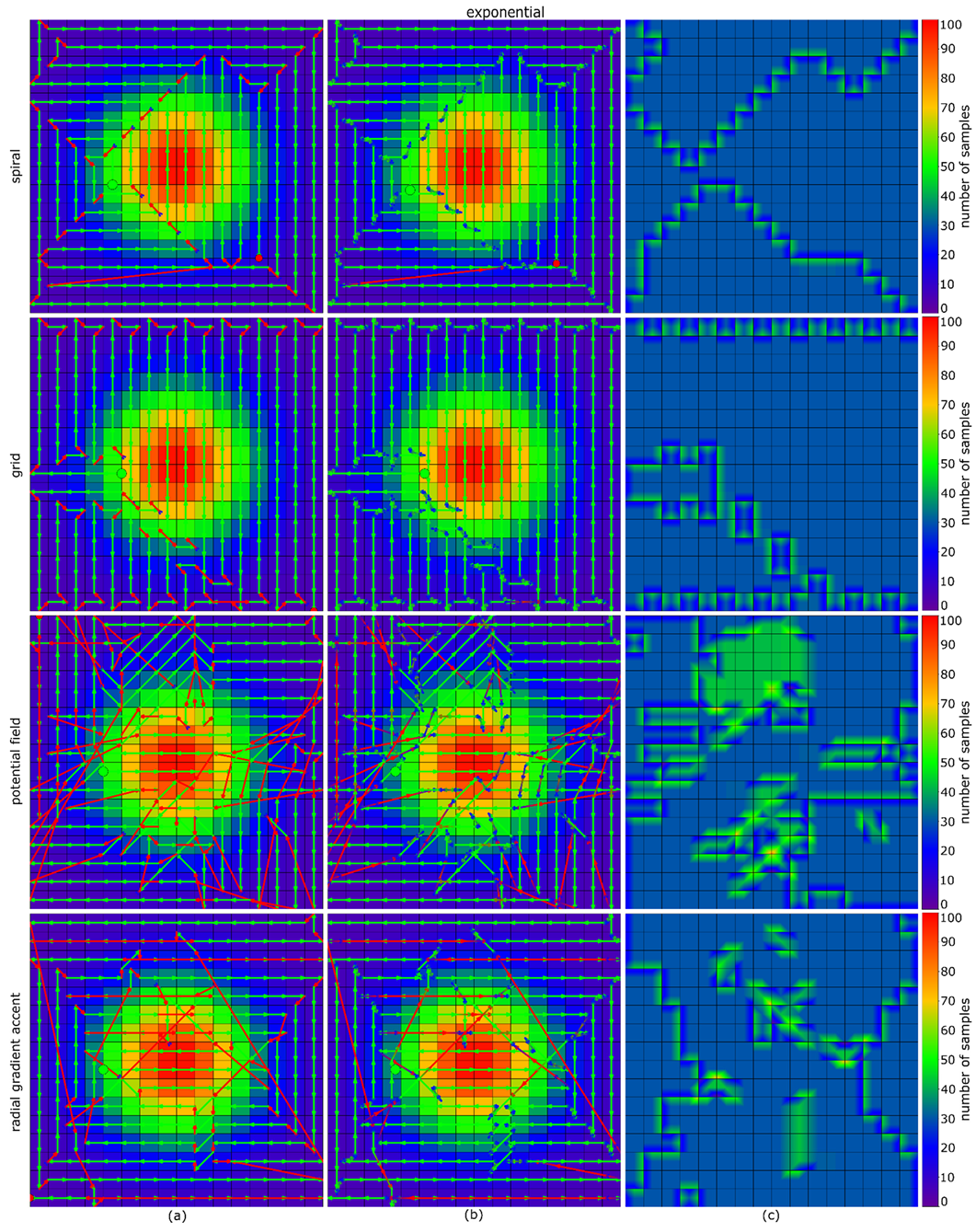

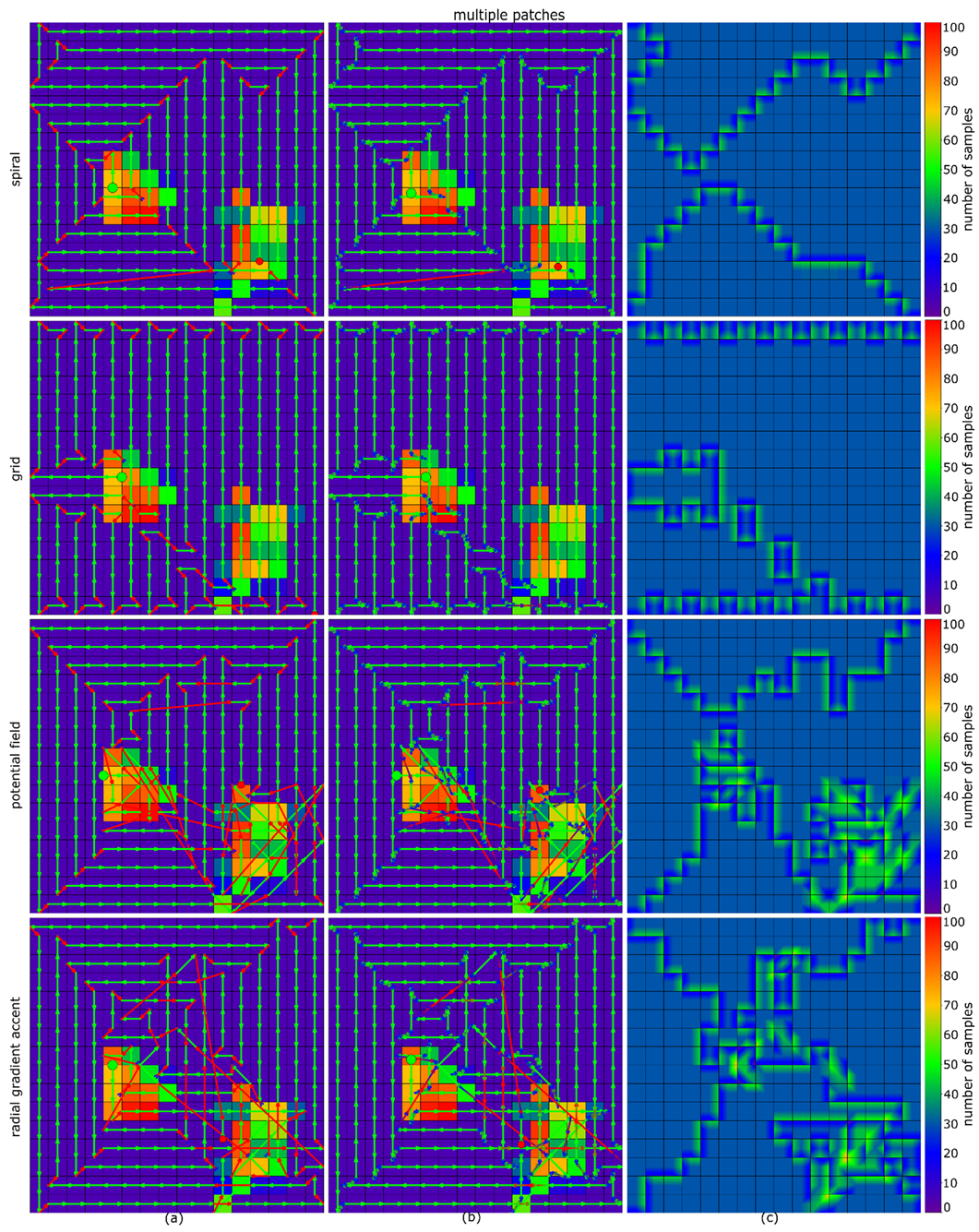

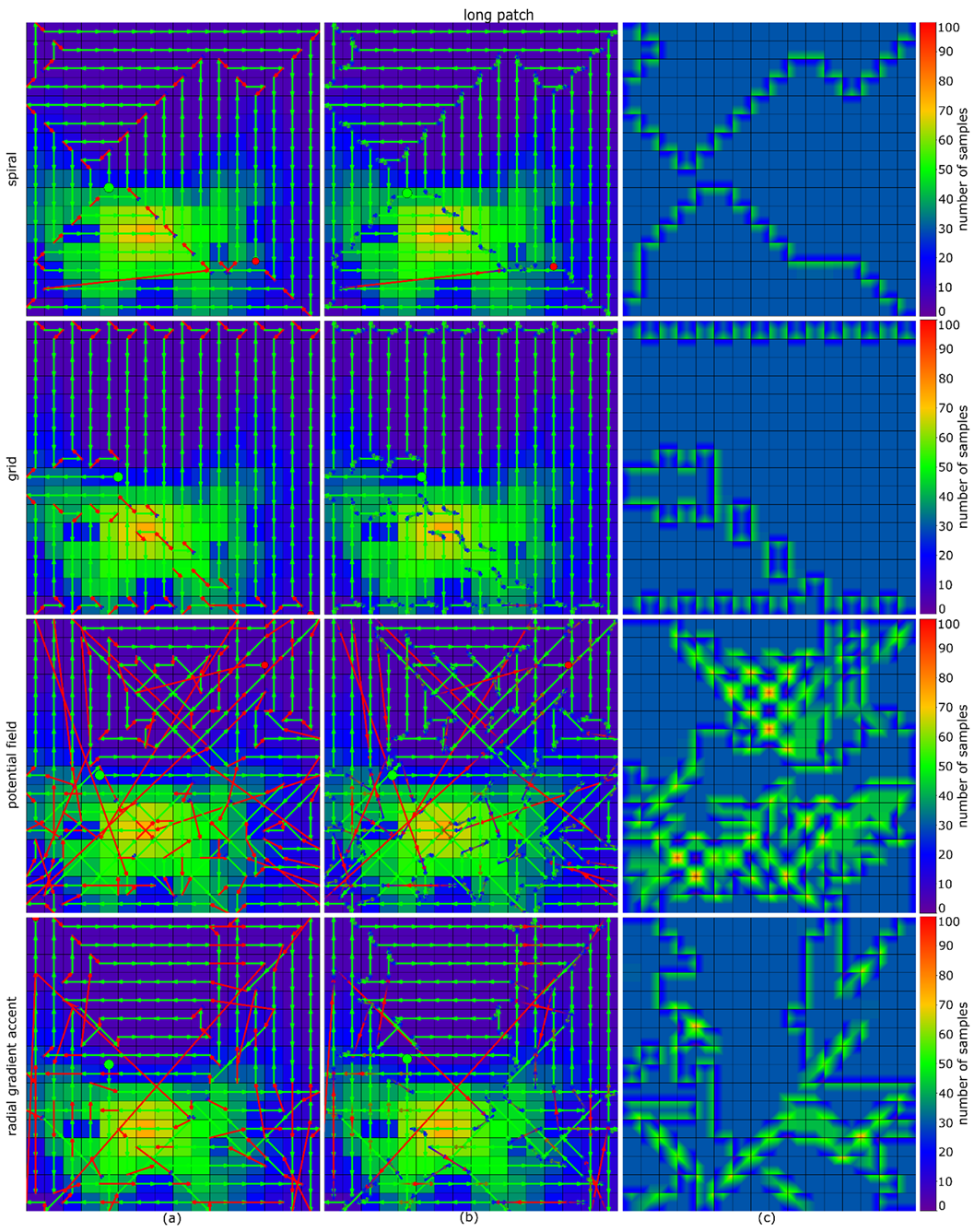

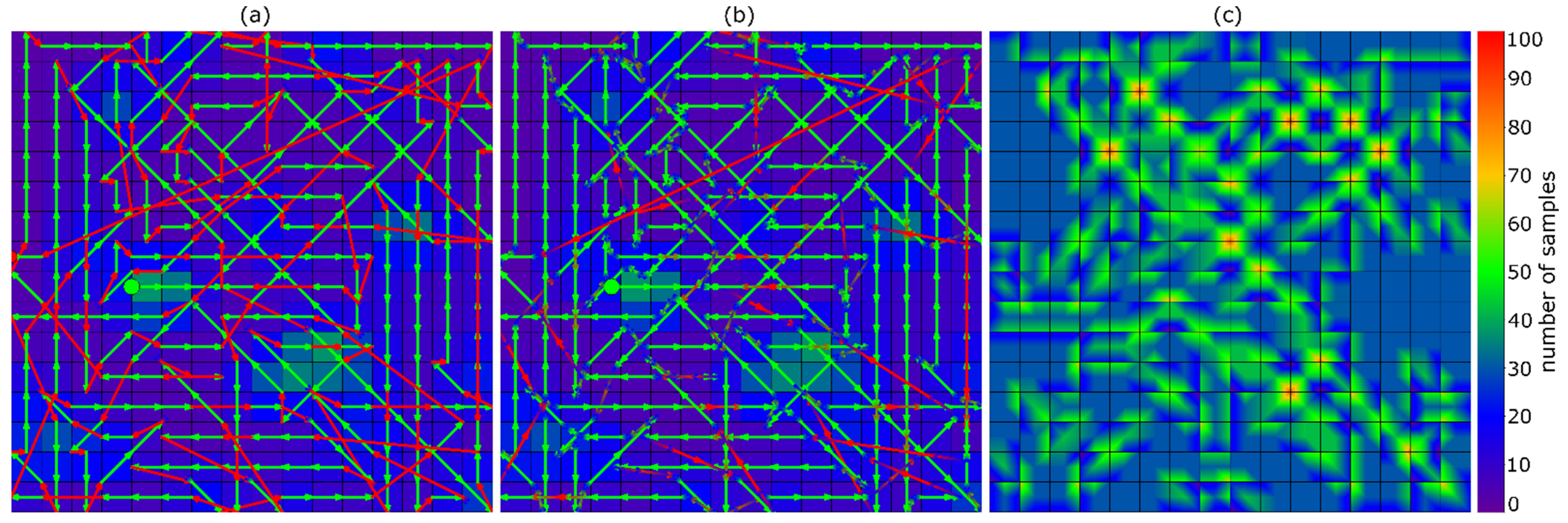

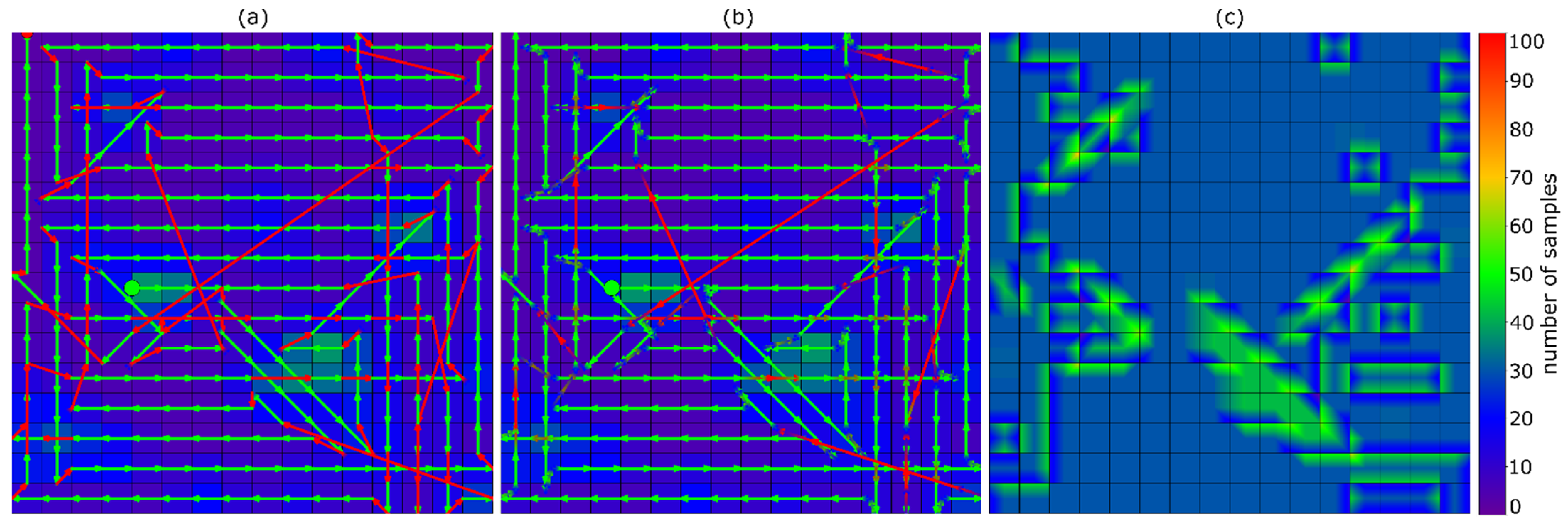

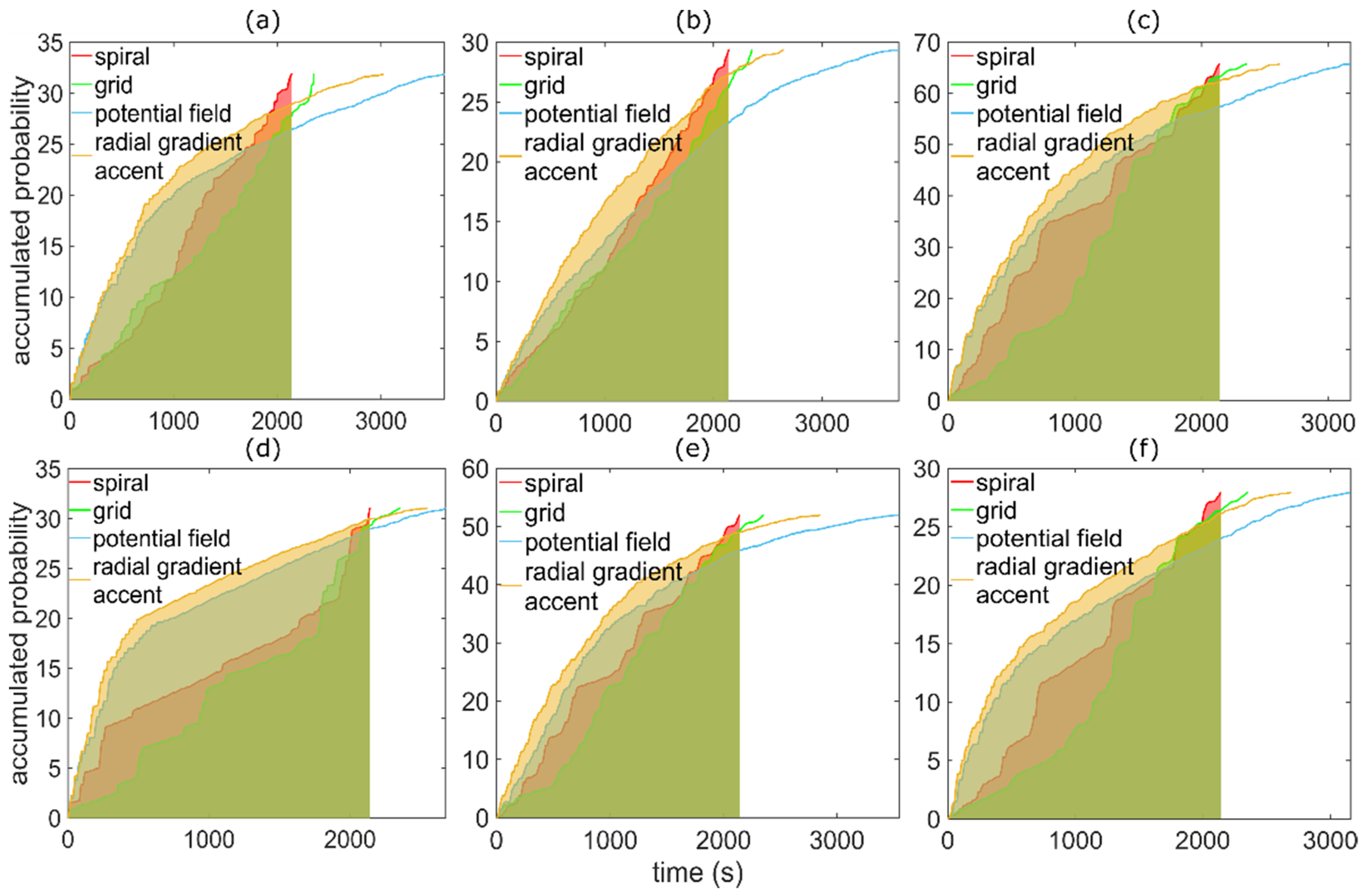

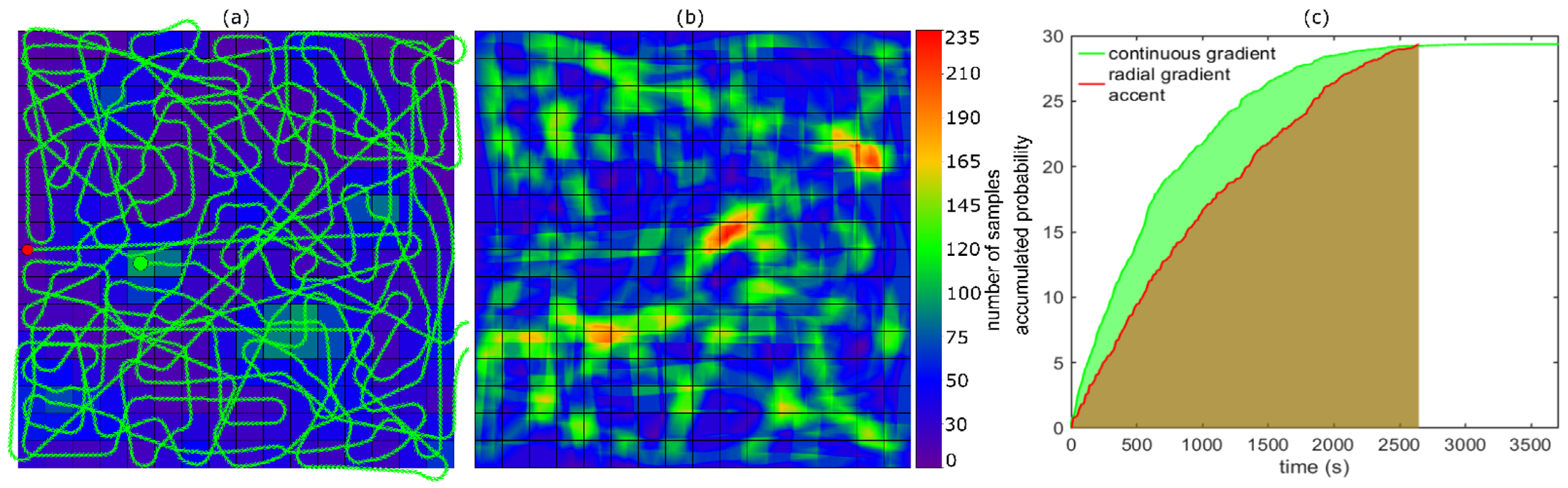

3. Results

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

References

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Lanillos, P.; Besada-Portas, E.; Pajares, G.; Ruz, J.J. Minimum time search for lost targets using cross entropy optimization. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 602–609.

- Pérez-Carabaza, S.; Besada-Portas, E.; Lopez-Orozco, J.A.; Pajares, G. Minimum Time Search in Real-World Scenarios Using Multiple UAVs with Onboard Orientable Cameras. J. Sens. 2019, 2019, 7673859.

- Lanillos, P.; Yañez-Zuluaga, J.; Ruz, J.J.; Besada-Portas, E. A Bayesian approach for constrained multi-agent minimum time search in uncertain dynamic domains. In Proceedings of the 15th Genetic and Evolutionary Computation Conference, GECCO 2013, Amsterdam, The Netherlands, 6–10 July 2013; pp. 391–398.

- Lanillos, P.; Besada-Portas, E.; Lopez-Orozco, J.A.; De la Cruz, J.M. Minimum Time Search in Uncertain Dynamic Domains with Complex Sensorial Platforms. Sensors 2014, 14, 14131–14179.

- Pérez-Carabaza, S.; Bermudez-Ortega, J.; Besada-Portas, E.; Lopez-Orozco, J.A.; De la Cruz, J.M. A Multi-UAV minimum time search planner based on ACOR. In Proceedings of the 2017 Genetic and Evolutionary Computation Conference, GECCO 2017, Berlin, Germany, 15–19 July 2017; pp. 35–42.

- Tisdale, J.; Kim, Z.W.; Hedrick, J.K. Autonomous UAV path planning and estimation: An online path planning framework for cooperative search and localization. IEEE Robot. Autom. Mag. 2009, 16, 35–42.

- Wong, E.M.; Bourgault, F.; Furukawa, T. Multi-vehicle Bayesian search for multiple lost targets. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 3169–3174.

- Bourgault, F.; Furukawa, T.; Durrant-Whyte, H. Optimal search for a lost target in a Bayesian world. In Field and Service Robotics: Recent Advances in Research and Applications, Lake Yamanaka, Japan, 14–16 July 2003; pp. 209–222.

- Gan, S.K.; Sukkarieh, S. Multi-UAV target search using explicit decentralized gradient-based negotiation. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 751–756.

- Lanillos, P.; Gan, S.K.; Besada-Portas, E.; Pajares, G.; Sukkarieh, S. Multi-UAV target search using decentralized gradient-based negotiation with expected observation. Inf. Sci. 2014, 282, 92–110.

- Hu, J.; Xie, L.; Xu, J.; Xu, Z. Multi-agent cooperative target search. Sensors 2014, 14, 9408–9428.

- Trummel, K.E.; Weisinger, J.R. The complexity of the optimal searcher path problem. Oper. Res. 1986, 34, 324–327.

- Juan, V.S.; Santos, M.; Andújar, J.M. Intelligent UAV map generation and discrete path planning for search and rescue operations. Complexity 2018, 2018, 6879419.

- Schedl, D.C.; Kurmi, I.; Bimber, O. An autonomous drone for search and rescue in forests using airborne optical sectioning. Sci. Robot. 2021, 6, eabg1188.

- Meera, A.A.; Popović, M.; Millane, A.; Siegwart, R. Obstacle-aware Adaptive Informative Path Planning for UAV-based Target Search. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 718–724.

- Kurmi, I.; Schedl, D.C.; Bimber, O. Airborne optical sectioning. J. Imaging 2018, 4, 102.

- Bimber, O.; Kurmi, I.; Schedl, D.C. Synthetic aperture imaging with drones. IEEE Comput. Graph. Appl. 2019, 39, 8–15.

- Kurmi, I.; Schedl, D.C.; Bimber, O. A statistical view on synthetic aperture imaging for occlusion removal. IEEE Sensors J. 2019, 19, 9374–9383.

- Kurmi, I.; Schedl, D.C.; Bimber, O. Thermal airborne optical sectioning. Remote Sens. 2019, 11, 1668.

- Schedl, D.C.; Kurmi, I.; Bimber, O. Airborne optical sectioning for nesting observation. Sci. Rep. 2020, 10, 7254.

- Kurmi, I.; Schedl, D.C.; Bimber, O. Fast Automatic Visibility Optimization for Thermal Synthetic Aperture Visualization. IEEE Geosci. Remote Sens. Lett. 2021, 18, 836–840.

- Kurmi, I.; Schedl, D.C.; Bimber, O. Pose Error Reduction for Focus Enhancement in Thermal Synthetic Aperture Visualization. IEEE Geosci. Remote Sens. Lett. 2021, to be published.

- Schedl, D.C.; Kurmi, I.; Bimber, O. Search and rescue with airborne optical sectioning. Nat. Mach. Intell. 2020, 2, 783–790.

- Kurmi, I.; Schedl, D.C.; Bimber, O. Combined person classification with airborne optical sectioning. arXiv 2021, arXiv:2106.10077.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. 2020. Unpublished work. Available online: https://arxiv.org/abs/2004.10934 (accessed on 15 November 2021).

| Probability Map | Method | Time (s) | Distance (m) | APT Score |
|---|---|---|---|---|
| scattered | grid | 2355.98 | 9945.46 | 28,523.70 |
| | spiral | 2144.08 | 9518.96 | 32,121.20 |
| | potential field | 3612.76 | 17,030.36 | 39,247.74 |
| | RGA | 3026.44 | 14,433.31 | 42,834.65 |
| scattered-smooth | grid | 2355.98 | 9945.46 | 26,463.08 |
| | spiral | 2144.08 | 9518.96 | 28,934.02 |
| | potential field | 3704.78 | 16,799.93 | 28,814.11 |
| | RGA | 2644.96 | 12,483.69 | 34,513.97 |
| exponential | grid | 2355.98 | 9945.46 | 62,702.47 |
| | spiral | 2144.08 | 9518.96 | 77,296.31 |
| | potential field | 3173.29 | 15,238.73 | 84,015.36 |
| | RGA | 2614.88 | 12,766.08 | 91,526.18 |
| multiple-patches | grid | 2355.98 | 9945.46 | 26,909.31 |
| | spiral | 2144.08 | 9518.96 | 32,415.52 |
| | potential field | 2678.48 | 12,225.33 | 44,628.51 |
| | RGA | 2551.18 | 11,624.64 | 47,666.90 |
| large-patch | grid | 2355.98 | 9945.46 | 50,121.14 |
| | spiral | 2144.08 | 9518.96 | 57,197.52 |
| | potential field | 3546.04 | 16,542.41 | 62,949.78 |
| | RGA | 2852.51 | 13,679.18 | 70,344.42 |
| small-patch | grid | 2355.98 | 9945.46 | 24,251.82 |
| | spiral | 2144.08 | 9518.96 | 29,707.75 |
| | potential field | 3158.86 | 14,456.42 | 34,517.50 |
| | RGA | 2691.61 | 12,677.97 | 38,068.94 |
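The absolute APT scores in the results table differ in magnitude between probability maps (roughly 24,000 to 38,000 for small-patch versus 63,000 to 92,000 for exponential), so comparing methods across rows is easier with relative values. The short sketch below only performs that arithmetic on the transcribed table values; the helper name `relative_difference` is hypothetical, and the sketch makes no assumption about how the APT score itself is defined.

```python
# APT scores transcribed from the results table, keyed by probability map and method.
APT_SCORES: dict[str, dict[str, float]] = {
    "scattered": {"grid": 28523.70, "spiral": 32121.20, "potential field": 39247.74, "RGA": 42834.65},
    "scattered-smooth": {"grid": 26463.08, "spiral": 28934.02, "potential field": 28814.11, "RGA": 34513.97},
    "exponential": {"grid": 62702.47, "spiral": 77296.31, "potential field": 84015.36, "RGA": 91526.18},
    "multiple-patches": {"grid": 26909.31, "spiral": 32415.52, "potential field": 44628.51, "RGA": 47666.90},
    "large-patch": {"grid": 50121.14, "spiral": 57197.52, "potential field": 62949.78, "RGA": 70344.42},
    "small-patch": {"grid": 24251.82, "spiral": 29707.75, "potential field": 34517.50, "RGA": 38068.94},
}


def relative_difference(scores: dict[str, float], method: str, baseline: str) -> float:
    """Percentage by which `method`'s APT score differs from `baseline`'s."""
    return 100.0 * (scores[method] - scores[baseline]) / scores[baseline]


for map_name, scores in APT_SCORES.items():
    # Compare RGA against each of the other planners on the same probability map.
    parts = [
        f"vs {other} {relative_difference(scores, 'RGA', other):+.1f}%"
        for other in scores
        if other != "RGA"
    ]
    print(f"{map_name}: RGA " + ", ".join(parts))
```

In every probability map the RGA row has the largest APT score, so all printed differences are positive; for the scattered map, for instance, RGA exceeds grid by about 50.2%.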

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ortner, R.; Kurmi, I.; Bimber, O. Acceleration-Aware Path Planning with Waypoints. Drones 2021, 5, 143. https://doi.org/10.3390/drones5040143
