1. Introduction

Modern drones are more affordable than ever, and their uses extend into many industries such as emergency response, disease control, weather forecasting, and journalism [1]. Their increased military use and the possible weaponization of drones have made drone detection and identification an important matter of public safety.

There are several types of technology which can facilitate drone detection and classification. Some sensors employ sound-based or acoustic technology to classify drones. Drones give off a unique acoustic signature ranging from 400 Hz to 8 kHz, and microphones can capture this information. Unfortunately, this technology can only be used at a maximum range of 10 meters, and the microphones are sensitive to environmental noise [2]. When tracking drones through the air, this method becomes impractical.

Optical sensors use one or more cameras to create a video showing the target drone. The classification problem then becomes the identification of specific patterns in the shapes and colours of the drones. This approach is a popular technique because it is intuitive and enables the use of image processing and computer vision libraries [3] as well as neural networks [4]. However, optical sensors have a limited range and require favourable weather conditions. For these reasons, they are not reliable enough to use for drone classification, especially at longer ranges.

Drones and other unmanned aerial vehicles (UAVs) rely on (typically hand-held) controllers, which send radio frequency signals to the drone. These signals have a unique radio frequency fingerprint that depends on the circuitry of the controller, drone, and the chosen modulation techniques. Radio frequency fingerprint analysis has been studied as a method to detect and classify drones [5].

Finally, radar sensors for drone tracking and classification have been extensively studied [6,7,8,9]. Radars are capable of detecting targets at longer ranges than other sensors and perform reliably in all weather conditions at any time of day [10].

The classification technique investigated in this paper is based on the target drone’s micro-Doppler signature. A micro-Doppler signature is created when specific components of an object move separately from the rest. The rotation of propeller blades on a drone is sufficient to generate these signatures. The use of radars for studying micro-Doppler signatures has been shown to be effective [11] and has been used in conjunction with machine learning for many UAV classification problems [12,13,14,15,16,17,18,19]. As such, radars are the chosen technology for this paper.

Previous work has shown that an analysis of the radar return, including the micro-Doppler signature, can reliably distinguish drones from birds [7,8,20]. We now turn to the problem of distinguishing different types of drones. Standard analyses of the radar return include the short-window and long-window Short-Time Fourier Transform (STFT). The short- and long-window labels are defined relative to the rotation period of the drone, that is, the time it takes for the drone’s blades to make a complete 360-degree rotation. A short-window STFT uses a window length shorter than the rotation period, while a long-window STFT uses a window length that exceeds the rotation period. The long-window STFT generates a unique signature of the drones in the form of HElicopter Rotor Modulation (HERM) lines. The number of HERM lines and their frequency separation can be used to distinguish between the different drones.

For situations where the pulse repetition frequency (PRF) of the radar is not high enough to extract the full micro-Doppler signature, Huang et al. proposed a log harmonic summation algorithm to use on the HERM lines [21]. This algorithm estimates the micro-Doppler periodicity and performs better than the previously used cepstrum method [8] in the presence of noise. Huang also showed, using collected radar data, that the Minimum Description Length Parametric Spectral Estimation Technique reliably estimates the number of HERM lines. This information can be used to determine whether the target is a rotary drone with spinning propellers [22].

When the full micro-Doppler signature is available (using a high PRF radar), the short-window STFT can be utilized for analysis. Klaer et al. used HERM lines to estimate the number of propeller blades in these situations [23]. They also proposed a new multi-frequency analysis of the HERM lines, which enables the approximation of the propeller rates [23]. In this paper, we leverage the work of Hudson et al., who demonstrated the potential of passing STFT spectrograms into a Convolutional Neural Network (CNN) to classify drones [13].

Recently, Passafiume et al. presented a novel micro-Doppler vibrational spectral model for flying UAVs using radars. This model incorporates the number of vibrational motors in the drone and the propeller rotation rates. They showed that this model is able to reliably simulate the micro-Doppler signature of drones. Furthermore, they proposed that the model could be further studied for use in unsupervised machine learning [24].

In another study, Lehmann and Dall trained a Support Vector Machine (SVM) on simulated data. They simulated their data by considering the drone as a set of point scatterers and superimposing the radar return of each point [25]. However, their work modelled the data as free from thermal noise. Using the Martin and Mulgrew model instead, we can simulate varying signal-to-noise ratio (SNR) conditions in this paper. Doing so provides a more realistic situation in which to apply machine learning. Additionally, our use of a CNN provides better classification accuracy than their SVM.

In our investigation, we use the updated version of the Martin and Mulgrew model [26] to simulate drone signals and perform additional augmentation to improve the data’s realism. We used the model to produce datasets distinguished by the SNR and PRF of the contained samples. A CNN was trained for each of these datasets, and their performances were analyzed with the $F_1$ metric. Our findings suggest that it is possible to train a robust five-drone classifier (plus an additional noise class) using just one thousand data samples, each 0.3 s in duration. Furthermore, we show it is possible to train CNN classifiers robust to SNR levels not included in training while maintaining performance that is invariant to the blade pitch of the drones.

The work presented in this paper contributes to the field of drone classification in several ways. Many studies explore the use of neural networks for a specific SNR. Here, we provide an analysis for a wide range of SNR values, thus making our results more generalizable to different situations. We also show that the selected model is robust against varying pitches of the propeller blades, maintaining its performance when tested on drones whose blade pitch is outside of the training range. Additionally, we find that X-band radars provide better data than W-band radars for this application within the studied SNR range. This last result is likely due to the configuration of our neural network and may not be true in general cases. Finally, we leverage the Martin and Mulgrew model for data simulation, a model that is not commonly used for drone classification.

This paper is organized as follows. Section 2 introduces the reader to the concepts used in our work. We review some of the important radar parameters in Section 2.1, paying close attention to their use in our context. The Martin and Mulgrew model is used to simulate returns from different types of radars and is summarized in Section 2.2. Drone parameters and data generation are discussed in Section 2.3, and an overview of the machine learning pipeline is presented in Section 2.4. The results of the machine learning model are shown and discussed in Section 3 and Section 4, respectively. Finally, we present our conclusions and future steps in Section 5.

2. Materials and Methods

2.1. Radar Preliminaries

As discussed previously, radar (RAdio Detection And Ranging) systems are advantageous over other surveillance systems for several reasons. This subsection will define the radar parameters and discuss the signal-to-noise ratio and the radar cross-section, two significant quantities for drone classification.

2.1.1. Radar Parameters

There are two main classes of radars: active and passive. Active radars emit electromagnetic waves at radio frequencies and detect their reflection off objects. Passive radars detect reflections of electromagnetic waves that originated from other sources or other transmitters of opportunity. In this paper, we focus our attention on active radars. Such radars may be either pulsed or frequency-modulated continuous wave (FMCW) radars. Pulse radars transmit pulses at regular intervals, with a nominal pulse duration (or pulse width) of the order of a microsecond and a pulse repetition interval of the order of a millisecond. Many variables related to the radar and target dictate a radar’s performance. These variables are presented in Table 1.

For more information about radar types and their operations, we direct interested readers to the text by Dr. Skolnik [28]. We will now turn our attention to some of the specific measurements that help describe how radars can detect and classify drones.

2.1.2. Radar Cross-Section

The radar cross-section (RCS) is critical when working with drones. As explained in Table 1, the RCS of a target is the surface area that is visible to the radar. The RCS varies with the target’s size, shape, surface material, and pitch. Typical drones have an RCS value from −

to −

for X-band frequencies and smaller than −

for frequencies between 30–37

[29]. The RCS of drones varies significantly with the drone model and position in the air. A comprehensive study of drone RCS was performed by Schröder et al. They reported that the material is a significant factor in the blade RCS, as metal blades have a much higher RCS than plastic ones [30].

The strength of the returned radar signal varies directly with the RCS, making it a critical factor in drone classification using radars. This paper will utilize the micro-Doppler effects from drone propeller blades. Thus, the RCS of the drones’ blades is much more important for this investigation than that of the body.

2.1.3. Signal-to-Noise Ratio

Another important quantity for radar studies is the signal-to-noise ratio (SNR). The SNR measures the ratio of received power from the target(s) and the received power from noise. The expression for the SNR depends on the radar parameters previously introduced, including the RCS, and is provided by the radar range equation [31]:

One would expect classification performance to degrade as the SNR decreases because the target becomes less clear. Dale et al. showed this to be true when distinguishing drones from birds [32]. As seen in Equation (1), the SNR is directly related to the RCS and consequently tends to be small for drones. It is, therefore, crucial to understand and appreciate the signal SNR because it will significantly impact the quality of the trained model. If the training data has an SNR that is too high, the model will not generalize well to realistic scenarios with a lower SNR. The work later in this paper analyzes model performance as a function of the SNR of the signals in the training data.

It is often more convenient to express Equation (1) in decibels (dB), which is a logarithmic scale. The log-scale simplifies the calculation of the SNR quantity by adding the decibel equivalents of the numerator terms and subtracting those in the denominator. A Blake Chart clarifies this process. Table 2 shows an example of such a calculation where the radar operates in the X-band (10 GHz frequency) and the object is 1 km away.

Blake Charts make it easy to see how slightly adjusting one parameter can impact the SNR per pulse. It is important to note that for a particular radar and object at a specified range, the only parameters that can be adjusted are the , and . Each of these parameters comes with an associated cost due to limited power supply or the specifications of the radar, and so it is not always possible to achieve a desirable SNR. Due to this, classification models need to perform well in low-SNR conditions.
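The decibel bookkeeping behind a Blake Chart can be sketched in a few lines of Python. The example below assumes a common textbook form of the single-pulse radar range equation, SNR = P_t G² λ² σ / ((4π)³ R⁴ k T₀ B F L), which may differ in detail from Equation (1). Apart from the 10 GHz carrier and the 1 km range, every parameter value is a hypothetical placeholder and is not taken from Table 2.

```python
import math

C = 299_792_458.0            # speed of light, m/s
K_BOLTZMANN = 1.380649e-23   # Boltzmann constant, J/K


def to_db(x):
    """Convert a positive linear quantity to decibels."""
    return 10.0 * math.log10(x)


# Hypothetical radar and target values (placeholders, not the paper's Table 2).
params = {
    "peak_power_w": 1_000.0,
    "antenna_gain": 1_000.0,     # 30 dB, same antenna on transmit and receive
    "wavelength_m": C / 10e9,    # X-band, 10 GHz carrier
    "rcs_m2": 0.01,              # small target, -20 dBsm
    "range_m": 1_000.0,          # 1 km
    "noise_temp_k": 290.0,
    "bandwidth_hz": 1e6,
    "noise_figure": 2.0,         # about 3 dB
    "losses": 2.5,               # about 4 dB
}


def snr_linear(p):
    """Single-pulse SNR from the assumed textbook radar range equation."""
    numerator = (p["peak_power_w"] * p["antenna_gain"] ** 2
                 * p["wavelength_m"] ** 2 * p["rcs_m2"])
    denominator = ((4 * math.pi) ** 3 * p["range_m"] ** 4 * K_BOLTZMANN
                   * p["noise_temp_k"] * p["bandwidth_hz"]
                   * p["noise_figure"] * p["losses"])
    return numerator / denominator


def blake_rows(p):
    """Signed dB contributions: numerator terms add, denominator terms subtract."""
    return [
        ("P_t", to_db(p["peak_power_w"])),
        ("G^2", 2 * to_db(p["antenna_gain"])),
        ("lambda^2", 2 * to_db(p["wavelength_m"])),
        ("sigma", to_db(p["rcs_m2"])),
        ("(4 pi)^3", -3 * to_db(4 * math.pi)),
        ("R^4", -4 * to_db(p["range_m"])),
        ("k", -to_db(K_BOLTZMANN)),
        ("T_0", -to_db(p["noise_temp_k"])),
        ("B", -to_db(p["bandwidth_hz"])),
        ("F", -to_db(p["noise_figure"])),
        ("L", -to_db(p["losses"])),
    ]


rows = blake_rows(params)
snr_db = sum(value for _, value in rows)

for label, value in rows:
    print(f"{label:>9s} {value:+9.2f} dB")
print(f"{'SNR':>9s} {snr_db:+9.2f} dB")
```

Because each row is an independent term, changing one parameter (for example, doubling the range) changes a single row, which is what makes the chart convenient for sensitivity checks.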

2.2. Modelling Radar Returns from Drones

The Martin and Mulgrew equation models the complex radar return signal of aerial vehicles with rotating propellers [26]. The model assumes that the aerial vehicle (or drone in our context) has one rotor. The formulation of the model is presented in Equation (2) and was used to simulate radar return signals of five different drones under two different radar settings. French [33] provides a derivation and detailed insights on the model.

where

Table 3 provides a complete description of each of the parameters within the model. Excluding time, t, the model has eleven parameters, loosely categorized as radar and drone parameters. Radar parameters include the carrier frequency and the transmitted wavelength. The drone parameters depend on the position of the drone relative to the radar and the characteristics of the drone’s propeller. In particular, the strength of the presented model over the initial version of the Martin and Mulgrew equation is its ability to account for variation in the blade pitch of the drones [26,34].

The Martin and Mulgrew model was chosen for data simulation for several reasons. It is based on electromagnetic theory and Maxwell’s equations, yet it is computationally efficient compared to more sophisticated models. Additionally, the drone parameters used were previously compiled and demonstrated by Hudson et al. [13]. The following section of this paper will strengthen confidence in the model by comparing it to an actual drone signal. It is found that the Martin and Mulgrew model produces distinct HERM line signatures (dependent on the parameters), as seen in the collected data, a fact that is crucial for this investigation. Although the number of rotors on the drone is not the focus of this paper, it is helpful to note that the model assumes that there is a single rotor. A proposed extension to the model sums the signal over the different rotors [35], but it has not been extensively studied.

2.3. Data Generation and Augmentation

This section describes the different sampling and augmentation considerations taken to simulate a Martin and Mulgrew signal. As will be elaborated in Section 2.4, many simulated signals were put together to produce datasets for machine learning.

The data simulation step involved two sets of radar parameters, representing an X-band and a W-band radar, respectively. Furthermore, five sets of drone parameters corresponding to different commercial drones were used. Table 4 and Table 5 list the parameters for the radars and the drones’ blades. Note that along with the five drones (classes), a sixth Gaussian-noise class was produced to investigate the possibility of false alarms during classification and their impact.

Although the selected drones have fixed blade pitches, the parameter was assumed to be variable for modelling purposes since some drones can have adjustable blade pitches. This assumption can improve the generalizability of the analysis. Moreover,

and

R were similarly considered as variable parameters while

and

were set to be constant (zero and four, respectively) for simplicity. As seen in Table 6, these variable parameters were uniformly sampled to produce meaningful variations between each simulated drone signal.

Besides varying the above parameters, additional methods were used to produce differences between the simulated signals. We applied shifts in the time domain, adjusted the signal to reflect the probability of detection, and added noise to augment each sample. The time shift was introduced by randomly selecting a

such that the resulting signal would be

. Next, a probability of detection (

) of

was asserted by removing some data from the signal, simulating the amount of information typically present in real scenarios. Finally, Gaussian-normal noise was introduced to produce a signal of the desired SNR. The added noise,

, was sampled from

where the standard deviation was given by the rearranged form of Equation (

7). Equation (

8) presents the final augmented signal produced using the Martin and Mulgrew equations. Each simulated sample used for machine learning was 0.3 s in length.
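The three augmentation steps can be illustrated with a short, standard-library Python sketch. It is not the authors' implementation. It assumes that the SNR is defined as mean signal power over noise variance (so that the noise standard deviation is sqrt(P_signal / 10^(SNR_dB/10))), that the time shift can be approximated by a circular shift of a periodic signal, and that missed detections are modelled by zeroing individual pulses. The toy tone, the detection probability of 0.9, and all function names are hypothetical.

```python
import cmath
import math
import random


def mean_power(signal):
    """Average power of a complex sequence."""
    return sum(abs(s) ** 2 for s in signal) / len(signal)


def augment(signal, snr_db, p_detect, rng):
    """Time-shift, drop pulses, and add complex Gaussian noise at a target SNR."""
    n = len(signal)

    # 1. Random time shift, approximated here by a circular shift.
    shift = rng.randrange(n)
    shifted = signal[shift:] + signal[:shift]

    # 2. Keep each pulse with probability p_detect; missed pulses become zero.
    kept = [s if rng.random() < p_detect else 0j for s in shifted]

    # 3. Noise level from the assumed definition SNR = P_signal / sigma^2,
    #    using the power of the clean (undropped) signal.
    sigma = math.sqrt(mean_power(shifted) / 10 ** (snr_db / 10))
    component_std = sigma / math.sqrt(2)  # split evenly over real and imaginary parts
    noise = [complex(rng.gauss(0.0, component_std), rng.gauss(0.0, component_std))
             for _ in range(n)]

    return [k + w for k, w in zip(kept, noise)], noise, sigma


# Toy stand-in for a simulated return: 0.3 s of a unit-amplitude tone at a 2 kHz PRF.
PRF_HZ = 2_000
clean = [cmath.exp(2j * math.pi * 50.0 * i / PRF_HZ) for i in range(600)]

rng = random.Random(0)
augmented, noise, sigma = augment(clean, snr_db=10.0, p_detect=0.9, rng=rng)
print(f"noise std: {sigma:.4f}, measured noise power: {mean_power(noise):.4f}")
```

Whether the noise level should be referenced to the signal before or after pulses are dropped is a design choice; the sketch uses the clean signal so that the nominal SNR does not depend on the random dropout pattern.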

The use of a convolutional network requires data with spatially relevant information. The long-window STFT was applied to produce a spectrogram representation of the simulated signals [36]. The STFT is one of many methods used to produce spectrograms for convolutional learning [18]. Recall that a short-window STFT has a window size smaller than the rotation period of the drone. However, according to the Nyquist Sampling Theorem, using a short window requires that the radar PRF is at least four times the maximum Doppler shift of the propeller blades to detect micro-Doppler blade flashes unambiguously. In contrast, a long-window STFT cannot detect blade flashes because the window size is larger than the rotational period of the propellers. The long-window method only requires that the PRF is at least twice the propeller rotation rate, making this method more versatile for different radars. Previous work suggests that the long-window STFT can reveal HERM micro-Doppler signatures of drones even under low PRF conditions [21,23].
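To give a sense of scale for the two PRF requirements stated above, the following sketch compares them for a single blade. It assumes the maximum Doppler shift is produced by the blade tip moving directly along the line of sight, f_d = 2 v_tip / λ with v_tip = 2π f_r L. The blade length (0.1 m), rotation rate (100 Hz), and the 94 GHz W-band carrier are hypothetical values chosen for illustration; only the 10 GHz X-band carrier and the factors of four and two come from the text.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def max_blade_doppler_hz(carrier_hz, blade_length_m, rotation_rate_hz):
    """Largest Doppler shift from a blade tip moving along the line of sight."""
    wavelength = C / carrier_hz
    tip_speed = 2 * math.pi * rotation_rate_hz * blade_length_m
    return 2 * tip_speed / wavelength


def short_window_min_prf_hz(carrier_hz, blade_length_m, rotation_rate_hz):
    """Short-window criterion from the text: PRF >= 4 x maximum Doppler shift."""
    return 4 * max_blade_doppler_hz(carrier_hz, blade_length_m, rotation_rate_hz)


def long_window_min_prf_hz(rotation_rate_hz):
    """Long-window criterion from the text: PRF >= 2 x propeller rotation rate."""
    return 2 * rotation_rate_hz


# Hypothetical blade: 0.1 m long, spinning at 100 rotations per second.
BLADE_LENGTH_M = 0.1
ROTATION_RATE_HZ = 100.0

for name, carrier_hz in [("X-band (10 GHz)", 10e9), ("W-band (94 GHz, assumed)", 94e9)]:
    short_prf = short_window_min_prf_hz(carrier_hz, BLADE_LENGTH_M, ROTATION_RATE_HZ)
    print(f"{name}: short-window PRF >= {short_prf / 1e3:.1f} kHz")

print(f"long-window PRF >= {long_window_min_prf_hz(ROTATION_RATE_HZ):.0f} Hz")
```

Under these assumed values the short-window requirement is well above a 2 kHz PRF even in the X-band, while the long-window requirement is a few hundred hertz, which is consistent with the preference for the long-window STFT.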

We used two configurations of the long-window STFT. The first has a window size of 512 for the X-band radar with a PRF of 2 kHz, while the second has a window size of 2048 for the W-band radar with a PRF of 20 kHz. Due to its higher PRF value, the latter requires a larger window size. Figure 1 shows a long-window STFT spectrogram for each of the five drones outlined in Table 5. The signals were produced using radar parameters with the X-band (left column) and W-band (right column). For demonstration purposes, these signals have no augmentation. Notice the unique HERM line signature, or bands, within each spectrogram. These signatures are not an exact representation of the drones, but the important fact is that they are distinct from one another, just as we would expect in practice. Furthermore, these spectrograms would be easily identifiable by a convolutional network. We will investigate whether this remains true for signals that have undergone the previously discussed augmentation.
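The two STFT configurations can be checked with simple arithmetic. The sketch below computes, for each radar, the number of samples in a 0.3 s signal, the window duration, the DFT bin spacing (PRF divided by window size), and whether the window qualifies as "long" for a given rotation rate. The rotation rate of 100 Hz is a hypothetical value, and `window_summary` is an illustrative helper rather than part of the described pipeline.

```python
def window_summary(prf_hz, window_samples, duration_s, rotation_rate_hz):
    """Basic timing figures for one STFT configuration."""
    window_duration_s = window_samples / prf_hz
    return {
        "samples_per_signal": round(prf_hz * duration_s),
        "window_duration_s": window_duration_s,
        "bin_spacing_hz": prf_hz / window_samples,
        # Long-window condition: the window spans more than one rotation period.
        "is_long_window": window_duration_s > 1.0 / rotation_rate_hz,
    }


ROTATION_RATE_HZ = 100.0  # hypothetical propeller rotation rate
SIGNAL_DURATION_S = 0.3   # sample length used for machine learning

x_band = window_summary(2_000, 512, SIGNAL_DURATION_S, ROTATION_RATE_HZ)
w_band = window_summary(20_000, 2_048, SIGNAL_DURATION_S, ROTATION_RATE_HZ)

for name, summary in [("X-band, 2 kHz PRF", x_band), ("W-band, 20 kHz PRF", w_band)]:
    print(name, summary)
```

With these numbers the X-band window covers 0.256 s of a 0.3 s signal, so only a small number of distinct STFT frames is available per sample unless the windows overlap heavily; the overlap used in the study is not stated here.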

Before continuing with the creation of our neural network, it is prudent to examine whether the radar simulation is suitable for our purposes. To validate the Martin and Mulgrew model, we used the results of a laboratory experiment involving a commercial Typhoon H hexacopter drone. An X-band radar measured the drone, which was fixed in place and operating at a constant blade rotation frequency. Figure 2 shows the reflected radar signal and its corresponding long-window STFT spectrogram. This measured time series is periodic, just like the simulated signals produced using Equation (2). Additionally, there appear to be HERM line signatures in the STFT, supporting the reasonableness of the artificial data spectrograms shown previously. The spectrogram is not as clean as those seen in Figure 1 owing to background noise in the collected signal. This fact is addressed by our augmentation methods, which make the simulated signals more realistic. Further validation would have been performed; however, the authors were limited by data access. From this point on, the Martin and Mulgrew model was pursued because it can capture many of the physical drone-blade parameters that contribute to the micro-Doppler signature.

2.4. Machine Learning Pipeline

Datasets were produced using the described data generation and augmentation methodology. A new dataset was created for each combination of radar specification and SNR, where the SNR ranged between 0 dB and 20 dB, in increments of 5 dB. The range for the SNR was motivated by the expected SNR of actual signals collected by our available radars. It is not easy to collect large real-drone datasets of high fidelity in practice. Thus, each dataset contained only 1000 spectrogram-training samples, equally-weighted among the six classes (five drones and noise). A smaller validation set of 350 samples was created to follow an approximate 60-20-20 percentage split. However, having the ability to produce the artificial data with relative ease, three test datasets—each with 350 samples—were uniquely generated. Therefore, when models were evaluated, the results contained standard deviation measures.
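As a quick check of the dataset sizes described above, the following sketch computes the split percentages and shows how a mean and standard deviation can be reported over the three test sets. The three scores are made-up placeholders rather than results from this study, and the use of the sample standard deviation is an assumption.

```python
import statistics

# Sample counts from the text; one of the three 350-sample test sets is counted
# when computing the approximate 60-20-20 split.
split_sizes = {"train": 1000, "validation": 350, "test": 350}
total = sum(split_sizes.values())
fractions = {name: size / total for name, size in split_sizes.items()}

for name, fraction in fractions.items():
    print(f"{name:>10s}: {fraction:.1%}")

# Hypothetical scores of one model on the three independently generated test sets.
placeholder_scores = [0.81, 0.82, 0.80]
mean_score = statistics.mean(placeholder_scores)
std_score = statistics.stdev(placeholder_scores)  # sample standard deviation (assumed)
print(f"score: {mean_score:.3f} +/- {std_score:.3f}")
```

The resulting split is roughly 59-21-21 percent, which matches the "approximate 60-20-20" description.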

The architecture of the neural network has a convolutional and a linear portion. An input batch of spectrograms undergoes three layers of convolution, SoftPlus activation, instance normalization, dropout, and periodic max pooling. The batch is then flattened and passes through three hidden layers with ReLU activation. Finally, the loss is computed using the logits and cross-entropy. Figure 3 demonstrates this pipeline, with the dashed outline representing the model itself.

Recall that the two simulated radars used different PRF values, resulting in the size of the input spectrograms being different. A PRF of 20 kHz produces more data points per s in the signal, resulting in a larger spectrogram than a PRF of 2 kHz. The spectrogram sizes are and for the 2 kHz PRF and 20 kHz PRF, respectively. This required the creation of two (similar) networks, one for each radar. The general architecture still holds because both networks differ only in kernel sizes and the number of hidden units in the linear layers.

A CNN model was trained for each training dataset (each dataset represents a unique radar and SNR combination). The training was conducted for 300 epochs, and the most generalizable models were selected through consideration of the training and validation loss and accuracy. All training occurred using PyTorch, a Python machine learning library, on an RTX 2060S GPU.
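A minimal PyTorch sketch of a network with this general shape is given below. It follows the ordering described in this section (three convolution blocks with SoftPlus, instance normalization, and dropout, occasional max pooling, then three hidden linear layers with ReLU and a cross-entropy loss on the logits). The channel counts, kernel sizes, hidden sizes, dropout rate, pooling schedule, and the 32 × 32 input size are all hypothetical, since the actual values are not stated in the text, and the class name `DroneCNN` is illustrative.

```python
import torch
from torch import nn


class DroneCNN(nn.Module):
    """Illustrative CNN: 3 conv blocks followed by 3 hidden linear layers."""

    def __init__(self, input_shape, n_classes=6, channels=(8, 16, 32),
                 hidden=(128, 64, 32), p_drop=0.2):
        super().__init__()
        blocks = []
        in_channels = 1  # single-channel spectrogram magnitude (assumed)
        for index, out_channels in enumerate(channels):
            blocks += [
                nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),
                nn.Softplus(),
                nn.InstanceNorm2d(out_channels),
                nn.Dropout(p_drop),
            ]
            if index % 2 == 0:  # "periodic" pooling: assumed after blocks 1 and 3
                blocks.append(nn.MaxPool2d(2))
            in_channels = out_channels
        self.features = nn.Sequential(*blocks)

        # Infer the flattened feature size from a dummy spectrogram.
        with torch.no_grad():
            n_flat = self.features(torch.zeros(1, 1, *input_shape)).numel()

        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(n_flat, hidden[0]), nn.ReLU(),
            nn.Linear(hidden[0], hidden[1]), nn.ReLU(),
            nn.Linear(hidden[1], hidden[2]), nn.ReLU(),
            nn.Linear(hidden[2], n_classes),  # raw logits, no softmax
        )

    def forward(self, x):
        return self.classifier(self.features(x))


torch.manual_seed(0)
model = DroneCNN(input_shape=(32, 32))
batch = torch.randn(4, 1, 32, 32)      # random stand-in for a batch of spectrograms
labels = torch.tensor([0, 1, 2, 5])    # five drone classes (0-4) plus noise (5)
logits = model(batch)
loss = nn.CrossEntropyLoss()(logits, labels)
print(logits.shape, float(loss))
```

Inferring the flattened size from a dummy input is one way to reuse the same class for the two spectrogram sizes; the networks described in this section additionally differ in kernel sizes and hidden units.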

From the work of El Kafrawy et al. [37], the macro-$F_1$ score, referred to as the $F_1$ score, was used to evaluate the performance of the trained models against the three test datasets. In many cases, the $F_1$ score can be similar to pure accuracy. However, it benefits from considering false positives and false negatives through precision and recall, respectively, making it a preferred metric to accuracy. The formulation is provided in Equation (9), where $C$ represents the number of classes (six), $P_c$ is the precision and $R_c$ is the recall, both of class $c$ [37]. In their definitions, $TP_c$, $FP_c$, and $FN_c$ are the number of true positives, false positives, and false negatives for class $c$, respectively.

$$F_1 = \frac{1}{C}\sum_{c=1}^{C}\frac{2\,P_c\,R_c}{P_c + R_c} \qquad (9)$$

where

$$P_c = \frac{TP_c}{TP_c + FP_c}, \qquad R_c = \frac{TP_c}{TP_c + FN_c}$$
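The macro-averaged score can be computed directly from a confusion matrix, as the following sketch shows. It assumes the per-class averaging form of the macro-$F_1$ (the mean of the per-class harmonic means of precision and recall) and a confusion matrix whose rows are true classes and whose columns are predicted classes. The three-class matrix is a made-up example, not data from this study.

```python
def macro_f1(confusion):
    """Macro-averaged F1 from a square confusion matrix (rows: true, cols: predicted)."""
    n_classes = len(confusion)
    per_class = []
    for c in range(n_classes):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n_classes)) - tp  # predicted c, not c
        fn = sum(confusion[c]) - tp                               # true c, missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            per_class.append(2 * precision * recall / (precision + recall))
        else:
            per_class.append(0.0)
    return sum(per_class) / n_classes


# Made-up three-class example.
example = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
accuracy = sum(example[i][i] for i in range(3)) / sum(map(sum, example))
print(f"macro F1 = {macro_f1(example):.4f}, accuracy = {accuracy:.4f}")
```

In this example the two values are close but not identical, which illustrates the remark that the score is often similar to pure accuracy while still accounting for false positives and false negatives per class.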

3. Results

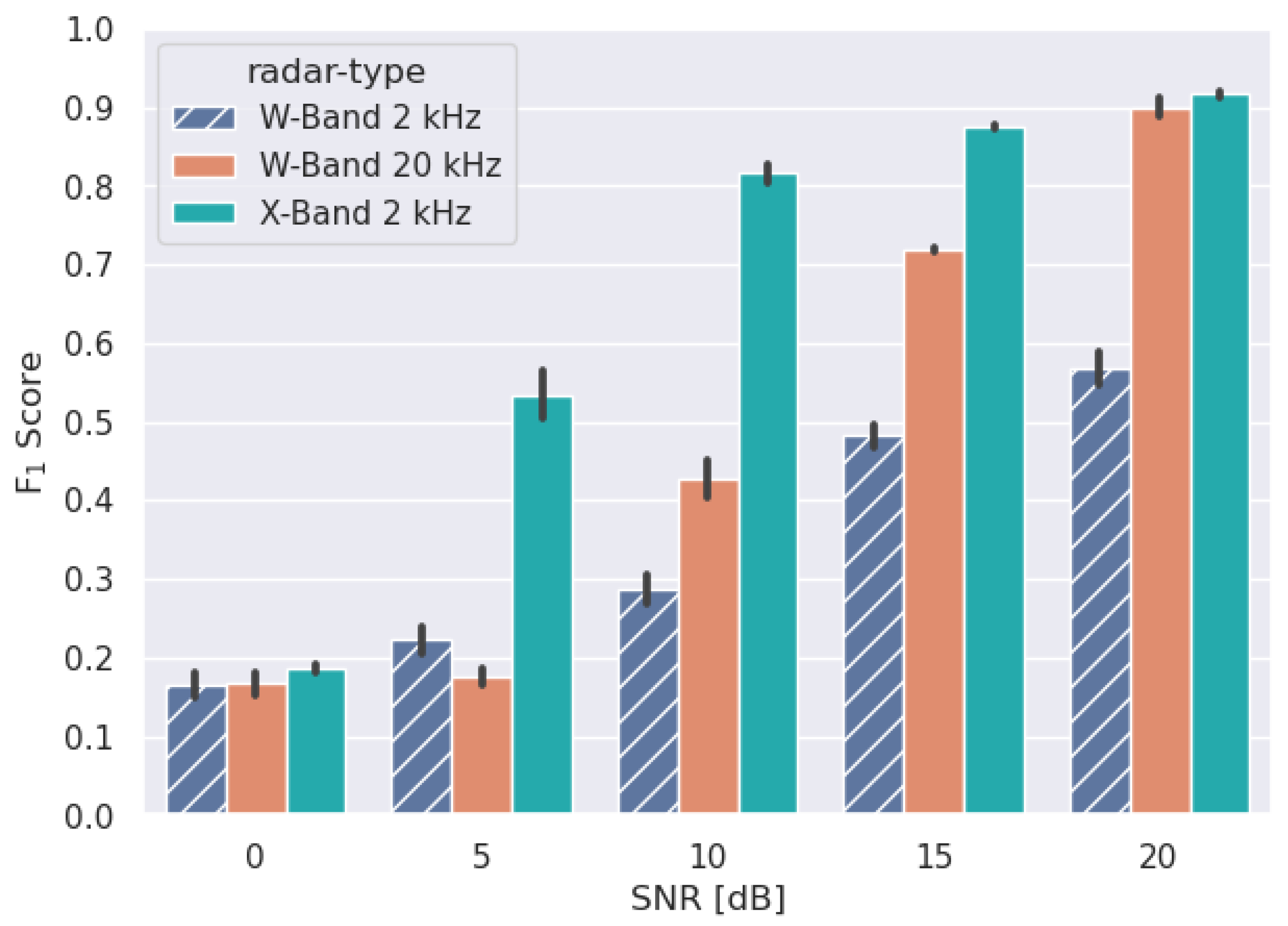

Figure 4 presents the $F_1$ score results of the trained models. The model for the X-band 2 kHz PRF radar achieved an $F_1$ score of

at an SNR of 10 dB. The model for the W-band 20 kHz PRF radar performed much worse, only reaching comparable results at 20 dB SNR. A model for a W-band radar with a 2 kHz PRF was trained as a control, and it demonstrated weaker performance than the X-band model at the same PRF. The X-band 2 kHz PRF model, trained on 10 dB SNR data, was used for further investigation due to its ability to perform relatively well under high noise conditions.

A multi-class confusion matrix and Receiver Operating Characteristic (ROC) curve were used to gain insight into the performance of the selected X-band model for each class. The selected model performs well at classifying drone classes. However, the classifier demonstrates a high false-negative rate when a false alarm sample (noise) is presented. As seen in Figure 5, noise is most often confused with the Matrice 300 RTK. Similarly, within Figure 6, noise has the lowest area under the ROC curve. Nevertheless, the model maintains a high average ROC area of 0.9767, suggesting a minimal compromise between true positives and false positives.

Next, the robustness of the model against blade pitch and varying SNR values was investigated. The inclusion of blade pitch was a driving factor in using the more complex version of the Martin and Mulgrew equations to produce the artificial dataset. The results of the trained X-band 2 kHz PRF model against the blade pitch are shown in Figure 7. The model’s $F_1$ score for the bins between 0 and

remains close to the mean of 0.816 as expected. More importantly, the model remained robust, maintaining comparable performance when tested with blade pitch values outside those used in training.

Figure 8 shows a similar analysis conducted to determine the model’s robustness to varying SNR values. The model performs worse when tested on data with an SNR lower than 10 dB. In contrast, the same model classifies much more effectively as the SNR of the provided test data increases.

4. Discussion

Following the processes outlined in Section 2.3, artificial datasets were generated using the Martin and Mulgrew model, and CNN classifiers were trained for each dataset. Training each of these models enables us to quickly identify the required SNR of the data for drone classification and compare the performance of different radars for this application. Of particular importance, only 1000 spectrograms were present in each training set. This small size reflects the case where real drone data is minimal in applications. Obtained from the work of El Kafrawy et al., and presented in Equation (9), the multi-class $F_1$ score metric was used to measure the performance of each trained model [37].

As found in Figure 4, the performance in the X-band is superior to that of the W-band for the chosen CNN. This is somewhat surprising, as the W-band corresponds to a shorter wavelength and would intuitively result in better classification performance. There could be several reasons for this. Firstly, the 20 kHz PRF spectrogram is quite dense and holds much more information than a 2 kHz PRF STFT in the X-band. The additional complexity and detail may not be as robust against noise. By simulating a W-band radar at the same PRF as the X-band, we find that the discrepancy in performance lies in the transmitted wavelength parameter in the simulation. Note that we do not claim the X-band frequency is the best, but rather that it is better than W-band radars for typical parameters. Furthermore, X-band radars offer superior all-weather capabilities over other frequency bands. The W- and X-band radars were chosen for this paper because they are lighter and more portable than other band radars; however, it is possible that lower frequency bands (e.g., S-band and L-band) may provide superior performance over the X-band. This is a topic for exploration in future work. Additionally, our conclusion is limited to the choice of the model. A deeper CNN might yield a different conclusion, and it is something we plan to explore in future work.

In any case, the result is interesting and valuable. It suggests that an X-band radar with a PRF on the order of a few kHz can be highly effective in classifying drones under our simulation model. The lower PRF requirement in the X-band is also welcome as this leads to a longer unambiguous range. The 2 kHz PRF X-band classifier, trained on 10 dB SNR data, was selected for further investigation.
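The link between PRF and unambiguous range follows from the standard pulsed-radar relation R_ua = c / (2 · PRF): an echo must return before the next pulse is transmitted. The short sketch below evaluates this relation for the two PRF values used in this paper; the function name is illustrative.

```python
C = 299_792_458.0  # speed of light, m/s


def unambiguous_range_m(prf_hz):
    """Maximum range whose echo returns before the next pulse is sent."""
    return C / (2.0 * prf_hz)


for prf_hz in (2_000.0, 20_000.0):
    print(f"PRF {prf_hz / 1e3:>4.0f} kHz -> unambiguous range "
          f"{unambiguous_range_m(prf_hz) / 1e3:.1f} km")
```

A tenfold reduction in PRF therefore gives a tenfold increase in unambiguous range, roughly 75 km at 2 kHz compared with 7.5 km at 20 kHz.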

A multi-class confusion matrix and ROC curve were produced in Figure 5 and Figure 6, respectively, which revealed shortcomings in the selected model. The noise class exhibits the weakest performance in both, and the confusion matrix reveals that noise is most often misclassified as a DJI Matrice 300 RTK drone. The reason for this misclassification is not immediately apparent. However, a qualitative consideration of Figure 1 suggests that spectrograms with a dense micro-Doppler signature (HERM lines) might be more easily confused with noise. The Matrice 300 RTK, in particular, has a very dense signature. On the other hand, an STFT of Gaussian noise contains no distinct signature or obvious spatial information. Under the low, 10 dB SNR conditions, the Matrice 300 RTK spectrogram is likely degraded to the point where it begins to resemble the random-looking noise STFTs. This result emphasizes the need for reliable drone detection models and algorithms, as false alarms can easily be confused with some drone types during the classification process.

Figure 7 shows that model performance is invariant to different values of the blade pitch, even for values not included in the training dataset. This result is important because it suggests that classification performance is mostly unaffected by the pitch of the drone blades. In a similar analysis against varying SNR, the trained model demonstrated a linear trend in performance. The model performed worse when evaluated using data with an SNR lower than the 10 dB used for training, while showing superior performance on less noisy, higher-SNR data samples. This result is shown in Figure 8. Both results indicate that, when training drone classifiers, the blade pitch of the samples is not critical. Additionally, models should maintain linear performance near the SNR of the training samples.

In Figure 4, Figure 7, and Figure 8, the vertical lines show the standard deviation of the test results. These were produced by evaluating the model’s performance on three different test sets. The small size of the standard deviations strengthens confidence in our results and, therefore, in the efficacy of our CNN classifier.