1. Introduction

Nowadays, robots and unmanned systems can be found in various areas, including health, agriculture, mining, driverless vehicles, planetary exploration and nuclear studies [1]. The unmanned systems integrated roadmap published by the Department of Defense [2] indicates that mini/micro robots and nano-unmanned aerial vehicles (UAVs) will become widespread by 2035 and beyond. As platforms become smaller, the Global Positioning System (GPS), whose position errors are expressed in meters, becomes unusable [3]. For a mini-platform on the order of 10 cm, the position error can be a hundred times larger than the platform itself, a level of error that may cause collisions. In addition, GPS signals are unavailable indoors [4] and in planetary exploration [5]. Simultaneous localization and mapping (SLAM), which enables operation even in GPS-denied environments, has been an active research area in robotics for 30 years [6]. The mentioned mini/micro-platforms weigh approximately 100 g, and their length and width are a few centimeters (Figure 1). Nano-, mini- and micro-platforms can only carry compact, light, low-cost, easily calibrated monocular RGB cameras [7], so micro/nano-UAVs must perform localization and mapping simultaneously with such cameras. These cameras are mostly mounted directly on the vehicle, without a gimbal [8,9]. The main challenges of monocular cameras in such a mounting are scale ambiguity [10] and motion blur [11]. Recent studies have attempted to address these issues with stereo [12,13] and RGB-D [14,15,16] cameras and with visual-inertial SLAM (viSLAM) methods. However, such cameras have disadvantages in terms of size, weight and energy consumption. In addition, since the flight time of micro/nano-UAVs is about 10 min due to battery limitations, visual SLAM (vSLAM) is performed by transmitting the video to the control station rather than by computing on board, which improves battery efficiency. Furthermore, calibrating the IMU on the platform for use in viSLAM algorithms can take up to three minutes, a step that vSLAM algorithms do not require. Because of the difficulty of synchronizing IMU and video data at the control station, and the long calibration times, viSLAM methods may not be suitable for such vehicles; this is the main reason we prefer vSLAM in our study.

In this study, a new framework is proposed to solve the problems caused by motion blur in monocular RGB cameras. To the best of the authors' knowledge, this is the first framework to include modules for detecting and reducing motion blur, which makes it more robust to motion blur than previously introduced frameworks. In our framework, a focus measure operator (LAP4) is used to detect the motion blur level, and blurry images whose scores fall below a specified threshold are directed to the deblurring module. Motion blur is then reduced by the selected algorithm in the deblurring module. After that, the process continues with the tracking and local mapping stages, as in previously studied frameworks. The proposed method has been tested with the state-of-the-art ORB-SLAM2 [17] (feature-based) and DSO [18] (direct) algorithms, and its success has been demonstrated.

SLAM performed using only cameras on unmanned systems is called vSLAM. The vSLAM method consists of three main modules [19]:

Initialization.

Tracking.

Mapping.

All three modules can be negatively affected by motion blur. Generally, motion blur arises from relative motion between the camera and the scene during the exposure time [20]. In our case, fast rotational movements of the robotic platform create motion blur, which causes vSLAM algorithms to lose the pose estimate, i.e., tracking loss occurs [21]. If re-localization cannot be performed, the pose of the platform cannot be estimated after the tracking loss, which leads to a kidnapped robot problem. This prevents the planned task from being carried out correctly. Moreover, the created map becomes unusable due to the inconsistency between the new position and the former one.

Feature-based methods map and track the feature points (corner, line and curves) by extracting the features in the frame with preprocessing. After that, a descriptor defines the features. Some commonly used descriptors are ORB [

22], FAST [

23], SIFT [

24], Harris [

25], SURF [

26]. On the other hand, direct methods use the input image directly without using any feature detector or descriptor [

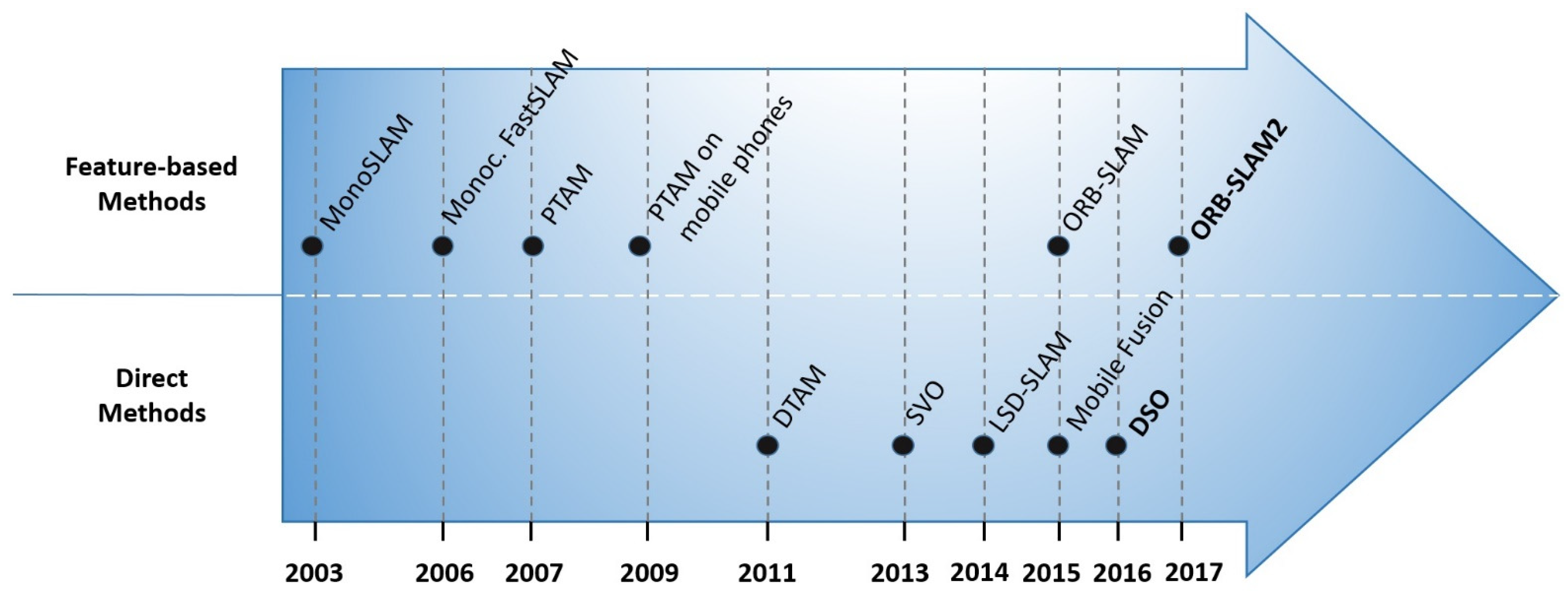

19]. Nevertheless, motion blur has negative impacts on vSLAM performance in both feature-based and direct methods. Another crucial remark is that while motion blur prevents the detection of feature points in feature-based methods, strong rotations obstruct triangulation in direct methods. Therefore, the proposed framework should be compatible with the use of feature-based [

17] and direct [

18] methods (

Figure 2). In our study, both methods are validated experimentally and the corresponding results are presented in the “Experimental Results” section,

Section 3.

2. Proposed Framework and Experimental Setup

To date, only a limited number of robotics and SLAM studies have addressed reducing motion blur-induced errors or preventing tracking loss in monocular camera-based vSLAM methods. In [21], several solutions are presented: preventing data loss after tracking loss occurs (reverse replay) and a branching thread structure (parallel tracking), although the approach is not suitable for real-time applications. Similarly, another study [28] presented a feature matcher for humanoid robots, based on point spread function estimation, that is robust to the motion blur caused by walking, rotation and squatting movements. In addition, various studies have shown that detecting additional features such as edges and lines enhances map richness and tracking performance [29,30]. If the direction of the motion blur is consistent with the direction of the lines/edges, such approaches can improve tracking performance, i.e., the trajectory estimation of the vehicle. However, the same improvement cannot be achieved in mapping, i.e., the projection of 2D image features into 3D space: mapping performance decreases due to floating lines on the map caused by motion blur. It is therefore crucial to ensure map consistency while avoiding tracking loss.

In image processing, motion blur is modeled by the following equation [20]:

$$ b = p \otimes o + n $$

In this expression, o is the original image, b is the blurry image, p is the point spread function (PSF), ⊗ denotes convolution and n is additive noise. Image deblurring algorithms use the PSF to deconvolve the blurred image. Deconvolution is categorized into two types: blind and non-blind. Blind deconvolution uses only the blurred image, whereas non-blind deconvolution uses the blurred image together with a known PSF. Blind deconvolution is more complicated and more time-consuming than non-blind deconvolution because it must also estimate the PSF, updating the estimate at each iteration [31].
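To make the degradation model b = p ⊗ o + n concrete, the following is a minimal NumPy sketch of how a blurred frame can be simulated; it is an illustration, not the authors' code. It assumes the PSF is a straight-line motion kernel parameterized by a length in pixels and an angle in degrees, uses edge-replicated borders, and takes n as zero-mean Gaussian noise. The names `motion_psf`, `convolve2d_same` and `blur` are hypothetical helpers.

```python
from typing import Optional

import numpy as np


def motion_psf(length: int, angle_deg: float) -> np.ndarray:
    """Hypothetical linear-motion PSF: a normalized line of `length` pixels at `angle_deg`."""
    size = length if length % 2 == 1 else length + 1  # odd size keeps a well-defined center
    c = size // 2
    psf = np.zeros((size, size))
    theta = np.deg2rad(angle_deg)
    half = (length - 1) / 2.0
    for t in np.linspace(-half, half, num=2 * length):
        x = int(np.rint(c + t * np.cos(theta)))
        y = int(np.rint(c - t * np.sin(theta)))  # image rows grow downwards
        psf[y, x] = 1.0
    return psf / psf.sum()  # kernel sums to 1, so mean brightness is preserved


def convolve2d_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D convolution with an odd-sized kernel; same output size, edge-replicated borders."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="edge")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with the flipped kernel
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def blur(original: np.ndarray, psf: np.ndarray, noise_sigma: float = 0.0,
         rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Degradation model b = p (*) o + n, with Gaussian noise n of std `noise_sigma`."""
    rng = np.random.default_rng(0) if rng is None else rng
    b = convolve2d_same(original.astype(float), psf)
    return b + rng.normal(0.0, noise_sigma, size=b.shape)
```

For example, `blur(frame, motion_psf(9, 30.0), noise_sigma=2.0)` would smear a frame along a 9-pixel line at 30 degrees; a deblurring method then tries to invert exactly this operation, either knowing `psf` (non-blind) or estimating it (blind).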

Many approaches have been developed in recent years to solve the motion blur problem. Examples available in the literature include a local intensity-based prior, the patch-wise minimal pixels prior (PMP) [32]; a recurrent structure across multiple scales (SRN) [33]; SIUN [34], with a more flexible network and additive super-resolution; a natural image prior named the Extreme Channels Prior (ECP) [35]; graph-based blind image deblurring [36]; and other established methods such as Lucy and Richardson [37], blind deconvolution [38] and the Wiener filter [39].
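Two of the classical non-blind methods listed above, the Wiener filter and Lucy and Richardson, can be sketched compactly in the frequency domain. The sketch below is illustrative only and makes two assumptions not stated in the study: image borders are treated as periodic (circular convolution), and the Wiener regularizer `k` is a single constant approximating the noise-to-signal power ratio. The helper names are hypothetical; library versions such as scikit-image's `restoration.wiener` and `restoration.richardson_lucy` differ in details.

```python
import numpy as np


def psf2otf(psf: np.ndarray, shape: tuple) -> np.ndarray:
    """Zero-pad the PSF to `shape`, move its center to (0, 0) and take the 2D FFT."""
    padded = np.zeros(shape)
    kh, kw = psf.shape
    padded[:kh, :kw] = psf
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def circular_blur(image: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """b = p (*) o under a periodic (circular) boundary assumption."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * psf2otf(psf, image.shape)))


def wiener_deconvolve(blurred: np.ndarray, psf: np.ndarray, k: float = 1e-3) -> np.ndarray:
    """Non-blind Wiener filter: O = conj(H) / (|H|^2 + k) * B."""
    H = psf2otf(psf, blurred.shape)
    G = np.conj(H) / (np.abs(H) ** 2 + k)
    return np.real(np.fft.ifft2(G * np.fft.fft2(blurred)))


def richardson_lucy(blurred: np.ndarray, psf: np.ndarray, iterations: int = 30,
                    eps: float = 1e-12) -> np.ndarray:
    """Non-blind Richardson-Lucy: multiplicative updates for non-negative images."""
    H = psf2otf(psf, blurred.shape)
    estimate = np.full(blurred.shape, float(blurred.mean()))  # flat initial guess
    for _ in range(iterations):
        reblurred = np.real(np.fft.ifft2(np.fft.fft2(estimate) * H))
        ratio = blurred / (reblurred + eps)
        # Correlating with the PSF (convolving with its flipped copy) is multiplication by conj(H).
        estimate = estimate * np.real(np.fft.ifft2(np.fft.fft2(ratio) * np.conj(H)))
    return estimate
```

Both functions need the PSF as input, which is why the study scans candidate PSFs for these methods; blind deconvolution would additionally update `psf` inside the loop.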

We propose a framework (Figure 3) to detect and reduce motion blur when compact, lightweight, low-cost, easily calibrated monocular cameras are mounted on micro/nano-UAVs. In the proposed framework, the Variance of Laplacian (LAP4) is selected as the focus measure operator for detecting motion blur. In LAP4, a single channel of the image is convolved with the Laplacian kernel, and the focus measure score is the variance of the response. If the focus measure score is above the threshold, the vSLAM process continues as usual; otherwise, the image is restored by a deblurring method. Because deblurring is applied only to frames below the threshold rather than to all frames, the processing time is kept at a suitable level. We observed that tracking performance increases and the tracking loss ratio decreases when vSLAM algorithms are run on images restored by deblurring techniques.
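The LAP4 score and the threshold routing described in this paragraph can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the input is a single-channel 8-bit frame (the threshold of 10 used in this study is scale-dependent), only interior pixels are scored, and `deblur` is any restoration function supplied by the caller. `variance_of_laplacian` and `route_frame` are hypothetical names. With OpenCV, `cv2.Laplacian(gray, cv2.CV_64F).var()` computes a closely related score that also includes border pixels.

```python
from typing import Callable, Tuple

import numpy as np

FMS_THRESHOLD = 10.0  # threshold used in this study, assuming 8-bit intensities (0-255)


def variance_of_laplacian(gray: np.ndarray) -> float:
    """LAP4 focus measure: variance of the 4-neighbour Laplacian over interior pixels."""
    g = gray.astype(float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def route_frame(gray: np.ndarray, deblur: Callable[[np.ndarray], np.ndarray],
                threshold: float = FMS_THRESHOLD) -> Tuple[np.ndarray, float, bool]:
    """Return (frame passed to tracking, its FMS, whether the deblurring module was used)."""
    score = variance_of_laplacian(gray)
    if score >= threshold:
        return gray, score, False  # sharp enough: pass straight to tracking
    return deblur(gray), score, True  # blurry: restore first, then track
```

Only the scoring step runs on every frame; the comparatively expensive `deblur` call is made only for frames that fall below the threshold, which is what keeps the added processing time bounded.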

In this study, the selected motion-deblurring methods (PMP, SRN, SIUN, ECP, graph-based blind image deblurring, Lucy and Richardson, blind deconvolution and Wiener filter) are applied to prevent tracking loss in vSLAM algorithms on a dataset prepared for mini/micro robots and nano-UAVs. A low-cost, low-power, lightweight camera was mounted on the mini-UAV, and the recorded blurred low-resolution images were combined to create the dataset. Results obtained on the created dataset show that the proposed framework in Figure 3 can be implemented in both direct and feature-based vSLAM algorithms.

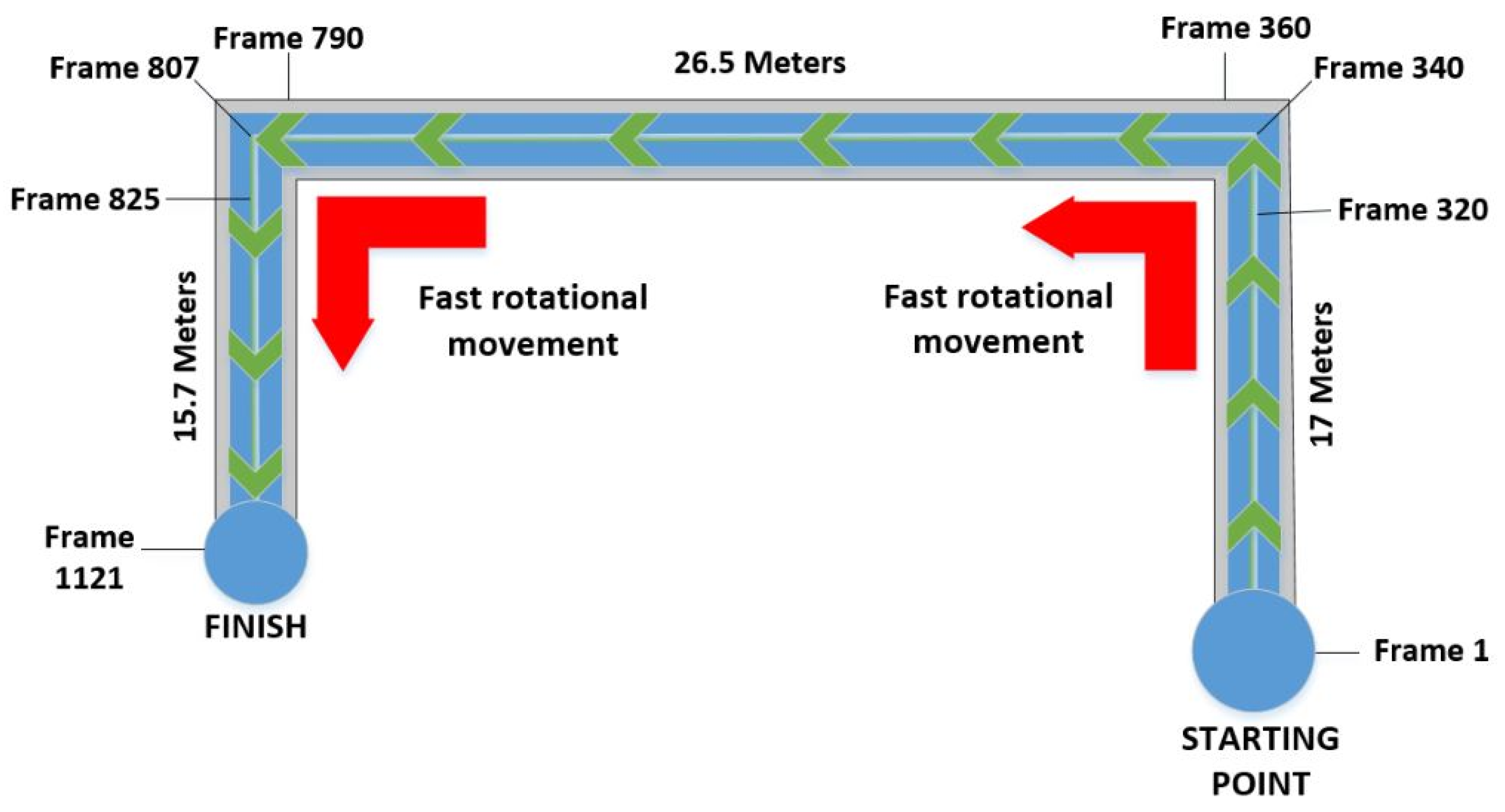

An experiment was performed at an average speed of 1.2 m/s in a corridor environment to observe tracking loss. A schematic view of the experimental area is shown in Figure 4. The experimental area consists of three corridors (17, 26.5 and 15.7 m long) and two sharp corners. In Figure 4, forward movement is plotted in green and fast rotational movements are shown in red. The start and finish points and the frame numbers corresponding to the rotational movements are also given in the same figure.

The experimental area was set up only to observe motion blur caused by fast rotational movements. Tracking loss during forward motion generally occurs in textureless environments, especially in feature-based algorithms. However, the ORB-SLAM2 [17] and DSO [18] algorithms were robust to motion blur during forward movement at the 1.2 m/s average speed used in our experimental area. In the experiment, the UAV performed fast rotational movements at 22 deg/s (0.384 rad/s) near feature-rich corners, in order to observe motion blur-induced tracking loss when an RGB camera is mounted on the platform and the vehicle rotates rapidly. A pinhole camera, which is frequently used in nano/micro-unmanned systems and robot platforms, was selected for the experiment; such cameras have advantages in terms of both size and cost. Video was recorded with a Raspberry Pi v2.1 camera at 20 fps and 640 × 480 resolution. Compact, non-gimbaled cameras are preferred in micro/nano-size unmanned systems because of their dimensions, and low-cost cameras are expected to be the most preferable option for future disposable, non-reusable vehicles [2]. We created our own dataset for the following reason: although vSLAM experiments have been conducted on available datasets such as EuRoC [40] and KITTI [41], their images were captured with high-quality cameras and contain no fast rotational movements near corners. Even where fast movements occur in these datasets, they do not include sharp rotations through large angles such as 90 degrees. Consequently, the targeted tracking losses, which are required to evaluate the deblurring algorithms, were not observed in these datasets.

Images were captured with the drone flying at a height of 140 cm. The platform moved straight at an average speed of 1.2 m/s until it reached the corners, where sharp and rapid 90-degree yaw movements were performed. The dataset was created from 1121 frames recorded along the corridor route with a forward-facing camera. Processing speed is crucial for extracting the targeted features from the dataset. The motion blur level does not change with processing speed, but the processing time allocated to the detector and descriptor does. For example, when the processing speed is reduced from 20 to 10 fps, the time allocated per frame doubles, so more time is available to complete processing of each frame. The exposure time was kept the same for every processing speed, so the blurring effect was also the same. The images in the dataset were processed at 20, 10 and 5 fps with the ORB-SLAM2 [17] and DSO [18] algorithms. In both algorithms, tracking loss occurred during fast rotational movements at all selected processing speeds. Various objects with distinctive features, such as a coffee machine, cabinets and doors, were located in the corridor, so feature extraction and triangulation were accomplished easily during forward movement.
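The relation between processing speed and per-frame time budget described above can be written out explicitly; this is simple arithmetic on the stated processing speeds, assuming the whole frame interval is available for processing:

$$
\Delta t = \frac{1}{f}: \qquad \Delta t_{20\,\mathrm{fps}} = 50\ \mathrm{ms}, \quad \Delta t_{10\,\mathrm{fps}} = 100\ \mathrm{ms}, \quad \Delta t_{5\,\mathrm{fps}} = 200\ \mathrm{ms}
$$

Because the exposure time is fixed, the blur in each frame is identical across the three settings; only the time available for feature extraction or direct alignment changes.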

3. Experimental Results

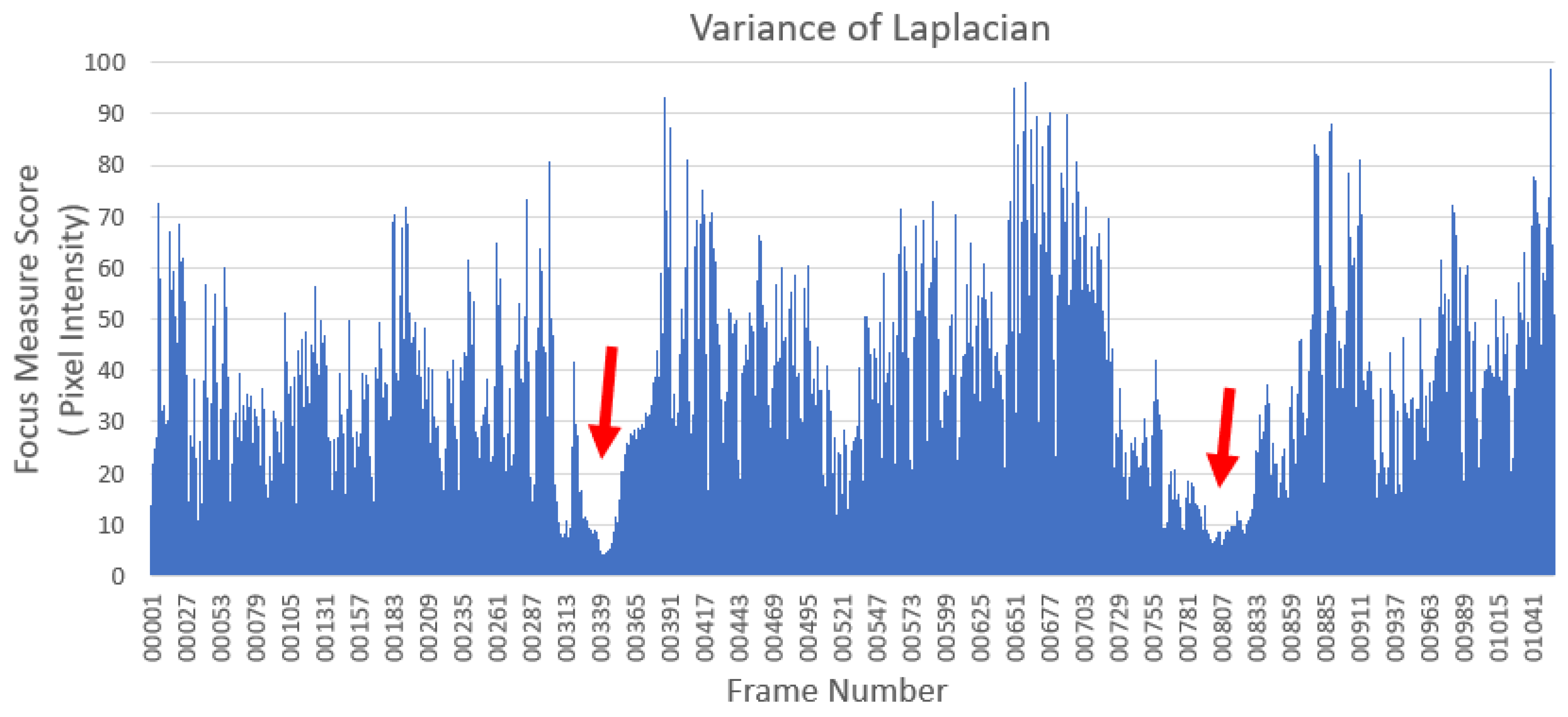

A large number of focus measure operators are used for motion blur analysis [42]. The dataset in our study was analyzed with the LAP4 method, one of the most suitable operators for real-time applications. Laplacian-based operators measure the amount of edges present in an image through the second derivative (the Laplacian). Because they rely on second derivatives, Laplacian-based operators are very sensitive to noise [43]. The Variance of Laplacian analysis showed that the focus measure score (FMS) was relatively low during fast rotational movements.
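The noise sensitivity noted above can be checked with a short NumPy sketch, which is an illustration and not part of the study's pipeline. A linear intensity ramp has zero Laplacian in its interior and therefore FMS = 0, yet adding independent Gaussian noise with standard deviation σ raises the score to roughly 20σ², because the squared coefficients of the 4-neighbour Laplacian kernel sum to 4·1² + (−4)² = 20. With σ = 5 this is about 500, far above a threshold of 10, although the scene contains no additional edges. `noise_sensitivity_demo` is a hypothetical helper.

```python
import numpy as np


def variance_of_laplacian(gray: np.ndarray) -> float:
    """LAP4 focus measure over interior pixels (4-neighbour Laplacian)."""
    g = gray.astype(float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def noise_sensitivity_demo(sigma: float = 5.0, size: int = 64, seed: int = 0):
    """Return (FMS of clean ramp, FMS of noisy ramp, theoretical 20 * sigma^2)."""
    ys, xs = np.mgrid[0:size, 0:size]
    ramp = 2.0 * xs + 1.0 * ys  # linear ramp: its Laplacian is exactly zero inside
    noisy = ramp + np.random.default_rng(seed).normal(0.0, sigma, ramp.shape)
    return variance_of_laplacian(ramp), variance_of_laplacian(noisy), 20.0 * sigma ** 2
```

In practice this means sensor noise in low-light frames can push a genuinely blurred frame above the threshold, so the threshold is only meaningful for a given camera and noise level.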

Tracking loss was found to occur in regions where the focus measure score is relatively low, as indicated by red arrows in Figure 5. Frames with a focus measure score below 10 were extracted from the entire dataset and combined in Figure 6. These frames were observed to be more affected by motion blur. Therefore, FMS = 10 was used as the motion blur threshold throughout the study. To eliminate or reduce motion blur, the previously selected deblurring techniques (PMP, SRN, SIUN, ECP, graph-based blind image deblurring, Lucy and Richardson, blind deconvolution and Wiener filter) were applied to the frames with FMS < 10, restoring the images affected by motion blur. The focus measure scores of the restored images were then recalculated with the LAP4 method, and most of the resulting FMS values were higher than the threshold of FMS = 10, which indicates the deblurring performance of the studied techniques. Finally, the framework was tested by evaluating the restored images in direct and feature-based vSLAM methods.
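The evaluation protocol in this paragraph (score every frame, restore only those with FMS < 10, re-score the restored frames) can be sketched as follows. This is a hedged illustration: `laplacian_sharpen` is a hypothetical stand-in for any of the studied deblurring methods (it is plain Laplacian sharpening, not PMP, ECP, Wiener or any other method evaluated here), and the average ΔFMS is assumed to be the mean of FMS_after - FMS_before over the restored frames.

```python
from typing import Callable, Dict, List, Sequence

import numpy as np


def variance_of_laplacian(gray: np.ndarray) -> float:
    """LAP4 focus measure over interior pixels (4-neighbour Laplacian)."""
    g = gray.astype(float)
    lap = g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:] - 4.0 * g[1:-1, 1:-1]
    return float(lap.var())


def laplacian_sharpen(gray: np.ndarray, amount: float = 1.0) -> np.ndarray:
    """Hypothetical stand-in deblurrer: subtract the Laplacian to boost high frequencies."""
    g = gray.astype(float)
    p = np.pad(g, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * p[1:-1, 1:-1]
    return g - amount * lap


def evaluate_restoration(frames: Sequence[np.ndarray],
                         deblur: Callable[[np.ndarray], np.ndarray],
                         threshold: float = 10.0) -> Dict[str, object]:
    """Score all frames, restore those below `threshold`, and re-score the restored ones."""
    records: List[tuple] = []  # (frame index, FMS before, FMS after)
    for idx, frame in enumerate(frames):
        before = variance_of_laplacian(frame)
        if before < threshold:
            after = variance_of_laplacian(deblur(frame))
            records.append((idx, before, after))
    recovered = sum(1 for _, _, after in records if after >= threshold)
    deltas = [after - before for _, before, after in records]
    mean_delta = float(np.mean(deltas)) if deltas else 0.0
    return {"restored": records, "recovered": recovered, "mean_delta_fms": mean_delta}
```

Swapping `laplacian_sharpen` for a real restoration function would yield, per method, the count of frames lifted above the threshold and an average ΔFMS comparable in spirit to the values reported in Table 1.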

Changes in the restored images were measured with two metrics, Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity (SSIM), which compare each restored image with its motion-blurred counterpart in the dataset. These metrics are computed for the restored image set, i.e., the frames with a focus measure score below 10.

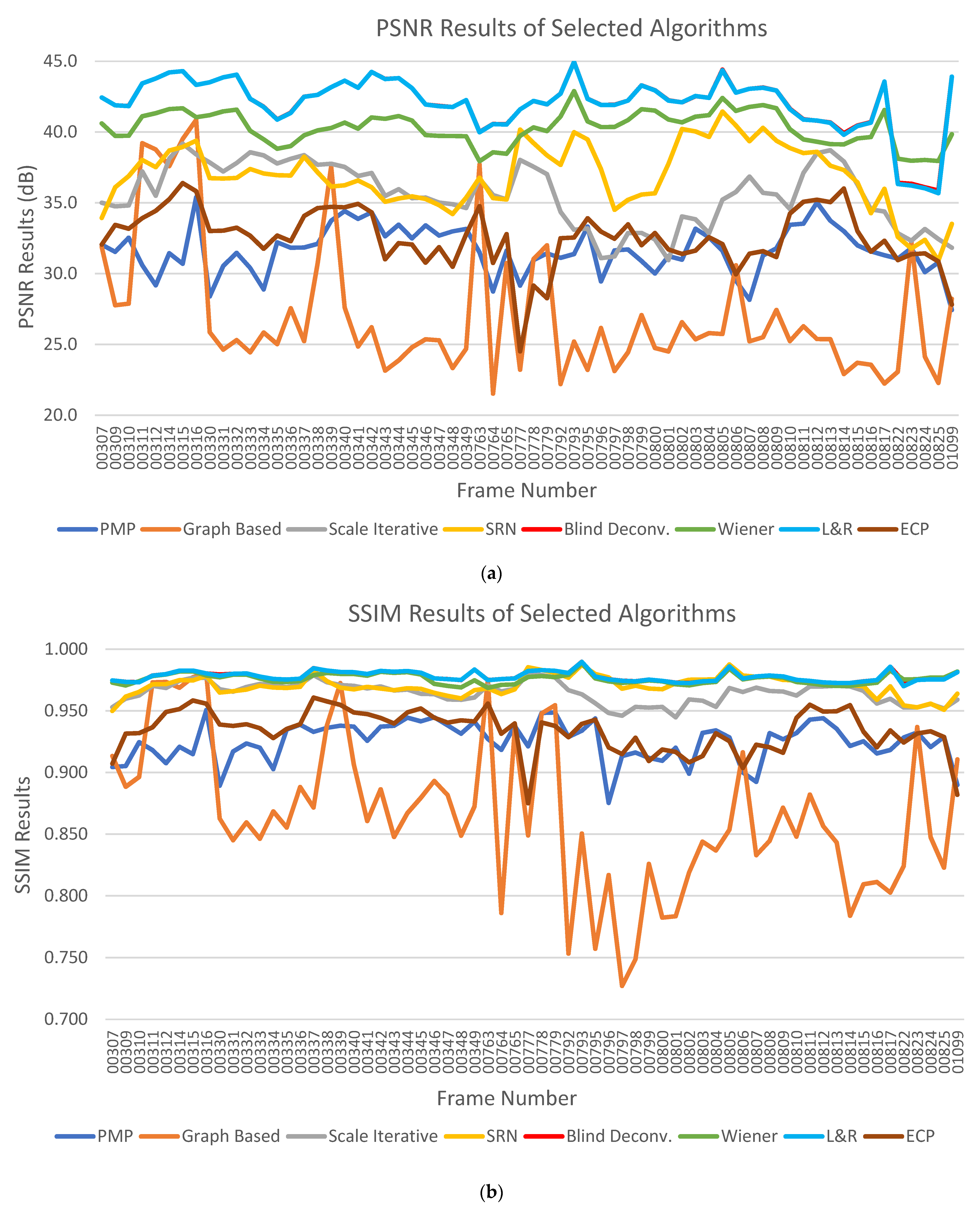

From 1 to 10 pixels, a 360-degree full-circle scanning was performed, and the most suitable point spread function was chosen for Wiener filter, Lucy and Richardson and blind deconvolution algorithms. In addition, the PSNR and SSIM values of each method are given on the graph in

Figure 7. The improvement in focus measure scores of restored images is shown in

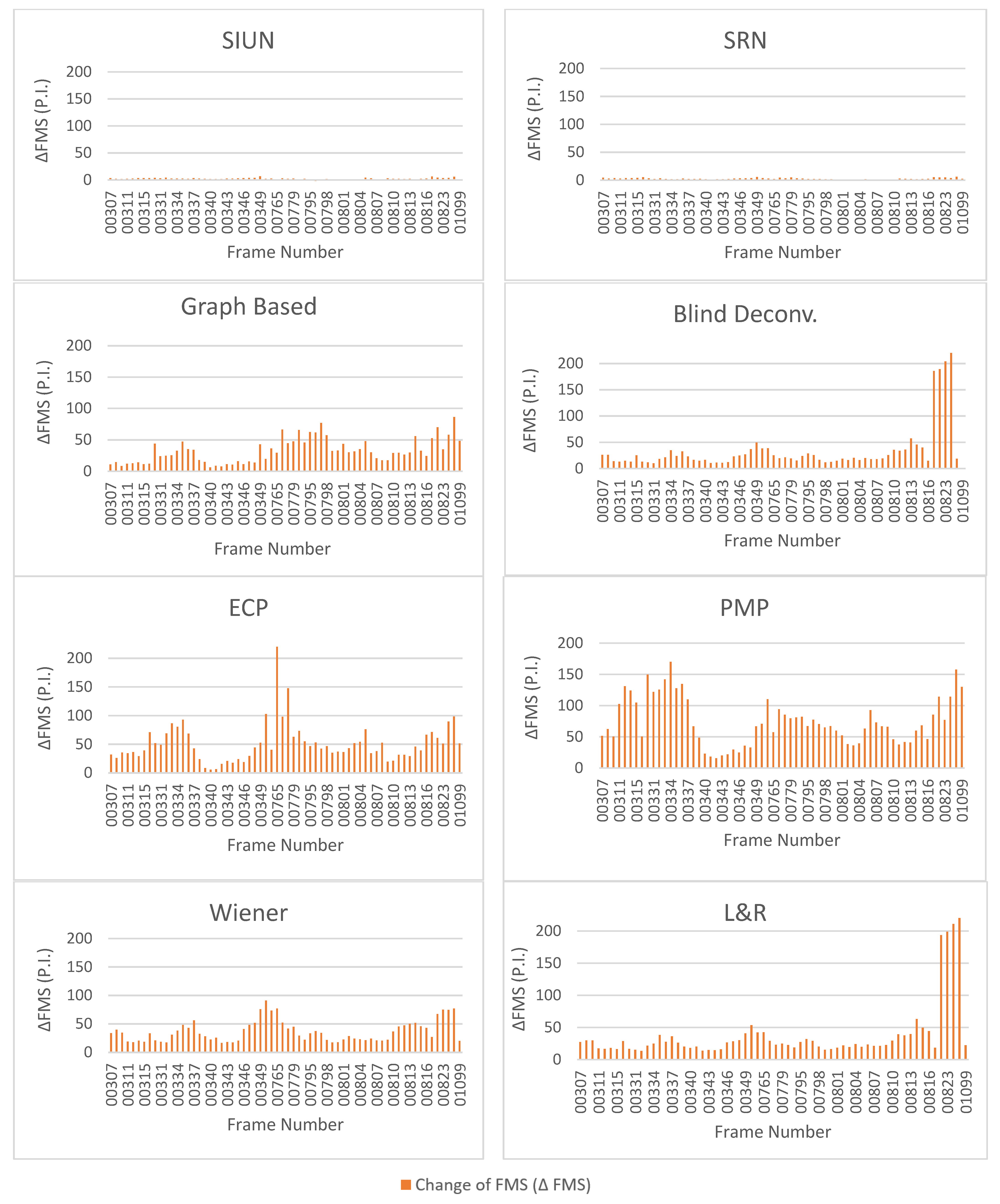

Figure 8. An increment in focus measure score was achieved by using the related methods.
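The PSF scan described above (lengths from 1 to 10 pixels, directions over a full circle) amounts to a grid search. The study does not state the criterion used to pick the most suitable PSF, so the sketch below takes it as a parameter `score`; by default it uses the LAP4 score of the Wiener-restored frame, which is purely an assumption for illustration. `motion_psf`, `wiener_deconvolve` and `scan_psf` are hypothetical helpers, and the Wiener restoration assumes circular boundaries.

```python
from typing import Callable, Iterable, Optional, Tuple

import numpy as np


def motion_psf(length: int, angle_deg: float) -> np.ndarray:
    """Hypothetical linear-motion PSF: a normalized line of `length` pixels at `angle_deg`."""
    size = length if length % 2 == 1 else length + 1
    c = size // 2
    psf = np.zeros((size, size))
    theta = np.deg2rad(angle_deg)
    half = (length - 1) / 2.0
    for t in np.linspace(-half, half, num=2 * length):
        psf[int(np.rint(c - t * np.sin(theta))), int(np.rint(c + t * np.cos(theta)))] = 1.0
    return psf / psf.sum()


def psf2otf(psf: np.ndarray, shape: Tuple[int, int]) -> np.ndarray:
    """Zero-pad the PSF to `shape`, center it at (0, 0) and take the 2D FFT."""
    padded = np.zeros(shape)
    kh, kw = psf.shape
    padded[:kh, :kw] = psf
    return np.fft.fft2(np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1)))


def wiener_deconvolve(blurred: np.ndarray, psf: np.ndarray, k: float) -> np.ndarray:
    """Non-blind Wiener filter under a circular-boundary assumption."""
    H = psf2otf(psf, blurred.shape)
    return np.real(np.fft.ifft2(np.conj(H) / (np.abs(H) ** 2 + k) * np.fft.fft2(blurred)))


def variance_of_laplacian(gray: np.ndarray) -> float:
    """LAP4 focus measure over interior pixels."""
    g = gray.astype(float)
    lap = g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:] - 4.0 * g[1:-1, 1:-1]
    return float(lap.var())


def scan_psf(blurred: np.ndarray,
             lengths: Iterable[int] = range(1, 11),
             angles: Iterable[float] = range(0, 360),
             k: float = 1e-2,
             score: Optional[Callable[[np.ndarray], float]] = None) -> Tuple[int, float, float]:
    """Grid-search motion PSFs; return (length, angle, score) of the best-scoring restoration."""
    score = variance_of_laplacian if score is None else score  # assumed criterion
    angles = list(angles)  # allow generators to be reused for every length
    best = (0, 0.0, -np.inf)
    for length in lengths:
        for angle in angles:
            s = score(wiener_deconvolve(blurred, motion_psf(length, angle), k))
            if s > best[2]:  # strict '>' keeps the first of several equivalent PSFs
                best = (length, float(angle), float(s))
    return best
```

A caveat of the default criterion: maximizing a focus measure rewards any added high-frequency content, including amplified noise and ringing from a small `k`, so a practical scan would likely need a regularized score. Note also that a line PSF at angle θ and θ + 180 degrees is the same kernel, so half of a full-circle scan is redundant for this PSF model.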

3.1. PSNR and SSIM Results

PSNR and SSIM, two well-known image quality metrics, are used in the rest of the study. PSNR is the ratio, expressed in decibels, between the maximum possible power of a signal (the squared peak pixel value) and the power of the error between two images (their mean squared error); here it is computed between each restored image and its blurred input. SSIM measures the similarity in luminance, contrast and structure between the blurred input image and its restored version. An SSIM value close to one indicates that the structural information of the restored image is very similar to that of the original blurred image, and SSIM values close to unity are desired. PSNR and SSIM analyses were carried out for the selected deblurring methods and are presented in Figure 7. In these analyses, blind deconvolution and Lucy and Richardson (L&R) provided similar performance, and according to the experimental measurements, these two algorithms gave the best improvement in the restored images.
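The two metrics can be sketched in NumPy as follows. PSNR follows the standard definition 10·log10(MAX²/MSE) with MAX = 255 for 8-bit images. The SSIM here is a simplified, single-window (global) version of the index of Wang et al., with the usual constants K1 = 0.01 and K2 = 0.03; the standard SSIM averages the same expression over local Gaussian windows, as done for example by `skimage.metrics.structural_similarity`, so values from this sketch will differ from library implementations. As in the study, the reference passed to both functions would be the blurred input frame.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    mse = np.mean((reference.astype(float) - test.astype(float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


def global_ssim(x: np.ndarray, y: np.ndarray, peak: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """Single-window SSIM: luminance, contrast and structure terms over the whole image."""
    x = x.astype(float)
    y = y.astype(float)
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2  # stabilizing constants
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```

Since both metrics here compare against the blurred input rather than a sharp ground truth, a high value means the restoration changed the frame little; this is consistent with the later observation that tracking performance does not follow PSNR and SSIM directly.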

3.2. Focus Measure Score Analysis

The changes in the focus measure score for each deblurring method are given in Figure 8. Ranked by focus measure score, the most successful algorithms are PMP, ECP, Wiener, L&R, BD, Graph-Based, SRN and SIUN, in that order (Table 1). The gain in focus measure score is lowest for the SIUN and SRN algorithms; the other algorithms increased the FMS value three times more than the SIUN and SRN techniques.

The tracking performance of direct and feature-based vSLAM algorithms was evaluated on datasets in which the blurred frames were replaced with the restored frames produced by each motion-deblurring algorithm. The restored datasets were processed at 5, 10 and 20 fps. The performances of the state-of-the-art ORB-SLAM2 [17] and DSO [18] algorithms are given in Table 2 and Table 3, respectively. A tracking score parameter was defined as the number of processing speeds at which a vSLAM algorithm tracked successfully. A tick symbol denotes a successful result, and a cross symbol denotes a failure.

The average change in FMS, ΔFMS, for the selected motion-deblurring algorithms is presented in Table 1. The PMP method was observed to perform better in both rapid rotational and forward movement. Although the ECP method has a relatively high average ΔFMS value, it performs worse, especially at sharp corners, as can be inferred from the ΔFMS scores in Figure 8. The Wiener and L&R methods also have relatively high average ΔFMS values compared with the other deblurring methods (BD, GB, SIUN, SRN). An important remark from the table is that although the Wiener and L&R methods have lower ΔFMS than the ECP method, both perform better during rapid rotational motion, as can be seen by comparing the results in Figure 8.

As Figure 7 and Table 1 show, the SSIM curve of the GB method fluctuates and has the lowest values, which implies that the images restored by the GB algorithm do not structurally match the original blurry images. For that reason, the GB method may not be a suitable candidate for the deblurring process in vSLAM algorithms.

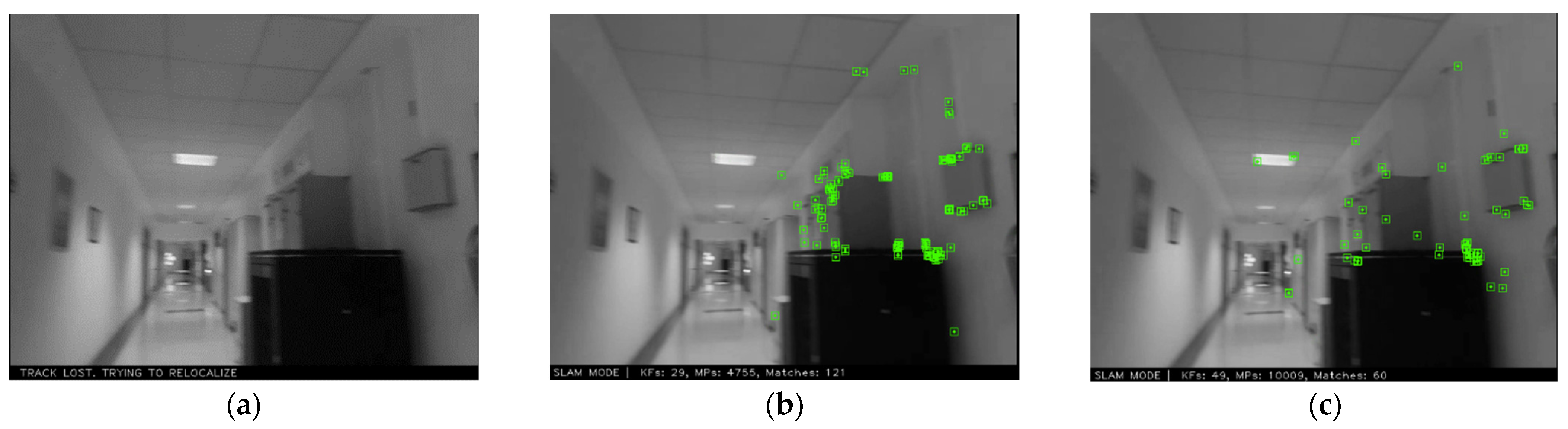

The tracking loss performance of the ORB-SLAM2 algorithm was investigated experimentally for the PMP and L&R deblurring methods. Sample frames for the different methods at the specified frame rates are shown in Figure 9. Compared with the original ORB-SLAM2 results (without any deblurring), successful tracking was achieved with PMP at a processing speed of 20 fps and with L&R at 5 fps (see Figure 9).

4. Discussion

Today, robots and unmanned vehicles can be used in many areas, and the use of micro/nano-size UAVs is planned to increase in the near future. Localization and mapping are essential for unmanned vehicles to perform the expected operations. GPS cannot be used by micro/nano-UAVs because its meter-level error is hundreds of times greater than the size of these platforms, so the required localization precision cannot be achieved. In addition, micro/nanoscale unmanned vehicles and robots are also expected to operate indoors. Their payload, however, is limited to compact, lightweight, low-cost and easily calibrated monocular RGB cameras, and a gimbal cannot be used due to weight and size limitations. Visual SLAM (vSLAM) methods are the preferred way to localize systems equipped with monocular RGB cameras, but the fast rotational movements of UAVs during operations may cause motion blur.

Previous studies mostly attempted to solve the tracking loss problem by integrating an IMU or by using more capable cameras, such as RGB-D or stereo cameras. However, such hardware-based solutions increase the dimensions and weight of the platforms, which hinders their use on nano platforms. Nano-, mini- and micro-UAVs can only carry compact, light, low-cost, easily calibrated monocular RGB cameras. Moreover, such platforms do not have sufficient battery capacity for onboard computation. Hence, image processing is performed at the control station on the transmitted video, which can thus be used both by the operator to control the platform and by the vSLAM application for mapping and localization.

Event cameras could be an alternative to monocular RGB cameras for vSLAM applications if they can be produced cheaply at nanoscale. Nevertheless, event cameras require an additional reconstruction phase to provide details of the environment, whereas RGB cameras provide detailed environmental data directly. Moreover, the output images of RGB cameras are similar to human vision, which makes it easier for operators to control the platform.

Our study makes two major contributions to the vSLAM literature. First, blur level detection was performed for the first time by including a focus measure operator in vSLAM algorithms; in this way, frames with high motion blur levels can be detected and handled before they cause tracking loss. Second, the algorithm continues to operate in both the mapping and tracking stages because only the frames with a high blur level are corrected.

Several important remarks can be drawn from the experimental data: (1) tracking loss can be prevented at some processing speeds when the original frames in the dataset are replaced with restored frames; (2) motion blur negatively affects feature extraction in feature-based methods, and restoring the blurred images with the specified deblurring methods improved the detection of the relevant features; (3) in direct methods, motion blur reduces triangulation performance, and deblurring methods can counteract this effect; (4) the tracking performance of vSLAM algorithms was experimentally found not to be directly proportional to PSNR and SSIM values, although it is directly related to the focus measure score.

A comparison of the proposed framework with existing frameworks in terms of initialization, tracking, mapping, blur detection and motion blur reduction/elimination is presented in Table 4. It shows that the proposed framework is more resistant to motion blur and is also applicable in the initialization stage.

5. Conclusions

In this study, a framework has been proposed to increase the tracking performance of SLAM algorithms by decreasing the rate of tracking loss caused by motion blur. A focus measure operator (Variance of Laplacian) is recommended for detecting motion blur, and deblurring methods (PMP, SRN, SIUN, ECP, graph-based blind image deblurring, Lucy and Richardson, blind deconvolution and Wiener filter) are applied to the frames with a focus measure score below 10. With the proposed method, the reduction/elimination of tracking loss through blur detection and prevention has been tested, and the success of the framework has been demonstrated in feature-based and direct vSLAM algorithms. Experiments showed that, compared with the original feature-based and direct methods, the proposed vSLAM framework is more resistant to motion blur and its mapping/tracking capability is more effective owing to blur prediction and prevention.

As future work, our study can be implemented for real-time applications. Detecting and reducing motion blur in real time using learning-based methods may be an innovative research topic for vSLAM algorithms. Our work focuses on reducing/eliminating tracking loss due to motion blur, which stops vSLAM algorithms from working, rather than on improving trajectory estimation. The effects of motion blur on trajectory estimation can be studied in future work using a dataset that contains blur at a level that does not cause tracking loss. Furthermore, the proposed method may be extended with other types of focus measure operators for specific environmental conditions.