Vegetation Extraction Using Visible-Bands from Openly Licensed Unmanned Aerial Vehicle Imagery

Abstract

1. Introduction

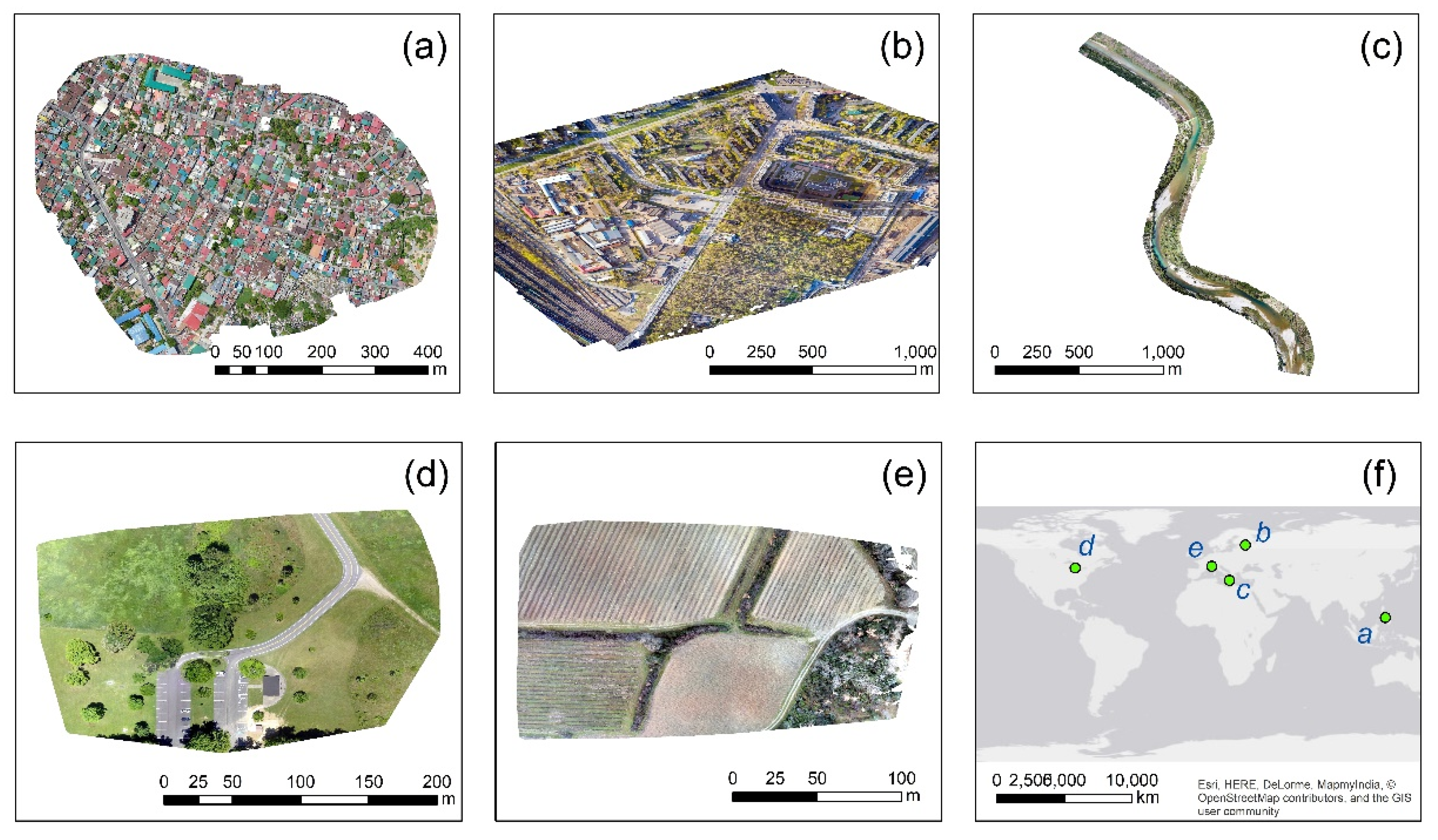

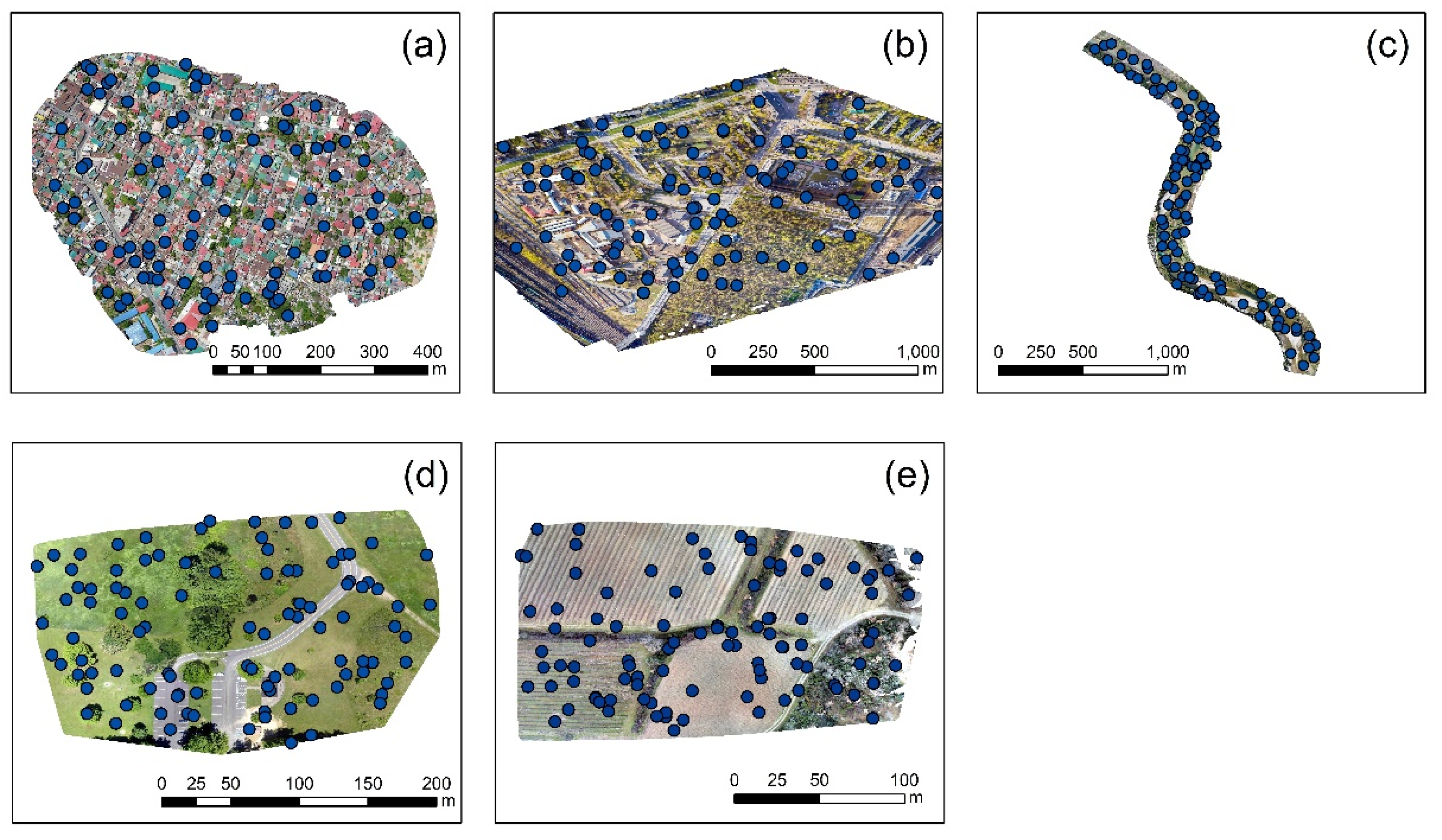

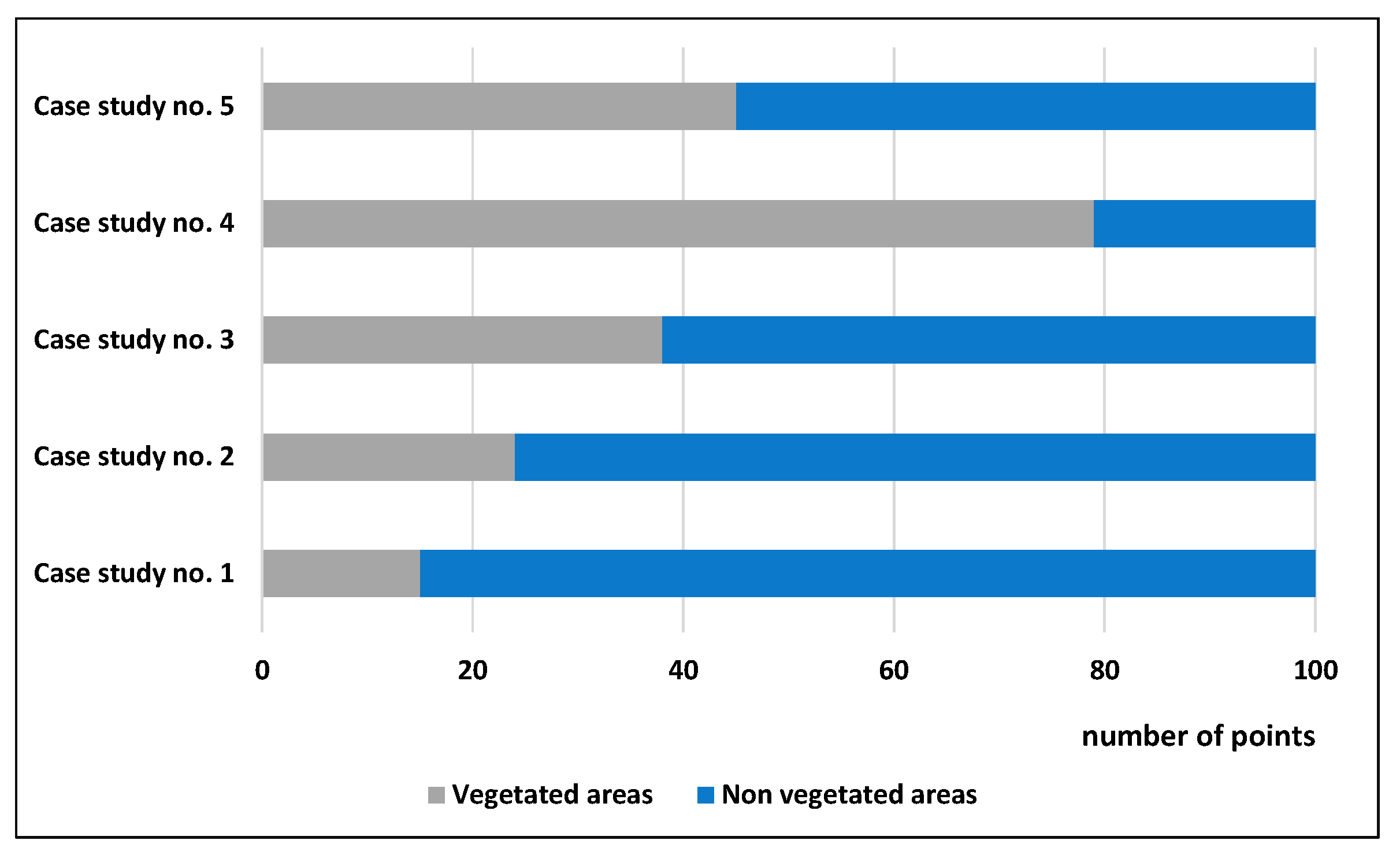

2. Materials and Methods

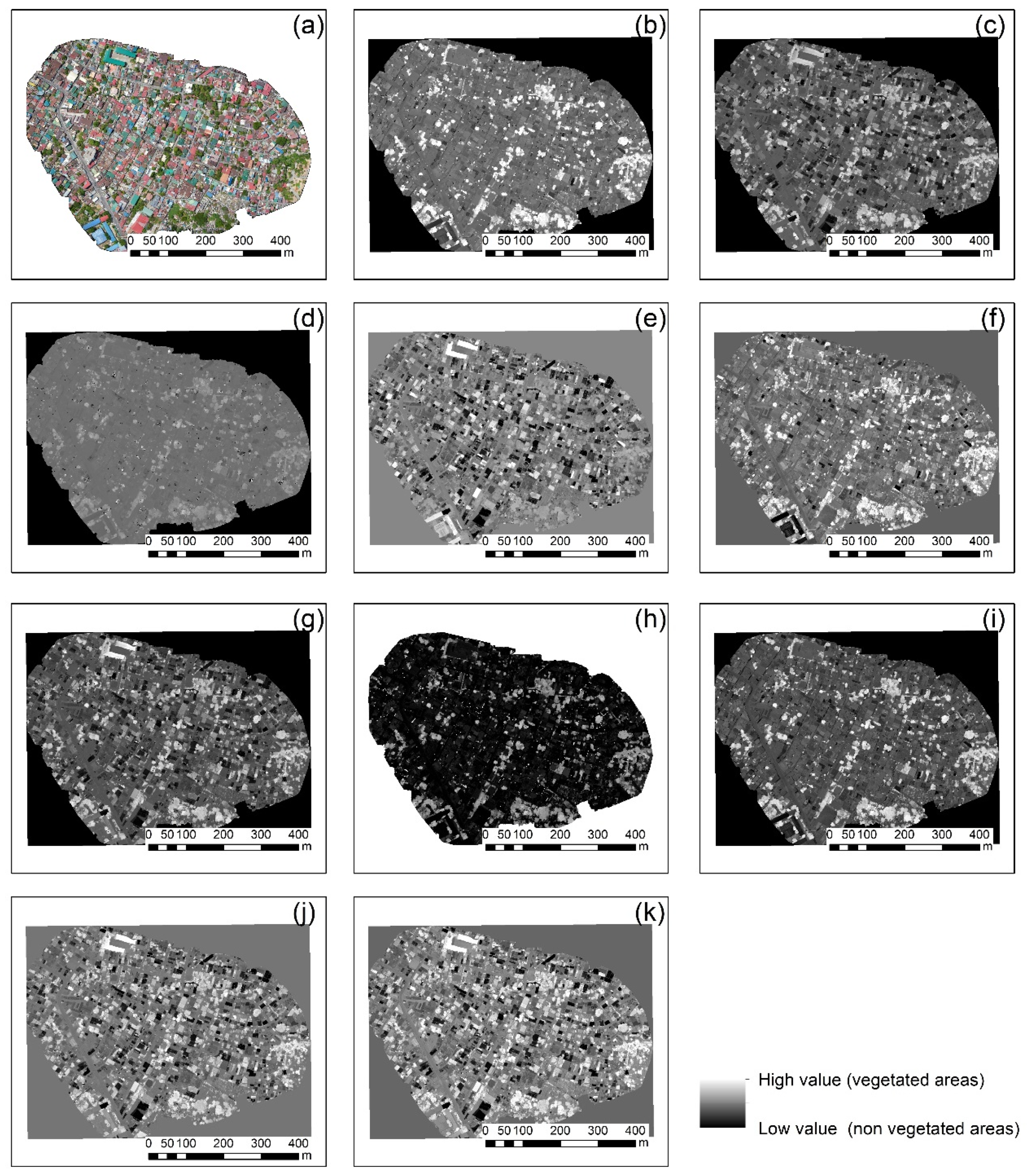

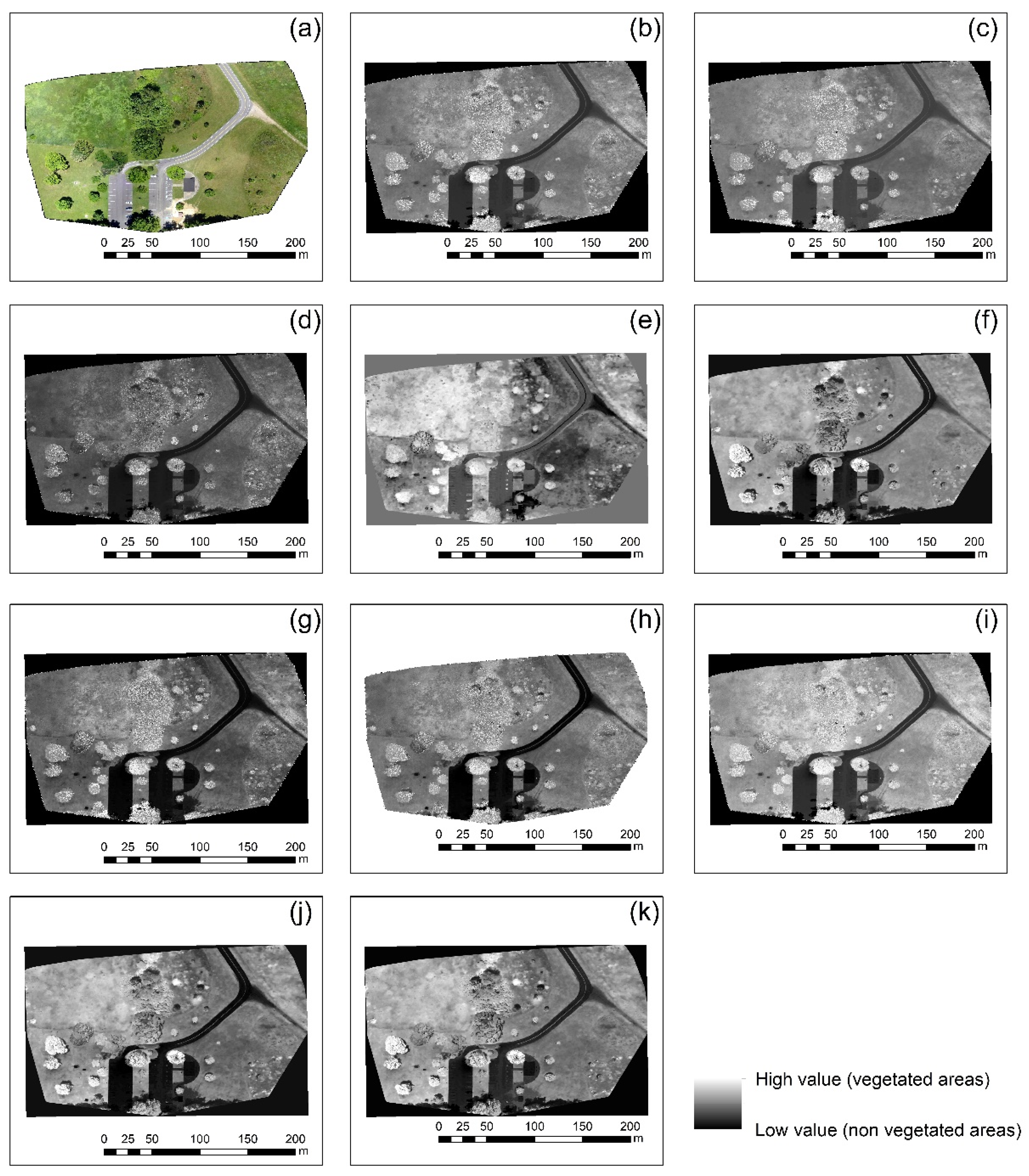

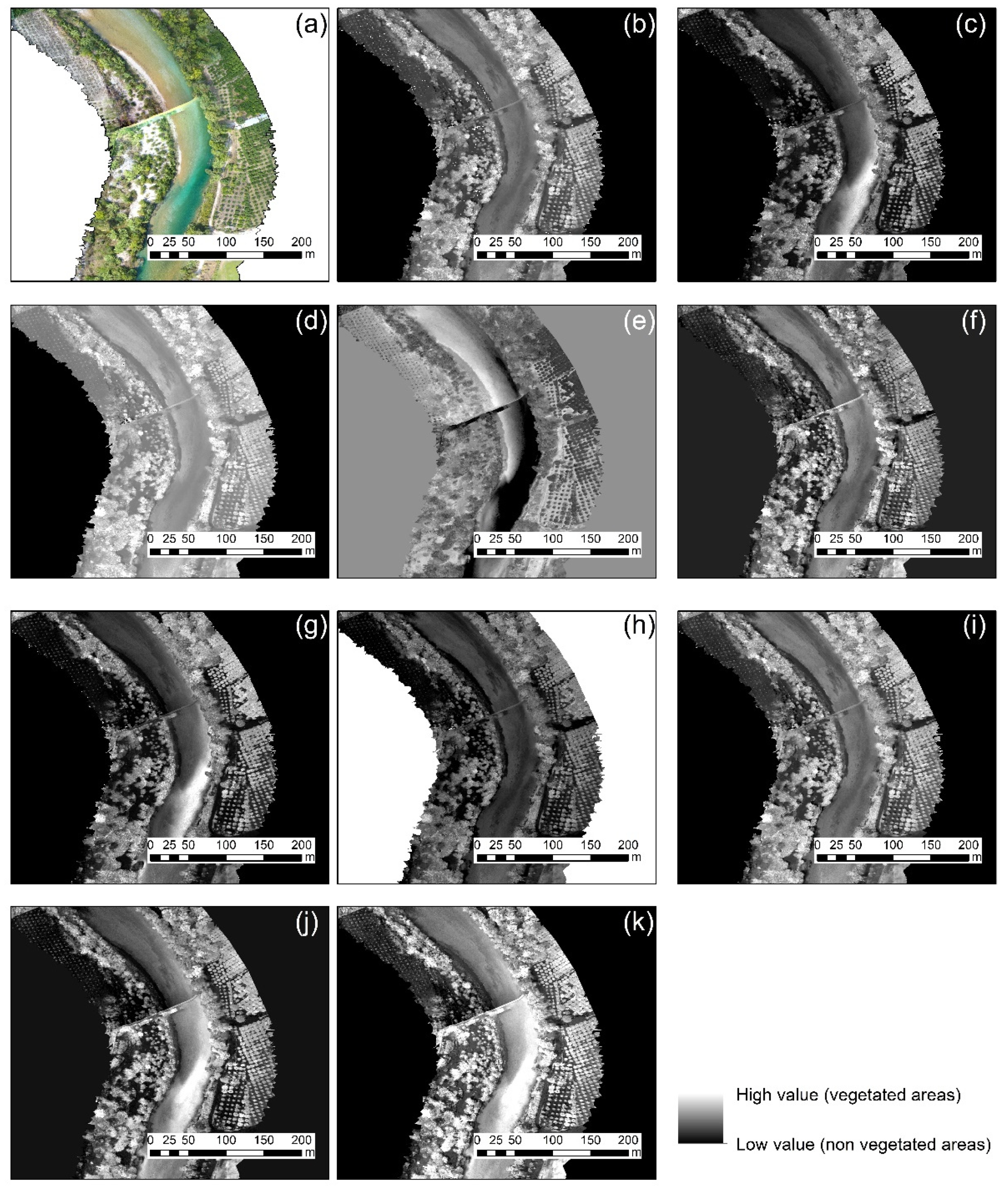

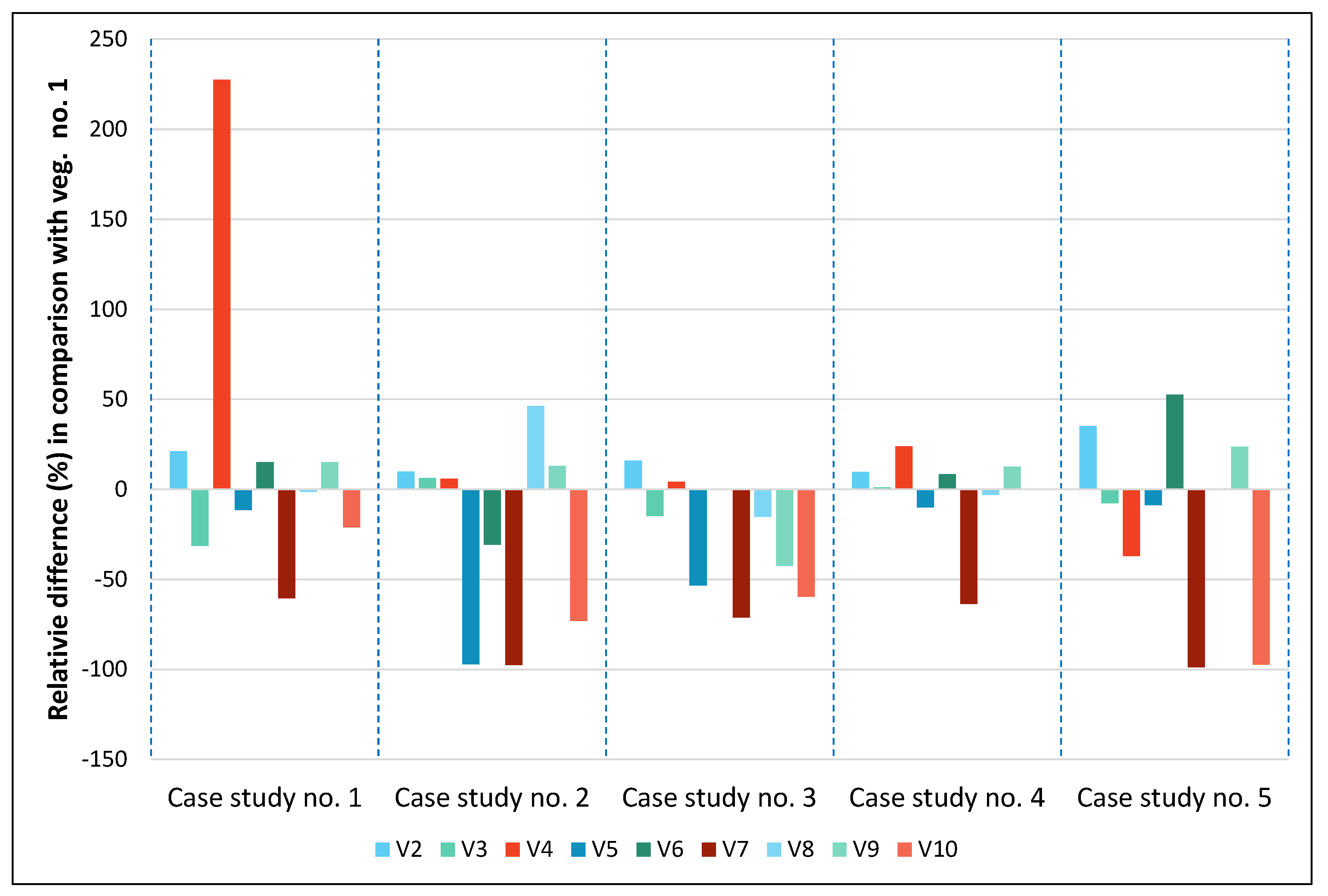

3. Results

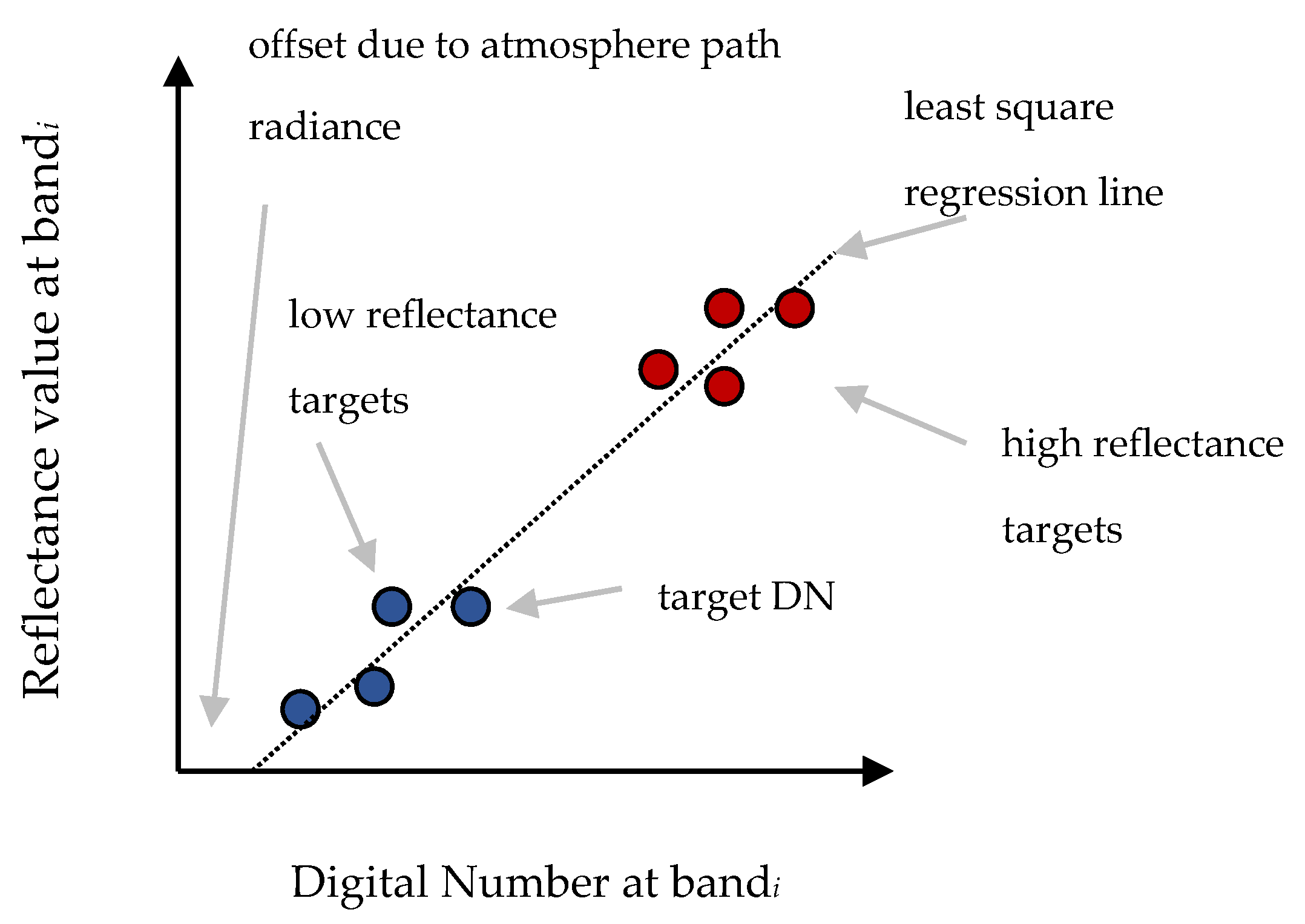

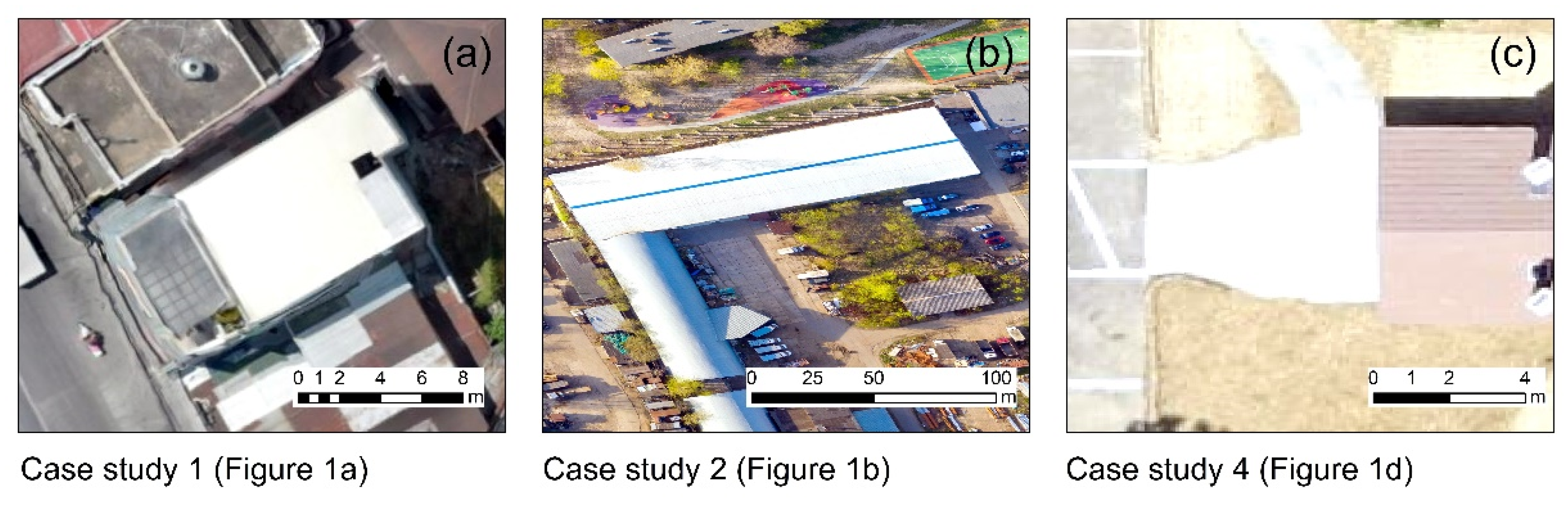

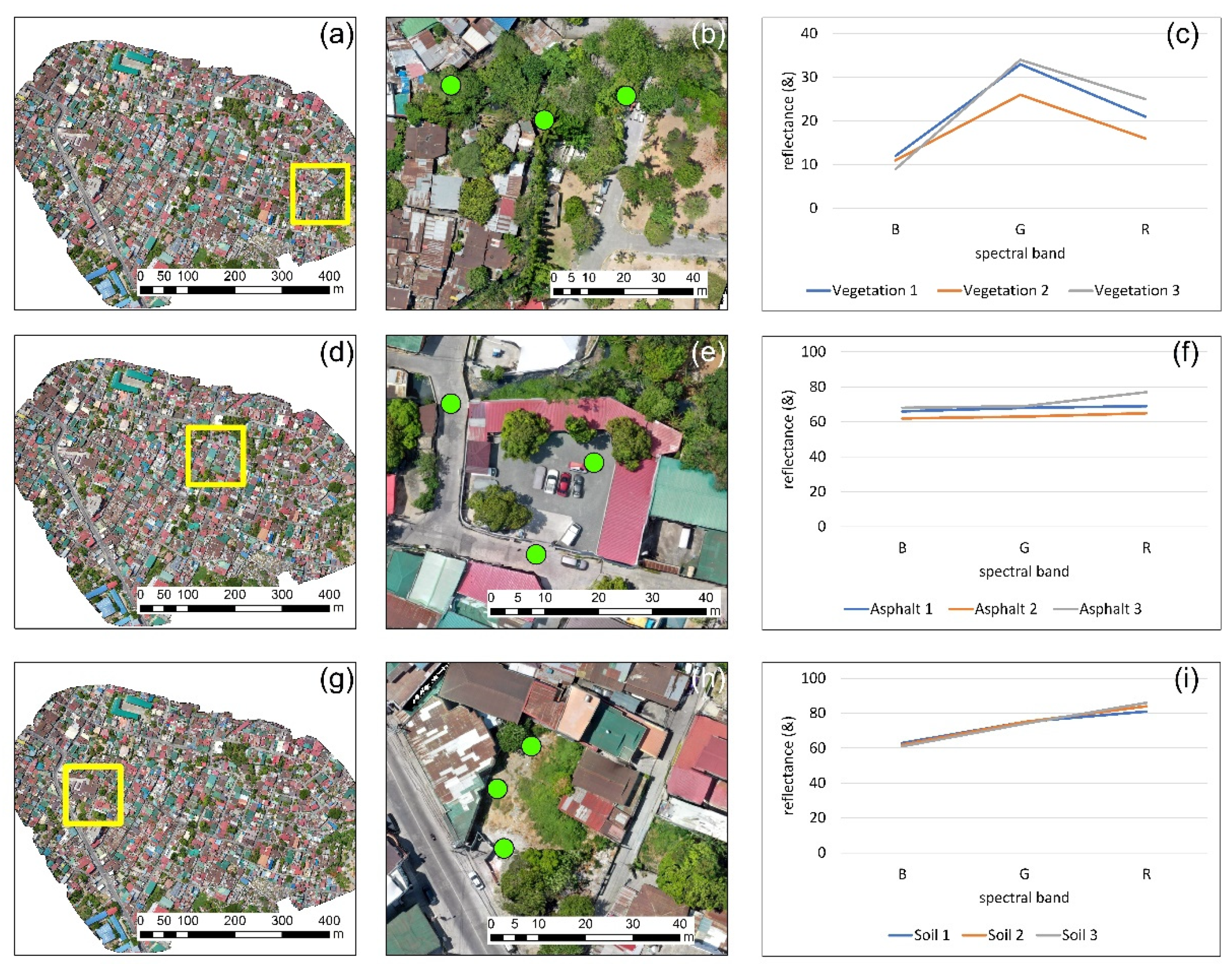

3.1. Radiometric Calibration of the Raw Orthophotos

3.2. Vegetation Indices

3.3. Statistics

4. Discussion

5. Conclusions

- Finding 1: The best-performing vegetation index across all case studies was the green leaf index (GLI), which exploits all three visible bands of RGB cameras. This index gave robust results in all of the different environments.

- Finding 2: Nevertheless, the performance of each index varied by case study, as expected. For each orthophoto, there was a particular visible-band index that highlighted the vegetated areas better than the others.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. García-Berná, J.A.; Ouhbi, S.; Benmouna, B.; García-Mateos, G.; Fernández-Alemán, J.L.; Molina-Martínez, J.M. Systematic Mapping Study on Remote Sensing in Agriculture. Appl. Sci. 2020, 10, 3456.
2. Pensieri, M.G.; Garau, M.; Barone, P.M. Drones as an Integral Part of Remote Sensing Technologies to Help Missing People. Drones 2020, 4, 15.
3. Feng, Q.; Yang, J.; Liu, Y.; Ou, C.; Zhu, D.; Niu, B.; Liu, J.; Li, B. Multi-Temporal Unmanned Aerial Vehicle Remote Sensing for Vegetable Mapping Using an Attention-Based Recurrent Convolutional Neural Network. Remote Sens. 2020, 12, 1668.
4. Pinton, D.; Canestrelli, A.; Fantuzzi, L. A UAV-Based Dye-Tracking Technique to Measure Surface Velocities over Tidal Channels and Salt Marshes. J. Mar. Sci. Eng. 2020, 8, 364.
5. Pajares, G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330.
6. Cummings, A.R.; McKee, A.; Kulkarni, K.; Markandey, N. The Rise of UAVs. Photogramm. Eng. Remote Sens. 2017, 83, 317–325.
7. Stöcker, C.; Bennett, R.; Nex, F.; Gerke, M.; Zevenbergen, J. Review of the Current State of UAV Regulations. Remote Sens. 2017, 9, 459.
8. Jiménez López, J.; Mulero-Pázmány, M. Drones for Conservation in Protected Areas: Present and Future. Drones 2019, 3, 10.
9. Hashemi-Beni, L.; Jones, J.; Thompson, G.; Johnson, C.; Gebrehiwot, A. Challenges and Opportunities for UAV-Based Digital Elevation Model Generation for Flood-Risk Management: A Case of Princeville, North Carolina. Sensors 2018, 18, 3843.
10. Yang, M.-D.; Tseng, H.-H.; Hsu, Y.-C.; Tsai, H.P. Semantic Segmentation Using Deep Learning with Vegetation Indices for Rice Lodging Identification in Multi-date UAV Visible Images. Remote Sens. 2020, 12, 633.
11. Marino, S.; Alvino, A. Agronomic Traits Analysis of Ten Winter Wheat Cultivars Clustered by UAV-Derived Vegetation Indices. Remote Sens. 2020, 12, 249.
12. Lima-Cueto, F.J.; Blanco-Sepúlveda, R.; Gómez-Moreno, M.L.; Galacho-Jiménez, F.B. Using Vegetation Indices and a UAV Imaging Platform to Quantify the Density of Vegetation Ground Cover in Olive Groves (Olea Europaea L.) in Southern Spain. Remote Sens. 2019, 11, 2564.
13. Yeom, J.; Jung, J.; Chang, A.; Ashapure, A.; Maeda, M.; Maeda, A.; Landivar, J. Comparison of Vegetation Indices Derived from UAV Data for Differentiation of Tillage Effects in Agriculture. Remote Sens. 2019, 11, 1548.
14. Hennessy, A.; Clarke, K.; Lewis, M. Hyperspectral Classification of Plants: A Review of Waveband Selection Generalisability. Remote Sens. 2020, 12, 113.
15. Agapiou, A.; Hadjimitsis, D.G.; Alexakis, D.D. Evaluation of Broadband and Narrowband Vegetation Indices for the Identification of Archaeological Crop Marks. Remote Sens. 2012, 4, 3892–3919.
16. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W.; Harlan, J.C. Monitoring the Vernal Advancements and Retrogradation (Greenwave Effect) of Natural Vegetation; NASA/GSFC Final Report; NASA: Greenbelt, MD, USA, 1974.
17. Xu, K.; Gong, Y.; Fang, S.; Wang, K.; Lin, Z.; Wang, F. Radiometric Calibration of UAV Remote Sensing Image with Spectral Angle Constraint. Remote Sens. 2019, 11, 1291.
18. Iqbal, F.; Lucieer, A.; Barry, K. Simplified radiometric calibration for UAS-mounted multispectral sensor. Eur. J. Remote Sens. 2018, 51, 301–313.
19. OpenAerialMap (OAM). Available online: https://openaerialmap.org (accessed on 19 May 2020).
20. Pompilio, L.; Marinangeli, L.; Amitrano, L.; Pacci, G.; D’andrea, S.; Iacullo, S.; Monaco, E. Application of the empirical line method (ELM) to calibrate the airborne Daedalus-CZCS scanner. Eur. J. Remote Sens. 2018, 51, 33–46.
21. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.
22. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65.
23. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.
24. Hunt, E.R.; Daughtry, C.S.T.; Eitel, J.U.H.; Long, D.S. Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 2011, 103, 1090–1099.
25. Gamon, J.A.; Surfus, J.S. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.
26. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
27. Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubuehler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.
28. Woebbecke, D.M.; Meyer, G.E.; Vonbargen, K.; Mortensen, D.A. Color indexes for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.
29. Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Kobe, Japan, 20–24 July 2003; pp. 1079–1083.
30. Agapiou, A.; Alexakis, D.D.; Hadjimitsis, D.G. Spectral sensitivity of ALOS, ASTER, IKONOS, LANDSAT and SPOT satellite imagery intended for the detection of archaeological crop marks. Int. J. Dig. Earth 2012, 7(5), 351–372.
31. Tran, T.-B.; Puissant, A.; Badariotti, D.; Weber, C. Optimizing Spatial Resolution of Imagery for Urban Form Detection—The Cases of France and Vietnam. Remote Sens. 2011, 3, 2128–2147.
32. Agapiou, A. Optimal Spatial Resolution for the Detection and Discrimination of Archaeological Proxies in Areas with Spectral Heterogeneity. Remote Sens. 2020, 12, 136.

| No. | Case Study | Location | UAV Camera Sensor | Named Resolution | Preview |
|---|---|---|---|---|---|
| 1 | Highly urbanized area | Taytay, Philippines | DJI Mavic 2 Pro | 6 cm | Figure 1a |
| 2 | Campus | St. Petersburg, Russia | DJI Mavic 2 Pro | 5 cm | Figure 1b |
| 3 | River-corridor | Arta, Greece | DJI FC6310 | 5 cm | Figure 1c |
| 4 | Picnic area | Ohio, USA | SONY DSC-WX220 | 6 cm | Figure 1d |
| 5 | Agricultural area | Nîmes, France | Parrot Anafi | 5 cm | Figure 1e |

| No. | Vegetation Index | Full Name | Equation | Ref. |
|---|---|---|---|---|
| 1 | NGRDI | Normalized green red difference index | (ρg − ρr)/(ρg + ρr) | [21] |
| 2 | GLI | Green leaf index | (2 * ρg − ρr − ρb)/(2 * ρg + ρr + ρb) | [22] |
| 3 | VARI | Visible atmospherically resistant index | (ρg − ρr)/(ρg + ρr − ρb) | [23] |
| 4 | TGI | Triangular greenness index | 0.5 * [(λr − λb)(ρr − ρg) − (λr − λg)(ρr − ρb)] | [24] |
| 5 | IRG | Red–green ratio index | ρr − ρg | [25] |
| 6 | RGBVI | Red–green–blue vegetation index | [(ρg * ρg) − (ρr * ρb)]/[(ρg * ρg) + (ρr * ρb)] | [26] |
| 7 | RGRI | Red–green ratio index | ρr/ρg | [27] |
| 8 | MGRVI | Modified green–red vegetation index | (ρg² − ρr²)/(ρg² + ρr²) | [26] |
| 9 | ExG | Excess green index | 2 * ρg − ρr − ρb | [28] |
| 10 | CIVE | Color index of vegetation | 0.441 * ρr − 0.881 * ρg + 0.385 * ρb + 18.787 | [29] |
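
The equations in the table above can be sketched in a few lines of Python. This is a minimal, per-pixel illustration rather than the workflow used in the study: the function name `visible_indices`, the helper `_ratio`, the zero-denominator handling, and the band-centre wavelengths used for TGI are all assumptions introduced here. The coefficients and signs follow the table as printed.

```python
import math

# Band-centre wavelengths (nm) needed by TGI. ASSUMPTION: typical values
# for a consumer RGB camera; they are not given in the table above.
LAMBDA_R, LAMBDA_G, LAMBDA_B = 670.0, 550.0, 480.0


def _ratio(num, den):
    """Return num / den, or NaN when the denominator is zero."""
    return num / den if den != 0 else math.nan


def visible_indices(r, g, b):
    """Compute the ten visible-band indices of the table for one pixel.

    r, g, b are the red, green and blue values of the pixel
    (reflectance or consistently scaled digital numbers).
    """
    return {
        "NGRDI": _ratio(g - r, g + r),
        "GLI": _ratio(2 * g - r - b, 2 * g + r + b),
        "VARI": _ratio(g - r, g + r - b),
        "TGI": 0.5 * ((LAMBDA_R - LAMBDA_B) * (r - g)
                      - (LAMBDA_R - LAMBDA_G) * (r - b)),
        "IRG": r - g,
        "RGBVI": _ratio(g * g - r * b, g * g + r * b),
        "RGRI": _ratio(r, g),
        "MGRVI": _ratio(g * g - r * r, g * g + r * r),
        "ExG": 2 * g - r - b,
        "CIVE": 0.441 * r - 0.881 * g + 0.385 * b + 18.787,
    }


# Hypothetical green-dominated pixel (values chosen for illustration only).
pixel = visible_indices(0.10, 0.20, 0.05)
print(round(pixel["GLI"], 3))  # 0.455
```

For a full orthophoto the same arithmetic would normally be applied to whole band arrays at once. The scalar form is used here only to keep the sketch free of external dependencies.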

| Case Study | V1 | V2 | V3 | V4 | V5 | V6 | V7 | V8 | V9 | V10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Case study No. 1 | 82.2 | 99.7 | 56.5 | 269.1 | 72.7 | 94.7 | 32.5 | 81.0 | 94.6 | 64.8 |
| Case study No. 2 | 78.7 | 86.6 | 83.8 | 83.4 | 2.2 | 54.5 | 1.9 | 115.3 | 89.0 | 21.2 |
| Case study No. 3 | 49.8 | 57.8 | 42.5 | 52.0 | 23.2 | 50.0 | 14.4 | 42.3 | 28.7 | 20.1 |
| Case study No. 4 | 80.7 | 88.5 | 81.6 | 100.0 | 72.5 | 87.5 | 29.3 | 78.1 | 90.9 | 80.4 |
| Case study No. 5 | 107.2 | 144.8 | 98.9 | 67.4 | 97.6 | 163.7 | 1.2 | 107.2 | 132.5 | 2.9 |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Agapiou, A. Vegetation Extraction Using Visible-Bands from Openly Licensed Unmanned Aerial Vehicle Imagery. Drones 2020, 4, 27. https://doi.org/10.3390/drones4020027