Drones Chasing Drones: Reinforcement Learning and Deep Search Area Proposal

Abstract

1. Introduction

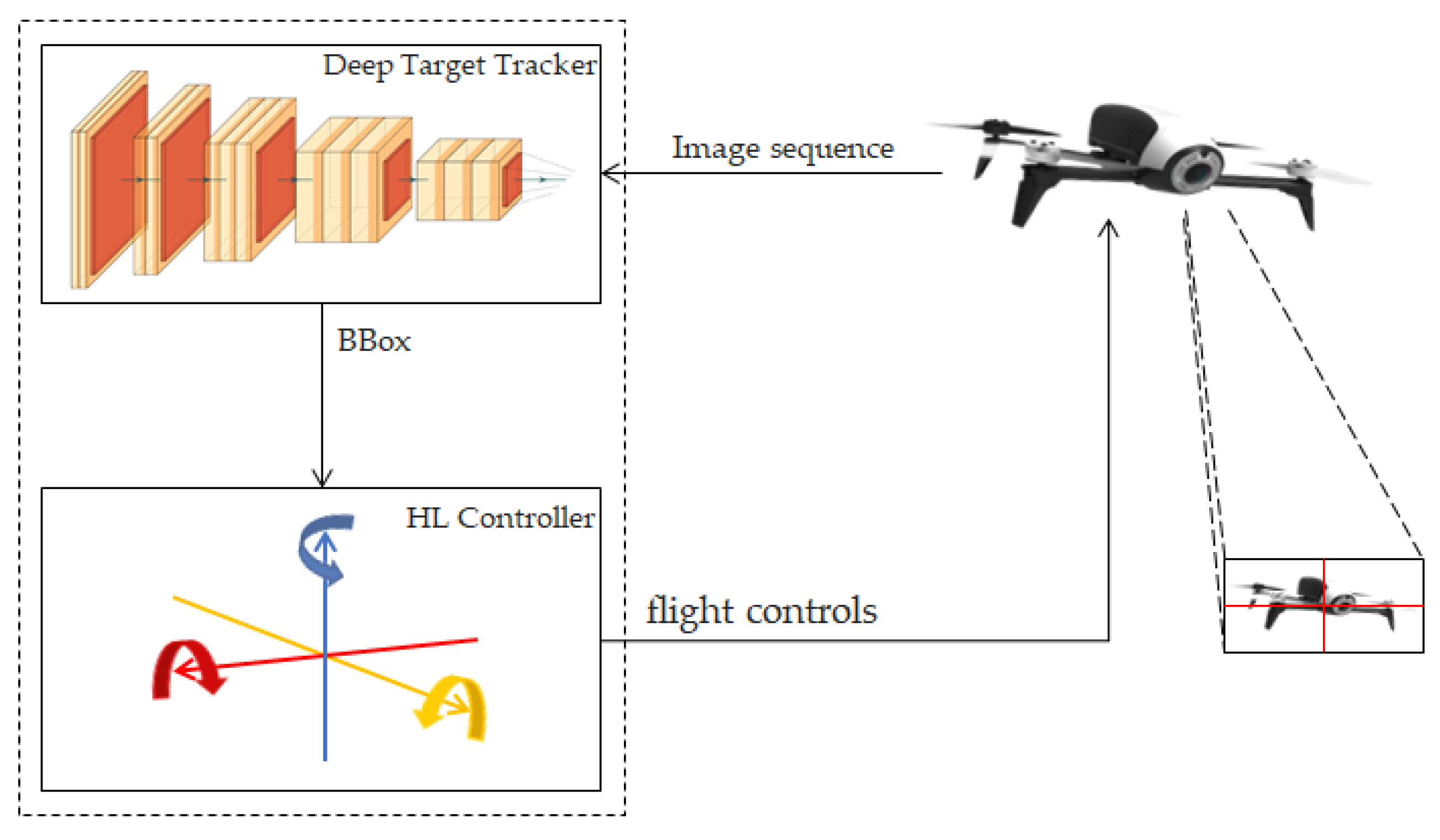

2. Proposed Framework

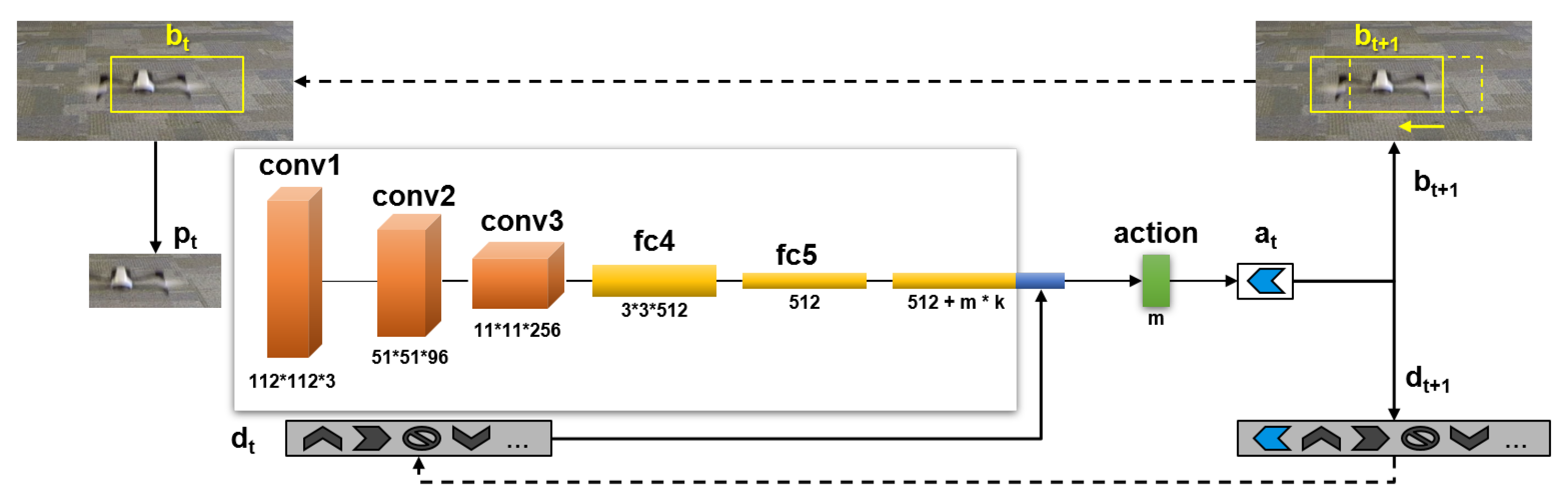

2.1. Reinforcement Learning for Tracking

2.1.1. Supervised Learning

2.1.2. Reinforcement Learning

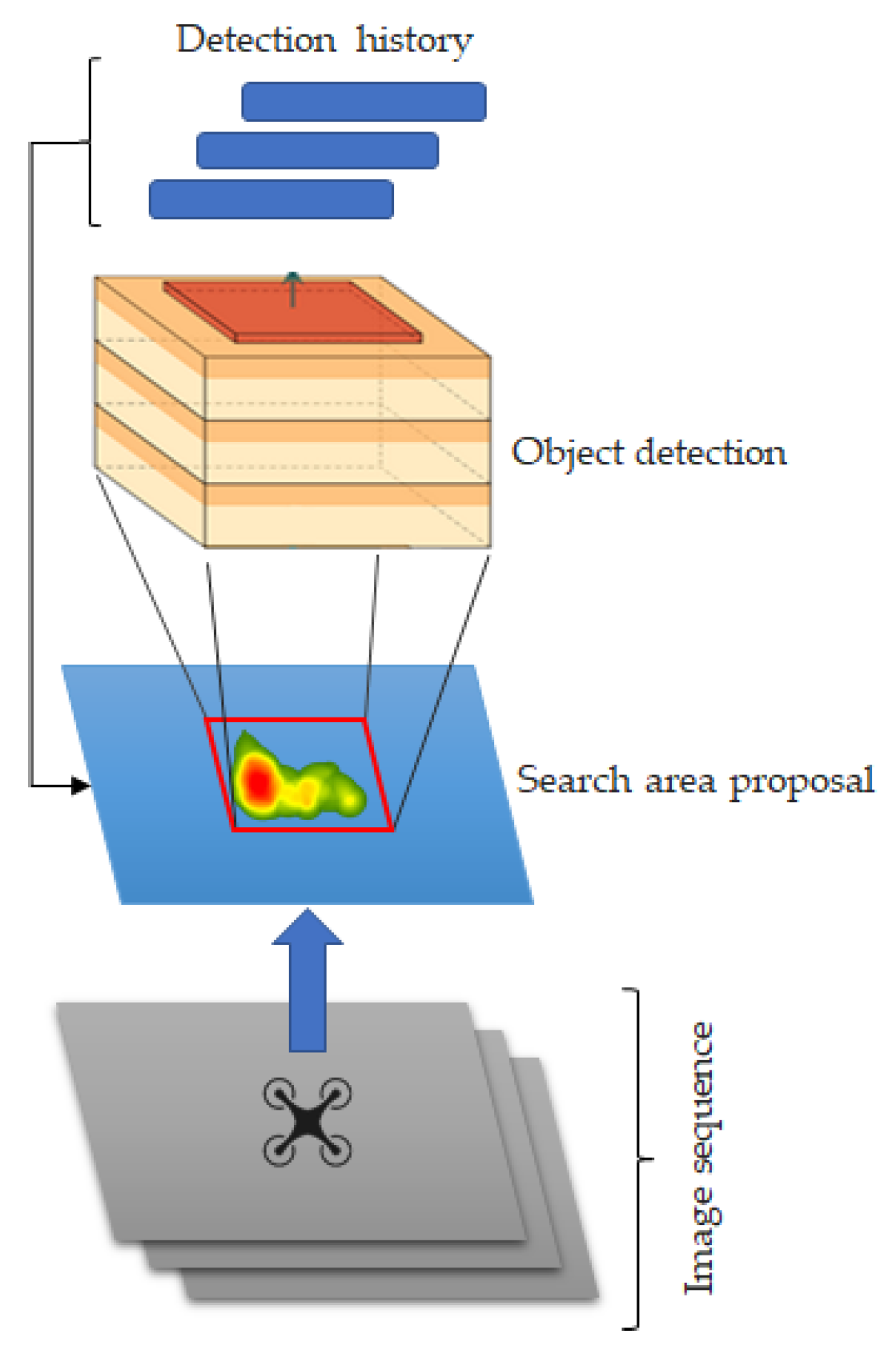

2.2. Deep Object Detection and Tracking

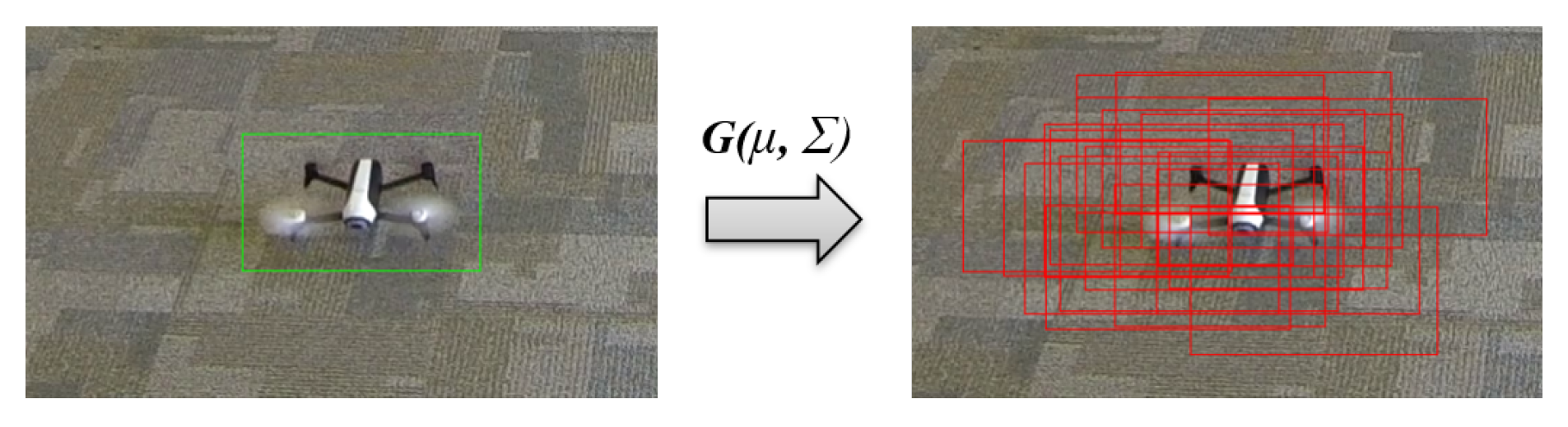

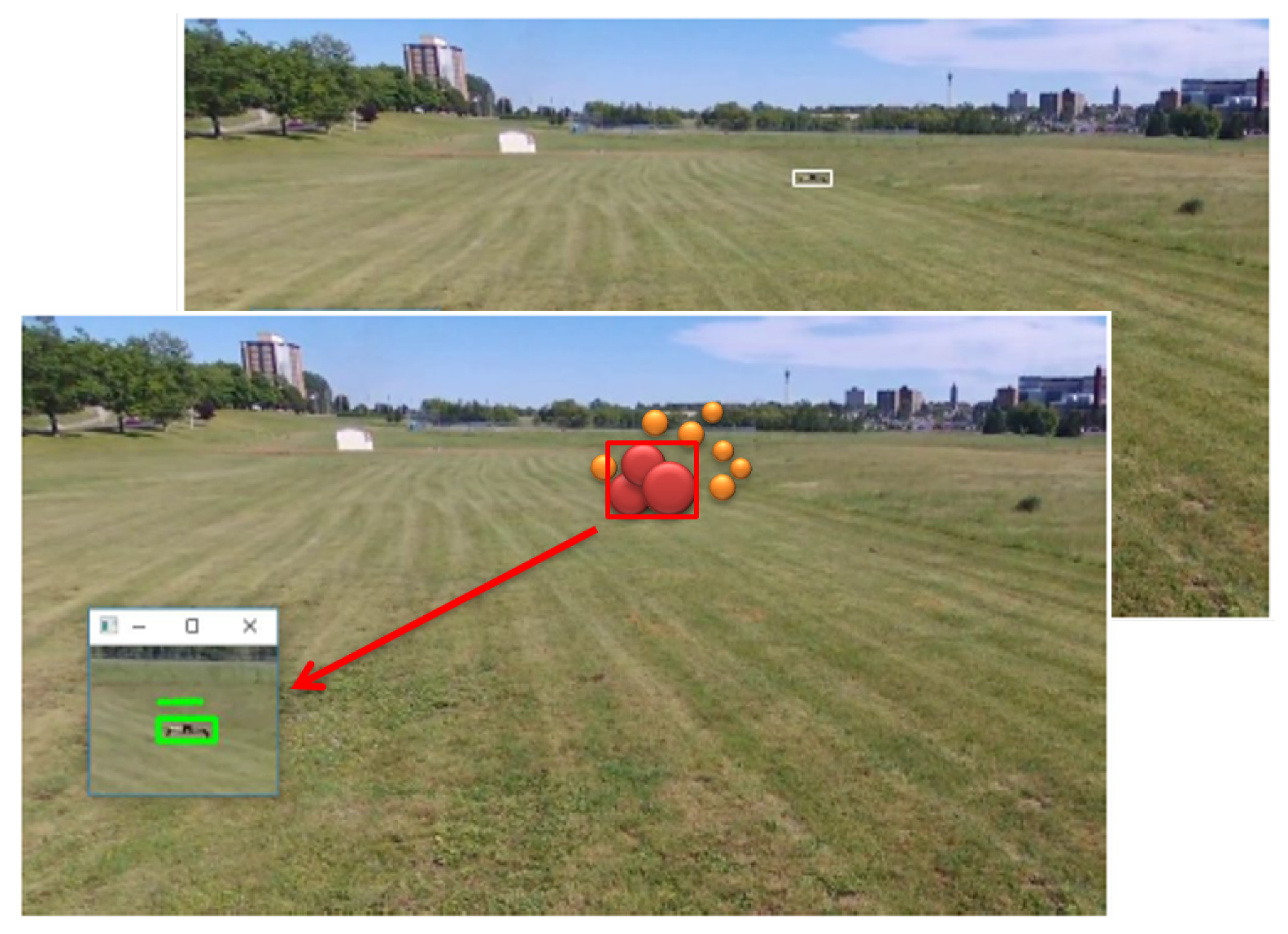

Prediction and Tracking Using a Search Area Proposal (SAP)

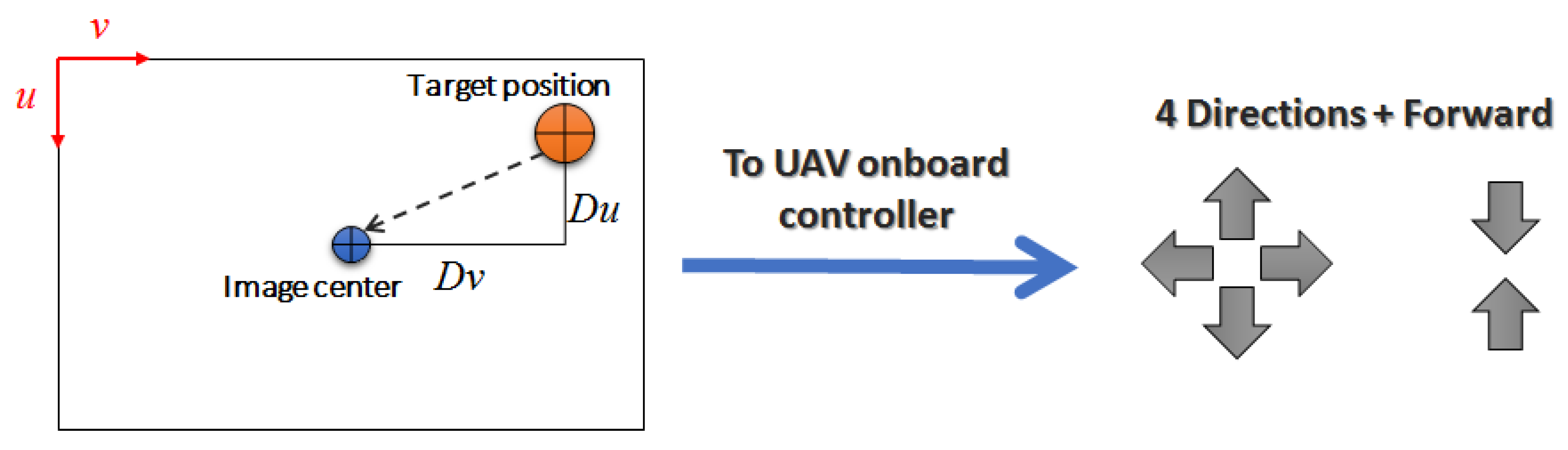

2.3. UAV Control

3. Datasets

3.1. Reinforcement Learning Dataset

3.2. Deep Object Detection and SAP Training Dataset

4. Experimental Results

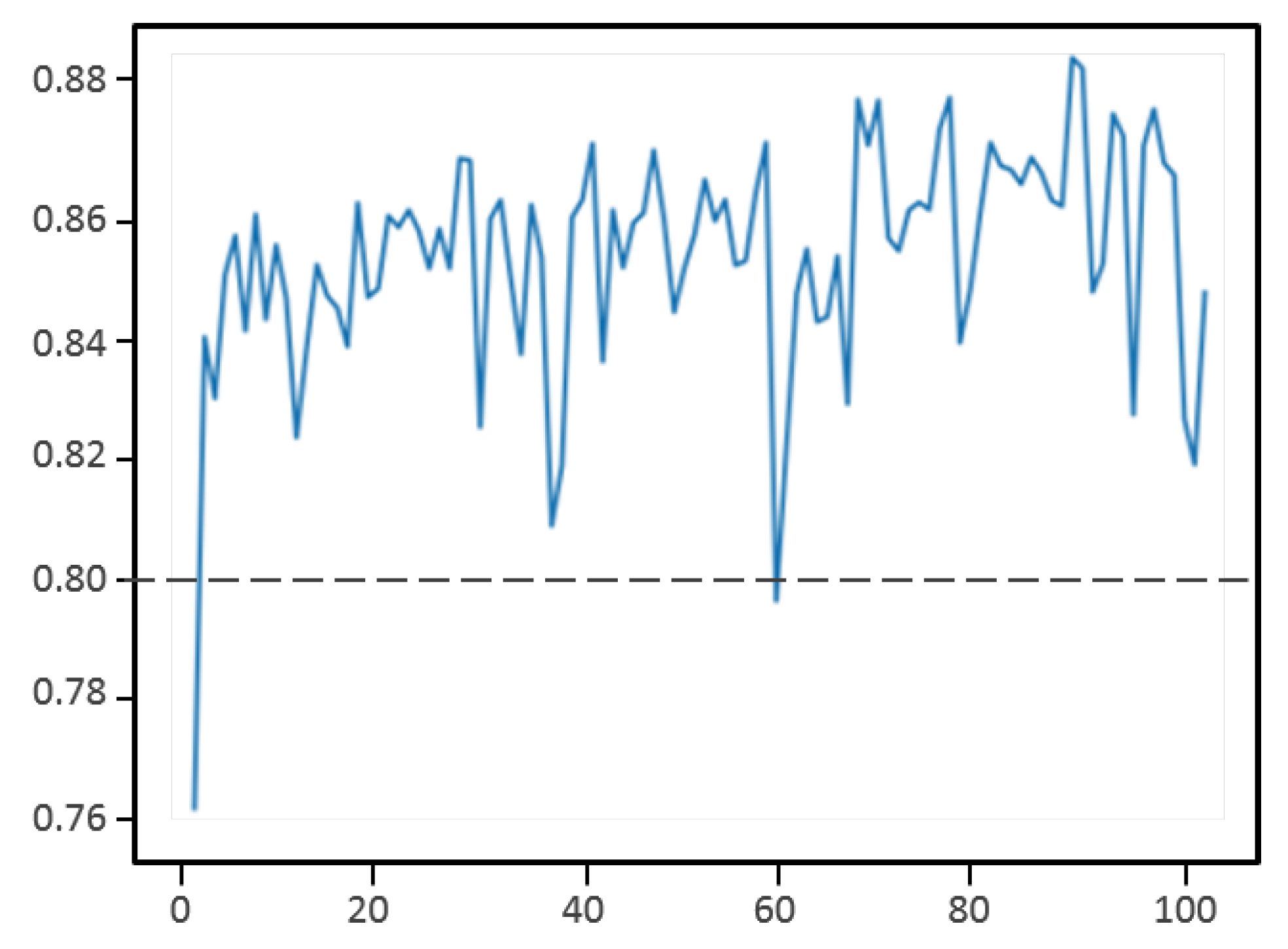

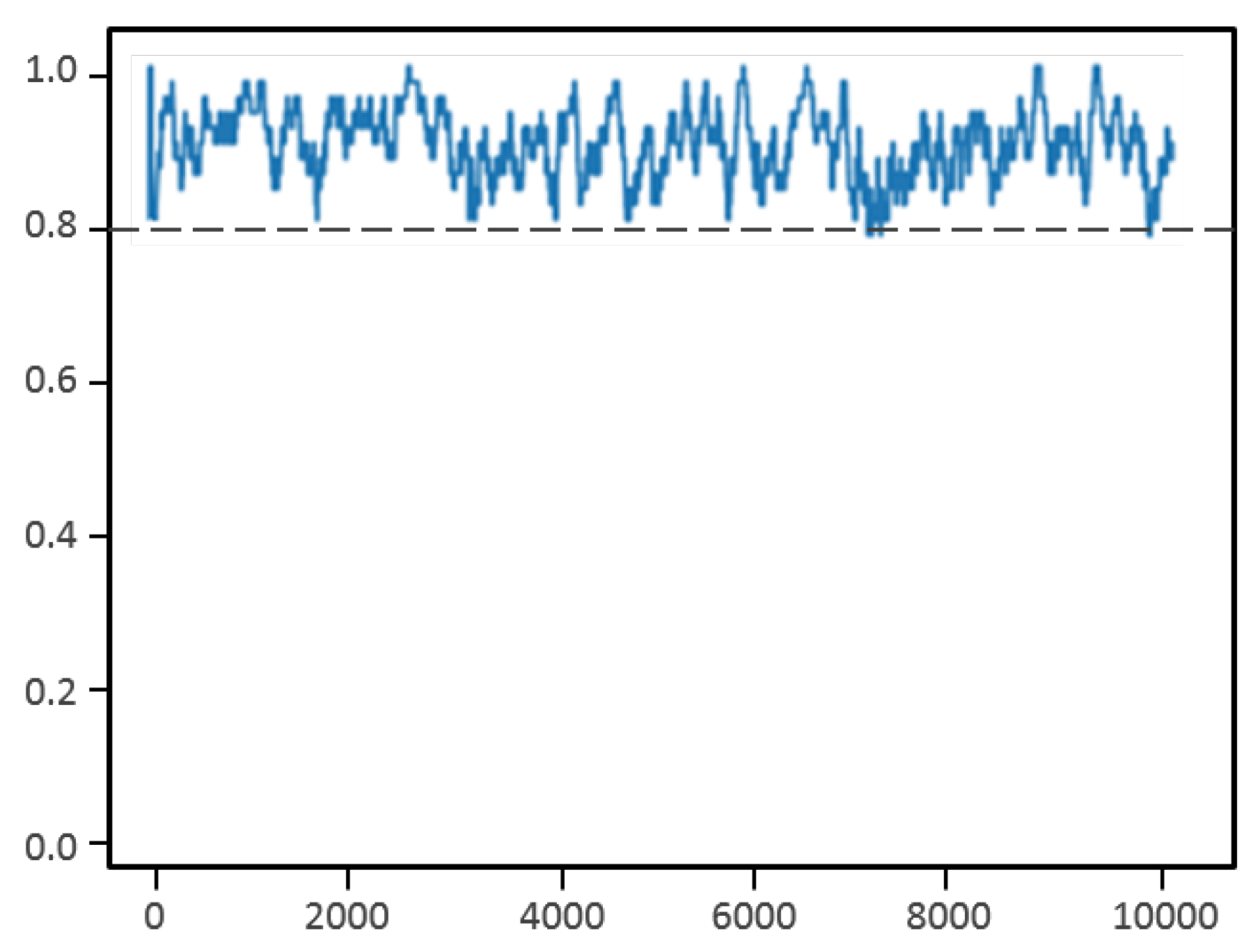

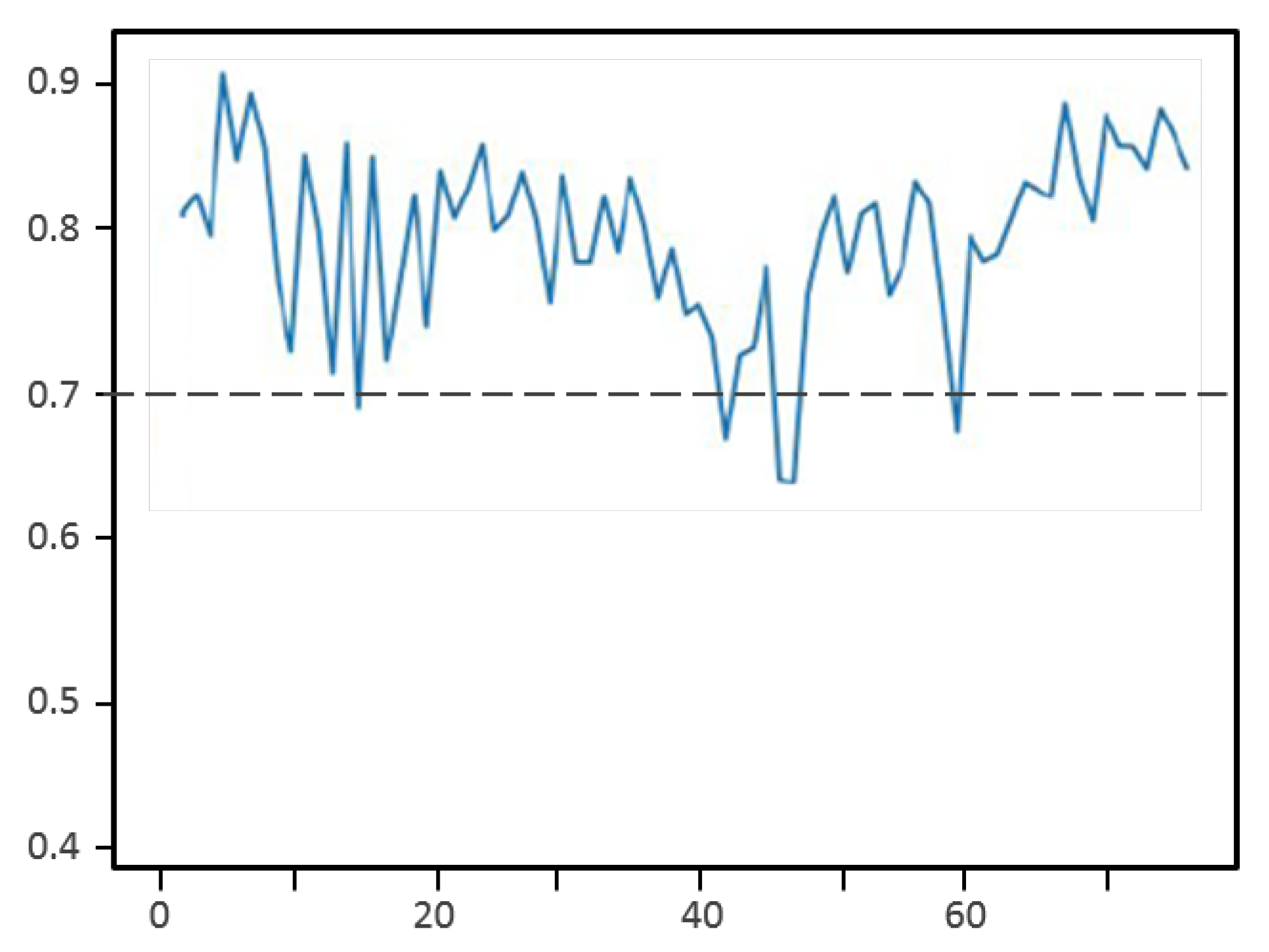

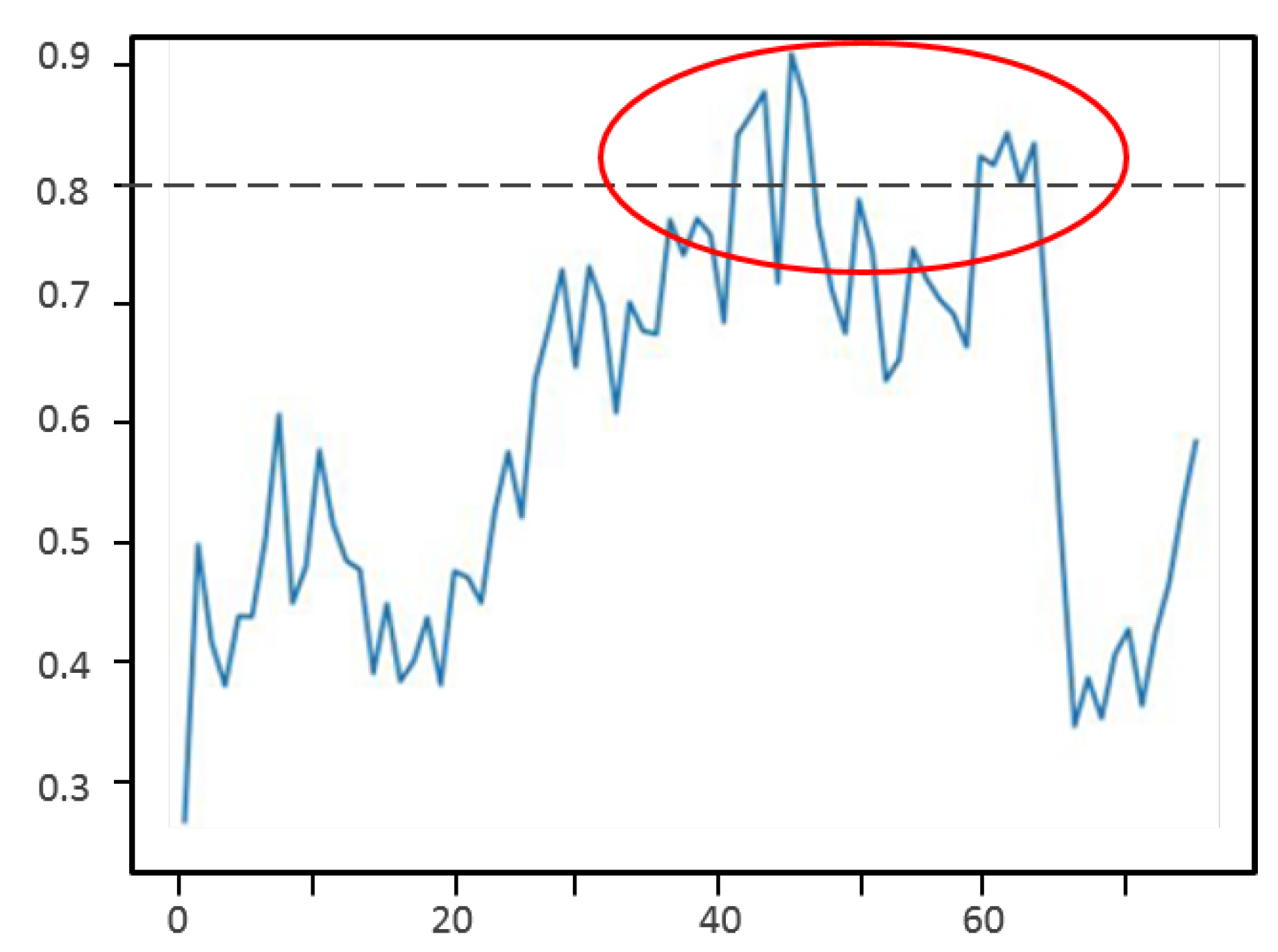

4.1. Reinforcement Learning

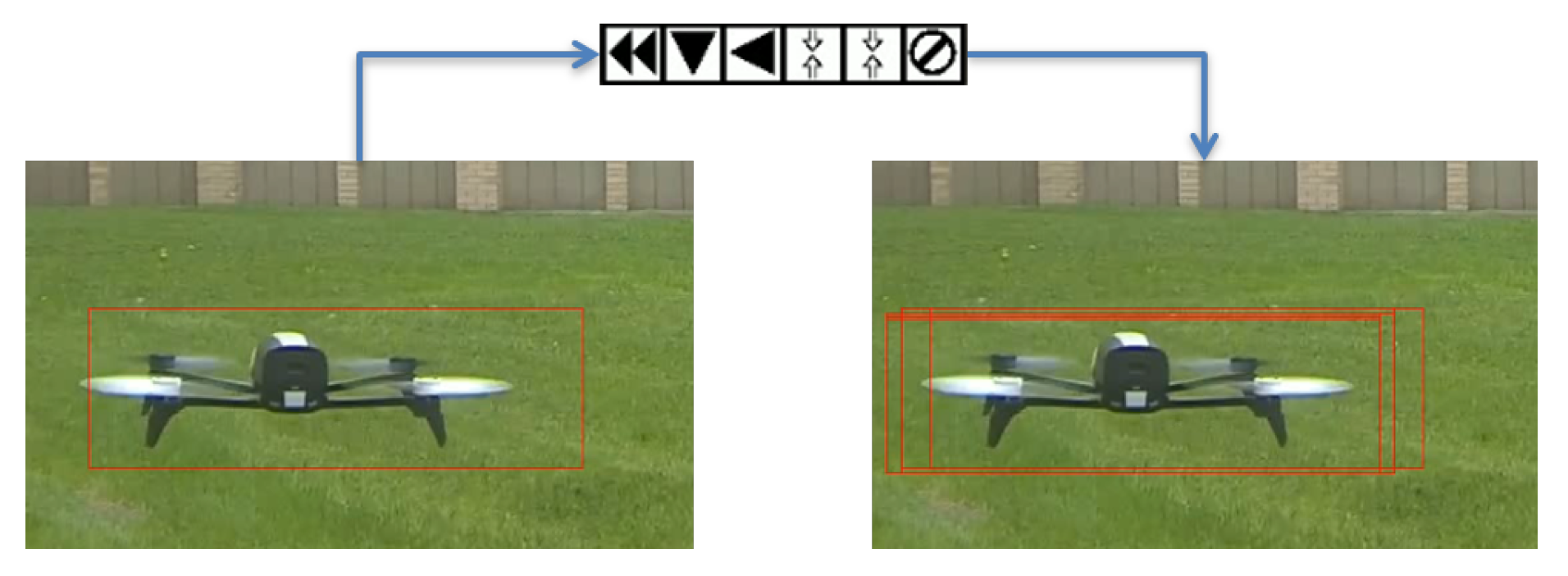

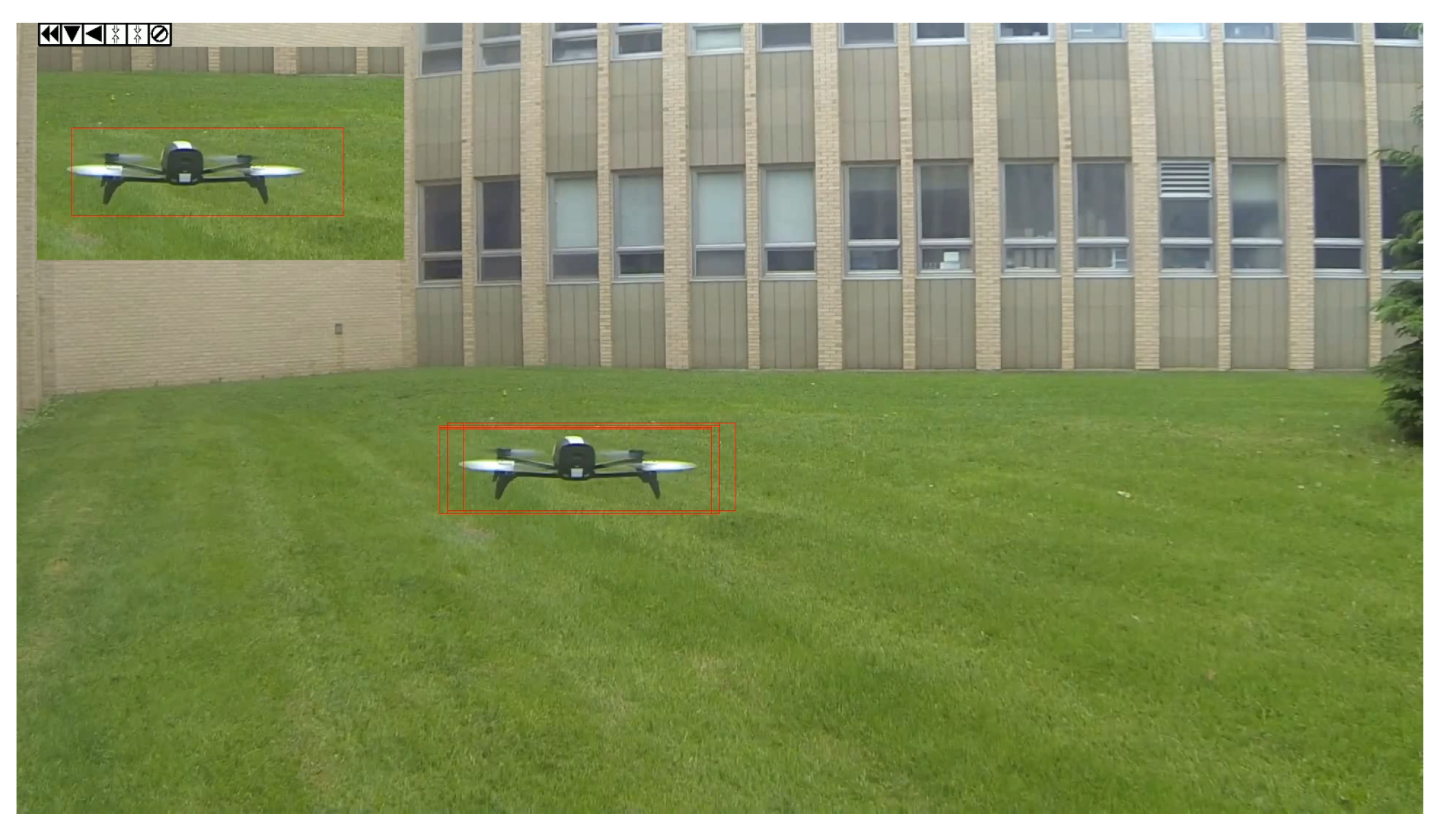

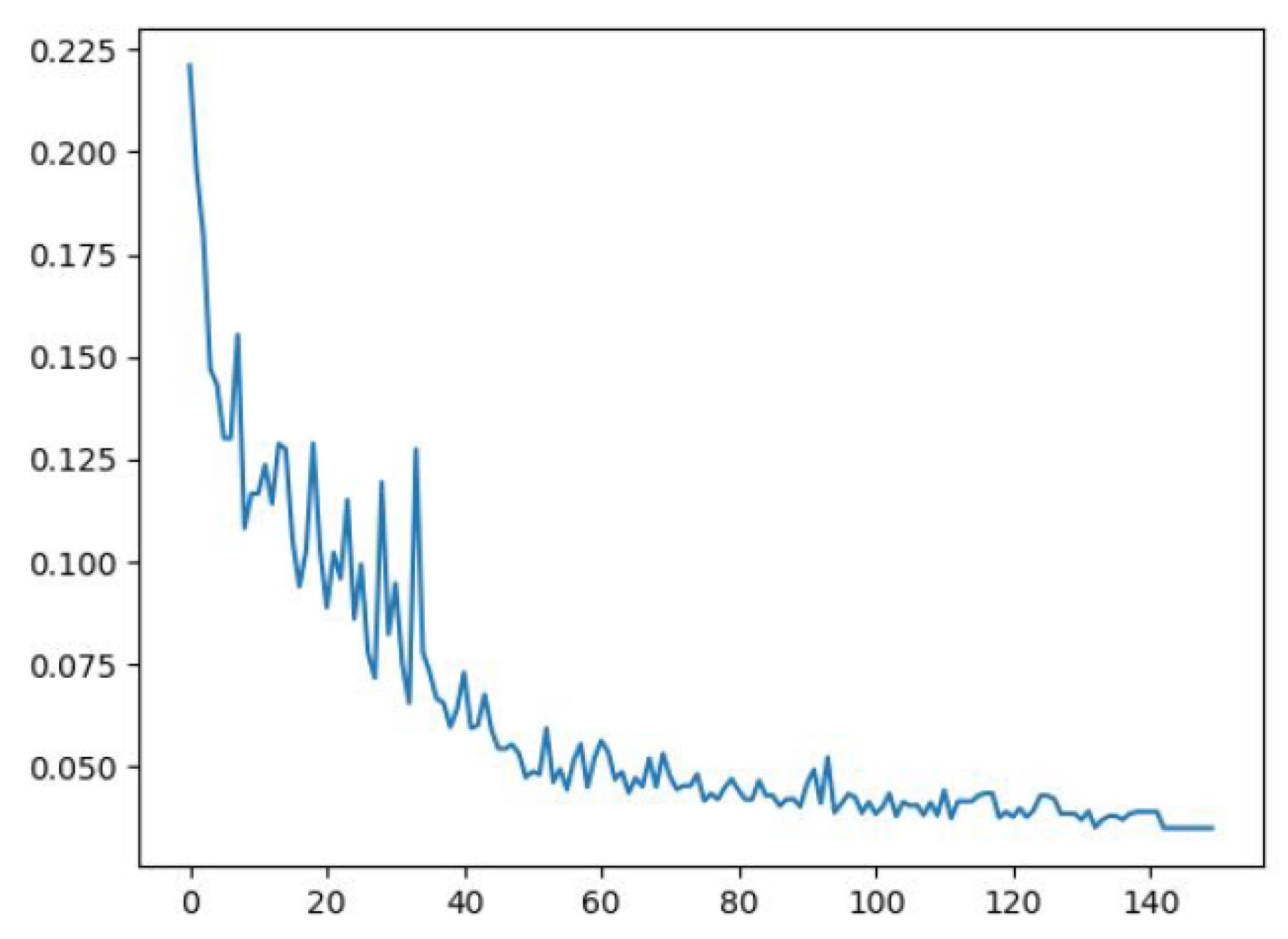

4.2. Deep Object Detection and Tracking

4.2.1. Object Detection Results

4.2.2. Object Detection with a Search Area Proposal (SAP)

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Akhloufi, M.A.; Arola, S.; Bonnet, A. Drones Chasing Drones: Reinforcement Learning and Deep Search Area Proposal. Drones 2019, 3, 58. https://doi.org/10.3390/drones3030058