Abstract

In deep learning, the traditional Vision Transformer (ViT) has difficulty capturing local details alongside long-range dependencies and, especially when training data are scarce, is prone to overfitting. In medical images, structures such as retinal blood vessels and lesion boundaries exhibit distinct fractal properties. This paper proposes the Fractional Convolution Vision Transformer (FCViT), which compensates for ViT's weakness in capturing local features by fusing convolutional information. FCViT further improves medical image classification by analyzing frequency-domain features with a fractional-order Fourier transform and by capturing global information through self-attention. Its three-branch architecture lets the model interpret the data from multiple perspectives, capturing both local details and global context, which improves classification performance and generalization. In experiments, FCViT achieved 93.52% accuracy, 93.32% precision, 92.79% recall, and a 93.04% F1-score on the standardized fundus glaucoma dataset, and 94.21% accuracy, 93.73% precision, 93.67% recall, and a 93.68% F1-score on the Harvard Dataverse-V1 dataset. FCViT achieves significant performance gains over a variety of neural network architectures on tasks with datasets from different sources, demonstrating its effectiveness and utility in deep learning.

1. Introduction

In recent years, deep learning has made breakthroughs in fields such as computer vision and natural language processing [1], particularly with convolutional neural network (CNN) [2] and recurrent neural network (RNN) [3] models, which perform well in tasks such as image classification, object detection, and speech recognition. However, traditional network architectures remain limited in modeling long-range dependencies, parallelizing computation, and capturing global information.

Since it was proposed by Vaswani et al. [4] in 2017, the Transformer has revolutionized the research paradigm of deep learning. It removes the sequential computation constraint of RNNs, relies on the self-attention mechanism [5] to capture global dependencies, and has shown great potential in language modeling, computer vision, and time series analysis. In natural language processing, the Transformer became the core architecture of pre-trained language models such as BERT [6] and GPT [7]. In computer vision, ViT further validated the feasibility of self-attention for image classification [8]. Despite its considerable success in vision tasks, ViT still faces several challenges. For instance, ViT computes global self-attention over the entire image, which incurs excessive computational overhead on high-resolution images. In addition, unlike CNNs, ViT lacks a local inductive bias, which limits its ability to generalize when training data are limited. To improve the adaptability of the Transformer to computer vision, hierarchical and window-based schemes have been proposed, the most representative being the Swin Transformer [9], whose success in visual tasks further demonstrates the potential of Transformers in computer vision. The Swin Transformer has itself been extended: Jianwei Yang et al. [10] proposed the Focal Transformer, which introduces a hierarchical attention mechanism that strengthens local and global feature modeling and improves performance on high-resolution visual tasks. Ali Hatamizadeh et al. [11] applied the hierarchical window attention of the Swin Transformer to 3D medical image segmentation, significantly improving segmentation accuracy and computational efficiency. Transformers were first introduced to object detection by the Detection Transformer (DETR) [12] of Carion et al., an end-to-end detection framework that dispenses with anchors and hand-designed post-processing steps and proved the effectiveness of the Transformer in object detection. Shiyang Li et al. [13] demonstrated the advantages of the Transformer in time series forecasting by using self-attention to capture long-range dependencies. Although the Transformer performs strongly on sequential and visual tasks, its ability to model structured data is still limited, and it cannot directly capture the topological relationships between nodes. Ying et al. [14] proposed Graphormer, which improves the Transformer's perception of graph data by introducing structural encodings and achieves significant improvements in molecular modeling, social network analysis, and other tasks.

For semantic segmentation, Strudel et al. [15] proposed Segmenter, further exploring the Transformer for dense prediction tasks. Segmenter uses a pure Transformer to classify images pixel by pixel, achieving efficient and accurate semantic segmentation. He et al. [16] proposed the masked autoencoder (MAE), which achieves efficient self-supervised learning by masking most image patches and reconstructing the missing ones. MAE shows that masked autoencoders are a scalable visual learning method and has further advanced the Transformer in computer vision. Seeking a more effective pre-training method for ViT, Bao et al. [17] proposed BEiT, which adapts BERT's masked language modeling into masked image modeling, allowing the Transformer to learn richer visual representations by recovering masked image patches. As the Transformer has succeeded in several fields, more complex non-Euclidean spaces have become a new direction of exploration. Fein-Ashley et al. [18] proposed a hybrid Hyperbolic Vision Transformer that combines hyperbolic and Euclidean geometries to efficiently capture both hierarchical structures and local relationships in image data. ViT also performs well in medical image processing. For breast cancer pathology image classification, the ViT-DeiT model [19] combines ViT with the Data-efficient Image Transformer to effectively capture global features and improve classification performance. Koushik Sivarama Krishnan et al. [20] demonstrated the potential of the Transformer in medical image classification by applying ViT's global feature modeling to COVID-19 [21] detection in chest X-ray images.

Some studies have applied the fractional Fourier transform (FrFT) to neural networks to strengthen frequency-domain modeling; for example, Wu et al. [22] combined FrFT with convolutional neural networks for robust face recognition, and Barshan et al. [23] used FrFT as a preprocessing step for neural-network-based object recognition. However, these methods typically rely on fixed transform orders and cannot adaptively learn task-specific frequency-domain projections.

ViT's global self-attention mechanism captures long-range dependencies, but it lacks the local inductive bias inherent in CNNs and therefore handles local features (such as edges and textures) less effectively. To address these limitations, this study proposes the following improvements:

- (1) A fractional-order Fourier transform (FrFT) module with a learnable order, approximated using the Chirp-Z algorithm, is introduced. The module provides more flexible frequency-domain perception: a learnable parameter controls the order of the transform, so the model automatically adjusts the degree of frequency-domain projection during training. This strengthens the model's perception of multi-scale and non-stationary signals, improves the expressive power of feature extraction, and is especially suitable for data with complex pattern distributions.

- (2) A custom convolution module is incorporated to extract local features with convolutional layers. It effectively captures texture and shape variations within local regions, which is especially important for images and other spatial data.

- (3) Three types of feature extraction (attention, convolution, and the fractional-order Fourier transform) are combined to extract features from the input from different perspectives, and the model integrates the information from the three branches. This three-branch fusion preserves the characteristics of each branch while combining their strengths, improving the model's ability to handle complex data.

2. Fractional Convolution Vision Transformer Model

2.1. Vision Transformer

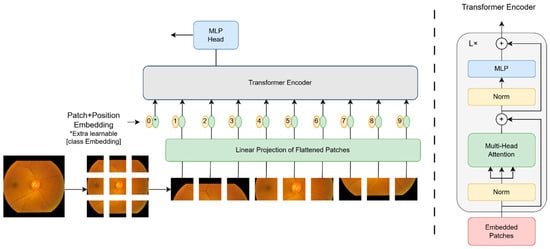

Vision Transformer (ViT), proposed by Dosovitskiy et al., is an image classification model based on the Transformer architecture that departs from the dominance of convolutional neural networks in computer vision. ViT uses the Transformer for global modeling, capturing long-range dependencies in images through self-attention. The ViT architecture is shown in Figure 1.

Figure 1.

Vision Transformer model architecture.

ViT splits the input image into fixed-size patches and treats each patch as a token. Let the input image be $x \in \mathbb{R}^{H \times W \times C}$, where H, W, and C denote the height, width, and number of channels of the image, respectively. The image is divided into patches of size P × P, and the number of patches N is given by Equation (1):

$$ N = \frac{HW}{P^{2}} \tag{1} $$

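Each patch is flattened and linearly mapped to a D-dimensional embedding space, as shown in Equation (2):

$$ z_i = x_p^{i} W_p + b, \quad i = 1, 2, \dots, N \tag{2} $$

where $z_i \in \mathbb{R}^{D}$ denotes the embedding vector of the $i$th image patch after linear projection; $x_p^{i} \in \mathbb{R}^{P^{2}C}$ is the flattened patch; $W_p \in \mathbb{R}^{(P^{2}C) \times D}$ is the linear transformation matrix; b is the bias term; and N denotes the number of patches. This yields the embedding sequence $Z_p = [z_1; z_2; \dots; z_N] \in \mathbb{R}^{N \times D}$.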

ViT prepends a trainable class token $x_{cls} \in \mathbb{R}^{D}$ (a randomly initialized, learnable classification token), similar to BERT's [CLS] (classification) token, and adds a fixed positional encoding E, as shown in Equation (3):

$$ Z_0 = [x_{cls}; z_1; z_2; \dots; z_N] + E, \quad E \in \mathbb{R}^{(N+1) \times D} \tag{3} $$

where $Z_0 \in \mathbb{R}^{(N+1) \times D}$ denotes the token sequence input to the Transformer.
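The patch splitting, projection, and token assembly of Equations (1)–(3) can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the helper name `embed_patches`, the ViT-Base-like sizes (224 × 224 × 3 input, P = 16, D = 768), and the random weights are assumptions, and the positional encoding is simply passed in as an array.

```python
import numpy as np

def embed_patches(img, P, W_p, b, x_cls, E):
    """Hypothetical helper: Eqs. (1)-(3) for a single H x W x C image."""
    H, W, C = img.shape
    assert H % P == 0 and W % P == 0, "H and W must be divisible by P"
    N = (H * W) // (P * P)                                   # Eq. (1)
    # (H/P, P, W/P, P, C) -> (H/P, W/P, P, P, C) -> (N, P*P*C), row-major patch order
    patches = img.reshape(H // P, P, W // P, P, C).transpose(0, 2, 1, 3, 4)
    patches = patches.reshape(N, P * P * C)                  # flattened patches x_p^i
    Z_p = patches @ W_p + b                                  # Eq. (2): (N, D)
    Z0 = np.concatenate([x_cls[None, :], Z_p], axis=0)       # prepend the class token
    return Z0 + E                                            # Eq. (3): (N + 1, D)

rng = np.random.default_rng(0)
H, W, C, P, D = 224, 224, 3, 16, 768
N = (H * W) // (P * P)                                       # 196 patches
img = rng.standard_normal((H, W, C))
W_p = rng.standard_normal((P * P * C, D)) * 0.02
b = np.zeros(D)
x_cls = rng.standard_normal(D) * 0.02
E = rng.standard_normal((N + 1, D)) * 0.02
Z0 = embed_patches(img, P, W_p, b, x_cls, E)
print(Z0.shape)  # (197, 768)
```

The 196 patch tokens plus one class token give the 197-token sequence that the encoder consumes.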

The ViT backbone is a standard Transformer encoder built from multiple stacked layers. Each layer contains three key modules, namely multi-head self-attention, a feed-forward network (FFN), and LayerNorm, with residual connections that improve gradient propagation.

ViT uses a multi-head mechanism in which several attention heads are computed in parallel. Multi-head self-attention computes the global relationships between input tokens, enriching the feature representation. Each head computes scaled dot-product attention as shown in Equation (4):

$$ \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{4} $$

where Q, K, and V are the query, key, and value matrices, generated from the input Z by different projection matrices; and $d_k$ is the dimension of each attention head. The softmax-normalized weights form a weighted sum of V that yields global context information, and the outputs of all heads are concatenated and linearly projected.
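To make Equation (4) and the multi-head mechanism concrete, the NumPy sketch below splits the projected Q, K, and V into heads, applies scaled dot-product attention per head, then concatenates the heads and applies an output projection. The weight names (`W_q`, `W_k`, `W_v`, `W_o`) and the toy sizes are illustrative assumptions; biases, masking, and dropout are omitted.

```python
import numpy as np

def softmax(s, axis=-1):
    s = s - s.max(axis=axis, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(s)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(Z, W_q, W_k, W_v, W_o, num_heads):
    """Z: (T, D) token sequence; returns (T, D) output and (heads, T, T) weights."""
    T, D = Z.shape
    assert D % num_heads == 0
    d_k = D // num_heads

    def split_heads(M):                        # (T, D) -> (heads, T, d_k)
        return M.reshape(T, num_heads, d_k).transpose(1, 0, 2)

    Q, K, V = split_heads(Z @ W_q), split_heads(Z @ W_k), split_heads(Z @ W_v)
    weights = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_k))   # Eq. (4) per head
    heads = weights @ V                                          # (heads, T, d_k)
    concat = heads.transpose(1, 0, 2).reshape(T, D)              # concatenate the heads
    return concat @ W_o, weights

rng = np.random.default_rng(0)
T, D, h = 5, 8, 2
Z = rng.standard_normal((T, D))
W_q, W_k, W_v, W_o = (rng.standard_normal((D, D)) * 0.3 for _ in range(4))
out, weights = multi_head_self_attention(Z, W_q, W_k, W_v, W_o, num_heads=h)
print(out.shape, weights.shape)  # (5, 8) (2, 5, 5)
```

Each row of every head's weight matrix sums to 1, so each output token is a convex combination of value vectors before the output projection.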

The feed-forward network consists of two fully connected layers, usually with a GELU (Gaussian Error Linear Unit) activation between them, as shown in Equation (5):

$$ \mathrm{FFN}(x) = \sigma\left(xW_1 + b_1\right)W_2 + b_2 \tag{5} $$

where $W_1$ and $W_2$ are the weight matrices; $b_1$ and $b_2$ are the bias terms; and $\sigma(\cdot)$ is the GELU activation function.
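Equation (5) can be sketched in a few lines of NumPy. The exact (erf-based) GELU is used here; the 4× hidden expansion and the random weights are assumptions for illustration, since the text does not give the hidden width.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)   # element-wise error function (NumPy has no built-in erf)

def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    x = np.asarray(x, dtype=float)
    return x * 0.5 * (1.0 + _erf(x / math.sqrt(2.0)))

def ffn(x, W1, b1, W2, b2):
    """Eq. (5): two fully connected layers with GELU in between."""
    return gelu(x @ W1 + b1) @ W2 + b2

rng = np.random.default_rng(0)
T, D, D_hidden = 5, 8, 32          # 4x expansion is an assumption (common in ViT)
x = rng.standard_normal((T, D))
W1, b1 = rng.standard_normal((D, D_hidden)) * 0.1, np.zeros(D_hidden)
W2, b2 = rng.standard_normal((D_hidden, D)) * 0.1, np.zeros(D)
y = ffn(x, W1, b1, W2, b2)
print(y.shape)  # (5, 8)
```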

2.2. Fractional-Order Fourier Transform Module

The Fourier transform is a mathematical tool that converts a signal from the time domain to the frequency domain; its core idea is to decompose a complex signal into a superposition of sinusoids of different frequencies. This decomposition makes the intensity and phase of each frequency component explicit, revealing the frequency-domain characteristics of the signal.

To obtain an efficient and differentiable fractional-order Fourier transform, this study adopts an FrFT implementation approximated with the Chirp-Z algorithm: the signal is chirp-modulated, passed through an FFT, and chirp-modulated again to approximate the effect of the FrFT. The approximate FrFT is given in Equation (6):

$$ \mathcal{F}^{\alpha}[x](k) \approx \mathrm{Chirp}(k)\cdot \mathcal{F}\{\mathrm{Chirp}(t)\cdot x(t)\}(k) \tag{6} $$

where α is the order parameter of the FrFT and controls the degree of transformation: the transform is equivalent to the original signal when α = 0 and to the standard Fourier transform when α = 1, and corresponds to the abelian transform of the FrFT at a further special value of α; $\mathcal{F}^{\alpha}$ denotes the FrFT of order α; $\mathrm{Chirp}(\cdot)$ denotes the exponential modulation kernel; t is the time (or sequence) index and k the fractional-domain index; and $x(t)$ is the original (time-domain) signal.

Specifically, an exponential modulation function (the chirp kernel) is constructed for each feature channel. The input signal is first multiplied by the chirp kernel, which exponentially weights the time-domain signal and realizes chirp pre-modulation, as shown in Equation (7):

$$ \tilde{x}(t) = x(t)\cdot \exp\left(i\pi t^{2}\cot\varphi\right), \quad \varphi = \frac{\alpha\pi}{2} \tag{7} $$

where φ represents the FrFT rotation angle; $\cot\varphi$ represents the “degree of curvature” (chirp rate) that controls the modulation; and $\tilde{x}(t)$ represents the pre-modulated chirp signal.

The modulated signal is passed through a standard Fast Fourier Transform (FFT) to obtain the initial spectrum, as shown in Equation (8):

$$ X(k) = \mathcal{F}\{\tilde{x}(t)\}(k) \tag{8} $$

where $\mathcal{F}\{\cdot\}$ represents the Fourier transform operation.

The spectrum is then multiplied by the chirp kernel again (inverse modulation) to obtain the approximate fractional-domain result, as given by the chirp post-modulation formula in Equation (9):

$$ X_{\alpha}(k) = X(k)\cdot \exp\left(i\pi k^{2}\cot\varphi\right) \tag{9} $$

where k represents the frequency-domain index.
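The pre-modulation, FFT, and post-modulation steps of Equations (7)–(9) can be sketched in NumPy as below. This is only an illustrative approximation under stated assumptions: the rotation angle is taken as φ = απ/2, the chirp rate is cot φ (so that α = 1 reduces to a plain FFT), and the sample index is centred and scaled by 1/√N, a normalization chosen for this sketch rather than specified in the text. The function name `frft_chirp_approx` is hypothetical.

```python
import numpy as np

def frft_chirp_approx(x, alpha):
    """Approximate FrFT along the last axis via chirp -> FFT -> chirp.

    Assumes 0 < alpha <= 1; alpha = 0 would make cot(phi) infinite.
    """
    N = x.shape[-1]
    phi = alpha * np.pi / 2                       # rotation angle
    rate = np.cos(phi) / np.sin(phi)              # cot(phi): chirp "curvature"
    u = (np.arange(N) - N // 2) / np.sqrt(N)      # centred, scaled index (assumption)
    chirp = np.exp(1j * np.pi * rate * u ** 2)    # unit-modulus chirp kernel
    x_mod = x * chirp                             # Eq. (7): pre-modulation
    X = np.fft.fft(x_mod, axis=-1, norm="ortho")  # Eq. (8): FFT
    return X * chirp                              # Eq. (9): post-modulation

rng = np.random.default_rng(0)
x = rng.standard_normal(64)
y_half = frft_chirp_approx(x, 0.5)
y_one = frft_chirp_approx(x, 1.0)
```

Because both chirps have unit modulus and the orthonormal FFT is unitary, this sketch preserves signal energy for any α; at α = 1 the chirps are numerically constant, so the result matches `np.fft.fft(x, norm="ortho")`.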

A rigorous Chirp-Z computation of the FrFT also includes frequency scaling and phase compensation. The implementation used here approximates the integral definition of the continuous FrFT in discrete form; because the FFT operates only on discrete sequences and cannot represent the continuous integral exactly, the result is a numerical approximation.

The Fast Fourier Transform (FFT) is an efficient algorithm for computing the discrete Fourier transform (DFT): it converts time-domain signals into frequency-domain representations while reducing the computational complexity from $O(N^{2})$ to $O(N \log N)$, which allows the key frequency features of a signal to be extracted efficiently. Assuming that the last dimension of the input tensor x has N elements, the FFT output $X_k$ for any slice along that dimension (with the other dimensions fixed) is computed as shown in Equation (10):

$$ X_k = \sum_{n=0}^{N-1} x_n W_N^{nk}, \quad k = 0, 1, \dots, N-1 \tag{10} $$

where $x_n$ is the nth element of the last dimension of the input tensor; $X_k$ is the kth complex component of the last dimension of the output tensor; i is the imaginary unit; and $W_N^{nk} = e^{-i 2\pi nk/N}$ is the rotation (twiddle) factor.

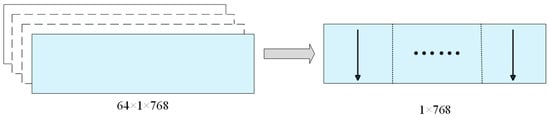

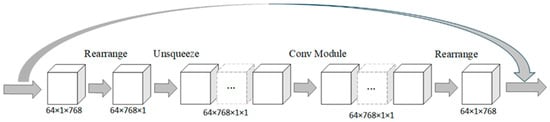

Since the input at this stage is a three-dimensional tensor, the FFT is applied to each 1 × 768 feature vector along its last dimension. The FFT operation is illustrated in Figure 2.

Figure 2.

Graphical representation of FFT operations.
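Equation (10) and the per-vector FFT on a three-dimensional tensor can be checked against each other with a short NumPy sketch. The tensor sizes (2 × 4 × 768) are illustrative assumptions; `dft_last_axis` is a hypothetical helper that evaluates Equation (10) directly in O(N²) time, whereas `np.fft.fft` computes the same result with an FFT.

```python
import numpy as np

def dft_last_axis(x):
    """Direct O(N^2) evaluation of Eq. (10) along the last axis."""
    N = x.shape[-1]
    n = np.arange(N)
    # twiddle[n, k] = W_N^{nk}; reducing nk mod N keeps the phase small and accurate
    twiddle = np.exp(-2j * np.pi * (np.outer(n, n) % N) / N)
    return x @ twiddle                      # X_k = sum_n x_n W_N^{nk}

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4, 768))        # (batch, tokens, features): illustrative sizes
X_fft = np.fft.fft(x, axis=-1)              # one FFT per 1 x 768 feature vector
X_dft = dft_last_axis(x)
print(X_fft.shape)                          # (2, 4, 768)
```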

The fractional-order Fourier module treats the FrFT order α as a learnable parameter that is optimized by gradient backpropagation during training. To keep α within bounds, a sigmoid function constrains it to the interval (0, 1), and it is then mapped to the rotation angle $\varphi = \alpha\pi/2$, which controls how far the signal is rotated in the time–frequency plane. The FrFT implementation is based on the Chirp-Z Transform algorithm, which allows the spectrum to be sampled along paths other than the unit circle. The Chirp-Z transform is given in Equation (11):

$$ X_k = \sum_{n=0}^{N-1} x_n A^{-n} W^{nk}, \quad k = 0, 1, \dots, M-1 \tag{11} $$

where A is the starting point in the complex plane; W is the rotation factor between successive points; $z_k = A W^{-k}$ defines an arbitrary sampling path; M is the number of output points; $x_n$ denotes the nth sample of the original discrete signal; and $X_k$ denotes the kth output spectral value of the Chirp-Z transform.
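A direct evaluation of Equation (11) shows how the Chirp-Z transform generalizes the DFT. The sketch below is illustrative only: `czt_direct` is a hypothetical helper with O(NM) cost (fast Chirp-Z implementations use Bluestein's algorithm), and the zoom range of 0.1 to about 0.2 cycles per sample is an arbitrary example.

```python
import numpy as np

def czt_direct(x, M, W, A):
    """Eq. (11): X_k = sum_n x_n A^(-n) W^(nk), k = 0..M-1, i.e. the z-transform at z_k = A W^(-k)."""
    x = np.asarray(x, dtype=complex)
    n = np.arange(len(x))
    k = np.arange(M)
    kernel = W ** np.outer(k, n)          # (M, N) matrix of W^(nk)
    return kernel @ (x * A ** (-n))       # A must be a float/complex (not int) for negative powers

rng = np.random.default_rng(0)
N = 32
x = rng.standard_normal(N)
# A = 1 and W = exp(-2j*pi/N) place z_k on the unit circle: the CZT reduces to the DFT
X_dft = czt_direct(x, N, np.exp(-2j * np.pi / N), 1.0)
# Zoom: 64 points on the unit circle starting at 0.1 cycles/sample, spaced 0.1/64 apart
f0, df, M = 0.1, 0.1 / 64, 64
X_zoom = czt_direct(x, M, np.exp(-2j * np.pi * df), np.exp(2j * np.pi * f0))
```

Choosing A and W differently moves the sampling points off the equally spaced DFT grid, which is the flexibility the text attributes to the Chirp-Z algorithm.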

The fractional-order Fourier module maps the input sequence from the time domain to a fractional-order frequency domain and extracts its magnitude and phase features, strengthening the model's ability to represent temporal structures or image features in the frequency domain. Although this approach differs from the FrFT defined by the classical integral kernel, it is computationally more efficient and can be learned end to end, which makes it suitable for efficient deployment in deep neural networks.

Formally, let the input feature be the tensor $X \in \mathbb{R}^{B \times T \times D}$, where B denotes the batch size, T is the sequence length, and D is the feature dimension. The input is first transformed by the FrFT to obtain the complex frequency-domain features $X_{\alpha} = \mathcal{F}^{\alpha}(X) \in \mathbb{C}^{B \times T \times D}$, the FrFT of order α, where the transform order α is learned end to end.

The magnitude and phase spectra are then extracted from the complex spectrum. The magnitude spectrum reflects the intensity of the different frequency components and is computed as shown in Equation (12):

$$ X_{\mathrm{mag}} = |X_{\alpha}| = \sqrt{\mathrm{Re}(X_{\alpha})^{2} + \mathrm{Im}(X_{\alpha})^{2}} \tag{12} $$

The phase spectrum encodes the relative position of the frequency components along the time axis and is computed as shown in Equation (13):

$$ X_{\mathrm{pha}} = \operatorname{atan2}\big(\mathrm{Im}(X_{\alpha}), \mathrm{Re}(X_{\alpha})\big) \tag{13} $$

where $\mathrm{Re}(X_{\alpha})$ and $\mathrm{Im}(X_{\alpha})$ represent the real and imaginary parts of the complex number $X_{\alpha}$, respectively. Together, the magnitude and phase spectra fully characterize the original signal in the frequency domain.

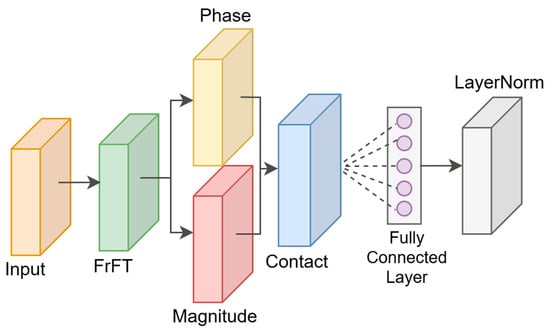

The magnitude and phase features are concatenated along the feature dimension into a new tensor $X_{\mathrm{cat}} \in \mathbb{R}^{B \times T \times 2D}$, doubling the feature dimension. This concatenation lets the network use the complete frequency information instead of relying solely on the time-domain representation. A fully connected layer then linearly maps the concatenated features from 2D back to D dimensions, keeping the data shape compatible with subsequent network structures such as the Transformer. This projection not only compresses the information but also lets the network learn how to fuse magnitude and phase information into more discriminative features. Layer normalization is then applied to the projected features to stabilize training and speed up convergence; by normalizing the feature distribution, it helps mitigate exploding or vanishing gradients and lets the network learn more efficiently. The FrFT module is shown in Figure 3.

Figure 3.

FrFT module.
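The full forward pass of the FrFT module (sigmoid-constrained order, chirp-FFT-chirp transform, magnitude and phase extraction, concatenation, 2D-to-D projection, and layer normalization) can be sketched in NumPy as follows. This is a forward-only illustration under assumptions: `theta` is a hypothetical unconstrained scalar that a PyTorch model would register as a learnable parameter, the chirp rate cot φ and the centred 1/√D index scaling are choices of this sketch, and the LayerNorm has no learnable scale or shift.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def layer_norm(x, eps=1e-5):
    """LayerNorm over the last axis (no learnable scale/shift in this sketch)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def frft_module_forward(x, theta, W_f, b_f):
    """x: (B, T, D) real features -> (B, T, D); theta: hypothetical raw order parameter."""
    alpha = sigmoid(theta)                         # order constrained to (0, 1)
    phi = alpha * np.pi / 2                        # rotation angle
    D = x.shape[-1]
    u = (np.arange(D) - D // 2) / np.sqrt(D)       # centred, scaled index (assumption)
    chirp = np.exp(1j * np.pi * (np.cos(phi) / np.sin(phi)) * u ** 2)
    X_alpha = np.fft.fft(x * chirp, axis=-1, norm="ortho") * chirp   # chirp-FFT-chirp
    mag = np.abs(X_alpha)                          # Eq. (12)
    pha = np.angle(X_alpha)                        # Eq. (13), atan2(Im, Re)
    feats = np.concatenate([mag, pha], axis=-1)    # (B, T, 2D)
    return layer_norm(feats @ W_f + b_f), alpha    # project 2D -> D, then normalize

rng = np.random.default_rng(0)
B, T, D = 2, 4, 16
x = rng.standard_normal((B, T, D))
W_f = rng.standard_normal((2 * D, D)) * 0.1
b_f = np.zeros(D)
y, alpha = frft_module_forward(x, theta=0.0, W_f=W_f, b_f=b_f)
print(y.shape, alpha)  # (2, 4, 16) 0.5
```

Parameterizing the order through a sigmoid keeps α strictly inside (0, 1) for any value of `theta`, so gradient updates can never push the transform outside the intended range.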

As a generalization of the traditional Fourier transform, the fractional Fourier transform can move a signal smoothly from the time domain to the frequency domain. Unlike the FFT, whose frequency-domain projection is fixed, the FrFT projects the signal at an arbitrary angle in the time–frequency plane by introducing a rotation angle determined by the order α. This rotation not only makes the signal representation more flexible but also enables the FrFT to capture local time–frequency features of non-stationary signals more effectively. Because the FrFT preserves the amplitude and phase information of the signal, using its output as input to a deep network provides a richer feature representation. Introducing a learnable FrFT module and optimizing α lets the network adaptively select the best projection direction, improving the effectiveness and discriminative power of feature extraction.

2.3. Customized Convolution Module

The traditional Transformer architecture relies on global self-attention, which models long-range dependencies effectively but handles the local details of an image relatively poorly. Adding convolution lets the ViT model extract local image features better; especially for high-resolution images or texture-rich scenes, the local receptive field of convolution can significantly improve performance. Incorporating convolution into ViT therefore strengthens its ability to capture local features.

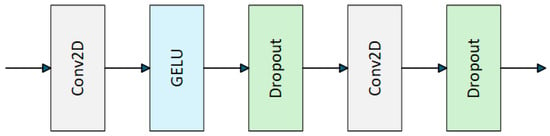

The Custom Convolutional (C-Conv) module combines a convolutional neural network with the Transformer architecture to exploit the advantages of both. Specifically, the module first rearranges and expands the dimensions of the input tensor into a form suitable for convolution and then applies a customized Conv module layer. The customized Conv module layer is illustrated in Figure 4.

Figure 4.

Customization of the Conv module layer.

The customized Conv module layer transforms features with two 3 × 3 convolutional layers, with a GELU activation between them to strengthen nonlinear representation and a dropout layer for regularization to prevent overfitting. The output tensor is then rearranged back to a lower-dimensional token form so that it integrates seamlessly with the subsequent Transformer layers. The operations of the customized convolution module are given in Equations (14)–(19).

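Given the rearranged input tensor X with c channels, the first convolutional layer computes

$$ X_1 = \mathrm{Conv}_{3 \times 3}^{\,c \to h}(X) \tag{14} $$

where the convolution kernel size is 3 × 3 and h is the number of hidden channels.

GELU activation:

$$ X_2 = \mathrm{GELU}(X_1) \tag{15} $$

GELU is defined as $\mathrm{GELU}(x) = x\,\Phi(x)$, where $\Phi(x)$ is the cumulative distribution function of the standard Gaussian distribution.

Dropout layer:

$$ X_3 = \mathrm{Dropout}_{p=0.1}(X_2) \tag{16} $$

where p = 0.1 means that activation values are randomly zeroed with a probability of 0.1.

Second convolutional layer:

$$ X_4 = \mathrm{Conv}_{3 \times 3}^{\,h \to c}(X_3) \tag{17} $$

which maps the channel dimension from h back to c.

Dropout layer:

$$ X_5 = \mathrm{Dropout}_{p=0.1}(X_4) \tag{18} $$

Residual connection:

$$ Y = X + X_5 \tag{19} $$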

The C-Conv module is shown in Figure 5.

Figure 5.

C-Conv module.
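Equations (14)–(19) can be sketched in plain NumPy for a single sample. The sketch makes several assumptions not stated in the text: the token layout (196 patch tokens of width c reshaped into a c × 14 × 14 grid), the channel sizes c = 8 and h = 16, zero padding of 1 so the spatial size is preserved, and inverted dropout. The helper names (`conv3x3`, `dropout`, `c_conv`) are hypothetical.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def gelu(x):
    return x * 0.5 * (1.0 + _erf(x / math.sqrt(2.0)))   # exact GELU, Eq. (15)

def conv3x3(x, w, b):
    """Zero-padded, stride-1 3x3 convolution (cross-correlation, as in deep learning).
    x: (C_in, H, W); w: (C_out, C_in, 3, 3); b: (C_out,)."""
    _, H, W = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], H, W))
    for di in range(3):
        for dj in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, di, dj], xp[:, di:di + H, dj:dj + W])
    return out + b[:, None, None]

def dropout(x, p, rng, training):
    """Inverted dropout: zero each value with probability p, rescale the rest."""
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)

def c_conv(x, w1, b1, w2, b2, p=0.1, rng=None, training=False):
    x1 = conv3x3(x, w1, b1)                 # Eq. (14): c -> h channels
    x2 = gelu(x1)                           # Eq. (15)
    x3 = dropout(x2, p, rng, training)      # Eq. (16)
    x4 = conv3x3(x3, w2, b2)                # Eq. (17): h -> c channels
    x5 = dropout(x4, p, rng, training)      # Eq. (18)
    return x + x5                           # Eq. (19): residual connection

# Hypothetical token layout: 196 patch tokens of width c reshaped to a (c, 14, 14) grid
rng = np.random.default_rng(0)
c, h, g = 8, 16, 14
tokens = rng.standard_normal((g * g, c))
x = tokens.T.reshape(c, g, g)               # (N, c) -> (c, 14, 14)
w1, b1 = rng.standard_normal((h, c, 3, 3)) * 0.1, np.zeros(h)
w2, b2 = rng.standard_normal((c, h, 3, 3)) * 0.1, np.zeros(c)
y = c_conv(x, w1, b1, w2, b2, rng=rng, training=True)
y_tokens = y.reshape(c, g * g).T            # back to (N, c) for the Transformer
```

Because of the residual path in Equation (19), the block starts close to the identity when the convolution weights are small, which lets it be inserted into a Transformer without disrupting the token stream.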

By combining global attention with local convolution, the C-Conv module lets the ViT model process local information efficiently while retaining its global modeling capability, which benefits tasks such as image classification and object detection.

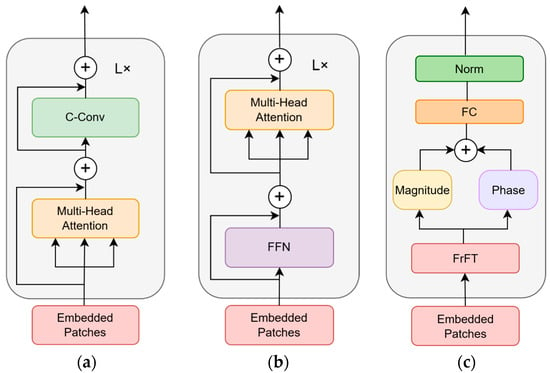

2.4. Three-Branch Module

Building on the standard ViT block, the FCViT model adopts a three-branch parallel architecture for multi-dimensional feature processing. The first branch uses an “attention–convolution” design; the second branch builds a cascaded “feed-forward network–attention” structure; and the third branch introduces the FrFT module for frequency-domain analysis, complementing the spatial-domain processing of the other two branches.

During forward propagation, the input tensor is fed simultaneously into the three branches: the attention–convolution branch generates spatial features, the feed-forward–attention branch generates structural features, and the FrFT branch produces spectral features. These three kinds of information (spatial relationships, structural transformations, and frequency-domain characteristics) are then fused by element-wise addition of the three branch outputs. This multi-branch design gives a single Transformer block three capabilities at once, namely global attention modeling, local convolutional perception, and spectral analysis, which enriches the feature representation. The three-branch module is shown in Figure 6.

Figure 6.

Three-branch module branching diagram: (a) attention–convolution branching diagram; (b) feed-forward network–attention branching diagram; (c) FrFT frequency domain branching diagram.

In the attention–convolution branch, which generates “spatial–structural hybrid features”, multi-head self-attention first captures global dependencies, a convolutional layer then extracts local features, and residual connections in both stages preserve the original information. The convolution captures local spatial patterns through its local receptive field, while the preceding attention layer establishes global spatial relationships; this global context compensates for the locality of pure convolution, yielding a synergistic “local–global” spatial understanding. The attention–convolution branch is shown in Figure 6a.

The feed-forward network–attention branch generates “structured semantic features”: the input is first transformed by a two-layer MLP with GELU activation and then passed to a second attention layer, forming a more complex feature reorganization path. The dimension expansion and projection inside the MLP force the model to fuse information across channels in a latent feature space. On top of the FFN-transformed features, the second attention layer reconstructs the dynamic weight relationships among tokens and performs context-aware filtering of the higher-order semantic features produced by the FFN. The feed-forward network–attention branch is shown in Figure 6b.

The FrFT frequency-domain branch generates “frequency-domain perceptual features”. Its core value is to overcome the limitation of the conventional Vision Transformer, which focuses only on spatial-domain features, and to reveal the energy distribution and time–frequency characteristics of the signal through frequency-domain analysis, which can be more robust to rotation and scaling. The FrFT frequency-domain branch is shown in Figure 6c.
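The data flow of the three-branch block, including the element-wise sum fusion, can be sketched in NumPy as follows. This is a simplified illustration, not the FCViT implementation: attention is single-head, LayerNorms before attention and the FFN are omitted, the C-Conv module is replaced by a hypothetical 3-tap convolution along the token axis (`token_conv`), the residual connections in the FFN–attention branch are assumed, and all sizes and weights are toy values.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def gelu(x):
    return x * 0.5 * (1.0 + _erf(x / math.sqrt(2.0)))

def softmax(s):
    s = s - s.max(axis=-1, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=-1, keepdims=True)

def attention(x, Wq, Wk, Wv):
    """Single-head self-attention (multi-head split omitted for brevity)."""
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    return softmax(q @ k.T / math.sqrt(x.shape[-1])) @ v

def token_conv(x, w):
    """Hypothetical stand-in for C-Conv: a 3-tap convolution along the token axis."""
    xp = np.pad(x, ((1, 1), (0, 0)))
    return sum(w[i] * xp[i:i + x.shape[0]] for i in range(3))

def frft_features(x, alpha, Wf):
    """Chirp-FFT-chirp FrFT, magnitude/phase concat, 2D -> D projection, LayerNorm."""
    D = x.shape[-1]
    phi = alpha * np.pi / 2
    u = (np.arange(D) - D // 2) / np.sqrt(D)
    chirp = np.exp(1j * np.pi * (np.cos(phi) / np.sin(phi)) * u ** 2)
    Xa = np.fft.fft(x * chirp, axis=-1, norm="ortho") * chirp
    f = np.concatenate([np.abs(Xa), np.angle(Xa)], axis=-1) @ Wf
    return (f - f.mean(-1, keepdims=True)) / np.sqrt(f.var(-1, keepdims=True) + 1e-5)

def three_branch_block(x, p):
    a = x + attention(x, *p["attn1"])                 # branch 1: attention ...
    b1 = a + token_conv(a, p["conv"])                 # ... then convolution (residuals)
    f = x + gelu(x @ p["W1"]) @ p["W2"]               # branch 2: FFN ...
    b2 = f + attention(f, *p["attn2"])                # ... then attention (residuals assumed)
    b3 = frft_features(x, p["alpha"], p["Wf"])        # branch 3: FrFT frequency features
    return b1 + b2 + b3, (b1, b2, b3)                 # element-wise sum fusion

rng = np.random.default_rng(0)
T, D = 6, 8

def w(*shape):
    return rng.standard_normal(shape) * 0.1

params = {
    "attn1": (w(D, D), w(D, D), w(D, D)),
    "conv": w(3),
    "W1": w(D, 4 * D), "W2": w(4 * D, D),
    "attn2": (w(D, D), w(D, D), w(D, D)),
    "alpha": 0.5, "Wf": w(2 * D, D),
}
x = rng.standard_normal((T, D))
y, branches = three_branch_block(x, params)
print(y.shape)  # (6, 8)
```

Summation requires all three branches to return tensors of the same shape, which is why the FrFT branch projects its 2D-wide magnitude/phase features back to D.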

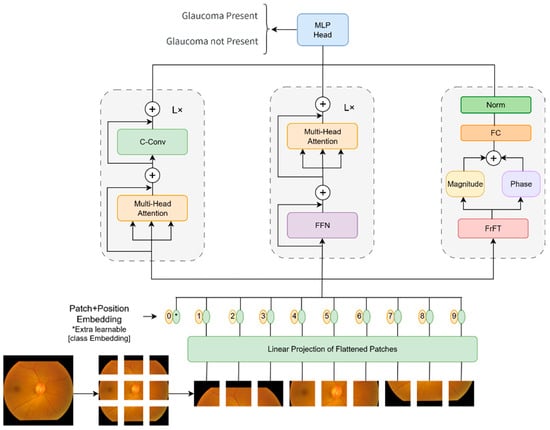

2.5. FCViT Model

The FCViT model introduces a three-branch feature extraction mechanism into the standard ViT architecture, achieving multi-dimensional feature fusion through the combined work of convolution, frequency-domain analysis, and attention. Its core components use residual connections: the custom convolution module rearranges dimensions to convert between channel and spatial layouts, performs feature interaction while remaining computationally efficient, and finally preserves the original feature information through a residual connection.

The frequency-domain feature extraction branch gains a more flexible frequency representation by applying a hybrid time–frequency transform to the input sequence. Its trainable fractional order controls the degree of the transform, allowing the model to adaptively position its representation between the time and frequency domains and to capture signal structure at different scales more accurately. After the fractional-order transform, the extracted amplitude and phase features are compressed and stabilized by a linear projection and layer normalization, further improving the model's ability to recognize complex textures and non-stationary patterns as well as the overall quality and discriminability of its features.

The three branches form a multipath feature learning system: the first branch places standard multi-head attention in series with the convolution module; the second branch cascades a two-layer MLP with a second attention layer; and the new third branch handles frequency-domain features. The outputs of the three branches are fused by simple summation, which retains the global modeling capability of ViT while strengthening local feature extraction and frequency-domain analysis. The FCViT network model is shown in Figure 7.

Figure 7.

FCViT network module diagram.

3. Experimental Section

3.1. Common Experimental Models

The MobileNetV1 [24] model proposed by Google Research redefined the design of efficient neural networks: its core innovation is to decompose standard convolution into more efficient computational units (depthwise separable convolutions), laying the foundation for later deep learning models for mobile applications. Google Research then proposed the lightweight MobileNetV2 [25] architecture, which reduces computation to less than one-tenth that of a traditional CNN and has become a benchmark architecture for mobile deployment. MobileNetV3 [26], proposed by the Google Brain team, is a landmark in lightweight network design whose innovations have strongly influenced deep learning for mobile applications.

The EfficientNet family of models proposed by the Google Brain team achieves a strong balance between computational efficiency and model performance. The lightweight baseline EfficientNet-B0 [27] is built from Mobile Inverted Bottleneck (MBConv) blocks, and larger family members are obtained by compound scaling of depth, width, and resolution. EfficientNetV2 [28] is a comprehensive upgrade of EfficientNet that introduces progressive learning with gradually increasing image resolution, addressing the original model's bottlenecks in training speed and parameter efficiency.

ResNet is a deep convolutional neural network architecture proposed by Kaiming He and colleagues. Its core innovation is residual learning, which effectively alleviates the vanishing-gradient and degradation problems in training deep networks. ResNet not only set a new performance benchmark in computer vision but has also become a basic design paradigm for many kinds of deep neural networks, with a profound and lasting impact on deep learning as a whole.

3.2. Datasets

The entire experimental code was written using the PyTorch framework. The programming language used was Python 3.11, with torch version 2.0.1, torchvision version 0.15.0, and wandb version 0.16.0.

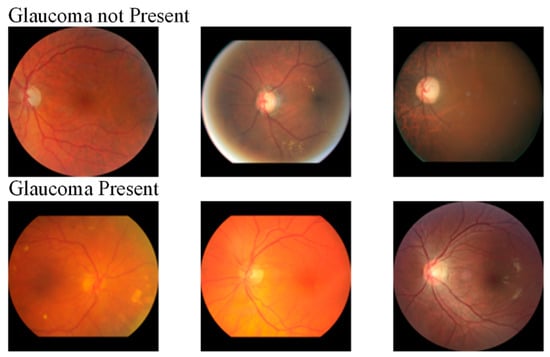

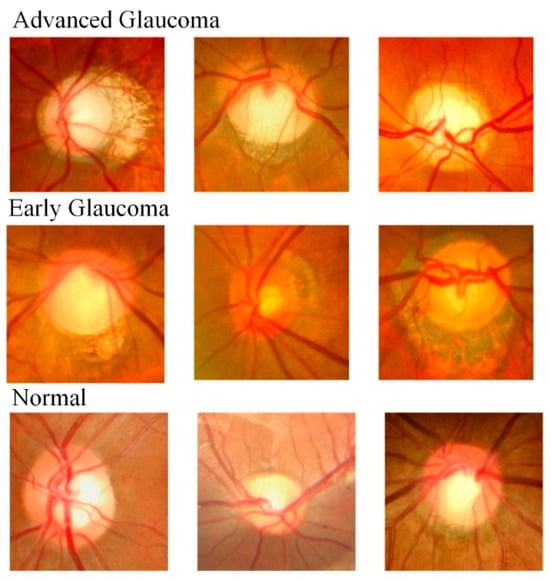

The retinal vasculature in fundus images has a typical fractal structure with pronounced self-similarity and complex branching patterns, and this structure changes noticeably as glaucoma progresses: glaucoma is usually accompanied by optic nerve atrophy and changes in vascular morphology, which alter the fractal dimension. The standardized fundus glaucoma dataset [29] and the Harvard Dataverse-V1 [30] (HDV1) dataset were used for experimental evaluation in this paper. The standardized fundus glaucoma dataset contains two categories, glaucoma present (5293 samples) and glaucoma not present (3328 samples), and its fundus images are in color. The HDV1 dataset contains three categories: early glaucoma (289 samples), advanced glaucoma (467 samples), and normal (786 samples). Because the HDV1 dataset is relatively small and training requires a large amount of data, data augmentation was applied to expand it. The number of images in the standardized fundus glaucoma dataset and the HDV1 dataset is shown in Table 1.

Table 1.

Number of images in the standardized fundus glaucoma dataset and HDV1 dataset.

A randomly selected sample of images from the standardized fundus glaucoma dataset is shown in Figure 8.

Figure 8.

Randomly selected image samples from the standardized fundus glaucoma dataset.

A randomly selected sample of images from the HDV1 dataset is shown in Figure 9.

Figure 9.

Randomly selected sample of images from the HDV1 dataset.

3.3. Performance Metrics

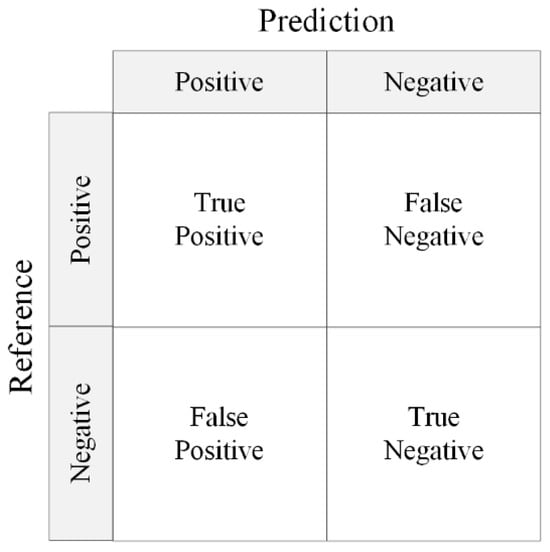

A confusion matrix is a standard tool for evaluating classification models in machine learning: it tabulates how the model's predictions correspond to the true labels. The rows of the matrix represent the true categories and the columns represent the predicted categories, so counting how samples are distributed across the cells reveals the model's classification tendencies and error patterns. The structure of the confusion matrix is shown in Figure 10.
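A confusion matrix with rows as true classes and columns as predicted classes, as described above, can be built in a few lines. The sketch below is plain Python; the function name and the example labels are hypothetical.

```python
def confusion_matrix(y_true: list[int], y_pred: list[int], num_classes: int) -> list[list[int]]:
    """Count samples for every (true class, predicted class) pair.

    Row i corresponds to true class i and column j to predicted class j,
    so correct predictions accumulate on the diagonal.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


# Hypothetical labels for a three-class task (0 = normal, 1 = early, 2 = advanced).
y_true = [0, 0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 2, 2, 2, 2]

cm = confusion_matrix(y_true, y_pred, num_classes=3)
for row in cm:
    print(row)
# [2, 1, 0]
# [0, 1, 1]
# [0, 0, 3]
```

scikit-learn's `sklearn.metrics.confusion_matrix` follows the same row/column convention.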

Figure 10.

Confusion matrix structure.

The confusion matrix provides four basic counts on which model evaluation is built. True Positives (TP) are positive samples the model correctly identifies as positive and are the most direct reflection of the model's core effectiveness; False Positives (FP) are negative samples misclassified as positive, exposing a tendency toward over-sensitivity; False Negatives (FN) are positive samples the model misses, revealing under-detection; and True Negatives (TN) are negative samples the model correctly rejects.
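For a multi-class problem such as the three-class HDV1 task, these four counts are usually computed per class by treating that class as positive and all other classes as negative (one-vs-rest). The sketch below derives them from a confusion matrix laid out with rows as true classes and columns as predicted classes; the function name and matrix values are hypothetical.

```python
def per_class_counts(cm: list[list[int]], k: int) -> dict[str, int]:
    """One-vs-rest TP, FP, FN, TN for class k from a confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # truly k, predicted as another class
    fp = sum(row[k] for row in cm) - tp  # predicted k, truly another class
    tn = total - tp - fn - fp            # neither truly k nor predicted k
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


# Hypothetical 3-class matrix (rows: normal, early, advanced).
cm = [[2, 1, 0],
      [0, 1, 1],
      [0, 0, 3]]
print(per_class_counts(cm, k=1))  # {'TP': 1, 'FP': 1, 'FN': 1, 'TN': 5}
```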

Accuracy measures the proportion of all samples that the model predicts correctly; precision measures the fraction of positive predictions that are correct, i.e., how reliable the model's positive judgments are; recall measures the fraction of actual positive samples that the model captures; and the F1-score is the harmonic mean of precision and recall. The FCViT model was evaluated from these complementary perspectives using accuracy, precision, recall, and F1-score, which are calculated as shown in Equations (20)–(23):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{20}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{21}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{22}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{23}$$
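The sketch below computes the four metrics from TP, FP, FN, and TN and, for multi-class data, averages precision, recall, and F1-score over the classes. Macro averaging (an unweighted mean over classes) is an assumption here; weighted averaging is also common. Function names are illustrative.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, precision, recall and F1 from the four confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard: no positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0     # guard: no positive samples
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)           # harmonic mean of P and R
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def macro_metrics(cm: list[list[int]]) -> dict[str, float]:
    """Overall accuracy plus macro-averaged precision, recall and F1.

    cm uses rows = true classes and columns = predicted classes.
    Multi-class accuracy is the diagonal sum over all samples.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp
        fp = sum(row[k] for row in cm) - tp
        per_class.append(binary_metrics(tp, fp, fn, total - tp - fn - fp))
    averaged = {m: sum(c[m] for c in per_class) / n
                for m in ("precision", "recall", "f1")}
    return {"accuracy": sum(cm[k][k] for k in range(n)) / total, **averaged}


# Hypothetical 3-class confusion matrix.
cm = [[2, 1, 0],
      [0, 1, 1],
      [0, 0, 3]]
print(macro_metrics(cm))
```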

3.4. Experimental Results and Analysis

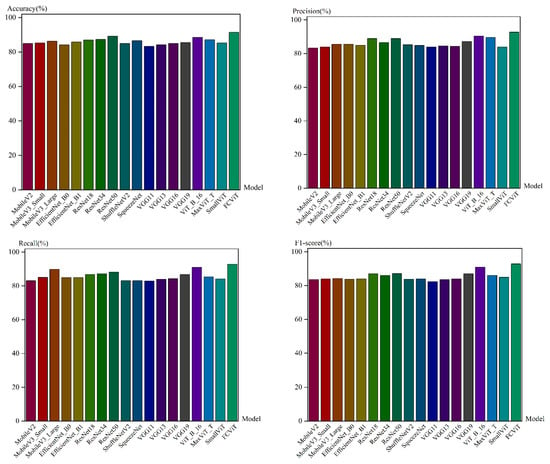

On the standardized fundus glaucoma dataset, the FCViT model achieved an accuracy of 93.52%, a precision of 93.32%, a recall of 92.79%, an F1-score of 93.04%, and a loss of 0.249. Compared with the ViT_B_16 model, accuracy improved by 6.21%, precision by 6.73%, recall by 6.27%, and F1-score by 6.75%. Compared with the MaxViT_T model, accuracy, precision, recall, and F1-score improved by 7.40%, 4.35%, 7.60%, and 8.46%, respectively, and compared with the SmallViT model, they improved by 7.78%, 8.52%, 9.17%, and 9.83%, respectively. FCViT also outperforms the other commonly used traditional convolutional models in accuracy, precision, and F1-score. These gains matter clinically: early glaucoma symptoms are insidious and optic nerve damage is irreversible, so even a 1% improvement in accuracy may mean detecting high-risk patients earlier and avoiding vision loss, while also reducing the physician review workload and improving screening efficiency. The results on the validation set of the standardized fundus glaucoma dataset are shown in Table 2.
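Improvement figures such as these can be computed either as an absolute difference in percentage points or as a relative gain, and the two conventions imply slightly different baseline scores. As a worked example using the reported FCViT accuracy of 93.52% and the 6.21% gain over ViT_B_16, the sketch below computes the implied ViT_B_16 accuracy under each convention; the helper names are illustrative, and the actual baseline values are those listed in Table 2.

```python
def baseline_if_absolute(score: float, gain: float) -> float:
    """Baseline score if `gain` is a difference in percentage points."""
    return score - gain


def baseline_if_relative(score: float, gain: float) -> float:
    """Baseline score if `gain` is a relative improvement in percent."""
    return score / (1 + gain / 100)


fcvit_accuracy = 93.52  # FCViT accuracy on the standardized fundus dataset (%)
gain_over_vit = 6.21    # reported accuracy gain over ViT_B_16 (%)

print(f"{baseline_if_absolute(fcvit_accuracy, gain_over_vit):.2f}")  # 87.31
print(f"{baseline_if_relative(fcvit_accuracy, gain_over_vit):.2f}")  # 88.05
```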

Table 2.

Results of the model validation set for the standardized fundus glaucoma dataset.

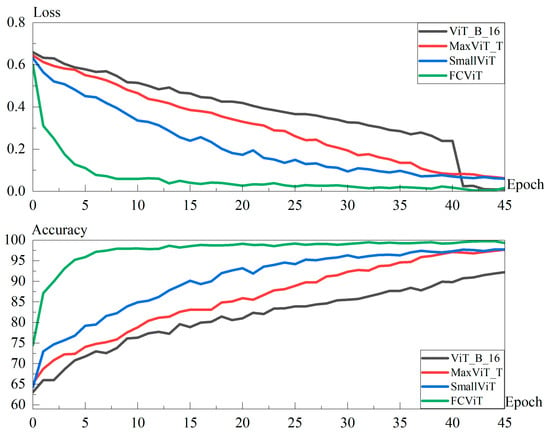

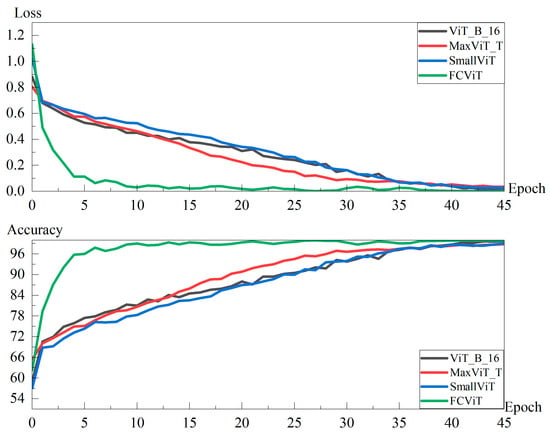

Training set results on the standardized fundus glaucoma dataset are shown in Figure 11.

Figure 11.

Results of the training set for the standardized fundus glaucoma dataset.

Figure 11 shows training on the standardized fundus glaucoma dataset over 45 epochs, a range long enough to show the FCViT model saturating and remaining stable. FCViT's performance saturates at around epoch 20, indicating fast and efficient convergence. In contrast, the comparison models (MaxViT_T, SmallViT, and ViT_B_16) are still improving and have not yet reached a stable state. These results demonstrate FCViT's advantages in training efficiency and convergence speed and suggest that its hybrid architecture learns effective feature representations faster.
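The saturation points above are read from the training curves. One simple way to make "saturation" precise, shown here as an illustrative assumption rather than the criterion used in this paper, is to report the first epoch from which the metric stays within a small tolerance of its best value for the rest of training. The function name, tolerance, and example curve are hypothetical.

```python
def saturation_epoch(curve: list[float], tol: float = 0.005) -> int:
    """Return the 1-based epoch from which every later value lies within
    `tol` of the best value in the curve (a simple plateau criterion)."""
    best = max(curve)
    epoch = len(curve)
    # Walk backwards while values stay near the best; stop at the first dip.
    for i in range(len(curve) - 1, -1, -1):
        if best - curve[i] > tol:
            break
        epoch = i + 1
    return epoch


# Hypothetical training-accuracy curve that plateaus after a few epochs.
curve = [0.70, 0.82, 0.89, 0.925, 0.931, 0.933, 0.934, 0.934, 0.935]
print(saturation_epoch(curve))  # 5
```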

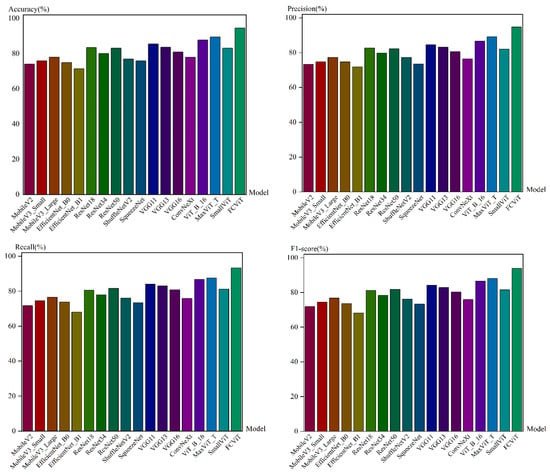

Test set results on the standardized fundus glaucoma dataset are shown in Figure 12.

Figure 12.

Results of the standardized fundus glaucoma dataset model test set.

As Figure 12 shows, the FCViT model performs strongly on all key evaluation metrics, with clear improvements in accuracy, recall, and F1-score over mainstream models such as MaxViT_T and SmallViT. On the test set, the 95% confidence interval for FCViT's accuracy is [92.86%, 95.13%] and that for its F1-score is [92.43%, 94.81%].
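The method used to compute these confidence intervals is not described in this section. One common approach, assumed here, is the percentile bootstrap: resample the test set with replacement, recompute the metric on each resample, and take the 2.5th and 97.5th percentiles. The sketch applies it to accuracy on hypothetical predictions; passing a different metric function (e.g. an F1 implementation) yields an interval for that metric instead.

```python
import random
from typing import Callable, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions equal to the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    metric: Callable[[Sequence[int], Sequence[int]], float],
    n_resamples: int = 2000,
    level: float = 0.95,
    seed: int = 0,
) -> tuple[float, float]:
    """Percentile bootstrap confidence interval for `metric`."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # sample indices with replacement
        scores.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    scores.sort()
    alpha = (1 - level) / 2
    lower = scores[int(alpha * n_resamples)]
    upper = scores[int((1 - alpha) * n_resamples) - 1]
    return lower, upper


# Hypothetical binary test set: 300 samples, 280 predicted correctly.
y_true = [1] * 150 + [0] * 150
y_pred = y_true[:280] + [1 - t for t in y_true[280:]]
print(f"accuracy = {accuracy(y_true, y_pred):.4f}")
print("95% CI = [{:.4f}, {:.4f}]".format(*bootstrap_ci(y_true, y_pred, accuracy)))
```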

Validation set results on the HDV1 dataset are shown in Table 3.

Table 3.

Results of the model validation set for the HDV1 dataset.

On HDV1, the FCViT model again outperformed the other commonly used models in accuracy, precision, and F1-score, achieving an accuracy of 94.86%, a precision of 94.64%, a recall of 94.30%, an F1-score of 94.46%, and a loss of 0.237. Compared with the ViT_B_16 model, accuracy, precision, recall, and F1-score improved by 3.17%, 3.47%, 3.41%, and 3.44%, respectively; compared with the MaxViT_T model, they improved by 3.55%, 4.04%, 3.87%, and 3.98%, respectively; and the gains over the SmallViT model are larger still, at 9.03%, 9.49%, 10.08%, and 9.95% in accuracy, precision, recall, and F1-score, respectively.

A comparison of the per-epoch training time and parameter count of the selected models on HDV1 is shown in Table 4.

Table 4.

Comparison of epoch time and number of parameters for selected models in HDV1.

Table 4 shows that although the FCViT model has more parameters than the ViT_B_16 model, its training time per epoch is the same as that of ViT_B_16.

Training set results on the HDV1 dataset are shown in Figure 13.

Figure 13.

Results of the HDV1 dataset training set.

As Figure 13 shows, the FCViT model saturates at around epoch 10, whereas the MaxViT_T, SmallViT, and ViT_B_16 models saturate only at around epoch 45. Whereas traditional models often need dozens or even hundreds of epochs to stabilize, FCViT captures the underlying patterns of the data within a few epochs, converging quickly while maintaining reliable predictions. After the saturation point, its training-set performance remains stable within the desired range, indicating that learning is not disrupted by noise; together with the validation and test results, this suggests strong resistance to overfitting.

Test set results on the HDV1 dataset are shown in Figure 14.

Figure 14.

Results of the HDV1 dataset test set.

As the experimental data in Figure 14 show, the FCViT model clearly outperforms MaxViT_T, SmallViT, and the other comparison models on core metrics such as accuracy, recall, and F1-score, giving it an across-the-board performance advantage. These results indicate that FCViT generalizes well across different datasets and performs robustly. On the test set, the 95% confidence interval for FCViT's accuracy is [91.30%, 94.17%] and that for its F1-score is [90.68%, 93.76%].

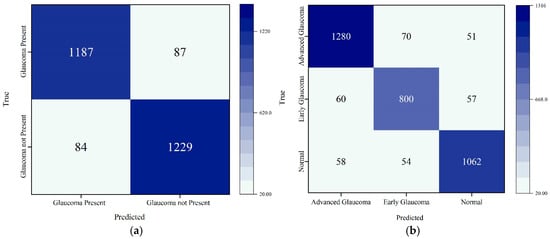

The FCViT model confusion matrix is shown in Figure 15.

Figure 15.

FCViT model confusion matrix: (a) standardized fundus glaucoma dataset; (b) HDV1 dataset.

In Figure 15a, the model's overall accuracy is 93.69%, so it correctly determines whether glaucoma is present in the large majority of cases; it identifies glaucoma samples accurately and, in practical applications, could help find most patients with glaucoma. In Figure 15b, the model likewise classifies the vast majority of samples correctly. Advanced glaucoma is predicted most accurately, and early glaucoma is also recognized with relatively high accuracy, indicating that the model can distinguish the different stages of glaucoma and could provide strong support for early screening and intervention.

4. Conclusions

This paper proposes the Fourier Convolution Vision Transformer (FCViT), a multi-branch parallel architecture that combines the frequency-domain analysis capability of the fractional-order Fourier transform, the local feature extraction strength of convolution, and the global modeling capability of self-attention to achieve multi-level feature fusion. FCViT performs strongly on the glaucoma classification task, particularly across datasets from different sources: on the standardized fundus glaucoma dataset it reaches 93.52% accuracy, 93.32% precision, 92.79% recall, and a 93.04% F1-score, and on the Harvard Dataverse-V1 dataset it reaches 94.21% accuracy, 93.73% precision, 93.67% recall, and a 93.68% F1-score.

By capturing both local details and global contextual information in medical images, the FCViT model can accurately identify key features of glaucoma while also modeling larger-scale structural variations in complex images, which significantly improves classification performance and yields stronger generalization under different data distributions. The FCViT model helps avoid overfitting and captures the essential features of glaucoma, a complex disease, rather than relying on noise or biases specific to a particular dataset. In medical image classification, variability in dataset quality and diversity is frequently identified as a significant cause of model overfitting; FCViT's consistently robust performance across diverse data sources therefore indicates strong adaptability and stability. This makes FCViT a promising candidate for real-time medical image analysis and diagnosis, offering a new approach and technical solution for the early detection of glaucoma.

Author Contributions

Conceptualization, methodology, and writing—original draft preparation, W.Z.; writing—review and editing, supervision, and formal analysis, H.S.; software, project administration, and resources, Y.S.; data curation, J.Y.; supervision, L.Z. and Z.M. All authors have read and agreed to the published version of the manuscript.

Funding

(1). Liaoning Provincial Department of Education Basic Research Project for Higher Education Institutions (General Project), Shenyang University of Technology, Research on Optimization Design of Wind Turbine Cone Angle Based on Fluid Physics Method (LJKZ0159). (2). Basic Research Project of Liaoning Provincial Department of Education “Training and Application of Multimodal Deep Neural Network Models for Vertical Fields” Project Number: JYTMS20231160. (3). Research on the Construction of a New Artificial Intelligence Technology and High-Quality Education Service Supply System in the 14th Five-Year Plan for Education Science in Liaoning Province, 2023–2025, Project Number: JG22DB488. (4). “Chun hui Plan” of the Ministry of Education, Research on Optimization Model and Algorithm for Microgrid Energy Scheduling Based on Biological Behavior, Project No. 202200209. (5). Shenyang Science and Technology Plan “Special Mission for Leech Breeding and Traditional Chinese Medicine Planting in Dengshibao Town, Faku County”, Project No. 22-319-2-26.1.

Data Availability Statement

Optimizer and basic program code: https://github.com/zhou0618/Fourier-Convolution-Vision-Transformer (accessed on 11 July 2025); Harvard Dataverse-V1 dataset: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/1YRRAC (accessed on 28 March 2025); glaucoma dataset: https://www.kaggle.com/datasets/sabari50312/fundus-pytorch (accessed on 28 March 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The list of abbreviations and symbols is shown below:

| Abbreviation | Definition |
| --- | --- |
| ViT | Vision Transformer |
| CLS token | Classification Token |
| MLP | Multilayer Perceptron |
| GELU | Gaussian Error Linear Unit |
| FCViT | Fourier Convolution Vision Transformer |
| FFT | Fast Fourier Transform |
| FrFT | Fractional-Order Fourier Transform |
| HDV1 | Harvard Dataverse-V1 |
| C-Conv | Custom Convolutional |
| FFN | Feed-Forward Network |

References

- van Le, C. Unveiling Global Discourse Structures: Theoretical Analysis and NLP Applications in Argument Mining. arXiv 2025, arXiv:2502.08371.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.H.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comp. Vis. Media 2022, 8, 331–368.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Available online: https://gwern.net/doc/www/s3-us-west-2.amazonaws.com/d73fdc5ffa8627bce44dcda2fc012da638ffb158.pdf (accessed on 28 March 2025).

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Yang, J.; Li, C.; Zhang, P.; Dai, X.; Xiao, B.; Yuan, L.; Gao, J. Focal self-attention for local-global interactions in vision transformers. arXiv 2021, arXiv:2107.00641.

- Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin UNETR: Swin transformers for semantic segmentation of brain tumors in MRI images. In International MICCAI Brainlesion Workshop; Springer: Cham, Switzerland, 2021; pp. 272–284.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Process. Syst. 2019, 32.

- Ying, C.; Cai, T.; Luo, S.; Zheng, S.; Ke, G.; He, D.; Shen, Y.; Liu, T.Y. Do transformers really perform badly for graph representation? Adv. Neural Inf. Process. Syst. 2021, 34, 28877–28888.

- Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 7262–7272.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

- Bao, H.; Dong, L.; Piao, S.; Wei, F. BEiT: BERT Pre-Training of Image Transformers. arXiv 2022, arXiv:2106.08254.

- Fein-Ashley, J.; Feng, E.; Pham, M. HVT: A Comprehensive Vision Framework for Learning in Non-Euclidean Space. arXiv 2024, arXiv:2409.16897.

- Alotaibi, A.; Alafif, T.; Alkhilaiwi, F.; Alatawi, Y.; Althobaiti, H.; Alrefaei, A.; Hawsawi, Y.; Nguyen, T. ViT-DeiT: An ensemble model for breast cancer histopathological images classification. In Proceedings of the 1st International Conference on Advanced Innovations in Smart Cities (ICAISC), Jeddah, Saudi Arabia, 23–25 January 2023; pp. 1–6.

- Krishnan, K.S.; Krishnan, K.S. Vision Transformer Based COVID-19 Detection Using Chest X-Rays. In Proceedings of the 2021 6th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 7–9 October 2021. Available online: https://ieeexplore.ieee.org/document/9609375.

- Wang, L.L.; Lo, K.; Chandrasekhar, Y.; Reas, R.; Yang, J.; Burdick, D.; Eide, D.; Funk, K.; Katsis, Y.; Kinney, R.; et al. CORD-19: The COVID-19 Open Research Dataset. arXiv 2024, arXiv:2004.10706.

- Wu, X.; Tao, R.; Hong, D.; Wang, Y. The FrFT convolutional face: Toward robust face recognition using the fractional Fourier transform and convolutional neural networks. Sci. China Inf. Sci. 2020, 63, 119103_1–119103_3.

- Barshan, B.; Ayrulu, B. Fractional Fourier transform pre-processing for neural networks and its application to object recognition. Neural Netw. 2002, 15, 131–140.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Tan, M.; Le, Q. EfficientNetV2: Smaller models and faster training. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 10096–10106.

- Kiefer, R.; Abid, M.; Steen, J.; Ardali, M.R.; Amjadian, E. A Catalog of Public Glaucoma Datasets for Machine Learning Applications: A detailed description and analysis of public glaucoma datasets available to machine learning engineers tackling glaucoma-related problems using retinal fundus images and OCT images. In Proceedings of the 7th International Conference on Information System and Data Mining (ICISDM), Atlanta, GA, USA, 10–12 May 2023; pp. 24–31.

- Acharya, U.R.; Ng, E.Y.K.; Eugene, L.W.J.; Noronha, K.P.; Min, L.C.; Nayak, K.P.; Bhandary, S.V. Decision support system for the glaucoma using Gabor transformation. Biomed. Signal Process. Control 2015, 15, 18–26.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).