1. Introduction

Stable Lévy motions owe their importance in both theory and practice partly to their role in modeling price fluctuations. The apparent departure from normality, along with the demand for a self-similar model for financial data (i.e., one in which the shape of the distribution of yearly asset price changes resembles that of the constituent daily or monthly price changes), led Benoît Mandelbrot to propose that cotton prices follow an $\alpha$-stable Lévy motion with $\alpha$ equal to 1.7. The high variability of stable Lévy motions means that they are much more likely than Gaussian processes to take values far away from the median, and this is one of the reasons why they play an important role in modeling. Stable Lévy motions have been used to model phenomena as diverse as gravitational fields of stars, temperature distributions in nuclear reactors and stresses in crystalline lattices, as well as stock market prices, gold prices and other financial data.

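Recall that a stochastic process $\{L(t), t \geq 0\}$ is called a (standard) $\alpha$-stable Lévy motion if the following three conditions hold:

- (C1) $L(0) = 0$ almost surely;
- (C2) $L$ has independent increments;
- (C3) $L(t) - L(s) \sim S_\alpha\big((t-s)^{1/\alpha}, \beta, 0\big)$ for any $0 \leq s < t < \infty$ and for some $0 < \alpha \leq 2$, $-1 \leq \beta \leq 1$.

Here, $S_\alpha(\sigma, \beta, 0)$ stands for a stable random variable with index of stability $\alpha$, scale parameter $\sigma$, skewness parameter $\beta$ and shift parameter equal to $0$. When $\beta = 0$, we denote $L$ as $L_\alpha$ for clarity. Such processes have stationary increments, and they are $1/\alpha$-self-similar (unless $\alpha = 1$ and $\beta \neq 0$); that is, for all $c > 0$, the processes $\{L(ct), t \geq 0\}$ and $\{c^{1/\alpha} L(t), t \geq 0\}$ have the same finite-dimensional distributions. An $\alpha$-stable Lévy motion is symmetric when $\beta = 0$. Recall that $\alpha$ governs the intensity of jumps: when $\alpha$ is small, the intensity of jumps is large; conversely, when $\alpha$ is large, the intensity of jumps is small; see Figure 1.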
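Conditions (C1)–(C3) translate directly into a simulation recipe: start at $0$ and add independent $S_\alpha(\Delta t^{1/\alpha}, 0, 0)$ increments. The following is a minimal sketch under our own choices, not part of the original text: symmetric stable samples are drawn with the Chambers–Mallows–Stuck method, and the helper names `sym_stable`, `stable_levy_motion` and `empirical_cf` are hypothetical. It checks $1/\alpha$-self-similarity numerically by comparing the empirical characteristic function of $L(c)/c^{1/\alpha}$ with $e^{-|\theta|^\alpha}$, the characteristic function of $L(1)$.

```python
import numpy as np


def sym_stable(alpha, size, rng):
    """Symmetric alpha-stable samples with unit scale, 0 < alpha <= 2.

    Chambers-Mallows-Stuck method: each sample has characteristic
    function exp(-|theta|**alpha), i.e. the law S_alpha(1, 0, 0).
    """
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)  # uniform angle
    w = rng.exponential(1.0, size)                 # unit-mean exponential
    return (np.sin(alpha * v) / np.cos(v) ** (1 / alpha)
            * (np.cos((1 - alpha) * v) / w) ** ((1 - alpha) / alpha))


def stable_levy_motion(alpha, n_steps, T, rng, n_paths=1):
    """Paths of a standard symmetric alpha-stable Levy motion on k*T/n_steps.

    By (C2)-(C3), increments over a step of length dt are independent and
    distributed as S_alpha(dt**(1/alpha), 0, 0).
    """
    dt = T / n_steps
    steps = dt ** (1 / alpha) * sym_stable(alpha, (n_paths, n_steps), rng)
    zeros = np.zeros((n_paths, 1))                 # (C1): L(0) = 0
    return np.hstack([zeros, np.cumsum(steps, axis=1)])


def empirical_cf(samples, theta):
    """Real part of the empirical characteristic function at theta."""
    return np.mean(np.cos(theta * samples))


rng = np.random.default_rng(0)
alpha, c, theta = 1.5, 4.0, 0.7
paths = stable_levy_motion(alpha, 50, c, rng, n_paths=20000)
# Self-similarity: L(c) / c**(1/alpha) should have the law of L(1).
print(empirical_cf(paths[:, -1] / c ** (1 / alpha), theta))
print(np.exp(-abs(theta) ** alpha))  # exact characteristic function of L(1)
```

The two printed numbers should agree up to Monte Carlo error. Rerunning with a smaller $\alpha$ (say $0.8$) makes large jumps visibly more frequent along the paths, in line with the remark on the intensity of jumps.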

However, the stationarity of their increments restricts the use of stable Lévy motions in some situations, and generalizations are needed, for instance, to model real-world phenomena such as annual temperature (rainfall, wind speed), epileptic episodes in EEG, stock market prices over long periods and daily internet traffic. A significant feature of these cases is that the “local intensity of jumps” varies with time $t$; that is, the stability index $\alpha$ varies with time $t$.

One way to deal with such a variation is to set up a class of processes whose stability index $\alpha$ is a function of $t$. More precisely, one aims at defining processes with non-stationary increments that are, at each time $t$, “tangent” (in a sense explained below) to a stable process with stability index $\alpha(t)$. Such processes are called multistable Lévy motions (MsLM); they are extensions of stable Lévy motions with non-stationary increments.

Formally, one says that a stochastic process $\{X(t), t \in \mathbb{R}\}$ is multistable if, for almost all $t \in \mathbb{R}$, $X$ is localizable at $t$ with tangent process $X_t'$ an $\alpha(t)$-stable process, $\alpha(t) \in (0, 2]$. Recall that $\{X(t), t \in \mathbb{R}\}$ is said to be $h$-localizable at $t$ (cf. Falconer [1,2]), with $h > 0$, if there exists a non-trivial process $X_t'$, called the tangent process of $X$ at $t$, such that

$$\lim_{r \searrow 0} \frac{X(t + ru) - X(t)}{r^{h}} = X_t'(u), \qquad (1)$$

where convergence is in finite-dimensional distributions. By (1), a multistable process is also a multifractional process (cf. [3,4] for such processes). Two such extensions of stable Lévy motions exist [5]:

- 1. The field-based MsLM admit the following series representation:

where $\Pi$ is a Poisson point process with the Lebesgue measure as its mean measure. Their joint characteristic function is as follows:

These processes have correlated increments, and they are localizable as soon as the function $\alpha$ is Hölder-continuous.

- 2. The independent-increments MsLM admit the following series representation:

As their name indicates, they have independent increments, and their joint characteristic function is as follows:

These processes are localizable as soon as the function $\alpha$ satisfies the following condition uniformly for all $x$ in any finite interval as $r \to 0$; see [5]:

In particular, independent-increments MsLM are, at each time $t$, “tangent” to a stable Lévy process with stability index $\alpha(t)$.
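The tangent property of independent-increments MsLM can be checked numerically through characteristic functions. The sketch below assumes the unit-scale normalization under which a constant $\alpha$ recovers standard $\alpha$-stable Lévy motion, so that an increment over $[t, t+r]$ has characteristic function $\exp\big(-\int_t^{t+r} |\theta|^{\alpha(s)}\,ds\big)$; the stability index `alpha_fn` and the helper `increment_cf` are hypothetical. After rescaling by $r^{1/\alpha(t)}$, the characteristic function should approach $e^{-|\theta|^{\alpha(t)}}$, that of $L_{\alpha(t)}(1)$, as $r \searrow 0$.

```python
import numpy as np


def alpha_fn(s):
    """Hypothetical smooth stability index with values in [1.2, 1.8]."""
    return 1.5 + 0.3 * np.sin(2 * np.pi * s)


def increment_cf(theta, t, r, alpha, n_grid=2000):
    """Characteristic function at theta of (L_I(t + r) - L_I(t)) / r**(1/alpha(t)).

    Assumes E exp(i*theta*(L_I(t+r) - L_I(t))) = exp(-int_t^{t+r} |theta|**alpha(s) ds);
    the integral is approximated by the midpoint rule on n_grid cells.
    """
    s = t + r * (np.arange(n_grid) + 0.5) / n_grid      # midpoints in [t, t + r]
    scaled_theta = abs(theta) / r ** (1.0 / alpha(t))   # theta / r^{1/alpha(t)}
    integral = r * np.mean(scaled_theta ** alpha(s))
    return float(np.exp(-integral))


t, theta = 0.3, 0.8
target = np.exp(-abs(theta) ** alpha_fn(t))  # characteristic function of L_{alpha(t)}(1)
for r in (1e-1, 1e-3, 1e-5):
    print(r, abs(increment_cf(theta, t, r, alpha_fn) - target))
```

The printed errors shrink as $r$ decreases. The mechanism is visible in the integral: it equals the average over $[t, t+r]$ of $|\theta|^{\alpha(s)} r^{1-\alpha(s)/\alpha(t)}$, which tends to $|\theta|^{\alpha(t)}$ only if $(\alpha(s) - \alpha(t)) \log r \to 0$; this is where a regularity condition on $\alpha$ enters.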

Of course, when $\alpha(t)$ is a constant $\alpha$ for all $t$, both MsLM are simply the Poisson representation of the $\alpha$-stable Lévy motion.

In general, the field-based and the independent-increments MsLM are semi-martingales. For more properties of these processes, such as Ferguson–Klass–LePage series representations, Hölder exponents, stochastic Hölder continuity, strong localizability, functional central limit theorems and the Hausdorff dimension of the range, we refer to [6,7,8]. See also [9,10] for wavelet series representations of multifractional multistable processes.

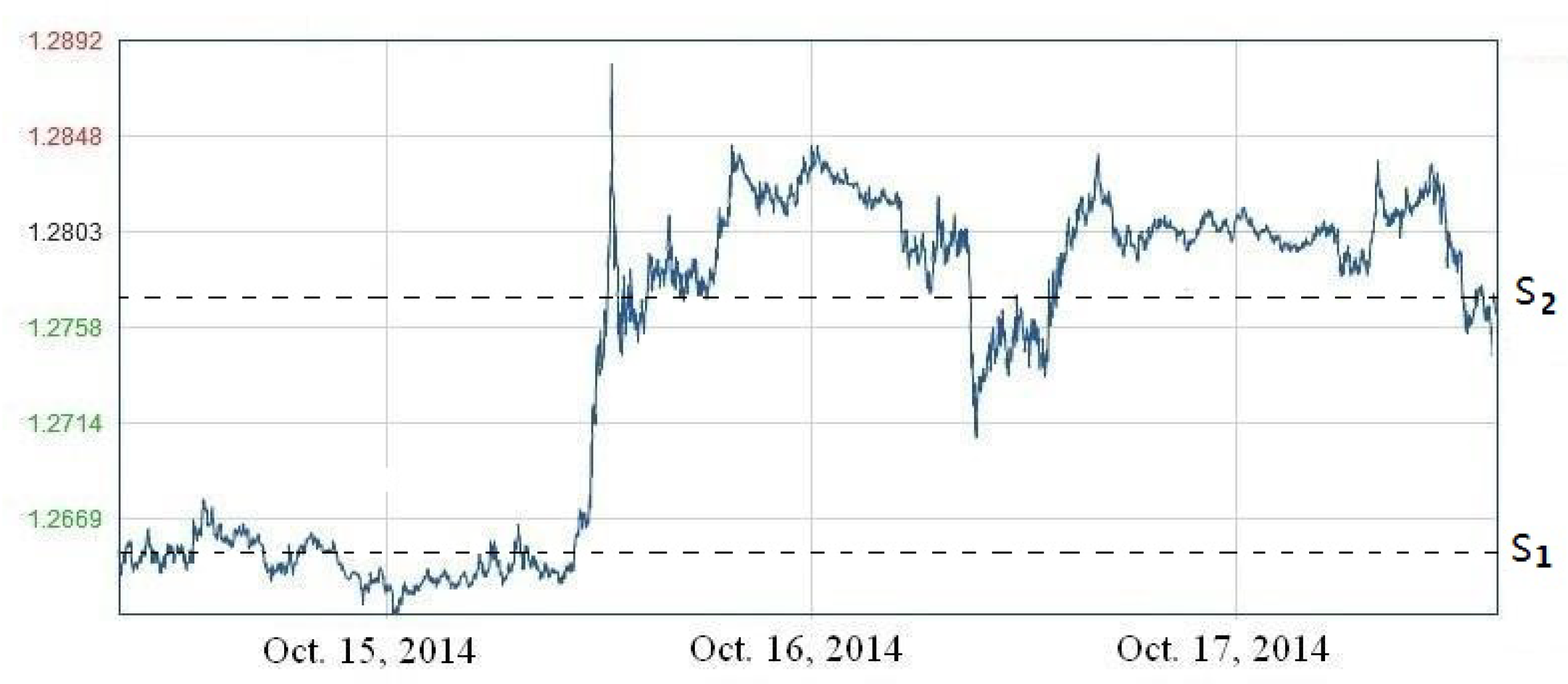

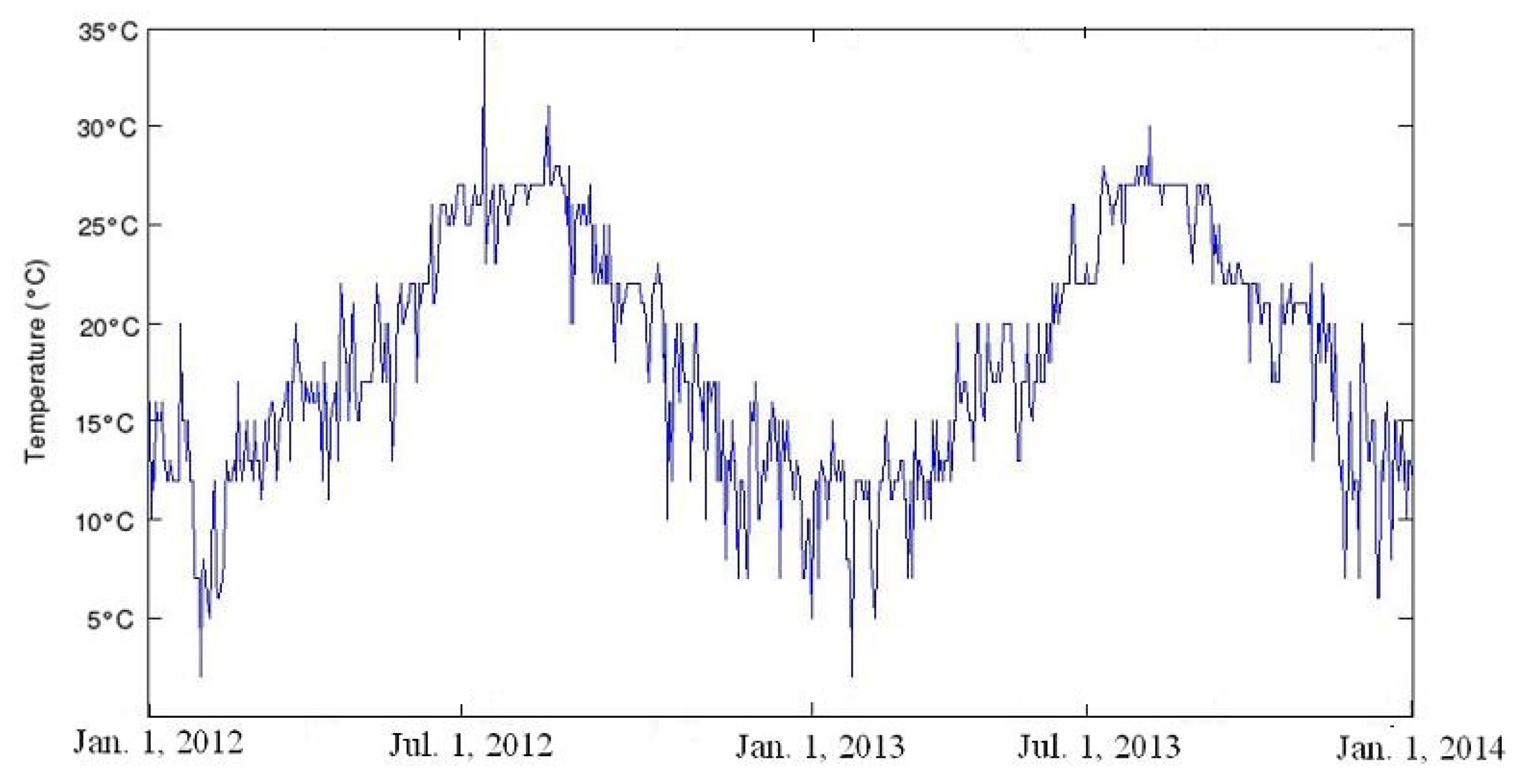

Similar to the MsLM, some stochastic processes have the property that the “local intensity of jumps” varies with the values of the process. For instance, when one analyzes certain records such as stock market prices (see Figure 2), exchange rates (see Figure 3) or annual temperature (see Figure 4), there seems to exist a relation between the local value of the records, denoted by $S(t)$, and the local intensity of jumps, measured by the index of stability $\alpha(t)$.

This calls for the development of self-stabilizing models, i.e., a class of stochastic processes $S$ satisfying a functional equation of the form $\alpha(t) = g(S(t))$ almost surely for all $t$, where $g$ is a smooth deterministic function. All the information concerning the future evolution of $\alpha$ is then incorporated in $g$, which may be estimated from historical data under the assumption that the relation between $S$ and $\alpha$ does not vary in time. This class of models is in a sense analogous to local volatility models: instead of the local volatility depending on $S$, it is the local intensity of jumps that does so. This class of models is also in a sense analogous to MsLM: instead of depending on time $t$, the “local intensity of jumps” depends on the values of $S(t)$.

The main aim of this paper is to establish these self-stabilizing models, called self-stabilizing processes (cf. Falconer and Lévy Véhel [11]), via a Donsker-type construction. Formally, one says that a stochastic process $\{S(t), t \geq 0\}$ is a self-stabilizing process if, for almost all $t \geq 0$, $S$ is localizable at $t$ with tangent process $L_{g(S(t))}$, a $g(S(t))$-stable process, with respect to the conditional probability measure $\mathbb{P}(\,\cdot \mid S(t))$. In formula, it holds

$$\lim_{r \searrow 0} \frac{S(t + ru) - S(t)}{r^{1/g(S(t))}} = L_{g(S(t))}(u), \qquad (8)$$

where convergence is in finite-dimensional distributions with respect to $\mathbb{P}(\,\cdot \mid S(t))$. Formula (8) states that the “local intensity of jumps” varies with the values of $S(t)$ instead of time $t$. In particular, if $u = 1$, equality (8) implies that

$$S(t + r) - S(t) \approx r^{1/g(S(t))}\, L_{g(S(t))}(1),$$

provided that $r$ is small. Thus, it is natural to define approximations of $S$ by iterating this relation along a partition of the time axis, which illustrates the use of our method to prove the existence of these self-stabilizing processes. The main difficulty associated with this method lies in proving the weak convergence of these approximations. To this end, we make use of the Arzelà–Ascoli theorem and its generalization.
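The increment relation derived from (8) suggests the most direct discretization: on the grid $t_k = k/n$, draw each increment with the stability index read off the current value of the path. The sketch below is a hypothetical illustration of that idea only, not the construction used in Section 2 (which also involves a scale function); the function `g` is an arbitrary smooth example with values in $(1.1, 1.9)$, and stable samples are drawn with the Chambers–Mallows–Stuck method.

```python
import numpy as np


def sym_stable(alpha, rng):
    """One symmetric alpha-stable sample with unit scale (Chambers-Mallows-Stuck)."""
    v = rng.uniform(-np.pi / 2, np.pi / 2)
    w = rng.exponential()
    return (np.sin(alpha * v) / np.cos(v) ** (1 / alpha)
            * (np.cos((1 - alpha) * v) / w) ** ((1 - alpha) / alpha))


def self_stabilizing_path(g, n, rng, s0=0.0):
    """Approximate S on the grid t_k = k/n, k = 0, ..., n (hypothetical scheme).

    Iterates S(t_k) = S(t_{k-1}) + (1/n)**(1/g(S(t_{k-1}))) * Y_k, where Y_k is a
    fresh symmetric g(S(t_{k-1}))-stable variable with unit scale: the stability
    index is read off the current value of the path.
    """
    s = np.empty(n + 1)
    s[0] = s0
    for k in range(1, n + 1):
        a = g(s[k - 1])  # predictable stability index
        s[k] = s[k - 1] + (1.0 / n) ** (1.0 / a) * sym_stable(a, rng)
    return s


def g(x):
    """Hypothetical smooth link between value and stability index, in (1.1, 1.9)."""
    return 1.5 + 0.4 * np.tanh(x)


rng = np.random.default_rng(0)
path = self_stabilizing_path(g, 1000, rng)
print(path[-1], g(path[-1]))  # terminal value and the index it induces
```

With a constant $g \equiv \alpha$, the scheme is a sum of $n$ independent $S_\alpha(n^{-1/\alpha}, 0, 0)$ variables, so its value at $t = 1$ is exactly $S_\alpha(1, 0, 0)$; this gives a simple sanity check. For non-constant $g$, the real difficulty is to show that such approximations converge as $n \to \infty$, which is what the Arzelà–Ascoli argument addresses.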

The paper is organized as follows. In Section 2, we establish a self-stabilizing and self-scaling process. In Section 3, we show that it has many good properties, such as stochastic Hölder continuity and strong localizability. In particular, it is simultaneously a Markov process, a martingale (when $g$ takes values greater than 1), and a self-regulating process. Conclusions are presented in Section 4. In Appendix A, we give the Arzelà–Ascoli theorem and its generalization.

2. Existence of Self-Stabilizing and Self-Scaling Process

In this section, we make use of the general version of the Arzelà–Ascoli theorem to prove the existence of this self-stabilizing process. Moreover, our self-stabilizing process is also a self-scaling process. We call a random process self-scaling if the scale parameter is also a function of the value of the process.
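A self-scaling variant lets the scale depend on the current value as well. The sketch below, with hypothetical index and scale functions `g` and `sigma`, draws each increment with index and scale evaluated at the previous grid value and joins the grid values by straight lines, in the spirit of the polygonal interpolation used in Donsker's construction (Billingsley [12], Theorem 16.1). It is an illustrative assumption, not a transcription of the scheme in the proof below.

```python
import numpy as np


def sym_stable(alpha, size, rng):
    """Symmetric alpha-stable samples, unit scale; alpha may be an array (CMS method)."""
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)
    w = rng.exponential(1.0, size)
    return (np.sin(alpha * v) / np.cos(v) ** (1 / alpha)
            * (np.cos((1 - alpha) * v) / w) ** ((1 - alpha) / alpha))


def self_scaling_grid(g, sigma, n, n_paths, rng):
    """Values at t_k = k/n of the hypothetical scheme
    S_k = S_{k-1} + sigma(S_{k-1}) * n**(-1/g(S_{k-1})) * Y_k,
    where Y_k is symmetric g(S_{k-1})-stable with unit scale."""
    s = np.zeros((n_paths, n + 1))
    for k in range(1, n + 1):
        prev = s[:, k - 1]
        a = g(prev)                            # value-dependent stability index
        scale = sigma(prev) * n ** (-1.0 / a)  # value-dependent scale
        s[:, k] = prev + scale * sym_stable(a, n_paths, rng)
    return s


def polygon(s_grid, t):
    """Linear interpolation between grid values at time t in [0, 1]."""
    n = s_grid.shape[1] - 1
    k = min(int(np.floor(n * t)), n - 1)
    return s_grid[:, k] + (n * t - k) * (s_grid[:, k + 1] - s_grid[:, k])


def g(x):
    """Hypothetical index function with values in (1.1, 1.9)."""
    return 1.5 + 0.4 * np.tanh(x)


def sigma(x):
    """Hypothetical positive scale function with values in (1.0, 1.5]."""
    return 1.0 + 0.5 / (1.0 + x ** 2)


rng = np.random.default_rng(0)
paths = self_scaling_grid(g, sigma, 200, 1000, rng)
print(polygon(paths, 0.37)[:3])  # S_n(0.37) on three simulated paths
```

For constant $g \equiv \alpha$ and scale $\sigma_0$, the grid value at $k/n$ is a sum of $k$ independent $S_\alpha(\sigma_0 n^{-1/\alpha}, 0, 0)$ variables, hence exactly $S_\alpha(\sigma_0 (k/n)^{1/\alpha}, 0, 0)$, which is a convenient check of an implementation.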

Definition 1.

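We call the sequence $(f_n)_{n \geq 1}$ subequicontinuous on $D$ if for any $\varepsilon > 0$ there exist $\delta > 0$ and a sequence of nonnegative numbers $\varepsilon_n \to 0$ as $n \to \infty$ such that, for all functions $f_n$ in the sequence,

$$|f_n(s) - f_n(t)| \leq \varepsilon + \varepsilon_n$$

whenever $s, t \in D$ and $|s - t| < \delta$. In particular, if $\varepsilon_n = 0$ for all $n$, then $(f_n)_{n \geq 1}$ is equicontinuous. The following lemma gives a general version of the Arzelà–Ascoli theorem, whose proof is given in Appendix A.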

Lemma 1.

Assume that $(f_n)_{n \geq 1}$ is a sequence of real-valued continuous functions defined on a closed and bounded set $D \subset \mathbb{R}^d$. If this sequence is uniformly bounded and subequicontinuous, then there exists a subsequence that converges uniformly on $D$.
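To make the subequicontinuity condition concrete, consider the hypothetical sequence $f_n(t) = t + \sin(n^2 t)/n$ on $[0, 1]$ (our own example, not from the paper). Reading Definition 1 as the requirement $|f_n(s) - f_n(t)| \leq \varepsilon + \varepsilon_n$ whenever $|s - t| < \delta$, one can take $\delta = \varepsilon$ and $\varepsilon_n = 2/n$ for every $\varepsilon$, since $|f_n(s) - f_n(t)| \leq |s - t| + 2/n$. The derivatives $1 + n\cos(n^2 t)$ are not uniformly bounded, however, so the same $\delta$ does not work with $\varepsilon_n = 0$. The sketch estimates the relevant supremum on a grid.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def f(n, t):
    """Hypothetical example sequence: continuous on [0, 1], |f_n| <= 2."""
    return t + np.sin(n ** 2 * t) / n


def sup_increment(n, delta, h=1e-4):
    """Grid estimate of sup |f_n(s) - f_n(t)| over s, t in [0, 1], |s - t| < delta.

    Consecutive grid points are h apart, so any two points inside one window
    of `window` points are at most (window - 1) * h < delta apart.
    """
    t = np.linspace(0.0, 1.0, int(round(1.0 / h)) + 1)
    window = int(round(delta / h))
    vals = sliding_window_view(f(n, t), window)
    return float(np.max(vals.max(axis=1) - vals.min(axis=1)))


eps = delta = 0.01
# With eps_n = 2/n the inequality of Definition 1 holds for every n.
print(all(sup_increment(n, delta) <= eps + 2.0 / n for n in range(1, 51)))  # True
# With eps_n = 0 and the same delta it fails, e.g. for n = 50.
print(sup_increment(50, delta) > eps)  # True
```

The sequence is uniformly bounded, so Lemma 1 applies; here the whole sequence in fact converges uniformly to $t$, since $|f_n(t) - t| \leq 1/n$.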

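We give an approximation for a self-stabilizing and self-scaling process via Markov processes. The main idea behind this method is that the unknown stability index and scale parameter of the process at point $t$ are replaced by predictable values, namely the values computed from the approximating process at the preceding grid point. As the grid is refined, the predictable stability index converges in distribution to $g(S(t))$, provided that $g$ is a Hölder function and that the approximating process at the preceding grid point converges to $S(t)$ almost surely. The same argument holds for the scale parameter.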

Theorem 1.

Let $g$ be a Hölder function defined on $\mathbb{R}$ with values in a range $[a, b]$, where $0 < a \leq b \leq 2$.

Let $\sigma$ be a positive Hölder function defined on $\mathbb{R}$ and assume that $\sigma$ lies within a range $[c, d]$, where $0 < c \leq d < \infty$. There exists a self-stabilizing and self-scaling process $S$ such that it is tangent at $u$ to a stable Lévy process under the conditional expectation given $S(u)$.

Proof. The theorem can be proved in four steps.

Step 1. Donsker’s construction: For all and all set

where is a symmetric $\alpha$-stable random variable with unit scale parameter and is independent of the random variables for a given $\alpha$. Then, we define a sequence of partial sums

In particular, when and , this method is known as Donsker’s construction (cf. Theorem 16.1 of Billingsley [12], for instance). Define the processes , where

Then, for given $n$, is a Markov process. For simplicity of notation, denote this as

According to the construction of we have , and, for all

It is easy to see that, for all satisfying ,

where the last line follows from (10). In particular, it can be rewritten in the following form:

where as before. More generally, we have, for all and ,

where the last line follows from (12). Since is a Markov process, equality (13) also holds if is replaced by where and

Set and set for all and . It is worth noting that the following estimation holds:

Recall and . Then, we may assume that lies in the range . By (11), it is easy to see that the following inequalities hold for all and :

Thus, for all

By a similar argument, we have, for all

The inequalities (14) and (15) can be rewritten in the following form: for all

Step 2. Subequicontinuity of : Denote by . Next, we prove that for given the sequence is subequicontinuous on . By Theorem A2 and (14), it is easy to see that is equicontinuous with respect to . However, we can even prove a stronger result, namely that is Hölder equicontinuous of order with respect to . By (14) and the Billingsley inequality (cf. p. 47 of [12]), it is easy to see that, for all with and all

Similarly, by (15), for all with and all

For all we have

and

The random variable is dominated by the constant 2. Therefore, (19) and (17) imply that

where $C$ is a constant depending only on $M$ and . Thus, is Hölder equicontinuous of order with respect to . Similarly, the inequalities (20) and (18) imply that

where $C$ is a constant depending on and . The last inequality implies that for all , is Hölder subequicontinuous of order with respect to . Notice that

Thus, the sequence is subequicontinuous on

Step 3. Convergence for a subsequence: Denote by . For every given and , by Lemma 1, there exists a subsequence of and a function defined on such that uniformly on . By induction, the following relation holds:

Hence, the diagonal subsequence converges to on . Moreover, by (14) and (15), the following inequalities hold for all :

and, for all

By the Lévy continuity theorem, there exists a random process such that converges to in distribution for any , as the index of the subsequence tends to infinity. Similarly, by using (13) instead of (11), we can prove that for all there exists a random process such that converges to in finite-dimensional distributions, where is a subsequence of . Letting the index of the subsequence tend to infinity, by (13) and the dominated convergence theorem, we have, for all

Equality (23) also holds if is replaced by where and

Step 4. Self-stabilization of the limiting process: Next, we prove that $S$ is a self-stabilizing process, that is, $S$ is localizable at $u$ to a $g(S(u))$-stable Lévy motion under the conditional expectation given $S(u)$. For any and , from equality (23), it is easy to see that

Setting , we find that

From equality (23), by an argument similar to that of (14), we obtain, for all and all with ,

By an argument similar to that of (18), it follows that, for and ,

where $C$ is a constant depending on and . Since $g$ and the scale function are both Hölder functions, by (25), we get

and

in probability with respect to . Hence, using the dominated convergence theorem, we have

which means that $S$ is localizable at $u$ to a $g(S(u))$-stable Lévy motion under the conditional expectation given $S(u)$, where $L_{g(S(u))}$ is the standard symmetric $g(S(u))$-stable Lévy motion. This completes the proof of the theorem. □
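As a numerical companion to the localizability statement, one can start a hypothetical self-scaling recursion (an Euler-type scheme in which index and scale are evaluated at the current value, with our own choices of `g` and `sigma`) at a fixed value $x$, run it over a short interval $[0, r]$ with $m$ fine steps, and normalize the increment by $r^{1/g(x)}$. If the scheme is locally tangent to a stable Lévy motion, the empirical characteristic function of the normalized increment should be close to $\exp\big(-(\sigma(x)|\theta|)^{g(x)}\big)$, the characteristic function of $\sigma(x) L_{g(x)}(1)$; the factor $\sigma(x)$ comes from the scale function of this sketch. This is an illustration under stated assumptions, not a substitute for the proof.

```python
import numpy as np


def sym_stable(alpha, size, rng):
    """Symmetric alpha-stable samples, unit scale; alpha may be an array (CMS method)."""
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)
    w = rng.exponential(1.0, size)
    return (np.sin(alpha * v) / np.cos(v) ** (1 / alpha)
            * (np.cos((1 - alpha) * v) / w) ** ((1 - alpha) / alpha))


def g(x):
    """Hypothetical stability-index function with values in (1.1, 1.9)."""
    return 1.5 + 0.4 * np.tanh(x)


def sigma(x):
    """Hypothetical scale function with values in [1.0, 1.2]."""
    return 1.1 + 0.1 * np.cos(x)


def normalized_increment(x, r, m, n_paths, rng):
    """Samples of (S(r) - S(0)) / r**(1/g(x)) for the hypothetical scheme
    started at S(0) = x and run with m steps of length r/m."""
    s = np.full(n_paths, float(x))
    dt = r / m
    for _ in range(m):
        a = g(s)  # index and scale read off the current value
        s = s + sigma(s) * dt ** (1.0 / a) * sym_stable(a, n_paths, rng)
    return (s - x) / r ** (1.0 / g(x))


rng = np.random.default_rng(0)
x, theta = 0.0, 1.0
z = normalized_increment(x, 1e-4, 20, 20000, rng)
print(np.mean(np.cos(theta * z)))                  # empirical characteristic function
print(np.exp(-(sigma(x) * abs(theta)) ** g(x)))    # that of sigma(x) * L_{g(x)}(1)
```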

Remark 1.

Let us comment on Theorem 1.

- 1. A slightly different method by which to approximate the self-stabilizing and self-scaling process $S$ can be described as follows. Define By an argument similar to the proof of Theorem 1, there exists a subsequence of such that converges to the process $S$ in finite-dimensional distributions. Notice that with this method, all of the random variables are changed from to even when is a constant. However, in the proof of Theorem 1, we only add one random variable from to when is a constant.

- 2. Notice that if we define for all then, by an argument similar to that of Theorem 1, we can define the self-stabilizing and self-scaling process on the whole positive half-line via the limit of

- 3. An interesting question is whether converges to in finite-dimensional distributions. To answer this question, we use the criterion that if every subsequence of a sequence $(x_n)$ has a further subsequence that converges to $x$, then $(x_n)$ converges to $x$. According to the proof of the theorem, every subsequence of has a further subsequence that converges to $S$ in finite-dimensional distributions, where $S$ is defined by (23). Thus, the question reduces to proving that (23) defines a unique process $S$.