Abstract

Semiparametric panel data models are powerful tools for analyzing data in which covariates enter both linearly and nonlinearly. This study investigates the estimation and testing of a random effects semiparametric model (RESPM) with serially and spatially correlated nonseparable errors, combining profile quasi-maximum likelihood estimation with local linear approximation. Profile quasi-maximum likelihood estimators (PQMLEs) of the unknowns are constructed, together with a generalized F-test statistic for detecting nonlinear relationships. The asymptotic properties of the PQMLEs and the test statistic are proven under regular assumptions. The Monte Carlo results indicate that the PQMLEs and the test statistic perform well in finite samples, whereas ignoring the spatially and serially correlated errors leads to inefficient and biased estimators. Indonesian rice-farming data are used to illustrate the proposed approach; the analysis indicates that exhibits a significant nonlinear relationship with ; in addition, -, -, and have significant positive impacts on rice yield.

1. Introduction

By tracking each individual in a predetermined sample over time, a panel dataset with multiple observations per individual can be collected. In contrast to cross-sectional data (Cheng and Chen []), the panel data structure is rich enough to permit estimation and testing of regression models that include not only individual characteristics observed by the researchers but also unobserved characteristics that vary across individuals and/or time periods, simply referred to as “individual and/or time effects”. In recent decades, many studies have focused on parametric panel data models (Chamberlain [], Chamberlain [], Hsiao [], Baltagi [], Arellano [], Wooldridge [], Thapa et al. [], and Rehfeldt and Weiss []).

In practice, many spatial panel datasets are collected from “locations” and call for further study. Therefore, building on classic parametric panel data models, different types of parametric panel data models with spatial correlation across locations have been proposed. There are three fundamental types of spatial parametric panel data model (Elhorst []): the spatial autoregressive (SAR) model (Brueckner []), the spatial Durbin model (SDM) (LeSage [], Elhorst []), and the spatial error model (SEM) (Allers and Elhorst []). A more detailed discussion can be found in Elhorst [], Baltagi and Li [], Pesaran [], Kapoor et al. [], Lee and Yu [], Mutl and Pfaffermayr [], and Baltagi et al. []. Among these, the SEM introduced by Anselin [] accounts for interaction effects arising from spatially correlated determinants of the dependent variable that are excluded from the model or from unobserved shocks that follow a spatial pattern (Elhorst []). The development of testing and estimation of the SEM has been summarized in books by Baltagi [], Elhorst [], and Anselin [] as well as in surveys by Baltagi et al. [], Bordignon et al. [], and Kelejian and Prucha [], among others. However, the aforementioned SEM assumes that the only correlation over time results from the same regional effect being present throughout the panel. In spatial panel data analysis, this assumption may be too restrictive for real situations. For example, if an undetected shock influences investments across regions, behavioral relationships are affected, at least in the next few periods. Thus, ignoring serially correlated errors may lead to inefficient regression coefficient estimators (Baltagi []).

By adding serially correlated errors to SEM, parametric panel data models with serially and spatially correlated errors can be established. The extended models consider not only serial correlations through spatial units but also spatial correlations at every point temporally. Researchers have established two types of parametric panel data models with serially and spatially correlated separable and nonseparable errors. The model with the separable error was used to analyze the effects of public infrastructure investment on the costs and productivity of private enterprises (Cohen and Paul []). The MLE and corresponding asymptotic properties of spatial panel data model with serially and spatially correlated separable error were investigated by Lee and Yu []. A random effects parametric panel data model with serially and spatially correlated error was introduced by Parent and LeSage [], and the estimators were obtained using the Markov chain Monte Carlo (MCMC) method. Elhorst [] constructed the MLE of a parametric panel data model with serially and spatially correlated nonseparable error without establishing the asymptotic properties of the estimators. Lee and Yu [] established the QMLE and corresponding asymptotic properties for both linear panel data models with serially and spatially correlated separable and nonseparable errors.

Although the theories and applications of the aforementioned linear parametric panel data models with serially and spatially correlated separable/nonseparable errors have been thoroughly explored, these models are often impractical in applications because they lack flexibility and have limited ability to detect complicated structures (e.g., nonlinearity). Furthermore, a misspecification of the data generating process by the linear parametric panel data model may result in significant modeling bias and can lead to incorrect findings. For these reasons, Zhao et al. [] investigated the estimation and testing issues of a partially linear single-index panel data model with serially and spatially correlated separable errors using the semiparametric minimum average variance method and an F-type test statistic. Li et al. [] obtained the PQMLE, the generalized F-test, and the asymptotic properties for a partially linear nonparametric regression model with serially and spatially correlated separable error. Li et al. [] studied the PQMLEs of the unknowns for the fixed effects partially linear varying coefficient panel data model with serially and spatially correlated nonseparable error and derived their consistency and asymptotic normality. Bai et al. [] constructed the estimators of the parametric and nonparametric components of a partially linear varying-coefficient panel data model with serially and spatially correlated separable errors using weighted semiparametric least squares and weighted polynomial spline series methods, respectively, and proved their asymptotic normality.

By adding a nonparametric component to a random effects parametric model with serially and spatially correlated nonseparable errors, a new random effects semiparametric model (RESPM) can be established that concurrently captures the linearity and nonlinearity of the covariates, the spatial and serial correlation of the errors, and individual random effects. This study explores its PQMLE and hypothesis testing issues from theoretical, simulation, and application perspectives. Our proposed model differs from the model proposed by Li et al. [], which assumes that the spatially and serially correlated errors are separable. The difference in model structure leads to differences in the following aspects: (1) The parameter spaces (i.e., stationarity conditions) of the two models are different. (2) The estimation process, the assumptions, and the proofs of the theorems differ.

The remainder of this paper is organized as follows: In Section 2, a RESPM with serially and spatially correlated nonseparable error is introduced, and the estimation and testing procedures are established. Section 3 lists several regular assumptions and theorems for the estimators and test statistic. In Section 4 and Section 5, numerical simulations and real data analyses are presented. Finally, Section 6 summarizes the results. The Appendix contains the proofs of the lemmas and theorems.

Notation. Some important symbols and their definitions that appear throughout the paper are given in Table 1.

Table 1.

Some important symbols and their definitions.

2. Estimation and Testing

2.1. The Model

The form of RESPM with serially and spatially correlated nonseparable errors is as follows:

where denotes the i-th spatial unit; denotes the t-th period; denotes the observation of the response variable; and denote observations of p- and q-dimensional covariates, respectively; is a p-dimensional regression coefficient vector of ; is an unknown nonparametric function; represents the i-th individual random effect; and represents the it-th error term and is independent of .

Let , , , and . Then, model (1) can be expressed in the following matrix form

and its error term follows a serially and spatially correlated nonseparable error structure.

where and is a predetermined spatial weights matrix; , , and , , are the variances of and , respectively; and and denote the spatial and serial correlation coefficients, respectively. Similar to Elhorst [], model stationarity requires not only and but also

where and denote the largest and smallest eigenvalues of W, respectively. Furthermore, let be the true values of ; is the true value of .
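The stationarity region can be checked numerically. The following is a minimal sketch under stated assumptions, not the authors' code: because the displayed equations are not reproduced in this text, it assumes an Elhorst-type nonseparable recursion u_t = λ W u_t + ρ u_{t-1} + v_t, for which stationarity amounts to 1 − λω_i > 0 for every eigenvalue ω_i of W together with |ρ| < min_i (1 − λω_i). The names `is_stationary` and `simulate_errors`, the burn-in length, and the Gaussian innovations are hypothetical choices made for illustration.

```python
import numpy as np


def is_stationary(lam, rho, W):
    """Check the assumed stationarity region for (lam, rho) given weights W."""
    omega = np.linalg.eigvals(W).real      # eigenvalues of W (assumed real)
    gaps = 1.0 - lam * omega               # must all be positive
    if np.any(gaps <= 0):
        return False
    # |rho| must stay below the smallest gap, which is attained at the
    # largest eigenvalue when lam >= 0 and at the smallest when lam < 0.
    return bool(abs(rho) < gaps.min())


def simulate_errors(lam, rho, W, T, sigma=1.0, burn_in=200, seed=0):
    """Simulate an N x T array from u_t = lam*W*u_t + rho*u_{t-1} + v_t (assumed form)."""
    rng = np.random.default_rng(seed)
    N = W.shape[0]
    C_inv = np.linalg.inv(np.eye(N) - lam * W)   # requires I - lam*W nonsingular
    u = np.zeros(N)
    out = np.empty((N, T))
    for t in range(burn_in + T):
        v = rng.normal(0.0, sigma, size=N)
        u = C_inv @ (rho * u + v)                # reduced form of the recursion
        if t >= burn_in:
            out[:, t - burn_in] = u
    return out


# Two units that are each other's only neighbour; eigenvalues of W are +1 and -1.
W = np.array([[0.0, 1.0], [1.0, 0.0]])
print(is_stationary(0.3, 0.5, W), is_stationary(0.3, 0.8, W))
```

Discarding a burn-in period is one simple way to approximate a draw from the stationary distribution without deriving the first-period covariance explicitly.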

2.2. PQMLE

To ensure homogeneity of variance, (3) can be rewritten as

where , denotes an identity matrix. By repeated substitution, we obtain the following equation: because C is nonsingular. Therefore, , and the variance of is

Substituting and into (3), we obtain

Because and , model (2) for the first period of can be written as

where and may potentially be the Cholesky or spectral decomposition of ; , ⊗ denotes the Kronecker product. Letting , then , and the covariance matrix of can be calculated as follows:

where and ; I denotes the identity matrix; and is a T-dimensional vector consisting of ones. Hence, the log quasi-likelihood function of the proposed model is obtained as follows:

where

Because is unknown, Equation (6) cannot be maximized to obtain the quasi-maximum likelihood estimators of the unknowns. To solve this issue, the unknowns of the model are estimated by combining PQMLE and Working Independence Theory (Cai []). The primary steps are as follows:

Step 1. Let be the initial estimator of . We further assume that is known and let . Then, can be obtained as

where is the first derivative of , , , , ; is the determinant of , ; and is a q-dimensional kernel function.

Let , then and

where , .

Step 2. Denote , where is an block of in Equation (7) and . Substituting into (6), we obtain

where , . Therefore, the initial estimators of , , and are:

where , and .
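The two steps above can be illustrated with a deliberately simplified sketch. It is an assumption-laden stand-in rather than the PQMLE itself: it uses a scalar covariate z, a Gaussian kernel, working independence (the error covariance is ignored), and ordinary least squares in place of the quasi-likelihood maximization. The function names `local_linear_weights`, `smoother_matrix`, and `profile_fit` are hypothetical.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def local_linear_weights(z, z0, h):
    """Weights s(z0) such that the local linear fit at z0 equals s(z0) @ y."""
    d = z - z0
    w = gaussian_kernel(d / h) / h
    D = np.column_stack([np.ones_like(d), d])   # local design: intercept and slope
    DtW = D.T * w                               # 2 x n, kernel-weighted design
    A = np.linalg.solve(DtW @ D, DtW)           # 2 x n hat rows
    return A[0]                                 # intercept row gives m_hat(z0)


def smoother_matrix(z, h):
    """Stack the local linear weights at every observed z into an n x n matrix."""
    return np.vstack([local_linear_weights(z, z0, h) for z0 in z])


def profile_fit(y, X, z, h):
    """Profile out m(.) by smoothing, then estimate beta by least squares."""
    S = smoother_matrix(z, h)
    y_tilde = y - S @ y                         # response with smooth part removed
    X_tilde = X - S @ X                         # covariates with smooth part removed
    beta, *_ = np.linalg.lstsq(X_tilde, y_tilde, rcond=None)
    m_hat = S @ (y - X @ beta)                  # plug beta back in to update m
    return beta, m_hat


rng = np.random.default_rng(1)
n = 300
z = rng.uniform(0.0, 1.0, n)
X = rng.normal(size=(n, 1))
y = 1.5 * X[:, 0] + np.sin(2 * np.pi * z) + 0.1 * rng.normal(size=n)
beta_hat, m_hat = profile_fit(y, X, z, h=0.08)
print(beta_hat)
```

In the paper's setting, the least-squares step would be replaced by maximization of the concentrated quasi-likelihood over the variance and correlation parameters; the sketch only shows how the nonparametric component is profiled out.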

2.3. Hypothesis Test for Nonparametric Component

Building on the estimators in Section 2.2, a hypothesis test is constructed to check whether the nonparametric specification in model (1) is warranted, as follows:

where are constant parameters. Following the generalized F-test statistic introduced by Fan et al. [], let be the estimator obtained by the PQML method in Section 2.2. Let and be the quasi-maximum likelihood estimators. The resulting residual sum of squares can be obtained using the null and alternative hypotheses, respectively:

To test the null hypothesis, a test statistic is constructed as follows:
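For orientation, a generalized F-type statistic in the spirit of Fan et al. [] is often written as (n/2)(RSS0 − RSS1)/RSS1, where RSS0 and RSS1 are the residual sums of squares under the null and alternative hypotheses and n is the number of observations (here NT). The sketch below assumes this form, since the exact expression is not reproduced in this text; the function names are hypothetical, and any transformation of the residuals by the estimated error covariance is left out.

```python
import numpy as np


def rss(y, fitted):
    """Residual sum of squares for a given vector of fitted values."""
    r = np.asarray(y, dtype=float) - np.asarray(fitted, dtype=float)
    return float(r @ r)


def generalized_f(rss0, rss1, n_obs):
    """Assumed generalized F-type statistic: (n/2) * (RSS0 - RSS1) / RSS1."""
    return 0.5 * n_obs * (rss0 - rss1) / rss1


# Toy numbers only: RSS under H0 = 12, under H1 = 10, with n = 100 observations.
print(generalized_f(12.0, 10.0, 100))
```

Large values of the statistic indicate that the nonparametric fit reduces the residual sum of squares by more than would be expected under the linear null.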

3. Asymptotic Theory

3.1. Assumptions

Before presenting the theoretical analysis of the proposed PQMLEs and test statistic, we make some regular assumptions.

Assumption 1.

- (i)

- Covariates are nonstochastic regressors which are uniformly bounded (UB) in , where and Δ are parameter spaces of and , respectively.

- (ii)

- There exist and a continuous function that satisfy and .

Assumption 2.

- (i)

- , , where .

- (ii)

- When , holds.

- (iii)

- C is nonsingular in parameter space Λ.

- (iv)

- The row and column sums of W and are UB.

Assumption 3.

As , , , and , where M is a constant.

Assumption 4.

 is a kernel function on whose odd-order moments are zero.

Assumption 6.

is a positive definite matrix, as defined in Theorem 2.

Remark 1.

Assumption 1 provides the features of the covariates, random error term, and density function. Assumption 2 concerns the basic features of the spatial weights matrix and parallels Assumption 3 in Su and Jin [] and Assumption 5 in Lee []. Assumptions 3 and 4 are related to the bandwidth and kernel functions. Specifically, Assumption 3 is similar to Assumption 4 of Hamilton and Truong [] and Assumption 7 of Su and Ullah [] and is easily satisfied by considering with for . Assumptions 5 and 6 are important for proving the consistency and asymptotic properties of the estimators.

3.2. Asymptotic Properties

The reduced form of is obtained as follows:

Note that , where . Denote a typical entry of by , where is a typical column of .

Lemma 1.

Upon fulfilling Assumptions 1–5, we have

Hamilton and Truong [] provided the proof.

Lemma 2.

Upon fulfilling Assumptions 1–5, we have

- (i)

- , where .

- (ii)

- for .

- (iii)

- .

Su and Ullah [] provided the proofs.

Lemma 3.

Upon fulfilling Assumptions 1–5, we have

Hamilton and Truong [] provided the proof.

Using the lemmas listed above, we establish the following theorems:

Theorem 1.

Fulfillment of Assumptions 1–5 leads to .

Theorem 2.

Theorem 3.

Fulfillment of Assumptions 1–4 leads to

where and the form of is provided in the Appendix.

Theorem 4.

Fulfillment of Assumptions 1–4 leads to

where , Δ is the support set of ι, .

Remark 2.

This paper focuses on the case with a large N and fixed T. The estimation procedure itself places no restriction on N and T, so it can be applied to both fixed-T and large-T cases. However, the asymptotic theory of the estimators may differ between the fixed-T and large-T cases.

4. Monte Carlo Simulation

4.1. Performance of PQMLEs

This section uses Monte Carlo simulation to examine the performance of the PQMLEs in finite samples. The mean square error (MSE), mean absolute deviation error (MADE), and standard deviation (SD) are used as the evaluation criteria, where

 are fixed nodes in the support set of , and srt denotes the number of repetitions. In the subsequent simulations, (Su []), the rule-of-thumb method (Mack and Silverman []) is used to select the bandwidth, and srt is set to 1000.
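The evaluation criteria can be computed as in the sketch below. Because the displayed definitions are not reproduced in this text, the sketch assumes the usual conventions: the MSE is the average squared deviation of the replicated estimates from the true value, the SD is the standard deviation of the replicated estimates (with divisor equal to the number of repetitions), and the MADE averages the absolute error of the estimated function over the fixed nodes. The function names are hypothetical.

```python
import numpy as np


def mse(estimates, truth):
    """Mean square error of replicated parameter estimates around the true value."""
    e = np.asarray(estimates, dtype=float)
    return float(np.mean((e - truth) ** 2))


def sd(estimates):
    """Standard deviation of replicated estimates (divisor = number of repetitions)."""
    return float(np.std(np.asarray(estimates, dtype=float), ddof=0))


def made(m_hat, m_true):
    """Mean absolute deviation error of a function estimate over fixed nodes."""
    diff = np.asarray(m_hat, dtype=float) - np.asarray(m_true, dtype=float)
    return float(np.mean(np.abs(diff)))


# Toy check: two replications of a parameter whose true value is 2.
print(mse([1.0, 3.0], 2.0), sd([1.0, 3.0]), made([1.0, 2.0, 3.0], [1.0, 1.0, 1.0]))
```

In a full experiment, `made` would be evaluated once per repetition, and the median and SD of the resulting values would then be tabulated.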

The simulation data are generated using the following model:

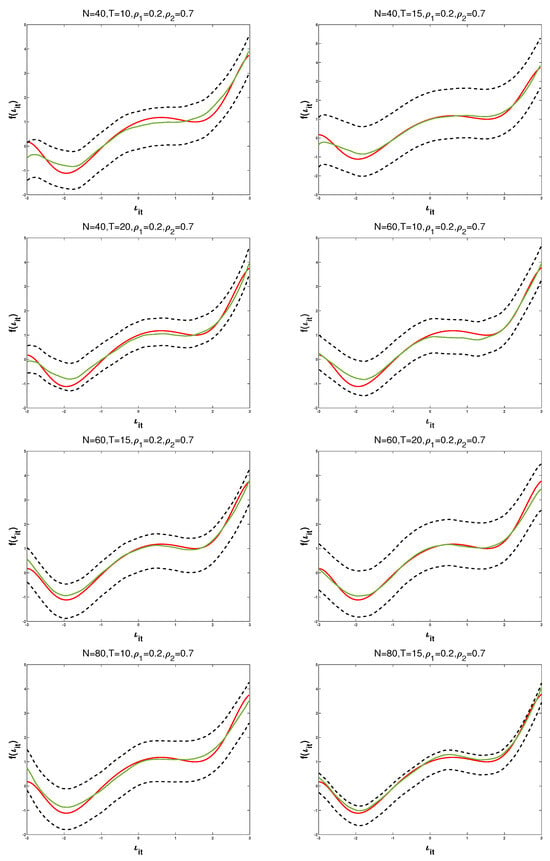

where N and T are the numbers of spatial units and time periods, respectively. To study the performance of the PQMLEs in different finite-sample settings, we take and , respectively. , , , , , , respectively. According to the parameter space of and , we set , and , respectively. is taken to be the rook weights matrix (Anselin []). The simulation results are reported in Figure 1 and Tables 2–7.
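A rook weights matrix treats two units on a regular grid as neighbours when they share an edge. The following sketch builds such a matrix; the grid layout, the row-major indexing, and the row normalization are assumptions made for illustration (the text does not state how the N units are arranged), and `rook_weights` is a hypothetical helper name.

```python
import numpy as np


def rook_weights(n_rows, n_cols, row_normalize=True):
    """Rook contiguity matrix for an n_rows x n_cols grid, indexed row by row."""
    n = n_rows * n_cols
    W = np.zeros((n, n))
    for r in range(n_rows):
        for c in range(n_cols):
            i = r * n_cols + c
            # Neighbours share an edge: up, down, left, right.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < n_rows and 0 <= cc < n_cols:
                    W[i, rr * n_cols + cc] = 1.0
    if row_normalize:
        # Every cell has at least one neighbour when the grid has two or more cells.
        W = W / W.sum(axis=1, keepdims=True)
    return W


# For example, N = 40 units could be laid out on a 5 x 8 grid.
W40 = rook_weights(5, 8)
print(W40.shape, W40.sum(axis=1)[:3])
```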

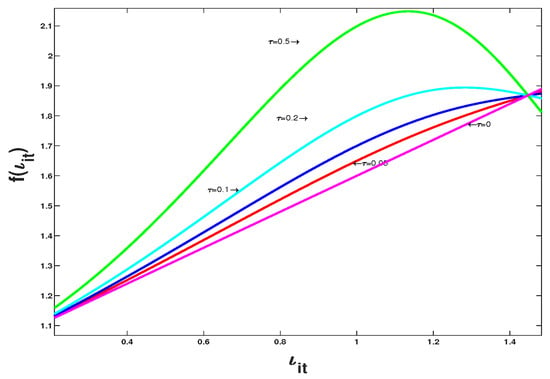

Figure 1.

and its 95% confidence intervals with and under different N and T.

Table 2.

Parametric simulation results with N = 40.

Table 3.

Parametric simulation results with N = 60.

Table 4.

Parametric simulation results with N = 80.

Table 5.

Nonparametric simulation results for medians and SDs of MADEs with N = 40.

Table 6.

Nonparametric simulation results for medians and SDs of MADEs with N = 60.

Table 7.

Nonparametric simulation results for medians and SDs of MADEs with N = 80.

Figure 1 shows the trajectories of and its 95% confidence interval for and . In Figure 1, the green solid, red solid, and black dashed curves denote , , and the corresponding 95% confidence intervals, respectively. A close look at each curve and panel shows that is quite close to and that its confidence band is narrow, indicating that the PQMLE of the nonparametric component works well in finite samples.

In Tables 2–7, we observe the following. First, the of , , and are extremely small and decrease as N increases. The of and are slightly larger than those of the other parameter estimates but decrease as N or T increases. When N is fixed and T gradually increases, the parameter estimates behave much as they do when N varies under a fixed T. Second, the s and s for s of decrease as T or N increases. Thus, the simulation evidence is consistent with the convergence of the PQMLEs.

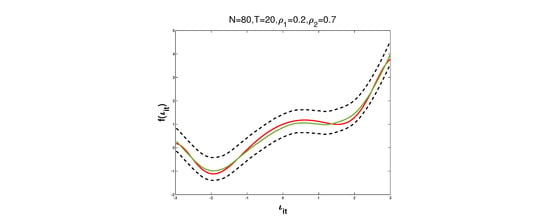

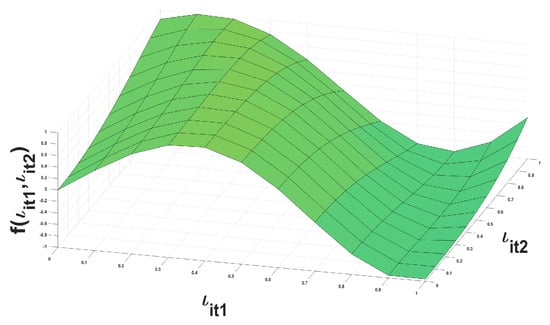

4.2. Monte Carlo Simulation of Multivariate Nonparametric Estimates

A two-dimensional nonparametric function is selected for the simulation experiment. Considering model (21), we set , , , , , , and . The settings of the other variables in this simulation are the same as those of the model in Section 4.1. The simulation results indicate that the parametric estimation results are similar to those described in Section 4.1. To save space, we only present the nonparametric simulation results. Figure 2 and Figure 3 show the trajectories of and , where Figure 2 is and Figure 3 is . The median and SD values of are 0.2987 and 0.1328, respectively. The trajectory of is similar to the real trajectory, and the fitting effect is acceptable.

Figure 2.

The trajectory of .

Figure 3.

The trajectory of .

4.3. Simulation Results of Different Models

To examine the necessity of including , , and of the model (21), four new models are established by successively eliminating , , , , and from model (21) as follows:

where all the variables of the aforementioned four models have the same settings as in model (21). To save space, the following simulation results only present the cases of , , , , and . The PQMLE results for the parameters of models (21)–(25) are provided in Table 8.

Table 8 displays the parametric estimation results for models (21)–(25), where represents the growth rate of the MSE relative to the MSE of model (21). From Table 8, the following facts can be deduced: (1) The s of the parameter estimates in model (21) move closer to the true values as the sample size increases. The s of the parameter estimates in models (22)–(25) approach the true values with increasing sample size except for , , and in model (22), and in model (23), and in model (24), and all the parameters in model (25). (2) Compared to model (21), the s of each parameter in models (22)–(25) increase, and for almost all parametric estimates, they decrease with increasing sample size, except for and in model (22), in model (23), and in model (24). (3) The of all parametric estimates are large. Moreover, do not decrease with increasing sample size for some parameter estimates, such as and in model (22), and in model (23), and and in model (24). This implies that consistency of parameter estimation is difficult to ensure under model misspecification.

4.4. Performance of the Testing

In this section, to assess the performance of in Section 2.3, the power is calculated. Consider model (21), where the settings of and remain unchanged from those in Section 4.1, namely, , , , , 16, . Thus, we study the following hypotheses:

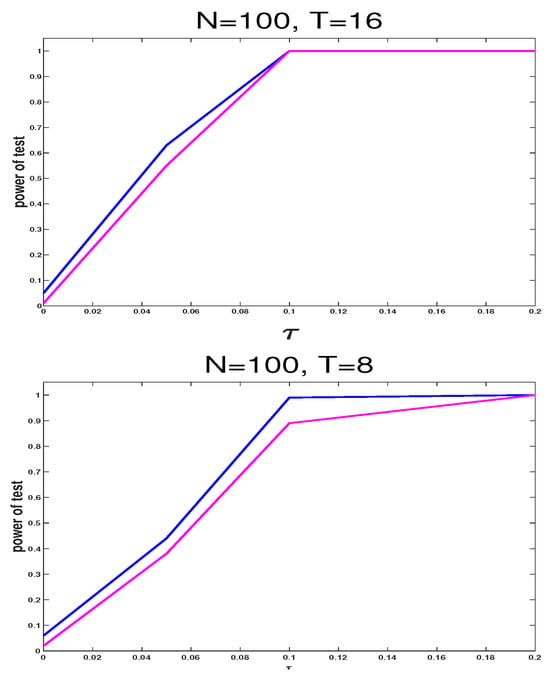

where denotes a series of nonparametric functions with , and is set to . is a combination of a linear function and a nonparametric function controlled by . When is 0, is a linear function, and as soon as increases slightly, becomes a nonlinear function. The trajectories of at different values are shown in Figure 4, where the trajectory of at clearly coincides with that under the null hypothesis. As increases, the trajectory of deviates further from the null hypothesis. The powers of are shown in Figure 5.

Figure 4.

Trajectories of under , and , respectively.

Figure 5.

The test size ( = 0) and the powers () of test statistic under , respectively, with sample size .

Figure 5 shows the following. (1) When = 0, the rejection rate (the test size) is close to the significance level, and the power rises sharply as increases. This implies that is sensitive to departures from the null hypothesis in the given testing problem. (2) For the same N, the test at T = 16 outperforms the test at the smaller value of T. This demonstrates that testing performance improves with increasing sample size.
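The size and power curves in such an experiment are empirical rejection rates. The sketch below shows the bookkeeping only, under stated assumptions: `m_theta` is a hypothetical family of alternatives (a linear function plus a nonlinear perturbation scaled by theta, with placeholder coefficients and a placeholder sine perturbation, not the functions used in the paper), and `rejection_rate` takes test statistics and a critical value that would come from the actual simulation.

```python
import numpy as np


def m_theta(z, theta, g=np.sin):
    """Hypothetical alternative: linear part plus theta times a nonlinear part g."""
    z = np.asarray(z, dtype=float)
    return 1.0 + 0.5 * z + theta * g(z)    # coefficients are placeholders


def rejection_rate(stats, critical_value):
    """Share of replications whose statistic exceeds the critical value."""
    stats = np.asarray(stats, dtype=float)
    return float(np.mean(stats > critical_value))


# theta = 0 reproduces the linear null, so the rejection rate then estimates the size;
# for theta > 0 the same quantity estimates the power.
z = np.linspace(0.0, 3.0, 4)
print(m_theta(z, 0.0))
print(rejection_rate([1.0, 2.0, 3.0, 4.0], 2.5))
```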

5. Real Data Analysis

In this section, the proposed estimation and testing methods are demonstrated on the Indonesian rice farm dataset. This dataset is collected by the Agricultural Economic Research Center of the Ministry of Agriculture of Indonesia. It covers 171 farms over six growing seasons (three wet and three dry seasons) and has been widely used in random effects models (Feng and Horrace []).

The dataset includes observations of five variables: rice yield, high-yield varieties, mixed-yield varieties, seed weight, and land area. Rice yield is taken as the response variable, and the others are taken as covariates; their definitions are given in Table 9.

Table 9.

The description of variables.

The testing method proposed in Section 2.3 is used to determine whether a nonlinear connection exists between the covariates and response variable. The outcomes of the F-test are listed in Table 10. Table 10 shows that (other covariates) exhibits a significant nonlinear (linear) relationship with at a significance level of .

Table 10.

F-test results with .

Therefore, the following model is established for the data analysis:

where , , and denote the i-th observation of log(rice), high- and mixed-yield varieties, seed weight, and land area during the t-th growing season, respectively. Furthermore, the is determined using the following method (Druska and Horrace []):

where denote the i-th and j-th village, respectively. Moreover, the weights matrix is normalized to ensure the elements of each row sum to 1. Additionally, the weights matrix is assumed time invariant, so the t subscript can be dropped.
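A village-based weights matrix of this kind can be built as in the sketch below. It assumes, since the displayed formula is not reproduced in this text, that two distinct farms receive weight 1 when they lie in the same village and 0 otherwise, followed by the row normalization described above. The function name `village_weights` and the toy village labels are hypothetical.

```python
import numpy as np


def village_weights(village_ids):
    """Row-normalized weights: farms are neighbours if they share a village."""
    v = np.asarray(village_ids)
    W = (v[:, None] == v[None, :]).astype(float)   # 1 when two farms share a village
    np.fill_diagonal(W, 0.0)                       # a farm is not its own neighbour
    row_sums = W.sum(axis=1, keepdims=True)
    # Divide each row by its sum; rows without neighbours are left as zeros.
    np.divide(W, row_sums, out=W, where=row_sums > 0)
    return W


# Toy example: five farms, two in village 0 and three in village 1.
W = village_weights([0, 0, 1, 1, 1])
print(W)
```

Because the village labels do not change across growing seasons, the same matrix can be reused for every period, in line with the time-invariance assumption.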

Table 11 shows the parametric estimation results, which reveal the following: (1) All linear covariates have a positive impact on rice yield. (2) demonstrates that the rice yield is generally steady and less susceptible to exogenous perturbations.

Table 11.

Parameter estimation results.

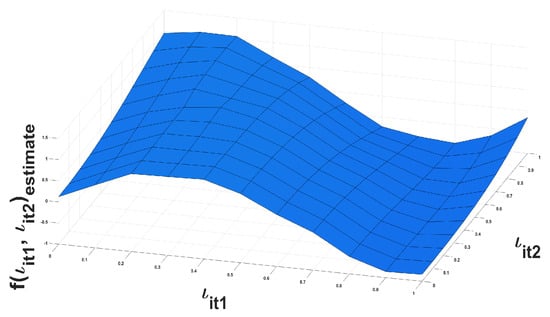

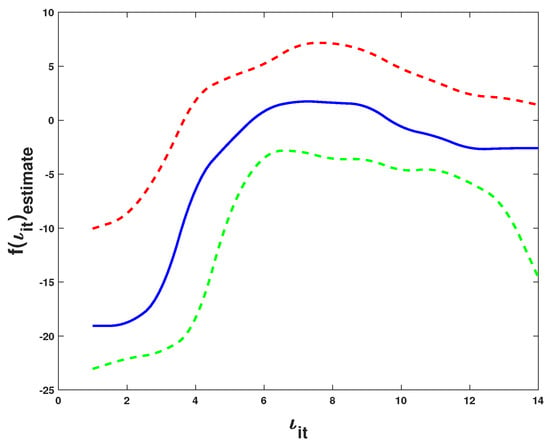

Figure 6 presents and its 95% confidence interval, where the blue short dashed curve denotes , and the red and green solid curves correspond to the 95% confidence bands. Clearly, has a nonlinear effect on rice yield.

Figure 6.

The nonlinear effect of () on ().

6. Summary

This study mainly explores the PQMLE and F-test of the RESPM with serially and spatially correlated nonseparable errors. Our model simultaneously captures the linear and nonlinear effects of the covariates, the spatial and serial correlation of the errors, and individual random effects. PQMLEs are constructed for the unknowns, along with a test statistic for detecting nonlinear relationships. Their asymptotic properties are derived under regular conditions. The Monte Carlo results indicate that the proposed estimators and test statistic behave well in finite samples and that ignoring the spatial and serial correlations of errors may lead to inefficient and biased estimators. The proposed estimation and testing techniques are used to analyze Indonesian rice farming data.

The paper can be extended in several ways. We can apply spline estimation method or local polynomial method to approximate the nonparametric function, and combine GMM (Cheng and Chen []), quadratic inference function estimation (Qu []) or PQMLE to obtain the estimators of unknowns. Additionally, we can also consider extending these methods to other similar models, and the Bayesian analysis, variable selection, and quantile regression analysis of these models are also worth studying. In the future, we may use the proposed estimation and test methods to conduct empirical analysis, for example, investigating the drivers of , and energy efficiency.

Author Contributions

Formal analysis, S.L. and D.C.; methodology, S.L. and J.C.; software and writing—original draft, S.L.; supervision, writing—review and editing, and funding acquisition, J.C.; data curation, D.C. All authors have read and agreed to the published version of manuscript.

Funding

This research was funded by National Social Science Fund of China (22BTJ024) and Natural Science Foundation of Fujian Province (2020J01170, 2022J01193).

Data Availability Statement

Data is available upon request from the authors.

Acknowledgments

The authors thank the editors and reviewers for their hard work, who have made outstanding contributions to the improvement of the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Proof of Theorem 1.

Define . A straightforward calculation provides

and

The method for proving the consistency of is adopted from Lee [] and White [] (Theorem 3.4), to show

By (11), we have .

First, we prove that (A2) holds. Recall

where , , and . By a straightforward calculation, we obtain:

where and .

For the above expression of , we find that the first term converges to the probabilistic mean with certainty.

The above expression for can be decomposed as follows:

where ,

By simple calculations and Lemma 1, we obtain

and

According to Chebyshev’s law of large numbers, . Similarly, we obtain . Therefore, is proven, and thus, . The proof of is similar to that of and is omitted to avoid repetition. Combined with Assumption 5, the consistency of is obtained.

□

Proof of Theorem 2.

Taylor expanding (8) at , we obtain

where and lies in between and . By Theorem 1, we have .

Denote

Thus,

Next, we need to prove

and

To prove (A5), we need to show that each element of converges to 0 in probability. It can be shown that

where and is identity matrix.

Consequently, we have

So, according to Assumption 2 and Fact 2 in Lee [], it is important to know

Then, we have

Now, to prove (A6), it follows from Assumption 1 and Theorem 1 in Kelejian and Prucha [] that is asymptotically normally distributed with mean 0. The variance is calculated as follows:

where

and are the third and fourth moments, and are the true value of , is the true value of , respectively.

Therefore, according to Assumption 6 and the forms of and , we obtain

□

Proof of Theorem 3.

Recall and

then

where and

Let by the second order Taylor expression, we know that . It is easy to obtain

Therefore,

and

where and

For , write

For , it is easy to know that and

For , by Lemma 2 and Facts 1, 2 (Lee []) and Theorem 1, we can show that and , then can be obtained. Following Slutsky’s theorem and central limit theorem, we obtain

where . □

Proof of Theorem 4.

It can be seen that

where and . For , it can be obtained by Theorems 1–3 and the calculation that

Similarly, we have that

where , and . For , it can be obtained by Theorems 1–3 and the calculation that

Furthermore, we obtain that

According to the Remark 3.4 in Fan et al. [], under , we have

where , , is the support set of , , and is the convolution of K. □

References

- Cheng, S.; Chen, J. GMM estimation of partially linear additive spatial autoregressive model. Comput. Stat. Data Anal. 2023, 182, 107712.

- Chamberlain, G. Multivariate regression models for panel data. J. Econ. 1982, 18, 5–46.

- Chamberlain, G. Panel data. Handb. Econom. 1984, 2, 1247–1318.

- Hsiao, C. Analysis of Panel Data; Cambridge University Press: Cambridge, UK, 2022; Volume 64.

- Baltagi, B.H. Econometric Analysis of Panel Data; Springer: Berlin/Heidelberg, Germany, 2008.

- Arellano, M. Panel Data Econometrics; Oxford University Press: Oxford, UK, 2003.

- Wooldridge, J.M. Econometric Analysis of Cross Section and Panel Data; MIT Press: Cambridge, MA, USA, 2010.

- Thapa, S.; Lomholt, M.A.; Krog, J.; Cherstvy, A.G.; Metzler, R. Bayesian analysis of single-particle tracking data using the nested-sampling algorithm: Maximum-likelihood model selection applied to stochastic-diffusivity data. Phys. Chem. Chem. Phys. 2018, 20, 29018–29037.

- Rehfeldt, F.; Weiss, M. The random walker’s toolbox for analyzing single-particle tracking data. Soft Matter 2023, 19, 5206–5222.

- Elhorst, J.P. Spatial Econometrics: From Cross-Sectional Data to Spatial Panels; Springer: Berlin/Heidelberg, Germany, 2014.

- Brueckner, J.K. Strategic interaction among governments: An overview of empirical studies. Int. Reg. Sci. Rev. 2003, 26, 175–188.

- LeSage, J.P. An introduction to spatial econometrics. Rev. Econ. Ind. 2008, 123, 19–44.

- Elhorst, J.P. Applied spatial econometrics: Raising the bar. Spat. Econ. Anal. 2010, 5, 9–28.

- Allers, M.; Elhorst, J. Tax mimicking and yardstick competition among local governments in The Netherlands. Int. Tax Public Financ. 2005, 12, 493–513.

- Elhorst, J.P. Specification and estimation of spatial panel data models. Int. Reg. Sci. Rev. 2003, 26, 244–268.

- Baltagi, B.H.; Li, D. Prediction in the panel data model with spatial correlation. In Advances in Spatial Econometrics: Methodology, Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2004; pp. 283–295.

- Pesaran, M.H. General diagnostic tests for cross-sectional dependence in panels. Empir. Econ. 2021, 60, 13–50.

- Kapoor, M.; Kelejian, H.H.; Prucha, I.R. Panel data models with spatially correlated error components. J. Econ. 2007, 140, 97–130.

- Lee, L.; Yu, J. Estimation of spatial autoregressive panel data models with fixed effects. J. Econ. 2010, 154, 165–185.

- Mutl, J.; Pfaffermayr, M. The Hausman test in a Cliff and Ord panel model. Econ. J. 2011, 14, 48–76.

- Baltagi, B.H.; Egger, P.; Pfaffermayr, M. A generalized spatial panel data model with random effects. Econ. Rev. 2013, 32, 650–685.

- Anselin, L. The Scope of Spatial Econometrics. In Spatial Econometrics: Methods and Models; Springer: Dordrecht, The Netherlands, 1988; pp. 7–15.

- Baltagi, B.H.; Song, S.H.; Koh, W. Testing panel data regression models with spatial error correlation. J. Econ. 2003, 117, 123–150.

- Bordignon, M.; Cerniglia, F.; Revelli, F. In search of yardstick competition: A spatial analysis of Italian municipality property tax setting. J. Urban Econ. 2003, 54, 199–217.

- Kelejian, H.H.; Prucha, I.R. On the asymptotic distribution of the Moran I test statistic with applications. J. Econ. 2001, 104, 219–257.

- Cohen, J.P.; Paul, C.J. Public infrastructure investment, interstate spatial spillovers, and manufacturing costs. Rev. Econ. Stat. 2004, 86, 551–560.

- Lee, L.; Yu, J. Spatial panels: Random components versus fixed effects. Int. Econ. Rev. 2012, 53, 1369–1412.

- Parent, O.; LeSage, J.P. A space–time filter for panel data models containing random effects. Comput. Stat. Data Anal. 2011, 55, 475–490.

- Elhorst, J.P. Serial and spatial error correlation. Econ. Lett. 2008, 100, 422–424.

- Lee, L.; Yu, J. Estimation of fixed effects panel regression models with separable and nonseparable space–time filters. J. Econ. 2015, 184, 174–192.

- Zhao, J.; Zhao, Y.; Lin, J.; Miao, Z.X.; Khaled, W. Estimation and testing for panel data partially linear single-index models with errors correlated in space and time. Random Matrices Theory Appl. 2020, 9, 2150005.

- Li, S.; Chen, J.; Li, B. Estimation and Testing of Random Effects Semiparametric Regression Model with Separable Space-Time Filters. Fractal Fract. 2022, 6, 735.

- Li, B.; Chen, J.; Li, S. Estimation of Fixed Effects Partially Linear Varying Coefficient Panel Data Regression Model with Nonseparable Space-Time Filters. Mathematics 2023, 11, 1531.

- Bai, Y.; Hu, J.; You, J. Panel data partially linear varying-coefficient model with errors correlated in space and time. Stat. Sin. 2015, 35, 275–294.

- Cai, Z. Trending time-varying coefficient time series models with serially correlated errors. J. Econ. 2007, 136, 163–188.

- Fan, J.; Zhang, C.; Zhang, J. Generalized likelihood ratio statistics and Wilks phenomenon. Ann. Stat. 2001, 29, 153–193.

- Su, L.; Jin, S. Profile quasi-maximum likelihood estimation of partially linear spatial autoregressive models. J. Econ. 2010, 157, 18–33.

- Lee, L. Asymptotic distributions of quasi-maximum likelihood estimators for spatial autoregressive models. Econometrica 2004, 72, 1899–1925.

- Hamilton, S.A.; Truong, Y.K. Local linear estimation in partly linear models. J. Multivar. Anal. 1997, 60, 1–19.

- Su, L.; Ullah, A. Nonparametric and semiparametric panel econometric models: Estimation and testing. In Handbook of Empirical Economics and Finance; CRC: Boca Raton, FL, USA, 2011; pp. 455–497.

- Su, L.; Ullah, A. Profile likelihood estimation of partially linear panel data models with fixed effects. Econ. Lett. 2006, 92, 75–81.

- Su, L. Semiparametric GMM estimation of spatial autoregressive models. J. Econ. 2012, 167, 543–560.

- Mack, Y.; Silverman, B.W. Weak and strong uniform consistency of kernel regression estimates. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1982, 61, 405–415.

- Feng, Q.; Horrace, W.C. Alternative technical efficiency measures: Skew, bias, and scale. J. Appl. Econ. 2010, 27, 253–268.

- Druska, V.; Horrace, W.C. Generalized moments estimation for spatial panel data: Indonesian rice farming. Am. J. Agric. Econ. 2004, 86, 185–198.

- Cheng, S.; Chen, J. Estimation of partially linear single-index spatial autoregressive model. Stat. Pap. 2021, 62, 495–531.

- Qu, A.; Lindsay, B.G.; Li, B. Improving generalized estimating equations using quadratic inference functions. Biometrika 2000, 87, 823–836.

- White, H. Estimation, Inference and Specification Analysis; Cambridge University Press: Cambridge, UK, 1996.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).