A Novel Fractional Model and Its Application in Network Security Situation Assessment

Abstract

1. Introduction

2. Materials and Methods

2.1. Fundamental Theory of Fractional Calculus

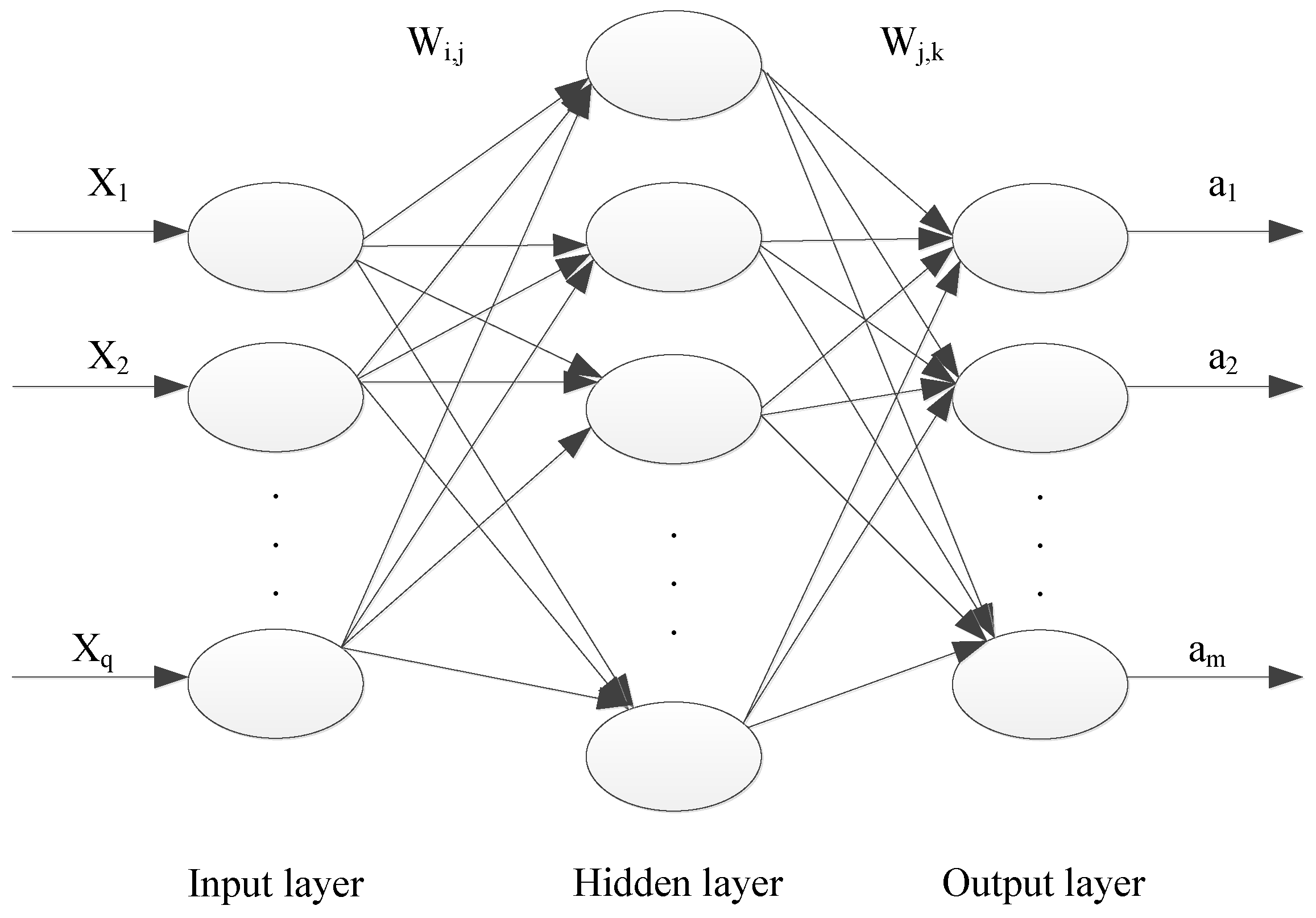

2.2. Fundamental Theory of BP Neural Networks

2.3. Basic Principles of BP Neural Networks

2.4. Fractional BP Neural Network

2.4.1. Caputo Fractional Derivative

2.4.2. Caputo Fractional BP Neural Network

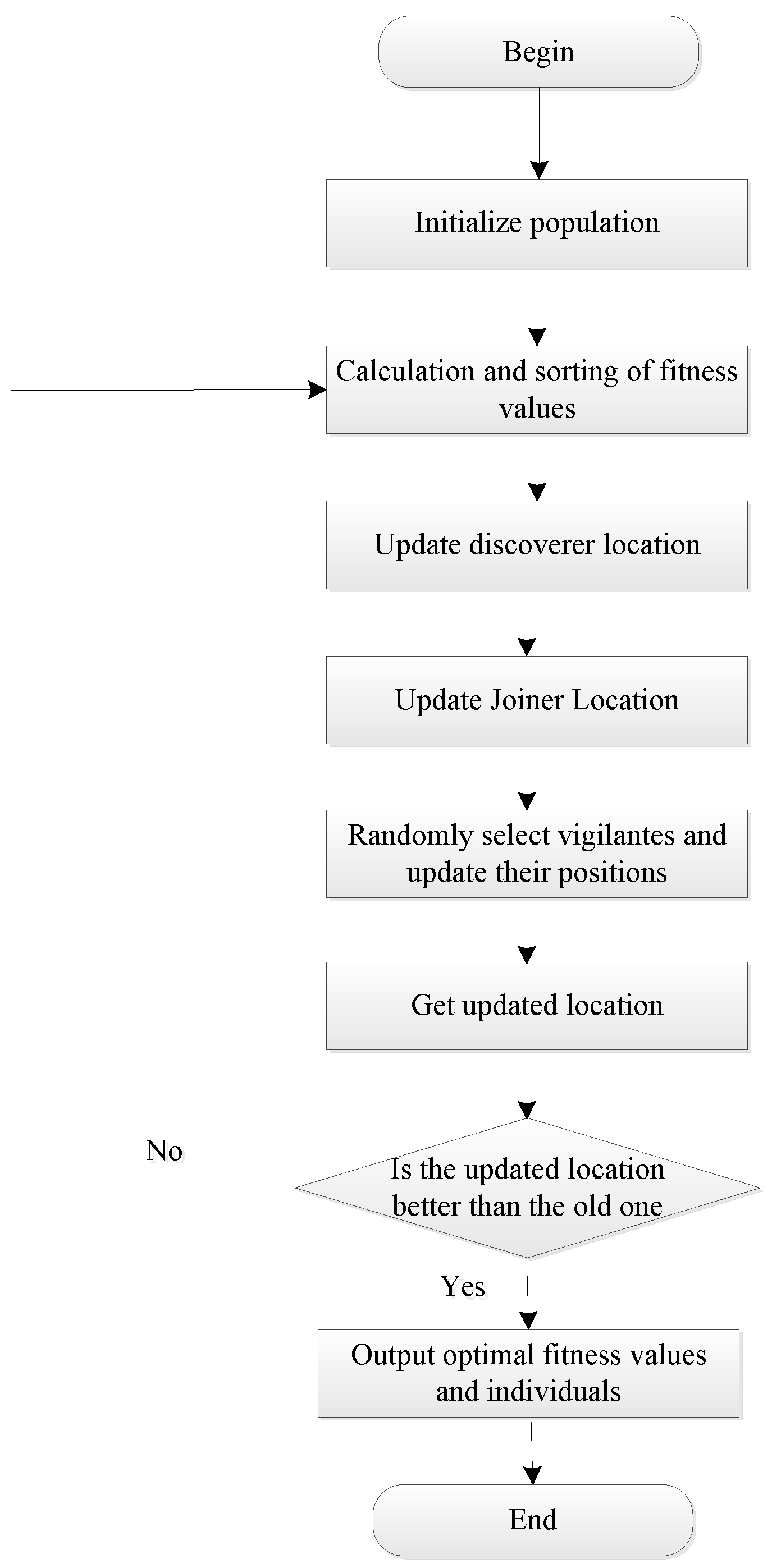

2.5. Fundamental Theory of the Sparrow Search Algorithm

2.5.1. Mathematical Model of the Algorithm

2.5.2. Analysis of the Advantages and Disadvantages of SSA

2.6. Fundamental Theory of Chaos

2.6.1. Definition of Chaos

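Li-Yorke Definition of Chaos

- (1) The periodic points of $f$ have unbounded periods.

- (2) For any $x, y \in S$ where $x \neq y$, $\limsup_{n \to \infty} |f^n(x) - f^n(y)| > 0$.

- (3) For any $x, y \in S$, $\liminf_{n \to \infty} |f^n(x) - f^n(y)| = 0$.

- (4) Where $S \subset I$, and for any $x \in S$ and any periodic point $p$ of $f$, $\limsup_{n \to \infty} |f^n(x) - f^n(p)| > 0$.

Then $f$ is said to be chaotic on the interval $I$.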

- (1) The system includes a countable number of stable periodic trajectories that exhibit regular and repetitive patterns.

- (2) It also contains numerous unstable non-periodic trajectories that display irregular and unpredictable behavior.

- (3) Crucially, there is at least one unstable non-periodic trajectory, underscoring the complexity and unpredictability of chaotic motion.

Devaney’s Definition of Chaos

- (1) $f$ is topologically transitive.

- (2) The periodic points of $f$ are dense in $V$.

- (3) Sensitivity to initial conditions: there exists $\delta > 0$ such that for any $x \in V$ and any $\varepsilon > 0$, there exists $y$ in the $\varepsilon$-neighborhood of $x$ and a natural number $n$ such that $d(f^n(x), f^n(y)) > \delta$.
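Sensitivity to initial conditions can be illustrated numerically. The sketch below is only an illustration, not part of the original method: it assumes the logistic map with parameter 4 as the test system, and the function names, starting point, and thresholds are hypothetical choices. It iterates two trajectories that start a tiny distance apart and reports the first step at which they differ by more than a chosen threshold.

```python
def logistic(x, mu=4.0):
    """One step of the logistic map x -> mu * x * (1 - x) (assumed test system)."""
    return mu * x * (1.0 - x)


def first_separation(x0, eps, delta, max_iter=200):
    """Return the first n with |f^n(x0) - f^n(x0 + eps)| > delta, or None."""
    x, y = x0, x0 + eps
    for n in range(1, max_iter + 1):
        x, y = logistic(x), logistic(y)
        if abs(x - y) > delta:
            return n
    return None


# Two starting points only 1e-10 apart (illustrative values).
n = first_separation(x0=0.3, eps=1e-10, delta=0.1)
print("trajectories separate by more than 0.1 after", n, "iterations")
```

Because the slope of this map never exceeds 4 in magnitude, the gap can at most quadruple per step, so the separation cannot happen in the first few iterations; it typically appears after a few dozen.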

2.6.2. Chaotic Mapping

Logistic Map

Chebyshev Map

Tent Chaotic Map

Cubic Chaotic Map

Selection of Chaotic Maps

3. Results

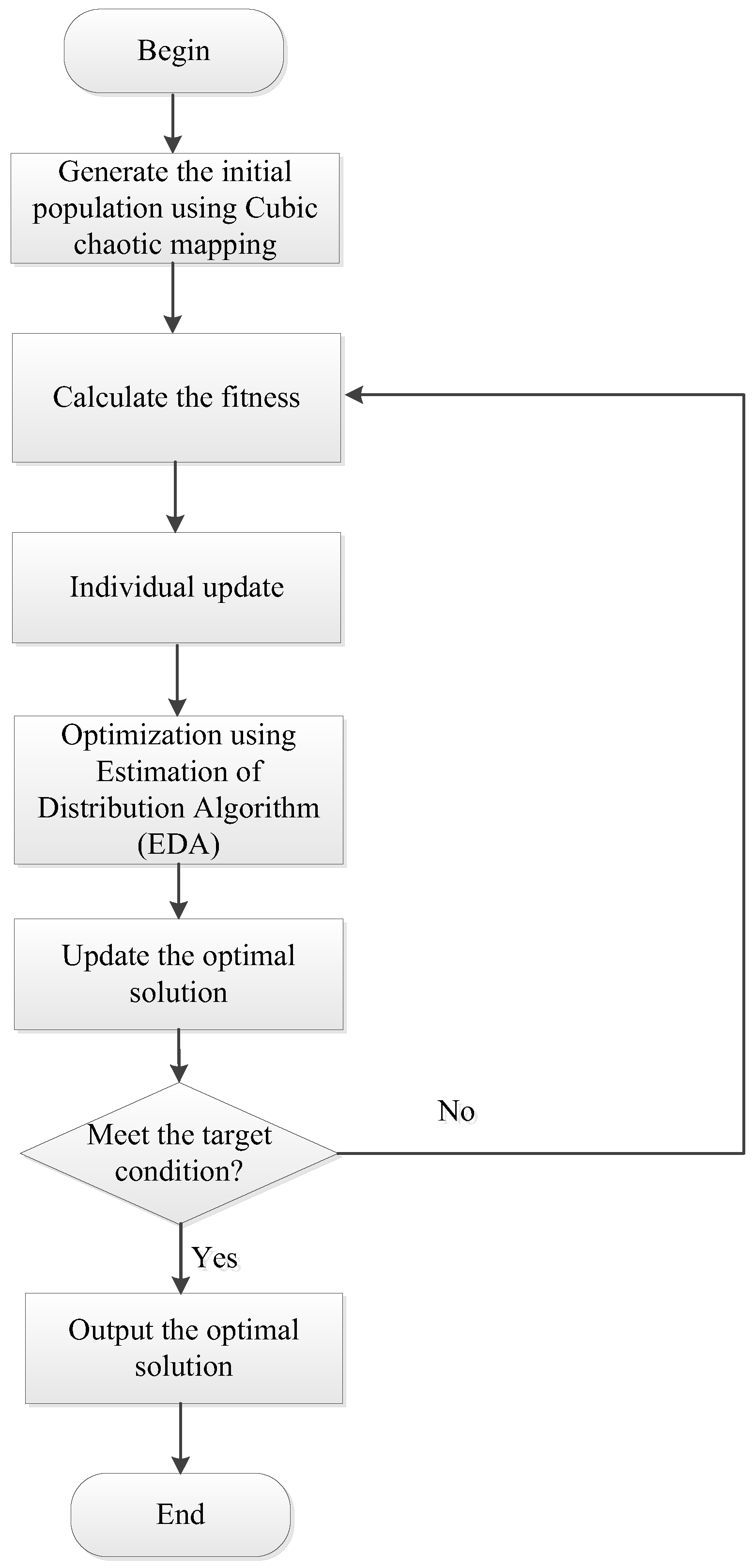

3.1. The Sparrow Search Algorithm Based on Chaotic Mapping and Estimation of Distribution Algorithm (EDA)

- (1) Generate the initial population using the cubic chaotic map: the chaotic sequence generated by the map is scaled between the lower and upper boundaries of the solution space to determine the initial positions of the population.

- (2) Fitness Calculation: For each individual in the initial population, calculate its fitness value and determine the position and fitness of the best individual in the population.

- (3) Iterative Population Update: Update the positions of explorers (discoverers), followers (joiners), and scouts (watchers) in the population according to the standard SSA update rules.

- (4) Optimization using EDA: Every five iterations, apply EDA for the secondary optimization of the current population. Construct a Gaussian probability model $N(\mu, \Sigma)$ and sample new individuals from it to replace underperforming ones, where $\mu$ is the mean vector and $\Sigma$ is the covariance matrix.
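Steps (1) and (4) above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the cubic map form $y_{k+1} = \rho\, y_k (1 - y_k^2)$ with $\rho = 2.595$, the elite and replacement fractions, and all function and parameter names are hypothetical choices made for the example. Minimisation is assumed.

```python
import numpy as np


def cubic_chaotic_init(n, dim, lb, ub, rho=2.595, seed=0):
    """Initial positions from a cubic-map sequence scaled to [lb, ub] (assumed map form)."""
    rng = np.random.default_rng(seed)
    y = rng.uniform(0.1, 0.9, size=dim)       # one chaotic seed per dimension
    pop = np.empty((n, dim))
    for i in range(n):
        y = rho * y * (1.0 - y ** 2)          # cubic map, stays inside (0, 1)
        pop[i] = lb + (ub - lb) * y           # scale to the solution space
    return pop


def eda_refine(pop, fitness, lb, ub, elite_frac=0.5, replace_frac=0.2, seed=0):
    """Replace the worst individuals with samples from a Gaussian fitted to the best."""
    rng = np.random.default_rng(seed)
    n, dim = pop.shape
    order = np.argsort(fitness)               # ascending: best (lowest) first
    n_elite = max(2, int(elite_frac * n))
    n_new = max(1, int(replace_frac * n))
    elite = pop[order[:n_elite]]
    mu = elite.mean(axis=0)                   # mean vector
    sigma = np.atleast_2d(np.cov(elite, rowvar=False)) + 1e-9 * np.eye(dim)
    samples = rng.multivariate_normal(mu, sigma, size=n_new)
    new_pop = pop.copy()
    new_pop[order[-n_new:]] = np.clip(samples, lb, ub)
    return new_pop


pop = cubic_chaotic_init(n=20, dim=3, lb=-5.0, ub=5.0)
fitness = (pop ** 2).sum(axis=1)              # toy objective: sphere function
refined = eda_refine(pop, fitness, lb=-5.0, ub=5.0)
```

In a full run, `eda_refine` would be called only when the iteration counter is a multiple of five, matching step (4).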

3.2. TESA-FBP Model Evaluation Process

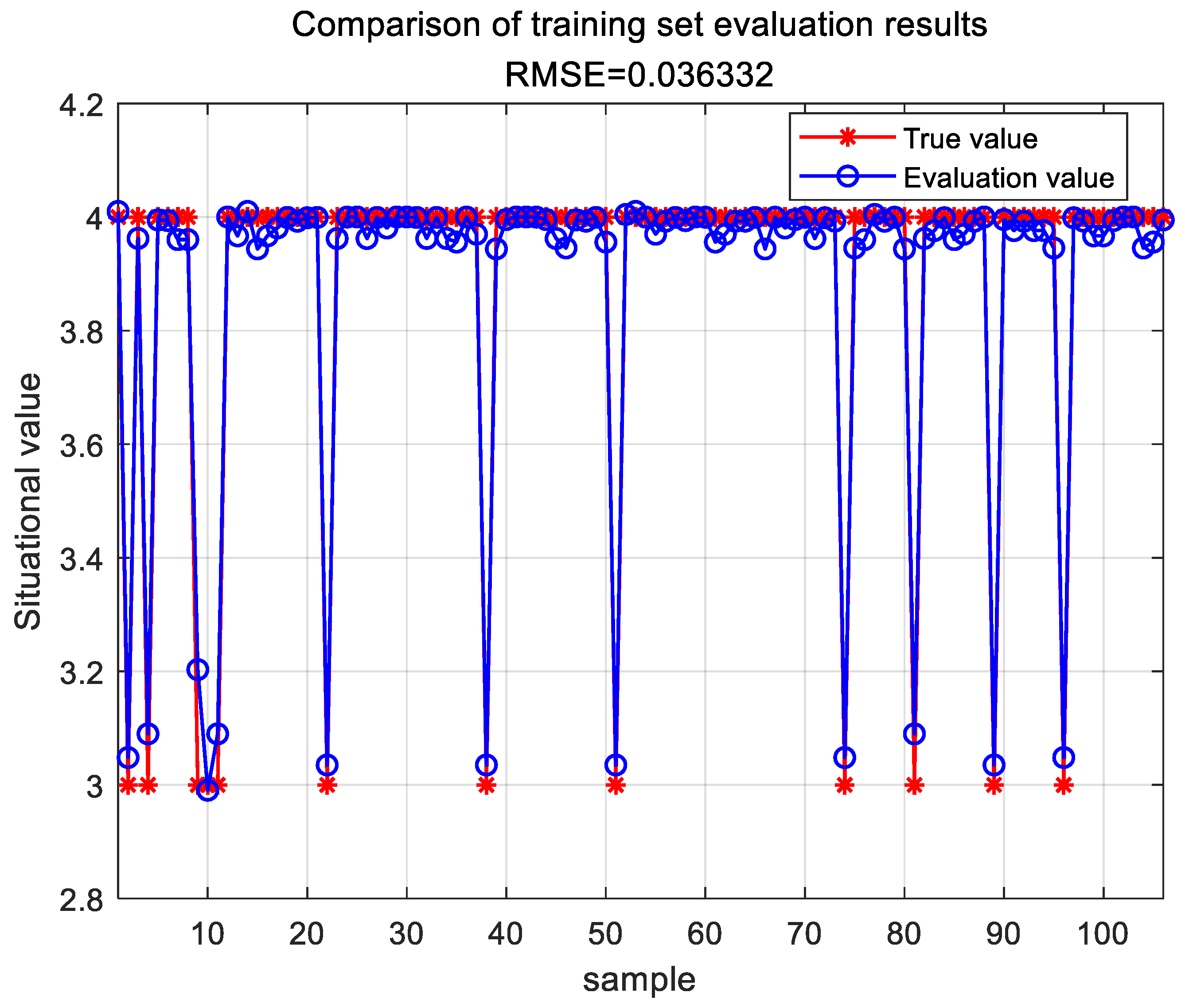

3.3. Experimental Analysis

3.3.1. Selection of Experimental Data and Evaluation Metrics

3.3.2. Evaluation Model Comparison

4. Discussion

5. Conclusions

- (1) High Adaptability: The chaotic sparrow algorithm enhances the diversity and global optimization capability of the search process through chaotic mapping, thereby improving the model’s adaptability in complex environments.

- (2) High Optimization Efficiency: EDA (Estimation of Distribution Algorithm) effectively utilizes information from historical data distributions to guide the search process, allowing the model to converge more quickly in large-scale data and complex network structures.

- (3) Model Stability: The fractional-order BP (Backpropagation) model increases the network’s memory capacity and robustness by incorporating fractional-order calculus, leading to greater stability when dealing with variations in complex network environments.

- (4) Computational Complexity Management: The combination of chaotic sparrow optimization and EDA effectively manages computational complexity, maintaining high efficiency even when handling large-scale networks.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| The Framework of Sparrow Search Algorithm |
|---|
| input: |
| G: maximum number of iterations; |
| w: number of discoverers in the sparrow population; |
| SD: number of watchers (vigilantes) in the sparrow population; |
| R2: warning value; |
| Establish an objective function, initialize the population, and define the relevant parameters; |
| output: the global optimum; |
| 1: while the current iteration is less than G |
| 2: Sort the fitness values to find the current best individual and the current worst individual; |
| 4: for each of the w discoverers |
| 5: Update the position of individual sparrow discoverers using Equation (10); |
| 6: end for; |
| 7: for each joiner |
| 8: Use Equation (11) to update the position of individual sparrow joiners; |
| 9: end for; |
| 10: for each of the SD watchers |
| 11: Use Equation (12) to update the position of individual sparrow watchers; |
| 12: end for; |
| 13: Obtain the latest global optimum; |
| 14: Increment the iteration counter; |
| 15: end while; |
| 16: return the global optimum. |
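The framework above can be sketched in code. Equations (10) to (12) are not reproduced in this text, so the sketch assumes the update rules commonly published for the sparrow search algorithm, in simplified form; the population size `n`, safety threshold `ST`, the function name, and all default values are hypothetical. It is meant as an illustration of the control flow, not as the authors' implementation.

```python
import numpy as np


def ssa_minimize(f, lb, ub, dim, n=30, G=100, w=6, SD=3, ST=0.8, seed=0):
    """Simplified sparrow search sketch (assumed update rules, minimisation)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(n, dim))
    fit = np.array([f(x) for x in X])
    best_x, best_f = X[fit.argmin()].copy(), fit.min()
    for t in range(G):
        order = np.argsort(fit)                    # step 2: sort, best first
        X, fit = X[order], fit[order]
        worst_x = X[-1].copy()
        R2 = rng.random()                          # warning value
        for i in range(w):                         # discoverers
            if R2 < ST:
                X[i] = X[i] * np.exp(-(i + 1) / (rng.random() * G + 1e-12))
            else:
                X[i] = X[i] + rng.normal() * np.ones(dim)
        prod_x = X[0].copy()                       # stand-in for the best discoverer
        for i in range(w, n):                      # joiners
            if i + 1 > n / 2:
                X[i] = rng.normal() * np.exp((worst_x - X[i]) / (i + 1) ** 2)
            else:
                A = rng.choice([-1.0, 1.0], size=dim)
                X[i] = prod_x + np.abs(X[i] - prod_x) @ A / dim
        for i in rng.choice(n, size=SD, replace=False):   # watchers
            if fit[i] > best_f:
                X[i] = best_x + rng.normal() * np.abs(X[i] - best_x)
            else:
                K = rng.uniform(-1.0, 1.0)
                X[i] = X[i] + K * np.abs(X[i] - worst_x) / (abs(fit[i] - fit[-1]) + 1e-12)
        X = np.clip(X, lb, ub)
        fit = np.array([f(x) for x in X])
        if fit.min() < best_f:                     # step 13: latest global optimum
            best_x, best_f = X[fit.argmin()].copy(), fit.min()
    return best_x, best_f


def sphere(x):
    """Toy objective used only for this example."""
    return float(np.sum(x ** 2))


best_x, best_f = ssa_minimize(sphere, lb=-5.0, ub=5.0, dim=5)
print(best_f)
```

The sphere function has its minimum at the origin, which these update rules tend to favour, so it is a forgiving test case rather than a benchmark.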

| Serial Number | Name of Chaotic Map | Expression |
|---|---|---|
| 1 | Logistic | |
| 2 | Chebyshev | |
| 3 | Tent | |
| 4 | Cubic | |
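The expressions for the four maps did not survive in the table above. As a rough guide, the sketch below implements widely used textbook forms of each map; the exact forms and parameter values (`mu`, `k`, `a`, `rho`) are assumptions and may differ from those used in the paper, and the helper `orbit` is a hypothetical name.

```python
import math


def logistic(x, mu=4.0):
    """Logistic map on (0, 1): x -> mu * x * (1 - x)."""
    return mu * x * (1.0 - x)


def chebyshev(x, k=4):
    """Chebyshev map on [-1, 1]: x -> cos(k * arccos(x))."""
    return math.cos(k * math.acos(x))


def tent(x, a=0.7):
    """Skew tent map on (0, 1) with breakpoint a."""
    return x / a if x < a else (1.0 - x) / (1.0 - a)


def cubic(x, rho=2.595):
    """Cubic map on (0, 1): x -> rho * x * (1 - x^2)."""
    return rho * x * (1.0 - x * x)


def orbit(step, x0, n):
    """Iterate a one-dimensional map n times starting from x0."""
    xs, x = [], x0
    for _ in range(n):
        x = step(x)
        xs.append(x)
    return xs


sequences = {
    "logistic": orbit(logistic, 0.3, 1000),
    "chebyshev": orbit(chebyshev, 0.3, 1000),
    "tent": orbit(tent, 0.3, 1000),
    "cubic": orbit(cubic, 0.3, 1000),
}
for name, xs in sequences.items():
    print(name, "min", round(min(xs), 3), "max", round(max(xs), 3))
```

Comparing how evenly each sequence covers its interval is one simple way to judge which map gives the most diverse initial population.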

| Optimization Strategy | Description | Limitations |
|---|---|---|
| Adaptive Weight Strategy | Dynamically adjusts the weight of individuals’ position updates, enhancing exploration in early stages and exploitation in later stages. | Requires fine-tuning, which may increase computational complexity. |
| Mutation Strategy | Introduces random mutations similar to genetic algorithms, increasing population diversity and preventing early convergence. | Excessive mutation can lead to instability and impact convergence accuracy. |
| Multi-Strategy Cooperative Optimization | Combines multiple algorithm strategies (e.g., PSO, GA, DE) to enhance global search and convergence performance through synergy. | High algorithmic complexity; managing conflicts between different strategies can be challenging. |
| Lévy Flight Strategy | Utilizes the Lévy flight mechanism to allow individuals to perform long-distance jumps, enhancing global exploration capability. | Jump distances are hard to control and may lead to unstable search processes. |
| Adaptive Chaos Strategy | Dynamically adjusts chaotic parameters during the search, allowing different chaotic behaviors at various stages to balance exploration and exploitation. | Parameter selection can be complex and problem-dependent. |

| The Framework of Sparrow Search Algorithm |
|---|
| input: |
| G: maximum number of iterations; |
| w: number of discoverers in the sparrow population; |
| SD: number of watchers (vigilantes) in the sparrow population; |
| R2: warning value; |
| Establish an objective function, initialize the population with the cubic chaotic map, and define the relevant parameters; |
| output: the global optimum; |
| 1: while the current iteration is less than G |
| 2: Sort the fitness values to find the current best individual and the current worst individual; |
| 4: for each of the w discoverers |
| 5: Update the position of individual sparrow discoverers using Equation (13); |
| 6: end for; |
| 7: for each joiner |
| 8: Use Equation (14) to update the position of individual sparrow joiners; |
| 9: end for; |
| 10: for each of the SD watchers |
| 11: Use Equation (15) to update the position of individual sparrow watchers; |
| 12: Use EDA for optimization: after every five iterations, construct a Gaussian probability model using Equation (31) to generate new individuals, replacing those with poor performance; |
| 13: end for; |
| 14: Obtain the latest global optimum; |
| 15: Increment the iteration counter; |
| 16: end while; |
| 17: return the global optimum. |

| Excellent | Good | Secondary | Poor | Dangerous |
|---|---|---|---|---|
| 5 | 4 | 3 | 2 | 1 |

| Sample | Actual Value | Evaluation Value | Absolute Error |
|---|---|---|---|
| 1 | 3 | 3.048 | 0.048 |
| 2 | 4 | 3.995 | 0.005 |
| 3 | 4 | 4 | 0 |
| 4 | 4 | 3.993 | 0.007 |
| 5 | 4 | 4.005 | 0.005 |
| 6 | 4 | 3.981 | 0.019 |
| 7 | 4 | 3.998 | 0.002 |
| 8 | 4 | 4 | 0 |
| 9 | 4 | 4.01 | 0.01 |
| 10 | 3 | 3.09 | 0.09 |
| 11 | 4 | 3.993 | 0.007 |
| 12 | 4 | 4.005 | 0.005 |

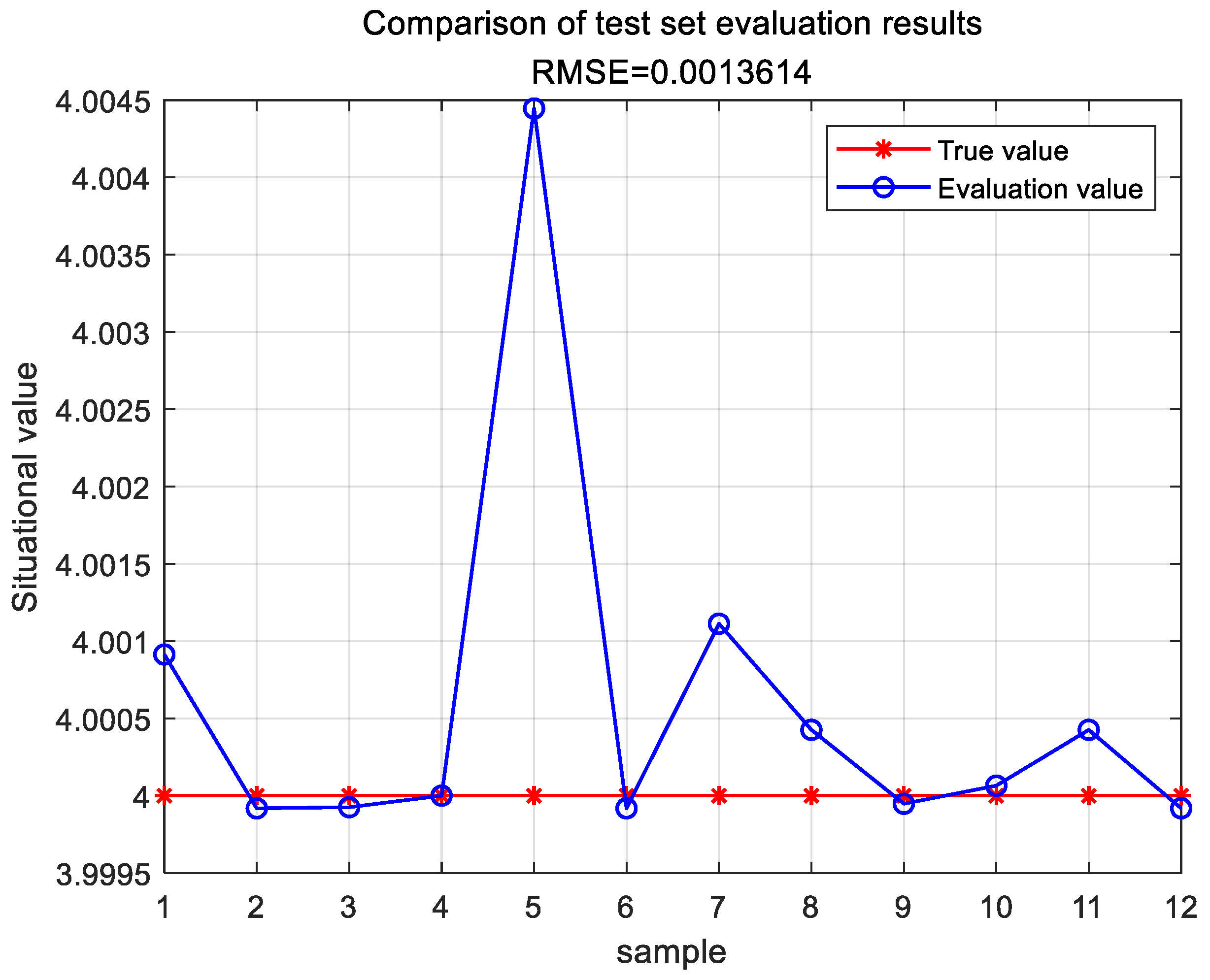

| Sample | Actual Value | Evaluation Value | Absolute Error |
|---|---|---|---|
| 1 | 4 | 4.001 | 0.001 |
| 2 | 4 | 4 | 0 |
| 3 | 4 | 4 | 0 |
| 4 | 4 | 4 | 0 |
| 5 | 4 | 4.004 | 0.004 |
| 6 | 4 | 4 | 0 |
| 7 | 4 | 4.001 | 0.001 |
| 8 | 4 | 4 | 0 |
| 9 | 4 | 4 | 0 |
| 10 | 4 | 4 | 0 |
| 11 | 4 | 4 | 0 |
| 12 | 4 | 4 | 0 |

| Sample | Actual Value | Evaluation Value | Absolute Error |
|---|---|---|---|
| 1 | 4 | 4.002 | 0.002 |
| 2 | 4 | 3.999 | 0.001 |
| 3 | 4 | 3.999 | 0.001 |
| 4 | 4 | 4 | 0 |
| 5 | 4 | 3.999 | 0.001 |
| 6 | 3 | 3 | 0 |
| 7 | 3 | 2.984 | 0.016 |
| 8 | 4 | 3.996 | 0.004 |
| 9 | 4 | 4 | 0 |
| 10 | 4 | 4 | 0 |
| 11 | 4 | 4 | 0 |
| 12 | 4 | 4 | 0 |

| Sample | Actual Value | Evaluation Value | Absolute Error |
|---|---|---|---|
| 1 | 4 | 4.001 | 0.001 |
| 2 | 4 | 4 | 0 |
| 3 | 4 | 4 | 0 |
| 4 | 4 | 4 | 0 |
| 5 | 4 | 4.005 | 0.005 |
| 6 | 4 | 4 | 0 |
| 7 | 4 | 4.001 | 0.001 |
| 8 | 4 | 4 | 0 |
| 9 | 4 | 4 | 0 |
| 10 | 4 | 4 | 0 |
| 11 | 4 | 4 | 0 |
| 12 | 4 | 4 | 0 |

| Evaluation Model | MAE |
|---|---|
| SA-PSO-BP | 0.0165 |
| DS-BP | 0.002083 |
| GA-DNN | 0.001065 |
| TESA-FBP | 0.000583 |
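The MAE values can be checked against the per-sample results. The sketch below recomputes the mean absolute error from the first and the last of the four per-sample tables above; the values are copied from those tables, while the function and variable names are hypothetical. The two results agree with the SA-PSO-BP and TESA-FBP rows respectively, although the tables themselves are not labelled with model names in this text.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all samples."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Values copied from the first per-sample table (12 samples).
actual_first = [3, 4, 4, 4, 4, 4, 4, 4, 4, 3, 4, 4]
eval_first = [3.048, 3.995, 4, 3.993, 4.005, 3.981,
              3.998, 4, 4.01, 3.09, 3.993, 4.005]

# Values copied from the last per-sample table (12 samples).
actual_last = [4] * 12
eval_last = [4.001, 4, 4, 4, 4.005, 4, 4.001, 4, 4, 4, 4, 4]

mae_first = mean_absolute_error(actual_first, eval_first)
mae_last = mean_absolute_error(actual_last, eval_last)
print(round(mae_first, 4), round(mae_last, 6))  # prints 0.0165 0.000583
```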

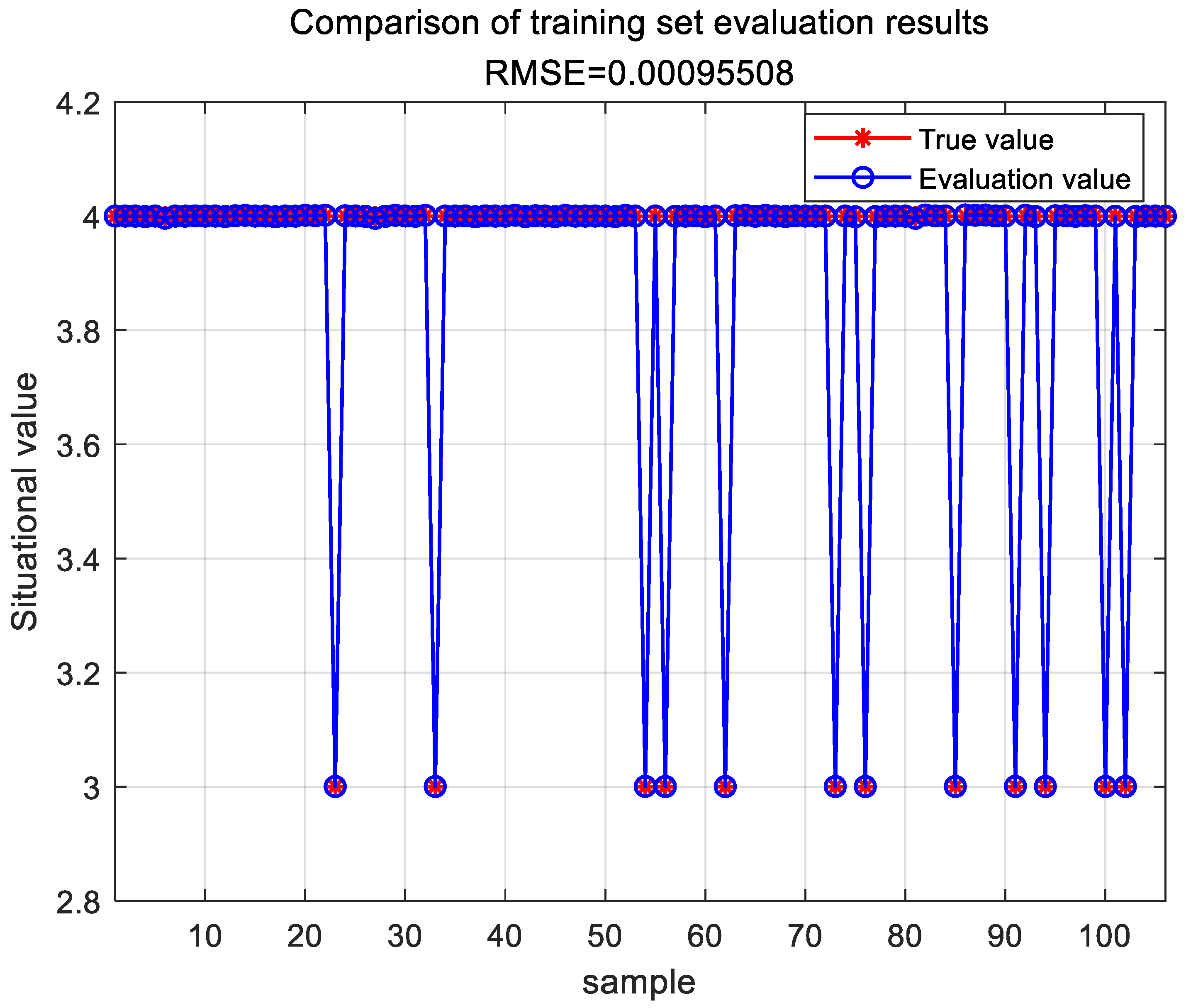

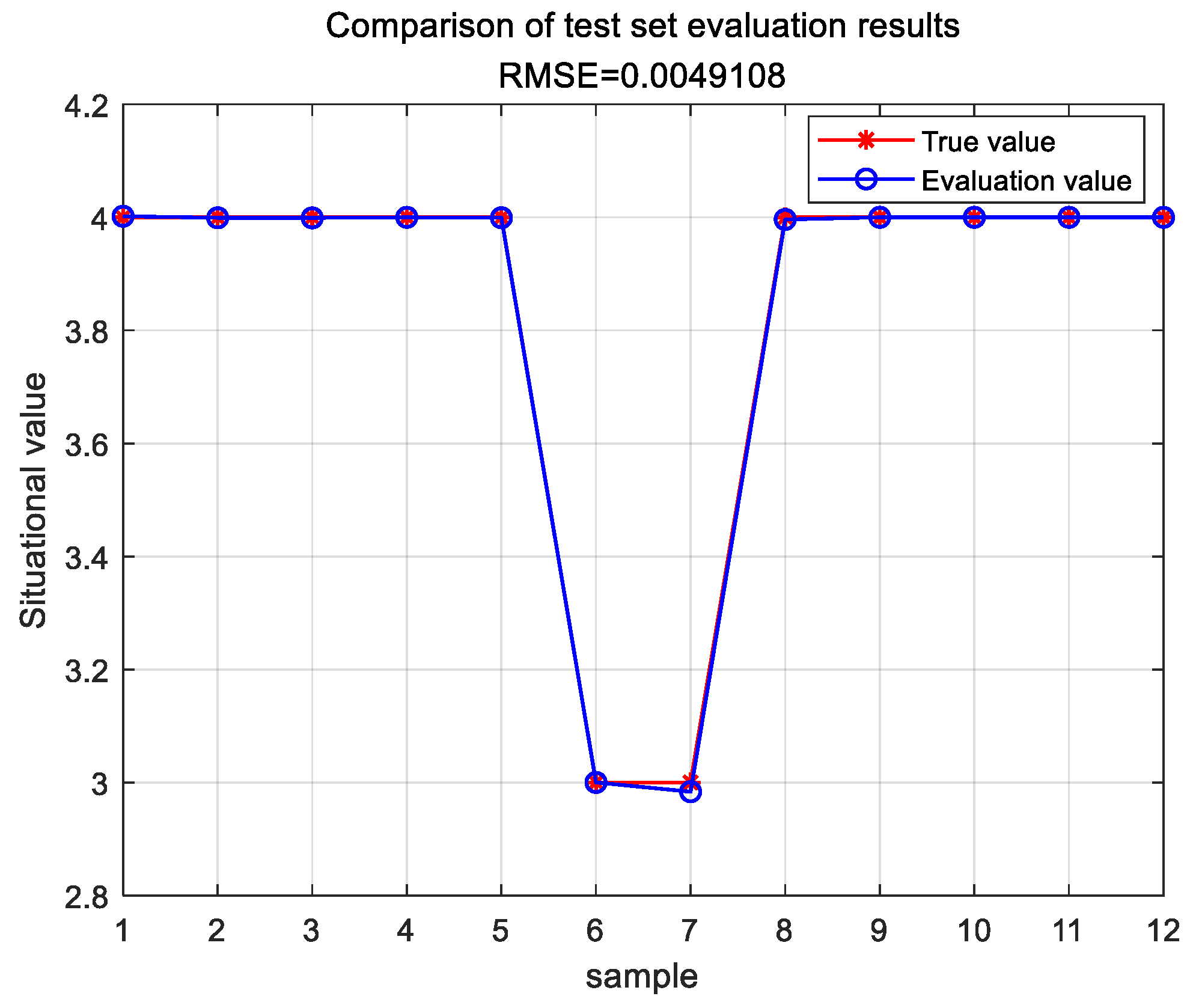

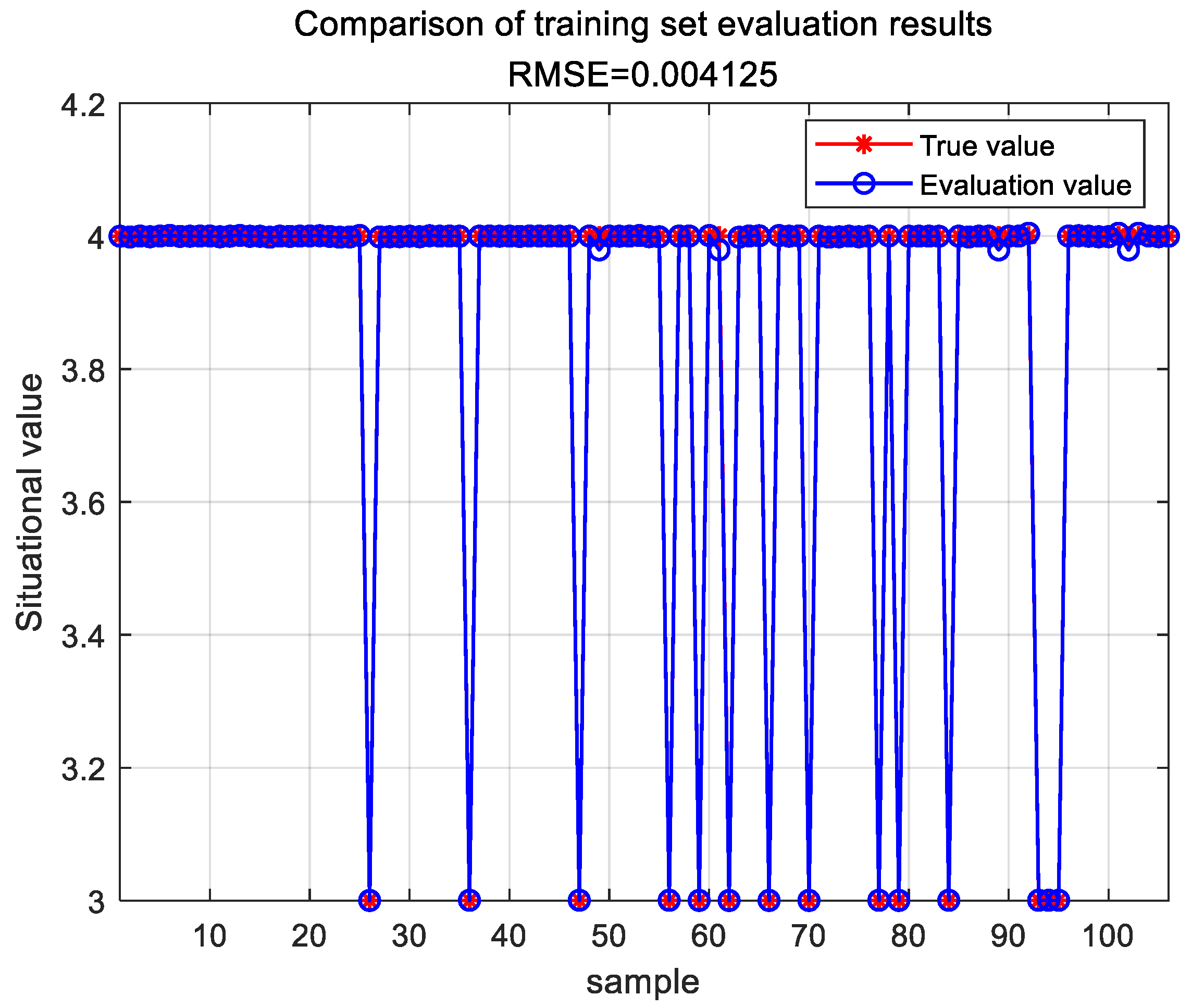

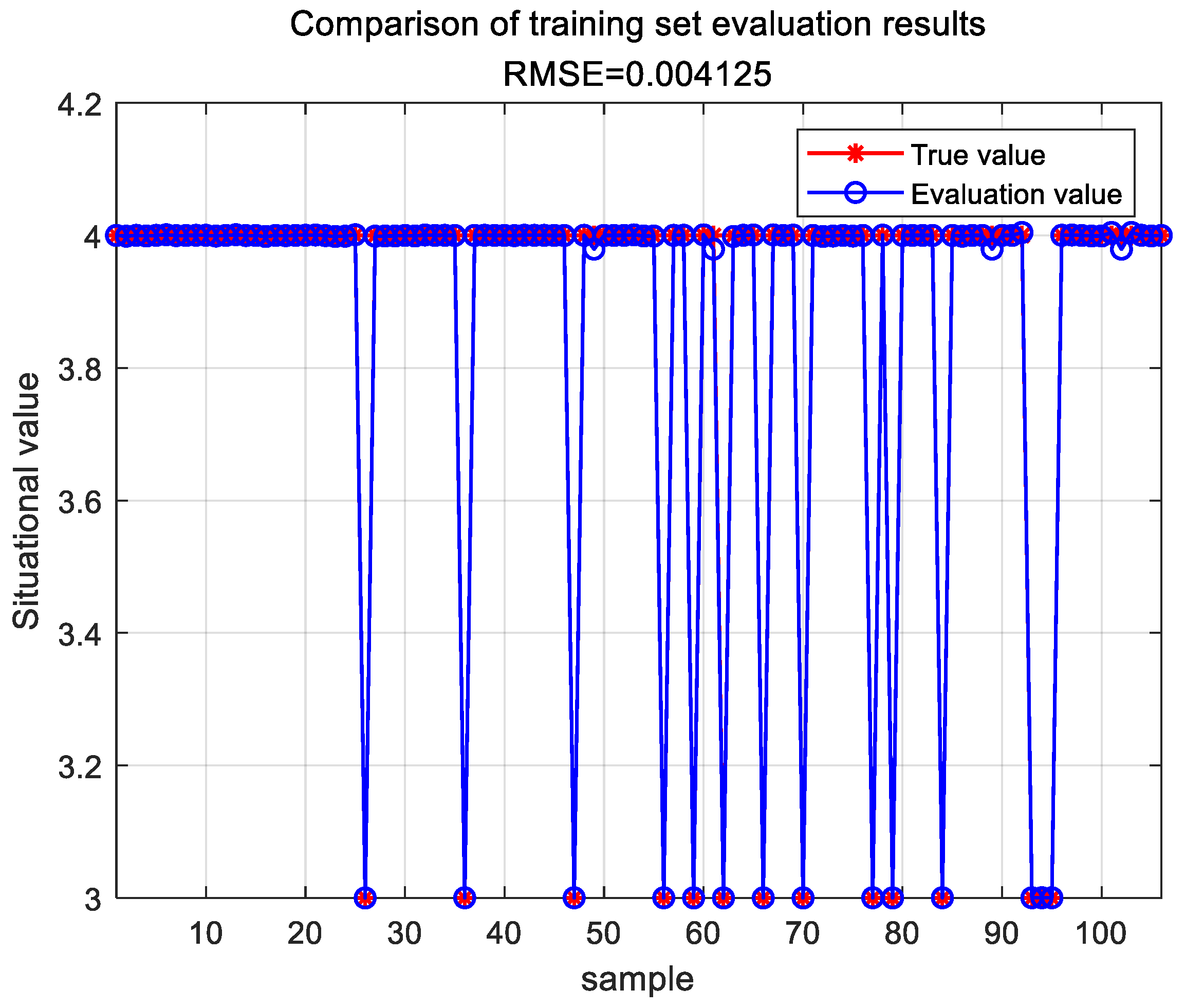

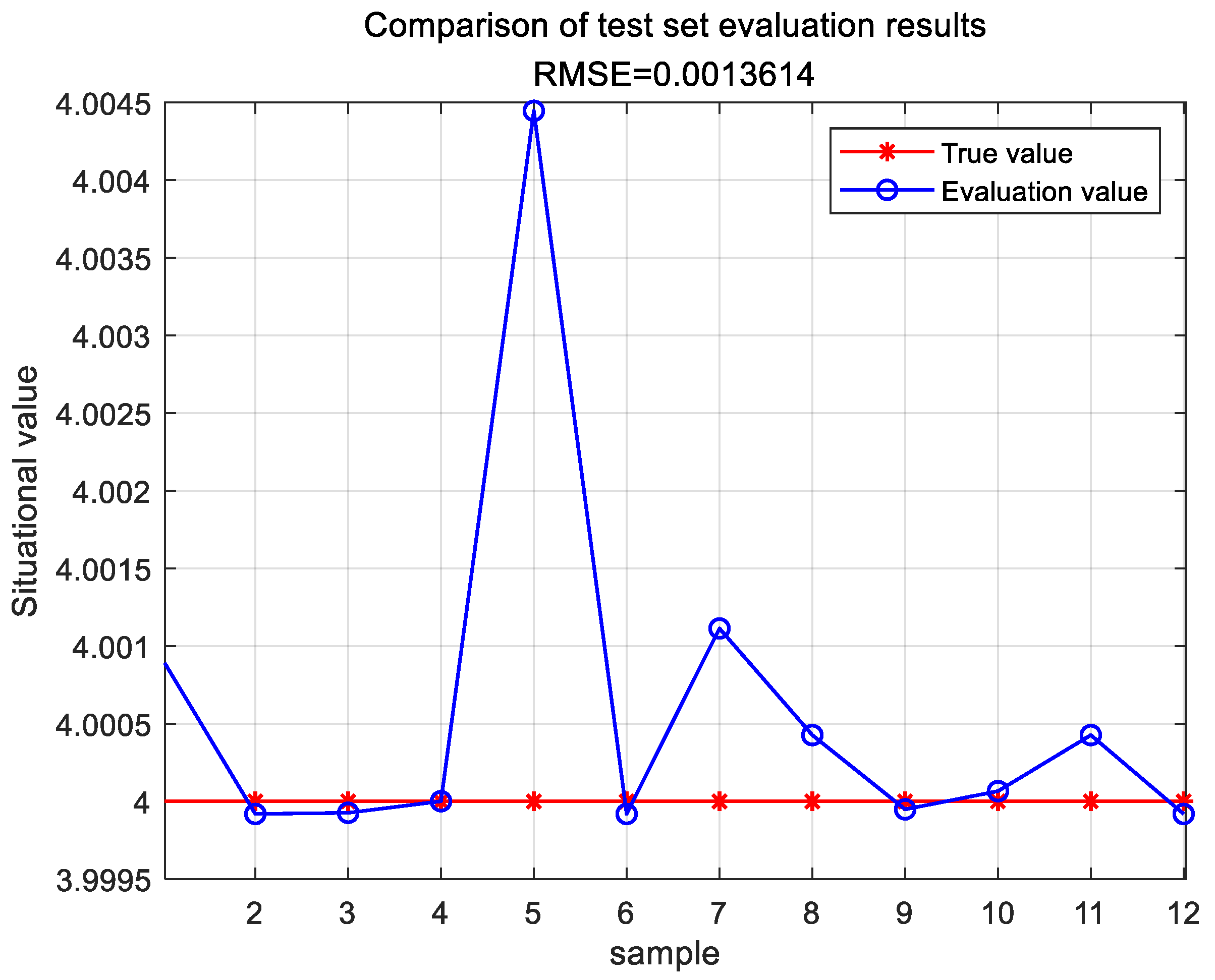

| Evaluation Model | RMSE, Train Data (90%) | RMSE, Test Data (10%) |
|---|---|---|
| SA-PSO-BP | 0.036332 | 0.036394 |
| DS-BP | 0.00095508 | 0.0049108 |
| GA-DNN | 0.00654654 | 0.0072345 |
| TESA-FBP | 0.004125 | 0.0013614 |
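For reference, the two error metrics reported in the tables are assumed here to follow their standard definitions, with $y_i$ the actual value, $\hat{y}_i$ the evaluation value, and $N$ the number of samples (the symbols are chosen for this note and may differ from the paper's notation):

```latex
\mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| y_i - \hat{y}_i \right|,
\qquad
\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2}
```

Because RMSE squares each error before averaging, it penalises a few large deviations more heavily than MAE does, so the two metrics can rank models differently.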

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, R.; Pu, Y. A Novel Fractional Model and Its Application in Network Security Situation Assessment. Fractal Fract. 2024, 8, 550. https://doi.org/10.3390/fractalfract8100550
