1. Introduction

Radial basis functions (RBFs) have become instrumental in various mathematical and computational domains, stemming from the necessity to address challenges in multivariate interpolation and partial differential equations (PDEs) when dealing with randomly distributed, scattered data points, as encountered in cartography. The pioneering contribution of Hardy marked the inception of a research area that has significantly evolved [1]. Coined by Kansa in the 1990s, the term “radial basis functions” traces its development back to earlier works in the 1970s by Micchelli, Powell, and other researchers exploring the nonsingularity theorem [2,3]. Kansa’s proposal to consider analytical derivatives of RBFs paved the way for numerical schemes in solving PDEs [4,5], proving valuable in higher-dimensional and irregular domains.

The power of RBFs lies in their ability to achieve accurate interpolation and approximation in cases where traditional grids and structured approaches are not feasible. The unique flexibility of RBFs in the choice of functions, allowing adaptation to various problems and applications across scientific and engineering fields, boosts their ongoing development and refinement. Applications of RBFs extend into diverse domains, including physics, engineering, complex systems modeling, data science, and more [6,7,8,9]. In computational physics, RBFs play a crucial role in solving partial differential equations that describe natural phenomena like fluid flow and wave propagation. In engineering, they serve as valuable tools for designing and analyzing structures, enabling the precise simulation of complex behaviors. Additionally, RBFs find applications in data science and machine learning tasks, such as data interpolation, approximation, and pattern detection in multidimensional datasets.

Within the realm of radial basis functions, various types of radial functions have been proposed for different applications. Examples include polyharmonic splines, multiquadric functions, inverse multiquadric functions, and Gaussian functions, with each serving different purposes [10,11]. Despite their advantages, the matrices resulting from methods involving RBFs can be dense and suffer from ill-conditioning, leading to numerical challenges. Additionally, some RBFs have a shape parameter that significantly impacts the accuracy of numerical results, influencing the interpolation and approximation process. To tackle these challenges and enhance the conditioning of interpolation matrices, alternative algorithms have been developed. Examples include the Contour–Padé method, proposed by Fornberg and Wright [12], which generates better-conditioned interpolants, and the RBF-QR method, introduced by Fornberg and Piret [13], using matrix decomposition to transform function bases into well-conditioned ones.

In the realm of fractional calculus, a fractional derivative generalizes the ordinary derivative, and fractional differential equations involve operators of fractional order, becoming increasingly essential in various research areas, including magnetic field theory, fluid dynamics, electrodynamics, and multidimensional processes [14,15,16,17]. Fractional operators find applications in finance, economics, the Riemann zeta function, and the study of hybrid solar receivers [18,19,20,21,22,23]. Furthermore, the study of fractional operators has expanded to include solving nonlinear algebraic systems [24,25,26,27,28,29,30].

Due to the importance of fractional differential equations, numerous numerical methods have been proposed, with radial basis functions standing out due to their independence from problem dimensions and their meshless characteristics [31,32,33,34]. The acquisition of precise solutions for differential equations, both classical and fractional, remains fundamental in engineering and computational mathematics. In this context, the thin plate spline (TPS), a radial basis function defined as follows [10]:

emerges as a versatile tool for modeling various behaviors. However, its direct application faces challenges that require careful adaptation to specific domains and problems. To exemplify one of these challenges, consider the m-th derivative of the TPS function, which may be written in general form as follows:

where

and

denote the Gamma function and the Kronecker delta, respectively. So, it should be noted that when

, the previous function presents singularities whenever

r is equal to zero, the consequences of which include obtaining interpolation matrices that may be analytically invertible but numerically singular when

. This phenomenon is usually associated with the ill-conditioning of the matrices. An ill-conditioned matrix has at least one eigenvalue very close to zero relative to the largest one in magnitude, which makes numerical inversion very sensitive to rounding errors. Consequently, when the TPS function is applied directly, significant obstacles may arise in problems that demand a more specific approach. These challenges may stem from the complexity of certain domains or from the inherent characteristics of the differential equations to be solved. In response, this paper focuses on building a family of radial functions designed to emulate and extend the behavior of the TPS function. This approach provides a flexible and adaptable alternative that, in some cases, overcomes the limitations associated with direct application of the aforementioned function. Rather than relying solely on a specific function, the proposed family of radial functions aims to address more effectively the inherent complexity of differential problems in various domains, including those involving fractional operators, even in multiple dimensions [31].
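The two difficulties described above can be observed numerically with a minimal sketch. It assumes the standard thin plate spline $r^2\log r$, which may differ from Equation (1) of this paper by normalization or exponent; the function names and the equispaced one-dimensional nodes are illustrative choices, not part of the original construction.

```python
import numpy as np

def tps(r):
    """Standard thin plate spline r^2 log(r), using the limit value 0 at r = 0."""
    r = np.asarray(r, dtype=float)
    out = np.zeros_like(r)
    mask = r > 0
    out[mask] = r[mask] ** 2 * np.log(r[mask])
    return out

def tps_second_derivative(r):
    """d^2/dr^2 [r^2 log r] = 2 log r + 3, which diverges as r -> 0+."""
    r = np.asarray(r, dtype=float)
    return 2.0 * np.log(r) + 3.0

def tps_matrix(n):
    """Dense matrix A_ij = tps(|x_i - x_j|) on n equispaced nodes in [0, 1] (assumed layout)."""
    x = np.linspace(0.0, 1.0, n)
    return tps(np.abs(x[:, None] - x[None, :]))

if __name__ == "__main__":
    # The second derivative grows without bound (in magnitude) near r = 0.
    print(tps_second_derivative(np.array([1e-2, 1e-4, 1e-8])))
    # Condition number of the plain TPS matrix for a few node counts.
    for n in (10, 40, 160):
        print(n, np.linalg.cond(tps_matrix(n)))
```

The printed condition numbers depend on the node layout, so they should be read only as an indication of how sensitive the linear solve can become.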

2. Polynomials with Similar Behavior to the TPS Function

This section begins with a simple objective that is fundamental to the subsequent results: extending the behavior of the TPS function within a domain

of the type:

into a domain of the following form:

For this purpose, it is essential to consider that Equation (1) fulfills the following:

Therefore, a radial function

that fulfills the following is sought:

To fulfill the conditions outlined in Equation (3), a polynomial of the following form is considered:

where the coefficients

and

are determined using Equation (4). The value of N will be determined later. Subsequently:

In matrix form, the system above may be represented as

Let

be the determinant of the matrix B from the previous system. After some algebraic manipulation, one obtains:

Hence, for the system (5), a solution always exists. Let

be the adjoint matrix of matrix B. So, using the following equality:

it is obtained that

Then, the solution to the system (5) is given by

As a consequence, the following polynomial is obtained:

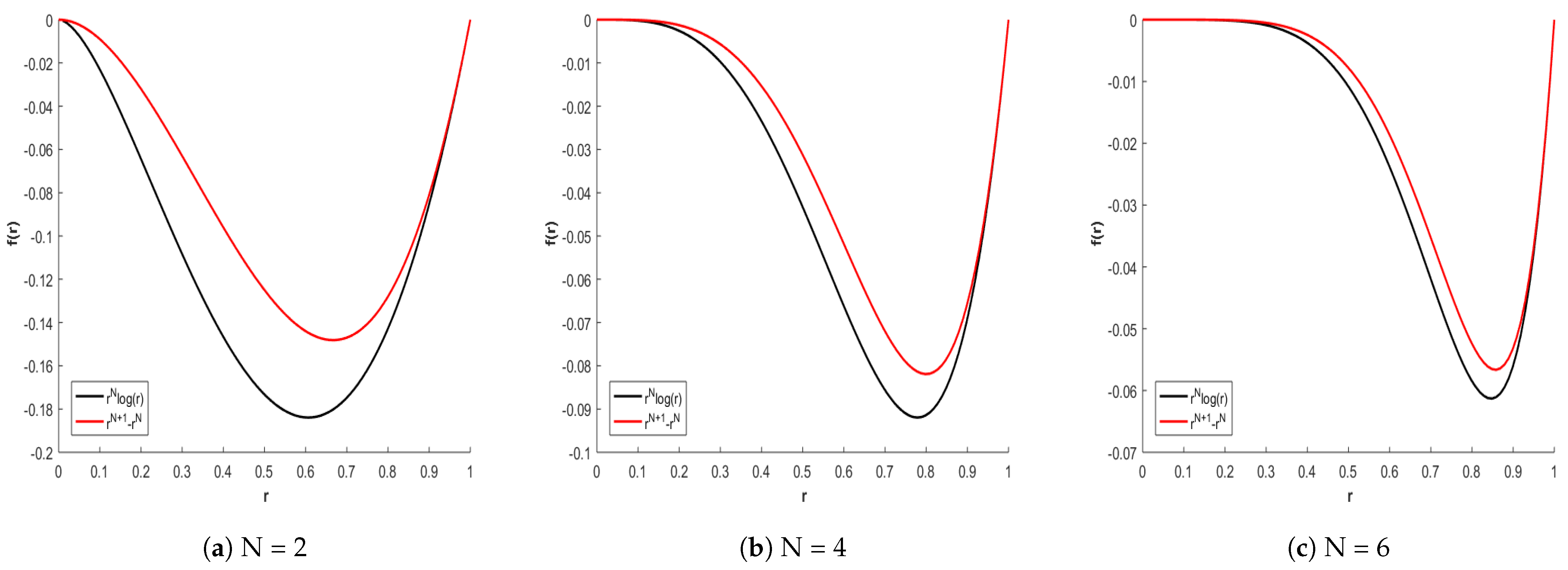

Through the previous construction, Equation (7) in the domain

fulfills the following (see Figure 1):

In the previous construction, only two coefficients were used to approximate the TPS function. To introduce one more coefficient, it is considered that Equation (1) in the domain

fulfills the following:

As a consequence, a radial function

is sought to fulfill the following:

To fulfill Equation (8), the following polynomial is chosen:

and to fulfill Equation (9), the following matrix system is obtained:

After some algebraic manipulation, it is obtained that

So, using Equation (6), it is obtained that

Therefore, system (10) has the following solution:

This results in the following polynomial:

Thus, through the previous construction, Equation (11) in the domain

fulfills the following (see Figure 2):

From the matrix systems given in Equations (5) and (10), it may be deduced that to construct a polynomial with n coefficients that approximates the TPS function, it is necessary to consider the

derivatives of both the polynomial and the TPS function. However, this approach would lead to more complex expressions for the coefficients

. In the next section, an alternative method is presented that allows obtaining an approximation for the TPS function while keeping the coefficients

in a simpler form.

2.1. Pseudo TPS Function

In pursuit of subsequently employing the fractional derivatives of polynomials [35], while preserving the TPS function’s behavior of being zero at the boundaries of the domain

, the approach begins by seeking a polynomial that becomes zero, along with its derivatives, at the boundaries of the proposed domain. Directly solving the system (5) with the mentioned conditions leads to a trivial solution. Instead, the polynomial involved in the system (10) is considered with a vector c of the form:

where

and the minus sign is included to ensure that the solution exhibits a convex behavior analogous to the TPS function in the domain

. With these considerations, the system (10) may be rewritten as

This system has the following solution:

which generates the polynomial:

Although

may be chosen arbitrarily, a method is subsequently proposed to select its value in such a way that the coefficients of the polynomial (13) remain simple. For the specific case of

, the following polynomial is obtained:

Thus, the choice of

and the construction of the polynomial (14) ensure that, in the domain

, it fulfills the following (see Figure 3):

To enhance the approximation, a small perturbation

, where

, is introduced in the exponent of the term with the highest power associated with a negative coefficient. Simultaneously, the exponent of said coefficient is adjusted by adding a perturbation

. For the aforementioned case, this allows defining the following function:

which in the domain

fulfills the following (see Figure 4):

To conclude this section, it is worth mentioning that Equation (16) is referred to as the pseudo TPS function, while Equation (15) is referred to as the generalized pseudo TPS function.

2.2. Generalizing the Previous Construction

To extend the process used in constructing the pseudo TPS function, the construction begins with a polynomial of the following form:

such that its coefficients fulfill the following conditions:

This leads to a matrix system of the form

, where the vector c has the following form:

and the determinant of matrix B fulfills the following:

Therefore, using Equation (6), it is obtained that

where

are the column vectors of the inverse matrix of B, with

Then, the previous matrix system has the following solution:

with which the following polynomial is obtained:

Let M be the least common multiple (LCM) of the denominators in the coefficients of polynomial (17). Consequently, the value of

is defined as follows:

where

To exemplify the previous approximation, it is possible to choose the value

in Equation (17), resulting in the following polynomial:

Due to the choice of

and the way in which the function (18) is constructed, in the domain

, it fulfills the following (see Figure 5):

To enhance the previous approximation, a small perturbation

, where

, is introduced in the exponent of the term with the highest power associated with a negative coefficient. Simultaneously, the exponent of this coefficient is adjusted by adding a perturbation

. This leads to defining the following function:

which in the domain

fulfills the following (see Figure 6):

On the other hand, it is worth mentioning that the construction of the polynomial (7) may be generalized by replacing the vector c with the following:

resulting in the following function:

Taking the particular case

, and to improve the previous approximation, a small perturbation

, where

, is introduced in the exponent of the term with the highest power associated with a negative coefficient. Simultaneously, the exponent of this coefficient is adjusted by adding a perturbation

. This leads to defining the following function:

which in the domain

fulfills the following (see Figure 7):

2.3. Radial Functions with Behavior Similar to the TPS Function

The functions in Equations (22), (19), and (15) exhibit behavior similar to the TPS function within the domain

. However, the objective is to obtain radial functions [10,11] that adhere to this behavior. To accomplish this, the following constraints are imposed:

where

and

. Henceforth, it will be assumed that all functions used implicitly adhere to the restrictions given in Equation (24), unless otherwise stated. Imposing the aforementioned constraints on polynomials (22), (15), and (19) yields radial functions that exhibit a behavior similar to the TPS function. To illustrate this, the pseudo TPS function is selected, and Equation (1) is allowed to take rational values, resulting in the graphs shown in Figure 8:

Conditionally Positive Definite Functions

In this section, a definition and a crucial theorem are introduced [11], which will be fundamental in the subsequent discussion.

Definition 1. A function is said to be completely monotone in if it belongs to and fulfills the following:

Theorem 1. (Micchelli) Let be a given function. Then the function is radial and conditionally positive definite of order m in for all d if and only if is completely monotone in .

On the other hand, for future results, the following example is provided:

Example 1. Let ϕ be a function defined as follows:

where . Furthermore, the derivatives of the function ϕ are given by the following expressions:

It should be noted that the last expression may be rewritten as follows:

with which it is possible to obtain the following result:

and therefore, the following is fulfilled:

which means that is completely monotone. It is worth noting that is the smallest value for which this is true. Since β is not a natural number, ϕ is not a polynomial. Therefore, the following functions

are strictly conditionally positive definite of order and radial in for all d.

A conditionally positive definite function of order m remains conditionally positive definite of order

. Moreover, if a function is conditionally positive definite of order

m in

, then it is also conditionally positive definite of order

m in

for

[

10]. So, considering the previous example, it follows that the following function

is conditionally positive definite of order:

3. Interpolation with Radial Functions

Before proceeding, it is necessary to provide the following definition:

Definition 2. Let be a function. Then, is called radial if there exists a function such that

where denotes any vector norm (generally the Euclidean norm).

Given a set of values

, where

with

, an interpolant is a function

that fulfills the following:

On the other hand, let

be the space of polynomials in d variables of degree less than m. So, when using a conditionally positive definite radial function

, it is possible to propose an interpolant of the following form [10,11]:

where

and

forms a basis for

. Additionally, it is worth mentioning that the interpolation conditions given in Equation (26) are complemented by the following moment conditions:

Before continuing, it should be noted that solving the interpolation problem given in Equation (26) using the interpolant (27), along with the moment conditions (28), is equivalent to solving the following linear system:

where A and P are matrices of dimensions

and

respectively, with the components

The condition that a function

is conditionally positive definite of order m may ensure that the matrix A with components

is positive definite in the space of vectors

, such that

As a consequence, if in Equation (27) the function

is conditionally positive definite of order m and the set of centers

contains a unisolvent subset, the interpolation problem will have a unique solution [10,11].
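The block structure described above (interpolation conditions plus moment conditions) may be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this paper: it uses the standard thin plate spline $r^2\log r$ in two dimensions, which is conditionally positive definite of order 2, together with the polynomial basis $\{1, x, y\}$, so that $Q = 3$. The names `fit`, `evaluate`, and `poly_basis` are hypothetical.

```python
import numpy as np

def tps(r):
    """Standard thin plate spline r^2 log(r), with value 0 at r = 0."""
    out = np.zeros_like(r)
    mask = r > 0
    out[mask] = r[mask] ** 2 * np.log(r[mask])
    return out

def poly_basis(x):
    """Basis {1, x, y} of polynomials of degree < 2 in two variables (Q = 3)."""
    return np.column_stack([np.ones(len(x)), x[:, 0], x[:, 1]])

def fit(centers, values):
    """Solve the block system [[A, P], [P^T, 0]] [lam; gam] = [u; 0]."""
    n = len(centers)
    r = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
    A = tps(r)                      # A_ij = phi(||x_i - x_j||)
    P = poly_basis(centers)         # P_ij = p_j(x_i)
    q = P.shape[1]
    G = np.block([[A, P], [P.T, np.zeros((q, q))]])
    rhs = np.concatenate([values, np.zeros(q)])
    sol = np.linalg.solve(G, rhs)
    return sol[:n], sol[n:]

def evaluate(x, centers, lam, gam):
    """Evaluate the radial part plus the polynomial part at the points x."""
    r = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=-1)
    return tps(r) @ lam + poly_basis(x) @ gam

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    centers = rng.random((30, 2))
    values = np.sin(centers[:, 0]) + centers[:, 1] ** 2
    lam, gam = fit(centers, values)
    # Largest deviation from the data at the centers.
    print(np.max(np.abs(evaluate(centers, centers, lam, gam) - values)))
```

The last $Q$ rows of the system enforce the moment conditions, so the radial coefficients are orthogonal to the polynomial basis evaluated at the centers.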

Examples with Radial Functions

Before proceeding, it is necessary to define the following domain for the subsequent examples:

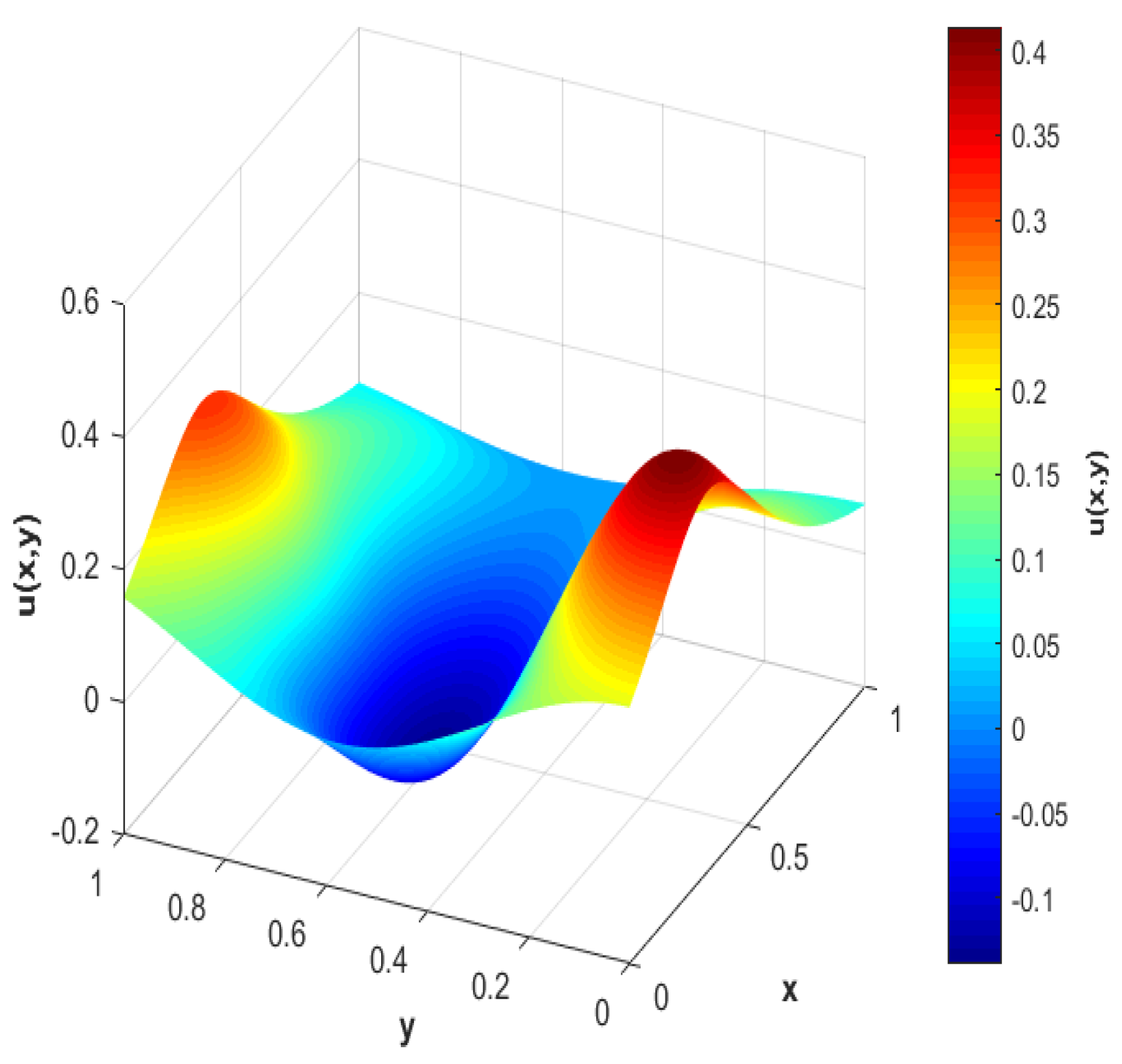

So, considering the following function:

and considering a distribution of nodes in a Halton-type pattern over the domain

, it is possible to visualize a graph of the function above (see Figure 9).

Thus, to perform the interpolation examples, a set of values

are generated, along with a sequence of values

. So, denoting

and

, it is possible to use the root mean square error (RMSE) to quantify the error generated in the interpolation problems, which is defined as follows:

Additionally, for the following examples, the condition number of the matrix G from Equation (29) is defined as follows:

where

denotes any matrix norm of the inverse matrix of G (generally the Euclidean norm).
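The ingredients of the experiments above can be sketched in a few lines. The block below is only illustrative: it assumes the usual RMSE, $\sqrt{\tfrac{1}{N}\sum_i (u_i - \tilde{u}_i)^2}$, and the condition number $\|G\|\,\|G^{-1}\|$ in the spectral norm, and it generates Halton-type nodes on the unit square, which is an assumed stand-in for the domain used in this paper. All function names are hypothetical.

```python
import numpy as np

def van_der_corput(n, base):
    """Terms 1..n of the van der Corput sequence in the given base."""
    seq = np.zeros(n)
    for i in range(n):
        k, f, x = i + 1, 1.0, 0.0
        while k > 0:
            f /= base
            x += f * (k % base)
            k //= base
        seq[i] = x
    return seq

def halton_2d(n):
    """First n Halton points in the unit square, using bases 2 and 3."""
    return np.column_stack([van_der_corput(n, 2), van_der_corput(n, 3)])

def rmse(approx, exact):
    """Root mean square error between two arrays of equal length."""
    approx = np.asarray(approx, dtype=float)
    exact = np.asarray(exact, dtype=float)
    return np.sqrt(np.mean((approx - exact) ** 2))

def condition_number(G):
    """cond(G) = ||G|| * ||G^{-1}|| in the spectral (2-) norm."""
    return np.linalg.norm(G, 2) * np.linalg.norm(np.linalg.inv(G), 2)

if __name__ == "__main__":
    print(halton_2d(4))
    print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
    print(condition_number(np.diag([1.0, 10.0])))
```

Forming the inverse explicitly mirrors the definition in the text; in practice `numpy.linalg.cond` computes the same quantity from the singular values.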

Example 2. Using the generalized pseudo TPS function

with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned by considering the endpoints of the interval, where the values are taken as follows:

with which the results shown in Table 1 are obtained.

Example 3. Using the radial function

with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned as follows:

with which the results shown in Table 2 are obtained.

To conclude this section, it is worth noting that in the previous examples, the condition number of the obtained matrices was excessively high. Therefore, before proceeding, a method is proposed to reduce the condition number of these matrices. For this purpose, the linear system (29) may be written in a compact form as follows:

where G is a matrix of size

, and

and U are column vectors of size

. So, to address the issue of reducing the condition number of the previous system, the QR decomposition of the matrix G is employed [36]; that is,

with which it is feasible to replace the linear system (29) with the following equivalent system:

where the matrix H is defined by the following components:

in which the value of n is chosen based on the following conditions:

Before proceeding, it is important to clarify that in the upcoming examples, the preconditioned linear system (34) will be used considering the value

.
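The QR step on which the preconditioning relies can be sketched as follows. This is only an illustration of solving $G\lambda = U$ through $G = QR$; it does not reproduce the matrix H or the choice of n described above, whose definitions are not restated here. Since Q is orthogonal, R alone has the same spectral condition number as G, so any reduction must come from the additional scaling introduced by H. The function names are hypothetical.

```python
import numpy as np

def back_substitution(R, b):
    """Solve R x = b for an upper triangular, nonsingular R."""
    n = R.shape[0]
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (b[i] - R[i, i + 1:] @ x[i + 1:]) / R[i, i]
    return x

def solve_via_qr(G, U):
    """Solve G x = U using G = Q R, that is, R x = Q^T U."""
    Q, R = np.linalg.qr(G)
    return back_substitution(R, Q.T @ U)

if __name__ == "__main__":
    G = np.array([[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]])
    U = np.array([1.0, 2.0, 3.0])
    x = solve_via_qr(G, U)
    print(x, np.max(np.abs(G @ x - U)))
```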

4. Fractional Operators

Fractional calculus is a branch of mathematics that involves derivatives of non-integer order, and it emerged around the same time as conventional calculus, in part due to Leibniz’s notation for derivatives of integer order:

Thanks to this notation, L’Hôpital was able to inquire in a letter to Leibniz about the interpretation of taking

in a derivative. At that moment, Leibniz could not provide a physical or geometrical interpretation for this question, so he simply replied to L’Hôpital in a letter that “… is an apparent paradox from which, one day, useful consequences will be drawn” [37]. The name “fractional calculus” comes from a historical question, as in this branch of mathematical analysis, derivatives and integrals of a certain order

are studied, with

. Currently, fractional calculus does not have a unified definition of what is considered a fractional derivative. As a consequence, when it is not necessary to explicitly specify the form of a fractional derivative, it is usually denoted as follows:

Fractional operators have various representations, but one of their fundamental properties is that they recover the results of conventional calculus when

. Before continuing, it is worth mentioning that due to the large number of fractional operators that exist [38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56], it seems that the most natural way to fully characterize the elements of fractional calculus is by using sets, which is the main idea behind the methodology known as fractional calculus of sets [57,58,59,60], whose seed of origin is the fractional Newton–Raphson method [24]. Therefore, considering a scalar function

and the canonical basis of

denoted by

, it is feasible to define the following fractional operator of order

using Einstein’s notation:

Therefore, denoting

as the partial derivative of order n applied with respect to the k-th component of the vector x, using the previous operator, it is feasible to define the following set of fractional operators:

which corresponds to a nonempty set since it contains the following sets of fractional operators:

As a consequence, the following result may be obtained:

On the other hand, the complement of the set (36) may be defined as follows:

with which it is feasible to obtain the following result:

where

denotes any permutation different from the identity. On the other hand, considering a function

, it is feasible to define the following sets:

where

denotes the k-th component of the function h. So, the following set of fractional operators may be defined:

which under the classical Hadamard product fulfills that

Furthermore, it is worth noting that for each operator

, it is feasible to define the following fractional matrix operator:

On the other hand, considering that when using the classical Hadamard product, in general

, it is feasible to define the following modified Hadamard product [57,60]:

with which for each operator

, a group isomorphic to the group of integers under addition may be defined, which corresponds to the abelian group generated by the operator

, denoted as follows [58,61]:

Before proceeding, it is worth mentioning that some applications may be derived based on the previous definition, among which the following corollary can be found [59,60]:

Corollary 1. Let be a fractional operator such that and let be the group of integers under the addition. Therefore, considering the modified Hadamard product given by (47) and some subgroup of the group , it is feasible to define the following set of fractional matrix operators:

which corresponds to a subgroup of the group generated by the operator ; that is,

Example 4. Let be the set of residual classes less than a positive integer n. Therefore, considering a fractional operator and the set , it is feasible to define, under the modified Hadamard product given by (47), the following abelian group of fractional matrix operators:

Furthermore, all possible combinations of the elements of the group are summarized in the following Cayley table:

On the other hand, it is important to highlight that Corollary 1 allows generating groups of fractional operators under other operations [59]. For example, considering the following operation

it is feasible to obtain the following corollary:

Corollary 2. Let be the set of positive residual classes less than p, with p a prime number. Therefore, for each fractional operator , it is feasible to define the following abelian group of fractional matrix operators under the operation (52):

Example 5. Let be a fractional operator such that . Therefore, considering the set , it is feasible to define, under the operation (52), the following abelian group of fractional matrix operators:

Furthermore, all possible combinations of the elements of the group are summarized in the following Cayley table:

Before proceeding, it is worth mentioning that while the above theory may seem overly abstract and restrictive at first, when considering specific cases, the results may be applied to a wide variety of well-known fractional operators in the literature. Among these is the Riemann–Liouville fractional integral operator, defined as follows [35,62]:

which allows constructing the Riemann–Liouville fractional derivative operator, defined as follows [62,63]:

where

and

. Furthermore, operator (55) also enables the construction of the Caputo fractional derivative operator, defined as follows [62,63]:

where

and

. So, to exemplify the aforementioned point that in specific cases the previous theory may be extended to multiple fractional operators, considering

, for the specific value

, it is feasible to replicate the results from Example 4 with the fractional operators (55)–(57), individually or in any combination when constructing a fractional operator using the definition (43). Similarly, for the specific value

, the results from Example 5 may be replicated with the aforementioned fractional operators. This opens the possibility of obtaining applications different from the conventional ones for fractional operators, since they are commonly used in the literature to replace derivatives and integrals of integer order in models aimed at predicting the behavior of certain physical phenomena, to achieve greater accuracy.
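The group structure behind Examples 4 and 5 can be illustrated on the operator indices alone. The sketch below rests on an assumption that is not verified against operation (52) or the modified Hadamard product: indices are taken to combine additively modulo n in Example 4 and multiplicatively modulo a prime p in Example 5. The helper names are hypothetical, and the tables list indices rather than the fractional matrix operators themselves.

```python
def cayley_table(elements, op):
    """Table whose (i, j) entry is op(elements[i], elements[j])."""
    return [[op(a, b) for b in elements] for a in elements]

def additive_table(n):
    """Residue classes 0..n-1 under addition modulo n (assumed model of Example 4)."""
    return cayley_table(list(range(n)), lambda a, b: (a + b) % n)

def multiplicative_table(p):
    """Residue classes 1..p-1 under multiplication modulo a prime p (assumed model of Example 5)."""
    return cayley_table(list(range(1, p)), lambda a, b: (a * b) % p)

def is_abelian_group_table(elements, table):
    """Check closure, identity, inverses, and commutativity from the table.

    Associativity is not checked here; it is inherited from integer arithmetic
    for the two operations above.
    """
    n = len(elements)
    closed = all(entry in elements for row in table for entry in row)
    identities = [elements[i] for i in range(n) if table[i] == list(elements)]
    if not closed or len(identities) != 1:
        return False
    e = identities[0]
    has_inverses = all(e in row for row in table)
    commutative = all(table[i][j] == table[j][i] for i in range(n) for j in range(n))
    return has_inverses and commutative

if __name__ == "__main__":
    for row in additive_table(3):
        print(row)
    for row in multiplicative_table(5):
        print(row)
```

The primality of p matters in the second case: for a composite modulus, some nonzero residues have no multiplicative inverse and the table fails the check.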

On the other hand, it is important to mention that if a function f fulfills the condition

, the Riemann–Liouville fractional derivative coincides with the Caputo fractional derivative; that is,

So, applying operator (56) with

to the function

, with

, the following result is obtained [26]:

where if

, it is fulfilled that

. On the other hand, it is worth noting that, as shown in the above equation, the perturbations

used in the functions that may be extended from the pseudo TPS function given in Equations (15) and (19) bear a certain resemblance to the application of fractional operators (56) and (57) to functions of the form

. Thus, proposing another application of fractional operators different from the conventional, in Equations (15) and (19), it is feasible to carry out the following substitution:

resulting in the following modified functions:
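The action of the Riemann–Liouville derivative on a power function, referenced above, can be checked numerically with a short sketch. It assumes the well-known power rule with lower limit $a = 0$, namely $D^{\alpha} x^{\mu} = \frac{\Gamma(\mu+1)}{\Gamma(\mu-\alpha+1)}\,x^{\mu-\alpha}$ for $\mu > -1$ and $x > 0$; the notation and function names are illustrative and may differ from those of Equation (59).

```python
import math

def rl_power_coefficient(mu, alpha):
    """Gamma(mu + 1) / Gamma(mu - alpha + 1), taken as 0 at the poles of the denominator."""
    arg = mu - alpha + 1.0
    if arg <= 0 and float(arg).is_integer():
        return 0.0  # 1 / Gamma vanishes at non-positive integers
    return math.gamma(mu + 1.0) / math.gamma(arg)

def rl_derivative_of_power(x, mu, alpha):
    """Riemann-Liouville derivative of order alpha of x**mu (lower limit 0, x > 0)."""
    return rl_power_coefficient(mu, alpha) * x ** (mu - alpha)

if __name__ == "__main__":
    # Integer order recovers the classical rule: d/dx x^2 = 2x.
    print(rl_derivative_of_power(3.0, 2.0, 1.0))
    # Half derivative of x at x = 1 equals 2 / sqrt(pi).
    print(rl_derivative_of_power(1.0, 1.0, 0.5), 2.0 / math.sqrt(math.pi))
```

Because the result is again a power of x with a shifted exponent and a Gamma-function coefficient, the perturbed exponents and coefficients discussed in the text have the same shape as a fractional derivative of a monomial.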

4.1. Examples with Partially Implemented Fractional Derivative

In the upcoming examples, Equation (32) will be employed once more, in conjunction with the node distribution illustrated in Figure 9, and the set of values

are generated accordingly. Additionally, the fractional derivative, defined in Equation (59) with

, will be applied. So, the results presented below are obtained:

Example 6. Using the modified function (60) with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned by considering the endpoints of the interval where the values are taken as follows:

with which the results shown in Table 3 are obtained.

Example 7. Using the modified function (61) with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned as follows:

with which the results shown in Table 4 are obtained.

4.2. Examples with Fully Implemented Fractional Derivative

Considering that the partial implementation of fractional derivatives in the functions from the previous examples resulted in well-behaved errors, the next step is to fully implement fractional derivatives in the functions (15) and (19) to analyze the behavior of the errors. This is accomplished through the following substitution:

resulting in the following modified functions:

Then, applying the fractional derivative defined in Equation (59) with

, the results presented below are obtained:

Example 8. Using the modified function (62) with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned by considering the endpoints of the interval where the values are taken as follows:

with which the results shown in Table 5 are obtained.

Example 9. Using the modified function (63) with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned as follows:

with which the results shown in Table 6 are obtained.

A Change in the Interpolant

In the preceding sections, the interpolant given by Equation (27) was utilized, where

. This leads to a substantial increase in the value of Q for certain cases. For instance, considering a polynomial in

of degree 4 results in Q being equal to 15. Recognizing that sometimes simplicity is key to solving certain problems, it is proposed to replace the polynomial in the interpolant (27) with a radial polynomial, such that

. This allows the proposal of the following interpolant:

As a consequence, the moment conditions may be rewritten as follows:

With the previous changes, the advantage is gained that, when considering a radial polynomial in

of degree 4, the value of Q would be equal to 5. Then, the following examples are presented using the modified interpolant (64):
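The two values of Q quoted above follow from a simple count, sketched below under two assumptions: the full polynomial space is taken in two variables, and the radial polynomial is assumed to be spanned by $1, r, \dots, r^{4}$. The function names are hypothetical.

```python
from math import comb

def full_polynomial_terms(d, degree):
    """Number of monomials of total degree <= degree in d variables."""
    return comb(degree + d, d)

def radial_polynomial_terms(degree):
    """Number of terms in 1, r, ..., r**degree (assumed form of the radial polynomial)."""
    return degree + 1

if __name__ == "__main__":
    print(full_polynomial_terms(2, 4))   # 15
    print(radial_polynomial_terms(4))    # 5
```

The gap widens with the dimension: the full space grows combinatorially in d, whereas the radial count depends only on the degree.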

Example 10. Using the modified function (62) with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned by considering the endpoints of the interval where the values are taken as follows:

with which the results shown in Table 7 are obtained.

Example 11. Using the modified function (61) with . Then, to use the interpolant (27) considering the previous function, the value of m that allows defining the value of Q may be assigned as follows:

with which the results shown in Table 8 are obtained.

5. Asymmetrical Collocation

Before commencing this section, it is crucial to note that the interpolation technique discussed earlier may also be extended to solve differential equations [10]. So, considering a domain

and the following problem

where f and g are given functions,

and

are linear differential operators, and u is the sought solution. Before proceeding, a modification to the interpolant (64) is necessary, to avoid discontinuities caused by the application of the operators

and

. Denoting by

the order of the differential operators, it is feasible to define the following values:

Then, the interpolant (64) may be rewritten as follows:

where

. As a consequence, the moment conditions take the form:

In addition to the constraints given in (24), it is necessary to add the following restriction:

On the other hand, when substituting the interpolant (69) as a potential solution of the system (66); that is,

the following linear system is obtained:

where

,

,

,

, and

P are matrices of dimensions

,

,

,

, and

respectively, whose components are given by the following expressions:

Examples with Fractional Differential Operators

Before proceeding, it should be noted that the interpolant provided by Equation (69) features a structure that is particularly effective for solving radial differential equations, which will be the main focus in the following examples. Furthermore, by utilizing the Caputo fractional derivative (57), it is feasible to construct the following fractional radial differential operator [31]:

and considering the operator

as the identity operator, the following differential equation may be formulated:

which has the special feature that when

, it takes the form of Poisson’s equation; that is,

So, to solve the system (74) in the following examples, a distribution of interior nodes based on Halton-type nodes combined with Cartesian nodes near the boundary of the domain

is considered, as shown in Figure 10, along with a sequence of values

. Furthermore, denoting

and

, the root mean square error from Equation (33) may be written as follows:

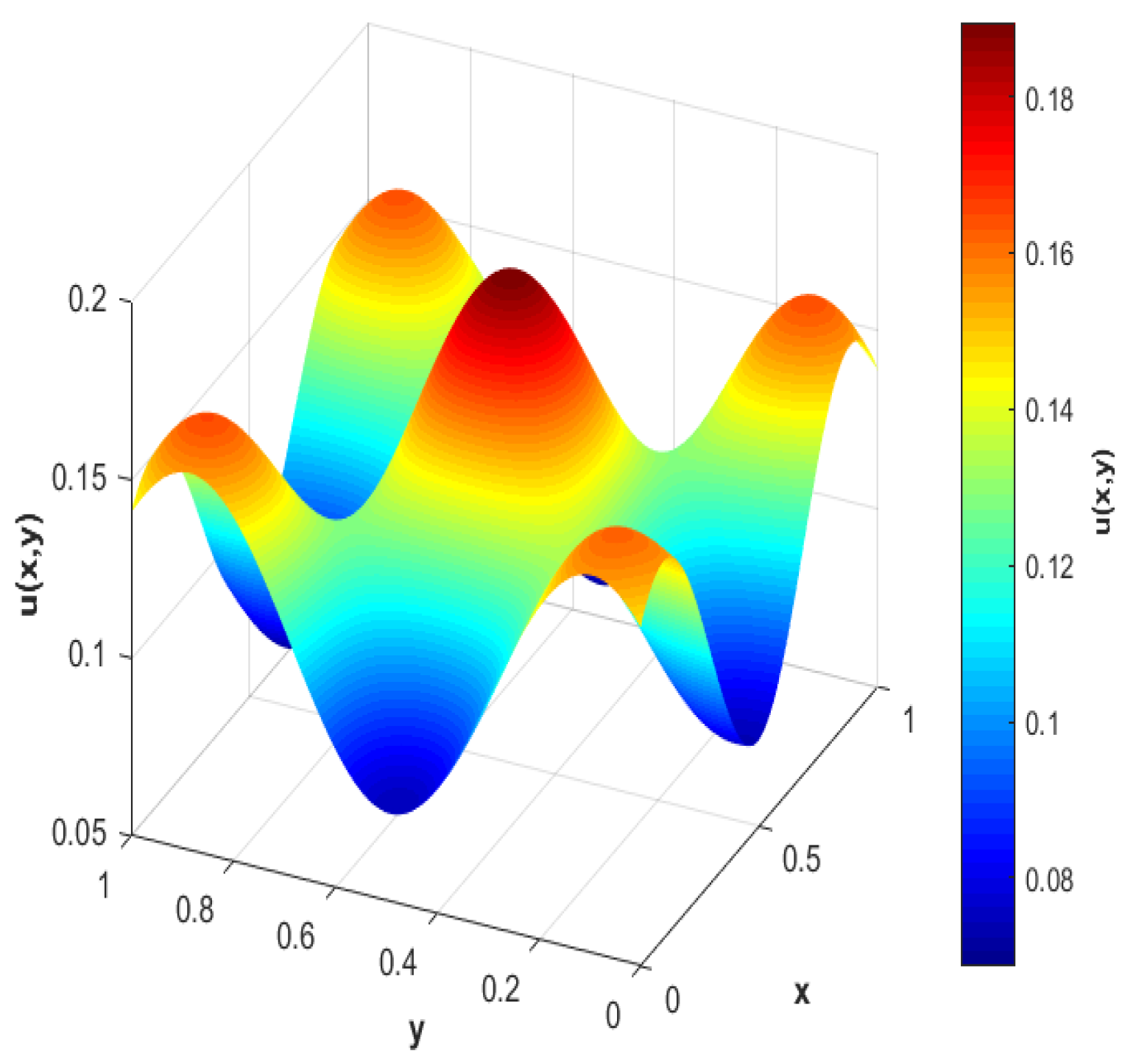

Example 12. Considering the value in the differential operator (73) and the following functions in the system (74):

So, to use the interpolant (69), the following values are calculated:

along with the values of N and m (since the latter allows defining the value of Q), which may be assigned by considering the endpoints of the interval where the values are taken, as follows:

Finally, using the modified function (62) with the previous value of N in the interpolant, the results shown in Table 9 are obtained, and the graph of the numerical solution of the system with the minimal error obtained is shown in Figure 11.

Example 13. Considering the value in the differential operator (73) and the following functions in the system (74):

So, to use the interpolant (69), the following values are calculated:

along with the values of N and m (since the latter allows defining the value of Q), which may be assigned by considering the endpoints of the interval where the values are taken, as follows:

Finally, using the modified function (62) with the previous value of N in the interpolant, the results shown in Table 10 are obtained, and the graph of the numerical solution of the system with the minimal error obtained is shown in Figure 12.

To continue validating the interpolant provided in Equation (69), the following differential operator is defined using the Riemann–Liouville fractional derivative (56):

and considering that this operator is defined on a domain

, in the following example, a distribution of interior nodes based on Halton-type nodes combined with Cartesian nodes near the boundary of the domain

is considered, as shown in Figure 13.

Example 14. Considering the value in the differential operator (76) and the following functions in the system (74):

So, to use the interpolant (69), the following values are calculated:

along with the values of N and m (since the latter allows defining the value of Q), which may be assigned by considering the endpoints of the interval where the values are taken, as follows:

Finally, using the modified function (62) with the previous value of N in the interpolant, the results shown in Table 11 are obtained, and a graph of the numerical solution of the system with the minimal error obtained is shown in Figure 14.

6. Conclusions

The pursuit of accurate solutions to differential equations is a fundamental requirement in the fields of computational mathematics and engineering. Among the available tools, the thin plate spline (TPS), a radial basis function, stands out for its versatility in modeling various behaviors. However, its direct application poses challenges that demand meticulous adaptations to effectively address specific domains. This work focuses on developing a family of radial functions designed to emulate the behavior of the TPS function, providing a flexible and adaptable alternative that enables the numerical approximation of solutions to differential equations, including those of a fractional nature.

Additionally, an innovative approach was proposed by considering the application of fractional derivatives to the proposed radial functions, allowing both partial and full implementation in their structure. This broadens the applications of fractional operators and, under certain conditions, enables the solution of fractional differential equations, a field of growing interest today. Furthermore, a matrix preconditioning technique was introduced through QR decomposition that can be used in solving interpolation problems. The combination of these elements results in a versatile tool for solving differential equations in both traditional and fractional contexts.

In this paper, a method was also presented to define two different types of abelian groups for any fractional operator defined in the interval , among which the Riemann–Liouville fractional integral, Riemann–Liouville fractional derivative, and Caputo fractional derivative are worth mentioning. Finally, this study employed asymmetric collocation in solving systems of fractional differential equations, using the radial functions generated with fractional derivatives in their structure, along with a radial interpolant adaptable to different fractional differential operators. This work has shown an innovative application of fractional operators in the context of both abelian groups and radial functions.