Image Enhancement Model Based on Fractional Time-Delay and Diffusion Tensor

Abstract

1. Introduction

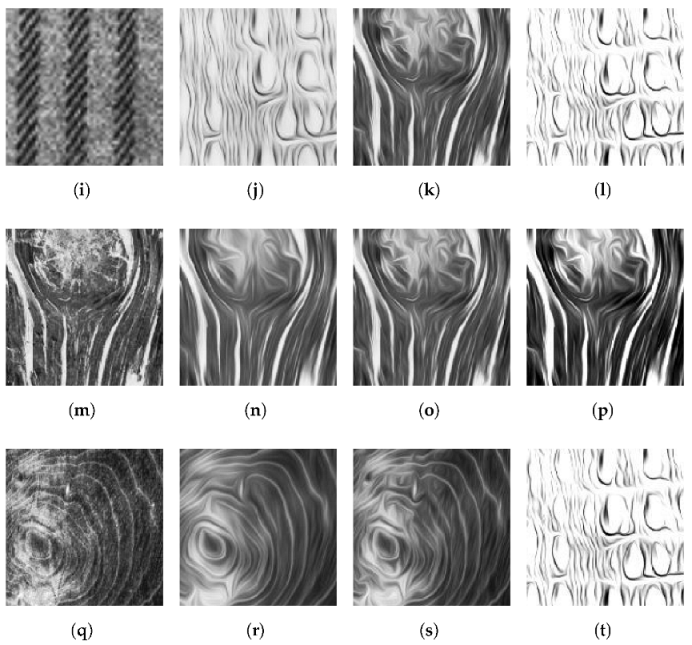

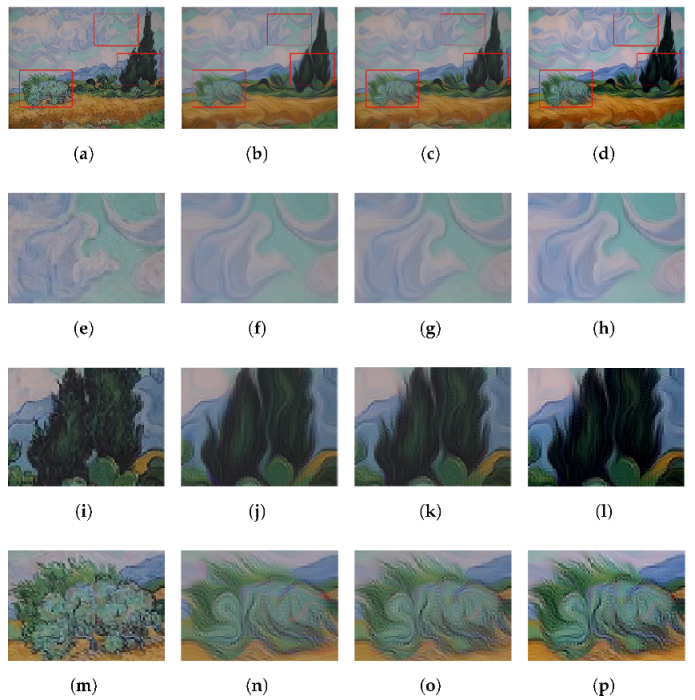

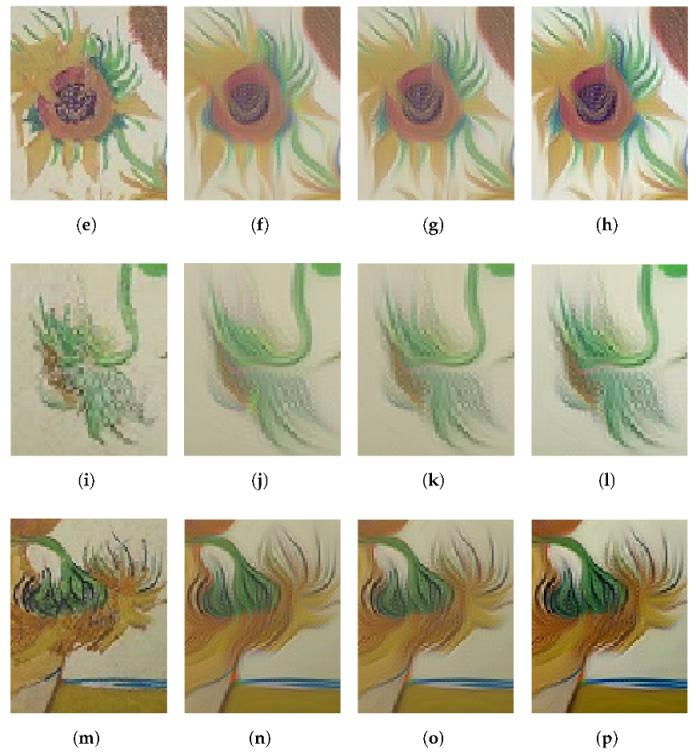

- The nonlinear isotropic diffusion equation exploits the spatial information in the image, and the fractional time-delay equation exploits the past information of the image. The diffusion tensor of coherence-enhancing diffusion (CED) completes interrupted lines and enhances flow-like structures.

- The introduced source term changes the type and behavior of the diffusion, which enhances the contrast between the target and its background. In addition, this term can reduce noise in the image.

- Based on the theory of partial differential equations and some properties of fractional calculus, we prove the existence and uniqueness of weak solutions.

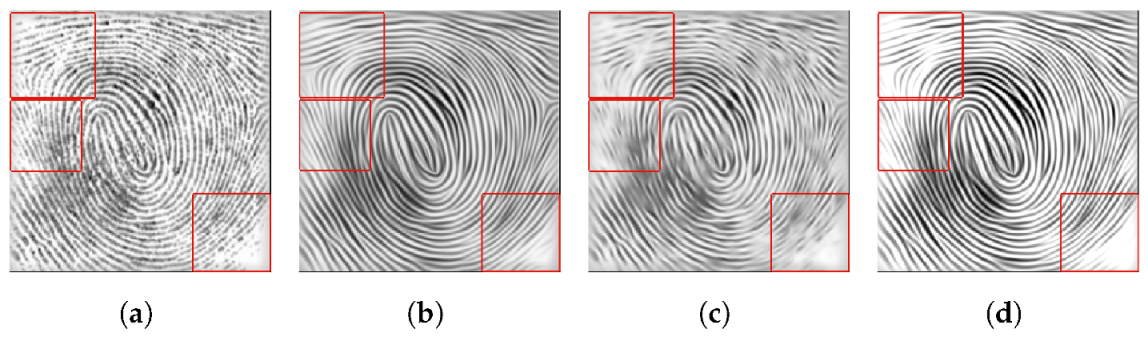

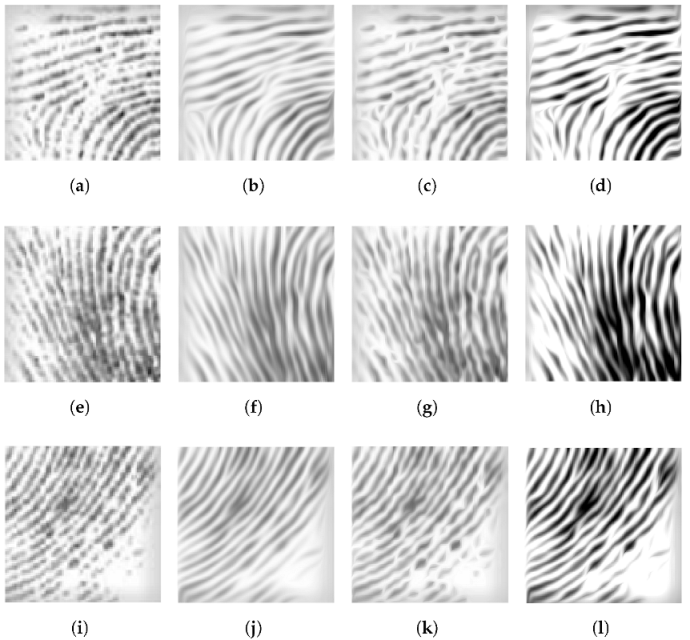

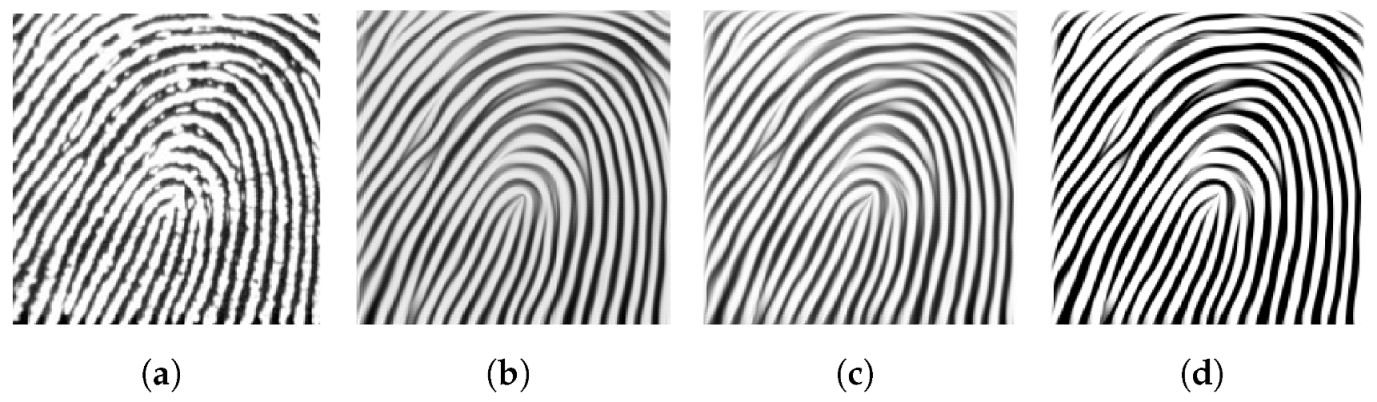

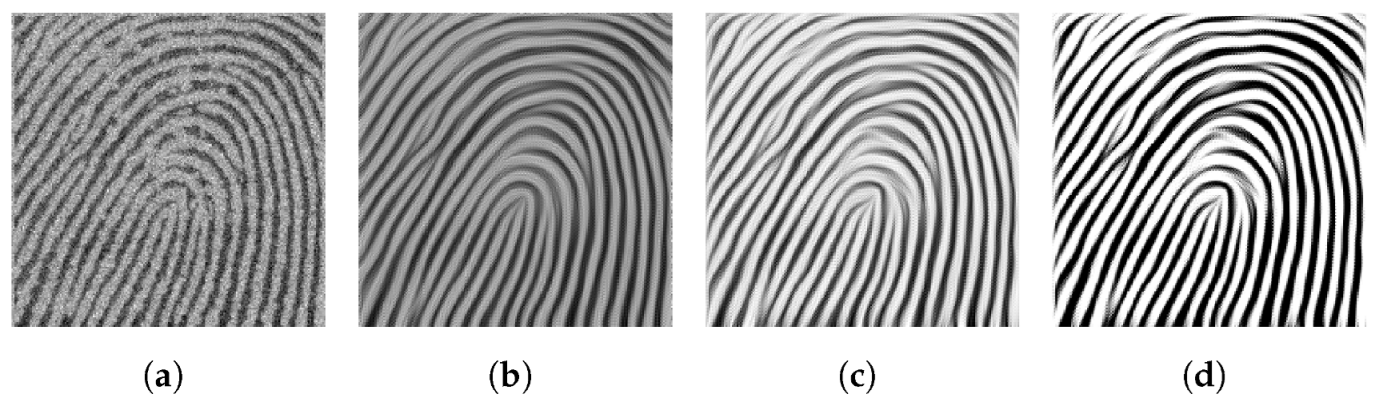

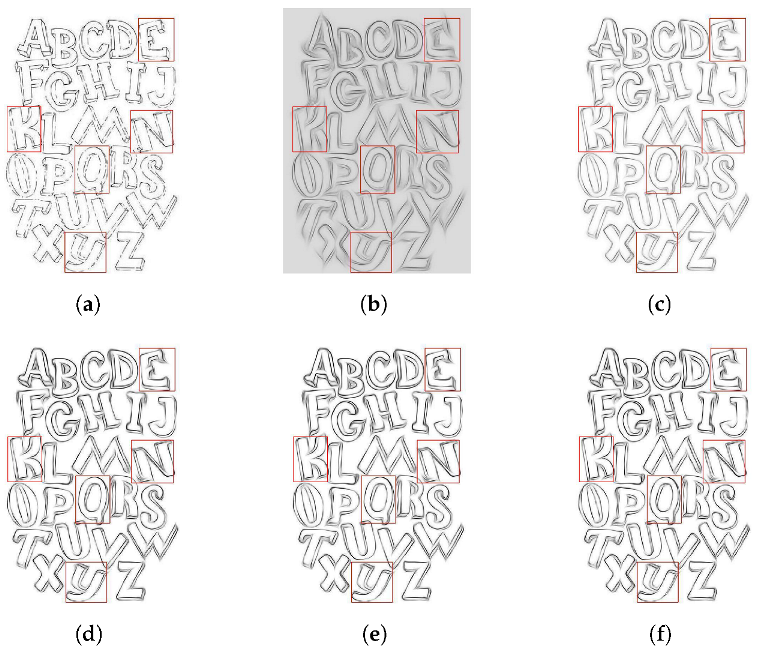

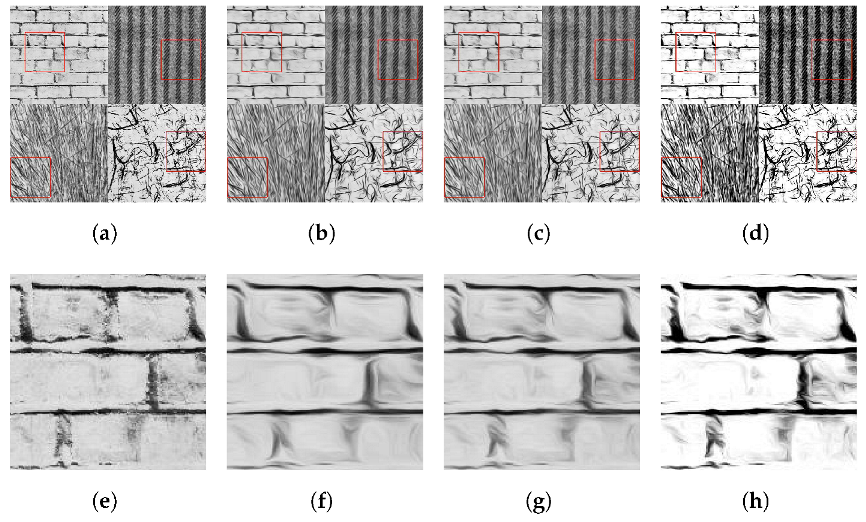

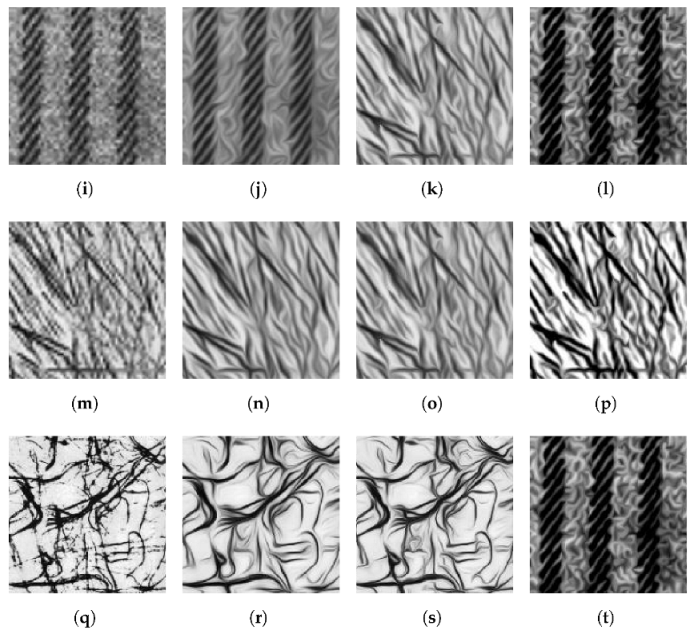

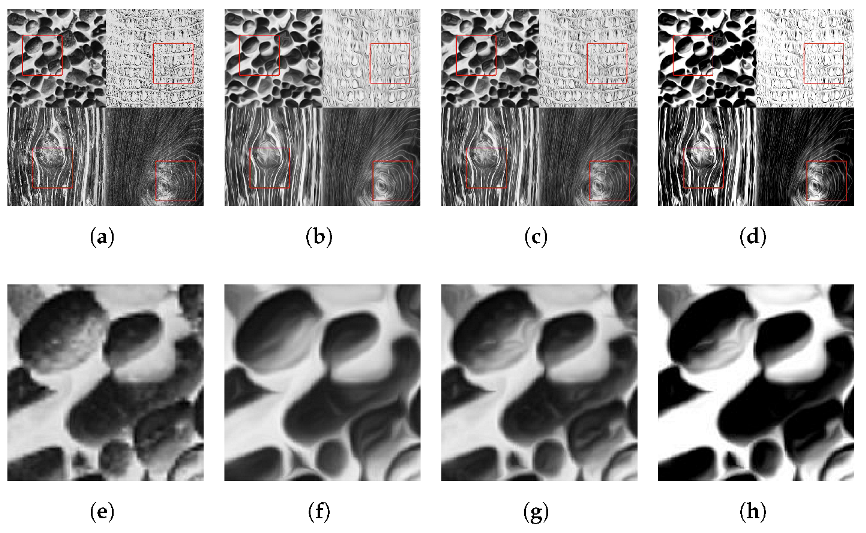

- Comparative experiments demonstrate the advantages of the proposed method: the model connects interrupted lines, enhances image contrast, and strengthens the flow-like character of various types of lines.

2. The Proposed Model and Its Theoretical Analysis

2.1. Preliminary Knowledge

2.2. The Proposed Model

- The first equation is an anisotropic diffusion equation that enhances flow-like structures and connects interrupted lines. Since the eigenvalues of the structure tensor J characterize the coherent structure, we select as the measure of coherence; more details can be found in [24]. Specifically, the eigenvectors of the structure tensor give the optimal local orientations, and the corresponding eigenvalues measure the local contrast along these orientations. The diffusion tensor D is constructed with the same eigenvectors as J and with suitably chosen eigenvalues. Smoothing then acts mainly along the flow direction, so the model connects interrupted lines and enhances flow-like structures. The source term in the first equation changes the type and behavior of the diffusion. This increases the contrast between the target and the background and enhances the texture structure; see [38] for details.

- The second equation acts as a fractional time-delay regularization that takes the past information of the image into account. The long-range dependence (memory) of this equation also helps avoid excessive smoothing.

- The final equation, which is based on a structure tensor, is an isotropic diffusion equation that handles discontinuities well. Let , and choose the diffusion function , where K is a threshold value. Alternatively, the diffusion function can be chosen as , where is a small positive number. Because the diffusion coefficient adapts to the local features of the image, edge information is preserved and textures and edges are not blurred.
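
The construction of D described above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the paper's implementation. It does three things:

- It builds the structure tensor J from a Gaussian-smoothed image.
- It keeps the eigenvectors of J.
- It assigns eigenvalues with Weickert's coherence-enhancing rule from [24]. The eigenvalue across the flow is a small constant `lam_min`. The eigenvalue along the flow is lam_min + (1 − lam_min)·exp(−C/(μ1 − μ2)²), where μ1 ≥ μ2 are the eigenvalues of J.

The parameters `sigma`, `rho`, `lam_min` and `C` are illustrative assumptions. So are the helper names and the Perona–Malik-type diffusivity g(s²) = 1/(1 + s²/K²), which stands in for the diffusion function of the final equation.

```python
import numpy as np

# Sketch under assumptions: Weickert-style CED eigenvalues; all names are hypothetical.


def gaussian_smooth(img, sigma):
    """Separable Gaussian blur with reflecting boundaries (NumPy only)."""
    img = np.asarray(img, dtype=float)
    if sigma <= 0:
        return img.copy()
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    out = np.pad(img, radius, mode="reflect")
    out = np.apply_along_axis(np.convolve, 1, out, kernel, mode="valid")  # along x
    out = np.apply_along_axis(np.convolve, 0, out, kernel, mode="valid")  # along y
    return out


def structure_tensor(u, sigma=1.0, rho=2.0):
    """J_rho = K_rho * (grad u_sigma grad u_sigma^T), returned with shape (H, W, 2, 2)."""
    uy, ux = np.gradient(gaussian_smooth(u, sigma))  # axis 0 = y, axis 1 = x
    j11 = gaussian_smooth(ux * ux, rho)
    j12 = gaussian_smooth(ux * uy, rho)
    j22 = gaussian_smooth(uy * uy, rho)
    row1 = np.stack([j11, j12], axis=-1)
    row2 = np.stack([j12, j22], axis=-1)
    return np.stack([row1, row2], axis=-2)


def ced_diffusion_tensor(J, lam_min=0.001, C=1.0):
    """Diffusion tensor sharing the eigenvectors of J, with CED-type eigenvalues."""
    mu, vecs = np.linalg.eigh(J)   # ascending: mu[..., 1] = mu1 >= mu[..., 0] = mu2
    gap = mu[..., 1] - mu[..., 0]  # mu1 - mu2: large where the flow is coherent
    with np.errstate(divide="ignore", over="ignore"):
        lam_flow = np.where(
            gap > 1e-12, lam_min + (1 - lam_min) * np.exp(-C / gap ** 2), lam_min
        )
    # Eigenvalue per eigenvector: lam_flow along the flow (mu2), lam_min across it (mu1).
    lam = np.stack([lam_flow, np.full_like(gap, lam_min)], axis=-1)
    return np.einsum("...ik,...k,...jk->...ij", vecs, lam, vecs)


def perona_malik_g(s2, K):
    """Hypothetical diffusivity g(s^2) = 1 / (1 + s^2 / K^2); K acts as the threshold."""
    return 1.0 / (1.0 + s2 / K ** 2)


if __name__ == "__main__":
    x = np.arange(32)
    u = np.tile(100.0 * np.sin(2 * np.pi * x / 8), (32, 1))  # vertical stripes
    D = ced_diffusion_tensor(structure_tensor(u))
    print(np.round(D[16, 16], 3))
```

For an image of vertical stripes, the tensor at an interior pixel is close to diag(lam_min, 1) in (x, y) order. Diffusion across the stripes is almost switched off, while diffusion along them runs at nearly full strength. This is the mechanism that bridges gaps in interrupted lines.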

2.3. The Theoretical Analysis of the Proposed Model

2.4. The Existence of Weak Solutions

2.5. Uniqueness of Weak Solutions

3. Numerical Algorithms and Experimental Results

3.1. Numerical Algorithm

Algorithm 1. The proposed model

Input: initial image , parameters , , , p, iteration step size , time step for the isotropic diffusion , and time step for the anisotropic diffusion .

Initial conditions: .

For ()

Output: the image u.
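
The update loop of Algorithm 1 must discretize the fractional time derivative in the second (time-delay) equation. The Python code below is a minimal sketch under three assumptions:

- the derivative is a Caputo derivative of order α ∈ (0, 1);
- the equation takes a scalar relaxation form D_t^α v = ω(f − v), in the spirit of the time-delay regularization of [25];
- the standard implicit L1 approximation is used, a common finite-difference choice for time-fractional diffusion (cf. [41]).

The forcing f, the rate ω and the function names are hypothetical. In the model itself, v and f would be image-sized arrays, and the right-hand side is the one given by the paper's equation, not by this sketch.

```python
import math

# Sketch under assumptions: scalar relaxation form, Caputo derivative, implicit L1 scheme.


def l1_weights(n, alpha):
    """L1 weights b_k = (k + 1)^(1 - alpha) - k^(1 - alpha) for k = 0, ..., n - 1."""
    return [(k + 1) ** (1 - alpha) - k ** (1 - alpha) for k in range(n)]


def fractional_relaxation(f, v0, alpha, omega, tau):
    """Implicit L1 scheme for the Caputo problem D_t^alpha v = omega * (f - v).

    f[n - 1] is the (hypothetical) forcing at t_n = n * tau; returns [v^0, ..., v^N].
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    b = l1_weights(len(f), alpha)
    c = tau ** (-alpha) / math.gamma(2.0 - alpha)  # scaling of the L1 sum
    v = [v0]
    for n in range(1, len(f) + 1):
        # Memory term: every earlier increment contributes with a decaying weight b_k.
        history = sum(b[k] * (v[n - k] - v[n - k - 1]) for k in range(1, n))
        # Solve c * (v^n - v^{n-1} + history) = omega * (f^n - v^n) for v^n.
        v.append((c * (v[n - 1] - history) + omega * f[n - 1]) / (c + omega))
    return v


if __name__ == "__main__":
    path = fractional_relaxation([1.0] * 200, 0.0, alpha=0.5, omega=1.0, tau=0.1)
    print(round(path[10], 3), round(path[-1], 3))
```

The `history` sum distinguishes this update from a classical backward Euler step. Every past increment of v contributes, with weights b_k that decay roughly like (1 − α)k^(−α). This is the long-range memory that the model relies on to avoid excessive smoothing.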

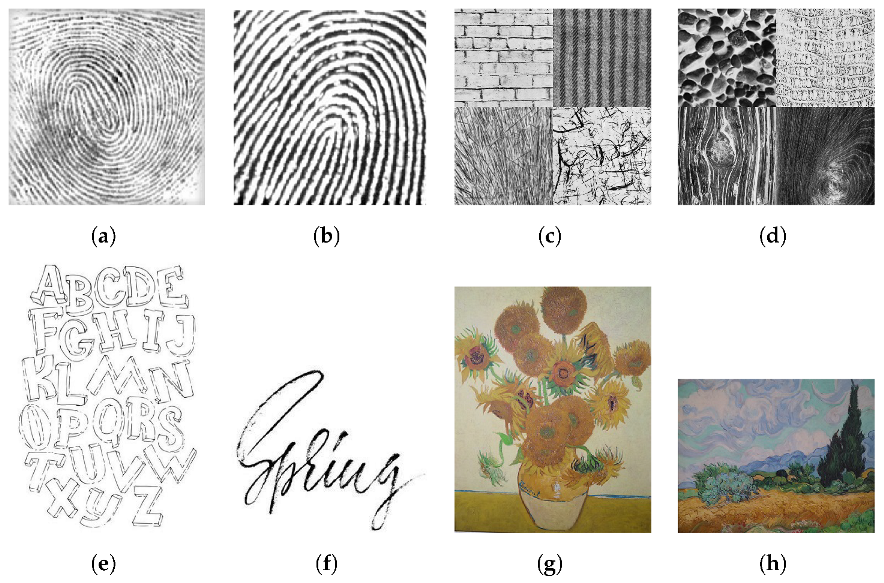

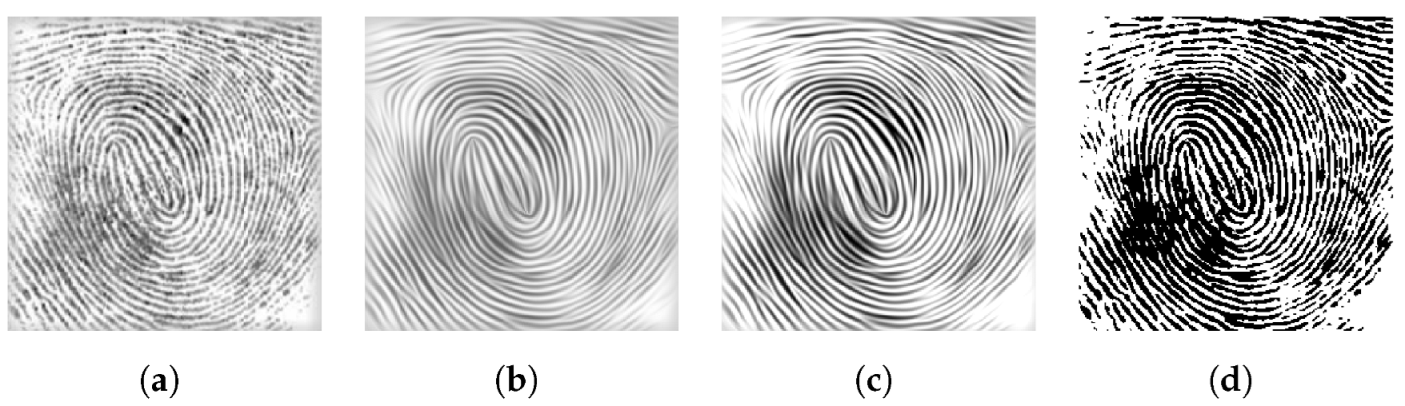

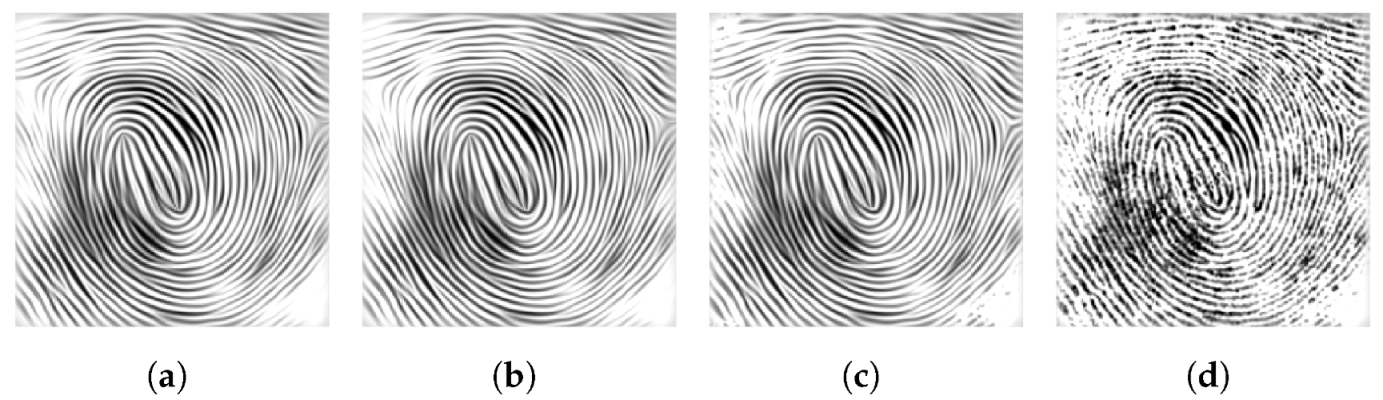

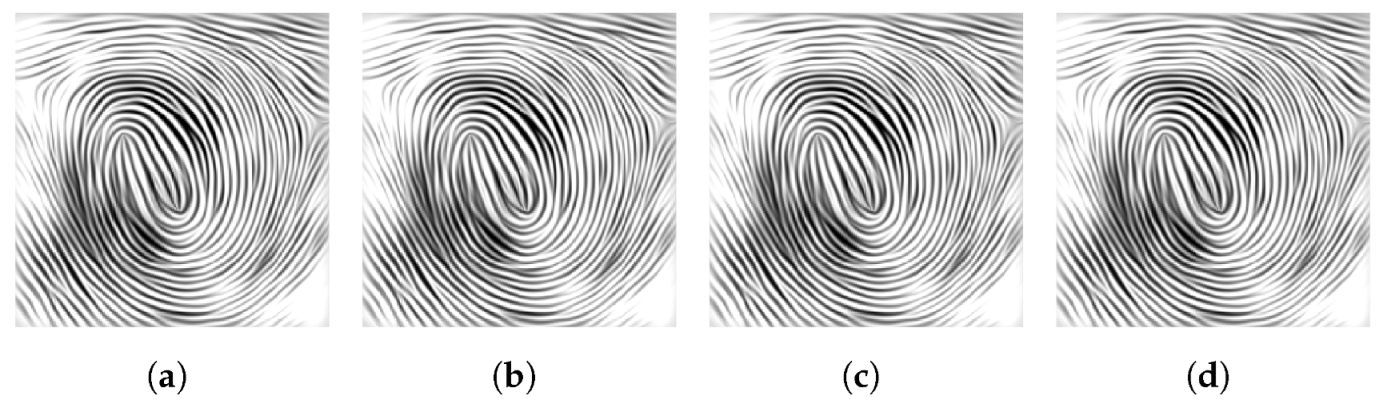

3.2. Experimental Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zou, G.; Li, T.; Li, G.; Peng, X.; Fu, G. A visual detection method of tile surface defects based on spatial-frequency domain image enhancement and region growing. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; pp. 1631–1636.

- Bhandari, A.K. A logarithmic law based histogram modification scheme for naturalness image contrast enhancement. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 1605–1627.

- Yu, T.; Zhu, M. Image enhancement algorithm based on image spatial domain segmentation. Comput. Inform. 2021, 40, 1398–1421.

- Zhao, J.; Fang, Q. Noise reduction and enhancement processing method of cement concrete pavement image based on frequency domain filtering and small world network. In Proceedings of the 2022 International Conference on Edge Computing and Applications (ICECAA), Tamilnadu, India, 13–15 October 2022; pp. 777–780.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 12–15 May 2018; pp. 7159–7165.

- Huang, J.; Zhu, P.; Geng, M.; Ran, J.; Zhou, X.; Xing, C.; Wan, P.; Ji, X. Range scaling global u-net for perceptual image enhancement on mobile devices. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Gabor, D. Information theory in electron microscopy. Lab. Investig. J. Tech. Methods Pathol. 1965, 14, 801–807.

- Jain, A.K. Partial differential equations and finite-difference methods in image processing, Part 1: Image representation. J. Optim. Theory Appl. 1977, 23, 65–91.

- Perona, P.; Malik, J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 629–639.

- Nitzberg, M.; Shiota, T. Nonlinear image filtering with edge and corner enhancement. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 826–833.

- Cottet, G.H.; Germain, L. Image processing through reaction combined with nonlinear diffusion. Math. Comput. 1993, 61, 659–673.

- Hao, Y.; Yuan, C. Fingerprint image enhancement based on nonlinear anisotropic reverse-diffusion equations. In Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Francisco, CA, USA, 1–5 September 2004; Volume 1, pp. 1601–1604.

- Brox, T.; Weickert, J.; Burgeth, B.; Mrázek, P. Nonlinear structure tensors. Image Vis. Comput. 2006, 24, 41–55.

- Wang, W.W.; Feng, X.C. Anisotropic diffusion with nonlinear structure tensor. Multiscale Model. Simul. 2008, 7, 963–977.

- Marin-McGee, M.J.; Velez-Reyes, M. A spectrally weighted structure tensor for hyperspectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4442–4449.

- Marin-McGee, M.; Velez-Reyes, M. Coherence enhancement diffusion for hyperspectral imagery using a spectrally weighted structure tensor. In Proceedings of the 2016 8th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Los Angeles, CA, USA, 21–24 August 2016; pp. 1–4.

- Nnolim, U.A. Partial differential equation-based hazy image contrast enhancement. Comput. Electr. Eng. 2018, 72, 670–681.

- Gu, Z.; Chen, Y.; Chen, Y.; Lu, Y. SAR image enhancement based on PM nonlinear diffusion and coherent enhancement diffusion. In Proceedings of the IGARSS 2020 - 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 581–584.

- Bai, J.; Feng, X.C. Fractional-order anisotropic diffusion for image denoising. IEEE Trans. Image Process. 2007, 16, 2492–2502.

- Sharma, D.; Chandra, S.K.; Bajpai, M.K. Image enhancement using fractional partial differential equation. In Proceedings of the 2019 Second International Conference on Advanced Computational and Communication Paradigms (ICACCP), Gangtok, India, 25–28 February 2019; pp. 1–6.

- Chandra, S.K.; Bajpai, M.K. Fractional mesh-free linear diffusion method for image enhancement and segmentation for automatic tumor classification. Biomed. Signal Process. Control 2020, 58, 101841.

- Nnolim, U.A. Forward-reverse fractional and fuzzy logic augmented partial differential equation-based enhancement and thresholding for degraded document images. Optik 2022, 260, 169050.

- Ben-loghfyry, A. Reaction-diffusion equation based on fractional-time anisotropic diffusion for textured images recovery. Int. J. Appl. Comput. Math. 2022, 8, 177.

- Weickert, J. Coherence-enhancing diffusion filtering. Int. J. Comput. Vis. 1999, 31, 111.

- Chen, Y.; Levine, S. Image recovery via diffusion tensor and time-delay regularization. J. Vis. Commun. Image Represent. 2002, 13, 156–175.

- Cottet, G.; Ayyadi, M.E. A Volterra type model for image processing. IEEE Trans. Image Process. 1998, 7, 292–303.

- Koeller, R. Applications of fractional calculus to the theory of viscoelasticity. Trans. ASME J. Appl. Mech. 1984, 51, 299–307.

- Benson, D.A.; Wheatcraft, S.W.; Meerschaert, M.M. Application of a fractional advection-dispersion equation. Water Resour. Res. 2000, 36, 1403–1412.

- Butzer, P.L.; Westphal, U. An introduction to fractional calculus. In Applications of Fractional Calculus in Physics; World Scientific: Singapore, 2000; pp. 1–85.

- Dou, F.; Hon, Y. Numerical computation for backward time-fractional diffusion equation. Eng. Anal. Bound. Elem. 2014, 40, 138–146.

- Cuesta-Montero, E.; Finat, J. Image processing by means of a linear integro-differential equation. In Proceedings of the 3rd IASTED International Conference on Visualization, Imaging, and Image Processing, Benalmadena, Spain, 8–10 September 2003; Volume 1.

- Janev, M.; Pilipović, S.; Atanacković, T.; Obradović, R.; Ralević, N. Fully fractional anisotropic diffusion for image denoising. Math. Comput. Model. 2011, 54, 729–741.

- Li, Y.; Liu, F.; Turner, I.W.; Li, T. Time-fractional diffusion equation for signal smoothing. Appl. Math. Comput. 2018, 326, 108–116.

- Li, L.; Liu, J.G. Some compactness criteria for weak solutions of time fractional PDEs. SIAM J. Math. Anal. 2018, 50, 3963–3995.

- Li, L.; Liu, J.G. A generalized definition of Caputo derivatives and its application to fractional ODEs. SIAM J. Math. Anal. 2018, 50, 2867–2900.

- Alikhanov, A. A priori estimates for solutions of boundary value problems for fractional-order equations. Differ. Equ. 2010, 46, 660–666.

- Agrawal, O. Fractional variational calculus in terms of Riesz fractional derivatives. J. Phys. A Math. Theor. 2007, 40, 6287.

- Dong, G.; Guo, Z.; Zhou, Z.; Zhang, D.; Wo, B. Coherence-enhancing diffusion with the source term. Appl. Math. Model. 2015, 39, 6060–6072.

- Evans, L.C. Partial Differential Equations; American Mathematical Society: Providence, RI, USA, 2022; Volume 19.

- Suri, J.S.; Laxminarayan, S. PDE and Level Sets; Springer Science & Business Media: New York, NY, USA, 2002.

- Murio, D.A. Implicit finite difference approximation for time fractional diffusion equations. Comput. Math. Appl. 2008, 56, 1138–1145.

- Weickert, J.; Scharr, H. A scheme for coherence-enhancing diffusion filtering with optimized rotation invariance. J. Vis. Commun. Image Represent. 2002, 13, 103–118.

| Test Figures | | | | |
|---|---|---|---|---|
| fingerprint1 | 0.3 | 4 | 0.3 | 100 |
| fingerprint2 | 0.5 | 4 | 0.5 | 100 |
| spring | 0.5 | 5 | 0.2 | 80 |
| alphabet | 0.3 | 6 | 0.3 | 150 |
| texture1 | 0.3 | 5 | 0.5 | 100 |
| texture2 | 0.3 | 7 | 0.5 | 150 |
| sunflower | 0.2 | 2 | 0.6 | 50 |
| cypress | 0.2 | 2 | 0.6 | 50 |

| Test Figures | | | | |
|---|---|---|---|---|
| fingerprint1 | 0.5 | 0.2 | 0.3 | 30 |
| fingerprint2 | 0.5 | 0.2 | 0.5 | 100 |
| spring | 0.3 | 0.1 | 0.2 | 50 |
| alphabet | 0.3 | 0.15 | 0.3 | 90 |
| texture1 | 0.5 | 0.15 | 0.5 | 110 |
| texture2 | 0.5 | 0.2 | 0.5 | 100 |
| sunflower | 0.2 | 0.2 | 0.6 | 30 |
| cypress | 0.2 | 0.2 | 0.6 | 30 |

| Test Figures | | | | | | | |
|---|---|---|---|---|---|---|---|
| fingerprint1 | 0.5 | 0.018 | 0.5 | 0.7 | 0.2 | 0.3 | 120 |
| fingerprint2 | 0.5 | 0.018 | 0.5 | 0.7 | 0.2 | 0.5 | 100 |
| spring | 0.3 | 0.02 | 0.5 | (0.1, 0.5, 0.7) | 0.1 | 0.2 | 40 |
| alphabet | 0.5 | 0.025 | 0.3 | (0.1, 0.5, 0.7) | 0.15 | 0.3 | 80 |
| texture1 | 0.5 | 0.02 | 0.5 | 0.7 | 0.15 | 0.5 | 60 |
| texture2 | 0.5 | 0.007 | 0.5 | 0.1 | 0.2 | 0.5 | 150 |
| sunflower | 0.2 | 0.02 | 0.5 | 0.5 | 0.2 | 0.6 | 30 |
| cypress | 0.2 | 0.02 | 0.5 | 0.5 | 0.2 | 0.6 | 30 |

| | Figure 5 contrast | Figure 5 entropy | Figure 7 contrast | Figure 7 entropy | Figure 8 contrast | Figure 8 entropy |
|---|---|---|---|---|---|---|
| original | 43.59 | 7.24 | 80.31 | 6.62 | 81.39 | 6.74 |
| CED | 35.27 | 7.15 | 71.18 | 7.27 | 71.31 | 7.25 |
| CDEs | - | - | 71.95 | 7.28 | 75.69 | 7.15 |
| OURS | 73.57 | 6.49 | 113.56 | 3.75 | 119.13 | 3.05 |
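
The definitions of the contrast and entropy values in the table are not reproduced in this section. The Python code below is a minimal sketch that assumes two standard definitions:

- entropy is the Shannon entropy, in bits, of the grey-level histogram;
- contrast is the RMS contrast, that is, the standard deviation of the grey levels.

Both definitions and the function names are assumptions. The paper may use a different contrast measure, for example one based on a grey-level co-occurrence matrix.

```python
import math
from collections import Counter

# Sketch under assumptions: histogram entropy and RMS contrast; names are hypothetical.


def image_entropy(pixels):
    """Shannon entropy in bits of the grey-level histogram (assumed definition)."""
    total = len(pixels)
    probs = (count / total for count in Counter(pixels).values())
    return sum(-p * math.log2(p) for p in probs)


def rms_contrast(pixels):
    """Standard deviation of the grey levels (assumed contrast definition)."""
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


if __name__ == "__main__":
    flat = [128] * 64               # uniform image: no information, no contrast
    binary = [0] * 32 + [255] * 32  # two equally frequent grey levels
    print(image_entropy(flat), rms_contrast(flat))
    print(image_entropy(binary), rms_contrast(binary))
```

The input is a flat list of grey levels; a 2D image can be flattened first. For 8-bit images the entropy is at most 8 bits, a value reached only when all 256 grey levels are equally frequent.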

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yao, W.; Huang, Y.; Wu, B.; Zhou, Z. Image Enhancement Model Based on Fractional Time-Delay and Diffusion Tensor. Fractal Fract. 2023, 7, 569. https://doi.org/10.3390/fractalfract7080569
