Abstract

In this paper, we generalize the scheme proposed by Ermakov and Kalitkin and present a class of two-parameter fourth-order optimal methods, which we call Ermakov’s Hyperfamily. It is a substantial improvement on the classical Newton’s method because it extends the regions of convergence and is very stable. Another novelty is that the class contains some classical methods, such as King’s family, as particular cases. From this class, we generate a new uniparametric family, which we call the KLAM family, containing the classical Ostrowski and Chun methods, whose efficiency, stability, and optimality have been proven, but also new methods that in many cases outperform them, as we show. We demonstrate that the family has fourth order of convergence and is computationally efficient. A dynamical study is performed, allowing us to choose methods with good stability properties and to avoid chaotic behavior, implicit in the fractal structure defined by the Julia set in the related dynamical planes. Some numerical tests are presented to confirm the theoretical results and to compare the proposed methods with other known methods.

1. Introduction

Many problems in science, physics, economics, engineering, etc., require finding the roots of nonlinear equations of the form $f(x) = 0$, where $f$ is a real or complex function with some characteristics.

Frequently, there is no algorithm to obtain the exact solutions of these equations, and we need to approximate them by means of iterative processes. One of the most widely used iterative methods is Newton’s method. It has a second order of convergence, and its iterative expression is (see, for example [1,2])

$$x_{k+1} = x_k - \frac{f(x_k)}{f'(x_k)}, \quad k = 0, 1, 2, \ldots \tag{1}$$
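As a point of reference for the schemes discussed later, Newton’s iteration can be sketched in a few lines. This is only an illustrative sketch: the function name `newton`, the step-size stopping rule, and the default tolerance are assumptions made here, not the implementation used in the numerical tests.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton's iteration x_{k+1} = x_k - f(x_k) / f'(x_k).

    Stops when the size of the last step falls below `tol`
    (an assumed stopping rule, chosen for illustration).
    """
    x = x0
    for k in range(1, max_iter + 1):
        step = f(x) / df(x)
        x = x - step
        if abs(step) < tol:
            return x, k
    raise RuntimeError("Newton's method did not converge")


# Example: approximate sqrt(2) as the positive root of f(x) = x^2 - 2.
root, iters = newton(lambda x: x * x - 2, lambda x: 2 * x, 1.5)
print(root, iters)
```

The sketch makes the dependence on the initial estimate explicit: nothing in the loop protects against a poor choice of `x0`, which is the weakness the damped schemes below try to address.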

The convergence of Newton’s scheme depends on the initial estimate being “close enough” to the solution, but there is no guarantee that this assumption holds when modeling many real-world problems. In an attempt to address this problem, and to make the convergence domain wider, Ermakov and Kalitkin [3] proposed a damped version of Newton’s method with the general form

$$x_{k+1} = x_k - \gamma_k \frac{f(x_k)}{f'(x_k)}, \tag{2}$$

where $\gamma_k$ is a sequence of real numbers determined by a certain rule or algorithm. One of the forms that $\gamma_k$ can take is the Ermakov–Kalitkin coefficient

$$\gamma_k = \frac{|f(x_k)|^2}{|f(x_k)|^2 + \left|f\!\left(x_k - f(x_k)/f'(x_k)\right)\right|^2}. \tag{3}$$
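The damped iteration can be sketched as follows. The form of the damping coefficient used in the code is the scalar Ermakov–Kalitkin coefficient as it is usually quoted in the literature, and it is treated here as an assumption; the function name, tolerance, and test function are likewise illustrative choices.

```python
import math


def ermakov_kalitkin_newton(f, df, x0, tol=1e-12, max_iter=200):
    """Damped Newton iteration x_{k+1} = x_k - gamma_k * f(x_k) / f'(x_k).

    Assumed damping coefficient (scalar Ermakov-Kalitkin form):
        gamma_k = |f(x_k)|^2 / (|f(x_k)|^2 + |f(x_k - f(x_k)/f'(x_k))|^2)
    """
    x = x0
    for k in range(1, max_iter + 1):
        fx = f(x)
        if fx == 0:
            return x, k - 1          # already at a root
        newton_step = fx / df(x)
        f_trial = f(x - newton_step)  # residual after a full Newton step
        gamma = abs(fx) ** 2 / (abs(fx) ** 2 + abs(f_trial) ** 2)
        step = gamma * newton_step
        x = x - step
        if abs(step) < tol:
            return x, k
    raise RuntimeError("damped Newton iteration did not converge")


# f(x) = arctan(x) with x0 = 3: a full Newton step overshoots to about -9.49,
# so plain Newton moves away from the root x = 0; the damped step is shorter.
f = math.atan
df = lambda x: 1.0 / (1.0 + x * x)
root, iters = ermakov_kalitkin_newton(f, df, 3.0)
print(root, iters)
```

When the full Newton step would increase the residual, `gamma` drops well below 1 and shortens the step; close to the root the trial residual is negligible, `gamma` approaches 1, and the iteration behaves like Newton’s method.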

We can classify iterative methods [4] according to the data needed to determine the next value of the sequence of approximations to the root. The method is memoryless if it depends only on the value of the last iterate, not the previous ones:

$$x_{k+1} = \phi(x_k). \tag{4}$$

However, if the method has memory, then it is also a function of one or more of the iterates prior to the last one:

$$x_{k+1} = \phi(x_k, x_{k-1}, x_{k-2}, \ldots).$$

Iterative methods can also be classified [5,6] according to the number of steps in each iteration, as either one-step or multi-step methods. A one-step method has the form of Equation (4), while a multi-step method is described by:

$$y_k = \psi(x_k), \qquad x_{k+1} = \phi(x_k, y_k). \tag{5}$$

For the study of the different iterative methods, we use the order of convergence as a measure of the speed at which the sequence $\{x_k\}$ generated by the method converges to the root $\xi$. If

$$\lim_{k \to \infty} \frac{|x_{k+1} - \xi|}{|x_k - \xi|^p} = C,$$

where C and p are constants, then p represents the order of convergence.

If $p = 1$ and $0 < C < 1$, we have linear convergence, but if $p > 1$ and $C > 0$, the method has convergence of order p (quadratic, cubic, …).

An iterative method is said to be optimal if it achieves convergence order $2^{d-1}$ using d functional evaluations at each iteration. According to the Kung–Traub conjecture [7], the order of convergence of any multi-step method without memory cannot exceed $2^{d-1}$, where d is the number of functional evaluations per iteration. Therefore, the order $2^{d-1}$ is the optimal order.
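The optimality criterion is easy to check mechanically. The sketch below evaluates the Kung–Traub bound for a given number of functional evaluations and, as an additional standard measure not defined in this text, Ostrowski’s efficiency index $p^{1/d}$; the function names are illustrative.

```python
def kung_traub_bound(d: int) -> int:
    """Conjectured maximal order of a memoryless method using d evaluations."""
    return 2 ** (d - 1)


def is_optimal(order: int, d: int) -> bool:
    """True if a method of the given order attains the Kung-Traub bound."""
    return order == kung_traub_bound(d)


def efficiency_index(order: int, d: int) -> float:
    """Ostrowski's efficiency index p**(1/d) (assumed extra measure)."""
    return order ** (1.0 / d)


# Newton: order 2 with d = 2 evaluations (f and f').
# Two-step fourth-order schemes: order 4 with d = 3 evaluations.
# A third-order scheme with d = 3 evaluations is not optimal.
for name, order, d in [("Newton", 2, 2), ("fourth-order", 4, 3), ("third-order", 3, 3)]:
    print(name, is_optimal(order, d), round(efficiency_index(order, d), 4))
```

Under these definitions, raising the order from three to four without adding evaluations is exactly what moves a three-evaluation scheme onto the bound.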

When searching for iterative methods with a higher order of convergence, the resulting schemes generally require more functional evaluations and more steps, which leads to multi-step methods of the form described above. In the following, we present five iterative procedures, four of which are optimal fourth-order methods. We use them in the numerical tests of this research for comparison with some members of the KLAM family.

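We consider, for later comparison purposes, Newton’s scheme (an optimal second-order scheme), given by

$$x_{k+1} = x_k - \frac{f(x_k)}{f'(x_k)},$$

and Jarratt’s method, presented in [8], with convergence order four and iterative expression

$$y_k = x_k - \frac{2}{3}\,\frac{f(x_k)}{f'(x_k)}, \qquad x_{k+1} = x_k - \frac{1}{2}\,\frac{3f'(y_k) + f'(x_k)}{3f'(y_k) - f'(x_k)}\,\frac{f(x_k)}{f'(x_k)}. \tag{6}$$

The following schemes are two-step methods whose first step is Newton’s scheme, $y_k = x_k - f(x_k)/f'(x_k)$. We start with Ostrowski’s method [9], with order of convergence four, whose second step is

$$x_{k+1} = y_k - \frac{f(x_k)}{f(x_k) - 2f(y_k)}\,\frac{f(y_k)}{f'(x_k)}. \tag{7}$$

Moreover, King’s family of fourth-order methods [10] is given by

$$x_{k+1} = y_k - \frac{f(x_k) + \beta f(y_k)}{f(x_k) + (\beta - 2) f(y_k)}\,\frac{f(y_k)}{f'(x_k)}, \tag{8}$$

where $\beta \in \mathbb{R}$. For $\beta = 0$, Ostrowski’s scheme is obtained.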
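A minimal sketch of King’s family may help to see how the two steps share functional evaluations. It assumes the standard form of the family, with a Newton predictor and a corrector weighted by $(f(x_k) + \beta f(y_k)) / (f(x_k) + (\beta - 2) f(y_k))$; the function name, stopping rule, and test equation are illustrative choices.

```python
import math


def king(f, df, x0, beta=0.0, tol=1e-12, max_iter=50):
    """Two-step King iteration (assumed standard form); beta = 0 is Ostrowski.

    Each iteration uses three evaluations: f(x), f'(x), and f(y).
    """
    x = x0
    for k in range(1, max_iter + 1):
        fx = f(x)
        if fx == 0:
            return x, k - 1                  # exact root reached
        dfx = df(x)
        y = x - fx / dfx                     # Newton predictor
        fy = f(y)
        weight = (fx + beta * fy) / (fx + (beta - 2) * fy)
        x_new = y - weight * fy / dfx        # corrector reuses f'(x)
        if abs(x_new - x) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("King's method did not converge")


# Example: solve cos(x) - x = 0 with two members of the family.
f = lambda x: math.cos(x) - x
df = lambda x: -math.sin(x) - 1
root_ostrowski, it0 = king(f, df, 1.0, beta=0.0)
root_king1, it1 = king(f, df, 1.0, beta=1.0)
print(root_ostrowski, it0, root_king1, it1)
```

The corrector reuses `dfx` from the predictor, so the derivative is evaluated only once per iteration; this is what keeps the count at three evaluations for fourth order.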

We also consider the fourth-order Chun method, [11],

All these two-step schemes are optimal according to the Kung and Traub conjecture. For the numerical tests, in the final section, we denote these methods as N2, J4, Os4, K4, and Ch4, respectively.

In order to improve Newton’s method, Budzko et al. in [12] proposed a triparametric family of iterative methods with two steps, using a damped Newton method in the first step (as a predictor). The second step (corrector) resembles the Ermakov–Kalitkin scheme (2), (3). The resulting iterative procedure involves the evaluation of three functions at each iteration,

with and as complex parameters. Budzko et al. showed that, for a suitable choice of the remaining parameters, a one-parameter family of two-step iterative methods with a third order of convergence is obtained for values of the parameter other than 0 or 1. Through numerical tests, they showed that the numerical performance of this family was better, in several problems, than that of Newton’s method. We denote scheme (10) as the Budzko x-family or PM.

Cordero et al. in [13] carried out an in-depth study of the dynamics of the Budzko family and determined the convergence properties that give this method a stable dynamical behavior for parameter values close to 0. They called the accelerating factor of the second step the “Kalitkin-type factor”. This uniparametric family of methods improves on Newton’s method, both in order of convergence [12] and in providing much larger basins of attraction. They showed that, for small values of the parameter, the basins of attraction that did not correspond to the roots of the polynomial were indeed small [13].

Our contribution is a new biparametric family of two-step iterative methods, also based on the Ermakov–Kalitkin scheme (Section 2), that improves on the third order of Budzko’s family of methods and, hence, on Newton’s method, while keeping the same number of functional evaluations. We obtain a class of fourth-order optimal methods with low computational cost. The dynamical analysis of the proposed family, including the stability of the fixed points and the behavior of the critical points, is presented in Section 3. We devote Section 4 to the numerical tests and to comparing the proposed methods with other known ones. We finish the manuscript with some conclusions and the references.

2. Design and Analysis of the Methods

We want to increase the order of convergence of the iterative methods of the form given in (10) to the fourth order while maintaining the Kalitkin-type factor, and we achieve this, as we show, by finding a weight function introduced in the corrector step:

We include the weight function to increase the order of convergence of the method, without adding new functional evaluations.

Theorem 1.

Let be a simple zero of a function f of class such that in a convex set D, and let be an initial approximation close enough to ξ. The biparametric family defined by (11) has a fourth order of convergence whenever for every weight function sufficiently differentiable, satisfying , and Moreover, the error equation is

where for and

Proof.

By using Taylor’s series developments around , we have the expressions for and , respectively,

Taylor’s series of around gives

and combining these equations, we obtain the expressions of and , respectively:

where , and

Given that tends to zero when tends to , we develop in Taylor series around zero,

Finally, if we require , and , then we cancel the coefficients of , and in expression (16), and we obtain the error equation of the methods

☐

Taking into account the convergence conditions of Theorem 1, by means of some algebraic manipulations and using as weight function the cubic Taylor polynomial, we obtain the iterative expression of the proposed method (11)

where is Newton’s scheme, and . This expression defines a family of two-parameter methods, and d, which we call Ermakov’s Hyperfamily (EH4).

Taking in (18), we obtain a uniparametric family

which we call the KLAM family.

This family includes some known schemes as particular cases:

- (i)

- (ii)

- (iii)

3. Dynamical Behavior of the KLAM Family

Complex dynamics has become, in recent years, a very useful tool to deepen the knowledge of the rational functions obtained by applying iterative processes to low-degree polynomials. This knowledge gives us important information about the stability of the iterative method.

The tools of complex dynamics are applied to the rational function that results from applying an iterative scheme to a quadratic polynomial. When a parametric class of iterative algorithms is applied to that polynomial, a parametric rational function is obtained. This analysis allows us to choose the members of the class with good stability properties and avoid the elements with chaotic behavior.

We recall some concepts of complex dynamics. Extended information can be found in [14].

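Let $R: \hat{\mathbb{C}} \to \hat{\mathbb{C}}$ be a rational function, where $\hat{\mathbb{C}}$ is the Riemann sphere. The orbit of a point $z_0 \in \hat{\mathbb{C}}$ is the set of successive images of $z_0$ by the rational function,

$$\{z_0, R(z_0), R^2(z_0), \ldots, R^n(z_0), \ldots\}.$$

A point $z_0$ is a fixed point of $R$ if $R(z_0) = z_0$, and it is classified as an attractor, a repulsor, or neutral (parabolic) if $|R'(z_0)| < 1$, $|R'(z_0)| > 1$, or $|R'(z_0)| = 1$, respectively. When $R'(z_0) = 0$, it is a superattractor. On the other hand, a point $z_0$ is a periodic point of period $p > 1$ if $R^p(z_0) = z_0$ and $R^k(z_0) \neq z_0$ for $k < p$. Finally, $z_0$ is a critical point of R if $R'(z_0) = 0$.

The basin of attraction of an attracting fixed (or periodic) point $z^*$ is formed by the set of its pre-images of any order; that is,

$$\mathcal{A}(z^*) = \{z_0 \in \hat{\mathbb{C}} : R^n(z_0) \to z^*, \ n \to \infty\}.$$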

The Fatou set is formed by those points whose orbits tend to an attractor. The Julia set is the complementary set in the Riemann sphere of the Fatou set.
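The classification of fixed points by the modulus of the derivative can be sketched directly. The example below uses Newton’s operator on $p(z) = z^2 - 1$ as a stand-in rational function, since it has a simple closed form; the helper names and the numerical threshold `eps` are assumptions made for illustration.

```python
def orbit(R, z0, n):
    """Return the first n + 1 points of the orbit [z0, R(z0), ..., R^n(z0)]."""
    points = [z0]
    for _ in range(n):
        points.append(R(points[-1]))
    return points


def classify_fixed_point(dR, z, eps=1e-9):
    """Classify a fixed point z of R from |R'(z)|; eps is a numerical threshold."""
    m = abs(dR(z))
    if m < eps:
        return "superattractor"
    if m < 1 - eps:
        return "attractor"
    if abs(m - 1) <= eps:
        return "parabolic"
    return "repulsor"


# Newton's operator on p(z) = z^2 - 1 and its derivative.
R = lambda z: (z * z + 1) / (2 * z)
dR = lambda z: (z * z - 1) / (2 * z * z)

print(classify_fixed_point(dR, 1))             # the root z = 1
print(classify_fixed_point(lambda z: 2 * z, 1))  # fixed point z = 1 of R(z) = z^2
print(orbit(R, 2.0, 5)[-1])                     # orbit of z0 = 2 approaches the root 1
```

The roots of the polynomial appear as superattracting fixed points of the operator, which is the behavior expected of any method with order of convergence at least two.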

Theorem 2.

(Julia and Fatou [15,16]) Let R be a rational function. The basin of attraction of a periodic (or fixed) attractor point contains at least one critical point.

This last result has important consequences: by locating the critical points and calculating their orbits, we determine whether there are basins of attraction other than those of the roots of the polynomial. It is therefore useful to take each critical point as the initial estimate of the iterative method and to observe whether its orbit tends to a root of the polynomial or to another attracting element.

On the other hand, the scaling theorem allows us to extend the stability properties obtained for to any quadratic polynomial.

3.1. Rational Operator and Conjugacy Classes

We prove that the rational operator associated with the KLAM family, presented in (19), on , satisfies the scaling theorem.

Theorem 3.

(Scaling theorem of the KLAM family) Let be an analytic function, and let with , be an affine application. Let with Let be the fixed point operator of the KLAM method on Then,

that is, and are affine conjugate by A.

Proof.

Let be the fixed point operator of the KLAM family of methods, presented in (19), on a function that is

where

First, let us determine ,

Now,

As , then

So, it is concluded that the scaling theorem is satisfied by the KLAM family, presented in (19). ☐
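The affine conjugacy stated in a scaling theorem can be checked numerically. The sketch below does so for Newton’s operator rather than for the KLAM operator, whose expression is not reproduced here; the cubic test function, the affine map coefficients, and the sample point are arbitrary illustrative choices.

```python
def newton_operator(f, df):
    """Fixed point operator of Newton's method on f."""
    return lambda z: z - f(z) / df(z)


# Affine map A(z) = a z + b with a != 0, and its inverse.
a, b = 2 + 1j, -0.5 + 0.3j
A = lambda z: a * z + b
A_inv = lambda z: (z - b) / a

# f analytic, g = f o A, with g'(z) = a f'(A(z)) by the chain rule.
f = lambda z: z ** 3 - 1
df = lambda z: 3 * z ** 2
g = lambda z: f(A(z))
dg = lambda z: a * df(A(z))

N_f = newton_operator(f, df)
N_g = newton_operator(g, dg)

z = 0.7 - 0.4j
lhs = A(N_g(A_inv(z)))   # (A o N_g o A^{-1})(z)
rhs = N_f(z)             # N_f(z)
print(abs(lhs - rhs))
```

The two values agree up to rounding, which is what conjugacy by A means in practice: the dynamics on f and on g differ only by the affine change of variable.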

Now, we analyze the dynamical behavior of the fourth-order parametric family (19), studying the rational operator obtained to apply the family on the quadratic polynomial . This operator depends on the roots of and parameter ,

where

and

In order to eliminate the dependence of and , we apply the Möbius transformation

whose inverse is . So,

The set of values of the parameter that reduces the expression of operator is

We analyze the dynamics of this operator.
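The effect of a Möbius conjugation can be illustrated on the simplest case. The sketch assumes the map $h(z) = (z - a)/(z - b)$, a common choice that sends the roots a and b to 0 and ∞, and applies it to Newton’s operator on $(z - a)(z - b)$, for which the conjugated operator is known to be $z^2$; it is not the KLAM operator, and the values of a, b, and the sample point are arbitrary.

```python
# Roots of the quadratic polynomial p(z) = (z - a)(z - b).
a, b = 1 + 2j, -0.5j

p = lambda z: (z - a) * (z - b)
dp = lambda z: 2 * z - a - b
N = lambda z: z - p(z) / dp(z)           # Newton's operator on p

h = lambda z: (z - a) / (z - b)          # assumed Mobius map: a -> 0, b -> infinity
h_inv = lambda w: (w * b - a) / (w - 1)  # inverse of h

# Conjugated operator O(w) = (h o N o h^{-1})(w); it no longer depends on a, b.
O = lambda w: h(N(h_inv(w)))

w = 0.3 + 0.8j
print(O(w), w ** 2)
```

After conjugation, the roots sit at 0 and ∞ for every quadratic polynomial, so the remaining dependence of a parametric operator is on the family parameter alone.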

3.2. Stability Analysis of the Fixed Points

Both the number of fixed points and their stability depend on the parameter . We need to determine an expression for the differential operator, for analyzing the stability of the fixed points and to determine the critical points.

It is not difficult to verify that both $z = 0$ and $z = \infty$ are superattracting fixed points, since they come from the roots of the polynomial. However, the stability of the other fixed points depends on the value of $\lambda$.

Proposition 1.

The fixed points of rational operator are the roots of equation . Then, for each value of λ, it has the next fixed points:

- (a)

- and are superattractor fixed points for any value of λ.

- (b)

- is a fixed point, if and only if .

- (c)

- The strange fixed points, ex1 ex2, ex3, and ex4 are where .

- In the following result, we analyze the stability of .

Theorem 4.

The character of the strange fixed point , where , is as follows:

- (i)

- is an attractor, if and only if It can be a superattractor for .

- (ii)

- is a parabolic point in the circumference .

- (iii)

- is a repulsor, if and only if .

Proof.

First let us apply the operator in (3.2) on ,

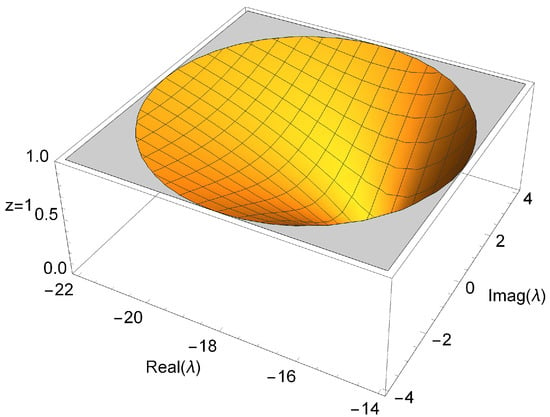

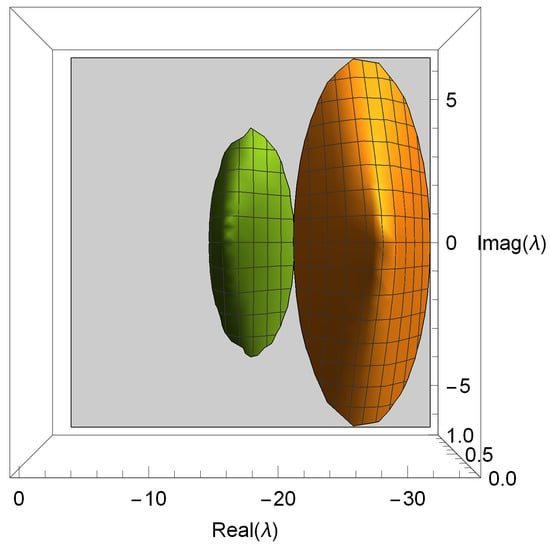

Let us analyze , taking and after some algebraic manipulations, we have as shown in Figure 1. Then, the fixed point is an attractor, if and only if

Figure 1.

Stability of the fixed point .

Analogously, the rest of the statements of the theorem are satisfied. ☐

Now, we study the stability of the strange fixed points , , , and .

Theorem 5.

The character of the strange fixed points and is as follows:

- If, then and are attractors. They are superattractors for .

- When , and are parabolic points.

- If , then and are repulsors.

Proof.

First, let us consider the stability function on strange fixed points and :

where and .

Let us make and after some algebraic manipulations, we have

if and only if

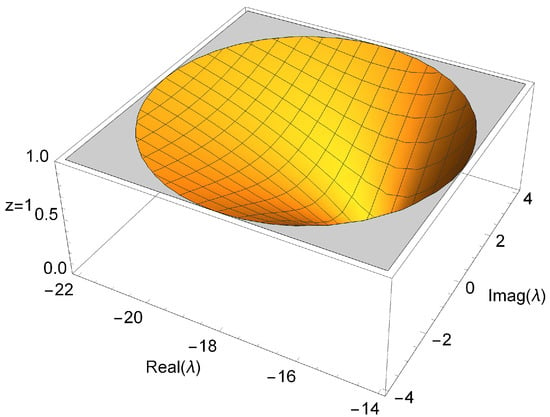

as shown in Figure 2. Therefore, are attractors, if and only if

Figure 2.

Stability of the fixed points and .

Analogously, the rest of the statements of the theorem are satisfied. ☐

Theorem 6.

The character of the strange fixed points and is as follows:

- (a)

- If , then and are attractors. They are superattractors for .

- (b)

- In the circumference , or for , and are parabolic points.

- (c)

- If , then and are repulsors.

Proof.

The stability function on strange fixed points and is

where

Let us make and after some algebraic manipulations, we have

if and only if

as shown in Figure 2. Therefore, are attractors, if and only if

Analogously, the rest of the theorem is proved. ☐

The strange fixed points for the values of the parameter that reduce the operator are the following:

- (a)

- (b)

- (c)

- (d)

- (e)

- (f)

3.3. Analysis of Critical Points

From the definition of the critical point, given in Section 3.1, it is easy to prove that and are critical points, for all values of the parameter. In addition, there are other critical points that we call “free”.

Theorem 7.

The rational operator has the following free critical points:

- (i)

- , if

- (ii)

- (iii)

where

The free critical points in the values reducing the operator are for for none for for for and for

Let us note that if , then the only fixed points are the roots of the polynomial, without free critical points. We can observe that it is among the best values that we can assign to the parameter, because it reduces the rational operator to So, with this value our method has the most stable behavior.

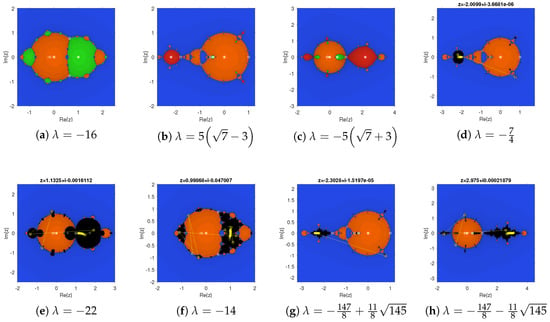

3.4. Parameter Planes of Critical Points

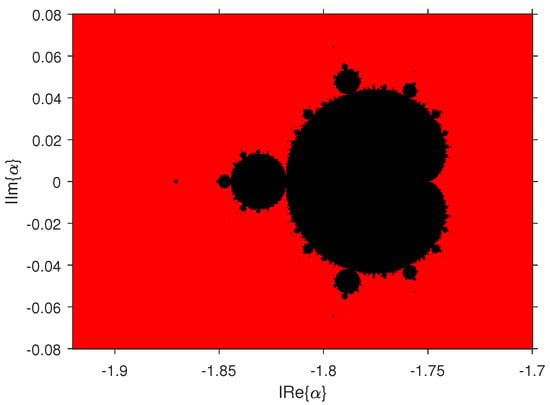

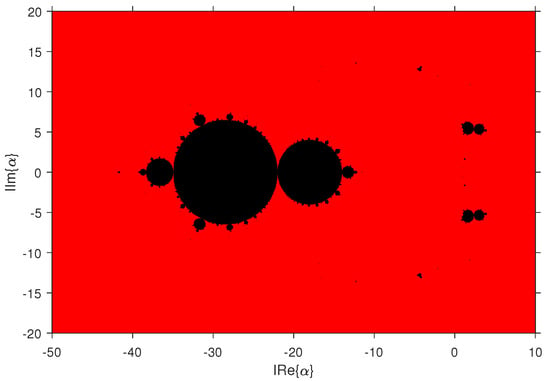

Chicharro et al. in [14] contributed to the generalized study of dynamical planes and parameter planes of families of iterative methods. We conduct a similar study for the KLAM family (19).

There is one parameter plane for each independent free critical point. It is obtained by iterating the method with each free critical point as the initial estimate. We first define a mesh of complex values of the parameter and then consider each of its nodes as a member of the family of iterative methods. When iterating that method with the free critical point as the initial estimate, we paint the node red if the critical point converges to 0 or infinity, and black otherwise. We use a mesh of points in the desired domain; the maximum number of iterations is 80, with tolerance .

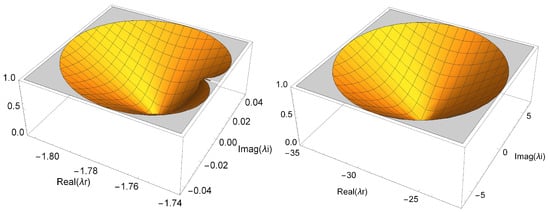

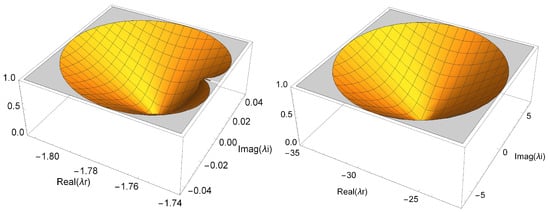

Let us now examine the two large regions that complete the study of the instability of the KLAM family of methods, based on the attracting character of the fixed points, shown in Figure 3. The region where the strange fixed points ex1 and ex2 are attractors is very small and is not visible in this graph, even if we restrict the view to the interval that contains it.

Figure 3.

Stability of the fixed points , and .

Some of the regions in Figure 3 where the strange fixed points are attractors coincide with the regions in Figure 4 and Figure 5 where the iteration does not lead to the solution.

Figure 4.

Parameter plane critical points and .

Figure 5.

Parameter plane critical points and .

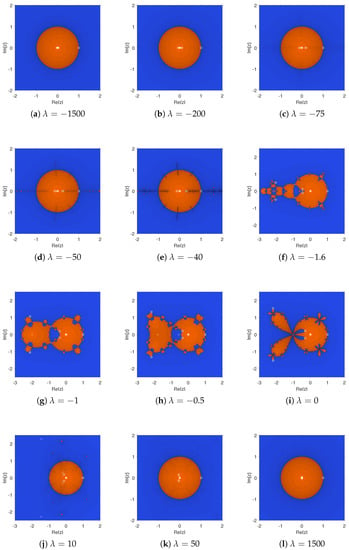

3.5. Dynamical Planes

To draw the dynamical planes, as in Section 3.4 on parameter planes, we base our work on the contributions of Chicharro et al. in [14], applying the study to the KLAM family.

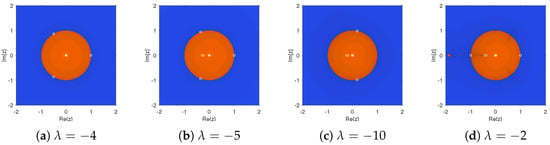

To generate the dynamical planes, we proceed in a similar way as for the parameter planes. In this case, parameter is constant, then the dynamical plane is related to a specific element of KLAM family. Each point of the plane is considered as an initial point in the iterative scheme, and different colors are used, depending on its convergence point.

To obtain the dynamical planes, we used a mesh of points of the complex plane, and a maximum of 200 iterations. We chose the parameter values shown in red in the parameter planes of Figure 4 and Figure 5. We represent the superattractor fixed points with asterisks, the fixed points with circles, and the critical points with squares.
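The construction of a dynamical plane can be sketched with a coarse text rendering. The example uses Ostrowski’s method, which the conclusions identify as a member of the KLAM family, applied to $p(z) = z^2 - 1$; the operator is written from the standard form of Ostrowski’s scheme, and the mesh size, tolerance, and plotting symbols are illustrative assumptions rather than the settings used for the figures.

```python
def ostrowski_operator(z):
    """One Ostrowski step on p(z) = z^2 - 1 (assumed standard form of the scheme)."""
    fz = z * z - 1
    dfz = 2 * z
    y = z - fz / dfz
    fy = y * y - 1
    return y - fy / dfz * fz / (fz - 2 * fy)


def basin_index(z0, roots=(1, -1), tol=1e-3, max_iter=200):
    """Index of the root the orbit of z0 reaches, or -1 if none is reached."""
    z = z0
    for _ in range(max_iter):
        for i, r in enumerate(roots):
            if abs(z - r) < tol:
                return i
        try:
            z = ostrowski_operator(z)
        except (ZeroDivisionError, OverflowError):
            return -1
    return -1


def dynamical_plane(re_min, re_max, im_min, im_max, n):
    """Text rendering on an n x n mesh: '+' -> root 1, '-' -> root -1, '.' -> neither."""
    symbols = {0: "+", 1: "-", -1: "."}
    rows = []
    for j in range(n):
        im = im_max - j * (im_max - im_min) / (n - 1)
        row = ""
        for i in range(n):
            re = re_min + i * (re_max - re_min) / (n - 1)
            row += symbols[basin_index(complex(re, im))]
        rows.append(row)
    return rows


plane = dynamical_plane(-2.0, 2.0, -2.0, 2.0, 21)
print("\n".join(plane))
```

For this member of the family only two basins appear, separated by the imaginary axis; a finer mesh and a color per basin would give a picture of the kind described in the text.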

We took some parameter values that simplify the operator, as indicated in Section 3.2, among others −4, −5, −10, and −2. These were the best choices for the parameter. The corresponding dynamical planes are shown in Figure 6.

Figure 6.

Parameter values with stable behavior.

According to Theorems 4–6 about the strange fixed points, we know the values of the parameter for which they are attracting, repulsive, parabolic, or superattracting points. We present the dynamical planes for some values for which all strange fixed points are repulsors, and we obtain the planes in Figure 7. Only two basins appear, corresponding to the superattracting fixed points $z = 0$ and $z = \infty$. It can be seen that the larger the absolute value of the parameter, the more the planes tend to a single shape.

Figure 7.

Good choices for parameter .

The closer we moved to zero, the wider the basin of , until it became compact at , where we had only two critical points, and while the strange fixed points were , and We recall that with this value of our scheme corresponded to Chun’s method.

Figure 8 corresponds to values of the parameter where the strange fixed points were neutral or superattractors. In all these cases, there were three or four basins of attraction.

Figure 8.

Some poor parameter value choices.

4. Computational Implementation

We compared three of the most stable elements of the KLAM family, specifically for , and three of the more unstable methods of the family, as indicated in Theorems 4–6, where which we call KLAM5, KLAM10, KLAM2, KLAM22, KLAM16, KLAM73, respectively; with the methods of Newton, Jarratt, (6), Ostrowski, (7), King (), (8), and Chun, (9). These last four schemes are optimal methods of order four. We also compared our methods with the third-order scheme PM (10).

We used MATLAB R2020a for each of the tests, on a computer with the following specifications: Intel(R) Core(TM) i3-7100U CPU @2.40 GHz, 16 GB RAM.

The input parameters required for the programs of the iterative methods were the nonlinear function, the initial estimate previously deduced (approximated graphically), a tolerance of , and a maximum number of iterations. We worked in variable-precision arithmetic with a 2000-digit mantissa and used as the stopping criterion . We provided as output the approximate computational order of convergence (ACOC), the error estimates , the total error , the number of iterations “iter”, the approximate solution, and the time elapsed in seconds.

The nonlinear equations that we solved numerically were

- .

- .

- (Sphere floating in water) A sphere of density and radius r was partially submerged in water to a depth x, we calculated this depth:considering the density of water was the radius of the sphere was and the wooden sphere of density was

- (Compression of a real spring) An object of mass m was dropped from a height h onto a real spring, whose elastic force was , where x was the compression of the spring; we calculated the maximum compression of the spring:considering that the gravity was ; the proportionality constants were ; the mass of the object was ; and the height was
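For the floating sphere, the nonlinear equation for the depth follows from Archimedes’ principle. The derivation below is a sketch under assumed notation ($\rho_s$ for the density of the sphere and $\rho_w$ for the density of water); the equation actually solved in the tests may be normalized differently.

```latex
% Assumed notation: \rho_s sphere density, \rho_w water density,
% r radius of the sphere, x submerged depth.
\begin{align*}
V_{\text{cap}}(x) &= \frac{\pi x^{2}(3r - x)}{3}
  && \text{(volume of the submerged spherical cap)} \\
\rho_s \, \frac{4}{3}\pi r^{3} &= \rho_w \, \frac{\pi x^{2}(3r - x)}{3}
  && \text{(weight of the sphere = weight of displaced water)} \\
\rho_w x^{3} - 3 r \rho_w x^{2} + 4 \rho_s r^{3} &= 0
  && \text{(equation for } x \text{, with } 0 < x < 2r\text{)}
\end{align*}
```

The cubic has a single root in the physically meaningful interval $0 < x < 2r$ whenever $\rho_s < \rho_w$, which is the root an iterative method should be started near.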

By plotting the nonlinear functions whose zeros we sought, we obtained an initial estimate for the iterative process in each case.

Some numerical test results for various iterative schemes are presented in Table 1, Table 2, Table 3 and Table 4, where and

Table 1.

.

Table 2.

.

Table 3.

.

Table 4.

.

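The approximate computational order of convergence (ACOC) is defined as

$$p \approx \mathrm{ACOC} = \frac{\ln\left(|x_{k+1} - x_k| \,/\, |x_k - x_{k-1}|\right)}{\ln\left(|x_k - x_{k-1}| \,/\, |x_{k-1} - x_{k-2}|\right)}.$$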
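The ACOC can be estimated from the last four iterates of any scheme. The sketch below uses the standard difference-based formula and Python’s `decimal` module with 2000 significant digits to mimic variable-precision arithmetic; Ostrowski’s method on $f(x) = x^2 - 2$ serves as an illustrative test case and is not one of the test problems of this section.

```python
from decimal import Decimal, getcontext

getcontext().prec = 2000  # 2000 significant digits (assumed analogue of the mantissa)


def ostrowski_iterates(f, df, x0, n_iter):
    """Return [x0, x1, ..., x_n] for Ostrowski's two-step scheme (standard form)."""
    xs = [x0]
    x = x0
    for _ in range(n_iter):
        fx, dfx = f(x), df(x)
        y = x - fx / dfx                         # Newton predictor
        fy = f(y)
        x = y - fy / dfx * fx / (fx - 2 * fy)    # Ostrowski corrector
        xs.append(x)
    return xs


def acoc(xs):
    """ACOC from the last four iterates, using differences of consecutive iterates."""
    d1 = abs(xs[-1] - xs[-2])
    d2 = abs(xs[-2] - xs[-3])
    d3 = abs(xs[-3] - xs[-4])
    return (d1 / d2).ln() / (d2 / d3).ln()


f = lambda x: x * x - 2
df = lambda x: 2 * x
xs = ostrowski_iterates(f, df, Decimal("1.5"), 5)
order = acoc(xs)
print(float(order))
```

High precision matters here: a fourth-order method exhausts double precision in two or three iterations, leaving too few meaningful differences to estimate the order.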

We obtained the results detailed below.

- Case 1: , . KLAM5 performed best, requiring only four iterations. All the unstable representatives diverged. The more stable ones behaved similarly to, or even better than, the classical ones, as shown in Table 1.

- Case 2: . This function had steep rises and falls. For all methods, only initial estimates close to the solution converged. The best performance was that of KLAM5, with three iterations. All the other methods, even the unstable ones, converged and required four iterations, as did the classical ones, except N2, which needed six, as shown in Table 2.

- Case 3: . KLAM5 performed best, requiring only three iterations. All others needed four, including the most unstable members of the KLAM family. KLAM2 was better than the others, including the classical ones. KLAM10 was behind Os4, but it performed like J4, as shown in Table 3.

- Case 4: For our last function, with real-world applications, KLAM5, KLAM2, and KLAM16 (an unstable member) had the best performance, with fewer iterations than all the others: four. The other two unstable representatives behaved like the classical methods in this problem, as shown in Table 4.

5. Conclusions

The biparametric Ermakov Hyperfamily, presented in this paper, improves on the uniparametric third-order Budzko family, which was itself a significant improvement over Newton’s method, since it has wider regions of convergence and a higher order. Our new family provides fourth-order optimal methods. This implies that many applied problems will be able to use our method, which is simple in its scheme but more powerful than Newton’s scheme.

This new family generalizes some important classical schemes, such as King’s family and Chun’s method, of proven application and reference for fourth-order methods. A new class, as a particular case of the Ermakov Hyperfamily, is the uniparametric KLAM family, also presented in this paper, whose dynamical behavior we have analyzed. It is a class of very stable iterative schemes for any value of the parameters, except in a few cases where a black region appears in the parameter plane, which is minuscule, compared to the stable region.

We note that Ostrowski’s method is a particular case, with , as is Chun’s, with . The method performs very well with other values of the parameter , outperforming in many cases these and the other classical methods in numerical tests. The best-performing representative in all the numerical tests was KLAM5, corresponding to , whose order of convergence on quadratic problems, according to the dynamical study, is higher than four.

The Ermakov Hyperfamily, with its subfamily KLAM, constitutes a significant contribution to the scientific community. We consider that we have a very stable family that can be extended to systems. If it behaves with similar stability in the vector case, we have a family that could be a reference for fourth-order methods in the multidimensional case.

Author Contributions

Conceptualization, A.C.; methodology, R.V.R.-H.; software, M.P.V.; validation, J.R.T.; formal analysis, A.C.; investigation, R.V.R.-H.; writing—original draft preparation, R.V.R.-H. and M.P.V.; writing—review and editing, A.C. and J.R.T.; supervision, J.R.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

2. Traub, J.F. Iterative Methods for the Solution of Equations; Chelsea Publishing Company: New York, NY, USA, 1982.

3. Ermakov, V.V.; Kalitkin, N.N. The optimal step and regularization for Newton’s method. USSR Comput. Math. Math. Phys. 1981, 21, 235–242.

4. Chicharro, F. Análisis dinámico y aplicaciones de métodos iterativos de resolución de ecuaciones no lineales. Ph.D. Thesis, Universitat Politècnica de València, Valencia, Spain, 2017. Available online: https://riunet.upv.es/bitstream/handle/10251/83582/Chicharro (accessed on 1 March 2023).

5. Amorós, C. Estudio Sobre Convergencia y Dinámica de los Métodos de Newton, Stirling y Alto Orden. Ph.D. Thesis, Universidad Internacional de la Rioja, Madrid, Spain, 2020. Available online: https://reunir.unir.net/bitstream/handle/123456789/10259/TesisCristinaAmorosCanet.pdf?sequence=3 (accessed on 1 March 2023).

6. Artidiello, S. Diseño, Implementación y Convergencia de Métodos Iterativos para Resolver Ecuaciones y Sistemas No Lineales Utilizando Funciones Peso. Ph.D. Thesis, Universitat Politècnica de València, Valencia, Spain, 2014. Available online: https://riunet.upv.es/bitstream/handle/10251/44230/ARTIDIELLO (accessed on 1 March 2023).

7. Kung, H.T.; Traub, J.F. Optimal order of one-point and multi-point iteration. J. ACM 1974, 21, 643–651.

8. Jarratt, P. Some fourth order multipoint iterative methods for solving equations. Math. Comput. 1966, 20, 434–437.

9. Ostrowski, A.M. Solutions of Equations and Systems of Equations; Academic Press: Cambridge, MA, USA, 1966.

10. King, R. A family of fourth order methods for nonlinear equations. SIAM J. Numer. Anal. 1973, 10, 876–879.

11. Chun, C.; Lee, M.Y.; Neta, B.; Dzunic, J. On optimal fourth-order iterative methods free from second derivative and their dynamics. Appl. Math. Comput. 2012, 218, 6427–6438.

12. Budzko, D.A.; Cordero, A.; Torregrosa, J.R. A new family of iterative methods widening areas of convergence. Appl. Math. Comput. 2015, 252, 405–417.

13. Cordero, A.; Torregrosa, J.R.; Vindel, P. Dynamical Analysis to Explain the Numerical Anomalies in the Family of Ermakov-Kalitkin Type Methods. Math. Model. Anal. 2019, 24, 335–350.

14. Chicharro, F.; Cordero, A.; Torregrosa, J.R. Drawing dynamical and parameters planes of iterative families and methods. Sci. World J. 2013, 2013, 780153.

15. Julia, G. Mémoire sur l’itération des fonctions rationnelles. J. Math. Pures Appl. 1918, 8, 47–245.

16. Fatou, P. Sur les équations fonctionnelles. Bull. Soc. Math. Fr. 1919, 47, 161–271.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).