The Fractal Tapestry of Life: III Multifractals Entail the Fractional Calculus

Abstract

1. Introduction

…it has occurred to a number of the more philosophically attuned contemporary scientists that we are now at another point of transition, where the implications of complexity, memory, and uncertainty have revealed themselves to be barriers to our future understanding of our technological society. The fractional calculus (FC) has emerged from the shadows as a way of taming these three disrupters with a methodology capable of analytically smoothing their singular natures.

2. Fractality

2.1. Fractal Time Series

2.2. Multifractal Time Series

2.2.1. Ergodicity Breaking

2.2.2. Information Transfer

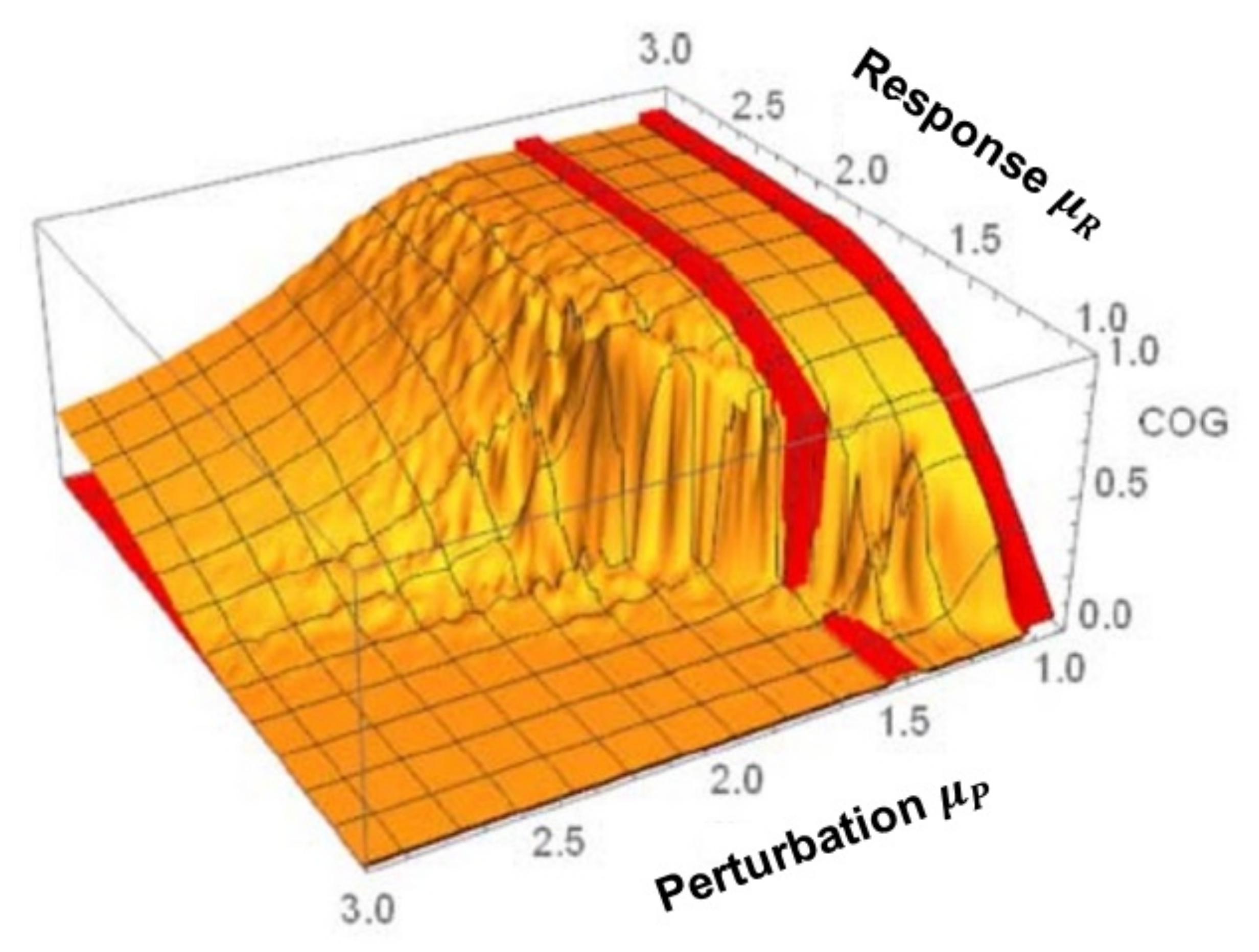

3. Cross-Correlation Cube

- (1) A complex network belonging to region 2 cannot exert any asymptotic influence on a complex network belonging to region 1. This is the square denoted II on the CCC and is where LRT supposedly died.

- (2) A complex network belonging to region 2 exerts varying degrees of influence on a complex network belonging to region 2. This follows from PCM and is indicated by IV on the CCC.

- (3) A complex network belonging to region 1 exerts varying degrees of influence on a complex network belonging to region 1. This follows from PCM and is indicated by I on the CCC.

- (4)

- (5) When the two IPL indices are equal to 2, there is an abrupt jump up from zero (square II) to one (square III), or down from one to zero, depending on the values of the IPL indices just before they converge on 2. This is a singular point where the spectra of the two networks display exact 1/f-noise fluctuations.

4. Fractional Calculus

We are all deeply conscious today that the enthusiasm of our forebears for the marvelous achievements of Newtonian mechanics led them to make generalizations in this area of predictability which, indeed, we may have generally tended to believe before 1960, but which we now recognize were false. We collectively wish to apologize for having misled the general educated public by spreading ideas about determinism of systems satisfying Newton’s laws of motion that, after 1960, were to be proved incorrect…

4.1. Nexus with Multifractality

4.1.1. Fractional Linear Langevin Equation (FLLE)

4.1.2. Stochastic Fractional Index

5. Fractional Probability Calculus

5.1. Fractal Diffusion

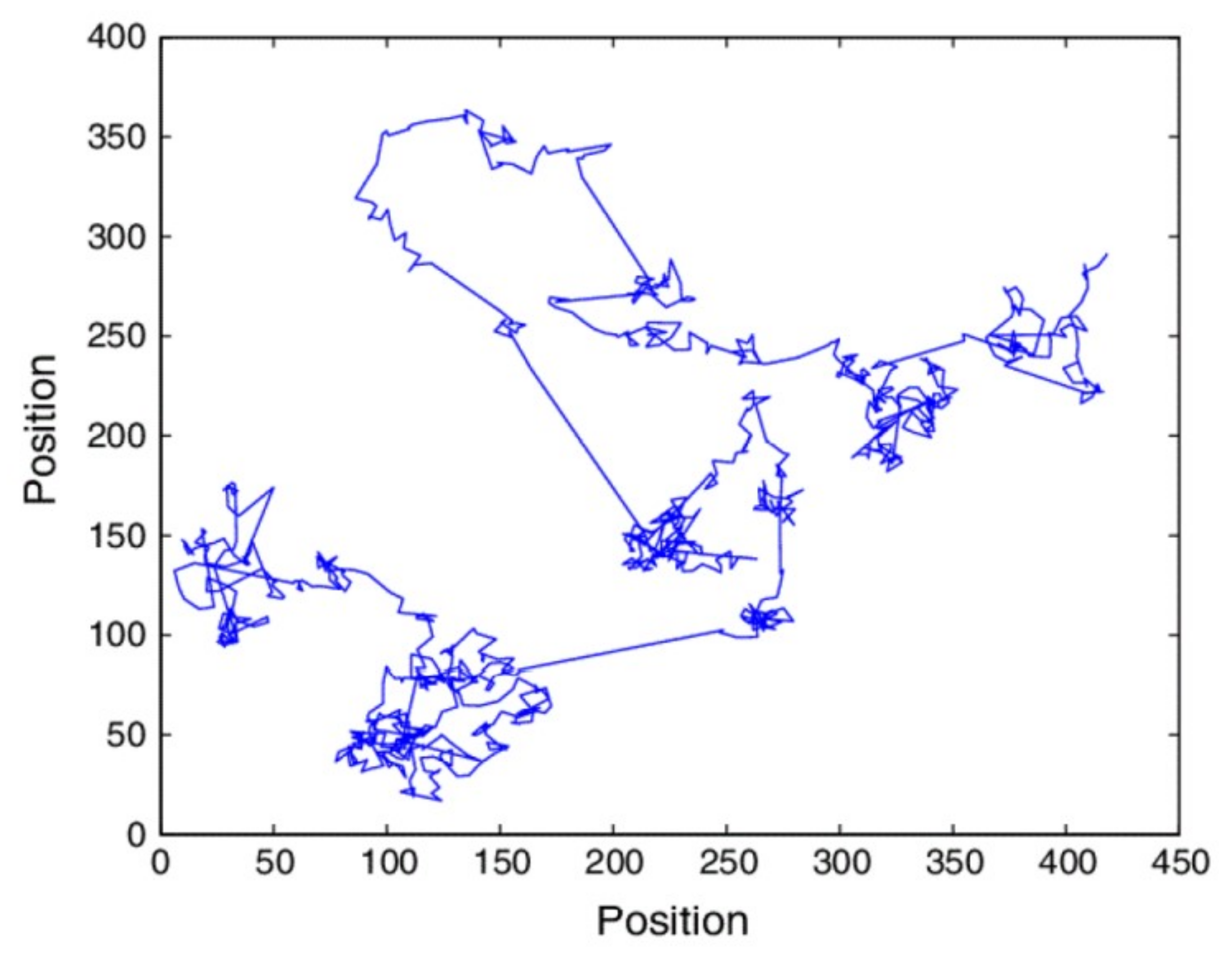

5.2. Fractal Random Walks

6. Discussion and Conclusions

- (1).

- The simple analytic functions of the IC have been found to be insufficient to describe the time dependence of most physiology networks. The notion of fractality was introduced to capture the true complexity of such biomedical network time series through fractal geometry, fractal statistics and fractal dynamics.

- (2).

- A fractal function diverges when an integer-order derivative is taken, so that such a fractal function cannot be the solution to a Newtonian equation of motion. However, when a fractional-order derivative of a fractal function is taken, it results in a new fractal function. Consequently, a time-dependent fractal process can have an equation of motion that is a FDE.

- (3).

- The network effect is the influence exerted by a complex dynamic network on each member of the network. When the network dynamics is a member of the Ising universality class, the interconnected set of IDEs for the probability of an individual being in one of two states during its non-linear interaction with the other members of the network can be replaced by an equivalent linear FDE and solved using the FC.

- (4).

- Even the simplest FDEs has a built-in memory resulting from the hidden interaction of the observable with its environment, which is manifest in the non-integer order of the time derivative, as in the network effect.

- (5).

- The solution to a linear FRE is a MLF for and becomes an exponential function for . The MLF is the workhorse of the FC just as the exponential is for the IC.

- (6).

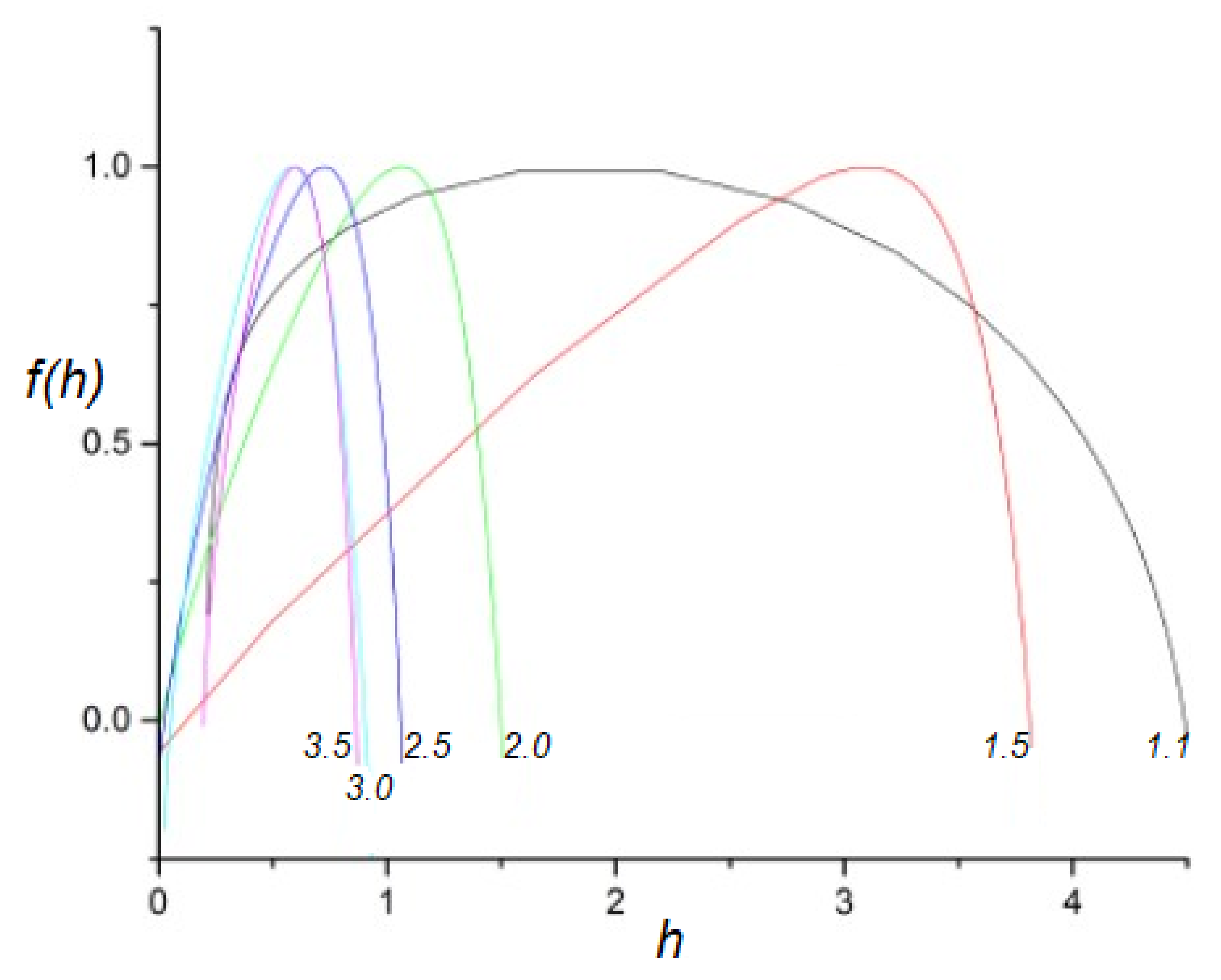

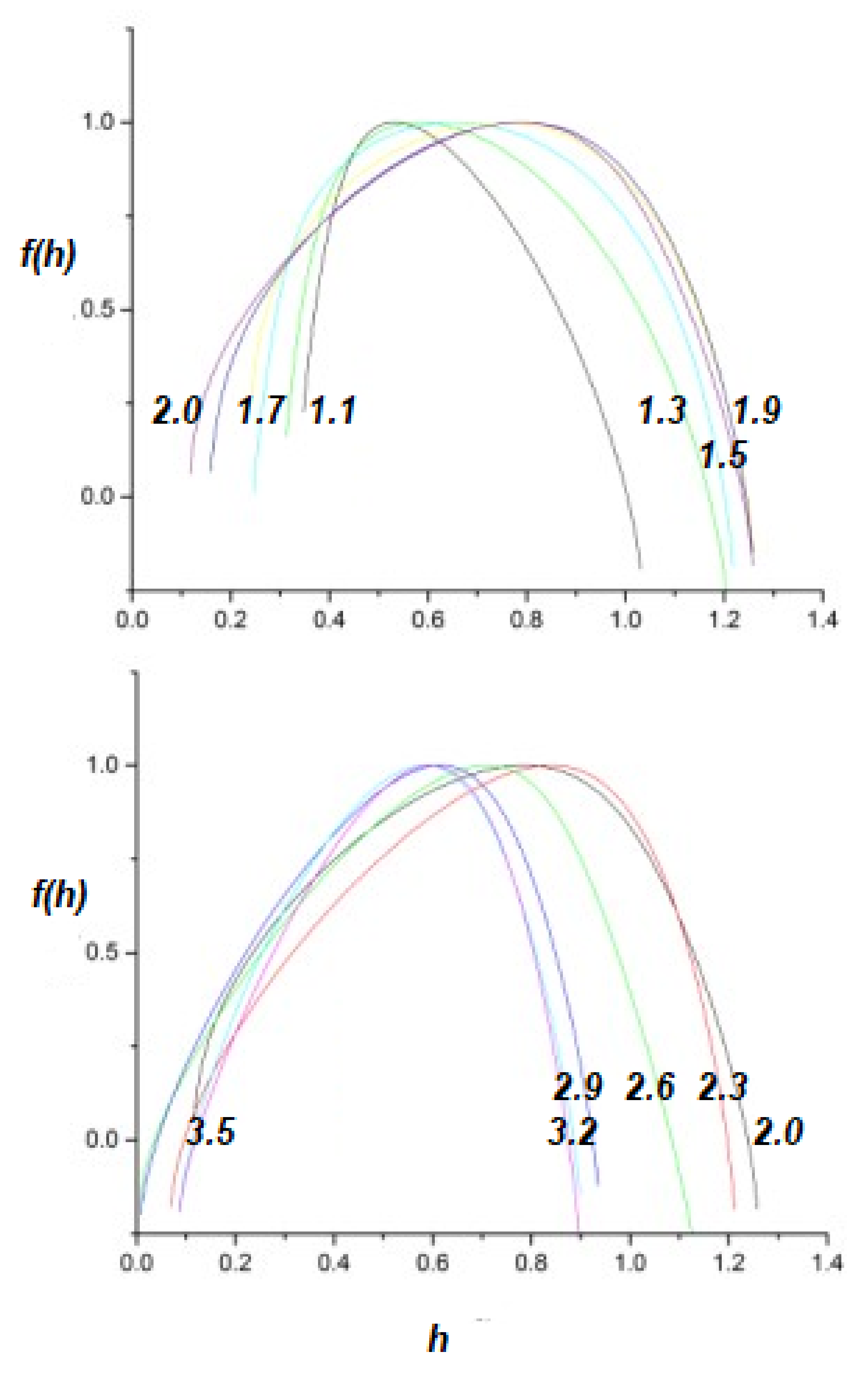

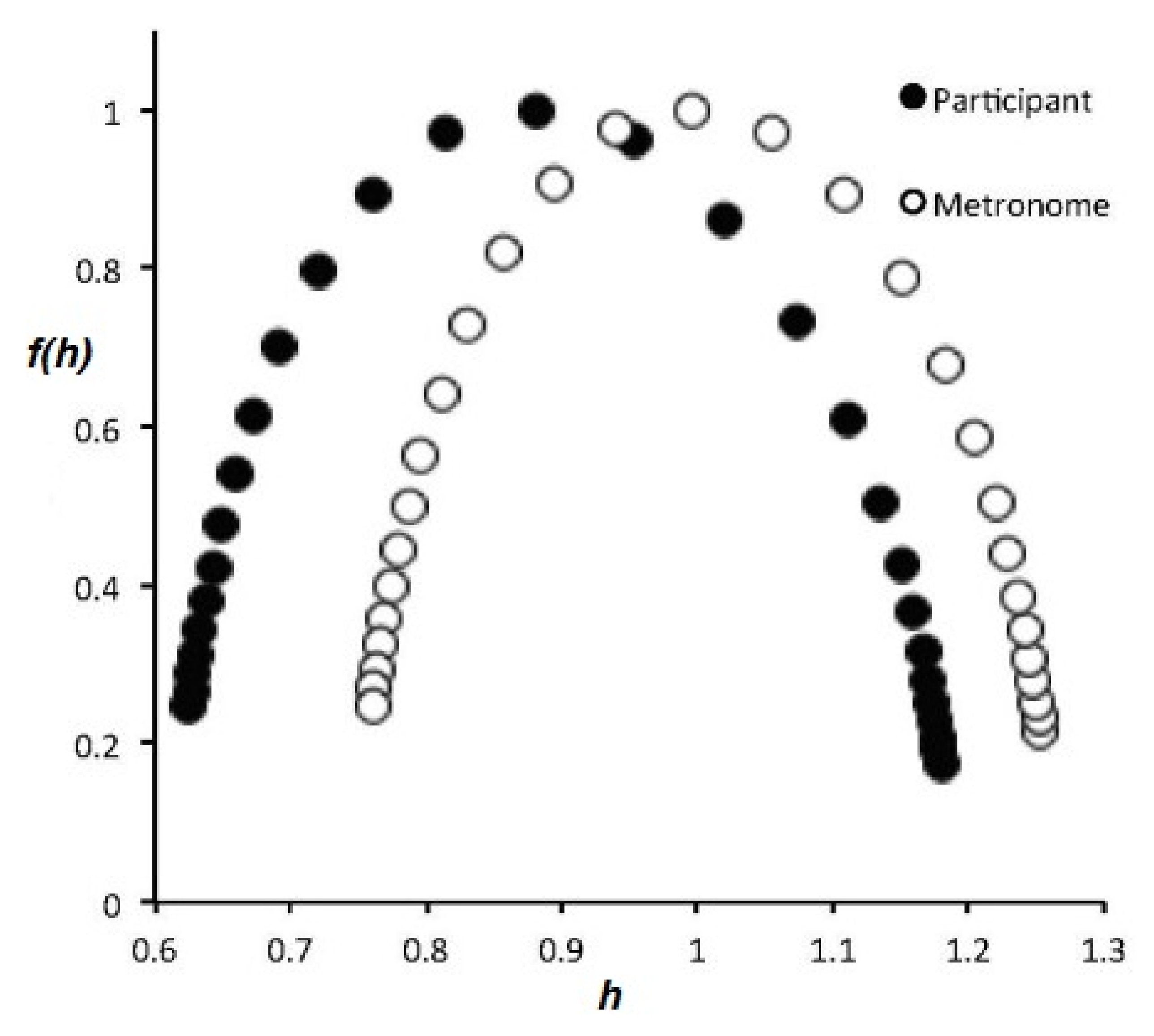

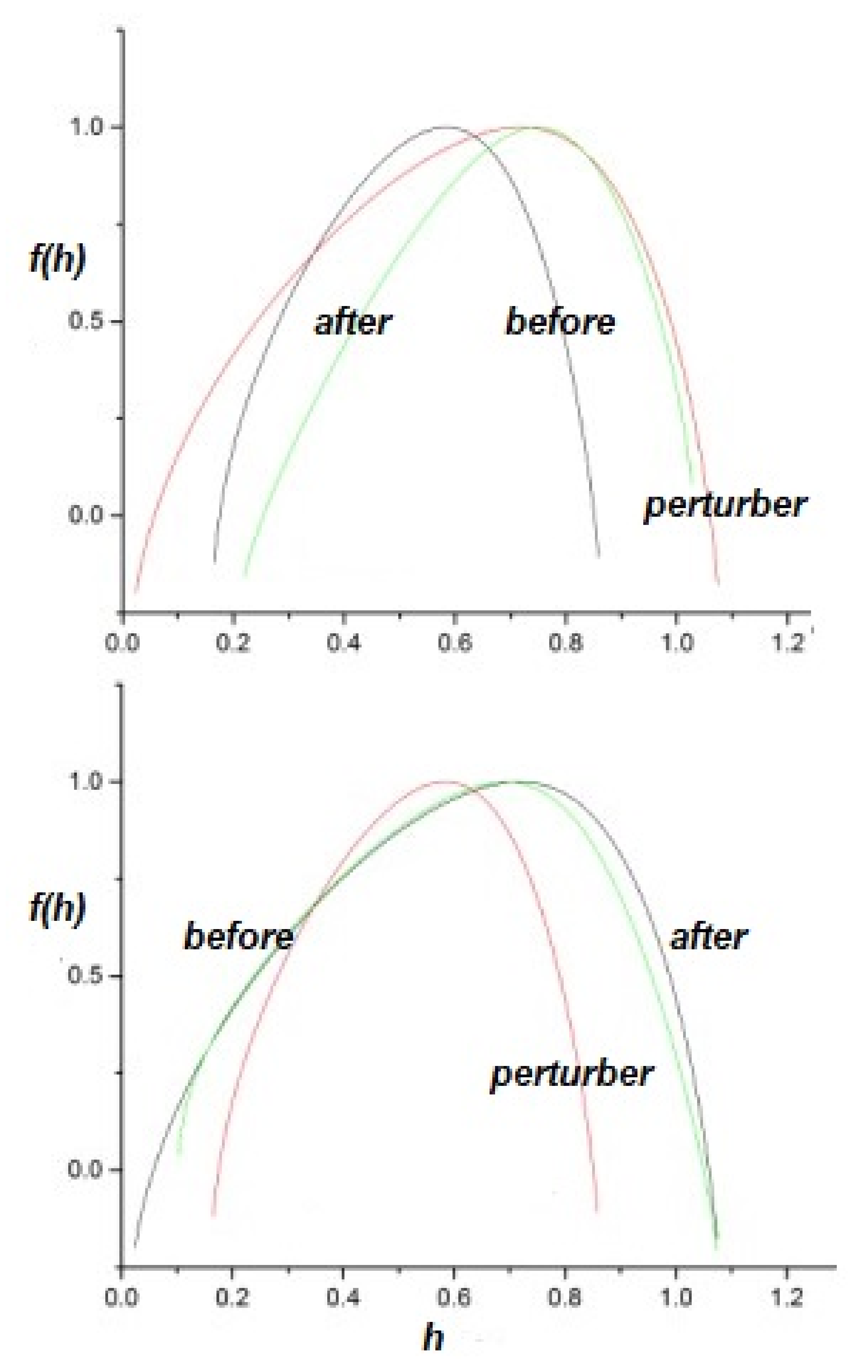

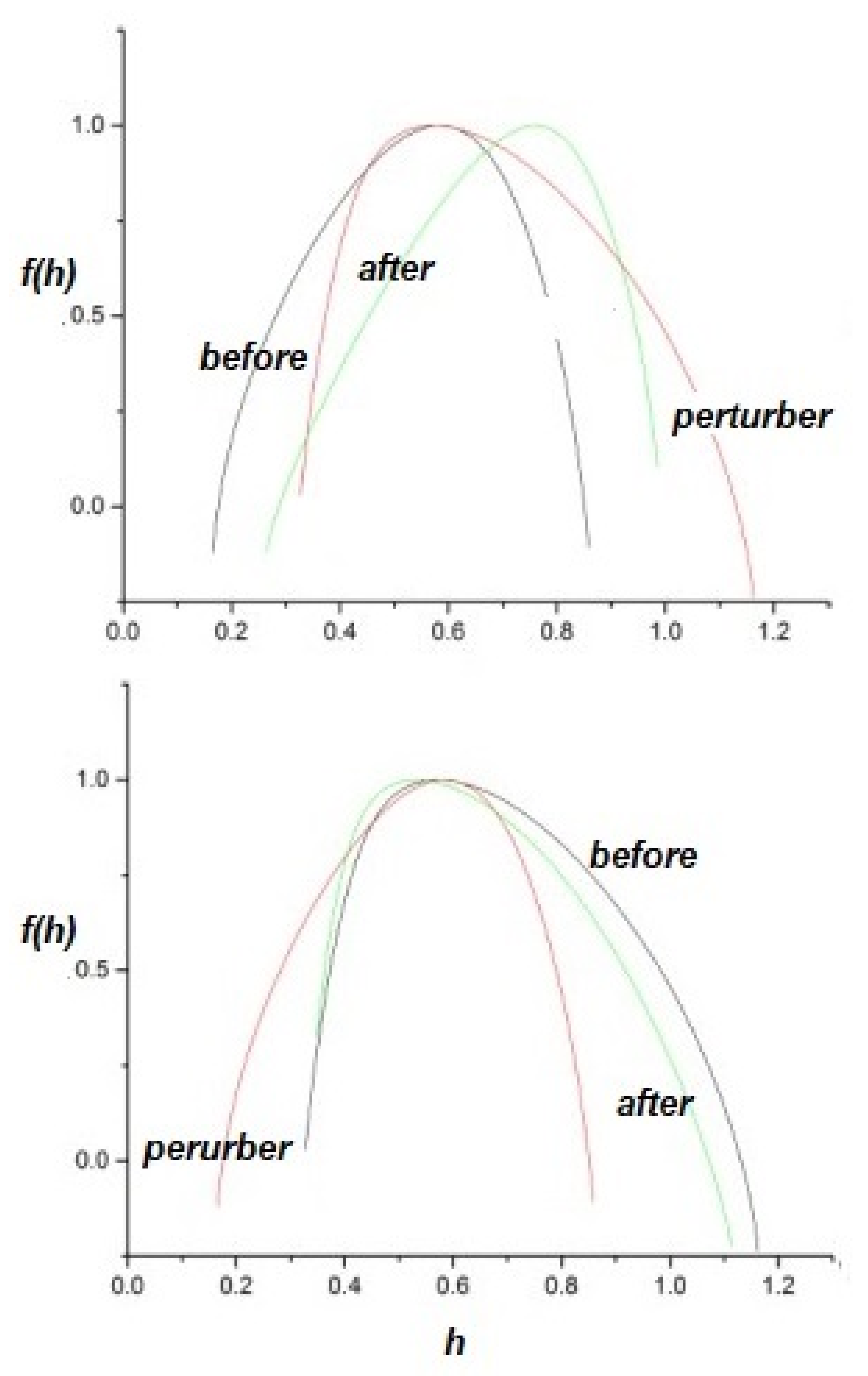

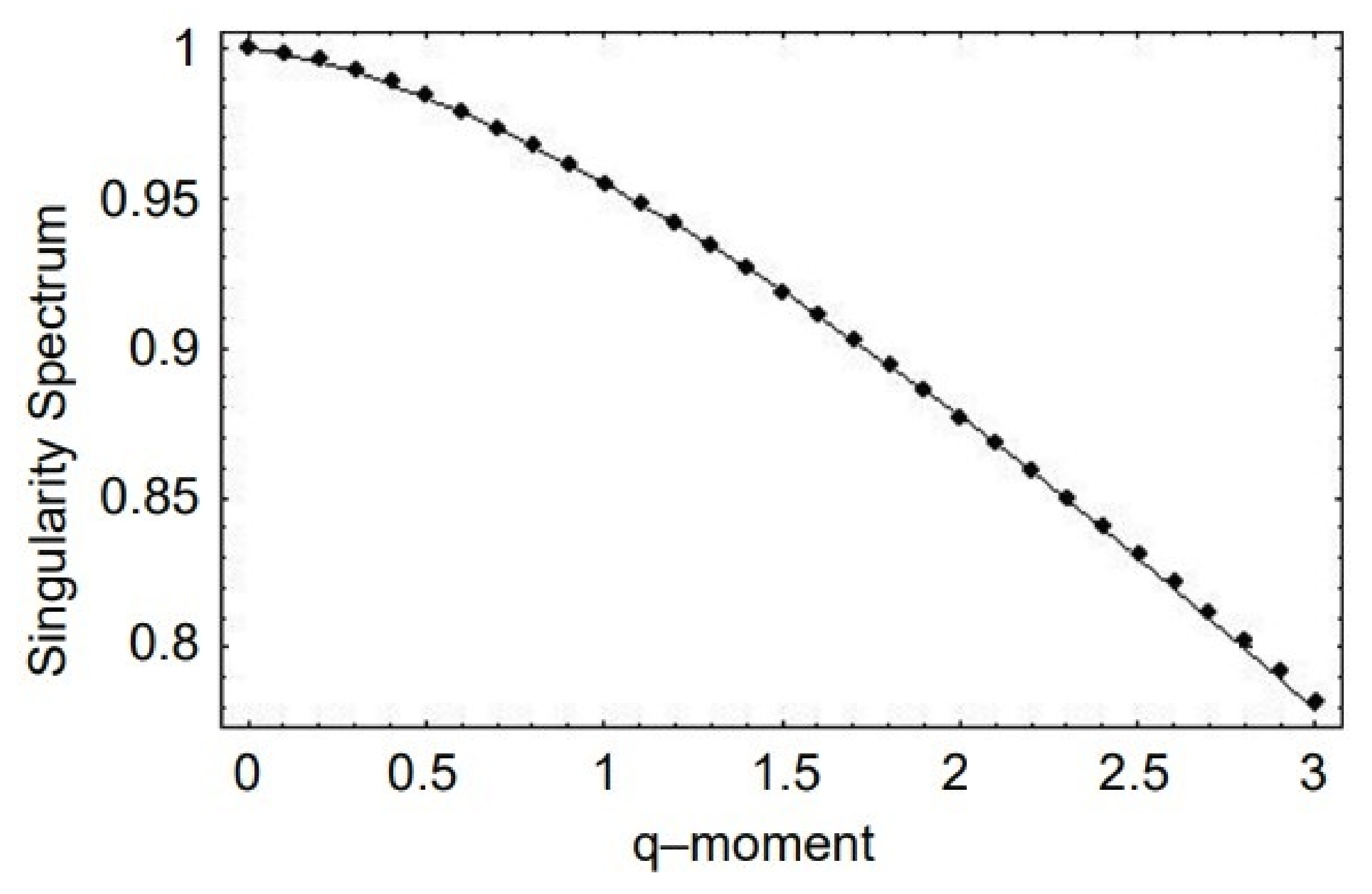

- A truly complex stochastic dynamic process can have more than one fractal dimension. A multifractal process is characterized by a uni-modal spectrum peaked at the value of the Hurst exponent .

- (7).

- The flow of information due to interaction of two complex networks each generating a multifractal time series is from the network with the broader to that with the narrower multifractal spectrum. This is summarized in the interpretation of the efficiency of information transfer using the CCC.

- (8).

- FREs with random fractional derivatives are shown to generate multifractal processes and therefore can be used to model the dynamics of both healthy and pathological physiologic networks.

- (9).

- Multifractality emerges from three distinct sources: (1) the introduction of random fractional derivatives into the dynamics of complex networks; (2) a FKT developed to define the evolution of PDF over fractional trajectories; (3) fractional random walks with diverging central moments.

- (10).

- A simple FDE that has a built-in non-locality in space is the FSDE. The solution to this fractional diffusion equation in space is a Lévy PDF, whose index is given by the order of the spatial fractional derivative. Yet another fractional diffusion equation differs in having a built-in memory and is the FTDE. The solution to this fractional diffusion equation in time is expressed in terms of the inverse Fourier transform of a MLF.

- (11).

- The health of a physiologic network is manifest by the width of the multifractal spectrum of the time series generated by that network. Experiments include but are not limited to CBF, HRV, BRV and SRV, which also show that pathologies in each of the underlying networks narrow the approprate multifractal spectrum.
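The statement in item (5) that the MLF reduces to the exponential can be checked numerically. The following is a minimal sketch, not the author's method: it assumes a Caputo-type linear FRE of order `alpha` with rate `lam` and initial value `x0` (all three names are illustrative, since the original symbols are not shown here), whose solution is `x0 * E_alpha(-lam * t**alpha)`, and it evaluates the MLF by a truncated power series that is adequate only for moderate arguments.

```python
import math


def mittag_leffler(alpha, z, terms=200):
    """Truncated series E_alpha(z) = sum_k z**k / Gamma(alpha*k + 1).

    Illustrative only: the plain series loses accuracy for large |z|.
    """
    if z == 0.0:
        return 1.0
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(terms):
        # Work in log space so large powers and Gamma values do not overflow.
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * math.exp(k * log_abs_z - math.lgamma(alpha * k + 1.0))
    return total


def fre_solution(t, alpha, lam=1.0, x0=1.0):
    """Assumed solution of the linear FRE: x0 * E_alpha(-lam * t**alpha)."""
    return x0 * mittag_leffler(alpha, -lam * t ** alpha)


if __name__ == "__main__":
    for t in (0.5, 1.0, 2.0, 4.0):
        # alpha = 1 should track exp(-t); alpha = 0.5 relaxes more slowly at late times.
        print(t, fre_solution(t, 1.0), math.exp(-t), fre_solution(t, 0.5))
```

For `alpha = 0.5` the series can be compared against the closed form `exp(z**2) * erfc(z)` for `E_{1/2}(-z)`, which gives an independent check on the truncation.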

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Meaning | Abbreviation | Meaning |
| --- | --- | --- | --- |
| BRV | breath rate variability | GLRT | generalized linear response theory |
| CBF | cerebral blood flow | HRV | heart rate variability |
| CCC | cross-correlation cube | IC | integer calculus |
| CE | crucial event | IDE | integer differential equation |
| CTRW | continuous time random walk | IPL | inverse power law |
| DMM | decision-making model | LE | Langevin equation |
| FBM | fractional Brownian motion | LRT | linear response theory |
| FC | fractional calculus | MLF | Mittag-Leffler function |
| FDE | fractional diffusion equation | PCM | principle of complexity management |
| FFPE | fractional Fokker–Planck equation | PDF | probability density function |
| FKE | fractional kinetic equation | RG | renormalization group |
| FKT | fractional kinetic theory | RHS | right hand side |
| FLE | fractional Langevin equation | RW | random walk |
| FLLE | fractional linear Langevin equation | SFLE | simplest fractional Langevin equation |
| FO | fractional order | SOC | self-organized criticality |
| FPE | Fokker–Planck equation | SOTC | self-organized temporal criticality |
| FPC | fractional probability calculus | SRV | stride rate variability |
| FRE | fractional rate equation | WF | Weierstrass function |
| FTDE | fractional time diffusion equation | WRW | Weierstrass random walk |
| FSDE | fractional space diffusion equation | | |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

West, B.J. The Fractal Tapestry of Life: III Multifractals Entail the Fractional Calculus. Fractal Fract. 2022, 6, 225. https://doi.org/10.3390/fractalfract6040225
