RecurrentOcc: An Efficient Real-Time Occupancy Prediction Model with Memory Mechanism

Abstract

1. Introduction

- We introduce a new temporal fusion module, SMG, which selectively condenses valuable temporal information into a historical feature map. It allows the model to integrate only a single feature map instead of multiple temporal features, improving computational efficiency and achieving superior performance.

- We design a simpler encoder structure based on the VRWKV network, termed the Light Encoder. The introduction of this encoder reduces the model’s parameters to only 59.1 M without compromising performance.

2. Related Works

2.1. Vision-Based 3D Occupancy Prediction

2.2. Deployment-Friendly Occupancy Prediction

2.3. Temporal Fusion in 3D Occupancy Prediction

3. Method

3.1. Problem Setup

3.2. Overall Architecture

3.3. Scene Memory Gate

3.4. Light Encoder

3.5. Loss Function

4. Experiment

4.1. Datasets

4.2. Evaluation Metrics

4.3. Implementation Details

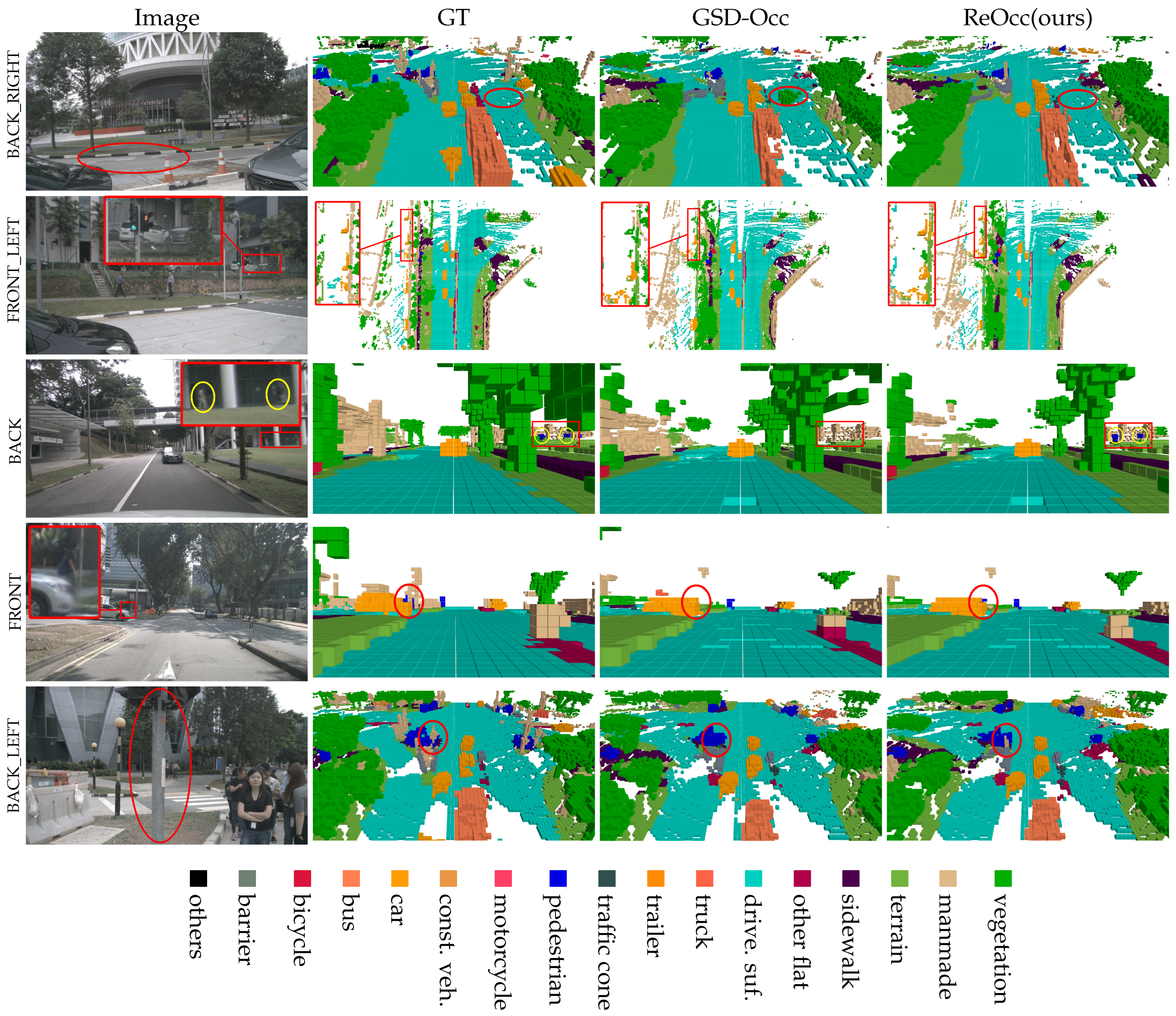

4.4. Main Results

4.5. Ablation Studies

- Ablation on different components: Table 3 demonstrates the effectiveness of the Scene Memory Gate (SMG) and the Light Encoder. The baseline uses the GSD-Occ structure with temporal fusion over 2 frames. Replacing the baseline's temporal fusion method with SMG leads to only minor changes in the model's parameter count and inference speed while increasing IoU by 3.5% (67.07 to 69.43), mIoU by 7.0% (36.85 to 39.43), and RayIoU by 6.0%. This demonstrates SMG's effectiveness in preserving fused historical features. Replacing the baseline's 2D encoder with the Light Encoder keeps mIoU and RayIoU nearly unchanged while reducing the parameter count by 16.8%, demonstrating the Light Encoder's efficiency. When both modules are combined, the model's inference speed decreases slightly compared with the baseline (23.6 to 23.4 FPS), but its IoU, mIoU, and RayIoU improve by 5.1%, 8.4%, and 6.3%, respectively, and its parameter count decreases by 16.5%.

- Ablation on Light Encoder: To explore a more efficient network structure for the encoder block, we conduct experiments with the VRWKV [13], VMamba [30], and ResNet [31] networks, as shown in Table 4. Compared with ResNet, VMamba improves IoU by 0.37 and mIoU by 0.20, slightly decreases RayIoU by 0.03, reduces parameters by 0.6 M, and lowers inference speed by 1.5 FPS. VRWKV, also compared with ResNet, achieves higher IoU and mIoU by 0.43 and 0.28 but a lower RayIoU by 0.30. It has 0.4 M fewer parameters but is 0.8 FPS slower. Considering mIoU as the primary metric, we choose the VRWKV network as the basic block in the Light Encoder. We also perform ablation experiments on the number of encoder blocks. Increasing the number of blocks from 1 to 2 improves IoU by 0.21, mIoU by 0.30, and RayIoU by 0.03, but FPS decreases by 0.8 and the parameter count increases by 2.34 M. Increasing the number of blocks from 2 to 3 improves IoU by 0.37, mIoU by 0.23, and RayIoU by 0.12, but FPS decreases by 1.4 and the parameter count increases by 5.77 M. To balance the model's performance, size, and inference speed, we set the number of blocks to 2 in this paper.

- Ablation on SMG: To further validate the effectiveness and efficiency of SMG for temporal fusion, we compared it with GSD-Occ's temporal fusion method (denoted as GSD fusion) with 2-frame and 16-frame inputs, as shown in Table 5. SMG outperforms GSD fusion(16f) by 0.63 in IoU, 0.89 in mIoU, 0.41 in RayIoU, and 0.4 in FPS, while requiring only 3.53 M of storage space for temporal features (versus 25.09 M). Although GSD fusion(2f) has fewer parameters and smaller storage than SMG, its performance is significantly inferior: SMG outperforms GSD fusion(2f) by 3.4% in IoU, 7.9% in mIoU, and 7.3% in RayIoU. This demonstrates SMG's superior temporal feature fusion efficiency compared with GSD-Occ's method.

  To validate the effectiveness of SMG's mask mechanism, we conducted an ablation study by removing the mask module. As shown in Table 6, this modification degraded performance by 0.42 mIoU and 0.45 RayIoU. The result demonstrates that SMG's mask mechanism effectively filters temporal feature information and enhances the model's focus on semantically valuable regions.

  Additionally, we investigated whether SMG requires an active forgetting mechanism similar to LSTM's forget gate; the result is also presented in Table 6. We implemented a simple forget gate (FG) consisting of a convolutional layer with kernel size 3 and a sigmoid activation layer. Before the history feature map is stored, it is first processed through this forget gate. Adding the forget gate yielded a marginal IoU improvement while slightly degrading mIoU and RayIoU. These results demonstrate that the active forgetting mechanism is unnecessary, as our existing mask mechanism already provides adequate filtering capability, and the recurrent computation inherently possesses long-term forgetting capacity.

- Ablation on 3D-to-BEV transformation: We compared two existing methods for compressing 3D features into 2D representations. The first approach performs mean pooling along the height dimension, while the second, proposed by FlashOcc [6], first merges the feature dimension and the height dimension into a single dimension and then applies a convolutional layer for channel compression. In Table 7, we denote these methods as 'mean pooling' and 'convolution', respectively. Both methods achieve comparable IoU scores, with mean pooling showing a slight advantage of +0.33 mIoU and +0.21 RayIoU.
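
The two compression schemes compared in the 3D-to-BEV ablation can be sketched in plain Python on nested lists. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `mean_pool_height` and `merge_and_compress`, the `[c][z][y][x]` layout, and the use of a 1x1 kernel for the channel-compression convolution are all choices made here for clarity.

```python
from typing import List

Volume = List[List[List[List[float]]]]  # indexed [c][z][y][x]
Bev = List[List[List[float]]]           # indexed [c][y][x]


def mean_pool_height(vol: Volume) -> Bev:
    """Average over the height axis z, keeping the channel count."""
    return [
        [
            [
                sum(vol_c[z][y][x] for z in range(len(vol_c))) / len(vol_c)
                for x in range(len(vol_c[0][0]))
            ]
            for y in range(len(vol_c[0]))
        ]
        for vol_c in vol
    ]


def merge_and_compress(vol: Volume, weight: List[List[float]]) -> Bev:
    """Flatten (c, z) into one channel axis, then mix channels per BEV cell.

    `weight` has shape [out_channels][C * Z]. Applying it independently at
    every (y, x) cell is what a 1x1 convolution does; the kernel size is an
    assumption of this sketch.
    """
    n_c, n_z = len(vol), len(vol[0])
    n_y, n_x = len(vol[0][0]), len(vol[0][0][0])
    # Merged channel index is k = c * Z + z.
    merged = [vol[c][z] for c in range(n_c) for z in range(n_z)]
    return [
        [
            [sum(w * merged[k][y][x] for k, w in enumerate(row)) for x in range(n_x)]
            for y in range(n_y)
        ]
        for row in weight
    ]


if __name__ == "__main__":
    # Toy volume with C=2 channels, Z=2 height bins, and a 1x2 BEV grid.
    vol = [[[[1.0, 2.0]], [[3.0, 4.0]]], [[[10.0, 20.0]], [[30.0, 40.0]]]]
    # Hand-picked weights that average the two height bins of each channel.
    averaging = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
    print(mean_pool_height(vol))
    print(merge_and_compress(vol, averaging))
```

With the averaging weights in the demo, the merge-and-compress path reproduces mean pooling, so in this simplified setting the two schemes differ in whether the height mixing is fixed or learned. The slight edge reported for mean pooling is an empirical result that this sketch does not explain.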

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Method | Vis. Mask | SC IoU | SSC mIoU | Others | Barrier | Bicycle | Bus | Car | Const. Veh. | Motorcycle | Pedestrian | Traffic Cone | Trailer | Truck | Drive. Suf. | Other Flat | Sidewalk | Terrain | Manmade | Vegetation |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ReOcc-B | ✓ | 70.50 | 39.97 | 12.37 | 47.80 | 28.76 | 43.82 | 51.23 | 27.32 | 28.02 | 28.94 | 29.40 | 32.94 | 37.64 | 81.34 | 43.50 | 50.96 | 55.53 | 42.86 | 37.01 |
| ReOcc-S | ✓ | 70.02 | 39.28 | 12.78 | 47.22 | 27.54 | 44.31 | 50.90 | 26.38 | 27.58 | 28.20 | 28.23 | 31.00 | 36.92 | 80.77 | 42.21 | 50.39 | 55.10 | 41.73 | 36.48 |
| ReOcc-B | ✕ | 45.91 | 31.98 | 11.20 | 43.64 | 24.75 | 40.42 | 43.91 | 22.51 | 26.89 | 25.74 | 27.90 | 22.36 | 32.48 | 65.40 | 36.75 | 39.75 | 36.70 | 21.26 | 22.01 |
| ReOcc-S | ✕ | 45.47 | 31.61 | 10.87 | 42.60 | 25.93 | 39.50 | 43.62 | 22.30 | 26.46 | 25.63 | 27.35 | 21.48 | 31.95 | 65.07 | 35.91 | 39.08 | 36.22 | 21.03 | 22.11 |

References

1. Cao, A.-Q.; de Charette, R. Monoscene: Monocular 3d Semantic Scene Completion. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

2. Li, Y.; Yu, Z.; Choy, C.B.; Xiao, C.; Álvarez, J.M.; Fidler, S.; Feng, C.; Anandkumar, A. VoxFormer: Sparse Voxel Transformer for Camera-Based 3D Semantic Scene Completion. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023.

3. Ma, Q.; Tan, X.; Qu, Y.; Ma, L.; Zhang, Z.; Xie, Y. COTR: Compact Occupancy TRansformer for Vision-Based 3D Occupancy Prediction. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024.

4. Wang, Y.; Chen, Y.; Liao, X.; Fan, L.; Zhang, Z. PanoOcc: Unified Occupancy Representation for Camera-based 3D Panoptic Segmentation. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024.

5. Liu, H.; Chen, Y.; Wang, H.; Yang, Z.; Li, T.; Zeng, J.; Chen, L.; Li, H.; Wang, L. Fully Sparse 3D Occupancy Prediction. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, Netherlands, 8–16 October 2023.

6. Yu, Z.; Shu, C.; Deng, J.; Lu, K.; Liu, Z.; Yu, J.; Yang, D.; Li, H.; Chen, Y. FlashOcc: Fast and Memory-Efficient Occupancy Prediction via Channel-to-Height Plugin. arXiv 2023, arXiv:2311.12058.

7. Hou, J.; Li, X.; Guan, W.; Zhang, G.; Feng, D.; Du, Y.; Xue, X.; Pu, J. FastOcc: Accelerating 3D Occupancy Prediction by Fusing the 2D Bird’s-Eye View and Perspective View. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024.

8. Pan, M.; Liu, J.; Zhang, R.; Huang, P.; Li, X.; Liu, L.; Zhang, S. Renderocc: Vision-Centric 3d Occupancy Prediction with 2d Rendering Supervision. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024.

9. Yu, Z.; Shu, C.; Sun, Q.; Linghu, J.; Wei, X.; Yu, J.; Liu, Z.; Yang, D.; Li, H.; Chen, Y. Panoptic-FlashOcc: An Efficient Baseline to Marry Semantic Occupancy with Panoptic via Instance Center. arXiv 2024, arXiv:2406.10527.

10. Li, Z.; Yu, Z.; Wang, W.; Anandkumar, A.; Lu, T.; Alvarez, J.M. Fb-bev: Bev representation from forward-backward view transformations. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

11. Huang, J.; Huang, G. BEVDet4D: Exploit Temporal Cues in Multi-camera 3D Object Detection. arXiv 2022, arXiv:2203.17054.

12. He, Y.; Chen, W.; Xun, T.; Tan, Y. Real-Time 3D Occupancy Prediction via Geometric-Semantic Disentanglement. arXiv 2024, arXiv:2407.13155.

13. Duan, Y.; Wang, W.; Chen, Z.; Zhu, X.; Lu, L.; Lu, T.; Qiao, Y.; Li, H.; Dai, J.; Wang, W. Vision-RWKV: Efficient and Scalable Visual Perception with RWKV-Like Architectures. arXiv 2024, arXiv:2403.02308.

14. Tian, X.; Jiang, T.; Yun, L.; Wang, Y.; Wang, Y.; Zhao, H. Occ3D: A Large-Scale 3D Occupancy Prediction Benchmark for Autonomous Driving. arXiv 2023, arXiv:2304.14365.

15. Zhang, Y.; Zhu, Z.; Du, D. OccFormer: Dual-path Transformer for Vision-based 3D Semantic Occupancy Prediction. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

16. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017.

17. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention Mask Transformer for Universal Image Segmentation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

18. Wei, Y.; Zhao, L.; Zheng, W.; Zhu, Z.; Zhou, J.; Lu, J. SurroundOcc: Multi-Camera 3D Occupancy Prediction for Autonomous Driving. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

19. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, 26–30 April 2020.

20. Jiang, H.; Cheng, T.; Gao, N.; Zhang, H.; Liu, W.; Wang, X. Symphonize 3D Semantic Scene Completion with Contextual Instance Queries. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024.

21. Huang, Y.; Zheng, W.; Zhang, Y.; Zhou, J.; Lu, J. Tri-Perspective View for Vision-Based 3D Semantic Occupancy Prediction. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023.

22. Lu, Y.; Zhu, X.; Wang, T.; Ma, Y. OctreeOcc: Efficient and Multi-Granularity Occupancy Prediction Using Octree Queries. arXiv 2023, arXiv:2312.03774.

23. Meagher, D. Geometric modeling using octree encoding. Comput. Graph. Image Process. 1982, 19, 129–147.

24. Ding, X.; Zhang, Y.; Ge, Y.; Zhao, S.; Song, L.; Yue, X.; Shan, Y. Unireplknet: A universal perception large-kernel convnet for audio, video, point cloud, time-series and image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024.

25. Li, B.; Deng, J.; Zhang, W.; Liang, Z.; Du, D.; Jin, X.; Zeng, W. Hierarchical Temporal Context Learning for Camera-Based Semantic Scene Completion. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 1–4 October 2024.

26. Park, J.; Xu, C.; Yang, S.; Keutzer, K.; Kitani, K.M.; Tomizuka, M.; Zhan, W. Time will tell: New outlooks and a baseline for temporal multi view 3d object detection. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, 1–5 May 2023.

27. Philion, J.; Fidler, S. Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3d. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

28. Li, Y.; Ge, Z.; Yu, G.; Yang, J.; Wang, Z.; Shi, Y.; Sun, J.; Li, Z. Bevdepth: Acquisition of reliable depth for multi-view 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Washington, DC, USA, 7–14 February 2023.

29. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

30. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Liu, Y. VMamba: Visual State Space Model. arXiv 2024, arXiv:2401.10166.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

32. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuscenes: A multi modal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

33. Loshchilov, I.; Hutter, F. Fixing Weight Decay Regularization in Adam. arXiv 2017, arXiv:1711.05101.

34. Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Qiao, Y.; Dai, J. Bevformer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

35. Gan, W.; Mo, N.; Xu, H.; Yokoya, N. A Comprehensive Framework for 3D Occupancy Estimation in Autonomous Driving. IEEE Trans. Intell. Veh. 2024. early access.

| Method | Backbone | Input Size | Vis. Mask | mIoU | RayIoU | RayIoU@1m | RayIoU@2m | RayIoU@4m | FPS |
|---|---|---|---|---|---|---|---|---|---|
| BEVFormer [34] | R101 | 1600 × 900 | ✓ | 39.2 | 32.4 | 26.1 | 32.9 | 38.0 | 3.0 |
| FB-Occ(16f) [10] | R50 | 704 × 256 | ✓ | 39.1 | 33.5 | 26.7 | 34.1 | 39.7 | 10.3 |
| FlashOcc [6] | R50 | 704 × 256 | ✓ | 32.0 | - | - | - | - | 29.6 |
| PanoOcc [4] | R101 | 1600 × 900 | ✓ | 42.1 | - | - | - | - | 3.0 |
| COTR [3] | R50 | 704 × 256 | ✓ | 44.5 | - | - | - | - | 0.9 |
| GSD-Occ(16f) [12] | R50 | 704 × 256 | ✓ | 39.4 | - | - | - | - | 20.0 |
| ReOcc-B(2f) (ours) | R50 | 704 × 256 | ✓ | 39.9 | 31.5 | 25.0 | 32.0 | 37.6 | 23.4 |
| ReOcc-S(2f) (ours) | R50 | 704 × 256 | ✓ | 39.2 | 31.4 | 24.9 | 31.9 | 37.3 | 26.4 |
| BEVFormer [34] | R101 | 1600 × 900 | ✕ | 23.7 | 33.7 | - | - | - | 3.0 |
| FB-Occ(16f) [10] | R50 | 704 × 256 | ✕ | 27.9 | 35.6 | - | - | - | 10.3 |
| SimpleOcc [35] | R101 | 672 × 336 | ✕ | 31.8 | 22.5 | 17.0 | 22.7 | 27.9 | 9.7 |
| BEVDet-Occ(2f) [11] | R50 | 704 × 256 | ✕ | 36.1 | 29.6 | 23.6 | 30.0 | 35.1 | 2.6 |
| BEVDet-Occ-Long(8f) [11] | R50 | | ✕ | 39.3 | 32.6 | 26.6 | 33.1 | 38.2 | 0.8 |
| SparseOcc(8f) [5] | R50 | 704 × 256 | ✕ | 30.1 | 34.0 | 28.0 | 34.7 | 39.4 | 17.3 |
| GSD-Occ(16f) [12] | R50 | 704 × 256 | ✕ | 31.8 | 38.9 | 32.9 | 39.7 | 44.0 | 20.0 |
| Panoptic-FlashOcc(8f) [9] | R50 | 704 × 256 | ✕ | 31.6 | 38.5 | 32.8 | 39.3 | 43.4 | 35.6 |
| ReOcc-B(2f) (ours) | R50 | 704 × 256 | ✕ | 31.9 | 38.2 | 32.4 | 38.9 | 43.2 | 23.4 |
| ReOcc-S(2f) (ours) | R50 | 704 × 256 | ✕ | 31.6 | 37.8 | 31.9 | 38.6 | 43.0 | 26.4 |

| Method | Backbone | Input Size | Vis. Mask | mIoU | RayIoU | FPS | Temporal Feature Storage ↓ | Params ↓ |
|---|---|---|---|---|---|---|---|---|
| GSD-Occ(2f) [12] | R50 | 704 × 256 | ✓ | 37.2 | - | 21.0 | 2.5 M | 114.7 M |
| GSD-Occ(8f) [12] | R50 | 704 × 256 | ✓ | 38.4 | - | 20.6 | 15.7 M | 114.8 M |
| GSD-Occ(16f) [12] | R50 | 704 × 256 | ✓ | 39.4 | - | 20.0 | 32.3 M | 115.0 M |
| ReOcc-B(2f) (ours) | R50 | 704 × 256 | ✓ | 39.9 | 31.5 | 23.4 | 3.5 M | 59.1 M |
| ReOcc-S(2f) (ours) | R50 | 704 × 256 | ✓ | 39.2 | 31.4 | 26.4 | 1.9 M | 55.8 M |
| SparseOcc(8f) [5] | R50 | 704 × 256 | ✕ | 30.1 | 34.0 | 17.3 | ∖ | 80.3 M |
| GSD-Occ(16f) [12] | R50 | 704 × 256 | ✕ | 31.8 | 38.9 | 20.0 | 32.3 M | 115.0 M |
| Panoptic-FlashOcc(2f) [9] | R50 | 704 × 256 | ✕ | 30.3 | 36.8 | 35.9 | 6.8 M | 75.2 M |
| Panoptic-FlashOcc(8f) [9] | R50 | 704 × 256 | ✕ | 31.6 | 38.5 | 35.6 | 55.0 M | 76.8 M |
| Panoptic-FlashOcc(2f) * [9] | R50 | 704 × 256 | ✕ | 30.3 | 36.8 | 22.5 * | ∖ | 75.2 M |
| Panoptic-FlashOcc(8f) * [9] | R50 | 704 × 256 | ✕ | 31.6 | 38.5 | 6.9 * | ∖ | 76.8 M |
| ReOcc-B(2f) (ours) | R50 | 704 × 256 | ✕ | 31.9 | 38.2 | 23.4 | 3.5 M | 59.1 M |
| ReOcc-S(2f) (ours) | R50 | 704 × 256 | ✕ | 31.6 | 37.8 | 26.4 | 1.9 M | 55.8 M |

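Table 3. Ablation on different components.

| Method | IoU | mIoU | RayIoU | FPS | Params |
|---|---|---|---|---|---|
| Baseline | 67.07 | 36.85 | 29.71 | 23.6 | 70.84 M |
| +SMG | 69.43 | 39.43 | 31.52 | 23.6 | 71.07 M |
| +Light Encoder | 68.13 | 37.02 | 29.44 | 23.3 | 58.91 M |
| Final | 70.50 | 39.97 | 31.59 | 23.4 | 59.15 M |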
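
The relative changes quoted in the component ablation can be recomputed from the four rows above. The snippet below is a small arithmetic check, not part of the original paper; the names `TABLE3`, `relative_change`, and `compare` are introduced here for illustration, and the numbers are copied from the rows as they appear in this document.

```python
# Rows of the component ablation: IoU, mIoU, RayIoU, FPS, and parameters (in millions).
TABLE3 = {
    "Baseline": {"IoU": 67.07, "mIoU": 36.85, "RayIoU": 29.71, "FPS": 23.6, "Params": 70.84},
    "+SMG": {"IoU": 69.43, "mIoU": 39.43, "RayIoU": 31.52, "FPS": 23.6, "Params": 71.07},
    "+Light Encoder": {"IoU": 68.13, "mIoU": 37.02, "RayIoU": 29.44, "FPS": 23.3, "Params": 58.91},
    "Final": {"IoU": 70.50, "mIoU": 39.97, "RayIoU": 31.59, "FPS": 23.4, "Params": 59.15},
}


def relative_change(new: float, old: float) -> float:
    """Change from `old` to `new`, expressed as a percentage of `old`."""
    return 100.0 * (new - old) / old


def compare(row: str, baseline: str = "Baseline") -> dict:
    """Relative change of every column of `row` against the baseline row."""
    base = TABLE3[baseline]
    return {key: relative_change(TABLE3[row][key], base[key]) for key in base}


if __name__ == "__main__":
    for name in ("+SMG", "+Light Encoder", "Final"):
        changes = compare(name)
        print(name, {key: round(value, 2) for key, value in changes.items()})
```

Running the check suggests that the percentages in the text are truncated to one decimal place instead of being rounded, which would explain small differences in the last digit.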

Table 4. Ablation on the Light Encoder.

| Block | Num. Blocks | IoU | mIoU | RayIoU | FPS | Params |
|---|---|---|---|---|---|---|
| ResNet | 2 | 70.07 | 39.69 | 31.89 | 24.2 | 59.55 M |
| VMamba [30] | 2 | 70.44 | 39.89 | 31.86 | 22.7 | 58.95 M |
| VRWKV [13] | 2 | 70.50 | 39.97 | 31.59 | 23.4 | 59.15 M |
| VRWKV [13] | 1 | 70.29 | 39.67 | 31.56 | 24.2 | 56.81 M |
| VRWKV [13] | 3 | 70.87 | 40.20 | 31.71 | 22.0 | 64.92 M |

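Table 5. Ablation on SMG temporal fusion.

| Temporal Fusion | IoU | mIoU | RayIoU | FPS | Params | Temporal Feature Storage |
|---|---|---|---|---|---|---|
| SMG | 70.50 | 39.97 | 31.59 | 23.4 | 59.15 M | 3.53 M |
| GSD fusion(2f) | 68.13 | 37.02 | 29.44 | 23.3 | 58.91 M | 2.53 M |
| GSD fusion(16f) | 69.87 | 39.08 | 31.18 | 23.0 | 59.06 M | 25.09 M |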

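Table 6. Ablation on SMG's mask mechanism and forget gate (FG).

| Method | IoU | mIoU | RayIoU | RayIoU@1m | RayIoU@2m | RayIoU@4m |
|---|---|---|---|---|---|---|
| SMG | 70.50 | 39.97 | 31.59 | 25.04 | 32.05 | 37.66 |
| SMG w/o mask | 70.27 | 39.55 | 31.14 | 24.70 | 31.63 | 37.08 |
| SMG w/ FG | 70.59 | 39.77 | 31.46 | 24.88 | 31.97 | 37.54 |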
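
The "SMG w/ FG" row refers to the forget gate described in the SMG ablation: a convolutional layer with kernel size 3 followed by a sigmoid. The sketch below illustrates that idea on a single-channel map in plain Python. It is a hypothetical illustration and not the authors' code: the equation that applies the gate is not available in this text, so the element-wise multiplication of the gate with the history map (as in an LSTM forget gate), the zero padding, and the names `conv3x3_same` and `forget_gate` are all assumptions.

```python
import math
from typing import List

Grid = List[List[float]]


def conv3x3_same(x: Grid, kernel: Grid, bias: float = 0.0) -> Grid:
    """3x3 cross-correlation with zero padding, so the output keeps x's shape."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    # Cells outside the map contribute zero (zero padding).
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += kernel[di + 1][dj + 1] * x[ii][jj]
            out[i][j] = acc
    return out


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def forget_gate(history: Grid, kernel: Grid, bias: float = 0.0) -> Grid:
    """Scale each cell of the history map by a gate value in (0, 1).

    Assumption: the gate is computed from the history map itself and applied
    by element-wise multiplication before the map is stored.
    """
    logits = conv3x3_same(history, kernel, bias)
    return [
        [sigmoid(logit) * value for logit, value in zip(logit_row, history_row)]
        for logit_row, history_row in zip(logits, history)
    ]


if __name__ == "__main__":
    history = [[2.0, -4.0], [0.0, 6.0]]
    zero_kernel = [[0.0] * 3 for _ in range(3)]
    # With zero weights and zero bias every gate value is 0.5, halving the map.
    print(forget_gate(history, zero_kernel))
```

A large positive bias drives every gate value toward 1 and leaves the history map essentially unchanged. That limiting case matches the configuration without a forget gate, which the ablation finds sufficient.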

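Table 7. Ablation on 3D-to-BEV transformation.

| 3D-to-BEV Method | IoU | mIoU | RayIoU | RayIoU@1m | RayIoU@2m | RayIoU@4m |
|---|---|---|---|---|---|---|
| mean pooling | 70.50 | 39.97 | 31.59 | 25.04 | 32.05 | 37.66 |
| convolution | 70.51 | 39.64 | 31.38 | 24.93 | 31.81 | 37.39 |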


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Z.; Xie, Y.; Wei, Y. RecurrentOcc: An Efficient Real-Time Occupancy Prediction Model with Memory Mechanism. Big Data Cogn. Comput. 2025, 9, 176. https://doi.org/10.3390/bdcc9070176
