Abstract

Automatic Facial Expression Recognition (AFER) is a key component of affective computing, enabling machines to recognize and interpret human emotions across various applications such as human–computer interaction, healthcare, entertainment, and social robotics. Dynamic AFER systems, which exploit image sequences, can capture the temporal evolution of facial expressions but often suffer from high computational costs, limiting their suitability for real-time use. In this paper, we propose an efficient dynamic AFER approach based on a novel spatio-temporal representation. Facial landmarks are extracted, and all possible Euclidean distances are computed to model the spatial structure. To capture temporal variations, three statistical metrics are applied to each distance sequence. A feature selection stage based on the Extremely Randomized Trees (ExtRa-Trees) algorithm is then performed to reduce dimensionality and enhance classification performance. Finally, the emotions are classified using a linear multi-class Support Vector Machine (SVM) and compared against the k-Nearest Neighbors (k-NN) method. The proposed approach is evaluated on three benchmark datasets: CK+, MUG, and MMI, achieving recognition rates of 94.65%, 93.98%, and 75.59%, respectively. Our results demonstrate that the proposed method achieves a strong balance between accuracy and computational efficiency, making it well-suited for real-time facial expression recognition applications.

1. Introduction

Human beings are inherently social and rely heavily on communication to interact with one another. Emotions constitute a fundamental form of non-verbal communication and play a critical role in daily interactions [1]. The rapid development and widespread adoption of Information and Communication Technologies (ICT) have paved the way for the automatic recognition of human emotions, giving rise to numerous applications in fields such as Human–Computer Interaction (HCI), healthcare, entertainment, social robotics, and Ambient Assisted Living (AAL) [2].

Emotions can be expressed and perceived through various modalities, including speech, body gestures, and facial expressions. Automatic emotion recognition systems can be unimodal, relying on a single modality, or multimodal, combining multiple sources of information to improve accuracy. Among these, facial expressions represent one of the most informative and widely studied modalities. According to Mehrabian’s well-known study (1968) [1], approximately 55% of emotional information is conveyed through facial expressions, while vocal and verbal cues account for 38% and 7%, respectively.

Among the foundational works in this field, Ekman and Friesen [3] introduced the concept of six basic emotions—happiness, sadness, anger, fear, surprise, and disgust—each with distinct facial signatures. Their Facial Action Coding System (FACS) [4] laid the groundwork for Automatic Facial Expression Recognition (AFER), providing a structured way to decode muscular movements into emotional states.

AFER systems can be broadly divided into static approaches, which analyze individual images, and dynamic ones, which process image sequences to capture temporal evolution. Although dynamic systems tend to achieve better recognition performance by incorporating motion and progression of expressions, they introduce new challenges in terms of model complexity and computational cost.

Several recent works have attempted to address these issues. For instance, Perveen et al. [5] proposed an efficient kernel-based spatio-temporal modeling approach; however, its reliance on global statistical patterns may limit its ability to capture fine-grained local deformations. Lopez-Gil and Garay-Vitoria [6] focused on frame-level classification for real-time efficiency, but their method lacks temporal context modeling, which is crucial for understanding subtle expression transitions.

To improve robustness, Shahid et al. [7] employed facial region segmentation and harmonic descriptors. While effective against occlusion and misalignment, this method depends heavily on accurate facial region division, which may be unreliable in real-world conditions. Similarly, Ngoc et al. [8] used a Directed Graph Neural Network over facial landmarks for dynamic modeling. Yet, such graph-based deep models often require large annotated datasets and significant computational resources, limiting their deployment in constrained environments.

Hybrid methods like those of Aghamaleki and Ashkani Chenarlogh [9], which combine handcrafted features with learned features, help mitigate data scarcity. However, these architectures still depend on deep neural networks and often fail to generalize across datasets without careful tuning. Moreover, methods that fuse appearance-based features often suffer from sensitivity to lighting, pose variations, and expression intensity.

In addition, despite advances in deep learning, the generalization of AFER systems remains a concern. Most models are trained and tested on small and controlled datasets, and their performance often degrades significantly when applied to more varied or spontaneous datasets, as shown in multiple comparative studies [5,7,8].

Therefore, there is still a need for computationally efficient, geometrically grounded, and dataset-agnostic approaches to dynamic facial expression recognition—especially those capable of operating in real-time and under limited-resource constraints.

In this work, we propose such an approach: an efficient dynamic AFER method that recognizes emotions from facial expression sequences using only statistical geometric descriptors. The method is structured into three main stages. First, facial landmarks are detected, and all pairwise Euclidean distances are computed as spatial features. Then, temporal dynamics are encoded by computing three statistical measures of each distance over the sequence, yielding a spatio-temporal feature vector whose length does not depend on the number of frames. Because this vector remains high-dimensional, we apply a feature selection strategy based on the Extremely Randomized Trees (ExtRa-Trees) classifier. Finally, we evaluate two standard classifiers: a multi-class Support Vector Machine (SVM) and k-Nearest Neighbors (k-NN).

To assess the performance of the proposed method, we conduct experiments on three well-known benchmark datasets: CK+ [10], MUG [11], and MMI [12], analyzing both recognition accuracy and computational cost.

The main contributions of this paper are summarized as follows:

- A novel geometry-based dynamic AFER pipeline → We introduce a lightweight and efficient facial expression recognition system that relies solely on facial landmark trajectories. By avoiding appearance-based or deep-learning features, our method significantly reduces computational cost and dependency on high-end hardware, making it well-suited for real-time and embedded applications.

- A compact spatio-temporal representation of facial motion → We propose a simple yet effective statistical descriptor that models the temporal dynamics of facial expressions through the variation of distances between landmarks over time. This handcrafted representation captures discriminative patterns without requiring large datasets or complex training procedures.

- Extensive and comparative evaluation on benchmark datasets → We evaluate our method on three widely used dynamic benchmark datasets (CK+, MMI, and MUG) and provide a comparative analysis against eleven recent state-of-the-art approaches from the last five years. The results demonstrate that our method achieves competitive accuracy, especially given its low complexity and independence from deep neural networks.

The remainder of this paper is structured as follows. Sections 2 and 3 present the fundamentals of AFER and review related work, respectively. Section 4 discusses the current limitations and challenges of existing AFER methods. Section 5 details the proposed approach, while Section 6 describes the experimental setup and the datasets used for evaluation. The results and their interpretation are provided in Section 7. Finally, Section 8 concludes the paper and outlines future research directions.

2. Fundamentals

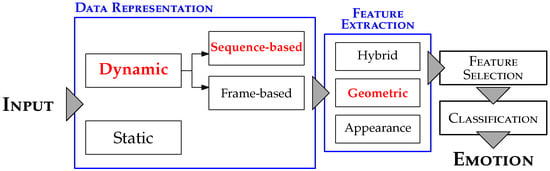

A basic AFER system shares the same fundamental building blocks as a typical pattern recognition system [13]. As illustrated in Figure 1, the first block concerns the input, and depending on its nature, AFER systems can be classified into two main categories.

Figure 1. Basic building blocks of a common AFER system.

Static systems [14] recognize facial expressions from a single image. Generally, this type of system is less demanding in terms of computational time and resource consumption. However, static systems are limited in their ability to capture the temporal dynamics inherent to real-world facial expressions, which typically evolve through three distinct transitional phases: (1) Onset, marking the initial formation of the expression; (2) Apex, representing the peak of emotional intensity; and (3) Offset, characterizing the return to the neutral state. These phases reflect the natural progression of facial deformations, and their full characterization requires analyzing image sequences rather than isolated images.

To address this need, dynamic systems [5,15,16] have been introduced, capable of processing temporal information to better model the evolution of expressions. Furthermore, depending on how the input data are handled (see Figure 1), dynamic systems can be subdivided into two approaches:

- Sequence-based systems, which exploit the entire image sequence to build a comprehensive temporal representation of the expression.

- Frame-based systems, which process each frame independently, without explicitly modeling the temporal continuity across frames.

Depending on the chosen data representation, the feature extraction block is adapted accordingly to process the input. As illustrated in Figure 1, three main types of descriptors can be distinguished in the context of AFER.

- Geometric-based features rely on facial fiducial points, typically located with techniques such as the Active Appearance Model (AAM) and the Active Shape Model (ASM) [17], to generate high-level representations of the facial shape [14].

- Appearance-based descriptors operate on the entire facial image to capture texture information, often through techniques such as the two-dimensional Discrete Wavelet Transform (DWT) [18] or the Local Binary Pattern (LBP) [19].

- Hybrid-based descriptors combine both geometric and appearance features [18]. While hybrid approaches can enhance recognition accuracy, they also tend to increase system complexity, processing time, and computational load.

The feature selection block, although optional, plays a crucial role in improving system efficiency. It aims to reduce the dimensionality of feature vectors by retaining only the most relevant attributes, thereby eliminating redundant and noisy information.

Finally, the classification block typically relies on a supervised machine learning approach. It requires training on labeled samples to build a predictive model capable of classifying new, unlabeled inputs.

3. Related Works

Facial Expression Recognition (FER) has received significant attention, with a wide range of techniques proposed for both static and dynamic settings. Dynamic AFER systems, which analyze image sequences, are particularly effective in capturing the temporal evolution of expressions. However, these systems often encounter computational challenges that hinder real-time deployment.

To address these challenges, Perveen et al. [5] proposed a kernel-based dynamic method using a universal Gaussian Mixture Model with a Mean Interval Kernel (uGMM-MIK), capable of modeling local spatio-temporal patterns while maintaining global contextual variations. Their work shows that probability-based kernels provide strong discriminative capabilities, while matching kernels enhance computational efficiency. In a similar direction, Zhang et al. [15] developed a hybrid deep learning framework that fuses spatial and temporal CNNs through a Deep Belief Network (DBN), effectively combining spatio-temporal features for video-based FER.

To better exploit the temporal dimension, spatio-temporal fusion has been explored using both handcrafted and learned features. Aghamaleki and Ashkani Chenarlogh [9] introduced a multi-stream CNN architecture that combines traditional descriptors like LBP and Sobel edge maps with CNN-based features, offering a robust solution in contexts with limited training data. Further enhancing robustness, Shahid et al. [7] partitioned the face into eleven sub-regions and extracted harmonic shape descriptors to mitigate the impact of misalignments, lighting variations, and occlusions.

Feature selection also plays a critical role in balancing recognition performance with computational cost. Pham et al. [20] introduced a custom loss function that encourages intra-class compactness and inter-class separability, yielding highly discriminative CNN features. In another approach, Vaijayanthi and Arunnehru [21] employed dense SIFT features coupled with classical classifiers, showing improved adaptability across varying temporal and environmental conditions.

Regarding classification, several strategies have been proposed to handle the multi-class nature of emotion recognition. Sen et al. [17] utilized Directed Acyclic Graph SVMs (DAGSVM) to achieve accurate and efficient multi-emotion classification. Kartheek et al. [22] introduced Windmill Graph-based Feature Descriptors (WGFD), leveraging graph structures to encode local and global pixel relationships, which outperformed conventional descriptors when paired with multi-class SVMs.

For real-time applications, some studies emphasized lightweight solutions. Lopez-Gil and Garay-Vitoria [6] achieved near real-time performance by classifying individual frames using an ensemble of classifiers, striking a balance between accuracy and speed. Similarly, Perveen et al. [5] highlighted the potential of dynamic kernels to reduce computational burden while maintaining recognition performance.

Recent advances in deep learning have further pushed the field forward. Yang et al. [23] developed a multi-branch CNN with a multi-source loss function to boost generalization under uncontrolled conditions. Singh et al. [24] designed a hybrid architecture combining 3D-CNN and ConvLSTM modules, capturing temporal dependencies more effectively than standard LSTM-based models while preserving spatial features.

Several studies have explored hybrid representations. Mukhopadhyay et al. [25] integrated Completed Local Binary Patterns (CLBP) with CNNs to improve resilience to noise, pose, and lighting changes. Kumar Tataji et al. [26] proposed a Cross-Connected CNN (CC-CNN) that fuses handcrafted descriptors like Cyclopentane Feature Descriptor (CyFD) with learned features across multiple layers to extract both local and global information.

Handcrafted descriptors remain relevant in certain contexts. Verma et al. [27] introduced the Cross-Centroid Ripple Pattern (CRIP), which models spatial variation via centroid analysis across concentric ripples, enhancing the model’s ability to detect spontaneous expressions and subtle changes under varying conditions.

In terms of architectural innovation, Reddy et al. [28] proposed the Deep Cross Feature Adaptive CNN (DCFA-CNN), a two-branch architecture extracting both shape and texture features via dedicated modules, followed by cross-feature fusion to better target expression-relevant regions.

To address the challenge of limited training data, transfer learning approaches have proven valuable. Li et al. [29] introduced a Feature Transfer Learning (FTL) framework that uses a large pre-trained model to guide a smaller FER model, aligning features via selective parameter sharing to accelerate convergence and retain flexibility.

Multimodal fusion has also emerged as a promising direction. Samadiani et al. [30] presented VERMFF, a multimodal framework combining visual (e.g., LBP, LBP-TOP) and audio modalities. A kernel-based sparse representation mechanism selects keyframes, while a random forest and decision-level fusion improve performance in unconstrained environments.

Graph-based approaches have recently gained traction. Ngoc et al. [8] proposed a Directed Graph Neural Network (DGNN) that represents facial landmarks as graph nodes connected via Delaunay triangulation. A master node is added to enhance global communication, and gated linear units (GLU) stabilize temporal modeling. The model’s accuracy is further improved through late fusion with appearance-based CNNs.

Together, these studies highlight the critical importance of effective spatio-temporal feature modeling, discriminative classification, and computational efficiency in dynamic FER. Building upon these insights, our proposed method leverages statistical geometric spatio-temporal descriptors and optimized feature selection, offering a balanced solution that achieves competitive accuracy while remaining computationally lightweight—a valuable feature for real-time applications.

4. Limitations & Challenges

The review of existing dynamic FER methods highlights several recurrent and emerging challenges that limit their practical deployment, especially in real-time and unconstrained environments. These challenges, many of which remain critical in recent literature, can be categorized into computational, robustness, and representation-related limitations.

4.1. Computational Complexity and Efficiency Challenges

Traditional deep learning models, despite achieving high accuracy, often come with excessive computational complexity, large parameter sizes, and significant memory and inference costs [31,32,33,34,35,36]. Recent state-of-the-art models such as POSTER++ [31] incorporate sophisticated components like two-stream pipelines, pyramidal feature extractors, and cross-attention mechanisms, achieving high accuracy but at the cost of high computational complexity and large parameter counts. This reliance on computationally intensive deep architectures, including 3D convolutions, recurrent units, or multi-branch designs [15,24,28], severely restricts their suitability for real-time applications and deployment on low-resource or embedded devices [32,34,35].

Traditional attention mechanisms, such as vanilla cross-attention, often have quadratic complexity, making them unsuitable for low-latency applications [31]. While lightweight FER models [32,34,35] aim to address deployment constraints, they often sacrifice representation power, leading to limited accuracy and poor generalization, especially under varying illumination, occlusions, and pose variations.

4.2. Robustness and Generalization Issues

Current AFER systems frequently demonstrate a lack of robustness in uncontrolled environments due to factors such as occlusions, pose variations, illumination changes, motion blur, and subtle/transient expressions [33,36,37]. Models trained in constrained, single-modality settings often fail to generalize well to “in-the-wild” datasets, leading to a significant domain gap [33,36]. The reliance on unimodal input (e.g., RGB images) is often insufficient for resilience to noise or missing data [37].

Multimodal approaches employing RGB, optical flow, depth, and landmarks [37] enhance robustness in uncontrolled settings, yet these methods remain computationally expensive and struggle to efficiently fuse diverse modalities in real time. Methods relying on optical flow can be sensitive to noise and occlusions [36], while 3D CNNs, despite capturing spatio-temporal properties, often lead to an increased number of training parameters [36].

4.3. Expression Modeling and Representation Challenges

A particularly pressing issue, highlighted in recent research, is the difficulty in recognizing compound expressions and modeling their inherent ambiguity in real-world conditions [38]. Most traditional models focus on basic emotions and struggle with nuanced combinations of expressions, failing to capture the overlapping nature and varying degrees of emotional states [38]. Hybrid architectures that combine CNNs and transformers [38] show promise in modeling the ambiguity and complexity of compound emotions, but face challenges in capturing fine-grained local textures while modeling global dependencies, requiring sophisticated multi-label learning schemes that complicate training and inference.

Challenges persist in efficiently handling multi-scale features and designing adaptive attention mechanisms [31,35,37]. There is a need for lightweight and effective feature fusion strategies that can capture both local texture and global dependencies without sacrificing efficiency [35,38]. Additionally, many CNN-based methods overly focus on central facial regions, neglecting subtle cues on the cheeks or forehead.

4.4. Data and Training Limitations

The underlying data limitations remain a persistent challenge, as FER datasets are often small, suffer from class imbalance, and are tailored for specific tasks, leading to overfitting in deep models [33,36]. Training difficulties include the need for extensive hyperparameter tuning and sensitivity to class imbalance, particularly in accurately recognizing compound or subtle expressions [36,38].

High dimensionality of feature vectors in handcrafted or hybrid methods increases memory footprint and inference latency, while temporal inconsistency and difficulties in preserving the authentic dynamics of facial sequences affect methods that rely on frame sampling or normalization [36].

4.5. Essential Requirements for Effective Dynamic FER Systems

Based on these observations and the latest research, we identify five essential requirements for an effective dynamic AFER system that aims to address these persistent and emerging challenges:

- Preservation of sequence integrity, without adding or removing frames, to maintain the authentic temporal dynamics, which is crucial given the complexity of emotional evolution [37].

- Construction of a compact spatio-temporal representation that efficiently captures both spatial geometry and temporal evolution, directly addressing the need for lighter models and efficient feature fusion [31,32].

- Computational efficiency suitable for real-time use, avoiding heavy models or large feature vectors, enabling deployment on low-resource devices without sacrificing accuracy [31,32,34,35].

- Achieving competitive accuracy comparable to state-of-the-art methods, while balancing it with efficiency and demonstrating robustness across multiple benchmark datasets [33].

- Demonstrating robustness and generalization across diverse datasets and challenging “in-the-wild” scenarios, including compound emotions, with interpretability and simplicity to facilitate deployment and maintenance [33,37,38].

Our proposed method effectively addresses these challenges by relying on a purely geometric, statistical modeling of landmark-based distances over time. Unlike deep or hybrid models, it eschews deep feature extraction, sequence normalization, and complex attention mechanisms, producing a compact and discriminative spatio-temporal representation. This approach preserves the authentic temporal dynamics of sequences, substantially reduces computational load, and is highly suitable for real-time applications. Importantly, it achieves competitive accuracy and robust performance across multiple benchmark datasets, making it a practical and efficient alternative to existing complex methods.

5. Proposed Approach

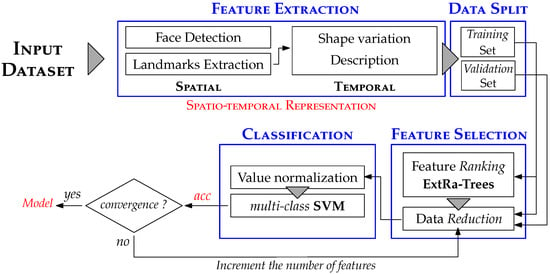

As illustrated in Figure 2, the proposed dynamic AFER approach follows the same fundamental building blocks as a typical pattern recognition system (refer to Section 2). In our case, the input consists of a facial expression dataset where the number of frames per image sequence may vary.

Figure 2. Overview of our dynamic AFER approach.

In the following sections, we detail each component of the proposed dynamic AFER approach, namely feature extraction, feature selection, and classification.

5.1. Feature Extraction

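As discussed in Section 2, three types of features are commonly distinguished in the field of AFER. In our approach, we focus specifically on geometric-based features. Because they are computed from landmark positions rather than pixel intensities, they are less sensitive to illumination changes than appearance-based descriptors, and the pairwise distances used below are invariant to in-plane translation and rotation of the face.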

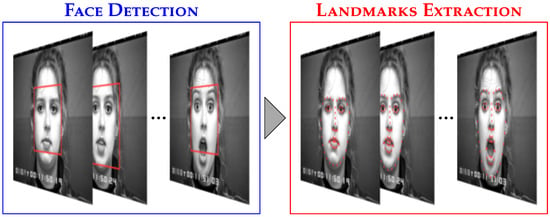

Before computing the feature vectors, we apply the Viola and Jones algorithm [39] to detect the face region (see Figure 3). As illustrated in Figure 2, our method requires a combination of two distinct representations: spatial and temporal.

Figure 3. Overview of the pre-processing steps.

The spatial representation involves extracting the facial shape. Several techniques, such as AAM and ASM, can be used for this purpose. However, we chose a more recent method introduced by Kazemi and Sullivan [40], which extracts sixty-eight facial fiducial points, as shown in Figure 3. This technique is based on the cascaded update in Equation (1):

$$\hat{S}^{(t+1)} = \hat{S}^{(t)} + r_t\left(I, \hat{S}^{(t)}\right) \qquad (1)$$

Here, the inputs are the current estimate $\hat{S}^{(t)}$ of the face shape and the face image $I$. The facial shape is defined as $S = \left(\mathbf{x}_1^{\top}, \mathbf{x}_2^{\top}, \ldots, \mathbf{x}_n^{\top}\right)^{\top} \in \mathbb{R}^{2n}$, where each element $\mathbf{x}_i = (x_i, y_i) \in \mathbb{R}^2$ holds the Cartesian coordinates of a specific facial fiducial point, and $n = 68$ is the number of fiducial points. Equation (1) iteratively refines the face shape using the regressor $r_t$, which takes $\hat{S}^{(t)}$ and $I$ as inputs. Each regressor is an ensemble of regression trees trained with gradient boosting, and the successive regressors form a cascade.
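The additive update of Equation (1) can be illustrated with a minimal NumPy sketch. It is not the trained model of [40]: the regressors below are hypothetical placeholders that move every landmark halfway toward a known target, whereas each $r_t$ in [40] is a gradient-boosted ensemble of regression trees that predicts the shape increment from image features. In practice, dlib's `shape_predictor` provides an implementation of the Kazemi and Sullivan method.

```python
import numpy as np


def cascade_align(image, initial_shape, regressors):
    """Apply S^(t+1) = S^(t) + r_t(I, S^(t)) once per regressor in the cascade.

    `regressors` is a list of callables r_t(image, shape) -> shape increment.
    """
    shape = np.asarray(initial_shape, dtype=float).copy()
    for r_t in regressors:
        shape = shape + r_t(image, shape)  # additive update of all landmarks at once
    return shape


def make_toy_regressor(target):
    """Hypothetical regressor: returns half the remaining offset to `target`.

    A trained regressor would predict this increment from the image instead.
    """
    def r_t(image, shape):
        return 0.5 * (target - shape)
    return r_t


rng = np.random.default_rng(0)
target = rng.uniform(0, 100, size=(68, 2))        # "true" 68 landmarks (x, y)
start = target + rng.normal(0, 5, size=(68, 2))   # perturbed initial shape estimate
image = np.zeros((128, 128))                      # placeholder image, unused by the toy regressor
regs = [make_toy_regressor(target) for _ in range(10)]
aligned = cascade_align(image, start, regs)
print("max residual error:", np.abs(aligned - target).max())
```

With these toy regressors the residual error shrinks by a factor of two at each stage, which shows why a cascade of modest corrections can converge to an accurate shape.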

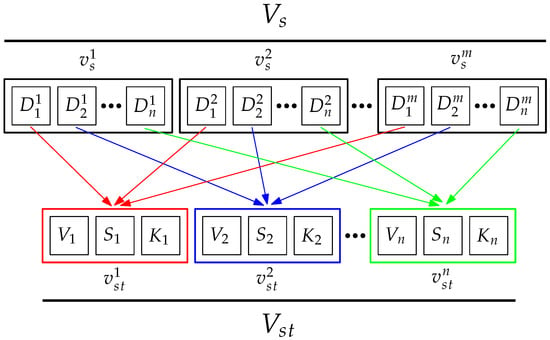

Following previous work [16], the spatial representation of the input is obtained by computing all possible Euclidean distances between pairs of facial fiducial points (see Equation (2)). For each frame of an image sequence, we have $n = 68$ facial fiducial points, resulting in $N = 2278$ possible distances, as shown in Equation (3):

$$d(\mathbf{x}_a, \mathbf{x}_b) = \sqrt{(x_a - x_b)^2 + (y_a - y_b)^2} \qquad (2)$$

$$N = \binom{n}{2} = \frac{n(n-1)}{2} = \frac{68 \times 67}{2} = 2278 \qquad (3)$$

where $\mathbf{x}_a = (x_a, y_a)$ and $\mathbf{x}_b = (x_b, y_b)$ are two distinct fiducial points.

As noted earlier, an image sequence consists of a variable number of frames, denoted as $m$. Thus, we extract $m$ feature vectors, each corresponding to a specific frame of the image sequence (see Figure 4). The spatial representation can be defined as $R_s = \{V_1, V_2, \ldots, V_m\}$, where $V_i$ is the descriptor vector of the $i$-th frame, with $1 \le i \le m$. Each vector $V_i = (d_{i,1}, d_{i,2}, \ldots, d_{i,N})$ contains all possible Euclidean distances, where $d_{i,j}$ is the $j$-th distance, with $1 \le j \le N$. This representation is inspired by FACS [4], but instead of using specific distances corresponding to Action Units (AUs), we compute all possible distances.
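A minimal NumPy sketch of the spatial representation, assuming each frame's landmarks arrive as an (n, 2) array of (x, y) coordinates. The function names are illustrative, and the pair ordering is an assumption: any fixed ordering works as long as index j refers to the same landmark pair in every frame and every sequence.

```python
import numpy as np


def frame_distances(landmarks):
    """Return all N = n(n-1)/2 pairwise Euclidean distances for one frame.

    landmarks: array-like of shape (n, 2) with (x, y) coordinates.
    Pairs (a, b) with a < b are enumerated in np.triu_indices order.
    """
    pts = np.asarray(landmarks, dtype=float)
    a, b = np.triu_indices(len(pts), k=1)
    return np.linalg.norm(pts[a] - pts[b], axis=1)


def spatial_representation(sequence):
    """Stack the per-frame vectors V_1..V_m into an (m, N) array."""
    return np.stack([frame_distances(frame) for frame in sequence])


# Hypothetical sequence: 12 frames of 68 landmarks each.
seq = np.random.default_rng(1).uniform(0, 200, size=(12, 68, 2))
print(spatial_representation(seq).shape)
```

For 68 landmarks each row holds 2278 distances. `scipy.spatial.distance.pdist` returns the same condensed vector in the same pair order if SciPy is already a dependency.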

Figure 4. Spatio-temporal representation of the input.

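While the spatial representation alone may suffice for recognition, as demonstrated in [16], it still faces challenges, particularly the variable number of frames in each image sequence. This requires sequence normalization before a supervised machine learning technique can be applied effectively, and even after normalization, the approach remains computationally expensive in terms of time and resources. To address this issue, we propose a new representation that captures the temporal variation of each Euclidean distance using statistical metrics. As shown in Figure 4, the spatio-temporal representation is defined as $R_t = \{T_1, T_2, \ldots, T_N\}$, where each vector $T_j = (\sigma_j^2, \gamma_j, \kappa_j)$ contains a triplet of statistical metrics describing the temporal variation of the $j$-th Euclidean distance, observed as $(d_{1,j}, d_{2,j}, \ldots, d_{m,j})$ over the $m$ frames of the sequence. With $\mu_j = \frac{1}{m}\sum_{i=1}^{m} d_{i,j}$ denoting the mean of the $j$-th distance, the statistical metrics are the variance (Equation (4)), skewness (Equation (5)), and kurtosis (Equation (6)):

$$\sigma_j^2 = \frac{1}{m}\sum_{i=1}^{m}\left(d_{i,j} - \mu_j\right)^2 \qquad (4)$$

$$\gamma_j = \frac{\frac{1}{m}\sum_{i=1}^{m}\left(d_{i,j} - \mu_j\right)^3}{\sigma_j^3} \qquad (5)$$

$$\kappa_j = \frac{\frac{1}{m}\sum_{i=1}^{m}\left(d_{i,j} - \mu_j\right)^4}{\sigma_j^4} \qquad (6)$$

Finally, the spatio-temporal representation of the input is defined by the feature vector $F = (T_1, T_2, \ldots, T_N)$. Since each of the $N = 2278$ distances contributes three metrics, this descriptor vector has $3 \times 2278 = 6834$ distinct attributes.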
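The three statistics can be sketched in NumPy as follows, taking the (m, N) array of per-frame distances as input. This sketch assumes population (biased) moments and Pearson (non-excess) kurtosis, and it sets skewness and kurtosis to zero for distances that do not change over the sequence; the text does not specify either choice.

```python
import numpy as np


def temporal_descriptor(distances, eps=1e-12):
    """Map an (m, N) array of per-frame distances to the 3N-length vector F.

    For each column j: population variance, skewness and Pearson kurtosis over
    the m frames. Columns with ~zero spread get skewness = kurtosis = 0
    (an assumption made here to avoid division by zero).
    """
    d = np.asarray(distances, dtype=float)
    centred = d - d.mean(axis=0)
    var = (centred ** 2).mean(axis=0)
    std = np.sqrt(var)
    safe = std > eps
    skew = np.zeros_like(var)
    kurt = np.zeros_like(var)
    skew[safe] = (centred[:, safe] ** 3).mean(axis=0) / std[safe] ** 3
    kurt[safe] = (centred[:, safe] ** 4).mean(axis=0) / std[safe] ** 4
    # Interleave as (T_1, ..., T_N) with T_j = (var_j, skew_j, kurt_j).
    return np.stack([var, skew, kurt], axis=1).reshape(-1)


# Hypothetical input: 9 frames x 2278 distances -> 6834 attributes.
F = temporal_descriptor(np.random.default_rng(2).uniform(size=(9, 2278)))
print(F.shape)
```

Because the output length is 3N whatever the value of m, sequences of different lengths map to vectors of the same size, which is what removes the need for sequence normalization. For non-constant columns, the values should match `scipy.stats.skew` and `scipy.stats.kurtosis(..., fisher=False)` with their default `bias=True`.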

5.2. Feature Selection

As defined above, the spatio-temporal representation contains $3 \times 2278 = 6834$ attributes. However, a high number of attributes is well known to cause several issues: overfitting, caused by redundant or noisy attributes; longer training time, due to the larger feature space; and reduced accuracy, since some attributes may be misleading or irrelevant when constructing the model. To address these challenges, we introduce a feature selection stage (see Figure 2) that reduces the size of the spatio-temporal representation.

Several dimensionality reduction and feature selection techniques have been proposed in the literature. Among them, Principal Component Analysis (PCA) [19] remains one of the most common. PCA is a statistical procedure that applies an orthogonal transformation to convert a set of potentially correlated attributes into a set of linearly uncorrelated variables known as principal components, ordered so that the leading components capture as much of the data variance as possible. Strictly speaking, PCA builds new variables rather than selecting existing attributes, and because it ignores class labels, the retained high-variance components are not guaranteed to be the most discriminative. Another approach ranks attributes by their importance: each attribute is assigned a score, and the higher the score, the more important the attribute. Based on these scores, a threshold determines the percentage of features to retain.

For our approach, we use the ExtRa-Trees technique, a variant of Random Forests [41]. As described in [42], the ExtRa-Trees algorithm builds an ensemble of unpruned decision or regression trees using the classical top-down procedure. It differs from other tree-based ensemble methods in two main ways: it splits nodes by choosing cut-points fully at random, and it grows each tree on the entire learning sample rather than on a bootstrap replica.
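To make the split rule concrete, the following single-node NumPy sketch follows the idea described in [42]: K candidate attributes are drawn at random among the non-constant ones, each receives one cut-point drawn uniformly between its minimum and maximum in the node, and the candidate with the lowest weighted impurity is kept. Gini is used as the impurity here to match the measure adopted in this work; the function names and the parameter `k` are illustrative.

```python
import numpy as np


def gini(labels):
    """Gini impurity of a label array (0 for an empty node)."""
    if labels.size == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def extra_trees_split(X, y, k, rng):
    """Choose one node split in the Extremely Randomized Trees style.

    Returns (feature_index, threshold), or None if every attribute is constant.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    lo, hi = X.min(axis=0), X.max(axis=0)
    candidates = np.flatnonzero(hi > lo)  # only non-constant attributes can split
    if candidates.size == 0:
        return None
    chosen = rng.choice(candidates, size=min(k, candidates.size), replace=False)
    best = None
    for f in chosen:
        cut = rng.uniform(lo[f], hi[f])  # random cut-point, no search over thresholds
        left = X[:, f] < cut
        n_left = left.sum()
        score = (n_left * gini(y[left]) + (len(y) - n_left) * gini(y[~left])) / len(y)
        if best is None or score < best[0]:
            best = (score, int(f), float(cut))
    return best[1], best[2]


X = np.array([[0.0, 5.0], [1.0, 5.0], [2.0, 5.0], [3.0, 5.0]])
y = np.array([0, 0, 1, 1])
print(extra_trees_split(X, y, k=2, rng=np.random.default_rng(0)))
```

scikit-learn's `ExtraTreesClassifier` implements the full ensemble, with `max_features` playing the role of K and `bootstrap=False` by default, so each tree sees the whole learning sample.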

In our case, we propose using the ExtRa-Trees technique along with the Gini index as the impurity measure. In a supervised manner, the feature vectors, along with their corresponding labels, are employed to build the ExtRa-Trees model. Subsequently, the feature importance is determined by generating score values for each attribute. The attributes are then ranked in descending order of importance. As illustrated in Figure 2, a threshold is set to select a specific percentage of the most important features. This threshold is incrementally adjusted until the model’s accuracy reaches convergence.
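The ranking-and-threshold procedure can be sketched with scikit-learn's `ExtraTreesClassifier`, whose `feature_importances_` attribute gives impurity-based (here Gini) importance scores. The step size, tolerance, number of trees, the cross-validated `LinearSVC` used to measure accuracy, and the synthetic data from `make_classification` are all assumptions for illustration; the text does not specify how accuracy is measured during the search or what counts as convergence.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.model_selection import cross_val_score
from sklearn.svm import LinearSVC


def rank_features(X, y, n_trees=250, seed=0):
    """Fit Gini-based ExtRa-Trees and return attribute indices, most important first."""
    forest = ExtraTreesClassifier(n_estimators=n_trees, criterion="gini", random_state=seed)
    forest.fit(X, y)
    # feature_importances_: normalized mean decrease in Gini impurity per attribute.
    return np.argsort(forest.feature_importances_)[::-1]


def select_by_threshold(X, y, ranking, step=0.05, tol=1e-3, cv=5):
    """Raise the retained percentage by `step` until CV accuracy stops improving.

    Returns (percentage, selected_indices, accuracy) for the last percentage
    that still improved accuracy by more than `tol`.
    """
    best_acc, best_pct, best_idx = -np.inf, None, None
    pct = step
    while pct <= 1.0 + 1e-9:
        n_keep = max(1, int(round(pct * X.shape[1])))
        idx = ranking[:n_keep]
        acc = cross_val_score(LinearSVC(dual=False), X[:, idx], y, cv=cv).mean()
        if acc <= best_acc + tol:
            break  # accuracy has converged: extra attributes no longer help
        best_acc, best_pct, best_idx = acc, pct, idx
        pct += step
    return best_pct, best_idx, best_acc


# Hypothetical stand-in for the real descriptors: 60 attributes, 3 classes.
X, y = make_classification(n_samples=200, n_features=60, n_informative=8,
                           n_classes=3, random_state=0)
ranking = rank_features(X, y)
pct, idx, acc = select_by_threshold(X, y, ranking)
print(f"kept {len(idx)} of {X.shape[1]} attributes ({pct:.0%}), CV accuracy {acc:.3f}")
```

In a real evaluation, the ranking should be computed on training folds only, so that the test data do not influence which attributes are kept.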

5.3. Classification

Classification is the final component of the dynamic AFER approach and identifies the facial expression of each sequence. It relies on a supervised machine learning technique, which requires a training phase with labeled samples. Since machine learning techniques are often sensitive to the value range of the feature vectors, we apply min-max normalization to improve accuracy. It linearly maps the data into a predefined range, as shown in Equation (7):

$$v' = \frac{v - \min_A}{\max_A - \min_A}\left(b - a\right) + a \qquad (7)$$

Here, $v$ is an element of the original feature vector, $[\min_A, \max_A]$ is the current value range of the corresponding attribute $A$, and $[a, b]$ is the new range of the normalized feature vector, where we set $a =$ and $b =$ . While various machine learning techniques can be explored, we focus on two of them, k-NN and SVM, and also test other methods for an objective comparison of recognition rates.
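Min-max normalization can be sketched as a small NumPy class. This sketch assumes that the per-attribute minimum and maximum are estimated on the training set only and reused unchanged on test data, and its default target range [0, 1] is a placeholder rather than the setting used in this work. scikit-learn's `MinMaxScaler(feature_range=(a, b))` provides the same transform.

```python
import numpy as np


class MinMaxNormalizer:
    """v' = (v - min_A) / (max_A - min_A) * (b - a) + a, applied per attribute."""

    def __init__(self, a=0.0, b=1.0):
        self.a, self.b = a, b  # hypothetical default target range [0, 1]

    def fit(self, X):
        X = np.asarray(X, dtype=float)
        self.min_ = X.min(axis=0)
        span = X.max(axis=0) - self.min_
        # Constant attributes would divide by zero; use a span of 1 for them.
        self.span_ = np.where(span > 0, span, 1.0)
        return self

    def transform(self, X):
        X = np.asarray(X, dtype=float)
        return (X - self.min_) / self.span_ * (self.b - self.a) + self.a


train = [[0, 10], [5, 20], [10, 30]]
norm = MinMaxNormalizer().fit(train)
print(norm.transform(train))
```

Fitting on the training data alone means that test values may fall slightly outside [a, b], which is usually preferable to leaking test statistics into the model.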

We evaluated several classifiers commonly used in the field, prioritizing those with optimized implementations suitable for deployment in resource-constrained environments. Based on preliminary experiments, we shortlisted four classifiers: SVM, k-NN, Decision Tree (DT), and Multi-Layer Perceptron (MLP). For each technique, hyperparameters were tuned to achieve the best possible performance within our experimental framework.

5.3.1. SVM Classifier

The Support Vector Machine (SVM) classifier is a supervised machine learning technique introduced by Cortes and Vapnik [43]. The algorithm constructs a hyperplane that optimally separates two distinct classes; SVM is therefore inherently a binary classifier. For a linearly separable dataset, many hyperplanes can separate the classes, but the best choice is the one that maximizes the margin to the nearest data points, known as the maximum-margin hyperplane. As defined in [44], a basic linear SVM classifier is obtained by solving Equation (8):

$$\min_{\mathbf{w},\, b} \ \frac{1}{2}\lVert \mathbf{w} \rVert^{2} \quad \text{subject to} \quad y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) \geq 1, \quad i = 1, \ldots, l \qquad (8)$$

where $\mathbf{w}$ and $b$ define the maximum-margin hyperplane $\mathbf{w}^{\top}\mathbf{x} + b = 0$, $\mathbf{x}_i$ are the $l$ training feature vectors, and $y_i \in \{-1, +1\}$ are their labels. The SVM algorithm can also handle nonlinearly separable datasets using the kernel trick, which implicitly maps the data into a transformed feature space in which the maximum-margin hyperplane is sought.

In our case, we employed a linear SVM classifier for its robustness and generalization capabilities. Since we need to distinguish between M facial expressions, the task is a multi-class problem, which can be handled by combining several binary SVM classifiers. Two strategies are commonly used: (1) One-Against-One, where an SVM classifier is trained for each possible pair of classes, giving $M(M-1)/2$ classifiers, and (2) One-Against-All, where one SVM classifier is trained per class to separate it from all the others, giving $M$ classifiers. For the proposed dynamic AFER approach, we chose the One-Against-All strategy because it requires training fewer classifiers. The cost parameter C, which controls the soft-margin trade-off between a wide margin and training errors, was tuned to obtain the best accuracy.
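The sketch below contrasts the number of binary SVMs each strategy needs and shows how a One-Against-All decision is made at prediction time: each class has its own linear SVM $(\mathbf{w}_m, b_m)$, and the class with the largest score $\mathbf{w}_m \cdot \mathbf{x} + b_m$ wins. The weights in the example are hypothetical placeholders; in practice they are learned, for instance with scikit-learn's `LinearSVC`, which uses a one-vs-rest scheme for multi-class problems by default.

```python
from typing import Dict, Sequence, Tuple


def n_binary_svms(n_classes: int, strategy: str) -> int:
    """Number of binary SVMs required for M classes under each strategy."""
    if strategy == "one-against-all":
        return n_classes
    if strategy == "one-against-one":
        return n_classes * (n_classes - 1) // 2
    raise ValueError(f"unknown strategy: {strategy}")


def ova_predict(
    x: Sequence[float], models: Dict[str, Tuple[Sequence[float], float]]
) -> str:
    """Return the class whose linear SVM (w, b) gives the largest score w.x + b."""

    def score(w: Sequence[float], b: float) -> float:
        return sum(wi * xi for wi, xi in zip(w, x)) + b

    return max(models, key=lambda label: score(*models[label]))


# Hypothetical per-class hyperplanes for a 2-D toy feature space.
toy_models = {"HA": ([1.0, 0.0], 0.0), "SU": ([0.0, 1.0], 0.0), "SA": ([-1.0, -1.0], 0.5)}
```

For six emotions, One-Against-All trains 6 classifiers versus 15 for One-Against-One; with contempt added (seven classes), the counts become 7 and 21.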

5.3.2. k-NN Classifier

The k-NN classifier is one of the simplest machine learning techniques and belongs to the category of instance-based methods [45]. The learning phase consists of storing the training samples, and an unlabeled instance is classified by finding its closest neighbors in the training set. Its main advantage over other methods lies in its simplicity and interpretability, as it does not produce a black-box model. Two parameters must be defined to construct a k-NN classifier: (1) $k$, the number of neighbors considered for classification, and (2) $d$, the distance metric used to compare the instance to classify with the training instances. The predicted label is the majority label among $\{y_{\pi(1)}, \dots, y_{\pi(k)}\}$, where $y$ denotes the labels, $\pi$ is the reordering of the training instances by increasing distance $d$ to the instance to classify, and $k$ is the number of nearest neighbors considered.

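In our case, we employed the k-NN classifier as presented in [16]. The number of neighbors $k$ was tuned empirically, and the chosen distance metric is the cosine distance (Equation (9)). In that work, several other distances, such as Euclidean and Manhattan, were compared, and the best performance was achieved with the cosine distance:

$$d_{\cos}(\mathbf{a}, \mathbf{b}) = 1 - \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert} \quad (9)$$

where $d_{\cos}$ is computed between two feature vectors $\mathbf{a}$ and $\mathbf{b}$.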
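The k-NN rule with the cosine distance of Equation (9) can be sketched as below. It is an illustrative implementation, not the code of [16]; the toy vectors and labels are hypothetical, and the sketch assumes non-zero feature vectors so that the cosine distance is defined. Ties in the vote are resolved in favor of the label that appears first among the nearest neighbors, which is one of several reasonable conventions.

```python
import math
from collections import Counter
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Equation (9): one minus the cosine similarity (assumes non-zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def knn_predict(
    query: Sequence[float],
    train_X: List[Sequence[float]],
    train_y: List[str],
    k: int,
) -> str:
    """Majority label among the k training instances closest to the query."""
    # Reorder training indices by increasing distance to the query (the permutation pi).
    order = sorted(range(len(train_X)), key=lambda i: cosine_distance(query, train_X[i]))
    # Counter keeps insertion order, so ties favor the label seen first (nearest).
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D toy training set.
toy_X = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.2, 1.0]]
toy_y = ["HA", "HA", "SU", "SU", "SU"]
```

Prediction requires a distance to every stored training vector, which is why k-NN has essentially no training cost but a prediction cost that grows with the size of the training set.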

5.3.3. Other Classifiers

To provide an objective comparison in terms of accuracy, we also tested two other widely used classification techniques. The first is a Decision Tree (DT) classifier in the spirit of C4.5 but using the Gini index as the impurity measure (the criterion popularized by CART), rather than the information gain ratio of the original C4.5 algorithm. The second is the Multi-Layer Perceptron (MLP), trained with the well-known backpropagation algorithm.
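Both the DT classifier and the ExtRa-Trees feature ranking rely on the Gini index. A short sketch of the impurity and of the impurity decrease produced by a binary split follows. In tree ensembles such as scikit-learn's `ExtraTreesClassifier`, `feature_importances_` aggregates sample-weighted versions of these decreases over all splits that use a feature (mean decrease in impurity). The label lists in the example are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def gini_impurity(labels: Sequence[Hashable]) -> float:
    """Gini index 1 - sum_c p_c^2 of a node's label distribution."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_gain(
    parent: Sequence[Hashable], left: Sequence[Hashable], right: Sequence[Hashable]
) -> float:
    """Impurity decrease of a binary split, weighting each child by its size."""
    n = len(parent)
    weighted = len(left) / n * gini_impurity(left) + len(right) / n * gini_impurity(right)
    return gini_impurity(parent) - weighted
```

A perfectly balanced two-class node has impurity 0.5, and a split that separates the two classes completely recovers all of it.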

6. Experimentation & Evaluation

This section presents the validation protocol and experimental results obtained for the proposed dynamic AFER approach. We first describe the benchmark datasets used in our evaluation. Next, we detail the adopted validation strategy, followed by a thorough analysis of classification performance based on various evaluation metrics.

6.1. Benchmark Datasets

To evaluate the effectiveness and generalizability of our method, we used three publicly available and widely recognized benchmark datasets. Each dataset consists of facial expression image sequences that capture the temporal evolution of expressions. Table 1 summarizes their characteristics.

Table 1.

Benchmark facial expression datasets.

The Extended Cohn-Kanade (CK+) dataset [10] contains 593 video sequences from 123 different subjects, aged between 18 and 50 years, with diverse gender identities and ethnic backgrounds. Each video sequence captures the transition from a neutral expression to a peak facial expression and is recorded at 30 frames per second. Among the 593 sequences, 327 are annotated with one of seven basic emotions: anger (AN), contempt (CO), disgust (DI), fear (FE), happiness (HA), sadness (SA), or surprise (SU). The demographic diversity and high-quality annotations make CK+ one of the most widely used and reliable datasets for benchmarking facial expression recognition systems under controlled conditions.

The MMI Facial Expression Database [12] includes over 2900 videos and 1500 high-resolution static images from 75 subjects, offering rich annotations of facial action units (AUs), both at the event level and, partially, at the frame level (neutral, onset, apex, offset). The dataset covers the six basic emotions and includes a small portion of audio-visual laughter data. It offers substantial variation in head pose and expression dynamics, making it suitable for evaluating robustness in dynamic conditions. The participant pool includes subjects from different genders and ethnic backgrounds, enhancing its relevance for cross-cultural studies in expression analysis.

The Multimedia Understanding Group (MUG) dataset [11] comprises 931 video sequences collected from 86 participants—51 men (with or without beards) and 35 women—all of Caucasian origin and aged between 20 and 35 years. The dataset is notable for its high-resolution sequences and the gradual, smooth transitions from neutral to expressive states, making it highly appropriate for studying temporal dynamics in facial expression recognition. Although ethnically homogeneous, the dataset provides useful intra-group variability in facial features, grooming, and expression intensity.

6.2. Validation Strategy

We adopted a ten-fold stratified cross-validation strategy to ensure reliable and unbiased evaluation. In this setup, each dataset is partitioned into ten folds, maintaining the class distribution across all subsets. The training and evaluation process is repeated over ten iterations, where in each iteration, nine folds are used to train the model and the remaining one for testing. All experiments were conducted on an HP ProBook equipped with an Intel Core i7 processor and 8 GB of RAM, providing a balanced environment for performance assessment without relying on high-performance computing resources.

The implementation was carried out using Python 3.12 and relied on several widely-used open-source libraries, including scikit-learn for machine learning, scikit-image for image processing, and dlib for facial landmark detection. This software stack ensured reproducibility, flexibility, and compatibility with the proposed dynamic AFER approach.

The final recognition performance is computed by averaging the accuracy obtained across all folds. This validation strategy minimizes the effects of random partitioning and ensures that every data point is used for both training and evaluation.
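The stratified ten-fold protocol can be sketched as follows: the samples of each class are shuffled and dealt round-robin into the folds, so that every fold keeps roughly the original class proportions, and each fold serves once as the test set. This is an illustrative sketch with a hypothetical seed and toy labels; scikit-learn's `StratifiedKFold(n_splits=10, shuffle=True, random_state=...)` provides an equivalent, well-tested splitter.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def stratified_folds(
    labels: Sequence[Hashable], n_folds: int = 10, seed: int = 0
) -> List[List[int]]:
    """Assign sample indices to folds so that each fold keeps the class proportions."""
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # hypothetical seed for reproducible shuffling
    folds: List[List[int]] = [[] for _ in range(n_folds)]
    offset = 0
    for label in sorted(by_class, key=str):
        indices = by_class[label]
        rng.shuffle(indices)
        for j, idx in enumerate(indices):
            folds[(offset + j) % n_folds].append(idx)  # deal round-robin
        offset += len(indices)  # next class continues where this one stopped
    return folds


def train_test_splits(folds: List[List[int]]) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists, using each fold once as the test set."""
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

The reported score then corresponds to the mean of the ten per-fold accuracies, one per `(train, test)` pair.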

Our experimental evaluation was conducted in the following stages:

- Classifier Evaluation: We compared several classification techniques under the same feature extraction pipeline. These included SVM, k-NN, DT, and MLP, allowing us to identify the most effective learning model for our dynamic AFER approach.

- Comparison with State-of-the-Art: We benchmarked the performance of our approach against existing methods in the literature using the same datasets and validation setup. This comparison aimed to demonstrate the competitiveness of our method in terms of recognition accuracy.

- Detailed Metric Analysis: In addition to accuracy, we also report precision, recall, and F1-score to provide a more comprehensive evaluation of classification performance, especially in the presence of class imbalance.
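The precision, recall, and F1-score mentioned above can all be derived from a confusion matrix. The sketch below assumes macro averaging (an unweighted mean over classes); this is an assumption, since the averaging scheme is not stated here. scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average="macro")` computes the same quantities from label vectors. The 2×2 matrix in the example is hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def per_class_metrics(cm: Sequence[Sequence[int]]) -> Tuple[List[float], List[float], List[float]]:
    """Per-class precision, recall, F1; rows are true classes, columns predictions."""
    n = len(cm)
    precision, recall, f1 = [], [], []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n))  # column sum
        actual = sum(cm[c])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return precision, recall, f1


def macro_summary(cm: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1-score."""
    precision, recall, f1 = per_class_metrics(cm)
    n = len(cm)
    total = sum(sum(row) for row in cm)
    return {
        "accuracy": sum(cm[i][i] for i in range(n)) / total,
        "precision": sum(precision) / n,
        "recall": sum(recall) / n,
        "f1": sum(f1) / n,
    }
```

When every class has the same number of samples, macro-averaged recall is exactly equal to accuracy, which is one reason the two can track each other closely.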

7. Results & Discussion

This section presents and discusses the experimental results, with particular attention to classification performance across datasets and classifiers and to the comparison with state-of-the-art methods.

7.1. Performance of Classifiers

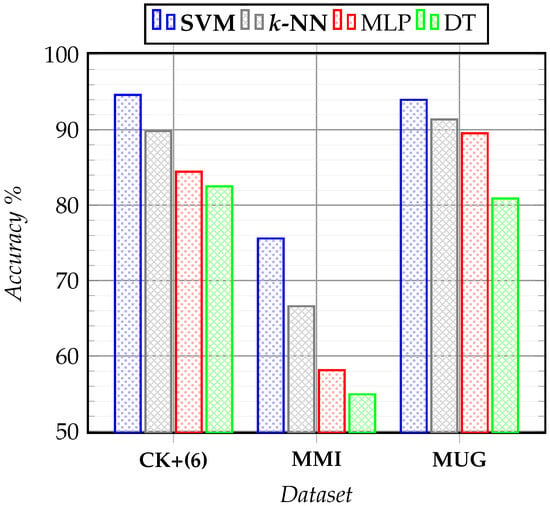

Figure 5 illustrates the classification accuracy of the proposed dynamic AFER approach using four machine learning classifiers: SVM, k-NN, MLP, and DT. The evaluation was carried out on three benchmark datasets: CK+, MMI, and MUG. As observed, multi-class SVM and k-NN consistently outperform MLP and DT across all datasets, making them the most suitable classifiers for our approach.

Figure 5.

Accuracy of the proposed dynamic AFER using different classifiers.

These results can be explained by the nature of the data and the characteristics of each classifier. The multi-class SVM achieves the highest performance on all datasets, likely owing to the good generalization of maximum-margin classifiers in high-dimensional feature spaces such as those formed by spatio-temporal facial features [46]. Since a linear kernel is used, this result also suggests that the selected features make the emotion classes close to linearly separable. Similarly, k-NN yields competitive results, most likely because its hyperparameters (the distance metric and the value of k) were tuned to suit the distribution of our geometric descriptors.

In contrast, the DT and MLP classifiers perform worse. This may be attributed to their difficulty in learning reliable decision boundaries from this kind of data without deep architectures or ensemble strategies. In addition, the relatively high dimensionality of the feature vectors may affect them adversely: for DT, large input vectors can lead to overfitting and poor generalization, while shallow MLPs may lack the depth and representational power needed to learn complex spatio-temporal patterns.

7.2. CK+ Dataset (Six Basic Emotions)

Table 2 presents the confusion matrix obtained on the CK+ dataset for six emotion classes. The results are very good overall. The highest recognition rates are obtained for SU, HA, and DI, which is expected given their distinctive facial muscle activations, which make their spatio-temporal patterns easier to discriminate.

Table 2.

Confusion matrix using CK+ (six classes) dataset.

In contrast, lower accuracy is observed for FE, AN, and SA. This drop can be attributed to confusion among these emotions, whose facial dynamics are more subtle and similar to one another. On CK+, this limits the model's ability to fully separate their spatio-temporal trajectories.

These findings confirm the model’s ability to effectively distinguish the most expressive emotions, while also highlighting the challenges associated with more ambiguous ones.

Table 3 presents a comparison of recognition rates for each individual emotion, evaluated against four recent works published between 2019 and 2023. The results are consistent with our findings: all four studies report lower recognition rates for FE, AN, and SA. This convergence reinforces the validity of our results and underlines a common challenge in the field—these particular emotions remain more difficult to classify accurately, indicating that further work is needed to improve performance in these categories.

Table 3.

Recognition rate comparison by emotion with state-of-the-art methods (CK+).

Table 4 compares our proposed method with nine recent state-of-the-art approaches, published between 2019 and 2023, all evaluated using the same six-class setup on CK+. The compared methods span both traditional techniques and deep learning-based models. Our approach, particularly when using the multi-class SVM classifier, achieves the highest recognition accuracy among all tested methods. Only two methods—those proposed by Shahid et al. [7] and Singh et al. [24]—come close in terms of performance; however, both rely on deep neural networks, which typically require substantial computational resources. In contrast, our method is lightweight and designed to operate efficiently in resource-constrained environments, making its competitive performance particularly noteworthy.

Table 4.

Comparing with state-of-the-art methods (CK+).

7.3. MMI Dataset

Table 5 presents the confusion matrix for the MMI dataset, which is inherently more challenging than CK+ due to greater variability in head pose and lighting conditions. Consequently, overall recognition performance is lower than on CK+. Most emotions are still recognized with good accuracy, and HA reaches a notably high rate. However, the FE class shows a marked performance decline, largely due to confusion with SU and SA. This difficulty is not unique to our method: many existing and recent approaches face similar limitations when evaluated on this dataset. Further improvements will be needed to better handle these challenging cases.

Table 5.

Confusion matrix using MMI dataset.

Table 6 compares recognition rates for each emotion against four recent studies (2020–2023). The results align well with ours, as all four studies report a noticeable drop in performance for FE; the methods of Pham et al. [20] and Samadiani et al. [30] report particularly low recognition rates for this emotion. Apart from FE, our method shows a more balanced distribution of recognition accuracy across the remaining five emotions.

Table 6.

Recognition rate comparison by emotion with state-of-the-art methods (MMI).

As shown in Table 7, we compared our method with eight existing approaches published between 2019 and 2024. With the multi-class SVM classifier, our method achieves the highest accuracy among the compared approaches, with one exception: the method of Yang et al. [23], which employs deep CNNs, outperforms ours by a small margin. These results suggest that our approach generalizes well across datasets with different acquisition conditions and subject variability. It is also worth noting that the performance gap between our multi-class SVM and k-NN classifiers is larger on this dataset than on CK+.

Table 7.

Comparing with state-of-the-art methods (MMI).

7.4. MUG Dataset

Table 8 presents the confusion matrix obtained on the MUG dataset. Overall, the results are excellent, and the model achieves near-perfect recognition for expressions such as DI and HA. The only notable weakness is FE, which is recognized with lower accuracy and is mainly confused with SU. Compared with the other datasets, MUG yields the highest overall performance. This can be attributed to its high-resolution sequences, its smooth and natural expression transitions, and its larger and more balanced number of samples across all emotion classes.

Table 8.

Confusion matrix using MUG dataset.

Table 9 compares emotion-wise recognition rates between our method and four recent studies (2019–2024). The results are strongly aligned with ours, particularly for FE, which remains challenging for all methods: the accuracies reported for FE, from Kartheek et al. [22] at the low end to Shahid et al. [7] at the high end, all remain close to our result. For the remaining emotions, performance is more balanced. Notably, our method achieves higher recognition rates for DI and SA than all four referenced approaches.

Table 9.

Recognition rate comparison by emotion with state-of-the-art methods (MUG).

As shown in Table 10, we compared our method with nine existing approaches published between 2019 and 2024. With the multi-class SVM classifier, our method achieves the highest accuracy. The only two approaches with comparable performance are those of Shahid et al. [7] and Kumar Tataji et al. [26], both of which rely on deep neural networks, whereas our method avoids deep learning to keep the architecture lightweight and resource-efficient. Interestingly, the performance gap between k-NN and the multi-class SVM is the smallest on this dataset, which highlights the effectiveness of k-NN in this case. This can be attributed to the balanced class distribution and the relatively large number of samples in the dataset, which favor instance-based learning.

Table 10.

Comparing with state-of-the-art methods (MUG).

7.5. CK+ Dataset (Seven Classes Including Contempt)

To further assess the scalability of our method, we extended the CK+ experiments by including a seventh emotion category, CO. As shown in Table 11, adding this class led to a slight drop in overall accuracy. This decrease is mainly due to increased confusion between SA and CO, which lowers the recognition rate for SA. CO itself is recognized reasonably well, indicating that although it is a challenging emotion to classify, the model is still able to distinguish it.

Table 11.

Confusion matrix using CK+ (seven classes) dataset.

7.6. Detailed Metric Analysis

Table 12 presents a comprehensive evaluation of the proposed approach using four performance metrics (F1-score, recall, precision, and accuracy) together with ∑ Attributes, the total number of attributes used for classification. Together, these measures describe both the predictive performance and the efficiency of the method.

Table 12.

Evaluation using various metrics.

From the table, it can be observed that the F1-score and recall are closely aligned with the overall accuracy, while precision is slightly higher, indicating relatively few false positives, which is a favorable outcome for our method. Across all three benchmark datasets, the difference between precision and accuracy remains small and consistent, which suggests that the model behaves stably from one dataset to another.

In terms of feature vector size, the goal was to substantially reduce the number of attributes while maintaining strong recognition performance. Starting from an initial feature vector of 6834 dimensions, feature selection reduced the dimensionality to 724 for the MUG dataset, 806 for MMI, and 1114 for CK+ (six-emotion configuration). For CK+ with seven emotions, the reduction was slightly smaller but still substantial relative to the original spatio-temporal representation. These results demonstrate that our feature selection strategy improves computational efficiency while preserving classification accuracy.
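The reported feature counts can be checked with simple arithmetic. Assuming 68 facial landmarks (the size of dlib's widely used 68-point shape predictor; the landmark count is inferred here rather than restated in this section), there are $\binom{68}{2} = 2278$ pairwise Euclidean distances, and three statistics per distance sequence give $3 \times 2278 = 6834$ attributes, matching the initial dimensionality. The sketch also converts the selected feature counts into percentage reductions.

```python
from math import comb

N_LANDMARKS = 68   # assumption: dlib's standard 68-point landmark model
N_STATISTICS = 3   # three statistical metrics per distance sequence

n_distances = comb(N_LANDMARKS, 2)        # all pairwise Euclidean distances
n_features = n_distances * N_STATISTICS   # size of the spatio-temporal vector

# Selected feature counts reported for each dataset configuration.
selected = {"MUG": 724, "MMI": 806, "CK+ (six classes)": 1114}

# Percentage of the original attributes removed by feature selection.
reduction = {name: round(100 * (1 - k / n_features), 2) for name, k in selected.items()}
```

This yields reductions of roughly 89.4% for MUG, 88.2% for MMI, and 83.7% for CK+ (six classes).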

Table 13 evaluates the computational efficiency of the proposed method in both the training and prediction phases. As expected, prediction time varies little across datasets, since each prediction processes a single image sequence. However, a clear difference emerges between the classifiers: the multi-class SVM consistently predicts faster than k-NN. This is because k-NN must compute the distance between the input and every instance of the training set, and then sort these distances, before making a decision.

Table 13.

Measure of the run-time during training and prediction.

In contrast, dataset size plays a more significant role during the training phase, as larger datasets naturally require more processing time. The multi-class SVM, being a model-based approach, needs an explicit training phase whose duration depends on the dataset. On the other hand, k-NN, as an instance-based learning method, does not involve explicit model training, so its training time is negligible. This highlights the trade-off between training and inference efficiency that comes with the choice of classifier.
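The run-time behavior discussed above follows from the per-sequence prediction cost of each classifier. As a rough sketch (ignoring landmark detection and feature extraction, which are shared by both pipelines), let $D$ be the number of selected features, $M$ the number of classes, $N$ the number of training sequences, and $d_{\cos}$ the cosine distance:

```latex
\begin{aligned}
\text{One-Against-All linear SVM:}\quad & M \text{ scores } \mathbf{w}_m \cdot \mathbf{x} + b_m,\ m = 1,\dots,M
  &&\Rightarrow\ \mathcal{O}(M D) \\
\text{k-NN:}\quad & N \text{ distances } d_{\cos}(\mathbf{x}, \mathbf{x}_i) \text{ followed by sorting}
  &&\Rightarrow\ \mathcal{O}(N D + N \log N)
\end{aligned}
```

Because $M$ (six or seven here) is far smaller than $N$, the linear SVM needs far fewer operations per prediction, whereas k-NN shifts essentially all of its cost from training to prediction. These are asymptotic estimates, not measurements.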

8. Conclusions & Future Work

In this paper, we introduced a novel dynamic approach to AFER. Unlike traditional static methods that analyze isolated images, dynamic methods capture the temporal evolution of facial expressions by leveraging the transitional phases—onset, apex, and offset. To this end, we proposed a method that constructs a compact spatio-temporal representation from image sequences, allowing for a richer and more informative characterization of facial dynamics.

We evaluated our approach on three widely adopted benchmark datasets: CK+, MMI, and MUG. The experimental analysis was carried out in several stages. First, we assessed different classifiers and observed that SVM and k-NN consistently yielded the best performance. We then compared our method against recent state-of-the-art techniques. The results show that our sequence-based dynamic approach, particularly when coupled with SVM, achieves accuracy that is higher than or comparable to that of existing methods.

Beyond accuracy, our method is computationally efficient. It operates with a substantially reduced feature set while maintaining high performance and competitive training and inference times, which makes it suitable for deployment in real-time or resource-constrained environments.

Despite these promising results, the proposed approach has certain limitations. Most notably, it is currently restricted to frontal-view image sequences captured in controlled environments. While this was appropriate given the objectives and scope of the present study, it does not reflect the variability of real-world (“in-the-wild”) conditions, such as diverse poses, occlusions, and lighting. Extending our approach to handle multi-view or pose-invariant scenarios is therefore a compelling direction for future research. Such an extension would improve the applicability of our method in more unconstrained and practical settings.

Additionally, the recognition rates for more subtle emotions like sadness and fear remain relatively low, which may stem from the limited expressiveness of their geometric signatures and the exclusive use of landmark-based features in our pipeline. Integrating appearance-based or deep-learned descriptors could potentially address this gap and improve robustness across all emotion categories.

Furthermore, the overall performance of our system is closely tied to the accuracy of facial landmark detection. Errors in fiducial point localization can significantly degrade the extracted features and, in turn, the final classification. Strengthening this preprocessing step is essential to enhance the reliability of the full recognition pipeline.

Finally, while our method shows strong comparative results, we acknowledge the absence of formal statistical significance testing in our evaluation. This limitation is partly due to the lack of access to individual run results or multiple evaluation splits for the compared methods, which prevents meaningful hypothesis testing. The performance differences, although often small, have therefore not been statistically validated. We plan to incorporate appropriate statistical tests (e.g., paired t-tests or Wilcoxon signed-rank tests) in future work, once such data become available, to better assess the robustness of our comparative results.
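As an illustration of the kind of test envisaged, the paired t statistic over per-fold accuracies can be computed as below. This sketch assumes that two methods were evaluated on exactly the same folds, which is precisely the data currently unavailable for the compared methods; the accuracy values are hypothetical. The statistic is compared against a t distribution with $n-1$ degrees of freedom; SciPy's `scipy.stats.ttest_rel` returns the statistic together with a p-value, and `scipy.stats.wilcoxon` provides the non-parametric signed-rank alternative.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def paired_t_statistic(acc_a: Sequence[float], acc_b: Sequence[float]) -> float:
    """t = mean(d) / (sd(d) / sqrt(n)) for per-fold accuracy differences d."""
    if len(acc_a) != len(acc_b) or len(acc_a) < 2:
        raise ValueError("need two equal-length samples with at least two folds")
    diffs = [a - b for a, b in zip(acc_a, acc_b)]
    # Raises ZeroDivisionError if all differences are identical (zero spread).
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
```

Because cross-validation folds share most of their training data, this standard test tends to be optimistic, and corrected variants are often preferred for fold-level comparisons.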

In summary, the proposed dynamic AFER framework provides a solid foundation for temporally aware and computationally efficient facial expression recognition. While it has proven effective in controlled scenarios, future work will aim to broaden its scope toward more realistic, “in-the-wild” applications, making it a more versatile tool for affective computing in everyday contexts.

Funding

This research received no external funding.

Data Availability Statement

Data supporting the reported results are based on publicly available reference datasets widely used in the field, which can be accessed upon request by signing a data usage agreement. These datasets are not owned by the author but were made available through formal access requests according to the specified instructions.

Acknowledgments

The authors would like to acknowledge the use of ChatGPT (OpenAI, GPT-4, accessed April 2025) for assistance in correcting grammar, spelling, and improving the overall clarity and formulation of the text during the preparation of this manuscript. The authors have reviewed and edited the generated content and take full responsibility for the final version of the publication. We would also like to express our gratitude to all individuals and organizations that provided the benchmark facial expression datasets—CK+ [10], MMI [12], and MUG [11]—which were instrumental in validating our approach.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AAL | Ambient Assisted Living |
| AFER | Automatic Facial Expression Recognition |
| FER | Facial Expression Recognition |
| k-NN | k-Nearest Neighbors |
| AU | Action Unit |
| CK+ | Extended Cohn-Kanade |
| MUG | Multimedia Understanding Group |
| SVM | Support Vector Machine |
| SIFT | Scale-Invariant Feature Transform |
| ICT | Information and Communication Technologies |
| HCI | Human–Computer Interaction |
| FACS | Facial Action Coding System |
| Extra-Trees | Extremely Randomized Trees |
| ASM | Active Shape Model |
| AAM | Active Appearance Model |
| DWT | Discrete Wavelet Transform |
| LBP | Local Binary Pattern |
| CNN | Convolutional Neural Network |
| DBN | Deep Belief Network |
| uGMM-MIK | universal Gaussian Mixture Model Mean Interval Kernel |
| DAGSVM | Directed Acyclic Graph SVM |
| LSTM | Long short-term memory |
| WGFD | Windmill Graph-based Feature Descriptors |
| CLBP | Completed Local Binary Patterns |
| CyFD | Cyclopentane Feature Descriptor |
| CC-CNN | Cross-Connected Convolutional Neural Network |
| CRIP | Cross-Centroid Ripple Pattern |
| DCFA-CNN | Deep Cross Feature Adaptive Convolutional Neural Network |
| FTL | Feature Transfer Learning |
| LBP-TOP | Local Binary Pattern on Three Orthogonal Planes |
| DGNN | Directed Graph Neural Network |
| GLU | Gated Linear Units |
| PCA | Principal Component Analysis |
| DT | Decision Tree |
| MLP | Multi-Layer Perceptron |
| TL | Transfer Learning |
| acc | accuracy |
| HA | Happiness |
| AN | Anger |
| DI | Disgust |
| SA | Sadness |
| FE | Fear |
| SU | Surprise |
| CO | Contempt |

References

- Mehrabian, A. Communication without words. Psychol. Today 1968, 2, 51–52. [Google Scholar]

- Yaddaden, Y.; Adda, M.; Bouzouane, A.; Gaboury, S.; Bouchard, B. User action and facial expression recognition for error detection system in an ambient assisted environment. Expert Syst. Appl. 2018, 112, 173–189. [Google Scholar] [CrossRef]

- Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Personal. Soc. Psychol. 1971, 17, 124–129. [Google Scholar] [CrossRef]

- Ekman, P.; Rosenberg, E.L. What the Face Reveals Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); Oxford University Press: Oxford, UK, 2005. [Google Scholar] [CrossRef]

- Perveen, N.; Roy, D.; Chalavadi, K.M. Facial expression recognition in videos using dynamic kernels. IEEE Trans. Image Process. 2020, 29, 8316–8325. [Google Scholar] [CrossRef] [PubMed]

- Lopez-Gil, J.M.; Garay-Vitoria, N. Photogram classification-based emotion recognition. IEEE Access 2021, 9, 136974–136984. [Google Scholar] [CrossRef]

- Shahid, A.R.; Khan, S.; Yan, H. Contour and region harmonic features for sub-local facial expression recognition. J. Vis. Commun. Image Represent. 2020, 73, 102949. [Google Scholar] [CrossRef]

- Ngoc, Q.T.; Lee, S.; Song, B.C. Facial landmark-based emotion recognition via directed graph neural network. Electronics 2020, 9, 764. [Google Scholar] [CrossRef]

- Aghamaleki, J.A.; Ashkani Chenarlogh, V. Multi-stream CNN for facial expression recognition in limited training data. Multimed. Tools Appl. 2019, 78, 22861–22882. [Google Scholar] [CrossRef]

- Kanade, T.; Cohn, J.F.; Tian, Y. Comprehensive database for facial expression analysis. In Proceedings of the 4th IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 46–53. [Google Scholar] [CrossRef]

- Aifanti, N.; Papachristou, C.; Delopoulos, A. The MUG facial expression database. In Proceedings of the 11th International Workshop on Image Analysis for Multimedia Interactive Services, Desenzano del Garda, Italy, 12–14 April 2010; pp. 1–4. [Google Scholar]

- Pantic, M.; Valstar, M.; Rademaker, R.; Maat, L. Web-based database for facial expression analysis. In Proceedings of the IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005. [Google Scholar] [CrossRef]

- Konar, A.; Halder, A.; Chakraborty, A. Introduction to Emotion Recognition. In Emotion Recognition; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2015; pp. 1–45. [Google Scholar] [CrossRef]

- Yaddaden, Y.; Adda, M.; Bouzouane, A.; Gaboury, S.; Bouchard, B. One-class and bi-class SVM classifier comparison for automatic facial expression recognition. In Proceedings of the 2018 International Conference on Applied Smart Systems (ICASS), Medea, Algeria, 24–25 November 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Zhang, S.; Pan, X.; Cui, Y.; Zhao, X.; Liu, L. Learning affective video features for facial expression recognition via hybrid deep learning. IEEE Access 2019, 7, 32297–32304. [Google Scholar] [CrossRef]

- Yaddaden, Y.; Adda, M.; Bouzouane, A.; Gaboury, S.; Bouchard, B. Facial Expression Recognition from Video using Geometric Features. In Proceedings of the 8th International Conference on Pattern Recognition Systems, Madrid, Spain, 11–13 July 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Sen, D.; Datta, S.; Balasubramanian, R. Facial emotion classification using concatenated geometric and textural features. Multimed. Tools Appl. 2019, 78, 10287–10323. [Google Scholar] [CrossRef]

- Yaddaden, Y.; Adda, M.; Bouzouane, A.; Gaboury, S.; Bouchard, B. Hybrid-based facial expression recognition approach for human-computer interaction. In Proceedings of the 2018 IEEE 20th International Workshop on Multimedia Signal Processing (MMSP), Vancouver, BC, Canada, 29–31 August 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Yaddaden, Y. An efficient facial expression recognition system with appearance-based fused descriptors. Intell. Syst. Appl. 2023, 17, 200166. [Google Scholar] [CrossRef]

- Pham, T.D.; Duong, M.T.; Ho, Q.T.; Lee, S.; Hong, M.C. CNN-based facial expression recognition with simultaneous consideration of inter-class and intra-class variations. Sensors 2023, 23, 9658. [Google Scholar] [CrossRef]

- Vaijayanthi, S.; Arunnehru, J. Dense SIFT-based facial expression recognition using machine learning techniques. In Proceedings of the 6th International Conference on Advance Computing and Intelligent Engineering: ICACIE 2021, Bhubaneswar, India, 23–24 December 2021; Springer: Singapore, 2022; pp. 301–310.

- Kartheek, M.N.; Prasad, M.V.; Bhukya, R. Windmill graph based feature descriptors for facial expression recognition. Optik 2022, 260, 169053.

- Yang, B.; Li, Z.; Cao, E. Facial expression recognition based on multi-dataset neural network. Radioengineering 2020, 29, 259–266.

- Singh, R.; Saurav, S.; Kumar, T.; Saini, R.; Vohra, A.; Singh, S. Facial expression recognition in videos using hybrid CNN & ConvLSTM. Int. J. Inf. Technol. 2023, 15, 1819–1830.

- Mukhopadhyay, M.; Dey, A.; Kahali, S. A deep-learning-based facial expression recognition method using textural features. Neural Comput. Appl. 2023, 35, 6499–6514.

- Kumar Tataji, K.N.; Kartheek, M.N.; Prasad, M.V. CC-CNN: A cross connected convolutional neural network using feature level fusion for facial expression recognition. Multimed. Tools Appl. 2024, 83, 27619–27645.

- Verma, M.; Vipparthi, S.K. Cross-centroid ripple pattern for facial expression recognition. Multimed. Tools Appl. 2025, 84, 11707–11727.

- Reddy, A.H.; Kolli, K.; Kiran, Y.L. Deep cross feature adaptive network for facial emotion classification. Signal Image Video Process. 2022, 16, 369–376.

- Li, J.; Huang, S.; Zhang, X.; Fu, X.; Chang, C.C.; Tang, Z.; Luo, Z. Facial expression recognition by transfer learning for small datasets. In Security with Intelligent Computing and Big-data Services, Proceedings of the Second International Conference on Security with Intelligent Computing and Big Data Services (SICBS-2018), Guilin, China, 14–16 December 2018; Springer: Cham, Switzerland, 2020; pp. 756–770.

- Samadiani, N.; Huang, G.; Luo, W.; Chi, C.H.; Shu, Y.; Wang, R.; Kocaturk, T. A multiple feature fusion framework for video emotion recognition in the wild. Concurr. Comput. Pract. Exp. 2022, 34, e5764.

- Mao, J.; Xu, R.; Yin, X.; Chang, Y.; Nie, B.; Huang, A.; Wang, Y. Poster++: A simpler and stronger facial expression recognition network. Pattern Recognit. 2025, 157, 110951.

- Liao, L.; Wu, S.; Song, C.; Fu, J. RS-Xception: A lightweight network for facial expression recognition. Electronics 2024, 13, 3217.

- Grover, R.; Bansal, S. Enhancing facial expression recognition in uncontrolled environment: A lightweight CNN approach with pre-processing. Neural Comput. Appl. 2025, 37, 7363–7378.

- Zhao, Z.; Li, Y.; Yang, J.; Ma, Y. A lightweight facial expression recognition model for automated engagement detection. Signal Image Video Process. 2024, 18, 3553–3563.

- Wang, K.; Yu, W.; Yamauchi, T. MVT-CEAM: A lightweight MobileViT with channel expansion and attention mechanism for facial expression recognition. Signal Image Video Process. 2024, 18, 6853–6865.

- Kopalidis, T.; Solachidis, V.; Vretos, N.; Daras, P. Advances in facial expression recognition: A survey of methods, benchmarks, models, and datasets. Information 2024, 15, 135.

- Tagmatova, Z.; Umirzakova, S.; Kutlimuratov, A.; Abdusalomov, A.; Im Cho, Y. A Hyper-Attentive Multimodal Transformer for Real-Time and Robust Facial Expression Recognition. Appl. Sci. 2025, 15, 7100.

- Khelifa, A.; Ghazouani, H.; Barhoumi, W. Rank-aware LDL hybrid MetaFormer for Compound Facial Expression Recognition in-the-wild. Inf. Fusion 2025, 126, 103525.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; Volume 1, pp. I-511–I-518.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014.

- Aha, D.W.; Kibler, D.; Albert, M.K. Instance-based learning algorithms. Mach. Learn. 1991, 6, 37–66.

- Lamouchi, D.; Yaddaden, Y.; Parent, J.; Cherif, R. Efficient Driver Drowsiness Detection Using Spatiotemporal Features with Support Vector Machine. Int. J. Intell. Transp. Syst. Res. 2025, 23, 720–732.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).