Abstract

Low-resource languages remain underserved by contemporary large language models (LLMs) because they lack sizable corpora, bespoke preprocessing tools, and the computing budgets assumed by mainstream alignment pipelines. Focusing on Kazakh, we present a 1.94B-parameter LLaMA-based model that demonstrates how strong, culturally aligned performance can be achieved without massive infrastructure. The contribution is threefold. (i) Data and tokenization: we compile a rigorously cleaned, mixed-domain Kazakh corpus and design a tokenizer that respects the language’s agglutinative morphology, mixed-script usage, and diacritics. (ii) Training recipe: the model is built in two stages, causal language modeling from scratch followed by instruction tuning. Alignment is further refined with Direct Preference Optimization (DPO), extended with contrastive and entropy-based regularization to stabilize training under sparse, noisy preference signals. Two complementary resources support this step: ChatTune-DPO, a crowd-sourced set of human preference pairs, and Pseudo-DPO, an automatically generated alternative that repurposes instruction data to reduce annotation cost. (iii) Evaluation and impact: qualitative and task-specific assessments show that targeted monolingual training and the proposed DPO variant markedly improve factuality, coherence, and cultural fidelity over instruction-only baselines and multilingual counterparts. The model and datasets are released under open licenses, offering a reproducible blueprint for extending state-of-the-art language modeling to other under-represented languages and domains.

1. Introduction

Language models now underpin a wide spectrum of natural language processing (NLP) tasks—from translation and summarization to conversational agents. Yet low-resource languages such as Kazakh remain underserved: high-quality corpora are scarce, computational budgets are modest, and most existing benchmarks ignore their unique morphology and mixed-script usage. The present work responds to that gap.

We adopt the LLaMA architecture because of its favorable parameter efficiency and proven adaptability across domains. Our goals are twofold:

- Create a reliable Kazakh LLM. We train a 1.94B-parameter model from scratch, with a tokenizer customized for Kazakh’s agglutinative structure.

- Advance data and methodology for low-resource settings.

Objective of the study: The primary objective of this research is to develop an advanced Kazakh language model that effectively understands and generates Kazakh text, while addressing key challenges associated with limited data availability and computational resources. In pursuit of this overarching goal, the study focuses on three interrelated objectives:

1. Developing a Kazakh Language Model:

   - Data Collection and Preparation: Gather diverse, high-quality Kazakh textual data from reliable sources, ensuring coverage of various topics and writing styles to capture linguistic richness. This includes identifying and filtering out non-Kazakh content as noise.

   - Model Architecture and Training: We adopt the LLaMA (Large Language Model Meta AI) architecture because of its efficiency in autoregressive language modeling, specifically its ability to achieve strong performance with relatively few parameters and low memory requirements per token. These characteristics align well with our computational constraints. We train the model from scratch, adapting key architectural parameters (such as hidden size, number of layers, and attention heads) to better accommodate the morphological richness and syntactic flexibility of the Kazakh language.

   - Performance Evaluation: Establish rigorous evaluation metrics (e.g., perplexity, fluency, linguistic accuracy) to systematically assess the model’s performance and guide iterative improvements.

2. Efficient Language Modeling:

   - Instruction-Based Tuning: Curate and construct a high-quality instructional dataset in Kazakh that represents a wide spectrum of tasks, ranging from general inquiries to specialized domain-specific queries.

   - Task-Specific Optimization: Apply state-of-the-art tuning methods, including DPO, to refine the model’s ability to follow task instructions, improve factual correctness, and reduce undesired outputs (e.g., hallucinations).

   - Application and Utility: Demonstrate the model’s value in educational, professional, and digital contexts by testing its performance on real-world tasks, such as question answering, summarization, and interactive dialogue.

3. Advancing Low-Resource Language Modeling: While multilingual models offer partial solutions, their performance on low-resource languages remains suboptimal due to data scarcity, linguistic divergence, and insufficient specialization. To bridge this gap, we propose a holistic framework for building performant, culturally aligned models for low-resource settings, using as a case study Kazakh, a Turkic language spoken by over 13 million people but critically under-represented in NLP. Our work addresses three systemic challenges: (i) the absence of curated, large-scale corpora and linguistically tailored preprocessing tools, (ii) the inefficiency of scaling multilingual models without targeted monolingual optimization, and (iii) the computational and methodological barriers to reproducing alignment pipelines in resource-constrained environments. We introduce a suite of modular resources and a reproducible training methodology designed to empower researchers and practitioners, prioritizing transparency, computational efficiency, and linguistic specificity. By decoupling model performance from reliance on high-resource data and proprietary infrastructure, this framework advances the broader NLP community’s capacity to develop inclusive, equitable language technologies.

Kozov et al. (2024) highlight the societal integration of LLMs and the need for user preparedness, underscoring the advantage of accessible AI in education and industry [1]. Similarly, Wang and Li (2025) emphasize LLMs’ dual role in misinformation: while capable of generating misleading content, they also offer tools for detection and mitigation [2]. Our Kazakh LLM addresses these concerns by prioritizing transparency, robustness, and ethical standards to ensure trustworthy AI interactions. Huang et al. (2024) outline the shift from traditional LLMs to multimodal models (LMMs), stressing computational efficiency, architectural innovation, and data diversity over parameter scaling [3]. Drawing from this, our model employs architectural optimizations and culturally authentic datasets tailored to Kazakh’s linguistic features, operating within practical computational budgets.

This study releases original human-annotated and synthetic Kazakh datasets (e.g., ChatTune-DPO and Pseudo-DPO), designed to reduce annotation costs while ensuring cultural and linguistic accuracy. These resources address gaps in high-quality, ethically aligned data for under-represented languages, incorporating bias mitigation, factual consistency, and academic integrity standards.

Kuznetsova et al. (2024) frame language as a pillar of national identity and call for cultural values to be embedded in digital tools [4]. Responding to that mandate, we established LibOCR-KazNU, an ongoing effort that digitizes Al-Farabi Kazakh National University’s heritage collections via OCR and continuously enriches the corpus with newly scanned, high-quality Kazakh texts. This living archive feeds our model’s training data, ensuring that generated content reflects authentic linguistic usage and culturally salient knowledge.

By pairing that expanding resource with our preference-aligned LLM, we preserve cultural integrity while advancing state-of-the-art Kazakh text generation, countering the marginalization of low-resource languages in AI. The public release of both the model and corpus promotes equitable AI development and empowers educational, professional, and societal applications. Merging methodological innovation with ethical and cultural stewardship, the work offers scalable alignment strategies, lowers the dependence on costly human annotation, and fosters responsible deployment so that LLMs operate as tools of empowerment rather than misinformation [5].

1.1. Literature Review

Scholarly interest in developing large language models (LLMs) for low-resource languages like Kazakh has intensified, driven by the need for robust models in linguistically under-represented regions. This review evaluates existing approaches, identifies challenges, and highlights the contributions of our proposed LLaMA-based Kazakh model.

Pelofske et al. (2024) assessed GPT models across 50 languages, including Kazakh, using TED Talk translations. While top performers like ReMM-v2-L2-13B and Llama2-chat-AYT-13B excelled in high-resource languages, Kazakh accuracy lagged due to inadequate training data, emphasizing the need for targeted fine-tuning [6]. Complementing this observation, Kamshat et al. (2024) show how a pipeline that first digitizes Kazakh text with 85% OCR accuracy and then channels the cleaned output into NLP and speech-recognition modules can raise machine translation quality; their prototype universal translator improves by 44% over the previous state of the art, yet they note that their evaluation metrics remain ill-suited to low-resource contexts [7]. Li et al. (2023) reinforce these concerns with Language Ranker, an intrinsic metric revealing that LLMs trained on data-rich languages systematically lag on Kazakh, whose low similarity scores mirror its meager pre-training footprint [8]. Despite aims to bridge resource gaps, multilingual models (mBERT, XLM-R) prioritize high-resource languages, lacking Kazakh’s morphological and syntactic specificity. Crosslingual transfer (XLT) via Turkish data in the Kardeş-NLU benchmark yielded task-specific improvements for Turkic languages, but proved to be insufficient for comprehensive Kazakh modeling [9].

Monolingual efforts, though nascent, reveal limitations: GPT-4 outperformed other models on Kazakh tasks, yet its Kazakh results still trailed its performance on high-resource-language benchmarks. Studies on Turkish morphology, whose complexity is comparable to that of Kazakh, suggest that specialized approaches (e.g., VQ-VAE architectures) could enhance Kazakh NLP, advocating for dedicated monolingual models [10].

Recent findings indicate that data augmentation driven by large language models (LLMs) is becoming a viable and cost-effective alternative to traditional human annotation in low-resource settings. Ding et al. [11] provide a comprehensive review, demonstrating how synthetic data generated by foundation models can effectively expand under-resourced corpora, meet scaling law requirements, and, when appropriately filtered, even outperform manually annotated datasets in downstream tasks. This evidence supports our central claim: the deliberate generation of high-quality synthetic preference pairs presents a scalable and efficient solution for robust alignment, particularly in languages such as Kazakh, where authentic data remain scarce and annotation resources are limited.

1.2. Limitations of Existing Approaches

Despite advancements in multilingual language modeling, several limitations persist when it comes to effectively modeling Kazakh and other low-resource languages:

- Data Imbalance and Morphological Complexity. Current LLMs (e.g., GPT, transformer-based models) are trained on datasets dominated by high-resource languages (e.g., English, Chinese), limiting their ability to capture Kazakh’s morphological, syntactic, and semantic intricacies. Addressing this requires curated Kazakh corpora and specialized training approaches sensitive to morphological features.

- Limited Crosslingual Generalization. Multilingual models struggle with linguistically distant languages. Pelofske et al. (2024) showed significant translation performance drops for Kazakh without explicit fine-tuning, highlighting the need for language-specific adaptation over generic multilingual approaches [6].

- Inadequate Evaluation Metrics. Conventional metrics (BLEU, METEOR) fail to assess Kazakh’s linguistic subtleties, necessitating linguistically informed evaluation frameworks.

- Insufficient Targeted Fine-Tuning. While Kamshat et al. (2024) demonstrated the value of Kazakh-specific fine-tuning, NLP research lacks models optimized for its unique linguistic and digital context. Enhanced, tailored fine-tuning methods remain underexplored [7].

- Safety–Performance Trade-offs. Adversarial training reduces vulnerabilities but can degrade performance. For example, an adversarially fine-tuned model obtained lower MT-Bench scores than smaller models such as LLaMA-2-7b-chat [12], underscoring the challenge of balancing safety and efficacy [6].

- Challenges in Preference-Based Optimization. Reinforcement learning (RL) methods (e.g., PPO) demand significant computational resources and stable preference data. Direct Preference Optimization (DPO) simplifies alignment by casting it as maximum-likelihood classification over preference pairs, offering greater stability and lower computational cost [13]. However, DPO remains sensitive to distribution shifts and to biases in the preference data. Emerging variants (CPO, IPO, Online DPO) aim to address these issues but require further refinement.

- Persistent Systemic Issues. Models face data quality, bias, and adaptability limitations. Wang and Li (2025) emphasize that these shortcomings heighten misinformation risks in low-resource languages like Kazakh, where fact-checking infrastructure is weak, necessitating transparent, linguistically informed models [2].

Advancing NLP for Kazakh requires targeted monolingual modeling, innovative preference optimization, and rigorous dataset curation to address linguistic inequities and enable practical applications.

1.3. Contributions of LLaMA-Based Kazakh Model

The development of this LLaMA 1.94B Kazakh model presents a novel approach to addressing the limitations identified in the literature. Unlike existing models, which are often multilingual and generalized, our model is monolingual and specifically tailored to the Kazakh language. This focus allows us to more effectively capture the intricate linguistic features unique to Kazakh. By training the model from scratch on a curated Kazakh dataset, we aim to enhance its ability to perform well on a variety of NLP tasks, including generative tasks that have been challenging for previous models.

Furthermore, our research incorporates fine-tuning strategies that address specific tasks relevant to Kazakh, such as question answering and instructional prompts. This approach not only improves the model’s utility in practical applications but also lays the foundation for future expansion into a multilingual model that retains a strong understanding of Kazakh. By demonstrating the feasibility of developing a high-performing Kazakh LLM using relatively modest computational resources, our work provides a blueprint for further advancements in low-resource language modeling.

The reviewed literature highlights the ongoing challenges and gaps in developing effective LLMs for Kazakh and other low-resource languages. While current efforts have made significant strides, they are often constrained by data limitations, inadequate fine-tuning, and a lack of comprehensive evaluation metrics. Our LLaMA 1.94B Kazakh model offers a targeted solution that directly addresses these challenges, paving the way for more sophisticated language models that can better serve low-resource language communities. Future research will focus on expanding the model’s capabilities to support multilingual tasks while maintaining its core strengths in Kazakh, thereby contributing to the broader field of NLP for low-resource languages.

2. Materials and Methods: Building Small-Scale Kazakh LLaMA

2.1. Model Development

To capture the linguistic nuances of Kazakh, we built a monolingual 1.94B-parameter LLaMA variant. Development followed three pillars: (i) data collection and preprocessing, (ii) model architecture, and (iii) training setup.

2.1.1. Data Collection and Preprocessing

The initial step involved the careful collection and preprocessing of diverse datasets to create a comprehensive training corpus [14]. The datasets used encompassed a wide range of text sources:

- Kazakh news articles, to capture contemporary language usage and terminology.

- Kazakh literature, including classic and modern works to ensure the model understood literary forms and stylistic diversity.

- Academic dissertations and monographs, to introduce formal language structures and specialized terminology.

- Educational textbooks and Wikipedia dumps: The Kazakh Wikipedia dump (as of August 2024) was included to cover a broad range of topics and enhance the model’s general knowledge base [15].

- Mixed Kazakh–Russian texts, incorporated to reflect real-world language use in Kazakhstan, where Kazakh and Russian are often used side by side.

Data preprocessing removed noise, normalized scripts, and balanced domain proportions. Special care ensured the faithful handling of Kazakh-specific characters.
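The preprocessing steps are described only at a high level. A minimal sketch of what script normalization and Kazakh-content filtering could look like is given below. The homoglyph table, the 0.8 Cyrillic threshold, and the rule requiring at least one Kazakh-specific letter are illustrative assumptions, not the filters actually used for the corpus.

```python
import re
import unicodedata

# Letters of the Kazakh Cyrillic alphabet that Russian does not use:
# ә ғ қ ң ө ұ ү һ і (lower case), plus their upper-case forms.
_KAZ_LOWER = "\u04d9\u0493\u049b\u04a3\u04e9\u04b1\u04af\u04bb\u0456"
KAZAKH_SPECIFIC = set(_KAZ_LOWER + _KAZ_LOWER.upper())

# Hypothetical homoglyph repair: the Latin schwa (U+0259/U+018F) is mapped
# to the Cyrillic schwa (U+04D9/U+04D8) when it appears in scraped text.
HOMOGLYPHS = str.maketrans({"\u0259": "\u04d9", "\u018f": "\u04d8"})


def normalize(text: str) -> str:
    """NFC-compose diacritics, repair homoglyphs and collapse whitespace."""
    text = unicodedata.normalize("NFC", text)
    text = text.translate(HOMOGLYPHS)
    return re.sub(r"\s+", " ", text).strip()


def cyrillic_share(text: str) -> float:
    """Fraction of alphabetic characters whose Unicode name is Cyrillic."""
    letters = [c for c in text if c.isalpha()]
    if not letters:
        return 0.0
    cyrillic = sum("CYRILLIC" in unicodedata.name(c, "") for c in letters)
    return cyrillic / len(letters)


def looks_kazakh(text: str, min_cyrillic: float = 0.8) -> bool:
    """Heuristic: mostly Cyrillic and containing at least one Kazakh-specific letter."""
    return cyrillic_share(text) >= min_cyrillic and any(
        c in KAZAKH_SPECIFIC for c in text
    )
```

Applied line by line, `normalize` followed by `looks_kazakh` keeps mixed Kazakh–Russian passages that contain Kazakh letters while dropping purely Russian or Latin-script lines; a softer rule would be needed if such lines are meant to stay in the corpus.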

As part of this research, we launched LibOCR-KazNU, an initiative to digitize and preserve old research books using OCR technology. Our developers continuously expand this corpus with high-quality Kazakh-language texts. Al-Farabi Kazakh National University’s library, a rich source of scholarly knowledge, plays a key role, contributing essential linguistic and cultural insights. Integrated into our model training, LibOCR-KazNU enhanced the understanding of Kazakh’s academic and historical contexts. To support NLP research, we publicly released this dataset, reinforcing our commitment to preserving and promoting Kazakh linguistic heritage [16].

Dataset statistics:

- Training: 1.37 B tokens, 127,701 unique tokens.

- Validation: 1.35 M tokens, 85,815 unique tokens.

Both sets displayed the expected Zipfian “long tail”: a handful of very common tokens provide syntactic scaffolding, while the great majority of token types (127,701 in the training set) are rare and inject lexical breadth. This balance equipped the model to handle both routine patterns and specialized or edge-case language, fostering robust generalization without domain bias.

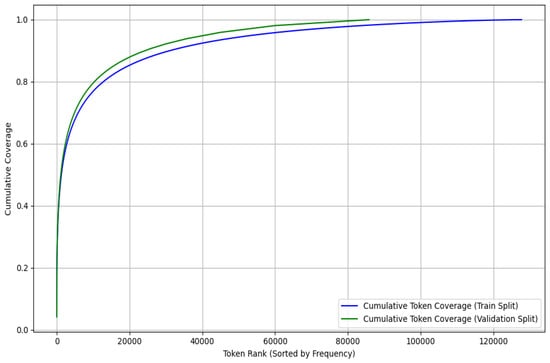

Moreover, the cumulative token coverage analysis (see Figure 1) showed how the token distribution is structured: although a significant portion of the dataset comprised frequent tokens, the long tail of rarer tokens enabled the model to expand its vocabulary beyond the basics. This ensured that, while the model excelled at common sentence structures, it could also capture rarer linguistic phenomena, contributing to its ability to produce both fluent and contextually appropriate outputs.

Figure 1.

Cumulative token coverage.
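Token counts and a cumulative coverage curve like the one in Figure 1 can be computed from any tokenized corpus along the following lines. This is a minimal sketch: the whitespace-split toy corpus stands in for the actual tokenizer output, and the function names are illustrative.

```python
from collections import Counter


def corpus_stats(tokens):
    """Return (total token count, number of unique token types)."""
    counts = Counter(tokens)
    return sum(counts.values()), len(counts)


def cumulative_coverage(tokens):
    """Entry k-1 is the share of all occurrences covered by the k most frequent types."""
    counts = Counter(tokens)
    total = sum(counts.values())
    coverage, running = [], 0
    for _, count in counts.most_common():  # most frequent types first
        running += count
        coverage.append(running / total)
    return coverage


def types_needed(tokens, target):
    """Smallest number of top-ranked types whose occurrences reach `target` coverage."""
    for k, share in enumerate(cumulative_coverage(tokens), start=1):
        if share >= target:
            return k
    return len(set(tokens))


toy = "a a a a b b c d".split()
corpus_stats(toy)         # (8, 4)
cumulative_coverage(toy)  # [0.5, 0.75, 0.875, 1.0]
types_needed(toy, 0.75)   # 2
```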

In summary, the dataset provided a comprehensive and well-balanced training environment for the causal language model (CLM). By exposing the model to both frequently used words and rare tokens, we enhanced its capacity for generalization, flexibility, and overall performance. This strategic token distribution equipped the model with the linguistic depth necessary for handling both common tasks and more specialized or complex use cases, ensuring that it could meet a wide range of user needs and scenarios.

2.1.2. Dataset for Supervised Fine-Tuning (SFT)

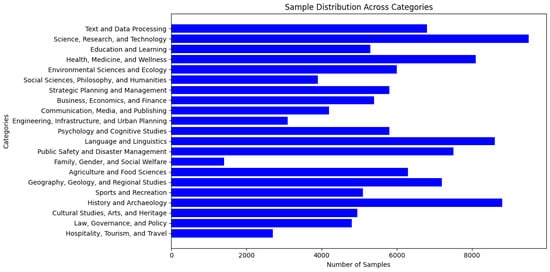

For the fine-tuning phase, we curated a dataset designed to enhance the model’s capabilities across a broad spectrum of topics, representing a rich and diverse corpus of academic and professional fields. The dataset comprised approximately 48,000 samples, each covering various areas of knowledge [17]. Although the dataset included metadata on the distribution of domains, this information was not directly leveraged during the current fine-tuning process. Instead, the dataset was treated as a heterogeneous collection of textual data, enabling the model to generalize across diverse subject areas without explicit domain-based supervision (see Figure 2). However, the domain metadata provided a valuable foundation for future enhancements, where domain-specific system prompts or targeted fine-tuning could be introduced to specialize the model for particular fields, even when operating under limited data availability.

Figure 2.

Distribution of domains across dataset.

Each sample may span multiple subjects, reflecting the complex and interconnected nature of modern research and professional fields. Domain categorizations were used only for internal evaluation and to characterize the dataset’s breadth, but they may be employed in future iterations as system-level instructions once a more refined dataset and system are available.

The primary goal during the fine-tuning stage was to enhance the language model’s ability to generate coherent, contextually relevant responses across a wide array of topics without biasing it toward any particular field. By exposing the model to general knowledge from various sectors, we aimed to develop its versatility and accuracy in generating responses without explicit domain-based instructions.

Although domain labels were not part of the fine-tuning phase, they served as critical tools for dataset analysis and strategic planning. These labels provided valuable insights into how the dataset was distributed across different fields, guiding future efforts to expand the dataset, balance under-represented domains, and incorporate system instructions for domain-specific tasks.

As the dataset grows and more samples become available, there may be opportunities to introduce domain-based system instructions. This would allow more tailored responses in domain-specific applications, such as expert-level outputs in healthcare, legal reasoning, and scientific research. To further enhance the model’s ability to generate coherent and contextually appropriate responses, we explored techniques such as RL and SFT. Incorporating Chain-of-Thought (CoT) methods, as suggested by Lee (2024), provided scaffolding for the model’s reasoning process, thereby supporting knowledge creation and knowledge-building tasks [18].
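The fine-tuning data consist of prompt–answer pairs, with domain labels kept aside for possible future system prompts. One common way to turn such a pair into a training example, shown here as a sketch, is to concatenate prompt and answer and mask the prompt positions so that the loss covers only the answer. The plain-text template, the optional `system` argument standing in for a future domain prompt, and the toy whitespace tokenizer are all hypothetical; the section does not specify the template or masking scheme actually used.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's CrossEntropyLoss (default ignore_index)


def make_toy_tokenizer():
    """Hypothetical stand-in tokenizer: assigns ids to whitespace-separated words."""
    vocab = {}

    def tokenize(text):
        return [vocab.setdefault(word, len(vocab)) for word in text.split()]

    return tokenize


def build_sft_example(prompt, answer, tokenize, system=None):
    """Concatenate an optional system line, the prompt and the answer.

    Labels copy the answer ids and mask every context position, so the
    next-token loss is computed on the answer tokens only.
    """
    context = f"{system}\n{prompt}\n" if system else f"{prompt}\n"
    context_ids = tokenize(context)
    answer_ids = tokenize(answer)
    return {
        "input_ids": context_ids + answer_ids,
        "labels": [IGNORE_INDEX] * len(context_ids) + answer_ids,
    }


tok = make_toy_tokenizer()
example = build_sft_example("Q1 ?", "A1 A2", tok)
# example == {"input_ids": [0, 1, 2, 3], "labels": [-100, -100, 2, 3]}
```

Passing, for instance, `system="Domain: law"` would prepend a domain instruction without changing how the answer is supervised, which is one way the domain metadata could later be used.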

2.1.3. ChatTune-DPO: A Dataset for Direct Preference Optimization in Kazakh

During the primary fine-tuning stage, we trained an instruction-tuned model which was subsequently deployed using vLLM (Docker image vllm/vllm-openai:v0.6.1). To evaluate and enhance its performance, we conducted a two-month pilot study with a group of 52 users interacting with the model through our chatbot platform, Farabi-Lab. User interactions and feedback were systematically collected to construct a high-quality dataset from scratch, which is now publicly available on Hugging Face for researchers to utilize and extend.

ChatTune-DPO is a structured dataset containing user interactions with a large language model (LLM), with a focus on collecting user feedback for DPO training. It includes dialogue sessions in which users engage with the model, providing explicit feedback such as likes/dislikes and suggested corrections to the generated responses. This dataset is designed to improve model alignment with human preferences by incorporating both explicit and implicit user feedback. The ongoing development of this dataset ensures that it remains relevant for training and fine-tuning models to generate more accurate and contextually appropriate responses [19].

The dataset is actively growing as users continue to interact with the model. Regular updates are planned to incorporate new dialogues, ensuring broader coverage and improved response quality [20].
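ChatTune-DPO is described as collecting likes/dislikes and suggested corrections, but the conversion of this feedback into preference pairs is not spelled out here. A plausible conversion is sketched below under a hypothetical record schema (`prompt`, `response`, `feedback`, `correction`): a user correction is preferred over the model's response, and for a given prompt every liked response is preferred over every disliked one. Neither the schema nor these pairing rules are taken from the released dataset.

```python
from collections import defaultdict


def feedback_to_pairs(records):
    """Turn hypothetical feedback records into (prompt, chosen, rejected) pairs."""
    pairs = []
    by_prompt = defaultdict(lambda: {"liked": [], "disliked": []})
    for record in records:
        prompt, response = record["prompt"], record["response"]
        correction = record.get("correction")
        if correction and correction != response:
            # A user-suggested correction is preferred over the model's response.
            pairs.append({"prompt": prompt, "chosen": correction, "rejected": response})
        feedback = record.get("feedback")
        if feedback == "like":
            by_prompt[prompt]["liked"].append(response)
        elif feedback == "dislike":
            by_prompt[prompt]["disliked"].append(response)
    # For the same prompt, every liked response is preferred over every disliked one.
    for prompt, group in by_prompt.items():
        for good in group["liked"]:
            for bad in group["disliked"]:
                pairs.append({"prompt": prompt, "chosen": good, "rejected": bad})
    return pairs


records = [
    {"prompt": "p1", "response": "r1", "feedback": "dislike", "correction": "c1"},
    {"prompt": "p1", "response": "r2", "feedback": "like"},
    {"prompt": "p2", "response": "r3", "feedback": "like"},
]
pairs = feedback_to_pairs(records)
# pairs == [{"prompt": "p1", "chosen": "c1", "rejected": "r1"},
#           {"prompt": "p1", "chosen": "r2", "rejected": "r1"}]
```

Under these rules, a prompt that received only likes (such as `p2`) yields no pair unless a correction was supplied.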

2.1.4. A Synthetic Pseudo-DPO Dataset from the Instruction-Based Fine-Tuning

To further enhance model alignment and training efficiency, an experiment was conducted to generate a Pseudo-DPO dataset by augmenting an existing dataset originally designed for instruction-based fine-tuning. The primary dataset contained two key fields: prompt (query) and answer (response). The objective of this augmentation was to simulate DPO training data by artificially generating and ranking alternative responses.

Methodology. To generate a diverse set of candidate responses, a transformer-based language model served with the vLLM inference engine was employed, with controlled variations in sampling parameters, particularly top-p and temperature. The process was structured as follows:

1. Generating Candidate Responses:
   - For each query, five candidate responses were generated.
   - Sampling parameters were varied, with top-p = 0.9 and temperature ranging from 0.5 to 1.0 in increments of 0.1.

2. Selecting the Most Similar Response:
   - Among the five generated candidates, the response most similar to the original dataset answer was selected.
   - Similarity was measured using cosine similarity on embeddings extracted from the RoBERTa-Kaz-Large model (nur-dev/roberta-kaz-large).

3. Constructing the Pseudo-DPO Dataset:
   - The most similar generated response was labeled as the rejected response.
   - The original dataset answer was assigned as the accepted response.
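Steps 2 and 3 reduce to a nearest-neighbor search in embedding space. The sketch below assumes the candidates have already been sampled and takes a generic `embed` callable; in the described setup this would wrap the nur-dev/roberta-kaz-large encoder, with the pooling strategy (for example, mean pooling over token states) left as an assumption. The toy two-dimensional embeddings are invented for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def build_pseudo_dpo_pair(prompt, reference, candidates, embed):
    """Original answer is 'chosen'; the candidate closest to it is 'rejected'."""
    ref_vec = embed(reference)
    scores = [cosine(ref_vec, embed(c)) for c in candidates]
    best = max(range(len(candidates)), key=lambda i: scores[i])
    return {
        "prompt": prompt,
        "chosen": reference,
        "rejected": candidates[best],
        "similarity": scores[best],
    }


# Toy embeddings standing in for RoBERTa-Kaz-Large sentence vectors.
toy_vectors = {"ref": [1.0, 0.0], "c1": [0.0, 1.0], "c2": [1.0, 1.0], "c3": [-1.0, 0.0]}
pair = build_pseudo_dpo_pair("q", "ref", ["c1", "c2", "c3"], toy_vectors.get)
# pair["rejected"] == "c2" (cosine of about 0.707, the highest of the three)
```

Because the rejected response is the sample closest to the reference, each pair presumably differs in comparatively subtle ways, which may make it informative as a hard negative.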

This pseudo-labeling approach is closely aligned with the SemiEvol framework proposed by Luo et al. (2025), which similarly begins with a small, trusted seed of labeled examples and propagates knowledge to unlabeled data by generating multiple alternative responses from different LLM variants. In SemiEvol, an entropy-based confidence score is used to filter high-quality pseudo-responses, followed by fine-tuning on the selected pseudo-labeled set. This propagate-and-select loop mirrors our strategy of sampling several completions per prompt, ranking them by similarity to the reference answer, and constructing preference pairs in which the reference answer becomes the accepted response and the closest sampled variant the rejected one. In both cases, synthetic preference supervision is distilled directly from model outputs, reducing reliance on costly manual annotation while preserving alignment quality. Furthermore, the entropy-based selection mechanism in SemiEvol plays a role analogous to our similarity-based filtering and contrastive penalty, ensuring that only high-fidelity pseudo-preference pairs are used for model refinement [21].

2.2. Model Architecture

The core of our model was built upon the LLaMA (Large Language Model Meta AI) framework, an autoregressive language model renowned for its efficiency and adaptability. For the Kazakh language model, we used a variant with 1.94 billion parameters, which is relatively small compared with the larger LLaMA models designed for high-resource languages. This choice was intentional, allowing us to manage computational resources effectively while still achieving a substantial level of language understanding and generation capability. Future work may integrate architectures beyond traditional transformers, as suggested by recent research [3], which emphasizes the potential of models such as Mamba and Hawk to improve computational efficiency and performance in large-scale language modeling. Existing models, such as GPT variants and LLaMA, have shown varying degrees of adaptability to new data features [22]. While LLaMA’s accuracy can improve with updated datasets, challenges remain in capturing deep domain knowledge and context-specific understanding, particularly in low-resource languages like Kazakh.

Key Aspects of Model Architecture

- Causal language modeling (CLM): The model was trained using the causal language modeling approach, where it learned to predict the next word in a sequence based on the context provided by the preceding words. This method was particularly well-suited for text generation tasks, enabling the model to produce coherent and contextually relevant Kazakh text [23].

- Parameter reduction and customization: We modified the standard LLaMA architecture to reduce the number of parameters to 1.94 billion. This reduction struck a balance between model complexity and computational feasibility, making it suitable for environments with limited resources. Additionally, we introduced specific customizations to better handle the morphological and syntactic nuances of the Kazakh language, which is highly agglutinative compared to more analytically structured languages like English. These customizations included adjustments in the embedding layers and attention mechanisms to accommodate the rich morphological variations inherent in Kazakh.

- Tokenizer customization: A specialized tokenizer was developed to effectively manage the unique characters and linguistic structures of the Kazakh language. This tokenizer ensured that the tokenization process preserved the linguistic integrity of the input text by correctly handling Kazakh-specific characters and affixes. By accurately segmenting words and morphemes, the tokenizer enhanced the model’s ability to understand and generate grammatically correct and meaningful text.
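The causal language modeling objective can be made concrete with a small sketch: each output position scores the whole vocabulary, and the loss is the cross-entropy of the token that actually comes next, averaged over positions. Its exponential is the perplexity mentioned among the evaluation metrics. The logits here are toy lists rather than outputs of the actual model.

```python
import math


def causal_lm_loss(logits, token_ids):
    """Mean next-token cross-entropy.

    logits[t] holds unnormalized scores over the vocabulary computed from
    token_ids[: t + 1]; it is scored against the next token, token_ids[t + 1].
    """
    losses = []
    for t in range(len(token_ids) - 1):
        scores = logits[t]
        peak = max(scores)  # subtract the max for a numerically stable log-sum-exp
        log_z = peak + math.log(sum(math.exp(s - peak) for s in scores))
        losses.append(log_z - scores[token_ids[t + 1]])
    return sum(losses) / len(losses)


def perplexity(mean_loss):
    return math.exp(mean_loss)


# With uniform scores over a 4-token vocabulary, every prediction costs log(4),
# so the perplexity is 4 (up to floating-point rounding).
uniform = [[0.0] * 4 for _ in range(3)]
loss = causal_lm_loss(uniform, [0, 1, 2])
```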

To optimize performance for Kazakh text processing, we carefully designed the model’s architecture, balancing complexity and efficiency. Below are the key internal configurations:

- Hidden and Intermediate Sizes: A hidden size of 2048 and an intermediate size of 5504 provided sufficient capacity to represent complex syntactic structures while keeping the model size manageable. This followed LLaMA’s approach of emphasizing dense layers (MLPs) in each transformer block, supporting a richer internal representation of language.

- Number of Layers and Attention Heads: The model used 32 transformer layers, each with 32 query attention heads that share 8 key-value heads (grouped-query attention). This configuration provided sufficient depth and multi-head parallelism to capture nuanced patterns in Kazakh text, allowing different semantic and syntactic aspects to be handled simultaneously during self-attention.

- Activation and Normalization: The SiLU (Sigmoid Linear Unit) activation function was used, in line with standard LLaMA configurations, along with RMS normalization (RMSNorm) for numerical stability. RMSNorm stabilizes gradients, which is especially beneficial for smaller or low-resource models.

- Positional Encoding and Long Context: The max_position_embeddings parameter was set to 8192 to support extended context windows, which was crucial for tasks such as document summarization and long-form question answering. A high RoPE base frequency (rope_theta) ensured that longer contexts could be accommodated without excessive distortion of positional signals, preserving coherence over longer sequences [24].

- Attention Dropout: Attention dropout was set to 0.0 so that no attention weights were randomly zeroed during training, which was particularly important given the limited volume of high-quality Kazakh data. With larger datasets, a small attention dropout may serve as a regularizer, but in this case, disabling it helped retain essential features from each training step.

- Tie Word Embeddings: Weight tying was disabled, so the input and output embeddings remained separate. This allowed greater flexibility in handling the subtleties of Kazakh vocabulary during both encoding and decoding.
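As a consistency check, the listed settings can be collected in a configuration and turned into a parameter count. The keys mirror Hugging Face `LlamaConfig` field names. The vocabulary size is not stated in this section, so `vocab_size = 128_000` is an assumption; with it, bias-free projections, grouped-query attention, and untied embeddings, the count comes to roughly 1.94B.

```python
# Settings listed above. vocab_size is NOT given in this section and is an assumption.
CONFIG = {
    "vocab_size": 128_000,  # assumption
    "hidden_size": 2048,
    "intermediate_size": 5504,
    "num_hidden_layers": 32,
    "num_attention_heads": 32,
    "num_key_value_heads": 8,
    "hidden_act": "silu",
    "max_position_embeddings": 8192,
    "attention_dropout": 0.0,
    "tie_word_embeddings": False,
}


def count_params(cfg):
    """Parameter count of a bias-free LLaMA-style decoder with a gated (SwiGLU) MLP."""
    h = cfg["hidden_size"]
    head_dim = h // cfg["num_attention_heads"]        # 64 for this config
    kv_dim = cfg["num_key_value_heads"] * head_dim    # 512: 8 shared key/value heads
    attention = 2 * h * h + 2 * h * kv_dim            # q, o projections + k, v projections
    mlp = 3 * h * cfg["intermediate_size"]            # gate, up and down projections
    norms = 2 * h                                     # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    embedding = cfg["vocab_size"] * h
    n_embedding_matrices = 1 if cfg["tie_word_embeddings"] else 2  # input table (+ LM head)
    final_norm = h
    return cfg["num_hidden_layers"] * per_layer + final_norm + n_embedding_matrices * embedding


total = count_params(CONFIG)
# total == 1_942_095_872 (about 1.94B) under the assumed vocabulary size
```

Most of the budget sits in the 32 decoder layers (about 1.42B); untying the embeddings adds a second vocabulary-by-hidden matrix rather than reusing the input table.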

2.3. Contrastive Loss and Entropy for Robust Preference Optimization

Due to the limited availability of high-quality Direct Preference Optimization (DPO) datasets for Kazakh, we augmented the conventional DPO objective with two targeted safeguards designed to mitigate its well-documented failure modes:

- Contrastive margin—prevented the model from lowering the log-probability of a preferred completion below that assigned by the reference model;

- Entropy/KL regularization—preserved distributional diversity and averted mode collapse [25].

To extend DPO, we implemented its core framework, which optimizes a language model to align with human preferences without explicit reward modeling. The core idea was as follows: (1) start from the standard DPO formulation, which uses pairs of “preferred vs. dispreferred” responses; (2) add a contrastive penalty that explicitly keeps the log-probability of the preferred completions high; (3) include an entropy or KL term to prevent mode collapse and stabilize training.

2.3.1. Problem Setup

Dataset. Let $\mathcal{D} = \{(x, y^{+}, y^{-})\}$ be a dataset of prompts $x$, each paired with one response $y^{+}$ labeled “preferred” and one response $y^{-}$ labeled “dispreferred”.

Policies. We have a reference policy $\pi_{\mathrm{ref}}$ (typically an SFT checkpoint, i.e., a model that has already undergone supervised fine-tuning). We fine-tune a new policy $\pi_\theta$ to better match the preference data.

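DPO builds on the Bradley–Terry model of pairwise preferences (generalized to full rankings by the Plackett–Luce model). Its loss (omitting constant factors) can be written as follows:

$$\mathcal{L}_{\mathrm{DPO}}(\theta) = -\mathbb{E}_{(x,y^{+},y^{-})\sim\mathcal{D}}\left[\log\sigma\left(\beta\log\frac{\pi_\theta(y^{+}\mid x)}{\pi_{\mathrm{ref}}(y^{+}\mid x)} - \beta\log\frac{\pi_\theta(y^{-}\mid x)}{\pi_{\mathrm{ref}}(y^{-}\mid x)}\right)\right]$$

where σ(⋅) is the logistic function and β > 0 controls implicit KL regularization. Although this objective rewards $\frac{\pi_\theta(y^{+}\mid x)}{\pi_{\mathrm{ref}}(y^{+}\mid x)} > \frac{\pi_\theta(y^{-}\mid x)}{\pi_{\mathrm{ref}}(y^{-}\mid x)}$, it does not stop the optimizer from simultaneously lowering $\pi_\theta(y^{+}\mid x)$ itself.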
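This failure mode can be reproduced numerically. The sketch below is a minimal illustration rather than the training code: it evaluates the per-example DPO loss from summed sequence log-probabilities, and the value β = 0.1 and all log-probability numbers are assumptions chosen for illustration.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(logp_pos: float, logp_neg: float,
             ref_pos: float, ref_neg: float, beta: float = 0.1) -> float:
    """Per-example DPO loss; inputs are summed log-probabilities of whole responses."""
    margin = beta * ((logp_pos - ref_pos) - (logp_neg - ref_neg))
    return -log_sigmoid(margin)


if __name__ == "__main__":
    ref_pos, ref_neg = -20.0, -22.0  # hypothetical reference log-probs
    # Policy identical to the reference: margin 0, loss = log 2.
    start = dpo_loss(ref_pos, ref_neg, ref_pos, ref_neg)
    # Policy that lowers BOTH responses, the dispreferred one much more.
    degraded = dpo_loss(-25.0, -40.0, ref_pos, ref_neg)
    print(f"start={start:.3f}  degraded={degraded:.3f}")  # loss goes down anyway
```

The preferred response's log-probability falls from −20 to −25, yet the loss decreases, because only the difference of the two log-ratios enters the objective.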

Adding Contrastive and Entropy Terms: A known failure mode of base DPO is that the model can inadvertently lower the log-probabilities of the “preferred” completions (as long as it lowers the dispreferred completions even more, the ratio still improves). A way to fix this is to incorporate ideas from contrastive learning (which enforces a margin or a penalty for reducing good-sample probabilities) and entropy regularization (to prevent the policy from collapsing onto one narrow set of tokens). Below is one way to introduce these terms:

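1. Contrastive-Like Penalty. To anchor the preferred completion, we added

$$\mathcal{L}_{\mathrm{con}}(\theta) = \lambda\,\mathbb{E}_{(x,y^{+})\sim\mathcal{D}}\left[\max\left(0,\ \log\pi_{\mathrm{ref}}(y^{+}\mid x) - \log\pi_\theta(y^{+}\mid x)\right)\right],$$

i.e., the policy pays a cost whenever it pushes $\log\pi_\theta(y^{+}\mid x)$ below the reference value $\log\pi_{\mathrm{ref}}(y^{+}\mid x)$. The weight λ ≥ 0 tunes the strictness of this margin.

2. Entropy or KL Regularization. To keep the policy from collapsing or drifting too far, an additional penalty is often placed on its output distribution. The common choices are as follows:

   - KL to Reference. Add $\alpha\,\mathrm{KL}\left(\pi_\theta(\cdot\mid x)\,\|\,\pi_{\mathrm{ref}}(\cdot\mid x)\right)$ for each prompt.

   - Entropy. Add $-\gamma\,H\left(\pi_\theta(\cdot\mid x)\right)$ if exploration needs to be preserved.

where α, γ ≥ 0 and H denotes entropy. In large-scale LMs, the KL term is often preferred because it measures drift directly against the well-behaved reference distribution.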
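The two regularizers can be illustrated on a single next-token distribution. In the sketch below, the four-token vocabulary, the logits, and the weights α = 0.1 and γ = 0.05 are hypothetical; in practice these quantities would be averaged over the token positions of each response.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(p: list[float]) -> float:
    """Shannon entropy H(p) in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def kl(p: list[float], q: list[float]) -> float:
    """KL(p || q) for two distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def regularizer(policy_logits: list[float], ref_logits: list[float],
                alpha: float = 0.1, gamma: float = 0.05) -> float:
    """alpha * KL(pi_theta || pi_ref) - gamma * H(pi_theta) for one position."""
    p, q = softmax(policy_logits), softmax(ref_logits)
    return alpha * kl(p, q) - gamma * entropy(p)


if __name__ == "__main__":
    ref = [1.0, 0.8, 0.5, 0.2]            # hypothetical reference logits
    collapsed = [8.0, -2.0, -2.0, -2.0]   # policy piling its mass on one token
    print("same as reference:", regularizer(ref, ref))
    print("collapsed policy: ", regularizer(collapsed, ref))
```

A collapsed policy is penalized twice: its KL to the reference grows and its entropy bonus shrinks, so the regularizer rises relative to a policy that stays close to the reference.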

2.3.2. Combined Loss Formulation

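Putting these together, we can now write a single objective:

$$\mathcal{L}(\theta) = \mathcal{L}_{\mathrm{DPO}}(\theta) + \lambda\,\mathbb{E}_{(x,y^{+})\sim\mathcal{D}}\left[\max\left(0,\ \log\pi_{\mathrm{ref}}(y^{+}\mid x) - \log\pi_\theta(y^{+}\mid x)\right)\right] + \mathbb{E}_{x\sim\mathcal{D}}\left[\alpha\,\mathrm{KL}\left(\pi_\theta(\cdot\mid x)\,\|\,\pi_{\mathrm{ref}}(\cdot\mid x)\right) - \gamma\,H\left(\pi_\theta(\cdot\mid x)\right)\right]$$

Setting α = 0 or γ = 0 keeps only one of the two regularizers.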

This single loss simultaneously achieves the following outcomes:

- Preserves the relative ordering demanded by DPO;

- Penalizes any drop in the probability of preferred answers below a reference floor;

- Maintains healthy output entropy.
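A minimal per-example sketch of the combined objective follows, assuming summed sequence log-probabilities for each response and precomputed KL and entropy values. The default weights (β = 0.1, λ = 1.0, α = 0.1, γ = 0.0) and the log-probability numbers are hypothetical, not the values used in training.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def combined_loss(logp_pos: float, logp_neg: float, ref_pos: float, ref_neg: float,
                  kl_term: float = 0.0, entropy_term: float = 0.0,
                  beta: float = 0.1, lam: float = 1.0,
                  alpha: float = 0.1, gamma: float = 0.0) -> float:
    # 1) DPO term: rewards a larger log-ratio for the preferred response.
    dpo = -log_sigmoid(beta * ((logp_pos - ref_pos) - (logp_neg - ref_neg)))
    # 2) Hinge floor: non-zero only if the preferred response drops below the reference.
    floor = lam * max(0.0, ref_pos - logp_pos)
    # 3) Drift and diversity terms, supplied as precomputed values.
    return dpo + floor + alpha * kl_term - gamma * entropy_term


if __name__ == "__main__":
    ref_pos, ref_neg = -20.0, -22.0  # hypothetical reference log-probs
    degraded = (-25.0, -40.0)        # both responses pushed down
    healthy = (-19.0, -30.0)         # preferred up, dispreferred down
    for lam in (0.0, 1.0):
        d = combined_loss(*degraded, ref_pos, ref_neg, lam=lam)
        h = combined_loss(*healthy, ref_pos, ref_neg, lam=lam)
        print(f"lam={lam}: degraded={d:.3f} healthy={h:.3f}")
```

With λ = 0 the pure DPO term actually scores the degraded update as better than the healthy one; the hinge term reverses that ranking while leaving the healthy update's loss unchanged.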

2.4. Training Setup

The training of the causal LLaMA model was conducted using two NVIDIA A100 GPUs, each equipped with 80 GB of memory, to manage the computational demands of large-scale language model training. These GPUs were provided by the Institute of Information and Computational Technologies, located in Almaty, Kazakhstan. The training process was meticulously designed to ensure stability and convergence while efficiently utilizing the available resources. The extensive dataset used for training consisted of over 5.3 million examples drawn from a wide variety of domains. This diversity was critical for enabling the model to generalize across different text types, thereby ensuring robust language learning.

2.4.1. Data Preparation and Preprocessing for CLM

Prior to training, the data underwent extensive preprocessing and normalization to enhance its quality and suitability for the model. The preprocessing steps included the following:

- Data Cleaning: The removal of HTML tags, normalization of URLs to a generic <link> token, and elimination of noise such as excessive punctuation and formatting artifacts like empty brackets and parentheses.

- Kazakh Text Filtering: Special attention was given to keeping the corpus predominantly Kazakh. Character-level filters were applied to discard lines that did not meet predefined criteria, thereby improving the dataset’s linguistic integrity.

- Normalization: Ensuring consistent formatting and representation of the Kazakh script, addressing issues related to unique characters and diacritics that are essential in Kazakh.

This thorough preprocessing resulted in a cleaner, more uniform dataset, which significantly improved the model’s ability to learn meaningful patterns and linguistic structures.
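The cleaning and filtering steps above can be sketched as follows. The regular expressions, the four-repeat punctuation threshold, the 0.7 Cyrillic-share cut-off, and the rule requiring at least one Kazakh-specific letter are illustrative assumptions; the text does not specify the exact filter criteria.

```python
import re

HTML_TAG = re.compile(r"<[^>]+>")
URL = re.compile(r"(?:https?://|www\.)\S+")
EMPTY_BRACKETS = re.compile(r"\(\s*\)|\[\s*\]|\{\s*\}")
REPEATED_PUNCT = re.compile(r"([!?.,;:])\1{3,}")  # 4+ repeats of the same mark
WHITESPACE = re.compile(r"\s+")

# Letters specific to the Kazakh Cyrillic alphabet, lower and upper case.
KAZAKH_SPECIFIC = set(
    "\u04d9\u0493\u049b\u04a3\u04e9\u04b1\u04af\u04bb\u0456"
    "\u04d8\u0492\u049a\u04a2\u04e8\u04b0\u04ae\u04ba\u0406"
)


def clean_line(line: str) -> str:
    line = HTML_TAG.sub(" ", line)          # strip tags first so "<link>" survives
    line = URL.sub("<link>", line)          # normalize URLs to a generic token
    line = EMPTY_BRACKETS.sub(" ", line)    # drop formatting artifacts like "()"
    line = REPEATED_PUNCT.sub(r"\1", line)  # collapse excessive punctuation
    return WHITESPACE.sub(" ", line).strip()


def looks_kazakh(line: str, min_cyrillic_share: float = 0.7) -> bool:
    """Keep lines that are mostly Cyrillic and contain a Kazakh-specific letter."""
    letters = [c for c in line if c.isalpha()]
    if not letters:
        return False
    cyrillic = sum("\u0400" <= c <= "\u04ff" for c in letters)
    has_kazakh_letter = any(c in KAZAKH_SPECIFIC for c in letters)
    return cyrillic / len(letters) >= min_cyrillic_share and has_kazakh_letter
```

One caveat of this heuristic: short, genuinely Kazakh lines that happen to contain no Kazakh-specific letter would be discarded, so a production filter would likely combine several signals.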

2.4.2. Training Configuration

The model training followed a well-structured plan to optimize performance and resource utilization:

- Computational Resources: We utilized two NVIDIA A100 GPUs with 80 GB memory each, employing Distributed Data Parallel (DDP) to distribute the training process across the GPUs. This parallelism enhanced computational efficiency and reduced overall training time.

- Training Data: We employed a dataset of over 5.3 million examples covering various domains and text types to ensure comprehensive language learning and generalization capabilities.

- Learning-Rate Schedule: We used a cosine annealing schedule with warm restarts, which cycles the learning rate between a maximum and a minimum value. This approach improved convergence and helped prevent the model from getting trapped in local minima.

- Warm-up Steps: We incorporated 8000 warm-up steps to gradually increase the learning rate at the beginning of training. This strategy helped to stabilize the training process and improved the initial convergence rate by preventing sudden shocks to the network weights.

- Hyperparameters: Batch size, sequence length, and learning rate were carefully tuned to balance training efficiency and model performance.
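The warm-up and cosine-with-restarts schedule described above can be sketched as a pure function of the training step. Only the 8000 warm-up steps come from the text; the peak and floor learning rates, the first cycle length, and the cycle multiplier are hypothetical values.

```python
import math


def lr_at(step: int,
          base_lr: float = 3e-4,     # hypothetical peak learning rate
          min_lr: float = 3e-5,      # hypothetical floor
          warmup_steps: int = 8000,  # from the text
          cycle_len: int = 50_000,   # hypothetical length of the first cycle
          cycle_mult: int = 2) -> float:  # hypothetical: each cycle doubles
    """Linear warm-up followed by cosine annealing with warm restarts (SGDR)."""
    if cycle_mult < 1:
        raise ValueError("cycle_mult must be >= 1 so that cycles do not shrink")
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    t, length = step - warmup_steps, cycle_len
    while t >= length:  # locate the position inside the current cycle
        t -= length
        length *= cycle_mult
    cosine = 0.5 * (1.0 + math.cos(math.pi * t / length))
    return min_lr + (base_lr - min_lr) * cosine


if __name__ == "__main__":
    for s in (0, 7_999, 8_000, 33_000, 57_999, 58_000):
        print(s, f"{lr_at(s):.2e}")
```

In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts` (with `T_0`, `T_mult`, `eta_min`) implements the restart part, and a warm-up phase can be prepended with `LinearLR` combined through `SequentialLR`.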

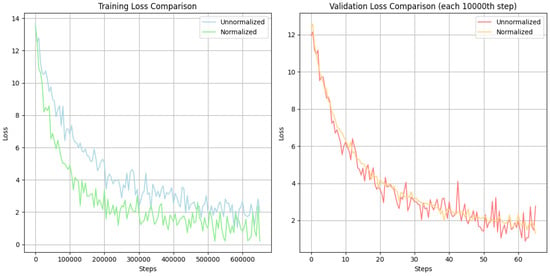

A significant challenge during training was managing fluctuations in loss values. Initially, both training and validation losses exhibited high volatility due to the lack of normalization. The unnormalized loss showed significant noise, particularly during the early training steps as the model weights were adjusting. To address this instability, loss normalization techniques were introduced, leading to a marked improvement in training dynamics.

The impact of loss normalization is illustrated in Figure 3, which compares the training and validation loss curves before and after normalization:

Figure 3.

Training and validation loss comparison: unnormalized vs. normalized.

- Unnormalized loss displayed high volatility with large fluctuations, making it difficult to assess the model’s learning progress and convergence.

- Normalized loss showed a much smoother and more controlled trajectory. In the validation phase, the normalized loss consistently exhibited less variance and stabilized more quickly, indicating better generalization and a more reliable training process.

The normalization of the loss function was critical in mitigating the chaotic fluctuations observed during initial training, thereby enhancing the model’s learning stability and overall performance.

2.5. Training Models for Instructional Response Generation

2.5.1. Chat Templates

During the fine-tuning phase of our causal language model (CLM), we employed two distinct chat templates to structure conversation inputs: a localized template tailored specifically to Kazakh and the standard LLaMA-3 template designed for general use.

The localized template incorporated additional checks for specific roles, such as system messages, and used role-specific tokens such as the Kazakh term “көмекші” (meaning “assistant”) for the assistant role, which improved the model’s handling of non-English text. Furthermore, this template explicitly appended an eos_token at the end of the conversation when generation prompts were disabled, ensuring a clear demarcation between message sequences.

In contrast, the LLaMA-3 template adopted a more streamlined approach: it omitted role-specific logic and handled the first message simply by prepending a bos_token based on message order. It used the English term “assistant” as the default generation prompt, making it better suited to English-based models. While the LLaMA-3 template was simpler and more efficient, the localized template provided greater flexibility for multilingual contexts, particularly in supporting the nuances of Kazakh language processing. The following example illustrates our custom template:

    <|begin_of_text|><|start_header_id|>system<|end_header_id|>
    This is a system message.<|eot_id|><|start_header_id|>user<|end_header_id|>
    Hello! How are you?<|eot_id|><|start_header_id|>assistant<|end_header_id|>
    I’m fine, thank you. How can I assist you today?<|eot_id|><|end_of_text|>
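The rendering rules described above can be sketched in Python. This is a hypothetical re-implementation for illustration, not the Jinja chat template shipped with the model: the function name, the `\n\n` separator after each header (as in the standard LLaMA-3 template), and the `assistant_role` switch used to emulate the localized Kazakh role label are assumptions.

```python
def render_chat(messages: list[dict], add_generation_prompt: bool = False,
                assistant_role: str = "assistant") -> str:
    """Render messages into LLaMA-3-style special-token text.

    assistant_role lets a localized template substitute a Kazakh role label.
    """
    parts = ["<|begin_of_text|>"]  # bos_token, emitted once before the first message
    for msg in messages:
        role = assistant_role if msg["role"] == "assistant" else msg["role"]
        parts.append(f"<|start_header_id|>{role}<|end_header_id|>\n\n"
                     f"{msg['content']}<|eot_id|>")
    if add_generation_prompt:
        # Open an assistant turn for the model to complete.
        parts.append(f"<|start_header_id|>{assistant_role}<|end_header_id|>\n\n")
    else:
        # Close the conversation explicitly with eos_token.
        parts.append("<|end_of_text|>")
    return "".join(parts)


if __name__ == "__main__":
    chat = [
        {"role": "system", "content": "This is a system message."},
        {"role": "user", "content": "Hello! How are you?"},
        {"role": "assistant", "content": "I’m fine, thank you. How can I assist you today?"},
    ]
    print(render_chat(chat))
```

Passing the Kazakh label as `assistant_role` changes only the header text, so the same special tokens and turn boundaries are shared by both template variants.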

2.5.2. Evaluation Setup: Task-Specific Assessment

To assess the effectiveness of the models, a comprehensive evaluation was conducted using a diverse set of 2000 test samples, covering a wide range of linguistic and reasoning tasks. The dataset was structured to reflect key challenges in Kazakh NLP, ensuring a balanced assessment of factual accuracy, contextual understanding, ethical considerations, and language generation capabilities.

The fact-based question answering (20%) segment tested the model’s ability to retrieve and generate accurate responses to knowledge-driven queries, while fact extraction from context (15%) evaluated its capability to identify and summarize key information from longer passages. Keyword extraction instructions (5%) measured precision in identifying salient terms, crucial for applications such as search indexing and document classification.

Tasks related to problem-solving (12%) and story generation (5%) were incorporated to examine the model’s logical reasoning, creativity, and narrative coherence. To address concerns regarding ethical AI alignment, ethical bias detection (10%) was included to evaluate responses for fairness, neutrality, and cultural sensitivity. The Kazakh cultural expertise (10%) category assessed the model’s proficiency in handling queries specific to Kazakh history, traditions, and societal norms, ensuring that it accurately reflected and respected cultural knowledge.

The language refinement tasks included paraphrasing (8%), spelling correction (8%), and question construction (7%), testing the model’s linguistic flexibility, grammatical accuracy, and ability to reformulate content while preserving meaning. This diverse evaluation framework provided a holistic assessment of model performance across multiple functional domains, offering detailed insight into its strengths and areas for further optimization.
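As a quick consistency check, the ten category shares sum to 100%, and applied to the 2000-sample test set they imply the per-category counts computed below (for example, 400 fact-based QA items and 140 question-construction items), assuming each percentage was applied exactly. The dictionary keys are hypothetical names for the categories listed in the text.

```python
# Category shares (in percent) as listed in the text; keys are hypothetical names.
SHARES = {
    "fact_based_qa": 20,
    "fact_extraction": 15,
    "keyword_extraction": 5,
    "problem_solving": 12,
    "story_generation": 5,
    "ethical_bias_detection": 10,
    "kazakh_cultural_expertise": 10,
    "paraphrasing": 8,
    "spelling_correction": 8,
    "question_construction": 7,
}
TOTAL_SAMPLES = 2000

assert sum(SHARES.values()) == 100, "shares must cover the whole test set"
# Integer arithmetic avoids floating-point rounding in the counts.
counts = {name: pct * TOTAL_SAMPLES // 100 for name, pct in SHARES.items()}
assert sum(counts.values()) == TOTAL_SAMPLES

if __name__ == "__main__":
    for name, n in counts.items():
        print(f"{name:<26} {n:>4}")
```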

3. Results

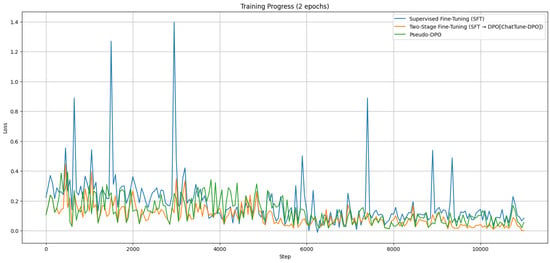

In our experiment, we aimed to enhance Kazakh language processing by fine-tuning the same base model (CLM) with different training strategies. To ensure the objectivity of our observations, we shuffled the dataset before training but did not apply any further shuffling during the actual training phase. This consistency helped to avoid randomization effects that might otherwise influence the loss behavior (see Figure 4). Because each approach involved different total numbers of training steps, we plotted them on the same graph by averaging the loss in a sliding window so that their progress could be compared side by side. We tested three main setups:

Figure 4.

Training loss comparison.

- Supervised Fine-Tuning (SFT);

- Two-Stage Fine-Tuning (SFT → DPO [ChatTune-DPO]) over two epochs (each epoch included an SFT phase followed by DPO);

- Pseudo-DPO, with the same step count as SFT.
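The sliding-window averaging used to put the three runs on one plot can be sketched as a trailing moving average. The window size and the choice of a trailing (rather than centered) window are assumptions; the text does not state them.

```python
from collections import deque


def moving_average(values: list[float], window: int) -> list[float]:
    """Trailing moving average; the first points average over the steps seen so far."""
    if window < 1:
        raise ValueError("window must be >= 1")
    buf: deque = deque()
    total, out = 0.0, []
    for v in values:
        buf.append(v)
        total += v
        if len(buf) > window:  # drop the oldest value once the window is full
            total -= buf.popleft()
        out.append(total / len(buf))
    return out


if __name__ == "__main__":
    noisy_loss = [2.0, 4.0, 1.0, 5.0, 0.5, 3.5]  # hypothetical per-step losses
    print(moving_average(noisy_loss, 3))
```

A centered window or an exponential moving average would serve the same purpose; a trailing window has the advantage that each smoothed point depends only on steps already taken.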

Figure 4 shows that both ChatTune-DPO and Pseudo-DPO exhibit lower and more stable loss curves than the baseline SFT. We attribute part of this stability to our reuse of existing tokenizer tokens, in particular representing the Kazakh word “көмекші” with existing tokens rather than introducing entirely new embeddings. This choice minimized the need to learn unfamiliar token representations and helped stabilize the fine-tuning process.

Figure 4 also shows that the DPO-based models achieve steady reductions in loss with noticeably fewer spikes than the baseline SFT model. These spikes suggest that the baseline struggles to converge, whereas the smoother ChatTune-DPO and Pseudo-DPO curves reflect more stable optimization. By the end of training, the DPO-based models maintain a lower final loss, underscoring their effectiveness on instruction-based tasks tailored to the nuances of Kazakh. This experiment highlights the importance of customizing language models to specific languages, especially in low-resource contexts such as Kazakh. Our fine-tuning strategies offer practical insights for developing NLP models for under-represented languages, with both better overall performance and a stronger grasp of the linguistic intricacies of Kazakh for real-world applications.

Table 1 compares the performance of various fine-tuning strategies applied to LLaMA-1.94B in the context of Kazakh language processing. The ChatTune-DPO configuration (62.7% accuracy) yields the highest score, surpassing both the Base Instruction setup (56.5%) and the Pseudo-DPO variant (61.3%). In addition to accuracy, the ChatTune-DPO approach shows notable gains in relevance, coherence, and context-awareness, underscoring the value of preference-based fine-tuning for aligning the model with human-evaluated quality measures.

Table 1.

Evaluation results summary.

For completeness, two publicly available Kazakh-supporting models were also included as benchmarks. The Kazakh mGPT 1.3B [26] system performed comparatively poorly, particularly in handling extended contexts and mitigating hallucinations, achieving 36.1% accuracy and 39.3% relevance. Meanwhile, LLama-3.1-KazLLM-1.0-8B (by ISSAI) [27] performed more competitively at 58.2% accuracy, although it still trailed the ChatTune-DPO model on multiple key metrics.

For additional evaluation, we built a simple benchmark set derived from the QThink-Task dataset, consisting of 200 manually selected samples for each task category [28]. The benchmark spans eleven diverse task types. This curated benchmark was designed to systematically measure model performance across both generation and reasoning challenges, with a particular focus on testing context understanding, logical inference, and linguistic robustness in the Kazakh language. It provides a lightweight but effective framework for comparing fine-tuning strategies and alignment techniques in low-resource scenarios (Table 2).

Table 2.

Task-level accuracy on the QThink-Task benchmark.

Overall, these results point to the effectiveness of targeted fine-tuning strategies in low-resource language environments. Notably, even a mid-scale model (1.94B parameters) can surpass larger, more generalized architectures (8B parameters) when optimized for Kazakh-specific tasks. Moreover, the improvements seen with Direct Preference Optimization (DPO)—particularly in instruction-following and context management—highlight its potential as a pivotal optimization approach for aligning language models in under-represented languages. The table also distinguishes between the standard DPO results (marked with an asterisk) and those obtained via enhanced DPO with contrastive and entropy-based regularization, demonstrating how additional adjustments can further improve alignment performance.

4. Discussion

4.1. Engineering Challenges and Solutions

The development and fine-tuning of the LLaMA 1.94B Kazakh language model presented several technical challenges, primarily due to the constraints associated with working with a low-resource language like Kazakh.

- Handling mixed-language data: Mixed-language content, particularly Russian text interspersed throughout the training corpus, made it difficult to keep the model focused on Kazakh while retaining its capacity to process code-switched contexts. To resolve this, we developed a targeted cleaning pipeline to filter non-Kazakh content and applied domain-specific tokenization strategies optimized for Kazakh morphological complexity. These steps balanced strict linguistic specificity with controlled flexibility for mixed-language inputs.

- Optimizing model performance: Achieving strong performance with a relatively small model of 1.94 billion parameters, especially compared with larger models trained on high-resource languages, posed another challenge. We addressed it by modifying the LLaMA architecture to suit the linguistic features of Kazakh, reducing the parameter count to manage computational load, and using a cosine annealing schedule with warm restarts to improve convergence during training.

- Managing hardware constraints: Training a large language model typically requires significant computational resources. With only two NVIDIA A100 GPUs (80 GB each) available, we had to manage these resources carefully to prevent bottlenecks and ensure smooth training. We used Distributed Data Parallel (DDP) to distribute the computational load across both GPUs, maximizing utilization and reducing training time. We also planned the training phases carefully, including 8000 warm-up steps, to stabilize the early phase of learning within the available hardware budget.

These solutions were integral to developing the Kazakh language model and show that, even with limited resources, significant advances in NLP for low-resource languages are achievable. Models that handle long sequences efficiently while reducing computational demands are clearly needed [3]; architectures that require less computation yet handle more tokens would further ease the constraints of limited hardware. Another significant challenge is ensuring the privacy and security of user data during model training. As Dam et al. note, training LLM-based chatbots often requires large datasets that may include sensitive information such as chat logs and personal details. To maintain trust and comply with privacy regulations, strict data anonymization, encryption protocols, and controlled data access are essential to protect user privacy throughout development [29].

4.2. Limitations

Despite encouraging results, several constraints temper our conclusions:

- Computational scale: Our experiments were limited to two 80 GB A100 GPUs; larger context windows and deeper hyperparameter sweeps remain unexplored.

- Domain coverage: The training corpus, while diverse, is still skewed toward news and literary prose; highly technical sub-domains (e.g., legal or medical) are under-represented.

- Evaluation breadth: Automatic metrics target general language proficiency; human judgments of cultural adequacy and bias were only collected for a small pilot subset and are not reported here.

- Synthetic-pair quality: Pseudo-DPO relies on embedding-based similarity; errors in the similarity heuristic could mislabel the “rejected” side, introducing noisy gradients. Improving pair-selection heuristics is left to future work.

- Code-switching generalization: While mixed Kazakh–Russian text was present in the corpus, a systematic evaluation of code-switched text generation and comprehension is pending.

4.3. Scientific Contributions

This research presents several scientific contributions to the field of NLP for low-resource languages:

- Novel insights into training language models for low-resource languages: The study provides valuable insights into the specific challenges and strategies associated with training language models for low-resource languages like Kazakh. The use of a carefully curated dataset that includes diverse text types, combined with innovative data preprocessing and model customization techniques, underscores the importance of tailored approaches for different linguistic contexts.

- The development of new techniques for model fine-tuning: The instruction-based fine-tuning methodology developed for this model represents a novel approach in fine-tuning LLMs for specific tasks. By focusing on instruction-based datasets and utilizing supervised fine-tuning, the model demonstrates significant improvements in generating context-aware and relevant responses. This showcases the effectiveness of these techniques in enhancing model performance for specialized applications.

- Open-Source Dataset Contributions: A distinctive contribution of this study lies in the family of datasets we built and open-sourced, providing a valuable resource for the research community (Table 3).

Table 3. Summary of Key Datasets Developed for Kazakh NLP.

By developing a Kazakh language model, we contribute to the democratization of AI technologies, enabling more inclusive access to LLMs as emphasized by Kozov et al. [1]. Additionally, our focus on creating a trustworthy and accurate model aligns with the need to combat misinformation, a concern highlighted in recent studies [2].

5. Conclusions

This study demonstrates a compact yet robust strategy for training and refining a Kazakh language model, achieving substantial performance gains in a low-resource setting. Adapting the LLaMA architecture for Kazakh, lowering computational overhead, and applying Direct Preference Optimization (DPO) with contrastive and entropy-based regularization resulted in a stable, high-performing model that surpassed baseline instruction-tuned variants and even larger-scale multilingual systems on key metrics such as accuracy, coherence, and context-awareness.

Three primary insights emerge from this work:

- Focused Monolingual Training: Concentrating on Kazakh-specific morphological and syntactic structures yields more context-aligned outputs than general-purpose multilingual models that dilute low-resource data.

- Preference-Based Fine-Tuning: Integrating DPO—especially when enhanced by contrastive constraints and entropy regularization—addresses common weaknesses in preference ranking, aligns responses with human feedback, and reduces hallucinations.

- Feasibility Under Resource Constraints: Employing a moderate-scale model (1.94B parameters) on limited GPU resources demonstrates that advanced NLP performance is achievable without massive computational budgets.

Data collected from real-world interactions, such as the ChatTune-DPO dataset, has proven invaluable in capturing nuanced user feedback and driving systematic improvements. Rigorous data handling—especially the cleaning and balancing of multilingual components—remains essential for preserving linguistic integrity in low-resource settings.

A key outcome of this study is the Pseudo-DPO dataset, which adapts existing instruction-tuning corpora for preference learning. By creating alternative responses through controlled sampling and labeling the closest match as “rejected”, this approach improves preference alignment while limiting the need for manual annotation. Our experiments indicate that Pseudo-DPO fine-tuning attains a performance similar to manually annotated DPO, highlighting its potential for low-resource settings. The work also delivers dedicated datasets for each stage of the modeling pipeline. Notably, the publicly released Kazakh corpus—curated to reflect both everyday usage and historically significant texts—provides a foundation for continued research on culturally aligned, low-resource language modeling.
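The Pseudo-DPO pair-construction step can be sketched as follows, assuming each instruction example provides a gold response and several sampled alternatives, all embedded with some sentence-embedding model. The toy vectors, the cosine-similarity criterion, and the 0.98 cut-off that discards near-duplicates of the gold answer are hypothetical choices for illustration; as Section 4.2 notes, errors in this similarity heuristic can mislabel the rejected side.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_pseudo_pair(prompt: str, gold: str, gold_emb: list[float],
                      candidates: list[tuple[str, list[float]]],
                      max_sim: float = 0.98):
    """Return a DPO-style record, or None if every candidate is a near-duplicate.

    The gold response becomes "chosen"; the sampled candidate closest to it
    (but below max_sim) becomes a hard-negative "rejected" response.
    """
    scored = [(text, cosine(gold_emb, emb)) for text, emb in candidates]
    eligible = [(text, sim) for text, sim in scored if sim < max_sim]
    if not eligible:
        return None
    rejected, _ = max(eligible, key=lambda pair: pair[1])
    return {"prompt": prompt, "chosen": gold, "rejected": rejected}


if __name__ == "__main__":
    candidates = [("copy", [1.0, 0.0]), ("close", [0.8, 0.6]), ("far", [0.0, 1.0])]
    print(build_pseudo_pair("question", "gold answer", [1.0, 0.0], candidates))
```

Choosing the closest non-duplicate candidate yields a hard negative, which gives a more informative preference signal than a random alternative but is also where similarity errors are most likely to produce a mislabeled pair.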

These findings suggest that combining well-curated data, language-specific tokenization, and preference-based fine-tuning can produce effective NLP models under constrained conditions. Future work will aim to expand domain coverage, refine DPO-style methods, and explore multilingual extensions to further enhance language technology for under-represented communities.

6. Future Work

6.1. Dataset Expansion and Quality Control

- Richer Domain Coverage: Collect more specialized texts (e.g., legal, medical, technical) to refine domain-aware generation and comprehension.

- Language Diversity: Incorporate code-switching texts (Kazakh–Russian–English) and dialectal data to capture broader real-world usage.

- Continuous Curation: Systematically improve data cleanliness and representation of under-sampled linguistic features for more robust downstream performance.

6.2. Query Decomposition and Multi-Turn Reasoning

- Chain-of-Thought (CoT) Enhancements: Experiment with advanced prompting strategies and multi-step logical reasoning to manage complex queries.

- Hierarchical Query Splitting: Investigate pipeline methods that decompose intricate questions into simpler sub-queries, particularly beneficial for educational or domain-specific scenarios.

6.3. Fine-Tuning for Retrieval-Augmented Generation (RAG)

- Dynamic Document Retrieval: Integrate a high-fidelity retrieval module for real-time access to external knowledge bases, improving factual correctness and reducing hallucinations.

- Domain-Specific RAG: Tailor RAG components to specialized fields (e.g., law or healthcare) by combining curated reference documents with the language model’s generative capabilities.

6.4. Deployment and Optimization for Edge Devices

- Parameter and Quantization Strategies: Explore 8-bit or 4-bit quantization to shrink model size while maintaining acceptable performance.

- Hardware-Specific Tuning: Optimize inference pipelines for edge accelerators (e.g., mobile GPUs, FPGAs), ensuring low-latency responses in resource-constrained environments.

- On-Device Caching and Compression: Implement token caching mechanisms and advanced compression to reduce computational overhead and enable real-time inference on lower-powered devices.

Author Contributions

Conceptualization, N.K., M.M. and Z.T.; methodology, N.K.; software, N.K.; validation, N.K., V.V. and M.M.; formal analysis, N.K. and M.M.; investigation, N.K.; resources, N.K. and M.M.; data curation, N.K.; writing—original draft preparation, N.K.; writing—review and editing, N.K., V.V. and M.M.; visualization, N.K.; supervision, M.M.; project administration, M.M.; funding acquisition, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Higher Education of the Republic of Kazakhstan, grant number BR24993001, “Creation of a large language model (LLM) to maintain the implementation of Kazakh language and increase the technological progress”.

Data Availability Statement

The datasets generated and analyzed during this study are publicly available. The primary training data, including the Kazakh General Corpus, ChatTune-DPO, and QThink-Task datasets, have been open-sourced to promote transparency and reproducibility in low-resource language research. These datasets can be accessed via the following repositories: Kazakh General Corpus [17,30]: https://huggingface.co/datasets/farabi-lab/kaznu-lib-ocr-for-lm (accessed on 19 January 2025), https://huggingface.co/datasets/farabi-lab/raw-text-for-clm-v1 (accessed on 19 January 2025); ChatTune-DPO [19]: https://huggingface.co/datasets/farabi-lab/user-feedback-dpo (accessed on 19 January 2025); QThink-Task [28]: https://huggingface.co/datasets/nur-dev/QThink-Task (accessed on 19 January 2025). For any additional data or resources not included in these repositories, please contact the corresponding author. Where required, data has been anonymized to protect participant privacy in accordance with applicable ethical guidelines.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kozov, V.; Ivanova, B.; Shoylekova, K.; Andreeva, M. Analyzing the Impact of a Structured LLM Workshop in Different Education Levels. Appl. Sci. 2024, 14, 6280.

- Wang, Q.; Li, H. On Continually Tracing Origins of LLM-Generated Text and Its Application in Detecting Cheating in Student Coursework. Big Data Cogn. Comput. 2025, 9, 50.

- Huang, D.; Yan, C.; Li, Q.; Peng, X. From Large Language Models to Large Multimodal Models: A Literature Review. Appl. Sci. 2024, 14, 5068.

- Kuznetsova, I.; Mukhamejanova, G.; Tuimebayev, Z.; Myrzaliyeva, S.; Aldasheva, K. Axiological Approach as a Factor of University Curriculum Language. XLinguae 2024, 17, 268–279.

- Papageorgiou, E.; Chronis, C.; Varlamis, I.; Himeur, Y. A Survey on the Use of Large Language Models (LLMs) in Fake News. Future Internet 2024, 16, 298.

- Pelofske, E.; Urias, V.; Liebrock, L.M. Automated Multi-Language to English Machine Translation Using Generative Pre-Trained Transformers. arXiv 2024, arXiv:2404.14680.

- Kamshat, A.; Auyeskhan, U.; Zarina, N.; Alen, S. Integration AI Techniques in Low-Resource Language: The Case of Kazakh Language. In Proceedings of the 2024 IEEE AITU: Digital Generation, Astana, Kazakhstan, 3–4 April 2024; IEEE: New York, NY, USA, 2024.

- Li, Z.; Shi, Y.; Liu, Z.; Yang, F.; Liu, N.; Du, M. Quantifying multilingual performance of large language models across languages. arXiv 2024, arXiv:2404.11553v2. Available online: https://arxiv.org/html/2404.11553v2 (accessed on 25 August 2024).

- Kardeş-NLU: Transfer to Low-Resource Languages with the Help of a High-Resource Cousin—A Benchmark and Evaluation for Turkic Languages. Available online: https://aclanthology.org/2024.eacl-long.100 (accessed on 18 August 2024).

- Ataman, D.; Derin, M.O.; Ivanova, S.; Köksal, A.; Sälevä, J.; Zeyrek, D. Proceedings of the First Workshop on Natural Language Processing for Turkic Languages (SIGTURK 2024); Association for Computational Linguistics: Bangkok, Thailand, 2024. Available online: https://aclanthology.org/2024.sigturk-1 (accessed on 22 September 2024).

- Ding, B.; Qin, C.; Zhao, R.; Luo, T.; Li, X.; Chen, G.; Xia, W.; Hu, J.; Luu, A.T.; Joty, S. Data Augmentation Using Large Language Models: Data Perspectives, Learning Paradigms and Challenges. arXiv 2024, arXiv:2403.02990.

- Jiang, F.; Xu, Z.; Niu, L.; Lin, B.Y.; Poovendran, R. ChatBug: A Common Vulnerability of Aligned LLMs Induced by Chat Templates. arXiv 2024, arXiv:2406.12935.

- Rafailov, R.; Sharma, A.; Mitchell, E.; Ermon, S.; Manning, C.D.; Finn, C. Direct Preference Optimization: Your Language Model is Secretly a Reward Model. arXiv 2023, arXiv:2305.18290.

- Farabi-Lab/Kazakh Text for Language Modeling—Normalized Dataset; Hugging Face, 2024. Available online: https://huggingface.co/datasets/farabi-lab/kaz-text-for-lm-normalized (accessed on 13 August 2024).

- Farabi-Lab/Kazakh Wikipedia Dumps Cleaned Dataset; Hugging Face, 2024. Available online: https://huggingface.co/datasets/farabi-lab/wiki_kk (accessed on 14 August 2024).

- Nurgali, K. llama-1.9B-kaz-instruct; Hugging Face, 2025. Available online: https://huggingface.co/nur-dev/llama-1.9B-kaz-instruct (accessed on 9 February 2025).

- Farabi-Lab/KazNU-Lib-OCR-for-LM Dataset; Hugging Face, 2024. Available online: https://huggingface.co/datasets/farabi-lab/kaznu-lib-ocr-for-lm (accessed on 19 January 2025).

- Lee, A.V.Y.; Teo, C.L.; Tan, S.C. Prompt Engineering for Knowledge Creation: Using Chain-of-Thought to Support Students’ Improvable Ideas. AI 2024, 5, 1446–1461.

- Nurgali, K. ChatTune-DPO; Hugging Face, 2025. Available online: https://huggingface.co/datasets/farabi-lab/user-feedback-dpo (accessed on 9 February 2025).

- Nurgali, K. Instruct-KZ-RL; Hugging Face, 2025. Available online: https://huggingface.co/datasets/nur-dev/kaz-instruct-rl (accessed on 12 January 2025).

- Luo, J.; Luo, X.; Chen, X.; Xiao, Z.; Ju, W.; Zhang, M. Semi-supervised Fine-tuning for Large Language Models. arXiv 2024, arXiv:2410.14745.

- Maree, M.; Shehada, W. Optimizing Curriculum Vitae Concordance: A Comparative Examination of Classical Machine Learning Algorithms and Large Language Model Architectures. AI 2024, 5, 1377–1390.

- Nurgali, K. llama-1.9B-kaz; Hugging Face, 2025. Available online: https://huggingface.co/nur-dev/llama-1.9B-kaz (accessed on 10 February 2025).

- Su, J.; Lu, Y.; Pan, S.; Murtadha, A.; Wen, B.; Liu, Y. RoFormer: Enhanced Transformer with Rotary Position Embedding. arXiv 2021, arXiv:2104.09864.

- Feng, D.; Qin, B.; Huang, C.; Zhang, Z.; Lei, W. Towards Analyzing and Understanding the Limitations of DPO: A Theoretical Perspective. arXiv 2024, arXiv:2404.04626.

- AI Forever. Kazakh mGPT 1.3B; Hugging Face, 2024. Available online: https://huggingface.co/ai-forever/mGPT-1.3B-kazakh (accessed on 16 February 2025).

- ISSAI. LLama-3.1-KazLLM-1.0-8B; Hugging Face, 2024. Available online: https://huggingface.co/issai/LLama-3.1-KazLLM-1.0-8B (accessed on 16 February 2025).

- Kadyrbek, N. QThink-Task: A Task-Level Benchmark for Evaluating Kazakh Language Models; Hugging Face, 2025. Available online: https://huggingface.co/datasets/nur-dev/QThink-Task (accessed on 30 April 2025).

- Dam, S.K.; Hong, C.S.; Qiao, Y.; Zhang, C. A Complete Survey on LLM-based AI Chatbots. arXiv 2024, arXiv:2406.16937.

- Kadyrbek, N. Raw Text for CLM V1 (Farabi-Lab/Raw-Text-for-Clm-V1); Hugging Face, 2024. Available online: https://huggingface.co/datasets/farabi-lab/raw-text-for-clm-v1 (accessed on 30 April 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).