Speech Separation Using Advanced Deep Neural Network Methods: A Recent Survey

Abstract

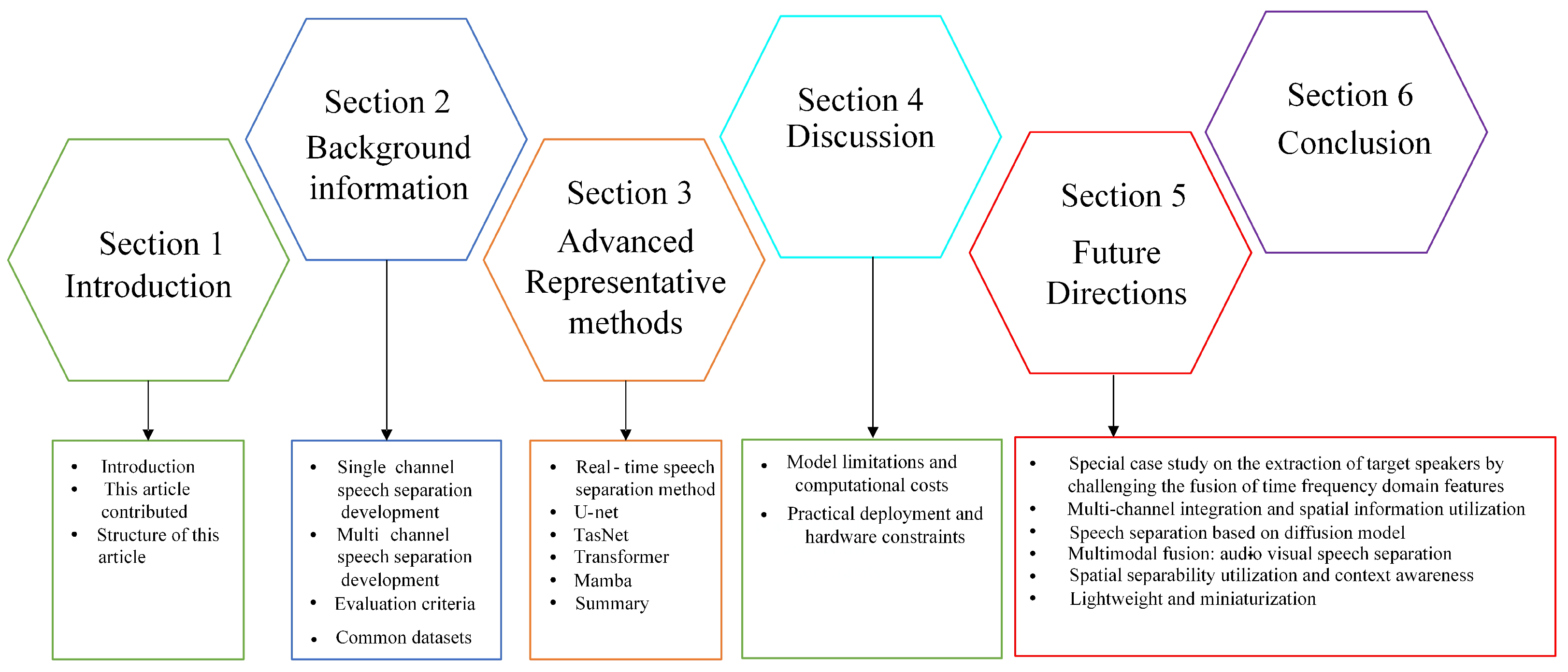

1. Introduction

- We systematically reviewed and evaluated the development and practical performance of deep learning techniques for single-channel supervised speech separation.

- We identified the open research gaps and core challenges in the field and examined how well-designed deep learning architectures can address them.

- We outlined future directions and proposed promising research avenues for advancing the field.

2. Background Information

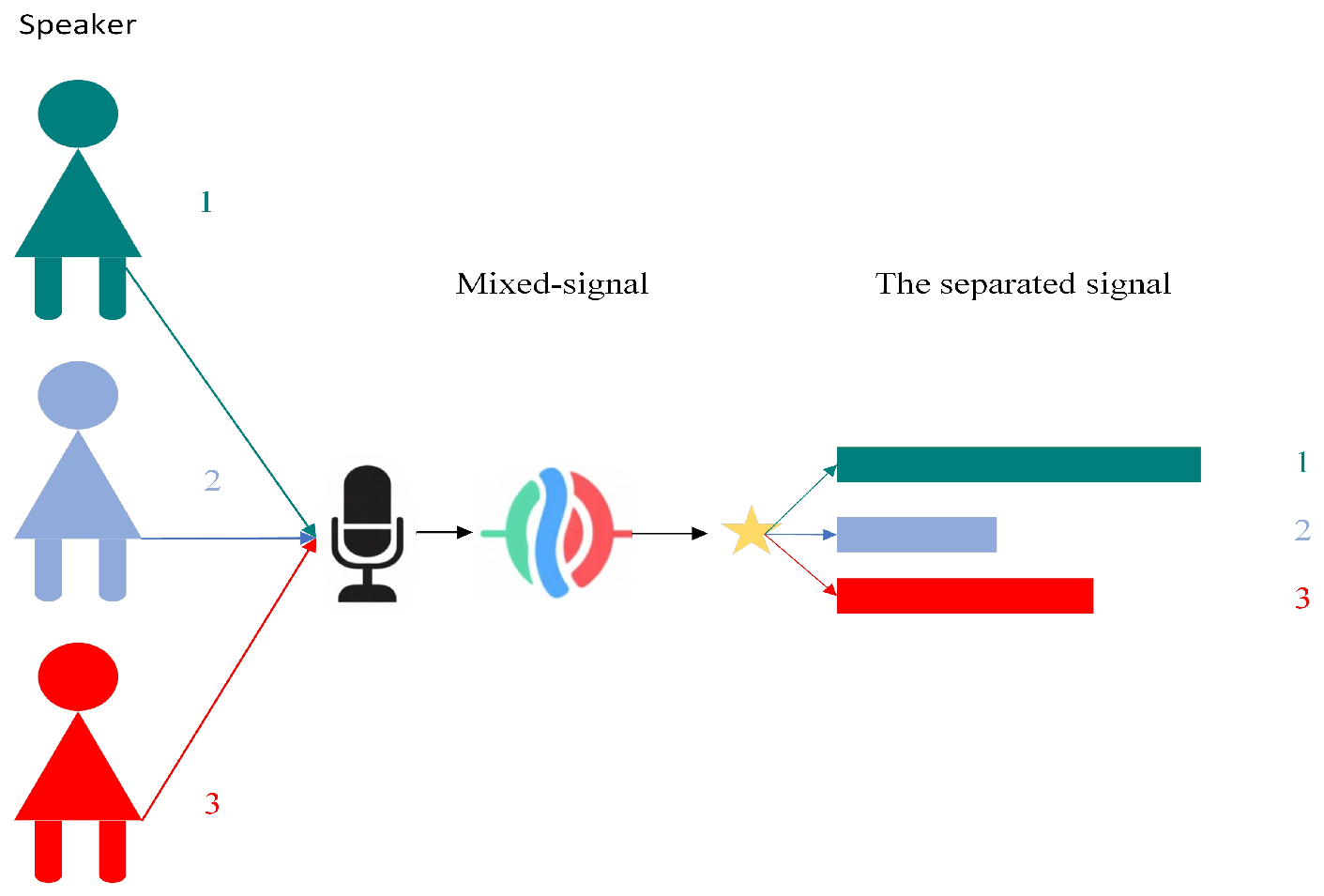

2.1. Overview of the Development of Single-Channel Speech Separation

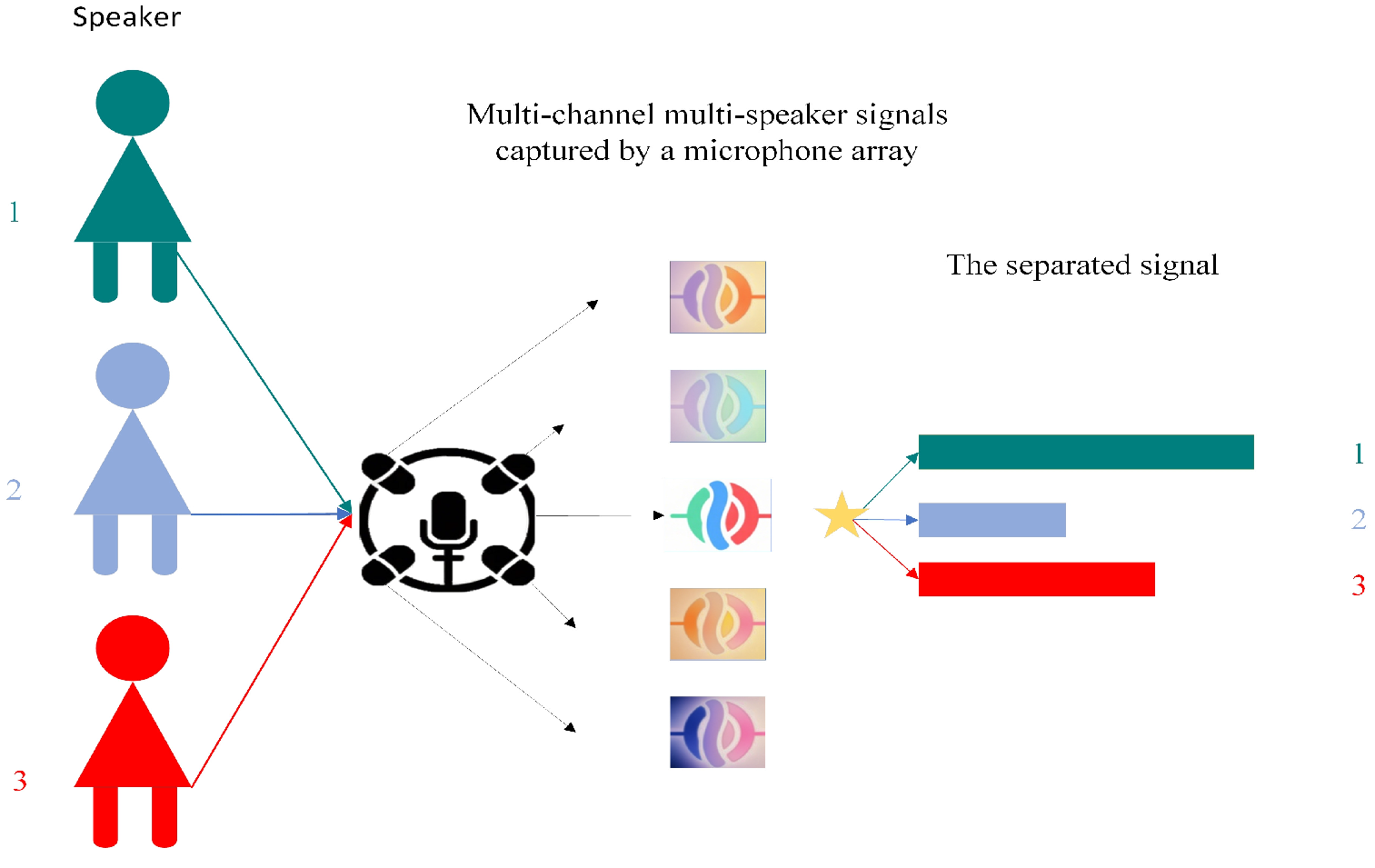

2.2. Overview of the Development of Multi-Channel Speech Separation

2.3. Evaluation Criteria
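Objective criteria in this field include the BSS Eval family of measures (SDR, SIR, SAR) [35], PESQ [36], and STOI [37]. As a minimal sketch of the SDR idea, the function below computes the scale-invariant SDR (SI-SDR), in which the only distortion the estimate may have relative to the reference is a single gain. This is a simplification, not the full BSS Eval SDR of [35], which also allows a time-invariant filtering distortion; the function name `si_sdr` and the `eps` guard are assumptions of this sketch. PESQ and STOI rely on standardized reference implementations and are not reproduced here.

```python
import math


def si_sdr(estimate, reference, eps=1e-12):
    """Scale-invariant SDR in dB between two equal-length signals (lists of floats).

    Simplified sketch: the estimate is projected onto the reference with one
    scalar gain; whatever is left over counts as distortion.
    """
    if len(estimate) != len(reference):
        raise ValueError("signals must have the same length")
    # Remove the mean of each signal, a common convention for SI-SDR.
    mean_est = sum(estimate) / len(estimate)
    mean_ref = sum(reference) / len(reference)
    est = [v - mean_est for v in estimate]
    ref = [v - mean_ref for v in reference]
    # Optimal scalar gain: least-squares projection of the estimate onto the reference.
    alpha = sum(e * r for e, r in zip(est, ref)) / (sum(r * r for r in ref) + eps)
    target = [alpha * r for r in ref]
    residual = [e - t for e, t in zip(est, target)]
    target_energy = sum(t * t for t in target)
    residual_energy = sum(d * d for d in residual)
    return 10 * math.log10((target_energy + eps) / (residual_energy + eps))
```

Because the gain is optimized away, rescaling the estimate leaves the score unchanged; only the part of the estimate orthogonal to the reference lowers it.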

2.4. Commonly Used Data Sets

| Name | Ref. | Year | Description |
|---|---|---|---|
| TIMIT | [38] | 1993 | Read American English speech from 630 speakers covering 8 major dialect regions; 6300 utterances. |
| WSJ0 | [39] | 2007 | Read English speech from the Wall Street Journal (CSR-I) corpus; mixed-speech sets such as WSJ0-2mix are generated from it. |
| MIR-1K | [40] | 2009 | 1000 Chinese pop song clips (16 kHz). |
| iKala | [41] | 2015 | 352 thirty-second CD-quality music clips, including instrumental solos. |
| LibriSpeech | [43] | 2015 | About 1000 h of 16 kHz read English speech. |
| MUSDB18 | [42] | 2017 | 150 full-length music tracks (about 10 h in total). |
| VCTK | [44] | 2019 | Speech from 110 English speakers with various accents. |
| LibriTTS | [45] | 2019 | 585 h of 24 kHz English speech with text, from 2456 speakers. |
| WHAM! | [46] | 2019 | Each WSJ0-2mix mixture paired with a unique noise background. |
| SMS-WSJ | [47] | 2019 | Multi-channel dataset (2–6 channels) based on WSJ0 and WSJ1. |
| LibriMix | [48] | 2020 | Mixtures of two or three LibriSpeech speakers, with optional WHAM! noise. |
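Several of the datasets above (WHAM!, LibriMix) are built by additively combining clean utterances with one another and with recorded noise at chosen level ratios. The sketch below illustrates that idea under simplifying assumptions: signals are plain Python lists at a common sample rate, gains are set from power ratios in dB, and the helper names `power`, `scale_to_snr`, and `make_noisy_two_speaker_mix` are hypothetical. It is not the official WHAM! or LibriMix recipe, whose gain sampling and normalization differ in detail.

```python
import math
import random


def power(x):
    """Mean power of a signal given as a list of floats."""
    return sum(v * v for v in x) / len(x)


def scale_to_snr(signal, reference, snr_db):
    """Rescale `signal` so that 10*log10(P_reference / P_signal) equals snr_db.

    Assumes `signal` is not all zeros.
    """
    target_power = power(reference) / (10 ** (snr_db / 10))
    gain = math.sqrt(target_power / power(signal))
    return [gain * v for v in signal]


def make_noisy_two_speaker_mix(s1, s2, noise, speaker_snr_db, noise_snr_db):
    """Hypothetical helper: mix two utterances and one noise clip.

    s2 is scaled so that s1 is `speaker_snr_db` dB louder than s2, and the
    noise is scaled relative to the clean two-speaker sum by `noise_snr_db`.
    All inputs are truncated to the shortest length.
    """
    n = min(len(s1), len(s2), len(noise))
    s1, s2, noise = s1[:n], s2[:n], noise[:n]
    s2 = scale_to_snr(s2, s1, speaker_snr_db)
    speech = [a + b for a, b in zip(s1, s2)]
    noise = scale_to_snr(noise, speech, noise_snr_db)
    mixture = [a + b for a, b in zip(speech, noise)]
    # Return the scaled sources too: they are the supervised training targets.
    return mixture, (s1, s2), noise
```

Returning the scaled sources alongside the mixture matters because supervised separation needs the exact per-speaker signals that were summed into each mixture as training targets.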

3. Advanced Representative Methods

3.1. Progress in Real-Time Speech Separation Based on Traditional Methods

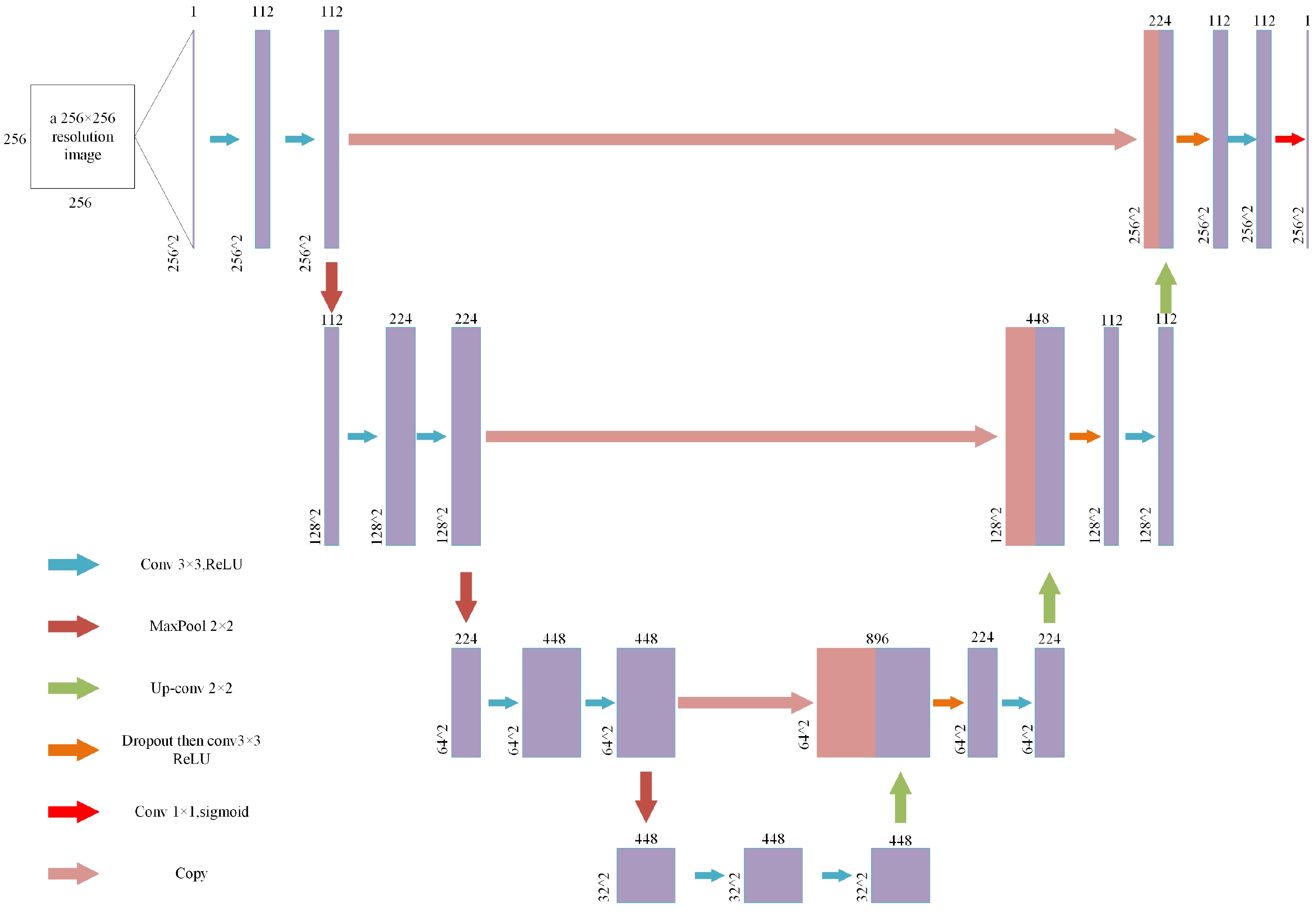

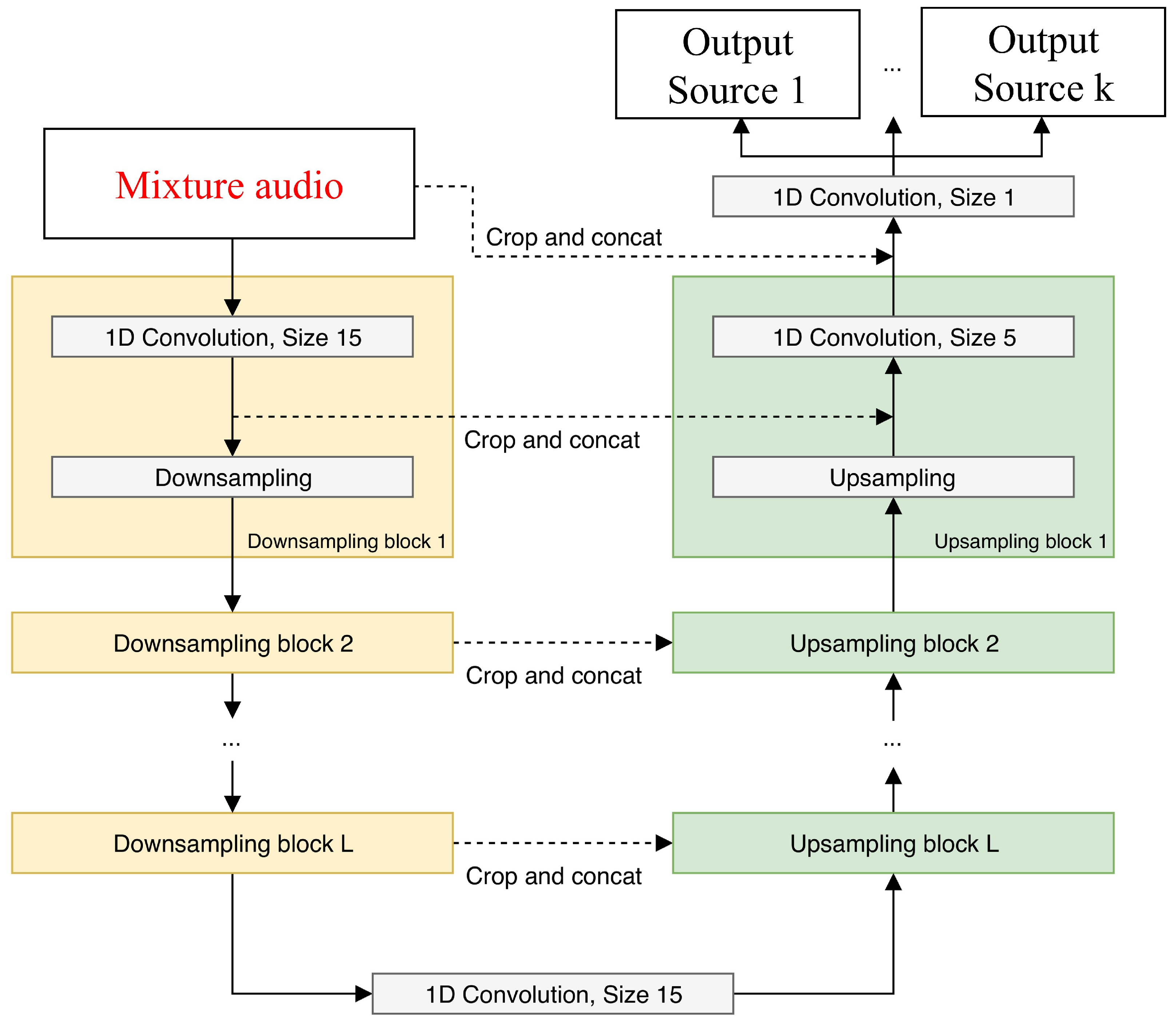

3.2. Progress in Speech Separation Methods Based on the U-Net Architecture

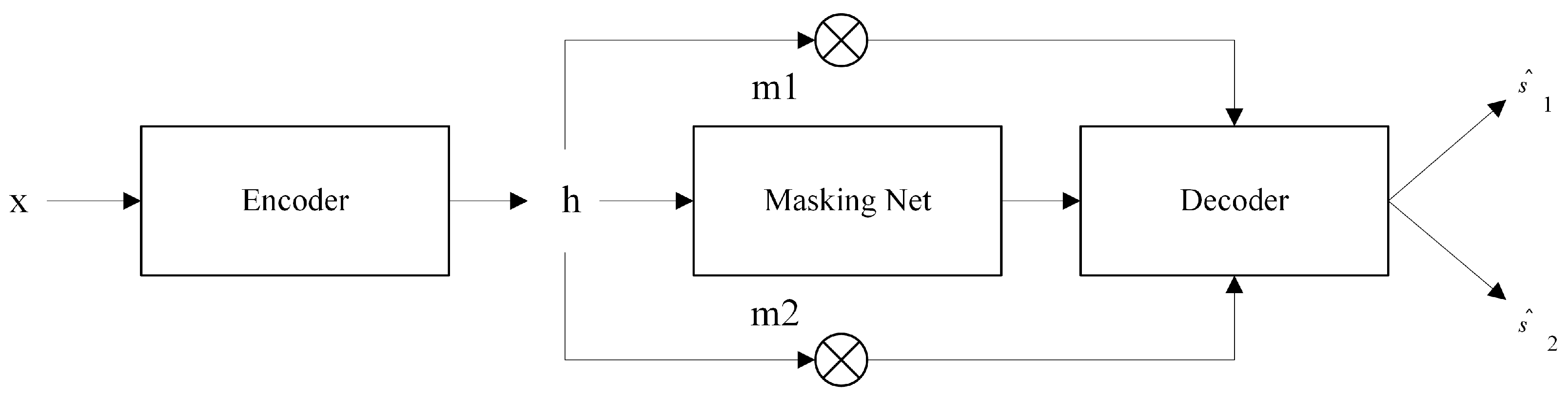

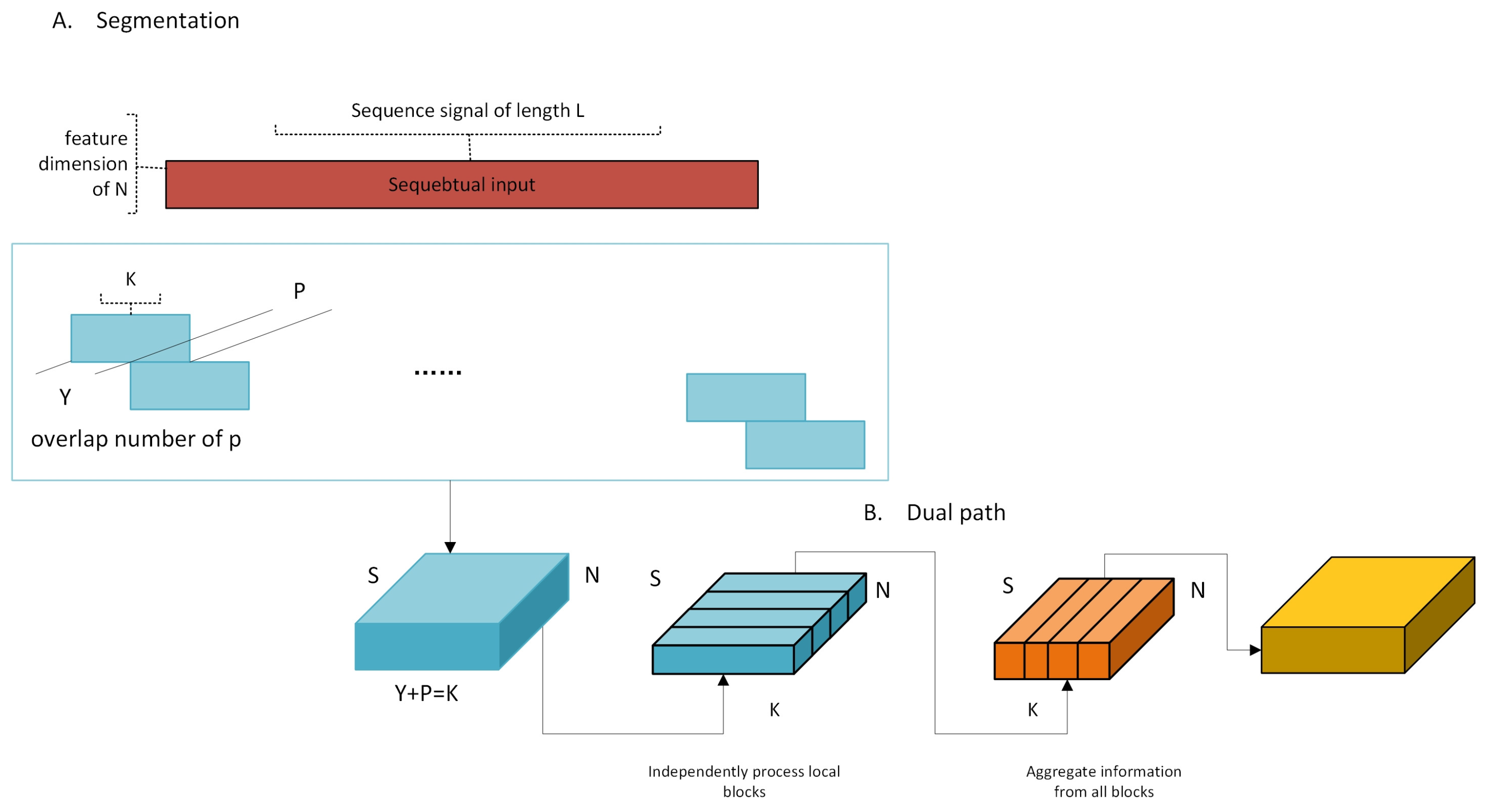

3.3. Progress in Speech Separation Methods Based on the TasNet Architecture

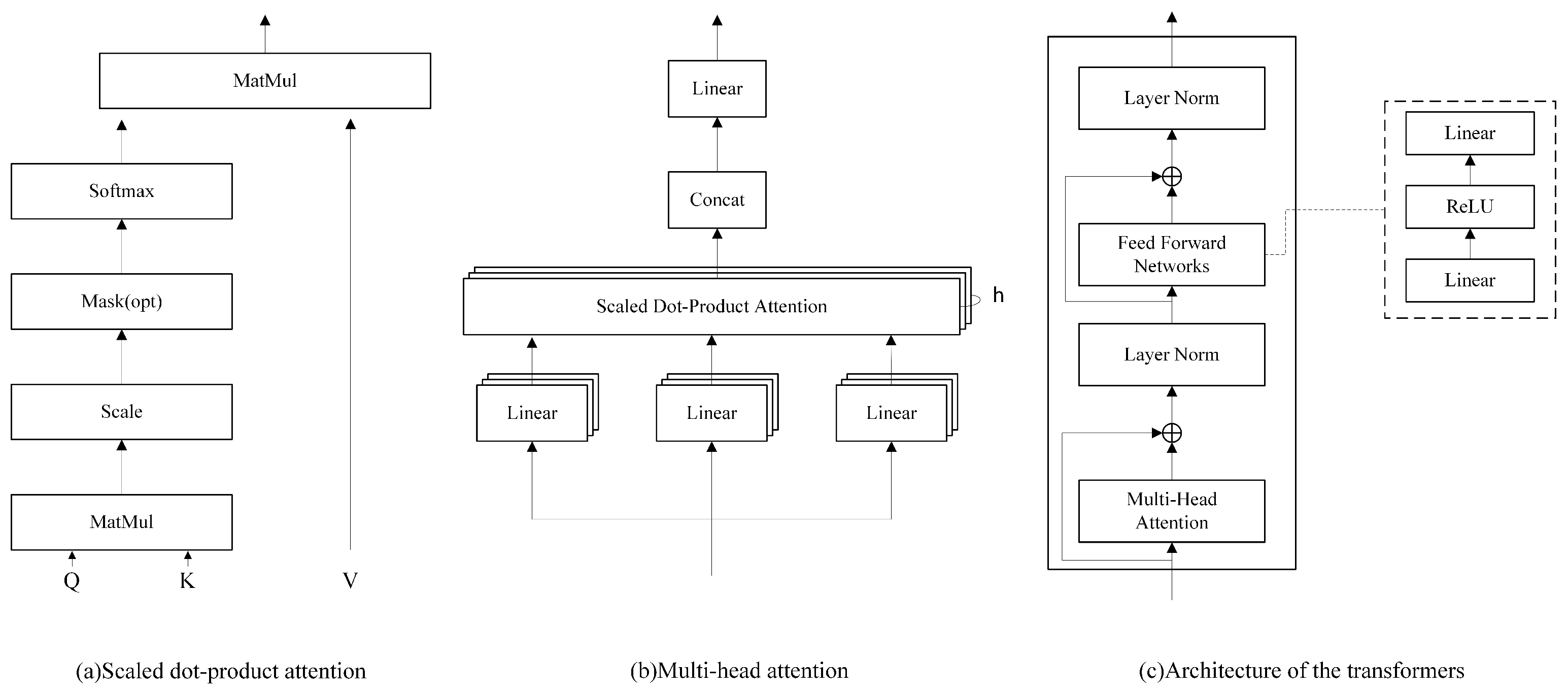

3.4. Progress in Speech Separation Methods Based on the Transformer Architecture

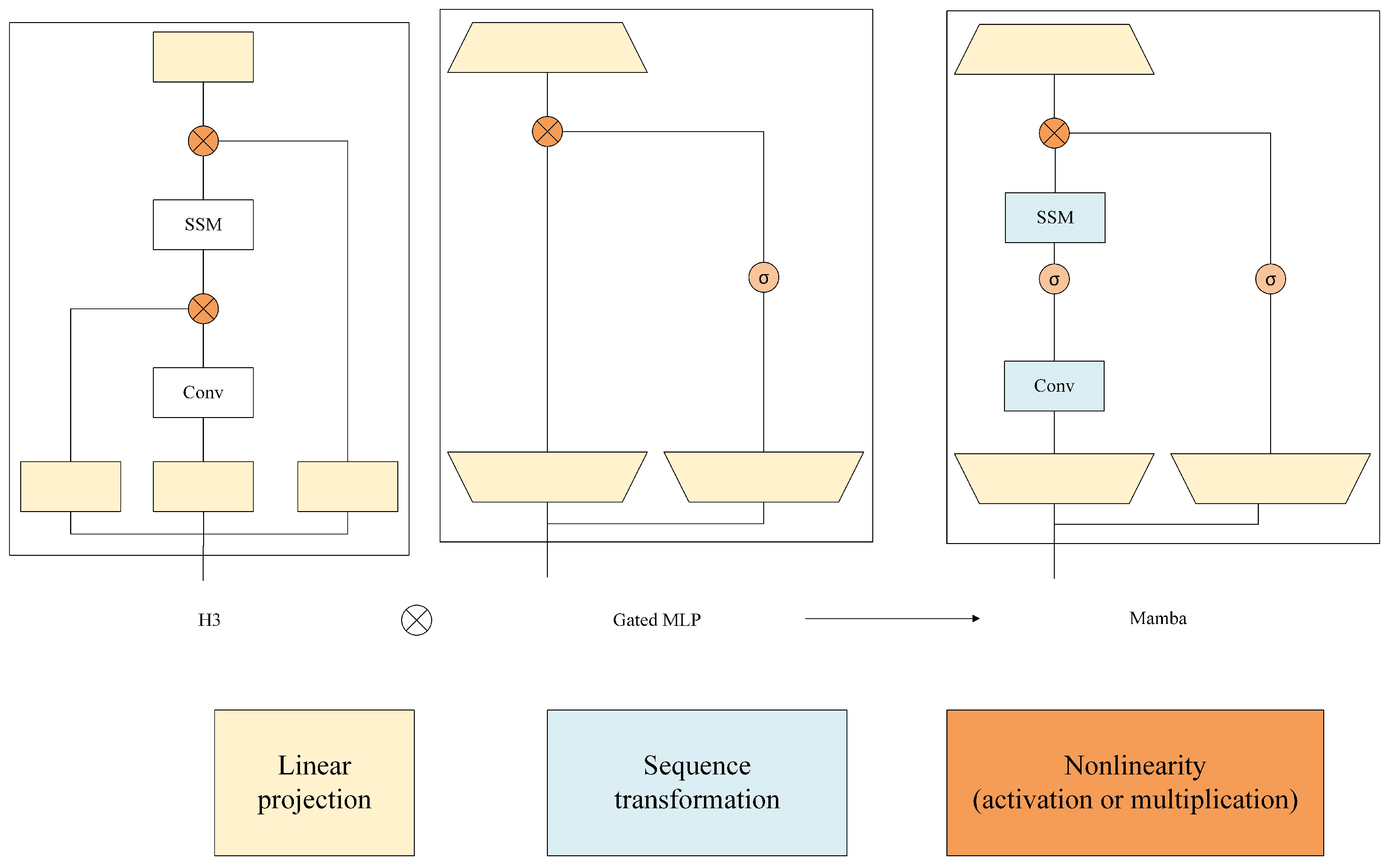

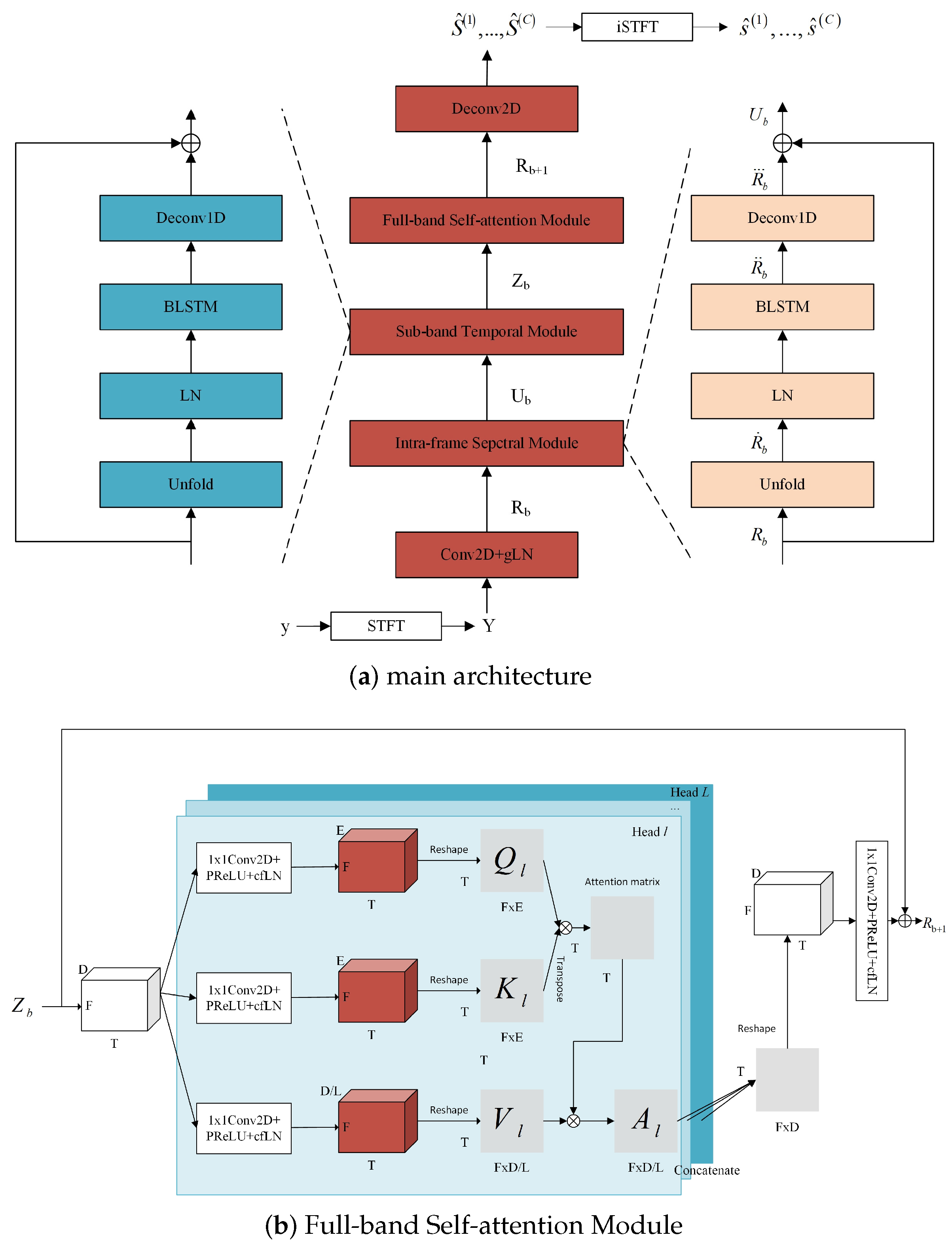

3.5. Progress in Speech Separation Methods Based on the Mamba Architecture

3.6. Summary

4. Discussion

4.1. Foundation

4.2. Critical Analytical Perspective

4.2.1. Inherent Limitations of the Models

4.2.2. Computational Cost and Performance Efficiency of the Models

4.2.3. Deployment Challenges and Hardware Limitations

5. Future Directions

5.1. Challenges in Time–Frequency Domain Feature Fusion

5.2. A Study of Exceptions in Target Speaker Extraction

5.3. Multi-Channel Fusion and Spatial Information Utilization

5.4. Speech Separation Based on Diffusion Models

5.5. Multimodal Fusion: Audio-Visual Speech Separation

5.6. Utilization of Spatial Separability and Context Awareness

5.7. Lightweight Miniaturization While Maintaining Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ochieng, P. Deep neural network techniques for monaural speech enhancement and separation: State of the art analysis. Artif. Intell. Rev. 2023, 56, 3651–3703. [Google Scholar] [CrossRef]

- Wang, Y.; Skerry-Ryan, R.; Stanton, D.; Wu, Y.; Weiss, R.J.; Jaitly, N.; Yang, Z.; Xiao, Y.; Chen, Z.; Bengio, S.; et al. Tacotron: Towards end-to-end speech synthesis. arXiv 2017, arXiv:1703.10135. [Google Scholar]

- Nayeem, M.; Tabrej, M.S.; Deb, K.J.; Goswami, S.; Hakim, M.A. Automatic Speech Recognition in the Modern Era: Architectures, Training, and Evaluation. arXiv 2025, arXiv:2510.12827. [Google Scholar] [CrossRef]

- Cherry, E.C.; Taylor, W. Some further experiments upon the recognition of speech, with one and with two ears. J. Acoust. Soc. Am. 1954, 26, 554–559. [Google Scholar] [CrossRef]

- Li, K.; Chen, G.; Sang, W.; Luo, Y.; Chen, Z.; Wang, S.; He, S.; Wang, Z.Q.; Li, A.; Wu, Z.; et al. Advances in speech separation: Techniques, challenges, and future trends. arXiv 2025, arXiv:2508.10830. [Google Scholar] [CrossRef]

- Rafii, Z.; Liutkus, A.; Stöter, F.R.; Mimilakis, S.I.; FitzGerald, D.; Pardo, B. An overview of lead and accompaniment separation in music. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1307–1335. [Google Scholar] [CrossRef]

- Wang, D.; Chen, J. Supervised speech separation based on deep learning: An overview. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1702–1726. [Google Scholar] [CrossRef] [PubMed]

- Ansari, S.; Alatrany, A.S.; Alnajjar, K.A.; Khater, T.; Mahmoud, S.; Al-Jumeily, D.; Hussain, A.J. A survey of artificial intelligence approaches in blind source separation. Neurocomputing 2023, 561, 126895. [Google Scholar] [CrossRef]

- He, P.; She, T.; Li, W.; Yuan, W. Single channel blind source separation on the instantaneous mixed signal of multiple dynamic sources. Mech. Syst. Signal Process. 2018, 113, 22–35. [Google Scholar] [CrossRef]

- Drude, L.; Hasenklever, D.; Haeb-Umbach, R. Unsupervised training of a deep clustering model for multichannel blind source separation. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; IEEE: New York, NY, USA, 2019; pp. 695–699. [Google Scholar]

- Gannot, S.; Burshtein, D.; Weinstein, E. Iterative and sequential Kalman filter-based speech enhancement algorithms. IEEE Trans. Speech Audio Process. 1998, 6, 373–385. [Google Scholar] [CrossRef]

- Kim, J.B.; Lee, K.; Lee, C. On the applications of the interacting multiple model algorithm for enhancing noisy speech. IEEE Trans. Speech Audio Process. 2000, 8, 349–352. [Google Scholar] [CrossRef]

- Ephraim, Y.; Van Trees, H.L. A signal subspace approach for speech enhancement. IEEE Trans. Speech Audio Process. 1995, 3, 251–266. [Google Scholar] [CrossRef]

- Boll, S. Suppression of acoustic noise in speech using spectral subtraction. IEEE Trans. Acoust. Speech Signal Process. 1979, 27, 113–120. [Google Scholar] [CrossRef]

- Lim, J.S.; Oppenheim, A.V. Enhancement and bandwidth compression of noisy speech. Proc. IEEE 1979, 67, 1586–1604. [Google Scholar] [CrossRef]

- Martin, R. Spectral subtraction based on minimum statistics. In Proceedings of the EUSIPCO-94, Edinburgh, UK, 13–16 September 1994. [Google Scholar]

- Cohen, I.; Berdugo, B. Speech enhancement for non-stationary noise environments. Signal Process. 2001, 81, 2403–2418. [Google Scholar] [CrossRef]

- Gersho, A.; Cuperman, V. Vector quantization: A pattern-matching technique for speech coding. IEEE Commun. Mag. 1983, 21, 15–21. [Google Scholar] [CrossRef]

- Roweis, S. One microphone source separation. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation: South Lake Tahoe, NV, USA, 2000; Volume 13. [Google Scholar]

- Virtanen, T. Speech recognition using factorial hidden Markov models for separation in the feature space. In Proceedings of the Interspeech, Pittsburgh, PA, USA, 17–21 September 2006. [Google Scholar]

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791. [Google Scholar] [CrossRef]

- Wang, D.; Brown, G.J. Computational Auditory Scene Analysis: Principles, Algorithms, and Applications; Wiley-IEEE Press: Hoboken, NJ, USA, 2006. [Google Scholar]

- Wang, Y.; Wang, D. Towards scaling up classification-based speech separation. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 1381–1390. [Google Scholar] [CrossRef]

- Lu, X.; Tsao, Y.; Matsuda, S.; Hori, C. Speech enhancement based on deep denoising autoencoder. In Proceedings of the Interspeech, Lyon, France, 25–29 August 2013; Volume 2013, pp. 436–440. [Google Scholar]

- Erdogan, H.; Hershey, J.R.; Watanabe, S.; Le Roux, J. Phase-sensitive and recognition-boosted speech separation using deep recurrent neural networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: New York, NY, USA, 2015; pp. 708–712. [Google Scholar]

- Williamson, D.S.; Wang, Y.; Wang, D. Complex ratio masking for monaural speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 483–492. [Google Scholar] [CrossRef]

- Hershey, J.R.; Chen, Z.; Le Roux, J.; Watanabe, S. Deep clustering: Discriminative embeddings for segmentation and separation. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; IEEE: New York, NY, USA, 2016; pp. 31–35. [Google Scholar]

- Yu, D.; Kolbæk, M.; Tan, Z.H.; Jensen, J. Permutation invariant training of deep models for speaker-independent multi-talker speech separation. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: New York, NY, USA, 2017; pp. 241–245. [Google Scholar]

- Fan, C.; Liu, B.; Tao, J.; Yi, J.; Wen, Z. Discriminative learning for monaural speech separation using deep embedding features. arXiv 2019, arXiv:1907.09884. [Google Scholar] [CrossRef]

- Kajala, M.; Hamalainen, M. Filter-and-sum beamformer with adjustable filter characteristics. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; IEEE: New York, NY, USA, 2001; Volume 5, pp. 2917–2920. [Google Scholar]

- Klemm, M.; Craddock, I.; Leendertz, J.; Preece, A.; Benjamin, R. Improved delay-and-sum beamforming algorithm for breast cancer detection. Int. J. Antennas Propag. 2008, 2008, 761402. [Google Scholar]

- Zhang, Z.; Xu, Y.; Yu, M.; Zhang, S.X.; Chen, L.; Yu, D. ADL-MVDR: All deep learning MVDR beamformer for target speech separation. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 6089–6093. [Google Scholar]

- Wang, Y.; Narayanan, A.; Wang, D. On training targets for supervised speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1849–1858. [Google Scholar] [CrossRef]

- Tawara, N.; Kobayashi, T.; Ogawa, T. Multi-Channel Speech Enhancement Using Time-Domain Convolutional Denoising Autoencoder. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019; pp. 86–90. [Google Scholar]

- Vincent, E.; Gribonval, R.; Févotte, C. Performance measurement in blind audio source separation. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1462–1469. [Google Scholar] [CrossRef]

- Rix, A.W.; Beerends, J.G.; Hollier, M.P.; Hekstra, A.P. Perceptual evaluation of speech quality (PESQ)-a new method for speech quality assessment of telephone networks and codecs. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; IEEE: New York, NY, USA, 2001; Volume 2, pp. 749–752. [Google Scholar]

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. A short-time objective intelligibility measure for time–frequency weighted noisy speech. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; IEEE: New York, NY, USA, 2010; pp. 4214–4217. [Google Scholar]

- Garofolo, J.S.; Lamel, L.F.; Fisher, W.M.; Fiscus, J.G.; Pallett, D.S. DARPA TIMIT acoustic-phonetic continuous speech corpus CD-ROM. NIST speech disc 1-1.1. NASA STI/Recon Tech. Rep. N 1993, 93, 27403. [Google Scholar]

- Garofolo, J.S.; Graff, D.; Paul, D.; Pallett, D. CSR-I (Wsj0) Complete; Linguistic Data Consortium: Philadelphia, PA, USA, 2007. [Google Scholar]

- Hsu, C.L.; Jang, J.S.R. On the improvement of singing voice separation for monaural recordings using the MIR-1K dataset. IEEE Trans. Audio Speech Lang. Process. 2009, 18, 310–319. [Google Scholar]

- Chan, T.S.; Yeh, T.C.; Fan, Z.C.; Chen, H.W.; Su, L.; Yang, Y.H.; Jang, R. Vocal activity informed singing voice separation with the iKala dataset. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: New York, NY, USA, 2015; pp. 718–722. [Google Scholar]

- Rafii, Z.; Liutkus, A.; Stöter, F.R.; Mimilakis, S.I.; Bittner, R. The MUSDB18 Corpus for Music Separation; Zenodo: Geneva, Switzerland, 2017. [Google Scholar]

- Panayotov, V.; Chen, G.; Povey, D.; Khudanpur, S. Librispeech: An asr corpus based on public domain audio books. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: New York, NY, USA, 2015; pp. 5206–5210. [Google Scholar]

- Yamagishi, J.; Veaux, C.; MacDonald, K. CSTR VCTK Corpus: English Multi-Speaker Corpus for CSTR Voice Cloning Toolkit (Version 0.92); University of Edinburgh, The Centre for Speech Technology Research (CSTR): Edinburgh, UK, 2019; pp. 271–350. [Google Scholar]

- Zen, H.; Dang, V.; Clark, R.; Zhang, Y.; Weiss, R.J.; Jia, Y.; Chen, Z.; Wu, Y. Libritts: A corpus derived from librispeech for text-to-speech. arXiv 2019, arXiv:1904.02882. [Google Scholar]

- Wichern, G.; Antognini, J.; Flynn, M.; Zhu, L.R.; McQuinn, E.; Crow, D.; Manilow, E.; Roux, J.L. Wham!: Extending speech separation to noisy environments. arXiv 2019, arXiv:1907.01160. [Google Scholar] [CrossRef]

- Drude, L.; Heitkaemper, J.; Boeddeker, C.; Haeb-Umbach, R. SMS-WSJ: Database, performance measures, and baseline recipe for multi-channel source separation and recognition. arXiv 2019, arXiv:1910.13934. [Google Scholar]

- Cosentino, J.; Pariente, M.; Cornell, S.; Deleforge, A.; Vincent, E. Librimix: An open-source dataset for generalizable speech separation. arXiv 2020, arXiv:2005.11262. [Google Scholar]

- Nakatani, T.; Yoshioka, T.; Kinoshita, K.; Miyoshi, M.; Juang, B.H. Blind speech dereverberation with multi-channel linear prediction based on short time Fourier transform representation. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; IEEE: New York, NY, USA, 2008; pp. 85–88. [Google Scholar]

- Ueda, T.; Nakatani, T.; Ikeshita, R.; Kinoshita, K.; Araki, S.; Makino, S. Low latency online blind source separation based on joint optimization with blind dereverberation. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 506–510. [Google Scholar]

- Sunohara, M.; Haruta, C.; Ono, N. Low-latency real-time blind source separation for hearing aids based on time-domain implementation of online independent vector analysis with truncation of non-causal components. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: New York, NY, USA, 2017; pp. 216–220. [Google Scholar]

- Ono, N. Stable and fast update rules for independent vector analysis based on auxiliary function technique. In Proceedings of the 2011 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 16–19 October 2011; IEEE: New York, NY, USA, 2011; pp. 189–192. [Google Scholar]

- Scheibler, R.; Ono, N. Fast and stable blind source separation with rank-1 updates. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 236–240. [Google Scholar]

- Nakashima, T.; Ono, N. Inverse-free online independent vector analysis with flexible iterative source steering. In Proceedings of the 2022 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Chiang Mai, Thailand, 7–10 November 2022; IEEE: New York, NY, USA, 2022; pp. 749–753. [Google Scholar]

- Ueda, T.; Nakatani, T.; Ikeshita, R.; Kinoshita, K.; Araki, S.; Makino, S. Blind and spatially-regularized online joint optimization of source separation, dereverberation, and noise reduction. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 1157–1172. [Google Scholar] [CrossRef]

- Mo, K.; Wang, X.; Yang, Y.; Makino, S.; Chen, J. Low algorithmic delay implementation of convolutional beamformer for online joint source separation and dereverberation. In Proceedings of the 2024 32nd European Signal Processing Conference (EUSIPCO), Lyon, France, 26–30 August 2024; IEEE: New York, NY, USA, 2024; pp. 912–916. [Google Scholar]

- He, Y.; Woo, B.H.; So, R.H. A Novel Weighted Sparse Component Analysis for Underdetermined Blind Speech Separation. In Proceedings of the ICASSP 2025-2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5. [Google Scholar]

- Jansson, A.; Humphrey, E.; Montecchio, N.; Bittner, R.; Kumar, A.; Weyde, T. Singing voice separation with deep u-net convolutional networks. In Proceedings of the 18th ISMIR Conference, Suzhou, China, 23–27 October 2017. [Google Scholar]

- Stoller, D.; Ewert, S.; Dixon, S. Wave-u-net: A multi-scale neural network for end-to-end audio source separation. arXiv 2018, arXiv:1806.03185. [Google Scholar]

- Macartney, C.; Weyde, T. Improved speech enhancement with the wave-u-net. arXiv 2018, arXiv:1811.11307. [Google Scholar] [CrossRef]

- Défossez, A.; Usunier, N.; Bottou, L.; Bach, F. Music source separation in the waveform domain. arXiv 2019, arXiv:1911.13254. [Google Scholar]

- Fu, Y.; Liu, Y.; Li, J.; Luo, D.; Lv, S.; Jv, Y.; Xie, L. Uformer: A unet based dilated complex & real dual-path conformer network for simultaneous speech enhancement and dereverberation. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: New York, NY, USA, 2022; pp. 7417–7421. [Google Scholar]

- Giri, R.; Isik, U.; Krishnaswamy, A. Attention wave-u-net for speech enhancement. In Proceedings of the 2019 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2019; IEEE: New York, NY, USA, 2019; pp. 249–253. [Google Scholar]

- He, B.; Wang, K.; Zhu, W.P. DBAUNet: Dual-branch attention U-Net for time-domain speech enhancement. In Proceedings of the TENCON 2022-2022 IEEE Region 10 Conference (TENCON), Hong Kong, China, 1–4 November 2022; IEEE: New York, NY, USA, 2022; pp. 1–6. [Google Scholar]

- Zhang, Z.; Xu, S.; Zhuang, X.; Qian, Y.; Zhou, L.; Wang, M. Half-Temporal and Half-Frequency Attention U²Net for Speech Signal Improvement. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–2. [Google Scholar]

- Cao, Y.; Xu, S.; Zhang, W.; Wang, M.; Lu, Y. Hybrid lightweight temporal-frequency analysis network for multi-channel speech enhancement. EURASIP J. Audio Speech Music Process. 2025, 2025, 21. [Google Scholar] [CrossRef]

- Bulut, A.E.; Koishida, K. Low-latency single channel speech enhancement using u-net convolutional neural networks. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 6214–6218. [Google Scholar]

- Rong, X.; Wang, D.; Hu, Y.; Zhu, C.; Chen, K.; Lu, J. UL-UNAS: Ultra-Lightweight U-Nets for Real-Time Speech Enhancement via Network Architecture Search. arXiv 2025, arXiv:2503.00340. [Google Scholar]

- Ho, M.T.; Lee, J.; Lee, B.K.; Yi, D.H.; Kang, H.G. A Cross-Channel Attention-Based Wave-U-Net for Multi-Channel Speech Enhancement. In Proceedings of the Interspeech, Shanghai, China, 25–29 October 2020; Volume 2020. [Google Scholar]

- Aroudi, A.; Uhlich, S.; Font, M.F. TRUNet: Transformer-recurrent-U network for multi-channel reverberant sound source separation. arXiv 2021, arXiv:2110.04047. [Google Scholar]

- Ren, X.; Zhang, X.; Chen, L.; Zheng, X.; Zhang, C.; Guo, L.; Yu, B. A Causal U-Net Based Neural Beamforming Network for Real-Time Multi-Channel Speech Enhancement. In Proceedings of the Interspeech, Brno, Czechia, 30 August–3 September 2021; pp. 1832–1836. [Google Scholar]

- Luo, Y.; Mesgarani, N. Tasnet: Time-domain audio separation network for real-time, single-channel speech separation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: New York, NY, USA, 2018; pp. 696–700. [Google Scholar]

- Heitkaemper, J.; Jakobeit, D.; Boeddeker, C.; Drude, L.; Haeb-Umbach, R. Demystifying TasNet: A dissecting approach. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 6359–6363. [Google Scholar]

- Luo, Y.; Mesgarani, N. Conv-tasnet: Surpassing ideal time–frequency magnitude masking for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266. [Google Scholar] [CrossRef] [PubMed]

- Kavalerov, I.; Wisdom, S.; Erdogan, H.; Patton, B.; Wilson, K.; Le Roux, J.; Hershey, J.R. Universal sound separation. In Proceedings of the 2019 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2019; IEEE: New York, NY, USA, 2019; pp. 175–179. [Google Scholar]

- Deng, C.; Zhang, Y.; Ma, S.; Sha, Y.; Song, H.; Li, X. Conv-TasSAN: Separative Adversarial Network Based on Conv-TasNet. In Proceedings of the Interspeech, Shanghai, China, 25–29 October 2020; pp. 2647–2651. [Google Scholar]

- Lee, J.H.; Chang, J.H.; Yang, J.M.; Moon, H.G. NAS-TasNet: Neural architecture search for time-domain speech separation. IEEE Access 2022, 10, 56031–56043. [Google Scholar] [CrossRef]

- Ditter, D.; Gerkmann, T. A multi-phase gammatone filterbank for speech separation via tasnet. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 36–40. [Google Scholar]

- Luo, Y.; Chen, Z.; Yoshioka, T. Dual-path rnn: Efficient long sequence modeling for time-domain single-channel speech separation. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 46–50. [Google Scholar]

- Wang, T.; Pan, Z.; Ge, M.; Yang, Z.; Li, H. Time-domain speech separation networks with graph encoding auxiliary. IEEE Signal Process. Lett. 2023, 30, 110–114. [Google Scholar] [CrossRef]

- Sato, H.; Moriya, T.; Mimura, M.; Horiguchi, S.; Ochiai, T.; Ashihara, T.; Ando, A.; Shinayama, K.; Delcroix, M. Speakerbeam-ss: Real-time target speaker extraction with lightweight Conv-TasNet and state space modeling. arXiv 2024, arXiv:2407.01857. [Google Scholar]

- Shi, H.; Wu, S.; Ye, M.; Ma, C. A speech separation model improved based on Conv-TasNet network. Proc. J. Phys. Conf. Ser. 2024, 2858, 012033. [Google Scholar] [CrossRef]

- Wazir, J.K.; Sheikh, J.A. Deep Speak Net: Advancing Speech Separation. J. Commun. 2025, 20. [Google Scholar]

- Ochiai, T.; Delcroix, M.; Ikeshita, R.; Kinoshita, K.; Nakatani, T.; Araki, S. Beam-TasNet: Time-domain audio separation network meets frequency-domain beamformer. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 6384–6388. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation: South Lake Tahoe, NV, USA, 2017; Volume 30. [Google Scholar]

- Gulati, A.; Qin, J.; Chiu, C.C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y.; et al. Conformer: Convolution-augmented transformer for speech recognition. arXiv 2020, arXiv:2005.08100. [Google Scholar] [CrossRef]

- Chen, J.; Mao, Q.; Liu, D. Dual-path transformer network: Direct context-aware modeling for end-to-end monaural speech separation. arXiv 2020, arXiv:2007.13975. [Google Scholar]

- Subakan, C.; Ravanelli, M.; Cornell, S.; Bronzi, M.; Zhong, J. Attention is all you need in speech separation. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 21–25. [Google Scholar]

- Rixen, J.; Renz, M. Sfsrnet: Super-resolution for single-channel audio source separation. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 11220–11228. [Google Scholar]

- Subakan, C.; Ravanelli, M.; Cornell, S.; Grondin, F.; Bronzi, M. Exploring self-attention mechanisms for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 2169–2180. [Google Scholar] [CrossRef]

- Chetupalli, S.R.; Habets, E.A. Speech Separation for an Unknown Number of Speakers Using Transformers with Encoder-Decoder Attractors. In Proceedings of the Interspeech, Incheon, Republic of Korea, 18–22 September 2022; pp. 5393–5397. [Google Scholar]

- Lee, Y.; Choi, S.; Kim, B.Y.; Wang, Z.Q.; Watanabe, S. Boosting unknown-number speaker separation with transformer decoder-based attractor. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 446–450. [Google Scholar]

- Zhao, S.; Ma, B. Mossformer: Pushing the performance limit of monaural speech separation using gated single-head transformer with convolution-augmented joint self-attentions. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Zhao, S.; Ma, Y.; Ni, C.; Zhang, C.; Wang, H.; Nguyen, T.H.; Zhou, K.; Yip, J.Q.; Ng, D.; Ma, B. Mossformer2: Combining transformer and rnn-free recurrent network for enhanced time-domain monaural speech separation. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 10356–10360. [Google Scholar]

- Shin, U.H.; Lee, S.; Kim, T.; Park, H.M. Separate and reconstruct: Asymmetric encoder-decoder for speech separation. Adv. Neural Inf. Process. Syst. 2024, 37, 52215–52240. [Google Scholar]

- Wang, C.; Liu, S.; Chen, S. A Lightweight Dual-Path Conformer Network for Speech Separation. In Proceedings of the CCF National Conference of Computer Applications, Harbin, China, 15–18 July 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 51–64. [Google Scholar]

- Wang, C.; Jia, M.; Li, M.; Ma, Y.; Yao, D. Hybrid dual-path network: Singing voice separation in the waveform domain by combining Conformer and Transformer architectures. Speech Commun. 2025, 168, 103171. [Google Scholar] [CrossRef]

- Liu, D.; Zhang, T.; Christensen, M.G.; Ma, B.; Deng, P. Efficient time-domain speech separation using short encoded sequence network. Speech Commun. 2025, 166, 103150. [Google Scholar] [CrossRef]

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752. [Google Scholar] [CrossRef]

- Wang, Z.Q.; Cornell, S.; Choi, S.; Lee, Y.; Kim, B.Y.; Watanabe, S. TF-GridNet: Making time–frequency domain models great again for monaural speaker separation. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Wang, Z.Q.; Cornell, S.; Choi, S.; Lee, Y.; Kim, B.Y.; Watanabe, S. TF-GridNet: Integrating full-and sub-band modeling for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 3221–3236. [Google Scholar] [CrossRef]

- Li, K.; Chen, G.; Yang, R.; Hu, X. Spmamba: State-space model is all you need in speech separation. arXiv 2024, arXiv:2404.02063. [Google Scholar] [CrossRef]

- Avenstrup, T.H.; Elek, B.; Mádi, I.L.; Schin, A.B.; Mørup, M.; Jensen, B.S.; Olsen, K. SepMamba: State-space models for speaker separation using Mamba. In Proceedings of the ICASSP 2025—2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5. [Google Scholar]

- Jiang, X.; Han, C.; Mesgarani, N. Dual-path mamba: Short and long-term bidirectional selective structured state space models for speech separation. In Proceedings of the ICASSP 2025—2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5. [Google Scholar]

- Liu, D.; Zhang, T.; Wei, Y.; Yi, C.; Christensen, M.G. Speech Conv-Mamba: Selective Structured State Space Model With Temporal Dilated Convolution for Efficient Speech Separation. IEEE Signal Process. Lett. 2025, 32, 2015–2019.

- Jiang, X.; Li, Y.A.; Florea, A.N.; Han, C.; Mesgarani, N. Speech slytherin: Examining the performance and efficiency of mamba for speech separation, recognition, and synthesis. In Proceedings of the ICASSP 2025 - 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

- Lee, D.; Choi, J.W. DeFT-Mamba: Universal multichannel sound separation and polyphonic audio classification. In Proceedings of the ICASSP 2025 - 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

- Venkatesh, S.; Benilov, A.; Coleman, P.; Roskam, F. Real-time low-latency music source separation using hybrid spectrogram-tasnet. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 611–615.

- Mishra, H.; Shukla, M.K.; Priyanshu; Dengre, S.; Singh, Y.; Pandey, O.J. A Lightweight Causal Sound Separation Model for Real-Time Hearing Aid Applications. IEEE Sens. Lett. 2025, 9, 6003504.

- Yang, L.; Liu, W.; Wang, W. TFPSNet: Time–frequency domain path scanning network for speech separation. In Proceedings of the ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: New York, NY, USA, 2022; pp. 6842–6846.

- Saijo, K.; Wichern, G.; Germain, F.G.; Pan, Z.; Le Roux, J. TF-Locoformer: Transformer with local modeling by convolution for speech separation and enhancement. In Proceedings of the 2024 18th International Workshop on Acoustic Signal Enhancement (IWAENC), Aalborg, Denmark, 9–12 September 2024; IEEE: New York, NY, USA, 2024; pp. 205–209.

- Qian, S.; Gao, L.; Jia, H.; Mao, Q. Efficient monaural speech separation with multiscale time-delay sampling. In Proceedings of the ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: New York, NY, USA, 2022; pp. 6847–6851.

- Zhai, Y.H.; Hua, Q.; Wang, X.W.; Dong, C.R.; Zhang, F.; Xu, D.C. Triple-Path RNN Network: A Time-and-Frequency Joint Domain Speech Separation Model. In Proceedings of the International Conference on Parallel and Distributed Computing: Applications and Technologies, Jeju, Republic of Korea, 16–18 August 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 239–248.

- Wang, L.; Zhang, H.; Qiu, Y.; Jiang, Y.; Dong, H.; Guo, P. Improved Speech Separation via Dual-Domain Joint Encoder in Time-Domain Networks. In Proceedings of the 2024 International Conference on Electronic Engineering and Information Systems (EEISS), Changsha, China, 13–15 January 2024; IEEE: New York, NY, USA, 2024; pp. 233–239.

- Hao, F.; Li, X.; Zheng, C. X-TF-GridNet: A time–frequency domain target speaker extraction network with adaptive speaker embedding fusion. Inf. Fusion 2024, 112, 102550.

- Liu, K.; Du, Z.; Wan, X.; Zhou, H. X-sepformer: End-to-end speaker extraction network with explicit optimization on speaker confusion. In Proceedings of the ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Zeng, B.; Suo, H.; Wan, Y.; Li, M. Sef-net: Speaker embedding free target speaker extraction network. In Proceedings of the Interspeech, Dublin, Ireland, 20–24 August 2023; pp. 3452–3456.

- Zeng, B.; Li, M. Usef-tse: Universal speaker embedding free target speaker extraction. IEEE Trans. Audio Speech Lang. Process. 2025, 33, 2110–2124.

- Quan, C.; Li, X. SpatialNet: Extensively learning spatial information for multichannel joint speech separation, denoising and dereverberation. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 1310–1323.

- Kalkhorani, V.A.; Wang, D. TF-CrossNet: Leveraging global, cross-band, narrow-band, and positional encoding for single- and multi-channel speaker separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 4999–5009.

- Shin, U.H.; Ku, B.H.; Park, H.M. TF-CorrNet: Leveraging Spatial Correlation for Continuous Speech Separation. IEEE Signal Process. Lett. 2025, 32, 1875–1879.

- Lu, Y.J.; Wang, Z.Q.; Watanabe, S.; Richard, A.; Yu, C.; Tsao, Y. Conditional diffusion probabilistic model for speech enhancement. In Proceedings of the ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: New York, NY, USA, 2022; pp. 7402–7406.

- Scheibler, R.; Ji, Y.; Chung, S.W.; Byun, J.; Choe, S.; Choi, M.S. Diffusion-based generative speech source separation. In Proceedings of the ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Dong, J.; Wang, X.; Mao, Q. EDSep: An Effective Diffusion-Based Method for Speech Source Separation. In Proceedings of the ICASSP 2025 - 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

- Michelsanti, D.; Tan, Z.H.; Zhang, S.X.; Xu, Y.; Yu, M.; Yu, D.; Jensen, J. An overview of deep-learning-based audio-visual speech enhancement and separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1368–1396.

- Afouras, T.; Chung, J.S.; Zisserman, A. The conversation: Deep audio-visual speech enhancement. arXiv 2018, arXiv:1804.04121.

- Gao, R.; Grauman, K. Visualvoice: Audio-visual speech separation with cross-modal consistency. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 15490–15500.

- Wu, J.; Xu, Y.; Zhang, S.X.; Chen, L.W.; Yu, M.; Xie, L.; Yu, D. Time domain audio visual speech separation. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019; IEEE: New York, NY, USA, 2019; pp. 667–673.

- Kalkhorani, V.A.; Kumar, A.; Tan, K.; Xu, B.; Wang, D. Time-domain transformer-based audiovisual speaker separation. In Proceedings of the Interspeech, Dublin, Ireland, 20–24 August 2023; pp. 3472–3476.

- Kalkhorani, V.A.; Kumar, A.; Tan, K.; Xu, B.; Wang, D. Audiovisual speaker separation with full- and sub-band modeling in the time–frequency domain. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 12001–12005.

- Pan, Z.; Wichern, G.; Masuyama, Y.; Germain, F.G.; Khurana, S.; Hori, C.; Le Roux, J. Scenario-aware audio-visual TF-Gridnet for target speech extraction. In Proceedings of the 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Taipei, Taiwan, 16–20 December 2023; IEEE: New York, NY, USA, 2023; pp. 1–8.

- Lee, S.; Jung, C.; Jang, Y.; Kim, J.; Chung, J.S. Seeing through the conversation: Audio-visual speech separation based on diffusion model. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 12632–12636.

- Xu, X.; Tu, W.; Yang, Y. Efficient audio–visual information fusion using encoding pace synchronization for Audio–Visual Speech Separation. Inf. Fusion 2025, 115, 102749.

- Lee, C.H.; Yang, C.; Saidutta, Y.M.; Srinivasa, R.S.; Shen, Y.; Jin, H. Better Exploiting Spatial Separability in Multichannel Speech Enhancement with an Align-and-Filter Network. In Proceedings of the ICASSP 2025 - 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

- Qian, Y.; Li, C.; Zhang, W.; Lin, S. Contextual understanding with contextual embeddings for multi-talker speech separation and recognition in a cocktail party. npj Acoust. 2025, 1, 3.

- Wang, Q.; Du, J.; Dai, L.R.; Lee, C.H. Joint noise and mask aware training for DNN-based speech enhancement with sub-band features. In Proceedings of the 2017 Hands-Free Speech Communications and Microphone Arrays (HSCMA), San Francisco, CA, USA, 1–3 March 2017; IEEE: New York, NY, USA, 2017; pp. 101–105.

- Luo, Y.; Han, C.; Mesgarani, N. Ultra-lightweight speech separation via group communication. In Proceedings of the ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 16–20.

- Tan, H.M.; Vu, D.Q.; Lee, C.T.; Li, Y.H.; Wang, J.C. Selective mutual learning: An efficient approach for single channel speech separation. In Proceedings of the ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: New York, NY, USA, 2022; pp. 3678–3682.

- Elminshawi, M.; Chetupalli, S.R.; Habets, E.A. Slim-Tasnet: A slimmable neural network for speech separation. In Proceedings of the 2023 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 22–25 October 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Rong, X.; Sun, T.; Zhang, X.; Hu, Y.; Zhu, C.; Lu, J. GTCRN: A speech enhancement model requiring ultralow computational resources. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 971–975.

- Yang, L.; Liu, W.; Meng, R.; Lee, G.; Baek, S.; Moon, H.G. FSPEN: An ultra-lightweight network for real time speech enahncment. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: New York, NY, USA, 2024; pp. 10671–10675.

- Li, Y.; Li, K.; Yin, X.; Yang, Z.; Dong, J.; Dong, Z.; Yang, C.; Tian, Y.; Lu, Y. Sepprune: Structured pruning for efficient deep speech separation. arXiv 2025, arXiv:2505.12079.

| Refs. | Year | Title |
|---|---|---|
| [6] | 2018 | An overview of lead and accompaniment separation in music |
| [7] | 2018 | Supervised speech separation based on deep learning: An overview |
| [1] | 2023 | Deep neural network techniques for monaural speech enhancement and separation: state of the art analysis |
| [8] | 2023 | A survey of artificial intelligence approaches in blind source separation |
| [5] | 2025 | Advances in speech separation: Techniques, challenges, and future trends |

| Category | Type | Method | Core Concept | Advantages | Limitations |
|---|---|---|---|---|---|
| Single-Channel | Traditional | Time-domain [11,12,13] | Direct parameter estimation or subspace decomposition | Low complexity, minimal hardware | Limited in non-stationary noise |
| | | Frequency-domain [14,15,16,17] | Spectral noise statistics estimation | Cost-effective, real-time capable | Dependent on VAD and noise estimation |
| | | VQ [18] | Discrete codebook feature modeling | Pioneered data-driven approaches | Limited generalization |
| | | GMM [19] | Continuous Gaussian mixture modeling | Superior to VQ for continuous features | Assumes frame independence |
| | | HMM [20] | Temporal dynamic modeling with factorial models | Captures speech temporal structure | Complexity grows exponentially with the number of speakers |
| | | NMF [21] | Spectral basis + weight decomposition | Flexible, assumption-free | Computationally intensive |
| | | CASA [22] | Human auditory perception simulation | Biologically plausible | Rule-limited generalization |
| | Deep Learning | IBM [23] | Time–frequency binary classification | Intuitive, reliable baseline | Permutation ambiguity |
| | | Autoencoder [24] | End-to-end spectral mapping | Automated feature learning | Permutation issues persist |
| | | PSM/cIRM [25,26] | Phase-sensitive and complex spectral mask estimation | Enhanced quality | Multi-speaker challenges remain |
| | | Deep Clustering [27] | Embedding space clustering | Solves permutation ambiguity | Computational overhead |
| | | PIT [28] | Minimum-loss assignment training | End-to-end, efficient | Data hungry |
| | | DC+PIT [29] | Hybrid clustering + PIT framework | Balances accuracy and efficiency | Increased complexity |
| Multi-Channel | Traditional | ICA/IVA | Statistical independence separation | Capable of blind separation | Reverberation sensitive |
| | | Beamforming [30,31] | Spatial filtering | Robust interference rejection | Hardware cost, direction dependency |
| | Deep Learning | Neural Beamforming [32] | DNN-based weight estimation | Physical + neural integration | Training complexity |
| | | DNN Masks | Spatial + spectral masking | Mature mask approach | Layout sensitivity |
| | | DAE/CDAE | Implicit spatial learning | Delay robust, real-time | Debugging challenges |
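
The PIT row summarizes permutation invariant training as minimum-loss assignment training. As a minimal sketch of that idea, assuming NumPy and using mean squared error as the per-pair loss (SI-SNR is a common alternative), the function below scores every assignment of network outputs to reference speakers and keeps the cheapest one. The names `pairwise_mse` and `pit_loss` are hypothetical and are not taken from any cited implementation.

```python
import itertools

import numpy as np


def pairwise_mse(est: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """(C, C) matrix whose entry (i, j) is the MSE between estimate i and reference j.

    est, ref: arrays of shape (C, T) holding C sources of T samples each.
    """
    diff = est[:, None, :] - ref[None, :, :]
    return np.mean(diff ** 2, axis=-1)


def pit_loss(est: np.ndarray, ref: np.ndarray) -> tuple[float, tuple[int, ...]]:
    """Lowest mean MSE over all output-to-speaker assignments.

    Returns the loss and a permutation perm in which estimate i is matched to reference perm[i].
    """
    n_src = est.shape[0]
    cost = pairwise_mse(est, ref)
    best_loss, best_perm = np.inf, tuple(range(n_src))
    # Exhaustive search over C! assignments; fine for the usual 2-3 speaker case
    for perm in itertools.permutations(range(n_src)):
        loss = float(np.mean([cost[i, perm[i]] for i in range(n_src)]))
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm


# Usage: outputs emitted in swapped order still receive zero loss
rng = np.random.default_rng(0)
ref = rng.normal(size=(2, 100))
loss, perm = pit_loss(ref[::-1], ref)
print(loss, perm)  # 0.0 (1, 0)
```

Because the search enumerates all C! assignments, it only suits a small number of speakers; since the total loss here is a sum of per-pair costs, an assignment solver such as SciPy's `scipy.optimize.linear_sum_assignment` can find the same minimum for larger C.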

| Characteristic | U-Net (for Images) | Wave-U-Net (for Audio) |
|---|---|---|
| Data Domain | 2D spatial | 1D temporal |
| Basic Operations | 2D convolution/transposed convolution | 1D convolution/transposed convolution |
| Downsampling | Reduces spatial size (H, W) | Reduces temporal length (L) |
| Upsampling | Restores spatial size (H, W) | Restores temporal length (L) |
| Skip Connection | Feature map concatenation | Feature map concatenation (after cropping) |
| Output | 2D map (pixel labels) | 1D waveform (samples) |
| Primary Concern | Trade-off between spatial detail and context | Temporal/phase precision and artifact avoidance |
| Primary Objective and Loss Function | Predicted vs. true spectrum, L1 norm | Reconstructed vs. target waveform, MSE (mean squared error) |
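
To make the 1D temporal resampling in this comparison concrete, the NumPy sketch below halves and restores the time axis L of a (channels, L) feature map and merges encoder features back in. It omits the convolutions entirely; decimation for downsampling and linear interpolation for upsampling are illustrative choices, and both merge modes are shown because concatenation adds the channel counts while summation requires them to match. All function names are hypothetical.

```python
import numpy as np


def downsample(x: np.ndarray) -> np.ndarray:
    """Halve the temporal length of x (channels, L) by keeping every other sample."""
    return x[:, ::2]


def upsample_linear(x: np.ndarray, target_len: int) -> np.ndarray:
    """Restore the temporal length by linear interpolation along the time axis."""
    src_pos = np.linspace(0.0, 1.0, x.shape[1])
    dst_pos = np.linspace(0.0, 1.0, target_len)
    return np.stack([np.interp(dst_pos, src_pos, channel) for channel in x])


def merge_skip(decoder: np.ndarray, skip: np.ndarray, mode: str = "concat") -> np.ndarray:
    """Combine decoder features with encoder features of the same temporal length."""
    if mode == "concat":
        # Channel counts add up: (C1 + C2, L)
        return np.concatenate([decoder, skip], axis=0)
    if mode == "sum":
        # Channel counts must match: (C, L)
        return decoder + skip
    raise ValueError(f"unknown mode: {mode}")


# Usage: a 4-channel, 1 s feature map at 16 kHz
x = np.random.default_rng(0).normal(size=(4, 16000))
d = downsample(x)                  # (4, 8000)
u = upsample_linear(d, x.shape[1])  # (4, 16000)
print(merge_skip(u, x, "concat").shape, merge_skip(u, x, "sum").shape)
```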

| Refs. | Year | Description | Target | Dataset | Metrics | Model, Param. (M) | MACs (G/s) |
|---|---|---|---|---|---|---|---|
| [58] | 2017 | Applies the U-Net architecture to source separation | Transfer and adaptive refinement of the U-Net architecture | iKala | NSDR Vocal: 11.094, NSDR Instrumental: 14.435, SIR Vocal: 23.960, SIR Instrumental: 21.832, SAR Vocal: 17.715, SAR Instrumental: 14.120 | U-Net, - | - |
| [59] | 2018 | Wave-U-Net, a one-dimensional time-domain adaptation of the U-Net architecture | - | Mixed dataset | Median SDR: 4.46 | Wave-U-Net-M4, - | - |
| [60] | 2018 | Compares the performance of Wave-U-Net variants with different numbers of layers | - | VCTK | PESQ: 2.41, CSIG: 3.54, CBAK: 3.23, COVL: 2.97, SSNR: 9.87 | Wave-U-Net (9-layer), - | - |
| [61] | 2021 | Incorporates a bidirectional LSTM | Long-range dependency modeling | MUSDB | - | Demucs, baseline model size > 1014 MB | - |
| [62] | 2022 | Jointly models global context and local, detail-level temporal information | - | Mixed dataset | PESQ: 2.4501 (SNR [−5, 0]), 2.7472 (SNR [0, 5]), 2.9511 (SNR [5, 10]) | UFormer, 9.46 | - |
| [63] | 2019 | Introduces a learnable local attention mask in the skip connections | Optimization of skip connections | VCTK | PESQ: 2.53, CSIG: 3.77, CBAK: 3.12, COVL: 3.14, SSNR: 7.42 | Wave-U-Net with attention (16 kHz, no augmentation), - | - |
| [64] | 2022 | Dual-branch attention module that extracts spatial and channel features in parallel | - | VCTK | PESQ: 2.84, CSIG: 4.14, CBAK: 3.47, COVL: 3.50, SSNR: - | DBAUNet, 0.66 | - |
| [65] | 2023 | Proposes a channel-spectrum attention mechanism | - | ICASSP 2022 DNS Challenge | SIG: 2.988, BAK: 3.974, OVRL: 2.713 | U2Net, 8.947 | - |
| [68] | 2025 | Explores efficient convolutional structures | Lightweight design and low-latency optimization | Mixed dataset | PESQ: 3.09 | UL-UNAS, 0.17 | 0.03 |
| [69] | 2020 | Adopts a Wave-U-Net structure with a cross-channel attention mechanism | Multi-channel | Synthetic datasets | SDRi: 18.032, STOI: 0.961, PER: 39.323 | - | - |
| [70] | 2021 | Estimates filters from the multi-channel input spectrum | - | Mixed dataset | SDRi: 9.38, SIRi: 12.87, PESQi: 0.22 | TRUNER-MagPhase, 29 | - |
| [71] | 2021 | Deeply integrates a MISO U-Net with a beamforming structure | - | Mixed dataset | PESQ: 1.919, STOI: 0.857, E-STOI: 0.759, SI-SNR: 7.935 | MIMO-U-net + BF + PF, 197 | - |
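
The MACs (G/s) column normalizes compute to one second of input audio. A rough way to count this for a single 1D convolution is sketched below, under the simplifying assumptions that edge padding and bias additions are ignored and that the layer emits one output frame per `stride` input samples; a full-model figure would sum such terms over all layers, and profiling tools may count some operations differently. The function name and the example layer sizes are hypothetical.

```python
def conv1d_macs_per_second(
    in_ch: int, out_ch: int, kernel: int, stride: int, sample_rate: float, groups: int = 1
) -> float:
    """Approximate multiply-accumulates per second of input audio for one 1D convolution.

    sample_rate is the rate of this layer's input (for deeper layers, pass their input
    frame rate instead of the audio sample rate).
    """
    frames_per_second = sample_rate / stride       # output frames produced per second
    macs_per_output = (in_ch // groups) * kernel   # one dot product per output value
    return out_ch * macs_per_output * frames_per_second


# Hypothetical front-end: 512 filters of 16 samples, stride 8, on 8 kHz audio
macs = conv1d_macs_per_second(in_ch=1, out_ch=512, kernel=16, stride=8, sample_rate=8000)
print(macs / 1e9)  # 0.008192 (G MACs per second)
```

Grouped or depthwise layers are handled through `groups`, which shrinks each dot product to `in_ch // groups` channels.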

| Refs. | Year | Innovation or Name of the Method | Experimental Dataset | SI-SNRi (dB) | SDRi (dB) | Model, Param. (M) | MACs (G/s) |
|---|---|---|---|---|---|---|---|
| [72] | 2018 | Represents the raw waveform in a learnable convolutional latent space | WSJ0-2mix | 10.8 | 11.1 | TasNet-BLSTM, 23.6 | - |
| [74] | 2019 | Fully convolutional time-domain audio separation network | WSJ0-2mix | 15.3 | 15.6 | Conv-TasNet-gLN, 5.1 | * 10.5 |
| [75] | 2019 | Different combinations of network architecture concealment | WSJ0-2mix | * | * | * | - |
| [76] | 2020 | Integrates Conv-TasNet into a generative adversarial network | WSJ0-2mix | 15.1 | 15.4 | Conv-TasSAN, * | - |
| [77] | 2022 | Neural architecture search (NAS) strategy reinforcement | WSJ0-2mix | 17.50 | 17.74 | NAS-Tasnet-GD, 6.3 | - |
| [79] | 2020 | Dual-path recurrent neural network | WSJ0-2mix | 18.8 | 19.0 | DPRNN-TasNet, 2.6 | * 88.5 |
| [78] | 2022 | Fully convolutional time-domain audio separation network | WSJ0-2mix | 15.9 | - | MP-GTF-128, 16.1 | - |
| [82] | 2024 | Deeply expanded encoder/decoder | WSJ0-2mix | 19.2 | 19.1 | DConv-TasNet, - | - |
| [83] | 2025 | Autoencoder that employs both residual and skip connections | TIMIT | 12.4 | 12.1 | EncoderLINEAR, 1.8 | - |
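
These tables rank models by SI-SNRi, the gain in scale-invariant SNR of the separated estimate over the unprocessed mixture. A minimal NumPy sketch of one common definition follows: both signals are made zero-mean, the estimate is projected onto the reference, and the ratio of target energy to residual energy is taken in dB. The small `eps` guard and the function names are assumptions; SDRi is usually computed with BSS Eval tooling and is not reproduced here.

```python
import numpy as np


def si_snr(est: np.ndarray, ref: np.ndarray, eps: float = 1e-8) -> float:
    """Scale-invariant SNR (dB) of a 1D estimate against a 1D reference."""
    est = est - est.mean()
    ref = ref - ref.mean()
    # Scaled target: projection of the estimate onto the reference direction
    s_target = (np.dot(est, ref) / (np.dot(ref, ref) + eps)) * ref
    e_noise = est - s_target  # everything the projection cannot explain
    ratio = (np.dot(s_target, s_target) + eps) / (np.dot(e_noise, e_noise) + eps)
    return float(10.0 * np.log10(ratio))


def si_snr_improvement(est: np.ndarray, mix: np.ndarray, ref: np.ndarray) -> float:
    """SI-SNRi: SI-SNR of the separated estimate minus SI-SNR of the unprocessed mixture."""
    return si_snr(est, ref) - si_snr(mix, ref)


# Toy check with an interferer orthogonal to the target
s = np.array([1.0, -1.0, 1.0, -1.0])
n = np.array([1.0, 1.0, -1.0, -1.0])
mix = s + n          # target and interferer at equal energy: about 0 dB
est = s + 0.5 * n    # residual energy cut to a quarter: about 6.02 dB
print(round(si_snr_improvement(est, mix, s), 2))  # 6.02
```

Because the projection absorbs any gain, multiplying `est` by a nonzero constant leaves `si_snr` unchanged, which is why the metric suits models whose output level is arbitrary.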

| Refs. | Year | Innovation or Name of the Method | Experimental Dataset | SI-SNRi (dB) | SDRi (dB) | Model, Param. (M) | MACs (G/s) |
|---|---|---|---|---|---|---|---|
| [87] | 2020 | Dual-path Transformer network | WSJ0-2mix | 20.2 | 20.6 | DPTNet, 2.69 | * 102.5 |
| | | | Libri-2mix | 18.2 | 18.4 | | |
| [88] | 2021 | Proposes the SepFormer model | WSJ0-2mix | 20.4 | 20.5 | SepFormer, 26 | 59.5 |
| [89] | 2022 | Adds super-resolution after the decoder to recover information lost through downsampling | WSJ0-2mix | 22.0 | 22.1 | SFSRNet, 59 | - |
| [91] | 2023 | Integrates encoder-decoder attractors to handle an unknown number of speakers | WSJ0-2mix | 21.2 | 21.4 | SepEDA2 *, 12.5 | 81.0 |
| [92] | 2024 | Designs a TDA module to handle an unknown number of speakers | WSJ0-2mix | - | - | SepTDA2, 12.5 | - |
| [93] | 2023 | Its self-attention-based module tends to emphasize longer-range, coarser dependencies | WSJ0-2mix | 22.8 | - | MossFormer(S), 10.8 | - |
| [94] | 2024 | Introduces an RNN-free recurrent module based on FSMN | WSJ0-2mix | 24.1 | - | MossFormer2, - | * 55.7 |
| [95] | 2024 | Adopts an asymmetric encoder-decoder architecture | WSJ0-2mix | 24.2 | 24.4 | SepReformer-L, 59.4 | 155.5 |
| | | | WSJ0-2mix | 22.4 | 22.6 | SepReformer-T, 3.5 | 10.4 |
| [98] | 2025 | Combines a short-sequence encoder-decoder framework with a multi-temporal-resolution Transformer-based separation network | Libri2Mix | 13.24 | 14.08 | ESEDNet, 2.31 | 7.13 |
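
DPTNet and SepFormer, like DPRNN in the previous table, follow a dual-path scheme: the encoded sequence is cut into overlapping chunks (typically with 50% overlap) so that one block models dependencies within each chunk (local) and another models dependencies across chunks (global), after which the chunks are merged by overlap-add. The NumPy sketch below shows only that segmentation and merge, assuming a (features N, frames L) input and an even chunk size; the padding layout and the function names are illustrative assumptions, not taken from the cited implementations.

```python
import numpy as np


def segment(x: np.ndarray, chunk: int) -> tuple[np.ndarray, int]:
    """Split x of shape (N, L) into 50%-overlapping chunks of shape (N, chunk, S)."""
    if chunk % 2:
        raise ValueError("chunk size must be even for 50% overlap")
    hop = chunk // 2
    length = x.shape[1]
    rest = (-length) % hop  # extra right padding so the chunks tile the sequence exactly
    padded = np.pad(x, ((0, 0), (hop, hop + rest)))
    n_chunks = (padded.shape[1] - chunk) // hop + 1
    chunks = np.stack([padded[:, s * hop : s * hop + chunk] for s in range(n_chunks)], axis=-1)
    return chunks, length


def overlap_add(chunks: np.ndarray, length: int) -> np.ndarray:
    """Sum overlapping chunks back into a sequence of shape (N, length)."""
    n_feat, chunk, n_chunks = chunks.shape
    hop = chunk // 2
    out = np.zeros((n_feat, (n_chunks - 1) * hop + chunk))
    for s in range(n_chunks):
        out[:, s * hop : s * hop + chunk] += chunks[:, :, s]
    return out[:, hop : hop + length]  # drop the padding added by segment()


# Usage: 64 features over 101 frames, chunk size 20
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 101))
chunks, length = segment(x, chunk=20)
print(chunks.shape)  # (64, 20, 12)
print(np.allclose(overlap_add(chunks, length), 2 * x))  # True
```

With no processing in between, every frame falls in exactly two chunks, so the merge returns `2 * x`; an intra-chunk model would operate along axis 1 of the chunk tensor and an inter-chunk model along axis 2.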

| Refs. | Year | Innovation or Name of the Method | Experimental Dataset | SI-SNRi (dB) | SDRi (dB) | Model, Param. (M) | MACs (G/s) |
|---|---|---|---|---|---|---|---|
| [100] | 2023 | A multipath deep neural network operating in the time–frequency (T-F) domain | WSJ0-2mix | 22.8 | 23.1 | TF-GridNet, 14.43 | * 445.56 |
| | | | WHAM! | 16.9 | 17.2 | | |
| | | | Libri-2mix | 19.8 | 20.1 | | |
| [102] | 2024 | Based on TF-GridNet, with the BLSTM modules replaced by bidirectional Mamba modules | WSJ0-2mix | 22.5 | 22.7 | SPMamba, 6.14 | 238.69 |
| | | | WHAM! | 17.4 | 17.6 | | |
| | | | Libri-2mix | 19.9 | 20.4 | | |
| [103] | 2025 | Integrates Mamba layers into a U-Net architecture to learn the multi-scale structure of audio | WSJ0-2mix | 22.7 | 22.9 | SepMamba(S), 7.2 | 12.46 |
| [104] | 2025 | Replaces the Transformer with Mamba | WSJ0-2mix | 23.4 | 23.6 | DPMamba(L), 59.8 | - |
| [105] | 2025 | Embeds Mamba into a U-Net architecture | Mixed dataset | 15.01 | 15.84 | Speech Conv-Mamba, 2.4 | 8.87 |
| [106] | 2025 | Follows the Conv-TasNet architecture, built with Mamba layers | WSJ0-2mix | 22.4 | 22.6 | Mamba-TasNet(M), 15.6 | - |
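
The entries above replace BLSTM or Transformer blocks with Mamba, whose core is a selective state-space recurrence: the step size and the input and output projections depend on the current input. The NumPy sketch below is a heavily simplified, sequential version under stated assumptions: a diagonal negative state matrix per channel, zero-order-hold discretization for A, the simplified Euler form for B, and no convolution, gating, skip term, or hardware-efficient parallel scan. The bidirectional wrapper, which sums a forward scan and a scan over the time-reversed input, is one simple way to obtain the two-sided context that bidirectional variants aim for. All names and shapes are hypothetical.

```python
import numpy as np


def softplus(z: np.ndarray) -> np.ndarray:
    """Numerically stable log(1 + exp(z))."""
    return np.logaddexp(0.0, z)


def selective_scan(x, A, W_delta, W_B, W_C):
    """Simplified selective state-space recurrence over time.

    x: (T, D) input; A: (D, N) negative entries (one diagonal state matrix per channel);
    W_delta: (D, D), W_B: (D, N), W_C: (D, N) make delta, B and C input-dependent.
    """
    T, D = x.shape
    h = np.zeros_like(A)  # hidden state: an N-dimensional state for each of the D channels
    y = np.zeros((T, D))
    for t in range(T):
        delta = softplus(x[t] @ W_delta)  # (D,) positive step sizes chosen per time step
        B = x[t] @ W_B                    # (N,) input projection for this step
        C = x[t] @ W_C                    # (N,) output projection for this step
        A_bar = np.exp(delta[:, None] * A)                # zero-order-hold discretization of A
        Bx = delta[:, None] * B[None, :] * x[t][:, None]  # simplified (Euler) discretization of B
        h = A_bar * h + Bx
        y[t] = h @ C
    return y


def bidirectional_scan(x, fwd_params, bwd_params):
    """Sum a forward scan and a scan over the time-reversed input."""
    y_fwd = selective_scan(x, *fwd_params)
    y_bwd = selective_scan(x[::-1], *bwd_params)[::-1]
    return y_fwd + y_bwd


# Usage with random parameters: T=12 frames, D=4 channels, N=3 states
rng = np.random.default_rng(0)
T, D, N = 12, 4, 3
params = (
    -np.exp(rng.normal(size=(D, N))),
    0.5 * rng.normal(size=(D, D)),
    0.5 * rng.normal(size=(D, N)),
    0.5 * rng.normal(size=(D, N)),
)
x = rng.normal(size=(T, D))
y = selective_scan(x, *params)
print(y.shape, bidirectional_scan(x, params, params).shape)  # (12, 4) (12, 4)
```

The forward scan is causal, so each output depends only on current and past frames; the cost grows linearly with T, in contrast to the quadratic cost of full self-attention.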

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Luo, Z. Speech Separation Using Advanced Deep Neural Network Methods: A Recent Survey. Big Data Cogn. Comput. 2025, 9, 289. https://doi.org/10.3390/bdcc9110289
