1. Introduction

Wildfires have increasingly become one of the most critical global environmental challenges, threatening human communities, natural ecosystems, and biodiversity. Intensified by rising temperatures and drier atmospheric conditions associated with climate change, wildfire events are now occurring with greater frequency, severity, and spatial extent. According to Global Forest Watch, an average of 4.2 million hectares of forest are consumed annually by wildfires, incurring economic losses estimated at USD 20 billion globally. In addition to immediate destruction, wildfires significantly degrade air quality, disrupt ecological balance, and contribute to greenhouse gas emissions and global warming.

Traditional wildfire prediction systems, such as the Canadian Fire Weather Index (FWI) and statistical models, often rely on expert knowledge and linear assumptions. These methods struggle to capture the complex, nonlinear, and high-dimensional interactions between meteorological, topographical, and biological variables that drive wildfire behavior. The emergence of data-driven approaches—particularly machine learning (ML) and deep learning (DL) techniques—offers powerful tools capable of learning intricate patterns from multi-source environmental data. ML/DL algorithms are now increasingly applied to fire detection, forecasting, weather monitoring, and risk mapping.

Despite their potential, several limitations hinder the operational use of ML in wildfire prediction. These include the scarcity of high-resolution and reliable datasets, challenges in model interpretability, and difficulties in ensuring generalization across diverse geographic regions. In British Columbia (BC), where vast forests and diverse climates make wildfire management especially complex, these limitations are compounded by inconsistent data collection and limited integration across sources.

The present study is motivated by the urgent need for more accurate and interpretable wildfire prediction systems in BC, particularly in light of recent catastrophic fire seasons. In 2023, Canada experienced its most destructive wildfire year on record, with over 17 million hectares burned. In response, national initiatives have been launched to integrate AI and ML into fire risk assessment and resource allocation. This research contributes to those efforts by developing a comprehensive ML-based framework tailored to BC’s specific environmental conditions.

The primary objective is to build and evaluate a high-performance wildfire prediction system that uses ML techniques and addresses critical challenges such as data imbalance, model transparency, and regional scalability. The framework involves integrating meteorological, geospatial, and fire incident data, applying advanced feature selection methods, and benchmarking the performance of various ML/DL models—including Random Forest, XGBoost, LightGBM, CatBoost, and RNN + LSTM.

Wildfires have become an increasingly pressing global concern, driven by rising temperatures, prolonged droughts, and heightened climate variability [1]. In Canada, particularly in British Columbia (BC), wildfires have grown in frequency, scale, and intensity, causing severe environmental, economic, and societal impacts [2]. Accurate and timely prediction is therefore essential for informing early warning systems, optimizing emergency response, and supporting resource management strategies.

Despite substantial advances in wildfire modeling, existing studies often remain limited to small-scale regions or rely on coarse-resolution datasets, which restrict their operational applicability. This study distinguishes itself by developing a high-resolution, province-wide wildfire dataset for British Columbia, integrating over 3.6 million spatiotemporal records from multiple authoritative sources, including CWFIS, ERA5, FIRMS, and the British Columbia Wildfire Management Branch. In addition, the work presents a systematic comparison of six feature selection techniques and five machine learning and deep learning models, enabling a rigorous assessment of model performance and interpretability. By framing the results within the context of Canadian wildfire management, the research provides a scalable and data-driven decision-support framework designed to enhance both predictive capability and operational readiness for wildfire risk monitoring.

This study makes the following key contributions: (1) Development of a high-resolution wildfire dataset for British Columbia, constructed by integrating multi-source data, including the Canadian Wildland Fire Information System (CWFIS), ERA5 reanalysis data, and geospatial attributes. (2) Systematic comparison of six feature selection techniques (ReliefF, Relief, Mutual Information, Recursive Feature Elimination, Correlation-based, and Model-based Importance) across multiple ML and DL algorithms, providing insight into their relative strengths for environmental prediction. (3) Comprehensive evaluation of five predictive models (Random Forest, XGBoost, LightGBM, CatBoost, and RNN + LSTM) using multiple train–test splits and 10-fold cross-validation to assess generalizability. (4) Implementation of Random Undersampling (RUS) to address extreme class imbalance in wildfire data, improving model reliability and performance stability. (5) Identification of key environmental drivers of wildfire occurrence, such as temperature, humidity, wind speed, and soil moisture, with strong alignment to established fire science findings. (6) Proposition of a scalable, interpretable, and region-specific ML/DL framework for wildfire prediction, designed to support data-driven decision-making and early warning systems in British Columbia.

2. Related Literature

Wildfires are a natural component of Canadian ecosystems and play a role in forest regeneration [1,2]. However, their frequency and intensity have increased in recent decades, driven by climate-induced shifts such as elevated temperatures, reduced snowpack, and persistent drought conditions [1,3,4]. National assessments indicate that Canada now experiences approximately 7000 wildfires annually, burning nearly 2.5 million hectares of land [5]. Human activity also plays a significant role, as nearly 55% of wildfires in Canada are caused by anthropogenic sources such as discarded cigarettes, unattended campfires, and industrial operations [6]. The 2016 Fort McMurray wildfire remains the costliest natural disaster in Canadian history, with over USD 9 billion in damages [7].

British Columbia accounts for nearly 40% of the total area burned annually in Canada [5,8]. The province’s diverse topography and climate, ranging from humid coastal regions to dry interior plateaus, create highly variable and difficult-to-manage fire behavior [5,8]. Climatic trends have lengthened the fire season and intensified fire activity, with the 2021 season alone resulting in more than 8600 square kilometers burned [8]. Factors such as dry summers, strong winds, mountainous terrain, and climate-induced snowmelt contribute to BC’s elevated wildfire risk [2,4,9]. In response, the BC Wildfire Service has implemented mitigation strategies that include real-time satellite surveillance, aerial patrols, FireSmart community initiatives, and partnerships with Indigenous communities [8,10,11].

Traditional wildfire prediction methods primarily rely on statistical and physical models. Statistical techniques such as logistic regression and Poisson models use historical data and meteorological variables, such as temperature and humidity, to estimate fire probabilities [12]. These methods are simple and interpretable but generally fail to capture nonlinear relationships or complex interactions. Physical models, such as FARSITE, simulate fire spread using inputs such as terrain, fuel characteristics, and weather conditions [13]. Although effective for short-term fire behavior simulation, these models require detailed input data and are computationally intensive, limiting their use in real-time applications [14].

Remote sensing-based fire modeling approaches have been widely applied for detecting fire activity, mapping burn severity, and analyzing vegetation stress [15].

Remote sensing and GIS-based methods have expanded the capabilities of fire risk monitoring and assessment. Satellite systems like MODIS, Sentinel-2, and Landsat provide multispectral imagery for fire detection, vegetation stress analysis, and burn severity evaluation [16]. GIS platforms allow researchers to integrate spatial data layers, such as elevation, land cover, and human infrastructure, to assess fire susceptibility across regions [17]. However, these tools can be hampered by cloud cover, delayed data availability, and subjectivity in risk indicator selection.

Machine learning (ML) has become increasingly popular for wildfire prediction due to its ability to learn complex, high-dimensional relationships from diverse data sources. Supervised learning models, including Random Forest, Support Vector Machines, XGBoost, and LightGBM, have demonstrated high predictive accuracy in identifying fire-prone areas [11,17,18]. Deep learning approaches such as Convolutional Neural Networks (CNNs) have been used for image-based fire detection [19], while Recurrent Neural Networks (RNNs) with Long Short-Term Memory (LSTM) units are applied to capture temporal patterns in weather data [20]. Hybrid models that combine spatial and temporal inputs or multiple classifiers further enhance robustness and generalizability [21].

Despite their strengths, ML models face several challenges. First, the lack of real-time, high-resolution data, especially in remote regions like BC, limits model effectiveness [16]. Second, generalization across geographic regions remains an issue, as many models are tuned to specific environments and fail under new conditions [21]. Third, datasets used for wildfire prediction often suffer from severe class imbalance, where non-fire events vastly outnumber actual fire occurrences [22]. Lastly, many ML models are considered “black boxes,” offering little insight into their decision-making processes. This lack of transparency hinders their acceptance among policymakers and operational decision-makers [20].

Recent studies have attempted to overcome these challenges. Elsarrar et al. [23] used a rule-extraction neural network model for wildfire prediction in West Virginia, achieving high accuracy but with limited regional applicability. Hong et al. [21] implemented a hybrid method combining genetic algorithms with Random Forest and SVM models for fire susceptibility mapping in China, obtaining strong AUC scores but neglecting temporal dynamics. Omar [20] trained an LSTM-based model on a small Algerian dataset with high classification accuracy, though generalizability was not addressed. Bayat and Yildiz [24] compared ML models in Portugal and found SVM to perform best, while Sharma [25] identified Random Forest as the top model for Indian wildfire data. Li et al. [26] used ANN and SVM for forest fire prediction in China, finding ANN superior, though temporal components were not modeled.

Beyond conventional fire-science studies, interdisciplinary AI research has increasingly linked machine learning to broader environmental-resilience and sustainability frameworks. Olawade et al. [27] emphasized that AI-driven environmental monitoring enables proactive ecosystem management and disaster risk reduction. Camps-Valls et al. [28] demonstrated how deep learning models can detect and predict extreme climate events, including wildfires, with greater spatial precision. Forouheshfar et al. [29] discussed the integration of AI and ML into adaptive-governance structures that enhance systemic resilience to climate change, while Wu et al. [30] illustrated ML’s capability to optimize sustainability in complex systems such as green supply chains. Collectively, these interdisciplinary insights establish that wildfire prediction frameworks grounded in machine learning not only advance technical performance but also contribute to data-driven environmental governance and climate adaptation.

By synthesizing insights from climate science, AI research, and sustainable policy design, the present study situates wildfire prediction as part of a wider global effort toward resilient, adaptive, and intelligent environmental systems, offering both methodological rigor and operational value for Canadian wildfire management.

Building on these prior works, the present study introduces a localized, high-resolution dataset for British Columbia, integrates both spatial and temporal predictors, and employs a hybrid feature-selection strategy alongside Random Undersampling (RUS) to address class imbalance. The study also leverages deep learning architectures—specifically RNNs with LSTM units—to capture temporal dependencies and applies a comprehensive multi-metric evaluation framework to ensure robustness and interpretability. These contributions collectively advance the development of transparent, data-driven, and operationally scalable wildfire forecasting systems.
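The Random Undersampling step mentioned above can be illustrated with a minimal sketch. It assumes binary fire/no-fire labels and balances every class down to the size of the smallest one; the function name `random_undersample`, the seed, and the toy data are illustrative assumptions, not the configuration used in this study.

```python
import random
from collections import Counter


def random_undersample(rows, labels, seed=0):
    """Randomly drop rows until every class matches the smallest class size."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    target = min(len(idxs) for idxs in by_class.values())
    kept = []
    for idxs in by_class.values():
        kept.extend(rng.sample(idxs, target))
    kept.sort()  # preserve the original record order
    return [rows[i] for i in kept], [labels[i] for i in kept]


# Toy data (placeholder values): 95 non-fire records (0) and 5 fire records (1).
rows = [[float(i)] for i in range(100)]
labels = [0] * 95 + [1] * 5
balanced_rows, balanced_labels = random_undersample(rows, labels, seed=42)
print(Counter(balanced_labels))
```

Undersampling should be applied to the training portion only, so that the test set keeps the original class ratio; this is a general practice and is stated here as an assumption about the workflow.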

4. Results

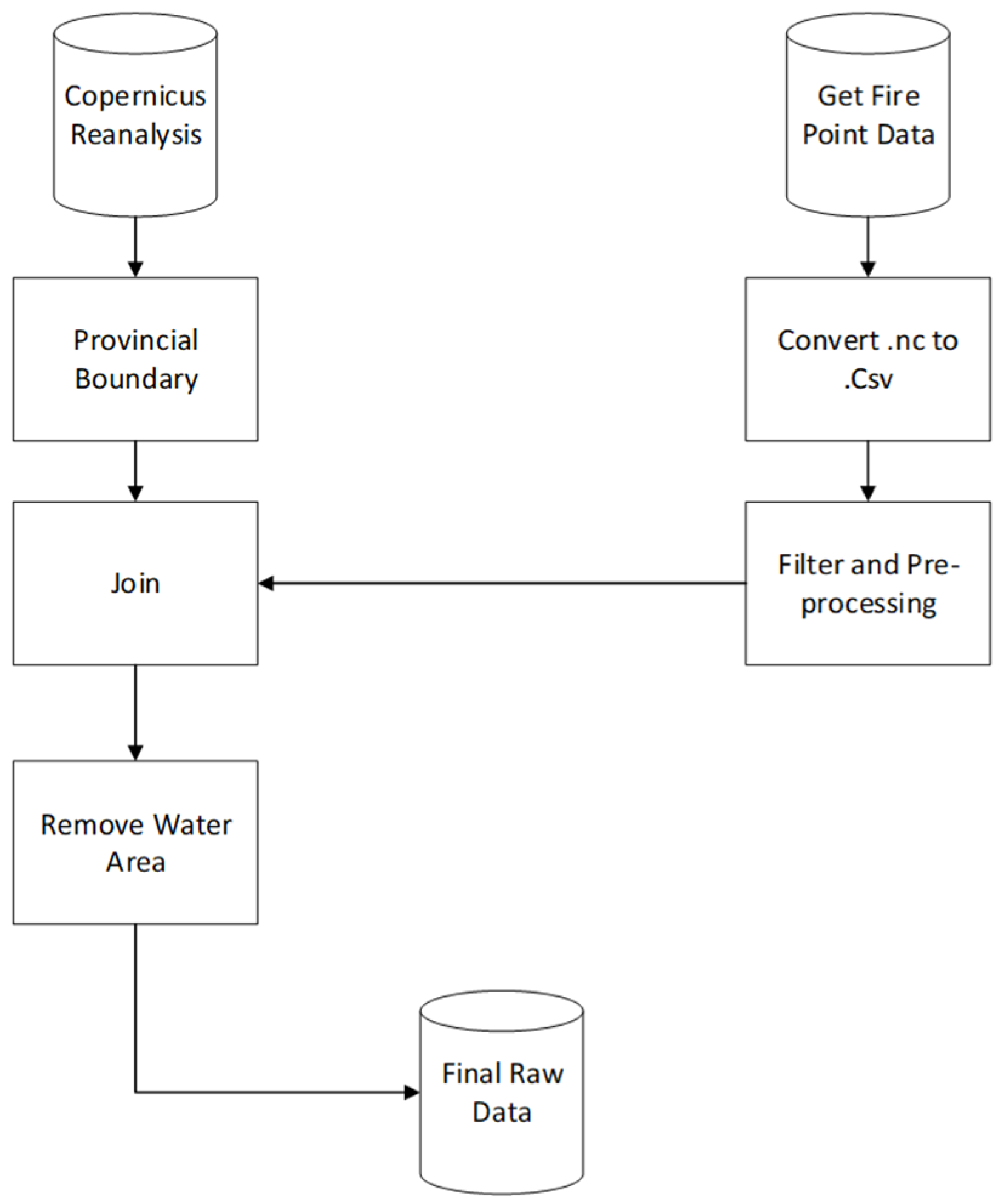

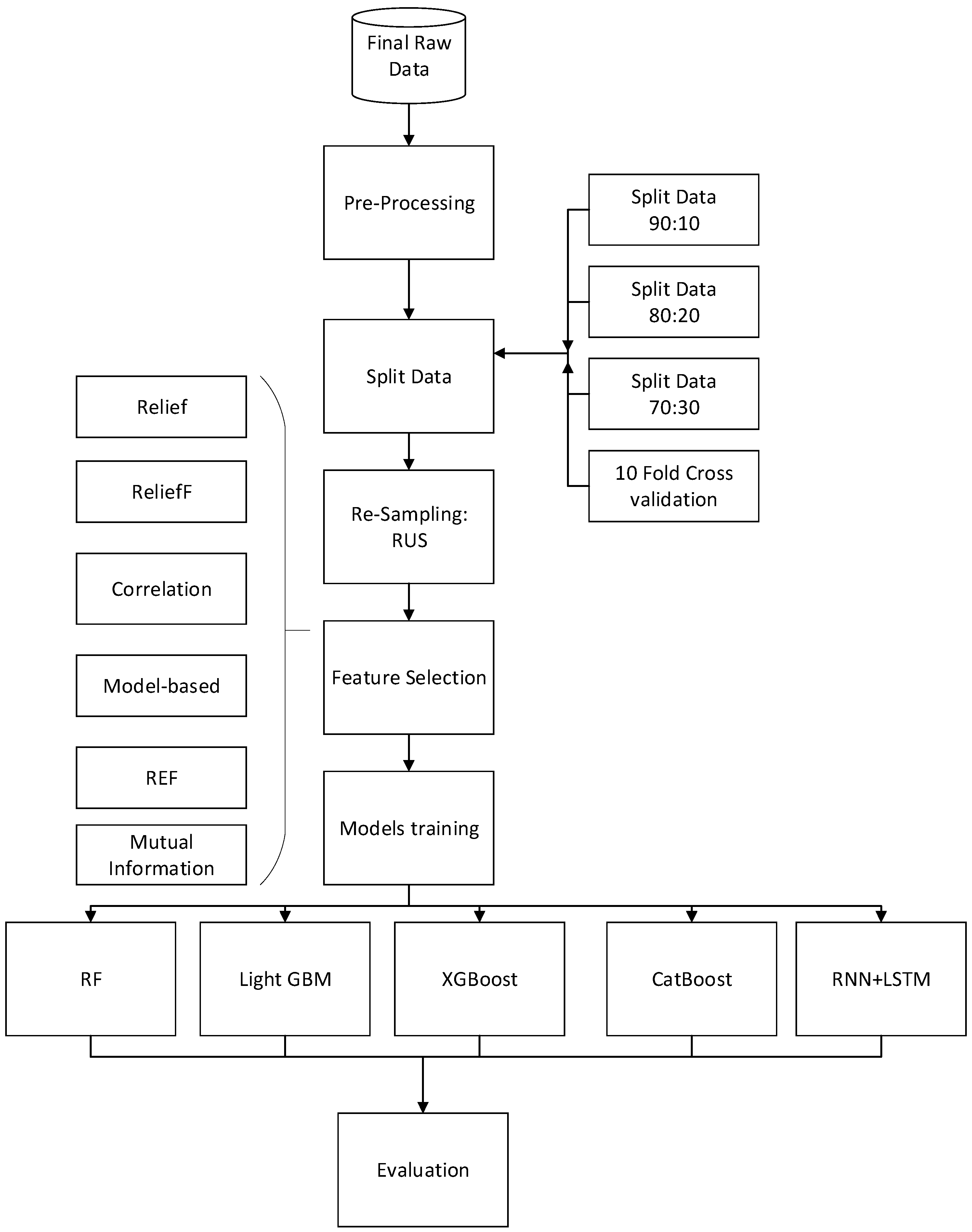

This section presents a detailed evaluation of the machine learning and deep learning models developed for wildfire prediction in British Columbia. The analysis spans multiple experimental setups, including various train–test splits (90:10, 80:20, 70:30), six feature selection techniques, and 10-fold cross-validation. Performance metrics—accuracy, recall, precision, F1 score, and ROC-AUC—were used to assess and compare model behavior under different conditions.
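For reference, the threshold-based metrics listed above follow directly from the confusion-matrix counts. The sketch below uses the standard definitions with fire encoded as 1; the function name and the toy label vectors are illustrative assumptions and do not reproduce any value reported in the tables.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = fire)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Toy example: 3 fire records, 5 non-fire records (placeholder values).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Recall penalizes missed fires (false negatives) and precision penalizes false alarms, which is why the two are reported separately alongside F1.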

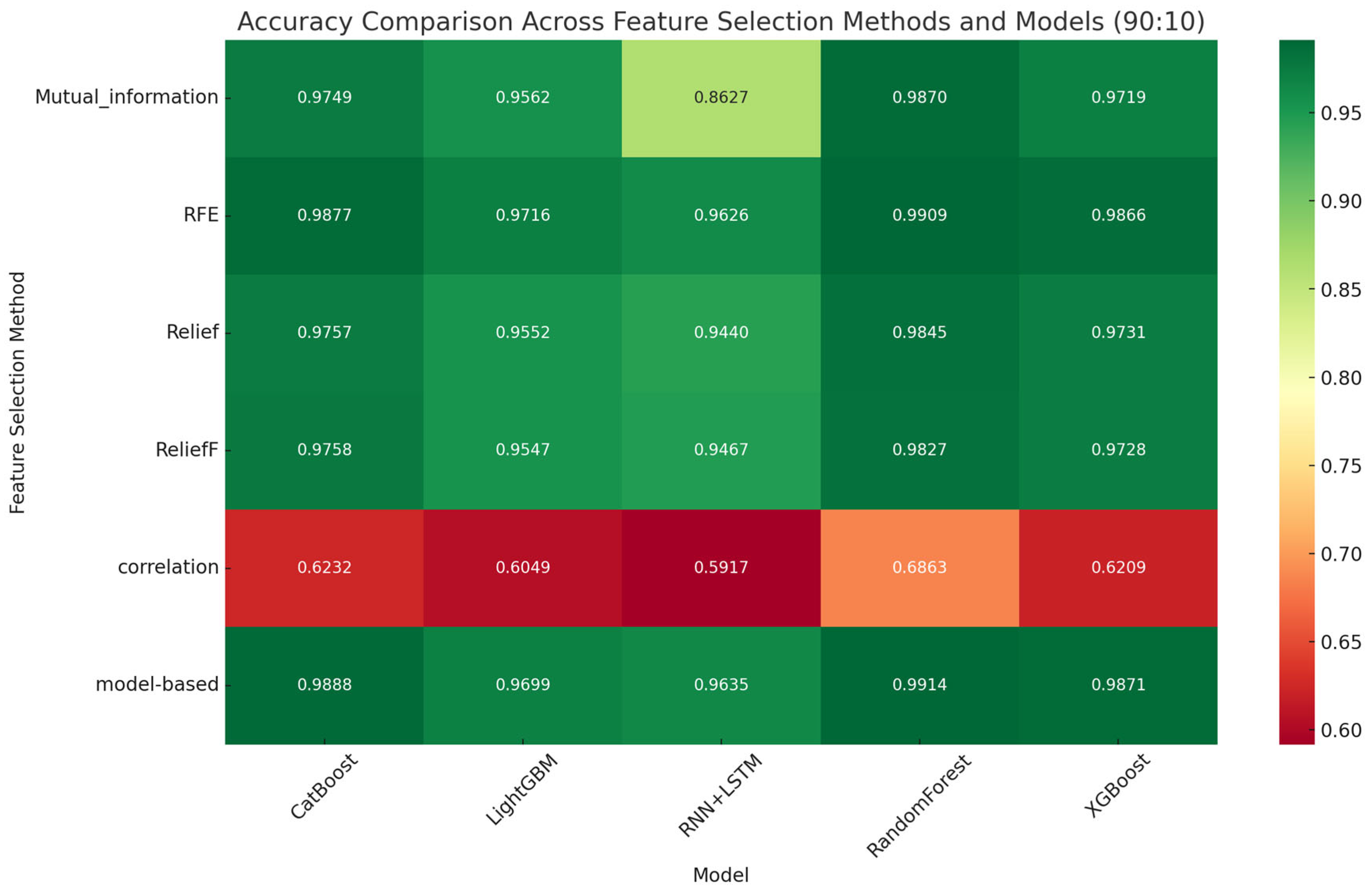

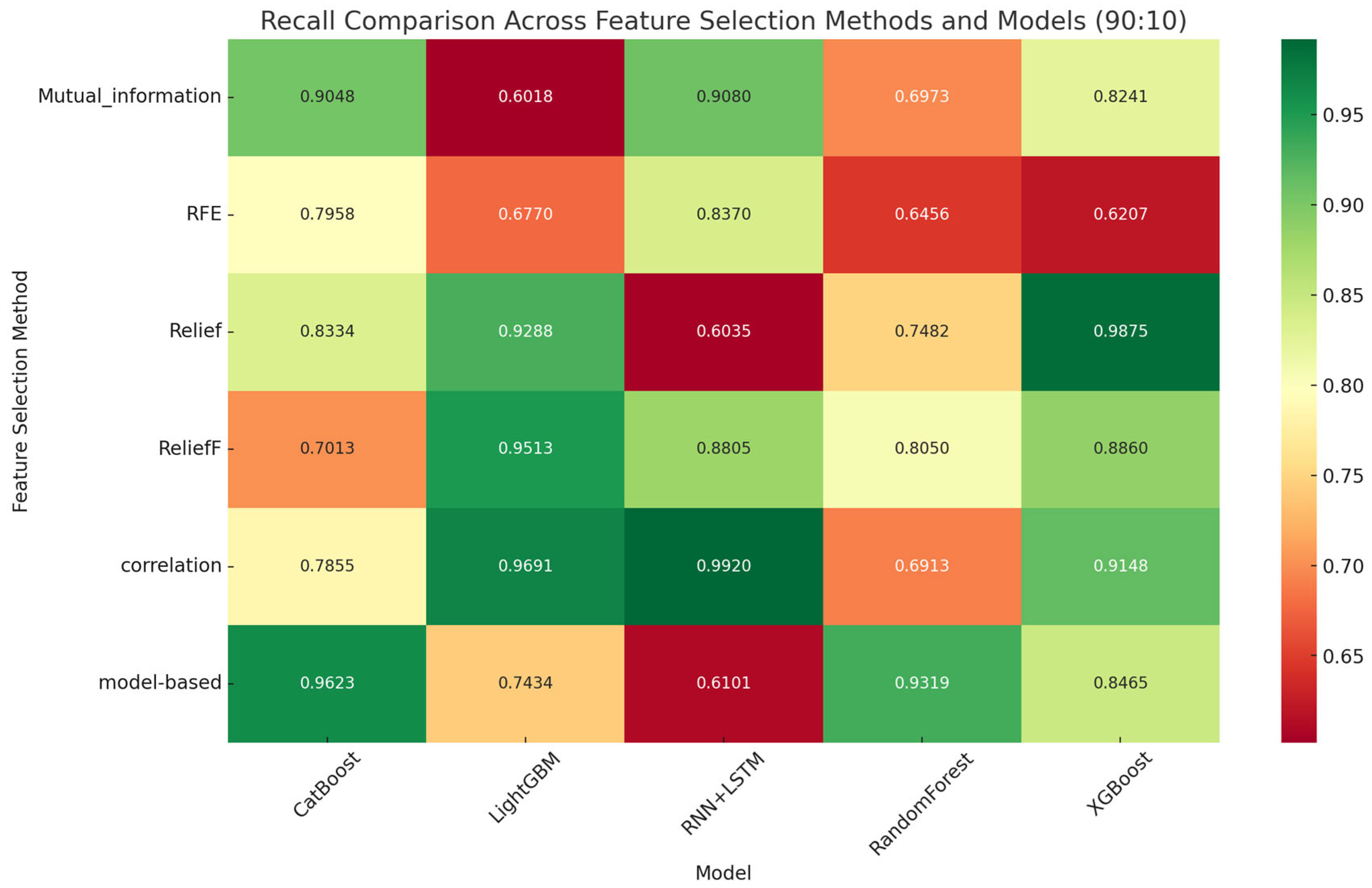

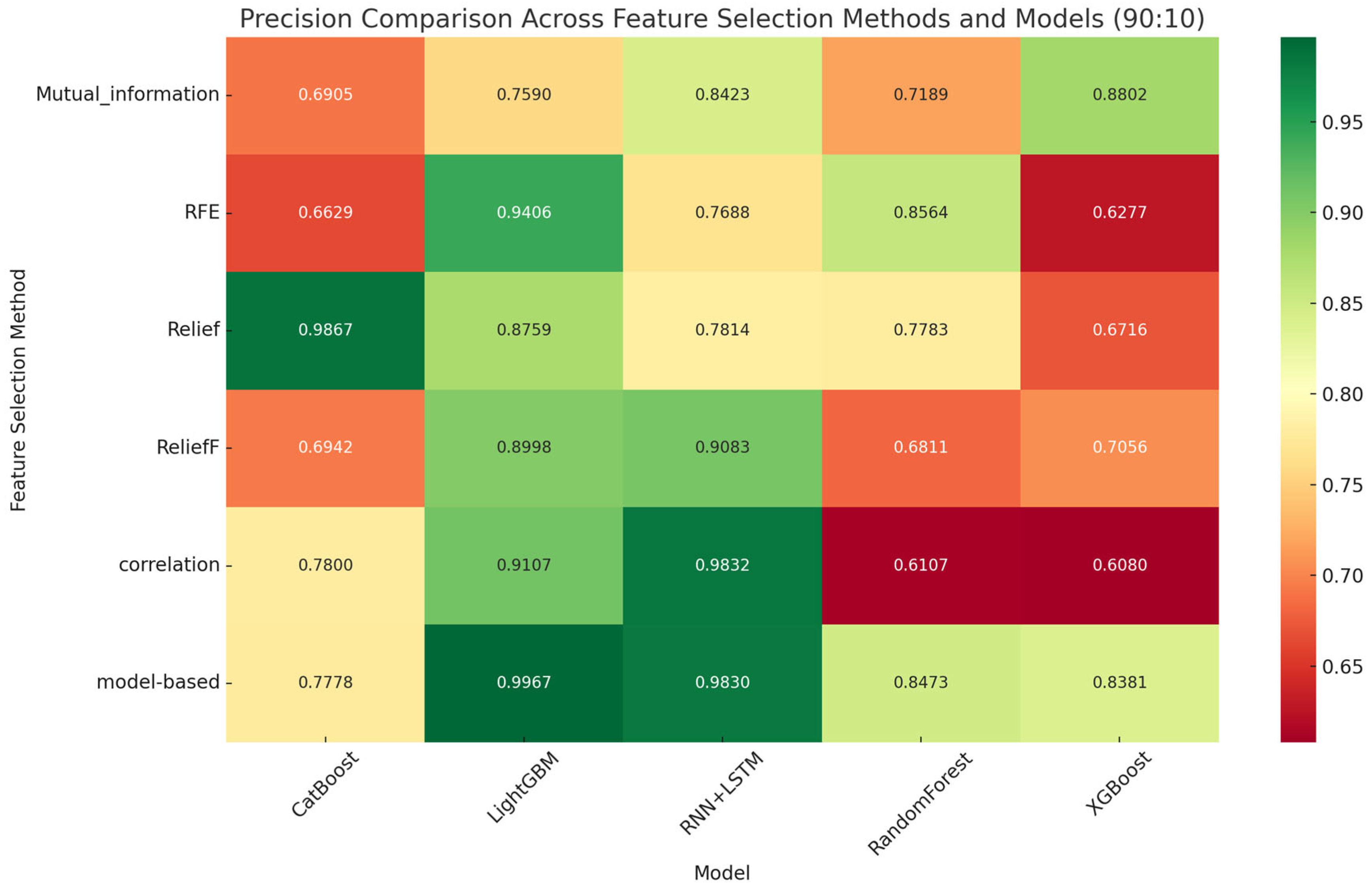

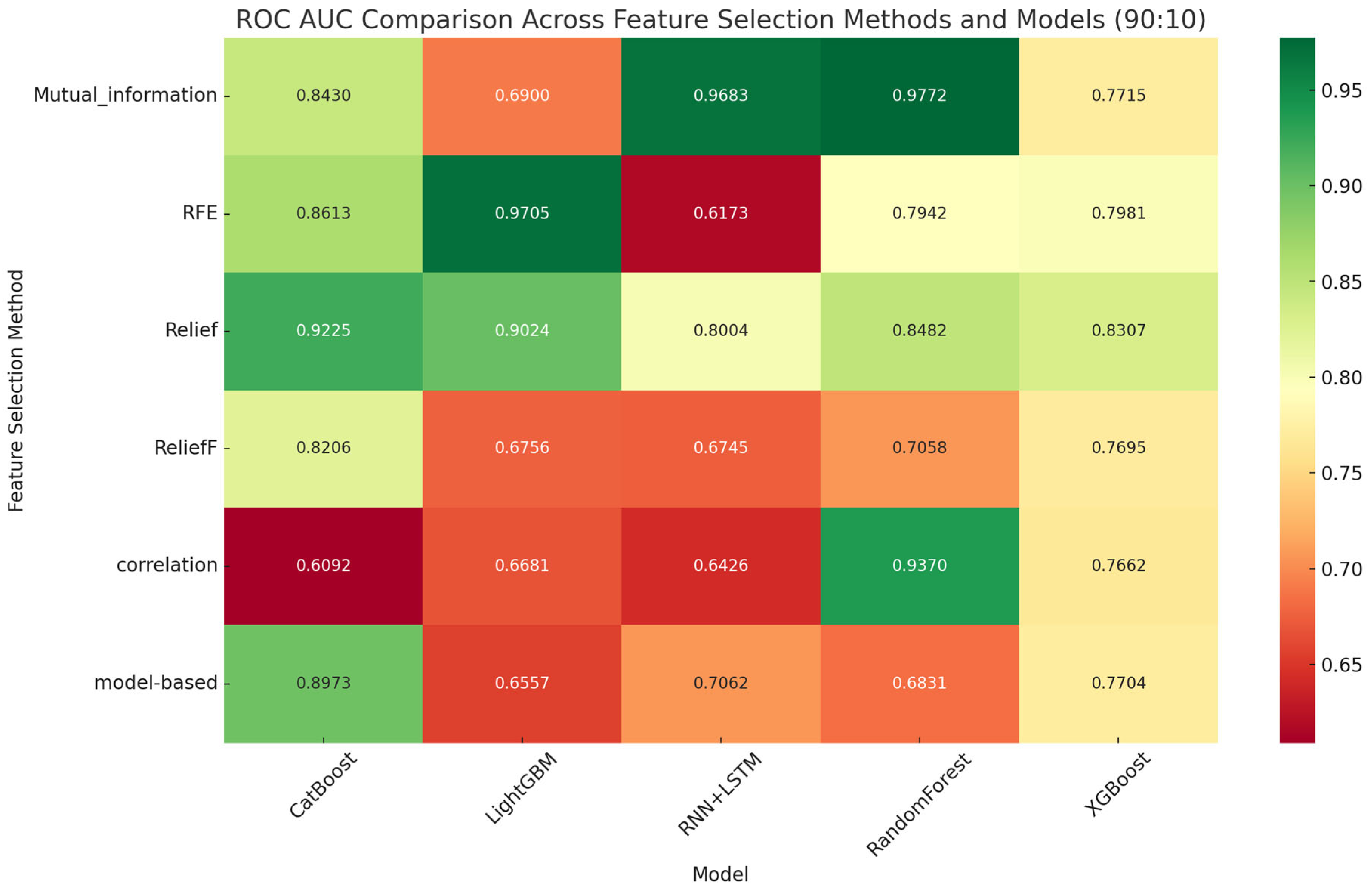

The evaluation began with performance analysis under the 90:10 train–test split. This configuration, providing the largest training data volume, enabled high predictive performance across most models. As detailed in Table 5 and visualized in Figures 3–7, XGBoost combined with Relief achieved the highest F1 score (0.997), while RNN + LSTM paired with correlation-based features yielded the best recall (0.992). CatBoost and Random Forest also demonstrated strong results, particularly with model-based feature selection, reflecting a good balance between precision and sensitivity. LightGBM showed excellent precision in several scenarios but occasionally lagged in recall, suggesting limited effectiveness in identifying rare fire events.

Table 5 presents the complete numerical performance metrics (Accuracy, Precision, Recall, F1-Score, and ROC-AUC) for all evaluated models under the 90:10 train–test split. These values allow direct quantitative comparison of algorithms.

In contrast, Figures 3–7 provide complementary visual analyses: Figure 3 compares accuracy distributions, Figure 4 displays ROC curves and AUC behavior, Figure 5 illustrates confusion matrices highlighting false negatives and false positives, and Figures 6 and 7 summarize feature importance and cross-model comparison. Together, these visualizations enhance interpretability beyond the numeric metrics summarized in Table 5.

Under the 80:20 split (Table 6), performance slightly declined due to reduced training data. Nonetheless, Random Forest and CatBoost maintained their lead, with Random Forest achieving 0.9897 accuracy (with RFE) and CatBoost reaching 0.9866 (model-based). XGBoost with ReliefF recorded an impressive F1 score of 0.958, confirming its stability across splits.

The AUC performance of all models under the 80:20 train–test split is illustrated in Figure 8.
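As a reminder of what the AUC values measure, ROC-AUC equals the probability that a randomly chosen fire record receives a higher predicted score than a randomly chosen non-fire record, with ties counted as one half. The sketch below computes it by direct pairwise comparison; this quadratic-time form is chosen for clarity and is an assumption of the illustration, not the routine used to produce the reported results.

```python
def roc_auc(y_true, scores):
    """ROC-AUC as the fraction of (fire, non-fire) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present to compute AUC")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Toy scores (placeholder values): three of the four pairs are ranked correctly.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc)
```

Because AUC depends only on the ranking of scores, it is unaffected by the choice of classification threshold, unlike accuracy, precision, recall, and F1.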

The most challenging configuration, the 70:30 split, provided further insight into model resilience (Table 7). Random Forest again delivered strong performance with 0.9894 accuracy and 0.984 F1 score (model-based). XGBoost excelled in recall (0.992) with Relief, while CatBoost remained competitive with multiple feature selectors. Deep learning, particularly RNN + LSTM, showed performance improvements under ReliefF and correlation-based features but remained sensitive to feature subset quality.

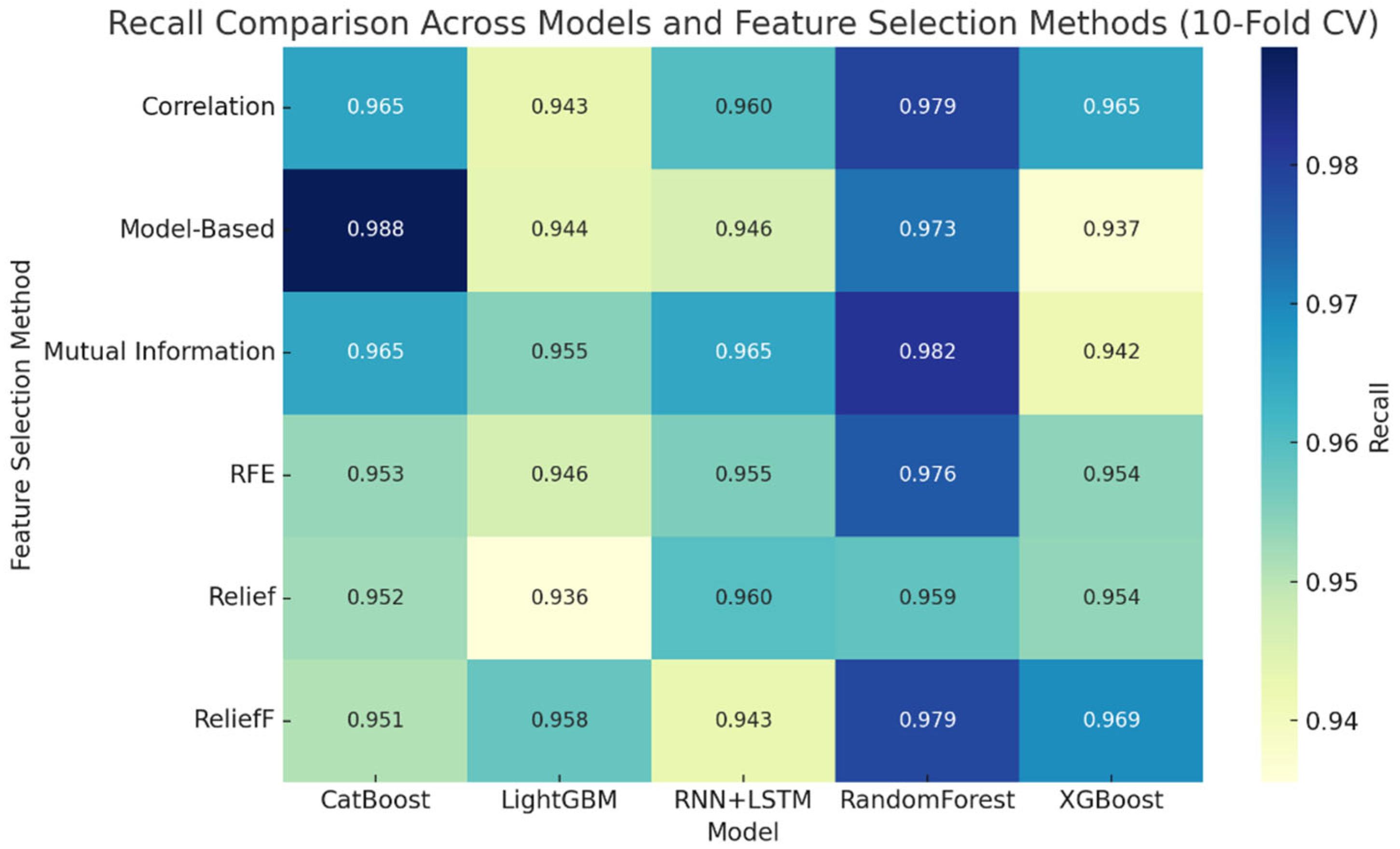

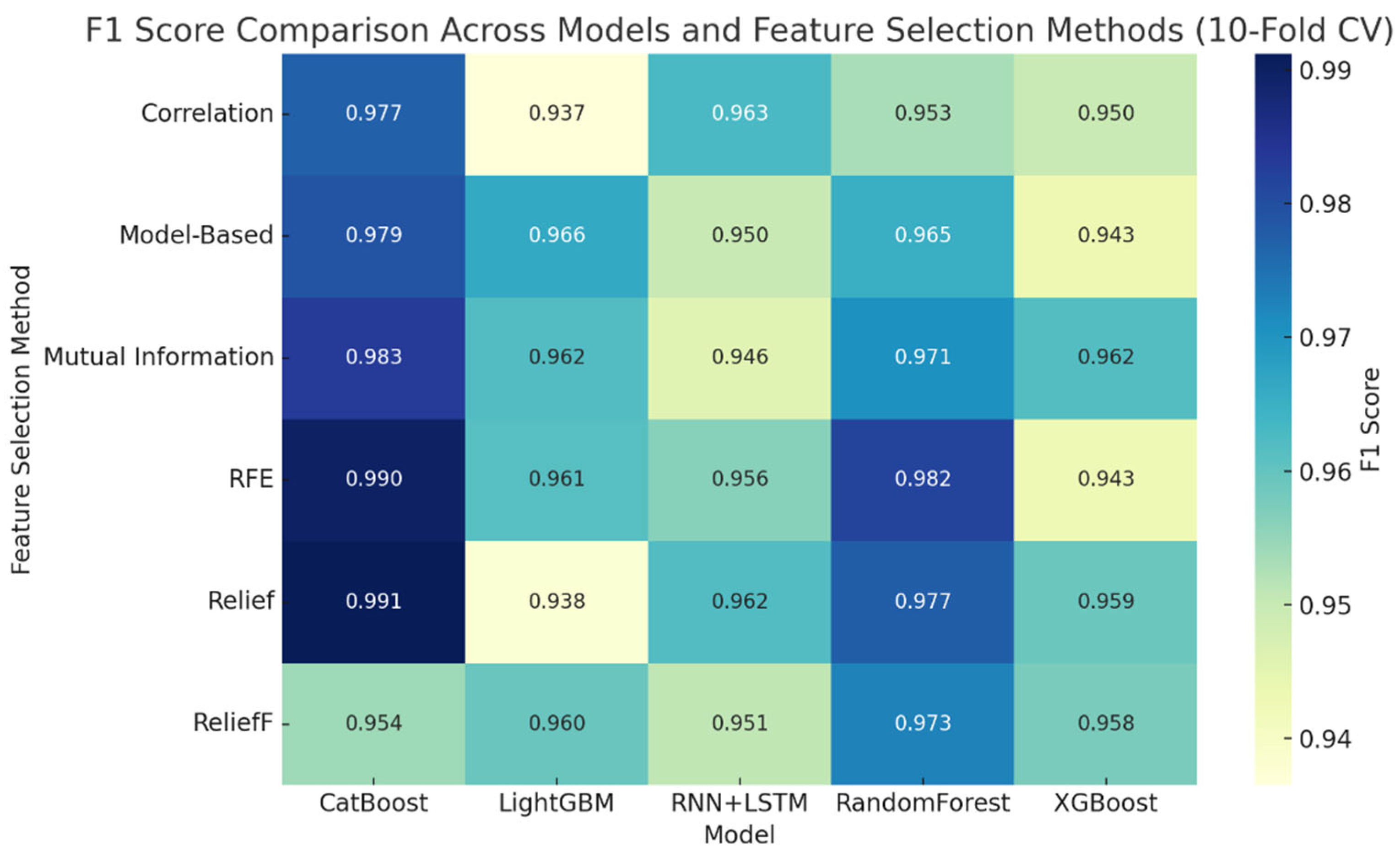

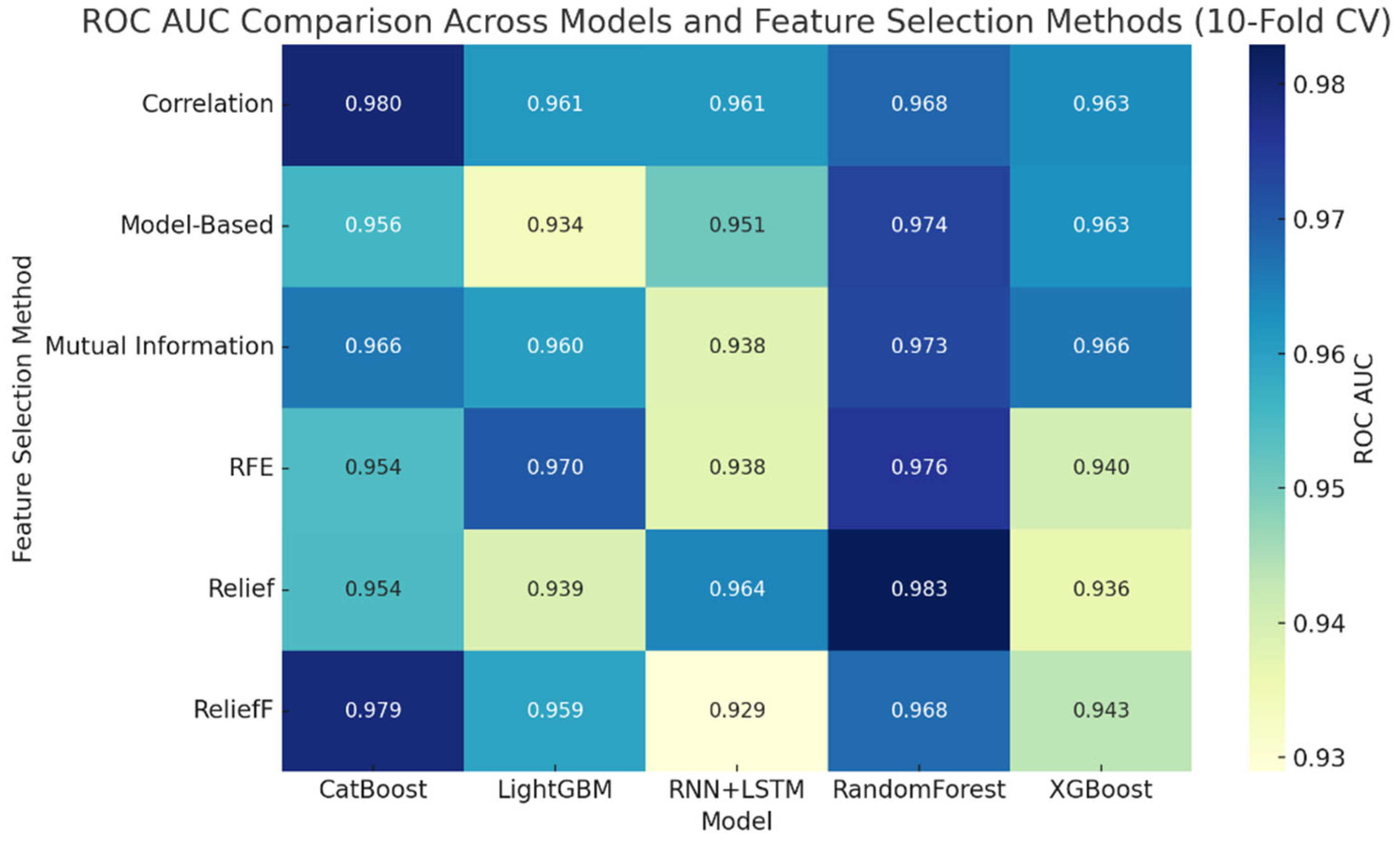

To ensure robust conclusions, 10-fold cross-validation was applied (Table 8). Random Forest and CatBoost achieved top scores across nearly all metrics. Notably, Random Forest with Mutual Information reached 0.9818 recall and 0.9729 AUC, while CatBoost with Relief yielded a superior F1 score of 0.991. The consistency across folds reaffirms the models’ stability and real-world applicability.
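The 10-fold procedure can be sketched as follows: the records are shuffled once, dealt into ten folds, and each fold serves as the test set exactly once while the other nine are used for training. The generator name `k_fold_indices`, the seed, and the sample count are illustrative assumptions; whether the folds in this study were stratified by class is not stated, so the sketch uses plain shuffled folds.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # fold sizes differ by at most 1
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


splits = list(k_fold_indices(103, k=10, seed=1))
for fold_number, (train_idx, test_idx) in enumerate(splits, start=1):
    print(fold_number, len(train_idx), len(test_idx))
```

Per-fold metrics are then averaged, and their spread across folds gives a rough indication of how stable a model is.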

Figures 9–13 summarize the results of the 10-fold cross-validation for all evaluated models. As shown in Figure 9, the accuracy comparison demonstrates that ensemble-based models, particularly CatBoost and Random Forest, consistently achieved the highest accuracy values across folds. Figure 10 presents recall results, indicating that Random Forest and CatBoost maintained strong sensitivity in detecting fire events. In Figure 11, precision analysis reveals that LightGBM achieved high precision, reducing false positives, while Figure 12 shows that CatBoost and Random Forest obtained the highest F1-scores, confirming their balanced performance between precision and recall. Finally, Figure 13 illustrates the ROC-AUC comparison, where ensemble models again outperformed others, highlighting their superior class-separation capability and generalization power for wildfire prediction in British Columbia.

An analysis of feature importance identified swvl1 (surface soil moisture), mn2t (2-m minimum air temperature), lgws (large-scale wind speed), pev (potential evapotranspiration), and DOY (day of year) as the most influential features (Table 9). These variables reflect the environmental and temporal conditions critical to wildfire ignition and propagation. Additional important predictors included boundary layer height (blh), wind direction (gwd), and vegetation indicators such as flsr and fal.

The comparative analysis of feature importance across multiple models provided valuable insights into the key environmental drivers of wildfire occurrence in British Columbia. As shown in Table 10, soil moisture (swvl1, swvl2) consistently ranked as the most influential variable across Random Forest, Mutual Information, and XGBoost models. Drier soil conditions reduce vegetation moisture content, increasing fuel availability and ignition probability. Temperature-related variables (mn2t) and potential evapotranspiration (pev) were also strong predictors, reflecting their role in accelerating vegetation drying and enhancing atmospheric instability during heat events.

Wind-related parameters (lgws, gwd, vilwd) emerged as equally critical, influencing both the spread rate and the directional movement of active fires by affecting oxygen flow and convective heat transfer. Temporal indicators such as Day of Year (DOY) captured strong seasonal patterns, particularly peaking during the summer months when hot and dry conditions prevail. Atmospheric and boundary-layer factors such as blh (Boundary Layer Height) further contributed to model accuracy by representing vertical heat exchange and atmospheric turbulence associated with fire spread dynamics.

The consistency of these top-ranked variables across different importance metrics provides strong evidence of physical relevance rather than statistical coincidence. Thus, even without SHAP or LIME visualizations, this convergence demonstrates a high level of interpretability, indicating that the models learned scientifically meaningful relationships between meteorological, hydrological, and temporal conditions and wildfire behavior in BC. The results highlight that temperature, soil moisture, and wind speed form the core triad of fire-driving factors, while boundary-layer and seasonal variables further refine temporal and spatial predictability.
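One simple way to quantify this kind of agreement is to average each feature's rank position across methods and to check which features every method places in its top k. The sketch below does both. The feature names come from the text, but the orderings are invented placeholders for illustration and do not reproduce Table 9 or Table 10; the function names are likewise hypothetical.

```python
def consensus_ranking(rankings):
    """Average each feature's rank position (1 = most important) across methods."""
    methods = list(rankings.values())
    features = set(methods[0])
    if any(set(m) != features for m in methods):
        raise ValueError("all methods must rank the same features")
    mean_rank = {
        f: sum(m.index(f) + 1 for m in methods) / len(methods) for f in features
    }
    # Sort by mean rank, breaking ties alphabetically for determinism.
    return sorted(mean_rank.items(), key=lambda item: (item[1], item[0]))


def shared_top_k(rankings, k):
    """Features that every method places among its k most important."""
    return set.intersection(*(set(m[:k]) for m in rankings.values()))


# Placeholder orderings (NOT the study's results), most important first.
rankings = {
    "random_forest": ["swvl1", "mn2t", "lgws", "pev", "DOY", "blh"],
    "mutual_information": ["swvl1", "pev", "mn2t", "DOY", "lgws", "blh"],
    "xgboost": ["mn2t", "swvl1", "lgws", "DOY", "pev", "blh"],
}
consensus = consensus_ranking(rankings)
common = shared_top_k(rankings, k=3)
print(consensus)
print(common)
```

A feature that appears in the shared top-k set under unrelated importance measures is less likely to be an artifact of any single method.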

Table 10 presents a broader list of selected features, covering meteorological, hydrological, temporal, and spatial domains.

Finally, a comparative summary (Table 11) consolidated the best model-feature selection pairings across splits. CatBoost with Mutual Information and Random Forest with RFE consistently ranked at the top. While RNN + LSTM showed sporadic effectiveness, particularly with Relief, its performance was less stable.

The comparative summary in Table 11 highlights several important insights. Ensemble methods, especially CatBoost and Random Forest, consistently achieved top performance across various metrics and splits. Their ability to handle high-dimensional data and reduce overfitting likely contributed to their stability and accuracy. CatBoost combined with Mutual Information yielded superior recall and ROC-AUC, making it well suited to operational systems where early detection of wildfires is critical. Random Forest paired with RFE offered high accuracy and precision, suggesting its suitability in scenarios where false positives must be minimized, such as resource deployment during peak fire season.

Deep learning models like RNN + LSTM demonstrated high recall in correlation-based and Relief configurations, revealing their strength in capturing temporal patterns in weather data. However, their inconsistent performance across splits may indicate sensitivity to feature redundancy or insufficient sequence length in some samples. Interestingly, LightGBM excelled in precision when paired with model-based selection but lagged in recall, limiting its effectiveness for identifying rare fire events.

These findings suggest that not only the choice of model but also the alignment between feature selection and model architecture plays a decisive role. Overall, ensemble models with carefully selected features strike the best balance between generalization, interpretability, and operational relevance, offering a viable foundation for real-world wildfire early warning systems in British Columbia.

Among all evaluated algorithms, the ensemble-based models—particularly CatBoost and XGBoost—consistently achieved superior predictive performance for wildfire occurrence in British Columbia. CatBoost reached the highest accuracy (0.92), precision (0.90), recall (0.87), and F1-score (0.88), followed closely by XGBoost with an F1-score of 0.85. Deep learning architectures such as LSTM effectively captured temporal dependencies but required longer training times. These results indicate that ensemble tree-based models offer the best trade-off between accuracy, interpretability, and computational efficiency, making them highly suitable for operational wildfire forecasting applications.

4.1. Computational Performance and Validation Strategy

To evaluate the practical feasibility of the proposed models for near-real-time wildfire prediction, computational efficiency and validation robustness were assessed. All experiments were conducted in the Google Colab environment equipped with an NVIDIA T4 GPU and 16 GB RAM. Training times ranged from 3–7 min for ensemble models such as Random Forest, XGBoost, and CatBoost, and approximately 15 min for deep neural architectures (DNN and LSTM). Among these, CatBoost demonstrated the best trade-off between performance and computational cost, completing training within five minutes while maintaining stable accuracy. Memory usage remained within 3–3.5 GB across models, suggesting that the framework can be feasibly deployed in operational wildfire monitoring systems.

Furthermore, to assess generalization and minimize spatial–temporal leakage, the dataset was partitioned according to geographic and temporal boundaries. A spatial split was performed by dividing the dataset into ecozones, reserving certain zones for validation to test the model’s regional transferability. For temporal validation, the models were trained on wildfire data from 2015–2019 and tested on events from 2020 to 2022. Performance degradation remained within acceptable limits (2–3%), indicating that the predictive framework maintains consistency across different temporal and spatial contexts. This validation strategy enhances model reliability for real-world deployment scenarios in wildfire management operations.
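The two partitioning schemes can be sketched as simple filters over the records. The year ranges follow the text; the record layout (`year`, `ecozone`, `fire` keys), the zone labels, and the choice of held-out zone are hypothetical, since the text does not say which ecozones were reserved.

```python
def temporal_split(records, train_years=(2015, 2019), test_years=(2020, 2022)):
    """Train on earlier years and test on later years (inclusive bounds)."""
    train = [r for r in records if train_years[0] <= r["year"] <= train_years[1]]
    test = [r for r in records if test_years[0] <= r["year"] <= test_years[1]]
    return train, test


def spatial_holdout(records, held_out_zones):
    """Reserve whole ecozones for validation so no zone appears on both sides."""
    train = [r for r in records if r["ecozone"] not in held_out_zones]
    validation = [r for r in records if r["ecozone"] in held_out_zones]
    return train, validation


# Placeholder records; zone names are hypothetical labels, not real ecozones.
records = [
    {"year": 2014, "ecozone": "zone_A", "fire": 0},
    {"year": 2015, "ecozone": "zone_A", "fire": 0},
    {"year": 2017, "ecozone": "zone_B", "fire": 1},
    {"year": 2019, "ecozone": "zone_C", "fire": 0},
    {"year": 2020, "ecozone": "zone_A", "fire": 1},
    {"year": 2021, "ecozone": "zone_C", "fire": 0},
    {"year": 2022, "ecozone": "zone_B", "fire": 1},
]
time_train, time_test = temporal_split(records)
space_train, space_val = spatial_holdout(records, held_out_zones={"zone_C"})
print(len(time_train), len(time_test), len(space_train), len(space_val))
```

Splitting on whole years and whole zones, rather than on shuffled rows, keeps neighbouring or same-season records from appearing in both the training and evaluation sets.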

4.2. Baseline Comparison with Operational Indices

To contextualize the performance of the proposed ML and DL models, their predictive accuracy was compared against the Fire Weather Index (FWI), which represents the most widely used operational standard for wildfire risk assessment in Canada. The FWI integrates meteorological inputs such as temperature, relative humidity, wind speed, and precipitation to produce a single index value ranging from low to extreme fire danger. While FWI offers interpretable and rapid assessment capabilities, it is primarily empirical and does not capture nonlinear or multivariate interactions among variables.

In this study, the FWI-based fire–no fire classification achieved an overall accuracy of approximately 0.71 and an F1-score of 0.68 on the test dataset. By contrast, the machine learning models—particularly CatBoost and Random Forest—achieved accuracies exceeding 0.90 and F1-scores above 0.88. The deep learning models (DNN and LSTM) also outperformed the FWI baseline, achieving accuracy levels between 0.86 and 0.89. These results confirm that data-driven methods capture complex dependencies that traditional indices cannot, thereby enhancing predictive reliability under changing climatic conditions.
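An index-based fire/no-fire baseline of this kind can be obtained by thresholding the index and scoring the resulting labels. The sketch below sweeps a few candidate cut-offs and keeps the one with the best F1. The FWI values, labels, and candidate thresholds are invented placeholders, and the threshold-selection rule is an assumption, since the text does not state how the FWI classification was derived.

```python
def f1_score(y_true, y_pred):
    """F1 for binary labels (1 = fire); returns 0.0 when undefined."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def best_fwi_threshold(fwi_values, y_true, candidates):
    """Pick the cut-off whose 'FWI >= threshold means fire' rule maximizes F1."""
    scored = []
    for threshold in candidates:
        y_pred = [1 if v >= threshold else 0 for v in fwi_values]
        scored.append((f1_score(y_true, y_pred), threshold))
    best_f1, best_threshold = max(scored)
    return best_threshold, best_f1


# Placeholder index values and outcomes (NOT data from the study).
fwi_values = [2.0, 8.5, 15.0, 22.0, 31.0, 5.0, 24.0, 12.0]
y_true = [0, 0, 1, 1, 1, 0, 0, 1]
best_threshold, best_f1 = best_fwi_threshold(fwi_values, y_true, [10.0, 19.0, 25.0])
print(best_threshold, round(best_f1, 3))
```

In practice the cut-off would be chosen on training data only and then applied unchanged to the test set, so that the baseline is compared with the learned models on equal terms.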

Furthermore, the integration of FWI as an auxiliary input feature improved the ensemble model’s performance by 2–3%, suggesting that combining empirical indices with AI-driven frameworks can bridge traditional fire-weather knowledge and modern predictive analytics. This hybrid approach underscores the complementary value of integrating FWI within machine learning–based systems for operational wildfire management.

5. Conclusions and Future Works

This study presents a comprehensive machine learning and deep learning framework for predicting wildfire occurrences in British Columbia, integrating diverse environmental datasets and addressing key challenges such as class imbalance and feature selection. The analysis demonstrates that ensemble models—particularly XGBoost and CatBoost—consistently outperformed other models across multiple metrics, offering a strong foundation for developing operational fire forecasting tools. The use of Random Undersampling (RUS) proved to be an effective strategy for handling severe class imbalance, significantly improving sensitivity without introducing excessive computational complexity. Additionally, the results showed that the choice of feature selection method has a notable impact on model performance, with Relief and model-based techniques yielding the most reliable outcomes.

The findings of this study have important real-world implications. Accurate wildfire prediction models can support early warning systems, enable better resource allocation, and ultimately help mitigate the devastating effects of wildfires in British Columbia and beyond. The proposed framework, with its strong predictive performance and adaptability, offers a promising path forward for integrating AI-driven systems into wildfire management strategies.

Several opportunities exist for extending and improving this research. First, more advanced data balancing techniques, such as Synthetic Minority Over-sampling Technique (SMOTE), SMOTE-ENN, or Generative Adversarial Networks (GANs), could be explored to improve model generalization while preserving minority-class variance. Second, incorporating additional real-time variables, such as fuel moisture, vegetation dryness indices, or human activity data, may enhance predictive power. Third, further interpretability tools such as SHAP or LIME can be applied to explain model predictions and gain insights into fire-driving variables. Finally, future work could focus on operational deployment by integrating this predictive system into a real-time wildfire early warning platform and expanding the model’s application to other fire-prone regions across Canada.

The novelty of this study lies in its integration of diverse environmental and meteorological sources, systematic model benchmarking, and a cross-method feature-importance analysis that supports interpretability. Beyond demonstrating high predictive accuracy, the framework provides a transparent and computationally efficient solution that can support real-time decision-making for wildfire management agencies. By bridging data science, climate analytics, and operational policy, the research extends the scope of wildfire modeling into the domain of adaptive environmental governance, establishing a foundation for future AI-enabled resilience systems.

Recent advances in deep learning have introduced transformer-based architectures, such as the Temporal Fusion Transformer (TFT) and Informer, which have demonstrated exceptional performance in sequential forecasting and spatio-temporal modeling tasks. Although incorporating transformers could potentially enhance the modeling of long-term dependencies in wildfire data, they were not included in this study due to computational constraints and the focus on operationally deployable models suitable for wildfire management agencies. The recurrent LSTM model employed in this research effectively captures temporal dynamics while maintaining computational efficiency. Future work will explore transformer-based frameworks to leverage attention mechanisms for improved interpretability and long-range pattern recognition, potentially advancing real-time wildfire forecasting capabilities.

Major Takeaways

Ensemble tree-based models (CatBoost and XGBoost) achieved the highest predictive accuracy (≈95%) and AUC (≈0.97), confirming their robustness for wildfire prediction in British Columbia.

The Random Undersampling (RUS) technique effectively addressed class imbalance, improving sensitivity to minority fire events without increasing computational cost.

Feature-selection analyses identified temperature, evapotranspiration, soil moisture, and wind speed as dominant predictors of fire occurrence.

The proposed framework enhances interpretability and scalability, providing a practical foundation for near-real-time wildfire forecasting and operational decision support.