1. Introduction

Emotion recognition is a rapidly evolving field with significant implications for human–computer interaction, affective computing, and mental health assessment. Accurately detecting and interpreting emotional states can enhance user experiences in various applications, including personalized content delivery, adaptive user interfaces, and intelligent virtual agents. Traditionally, emotion recognition has relied on external cues such as facial expressions, speech, and body language. These methods, however, are subject to limitations, including their dependence on visual or auditory stimuli, which can be affected by lighting conditions, background noise, or cultural differences in emotional expression.

Electroencephalography (EEG) is a non-invasive method that measures the electrical activity of the brain from the scalp, providing a real-time and objective window into neural processes. EEG signals reflect the brain’s cognitive and emotional responses to various stimuli, which makes EEG a powerful tool for understanding underlying emotional states. Compared with other physiological signals, such as heart rate variability or skin conductance, EEG has the advantage of high temporal resolution and can capture rapid emotional shifts in response to external stimuli.

Recent advances in wearable EEG devices and signal processing techniques have led to smaller, more comfortable headsets. However, this adaptation to everyday use comes at the cost of reduced spatial resolution, because far fewer electrodes are available. Overcoming this trade-off is a key challenge for the widespread adoption of EEG in real-life emotion recognition tasks.

This paper presents a continuation of our research on 4-channel EEG data analysis for human psychophysiological state monitoring [1, 2, 3]. Here, we present an approach to emotion valence prediction together with its evaluation. We develop a machine learning model that uses EEG data to classify emotional valence, that is, whether the emotion is positive, negative, or neutral. Valence is a critical dimension of emotion that reflects the subjective pleasantness or unpleasantness of an emotional experience. Understanding the valence of emotions provides valuable insights into the emotional tone of an individual’s state and has applications in personalized emotional support systems, mental health diagnosis, and adaptive technologies that respond to user emotions. To evaluate EEG-based emotion valence prediction, we compare the performance of the developed model with traditional computer vision-based emotion recognition techniques, which analyse facial expressions and visual cues. By comparing the two approaches, we can assess the strengths and limitations of EEG-based emotion recognition and highlight its potential as a standalone solution for emotion detection.

One of the key advantages of using EEG for emotion recognition is its non-invasiveness and ease of use. EEG-based emotion recognition systems can be integrated into wearable devices with minimal user interference, making them suitable for continuous monitoring in real-world settings. Additionally, EEG can capture emotional responses that are not externally visible, for example when a person shows little facial expression, thus providing deeper insight into an individual’s feelings. The compact 4-channel EEG setup used in this study is an important step toward more accessible and cost-effective emotion detection systems. Despite the limited number of channels, the model is designed to extract meaningful patterns from the EEG signals that are predictive of an individual’s emotional valence.

However, the use of EEG in emotion recognition presents several challenges. EEG signals are inherently noisy and prone to artifacts caused by muscle movements, blinking, and environmental factors. Moreover, individual variability in brain patterns makes it difficult to develop a model that generalizes across different people. In this study, we employ a convolutional neural network (CNN), a deep learning approach known for its ability to extract hierarchical features from data, to address these challenges. CNNs are particularly effective for processing time-series data like EEG, as they can capture both spatial and temporal dependencies within the signals. By applying this model to a dataset of 4-channel EEG recordings, we aim to achieve high classification accuracy for emotion valence recognition while minimizing the impact of noise and individual differences.

In summary, prior research has demonstrated that laboratory EEG systems with high-density electrode configurations are capable of accurately recognizing emotional states, offering valuable opportunities for affective computing and mental health assessment. Based on these findings, our study investigates whether emotion valence prediction can be effectively achieved using a 4-channel wearable EEG device. For this purpose, a convolutional neural network is trained on large public multi-channel EEG datasets and subsequently evaluated on data collected with a compact 4-channel headband.

The rest of the paper is organized as follows:

Section 2 reviews related work on emotion valence prediction.

Section 3 describes the materials and methods, including data collection and preprocessing, model architecture, training procedures, and evaluation metrics.

Section 4 presents the results, and Section 5 discusses the challenges encountered and the solutions adopted.

The final section concludes the study and discusses future directions for research.

2. Related Works

Emotion recognition involves several theoretical and technological approaches. One theoretical view treats emotions as discrete categories. For example, Plutchik proposes a “wheel of emotions” with eight basic emotional states that differ in intensity and polarity [4]. Another approach places emotions on continuous dimensions, usually valence (pleasant–unpleasant) and arousal (high–low). Lang’s framework fits this idea, showing that each emotional experience can be placed on these two main axes [5].

Emotion recognition has been widely explored in both computer vision and physiological signal processing, with EEG-based emotion classification gaining increasing attention due to its potential for detecting internal emotional states. Various datasets have been collected to facilitate research in this field, each employing different methodologies for emotion elicitation, recording modalities, and labeling strategies.

Various methods have been used to induce emotional states in participants while recording their brain activity. The choice of stimuli depends on the desired emotional responses. A common approach to eliciting emotions is through audiovisual stimuli, where participants are shown preselected video clips or images designed to trigger specific emotional states. Carefully curated film segments or standardized image sets can evoke positive, negative, or neutral emotions, while music can further amplify emotional responses. Another method involves self-referential or autobiographical recall, where individuals are asked to remember past experiences associated with strong emotions. This technique taps into personal memories and can evoke emotions that are more representative of real-world affective states.

Emotion labeling in EEG-based datasets typically relies on self-reported assessments using scales such as the Self-Assessment Manikin, which measures valence, arousal, and dominance. Participants provide their subjective ratings immediately after stimulus exposure, ensuring that the reported emotions reflect their immediate experience. In contrast, video-based emotion datasets often employ facial expression recognition frameworks combined with expert annotations or crowd-sourced labeling.

In earlier research on EEG-based emotion recognition, many studies used traditional machine learning methods. These methods relied on hand-selected features, such as power spectral density (PSD) in the delta, theta, alpha, beta, and gamma bands, and various statistical measures derived from time-domain signals. This line of work emphasizes the role of EEG alpha rhythms as indicators of relaxation and autonomic regulation relevant to emotion recognition. Algorithms such as support vector machines, k-nearest neighbors, discriminant analysis, or ensemble methods were then used for classification. Rahman et al. combined principal component analysis and a statistical test to refine features before training a support vector machine; their system effectively separated neutral, positive, and negative emotional states [6]. Jung et al. used several machine learning models, including XGBoost and support vector classifiers, to classify emotional states from EEG signals, with the best result reaching 93.1% accuracy for high arousal versus baseline [7]. Garg et al. investigated valence and arousal prediction using a portable Emotiv EEG headset with feature-based regression models, achieving RMSE values below 1 on DREAMER and demonstrating the feasibility of low-cost mobile EEG for continuous emotion decoding [8]. Their results show that traditional features combined with k-nearest neighbors (KNN) regression can effectively model continuous emotional states for finer-grained emotion recognition. While these methods can work well with clean data and carefully chosen features, they may not capture complex patterns in EEG signals and often require substantial domain knowledge to identify the most useful features.
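The band-power features mentioned above can be sketched as follows. This is a minimal illustration, assuming Welch’s method from SciPy, 1-s Welch segments, and conventional band edges (the exact edges vary between studies; the gamma band is capped at 40 Hz here only to match the bandpass filter used later in this paper). The names `BANDS` and `band_power_features` are hypothetical and not taken from any of the cited works.

```python
import numpy as np
from scipy.signal import welch

# Conventional EEG bands in Hz (assumed edges; studies differ slightly).
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 40.0),
}


def band_power_features(epoch, fs, bands=BANDS):
    """Return an (n_channels, n_bands) array of mean PSD per frequency band.

    epoch: array of shape (n_channels, n_samples).
    """
    # Welch PSD with 1-s segments (or the whole epoch if it is shorter).
    freqs, psd = welch(epoch, fs=fs, nperseg=min(epoch.shape[-1], int(fs)), axis=-1)
    features = []
    for low, high in bands.values():
        mask = (freqs >= low) & (freqs < high)
        features.append(psd[:, mask].mean(axis=-1))
    return np.stack(features, axis=-1)
```

A feature matrix built this way (flattened per epoch) is what a classical classifier such as an SVM or KNN would then consume.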

Deep learning methods have become popular in recent years because they can learn features directly from raw or minimally preprocessed EEG data. Convolutional neural networks are especially common for detecting local patterns across space (channels) and time, and they can learn robust representations of the data without extensive manual feature engineering. Wang et al. proposed a 2D CNN model that uses convolutional kernels of different sizes to extract emotion-related features in both the time and spatial directions, achieving recognition accuracies of up to 99.99% for arousal and 99.98% for valence binary classification on the DEAP dataset [9].

Recurrent neural networks (RNNs) are another form of deep learning suited to sequential data such as EEG. RNNs maintain a hidden state that evolves over time, which is useful for capturing the changing nature of emotional responses. Gated variants, such as long short-term memory (LSTM) networks and gated recurrent units (GRUs), mitigate the vanishing-gradient problem that limits plain RNNs on long sequences. Jiang et al. used a Bi-LSTM model to classify positive and negative emotions from EEG signals, achieving recognition accuracies of 90.8% and 95.8%, respectively, demonstrating the effectiveness of deep learning models in emotion recognition [10]. Yao et al. proposed a hybrid Transformer-CNN model (EEG ST-TCNN) for spatial–temporal EEG emotion classification, achieving 96.67% accuracy on SEED and over 96% on DEAP valence/arousal, demonstrating that combining multi-head attention with CNN enhances feature extraction for emotion recognition [11].

Recent work by Du et al. [12] further extends the application of deep learning in emotion recognition to music-induced emotions. In their study, a hybrid 1D-CNN-BiLSTM model was proposed to classify valence and arousal from EEG signals evoked by Chinese ancient-style music. Their approach, validated on both public datasets (DEAP and DREAMER) and a self-acquired EEG dataset from Chinese college students, achieved high classification accuracies, especially in negative valence detection.

Traditional laboratory EEG emotion recognition systems often use high-density setups with many electrodes, which increases spatial resolution but also significantly raises computational complexity and reduces user comfort. To address this, Xiong et al. proposed a sparse EEG-based emotion recognition method using the CNN-KAN-F2CA model, which integrates frequency-domain and spatial features from a reduced number of EEG channels. Their approach demonstrated competitive performance, achieving 97.98% accuracy for a three-class task on the SEED dataset while using only four EEG channels [13].

Another trend in emotion recognition research focuses on making EEG recording more user-friendly by using wearable or low-channel devices. Wu et al. reached 75–76% accuracy for valence detection with only two frontal channels [14]. Nandini et al. proposed an ensemble deep learning framework for emotion recognition using wearable devices, including the MUSE 2 EEG headband, achieving high classification accuracies of 99.41% for discrete emotions and 97.81% for the valence dimension in the 2D valence–arousal model [15]. These results suggest that smaller EEG headsets can still support practical emotion recognition, especially if signal quality is managed well. However, such devices offer less spatial detail, so more research is needed to see how they perform in varied real-world conditions.

An important consideration for EEG-based emotion recognition is the temporal nature of emotions themselves. Emotions are inherently dynamic processes that typically unfold and dissipate within relatively short time spans. Verduyn et al. conducted two experience sampling studies and demonstrated that the majority of naturally occurring emotional episodes last less than 30 min [16]. These findings imply that emotions are transient phenomena rather than stable, long-lasting states, which places clear requirements on the temporal resolution of recognition systems.

For EEG-based approaches, this means that analysis windows must be sufficiently short to capture the onset, peak, and decay of emotional responses without averaging them out. If the chosen time window is too long, subtle but critical changes in emotional dynamics may be lost, and the resulting model will fail to reflect the rapid shifts that characterize real affective experiences. On the other hand, very short windows may not contain enough discriminative information for robust classification, leading to unstable predictions.

This trade-off between capturing emotional variability and ensuring stable classification was systematically investigated by Ouyang et al., who analyzed the influence of EEG time window length on recognition accuracy [17]. Their results showed that relatively short windows are sufficient for reliable emotion classification and that excessively long windows do not necessarily improve performance. In fact, shorter windows allow models to adapt more effectively to the dynamic character of affective processes while preserving computational efficiency.

3. Materials and Methods

This section describes data collection and preprocessing, the model architecture, training procedures, and evaluation metrics.

3.1. General Description

This study focuses on emotion recognition using EEG data and video-based emotion recognition models. Two public datasets, FACED (30-channel EEG at 250 Hz from 123 participants) and SEED (62-channel EEG at 200 Hz from 15 participants), are used to train a convolutional neural network for emotion valence prediction. Since the recorded dataset consists of only 4-channel EEG data, a preprocessing step is applied to select the corresponding channels and align the data structure with the trained model.

The trained CNN model is then used to predict emotion valence for the recorded dataset based on EEG signals. In parallel, a video-based emotion recognition model from another study [18] is used to analyze emotions from the recorded video data. Because our recorded dataset has no emotion labels, the output of the video-based model is used as ground truth when evaluating the EEG-based model. A general overview of the methodology is shown in Figure 1. A central element of the proposed methodology, highlighted by the wide arrow, is the application of a CNN model trained on large public datasets to emotion valence prediction from wearable low-channel EEG recordings.

3.2. Our Dataset Collection

Two primary devices were used in this study. The first was the BrainBit headband (California, USA; Figure 2), a wearable EEG device equipped with four dry electrodes that records raw EEG signals at a sampling rate of 250 Hz, with values in volts. The second was a standard web camera that recorded video of the participant’s face at 720p resolution. The data collection setup is shown in Figure 3.

The BrainBit Headband follows the international 10–20 electrode placement system and is equipped with four active electrodes positioned at O1, O2, T3, and T4. The reference electrode for the BrainBit device is positioned on the forehead.

The study involved 16 participants who were selected without specific criteria regarding age or gender.

Data collection was performed during computer-based sessions in which participants were seated in front of a laptop. Each session lasted approximately three hours and involved a variety of tasks designed to elicit a range of eye movements. These tasks included reading passages displayed on the screen, completing standardized Landolt C tests, and playing simple computer games.

3.3. Public Datasets

We incorporated two established EEG emotion datasets to expand the diversity and volume of training data. Both contain well-labeled EEG recordings from controlled emotion-elicitation experiments, and were adapted to the 4-channel BrainBit configuration used in our study for consistent preprocessing, model training, and cross-dataset evaluation.

3.3.1. FACED Dataset

The FACED dataset [19] comprises recordings from 123 participants, each exposed to a series of emotion-eliciting stimuli. EEG signals were recorded with a 30-channel system at a sampling rate of 250 Hz and are stored in volts.

Participants were exposed to 28 distinct video clips, each selected to evoke one of nine specific emotional states: amusement, inspiration, joy, tenderness, anger, fear, disgust, sadness, and neutrality. After viewing the stimuli, participants provided self-assessments of their emotional experiences. These subjective ratings were then used to label the EEG data in terms of valence (positive, negative, neutral) and discrete emotional categories. An overview of a recording is shown in Figure 4.

Since the EEG recordings in this dataset were captured using a high-density 30-channel system, a subset of four channels was selected to match the electrode placement of the BrainBit headband used in our study. The selected channels were O1 and O2, located over the occipital lobe, and C3 and C4, positioned over the central region of the scalp. These channels were chosen based on their proximity to the BrainBit electrodes, ensuring that the signal characteristics remained as similar as possible between the two datasets. The occipital electrodes (O1 and O2) primarily capture visual cortex activity, which is relevant given that the emotion-eliciting stimuli were presented in video format. The central electrodes (C3 and C4) are associated with motor and sensorimotor processing, which can be linked to emotional responses that involve physiological and muscular changes.

By extracting data from only these four channels, the FACED dataset was effectively adapted to the hardware constraints of the BrainBit headband, allowing the trained model to generalize better to real-world applications where compact EEG devices with a limited number of electrodes are commonly used. This channel selection also reduced computational complexity while preserving critical information relevant to emotion recognition.
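The channel reduction described above amounts to indexing the recording by channel label. The sketch below is a minimal illustration, assuming the data are held as a NumPy array of shape (channels, samples) together with a list of channel names read from the dataset metadata; `TARGET_CHANNELS` and `select_channels` are hypothetical names, not part of the FACED or SEED distributions.

```python
import numpy as np

# Subset used in the text to approximate the BrainBit montage (O1, O2, T3, T4).
TARGET_CHANNELS = ["O1", "O2", "C3", "C4"]


def select_channels(data, ch_names, targets=TARGET_CHANNELS):
    """Keep only the target channels, in the order given by `targets`.

    data: array of shape (n_channels, n_samples); ch_names: channel labels.
    """
    lookup = {name.upper(): i for i, name in enumerate(ch_names)}  # case-insensitive
    missing = [ch for ch in targets if ch.upper() not in lookup]
    if missing:
        raise ValueError(f"channels not found: {missing}")
    return data[[lookup[ch.upper()] for ch in targets]]
```

Fixing the output order matters: the network expects channel i to mean the same electrode position in every dataset.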

3.3.2. SEED Dataset

The SEED dataset [20, 21] is a widely used public dataset for EEG-based emotion recognition, collected to support research on affective computing and brain–computer interfaces. It comprises EEG recordings from 15 subjects (7 males and 8 females) aged 23 to 26 years. The dataset was collected using a 62-channel EEG system based on the international 10–20 system at a sampling rate of 1000 Hz, later downsampled to 200 Hz for analysis.

Participants were shown 15 film clips (each approximately 4 min long) designed to elicit three emotional states: positive, neutral, and negative (see Figure 5). Each participant viewed the same set of videos in randomized order across three separate sessions conducted on different days to evaluate cross-session stability. After watching each clip, subjects reported their subjective emotional states, which were used as labels for the EEG data.

To adapt the SEED dataset to our 4-channel BrainBit setup, we selected the four electrodes that spatially corresponded most closely to the BrainBit electrodes and resampled the data to 250 Hz. Specifically, we used channels O1 and O2 (occipital) and C3 and C4 (central) as counterparts of the BrainBit electrodes O1, O2, T3, and T4, respectively. This approach ensured a consistent data structure across SEED, FACED, and our recorded dataset, enabling comparative evaluation and cross-dataset transfer learning experiments.
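Resampling SEED from 200 Hz to 250 Hz is a rational-ratio conversion (up by 5, down by 4). The text does not state which resampling method was used; the sketch below assumes SciPy’s polyphase resampler, which applies an anti-aliasing FIR filter and keeps the output aligned in time with the input. `resample_eeg` is a hypothetical helper name.

```python
from math import gcd

import numpy as np
from scipy.signal import resample_poly


def resample_eeg(data, fs_in, fs_out):
    """Polyphase resampling of EEG along the last (time) axis.

    For fs_in=200 and fs_out=250 this uses up=5, down=4.
    """
    g = gcd(int(fs_in), int(fs_out))
    up, down = int(fs_out) // g, int(fs_in) // g
    return resample_poly(np.asarray(data, dtype=float), up, down, axis=-1)
```

A 2-s SEED segment of 400 samples per channel becomes 500 samples, matching the 250 Hz rate of FACED and the BrainBit recordings.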

Using SEED in addition to FACED broadens the diversity of training data, covering different cultural and experimental conditions, thereby improving the generalizability of our CNN model to real-world applications.

3.4. Data Preprocessing

To ensure the quality of the EEG data, preprocessing steps were applied to remove noise. A bandpass filter passing frequencies between 0.5 and 40 Hz was applied; previous studies show that such filtering can improve the effectiveness of EEG-based classification [22]. More advanced artifact removal techniques, such as independent component analysis (ICA) or wavelet-based filtering, were not applied, as their effectiveness depends on having multiple spatially distributed channels. In low-channel systems such as the 4-electrode BrainBit headband, these methods may introduce distortions rather than improvements. The 0.5–40 Hz bandpass filter therefore serves as the main denoising step: it attenuates slow drifts, power-line interference, and much of the high-frequency muscle activity while preserving emotion-related EEG activity.
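The 0.5–40 Hz bandpass step can be sketched as follows. The filter family and order are not given in the text; this minimal version assumes a 4th-order Butterworth design in second-order sections, applied forward and backward (zero phase) so that the timing of emotional responses is not shifted. `bandpass` is a hypothetical helper name.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(data, fs, low=0.5, high=40.0, order=4):
    """Zero-phase Butterworth bandpass along the last (time) axis.

    The order and filter type are assumptions for illustration.
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.asarray(data, dtype=float), axis=-1)
```

Second-order sections are used because high-order bandpass designs with a very low cutoff (0.5 Hz at 250 Hz) can be numerically unstable in transfer-function form.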

The continuous EEG signals were segmented into non-overlapping epochs. For the FACED dataset, epochs were extracted within the time span of each video clip, with window lengths between 0.8 and 6 s. Our recorded dataset was likewise segmented into windows of 0.8–6 s. Although emotions typically evolve over longer periods, short segments are sufficient for effective classification, as discussed in Section 2, whereas longer windows may blur rapid emotional transitions and reduce the model’s sensitivity to short-term changes.
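A minimal sketch of the windowing step, assuming the continuous signal is a NumPy array of shape (channels, samples). The optional `overlap_s` argument is an addition for illustration: with the default of 0 it produces the non-overlapping epochs described here, and a non-zero value corresponds to the overlapped configurations evaluated in Section 4.2. `segment` is a hypothetical name.

```python
import numpy as np


def segment(data, fs, win_s, overlap_s=0.0):
    """Cut (n_channels, n_samples) into (n_windows, n_channels, win_len).

    Trailing samples that do not fill a whole window are dropped.
    """
    win = int(round(win_s * fs))
    hop = win - int(round(overlap_s * fs))
    if win <= 0 or hop <= 0:
        raise ValueError("window must be positive and longer than the overlap")
    n = data.shape[-1]
    if n < win:
        return np.empty((0,) + data.shape[:-1] + (win,))
    starts = range(0, n - win + 1, hop)
    return np.stack([data[..., s:s + win] for s in starts])
```

At 250 Hz a 1.1-s window is 275 samples, so a 10-s recording yields 9 non-overlapping windows.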

Consistency across datasets was achieved by applying the same normalization and filtering steps to all of them and by ensuring that all datasets share the same sampling rate (250 Hz).
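The normalization method is not specified in the text. One common choice, shown here purely as an assumption, is to z-score each channel of each epoch, which removes the amplitude scale (EEG values in volts are on the order of 1e-5) and per-device offsets before the data reach the network. `zscore_epochs` is a hypothetical helper.

```python
import numpy as np


def zscore_epochs(epochs, eps=1e-12):
    """Standardize each channel of each epoch to zero mean and unit variance.

    epochs: (n_epochs, n_channels, n_samples). `eps` guards against flat
    channels and is kept tiny because EEG in volts has very small variance.
    """
    mean = epochs.mean(axis=-1, keepdims=True)
    std = epochs.std(axis=-1, keepdims=True)
    return (epochs - mean) / (std + eps)
```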

3.5. Model Architecture and Training

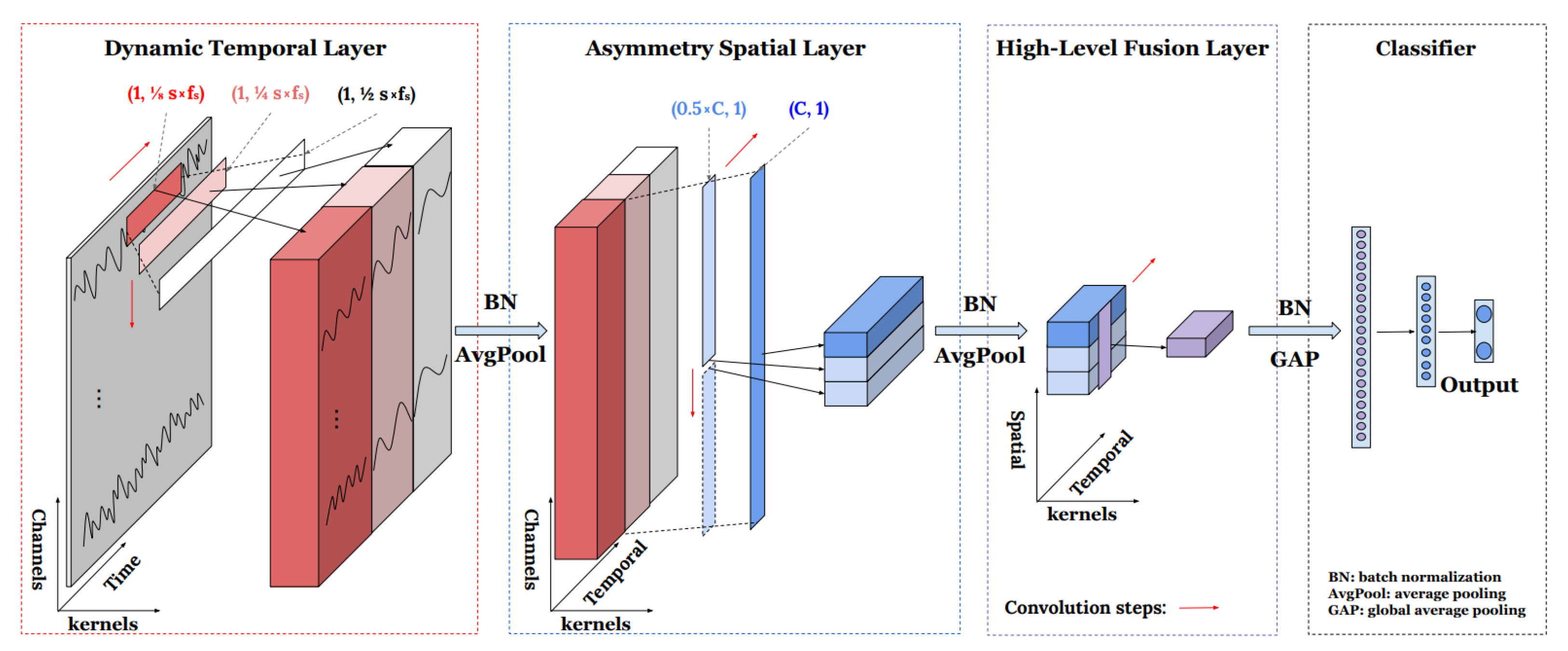

The classification tasks were performed using TSCeption [23], a deep learning model specifically designed for EEG-based time-series classification. TSCeption uses both temporal and spatial convolutional layers to extract multi-scale features from EEG signals. Temporal convolutions with multiple kernel sizes are first applied to each EEG channel to capture both short- and long-term temporal dependencies. The outputs of these temporal layers are then processed by spatial convolutional layers that learn relationships among all EEG channels. The temporal and spatial feature maps are concatenated before entering the fully connected classification layers. The model architecture is shown in Figure 6.
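To make the data flow concrete, the following is a simplified, untrained NumPy illustration of a multi-scale temporal stage followed by a spatial stage, applied to one 4-channel, 1.1-s window. It is not the TSCeption implementation used in this study: the kernel lengths of 0.5, 0.25, and 0.125 s and the pooling size of 8 are assumptions chosen to resemble the published TSCeption design, random weights stand in for learned ones, only one kernel per scale is used, and the hemispheric spatial kernel, batch normalization, and fully connected layers are omitted.

```python
import numpy as np


def temporal_stage(x, fs, windows=(0.5, 0.25, 0.125), pool=8, seed=0):
    """Multi-scale temporal convolution applied to every channel.

    x: (n_channels, n_samples). Returns (n_channels, n_features).
    """
    rng = np.random.default_rng(seed)
    branches = []
    for w in windows:
        kernel = rng.standard_normal(int(w * fs))  # kernel spans w seconds
        # Same kernel slid along every channel ('valid' cross-correlation, as in a CNN layer).
        y = np.stack([np.correlate(ch, kernel, mode="valid") for ch in x])
        y = np.where(y > 0, y, 0.01 * y)  # LeakyReLU
        n = y.shape[1] // pool
        y = y[:, : n * pool].reshape(y.shape[0], n, pool).mean(axis=2)  # average pooling
        branches.append(y)
    return np.concatenate(branches, axis=1)  # join the scales along the time axis


def spatial_stage(t_feat, seed=1):
    """One spatial kernel spanning all channels: a weighted sum over channels."""
    weights = np.random.default_rng(seed).standard_normal(t_feat.shape[0])
    return weights @ t_feat
```

For a 275-sample window at 250 Hz, the three branches contribute 18, 26, and 30 pooled time steps, so each channel ends up with 74 temporal features before the spatial stage collapses the channel axis.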

We used the Adam optimizer with a maximum of 100 training epochs. PyTorch 2.9.0 was used for implementing TSCeption. The number of temporal and spatial convolutional kernels was 15 each, with hidden layer channels set to 512. A dropout rate of 0.5 was used to mitigate overfitting, while the learning rate was set to 0.001 with a weight decay of to introduce regularization. The loss function for training was cross-entropy, given the multi-class nature of the emotion valence classification task.

Because the valence labels in the datasets were imbalanced, a weighted data loader was used to adjust the sampling frequency of each class, so that the model sees more examples from underrepresented classes.
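The weighting scheme is not stated. A common choice, assumed here, is to give every sample a weight inversely proportional to the frequency of its class and draw training samples with replacement according to those weights; PyTorch’s `torch.utils.data.WeightedRandomSampler` does this when passed such per-sample weights. The NumPy sketch below shows the same idea without a framework; `inverse_frequency_weights` and `balanced_indices` are hypothetical names.

```python
import numpy as np


def inverse_frequency_weights(labels):
    """Per-sample probabilities so that every class has equal total mass."""
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    class_weight = {c: 1.0 / n for c, n in zip(classes, counts)}
    w = np.array([class_weight[y] for y in labels])
    return w / w.sum()


def balanced_indices(labels, n_draws, seed=0):
    """Draw sample indices with replacement using inverse-frequency weights."""
    rng = np.random.default_rng(seed)
    p = inverse_frequency_weights(labels)
    return rng.choice(len(p), size=n_draws, replace=True, p=p)
```

With 80 negative, 15 neutral, and 5 positive windows, each class receives one third of the draws on average.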

Two training strategies were employed to evaluate the model’s generalizability. In the first approach, the dataset was randomly split into training, validation, and test sets with an 80/10/10 ratio, ensuring that data from all participants contributed to model training. Early stopping based on validation performance was applied to prevent overfitting and optimize model convergence. In the second approach, a leave-one-subject-out cross-validation (LOSO-CV) scheme was used, in which the model was trained on data from all but one participant (122 participants for FACED) and tested on the held-out participant. This process was repeated for each participant, allowing the evaluation of subject-independent performance and robustness across individuals.
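The LOSO-CV scheme can be expressed as a generator of train/test index sets grouped by participant. This is a minimal sketch, assuming every epoch carries a subject identifier; scikit-learn’s `LeaveOneGroupOut` provides the same splitting, and `loso_splits` is a hypothetical name used only for illustration.

```python
import numpy as np


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx) for each subject."""
    subject_ids = np.asarray(subject_ids)
    for s in np.unique(subject_ids):
        test_idx = np.flatnonzero(subject_ids == s)
        train_idx = np.flatnonzero(subject_ids != s)
        yield s, train_idx, test_idx
```

Splitting by subject rather than by epoch is what makes the evaluation subject-independent: no window from the test participant is ever seen during training.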

3.6. Evaluation Metrics

Model performance was evaluated using accuracy, F1 score, and mean squared error (MSE). Accuracy reflects how often the model correctly predicts the emotional valence. The F1 score, the harmonic mean of precision and recall, summarizes both in a single value and is more informative than accuracy when classes are imbalanced, giving a more comprehensive view of classification performance. MSE was additionally used to measure the consistency between EEG- and video-based prediction probabilities. A similar evaluation design was applied in recent deep learning research [24], supporting the suitability of these metrics for performance assessment.
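The classification metrics can be sketched in a few lines. The text does not state how F1 was averaged over the three valence classes; this minimal version assumes macro averaging (the unweighted mean of per-class F1, each computed as 2TP / (2TP + FP + FN)). The function names are hypothetical.

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Fraction of windows whose predicted valence matches the reference."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def macro_f1(y_true, y_pred, classes):
    """Unweighted mean over classes of F1 = 2TP / (2TP + FP + FN)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    scores = []
    for c in classes:
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)  # class absent in both: score 0
    return float(np.mean(scores))
```

For references `[0, 0, 1, 1, 2, 2]` and predictions `[0, 1, 1, 1, 2, 0]`, accuracy is 4/6 and the per-class F1 scores are 0.5, 0.8, and 2/3.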

3.7. Video-Based Emotion Recognition Comparison Approach

In this study, the video-based emotion recognition system EMO-AffectNetModel [18] was used as a benchmark for evaluating the performance of the EEG-based model. This model analyzes facial expressions to classify emotional states. The system processes each video frame independently, extracting facial features and mapping them to an emotional category using a deep learning model trained on a large-scale facial expression dataset.

The video data of our dataset was recorded simultaneously with the EEG signals, capturing the participants’ facial expressions in real time. Since both video and EEG recordings were acquired on the same recording computer, no additional clock synchronization was necessary. However, because the recordings were started manually and did not begin at exactly the same moment, a common timeline was established based on the overlapping duration of both data sources. Only time segments for which both EEG and video data were available were used in further analysis to ensure consistency in emotion classification.

The recorded data was divided into non-overlapping 1.1-s windows to allow a direct comparison between EEG-based and video-based predictions. For EEG-based recognition, a single emotion valence prediction was generated per 1.1-s window. In contrast, video-based recognition provided frame-level predictions, meaning multiple classifications were obtained within each second. To create a comparable output, the most frequently occurring valence prediction within a 1.1-s interval was used as the final classification for that window. This aggregation method ensured that both models produced a single, comparable prediction per time window.
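The majority-vote aggregation of frame-level video predictions can be sketched as below. The sketch assumes frame timestamps are integers in milliseconds measured from the start of the common EEG/video timeline (integer arithmetic avoids floating-point edge cases at window boundaries) and that labels are valence strings; ties are resolved in favour of the label seen first in the window. `majority_per_window` is a hypothetical name.

```python
from collections import Counter, defaultdict


def majority_per_window(frame_ms, frame_labels, win_ms=1100):
    """Map each 1.1-s window index to its most frequent frame-level label."""
    buckets = defaultdict(list)
    for t, label in zip(frame_ms, frame_labels):
        buckets[t // win_ms].append(label)  # window index of this frame
    return {w: Counter(labels).most_common(1)[0][0]
            for w, labels in sorted(buckets.items())}
```

Each resulting window label is then compared with the single EEG-based prediction for the same window index.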

To assess the consistency and reliability of EEG-based emotion recognition, its predictions were compared with those obtained from the video-based model. The analysis was conducted on a per-window basis, where each 1.1-s segment of data contained both EEG and aggregated video-based emotion predictions. This direct comparison allowed for an evaluation of agreement between the two methods.

4. Results

4.1. EEG-Based Emotion Recognition: FACED and SEED

Performance evaluation was conducted on two experimental setups to assess model generalizability across different datasets:

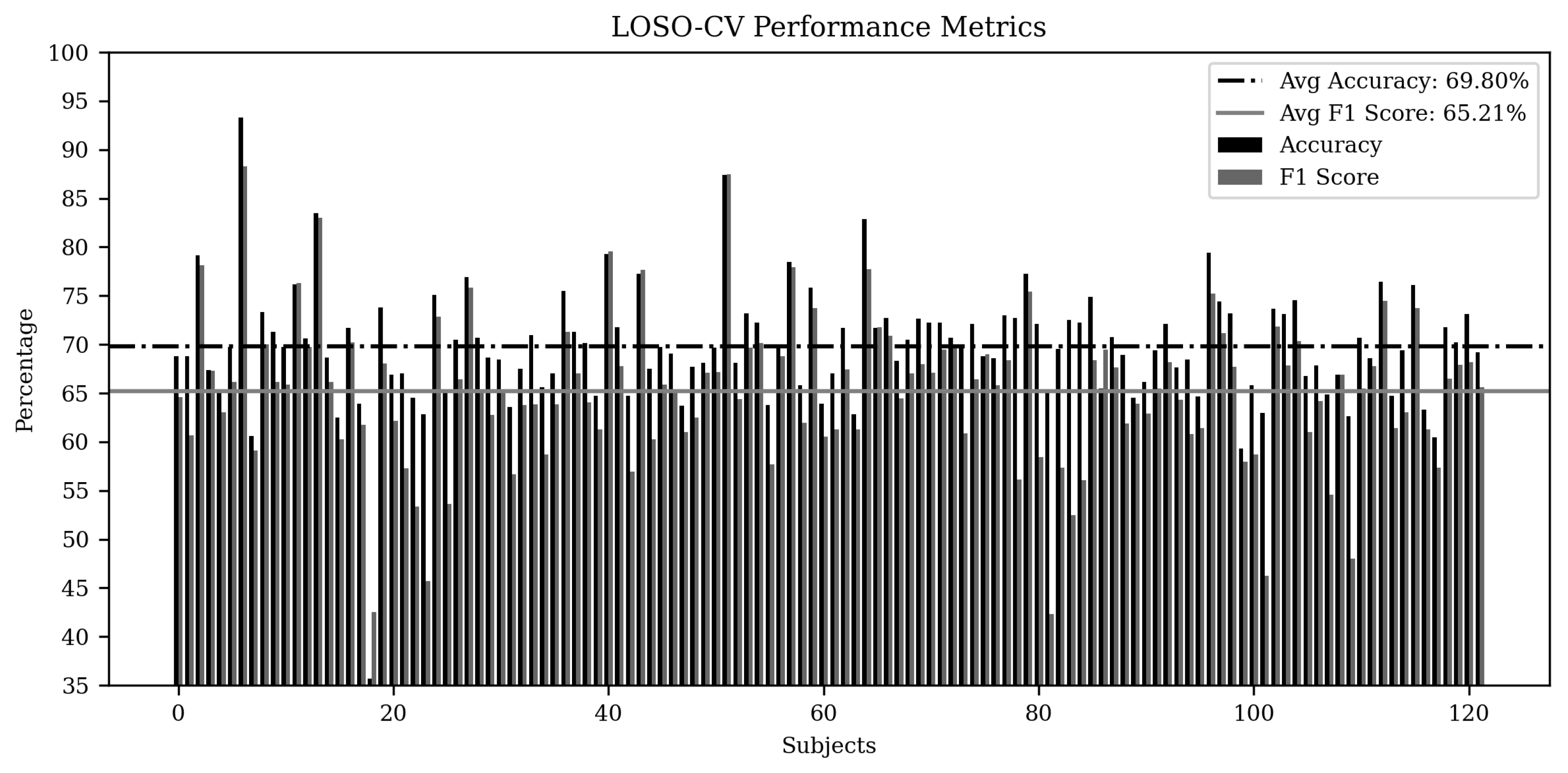

FACED dataset. Performance evaluation was conducted on the FACED dataset under two training scenarios. In the first case, where all participants’ data were mixed and split into training, validation, and test sets, accuracy and F1 scores were averaged across multiple runs to ensure stable results. The model achieved an accuracy of 66.22% and an F1 score of 64.98%, demonstrating competitive performance in emotion valence classification using a compact 4-channel configuration. In the second case, leave-one-subject-out cross-validation (LOSO-CV) was performed, where the model was trained on 122 participants and tested on the remaining one, with results aggregated across all iterations to evaluate subject-independent performance. The metrics are shown in Figure 7, with an average accuracy of 69.80% and an average F1 score of 65.21%.

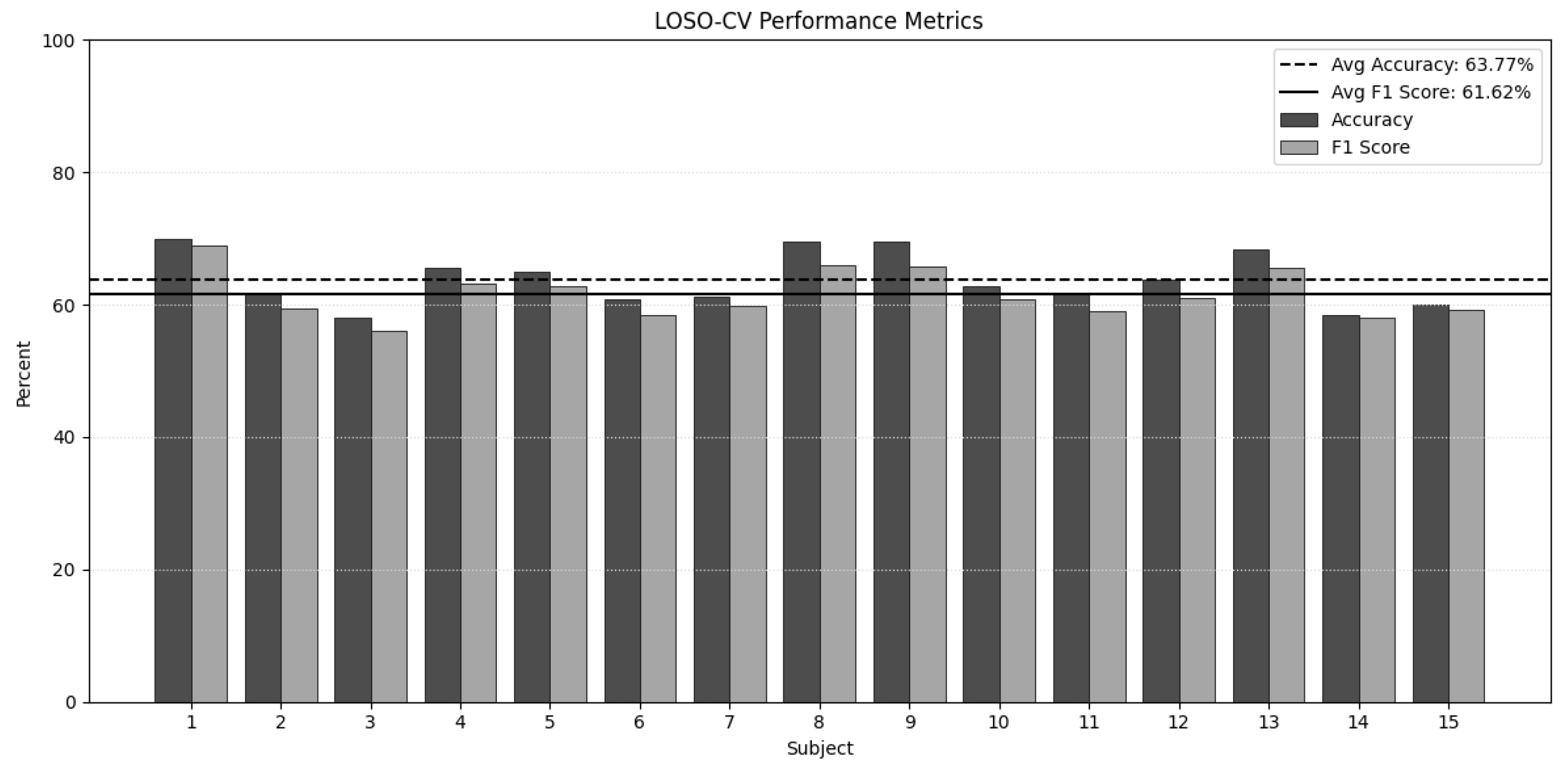

SEED dataset. The same architecture and training procedure were applied to SEED. Under the mixed-subject split, the model obtained 66.90% accuracy and 66.08% F1. To quantify subject-independent generalization on SEED, LOSO-CV was additionally conducted. Aggregating across folds yielded an average accuracy of 64.92% and an average F1 score of 63.15%. The per-subject metrics are shown in Figure 8.

Table 1 reports the effect of window length on accuracy and F1 for each dataset. A window length of 1.1 s maximised both metrics on each dataset, so this length is used in the remaining performance evaluations.

Table 2 lists our best configuration per dataset alongside results reported in other studies for comparison.

4.2. Video-Based Emotion Recognition Comparison

Because our recorded dataset does not include human-provided emotion labels, the outputs of the video-based model were used as ground truth to calculate the performance metrics of our trained model on this dataset.

To further evaluate the robustness of the EEG-based emotion recognition model on limited-electrode data from a real wearable EEG device, we assessed performance for each participant of our recorded dataset across different sliding-window overlap configurations (0–900 ms in steps of 100 ms).

Table 3 shows the accuracy and F1-score for each subject.

To further assess the consistency between EEG-based and video-based emotion recognition models, we calculated the Mean Squared Error (MSE) between their predicted probabilities for each emotion valence category. Lower MSE values indicate closer alignment in probability outputs between the two approaches.

Table 4 presents the average MSE for each valence class and the overall mean across all classes.

The results show that the lowest MSE was achieved for the positive valence class (0.0170), suggesting higher consistency between EEG and video-based models in detecting positive emotional states. In contrast, the neutral valence exhibited the highest MSE (0.0555), indicating greater variability between the two modalities when classifying the neutral expressions. This effect was most pronounced for participants whose video-based model outputs were heavily biased toward neutral expressions. In such cases, the video model consistently predicted neutrality even when EEG signals reflected more varied affective responses. Consequently, the EEG-based model produced a broader distribution across valence classes, while the video-based predictions remained clustered around neutrality, increasing the divergence between the modalities.
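The per-class MSE reported above can be computed from two aligned arrays of window-level probabilities. This minimal sketch assumes both models output one probability per valence class for every 1.1-s window, in the same class order; how the video-based frame probabilities are reduced to one vector per window (for example, by averaging) is an assumption, since the text only describes majority voting for labels. `per_class_mse` is a hypothetical name.

```python
import numpy as np


def per_class_mse(p_eeg, p_video):
    """MSE per valence class and its mean over classes.

    p_eeg, p_video: (n_windows, n_classes) probability arrays aligned by window.
    """
    p_eeg = np.asarray(p_eeg, dtype=float)
    p_video = np.asarray(p_video, dtype=float)
    per_class = ((p_eeg - p_video) ** 2).mean(axis=0)  # average over windows
    return per_class, float(per_class.mean())
```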

Additionally, to better capture the dynamics of emotional changes, we extracted the maximum number of non-overlapping 1.1-s intervals in which the participant’s emotional valence changed and the emotional state was most distinctly expressed.

Formally, let the EEG recording be represented as a time series $X = \{x_t\}_{t=1}^{T}$, and let $v(t)$ denote the emotional valence at time $t$. We define an emotional change point as any time index $t$ such that

$$|v(t) - v(t-1)| > \delta,$$

where $\delta$ is a predefined threshold indicating a significant change in valence.

Next, we define a set of dynamic segments $S = \{s_1, s_2, \dots, s_K\}$, where each segment corresponds to an interval $s_k = [t_k, t_k + w)$ of length $w = 1.1$ s, satisfying the following conditions:

$s_i \cap s_j = \emptyset$ for all $i \neq j$ (non-overlapping);

$s_k$ contains a local maximum in emotional expressiveness, as measured by the magnitude of valence change or other signal-based metrics.

To determine the threshold for detecting emotional change, we analysed the distribution of valence differences over time. The threshold δ was chosen so that only the top 25% of all observed valence changes exceed it; that is, δ is the 75th percentile of the observed valence differences.
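The threshold choice and the change-point rule $|v(t) - v(t-1)| > \delta$ can be sketched as below. This is an illustration under stated assumptions, not the authors' code: valence is taken to be a scalar score per time step (for example, a hypothetical P(positive) minus P(negative)), the 75th percentile is computed with linear interpolation, and the helper names are hypothetical.

```python
import statistics


def valence_threshold(valence):
    """delta = 75th percentile of |v(t) - v(t-1)|, so only the top 25% of changes exceed it."""
    diffs = [abs(b - a) for a, b in zip(valence, valence[1:])]
    # method="inclusive" interpolates linearly between order statistics;
    # with n=4 the returned cut points are the 25th, 50th and 75th percentiles.
    return statistics.quantiles(diffs, n=4, method="inclusive")[2]


def change_points(valence, delta):
    """Time indices t >= 1 at which |v(t) - v(t-1)| > delta."""
    return [t for t in range(1, len(valence)) if abs(valence[t] - valence[t - 1]) > delta]


# Hypothetical valence trace sampled once per time step.
v = [0.0, 0.1, 0.1, 0.9, 0.8, 0.8, -0.5, -0.4]
delta = valence_threshold(v)
print(round(delta, 2), change_points(v, delta))
```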

This approach enables dynamic segmentation with variable start times (see Figure 9), in contrast to the static, fixed-interval segmentation used in previous experiments. As a result, the analysed intervals correspond not only to genuine moments of valence transition but also to time points where emotions were most prominent. This allows the model to focus on the most informative and expressive segments of the EEG data, providing a more fine-grained and realistic assessment of emotion recognition performance. Results are shown in Table 5.
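One way to obtain the maximum number of non-overlapping windows around detected change points is a greedy scan, sketched below. The centring of each window on a change point (as a proxy for the local expressiveness maximum), the 275-sample window (1.1 s at a hypothetical 250 Hz) and the function name are assumptions. Because all candidate windows have the same length, ordering them by start time also orders them by end time, so keeping every window that does not overlap the previously kept one is the classic optimal interval-scheduling strategy.

```python
def select_segments(change_idx, n_samples, win):
    """Greedily keep the largest set of non-overlapping, equal-length windows.

    Each candidate window is centred on a change point and is half-open [start, end).
    """
    half = win // 2
    segments = []
    next_free = 0  # first sample not yet covered by a kept window
    for t in sorted(change_idx):
        start = t - half
        end = start + win
        if start < 0 or end > n_samples:
            continue  # window would extend past the recording boundaries
        if start >= next_free:
            segments.append((start, end))
            next_free = end
    return segments


# Hypothetical change points (sample indices) in a 5-s recording at 250 Hz.
print(select_segments([300, 400, 700, 1000], n_samples=1250, win=275))
```

The change point at sample 400 is dropped because its window would overlap the one already kept around sample 300.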

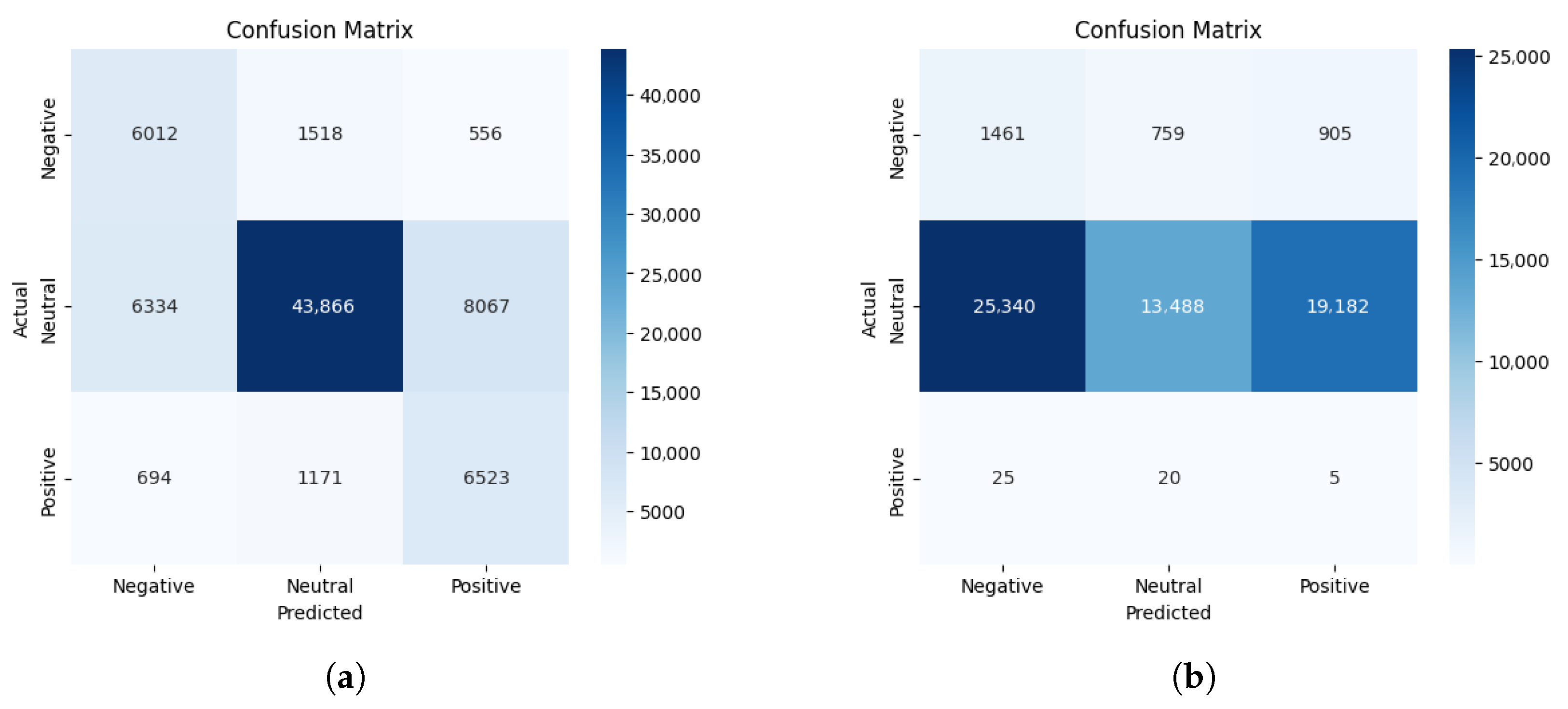

To understand why some participants score well below the majority, we examined two confusion matrices, treating the video-based labels as ground truth and comparing the EEG-based predictions against them.

Figure 10 contrasts a participant with 75% accuracy with one at 30%. In the low-accuracy case, the video method labels most states as neutral, whereas the EEG-based model spreads its predictions across a wider range of valence classes. In other words, EEG may detect changes in emotional valence that the video method tends to miss. This is consistent with the idea that EEG can reveal hidden or earlier emotional responses that do not always appear in facial expressions.
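The confusion-matrix comparison can be sketched as follows, with rows indexed by the video-based (reference) label and columns by the EEG-based prediction. The label sequences are hypothetical and chosen only to show how a reference that is mostly neutral penalises an EEG model whose predictions are spread across classes.

```python
CLASSES = ("negative", "neutral", "positive")


def confusion_matrix(reference, predicted, classes=CLASSES):
    """Rows: video-based reference label; columns: EEG-based predicted label."""
    index = {c: i for i, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for r, p in zip(reference, predicted):
        m[index[r]][index[p]] += 1
    return m


def accuracy(m):
    """Fraction of windows on the diagonal (agreement with the reference)."""
    total = sum(map(sum, m))
    return sum(m[i][i] for i in range(len(m))) / total


# Hypothetical participant: the video model says "neutral" for 8 of 10 windows.
video = ["neutral"] * 8 + ["positive", "negative"]
eeg = ["neutral", "positive", "negative", "neutral", "positive",
       "neutral", "negative", "neutral", "positive", "negative"]
m = confusion_matrix(video, eeg)
for name, row in zip(CLASSES, m):
    print(f"{name:>8}: {row}")
print("agreement:", accuracy(m))
```

Every off-diagonal count here sits in the neutral row, i.e. the disagreement comes from windows the video model calls neutral.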

5. Challenges and Solutions

One of the main challenges encountered in this study was the variability of EEG patterns across individuals. Each participant’s brain activity is unique, so EEG signals differ even when participants are exposed to the same emotional stimuli. Moreover, our dataset and the public datasets (FACED and SEED) differ both within and between datasets in participants’ age, gender, culture, and experimental protocol. Our dataset consists of 16 European males aged 20–41 years, the FACED dataset includes 123 Chinese participants (75 females) aged 17–38 years, and the SEED dataset contains 15 Chinese participants (7 males and 8 females) aged 23–26 years. This variability makes it difficult to train a single model that classifies emotional valence well across all participants and datasets. To mitigate this, we employed a leave-one-subject-out cross-validation strategy: the model is trained on data from all but one participant and tested on the held-out participant, which measures how well it generalizes to unseen neural patterns. Additionally, transfer learning from large public datasets is intended to improve generalization and to keep performance stable as our own recorded dataset grows.
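The leave-one-subject-out protocol described above amounts to one fold per participant. A minimal sketch of the split, with hypothetical subject identifiers, is shown below; model training itself is omitted.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical subject label for each EEG window.
ids = ["s1", "s1", "s2", "s3", "s3"]
for subject, train, test in leave_one_subject_out(ids):
    print(subject, "train:", train, "test:", test)
```

No window from the held-out subject ever appears in that fold's training set. scikit-learn's `LeaveOneGroupOut` splitter provides the same grouping when subject IDs are passed as `groups`.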

Another challenge in comparing EEG-based and computer vision-based emotion recognition methods was class imbalance. Most participants predominantly displayed neutral emotions, so a high accuracy could reflect the dominance of the neutral class rather than the model’s actual ability to distinguish between emotional states.
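The accuracy inflation can be made concrete with a trivial classifier that always predicts "neutral". The class proportions below are hypothetical; the point is that accuracy rewards the majority class while macro-averaged F1 exposes that the minority valence classes are never recognised.

```python
def accuracy(y_true, y_pred):
    """Fraction of exact label matches."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred, classes):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    f1s = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return sum(f1s) / len(classes)


# Hypothetical imbalanced labels: 80% neutral windows.
y_true = ["neutral"] * 80 + ["positive"] * 12 + ["negative"] * 8
y_pred = ["neutral"] * 100  # majority-class baseline
print(accuracy(y_true, y_pred))
print(round(macro_f1(y_true, y_pred, ["negative", "neutral", "positive"]), 3))
```

The baseline reaches 80% accuracy but a macro F1 of only about 0.30.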

Additionally, computer vision-based emotion recognition is not perfectly accurate and therefore cannot be treated as an error-free ground truth. Factors such as lighting conditions, facial occlusions, and individual differences in expressiveness can impact its performance. This further complicates direct comparisons between EEG-based and video-based recognition models, as errors in the video-based model propagate into the evaluation of the EEG-based classifier.

Furthermore, our EEG-based approach is limited by signal quality. The preprocessing stage applies a bandpass filter, but when muscle activity or other artifacts are strongly present in a recording, this filtering may not be sufficient, and the model may fail on such highly noisy data.

The large inter-subject variability observed in Table 3 and Table 5 may arise from differences in EEG signal quality and individual expressiveness. For some participants, poor electrode contact or motion artifacts reduced EEG reliability, while weak or ambiguous facial expressions produced unreliable video-based labels. Since these video outputs were used as reference labels, their inaccuracy directly lowered the apparent F1 score for those subjects.

6. Discussion

Our approach intentionally employs only four EEG channels (O1, O2, T3, T4, or the nearest available positions) to match wearable hardware constraints. Consequently, absolute accuracy on public datasets may be lower than that reported by related works that exploit full 30- or 62-channel montages and/or subject-dependent protocols, and comparisons should be interpreted accordingly.

The public FACED corpus has been used in several recent investigations that rely on the full 30-channel montage and on epochs of four to six seconds that are temporally aligned with the video elicitors. The baseline reported by the dataset’s authors reached a cross-subject accuracy of 69.3% for binary valence and 35.2% for the nine discrete emotional categories [19]. In the present work, the electrode count has been reduced to the four positions available on the BrainBit headband (O1, O2, T3, T4), and the window length has been shortened to 1.1 s without overlap. Although these restrictions remove much of the spatial redundancy and amplify the effect of inter-subject variability, the proposed model still attains 69.8% accuracy in a leave-one-subject-out protocol. This numerical improvement should not be interpreted as a direct outperformance of prior systems; rather, it shows that competitive valence decoding is feasible with a 7.5-fold reduction in sensor count (from 30 to 4 electrodes) and with a temporal resolution that is compatible with real-time applications.

The SEED dataset contains recordings from 15 subjects with 62 electrodes collected in three separate sessions. Methods that exploit the complete montage and that train and test on data from the same subjects routinely exceed 98% accuracy; recent examples include ACTNN [25]. When the evaluation is made subject-independent, performance typically decreases.

All datasets used in this study include self-reported emotion annotations that specify only one dominant emotion per recording. In practice, a single EEG segment may reflect a combination of emotions, but only the prevailing state is labelled. As a result, the proposed model focuses on recognising the dominant emotion within each window, consistent with the labelling schemes used for training.

The final TSCeption-based model requires approximately 66 MFLOPs per inference, making it lightweight enough for on-device processing. Modern microcontrollers can easily handle this computational load, suggesting that real-time emotion recognition with the proposed 4-channel EEG setup is possible for wearable implementations.
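A back-of-the-envelope estimate of the sustained compute budget is sketched below. It assumes, hypothetically, that one inference is run per window hop, so the required rate is the per-inference cost divided by the hop length (window length minus overlap); only the 66 MFLOPs figure and the 1.1-s window come from the text. Whether a particular microcontroller meets the resulting rate depends on its clock, DSP or SIMD support, and numeric precision, none of which are specified here.

```python
FLOPS_PER_INFERENCE = 66e6  # ~66 MFLOPs per forward pass, as stated in the text
WINDOW_S = 1.1              # analysis window length (s)


def required_flops_per_second(overlap_s):
    """Sustained FLOP/s if one inference is executed per window hop (assumption)."""
    hop_s = WINDOW_S - overlap_s
    if hop_s <= 0:
        raise ValueError("overlap must be shorter than the window")
    return FLOPS_PER_INFERENCE / hop_s


for overlap_ms in (0, 500, 900):
    rate = required_flops_per_second(overlap_ms / 1000)
    print(f"{overlap_ms} ms overlap: {rate / 1e6:.0f} MFLOP/s")
```

Under these assumptions the budget ranges from about 60 MFLOP/s without overlap to about 330 MFLOP/s at 900 ms overlap.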

7. Conclusions

The study demonstrates the potential of using CNN-based models for emotion valence recognition from 4-channel EEG data. Despite challenges related to individual variability and noise in the EEG signals, the proposed model showed promising performance in classifying emotions along the valence dimension. Using a compact EEG setup offers a non-invasive and efficient approach to emotion detection, which can be beneficial in real-time applications such as adaptive user interfaces and mental health monitoring systems.

Future work will focus on several directions. First, the model’s robustness and generalizability can be enhanced by incorporating advanced data augmentation and domain adaptation techniques to better address class imbalance and inter-subject variability. Second, integrating complementary physiological signals, such as heart rate variability and galvanic skin response, or fusing the computer vision-based and EEG-based approaches could provide a more comprehensive understanding of emotional states. Finally, extensive testing in diverse and ambulatory settings will be essential to validate the system’s performance under varied conditions.