FedIFD: Identifying False Data Injection Attacks in Internet of Vehicles Based on Federated Learning

Abstract

1. Introduction

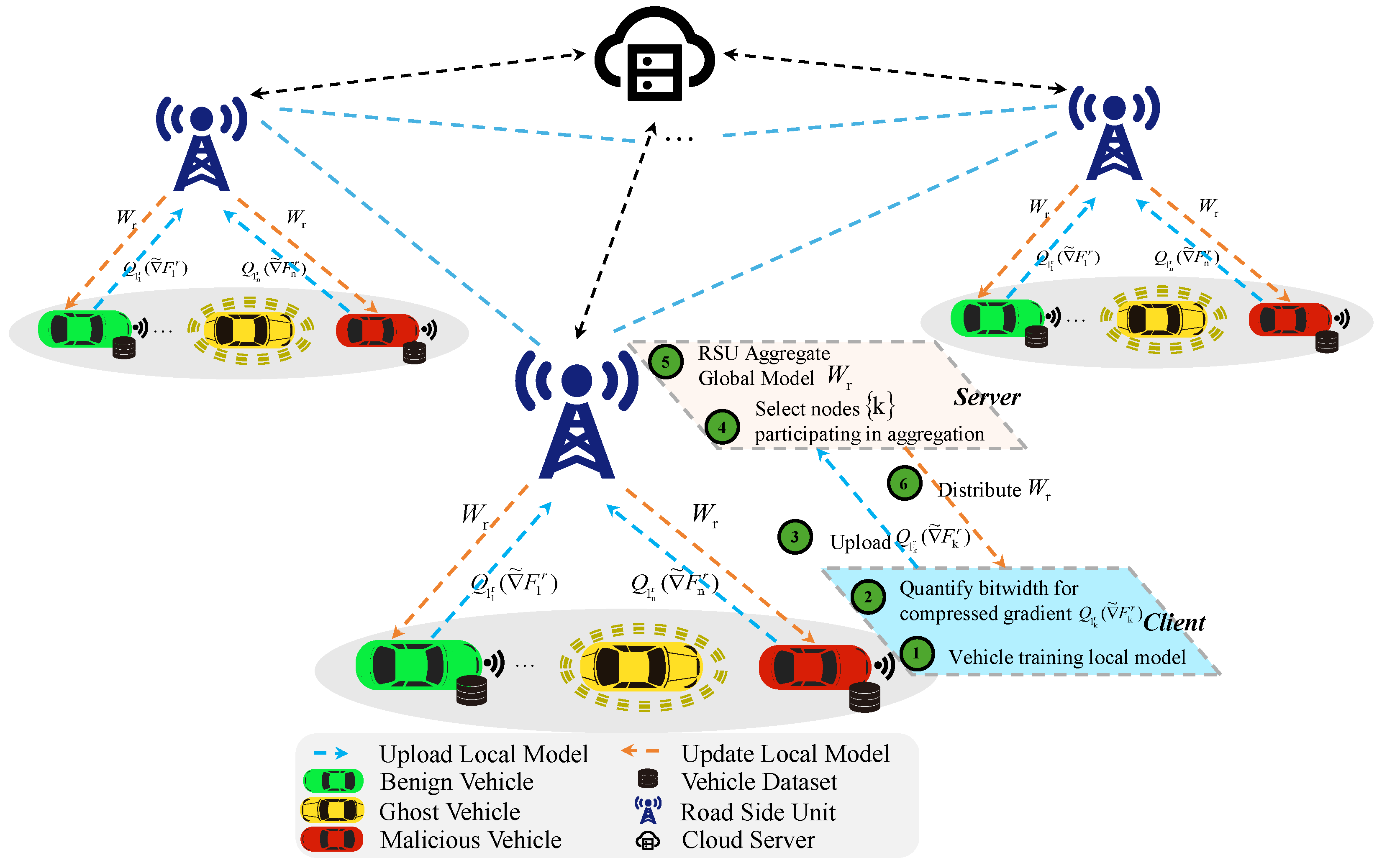

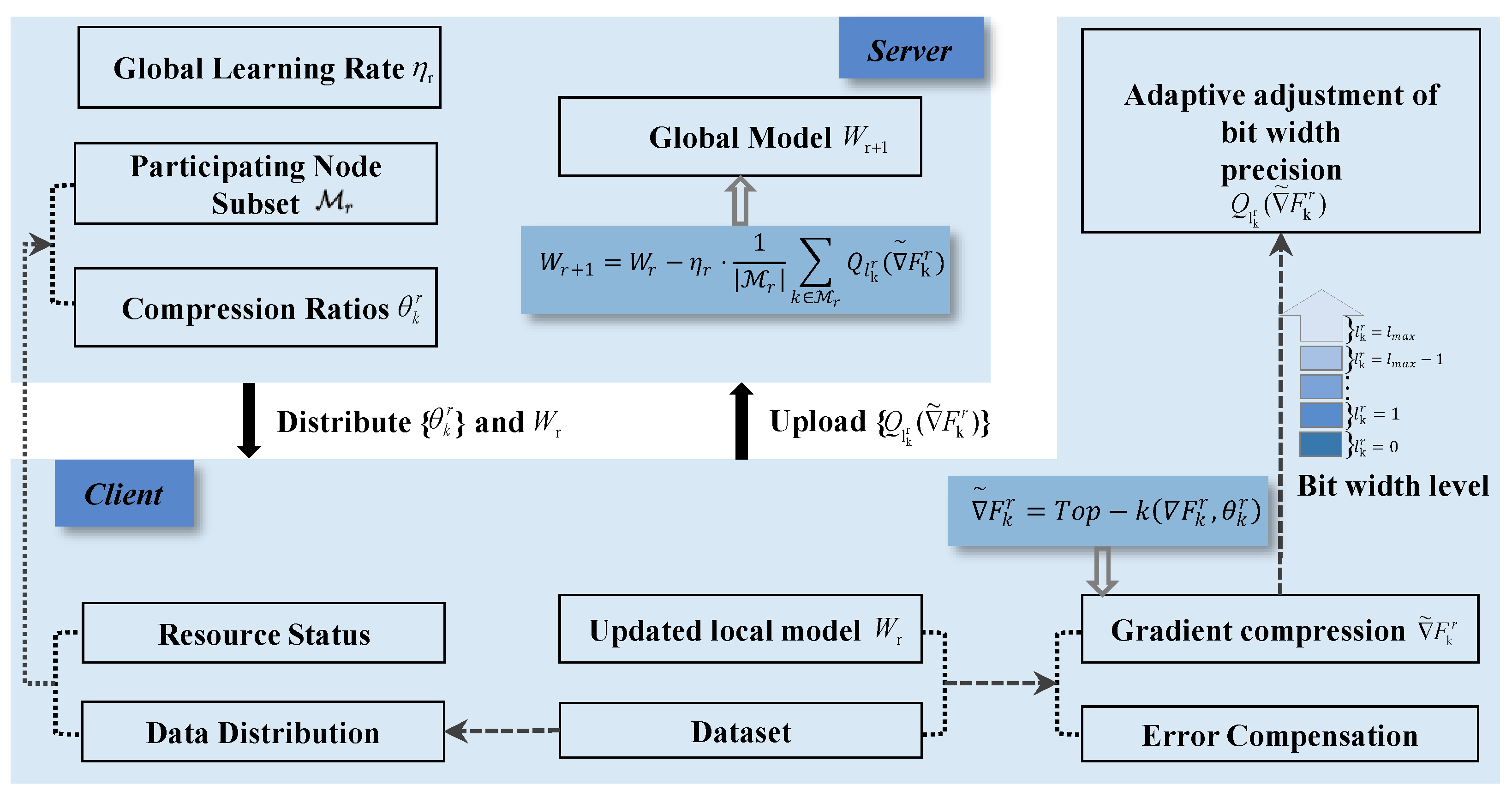

- A federated learning framework for detecting false data injection attacks in the Internet of Vehicles is proposed. A lightweight vehicle threat detection model is designed, which performs local incremental training using BSM containing information such as vehicle coordinates, speed, and signal strength. Gradients are compressed using the Q-FedCG algorithm and then uploaded to the RSU for global model aggregation.

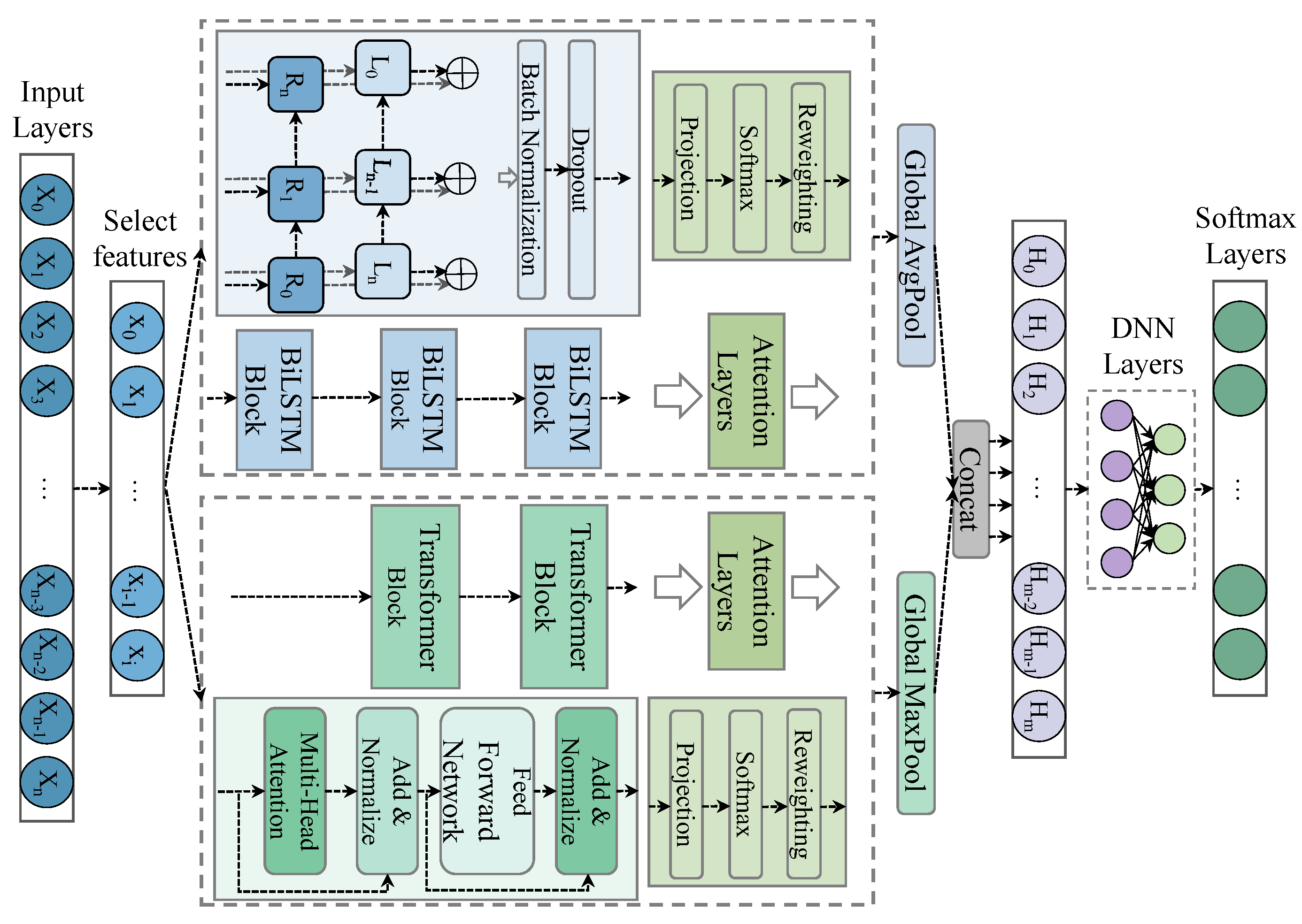

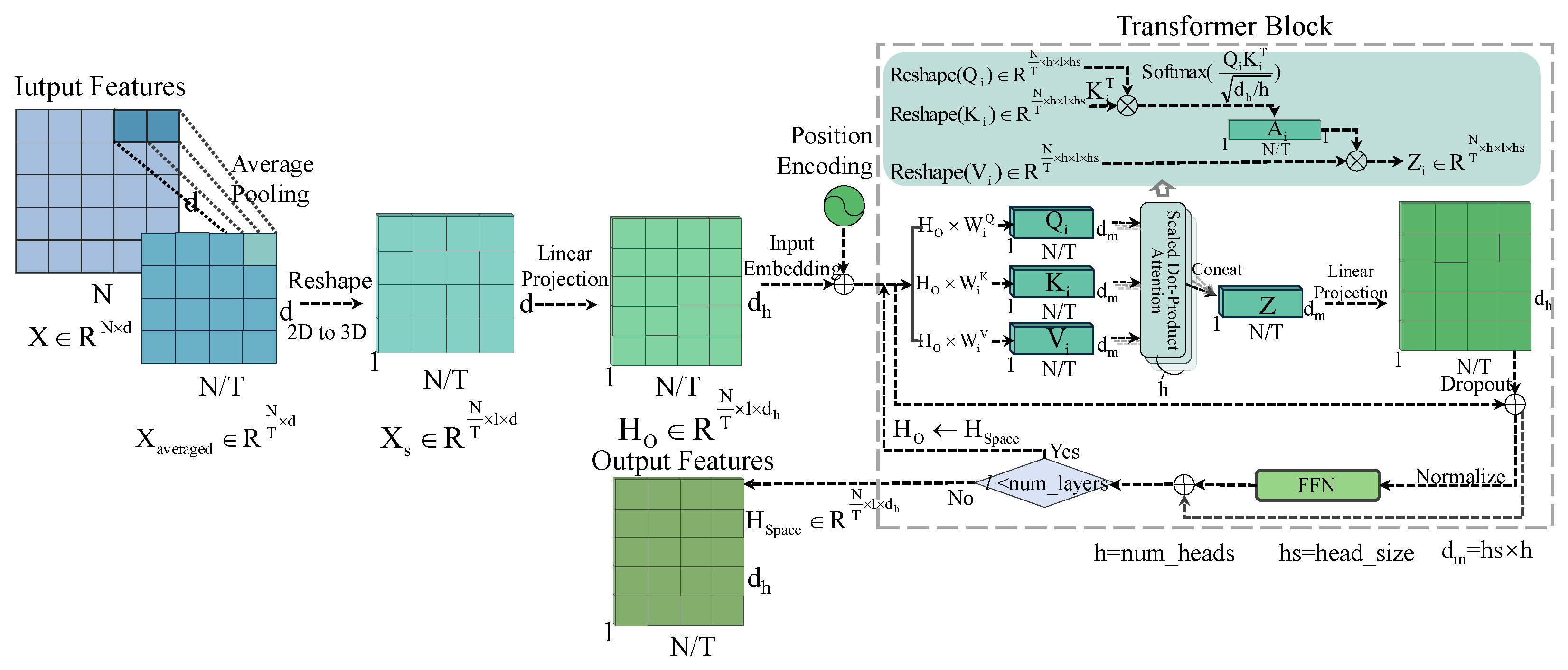

- A dual-branch spatiotemporal parallel feature extraction architecture is developed. The original features are restructured using a time window, and a three-layer stacked BiLSTM is employed to capture temporal dependencies bidirectionally. A sliding average strategy is used for sample alignment, and a lightweight Transformer is applied to model global spatial relationships. Each branch outputs a 64-dimensional feature vector, enabling efficient parallel modeling of spatiotemporal features in the IoV.

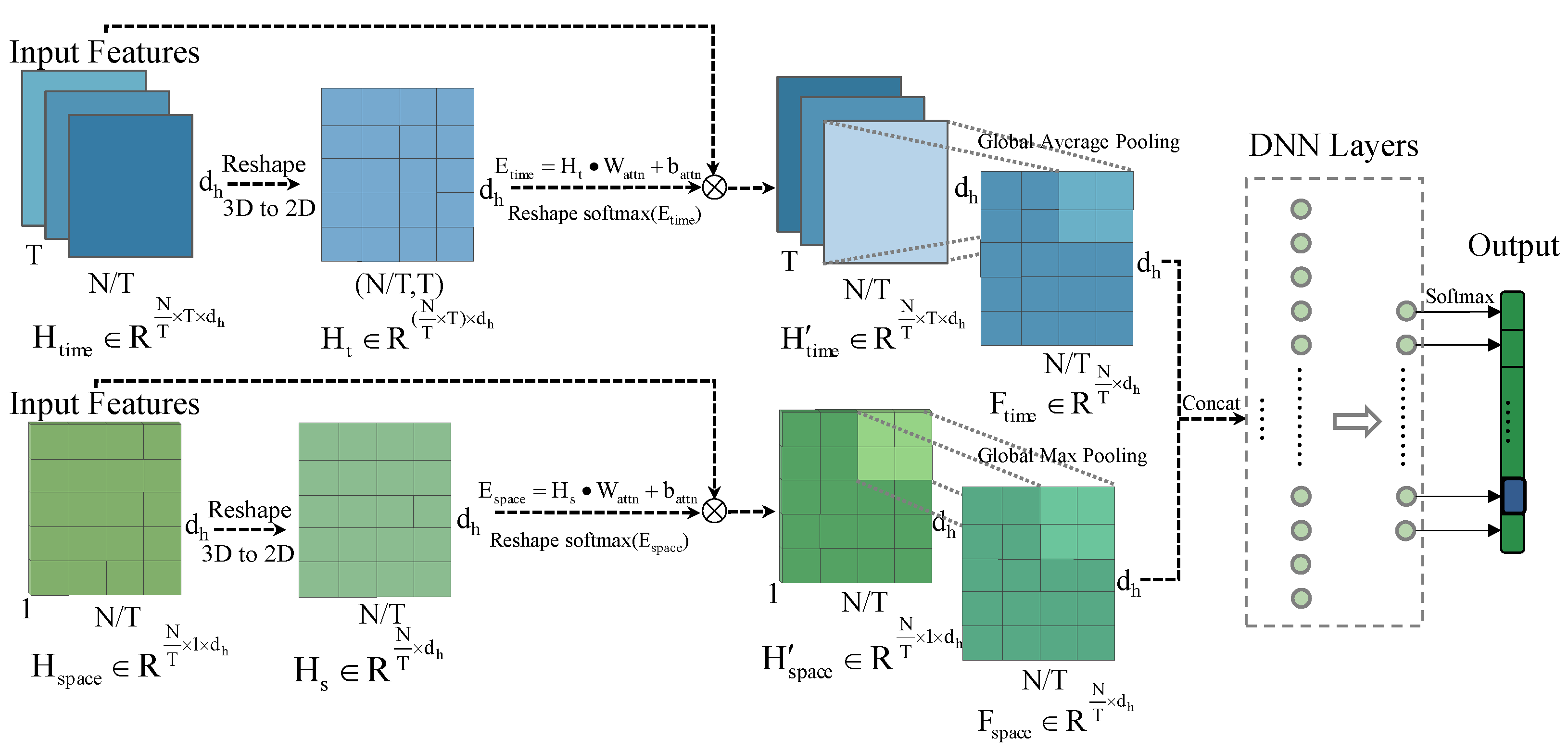

- An adaptive feature dimension weighting mechanism is designed. Each of the spatiotemporal dual branches employs a 64 × 64 feature fusion weight matrix to dynamically compute attention scores, enabling dynamic weighting at the dimensional level. A differentiated pooling strategy is then applied to the adaptively weighted output features, enhancing the representation of key features.

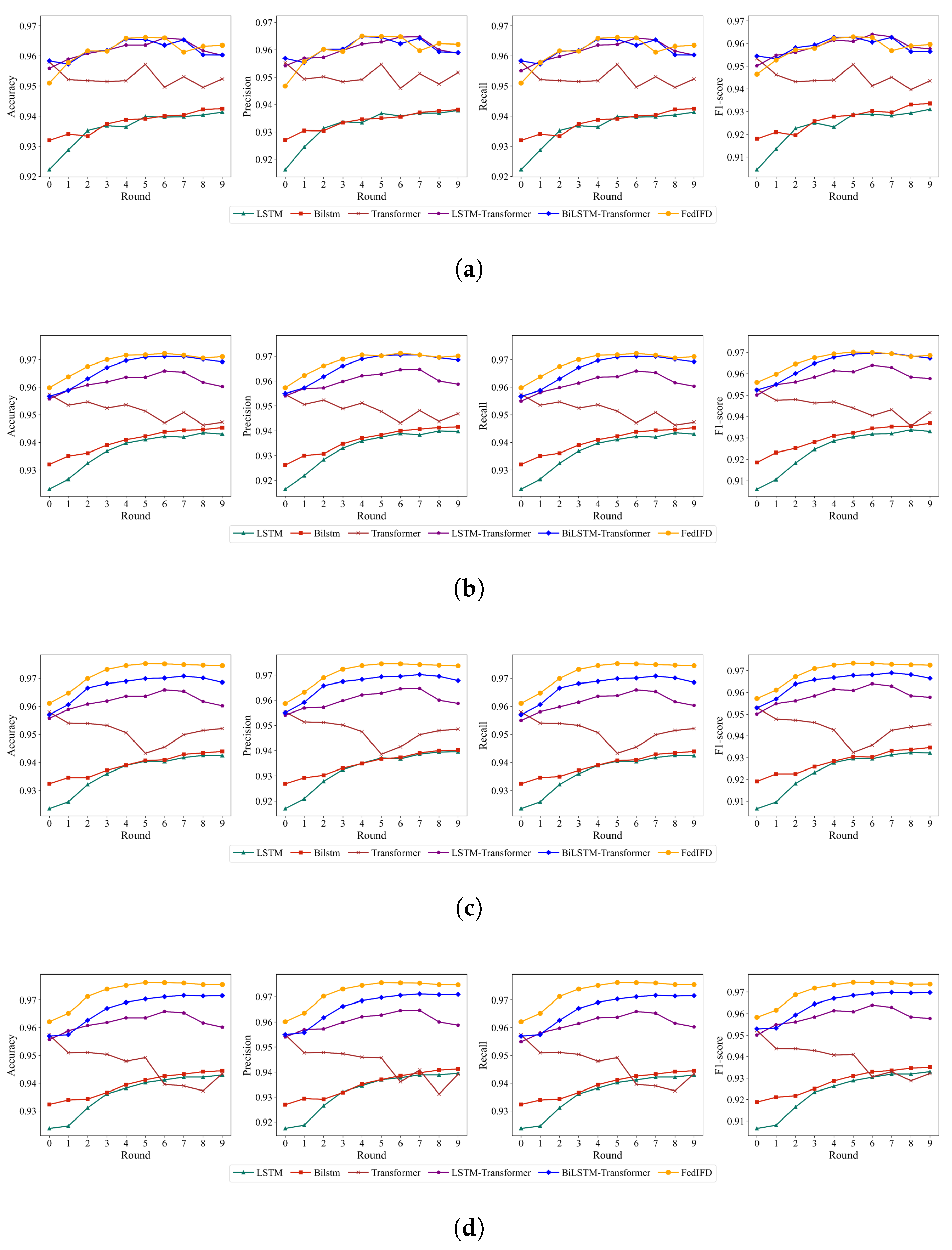

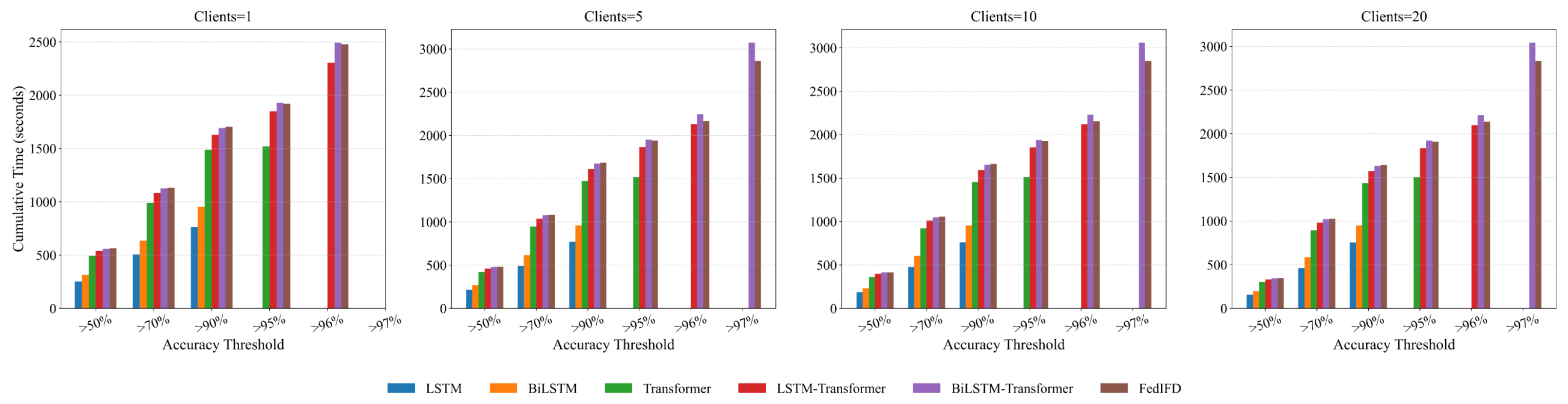

- Experimental results show that this method significantly improves performance compared to traditional attack detection methods in multi-client tests on the VeReMi dataset. The experimental accuracy reaches 97.8%, and the F1 score reaches 97.3%.
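
The windowing, sliding-average alignment, and dimension-level weighting described in the contributions above can be illustrated with a small, framework-free sketch. It is not the authors' implementation: the window length is left as a free parameter, the sliding average is read here as a per-window mean (one plausible interpretation), and the attention scores are assumed to be `softmax(W f)` for a square weight matrix `W` (64 × 64 in the paper, 2 × 2 in the toy demo). All function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def make_windows(records: Sequence[Vector], window: int) -> List[List[Vector]]:
    """Slice one vehicle's time-ordered feature rows into overlapping windows."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [list(records[i:i + window]) for i in range(len(records) - window + 1)]


def window_means(windows: Sequence[Sequence[Vector]]) -> List[Vector]:
    """Average each window per feature, so both branches see one row per window."""
    return [[sum(col) / len(win) for col in zip(*win)] for win in windows]


def softmax(values: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dimension_attention(features: Vector, weight: Sequence[Vector]) -> Vector:
    """Re-weight each feature dimension by scores softmax(W f) (assumed form)."""
    d = len(features)
    if len(weight) != d or any(len(row) != d for row in weight):
        raise ValueError("weight must be a d x d matrix")
    logits = [sum(w * f for w, f in zip(row, features)) for row in weight]
    scores = softmax(logits)
    return [s * f for s, f in zip(scores, features)]


if __name__ == "__main__":
    rows = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]  # toy (position, speed) rows
    wins = make_windows(rows, window=2)
    print(len(wins), window_means(wins))
    identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for a learned weight matrix
    print(dimension_attention([1.0, 2.0], identity))
```

In a trained model the weight matrix would be learned jointly with the BiLSTM and Transformer branches; the sketch only shows the data flow.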

2. Related Work

3. Proposed Method

3.1. FedIFD Framework

3.2. System Model

3.2.1. Feature Extraction of Time Series

3.2.2. Extraction of Spatial Features

3.2.3. Feature Weighting and Fusion

3.3. Overall Federated Learning Process

3.3.1. Q-FedCG [32]

3.3.2. FedIFD Algorithm

Algorithm 1. Federated Learning Framework for Vehicle Network Threat Detection

4. Experiments and Analysis

4.1. Overview of VeReMi Datasets

4.2. Dataset Preprocessing

- (1) Data Parsing and Label Alignment: First, key features such as vehicle position, speed, reception time, and signal strength are extracted from the vehicle trajectory JSON log files. Next, each record’s ‘messageID’ in the log files is used as a key and matched with the ‘messageID’ in the ‘GroundTruthJSONlog.json’ file to determine the attack type for each record, thus achieving label alignment. Finally, by calculating the dynamic changes in trajectory data between two frames, including position and speed variations, the relative motion relationships between vehicles are captured.

- (2)

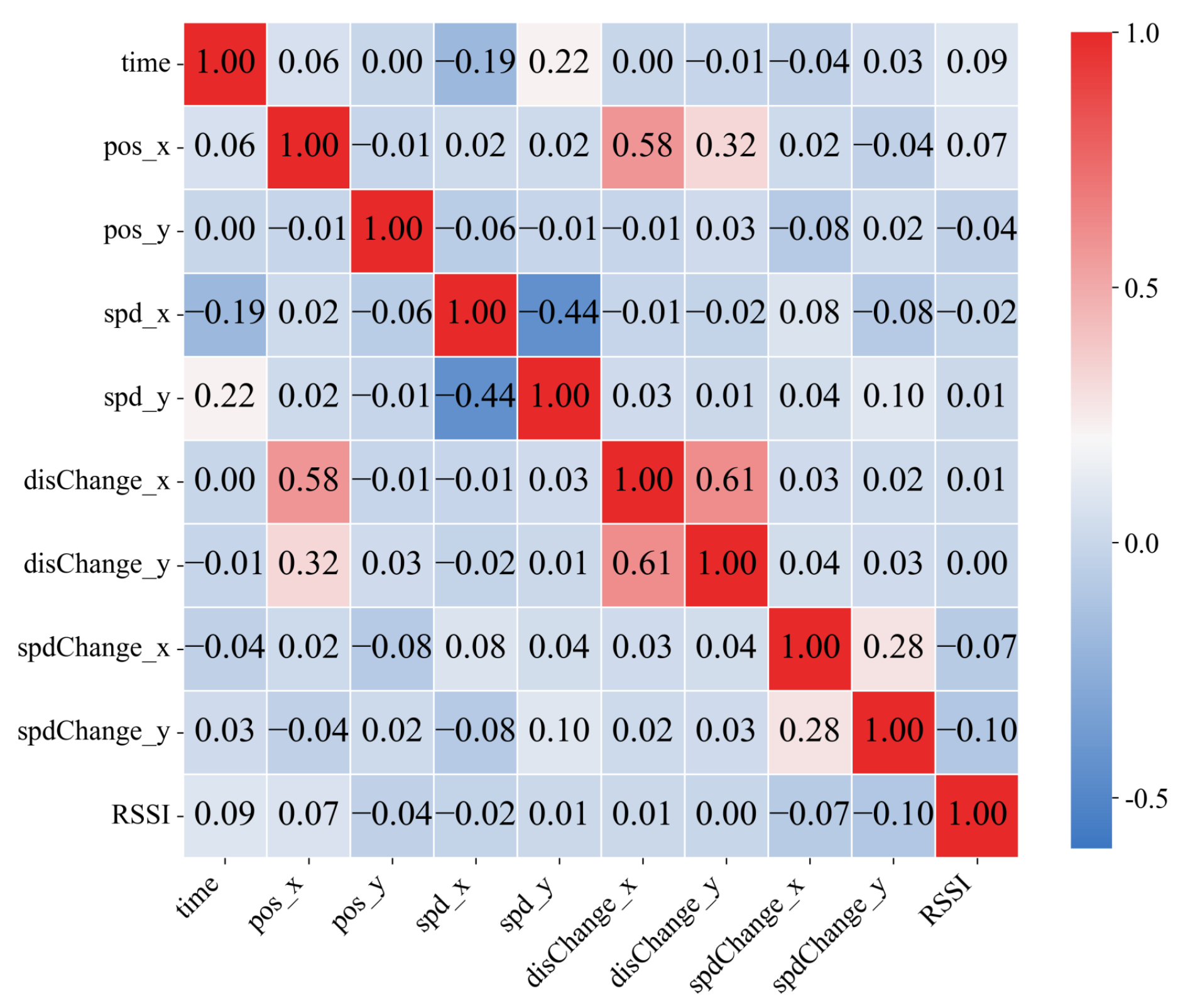

- Data Cleaning and Feature Selection: Missing values in the dataset are filled using mean imputation, duplicate records are removed, and outliers are corrected. In total, 32,557,534 records are retained, with the ratio of benign to attack records being approximately 8:2, and the proportions of the five attack types are approximately 9:9:10:7:8. Next, the Pearson correlation coefficient was used to analyze the linear relationships between key vehicle features extracted from the original log files. As shown in Figure 7, the absolute values of the correlation coefficients for all feature pairs are below 0.7, which is well below the commonly used threshold of 0.8 for feature redundancy elimination. Therefore, no highly correlated features needing removal were identified in this study. In the subsequent experiments, the retained key features demonstrated good discriminative ability and model performance.

- (3) Normalization and One-Hot Encoding: The Z-score normalization method is applied to adjust all features to zero mean and unit variance, and labels are one-hot encoded to meet the requirements for subsequent model training.
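
The preprocessing steps above can be sketched in plain Python as follows. This is an illustrative outline under stated assumptions, not the authors' pipeline: the ground-truth field name `attackerType` and all function names are hypothetical, only `messageID` and the 0.8 correlation threshold come from the text, and the label set follows the attack-label table of this article.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Optional, Sequence, Tuple

LABELS = [0, 1, 2, 4, 8, 16]  # benign plus the five attack labels


def align_labels(messages: List[dict], ground_truth: List[dict]) -> List[dict]:
    """Attach a label to each received message by matching on messageID."""
    truth = {g["messageID"]: g["attackerType"] for g in ground_truth}  # assumed field
    return [dict(m, label=truth[m["messageID"]])
            for m in messages if m["messageID"] in truth]


def impute_mean(values: Sequence[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the mean of the observed ones."""
    fill = mean(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; undefined (division by zero) for constant columns."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def redundant_pairs(columns: Dict[str, List[float]],
                    threshold: float = 0.8) -> List[Tuple[str, str]]:
    """List feature pairs whose absolute correlation reaches the threshold."""
    names = sorted(columns)
    return [(a, b) for i, a in enumerate(names) for b in names[i + 1:]
            if abs(pearson(columns[a], columns[b])) >= threshold]


def zscore(values: Sequence[float]) -> List[float]:
    """Scale one feature column to zero mean and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(label: int) -> List[int]:
    """Encode an attack label as a 6-dimensional indicator vector."""
    return [1 if label == known else 0 for known in LABELS]
```

Because the attack labels are not contiguous (0, 1, 2, 4, 8, 16), the one-hot encoding maps each label to its position in `LABELS` rather than using the label value as an index.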

4.3. Evaluation Metrics

4.4. Experiment Content

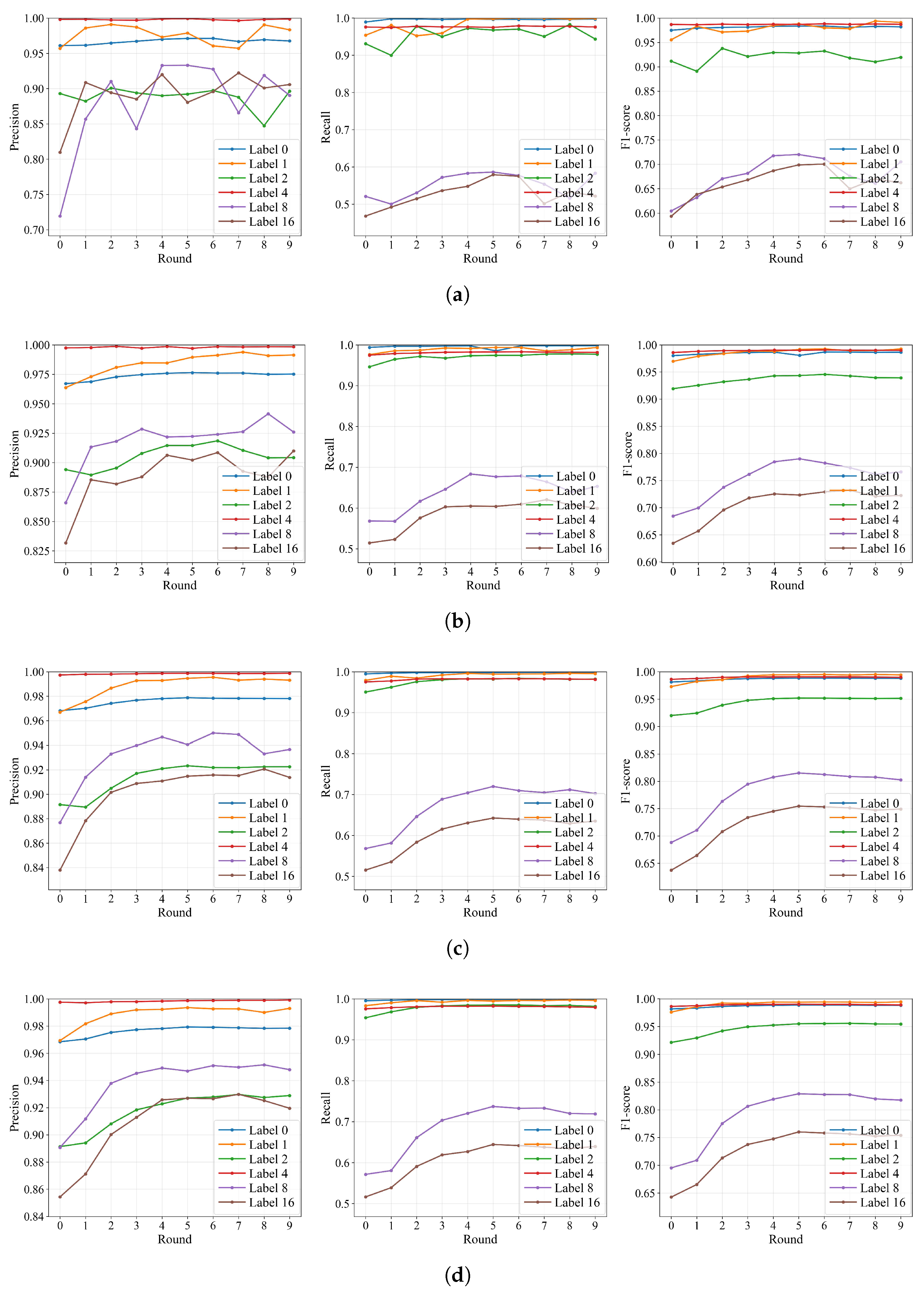

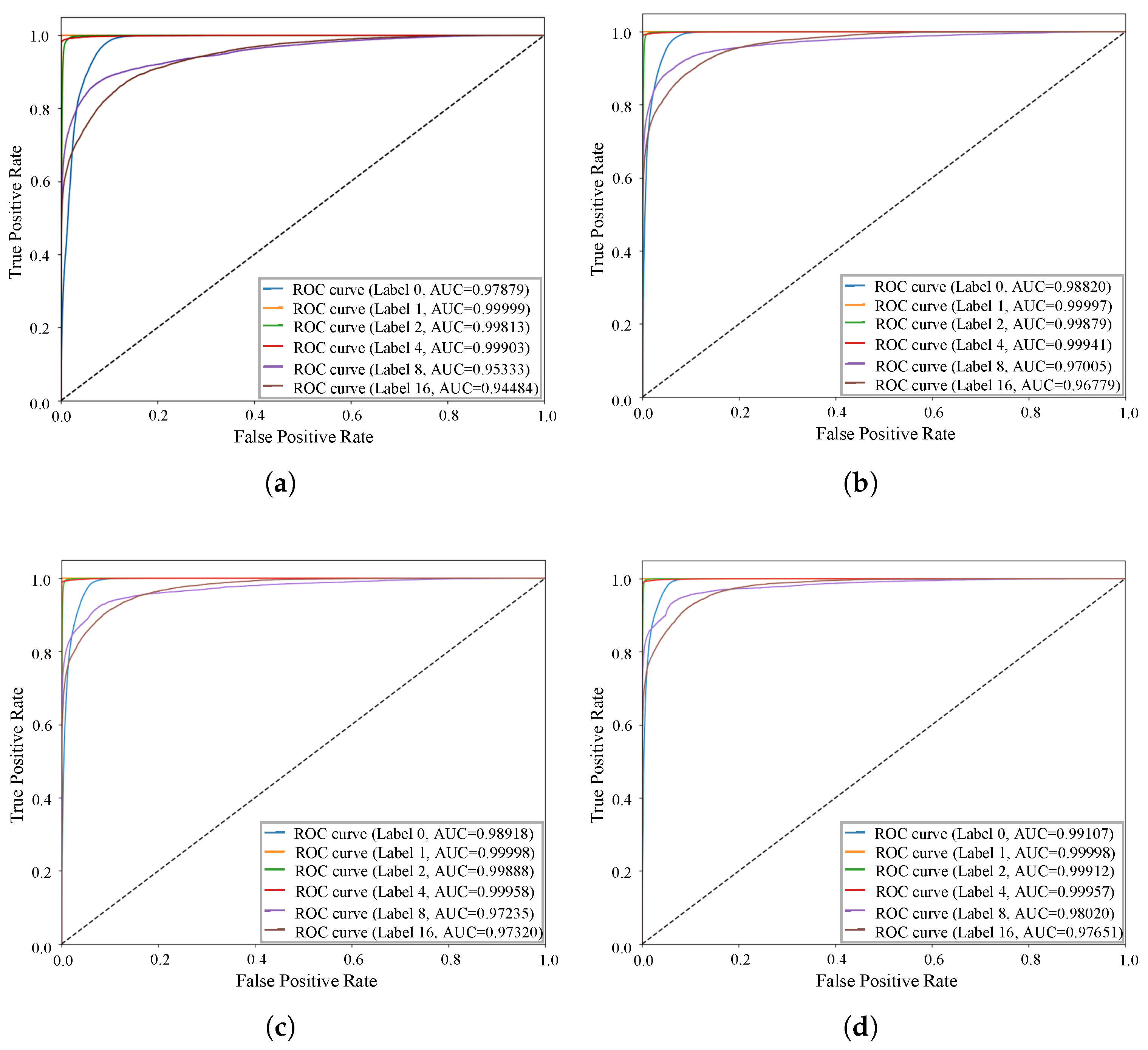

4.4.1. Performance for FedIFD

4.4.2. Ablation Experiment

4.4.3. Work Comparison

4.5. Limitations of the Study

- (1) Gap Between Experimental Assumptions and Real-World Conditions. First, for communication anomalies common in the Internet of Vehicles, such as packet loss, delays, node disconnections, and data corruption, this study assumes that vehicle nodes can eventually have their local training results aggregated into the global model through retries, data caching, or delayed uploads. If a node remains offline for an extended period, its update is excluded from the current global aggregation round and does not affect the overall aggregation workflow. In real deployments, however, a sustained high packet loss rate or severe latency can substantially degrade the global model’s detection performance, especially when attacks coincide with network problems, for example when an attacker exploits poor communication to avoid detection. This can impair the model’s ability to recognize complex attacks (such as Label 8 and Label 16). Second, the experiments use a single simulation dataset, VeReMi, to study five common attack types, and assume that data across clients are similar and of good quality. Simulation data cannot fully capture the complex dynamics, noise, and variety of real vehicular networks, where data and attack patterns may be highly imbalanced because of differing locations and driving behaviors. The experiments also cover only a limited number of attack types and do not include more complex or newer attacks such as Sybil and replay attacks, so the reported performance may not fully reflect how well the model works against real threats. To address these limitations, future research will incorporate real or hybrid vehicular network data and expand the experiments to cover more diverse, collaborative, and stealthy attack scenarios. This will help to systematically improve the practicality and robustness of our approach and better meet real-world application needs. We will also consider extending our work by using models such as CNN, GNN, and Graph Transformer as future baselines.

- (2) Analysis of Computational Efficiency and Resource Consumption. The FedIFD approach improves detection performance while balancing accuracy against computational cost, and it also improves training efficiency to some extent. However, the integrated multi-module structure inevitably increases overall computational complexity. On vehicle terminals or RSUs with limited computing resources, the added load may cause inference delays and constrain deployment. The trade-off between efficiency and accuracy must therefore account for the resource constraints of real deployment environments, and further validation on edge devices is still needed to evaluate efficiency in practical scenarios.

- (3) Privacy and Robustness Considerations. Although federated learning keeps raw data private, risks such as gradient inversion and membership inference attacks may still arise during collaborative training across clients. These attacks can be used to infer sensitive distribution features or whether an individual sample participated in training. In addition, malicious or compromised clients may launch Byzantine and poisoning attacks. These can contaminate the global model, resulting in significant performance degradation, manipulated predictions, or even leakage of private data. To address these issues, future work will focus on two directions. First, we will explore differential privacy to protect uploaded gradients: carefully tuned noise is added to mitigate gradient inversion and membership inference attacks while keeping training stable, thereby preventing the leakage of sensitive information from individual clients. Second, we will investigate a reputation-based mechanism to identify and exclude malicious nodes. The mechanism tracks client behavior, update quality, and consistency to compute trust scores, flags and isolates nodes with abnormally low or declining scores, and excludes their updates during aggregation to enhance robustness against Byzantine and poisoning attacks.
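
To make the aggregation behavior discussed in these limitations concrete, the following sketch shows one way a server could skip offline clients, exclude low-trust clients, and accept clipped, noised updates. It is a sketch under assumptions, not part of FedIFD: the sample-weighted average stands in for the paper's aggregation rule, and the `trust` field, the `min_trust` threshold of 0.5, and the clip-then-Gaussian-noise step are hypothetical placeholders for the future-work mechanisms named above.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class ClientUpdate:
    client_id: str
    weights: Optional[List[float]]  # None when the node was offline this round
    num_samples: int
    trust: float = 1.0              # hypothetical reputation score in [0, 1]


def clip_and_noise(update: Sequence[float], clip_norm: float,
                   sigma: float, rng: random.Random) -> List[float]:
    """Clip the update to an L2 norm bound, then add Gaussian noise."""
    norm = math.sqrt(sum(w * w for w in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [w * scale + rng.gauss(0.0, sigma * clip_norm) for w in update]


def aggregate(updates: Sequence[ClientUpdate], min_trust: float = 0.5) -> List[float]:
    """Sample-weighted average over clients that are online and trusted."""
    usable = [u for u in updates
              if u.weights is not None and u.trust >= min_trust]
    if not usable:
        raise ValueError("no usable client updates this round")
    total = sum(u.num_samples for u in usable)
    dim = len(usable[0].weights)
    return [sum(u.weights[i] * u.num_samples for u in usable) / total
            for i in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    round_updates = [
        ClientUpdate("v1", clip_and_noise([0.3, 0.4], 1.0, 0.01, rng), 100),
        ClientUpdate("v2", None, 80),                    # offline: skipped
        ClientUpdate("v3", [9.0, 9.0], 50, trust=0.1),   # low trust: excluded
    ]
    print(aggregate(round_updates))
```

The noise scale and trust threshold trade privacy and robustness against accuracy, so in practice they would need tuning against the detection metrics.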

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoV | Internet of Vehicles |
| FL | Federated Learning |
| BSM | Basic Safety Message |
| BiLSTM | Bidirectional Long Short-Term Memory |
| FDI | False Data Injection |
| ICT | Information and Communication Technology |
| GDPR | General Data Protection Regulation |
| RSU | Road-Side Unit |
| VANET | Vehicular Ad Hoc Network |
| RNN | Recurrent Neural Network |
| LSTM | Long Short-Term Memory |
| GRU | Gated Recurrent Unit |
| GAT | Graph Attention Network |
| CNN | Convolutional Neural Network |
| ReLU | Rectified Linear Unit |
| DNN | Deep Neural Network |
| FNN | Feed-forward Neural Network |
| non-IID | Non-Independent and Identically Distributed |
| HFL | Horizontal Federated Learning |
| CI | Confidence Interval |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the ROC Curve |
| TPR | True Positive Rate |
| FPR | False Positive Rate |
| ANN | Artificial Neural Network |
| MLP | Multilayer Perceptron |

References

1. Biroon, R.A.; Biron, Z.A.; Pisu, P. False data injection attack in a platoon of CACC: Real-time detection and isolation with a PDE approach. IEEE Trans. Intell. Transp. Syst. 2021, 23, 8692–8703.

2. He, N.; Ma, K.; Li, H.; Li, Y. Resilient self-triggered model predictive control of discrete-time nonlinear cyberphysical systems against false data injection attacks. IEEE Intell. Transp. Syst. Mag. 2023, 16, 23–36.

3. Ahmad, F.; Kurugollu, F.; Adnane, A.; Hussain, R.; Hussain, F. MARINE: Man-in-the-middle attack resistant trust model in connected vehicles. IEEE Internet Things J. 2020, 7, 3310–3322.

4. Chen, C.; Hui, Q.; Xie, W.; Wan, S.; Zhou, Y.; Pei, Q. Convolutional Neural Networks for forecasting flood process in Internet-of-Things enabled smart city. Comput. Netw. 2021, 186, 107744.

5. Mahmood, M.R.; Matin, M.A.; Sarigiannidis, P.; Goudos, S.K. A comprehensive review on artificial intelligence/machine learning algorithms for empowering the future IoT toward 6G era. IEEE Access 2022, 10, 87535–87562.

6. Laghrissi, F.; Douzi, S.; Douzi, K.; Hssina, B. Intrusion detection systems using long short-term memory (LSTM). J. Big Data 2021, 8, 65.

7. Nguyen, M.T.; Kim, K. Genetic convolutional neural network for intrusion detection systems. Future Gener. Comput. Syst. 2020, 113, 418–427.

8. Chen, R.; Chen, X.; Zhao, J. Private and utility enhanced intrusion detection based on attack behavior analysis with local differential privacy on IoV. Comput. Netw. 2024, 250, 110560.

9. Yan, H.; Ma, X.; Pu, Z. Learning dynamic and hierarchical traffic spatiotemporal features with transformer. IEEE Trans. Intell. Transp. Syst. 2021, 23, 22386–22399.

10. Wang, Z.; Wang, Q.; Deng, W.; Guo, G. Learning multi-granularity temporal characteristics for face anti-spoofing. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1254–1269.

11. Taslimasa, H.; Dadkhah, S.; Neto, E.C.P.; Xiong, P.; Ray, S.; Ghorbani, A.A. Security issues in Internet of Vehicles (IoV): A comprehensive survey. Internet Things 2023, 22, 100809.

12. Regulation, P. Regulation (EU) 2016/679 of the European Parliament and of the Council. Regulation 2016, 679, 2016.

13. Zeng, Y.; Qiu, M.; Zhu, D.; Xue, Z.; Xiong, J.; Liu, M. DeepVCM: A deep learning based intrusion detection method in VANET. In Proceedings of the 2019 IEEE 5th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), Washington DC, USA, 27–29 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 288–293.

14. Chhabra, R.; Singh, S.; Khullar, V. Privacy enabled driver behavior analysis in heterogeneous IoV using federated learning. Eng. Appl. Artif. Intell. 2023, 120, 105881.

15. Ilango, H.S.; Ma, M.; Su, R. A misbehavior detection system to detect novel position falsification attacks in the internet of vehicles. Eng. Appl. Artif. Intell. 2022, 116, 105380.

16. Anyanwu, G.O.; Nwakanma, C.I.; Lee, J.M.; Kim, D.S. Novel hyper-tuned ensemble random forest algorithm for the detection of false basic safety messages in internet of vehicles. ICT Express 2023, 9, 122–129.

17. Zhu, K.; Chen, Z.; Peng, Y.; Zhang, L. Mobile edge assisted literal multi-dimensional anomaly detection of in-vehicle network using LSTM. IEEE Trans. Veh. Technol. 2019, 68, 4275–4284.

18. Zhou, H.; Kang, L.; Pan, H.; Wei, G.; Feng, Y. An intrusion detection approach based on incremental long short-term memory. Int. J. Inf. Secur. 2023, 22, 433–446.

19. He, C.; Xu, X.; Jiang, H.; Jiang, J.; Chen, T. Cyber-attack detection for lateral control system of cloud-based intelligent connected vehicle based on BiLSTM-Attention network. Measurement 2025, 247, 116740.

20. Nguyen, T.P.; Nam, H.; Kim, D. Transformer-based attention network for in-vehicle intrusion detection. IEEE Access 2023, 11, 55389–55403.

21. Gu, K.; Ouyang, X.; Wang, Y. Malicious Vehicle Detection Scheme Based on Spatio-Temporal Features of Traffic Flow Under Cloud-Fog Computing-Based IoVs. IEEE Trans. Intell. Transp. Syst. 2024, 25, 11534–11551.

22. Li, X.; Hu, L.; Lu, Z. Detection of false data injection attack in power grid based on spatial-temporal transformer network. Expert Syst. Appl. 2024, 238, 121706.

23. Cheng, P.; Han, M.; Li, A.; Zhang, F. STC-IDS: Spatial–temporal correlation feature analyzing based intrusion detection system for intelligent connected vehicles. Int. J. Intell. Syst. 2022, 37, 9532–9561.

24. Xing, L.; Wang, K.; Wu, H.; Ma, H.; Zhang, X. Intrusion detection method for internet of vehicles based on parallel analysis of spatio-temporal features. Sensors 2023, 23, 4399.

25. Uprety, A.; Rawat, D.B.; Li, J. Privacy preserving misbehavior detection in IoV using federated machine learning. In Proceedings of the 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

26. Lv, P.; Xie, L.; Xu, J.; Wu, X.; Li, T. Misbehavior detection in vehicular ad hoc networks based on privacy-preserving federated learning and blockchain. IEEE Trans. Netw. Serv. Manag. 2022, 19, 3936–3948.

27. Bonfim, K.A.; Dutra, F.D.S.; Siqueira, C.E.T.; Meneguette, R.I.; Dos Santos, A.L.; Júnior, L.A.P. Federated learning-based architecture for detecting position spoofing in basic safety messages. In Proceedings of the 2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring), Florence, Italy, 20–23 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

28. Huang, H.; Hu, Z.; Wang, Y.; Lu, Z.; Wen, X.; Fu, B. Train a central traffic prediction model using local data: A spatio-temporal network based on federated learning. Eng. Appl. Artif. Intell. 2023, 125, 106612.

29. Yuan, X.; Chen, J.; Yang, J.; Zhang, N.; Yang, T.; Han, T.; Taherkordi, A. Fedstn: Graph representation driven federated learning for edge computing enabled urban traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2022, 24, 8738–8748.

30. Li, Z.; Fu, Y.; Tian, M.; Li, C.; Yu, F.R.; Cheng, N. FedSTDN: A Federated Learning-Enabled Spatial-Temporal Prediction Model for Wireless Traffic Prediction. IEEE Trans. Mob. Comput. 2025, 24, 8945–8958.

31. Tao, L.; Xiyang, Z. Spatial-temporal cooperative in-vehicle network intrusion detection method based on federated learning. IEEE Access 2025, 13, 97194–97207.

32. Xu, Y.; Jiang, Z.; Xu, H.; Wang, Z.; Qian, C.; Qiao, C. Federated learning with client selection and gradient compression in heterogeneous edge systems. IEEE Trans. Mob. Comput. 2023, 23, 5446–5461.

33. Van Der Heijden, R.W.; Lukaseder, T.; Kargl, F. Veremi: A dataset for comparable evaluation of misbehavior detection in vanets. In Proceedings of the Security and Privacy in Communication Networks: 14th International Conference, SecureComm 2018, Singapore, 8–10 August 2018; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2018; pp. 318–337.

34. Mansouri, F.; Tarhouni, M.; Alaya, B.; Zidi, S. A distributed intrusion detection framework for vehicular ad hoc networks via federated learning and blockchain. Ad Hoc Netw. 2025, 167, 103677.

35. Ahsan, S.I.; Legg, P.; Alam, S. Privacy-preserving intrusion detection in software-defined VANET using federated learning with BERT. arXiv 2024, arXiv:2401.07343.

36. Campos, E.M.; Hernandez-Ramos, J.L.; Vidal, A.G.; Baldini, G.; Skarmeta, A. Misbehavior detection in intelligent transportation systems based on federated learning. Internet Things 2024, 25, 101127.

| Attack Label | Attack Type | Description |
|---|---|---|
| 0 | Benign | Legitimate vehicle |
| 1 | Constant attack | Vehicle transmits a fixed position instead of its actual position |
| 2 | Constant offset attack | Vehicle adds a fixed offset to its actual position |
| 4 | Random attack | Vehicle transmits a random position within the simulation area |
| 8 | Random offset attack | Vehicle transmits a random position within a preconfigured rectangular area around the vehicle |
| 16 | Eventual stop attack | Vehicle behaves normally at first, then repeatedly transmits its current position |

| Category | Configuration Item | Specification |
|---|---|---|
| Hardware | Operating System | Ubuntu 22.04 |
| | CPU | AMD EPYC 7T83 |
| | GPU | NVIDIA GeForce RTX 4090 |
| Software | Python | 3.8.19 |
| | Deep Learning Framework | TensorFlow 2.11.0 |
| | Federated Learning Framework | Flower framework 1.11.1 |

| Metric | Mean | 95% CI |
|---|---|---|
| Accuracy | 0.978 | [0.974, 0.982] |
| Precision | 0.975 | [0.970, 0.980] |
| Recall | 0.977 | [0.972, 0.982] |
| F1 score | 0.973 | [0.968, 0.978] |
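
As a reference for how the four metrics in this table are typically obtained from predictions, the sketch below computes accuracy together with macro-averaged precision, recall, and F1 for a multi-class problem. The averaging scheme is an assumption (this text does not state which one the authors used), and the function name is hypothetical.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int],
                           y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(labels)
    return {
        "accuracy": sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    # Toy labels drawn from the attack-label set; not results from the paper.
    print(classification_metrics([0, 0, 1, 16], [0, 1, 1, 16]))
```

Under macro averaging, the mean F1 is the average of per-class F1 scores and need not equal the harmonic mean of the averaged precision and recall.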

| Method | Accuracy | Recall | Precision |
|---|---|---|---|
| ANN [25] | 0.80 | 0.80 | 0.75 |
| CW-RNN [34] | 0.82 | 0.82 | 0.77 |
| FL-BERT [35] | 0.84 | 0.85 | 0.84 |
| MLP [36] | 0.93 | 0.93 | 0.92 |
| FedIFD | 0.97 | 0.97 | 0.97 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, H.; Yang, J.; Sun, J.; Wang, Z.; Liu, Q.; Luo, S. FedIFD: Identifying False Data Injection Attacks in Internet of Vehicles Based on Federated Learning. Big Data Cogn. Comput. 2025, 9, 246. https://doi.org/10.3390/bdcc9100246
