1. Introduction

As digital platforms become ever more entangled with human lives, cybersecurity and user privacy have never been more important [1]. From financial information and business strategies to political information and intellectual property, businesses and organizations share sensitive information almost every second, making them easy targets for cyber threats.

Collecting sensitive and private information without permission is a major security risk, and the value of that information makes attackers eager to devise new ways of acquiring it. Among the many cyber threats, phishing has remained one of the most persistent and challenging in recent years. Phishing emails and malicious websites are crafted specifically to steal private and sensitive information, leading to breaches of confidentiality.

To combat these advanced threats, particularly well-crafted phishing attempts, organizations are concentrating on deploying technologies such as artificial intelligence (AI) to strengthen their cybersecurity protections. As cyber-attacks escalate, AI-based threat detection stands out by providing faster and more accurate responses than traditional methods.

AI-based systems can process huge amounts of data to recognize and predict possible attacks, greatly strengthening cybersecurity defenses. This enables quick automated action to mitigate a threat, which minimizes the chances of severe damage and allows individuals and organizations to continue operating safely in the face of cybercriminals [2].

Harnessing AI can simplify the complexities of cybersecurity, giving companies a dynamic and resilient defense against cyber threats, including phishing attacks. Integrating AI into cybersecurity architectures would reduce the exposure of individuals and organizations to phishing attacks and information leakage [3].

In practice, for example, AI applications use machine learning algorithms to analyze the content and structure of an email and identify suspicious patterns within it; once a message is classified as phishing, it can be prevented from reaching the user’s inbox.
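The kind of content analysis described here can be illustrated with a minimal multinomial Naive Bayes text classifier written in standard-library Python. This is a sketch under stated assumptions: Naive Bayes is chosen only for brevity and is not the model used by any study cited in this paper, and the six training messages are invented examples rather than a real phishing dataset.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and keep runs of letters/digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesFilter:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing.
    Label 1 = phishing, label 0 = legitimate."""

    def fit(self, texts, labels):
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[0]) | set(self.word_counts[1])
        return self

    def log_score(self, text, label):
        # log P(label) + sum over tokens of log P(token | label)
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        total = sum(self.word_counts[label].values())
        for token in tokenize(text):
            count = self.word_counts[label][token]
            score += math.log((count + 1) / (total + len(self.vocab)))
        return score

    def predict(self, text):
        return max((0, 1), key=lambda label: self.log_score(text, label))


# Toy, hypothetical training messages (not data from this study).
texts = [
    "urgent verify your account password now",
    "your account is suspended click here to verify",
    "claim your prize now click the link",
    "meeting notes attached for tomorrow",
    "lunch on friday with the team",
    "please review the attached project report",
]
labels = [1, 1, 1, 0, 0, 0]
clf = NaiveBayesFilter().fit(texts, labels)
print(clf.predict("urgent click here to verify your password"))  # 1
print(clf.predict("please review the meeting notes"))            # 0
```

A production filter would train on thousands of labeled messages and typically use richer features, but the scoring logic (prior plus smoothed per-token likelihoods) is the same.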

Phishing remains a major threat because it exploits human weaknesses, despite advances in AI-driven preventive cybersecurity. Moreover, AI is a double-edged sword that offers opportunities but also poses threats, especially for cybersecurity and user privacy. On one side, AI is a key component of phishing detection; on the other, many criminals use AI to create more convincing phishing attacks.

AI systems use ICT and data collection methods such as questionnaires to improve their learning and adaptation functions. If such inputs can be gathered through different ICT tools, they may enhance the ability of AI systems to detect phishing attacks, thereby improving their performance in detecting cyber abuse. This helps advance defenses on the cyber front and reduces the risks that phishing poses in the internet age.

Cybercriminals often try to deceive people by sending fake emails or creating fake websites to steal important information, which can cause financial loss and security problems. Researchers are now using AI to fight phishing: AI systems can quickly analyze large amounts of data to identify phishing tricks and unusual activities more effectively than older methods. Ref. [4] emphasizes that educating people about phishing is key to stopping these attacks. Organizations can enhance their cybersecurity by training employees to recognize phishing attempts and encouraging them to report these threats. Combining advanced AI tools with human awareness creates a robust security strategy, making it more difficult for phishing attacks to succeed.

Phishing attacks are becoming more sophisticated by exploiting human trust and using social engineering tactics, making them harder to detect. Ref. [5] proposes an AI-driven solution that continuously learns and adapts to new phishing techniques. This intelligent system strengthens cybersecurity through real-time detection and adaptive learning, providing better protection for individuals and organizations. The ultimate aim is to establish a flexible and proactive defense against phishing scams, reducing their impact and bolstering security.

Traditional detection methods, which rely on blacklists and predefined rules, are no longer effective against evolving phishing techniques. To tackle this issue, researchers have explored various AI-based models to detect phishing websites more accurately. Among these, the Gradient Boosting Classifier evaluated in [6] proved to be the most effective, achieving 96.6% accuracy and minimizing errors. This high accuracy significantly strengthens cybersecurity by reducing the risk of phishing attacks, making AI an essential tool for protecting users from online scams.

However, recent studies by [7,8] indicate that some AI-based detection models struggle with identifying highly sophisticated phishing attempts designed to evade security measures. While AI has made significant progress, existing models still have limitations, particularly in adapting to new and evolving threats. There is a need for further research to assess how AI-based phishing detection aligns with human factors, regulatory frameworks, and educational initiatives to create a comprehensive defense strategy. This highlights a gap in phishing detection that requires further investigation.

This study aims to examine the role of AI-based phishing detection systems in cybersecurity education by assessing students’ awareness, trust, and response to phishing threats. By analyzing students’ awareness and trust in AI-driven solutions, this research seeks to identify gaps in cybersecurity knowledge and potential limitations of current detection methods. This study will focus on college students, assessing their ability to recognize and handle phishing attempts through a questionnaire. Additionally, it will assess students’ perspectives on AI-based phishing detection systems, examining their perceived strengths, weaknesses, and reliability in real-world scenarios.

Aims and Scope

This study aims to evaluate student awareness of phishing attacks and their trust in AI-based phishing detection systems. While a variety of artificial intelligence models have been proposed in the literature to combat phishing, there is limited research assessing how well these solutions align with user understanding, perceptions, and behaviors. The scope of the study includes (1) a review of recent AI-based phishing detection techniques to provide technical context, and (2) a quantitative analysis of student awareness and perceptions through structured questionnaires. By combining a technical overview with empirical user data, the study seeks to bridge the gap between algorithmic design and human-centered cybersecurity strategies.

This study is structured around three research hypotheses: H1: University students possess moderate to high awareness of phishing threats. H2: Students express moderate to high trust in AI-based phishing detection tools. H3: Exposure to cybersecurity education is positively associated with phishing detection accuracy. These hypotheses guide the study’s empirical investigation and aim to bridge the gap between technical advances in phishing detection and real-world user perception, especially within a digitally active but often under-trained demographic—university students.

2. Literature Review

Phishing attacks are a major issue in cybersecurity. Scammers impersonate trusted companies to deceive people into giving away sensitive information, often using fake emails, websites, or links to steal personal data. They frequently change their methods to avoid detection by security systems, and researchers such as [2,9] point out that traditional security measures often struggle to keep up with these new tactics.

2.1. AI and Machine Learning in Phishing Detection

AI and machine learning have made it easier to detect phishing by quickly identifying unusual patterns and suspicious activities. However, some highly advanced phishing methods still evade AI-based detection. Research by [10] highlights that phishing detection must integrate both AI-based security systems and human awareness training to be truly effective. It is therefore important both to develop stronger security systems and to educate people about these threats.

2.2. Spear Phishing and Targeted Attacks

Spear phishing is a dangerous cyber threat that targets specific individuals or organizations with highly personalized lures. Unlike regular phishing, these attacks combine carefully crafted emails, social engineering, and malware to deceive victims. Because they appear more genuine, they are harder to detect and can slip past standard security systems. Although AI and machine learning provide some defense, attackers constantly change their methods to avoid detection. Ref. [11] notes that effectively fighting spear phishing requires advanced security technology, user education, stronger identity verification, and strict legal policies. Refs. [12,13] show that the Social Engineering Attack Lifecycle (SEAL) framework clarifies how phishing attacks operate, and they emphasize the importance of user training in reducing these threats. Continuous research and collaboration are crucial to staying ahead of these ever-changing threats.

2.3. ICT and Behavioral Frameworks in Cybersecurity

In light of the research above, it is important to understand how Information and Communication Technology (ICT) can strengthen cybersecurity against phishing attacks. As more people communicate and conduct their activities online, cybercriminals exploit weak spots in ICT to create complex phishing scams. Enhancing security technologies, such as AI for threat detection and robust authentication methods, can help minimize these risks. The study by [14] is grounded in Protection Motivation Theory (PMT), which explains how perceived threats and self-efficacy influence individuals’ decisions to adopt protective behaviors against phishing scams. The present study examines how advancements in ICT can help improve phishing prevention, safeguard user data, and bolster overall cybersecurity measures.

2.4. Awareness of Phishing and the Role of Education

Previous studies have shown that human error remains a leading cause of successful phishing attacks. According to the 2023 Verizon Data Breach Investigations Report, over 80% of breaches involve some element of social engineering, with phishing being the most prevalent tactic. Studies by [15,16] emphasize that while technological defenses are improving, user awareness and behavioral training remain critical. University students are increasingly targeted due to their high digital engagement and often limited exposure to formal cybersecurity education. These trends justify the need to assess awareness levels and explore how students perceive and interact with AI-based defense tools.

2.5. Comparative Review of Recent AI-Based Detection Research

Table 1 presents a comparative overview of recent advancements in AI-based phishing and spear-phishing detection methods. The selected studies address a diverse range of research problems, from the lack of interpretability in detection models to the challenges posed by increasingly sophisticated, AI-generated phishing attacks.

Table 1.

Comparative Summary of AI-Based Phishing Detection Studies.

| Title | Research Problem | Methodology | Key Contributions |
|---|---|---|---|
| An Explainable Transformer-based Model for Phishing Email Detection [17] | Lack of interpretability in phishing detection models | Fine-tuned DistilBERT, LIME, Transformer Interpret | Achieved accurate and explainable email phishing detection |
| Prompted Contextual Vectors for Spear-Phishing Detection [18] | Ineffectiveness of traditional vectorization for spear-phishing | Prompt-based document embeddings using LLMs | Achieved 91% F1 score in spear-phishing classification |
| Advancing Phishing Email Detection: A Comparative Study of Deep Learning Models [19] | Comparative performance of DL models in phishing detection | Empirical evaluation of CNN, LSTM, GRU, BERT | Identified CNN as most effective model for phishing email detection |
| EXPLICATE: Enhancing Phishing Detection through Explainable AI [20] | Black-box nature of phishing classifiers | SHAP, LIME with ML classifiers and GPT-3 | Enhanced transparency and trust in AI detection systems |
| Evaluating LLMs’ Capability to Launch Fully Automated Spear Phishing Campaigns [21] | Risks of AI being used for offensive phishing | Prompt-engineering of LLMs to simulate attacks | Found AI-generated attacks comparable to human phishing |
| RAIDER: Reinforcement-aided Spear Phishing Detector [22] | Feature selection inefficiency in spear phishing detection | Reinforcement learning with SVM and RF classifiers | Improved accuracy through reward-based feature selection |
| Spear Phishing With Large Language Models [23] | Scalability of targeted phishing using AI | Prompt-engineering with GPT-3 | Demonstrated feasibility of highly personalized phishing at scale |
| Assessing AI vs. Human-Authored Spear Phishing SMS Attacks [24] | Human vs. AI effectiveness in social engineering | Experimental study with participant surveys | Found AI-written SMS attacks nearly as effective as human-written |
| Unveiling the Efficacy of AI-based Algorithms in Phishing Attack Detection [25] | Optimal algorithm selection for phishing detection | Evaluation of 14 AI algorithms (e.g., CNN, MLP, AdaBoost) | Identified top-performing algorithms for phishing detection |
| A Systematic Review of Deep Learning Techniques for Phishing Email Detection [26] | Lack of synthesis in DL-based phishing research | Literature review, taxonomy construction | Provided a taxonomy and trends in DL-based detection |

Methodologies span from deep learning approaches such as CNNs and LSTMs to novel applications of transformers, reinforcement learning, and explainable AI techniques like SHAP and LIME. Several works also investigate the offensive potential of large language models (LLMs) in crafting convincing phishing content. This comparison highlights the growing complexity of phishing threats and the corresponding evolution of AI-based defense mechanisms, underscoring the importance of combining technical solutions with interpretability, scalability, and user-centric design.

Table 1 also reveals a significant research focus on the realism and personalization of phishing content and on its detection, particularly through advanced natural language processing (NLP) models and LLMs. Several studies demonstrate that AI-generated phishing content can closely mimic human-authored messages, making detection more challenging and necessitating more sophisticated detection systems. Additionally, the integration of user behavior analysis, reinforcement learning, and explainable AI into detection pipelines shows a trend toward more adaptive and transparent security solutions.

This evolving landscape suggests that future research should continue to explore hybrid approaches that combine automated intelligence with human awareness training, policy enforcement, and real-time feedback mechanisms to stay ahead of emerging phishing tactics. Although the primary focus of this study is on student awareness and trust in AI-based detection, Table 1 provides a comparative review of recent AI models for phishing detection. This overview is intended to offer technical context and illustrate the complexity and diversity of solutions that students may encounter or evaluate.

3. Materials and Methods

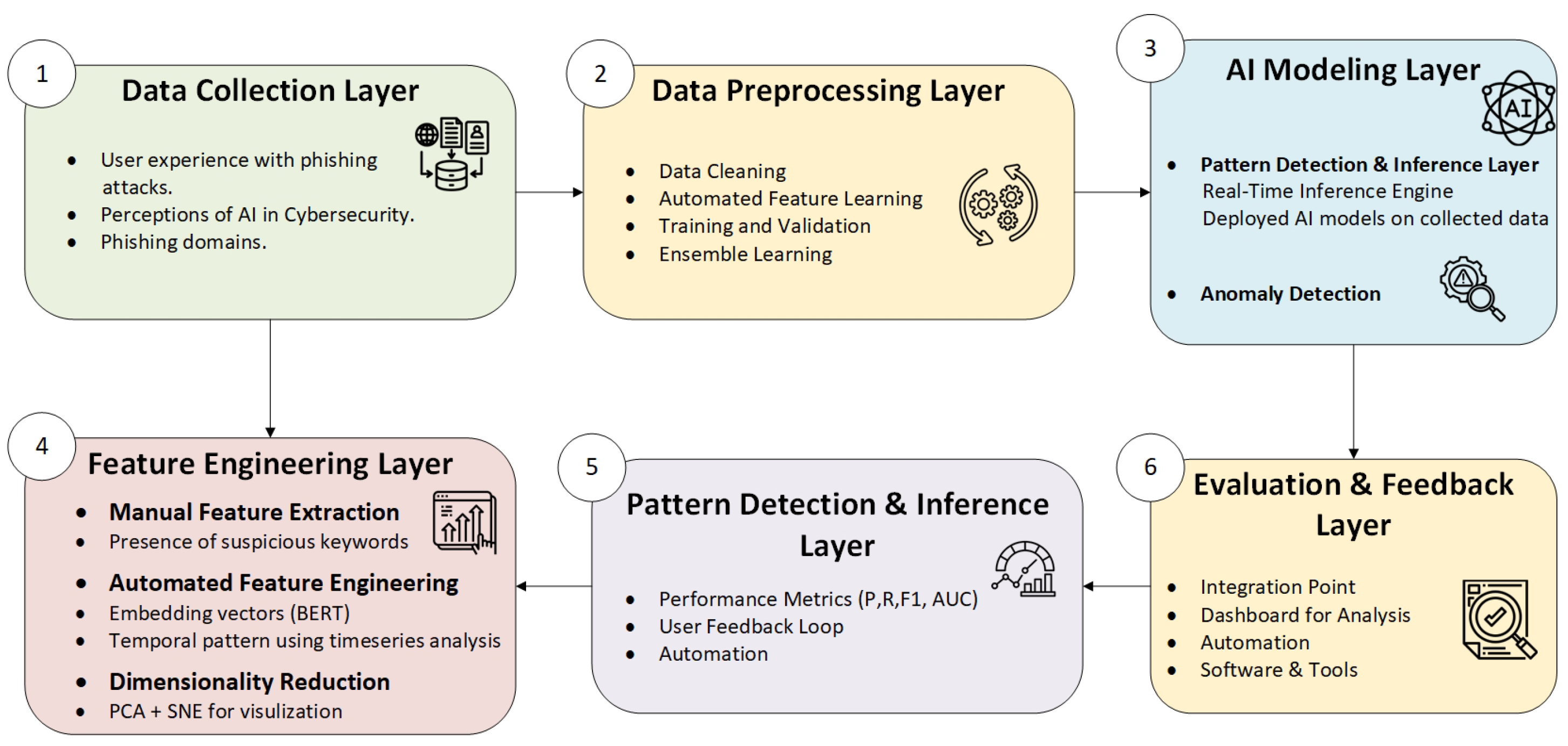

This section provides an in-depth description of the proposed AI-based phishing detection framework. The proposed architecture, shown in Figure 1, is an original contribution of this study. It outlines a modular, multi-layered phishing detection framework that integrates technical analysis with user feedback and deployment considerations. The system is composed of seven main layers, each tailored to handle specific functions that collectively contribute to accurate, scalable, and interpretable phishing detection.

The Data Collection Layer is responsible for aggregating a wide array of data from multiple sources to ensure diversity and representativeness. This includes static phishing datasets, dynamic email traffic logs, user interaction histories (such as mouse movements, click behavior, and response time), system-generated reports, and threat intelligence feeds from cybersecurity platforms. Additionally, surveys and questionnaires are used to incorporate human-centric insights and behavioral data into the system.

The Data Preprocessing Layer ensures data integrity and readiness by performing a series of transformations. Raw data often contain noise, inconsistencies, or irrelevant features. This layer handles missing value imputation, normalization of textual and numerical attributes, tokenization of emails and URLs, stop-word removal, stemming or lemmatization for textual content, and data augmentation techniques to enrich underrepresented phishing samples. The preprocessed data is then labeled and partitioned for training, testing, and validation.
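Two of these preprocessing steps, tokenizing URLs and email text and partitioning labeled samples, can be sketched as follows. The stop-word list, the 70/15/15 train/validation/test proportions, the fixed random seed, and all function names are illustrative assumptions rather than settings reported in this study. The split is stratified, meaning each class is shuffled and divided separately, so every partition keeps roughly the original share of phishing samples.

```python
import random
import re

# Illustrative stop-word list (an assumption; real pipelines use fuller lists).
STOP_WORDS = {"the", "a", "an", "to", "of", "and", "is", "your", "for", "on"}


def tokenize_url(url):
    """Split a URL on common delimiters such as ':', '/', '.', '?', '=', '-'."""
    return [t for t in re.split(r"[:/.?=&\-_@]+", url.lower()) if t]


def tokenize_email(text):
    """Lowercase, keep alphanumeric tokens, and remove stop words."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower())
            if t not in STOP_WORDS]


def stratified_split(samples, labels, train_frac=0.7, val_frac=0.15, seed=42):
    """Split (sample, label) pairs into train/validation/test sets, shuffling
    each class separately so every partition keeps the class balance.
    Whatever remains after the train and validation shares becomes the test set."""
    rng = random.Random(seed)
    by_label = {}
    for sample, label in zip(samples, labels):
        by_label.setdefault(label, []).append((sample, label))
    train, val, test = [], [], []
    for items in by_label.values():
        rng.shuffle(items)
        n_train = round(len(items) * train_frac)
        n_val = round(len(items) * val_frac)
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]
    return train, val, test


print(tokenize_url("http://secure-login.example.com/verify?id=123"))
# ['http', 'secure', 'login', 'example', 'com', 'verify', 'id', '123']
```

Stratifying matters here because phishing samples are often the minority class; a purely random split could leave a small validation or test set with too few phishing examples to evaluate recall reliably.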

The Feature Engineering Layer serves as a bridge between raw data and intelligent learning. It combines domain knowledge with algorithmic techniques to extract informative features that enhance model learning. Manual feature extraction focuses on characteristics such as domain age, link obfuscation, sender IP reputation, lexical anomalies, and the presence of urgent call-to-action phrases. Automated feature generation uses techniques such as Term Frequency-Inverse Document Frequency (TF-IDF), n-gram modeling, Word2Vec, and contextual embeddings from transformer-based NLP models. Dimensionality reduction techniques such as PCA are employed to compact the feature space and mitigate overfitting, while t-SNE can be used to visualize the resulting feature space.
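Several of the manually engineered features listed above can be computed from a message’s body and links with the standard library alone. In this sketch the urgency phrase list, the feature names, and the display-text versus link-target comparison are hypothetical choices for illustration; domain age and sender IP reputation are omitted because they require external WHOIS or threat-intelligence lookups.

```python
import re
from urllib.parse import urlparse

# Hypothetical urgency phrases; a deployed system would curate or learn these.
URGENT_PHRASES = ("verify your account", "act now", "urgent", "suspended",
                  "password will expire", "click here")
IPV4_HOST = re.compile(r"^\d{1,3}(\.\d{1,3}){3}$")


def url_features(url):
    """Lexical indicators of link obfuscation for a single URL."""
    host = urlparse(url).hostname or ""
    return {
        "url_length": len(url),
        "host_is_ip": int(bool(IPV4_HOST.match(host))),
        "has_at_symbol": int("@" in url),
        "num_subdomains": max(host.count(".") - 1, 0),
        "num_hyphens_in_host": host.count("-"),
        "uses_https": int(url.lower().startswith("https://")),
    }


def email_features(body, display_text, href):
    """URL features plus content cues for an HTML link <a href=href>display_text</a>."""
    feats = url_features(href)
    text = body.lower()
    feats["urgent_phrase_count"] = sum(text.count(p) for p in URGENT_PHRASES)
    # If the visible link text looks like a domain, compare it with the real target.
    shown = display_text.strip()
    mismatch = 0
    if "." in shown and " " not in shown:
        if "://" not in shown:
            shown = "http://" + shown
        shown_host = urlparse(shown).hostname or ""
        mismatch = int(shown_host != (urlparse(href).hostname or ""))
    feats["display_href_mismatch"] = mismatch
    return feats


print(email_features(
    "URGENT: your account is suspended. Click here to verify your account.",
    "www.mybank.com",
    "http://192.168.10.5/mybank/login",
))
```

Features like these are usually concatenated with automatically generated ones (for example, TF-IDF vectors) before being passed to the modeling layer.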

The AI Modeling Layer is the core analytical engine of the framework. It integrates a diverse range of machine learning algorithms to maximize detection performance. Traditional supervised classifiers such as Support Vector Machines (SVM), Decision Trees, and Random Forests are combined with advanced deep learning models such as Convolutional Neural Networks (CNN) for spatial pattern detection, Long Short-Term Memory (LSTM) networks for sequential data analysis, and Transformer models (e.g., BERT) for semantic understanding of phishing messages. Ensemble learning techniques (e.g., boosting, bagging, and stacking) are used to enhance model generalization and robustness. Hyperparameter tuning is conducted via grid search and cross-validation to ensure optimal performance.

The Pattern Detection and Inference Layer is designed for real-time execution. Once trained, the models are deployed in this layer to analyze incoming data and detect phishing attempts as they arrive. This layer also incorporates Explainable AI (XAI) tools such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) to interpret and visualize model decisions, which is essential for building user trust and complying with transparency requirements in security systems.
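SHAP and LIME are separate libraries, and their APIs are not reproduced here. To show the additive idea behind such explanations without dependencies, the sketch below relies on a known special case: for a linear model with independent features, the SHAP value of feature i is w_i * (x_i - E[x_i]), the weight times the feature’s deviation from its background mean, and these contributions sum to the difference between the message’s logit and the logit at the background mean. The weights, bias, background means, and feature names are hypothetical values, not a model trained in this study.

```python
import math

# Hypothetical weights of an already-trained linear (logistic) phishing model.
WEIGHTS = {"urgent_phrase_count": 0.9, "host_is_ip": 1.6,
           "display_href_mismatch": 2.1, "uses_https": -0.4}
BIAS = -2.0
# Hypothetical background (training-set) means of each feature.
BACKGROUND_MEAN = {"urgent_phrase_count": 0.5, "host_is_ip": 0.05,
                   "display_href_mismatch": 0.1, "uses_https": 0.7}


def logit(x):
    """Raw model score (log-odds of phishing)."""
    return BIAS + sum(w * x[name] for name, w in WEIGHTS.items())


def phishing_probability(x):
    return 1.0 / (1.0 + math.exp(-logit(x)))


def linear_shap(x):
    """Per-feature contributions to the logit relative to the background mean.
    For a linear model with independent features these equal the SHAP values,
    and they sum to logit(x) - logit(BACKGROUND_MEAN)."""
    return {name: w * (x[name] - BACKGROUND_MEAN[name]) for name, w in WEIGHTS.items()}


def explain(x, top_n=3):
    """Return the top_n features ranked by absolute contribution."""
    contributions = linear_shap(x)
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]


flagged = {"urgent_phrase_count": 4, "host_is_ip": 1,
           "display_href_mismatch": 1, "uses_https": 0}
print(round(phishing_probability(flagged), 3))
for name, contribution in explain(flagged):
    print(f"{name}: {contribution:+.2f}")
```

For nonlinear models such as gradient-boosted trees or transformers, this closed form no longer applies, which is why dedicated approximation methods like those in SHAP and LIME are needed.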

The Evaluation and Feedback Layer handles ongoing performance assessment and model refinement. Standard evaluation metrics such as accuracy, precision, recall, F1-score, and the area under the Receiver Operating Characteristic curve (ROC-AUC) are computed. Furthermore, this layer includes a feedback mechanism where false positives, false negatives, and user reports are fed back into the system for retraining and calibration. This continuous learning loop ensures adaptability to emerging phishing techniques and evolving attacker behavior.
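The threshold-based metrics and ROC-AUC listed in this layer can be computed directly from predicted labels and scores. The sketch below treats phishing as the positive class and computes ROC-AUC through its rank interpretation, the probability that a randomly chosen phishing message outscores a randomly chosen legitimate one. The ten labels and scores are hypothetical, and the 0.5 decision threshold is an assumption.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 with phishing (1) as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "recall": recall, "f1": f1, "fp": fp, "fn": fn}


def roc_auc(y_true, scores):
    """ROC-AUC as the probability that a random phishing sample scores higher
    than a random legitimate one (ties count 0.5). This pairwise form is
    O(n_pos * n_neg) and is meant for illustration only."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and model scores for ten messages (not study results).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.95, 0.80, 0.60, 0.30, 0.70, 0.20, 0.10, 0.40, 0.05, 0.15]
y_pred = [1 if s >= 0.5 else 0 for s in scores]
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))  # 0.875
```

The false-positive and false-negative counts returned here are exactly the cases that the feedback mechanism described in this layer would route back for retraining.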

Finally, the Deployment Layer operationalizes the system by integrating it into real-world environments. This includes plug-ins for web browsers and email clients, APIs for enterprise-level security platforms, and user dashboards for monitoring alerts, predictions, and system activity. This layer also provides user notifications and interactive interfaces for educating end-users, thereby contributing to awareness-building and phishing prevention beyond algorithmic detection. Collectively, this architecture represents a holistic, adaptive, and human-centered approach to phishing detection, capable of addressing both technical and behavioral dimensions of cybersecurity threats.

3.1. Research Strategy

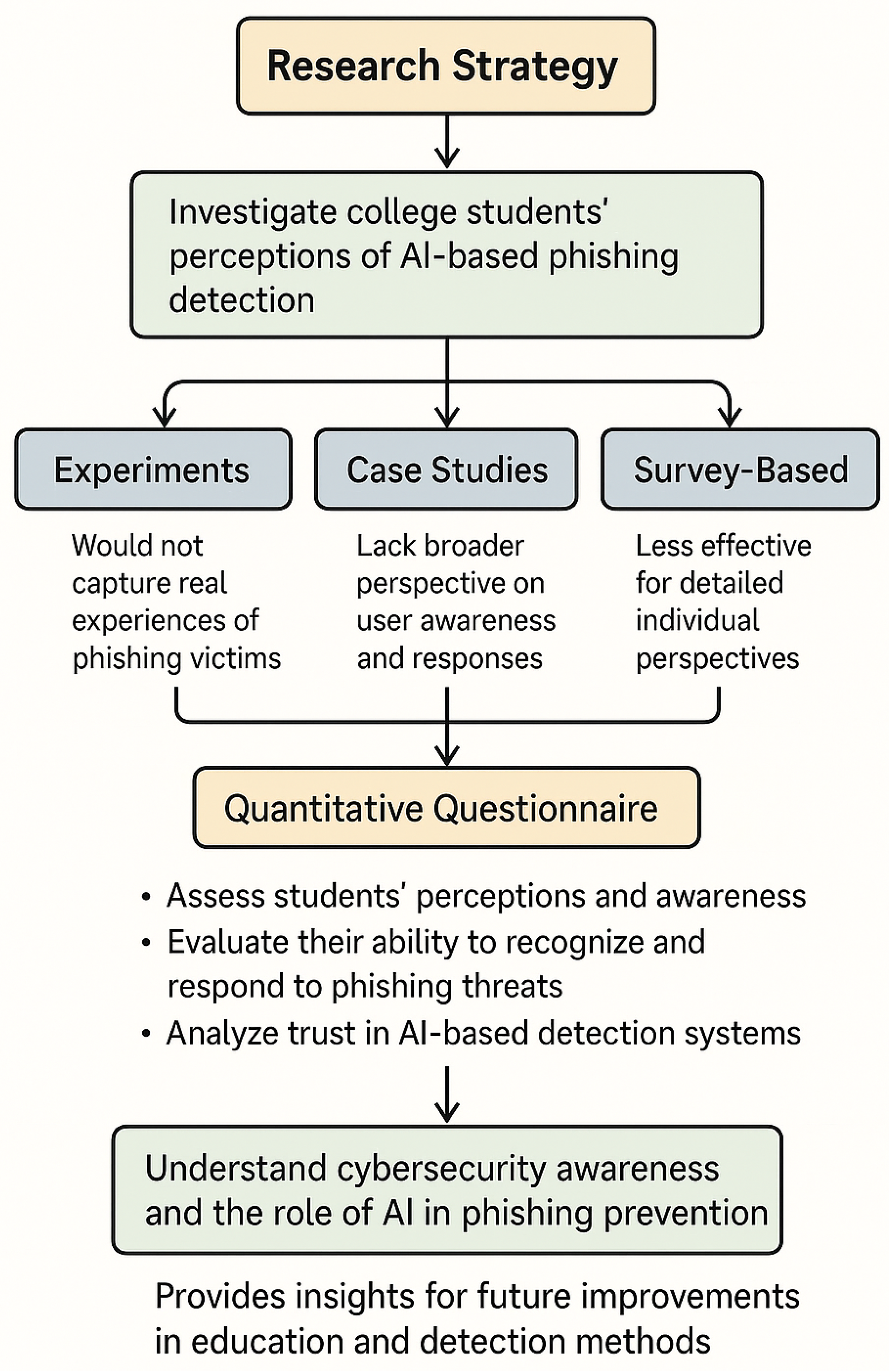

Various research methods exist, and choosing the right strategy depends on the study’s objectives, ethical considerations, and feasibility [27]. This research specifically investigates how college students perceive AI-based phishing detection, aligning with the study’s focus on user awareness rather than technical performance.

This research aims to explore the effectiveness of AI-based phishing detection by gathering insights directly from individuals who have encountered phishing attempts. Although this paper proposes a modular AI architecture for phishing detection as part of its conceptual framework, the empirical component of the study is based on analyzing student awareness and perception using a structured questionnaire.

The AI architecture contextualizes the broader research problem, while the questionnaire provides data-driven insights into human-centered factors that influence the adoption and effectiveness of such systems. Several research strategies were considered, including experiments and case studies. While experiments could test AI detection models in controlled environments, they would not capture the real experiences of phishing victims. Case studies provide detailed insights into specific incidents but lack the broader perspective to understand user awareness and response patterns. A survey-based approach was also considered but was deemed less effective for capturing detailed individual perspectives on phishing awareness.

A quantitative questionnaire was selected as the most suitable research strategy. This approach involves gathering students’ perceptions and awareness of phishing attacks, allowing an evaluation of their ability to recognize and respond to such threats. University students were chosen as the focus group for this study because they represent a digitally active population that is frequently targeted by phishing attacks. While students are often familiar with digital tools, prior studies highlight that their high exposure to online environments combined with limited formal cybersecurity training can make them susceptible to phishing. For example, a 2018 study [28] found that digitally active users, including students, often underestimate phishing risks. Similarly, a 2023 study [29] noted that younger adults may demonstrate overconfidence in their digital abilities, which can increase their vulnerability to social engineering techniques.

Unlike broader surveys that focus on aggregate statistical patterns, this questionnaire captures each student’s own judgments, concerns, and trust regarding AI-based detection. The study also assesses students’ trust in AI-based phishing detection systems by analyzing their perceptions of its effectiveness, limitations, and challenges. This strategy aligns with the research aim of understanding students’ experiences and awareness rather than testing AI models directly, keeping the research focused on cybersecurity awareness and the role of AI in phishing prevention and providing insights that can inform future improvements in education and detection methods.

Figure 2 details the research strategy of this study. To enhance the transparency and reproducibility of the research process, the strategy was mapped visually, detailing each step from problem formulation to data interpretation. This systematic mapping improves clarity and demonstrates the alignment between the research objectives and the selected methods. It highlights the justification for using questionnaires, the criteria for participant selection, and the logical flow from data collection to analysis, reinforcing the methodological rigor of the study.

3.2. Effectiveness of AI in Phishing Detection

The integration of Artificial Intelligence (AI) into phishing detection systems has significantly improved the speed, accuracy, and adaptability of cybersecurity measures. Traditional rule-based systems often rely on predefined signatures or blacklists, which are limited in detecting novel or sophisticated phishing attempts. In contrast, AI-based methods, especially those using machine learning and natural language processing (NLP), can identify hidden patterns and anomalies in real time, enabling more dynamic threat recognition.

One of the key strengths of AI lies in its ability to analyze large volumes of data from emails, URLs, and user behavior. Machine learning models can be trained on labeled datasets to recognize characteristics of phishing attempts, such as misleading domain names, suspicious metadata, or deceptive linguistic patterns. These models continuously learn and adapt as new phishing tactics emerge, improving their effectiveness over time. Behavioral analytics further enhance detection by identifying unusual communication patterns and user interactions.
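Misleading domain names are one of the characteristics mentioned above. A common hand-built signal, sketched here as an assumption rather than a method from this study, measures the edit (Levenshtein) distance between a sender or link domain and an allow-list of trusted domains: an exact match is treated as legitimate, while a near match such as a swapped character suggests impersonation. The allow-list and the distance threshold of 2 are hypothetical.

```python
def levenshtein(a, b):
    """Edit distance (insertions, deletions, substitutions) via dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


# Hypothetical allow-list of domains an organization wants to protect.
TRUSTED_DOMAINS = ["paypal.com", "microsoft.com", "university.edu"]


def lookalike_of(domain, max_distance=2):
    """Return the trusted domain that `domain` appears to imitate, or None.
    Exact matches are legitimate; near matches are treated as suspicious."""
    domain = domain.lower()
    for trusted in TRUSTED_DOMAINS:
        if 0 < levenshtein(domain, trusted) <= max_distance:
            return trusted
    return None


print(lookalike_of("paypa1.com"))      # paypal.com
print(lookalike_of("rnicrosoft.com"))  # microsoft.com
print(lookalike_of("paypal.com"))      # None
```

In a learned system, a value like this would typically serve as one input feature to the model rather than a final verdict, since short trusted domains can produce near matches with unrelated names.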

The effectiveness of AI is further amplified when combined with user-centered approaches. While AI accelerates threat detection, human feedback helps refine accuracy and reduce false positives. For example, user reports of phishing emails can be used to retrain models and improve detection precision. Explainable AI (XAI) techniques such as SHAP and LIME contribute to system transparency by helping users and analysts understand why certain messages are flagged.
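The feedback loop in which user reports refine a model can be sketched as an online logistic regression that takes one stochastic gradient descent step per report. The class name, feature names, and learning rate are hypothetical, and the sketch assumes each report has already been confirmed before it is used as a training label.

```python
import math


class OnlineLogisticModel:
    """Logistic regression updated one example at a time with stochastic gradient
    descent, so confirmed user reports can be folded in without full retraining."""

    def __init__(self, feature_names, learning_rate=0.5):
        self.weights = {name: 0.0 for name in feature_names}
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * x.get(name, 0.0) for name, w in self.weights.items())
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, label):
        """One SGD step on the log-loss; label is 1 (phishing) or 0 (legitimate)."""
        error = self.predict_proba(x) - label  # d(log-loss)/dz for logistic regression
        for name in self.weights:
            self.weights[name] -= self.learning_rate * error * x.get(name, 0.0)
        self.bias -= self.learning_rate * error


model = OnlineLogisticModel(["urgent_phrase_count", "display_href_mismatch"])
# A phishing email that slipped through and was later reported by a user.
missed_phish = {"urgent_phrase_count": 2, "display_href_mismatch": 1}
before = model.predict_proba(missed_phish)
for _ in range(5):
    model.update(missed_phish, 1)
after = model.predict_proba(missed_phish)
print(f"score before feedback: {before:.2f}, after: {after:.2f}")
```

A reported false positive would be handled the same way with `label=0`, pushing the score for similar legitimate messages down.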

Overall, the combination of technical capabilities and user engagement represents a shift from reactive to proactive cybersecurity. AI-based phishing detection systems offer scalable, adaptive, and transparent protection that not only responds to existing threats but evolves with them. This layered approach strengthens resilience against phishing and underscores the importance of combining algorithmic power with human awareness.

Figure 3 shows how the framework integrates with deployment environments such as email clients, browsers, and mobile apps, where detection results are applied in real time.

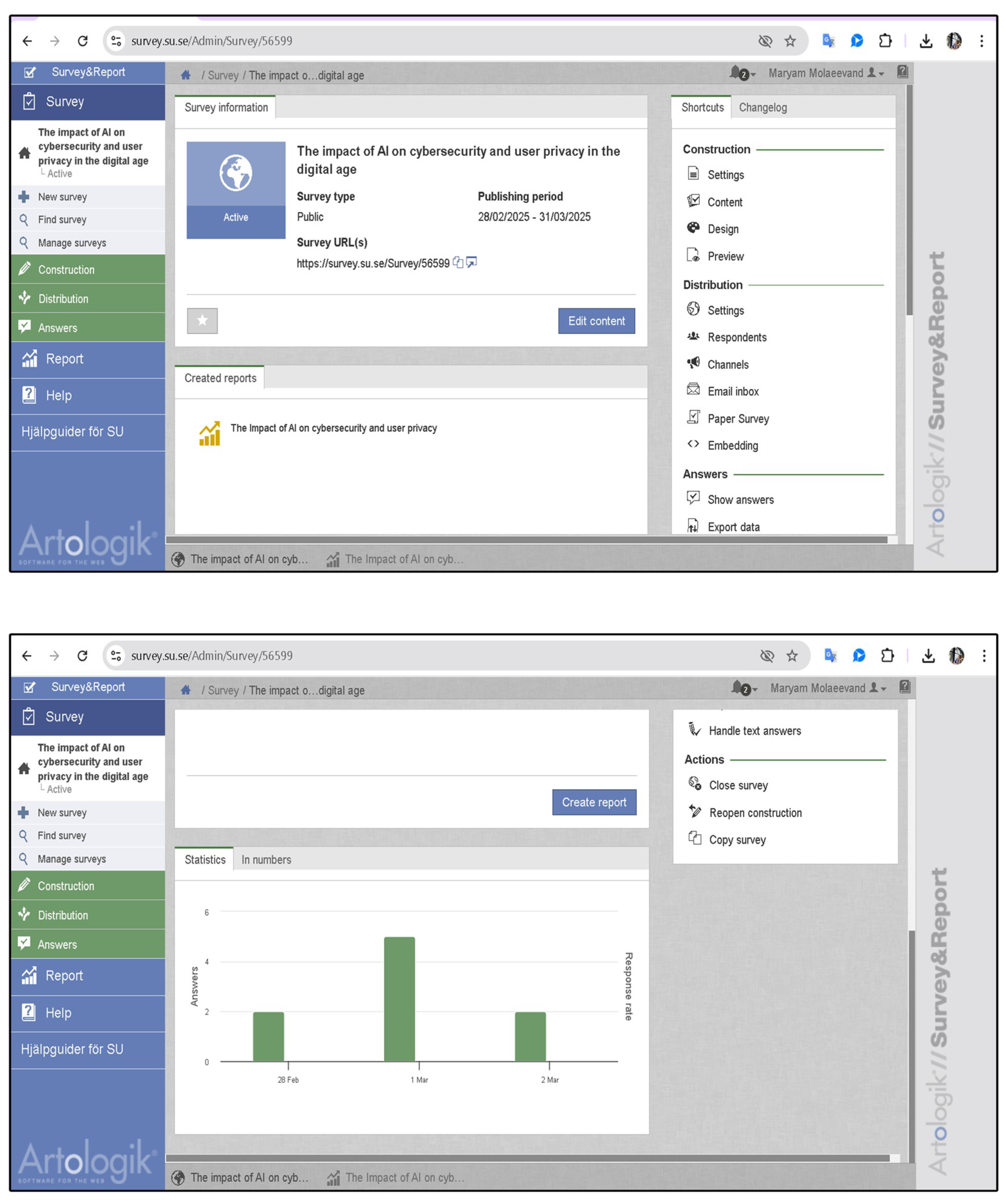

3.3. Data Collection Method

The structured questionnaire was chosen as the most effective research method in this study. A questionnaire is a type of survey tool used to collect standardized responses, distinguishing it from broader survey methodologies that may include interviews or observational methods. Recent studies indicate that questionnaires are excellent for gathering data from specific groups, which helps researchers examine trends and response patterns [30].

Surveys, on the other hand, can incorporate multiple data collection methods, including interviews and open-ended responses, which were not suitable for this study’s objective. Questionnaires can be conducted in several ways, including online forms, face-to-face conversations, phone interviews, and paper questionnaires. An online questionnaire was selected for this study because it efficiently reaches a wide range of participants. Alternative data collection methods, such as interviews, were considered but were deemed impractical due to time constraints and the need for a larger sample size for statistical reliability. The study focuses on phishing awareness and AI-based detection methods, so a structured questionnaire with 20 multiple-choice questions was used for gathering quantitative data.

Questionnaires are ideal for collecting data on students’ awareness of phishing attacks and their perceptions of AI-based detection systems, as they provide standardized responses that are easy to analyze and compare among participants [31]. Unlike interviews, which delve deeply into personal opinions, structured questionnaires are useful for measuring levels of cybersecurity knowledge and assessing students’ confidence in AI-driven phishing prevention. Additionally, focus groups were considered but were not chosen due to the potential influence of group dynamics on individual responses, which could introduce bias.

The questionnaire consisted of closed-ended questions to ensure objective responses and facilitate a systematic analysis of awareness trends. Participants were chosen from different fields of study to provide a broad assessment of phishing-related knowledge and attitudes toward AI detection tools. The questionnaire was conducted online, allowing for broader participation and efficient data collection within the available timeframe.

This approach is consistent with other quantitative studies, such as the research by [32], which used structured questionnaires to evaluate cybersecurity knowledge among university students. Their findings underscored the effectiveness of quantitative methods in identifying gaps in awareness and confidence in security technologies, confirming that this method is appropriate for the current study. Similarly, ref. [15] used online questionnaires to assess university students’ attitudes toward AI-driven security tools, further supporting the relevance of this method for analyzing user perceptions in cybersecurity research.

3.4. Sampling

The study included students from various academic fields to gather different views on phishing awareness, since phishing scams can target anyone regardless of background. By involving students from many areas, the study aimed to understand how people with different levels of cybersecurity knowledge recognize and handle phishing attempts.

Convenience sampling was used, as participants were recruited based on accessibility and willingness to participate rather than through a randomized or stratified process. The sample was not randomized or structured to reflect demographic or disciplinary proportions in the student body. Therefore, the findings may be subject to **selection bias** and are not fully generalizable to the wider student population. This limitation has been acknowledged and should be addressed in future work.

Three hundred fifty students participated in the questionnaire. They were invited through university emails and classroom announcements. Participation was voluntary, and all students were informed about the purpose of the study. Their privacy was protected. There were no restrictions based on academic background, which allowed for a mix of students with different familiarity with phishing.

Alternative sampling strategies, such as stratified sampling, were considered. However, due to time and resource limitations, a more structured selection process based on cybersecurity knowledge levels was not feasible. The study aimed to examine how educational background and exposure to cybersecurity concepts affect awareness and responses to phishing. One potential limitation of the sampling approach is selection bias, as students interested in cybersecurity topics may have been more likely to participate, leading to an overrepresentation of individuals with higher awareness. Additionally, the reliance on university students limits the generalizability of the findings to broader populations, such as working professionals or non-students.

Regarding qualitative analysis, this study was designed as a quantitative investigation only, using a structured questionnaire with closed-ended questions. No open-ended or interview-based data were collected; therefore, no qualitative analysis was conducted. While this limits the depth of individual experiences captured, the approach allowed for efficient, standardized analysis across a sample of 350 students. The absence of qualitative data is acknowledged as a limitation, and future research will consider integrating mixed methods, including interviews or open-ended responses, to provide richer insights into student attitudes and behaviors around phishing and AI-based detection tools.

3.5. Rationale for Analytical Approach

The study employed a descriptive statistical approach because the data collected were primarily nominal (e.g., gender, academic field) and ordinal (e.g., Likert-scale responses). This method was chosen to capture and summarize trends in awareness and trust levels across different student groups. Inferential statistics (e.g., chi-square tests or correlation analysis) were considered but not applied, given the exploratory focus of the study and the absence of normally distributed continuous variables. Future studies will include such tests to assess the statistical significance of the observed relationships.

3.6. Data Analysis Method

This study gathered data through a structured questionnaire with multiple-choice and Likert-scale questions. The answers were converted into numerical codes for statistical analysis. The research uses a quantitative approach, focusing on nominal variables such as academic field and gender, and on ordinal data from Likert-scale answers, to assess participants’ awareness of phishing and their experiences with it. Descriptive statistical analysis was chosen for these data; it involves calculating frequencies, percentages, means, and standard deviations to identify patterns in how different student groups understand phishing. The study also compared responses across academic fields to determine whether students in some fields are more knowledgeable about phishing threats than others. Before analysis, the data were checked for incomplete or inconsistent responses to ensure accuracy. Statistical software, namely SPSS version 31 and Excel version 2024, was used to process the data efficiently. Because the study focuses solely on numerical data, no qualitative analysis was conducted. Responses to the questionnaire were categorized and analyzed quantitatively to measure trends in phishing awareness and trust in AI-based detection systems. The results were interpreted on the basis of statistical outputs, supporting objective insights into students’ cybersecurity knowledge. This approach is similar to previous research, such as the study by [16], which examined cybersecurity awareness among university students using statistical methods. By relying on descriptive statistics, this study provides a structured and systematic analysis of phishing awareness patterns without subjective interpretation.
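The coding and frequency steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the SPSS procedure the study used; the label-to-code mapping (Very Low = 1 through Very High = 5) and the function names `encode_likert` and `frequency_table` are assumptions introduced here for illustration, and the example responses are hypothetical.

```python
from collections import Counter

# Assumed 5-point coding; the questionnaire's exact wording is not reproduced here.
LIKERT_CODES = {
    "Very Low": 1,
    "Low": 2,
    "Moderate": 3,
    "High": 4,
    "Very High": 5,
}


def encode_likert(labels):
    """Map Likert text labels to ordinal codes 1-5 (KeyError on unknown labels)."""
    return [LIKERT_CODES[label] for label in labels]


def frequency_table(values):
    """Return {value: (count, percentage)} ordered by value, percentages to 1 decimal."""
    counts = Counter(values)
    n = len(values)
    return {value: (count, round(100 * count / n, 1)) for value, count in sorted(counts.items())}


# Example with hypothetical responses (not the study's data).
codes = encode_likert(["High", "High", "Low", "Very High"])
print(frequency_table(codes))  # {2: (1, 25.0), 4: (2, 50.0), 5: (1, 25.0)}
```

Keeping the coding in a single mapping makes it explicit which direction the scale runs, which matters when means are later compared across groups.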

Statistical Justification and Methodological Rationale

The study utilized descriptive statistics (frequencies, percentages, means, and standard deviations) to evaluate patterns of phishing awareness and AI trust among students. This choice was based on the nature of the data: most variables were nominal (e.g., gender, academic field) or ordinal (e.g., Likert-scale responses). Given the exploratory aim and absence of continuous or normally distributed variables, inferential statistics such as correlation, regression, or chi-square testing were not applied in this phase. While inferential techniques may provide additional insights in future work, the primary objective of this study was to identify baseline awareness levels and general trends in perception and trust across a diverse student population. Thus, descriptive analysis was deemed appropriate to ensure clarity and interpretability. Future studies may include hypothesis testing using inferential statistics to deepen the analysis of observed relationships.
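As an illustration of the kind of inferential test deferred to future work, the sketch below computes Pearson’s chi-square test of independence for a 2 x 2 cross-tabulation (for example, training versus correct identification). It is a standard-library sketch under stated assumptions: no Yates continuity correction is applied, all row and column totals are assumed non-zero, and the counts in the usage line are hypothetical. For one degree of freedom the upper-tail probability has the closed form P(X > x) = erfc(sqrt(x / 2)), so no statistics package is needed.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table [[a, b], [c, d]].

    No continuity correction; assumes every row and column total is non-zero.
    Returns (statistic, p_value) for 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (table[i][j] - expected) ** 2 / expected
    # With 1 df, chi-square = Z**2, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows = trained / not trained, columns = identified / missed.
stat, p = chi_square_2x2([[40, 10], [25, 25]])
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```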

3.7. Research Ethics

Ethical conduct is essential in research because it ensures that participants are not harmed during the study. In this study, everyone who took part was aware that participation was voluntary, meaning they could choose whether or not to join, and all participants gave informed consent before completing the questionnaire. Participants were informed about the purpose of the study and how their responses would be used. To ensure privacy, no personal information was collected, and all responses were kept confidential. Participants were assured that their data would be used solely for research purposes and stored securely. This approach follows ethical guidelines that emphasize protecting participants’ interests and ensuring transparency. The study also followed the ethical principles outlined in the university’s research ethics policy, ensuring compliance with institutional guidelines. Because the study involved human participants, potential concerns such as response bias and data security were carefully considered. Moreover, participants had the right to withdraw from the study at any stage without providing a reason. The study adhered to professional ethical practices to uphold scientific integrity, and no legal risks were identified in the process.

4. Results

This section describes how the data collection and the data analysis methods were applied and presents the findings obtained using these methods.

4.1. Hypothesis Evaluation

The study’s hypotheses were evaluated using descriptive statistics derived from questionnaire data:

H1 Supported: 94.3% of students were aware of phishing, and 65.7% were able to identify a phishing attempt.

H2 Partially Supported: While 51.4% expressed high or very high trust in AI detection systems, 20% expressed low or very low trust, indicating divided views.

H3 Supported: Students who had participated in cybersecurity courses or training were more likely to identify phishing attempts correctly, suggesting a positive association between educational exposure and detection capability.
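The cross-tabulation behind H3 amounts to comparing identification rates between students with and without cybersecurity training. A minimal sketch, assuming each response is reduced to two booleans (`had_training`, `identified`); these names, the function name, and the sample records are hypothetical, not the study’s data or procedure.

```python
from collections import Counter


def identification_rate_by_training(records):
    """records: iterable of (had_training, identified) boolean pairs.

    Returns {had_training: (group_size, percent_identified)} for each group present.
    """
    group_sizes = Counter()
    identified_counts = Counter()
    for had_training, identified in records:
        group_sizes[had_training] += 1
        if identified:
            identified_counts[had_training] += 1
    return {
        group: (size, round(100 * identified_counts[group] / size, 1))
        for group, size in group_sizes.items()
    }


# Hypothetical records: 4 trained students (3 identified), 4 untrained (1 identified).
sample = [(True, True)] * 3 + [(True, False)] + [(False, True)] + [(False, False)] * 3
print(identification_rate_by_training(sample))
```

A higher rate in the trained group is consistent with H3, but on its own it describes an association, not a causal effect.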

4.2. Data Collection and Analysis

The first step in gathering data was to design a structured questionnaire, as shown in Figure 4. The questionnaire included 20 questions that were either multiple-choice or scale-based. These questions aimed to understand students’ awareness of, experiences with, and responses to phishing attempts. Convenience sampling was used to recruit students from various academic disciplines to obtain a wide range of perspectives.

Table 2 presents the demographic details of the participating students. A total of 350 students responded to the questionnaire, with a relatively balanced gender distribution: 54.3% identified as male and 45.7% as female. Participants came from diverse academic backgrounds, providing a multidisciplinary perspective on phishing awareness. Engineering students represented the largest group (31.4%), followed by students in the Social Sciences (25.7%), Natural Sciences (22.9%), and Arts and Humanities (20.0%). This diversity helps ensure that the findings reflect a wide range of digital experiences and exposure to cybersecurity education.
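Because every percentage in Table 2 is reported to one decimal place, each can be converted back to a whole number of respondents as a quick consistency check. The sketch below assumes n = 350, the sample size stated in the methodology; the resulting counts are implied by the percentages rather than reported in the text, and the helper name `implied_counts` is hypothetical.

```python
SAMPLE_SIZE = 350  # number of respondents stated in the methodology


def implied_counts(percentages, n=SAMPLE_SIZE):
    """Convert percentages (0-100) into the nearest whole-number respondent counts."""
    return [round(p / 100 * n) for p in percentages]


gender = implied_counts([54.3, 45.7])               # male, female
fields = implied_counts([31.4, 25.7, 22.9, 20.0])   # Eng., Soc. Sci., Nat. Sci., Arts & Hum.
print(gender, sum(gender))  # [190, 160] 350
print(fields, sum(fields))  # [110, 90, 80, 70] 350
```

Both breakdowns sum exactly to 350, which matches the sample size used throughout the study.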

The questionnaire was distributed electronically through online platforms, which made it easy for students to access and participate. It was anonymous to encourage honest responses and minimize information bias. The questionnaire design used for this study is shown in Figure 2.

After all answers were gathered, the data were checked to confirm that they were suitable for analysis. Responses that were incomplete or obviously incorrect were removed to ensure data quality. The cleaned data were then imported into Microsoft Excel for preliminary handling, after which SPSS (Statistical Package for the Social Sciences) was used for statistical analysis. The analysis primarily used descriptive statistical methods, as the research questions focused on exploring awareness levels and student experiences rather than formally testing hypotheses with inferential statistics. Frequencies and percentages were used to summarize responses to categorical variables (e.g., phishing experience, identification methods), and these are presented in Table 3.

Table 3 shows that student awareness of phishing threats is generally high. A striking 94.3% of respondents reported that they were aware of phishing, indicating that the concept is well known among university students. Furthermore, 72.9% had received a phishing email, demonstrating that many students are real targets of such attacks. Among those who encountered phishing, 65.7% reported successfully identifying the attempt, suggesting a moderate level of detection ability. However, only 40.0% had taken the extra step of reporting phishing incidents, highlighting a potential gap in security behavior despite relatively high awareness.
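The cleaning step described above (removing incomplete or obviously incorrect responses before analysis) can be sketched as a simple filter. The field names, the choice of required fields, and the 1-5 validity range for the trust item are assumptions for illustration; the study’s actual questionnaire items and screening rules are not given in the text.

```python
# Hypothetical field names; the real questionnaire items are not reproduced here.
REQUIRED_FIELDS = ("gender", "academic_field", "aware_of_phishing", "ai_trust")
VALID_TRUST_CODES = {1, 2, 3, 4, 5}  # assumed 5-point Likert coding


def clean_responses(rows):
    """Drop rows with a missing required answer or an out-of-range trust code."""
    cleaned = []
    for row in rows:
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            continue  # incomplete response
        if row["ai_trust"] not in VALID_TRUST_CODES:
            continue  # inconsistent or invalid answer
        cleaned.append(row)
    return cleaned


raw = [
    {"gender": "F", "academic_field": "Engineering", "aware_of_phishing": "Yes", "ai_trust": 4},
    {"gender": "", "academic_field": "Arts", "aware_of_phishing": "Yes", "ai_trust": 3},
    {"gender": "M", "academic_field": "Social Sciences", "aware_of_phishing": "No", "ai_trust": 7},
]
print(len(clean_responses(raw)))  # 1
```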

Table 4 explores how students have been exposed to information about phishing. Social media emerged as the most common channel, cited by 40.0% of respondents, followed by formal university courses (28.6%) and cybersecurity training programs (25.7%). Peer discussions were also a notable source, mentioned by 20.0% of students. Alarmingly, 14.3% indicated having no prior knowledge of phishing, underscoring the need for more structured educational efforts. These results suggest that while informal sources like social media play a significant role, there is a clear opportunity to strengthen formal training within the academic environment.
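The Table 4 percentages add up to more than 100% (128.6% in total), which indicates that respondents could select more than one source; each percentage therefore uses the number of respondents, not the number of selections, as its denominator. A minimal sketch of that calculation, with hypothetical responses and a hypothetical helper name:

```python
from collections import Counter


def multi_select_percentages(responses):
    """responses: one set of selected options per respondent.

    Each percentage uses the number of respondents as its denominator,
    so the values can legitimately sum to more than 100%.
    """
    n = len(responses)
    counts = Counter(option for selected in responses for option in selected)
    return {option: round(100 * count / n, 1) for option, count in counts.items()}


# Hypothetical responses from four students (not the study's data).
answers = [
    {"Social media", "Peer discussions"},
    {"Social media"},
    {"University course"},
    {"Social media", "University course"},
]
result = multi_select_percentages(answers)
print(result["Social media"], sum(result.values()))  # 75.0 150.0
```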

Table 5 illustrates the degree of trust students place in AI-based phishing detection tools. A combined 51.4% of students reported either “High” (37.1%) or “Very High” (14.3%) trust, indicating a generally positive perception of AI’s role in cybersecurity. Another 28.6% expressed “Moderate” trust, suggesting cautious optimism. However, 20.0% of students reported “Low” or “Very Low” trust, which may reflect concerns about reliability, false positives, or a lack of transparency in AI decision-making. These findings highlight the importance of improving AI explainability and building user confidence through education and feedback mechanisms.

To better quantify student attitudes, descriptive statistics were computed for the Likert-scale trust question. On a 5-point Likert scale, students’ overall trust in AI-based phishing detection tools had a mean of 3.43 (SD = 1.02), as shown in Figure 5. These values reflect internal trends within the study population and are not used to infer broader population characteristics.
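For reference, the mean and standard deviation of a Likert item can be computed directly from its response counts. The sketch below uses the sample standard deviation (n - 1 denominator) and hypothetical counts; the study’s per-category counts for the trust item (in particular the split between “Low” and “Very Low”) are not reported, so this does not reproduce the reported values. The helper name `likert_mean_sd` is hypothetical.

```python
import math


def likert_mean_sd(counts):
    """counts maps a Likert code (1-5) to its number of respondents.

    Returns (mean, sample standard deviation using an n - 1 denominator).
    """
    n = sum(counts.values())
    if n < 2:
        raise ValueError("at least two responses are needed for a sample SD")
    mean = sum(code * count for code, count in counts.items()) / n
    squared_deviations = sum(count * (code - mean) ** 2 for code, count in counts.items())
    return mean, math.sqrt(squared_deviations / (n - 1))


# Hypothetical counts for codes 1 (Very Low) to 5 (Very High).
mean, sd = likert_mean_sd({1: 2, 2: 3, 3: 5, 4: 6, 5: 4})
print(f"mean = {mean:.2f}, SD = {sd:.2f}")
```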

Table 6 provides insight into the methods students use to recognize phishing attempts. The most commonly reported indicators were suspicious links or attachments (75.0%) and unusual sender addresses (62.9%), which align with common phishing red flags taught in cybersecurity awareness programs. Other frequently cited clues included urgency in the message (48.6%), spelling and grammar mistakes (40.0%), and link mismatches (31.4%). These results suggest that many students rely on surface-level cues rather than deeper contextual or behavioral analysis, emphasizing the need for training that goes beyond basic indicators to cover advanced phishing tactics.

This process turned the gathered information into insights that form the basis for the study’s conclusions and recommendations. The structured approach kept the analysis consistent and clearly showed how well students recognize phishing. The data suggest that while most students are aware of phishing threats and demonstrate moderate-to-high trust in AI-based detection systems, there is still room for improvement in both awareness and confidence. These findings support the view that AI-based phishing detection tools are relevant to student populations, but they also highlight the importance of trust-building, transparency, and education in encouraging widespread adoption.

5. Discussion

Phishing attacks have become increasingly sophisticated, targeting unsuspecting individuals through deceptive emails, websites, and messages. This study aimed to analyze students’ awareness of and experiences with phishing attacks and to examine their perceptions of AI-based phishing detection systems, based on data collected through a student questionnaire. The data analysis relied on descriptive statistical methods to extract meaningful patterns and trends from the responses. Specifically, frequencies and percentages were used to summarize the data, as the questionnaire consisted primarily of closed-ended questions. SPSS was used to perform the analysis, ensuring accuracy and clarity in data handling.

The results indicate that many students have encountered phishing attempts with varying degrees of awareness and response effectiveness. Analysis of questionnaire responses identified key trends related to phishing awareness, trust in AI-based detection systems, and challenges in adopting AI-based solutions. The statistical findings revealed three primary areas of interest: Awareness and Knowledge of Phishing, Trust in AI-based Detection Systems, and Challenges in Adopting AI-based Solutions.

The Awareness and Knowledge of Phishing theme highlights students’ understanding of phishing techniques, common red flags, and their ability to differentiate between legitimate and fraudulent communications. The data revealed that while some students were highly knowledgeable about phishing, others lacked sufficient awareness, making them more vulnerable to cyber threats. This suggests a need for more extensive educational initiatives to enhance students’ cybersecurity awareness. The Trust in AI-based Detection Systems theme focuses on students’ perception of AI-driven solutions for phishing detection. The results indicate that while many students recognize the potential of AI in detecting phishing attempts, some expressed concerns regarding false positives and false negatives. AI-based detection was generally viewed as a valuable tool, but participants emphasized the importance of transparency and continuous improvement to enhance reliability and user trust.
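The concern about false positives and false negatives can be made concrete with the standard confusion-matrix rates for a binary phishing detector, treating phishing as the positive class. The function below is a generic sketch with a hypothetical name, not a measurement from this study, which assessed perceptions rather than detector performance; the counts in the example are hypothetical.

```python
def detection_rates(tp, fp, tn, fn):
    """Confusion-matrix rates for a binary phishing detector (positive = phishing).

    tp: phishing correctly flagged       fp: legitimate mail wrongly flagged
    tn: legitimate mail correctly passed fn: phishing missed
    Assumes each denominator below is non-zero.
    """
    return {
        "precision": tp / (tp + fp),            # flagged messages that really are phishing
        "recall": tp / (tp + fn),               # phishing messages that get flagged
        "false_positive_rate": fp / (fp + tn),  # legitimate messages wrongly flagged
        "false_negative_rate": fn / (fn + tp),  # phishing messages that slip through
    }


# Hypothetical evaluation of 1,000 emails, 100 of which are phishing.
rates = detection_rates(tp=90, fp=10, tn=890, fn=10)
print({name: round(value, 3) for name, value in rates.items()})
```

In this example about 1.1% of legitimate emails are flagged and 10% of phishing emails get through; the two error types trade off against each other as a detector’s decision threshold changes.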

While the findings indicate that students generally express moderate to high trust in AI-based phishing detection systems, the questionnaire did not measure their familiarity with specific AI algorithms or technologies (e.g., SVM, CNN, transformers). Therefore, the conclusions reflect perceived trust in AI as a general concept rather than technical understanding.

The Challenges in Adoption of AI-based Solutions theme highlights the barriers students face in adopting AI-based phishing detection tools. Some students reported concerns about privacy, accuracy, and integration with their existing digital tools. Others expressed skepticism about relying entirely on AI for security and emphasized the need for a hybrid approach that combines AI with user awareness and manual verification mechanisms. The validity and reliability of this study were supported by using a standardized questionnaire and applying consistent coding procedures. The findings of this study have several implications for cybersecurity education and AI-based phishing detection systems. The results highlight the need to increase awareness and training among students to improve their ability to recognize phishing attempts. Furthermore, the research contributes to the broader literature by confirming trends seen in earlier studies, such as those by [15], who found that trust in AI for cybersecurity is often limited by user experience and perceived control.

Like any research, this study has limitations that should be acknowledged. One key limitation is the exclusive use of structured, closed-ended questionnaire items. While this approach allowed for standardized data collection and statistical analysis, it may have limited the richness of responses and missed important contextual nuances that open-ended questions or interviews could have captured. Qualitative insights such as specific examples of phishing experiences or detailed perceptions of AI-based detection tools would provide valuable depth and context to the quantitative results. Future research should consider using a mixed-methods design that includes interviews or open-ended survey questions to gain a deeper understanding of user behaviors, attitudes, and decision-making processes in real-world phishing scenarios.

The sample was limited to students from a specific academic setting, which may not fully represent the diverse range of phishing experiences and perceptions across different demographics. Future research could expand the participant pool to include individuals from various backgrounds and institutions to better understand phishing awareness and AI adoption.

Additionally, this study relied on self-reported data, which may be subject to recall or social desirability biases. Future research could incorporate experimental approaches, such as simulated phishing tests, to objectively measure participants’ responses to phishing attempts and evaluate the real-world effectiveness of AI-based detection systems.

Despite these limitations, this study provides valuable insights into students’ awareness of phishing threats and their perceptions of AI-based detection tools. It underscores the importance of education, trust, and continuous improvement in AI-driven cybersecurity solutions. Institutions can enhance phishing prevention efforts and strengthen cybersecurity defenses by addressing the identified challenges and leveraging AI advancements.

The findings of this study provide direct evidence related to the three stated hypotheses. H1 (Awareness of phishing is high) was supported by data showing that over 94% of students had heard of phishing and nearly 66% were able to identify phishing attempts. H2 (Trust in AI-based phishing detection is moderate to high) was partially supported: while over half of the students reported high or very high trust in such systems, 20% expressed low or very low trust, indicating that confidence and skepticism coexist. H3 (Exposure to cybersecurity education improves phishing awareness) was supported by cross-tabulated findings showing that students who received formal training were more likely to detect phishing threats. This hypothesis-based structure strengthens the analytical foundation of the research and aligns the results directly with the study’s objectives.

6. Future Research

Given these limitations, future studies could examine how well different AI-based phishing detection models perform by comparing various machine learning algorithms. Researchers could test AI models in real-time phishing situations to assess how accurate, efficient, and adaptable they are at spotting new phishing threats. As cybersecurity threats and AI technologies continue to change, longitudinal studies could assess how students’ awareness and understanding of phishing evolve over time. Such research could show the long-term effects of using AI to detect phishing and evaluate how effective educational programs are at boosting students’ cybersecurity awareness. Furthermore, research could explore the ethical and privacy concerns associated with AI-based phishing detection tools, particularly regarding the collection and analysis of personal data. This could include investigating user trust, consent mechanisms, and transparent data-handling practices. By addressing these gaps, future studies could provide a better understanding of phishing detection methods, enhance AI solutions, and increase cybersecurity awareness among a wider audience.

This study also leaves several important areas for future exploration. First, while the research focused on user awareness and trust in AI-based phishing detection, it did not compare the effectiveness of different AI detection models such as SVM, CNN, LSTM, or Transformer-based approaches. Future studies could incorporate benchmark comparisons across AI techniques to identify optimal models for phishing detection under various real-world constraints. Second, the role of explainable AI (XAI) in building or eroding user trust was not directly assessed. Given the growing importance of model transparency, future research should investigate how different XAI methods (e.g., SHAP, LIME) influence user confidence, trust calibration, and adoption of AI tools in security contexts. Third, the privacy implications of AI-based phishing detection systems, particularly regarding data collection, storage, and inferences about user behavior, were not fully addressed. Further work should explore how to balance AI model performance with ethical considerations such as data minimization, user consent, and compliance with privacy regulations (e.g., GDPR). In addition, future research would benefit from more diverse participant samples, experimental or longitudinal methods, and mixed-methods approaches that integrate qualitative and quantitative data. These enhancements could provide more actionable insights into how AI-based systems can effectively and ethically support phishing prevention.

While the sample of 350 students provides initial insights, its size and composition (university students from a limited number of institutions) may not fully capture broader population patterns. Future research should include more diverse participant pools across age groups, educational backgrounds, and geographic locations to enhance generalizability.

7. Conclusions

This study explored two complementary aspects of phishing prevention: a review of recent AI-based phishing detection systems, and an empirical investigation into student awareness of and trust in such technologies. The literature review revealed significant progress in using machine learning and NLP models, especially transformer-based architectures and explainable AI tools, for phishing detection. However, gaps remain in model interpretability, user alignment, and privacy safeguards. The student survey results supported the hypothesis that while awareness of phishing is relatively high among university students, gaps persist in reporting behavior and trust in AI-based detection systems. Students generally expressed moderate to high trust in such tools but noted concerns about accuracy, transparency, and integration with daily digital tools. These findings highlight that technical innovation alone is insufficient: awareness and human trust play a crucial role in effective phishing defense. By combining AI system analysis with real-world perceptions, this research underscores the need for interdisciplinary approaches that fuse technical capability with user-centered design and cybersecurity education. Future work should involve more diverse populations, test detection tools in real-world environments, and explore the impact of explainable AI on user trust and behavior. The study contributes to both the theoretical understanding of phishing defense and practical strategies for more secure, user-aware AI systems. The hypotheses outlined in the study were generally supported by the empirical data: students demonstrated high awareness of phishing (H1), cautiously optimistic trust in AI-based detection systems (H2), and an association between cybersecurity training and phishing detection ability (H3). These findings reinforce the dual necessity of advancing AI tools and embedding user education as core pillars of phishing prevention strategies.