Cognitive Assessment Based on Electroencephalography Analysis in Virtual and Augmented Reality Environments, Using Head Mounted Displays: A Systematic Review

Abstract

1. Introduction

- delta (δ) (0.5–4 Hz)—associated with deep sleep

- theta (θ) (4–8 Hz)—observed during quiet focus and sleep

- alpha (α) (8–14 Hz)—recorded with closed eyes and relaxing

- beta (β) (14–30 Hz)—associated with alertness and attentional allocation

- gamma (γ) (over 30 Hz)—linked with learning and high mental state

- α1 (7–10 Hz)—associated with a relaxed but alert state

- α2 (10–13 Hz)—linked to more active cognitive processing than α1

- β1 (13–18 Hz)—associated with active, attentive cognitive processing

- β2 (18–30 Hz)—associated with more complex cognitive processes

- γ1 (30–40 Hz)—linked to sensory processing and perception

- γ2 (40–50 Hz)—involved in higher-level cognitive processes and feature binding

- γ3 (50–80 Hz)—useful for research focused on exploring the synchronization of neural networks and its role in various cognitive functions
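As a minimal sketch of how the band and sub-band limits listed above could be used in practice, the following Python snippet maps a frequency in Hz to a band label. The names `BANDS`, `SUB_BANDS` and `band_of` are hypothetical, and treating each upper limit as exclusive is an assumption made here, since the list does not say which band a boundary frequency belongs to.

```python
# Band limits in Hz, copied from the lists above.
# Assumption: the lower limit is inclusive and the upper limit is exclusive.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 14.0),
    "beta": (14.0, 30.0),
    "gamma": (30.0, float("inf")),  # listed only as "over 30 Hz"
}

SUB_BANDS = {
    "alpha1": (7.0, 10.0),
    "alpha2": (10.0, 13.0),
    "beta1": (13.0, 18.0),
    "beta2": (18.0, 30.0),
    "gamma1": (30.0, 40.0),
    "gamma2": (40.0, 50.0),
    "gamma3": (50.0, 80.0),
}


def band_of(freq_hz, bands=BANDS):
    """Return the first band whose range contains freq_hz, or None."""
    for name, (low, high) in bands.items():
        if low <= freq_hz < high:
            return name
    return None


if __name__ == "__main__":
    for f in (2.0, 7.5, 10.0, 45.0):
        print(f, band_of(f), band_of(f, SUB_BANDS))
```

Because the two lists use slightly different limits (for example, α starts at 8 Hz while α1 starts at 7 Hz), the same frequency can fall into bands of different families, which is why the lookup table is passed in explicitly.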

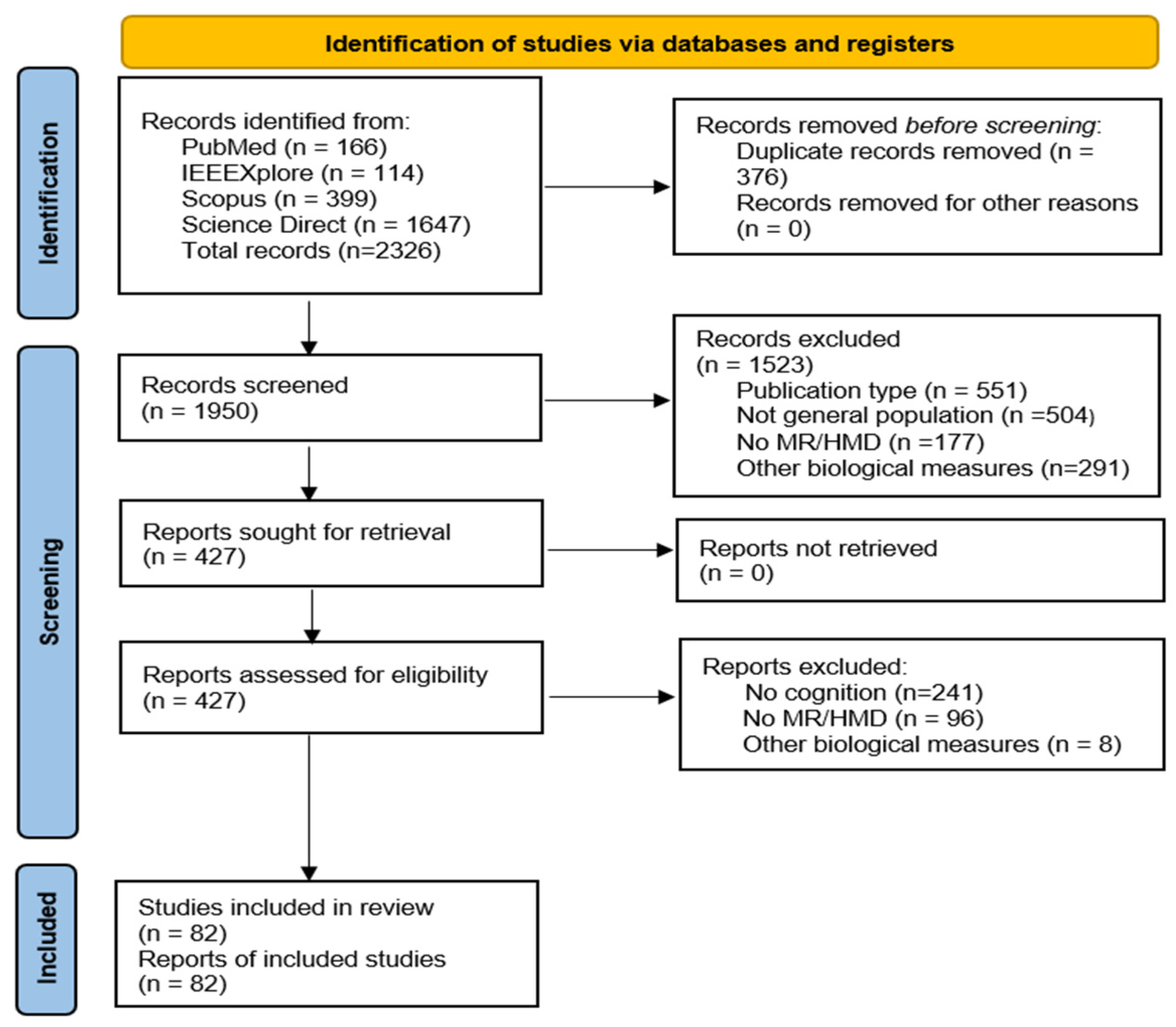

2. Research Methodology

2.1. Data Sources

2.2. Inclusion Criteria

- randomized controlled trials or original primary research

- healthy participants from the general population, without pathological history or disorders of any kind

- a Head Mounted Display device (HMD) as the stimuli projection system

- EEG signals as the only neuroimaging measure

- at least one EEG-assessed, cognition-related research topic (i.e., cognitive load, immersion, spatial awareness, interaction with the digital environment, attention)

- at least one EEG-based neurobiological outcome

2.3. Exclusion Criteria

- conference articles and case studies

- theoretical studies, such as review articles, overviews, meta-analyses and book chapters

- research conducted on animals

- published in a language other than English

- including participants with pathological history (e.g., Alzheimer’s disease, Parkinson’s disease, post-stroke patients, brain injury, autism, epilepsy, visual or cognitive impairment/decline, disabilities)

- including participants with disorders (e.g., alcoholism, attention disorder, anxiety disorder, psychosis, pathological gambling)

- including participants from expert groups (e.g., skiers, pilots)

- using biological measures other than EEG for the research outcomes (e.g., functional Magnetic Resonance Imaging (fMRI), Electrocardiogram (ECG/EKG), Electrooculogram (EOG), Electromyogram (EMG), Galvanic Skin Response (GSR), Heart Rate (HR))

- with research objectives not in the field of cognition (e.g., emotion, pain, sleep, motor functions)

- not including VR, AR or MR stimuli

- with stimulus displayed on devices other than HMD (e.g., projectors, screens or specially designed spaces)

- not reporting results (e.g., study protocols, datasets)
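The inclusion and exclusion criteria above amount to a checklist applied to each candidate record. The sketch below shows one way such a screening step could be encoded. It is illustrative only: the `Record` fields, the category labels and the `screen` function are hypothetical names introduced here, not part of the review's actual workflow.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical labels for publication types the criteria rule out.
EXCLUDED_TYPES = {
    "conference", "case_study", "review", "overview",
    "meta_analysis", "book_chapter", "protocol", "dataset",
}
# Research topics named in the inclusion criteria.
COGNITION_TOPICS = {
    "cognitive load", "immersion", "spatial awareness",
    "interaction", "attention",
}


@dataclass
class Record:
    """Hypothetical screening record; field names are assumptions."""
    publication_type: str
    language: str
    human_participants: bool
    healthy_non_expert: bool   # no pathology, disorder, or expert group
    environment: str           # "VR", "AR", "MR", or something else
    display: str               # "HMD", "screen", "projector", ...
    measures: frozenset        # biological measures used for outcomes
    topics: frozenset          # research topics addressed


def screen(r: Record) -> list[str]:
    """Return the reasons for exclusion; an empty list means eligible."""
    reasons = []
    if r.publication_type in EXCLUDED_TYPES:
        reasons.append("excluded publication type")
    if r.language != "English":
        reasons.append("not published in English")
    if not r.human_participants:
        reasons.append("research conducted on animals")
    if not r.healthy_non_expert:
        reasons.append("participants with pathology, disorder, or expert status")
    if r.environment not in {"VR", "AR", "MR"}:
        reasons.append("no VR, AR or MR stimuli")
    if r.display != "HMD":
        reasons.append("stimuli not shown on an HMD")
    if set(r.measures) != {"EEG"}:
        reasons.append("biological measures other than EEG")
    if not set(r.topics) & COGNITION_TOPICS:
        reasons.append("objective outside cognition")
    return reasons


if __name__ == "__main__":
    candidate = Record("journal_article", "English", True, True, "VR", "HMD",
                       frozenset({"EEG"}), frozenset({"attention"}))
    print(screen(candidate) or "eligible")
```

Returning the list of reasons, rather than a single yes/no flag, keeps a trace of why a record was dropped, which is convenient when reporting exclusions at each screening stage.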

2.4. Data Synthesis

3. Study Statistics

3.1. Publication Year

3.2. Total Number of Participants

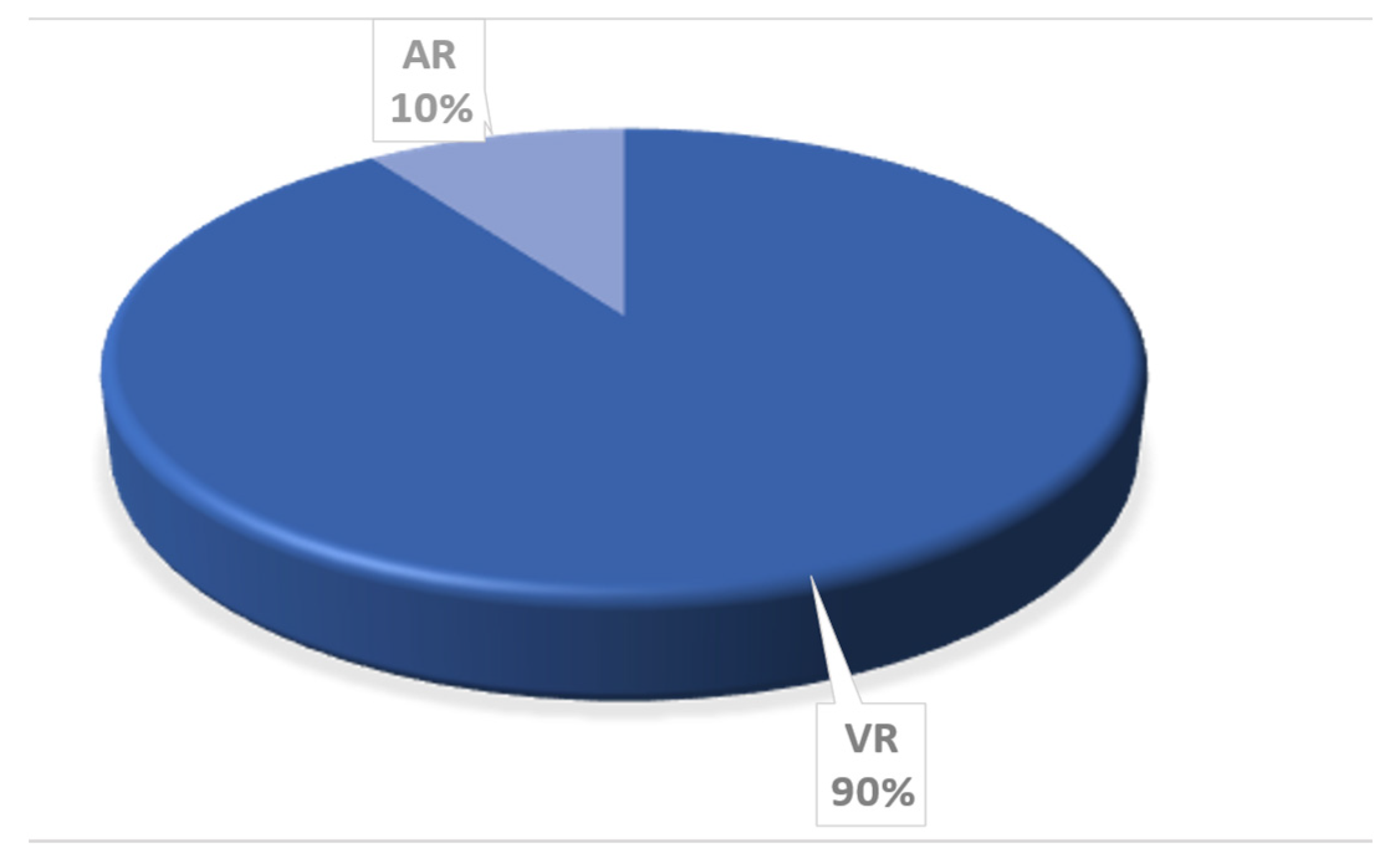

3.3. Digital Environment Type (VR, AR, MR) and Equipment

3.4. EEG Equipment

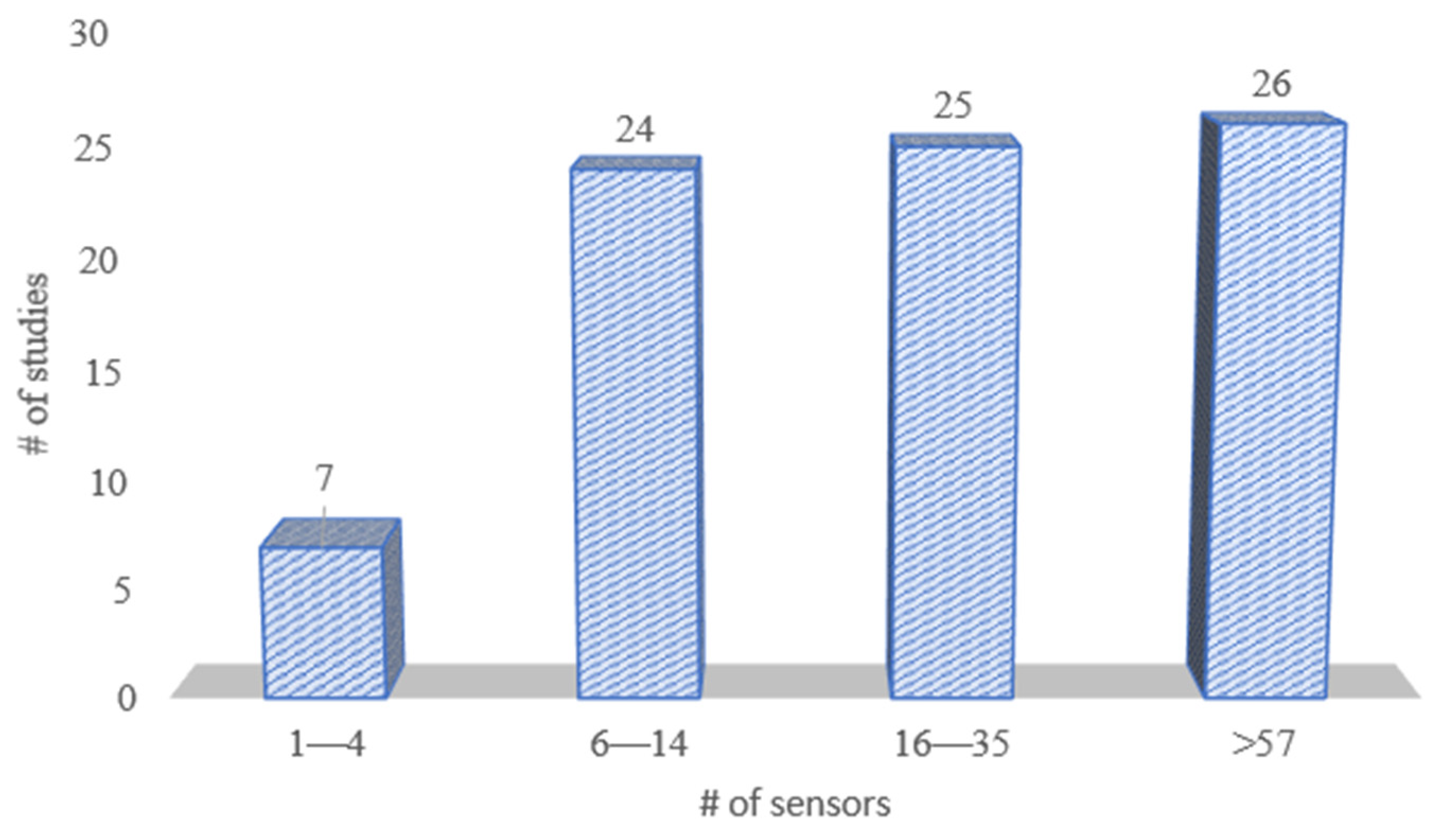

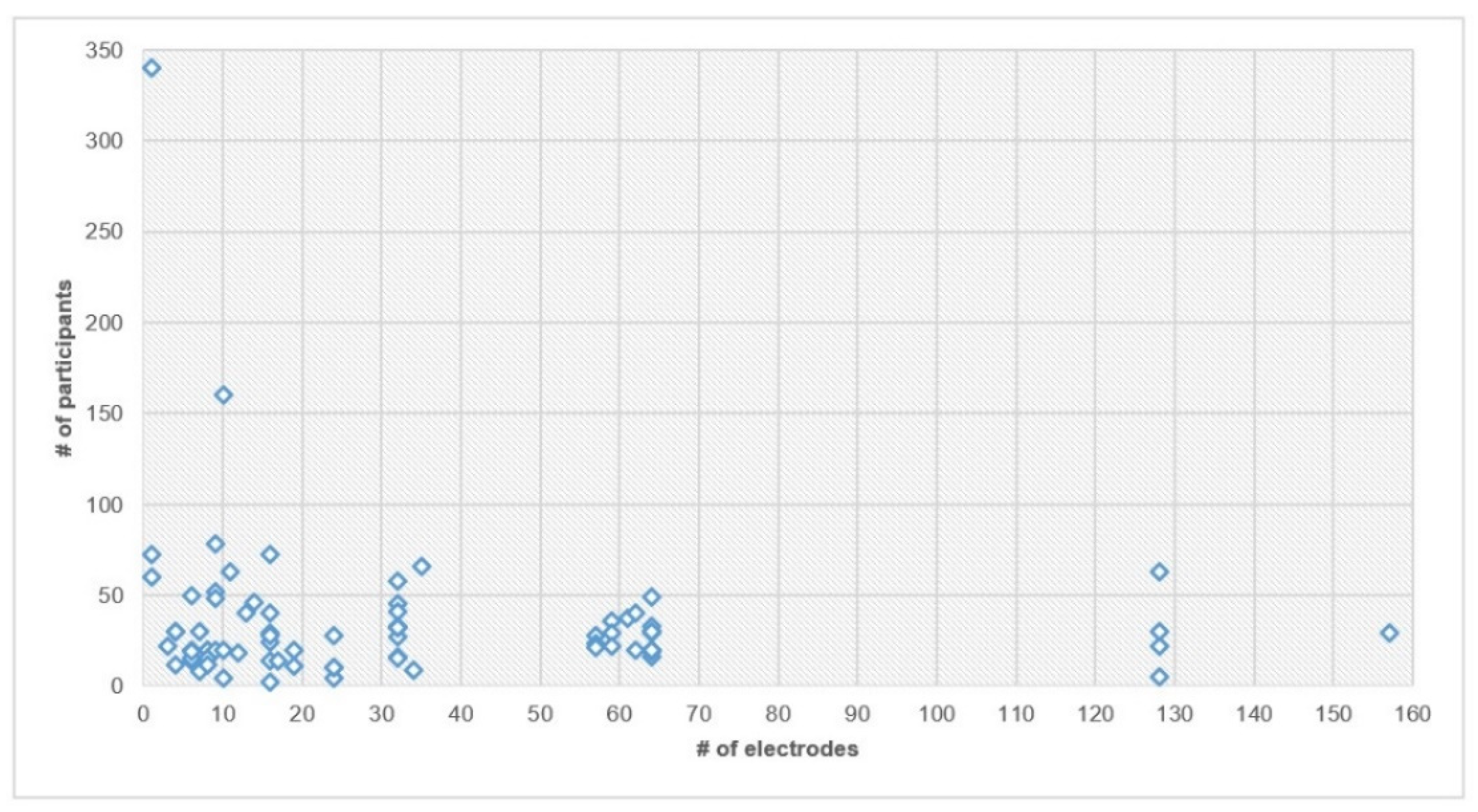

3.5. Number of Electrodes Used

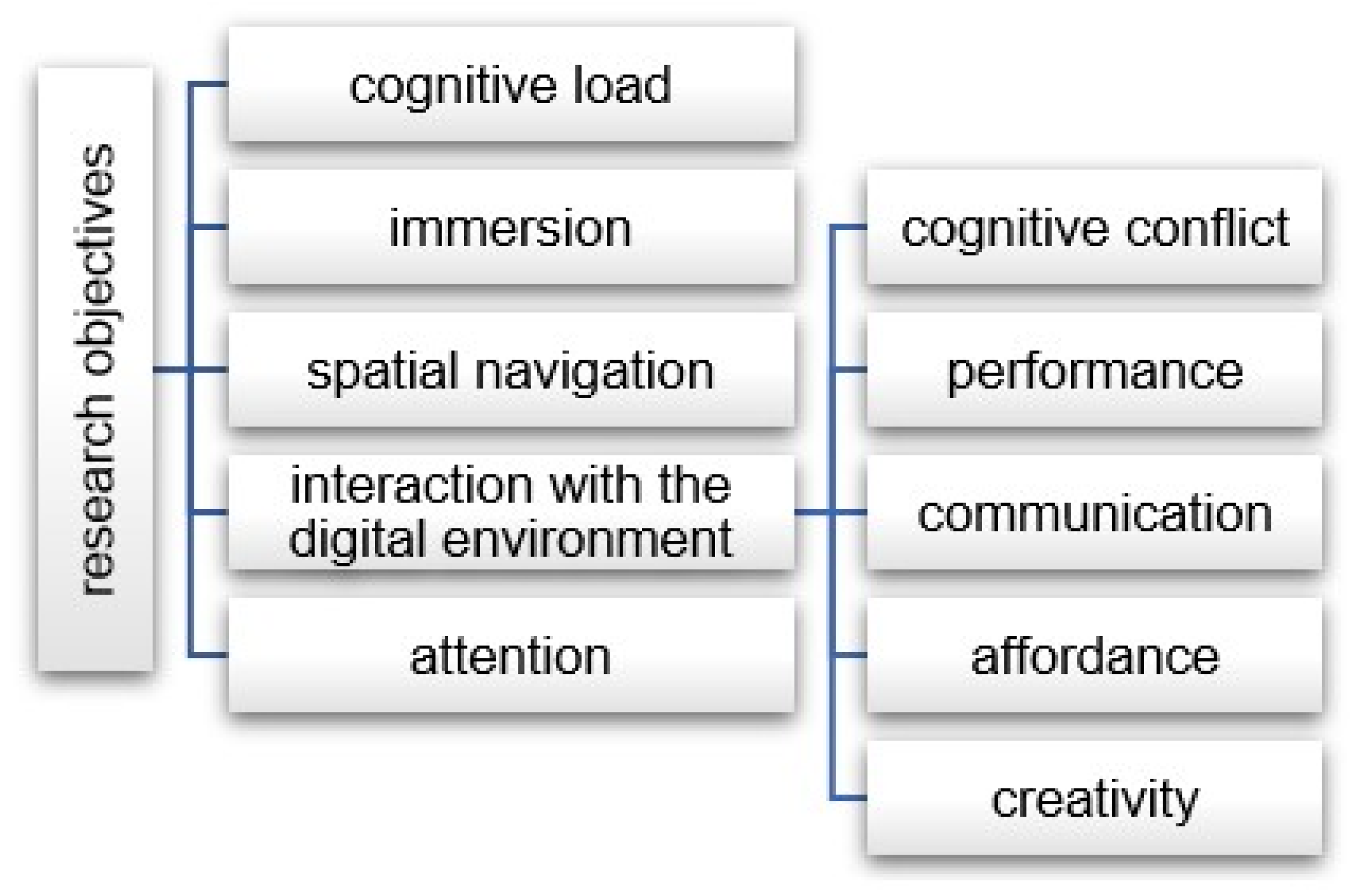

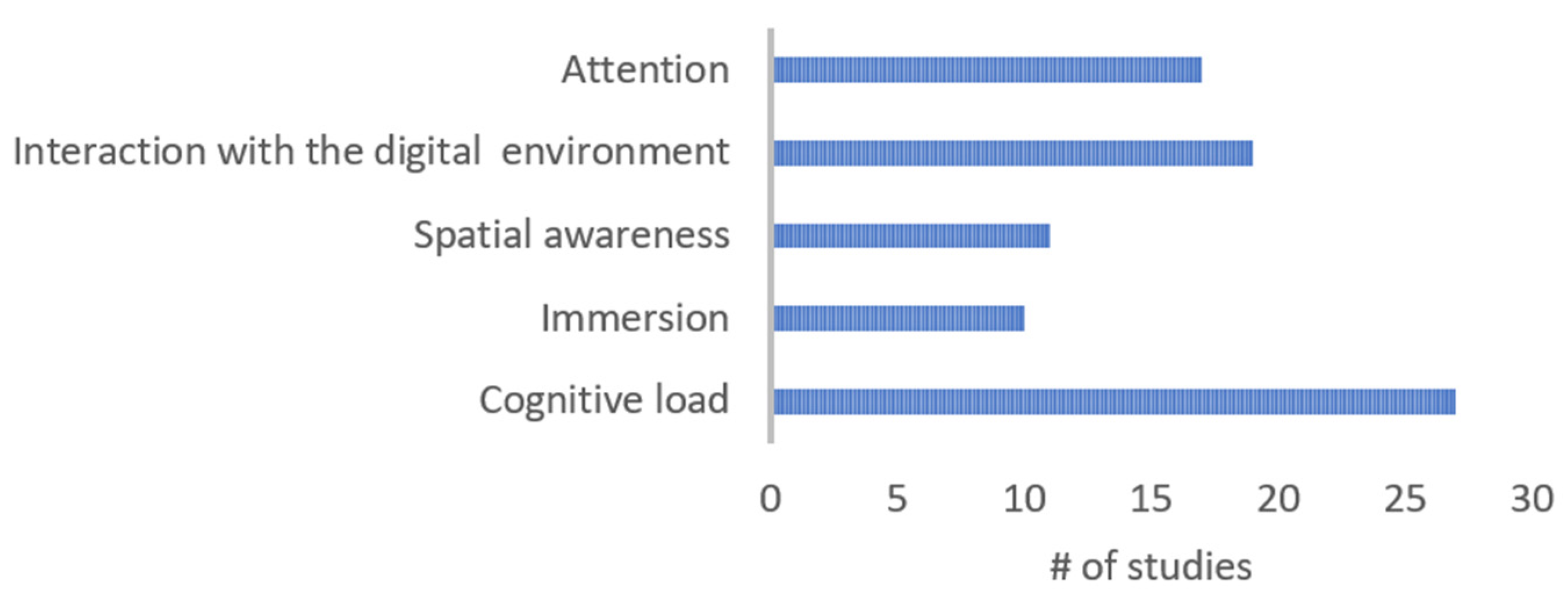

3.6. Objective Area

4. Results

4.1. Paper Layout

4.2. Cognitive Load

4.2.1. Objectives and Outcomes

4.2.2. Data Preprocessing and Artifact Removal

4.2.3. Signal Analysis

4.2.4. Statistical Analysis

4.2.5. Classification Methods

4.3. Immersion

4.3.1. Objectives and Outcomes

4.3.2. Data Pre-Processing and Artifact Removal

4.3.3. Signal Analysis

4.3.4. Statistical Analysis

4.4. Spatial Awareness

4.4.1. Objectives and Outcomes

4.4.2. Data Preprocessing and Artifact Removal

4.4.3. Signal Analysis

4.4.4. Statistical Analysis

4.4.5. Classification Methods

4.5. Interaction with the Digital Environment

4.5.1. Objectives and Outcomes

4.5.2. Data Preprocessing and Artifact Removal

4.5.3. Signal Analysis

4.5.4. Statistical Analysis

4.5.5. Classification Methods

4.6. Attention

4.6.1. Objectives and Outcomes

4.6.2. Data Preprocessing and Artifact Removal

4.6.3. Signal Analysis

4.6.4. Statistical Analysis

4.6.5. Classification Methods

5. Discussion

Comparative Study

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMICA | Adaptive Mixture ICA |
| ANOVA | Analysis of Variance |
| AR | Augmented Reality |
| ASR | Artifact Subspace Reconstruction |
| BC | Bonferroni Correction |
| BCI | Brain Computer Interface |
| CAR | Common Average Referencing |
| CNN | Convolutional Neural Network |
| CSP | Common Spatial Pattern |
| DFT | Discrete Fourier Transform |
| ECG | Electrocardiogram |
| EDA | Electrodermal Activity |
| EEG | Electroencephalogram |
| EEMD | Ensemble Empirical Mode Decomposition |
| EKG | Electrocardiogram |
| eLORETA | exact Low-Resolution Electromagnetic Tomography |
| EMG | Electromyogram |
| EOG | Electrooculogram |
| ERSP | Event-Related Spectral Perturbation |
| ERP | Event-Related Potential |
| FBCSP | Filter Bank Common Spatial Pattern |
| FFT | Fast Fourier Transform |
| ffDTF | full frequency Directed Transfer Function |
| fMRI | functional Magnetic Resonance Imaging |
| GA | Grand Average |
| GE | Global Efficiency |
| GSR | Galvanic Skin Response |
| HMD | Head Mounted Display |
| HR | Heart Rate |
| HSD | Honest Significant Difference |
| HT | Hilbert Transform |
| ICA | Independent Component Analysis |
| IEC | Inter-trial Coherence |
| IRASA | Irregular-Resampling Auto-Spectral Analysis |
| IS | Independent Samples |
| ITC | Inter-Trial Coherence |
| KWT | Kruskal–Wallis test |
| LDA | Linear Discriminant Analysis |
| LDFA | Linear Discriminant Function Analysis |
| LME | Linear Mixed Effects |
| LPP | Late Positive Potential |
| LSTM | Long Short-Term Memory |
| MAD | Mean Absolute Distance |
| MANOVA | Multivariate Analysis Of Variance |
| MANCOVA | Multivariate Analysis of Covariance |
| MARA | Multiple Artifacts Rejection Algorithm |
| MD | Mahalanobis Distance |
| MI | Modulation Index |
| MFN | Medial Frontal Negativity |
| MMANOVA | Multilevel Multivariate ANalysis Of VAriance |
| MR | Mixed Reality |
| MRCP | Movement-Related Cortical Potentials |
| PCA | Principal Component Analysis |
| PC | Pearson Correlation |
| PEN | Prediction Error Negativity |
| PLV | Phase Locking Value |
| pMFLR | penalized Multiple Functional Logistic Regression |
| REML | REstricted Maximum Likelihood |
| RF | Random Forest |
| rmANOVA | repeated measures ANOVA |
| ROC | Receiver Operating Characteristics |
| ROI | Region of Interest |
| sBEM | symmetric Boundary Element Method |
| SD | Standard Deviation |
| SNR | Signal-to-Noise Ratio |
| SVM | Support Vector Machine |
| TFR | Time-Frequency Analysis |
| VI | Visual Inspection |
| VE | Virtual Environment |
| VEP | Visual Evoked Potentials |
| VMA | Variance of the Maximal Activity |
| VR | Virtual Reality |
| WSRT | Wilcoxon Signed-Rank Test |
| WT | Wavelet Transformation |

References

- Kiely, K.M. Cognitive Function. In Encyclopedia of Quality of Life and Well-Being Research; Springer: Dordrecht, The Netherlands, 2014; pp. 974–978. [Google Scholar] [CrossRef]

- Virtual Reality|Definition, Development, Technology, Examples, & Facts|Britannica. Available online: https://www.britannica.com/technology/virtual-reality (accessed on 19 January 2023).

- Augmented Reality|Definition, Examples, & Facts|Britannica. Available online: https://www.britannica.com/technology/augmented-reality (accessed on 24 November 2022).

- Shen, S.; Chen, H.-T.; Raffe, W.; Leong, T.W. Effects of Level of Immersion on Virtual Training Transfer of Bimanual Assembly Tasks. Front. Virtual Real. 2021, 2, 597487. [Google Scholar] [CrossRef]

- Makransky, G.; Terkildsen, T.S.; Mayer, R.E. Adding Immersive Virtual Reality to a Science Lab Simulation Causes More Presence but Less Learning. Learn. Instr. 2019, 60, 225–236. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. J. Clin. Epidemiol. 2009, 62, 1006–1012. [Google Scholar] [CrossRef]

- Gramouseni, F.; Tzimourta, K.; Angelidis, P.; Giannakeas, N.; Tsipouras, M.G. Cognitive Assessment Based on Electroencephalography Analysis in Virtual and Augmented Reality Environments, Using Head Mounted Displays: A Systematic Review. 2023. Available online: https://osf.io/kfx5p (accessed on 2 March 2023).

- Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210. [Google Scholar] [CrossRef] [PubMed]

- Gerry, L.; Ens, B.; Drogemuller, A.; Thomas, B.; Billinghurst, M. Levity: A Virtual Reality System That Responds to Cognitive Load. In Proceedings of the Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 21–26 April 2018; Empathic Computing Laboratory, University of South Australia: Adelaide, Australia, 2018; Volume 2018. [Google Scholar]

- Dey, A.; Chatburn, A.; Billinghurst, M. Exploration of an EEG-Based Cognitively Adaptive Training System in Virtual Reality. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 220–226. [Google Scholar]

- Tremmel, C.; Krusienski, D.J. EEG Spectral Conditioning for Cognitive-State Classification in Interactive Virtual Reality. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; pp. 2806–2811. [Google Scholar]

- Tremmel, C.; Herff, C.; Sato, T.; Rechowicz, K.; Yamani, Y.; Krusienski, D.J. Estimating Cognitive Workload in an Interactive Virtual Reality Environment Using EEG. Front. Hum. Neurosci. 2019, 13, 401. [Google Scholar] [CrossRef]

- Škola, F.; Rizvić, S.; Cozza, M.; Barbieri, L.; Bruno, F.; Skarlatos, D.; Liarokapis, F. Virtual Reality with 360-Video Storytelling in Cultural Heritage: Study of Presence, Engagement, and Immersion. Sensors 2020, 20, 5851. [Google Scholar] [CrossRef]

- Haruna, M.; Ogino, M.; Koike-Akino, T. Proposal and Evaluation of Visual Haptics for Manipulation of Remote Machine System. Front. Robot. AI 2020, 7, 529040. [Google Scholar] [CrossRef] [PubMed]

- Baceviciute, S.; Mottelson, A.; Terkildsen, T.; Makransky, G. Investigating Representation of Text and Audio in Educational VR Using Learning Outcomes and EEG. In Proceedings of the Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; University of Copenhagen: Copenhagen, Denmark, 2020. [Google Scholar]

- Baceviciute, S.; Terkildsen, T.; Makransky, G. Remediating Learning from Non-Immersive to Immersive Media: Using EEG to Investigate the Effects of Environmental Embeddedness on Reading in Virtual Reality. Comput. Educ. 2021, 164, 104122. [Google Scholar] [CrossRef]

- Tian, F.; Zhang, Y.; Li, Y. From 2D to VR Film: A Research on the Load of Different Cutting Rates Based on EEG Data Processing. Information 2021, 12, 130. [Google Scholar] [CrossRef]

- Tian, F.; Wang, H.; Cheng, W.; Zhang, W.; Li, Y. A High-Density EEG Study Investigating VR Film Editing and Cognitive Event Segmentation Theory. Sensors 2021, 21, 7176. [Google Scholar] [CrossRef]

- Aksoy, M.; Ufodiama, C.E.; Bateson, A.D.; Martin, S.; Asghar, A.U.R. A Comparative Experimental Study of Visual Brain Event-Related Potentials to a Working Memory Task: Virtual Reality Head-Mounted Display versus a Desktop Computer Screen. Exp. Brain Res. 2021, 239, 3007–3022. [Google Scholar] [CrossRef]

- Baceviciute, S.; Lucas, G.; Terkildsen, T.; Makransky, G. Investigating the Redundancy Principle in Immersive Virtual Reality Environments: An Eye-Tracking and EEG Study. J. Comput. Assist. Learn. 2022, 38, 120–136. [Google Scholar] [CrossRef]

- Baka, E.; Stavroulia, K.E.; Magnenat-Thalmann, N.; Lanitis, A. An EEG-Based Evaluation for Comparing the Sense of Presence between Virtual and Physical Environments. In Proceedings of the Computer Graphics International 2018, Bintan Island, Indonesia, 11–14 June 2018; University of Geneva: Carouge, Switzerland, 2018; pp. 107–116. [Google Scholar]

- Raz, G.; Gurevitch, G.; Vaknin, T.; Aazamy, A.; Gefen, I.; Grunstein, S.; Azouri, G.; Goldway, N. Electroencephalographic Evidence for the Involvement of Mirror-Neuron and Error-Monitoring Related Processes in Virtual Body Ownership. NeuroImage 2020, 207, 116351. [Google Scholar] [CrossRef]

- Wang, Y.-Y.; Weng, T.-H.; Tsai, I.-F.; Kao, J.-Y.; Chang, Y.-S. Effects of Virtual Reality on Creativity Performance and Perceived Immersion: A Study of Brain Waves. Br. J. Educ. Technol. 2022, 54, 581–602. [Google Scholar] [CrossRef]

- Yang, C.-S.; Liu, J.; Singh, A.K.; Huang, K.-C.; Lin, C.-T. Brain Dynamics of Spatial Reference Frame Proclivity in Active Navigation. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1701–1710. [Google Scholar] [CrossRef] [PubMed]

- Liang, M.; Zheng, J.; Isham, E.; Ekstrom, A. Common and Distinct Roles of Frontal Midline Theta and Occipital Alpha Oscillations in Coding Temporal Intervals and Spatial Distances. J. Cogn. Neurosci. 2021, 33, 2311–2327. [Google Scholar] [CrossRef] [PubMed]

- Hubbard, R.; Sipolins, A.; Zhou, L. Enhancing Learning through Virtual Reality and Neurofeedback: A First Step. In Proceedings of the ACM International Conference Proceeding Series; University of Illinois: Urbana, IL, USA, 2017; pp. 398–403. [Google Scholar]

- Singh, A.K.; Chen, H.; Cheng, Y.; King, J.; Ko, L.; Gramann, K.; Lin, C. Visual Appearance Modulates Prediction Error in Virtual Reality. IEEE Access 2018, 6, 24617–24624. [Google Scholar] [CrossRef]

- Foerster, F.R.; Borghi, A.M.; Goslin, J. Labels Strengthen Motor Learning of New Tools. Cortex 2020, 129, 1–10. [Google Scholar] [CrossRef]

- Singh, A.K.; Tao, X. BCINet: An Optimized Convolutional Neural Network for EEG-Based Brain-Computer Interface Applications. In Proceedings of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, ACT, Australia, 1–4 December 2020; pp. 582–587. [Google Scholar]

- Singh, A.K.; Chen, H.; Gramann, K.; Lin, C. Intraindividual Completion Time Modulates the Prediction Error Negativity in a Virtual 3-D Object Selection Task. IEEE Trans. Cogn. Dev. Syst. 2020, 12, 354–360. [Google Scholar] [CrossRef]

- Singh, A.K.; Gramann, K.; Chen, H.-T.; Lin, C.-T. The Impact of Hand Movement Velocity on Cognitive Conflict Processing in a 3D Object Selection Task in Virtual Reality. NeuroImage 2021, 226, 117578. [Google Scholar] [CrossRef]

- Foerster, F.R.; Goslin, J. Tool Use and Function Knowledge Shape Visual Object Processing. Biol. Psychol. 2021, 164, 108143. [Google Scholar] [CrossRef]

- Rupp, G.; Berka, C.; Meghdadi, A.; McConnell, M.C.; Storm, M.; Ramsøy, T.Z.; Verma, A. EEG Acquisition During the VR Administration of Resting State, Attention, and Image Recognition Tasks: A Feasibility Study. Commun. Comput. Inf. Sci. 2019, 1033, 250–258. [Google Scholar]

- D’Errico, F.; Leone, G.; Schmid, M.; D’Anna, C. Prosocial Virtual Reality, Empathy, and EEG Measures: A Pilot Study Aimed at Monitoring Emotional Processes in Intergroup Helping Behaviors. Appl. Sci. 2020, 10, 1196. [Google Scholar] [CrossRef]

- Li, G.; Zhou, S.; Kong, Z.; Guo, M. Closed-Loop Attention Restoration Theory for Virtual Reality-Based Attentional Engagement Enhancement. Sensors 2020, 20, 2208. [Google Scholar] [CrossRef] [PubMed]

- Wan, B.; Wang, Q.; Su, K.; Dong, C.; Song, W.; Pang, M. Measuring the Impacts of Virtual Reality Games on Cognitive Ability Using EEG Signals and Game Performance Data. IEEE Access 2021, 9, 18326–18344. [Google Scholar] [CrossRef]

- Llinares, C.; Higuera-Trujillo, J.L.; Serra, J. Cold and Warm Coloured Classrooms. Effects on Students’ Attention and Memory Measured through Psychological and Neurophysiological Responses. Build. Environ. 2021, 196, 107726. [Google Scholar] [CrossRef]

- Tian, F.; Wang, H. Comparison of Repeated Viewing in Traditional 2D and VR Movies: A Study on Brain Waves. In Proceedings of the 2021 6th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 9–11 April 2021; pp. 904–907. [Google Scholar]

- Qadir, Z.; Chowdhury, E.; Ghosh, L.; Konar, A. Quantitative Analysis of Cognitive Load Test While Driving in a VR vs. Non-VR Environment. In Pattern Recognition and Machine Intelligence. PReMI 2019; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); 11942 LNCS; Springer: Cham, Switzerland, 2019; pp. 481–489. [Google Scholar]

- Lee, J.-T.; Rajapakse, R.P.C.J.; Miyata, K. EEG-Based Evaluation on Intuitive Gesture Interaction in Virtual Environment. In Proceedings of the 2022 International Conference on Cyberworlds (CW), Kanazawa, Japan, 27–29 September 2022; pp. 213–217. [Google Scholar]

- Tehrani, B.M.; Wang, J.; Truax, D. Assessment of Mental Fatigue Using Electroencephalography (EEG) and Virtual Reality (VR) for Construction Fall Hazard Prevention. Eng. Constr. Archit. Manag. 2022, 29, 3593–3616. [Google Scholar] [CrossRef]

- Zhu, B.; Cruz-Garza, J.G.; Yang, Q.; Shoaran, M.; Kalantari, S. Identifying Uncertainty States during Wayfinding in Indoor Environments: An EEG Classification Study. Adv. Eng. Inform. 2022, 54, 101718. [Google Scholar] [CrossRef]

- Kalantari, S.; Tripathi, V.; Kan, J.; Rounds, J.D.; Mostafavi, A.; Snell, R.; Cruz-Garza, J.G. Evaluating the Impacts of Color, Graphics, and Architectural Features on Wayfinding in Healthcare Settings Using EEG Data and Virtual Response Testing. J. Environ. Psychol. 2022, 79, 101744. [Google Scholar] [CrossRef]

- Immink, M.A.; Cross, Z.R.; Chatburn, A.; Baumeister, J.; Schlesewsky, M.; Bornkessel-Schlesewsky, I. Resting-State Aperiodic Neural Dynamics Predict Individual Differences in Visuomotor Performance and Learning. Hum. Mov. Sci. 2021, 78, 102829. [Google Scholar] [CrossRef]

- Yang, X.; Lin, L.; Cheng, P.-Y.; Yang, X.; Ren, Y. Which EEG Feedback Works Better for Creativity Performance in Immersive Virtual Reality: The Reminder or Encouraging Feedback? Comput. Hum. Behav. 2019, 99, 345–351. [Google Scholar] [CrossRef]

- Cao, R.; Zou-Williams, L.; Cunningham, A.; Walsh, J.; Kohler, M.; Thomas, B.H. Comparing the Neuro-Physiological Effects of Cinematic Virtual Reality with 2D Monitors. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; pp. 729–738. [Google Scholar]

- Redlinger, E.; Glas, B.; Rong, Y. Impact of Screen Size on Cognitive Training Task Performance: An HMD Study. Int. J. Psychophysiol. 2021, 166, 166–173. [Google Scholar] [CrossRef] [PubMed]

- Redlinger, E.; Shao, C. Comparing Brain Activity in Virtual and Non-Virtual Environments: A VR & EEG Study. Meas. Sens. 2021, 18, 100062. [Google Scholar]

- Redlinger, E.; Glas, B.; Rong, Y. Enhanced Cognitive Training Using Virtual Reality: Examining a Memory Task Modified for Use in Virtual Environments. In Proceedings of the 2021 5th International Conference on Artificial Intelligence and Virtual Reality (AIVR), Kumamoto, Japan, 23–25 July 2021; pp. 1–8. [Google Scholar]

- Nierula, B.; Spanlang, B.; Martini, M.; Borrell, M.; Nikulin, V.V.; Sanchez-Vives, M.V. Agency and Responsibility over Virtual Movements Controlled through Different Paradigms of Brain-Computer Interface. J. Physiol. 2021, 599, 2419–2434. [Google Scholar] [CrossRef] [PubMed]

- Kakkos, I.; Dimitrakopoulos, G.N.; Gao, L.; Zhang, Y.; Qi, P.; Matsopoulos, G.K.; Thakor, N.; Bezerianos, A.; Sun, Y. Mental Workload Drives Different Reorganizations of Functional Cortical Connectivity Between 2D and 3D Simulated Flight Experiments. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1704–1713. [Google Scholar] [CrossRef] [PubMed]

- de Tommaso, M.; Ricci, K.; Delussi, M.; Montemurno, A.; Vecchio, E.; Brunetti, A.; Bevilacqua, V. Testing a Novel Method for Improving Wayfinding by Means of a P3b Virtual Reality Visual Paradigm in Normal Aging. SpringerPlus 2016, 5, 1297. [Google Scholar] [CrossRef]

- Sharma, G.; Kaushal, Y.; Chandra, S.; Singh, V.; Mittal, A.P.; Dutt, V. Influence of Landmarks on Wayfinding and Brain Connectivity in Immersive Virtual Reality Environment. Front. Psychol. 2017, 8, 1220. [Google Scholar] [CrossRef]

- Gehrke, L.; Gramann, K. Single-Trial Regression of Spatial Exploration Behavior Indicates Posterior EEG Alpha Modulation to Reflect Egocentric Coding. Eur. J. Neurosci. 2021, 54, 8318–8335. [Google Scholar] [CrossRef]

- Spapé, M.; Harjunen, V.; Ahmed, I.; Jacucci, G.; Ravaja, N. The Semiotics of the Message and the Messenger: How Nonverbal Communication Affects Fairness Perception. Cogn. Affect. Behav. Neurosci. 2019, 19, 1259–1272. [Google Scholar] [CrossRef]

- Berger, A.M.; Davelaar, E.J. Frontal Alpha Oscillations and Attentional Control: A Virtual Reality Neurofeedback Study. Neuroscience 2018, 378, 189–197. [Google Scholar] [CrossRef]

- Park, J.H.; Jeon, H.J.; Lim, E.C.; Koo, J.W.; Lee, H.J.; Kim, H.J.; Lee, J.S.; Song, C.G.; Hong, S.K. Feasibility of Eye Tracking Assisted Vestibular Rehabilitation Strategy Using Immersive Virtual Reality. Clin. Exp. Otorhinolaryngol. 2019, 12, 376–384. [Google Scholar] [CrossRef] [PubMed]

- Van Goethem, S.; Adema, K.; van Bergen, B.; Viaene, E.; Wenborn, E.; Verwulgen, S. A Test Setting to Compare Spatial Awareness on Paper and in Virtual Reality Using EEG Signals. Adv. Intell. Syst. Comput. 2020, 953, 199–208. [Google Scholar]

- Bogacz, M.; Hess, S.; Choudhury, C.F.; Calastri, C.; Mushtaq, F.; Awais, M.; Nazemi, M.; van Eggermond, M.A.B.; Erath, A. Cycling in Virtual Reality: Modelling Behaviour in an Immersive Environment. Transp. Lett. 2021, 13, 608–622. [Google Scholar] [CrossRef]

- Harjunen, V.J.; Sjö, P.; Ahmed, I.; Saarinen, A.; Farmer, H.; Salminen, M.; Järvelä, S.; Ruonala, A.; Jacucci, G.; Ravaja, N. Increasing Self-Other Similarity Modulates Ethnic Bias in Sensorimotor Resonance to Others’ Pain. Soc. Cogn. Affect. Neurosci. 2022, 17, 673–682. [Google Scholar] [CrossRef] [PubMed]

- Ellena, G.; Starita, F.; Haggard, P.; Romei, V.; Làdavas, E. Fearful Faces Modulate Spatial Processing in Peripersonal Space: An ERP Study. Neuropsychologia 2021, 156, 107827. [Google Scholar] [CrossRef]

- Cruz-Garza, J.G.; Darfler, M.; Rounds, J.D.; Gao, E.; Kalantari, S. EEG-Based Investigation of the Impact of Room Size and Window Placement on Cognitive Performance. J. Build. Eng. 2022, 53, 104540. [Google Scholar] [CrossRef]

- Darfler, M.; Cruz-Garza, J.G.; Kalantari, S. An EEG-Based Investigation of the Effect of Perceived Observation on Visual Memory in Virtual Environments. Brain Sci. 2022, 12, 269. [Google Scholar] [CrossRef]

- Gregory, S.E.; Wang, H.; Kessler, K. EEG Alpha and Theta Signatures of Socially and Non-Socially Cued Working Memory in Virtual Reality. Soc. Cogn. Affect. Neurosci. 2022, 17, 531–540. [Google Scholar] [CrossRef]

- Kweon, S.H.; Kweon, H.J.; Kim, S.-J.; Li, X.; Liu, X.; Kweon, H.L. A Brain Wave Research on VR (Virtual Reality) Usage: Comparison Between VR and 2D Video in EEG Measurement. Adv. Intell. Syst. Comput. 2018, 592, 194–203. [Google Scholar]

- Benlamine, M.S.; Dufresne, A.; Beauchamp, M.H.; Frasson, C. BARGAIN: Behavioral Affective Rule-Based Games Adaptation Interface–towards Emotionally Intelligent Games: Application on a Virtual Reality Environment for Socio-Moral Development. User Model. User-Adapt. Interact. 2021, 31, 287–321. [Google Scholar] [CrossRef]

- Choi, J.W.; Huh, S.; Jo, S. Improving Performance in Motor Imagery BCI-Based Control Applications via Virtually Embodied Feedback. Comput. Biol. Med. 2020, 127, 104079. [Google Scholar] [CrossRef]

- Ehinger, B.V.; Fischer, P.; Gert, A.L.; Kaufhold, L.; Weber, F.; Pipa, G.; König, P. Kinesthetic and Vestibular Information Modulate Alpha Activity during Spatial Navigation: A Mobile EEG Study. Front. Hum. Neurosci. 2014, 8, 71. [Google Scholar] [CrossRef] [PubMed]

- Tromp, J.; Peeters, D.; Meyer, A.S.; Hagoort, P. The Combined Use of Virtual Reality and EEG to Study Language Processing in Naturalistic Environments. Behav. Res. Methods 2018, 50, 862–869. [Google Scholar] [CrossRef] [PubMed]

- Heyselaar, E.; Mazaheri, A.; Hagoort, P.; Segaert, K. Changes in Alpha Activity Reveal That Social Opinion Modulates Attention Allocation during Face Processing. NeuroImage 2018, 174, 432–440. [Google Scholar] [CrossRef] [PubMed]

- Erkan, İ. Examining Wayfinding Behaviours in Architectural Spaces Using Brain Imaging with Electroencephalography (EEG). Archit. Sci. Rev. 2018, 61, 410–428. [Google Scholar] [CrossRef]

- Gumilar, I.; Sareen, E.; Bell, R.; Stone, A.; Hayati, A.; Mao, J.; Barde, A.; Gupta, A.; Dey, A.; Lee, G.; et al. A Comparative Study on Inter-Brain Synchrony in Real and Virtual Environments Using Hyperscanning. Comput. Graph. 2021, 94, 62–75. [Google Scholar] [CrossRef]

- Zhang, T.; Guo, M.; Wang, L.; Li, M. Brain Fatigue Analysis from Virtual Reality Visual Stimulation Based on Granger Causality. Displays 2022, 73, 102219. [Google Scholar] [CrossRef]

- Burns, C.G.; Fairclough, S.H. Use of Auditory Event-Related Potentials to Measure Immersion during a Computer Game. Int. J. Hum.-Comput. Stud. 2015, 73, 107–114. [Google Scholar] [CrossRef]

- Li, H.; Du, X.; Ma, H.; Wang, Z.; Li, Y.; Wu, J. The Effect of Virtual-Reality-Based Restorative Environments on Creativity. Int. J. Environ. Res. Public Health 2022, 19, 12083. [Google Scholar] [CrossRef]

- Nenna, F.; Do, C.T.; Protzak, J.; Gramann, K. Alteration of Brain Dynamics during Dual-Task Overground Walking. Eur. J. Neurosci. 2020, 54, 8158–8174. [Google Scholar] [CrossRef]

- Sun, R.; Wu, Y.J.; Cai, Q. The Effect of a Virtual Reality Learning Environment on Learners’ Spatial Ability. Virtual Real. 2019, 23, 385–398. [Google Scholar] [CrossRef]

- Yi, Y.; Billor, N.; Liang, M.; Cao, X.; Ekstrom, A.; Zheng, J. Classification of EEG Signals: An Interpretable Approach Using Functional Data Analysis. J. Neurosci. Methods 2022, 376, 109609. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Jin, Y.; Lu, S.; Wu, W.; Wang, P. Building Environment Information and Human Perceptual Feedback Collected through a Combined Virtual Reality (VR) and Electroencephalogram (EEG) Method. Energy Build. 2020, 224, 110259. [Google Scholar] [CrossRef]

- Yu, M.; Li, Y.; Tian, F. Responses of Functional Brain Networks While Watching 2D and 3D Videos: An EEG Study. Biomed. Signal Process. Control 2021, 68, 102613. [Google Scholar] [CrossRef]

- Djebbara, Z.; Fich, L.B.; Petrini, L.; Gramann, K. Sensorimotor Brain Dynamics Reflect Architectural Affordances. Proc. Natl. Acad. Sci. USA 2019, 116, 14769–14778. [Google Scholar] [CrossRef]

- Vortmann, L.-M.; Kroll, F.; Putze, F. EEG-Based Classification of Internally- and Externally-Directed Attention in an Augmented Reality Paradigm. Front. Hum. Neurosci. 2019, 13, 348. [Google Scholar] [CrossRef]

- Giannopulu, I.; Brotto, G.; Lee, T.J.; Frangos, A.; To, D. Synchronised Neural Signature of Creative Mental Imagery in Reality and Augmented Reality. Heliyon 2022, 8, e09017. [Google Scholar] [CrossRef]

- Wang, W.; Hong, X.; Qu, J.; Xu, N.; Chen, T. Optimization and Improvement of Display Interaction System Based on Complex Command and Control Tasks. Aerospace 2022, 9, 367. [Google Scholar] [CrossRef]

- Ikiz, Y.D.; Atici-Ulusu, H.; Taskapilioglu, O.; Gunduz, T. Usage of Augmented Reality Glasses in Automotive Industry: Age-Related Effects on Cognitive Load. Int. J. Recent Technol. Eng. 2019, 8, 1–6. [Google Scholar] [CrossRef]

- Atici-Ulusu, H.; Ikiz, Y.D.; Taskapilioglu, O.; Gunduz, T. Effects of Augmented Reality Glasses on the Cognitive Load of Assembly Operators in the Automotive Industry. Int. J. Comput. Integr. Manuf. 2021, 34, 487–499. [Google Scholar] [CrossRef]

- Chen, X.; Cao, L.; Haendel, B.F. Differential Effects of Walking across Visual Cortical Processing Stages. Cortex 2022, 149, 16–28. [Google Scholar] [CrossRef] [PubMed]

- Škola, F.; Liarokapis, F. Examining the Effect of Body Ownership in Immersive Virtual and Augmented Reality Environments. Vis. Comput. 2016, 32, 761–770. [Google Scholar] [CrossRef]

- Seeling, P. Augmented Reality Device Operator Cognitive Strain Determination and Prediction. AIMS Electron. Electr. Eng. 2017, 1, 100–110. [Google Scholar] [CrossRef]

- Kirschner, P.A. Cognitive Load Theory: Implications of Cognitive Load Theory on the Design of Learning. Learn. Instr. 2002, 12, 1–10. [Google Scholar] [CrossRef]

- Souza, R.H.C.E.; Naves, E.L.M. Attention Detection in Virtual Environments Using EEG Signals: A Scoping Review. Front. Physiol. 2021, 12, 727840. [Google Scholar] [CrossRef]

- Shynu, R.V.; Kumar, K.G.S.; Sambath, R.D. Factors Influencing Environmental Perception: A Systematic Review. J. Phys. Conf. Ser. 2021, 1950, 012040. [Google Scholar] [CrossRef]

- Georgiev, D.D.; Georgieva, I.; Gong, Z.; Nanjappan, V.; Georgiev, G.V. Virtual Reality for Neurorehabilitation and Cognitive Enhancement. Brain Sci. 2021, 11, 221. [Google Scholar] [CrossRef] [PubMed]

- Bruni, L.E.; Dini, H.; Simonetti, A. Narrative Cognition in Mixed Reality Systems: Towards an Empirical Framework. In Virtual, Augmented and Mixed Reality; Chen, J.Y.C., Fragomeni, G., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 3–17. [Google Scholar]

- Mostafavi, A. Architecture, Biometrics, and Virtual Environments Triangulation: A Research Review. Archit. Sci. Rev. 2022, 65, 504–521. [Google Scholar] [CrossRef]

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. Available online: https://search.ieice.org/bin/summary.php?id=e77-d_12_1321 (accessed on 28 December 2022).

- Sweller, J. Cognitive Load During Problem Solving: Effects on Learning. Cogn. Sci. 1988, 12, 257–285. [Google Scholar] [CrossRef]

| Publication Year | # of Studies |
|---|---|
| 2013 | 0 |
| 2014 | 1 |
| 2015 | 1 |
| 2016 | 2 |
| 2017 | 3 |
| 2018 | 9 |
| 2019 | 14 |
| 2020 | 13 |
| 2021 | 16 |
| 2022 | 23 |
| Total | 82 |

| Participants | # of Studies |
|---|---|
| 1–10 | 8 |
| 11–20 | 23 |
| 21–30 | 25 |
| 31–40 | 9 |
| 41–60 | 9 |
| >60 | 8 |
| Total | 82 |

| Digital Environment Type | Device | References | # of Studies |
|---|---|---|---|
| VR | Samsung Gear VR | [5] | 74 |
| | HTC Vive | [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38] | |
| | HTC Vive Pro | [39,40,41,42,43,44,45,46] | |
| | HTC Vive Focus | [47,48,49] | |
| | Oculus Rift DK2 | [50,51,52,53,54,55,56,57] | |
| | Oculus Rift | [58,59,60,61,62,63] | |
| | Oculus Rift S | [64] | |
| | Oculus | [65,66] | |
| | Oculus Go | [67] | |
| | nVisor SX60 | [68,69,70] | |
| | 3DVR | [71] | |
| | HTC Vive/Samsung Odyssey | [72] | |
| | MIUI PLAY2 | [73] | |
| | Silicon Micro Display ST1080-10V1 | [74] | |
| | VIVE-P130 | [75] | |
| | ACER WMR | [76] | |
| | n/a | [77,78,79,80,81] | |
| AR | Hololens | [82,83] | 8 |
| | Hololens 2 | [84] | |
| | Sony SmartEyeglass SED-E1 | [85,86] | |
| | DreamWorld AR | [87] | |
| | Vuzix Wrap 1200DXAR | [88] | |
| | n/a | [89] | |
| Total | | | 82 |

| EEG Type | Device | References | # of Studies | Percentage |
|---|---|---|---|---|
| Cap Patches Helmet | ABM X-10 | [5,15,16,20] | 71 | 87% |
| | G-Tec | [9] | | |
| | ASA Lab, ANT | [51,68] | | |
| | Nihon Kohden | [39] | | |
| | LiveAmp | [10,44] | | |
| | g.LADYBIRD | [11,12,50] | | |
| | g.GAMMAsys | [72] | | |
| | g.USBamp | [36] | | |
| | g.tec Nautilus | [82] | | |
| | Brain Products | [77] | | |
| | QuickAmp | [79] | | |
| | actiCHamp | [62,63] | | |
| | BrainAmp | [46] | | |
| | BrainAmp Move System | [54,76] | | |
| | Enobio 3 | [13] | | |
| | Enobio-32 | [88] | | |
| | StarStim 8 | [35] | | |
| | EPOC Flex | [14] | | |
| | Neuracle | [17,18] | | |
| | OpenBCI | [47,48,49] | | |
| | Biosemi Active Two | [21] | | |
| | Biosemi Actiview | [42,43] | | |
| | V-Amp | [22] | | |
| | ANT Neuro | [52,53,64] | | |
| | eegoSports | [81] | | |
| | ActiCAP | [25,28,57,67,70,78] | | |
| | BIOPAC MP160 | [75] | | |
| | Mobita | [83] | | |
| | EasyCap | [32,41,85,86] | | |
| | Scan SynAmps2 Express | [30] | | |
| | Curry 8 SynAmps2 Express | [31] | | |
| | Neuracle | [74,80] | | |
| | mBrainTrain | [87] | | |
| | Nuamps7181 | [84] | | |
| | B-Alert | [33,37] | | |
| | n/a | [19,24,27,29,38,60,65,69,74,80] | | |
| Headband Headset | QUASAR DSI-7 | [58] | 11 | 13% |
| | Looxid Link | [40] | | |
| | EMOTIV EPOC+ | [34,59,66] | | |
| | NeuroSky MindWave | [23,45,71] | | |
| | MUSE | [26] | | |
| | MyndPlay BrainBand XL | [56] | | |
| | n/a | [89] | | |
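
The percentage column in the table above follows directly from the study counts. A minimal Python sketch of that arithmetic is given here; the counts are copied from the table, while the function name `share_percent` is illustrative and rounding to the nearest integer is an assumption about how the shares were reported.

```python
def share_percent(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to the nearest integer."""
    return round(100 * count / total)


# Study counts per EEG type, copied from the table above.
counts = {"Cap Patches Helmet": 71, "Headband Headset": 11}
total = sum(counts.values())  # all studies included in the review

# Percentage share of each EEG type among the included studies.
shares = {name: share_percent(n, total) for name, n in counts.items()}
```

With these counts the two shares come out as 87 and 13, matching the table.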

| # of Sensors | References | # of Studies |
|---|---|---|
| 1–4 | [23,26,45,56,71,79,89] | 7 |
| 6–14 | [5,10,11,12,13,15,16,17,20,22,35,36,37,40,47,48,49,58,59,65,81,84,85,88] | 24 |
| 16–35 | [9,14,19,24,27,30,33,34,39,41,44,46,55,57,60,66,67,72,73,75,77,82,83,86,87] | 25 |
| >57 | [18,21,25,28,29,31,32,38,42,43,50,51,52,53,54,61,62,63,64,68,69,70,74,76,78,80] | 26 |

| Objective | References | # of Studies |
|---|---|---|
| Cognitive load | [5,9,10,11,12,13,14,15,16,17,18,19,20,39,40,41,47,48,49,51,58,76,77,84,85,86,89] | 27 |
| Immersion | [14,21,22,23,50,59,60,65,74,88] | 10 |
| Spatial awareness | [24,25,42,43,52,53,54,61,68,71,78] | 11 |
| Interaction with the digital environment | [23,26,28,29,30,31,32,44,55,62,64,67,69,72,79,80,81,83] | 19 |
| Attention | [33,34,35,36,37,38,45,46,56,57,63,66,70,73,75,82,87] | 17 |

| Authors, Year, Reference | Participants | Stimuli | Frequency Bands/ Range | Data Preprocessing | Artifact Removal | Classification Technique/Statistical Analysis | Main Findings |

|---|---|---|---|---|---|---|---|

| (Seeling, 2017) [89] | 30 | VIEW dataset | θ, α, β | average α/θ, α/β average and variability, α and θ variability, R2, KNN |

| ||

| (Gerry et al., 2018) [9] | 2 | visual search task | α | notch, low pass | central tendency of α, ERD |

| |

| (Ikiz et al., 2019) [85] | 4 | automobile assembly line | β, γ | notch | BrainVision Analyzer 2 software | EEG graph area, One Sample t-test |

|

| (Makransky et al., 2019) [5] | 52 | text PC vs. VR, with/no narration | 1–40 Hz | ABM’s proprietary software | LDFA, stepwise regression, ANOVA |

| |

| (Kakkos et al., 2019) [51] | 29 | flight simulator (2D vs. VR) | δ, θ, α, β, γ | 0.5–40 Hz, notch | ICA | eLORETA, AAL-116, PLI, EG, EL, RFE-CBR, 10-fold cross-validation, LDA, ANOVA |

|

| (Qadir et al., 2019) [39] | 11 | driving simulator (2D vs. VR) | θ, α1, α2, β | CAR, EllipticalBPF, ICA, EEGLAB automatic tool | %ERD, %ERS, CIT2FS, e-LORETA |

| |

| (Dey et al., 2019) [10] | 14 | adaptive target training system | α | 9–13 Hz, notch, >0.3 Hz | threshold of max absolute values or variance, VI | mean of squares, mean of last 4 epochs, TFR, Monte Carlo permutation test |

|

| (Tremmel and Krusienski, 2019) [11] | 15 | n-back task | δ, α, β, γ | 0–58 Hz, Welch’s method | WCF EMG-surrogate Regression | LDA, 5-fold cross-validation |

|

| (Tremmel et al., 2019) [12] | 15 | n-back task | θ, α, β, γ, HF | >5 Hz | HF suppression | Welch’s method, Spearman’s correlation, rLDA, 4-fold cross-validation |

|

| (Sun et al., 2019) [77] | 28 | 2D vs. VR | 0.05–100 Hz | 100 μV threshold | N1, P2 mean, SD, 3-way rmANOVA, Greenhouse–Geisser correction |

| |

| (Van Goethem et al., 2020) [58] | 8 | 2D vs. 3D shapes | QStates software, paired sample t-test |

| |||

| (Nenna et al., 2020) [76] | 22 | Visual discrimination task | θ, α1, α2, β, γ | BeMoBIL, FIR (0.2–90 Hz), <40 Hz | automated rejection, VI, AMICA | PSD, ANOVA, Mauchly’s test, Greenhouse–Geisser correction, BC, P3-SNR |

|

| (Škola et al., 2020) [13] | 15 | VR storytelling | θ, α, β2 | 1.5–100 Hz, notch | ASR, MARA, AMICA | PSD, neural de/synchronization |

|

| (Haruna et al., 2020) [14] | 9 | BCI-Haptic (with/without VR) | θ, α, β | 0.5 s epochs | ffDTF, SCoT, VAR |

| |

| (Baceviciute et al., 2020) [15] | 78 | text (3 formats) | θ, α | 0.5–100 Hz, notch, PSD (DFT) | ICA (MARA) | mean peak frequency, one-way ANOVA, Tukey’s HSD, KWT, BC post hoc Dunn’s tests |

|

| (Baceviciute et al., 2021) [16] | 48 | Text (book vs. VR) | θ, α, β | 0.5–100 Hz, notch | VI, ICA (MARA), automatic channel rejection | mean PSD, independent samples t-test, BC |

|

| (Tian, Zhang, et al., 2021) [17] | 40 | Films (VR vs. 2D) | θ, α, β | FIR, notch, WT | VI, ICA | frequency band energy |

|

| (Tian, Wang, et al., 2021) [18] | 30 | films | θ, α, β | notch, 0.5–90 Hz, 0.1–30 Hz, WT | ICA, 100 μV threshold | EEG energy, SVM |

|

| (Redlinger et al., 2021a) [49] | 20 | N-back memory task | θ, α, β1, β2 | notch, >4 Hz, FFT | EOG, VI | power index, rmANOVA, WSRT |

|

| (Redlinger et al., 2021b) [47] | 20 | N-back memory task | θ, α, β1, β2 | notch, >4 Hz, FFT | ICA, EOG | power index, rmANOVA, WSRT |

|

| (Redlinger and Shao, 2021) [48] | 12 | Game (2D vs. VR) | θ, α, β1, β2 | notch, >4 Hz, FFT | VI, EOG | power index, WSRT |

|

| (Aksoy et al., 2021) [19] | 20 | N-back memory task (VR vs. 2D) | 0.5–30 Hz | 100 μV threshold, VI | mean amplitude, peak amplitude, peak latency of N1, P1, P3, rmANOVA |

| |

| (Atici-Ulusu et al., 2021) [86] | 4 | automobile manufacturing factory | β, γ | β, γ wavelength filtering, notch | BrainVision Analyzer 2 software | EEG graph area, SD, One Sample t-test, Mean |

|

| (Lee et al., 2022) [40] | 15 | 3D objects | θ, α | 0.01–120 Hz, notch | ICA | Welch’s method |

|

| (Tehrani et al., 2022) [41] | 10 | VE construction field | θ, α, β | 0.5–60 Hz | ASR, ICA, VI | WPD, SE, Mann–Whitney U test |

|

| (Baceviciute et al., 2022) [20] | 63 | text, auditory, text and auditory | θ, α | 0.5–100 Hz, notch, PSD (DFT) | VI, ICA-MARA | mean peak frequency, SD, ANOVA |

|

| (W. Wang et al., 2022) [84] | 20 | flight simulator 2D vs. MR | P300 amplitude, P300 latency, paired t-test, WPD, SE |

|

| Authors, Year, Reference | Participants | Objective | Stimuli | Frequency Bands/ Range | Data Preprocessing | Artifact Removal | Classification Technique/Statistical Analysis | Main Findings |

|---|---|---|---|---|---|---|---|---|

| (Burns and Fairclough, 2015) [74] | 20 | immersion | auditory oddball task (2D/VR) | 0.1–30 Hz | GA ERP, mean amplitudes |

| ||

| (Škola and Liarokapis, 2016) [88] | 30 | body ownership | virtual hand (Physical, VR, AR) | δ, θ, α, β, γ | 1.5–95 Hz, notch, FFT | ICA (MARA) | PC |

|

| (Baka et al., 2018) [21] | 33 | sense of presence | VE realistic, non-realistic | θ, α, β1, β2 | 0.1–60 Hz, notch, FFT, 10 ROI | VI | Mann–Whitney, KWT |

|

| (Kweon et al., 2018) [65] | 20 | immersion | videos (2D/VR) | α, β | paired t-test, α, β wave difference 2D/VR |

| ||

| (Haruna et al., 2020) [14] | 9 | sense of oneness | visual haptics feedback | θ, α, β | 0.5 s epochs | ffDTF, SCoT, VAR |

| |

| (Raz et al., 2020) [22] | 18 | body ownership | virtual hand | mu rhythm | >0.1 Hz, Morlet WT | ICA, VI | ERSP, ERP, cluster-based permutation, PC, ANOVA, two-tailed signed rank test |

|

| (Nierula et al., 2021) [50] | 29 | body ownership, agency | BCI (no VR/VR) | α | 0.5–40 Hz, notch, CSP, HT | VI, VMA, MD, ICA | sBEM, ERD%, Tikhonov-regularized minimum-norm |

|

| (Bogacz et al., 2021) [59] | 14 | engagement | VE cycling | α | 1–20 Hz, Welch’s method | VI | ROI analysis, α power peak |

|

| (Harjunen et al., 2022) [60] | 58 | embodiment | VR hands, VR agents | β | CSD, FFT | ICA | ERD/ERS, average β ERD, rmANOVA, F-tests, type-III sum of squares, planned pairwise comparisons |

|

| (Y.-Y. Wang et al., 2022) [23] | 72 | immersion | images, game | θ, α, β, γ | average, log values, MANOVA, MANCOVA |

|

| Authors, Year, Reference | Participants | Stimuli | Frequency Bands/ Range | Data Preprocessing | Artifact Removal | Classification Technique/Statistical Analysis | Main Findings |

|---|---|---|---|---|---|---|---|

| (Ehinger et al., 2014) [68] | 5 | triangle completion task | α | 1–120 Hz | VI, AMICA, BEM | ERSP, PCA, k-means, ROI analysis (Monte Carlo) |

|

| (de Tommaso et al., 2016) [52] | 28 | VE home colors | 0.5–80 Hz | 0.5–80 Hz | VI, ASA-ANT software, ICA | GA, P3b amplitude and latency, one-way ANOVA, MANOVA, scalp maps, BC |

|

| (Sharma et al., 2017) [53] | 30 | maze | θ | 4–8 Hz | VI, ICA | %θ change, ERD/ERS, DFT, IS t-tests, ANOVA, ROI analysis |

|

| (Erkan, 2018) [71] | 340 | maze | θ, α, β | EEG-Analyzer Tool, FFT | Gratton, Coles, and Donchin algorithm | θ, α, β activity |

|

| (Gehrke and Gramann, 2021) [54] | 29 | Maze | θ, α | 124–500 Hz | VI, ICA, AMICA | MAD, SD, MD, BEM, k-means clustering, LME, Tukey’s, Spectral maps, ERSP |

|

| (C.-S. Yang et al., 2021) [24] | 41 | spatial task | α, β | 1–45 Hz | VI, ASR, AMICA | k-means, ERSP, correlation analysis |

|

| (Liang et al., 2021) [25] | 19 | teleporter | δ, θ, α, β | 1–50 Hz, Morlet WT | ASR, ICA | mean, WSRT, SVM |

|

| (Ellena et al., 2021) [61] | 22 | avatar | 0.5–30 Hz | 0.5–30 Hz | voltage threshold, SD, ICA | N1 mean amplitudes, rmANOVA, Newman–Keuls |

|

| (Yi et al., 2022) [78] | 19 | Open dataset | δ, θ, α, β | 1–50 Hz, Morlet WT | ASR, ICA | pMFLR, PCA, cross-validation |

|

| (Zhu et al., 2022) [42] | 30 | VE hospital | θ, α, β | 1–50 Hz, PREP Pipeline, SSI, CSP | ASR, ICA, VI | log transform, RF, 5-fold cross-validation ROC |

|

| (Kalantari et al., 2022) [43] | 63 | VE hospital | δ, θ, α, β, γ | ICA | IC cluster analysis, one-way ANOVA, post hoc Tukey HSD, ERSP |

|

| Authors, Year, Reference | Participants | Objective | Stimuli | Frequency Bands/ Range | Data Preprocessing | Artifact Removal | Classification Technique/Statistical Analysis | Main Findings |

|---|---|---|---|---|---|---|---|---|

| (Hubbard et al., 2017) [26] | 12 | learning performance | Working memory task | α, β2 | FFT | ERP, TFR |

| |

| (Singh et al., 2018) [27] | 32 | cognitive conflict | object selection task | 0.5–50 Hz | VI | PEN, P300, rmANCOVA, mmANOVA |

| |

| (Tromp et al., 2018) [69] | 20 | language comprehension | VE restaurant | 0.01–40 Hz | 0.01–40 Hz | Brain Vision Analyzer | ERPs, N400, ANOVA, Greenhouse-Geisser correction |

|

| (Spapé et al., 2019) [55] | 66 | message meaning | game | 0.2–80 Hz, notch, <40 Hz | ICA, VI, Autoreject algorithm | rmANOVA, N1, MFN, P3, LPP |

| |

| (Djebbara et al., 2019) [81] | 19 | transitional affordance | VE Go/No Go | 0.2–40 Hz | 1–100 Hz | ICA, VI, SD | VEP, MRCP, Peak Analysis, rmANOVA, Tukey’s HSD |

|

| (J. Li et al., 2020) [79] | 30 | work efficiency | 3 VEs/lighting | β | PC |

| ||

| (Foerster et al., 2020) [28] | 40 | motor learning | labeled novel tools | β | 1–50 Hz, notch | voltage thresholds | ERD/ERS, pairwise comparison, two-tailed t-tests, cluster analysis (Monte Carlo) |

|

| (Choi et al., 2020) [67] | 14 | performance, presence | BCI | 8–36 Hz | data augmentation | FBCSP, LDA, ANOVA, 4-fold cross-validation, Mann–Whitney U test, BC, ERD ratio |

| |

| (Singh and Tao, 2020) [29] | 26 | cognitive conflict | CC, pHRC datasets | BCINet, EEGNet, DeepConvNet, ShallowNet |

| |||

| (Singh et al., 2020) [30] | 33 | cognitive conflict | object selection task | 0.5–50 Hz | ICA, VI | PEN, Pe, PC, rmANOVA |

| |

| (Singh et al., 2021) [31] | 20 | cognitive conflict | object selection task | δ, θ, α, β | 0.1–40 Hz | Kurtosis, ICA, DIPFIT, BESA | PEN, Pe, ANOVA, ANCOVA, One-sample t-tests, 1000-fold permutation test |

|

| (Immink et al., 2021) [44] | 45 | performance | game marksmanship | 0.1–40 Hz, IRASA | ICA, >150 μV, flat channels, EMG, ECG, EOG | REML, Type II Wald χ2-tests |

| |

| (Foerster and Goslin, 2021) [32] | 37 | affordance | virtual objects | θ, α, β, mu band | 0.1–40 Hz, Laplacian filter, FFT, TFR | Autoreject algorithm, frontal and prefrontal exclusion | ITC, rmANOVA |

|

| (Yu et al., 2021) [80] | 36 | reorganizations of functional brain networks | 2D, 3D videos | α, β, γ | SVM, RF |

| ||

| (Gumilar et al., 2021) [72] | 24 | inter-brain synchrony | real world vs. VR Avatar | δ, θ, α, β, γ | 0.5–60 Hz, notch, automated pipeline | VI, ICA | eLORETA, PLV |

|

| (Cruz-Garza et al., 2022) [62] | 23 | performance | VE classroom | δ, θ, α, β, γ | 0.5–50 Hz, frequency band-power, PDC | ASR, ICLabel, ICA | KWT, k-SVM |

|

| (Y.-Y. Wang et al., 2022) [23] | 72 | creativity | θ, α, β, γ | MANOVA, MANCOVA |

| |||

| (Gregory et al., 2022) [64] | 49 | Working memory performance | Memory task (Social/non-social cue) | θ, α | 0.5–36 Hz | VI, ICA | TFR (Morlet WT) |

|

| (Giannopulu et al., 2022) [83] | 27 | mental imagery, creativity | virtual objects | β, γ | 1–80 Hz, PSD, PDC | VI, ICA | Levene’s test, paired sample t-tests, rmANOVA, PC, PCA, Factor Analysis, Bartlett’s test |

|

| Authors, Year, Reference | Participants | Stimuli | Frequency Bands/ Range | Data Preprocessing | Artifact Removal | Classification Technique/Statistical Analysis | Main Findings |

|---|---|---|---|---|---|---|---|

| (Heyselaar et al., 2018) [70] | 30 | static photos, VR avatars | δ, θ, α, β | <150 Hz, TFR | VI, ICA | cluster randomization, ANOVA, Wald χ2 tests |

|

| (Berger and Davelaar, 2018) [56] | 22 | Stroop task (VR vs. 2D) | α | FFT | α average power | Gratton effect, factorial ANOVA, Regression analysis |

|

| (X. Yang et al., 2019) [45] | 60 | Virtual paintbrush with feedback | eSense algorithm | eSense algorithm |

| ||

| (Rupp et al., 2019) [33] | 10 | Attention and memory tasks (2D vs. VR) | 0.1–50 Hz, 1–40 Hz, FFT | ICA, GA of ERP, ABM software | ERP |

| |

| (Park et al., 2019) [57] | 15 | saccadic exercise | θ, α, β | 1–50 Hz | ICA, 80 μV threshold | PSD, ERSP, t-test, FCA |

|

| (Vortmann et al., 2019) [82] | 14 | VE (ring–sphere) | θ, α, β, γ | 1–50 Hz, notch, PSD | no artifact cleaning | hyperparameter optimization, LDA, Ledoit–Wolf lemma, ANOVA, 5-fold cross-validation |

|

| (D’Errico et al., 2020) [34] | 40 | VE | θ, α, β, β1, β2 | 3–40 Hz, PSD | ICA, SNR | (θ/β), (β2/β1), (β/(α + θ)) |

|

| (G. Li et al., 2020) [35] | 50 | Oddball task | θ, α | 0.5–30 Hz, notch | ICA, 100 µV threshold | P3b latency, ITC(θ), IEC(θ), ANOVA, paired t-test |

|

| (Benlamine et al., 2021) [66] | 29 | game | θ, α, β | distraction = θ/β, engagement = β/(α + θ) |

| ||

| (Wan et al., 2021) [36] | 20 | game (2D vs. VR) N-back paradigm | Notch, EEMD | ICA, Wavelet threshold denoising | P300, LSTM |

| |

| (Llinares et al., 2021) [37] | 160 | VE classroom | β, β2 | β, β2 relative power, Mann–Whitney |

| ||

| (Tian and Wang, 2021) [38] | 20 | Videos (2D vs. VR) | α, β | 0.1–95 Hz, notch, WT | ICA, 100 µV threshold | Mean energy, t-test |

|

| (Cao et al., 2021) [46] | 32 | VE (interior, street, park) | α | 0.1–30 Hz | 20 μV threshold, ICA | ERP, Linear Mixed Effects Models, Type II Kenward–Roger test |

|

| (Zhang et al., 2022) [73] | 16 | videos | θ, α | Granger causality, characteristic path length, GE, causal density and flow |

| ||

| (Chen et al., 2022) [87] | 28 | AR circles | θ, α, β | 0.1–30 Hz | VI, ICA, PSD, time series topography | α MI, α lateralization value, correlation analysis |

|

| (Darfler et al., 2022) [63] | 21 | visual memory task | θ, α, β | 0.5–50 Hz | ASR, ICA, ICLabel | ERSP, k-means, one-way ANOVA |

|

| (H. Li et al., 2022) [75] | 72 | restorative VE | δ, θ, α, β, β1, β2, γ | 1–40 Hz, notch | VI, 150 μV threshold | PSD, rmANOVA, alertness = β2/β1, engagement = β/(θ + α) |

|
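
Several attention studies in the table above report band-power ratios, for example distraction = θ/β and engagement = β/(α + θ) [66], and alertness = β2/β1 [75]. A minimal Python sketch of these indices is shown here, assuming the band powers have already been estimated (for instance from a PSD). The function name, the dictionary keys, and the example values are illustrative and are not taken from any reviewed study.

```python
def band_ratio_indices(power: dict) -> dict:
    """Compute ratio indices from absolute band powers.

    `power` maps band names to positive powers in the same units.
    Assumed keys: "theta", "alpha", "beta", optionally "beta1" and "beta2".
    """
    theta, alpha, beta = power["theta"], power["alpha"], power["beta"]
    if min(theta, alpha, beta) <= 0:
        raise ValueError("band powers must be positive to form ratios")

    indices = {
        "distraction": theta / beta,           # theta / beta
        "engagement": beta / (alpha + theta),  # beta / (alpha + theta)
    }

    # The alertness index needs the beta sub-bands, which not every study reports.
    if "beta1" in power and "beta2" in power:
        if power["beta1"] <= 0:
            raise ValueError("beta1 power must be positive")
        indices["alertness"] = power["beta2"] / power["beta1"]  # beta2 / beta1
    return indices


# Hypothetical band powers, chosen only to make the arithmetic easy to follow.
example = band_ratio_indices(
    {"theta": 4.0, "alpha": 6.0, "beta": 5.0, "beta1": 2.0, "beta2": 3.0}
)
```

Because the indices are ratios, they are unitless, but they remain sensitive to how each band's limits are defined, and those limits differ between studies.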

| Time-domain Analysis | Amplitude Analysis | ERP, P300 amplitude, N1, P3, LPP, MFN, P3b, ERP mean amplitude, mean amplitudes (N1, P1, P3), N1, P2 mean and SD amplitudes, N400 | [19,26,27,33,52,55,69,74,77,84] |

| Peak Analysis | VEP, MRCP, PEN, Pe, peak amplitudes (N1, P1, P3), first maximal negative deflection after T1 | [19,27,30,31,61,81] | |

| Area Under the Curve Analysis | Area under the average EEG graph | [85,86] | |

| Latency Analysis | P300 latencies, P3b amplitude latency | [35,52,84] | |

| Time-series Analysis | VAR | [14] | |

| Frequency-domain Analysis | Spectral analysis | PSD, Welch | [13,15,16,40,57,75,76,83] |

| Directed connectivity analysis | DTF, ffDTF | [14,53] | |

| Non-linear analysis | PLV | [72] | |

| Energy and power measures | Energy, sum of power, absolute band power, relative band power, power peak, power index | [13,17,37,38,47,48,49,59] | |

| Band power ratio measures | α/β, θ/α, θ/β, (θ + α)/β, β2/β1, β/(θ + α) | [66,75,84] | |

| Specific band activity measures | θ, α, β | [71,83] | |

| Other frequency domain measures | %θ change, ITC, IEC, α and β wave difference, mean peak frequency | [20,32,35,53,65] | |

| Time-frequency Analysis | WPD, TFR, ERSP, ERD, ERS | [9,10,13,22,26,28,39,41,43,50,53,57,60,63,64,67,84] | |

| Connectivity Analysis | Functional Connectivity Analysis, Granger causality, characteristic path length, causal density and flow, GE, PC, PCA, SCoT, VAR | [14,22,30,57,73,79,83,87,88] | |

| Topographical Analysis | Scalp maps, IC Cluster analysis, ROI analysis | [43,52,59] | |

| Nonlinear Analysis | entropy | [41,84] |

| Statistical analysis | Descriptive statistics | GA, sum of squares, mean of squares, Average log values, SD | [10,17,20,52,59,74,87] |

| ANOVA methods | ANOVA, rmANOVA, MANOVA, uANOVA, fANOVA, ANCOVA, MANCOVA | [15,19,20,22,23,27,30,31,32,35,43,47,49,52,53,55,56,59,61,69,75,76,77,81,83] | |

| Nonparametric statistics | two-tailed signed rank test, permutation test, Mann–Whitney U test, Cluster-based permutation testing, WSRT, KWT | [10,15,21,22,28,31,37,41,47,48,49,65,84] | |

| Parametric tests | t-test, Type II Wald χ2-tests, regression analysis, pMFLR | [16,28,31,35,38,44,53,56,57,58,65,78,81,83,84,85,86] | |

| Post hoc tests | Post hoc Tukey HSD, post hoc Dunn’s tests, Tukey’s HSD, Newman–Keuls | [15,43,56,61,81] | |

| Other methods | Levene’s test, Mauchly’s test, pairwise comparisons, REML, Gratton effect, Greenhouse-Geisser correction, BC, sBEM, Tikhonov-regularized minimum-norm, F-tests type-III sum of squares, MI lateralization | [15,16,28,44,50,52,59,69,76,83,87] | |

| Classification | Ensemble | CIT2FS, RF | [42,53] |

| Artificial Neural Networks | CNN, LSTM | [28,31,36] | |

| Other Methods | SVM, LDA, LDFA, rLDA, KNN | [5,11,12,18,25,51,62,67,80,82,89] | |

| Clustering | k-means | [63] |
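
ERD and ERS, listed under time-frequency analysis above, are commonly expressed as a percentage change in band power relative to a reference interval. A minimal Python sketch is given here, assuming the widely used convention ERD% = (A − R)/R × 100, where R is the mean band power in the reference interval and A is the mean band power in the activity interval. Individual studies may use the opposite sign, and the function name and sample values are illustrative.

```python
from statistics import fmean


def erd_percent(reference_power, activity_power):
    """Percentage band-power change of the activity interval vs. the reference.

    Under the convention assumed here, negative values indicate
    desynchronization (ERD) and positive values synchronization (ERS).
    """
    r = fmean(reference_power)  # mean power in the reference (baseline) interval
    a = fmean(activity_power)   # mean power in the activity interval
    if r <= 0:
        raise ValueError("reference power must be positive")
    return (a - r) / r * 100.0


# Hypothetical band-power samples: the power drops from a mean of 10 to a mean of 7.
change = erd_percent([10.0, 12.0, 8.0], [6.0, 7.0, 8.0])
```

Here `change` is negative, which under the assumed convention would be read as desynchronization.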

| Authors, Year, Reference | Review Type | Year Range | Articles Included | Main Objective | Sub-Categories | Conclusions |

|---|---|---|---|---|---|---|

| (Souza and Naves, 2021) [91] | Scoping | 2011–2020 | 40 | attention workload fatigue |

|

|

| (Shynu et al., 2021) [92] | Systematic | 2005–2020 | 44 | Environmental perception |

|

|

| (Georgiev et al., 2021) [93] | Literature | 1984–2021 | 240 | Neurorehabilitation and Cognitive Enhancement |

|

|

| (Bruni et al., 2021) [94] | Literature | 1999–2021 | 98 | narrative cognition |

|

|

| (Mostafavi, 2022) [95] | Systematic | 2015–2019 | 13 | spatial design evaluation |

|

|

| This study | Systematic | 2013–2022 | 63 | EEG cognitive assessment using HMD |

|

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gramouseni, F.; Tzimourta, K.D.; Angelidis, P.; Giannakeas, N.; Tsipouras, M.G. Cognitive Assessment Based on Electroencephalography Analysis in Virtual and Augmented Reality Environments, Using Head Mounted Displays: A Systematic Review. Big Data Cogn. Comput. 2023, 7, 163. https://doi.org/10.3390/bdcc7040163
