Abstract

The paper discusses the role of artistic expression and practices in extending realities. It presents a reflection on and framework for Virtual, Augmented, Mixed, or Extended Realities (VR/AR/MxR) as a continuum from completely virtual to mixed to completely real. It also reflects on the role of contemporary art practices in extending or augmenting realities, from figurative to abstract art, and from street art to the scale of landscape art. Furthermore, the paper discusses the notion of live performance in art, which is common in music, contrasting real-time ‘improvisation’ practices with composition practices, again as a continuum, since several mixed modes exist. An effective technique for extending realities is video projection. This is discussed with examples from artistic expressions in various forms, and illustrated with some examples from the author’s practice in interactive audiovisual installations and real-time mobile projection performances and activities.

1. Introduction

The focus in this paper is on using projections to extend the real world, so that computer-generated content (video patterns and audio in this case) is overlaid on and mixed in with the physical, real world. This is a technique also used in design, to project textures on objects, interior models, and architectural models [1,2], enabling designers to quickly see what effects different textures and other projected features would have in the real world. Projections are also used in theatres to create mixed environments, and on film sets as a real-time alternative to blue or green screens and CGI (computer-generated imagery) in post-production.

The notion of using projections as a method for extending realities is illustrated and discussed in this paper with examples of video projections (and often sound), and further examples are presented in the companion paper [3] with projects on Cockatoo Island in Sydney Harbour, using several landmark buildings and structures such as walls, both indoors and outdoors, as projection surfaces. The island became a canvas for these audio-visual projections.

This paper is about extending realities or resonating environments by using interactive audiovisual projections. These projections are situated in the real world, in a meaningful way, and are generated and interacted with in real time. In that sense they are a kind of ‘extended reality’, Mixed Reality (MxR) or Augmented Reality (AR).

Virtual Reality (VR) is usually defined as ‘computer generated’. We often think of VR/AR as using goggles (Head Mounted Displays) or screens, looking at virtual worlds such as the Metaverse (the term Facebook adopted in 2021, and originally proposed by Science Fiction writer Neal Stephenson in the early 1990s). However, this paper focusses on using projections to place real-time video and audio in the existing environments (indoors and outdoors, urban and nature), creating shared environments for audiences to experience.

It is also good to emphasise that VR/AR is not just about the visual; potentially VR/AR also addresses our other senses, such as sound, touch, movement, and smell. Many touch screens and trackpads nowadays generate a ‘virtual click’ in response to pressure, simulating a mechanical key click (for instance Apple’s Taptic Engine). In AR/VR, motion platforms are often used to address the kinaesthetic sense of the participant.

In the paper virtuality is discussed as not just computer generated. Many human-made artefacts represent an element of virtuality, such as objects that copy existing natural things (for instance, plastic flowers or fake fir trees), or figurative paintings (even many abstract paintings refer to other things). A definition of Virtual Reality, one that Gerrit van der Veer often uses, is that it is “known to be not real”. This is a useful definition as it steers us past the philosophical questions around what ‘real’ reality is: from Plato’s shadows in the cave perceived as reality, and Baudrillard’s simulacra such as maps that replace the territory and are seen as real [4], to living inside a computer simulation such as in William Gibson’s cyberspace, The Matrix, eXistenZ, Tron, and many other science fiction stories and movies.

2. Background—An Extended Reality Spectrum

The work presented in this paper is about mixed reality. We can define an Extended Reality Spectrum, a continuum from VR to MxR and AR, a range from the completely virtual to the blending of the virtual and the real. Of course, in most situations multiple areas of extended reality happen simultaneously, and not always in obvious ways: things may seem real that are in fact virtual and vice versa. VR and AR are not restricted to goggles, the audiovisual headsets that were known as head-mounted displays (HMDs) in the previous wave of Virtual Reality in the early 1990s [5]. VR and AR also encompass screens, video projectors and loudspeakers.

2.1. Art and Extending Reality

Many forms of artistic expression engage with extending or augmenting reality. In visual art, artists have moved from painting realistic images to abstraction, particularly since the beginning of the 20th century. This progression is exemplified in a range of successive styles, concerned with interpreting reality (impressionism), bringing out or expressing the otherwise invisible or unperceivable (expressionism), combining multiple viewpoints in one image (cubism), and completely abstract painting [6]. An art form that deliberately plays on the human perceptual system is op-art, in which painted patterns create optical illusions such as movement or perspective shifts [7]. The pioneering work of Bridget Riley has been the best known in this field since the 1960s [8]. Early in the 20th century the Gestalt psychologists, who showed that we actively perceive reality ‘as a whole’ (‘Gestalt’ is German for form or shape), developed a range of optical illusions to show how the human perceptual system re-constructs reality in an implicit, direct way.

The work of graphic artist M. C. Escher since the 1950s shows many of these elements, such as nested and recursive image patterns and depictions of buildings with impossible perspective [9] (some of this was already explored by Piranesi in the 18th century [7]).

Lucio Fontana’s technique (since the 1940s) of cutting and piercing his canvasses shows a desire to move away from the flat surface and to create depth and alienation (he called these works Concetto Spaziale, spatial concept) [10].

In installation art there are many examples of extended realities, as experienced by the audience who engage with the works of, for instance, Olafur Eliasson, who works with light, water vapour, ice, and reflections [11], Rafael Lozano-Hemmer, who works with interactive projections and multimedia [12], or Cai Guo-Qiang, who creates monumental-scale art works of structures and animals [13] (often in a spatial configuration which suggests stop motion, like the chronophotography of Eadweard Muybridge in the late 19th century in which he studied human and animal movement). Cai also works with fire and explosions.

It is also worth noting that ‘real’ reality is often incredibly rich and dynamic, and that our perceptual systems are exceptionally good at perceiving this as a stable and constant ‘reality’. Colour perception is a good example: the ‘colour’ of objects is perceived as constant and fixed, while the wavelengths of light actually reaching the eye change constantly depending on the environmental light [14]. This is illustrated in the collage of images of one building under different lighting conditions in Figure 1. We are creating this awareness of stability effectively through illusion [15,16]. To paraphrase Van der Veer, ‘reality is what we know is real’.

Figure 1.

A collage of images (selection) of the Sydney Opera House, as part of a larger collection of several hundred images taken by the author when passing by on the ferry for over a decade.

2.2. Extending Reality through Public Art and Land Art

While art works in museums and galleries are often presented in the neutral environment of the ‘white cube’, isolated from the rest of the world (or at most, in relation to the other art works on display), public artworks are deliberately in dialogue with the real world. Many public artworks are ‘site-specific’: designed and developed to respond to the environment. This is particularly seen in urban environments, but also in parks and in nature, in the case of Land Art [17]. Artists such as Richard Long [18], Andy Goldsworthy [19], James Turrell [20], David Nash [21], and Antony Gormley have engaged with artistic expression on a landscape scale, interacting between the natural and artificial environments. This is a form of ‘extended reality’ as well. It is interesting to see what strategies they have used, in order to translate and relate the landscape interactions to the gallery or museum. Richard Long often undertakes walks in landscapes and documents the traces he makes in photographs and stories, presented to the public in the gallery and in publications. However, Long also often collects objects such as rocks and sticks, and re-situates these in a gallery or museum context, creating new patterns and sculptures out of these found objects. Antony Gormley’s outdoor sculptures made of iron engage with the landscape, conceptually but also physically: the rusting of the iron reflects the interaction with the environment, in an ever developing patina [22]. The same process can be seen in the site-specific monumental scale rusting iron sculptures of Richard Serra, and those of Eduardo Chillida (the latter often at the edge of the sea, to intensify the process).

Andy Goldsworthy often works in nature, creating sculptures and structures in situ, out of objects encountered in nature (sticks, rocks, pieces of slate, ice). These structures are often deliberately unstable and dynamic, using streams, tides, wind, melting snow, and other elements. In the documentary Rivers and Tides we can see Goldsworthy at work with an immense dedication to operating in harmony with nature. It shows the trouble and hardship he goes through in order to create the pieces and achieve meaningful expressions [23].

David Nash is a sculptor who uses wood that is often young and still deforming, creating dynamic sculptures. Nash takes this notion of working with nature even further in his ‘growing works’, which he started in the late 1970s. Nash planted and pruned trees, taking decades to create a shape such as the Ash Dome in Wales: a ring of ash trees planted in 1977 that, through pruning, eventually formed a dome shape, slowly evolving over time.

Inspired by these works, an interactive installation was created and selected for the art exhibition of the conference on Tangible and Embedded Interaction in Sydney in February 2020. It used driftwood, pumice (floating in water), and glass found on beaches in Sydney Harbour and elsewhere, combined with sensors, projections, video screens, loudspeakers and computers. Through the sensors, embedded in the found objects as well as in the space, the audience was able to manipulate the video projections and screens to create new patterns [24].

2.3. Urban and Street Art as Extended Reality

Large-scale murals in the urban environment are not always graffiti; they are often commissioned by councils or building owners. A particular case is the trompe-l’œil technique in painting, where hyper-realism is combined with forced perspective, creating an illusion of depth on a flat surface. This is used in classical imagery, but is particularly effective on large-scale walls, for instance creating false façades as shown in Figure 2.

Figure 2.

An example of a trompe-l’œil painting emulating a façade with windows (the two rows of windows on the right are not real, as can be seen in the close-up in the right image). This is on a building near the Centre Pompidou in Paris.

A more extreme version of art in the urban public space is Street Art, mixing and extending the physical environment, often driven by individuals and communities rather than curators and officials (who, as Banksy humorously reflects on in his first book Wall and Piece [25], as well as in his artworks themselves, are actively trying to eradicate any trace of this form of expression, considering all of it vandalism). Street Art is a strong example of extending reality, from blunt vandalism to large-scale artistic expressions in the form of murals, with their sophisticated messages in a range of modalities [26]. It is also very dynamic: walls get painted over regularly, creating layers of sometimes related meaning and expression, often responding to the urban environment.

Italian street artist Blu has taken this dynamic nature to an extreme level by creating stop-motion animations of his murals, in which each frame is a single painting. For instance, his eight-minute animation MUTO from 2008 shows this fascinating process, creating motion on otherwise static walls [27].

Although not as famous as the Melbourne laneways graffiti, Sydney has a rich tradition of street art and large murals in suburbs such as Newtown, Erskineville and Marrickville [28]. Particularly notorious is May Lane and surroundings in the suburb of St Peters; even the road surfaces are covered in the most colourful and ludic imagery. The May Lane Street Art Project contributed to the Outpost festival on Cockatoo Island at the end of 2011 [3] as part of a touring exhibition (which I saw in Adelaide earlier in 2011) [29].

Other modes of expression also occur in Street Art, such as ‘guerrilla knitting’ or ‘yarn bombing’, where knitted structures are added to the environment, on physical objects such as lamp posts, bike racks and traffic signs. In Sydney, the little (and sometimes not so little) concrete sculptures by the artist Will Coles are a common presence. These are 1:1 facsimiles of everyday objects such as phones, remote controls, squashed cans, guns, radios, even televisions and washing machines, cast in concrete and glued to walls, kerbsides, and other structures in the public space all over Sydney since 2007 (the sculptures that are sold are usually made out of more expensive materials and finishing). The objects often have words on them, commenting on their ways of use, and on materialism and consumerism in general (as it is put on his web site [30]: “embedding dissonant words and sentences into his concrete casts of seemingly banal objects”). Coles has since left Sydney (in 2015), but his traces are still around. There is still an abundance of sculptures, often concentrated around art galleries where he exhibited (such as the Casula Powerhouse Arts Centre in 2014, and the Outpost festival). My son, who was born in 2010, and I often find new spots where the Will Coles sculptures still survive; he even found one in an obscure alleyway in Chippendale (the suburb we moved to in 2021).

Coles often used existing structures as surfaces to mount his sculptures on (with strong glue), appropriating raised horizontal surfaces as pedestals. These are often bland, sometimes leftover structures of building infrastructure; by placing the artwork permanently on such a structure, it is enriched and enhanced, raised to the status of pedestal.

There are several (semi-)official sites where councils support and maintain the sculptures, particularly the bigger ones (there is a concrete washing machine sculpture in Newtown close to the police station…) while others are more stealthily placed and obscure. The ones on walls often get graffitied, or spray-painted over. People often try to steal them, which usually results in the sculptures breaking, and over the years I have salvaged a few loose pieces that I found. However, I was also able to buy one of the pieces, a concrete phone sculpture #24/50, based on the Nokia 8250, with the slogan “H8” on it, at the Outpost Street Art festival on Cockatoo Island in 2011 (as can be seen in Figure 3).

Figure 3.

Concrete sculptures by Will Coles, from left to right: my original phone, and in the public space: a washing machine, a shoe, and a balaclava.

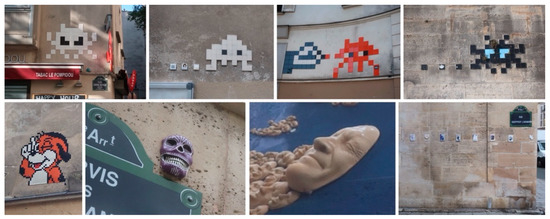

In other locations one can encounter other examples of physical objects as graffiti. On a recent visit to Paris I realised how much this mode occurs there. At least in the area where I was (Le Marais and surrounding arrondissements), at almost every street corner (often where the street signs are) there are sculptures by Invader (colourful mosaics made with small tiles, often of the space invader character), Zigom.art (also mosaics, of cartoon characters), small ceramic skulls by Titiskull, the moulded face masks by Gregos, various printed tiles and mirrors, and small picture frames with images of refugees by Backtothestreet [31], as shown in Figure 4. Invader even developed a smartphone app, Flash Invaders, a ‘reality game’ with which people ‘hunting’ for the sculptures can upload their own images of them and earn points. He also creates maps, so that his sculptures can be traced.

Figure 4.

Examples of physical street art in Paris: Invader (top row), and bottom row from left to right: Zigom.art, Titiskull, Gregos, Backtothestreet.

Another anonymous French street artist, Ememem, fills in cracks and holes in pavements and roads with beautifully designed colourful mosaics, particularly in Lyon.

Using video projections instead of paint or other materials makes the content less permanent, and potentially less controversial due to its impermanence: it does not alter or damage the surfaces addressed. Over the last decades, as video projectors have become more powerful and more affordable, people have increasingly been using this medium for public expression, ‘projector bombing’ in the public space. While this can still be controversial, depending on the messages and meanings represented, it does not have any lasting effect on the environment and does not leave physical traces.

The projects described in this paper show the potential of this approach, with a particular focus on creating dialogues and reflective relationships with the surroundings, and even being able to respond in real time to an environment.

2.4. Extending Realities in Real-Time

Video art does not have a strong culture of live performance like music does. In music, live performance is common, and with music performed on traditional instruments, even essential. Electronic music shifted the trend during the 20th century, with the invention of the tape machine (and disc-based recording before that) and computer-generated music [32]. However, in the 1980s, with the invention of the MIDI (Musical Instrument Digital Interface) protocol, new opportunities for live performance arose, leading to new instruments [33,34]. In music, performances can even be entirely improvised and spontaneous, as a result of a player’s imagination and often through the musical and conceptual communication between multiple players. However, most music we know and hear is composed, either by the traditional method of writing down notes on a score, to be performed by musicians live (and usually recorded), or in the studio by putting things together using multitrack recording and editing (on tape, in the second half of the 20th century, and in the computer since the early 1990s). Mixed forms are common here too: a band will perform their songs live and can create variations and improvisations (often the audience response or other aspects of the setting can play a role); improvising jazz musicians often have a set of rules or musically or gesturally communicated cues to guide the emergence of their music; and in a composed piece of classical music there can traditionally be room within the structure for a soloist to improvise (a ‘cadenza’). So here too we have a continuum, from the completely spontaneous and unprepared improvisation at one extreme, to the composed and meticulously notated at the other.

Painting is usually a composed rather than live performance activity, but there are exceptions such as action painting, with Jackson Pollock in the late 1940s and early 1950s as the most well-known example.

There is not much of a tradition of performance in video art. The closest to it would be the art of VJing, where a VJ (Video Jockey) plays images mixing or contrasting with the music, or the even stronger connection when the same software that generates the music (under a performer’s control) also generates the images (Alva Noto is a good example). I have seen some very effective extended dance performances, and there are examples from theatre (particularly opera).

I have always tried to approach video in the same way as music, playing with it in real-time, either by myself, other performers and/or by the audience [35] (pp. 196–231).

3. Human–Computer Interaction (HCI) and Interactivation

The relationship between people and digital technology is studied in the field of Human–Computer Interaction, including the design of interfaces [36,37,38].

In order to perform and control audiovisual content in real-time, objects and environments need to be ‘interactivated’. The term ‘interactivation’ means ‘making things interactive’, which is not a goal in itself but refers to designing things that are interactive so that people’s wishes, desires, and expressions are supported in work and play [35].

As interactive technology gets increasingly embedded in our environments and our artefacts, leading to Ubiquitous Computing, we can design or allow the emergence of electronic ecologies, going beyond the mere Internet of Things (IoT). Interaction is defined as a mutual influence and exchange between people and technology [39], by using sensors (input) [40] and actuators (output), potentially addressing people’s multiple sensory modalities (such as sight, touch, hearing, movement), and being influenced by people’s multiple expressive modalities (such as gestures, speech, movement) (multimodality) [41]. This interaction can have multiple levels simultaneously or sequentially, and information can be represented in a range of (semiotic) modes, from the figurative (mimetic, iconic, such as the pictures used in this paper) to the abstract (symbolic, arbitrary and learned representations, such as the words used here) [42,43]. In addition to these modes of representation, objects, environments and artefacts also have their own presence. They present themselves including their affordances: the cues, hints, invitations or obligations that present their potential for certain activities, utilisations and behaviours. This is an ecological concept, emphasising the unique relationships and interactions between people and their environment, as proposed by J. J. Gibson in his approach to perception as an (inter)activity and unconscious process (‘direct perception’), developed since the 1950s and culminating in his 1979 book The Ecological Approach to Visual Perception [44].

4. Interactive Video Projections for Extending Reality

Over the last two decades, projecting on buildings has become more common, often in the form of festivals such as Glow (Eindhoven, The Netherlands) and Vivid (Sydney, Australia). The large-scale video projections are often mapped onto the building façade they address, correcting for projection angles and matching image features to the characteristics of the façade (‘projector mapping’, which for instance could project a virtual window on an actual window, or have an animated character progress along the line of a ledge on the façade). Since its first incarnation in 2009, the Vivid festival has almost yearly presented many projections in the winter (May/June, here in the southern hemisphere), often with an emphasis on the popular and spectacular more than the artistic (it has become an event that draws huge crowds, so in that sense it is very successful), although some fascinating experiences with lights and projections (and sound) can often be found in the more peripheral installations. The centre of the event is Circular Quay, the main ferry station on Sydney Harbour, with the largest projections on the Museum of Contemporary Art (MCA), Customs House, and the Opera House (“lighting up the sails” as Vivid advertises this, though Jørn Utzon never intended the characteristic shape of his architectural design of the Opera House to be “sails”, but rather representative of the architectural style of designers such as Oscar Niemeyer, Le Corbusier, and Eero Saarinen in the late 1950s and 1960s; Utzon and engineer Ove Arup thought of the shapes as ‘shells’). The iconic, curved and shaped outline of the building is a challenging projection surface, and Vivid and other events over the years developed more meaningful projections: initially it was more like splashing video images and animations on the surface of the Opera House, but later projections were more integrated with the shape and meaning of the building.

Since the early 2000s I have experimented with the medium of video projection, realising its potential for animating and interactivating otherwise static environments. I have been exploring its expressive abilities in addressing and extending the environments, finding projection spaces indoors and outdoors, on architectural structures and in nature. Often this involved projection surfaces that deliberately distorted the image, through their shapes and surface texture (ornamented walls, towers, trees, rocks, cliff faces), making the surface properties part of the resulting image composition. I also experimented with moving projectors, and even mobile projections. These projects are presented in earlier publications [35], in particular the interactive kaleidoscopic projections (the Facets range of pieces) [45] and the mobile projections (VideoWalker events) [46]. In the sections below some of these are summarised, and a selection of more recent explorations is added and reflected on.

4.1. Facets Interactive Video Projections

The first Facets kaleidoscopic projection was in the Art Light exhibition, as part of the first Vivid light festival in Sydney, in May 2009. The projections were developed in my Interactivation Studio at UTS, first for the opening in August 2008 and then regularly on display, by projecting with multiple projectors on the back wall visible from the public courtyard outside, as semi-permanent and dynamic wallpaper. The project started as a means of experimenting with a range of sensors and custom-built interfaces, to explore the mappings between the movements of the audience and the video patterns. The videos in the kaleidoscope were pre-recorded footage of patterns in nature that I collected over the years, including underwater imagery. However, there is also a video-feedback mode, where the camera points at the projection image, resulting in richly dynamic, unstable (depending on the environmental light parameters) and often fractal-like patterns. These videos were manipulated in real time to form the kaleidoscopic patterns. Several versions of this installation were developed at various scales, as can be seen in Figure 5 and described below.

Figure 5.

Some of the Facets pieces, bottom and top left the first Facets piece in the UTS Tower as part of the Vivid festival in 2009, and top right the piece in development in the Interactivation Studio as ‘interactive wall paper’. In the middle an image of Facets Pool in the Studio, and top middle the final Facets Expanding Architecture piece in 2011.

For the Facets piece in the Art Light exhibition as part of Vivid in 2009, in the public space of the UTS main foyer, we had a custom-made projection surface of 12 m × 3 m with three projectors. This screen was semi-transparent, with the aim of overlaying the projected video patterns while still being able to see the space behind and around it, to extend the environment rather than replace it. There were multiple sensors embedded in the furniture, so that the pressure and balance of people on the seats was detected, and several motion sensors built into wooden blocks that the audience could manipulate. Through these sensors, people were able to create the kaleidoscopic patterns, which responded to their movements, in effect co-creating the images. This was always the intention: to research how audiences can participate and become engaged in generating their own imagery. The resulting video patterns were visible from the outside of the building at night, engaging in a dialogue with the architectural structure of the UTS Tower. Further versions, all described in the paper Interactive video projections as augmented environments [45], were Facets Through the Roof (five circular kaleidoscopic projections on the curved ceiling, commissioned by the Powerhouse Museum in Sydney, in which each projection was controlled by a unique set of interfaces, including a sensor jacket), Facets Kids (also in the Powerhouse Museum, as an audience interaction study [47]), and Facets Pool on the floor of the Interactivation Studio as part of the Sydney Design Festival, all in 2009. The latter was further developed in the piece Facets Expanding Architecture commissioned by the Expanded Architecture exhibition in Carriage Works theatre in 2011 [48]. The projection used footage of architectural structures that I had collected, including the building itself (a converted former train workshop). It was presented in a circular projection on the floor, with kaleidoscopic patterns controlled by the audience through motion sensors hanging above them.
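
As an illustration only, the way a sensor reading could shape a kaleidoscopic pattern can be sketched in a few lines of Python. This is not the software used in the Facets pieces; the function names, the normalised 0.0 to 1.0 sensor range, and the range of 4 to 16 mirror segments are all assumptions made for the sketch. The core idea is that the angle of every output point is folded back into one wedge, so that the same slice of the source video is mirrored around the centre.

```python
import math

def fold_angle(theta, segments):
    """Fold an angle (radians) into the first wedge of a mirrored kaleidoscope."""
    wedge = 2 * math.pi / segments        # angular width of one wedge
    t = theta % (2 * wedge)               # position within a mirrored pair of wedges
    return t if t <= wedge else 2 * wedge - t   # reflect the second wedge back

def kaleidoscope_source(x, y, segments):
    """Map an output point (coordinates centred on the image) to the source point to sample."""
    r = math.hypot(x, y)
    theta = fold_angle(math.atan2(y, x), segments)
    return r * math.cos(theta), r * math.sin(theta)

def segments_from_sensor(value, lo=4, hi=16):
    """Map a normalised sensor reading (hypothetical range 0.0-1.0) to an even segment count.

    lo and hi are assumed to be even, so that the mirrored wedges tile the full circle.
    """
    value = min(max(value, 0.0), 1.0)     # clamp out-of-range readings
    n = lo + round(value * (hi - lo))
    return n if n % 2 == 0 else n + 1
```

In such a sketch, a motion or pressure sensor would update `segments_from_sensor` for each frame, and every output pixel would be filled from the source video at the position returned by `kaleidoscope_source`.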

In 2014 I developed a smaller version of the Facets interactive kaleidoscopic patterns. This was a commission for a group exhibition in a small gallery in the suburb of Chippendale, near UTS. The curator challenged all the exhibitors with a specific and very limiting brief: the works each had to fit in a volume of 20 × 20 × 20 cm, and had to be black and white or greyscale, as reflected in the title of the exhibition 20 × 20 × 20/no colour, and in the title of my piece, Facets—shades of grey. It was interesting to explore the more intimate scale of this size, placing three proximity sensors around the screen which allowed the audience to create the patterns by approaching it with hand or other movements, standing above it or crouching down, as can be seen in Figure 6.

Figure 6.

The Facets—shades of Grey piece in the NG Gallery, Chippendale, May–June 2014.

To comply with the instruction to not use colour I programmed a filter in my software to turn the video patterns into greyscale. This applied except when no one was around: a further sensor, a PIR (Passive Infrared) motion sensor mounted on the front of the small plinth which housed the computer and interfaces, detected the presence of people, so that when there was no one in the space the installation would resume full colour mode.
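
The logic of this presence-dependent filter can be sketched as follows. This is a minimal Python illustration, not the installation's actual software; the function names and the choice of Rec. 601 luma weights are assumptions, as the original filter is not specified in detail.

```python
def to_grey(pixel):
    """Convert one (r, g, b) pixel to a grey pixel of similar perceived brightness."""
    r, g, b = pixel
    # Rec. 601 luma weights: an assumption, the original filter may have differed.
    y = round(0.299 * r + 0.587 * g + 0.114 * b)
    return (y, y, y)

def render_frame(frame, presence_detected):
    """Return the frame in greyscale while someone is present, otherwise in full colour.

    frame is a list of rows, each row a list of (r, g, b) tuples;
    presence_detected stands in for the state of the PIR sensor.
    """
    if not presence_detected:
        return frame
    return [[to_grey(p) for p in row] for row in frame]
```

The design choice worth noting is that the sensor does not alter the video content itself, it only switches the final output stage, so the installation can change between the two modes from one frame to the next.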

For this exhibition I also experimented with a logging system; I programmed in the software the functionality of storing the audience movement data, to gain insight into the actual interactions taking place with the installation. In this data I could see, for instance, that there was a lot of interaction on the opening night, except during the opening speech by the curator, as this was on another floor. It showed that the logging worked, and that this data can potentially be used to analyse audience interaction in more detail. In an earlier version of the installation, Facets Kids, we observed and analysed the audience interactions by logging through observation [47], which was very labour intensive; automatic logging could be a great improvement.
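
A logging approach of this kind could look roughly like the Python sketch below. It is an assumption about how such a log might be structured, not a description of the software used in the exhibition; the CSV layout, the sensor identifiers, and the function names are hypothetical. Each sensor event is stored with a timestamp, and the events are then counted per minute, so that a quiet period (such as during an opening speech) shows up as a gap.

```python
import csv
import io
from collections import Counter
from datetime import datetime

def log_event(writer, timestamp, sensor_id, value):
    """Append one sensor reading to the log as: ISO timestamp, sensor id, value."""
    writer.writerow([timestamp.isoformat(), sensor_id, value])

def events_per_minute(rows):
    """Count logged events per minute; minutes without any events are simply absent."""
    counts = Counter()
    for ts, _sensor_id, _value in rows:
        minute = datetime.fromisoformat(ts).replace(second=0, microsecond=0)
        counts[minute] += 1
    return counts

# Usage with made-up readings (not data from the exhibition):
buffer = io.StringIO()                      # stands in for a log file on disk
writer = csv.writer(buffer)
log_event(writer, datetime(2014, 5, 1, 18, 0, 5), "proximity_1", 0.4)
log_event(writer, datetime(2014, 5, 1, 18, 0, 40), "proximity_2", 0.7)
log_event(writer, datetime(2014, 5, 1, 18, 2, 1), "proximity_1", 0.1)
buffer.seek(0)
activity = events_per_minute(csv.reader(buffer))
```

In this made-up example, `activity` holds two events for the first minute and one for the third, with nothing logged in between.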

4.2. VideoWalking

The VideoWalker performances have always been about framing and re-framing audiovisual content, resituating the material in appropriate contexts, and being able to respond to the environment both in real time and through prepared spatial compositions [46]. It is about ‘liberating’ the projector; this type of equipment is usually bolted to the ceiling facing a fixed screen. Taking the projector for a walk gives the freedom to potentially project anywhere, on buildings, walls, trees, cliffs, caves, etc., reframing the image in real time. These explorations are inspired by the notion of the Dérive, or wandering, of the Situationist art movement from the 1960s. These wanderings are not aimless, but explorative and free, allowing for serendipitous encounters and opportunities. Carrying a mobile video projection instrument allows for this mode of exploration; one could call this nomadic projection a ViDérive.

The VideoWalker is also inspired by Dziga Vertov’s silent movie Man with a Movie Camera from 1929 (accompanied by live music), in which we see (indeed) a man with a movie camera capturing a day in the life of people in the Soviet Union interacting with the technologies of that time. The visual style of the movie, particularly the patterns captured of mechanical motions, has also inspired some of the footage of patterns that I capture and use in interactive video-collages and kaleidoscopes (Facets) and for real-time projection. Furthermore, while we have developed the capturing of images in the real world to a great extent, from professional photography and film to the use of smartphones by everyone, the placement of image material back in the real world is quite rare. The VideoWalker aims to actively address this imbalance, as an act of interactivism, placing the captured images back into the world.

4.3. VideoWalker Mobile Projection Set-Up

Since the first experiments and performances with a portable projection set-up in 2003, both outdoors and indoors, the instrument has been developed to a versatile and reliable level. The instrument consisted of a medium-size projector, speaker, large battery (8 or 12 kg, lasting about 40 or 60 min), inverter (converting the 12 V DC from the battery into 240 V AC to power the projector and speaker), and a dedicated set of sensors mounted on top of the projector which controlled dedicated audiovisual software on a laptop computer (Apple MacBook Pro). The software is written in the graphical programming language MaxMSP/Jitter [49], using the Jitter objects to play videos and sounds, controlled in real-time by the sensors.

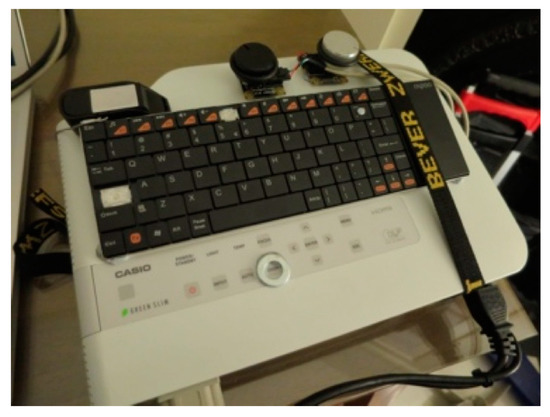

From early 2014 I used a Casio XJ-A141 XVGA Laser-LED projector, which starts up in 5 s and has the advantage of not requiring a start-up or cooling-down delay as the regular (mercury-lamp based) projectors do, a delay which often interfered with the performances. The LED light source is also much longer lasting (>20,000 h) than the mercury lamps (<1000 h, which makes them very wasteful). The projector is about the size of a sheet of A4 paper, 4 cm thick, and weighs 2.2 kg. It outputs 2500 lumens, strong enough to address walls and surfaces of buildings, trees, rock faces, etc. The audio is usually played through a Genelec 8020A active studio monitor speaker of 3.7 kg, 20 W (SPL 96 dB), though sometimes a lightweight, less powerful Bluetooth speaker is used. All other items (battery, inverter, laptop, speaker) are carried in a small but sturdy backpack. The projector is small enough to be carried with two hands, while operating the controls mounted on top of it in addition to some of the built-in controls. Most of these built-in controls are hard to use in a live situation: for instance, optical zoom and focus have to be reached through a nested menu, and the buttons are tiny and hard to operate. Some tactile markers were added to make the buttons easier to find in the dark for the cases where they had to be used (see Figure 7).

Figure 7.

Part of the VideoWalker instrument: the handheld projector and dedicated controls.

Digital equipment manufacturers tend to map continuous parameters such as brightness and volume to up/down buttons, to save costs. However, mapping an analogue (continuous) parameter such as zoom to binary buttons (up/down) is never very appropriate in my view.

The custom controls were made up of standard components, such as a USB keyboard to choose the audiovisual material, and Phidget rotary control devices (also USB) [50] to manipulate the shape and size of the image and the volume of the audio, a bricolage of parts.

With these dedicated controls the player can select video (and audio) clips and change the speed of the clip, the sound volume, the size of the image, and its shape (rectangle or ellipse). The zoom function is important in order to quickly fit or frame the video image in a chosen area on a building or in nature, by just turning the knob. The circle-shaped image is essential for larger surfaces where the image is not framed and a rectangular image would look out of place; it acts a bit like a searchlight or a torch. The rectangular shape is suitable when it can be framed in the features of the surroundings, such as on a building façade. Sometimes the auto-keystone correction feature of the projector is used. Of course, holding the projector gives a lot of control over the placement of the image: it can be tilted easily, and the size of the image can be changed by walking closer to or further away from the projection surface.
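The effect of the zoom and shape controls, and of walking closer to or further from the surface, can be sketched in a few lines of Python. This is an illustrative sketch only and not the MaxMSP/Jitter patch used in the instrument; the function names, the normalised zoom range (0 to 1), and the throw ratio parameter are assumptions.

```python
def projected_width(distance_m: float, throw_ratio: float) -> float:
    """Width of the projected image, using throw ratio = distance / image width.

    The throw ratio depends on the projector and lens; any value passed in
    here is an assumption, not a specification from the paper.
    """
    if distance_m < 0 or throw_ratio <= 0:
        raise ValueError("distance must be >= 0 and throw ratio > 0")
    return distance_m / throw_ratio


def is_lit(x: int, y: int, width: int, height: int,
           zoom: float, shape: str) -> bool:
    """Return True if pixel (x, y) falls inside the zoomed image shape.

    zoom is a fraction of the full frame (0 < zoom <= 1), as a rotary
    control might supply; shape is "rectangle" or "ellipse".
    """
    if not 0 < zoom <= 1:
        raise ValueError("zoom must be in (0, 1]")
    cx, cy = (width - 1) / 2, (height - 1) / 2      # frame centre
    half_w, half_h = zoom * width / 2, zoom * height / 2
    dx, dy = x - cx, y - cy
    if shape == "rectangle":
        return abs(dx) <= half_w and abs(dy) <= half_h
    if shape == "ellipse":
        return (dx / half_w) ** 2 + (dy / half_h) ** 2 <= 1.0
    raise ValueError("shape must be 'rectangle' or 'ellipse'")


if __name__ == "__main__":
    # Doubling the distance doubles the image width for a fixed lens setting.
    print(projected_width(3.0, 1.5), projected_width(6.0, 1.5))
    # The frame corner is lit in rectangle mode but masked in ellipse mode.
    print(is_lit(0, 0, 101, 51, 1.0, "rectangle"),
          is_lit(0, 0, 101, 51, 1.0, "ellipse"))
```

Masking in software keeps the zoom continuous, in contrast to the stepwise optical zoom buttons on the projector itself.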

Outdoor projections, particularly on buildings, often use projection mapping as mentioned above. This adapts the projection to the projection surface, such as cutting out areas in the projection to match a window or other feature on the façade. It is also used for shaping the image to compensate for projection angles (keystone correction). My portable set-up is not that sophisticated, but unlike these other situations it is able to respond in real-time. The facilities described in this section (the dedicated controls, and the freedom of movement of the projector being held) usually give enough control over the placement of the image.

4.4. VideoWalker Events and Experiences

By always collecting video material from exhibitions visited, as well as other events, buildings, and landscapes and other features in nature, a bank of videos that are useful in VideoWalker projects has been developed. This enables the performer to respond quickly to the surroundings. Depending on the context, dedicated clips relevant for the situation are included in the set-up.

4.5. Night Garden

For a street art festival in the suburb of St Peters in Sydney, Night Garden by the Tortuga Studio in September 2014, video material was prepared from the dense graffiti and murals captured locally in addition to other suitable footage. It also included footage of some of the other artists in this event, from an event a couple of days earlier (Beams festival in Chippendale). In this case, I was able to project the image of a neon artwork (of the word “Dystopia” which partially flickered revealing the word “utopia”, by the artists Jasmin Poole and Mike Rossi) on the wall before the artists were finished installing it. They appreciated the act, which was presented as a homage. Later I projected the text on the iconic chimneys of the nearby former brick kilns in Sydney Park. This was part of the VideoWalk projecting the colourful graffiti patterns around the laneways and buildings in the area at the Night Garden event. At this event I also set up a version of Facets, using multiple video projections from the inside on several windows of an empty building overlooking the main laneway of the festival. The patterns were controlled by the audience movements through motion sensors hanging above the people. Some of the projections are shown in Figure 8.

Figure 8.

Projections at the Night Garden event: top row: video of the Dystopia/Utopia words on the location of the actual installation (left) and on the chimneys (mid and right) (camera by Wendy Neill), mid row: VideoWalker projections in the streets (camera by Annie McKinnon), bottom row: interactive video projections from the inside of a building.

4.6. Electrofringe BYOB

The same set-up and technology as the Night Garden was used to create a version of Facets for the Electrofringe BYOB (bring your own beamer) event as part of the Vivid light festival in May/June 2014, at Walsh Bay Pier (a large 1920s former wharf building, empty at the time and also often used by the Sydney Biennale). The VideoWalker was set up as an installation, eventually picked up and carried with me so that I could project on other surfaces in the building, creating dialogues with some of the other projections as shown in Figure 9, and then outside in the historic precinct of the former wharves at Walsh Bay.

Figure 9.

Projections at the Vivid festival at Walsh Bay; from left to right: picking up the projector (the projection on the right is Facets, the left is VideoWalker), taking the video for a walk, and responding to other projections with related video (camera by Josh Harle).

4.7. MCA Art Bar

On another occasion, as part of the Art Bar event in February 2014 at the Museum of Contemporary Art in Sydney, there was the opportunity to VideoWalk around in the museum, using footage from earlier exhibitions at the site to address walls and other surfaces (particularly the work of Olafur Eliasson in early 2010 [11], and interactive video works by Rafael Lozano-Hemmer in early 2012 [12], both artists whose work I greatly admire and am inspired by). Furthermore, imagery of events on the evening and of the current exhibitions was captured, to re-situate and distribute in new ways. The latter needed to be done quickly because, although it was a commissioned performance and included in the official programme for the evening, we got in trouble for projecting in certain areas of the museum. This meant we could not do a practice run of filming the activities, and had to improvise without rehearsal. This eventually worked well with a piece by video-artist Shaun Gladwell, whose work I greatly appreciate. The piece, Storm Sequence (2000), is one of Gladwell’s best known works. It has been on display for years at the MCA, and was included in his solo exhibition in 2019, which also had AR versions of his pieces in specific locations, shown only on a handheld screen (through a smartphone or tablet app) as if they were on the wall [51]. The video shows the artist (in his trademark slow motion) doing tricks on a skateboard on a concrete surface at Bondi beach in Sydney, while dark storm clouds are approaching, eventually bringing the first drops of rain. It is very atmospheric, a bit brooding and with a looming presence of the storm. On the evening a short (28 s) fragment of the 7.59 min Storm Sequence piece was captured and loaded into the VideoWalker set-up.
The fragment was projected back on to the actual projection, and then slowly moved away from the screen, seemingly cloning the image by ‘peeling’ it off the screen, and then taking it ‘for a walk’ around the museum as shown in Figure 10. Great care was taken not to interfere with the other artworks encountered on the way, instead projecting on plinths, walls and nooks (and responding to questions of inquisitive museum visitors). It seemed appropriate to me, a sense of liberating Shaun Gladwell from his dark room at the back of the museum where he had been waiting for the storm for so many years, and taking him around the museum, meeting visitors, later joining other people dancing in the bar area, and eventually being projected on the hull of a large cruise ship docked at the terminal at Circular Quay.

Figure 10.

Taking a video art piece, Storm Sequence (2000) by Shaun Gladwell, for a VideoWalk in a museum (camera by Wendy Neill).

4.8. VideoWalker Approaches

Over time, working with the VideoWalker set-up, a set of rules, approaches and strategies was developed. With the image material at hand, the performer is able to create real-time audiovisual responses to the environment. Often meaningful connections are sought between the projected content and the features of the environment, sometimes matching, at other times contrasting. For instance, on one cliff face on Cockatoo Island where there used to be a lift that brought the workers up to the buildings on the plateau above, footage was projected which was taken from inside a lift (of Gaudí’s building Casa Batlló from 1904, showing the blue tiles of the Modernista (Art Nouveau) staircase in vertical motion). Another example of matching was the projection of a video of neon coloured jellyfish on the water around Cockatoo Island, meeting the actual jellyfish floating around in the bay at that moment.

In other cases contrasts were created, for instance by projecting colourful footage such as close-up movements across graffiti murals on a bland straight wall, or by projecting images of driving through a tunnel to create depth on a flat surface. Projection is also often used to create motion and dynamic patterns in otherwise static environments. However, there can also be a ‘resonance’ between the images chosen to be projected and the surfaces and context.

An important approach is the mode of reminiscing, creating ‘echoes’ or ‘reverberations’; recollections of past events, as in the example of the museum projections mentioned above, and particularly as used in the VideoWalks on Cockatoo Island (see the paper Island Design Camps—Interactive Video Projections as Extended Realities [3]).

Projecting on buildings is a kind of graffiti (particularly when using footage of close-up motions of actual murals), but generally not seen as vandalism as it is not permanent, not damaging, not leaving any traces.

The ability to control the material and framing in real-time is essential for these performances and explorations. This allows the player to improvise and respond to the environment, as described above for the Art Bar event in the museum. In other instances it is possible to prepare and rehearse the material and the placement in space, such as at an earlier public performance at the SEAM Symposium Spatial Phrases [45], and at the Island projections [3]. This follows the same improvisation–composition continuum as in musical practice, as described earlier.

VideoWalks usually take place at dusk. I have found that at night the projections can be quite overpowering, taking over the environment instead of creating a dialogue (resonating or contrasting) with it, while during daytime of course there is often too much light to be able to see the projections. At dusk, the audience is able to see enough of the surroundings to see how the video fits in the context (dawn would be good too, but that is a less popular time to get an audience). This matters particularly when capturing these events on video: as cameras tend to have less dynamic range in light levels than the human eye, a video recording of a night-time event mostly shows the projected image rather than the surroundings, which makes it like watching TV or cinema again. Some environmental light is needed to capture the context in the video recording of the event. Furthermore, at night in many locations there is an abundance of artificial lights, and these are the worst enemy of the projections. Special measures had to be taken to avoid these lights, if possible by having them turned off.

5. Discussion and Conclusions

This paper has presented some key terms and frameworks, approaching our ‘reality’ as an already multi-layered presence, some of which is more ‘virtual’ than others. To explore and illustrate these notions of mixed and extended realities, projects have been discussed that use audiovisual projections to overlay layers of meaning and reminiscence, particularly through the re-projection of previous events relevant for a certain location, creating echoes and resonances. At various points in the paper there has been a reflection on the blurring of the real and the virtual.

The mobile projector set-up presented here has been deliberately designed to be composed of mostly off-the-shelf parts, to demonstrate that anyone could build such an instrument. Further improvements to the set-up are being considered. First of all, the weight is an issue: the set-up still uses lead-acid batteries, as that was the most available technology 20 years ago, and while a lighter battery has been used later, it is still quite heavy. In the near future the use of LiPo (Lithium-Ion Polymer) batteries will be explored, as well as a different voltage converter or, even better, powering a projector directly at its lower internal DC voltage (the earlier projectors, which I opened to inspect, were not suitable for by-passing the mains circuit). Meanwhile, ‘picoprojectors’ and other small LED based projectors have become a lot stronger in light levels, and while it does not yet seem feasible to use these at the monumental scale as applied in the VideoWalks, they are suitable for more intimate, subtle projections under suitable (low environmental light) conditions. These are also usually DC powered, so that there is no need for an ‘inverter’ (battery DC to mains AC converter), and many already have a built-in LiPo battery. Recent experiments with a small 80 ANSI Lumens handheld picoprojector allowed certain uses. It was taken to Cockatoo Island to try out some projections there. The picoprojector was also taken on a visit to the Vivid light festival in May 2022 and used to project near other projections and light sculptures, using content that commented on the works presented, creating a dialogue as in the projection event in the museum (MCA) described above. This of course has to be done respectfully, not interfering with the actual art works. In street art it is common to ‘overlay’ one tag or mural with another, sometimes commenting and creating a dialogue, but more often just overriding or damaging the earlier message with new work; in some cases this is just straightforward vandalism. 
The spray can is a mighty tool (they are usually locked away in hardware stores!), and so is the projector, but as mentioned, light is not permanent, while paint is.

Banksy, as usual, has pioneered this, by smuggling his own artwork into famous museums and putting it up on display. For instance, he successfully targeted the British Museum in 2005 to place his work in an exhibition as a guerrilla-act, with a fake piece of rock art (Peckham Rock, mimicking a cave-painting with a figure pushing a shopping trolley) [25] (pp. 116–117). In early July 2022 climate change activists in the UK performed similar acts (possibly inspired by Banksy), by overlaying a famous landscape painting (The Hay Wain by John Constable from 1821) with their own prints of a less idyllic version of the painting, adding aeroplanes, a road, air pollution, etc. (a technique that Banksy has used too, for instance in Happy Chopper Crude Oil or UFO, both in 2004; he calls these ‘vandalised’ [25] (p. 158) or ‘updated’ paintings). They then superglued themselves to the frame of the painting [52]. Clearly they took great care not to damage the original artwork, and while still illegal they made a strong statement.

There is also the issue of authorship when using images that show other people’s work. I am careful not to ‘steal the light’. Particularly in the case of Street Art, with murals and images that have been erased or painted over, can it be plagiarism to use these images, if these are never to be seen again in the location? In any case, great care is taken to acknowledge the authors, if known, and effort is put into tracing the origins of the works that are respectfully re-situated.

Video projections can be used to alter environments. In one experiment, where I projected a moving image (of jellyfish) on the road surface below where I lived at the time (as part of an informal interactive video installation I set up in the suburb of Birchgrove, on New Year’s Eve 2014), a passing car actually swerved in order to avoid driving through the image, presumably thinking it was real.

Under the right circumstances and with the right approaches, as outlined in this paper, video projections are an extremely effective technique to address and extend the environment, with the main advantage that they create shared realities; all participants are experiencing the mixed, extended realities. Through the use of projections, people can interact in the real world, allowing for social contact, shared experiences, and use of physical space, which is much harder to establish when using the more traditional AR/VR goggles or HMDs which tend to isolate people from each other and from the environment. With set-ups and approaches such as those presented in this paper it is possible to rapidly explore future scenarios in situ, and by being portable the technology can be used in an agile and real-time interactive way, as a performance, testing out different alternatives of extending, modifying, and transforming the environment with projected images and sounds. Although this also works indoors, the main advantage is that it can be applied outside, in the real world, not just in the lab. In a follow-up paper [3] about the projections on Cockatoo Island over a period of seven years, this is further illustrated and discussed. It presents how these set-ups are used in a performative way while also allowing audiences to influence the shared experiences in a more profound way, interacting and co-creating the audiovisual materials, through dedicated interfaces that are part of the set-up.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Some of the video-projection works presented in this paper can be viewed at www.vimeo.com/bertbon (accessed on 18 August 2022).

Acknowledgments

I thank all the curators for their support and for commissioning some of the works presented in this paper: H Morgan-Harris (Night Garden), Chicks on Speed (MCA), Roslyn Helper (Electrofringe), Lawrence Wallen (20 × 20 × 20), and Sarah Breen Lovett & Lee Stickels (Expanded Architecture).

Conflicts of Interest

The author declares no conflict of interest.

References

- Verlinden, J.C.; De Smit, A.; Peeters, A.W.J.; Van Gelderen, M.H. Development of a Flexible Augmented Prototyping System. In Proceedings of the 11th International Conference on Computer Graphics, Visualization and Computer Vision, Plzeň, Czech Republic, 3–7 February 2003; pp. 496–503.

- Verlinden, J.; Horvath, I. Enabling Interactive Augmented Prototyping by a Portable Hardware and a Plug-In-Based Software Architecture. J. Mech. Eng. 2008, 54, 458–470.

- Bongers, A.J. Island Design Camps—Interactive Video Projections as Extended Realities. Big Data Cogn. Comput. 2022, submitted.

- Felluga, D. The Matrix: Paradigm of Postmodernism or Intellectual Poseur? In Taking the Red Pill—Science, Philosophy and Religion in the Matrix; Yeffeth, G., Ed.; BenBella Books: Dallas, TX, USA, 2013; pp. 71–84.

- Kalawsky, R.S. The Science of Virtual Reality and Virtual Environments; Addison Wesley: Boston, MA, USA, 1993.

- Gombrich, E.H. The Story of Art, 16th ed.; Phaidon: London, UK, 1995.

- Gombrich, E.H. Art and Illusion—A Study in the Psychology of Pictorial Representation, 6th ed.; Phaidon: London, UK, 2002.

- Kudielka, R. (Ed.) The Eye’s Mind: Bridget Riley Collected Writings 1965–1999; Thames & Hudson: London, UK, 1999.

- Locher, J.L. (Ed.) De Werelden van M. C. Escher; Meulenhoff: Amsterdam, The Netherlands, 1971.

- Winfield, S. Lucio Fontana; Hayward Gallery Publishing: London, UK, 1999.

- Grynsztejn, M. (Ed.) Take Your Time—Olafur Eliasson; Thames & Hudson: London, UK, 2007.

- Lozano-Hemmer, R. Recorders; Manchester Art Gallery: Manchester, UK, 2010.

- Storer, R. (Ed.) Cai Guo-Qiang—Falling Back to Earth; Queensland Art Gallery: Brisbane, Australia, 2014.

- Sacks, O.W. The Case of the Colourblind Painter. In An Anthropologist on Mars—Seven Paradoxical Tales; Picador: London, UK, 1995.

- Dennett, D.C. Consciousness Explained; Little Brown: Boston, MA, USA, 1991.

- Nørretranders, T. The User Illusion—Cutting Consciousness Down to Size; Sydenham, J., Translator; Penguin: New York, NY, USA, 1998.

- Kastner, J.; Wallis, B. Land and Environmental Art; Phaidon: London, UK, 1998.

- Long, R. Richard Long—Walking the Line; Thames & Hudson: London, UK, 2002.

- Goldsworthy, A. Andy Goldsworthy—Projects; Abrams: New York City, NY, USA, 2017.

- Molony, J.; McKendry, M. James Turrell—A Retrospective; Catalogue of the exhibition 13/12/2014-28/6/2015; National Gallery of Australia: Canberra, Australia, 2014.

- Nash, D. David Nash; Thames & Hudson: London, UK, 2007.

- Gormley, A. (Ed.) Inside Australia; Thames & Hudson: London, UK, 2005.

- Riedelsheimer, T. Rivers and Tides; New Video Group: New York, NY, USA, 2001.

- Bongers, A.J. Tangible Landscapes and Abstract Narratives. In Proceedings of the 14th International Conference on Tangible, Embedded, and Embodied Interaction (TEI), Sydney, Australia, 9–12 February 2020; pp. 689–695.

- Banksy. Wall and Piece; Century, Random House: London, UK, 2005.

- Peiter, S. (Ed.) Guerilla Art; Laurence King Publishing: London, UK, 2009.

- Yarhouse, B. Animation in the Street: The Seductive Silence of Blu. Animat. Stud. 2013, 8. Available online: https://journal.animationstudies.org/brad-yarhouse-animation-in-the-street-the-seductive-silence-of-blu/ (accessed on 18 August 2022).

- Dew, C. Uncommissioned Art—An A-Z of Australian graffiti; Melbourne University Publishing Ltd.: Melbourne, Australia, 2007.

- Balog, T. (Ed.) May’s: The May Lane Street Art Project; Exhibition Catalogue; Bathurst Regional Gallery: Bathurst, Australia, 2010.

- Will Coles. Available online: http://www.willcoles.com/ (accessed on 20 June 2022).

- Degoutte, C. Paris Street Art—Saison 2; Omniscience: Paris, France, 2018; pp. 150–152.

- Chadabe, J. Electric Sound—The Past and Promise of Electronic Music; Prentice-Hall: Hoboken, NJ, USA, 1997.

- Paradiso, J. New Ways to Play: Electronic Music Interfaces. IEEE Spectrum 1997, 34, 18–30.

- Miranda, E.R.; Wanderley, M.M. New Digital Musical Instruments: Control and Interactions Beyond the Keyboard; A-R Editions: Middleton, WI, USA, 2006.

- Bongers, A.J. Interactivation—Towards an E-cology of People, Our Technological Environment, and the Arts. Ph.D. Thesis, Vrije Universiteit Amsterdam, Amsterdam, The Netherlands, July 2006.

- Dix, A.; Finlay, J.; Abowd, G.; Beale, R. Human-Computer Interaction, 3rd ed.; Prentice Hall: Hoboken, NJ, USA, 2004.

- Sharp, H.; Rogers, Y.; Preece, J. Interaction Design—Beyond Human-Computer Interaction, 5th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Jacko, J.A. (Ed.) The Human-Computer Interaction Handbook—Fundamentals, Evolving Technologies, and Emerging Applications, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2012.

- Bongers, A.J. Understanding Interaction—The Relationships between People, Technology, and the Environment; CRC Press: Boca Raton, FL, USA, 2022.

- Wilson, D.W. Sensor- and Recognition Based Input for Interaction. In The Human-Computer Interaction Handbook—Fundamentals, Evolving Technologies, and Emerging Applications, 3rd ed.; Jacko, J.A., Ed.; CRC Press: Boca Raton, FL, USA, 2012; Chapter 7; pp. 133–156.

- Schomaker, L.; Münch, S.; Hartung, K. (Eds.) A Taxonomy of Multimodal Interaction in the Human Information Processing System; Report of the ESPRIT project 8579 MIAMI; NICI: Nijmegen, The Netherlands, 1995.

- Kress, G.R.; Van Leeuwen, T.J. Multimodal Discourse, the Modes and Media of Contemporary Communication; Oxford University Press: Oxford, UK, 2001.

- Kress, G.R. Multimodality—A Social Semiotic Approach to Contemporary Communication; Routledge: London, UK, 2010.

- Gibson, J.J. The Ecological Approach to Visual Perception; Houghton Mifflin: Boston, MA, USA, 1979.

- Bongers, A.J. Interactive Video Projections as Augmented Environments. Int. J. Arts Technol. 2012, 15, 17–52.

- Bongers, A.J. The Projector as Instrument. J. Pers. Ubiquitous Comput. 2011, 16, 65–75.

- Bongers, A.J.; Mery-Keitel, A.S. Interactive Kaleidoscope: Audience Participation Study. In Proceedings of the 23rd Australian Computer-Human Interaction Conference, Canberra, Australia, 28 November–2 December 2011; pp. 58–61.

- Bongers, A.J. Facets of Expanded Architecture: Interactivating the Carriage Works building. In Expanded Architecture—Avant-garde Film + Expanded Cinema + Architecture; Stickels, L., Lovett, S.B., Eds.; Broken Dimanche Press: Berlin, Germany, 2012; pp. 53–57.

- Cycling ’74. Available online: http://www.cycling74.com/ (accessed on 18 August 2022).

- Phidgets. Available online: http://www.phidgets.com/ (accessed on 18 August 2022).

- Bullock, N.; French, B. (Eds.) Shaun Gladwell: Pacific Undertow; Catalogue for the Exhibition; Museum of Contemporary Art (MCA): Sydney, Australia, 2019.

- Gayle, D. Climate Protesters Glue Themselves to National Gallery Artwork. The Guardian, 4 July 2022. Available online: https://www.theguardian.com/environment/2022/jul/04/climate-protesters-glue-themselves-to-national-gallery-artwork (accessed on 18 August 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).