Classification of Scientific Documents in the Kazakh Language Using Deep Neural Networks and a Fusion of Images and Text

Abstract

1. Introduction


- A classification model for scientific documents that fuses information from text and images and outperforms models that use only text or only images.


- An application of the model to scientific documents written in Kazakh.
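The first contribution depends on combining the two modalities. The sketch below shows one common scheme, feature-level (early) fusion by concatenation. It assumes that each document has already been reduced to a text embedding and an image embedding by separate encoders. The function names (`l2_normalize`, `fuse_features`, `classify`) and the toy weights are hypothetical. This illustrates the general technique and is not necessarily the authors' architecture.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm so neither modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_features(text_vec: Sequence[float], image_vec: Sequence[float]) -> List[float]:
    """Feature-level (early) fusion: normalize each modality, then concatenate."""
    return l2_normalize(text_vec) + l2_normalize(image_vec)


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(
    fused: Sequence[float],
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> List[float]:
    """Linear layer plus softmax over classes; `weights` holds one row per class."""
    logits = []
    for row, b in zip(weights, bias):
        if len(row) != len(fused):
            raise ValueError("weight row length must match fused vector length")
        logits.append(sum(w * x for w, x in zip(row, fused)) + b)
    return softmax(logits)


# Toy example: a 2-d "text" vector, a 2-d "image" vector, and two classes.
fused = fuse_features([3.0, 4.0], [0.0, 2.0])  # [0.6, 0.8, 0.0, 1.0]
probs = classify(fused, [[1, 0, 0, 0], [0, 0, 0, 1]], [0.0, 0.0])
```

In a trained model, the classifier weights would be learned jointly with, or on top of, the two encoders. Per-modality normalization is one simple way to keep a high-magnitude image embedding from swamping the text features after concatenation.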

2. Literature Review

2.1. NLP Solutions for the Kazakh Language

2.2. Classification of Scientific Documents

2.3. Fusion of Text and Images

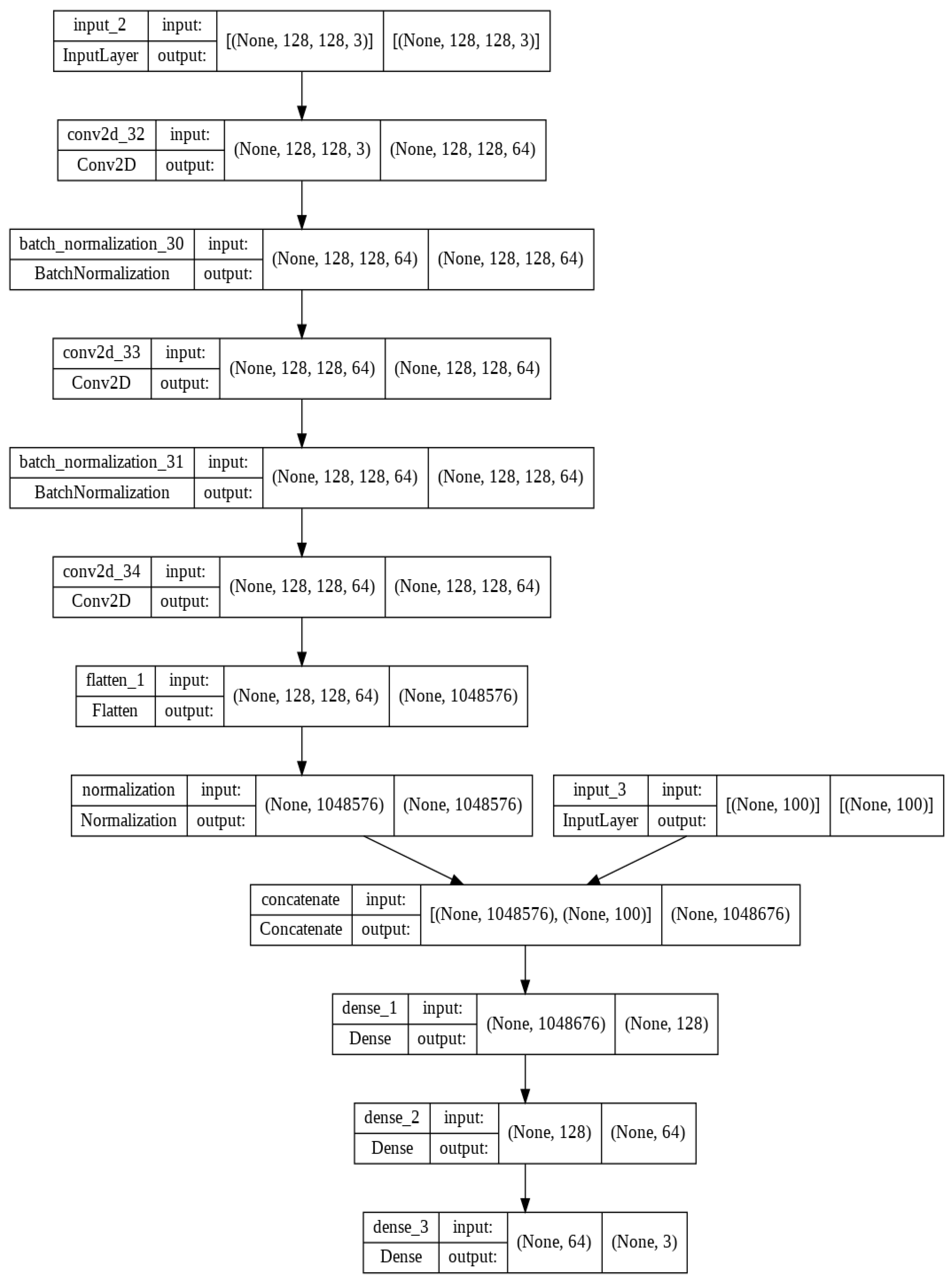

3. Methods and Materials

4. Experimental Evaluation

4.1. Dataset

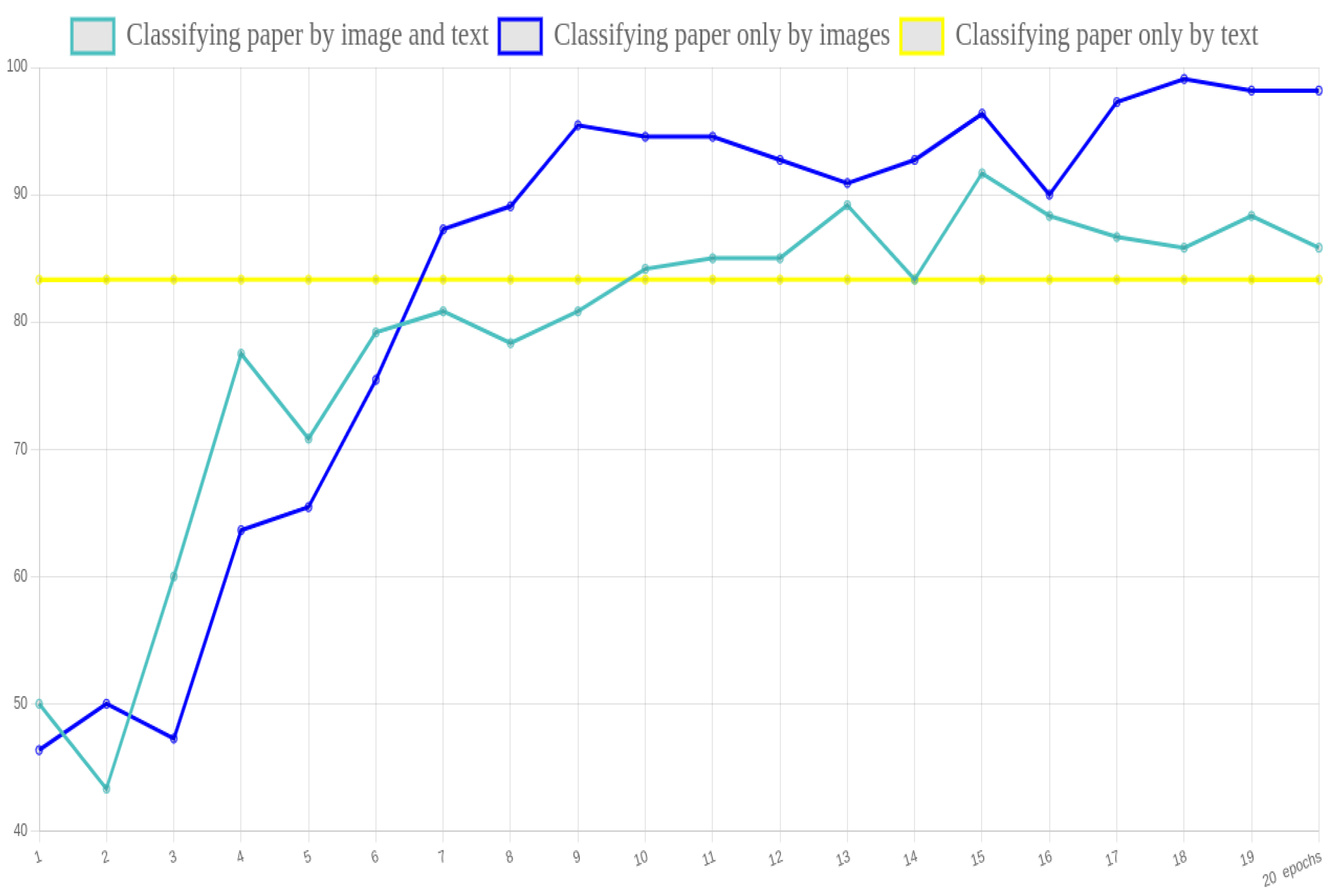

4.2. Results

4.3. Discussion of Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Halkidi, M.; Nguyen, B.; Varlamis, I.; Vazirgiannis, M. THESUS: Organizing Web document collections based on link semantics. VLDB J. 2003, 12, 320–332.

2. Bharathi, G.; Venkatesan, D. Improving information retrieval using document clusters and semantic synonym extraction. J. Theor. Appl. Inf. Technol. 2012, 36, 167–173.

3. Tsoumakas, G.; Katakis, I. Multi-label classification: An overview. Int. J. Data Warehous. Min. (IJDWM) 2007, 3, 1–13.

4. Kowsari, K.; Jafari Meimandi, K.; Heidarysafa, M.; Mendu, S.; Barnes, L.; Brown, D. Text classification algorithms: A survey. Information 2019, 10, 150.

5. Kastrati, Z.; Imran, A.S.; Yayilgan, S.Y. The impact of deep learning on document classification using semantically rich representations. Inf. Process. Manag. 2019, 56, 1618–1632.

6. Osman, A.H.; Barukub, O.M. Graph-based text representation and matching: A review of the state of the art and future challenges. IEEE Access 2020, 8, 87562–87583.

7. Babić, K.; Martinčić-Ipšić, S.; Meštrović, A. Survey of neural text representation models. Information 2020, 11, 511.

8. Mikolov, T.; Deoras, A.; Povey, D.; Burget, L.; Černocký, J. Strategies for training large scale neural network language models. In Proceedings of the 2011 IEEE Workshop on Automatic Speech Recognition & Understanding, Waikoloa, HI, USA, 11–15 December 2011; pp. 196–201.

9. Pollak, S.; Pelicon, A. EMBEDDIA project: Cross-Lingual Embeddings for Less-Represented Languages in European News Media. In Proceedings of the 23rd Annual Conference of the European Association for Machine Translation, Ghent, Belgium, 1–3 June 2022; pp. 291–292.

10. Ulčar, M.; Robnik-Šikonja, M. High quality ELMo embeddings for seven less-resourced languages. In Proceedings of the 12th Conference on Language Resources and Evaluation, Marseille, France, 13–15 May 2020; pp. 4731–4738. arXiv 2019, arXiv:1911.10049.

11. Khusainova, A.; Khan, A.; Rivera, A.R. SART - Similarity, analogies, and relatedness for Tatar language: New benchmark datasets for word embeddings evaluation. arXiv 2019, arXiv:1904.00365.

12. Yessenbayev, Z.; Kozhirbayev, Z.; Makazhanov, A. KazNLP: A pipeline for automated processing of texts written in Kazakh language. In Proceedings of the International Conference on Speech and Computer, St. Petersburg, Russia, 7–8 October 2020; Springer: Cham, Switzerland, 2020; pp. 657–666.

13. Makhambetov, O.; Makazhanov, A.; Yessenbayev, Z.; Matkarimov, B.; Sabyrgaliyev, I.; Sharafudinov, A. Assembling the Kazakh language corpus. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1022–1031.

14. Makazhanov, A.; Sultangazina, A.; Makhambetov, O.; Yessenbayev, Z. Syntactic annotation of Kazakh: Following the Universal Dependencies guidelines. In Proceedings of the 3rd International Conference on Computer Processing in Turkic Languages, Kazan, Russia, 17–19 September 2015; pp. 338–350.

15. Yelibayeva, G.; Sharipbay, A.; Bekmanova, G.; Omarbekova, A. Ontology-Based Extraction of Kazakh Language Word Combinations in Natural Language Processing. In Proceedings of the International Conference on Data Science, E-learning and Information Systems 2021, Petra, Jordan, 5–7 April 2021; pp. 58–59.

16. Haisa, G.; Altenbek, G. Deep Learning with Word Embedding Improves Kazakh Named-Entity Recognition. Information 2022, 13, 180.

17. Cai, Y.L.; Ji, D.; Cai, D. A KNN Research Paper Classification Method Based on Shared Nearest Neighbor. In Proceedings of the NTCIR-8 Workshop Meeting, Tokyo, Japan, 15–18 June 2010; pp. 336–340.

18. Zhang, M.; Gao, X.; Cao, M.D.; Ma, Y. Neural networks for scientific paper classification. In Proceedings of the First International Conference on Innovative Computing, Information and Control-Volume I (ICICIC’06), Beijing, China, 30 August–1 September 2006; pp. 51–54.

19. Jaya, I.; Aulia, I.; Hardi, S.M.; Tarigan, J.T.; Lydia, M.S. Scientific documents classification using support vector machine algorithm. J. Phys. Conf. Ser. 2019, 1235, 12082.

20. Kim, S.W.; Gil, J.M. Research paper classification systems based on TF-IDF and LDA schemes. Hum.-Cent. Comput. Inf. Sci. 2019, 9, 30.

21. Zhang, Y.; Zhao, F.; Lu, J. P2V: Large-scale academic paper embedding. Scientometrics 2019, 121, 399–432.

22. Risch, J.; Krestel, R. Domain-specific word embeddings for patent classification. Data Technol. Appl. 2019, 53, 108–122.

23. Lv, Y.; Xie, Z.; Zuo, X.; Song, Y. A multi-view method of scientific paper classification via heterogeneous graph embeddings. Scientometrics 2022, 127, 30.

24. Mondal, T.; Das, A.; Ming, Z. Exploring multi-tasking learning in document attribute classification. Pattern Recognit. Lett. 2022, 157, 49–59.

25. Harisinghaney, A.; Dixit, A.; Gupta, S.; Arora, A. Text and image based spam email classification using KNN, Naïve Bayes and Reverse DBSCAN algorithm. In Proceedings of the 2014 International Conference on Reliability Optimization and Information Technology (ICROIT), Faridabad, India, 6–8 February 2014; pp. 153–155.

26. Audebert, N.; Herold, C.; Slimani, K.; Vidal, C. Multimodal deep networks for text and image-based document classification. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Würzburg, Germany, 16–20 September 2019; pp. 427–443. arXiv 2019, arXiv:1907.06370v1.

27. Li, P.; Jiang, X.; Zhang, G.; Trabucco, J.T.; Raciti, D.; Smith, C.; Ringwald, M.; Marai, G.E.; Arighi, C.; Shatkay, H. Utilizing image and caption information for biomedical document classification. Bioinformatics 2021, 37 (Suppl. 1), i468–i476.

28. Jiang, X.; Li, P.; Kadin, J.; Blake, J.A.; Ringwald, M.; Shatkay, H. Integrating image caption information into biomedical document classification in support of biocuration. Database 2020, 2020, baaa024.

29. Kaur, G.; Kaushik, A.; Sharma, S. Cooking is creating emotion: A study on Hinglish sentiments of YouTube cookery channels using semi-supervised approach. Big Data Cogn. Comput. 2019, 3, 37.

30. Shah, S.R.; Kaushik, A.; Sharma, S.; Shah, J. Opinion-mining on Marglish and Devanagari comments of YouTube cookery channels using parametric and non-parametric learning models. Big Data Cogn. Comput. 2020, 4, 3.

| Task(s) | Dataset | Model | Language of Text | Reference |
|---|---|---|---|---|
| Keyword extraction, comment moderation, text generation | CoSimLex, cross-lingual analogy | ELMo embeddings, CroSloEngual BERT, LitLat BERT, FinEst BERT, SloBERTa, and Est-RoBERTa | Estonian, Latvian, Lithuanian, Slovenian, Croatian, Finnish, Swedish | [9,10] |
| Word analogy, relatedness, and similarity | Custom | Skip-gram with negative sampling (SGNS), fastText, GloVe | Tatar, English | [11] |
| Text normalization, word and sentence tokenization, language detection, morphological analysis | Kazakh Language Corpus, Kazakh dependency treebank | Graph-based parser | Kazakh | [12] |
| Nominative word combination extraction | - | Syntactic descriptions | Kazakh | [15] |
| Named entity recognition | Tourism gazetteers | WSGGA model | Kazakh | [16] |
| Patent classification | Patents provided by the National Institute of Informatics from 1993 to 2002 | Shared nearest neighbor | Japanese, English | [17] |
| Scientific document classification | Cora scientific paper corpus | Feed-forward neural networks | English | [18] |
| Classification | Papers from the International Conference on Computing and Applied Informatics (ICCAI) and the SpringerLink collection | Support vector machines with a bag-of-words (BoW) representation using TF-IDF weights | English | [19] |
| Classification | Papers from the Future Generation Computer Systems (FGCS) journal | K-means clustering based on TF-IDF | English | [20] |
| Paper classification, paper similarity, and paper influence prediction | Academic papers | Paper2Vector | English | [21] |
| Patent classification | Patents | fastText | English | [22] |
| Semantic similarity between papers; citation relationships between papers and journals | Microsoft Academic Graph, Proceedings of the National Academy of Sciences, American Physical Society | Decision tree, multilayer perceptron | English | [23] |
| Classification of document attributes | L3iTextCopies | Multi-task learning, single-task learning | Images | [24] |
| Classification | Enron corpus | k-nearest neighbors, Naïve Bayes, and Reverse DBSCAN (density-based spatial clustering of applications with noise) | English | [25] |
| Classification | Tobacco 3482, RVL-CDIP | Document embedding, sequence of word embeddings, convolutional neural network, MobileNetV2 | English | [26] |
| Classification | GXD2000, DSP | Random forest, CNN-BiLSTM, HRNN, CNN | English | [27,28] |
| Clustering, classification | Custom | DBSCAN, random forest, SVM, logistic regression | Hinglish, Marglish, Devanagari | [29,30] |
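Two of the surveyed systems ([19,20]) represent papers with TF-IDF weights. The sketch below uses the plain, unsmoothed variant tf(t, d) · log(N / df(t)) on already tokenized documents. The function name `tf_idf` and the toy tokens are illustrative. Libraries such as scikit-learn default to a smoothed idf with L2 row normalization, and the exact weighting used in [19,20] is not specified here.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Return one {term: weight} dict per tokenized document.

    tf(t, d) = count(t, d) / len(d)
    idf(t)   = log(N / df(t)), where df(t) = number of documents containing t
    """
    n_docs = len(docs)
    df: Counter = Counter()
    for doc in docs:
        df.update(set(doc))  # count each term at most once per document

    weights = []
    for doc in docs:
        counts = Counter(doc)
        length = len(doc)
        weights.append(
            {t: (c / length) * math.log(n_docs / df[t]) for t, c in counts.items()}
        )
    return weights


docs = [["kazakh", "corpus", "kazakh"], ["corpus", "parser"]]
w = tf_idf(docs)
# "corpus" occurs in every document, so its idf, and therefore its weight, is 0.
```

Terms that appear in every document get zero weight. This is why TF-IDF emphasizes vocabulary that distinguishes one paper from the rest of the collection.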

| Model | Accuracy (%) |
|---|---|
| Naïve Bayes | 83.33 |
| CNN model (images only) | 83.23 |
| Deep NN model (images and text) | 87.68 |
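The margins implied by the accuracy table can be made explicit with a short calculation. It uses only the three reported accuracies and reports two quantities. The first is the absolute gain in percentage points. The second is the relative reduction in error rate, taking error = 100 − accuracy. The helper names are illustrative. These figures do not indicate statistical significance, which would depend on the size of the test set.

```python
# Accuracies (%) copied from the results table.
ACCURACY = {
    "Naive Bayes": 83.33,
    "CNN (images only)": 83.23,
    "Deep NN (images and text)": 87.68,
}


def gain_pp(new: float, old: float) -> float:
    """Absolute improvement in percentage points, rounded to 2 decimals."""
    return round(new - old, 2)


def error_reduction(new: float, old: float) -> float:
    """Relative reduction in error rate, where error = 100 - accuracy."""
    return (new - old) / (100.0 - old)


fused = ACCURACY["Deep NN (images and text)"]
for name in ("Naive Bayes", "CNN (images only)"):
    base = ACCURACY[name]
    print(f"{name}: +{gain_pp(fused, base):.2f} pp, "
          f"{100 * error_reduction(fused, base):.1f}% fewer errors")
# Naive Bayes: +4.35 pp, 26.1% fewer errors
# CNN (images only): +4.45 pp, 26.5% fewer errors
```

A gain of about 4.4 percentage points corresponds to roughly one quarter fewer misclassified documents than either single-modality baseline.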

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bogdanchikov, A.; Ayazbayev, D.; Varlamis, I. Classification of Scientific Documents in the Kazakh Language Using Deep Neural Networks and a Fusion of Images and Text. Big Data Cogn. Comput. 2022, 6, 123. https://doi.org/10.3390/bdcc6040123
