Abstract

Text mining in big data analytics is emerging as a powerful tool for harnessing the power of unstructured textual data by analyzing it to extract new knowledge and to identify significant patterns and correlations hidden in the data. This study seeks to determine the state of text mining research by examining the developments within published literature over past years and provide valuable insights for practitioners and researchers on the predominant trends, methods, and applications of text mining research. In accordance with this, more than 200 academic journal articles on the subject are included and discussed in this review; the state-of-the-art text mining approaches and techniques used for analyzing transcripts and speeches, meeting transcripts, and academic journal articles, as well as websites, emails, blogs, and social media platforms, across a broad range of application areas are also investigated. Additionally, the benefits and challenges related to text mining are also briefly outlined.

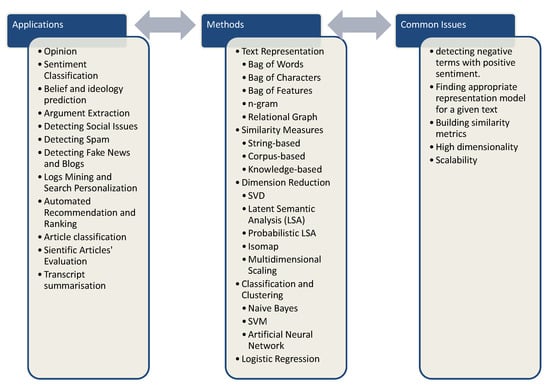

1. Introduction

In recent years, we have witnessed an increase in the quantities of available digital textual data, generating new insights and thereby opening up opportunities for research along new channels. In this rapidly evolving field of big data analytic techniques, text mining has gained significant attention across a broad range of applications. In both academia and industry, there has been a shift towards research projects and more complex research questions that mandate more than the simple retrieval of data. Due to the increasing importance of artificial intelligence and its implementation on digital platforms, the application of parallel processing, deep learning, and pattern recognition to textual information is crucial. All types of business models, market research, marketing plans, political campaigns, or strategic decision-making are facing an increasing need for text mining techniques in order to address the competition.

Large amounts of textual data can be collected as part of a research project, such as scientific literature, transcripts in the marketing and economic sectors, speeches in the field of political discourse (e.g., presidential campaign and inauguration speeches), and meeting transcripts. Furthermore, online sources, such as emails, web pages, blogs/micro-blogs, social media posts, and comments, provide a rich source of textual data for research [1]. Large amounts of data are also being collected in semi-structured form, such as log files containing information from servers and networks. As such, text mining analysis is useful for both unstructured and semi-structured textual data [1].

Data mining and text mining differ on the type of data they handle. While data mining handles structured data coming from systems, such as databases, spreadsheets, ERP, CRM, and accounting applications, text mining deals with unstructured data found in documents, emails, social media, and the web. Thus, the difference between regular data mining and text mining is that in text mining the patterns are extracted from natural language text rather than from structured databases of facts [2]. Since all the written or spoken information can be represented in textual form, data mining requires all kinds of text mining tools when it comes to the interpretation and analysis of sentences, words, phrases, speeches, claims, adverts, and statements. This paper conducts an extensive analysis of text mining applications in big data analytics as used in various commercial fields and academic studies. While the vast majority of the literature deals with the optimization of a specific text mining technique, this paper seeks to summarize the features of all text mining methods, thereby summarizing the state-of-the-art practices and approaches in all the possible fields of application. It is centered around seven key applications of text mining in transcripts and speeches, meeting transcripts, and academic journal articles, as well as websites, emails, blogs, and social media networking sites; for each of these, we, respectively, provide a description of the field, their functionality, the most commonly used methods, the associated problems, and the related and relevant references.

The remaining sections of this paper are organized in the following manner. In Section 2, we introduce the topic of text mining in transcripts and speeches. We explain the different classification techniques used in, for instance, the analysis of political speeches that classify opinions or sentiments in a manner that allows one to infer from a text or speech the ideology that a speaker most probably espouses. Furthermore, we explain the methods used in classifying transcripts and speeches and identify the shortcomings of these methods, which are primarily related to the behavioral nature of human beings, such as ironic or ideological behavior. In Section 3, we take a closer look at blog mining and the dominance of news-related content in blogs and micro-blogging, and present the methods used in this area. Most of the methods applied in blog mining are based on dimensionality reduction, which is also found in other fields of text mining applications. Additionally, the relationship between blog mining and cybersecurity, which is an interesting and novel application of blog mining, is also covered in this section. In Section 4, we analyze email mining and the techniques commonly used in relation to it. A very specific feature of email mining is its noisy data, which is discussed in this section. Moreover, we explain the challenges in identifying the content of the email body and how email mining is used in business intelligence. The web mining techniques that are used in screening and analyzing websites are studied in Section 5. The features of a website, such as links, links between websites, anchor text, and HTML tags, are also discussed. Moreover, the difficulty of capturing unexpected and dynamically generated patterns of data is also explored. Additionally, the importance of pattern recognition and text matching in e-commerce is highlighted. In Section 6, we present studies conducted on the use of Twitter and Facebook and explain the role of text mining in marketing strategies based upon social media, as well as the use of social media platforms for the prediction of financial markets. In Section 7 and Section 8, we round up our extensive analysis of text mining applications by exploring the text mining techniques used for academic journal articles and meeting transcripts. Section 9 discusses the important issue of extracting hidden knowledge from a set of texts and building hypotheses. Finally, in the concluding section, we highlight the advantages and challenges related to text mining and discuss its potential benefits to society and individuals.

2. Text Mining in Transcripts and Speeches

Text mining refers to the extraction of information and patterns that are implicit, previously unknown, and potentially valuable in an automatic or semi-automatic manner from immense unstructured textual data, such as natural-language texts [3].

There are two types of text mining algorithms: supervised learning and unsupervised learning (the two terms originated in machine learning methods). Supervised learning algorithms are employed when there is a set of predictors to predict a target variable. The algorithm uses the target’s observed values to train a prediction model. Support vector machines (SVMs) are a set of supervised learning methods used for classification and prediction. On the other hand, unsupervised learning methods do not use a target value to train their models. In other words, the unsupervised learning algorithms use a set of predictors (features) to reveal hidden structures in the data. Non-negative matrix factorization is an unsupervised learning method [4].

Transcripts are a written or printed version of material originally presented in another medium, such as in speeches. Therefore, the analysis of transcripts can be treated in the same manner as the analysis of speeches, as spoken words need to be pre-processed through, for instance, a voice-recognition API or manual transcription. Despite its extensive application to transcripts in other fields, such as marketing and political science, text mining as a technique in economics has historically been less explored. Bholat et al. [5] presented a comprehensive overview of the various text mining techniques used for research topics of interest to central banks for analyzing a corpus of documents, including, amongst others, the verbatim transcripts of meetings. Recently, three years of speeches, interviews, and statements of the Secretary General of the Organization of the Petroleum Exporting Countries (OPEC) were analyzed using text-mining techniques [6].

Ideology, a key factor shaping an individual’s system of beliefs and opinions and thereby guiding their actions, is an important feature in the text mining of political (or religious) textual data. Ideology provides the “knowledge of what-goes-with-what” [7] and shapes each individual’s perception of any given issue [8]. However, the main issue in taking ideology as a feature for text and opinion mining is that, in many cases, the ideology of the speaker is not very clear, especially when it comes to politicians. To overcome this issue, one may use texts with a known ideological background to build a classification model that identifies the ideology behind a text based on its content. Applying the trained classification model to a political text helps reveal the ideology behind the speech or text and, consequently, the opinion of its author.

Two approaches are extensively used in text mining: opinion classification and sentiment classification [9].

2.1. Opinion Classification

The main concern in opinion mining is to determine to what extent a given text supports or opposes a specific subject. Although opinion mining is widely used to analyze political texts, from speeches to short texts on Twitter [8], it is very useful in other fields, too. For instance, one may use opinion mining to determine the opinion of customers on the features of a product, an audience’s opinion on a movie, or people’s favorite asset in a market [10,11,12]. Most applications, in the context of political speeches, target the curation of general-purpose political opinion classifiers, given their potential and significant uses in e-rulemaking and mass media analysis [13,14,15,16]. The steps involved in the implementation of opinion mining are as follows [17]:

- Determining text subjectivity to decide whether a given text is factual in nature (i.e., it unbiasedly describes a particular situation or event and refrains from providing a positive or a negative opinion on it) or not (i.e., it comments on its subject matter and expresses specific opinions on it), which amounts to a binary categorization of texts into subjective and objective [18,19].

- Determining text polarity to decide if a given subjective text posits a positive or negative opinion on the subject matter [18,20].

- Determining the extent of text polarity to categorize the positive opinion extended by a text on its subject matter as weakly positive, mildly positive, or strongly positive [21,22].
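The three steps above can be illustrated with a minimal lexicon-based sketch. The lexicon, its integer strengths, the thresholds, and the function name `classify_opinion` are all hypothetical choices made for illustration; the cited studies typically rely on trained classifiers rather than a fixed word list.

```python
import re

# Hypothetical toy lexicon mapping terms to signed strengths (illustrative only).
LEXICON = {"good": 1, "great": 2, "excellent": 3,
           "bad": -1, "poor": -2, "terrible": -3}


def classify_opinion(text):
    """Return (subjectivity, polarity, strength) for a text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    scores = [LEXICON[t] for t in tokens if t in LEXICON]

    # Step 1: a text with no opinion-bearing terms is treated as objective.
    if not scores:
        return ("objective", None, None)

    # Step 2: the sign of the summed scores gives the polarity.
    total = sum(scores)
    if total == 0:
        return ("subjective", "neutral", None)
    polarity = "positive" if total > 0 else "negative"

    # Step 3: the average magnitude per opinion term gives the strength
    # (thresholds are arbitrary assumptions).
    magnitude = abs(total) / len(scores)
    if magnitude <= 1:
        strength = "weak"
    elif magnitude <= 2:
        strength = "mild"
    else:
        strength = "strong"
    return ("subjective", polarity, strength)
```

For example, `classify_opinion("The meeting starts at noon.")` is treated as objective because it contains no lexicon term, whereas a text containing "excellent" and "great" is labeled subjective, positive, and strong.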

The literature related to opinion mining is growing (see [23,24]). A highly beneficial source for opinion classification is WordNet [25] by Princeton University, a lexical database of the English language containing nouns, verbs, adjectives, and adverbs grouped into 117,000 sets of cognitive synonyms (synsets), with each set expressing a distinct concept. A detailed description of WordNet can be found in an article by Miller et al. [26].

2.2. Sentiment Classification

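Sentiment classification is closely related to opinion mining and is mainly based on a technique called sentiment scoring. The basic idea behind the technique is to extract the affective content of a text based on its appraisal, polarity, tone, and valence [27]. In order to build a sentiment score, one may use predefined lists of terms with allocated quantitative weights for positive and negative connotations. Counting the positive and negative terms then yields a score showing how much a text approves or opposes a given subject [28]. Assuming normalization by the total number of terms, which is consistent with the range and interpretation given in [27], the score can be written as:

$$\text{Sentiment score} = \frac{n^{+} - n^{-}}{N},$$

where $n^{+}$ and $n^{-}$ are the numbers of positive and negative terms and $N$ is the total number of terms in the text.

Taking the weights into account (if weights already exist):

$$\text{Sentiment score} = \frac{\sum_{i} w_{i}^{+} - \sum_{j} w_{j}^{-}}{N},$$

where $w_{i}^{+}$ is the sentiment weight for the $i$th positive term, and $w_{j}^{-}$ is the sentiment weight for the $j$th negative term.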

This measure is subsequently interpreted as a relative gap between positively and negatively connoted language. In a seemingly convenient manner, it ranges between −1 and + 1, where a score of 0.5, for example, is interpreted as 50% points overweight for positively connoted language, implying a fairly positive sentiment guiding the text [27].
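A minimal sketch of dictionary-based sentiment scoring is given below. It assumes that the score is the difference between positive and negative term counts (or weights) normalized by the total number of terms in the text; the term lists, the weights, and the function name `sentiment_score` are hypothetical and serve only as an illustration.

```python
import re

# Hypothetical term lists with weights in (0, 1]; illustrative only.
POSITIVE = {"good": 1.0, "support": 0.5, "benefit": 0.8}
NEGATIVE = {"bad": 1.0, "oppose": 0.5, "harm": 0.8}


def sentiment_score(text, weighted=False):
    """Difference of positive and negative terms, divided by all terms."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    if weighted:
        # Sum the dictionary weights of the matched terms.
        pos = sum(POSITIVE[t] for t in tokens if t in POSITIVE)
        neg = sum(NEGATIVE[t] for t in tokens if t in NEGATIVE)
    else:
        # Plain counts: every matched term contributes 1.
        pos = sum(1 for t in tokens if t in POSITIVE)
        neg = sum(1 for t in tokens if t in NEGATIVE)
    return (pos - neg) / len(tokens)
```

With weights bounded by 1, the score stays between −1 and +1. A text such as "good benefit support bad" has three positive terms and one negative term out of four, giving an unweighted score of 0.5.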

However, despite its strengths, such as implementation transparency, relevance, replicability, intuitiveness, and a high level of human supervision, sentiment classification also bears some drawbacks, such as context dependence: terms might hold a positive connotation in their original context (e.g., commercial reviews) but convey a negative tone in political contexts, or vice versa. Furthermore, estimating the positive and negative weights is not always straightforward.

According to Rauh [27], the more technically advanced literature has recently explored context-specific machine-learning approaches (e.g., Ceron et al. [29], Hopkins et al. [30], Oliveira et al. [31], and van Atteveldt et al. [32]). They also addressed its challenges, such as oversimplification, irony, and negation.

2.3. Functionality

One interesting application of opinion and sentiment classification is to use them for predicting someone’s opinion or their system of beliefs and ideology based on their speech or written messages (e.g., text on social media, books, articles, etc.). Klebanov et al. [33] were the first researchers in the area of text classification to examine whether two people hold differing opinions or the same opinion but phrase it differently [34]. Moreover, they offered some insight into the conceptual structure that governs political ideologies, such as how these ideologies succeed in creating coherent belief systems, and determine (for the benefit of those who follow them) what goes with what. However, the results obtained in this manner provide a negligible amount of information regarding the structure of ideologies or the extent to which they are cohesive or convincing. In contrast, the studies conducted by Lakoff [35], Lakoff and Johnson [36], and Klebanov et al. [33] identify the underlying belief systems based on the cognitive structure and metaphors of liberal and conservative ideologies by employing an automatic lexical cohesion detector on Margaret Thatcher’s 1977 speech for the Conservative Party Conference. The identification of the underlying belief systems requires the pre-processing of the text. Miner et al. [37] proposed a pre-processing method by removing stopwords (e.g., “the”, “a”, “an”, etc.), prefixes (e.g., “re”, “pre”, etc.), and suffixes (e.g., “ing”, “ation”, “fy”, “itis”, etc.). Unifying words’ spellings and typesettings (lower and upper cases) and correcting misspelled words is another step in their pre-processing scheme. The pre-processing will make the words normalized in the text and reduce the noise in unstructured text data. Sarkar et al. [38] described a classification algorithm based on a SVM, which allows for 80–89% accuracy.
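The pre-processing steps described above (lowercasing, stopword removal, and stripping of prefixes and suffixes) can be sketched as follows. The word lists are small hypothetical samples, the affix stripping is deliberately crude, and spelling correction is omitted; this is an illustration under these assumptions, not the scheme of Miner et al. [37].

```python
import re

# Small hypothetical samples of stopwords and affixes (illustrative only).
STOPWORDS = {"the", "a", "an", "of", "and", "is", "to", "in"}
PREFIXES = ("re", "pre")
SUFFIXES = ("ation", "ing", "fy")


def strip_affixes(word, min_stem=3):
    """Remove at most one prefix and one suffix, keeping a stem of min_stem letters."""
    for prefix in PREFIXES:
        if word.startswith(prefix) and len(word) - len(prefix) >= min_stem:
            word = word[len(prefix):]
            break
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            word = word[:-len(suffix)]
            break
    return word


def preprocess(text):
    """Lowercase, tokenize, drop stopwords, and strip affixes."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [strip_affixes(t) for t in tokens if t not in STOPWORDS]
```

For instance, `preprocess("The Government is Rebuilding the Nation")` yields `["government", "build", "nation"]`. Such naive affix stripping can damage words that merely begin with "re" or "pre", which is why practical systems usually rely on an established stemmer or lemmatizer.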

Sentiment and opinion classification is also used for the classification of discussion threads and reviews. Lu et al. [39] used opinion classification methods to automatically discover opposing opinions and build an opposing opinion network for a social thread. In order to build the network, they analyzed the agree/disagree relations between posts in a social network platform (i.e., a forum). Sentiment and opinion classification methods based on machine learning (e.g., SVM, neural networks, naive Bayes, maximum entropy, and stochastic gradient descent) have been developed to classify large numbers of online reviews [40,41]. Kennedy and Inkpen [40] and Tripathy et al. [41] applied classification methods to Internet Movie Database (IMDB) movie reviews, though they did not build a network of opinions on the movies.

A challenge in the classification of political speeches is that political speeches feature far fewer sentiment words—typically, adjectives or adverbs—that have been identified to be most indicative of opinions, as in the case of movie reviews. Instead, political speeches tend to express opinions in the choice of the nouns. Moreover, nouns that hold no political connotations in common usage may come across as heavily-laden with political intentions when expressed in the context of a specific debate [8].

Acharya et al. [34] compared the performance of logistic regression, SVM, and naive Bayes (NB) models in analyzing the speeches of United States (U.S.) presidential candidates from 1996 to 2016 to predict a candidate’s political party affiliation, as well as the region and year in which a speech was delivered. They found logistic regression to perform best, followed by the SVM and NB methods. These results are supported by the work conducted by Joachims [42], who found that logistic regression classifies a presidential candidate’s speech as Democratic or Republican most accurately. Overall, predicting the candidate’s political party and the year in which the speech was delivered is relatively easy, while predicting the location in which the speech was delivered proves to be significantly more difficult.

2.4. Arguments Extraction

As another application, text mining is used to extract facts and arguments, specifically from political speeches and documents. An argument, in a certain context, usually consists of two main parts: a claim and a series of minor and major premises to support the claim. The premises are known and already proven facts. Argument extraction is closely related to opinion mining and belief classification. Extracting the arguments from a large amount of textual data not only helps build a knowledge base for a given subject or task but also helps to reexamine different arguments, with different common bases, and to produce new ones at a large scale. Extracting arguments requires distinguishing between the claims and the facts, which results in extracting the arguments along with the underlying facts. The great potential of argument extraction in political textual data has attracted many researchers in text mining. For instance, Sardianos et al. [43] proposed a supervised technique, based on conditional random fields, to extract arguments and their underlying facts. They applied the method to web pages containing news and tagged speeches. Florou et al. [44] applied a variety of argument extraction methods to Greek-language social media to estimate the public support for unannounced (unpublished or unfinished) policies. They took into account the structure of the sentences and discourse markers, like connectives, as well as the tense and mood of the verbal construction, and showed the importance of verbal construction in argument extraction. Goudas et al. [45] developed a two-stage approach to extract the arguments made by bloggers and others in social media related to the arguments made by policymakers, as well as new arguments in social media. The proposed model was applied to a vast amount of Greek-language social media content. Their method has a high accuracy rate in extracting arguments and building relational links between arguments and policies. Lippi and Torroni [46] used machine learning methods to extract arguments from seven party leaders’ debates during the 2015 UK general election. Their results show the importance of using voice features, along with textual data, when it comes to extracting claims and facts from a speech.

2.5. Methods

Generally, the first step in text mining (after cleansing the textual data and reducing the noise) is to represent the text using a proper model. A common text representation model represents a document as a vector of features. The feature vector represents the text by its frequent words or phrases, or by the grammatical structure of its sentences [47]. The simplest vector representations treat a text as a Bag-of-Words (BOW) or as a combination of BOW with a Bag-of-Characters. More advanced versions treat the text as a Bag-of-Features [48]. Another common text representation method is to use a graph or a diagram to represent the relations between the words and segments in a document [49]. The diagram representation can be used to show the relations between terms in a text and to use these terms to classify or categorize the text [48].

The next problem in text mining is to find a similarity measure and a classification function to properly classify the texts. One approach is to employ semantic similarity measures [50]. In addition to similarity measures, one may use machine or statistical learning models to classify textual data. Logistic regression is a binary classification algorithm that applies the logistic function as the hypothesis. The model subsequently locates the optimal parameter vector $\theta$ that minimizes the associated cost function, which then determines a separating sigmoid curve between the two classes [34]. SVM is a statistical classification method that was suggested by Cortes and Vapnik [51]. Exploiting the structural risk minimization principle of computational learning theory, SVM seeks a decision surface that separates the training data points into two classes and forms decisions based on the support vectors, which are identified as the only effective elements in the training set. According to Vinodhini and Chandrasekaran [52], SVMs separate the classes by building a margin in an effort to maximize the distance between each class and that margin.
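As an illustration of the vector representation, the sketch below builds Bag-of-Words count vectors and compares two documents with cosine similarity. Cosine similarity is used here as a simple lexical stand-in and is not one of the semantic similarity measures of [50]; the function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Represent a text as a sparse vector of word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    # Only words present in both documents contribute to the dot product.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)
```

Two four-word texts that share three words, such as "tax cuts help growth" and "tax cuts hurt growth", obtain a similarity of 0.75, while texts with no words in common obtain 0. Because word order is discarded, the two example sentences look very similar despite expressing opposite views, which illustrates a known limitation of the BOW representation.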

NB models learn probabilities from the training data based on a prior distribution across classes, under the assumption that all the features are independent given the class; the class of a new text is then predicted from these learned probabilities [34].
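A minimal multinomial naive Bayes classifier is sketched below to make the independence assumption concrete: the score of a class is its log prior plus the sum of per-word log likelihoods. Laplace (add-one) smoothing, the class name `NaiveBayes`, and the toy labels "A" and "B" are assumptions made for this illustration and are not taken from [34].

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    def fit(self, texts, labels):
        # Class frequencies give the prior; per-class word counts give the likelihoods.
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        best_label, best_logp = None, -math.inf
        for label, n in self.class_counts.items():
            logp = math.log(n / n_docs)  # log prior
            total = sum(self.word_counts[label].values())
            for word in tokenize(text):
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                # Independence assumption: add one log likelihood per word,
                # with add-one smoothing to avoid zero probabilities.
                count = self.word_counts[label][word]
                logp += math.log((count + 1) / (total + len(self.vocab)))
            if logp > best_logp:
                best_label, best_logp = label, logp
        return best_label


# Toy training data with hypothetical party labels "A" and "B".
model = NaiveBayes().fit(
    ["lower taxes and smaller government", "cut taxes for business",
     "expand public healthcare", "invest in public schools"],
    ["A", "A", "B", "B"],
)
```

On this toy data, `model.predict("cut taxes")` returns "A" and `model.predict("public healthcare investment")` returns "B".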

Yu et al. [8] tested party classifiers on congressional speech data. They found that the classifiers trained on House speeches are more effective at processing Senate speeches than vice versa and that the best overall classifier is an SVM with equally weighted features.

In addition to logistic regression, SVM, and NB, other statistical methods are also available for classification in natural language processing, such as maximum entropy and maximum likelihood. The authors of [53] used Candide, an automatic machine translation system developed by IBM, to test the performance of both methods and found maximum entropy techniques to be significantly effective for context-sensitive modeling.

Further evidence for detectable patterns associated with ideological orientation in political speech was found by Diermeier et al. [54], Evans et al. [55], and Laver et al. [56], as these studies achieved a high classification accuracy. Piryani et al. [57] presented an extensive scientometric analysis of the research work undertaken on opinion mining and sentiment analysis during 2000–2016.

Wilson et al. [22] created a system called the OpinionFinder, which performs a subjectivity analysis. Thus, it can automatically identify texts with opinions, sentiments, speculations, and other private states. OpinionFinder seeks to identify subjective sentences and to highlight the various aspects of subjectivity in these sentences, including the source (holder) of the subjectivity and words that are included in phrases expressing positive or negative sentiments. It encompasses four components:

- An NB classifier that applies several lexical and contextual features to distinguish between subjective and objective sentences [58,59];

- A component for identifying speech events (e.g., “stated” and “according to”) and direct subjective expressions (e.g., “appalled” and “is sad”);

- A source identifier combining a conditional random field sequence tagging model [60] and extraction pattern learning [61] to determine the sources of the speech events and subjective expressions [62];

- A component that applies two classifiers to identify the words contained in phrases that express positive or negative sentiments [63].

2.6. Shortcomings

Sentiment analysis, however, faces a predominant challenge with its classification of text under one particular sentiment polarity, whether positive, negative or neutral [24,64,65,66,67]. In order to solve this problem, Fang [68] proposed a general process for the categorization of sentiment polarity.

Another field of application is the detection of offensive language in the so-called hate speech, which refers to submitting to stereotypes to express and propagate an ideology of hate [69,70].

A key problem in speech recognition is that transcripts with a high word error rate are obtained for documented speeches in poor audio conditions and for spontaneous speech recorded in actual conditions, as pointed out by the NIST Rich Transcription Meeting program [71]. Recordings from call centers and telephone surveys are of poor audio quality due to the use of cell phones and/or surrounding noise, unconstrained speech, variable utterance length, and various disfluencies, such as pauses, repetitions, and rectifications. Consequently, speech mining is extremely difficult on this type of corpus [72]. Camelin et al. [72] proposed a sampling and information extraction strategy as the solution to these problems. In order to evaluate the accuracy, as well as the representativeness, of the extracted information, they suggested several solutions based on the Kullback-Leibler divergence.
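To indicate how the Kullback-Leibler divergence can quantify representativeness, the sketch below compares the word distribution of a sample with that of a full corpus. This is a generic illustration and not the procedure of Camelin et al. [72]; the add-one smoothing, the function names, and the toy token lists are assumptions.

```python
import math
from collections import Counter


def word_distribution(tokens, vocab, alpha=1.0):
    """Smoothed relative word frequencies over a shared vocabulary."""
    counts = Counter(tokens)
    total = sum(counts[w] for w in vocab) + alpha * len(vocab)
    return {w: (counts[w] + alpha) / total for w in vocab}


def kl_divergence(p, q):
    """D_KL(P || Q) = sum over w of p(w) * log(p(w) / q(w)), in nats."""
    return sum(p[w] * math.log(p[w] / q[w]) for w in p if p[w] > 0)


# Hypothetical corpus and sample, already tokenized.
corpus = "the call was dropped the call was noisy".split()
sample = "the call was noisy".split()
vocab = set(corpus) | set(sample)

p_sample = word_distribution(sample, vocab)
q_corpus = word_distribution(corpus, vocab)
divergence = kl_divergence(p_sample, q_corpus)
```

A divergence close to zero suggests that the sample reproduces the word distribution of the corpus, whereas larger values indicate a less representative sample. Smoothing keeps every probability strictly positive, so the logarithm is always defined.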

3. Blog Mining

Blogs allow authors to maintain entries that are continuing and arranged in reverse chronology for an audience that can interact with the authors through the comments section. Blogs can belong to a broad variety of genres, ranging from diaries of personal and mundane musings to corporate business blogs; however, they tend to be associated with more personal and spontaneous forms of writing. Social researchers have capitalized on blogs as a source of data in several cases, from performing content analysis related to gender and language use to determining ethnographic participation in blogging communities [73]. After the creation of the very first blog, Links.net, in 1994 [74], the internet became home to hundreds of millions of blogs. Due to the large number of existing blog posts, the blogosphere content may seem haphazard and chaotic [75]. Consequently, effective mining techniques are required to aid in the analysis and comprehension of blog data. Webb and Wang [76] reviewed the general methodological options that are frequently used when studying blogs and micro-blogs; the options investigated included both quantitative and qualitative analyses, and the study was undertaken in an effort to offer practical guidance on how a researcher can reasonably sift through them.

Many of the blog mining techniques are similar to those used for text and web documents; however, the nature of blog content may lead to various linguistic and computational challenges [77,78]. Current research in blog mining reflects the prominence of news or news-related content and micro-blogging. Blog mining, furthermore, overlaps with features of social media mining [79,80].

Apart from the text content, blogs also provide other information, such as details regarding the title and author of the blog, its date and time of publication, and tags or category attributes, among others. Similar to other social media data, blog content also undergoes changes over time. New posts are uploaded, novel topics are deliberated over, perceptions change, and new communities spring up and mature. Identifying and understanding the topics that are trending in the blogosphere can provide credible information regarding product sales, political views, and potentially attention-garnering social areas [81,82].

Methods in blog mining that have gained popularity over the years include classification and clustering [83], probabilistic latent semantic analysis (PLSA) or latent Dirichlet allocation (LDA), mixture models, time series, and stream methods [80]. Existing text mining methods and general dimensionality reduction methods have been used by a number of studies [75,77,84,85,86,87] on blog mining; however, the analysis that can be undertaken with these methods is limited to mono- or bi-dimensional blog data, while the general dimensionality reduction methods may not be effective in preserving information retrieved from blogs [88]. Tsai [78] applied the tag-topic model for blog data mining. Dimensionality reduction was performed with the spectral dimensionality reduction technique Isomap to show the similarity plot of the blog content and tags. Tsai [88] presented an analysis of the multiple dimensions of blog data by proposing the unsupervised probabilistic blogger-link-topic (BLT) model to address the challenges in determining the parties most likely to blog about a specific topic, in identifying the associated links for a given blog post on a given topic, and in detecting spam blogs (splogs). The results indicated that BLT obtained the highest average precision for blog classification compared with other techniques that used the blogger-date-topic (BDT), author-topic (AT), and LDA models. In the study of Tsai [89], the AT model based on the LDA was extended to the analysis and visualization of blog authors, associated links, and time of publication, and a framework based on dimensionality reduction was suggested to visualize the dimensions of content, tags, authors, links, and time of publication. This study was the first to analyze the multiple dimensions of blogs by using dimensionality reduction techniques, namely multidimensional scaling (MDS), Isomap, locally linear embedding (LLE), and LDA, on a set of business blogs.

Sandeep and Patil [90], after conducting a brief review of the literature on blog mining, proposed a multidimensional approach to blog mining by defining a method that combines the blog content and blog tags to discern blog patterns. However, the proposed method can only be applied to text-based blogs.

Blogs can be categorized, influential blogs can be promoted, and new topics can be identified. It is also possible to ascertain perspectives or sentiments from the blogosphere through data mining techniques [81]. Although several solutions are available that can effectively handle information in small volumes, they are static in nature and usually do not scale up accurately owing to their high complexity. Moreover, such solutions have been designed to run once or in a fixed dataset, which is not sufficient for processing huge volumes of streamed data. In response to this issue, Tsirakis et al. [91] suggested a platform focusing on real-time opinion mining from news sites and blogs. Hussein [92] presented a survey on the challenges relevant to the approaches and techniques of sentiment analysis. Furthermore, the research of Chen and Chen [93] applied big data and opinion mining approaches to the analysis of investors’ sentiments in Taiwan. First, the authors reviewed previous studies related to sentiment mining and selection of features; subsequently, they analyzed financial blogs and news articles available online for creating a public mood dynamic prediction model exclusively for Taiwanese stock markets by taking into account the views of behavioral finance and the features of financial communities on the internet.

The filtering of spam blogs is another predominant theme in blog mining, since spam can considerably distort any estimation of the number of blog posts made [78] and the evaluation of cybersecurity threats. Most intelligence analysis studies have focused on analyzing the news or forums for security incidents, but few have concentrated on blogs. Tsai and Chan [85] analyzed blog posts to identify posts made under various categories of cybersecurity threats related to the detection of cyber attacks, cybercrime, and terrorism. PLSA was used for detecting keywords from various cybersecurity blog entries pertaining to specific topics. Along similar lines, Tsai and Chan [94] proposed blog data mining techniques for assessing security threats. They used LDA-based probabilistic methods to detect keywords from security blogs with respect to specific topics. The research concluded that the probabilistic approach can enhance information retrieval related to blog search and keyword detection. Recognition of cyber threats from open threat intelligence can prove beneficial for incident response in very early stages. Lee et al. [95] proposed Sec-Buzzer, a free web service for examining emerging cybersecurity topics based on the mining of open threat intelligence, which is dedicated to locating various emerging topics in cyber threats (i.e., nearly zero-day attacks) and providing possible solutions for organizations. The demonstration showed that, with information collected from experts on Twitter and specific targeted RSS blogs, Sec-Buzzer promptly recognizes the emerging information security threats and, subsequently, publishes related news, technical reports, and solutions in time.

Applications of blog mining vary and include, among others, opinion mining for agriculture [96], prospective industrial technologies [97], decision support in fashion buying processes [98], the detection of major events [99], the retrieval of information regarding popular tourist locations and travel routes [100], the summarization of popular information from massive tourism blog data [101], the summarization of news blogs and the detection of copied and reproduced multilingual content [102,103,104], and the detection of fake news [105].

4. Email Mining

Email is a convenient and common means of textual communication. It is also intrinsically connected to the overall internet experience, since an email account is required for signing up for any form of online activity, including creating accounts on social networking platforms and instant messaging services. The Radicati Group, Inc., executive summary of email statistics report 2012–2019 [106] predicts that the total number of business and consumer emails sent and received daily will exceed 293 billion in 2019; this figure is forecast to increase to over 347 billion by the end of 2023. To optimize the use of emails and explore their business potential, email mining has been extensively undertaken and has seen commendable progress in terms of both research and practice.

Email mining is similar to general text mining since both pertain to textual data. However, specific characteristics of email data set it apart. To begin with, email data can be highly noisy. More specifically, it may include headers, signatures, quotations, or program code. It may also carry extra line breaks or spaces, special character tokens, or spelling and grammar mistakes. Moreover, spaces and periods may be mistakenly absent from it. Hence, email data needs to be cleaned in depth before high-quality mining can take place [107]. In addition, an email is a data stream targeted towards a specific user, and the concepts or distributions of the target audiences of the messages may vary over time with respect to the messages received by that user. It is also difficult to obtain public email data for experiments due to privacy issues [108].
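The kind of cleaning described above can be illustrated with a minimal Python sketch. It is not the procedure of [107]; the function name `clean_email_body` and the specific conventions it relies on (headers ending at the first blank line, quoted lines starting with `>`, and a signature introduced by a `-- ` line) are assumptions made for illustration only.

```python
import re


def clean_email_body(raw: str) -> str:
    """Drop headers, quoted replies, and the signature, then normalize spaces.

    Assumptions (illustrative only): headers end at the first blank line,
    quoted text starts with '>', and the signature follows a '--' line.
    """
    head, sep, body = raw.partition("\n\n")
    if not sep:
        # No blank line found: treat the whole message as body text.
        body = raw
    kept = []
    for line in body.splitlines():
        if line.strip() == "--":
            # Conventional signature delimiter: ignore everything after it.
            break
        if line.lstrip().startswith(">"):
            # Quoted text from an earlier message.
            continue
        kept.append(line)
    # Collapse extra line breaks and repeated spaces into single spaces.
    return re.sub(r"\s+", " ", " ".join(kept)).strip()
```

Real email corpora need considerably more than this (for example, MIME decoding, HTML stripping, and spelling correction), so the sketch only shows the general shape of a pre-processing step.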

Emails contain links to a vast social network with data on the person or organization in charge, which makes email a richer resource for mining [109]. During email mining, the links can be exploited for their content and for a better understanding of behavior. On the other hand, the techniques currently in use are not able to effectively handle the vast amount of data. The data is heterogeneous, noisy, and varied, and mining techniques cannot be easily modified to adjust to big data environments [110]. Another challenge in email mining is data visualization, the lack of which makes decision-making considerably harder. Hidden information cannot be extracted or visualized due to the lack of scalable visualization tools. If the data is not presented more comprehensibly, visualization and decision-making also become difficult for the data miners [111].

Initial studies on email mining predominantly focused on the existing tools for personal collection management since large and diverse collections were not accessible for research use [112]. That changed, most notably with the Enron Corporation email collection [113]. However, the LingSpam corpus, compiled by Androutsopoulos et al. [114], was one of the first publicly available datasets.

A number of email mining studies have focused on people-related tasks, including name recognition and reference resolution, contact information extraction, identity modeling and resolution, role discovery, and expert identification, as well as on providing access to large-scale email archives from multiple viewpoints by using a faceted search [115]. Moreover, significant applications of email mining include filtering emails based on priority and identifying spam and phishing emails, as well as automatic answering, thread summarization, contact analysis, email visualization, network property analysis, and categorization [116,117].

Tang et al. [116] conducted a brief but exhaustive survey on email mining. The authors introduced the feature-based and social structure-based representation approaches, which are often applied in the pre-processing phase. Following this, they identified five email mining tasks: spam detection, email categorization, contact analysis, email network property analysis, and email visualization. The commonly used techniques for each task were then discussed. These included NB, SVMs, rule-based and content-based models, and random forest (RF), as well as K-nearest neighbour (K-NN) classifiers (pertaining to the classification problem in email content detection) and the K-means algorithm (pertaining to the semi-supervised clustering problem). Methods based on principal component analysis (PCA), LDA, and term frequency-inverse document frequency (TF-IDF) were presented as well.
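As an illustration of the TF-IDF weighting mentioned above, the following Python sketch computes one common variant (relative term frequency multiplied by the logarithm of the inverse document frequency). The function name `tf_idf` and the toy tokenized emails are assumptions for illustration; the surveyed studies may use other weighting or smoothing variants.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document.

    Variant assumed here: tf = count / document length,
    idf = log(number of documents / documents containing the term).
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append(
            {t: (c / len(doc)) * math.log(n_docs / df[t]) for t, c in counts.items()}
        )
    return weights


# Two toy "emails", already tokenized (hypothetical data).
emails = [["free", "offer", "now"], ["meeting", "now"]]
weights = tf_idf(emails)
```

In this toy example the term "now" occurs in every document and therefore receives a weight of zero, whereas "free" is specific to the first email and receives a positive weight.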

The study by Mujtaba et al. [117] comprehensively reviewed 98 articles on email classification published between 2006 and 2016 and indexed in the Web of Science core collection databases and the Scopus database. In this study, the methodological decision analysis covered the following five aspects: (1) email classification application areas, (2) the datasets used in each application area, (3) the feature space utilized in each application area, (4) email classification techniques, and (5) the use of performance measures.

Sentiment analysis of online text documents has been a flourishing field of text mining among researchers and scholars. In contrast to the content of public data, real sentiment is often expressed in personal communications. Emails are frequently used for sending emotional messages that reflect deeply meaningful events in the lives of people [118]. On the other hand, sentiment analysis on large business email collections could reveal valuable patterns useful for business intelligence [119,120]. Hangal et al. [118] proposed the use of sentiment analysis techniques on the personal email archives of users to aid the task of personal reflection and analysis. The authors built and publicly released the Muse email mining system, which helps users to analyze, mine, and visualize their own long-term email archives. Moreover, Liu and Lee [120] proposed a framework for email sentiment analysis that uses a hybrid scheme combining K-means clustering with an SVM classifier, and applied it to the Enron email corpus. The framework was evaluated by comparing three labeling methods, namely, SentiWordNet, K-means, and polarity, and five classifiers, namely, SVM, NB, logistic regression (LR), decision tree (DT), and OneR. The empirical results indicated that the combined K-means and SVM algorithm achieved higher accuracy than the other approaches. In continuation of their previous studies, Liu and Lee [121] conducted sentiment clustering on Enron email data from a novel sequential viewpoint. This involved transforming sentiment features into a trajectory representation in order to apply the trajectory clustering (TRACLUS) algorithm, in combination with sentiment temporal clustering, so as to discover the sentiment flow in email messages across the topical and temporal distributions.

Insider threats in cybersecurity are often associated with malicious activities, and they are among the most significant threats faced in business espionage [122]. Chi et al. [123] focused on the detection of insider threats by combining linguistic analysis and the K-means algorithm to analyze communications, such as emails, to ascertain whether an employee meets certain personality criteria and to deduce the risk level for each employee. Soh et al. [124] focused on aspect-based sentiment analysis, which can provide more detailed information. They presented a novel employee profiling framework equipped with deep learning models for insider threat detection, which is based on aspect-based sentiment and social network information. The authors evaluated the newly presented framework, ASEP, on the augmented Enron email dataset and demonstrated that the employee profiles retrieved from ASEP can effectively encode the implicit social network information and, more significantly, the employees’ aspect-based sentiments.

The continued growth in the number of email users has led to a massive increase in spam emails. The global average of the daily spam volume for June 2019 was 459.40 billion, while the corresponding average of the daily (legitimate) email volume was 79.82 billion [125]. The large volume of spam emails moving through computer networks has a debilitating effect on the memory space available to email servers, communication bandwidth, CPU power, and user time. Moreover, if we take into account the fact that the majority of cyber attacks start with a phishing email [126], there can be no doubt that phishing is a high-risk attack vector for organizations and even government agencies. Therefore, a predominant challenge in the email mining process is to identify and isolate spam emails.

Two general approaches are adopted for mail filtering: knowledge engineering (KE) and machine learning (ML) [127]. Spam filtering techniques based on knowledge engineering use a set of predefined rules. These rules are implemented to identify the basic characteristics of the email message. The ML techniques construct a classifier by training it with a set of emails called the training dataset. Several filtering methods based on ML have been extensively adopted when addressing the problem of email spam.
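The contrast between the two approaches can be sketched in a few lines of Python. This is an illustrative toy, not a filter taken from [127]: the rule phrases in `RULES`, the class name `NaiveBayesFilter`, and the training messages in the usage example are all hypothetical, and the ML side is represented by a multinomial naive Bayes classifier with add-one smoothing, chosen here only because NB is one of the techniques named in this section.

```python
import math
from collections import Counter

# Knowledge engineering: hand-written rules (hypothetical example phrases).
RULES = ("free money", "click here", "winner")


def rule_based_is_spam(text: str) -> bool:
    """Flag a message as spam if it contains any predefined phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in RULES)


class NaiveBayesFilter:
    """Machine learning: multinomial naive Bayes with add-one smoothing."""

    def fit(self, texts, labels):
        # Assumes both "spam" and "ham" appear in the training labels.
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        return self

    def _log_score(self, words, label):
        total = sum(self.word_counts[label].values())
        # Log prior of the class plus smoothed log likelihood of each word.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in words:
            count = self.word_counts[label][word]
            score += math.log((count + 1) / (total + len(self.vocab)))
        return score

    def predict(self, text: str) -> str:
        words = text.lower().split()
        return max(("spam", "ham"), key=lambda label: self._log_score(words, label))
```

A possible usage, with a made-up training set:

```python
train_texts = [
    "win free money now",
    "free prize click here",
    "meeting agenda for monday",
    "project report attached",
]
train_labels = ["spam", "spam", "ham", "ham"]
model = NaiveBayesFilter().fit(train_texts, train_labels)
label = model.predict("free money prize")
```

The rule-based function only reacts to the phrases someone wrote down in advance, whereas the classifier derives its decision from whatever vocabulary appears in the training dataset.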

Bhowmick and Hazarika [128] presented an exhaustive review of some of the frequently used content-based email spam filtering methods. They mostly focused on ML algorithms for spam filtering. The authors studied the significant concepts, efforts initiated, effectiveness, and trends in spam filtering. They comprehensively discussed the fundamentals of email spam filtering, the changing nature of spam, and spammers’ tricks to evade the spam filters of email service providers (ESPs). Moreover, they examined the popular machine learning techniques used in combating the menace of spam.

Dada et al. [129] examined the applications of ML techniques to the email spam filtering process of leading internet service providers (ISPs), such as Gmail, Yahoo, and Outlook. They revisited the machine learning techniques used for filtering email spam over the 2004–2018 period, including K-NN, NB, neural networks (NN), rough sets, SVM, NBTree classifiers, the firefly algorithm (FA), C4.5/J48 decision tree algorithms, logistic model tree induction (LMT), and convolutional neural networks (CNN). Stochastic optimization techniques, including evolutionary algorithms (EAs) such as the genetic algorithm (GA), as well as particle swarm optimization (PSO) and the ant colony algorithm (ACO), were also explored by Dada et al. [129], since these optimization engines can enhance feature selection strategies within anti-spam methods.

Most relevant works on this topic classify emails using term occurrence in the email. Some works additionally focus on the semantic properties of the email text. In the study conducted by Bahgat et al. [130], email filtering was based on semantic modeling, which addresses the high dimensionality of features by examining the semantic attributes of words. Various classifiers were studied to gauge their performance in segregating emails as spam or ham in experiments on the Enron dataset. Correlation-based feature selection (CFS), which was introduced as a technique for feature selection, improved the accuracy of the RF and radial basis function (RBF) network classifiers while preserving the accuracy of other classifiers, such as SVM and J48.

A phishing attack that uses sophisticated techniques to direct online customers to a new web page that has not yet been included in the blacklist is called a zero-day attack [131]. In their overview of the work on the filtering of phishing emails and pruning techniques, Chowdhury et al. [132] proposed a multilayer hybrid strategy (MHS) for the zero-day filtering of phishing emails that emerge during one time span, using training data collected previously during another time span. MHS was based on a new pruning method, multilayer hybrid pruning (MHP). The empirical study demonstrated that MHS is effective and that MHP performs better than other pruning techniques.

In a newer approach to the detection of phishing emails, Smadi et al. [133] discussed the relevant work on protection techniques, as well as their advantages and disadvantages. Moreover, they proposed a novel framework that uses a dynamic evolving neural network based on reinforcement learning (RL) to detect phishing attacks in online mode. The proposed model, the phishing email detection system (PEDS), was the first work in this field to use reinforcement learning to detect zero-day phishing attacks. An NN was used as the core of the classification model, and a novel algorithm, called the dynamic evolving neural network using reinforcement learning (DENNuRL), was developed to allow the NN to evolve dynamically and build the best NN capable of solving the problem. It was demonstrated that the proposed technique can handle zero-day phishing attacks with high levels of performance and accuracy, while a comparison with other similar techniques on the same dataset indicated that the proposed model outperformed the existing methods.

5. Web Mining

The World Wide Web (or the web) is at present a popular and interactive medium for disseminating information. The most commonly accessed type of information on websites is textual data, such as emails, blogs, social media, and web news articles. Web data differs from the data retrieved from other sources because of certain characteristics that make it more advantageous. In fact, website information is readily available to the public at large, is cost-effective in terms of access, and can be extensive with respect to coverage and the volume of data contained [134]. However, locating information on the web is a daunting and challenging task because of the immense volume of data and noise contained [135].

Etzioni [136] referred to web mining as the application of data mining techniques to automatically discover and extract knowledge in a website, while Cooley et al. [137] further highlighted the importance of considering the behavior and preferences of the users. Web mining has enabled the analysis of the increasing volume of data accessible on the web. Furthermore, it has indicated that conventional and traditional statistical approaches are inefficient in undertaking this task [138]. Besides, clean and consolidated data is closely connected to the quality and utility of the patterns discerned through these tools since they are directly dependent on the data to be used [139].

Web usage mining (WUM), web structure mining (WSM), and web content mining (WCM) are the three predominant categories of web mining [136,140,141]. WCM and WSM utilize the primary web data, while WUM mines the secondary data [136].

WCM adopts the concepts and principles of data mining to discover information from text and media documents [142]. WCM mainly focuses on web text mining and web multimedia mining.

WSM emphasizes the hyperlink structure of the web to link different objects together [143]. A typical web graph is structured with web pages as nodes and hyperlinks as edges, establishing a connection between two related pages. WSM primarily works on link mining, internal structure mining, and URL mining. In addition, WSM can be used for categorizing web pages and is useful for gathering information, such as that pertaining to the similarities and relationships between different websites. The typical applications of WSM are (a) link-based categorization of web pages, (b) ranking of web pages through a combination of content and structure, and (c) reverse engineering of website models [144]. Link-based classification pertains to the prediction of a web page category based on the words on the page, the links existing between the pages, anchor text, HTML tags, and other potential attributes of a web page.

WUM is the process of applying data mining techniques to the discovery of usage patterns from web data [145]. When a user interacts with a website, web log data is generated on a web server in the form of web server log files. Different types of usage log files, such as the access log, error log, referrer log, and agent log, are created on a server [146]. Web logs are the type of data that proves the most useful when performing a behavioral analysis of a website user [137]. Web usage mining consists of three phases: (1) pre-processing, (2) discovery of usage patterns, and (3) analysis of the patterns. Typical applications are (a) the ones based on user modeling techniques, such as web personalization, (b) adaptive web sites, and (c) user modeling [144]. However, the personalization process, which involves reconstructing a user’s session, has raised important legal and ethical concerns. Velásquez [139] adopted an integrative approach based on the distinctive attributes of web mining to identify the harmful techniques.
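The first two WUM phases can be illustrated with a short Python sketch: pre-processing groups log records into user sessions, and a simple pattern discovery step counts page-to-page transitions. The record format `(user, timestamp_in_seconds, url)`, the function names, and the 30-minute inactivity threshold are assumptions made for illustration (the threshold is a commonly used heuristic, not a value taken from the studies cited here).

```python
from collections import Counter, defaultdict

SESSION_TIMEOUT = 30 * 60  # seconds of inactivity; an assumed heuristic


def sessionize(records):
    """Pre-processing: group (user, timestamp, url) records into sessions."""
    by_user = defaultdict(list)
    for user, ts, url in sorted(records, key=lambda r: (r[0], r[1])):
        by_user[user].append((ts, url))
    sessions = []
    for user, hits in by_user.items():
        current = [hits[0][1]]
        for (prev_ts, _), (ts, url) in zip(hits, hits[1:]):
            if ts - prev_ts > SESSION_TIMEOUT:
                # A long gap closes the current session and starts a new one.
                sessions.append((user, current))
                current = []
            current.append(url)
        sessions.append((user, current))
    return sessions


def transition_counts(sessions):
    """Pattern discovery: count consecutive page pairs within sessions."""
    counts = Counter()
    for _, pages in sessions:
        counts.update(zip(pages, pages[1:]))
    return counts


# Hypothetical records, as they might be extracted from an access log.
log = [
    ("u1", 0, "/home"),
    ("u1", 60, "/products"),
    ("u1", 4000, "/home"),
    ("u2", 10, "/home"),
    ("u2", 20, "/products"),
]
patterns = transition_counts(sessionize(log))
```

The third phase, pattern analysis, would then interpret counts such as how often `/home` is followed by `/products`. Identifying users reliably (for example, behind shared IP addresses) is a separate problem that this sketch does not address.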

Analyzing the patterns generated from a typical web user’s complex behavior is a daunting task since, most of the time, a user is responsible for the spontaneous and dynamic generation of patterns of data [147,148]. The exploration of the web for outliers, such as noise, deviations, incongruent observations, peculiarities, and exceptions, has received attention in the mining community. Chandola et al. [149] provided a general and broad overview of the extensive research conducted on anomaly detection techniques, spanning multiple research areas and application domains, including web applications and web attacks. Gupta and Kohli [148,150,151] made experimental attempts to identify outliers in regression algorithm outputs by using web-based datasets. In fact, various regression algorithms are extensively adopted by several online portals operating in varying application domains, especially e-commerce websites [148]. Specifically, Gupta and Kohli [151] formulated outlier detection as a multicriteria decision-making (MCDM) problem with the help of ordered weighted averaging (OWA) operators. The results indicated that the proposed framework can aid in considerably reducing the outliers; however, its testing was restricted to a static purpose and a small dataset, and the data were scattered over a year. This work was an extension of an earlier study by Gupta and Kohli [150], in which a small experiment was conducted on a web dataset through the application of an ordered weighted geometric averaging operator. A recent study by Gupta and Kohli [148] detected outliers based on the principle of MCDM and utilized ordered weighted operators for the purpose of aggregation.
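For readers unfamiliar with ordered weighted operators, the following Python sketch shows the standard definitions of the ordered weighted averaging operator and its geometric counterpart: the inputs are sorted in descending order, and the weights are applied by position rather than by source. It illustrates only the aggregation step, not the outlier detection frameworks of Gupta and Kohli; the function names and example scores are hypothetical.

```python
import math


def _check(values, weights):
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must sum to 1")


def owa(values, weights):
    """Ordered weighted averaging: weights apply to the sorted positions."""
    _check(values, weights)
    ordered = sorted(values, reverse=True)
    return sum(w * b for w, b in zip(weights, ordered))


def owga(values, weights):
    """Ordered weighted geometric averaging (values must be positive)."""
    _check(values, weights)
    ordered = sorted(values, reverse=True)
    return math.prod(b ** w for w, b in zip(weights, ordered))


# Hypothetical criterion scores for one observation.
scores = [0.2, 0.9, 0.5]
optimistic = owa(scores, [1.0, 0.0, 0.0])   # behaves like max
pessimistic = owa(scores, [0.0, 0.0, 1.0])  # behaves like min
```

Choosing the weight vector moves the operator between the maximum, the arithmetic mean, and the minimum, which is what makes it attractive for combining several criteria in a multicriteria decision-making setting.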

On a daily basis, news websites feature an overwhelming number of news articles. While several text mining techniques can be applied to web news articles, the constantly changing data characteristics and the real-time online learning environment can prove to be challenging.

Two recent studies, conducted by Iglesias et al. [152] and Za’in et al. [153], proposed a different approach based on evolving fuzzy systems (EFS). This approach allows the structure and parameters of an evolving classifier to be updated, aids in coping with huge volumes of web news, and enables the processing of data online and in real time, which is essential for mining real-time web news articles. Iglesias et al. [152] developed a web news mining approach based on the eClass0 classifier, while Za’in et al. [153] proposed a web news mining framework built on the evolving type-2 fuzzy classifier (eT2Class), which outperforms other consolidated algorithms.

With the effective use of e-commerce, the internet increases the accessibility of customers from all over the world without having to deal with any marketplace restrictions. Web mining research is emerging in many aspects of e-services with the aim of improving online transactions and making them more transparent and effective [154]. The owners of e-commerce websites depend considerably on the analysis and summarization of customer behaviors so as to invest efforts towards influencing user actions and optimizing the success metric. The application of web mining techniques on the web and e-commerce for the sake of improving profits is not new, and a significant amount of research has been conducted in this field, especially pertaining to usage data. Recently, Dias and Ferreira [155] proposed an all-in-one process, improved by the crossing of data secured from diverse sources, for collecting and structuring data from an e-commerce website’s content, structure, and users. Finally, they presented an information model for an e-commerce website which contained the recorded and structured information resulting from the intersection of various sources and tasks for pattern discovery. Moreover, Zhou et al. [156] proposed three new types of automatic data acquisition strategies, based on web crawlers and the Aho-Corasick algorithm, to improve the text matching efficiency by considering the Chinese official websites for agriculture, the wholesale market websites of agricultural products, and websites for agricultural product e-commerce.

In the current era of vibrant electronic and mobile commerce, the financial transactions conducted online on a daily basis are massive in number, which creates the potential for fraudulent activity. A common fraudulent activity is website phishing, which involves creating a replica of a trustworthy website for deceiving users and illegally obtaining their credentials. A report published by Symantec Corporation Inc. [157] substantiated that the number of malicious websites detected rose by 60% in 2018 with respect to 2017.

The phishing phenomenon was discussed in detail by Mohammad et al. [158], who focused mostly on web-based rather than email-based phishing detection methods and provided a comprehensive evaluation of the blacklist-based, whitelist-based, and heuristics-based detection approaches. The study concluded that, although heuristics-based detection approaches are the only ones able to recognize these websites, their accuracy may decrease considerably when the environmental features change. A successful phishing detection model should also be adept at adapting its knowledge and structure in a continuous, self-structuring, and interactive manner in response to the changing environment that is characteristic of phishing websites. Yi et al. [159] proposed a class of deep neural network, namely the deep belief network (DBN), to detect web phishing. They evaluated the effectiveness of the DBN-based detection model in terms of the true positive rate (TPR) under different parameters. The TPR was found to be approximately 90%.
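The true positive rate used in such evaluations is the share of actual phishing sites that a detector flags, TP / (TP + FN). A minimal Python sketch follows; the function name and the label encoding (1 for phishing, 0 for legitimate) are assumptions for illustration, and the example labels are made up rather than taken from [159].

```python
def true_positive_rate(y_true, y_pred):
    """TPR = TP / (TP + FN), with 1 = phishing and 0 = legitimate."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0:
        raise ValueError("TPR is undefined without positive examples")
    return tp / (tp + fn)


# Hypothetical ground truth and detector output for six websites.
actual = [1, 1, 1, 1, 0, 0]
predicted = [1, 1, 1, 0, 0, 1]
tpr = true_positive_rate(actual, predicted)  # 3 of 4 phishing sites detected
```

Note that the TPR says nothing about legitimate sites that are wrongly flagged, so it is usually reported together with a false positive rate or a precision figure.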

Diverse disciplines have been interested in and have extensively undertaken the analysis of human behavior. Therefore, a broad theoretical framework is available with remarkable potential for application in other areas, particularly in the analysis of web user browsing behavior. With respect to web user browsing behavior, a prominent source of data is web logs, which store every website visitor’s actions [160]. A recent study by Apaolaza and Vigo [161] examined the challenges of mining web logs and proposed a set of functionalities, organized into workflows, that addresses them. The study indicated that assisted pattern mining is perceived to be more useful and can produce more actionable knowledge for discovering interactive behaviors on the web. The need for more accurate and objective data for describing the navigation and preferences of web users has led researchers to study combinations of different data sources, from web and biometric data to traditional WUM research or experiments. Slanzi et al. [162] provided an extensive overview of biometric information fusion applied to the WUM field.

6. Social Media

With the advent of social media, information related to various issues started going viral. Dealing with this flow has become an indispensable part of society’s daily routine [163]. Moreover, social media creates new ways for people from various communities to engage with each other [164]. Social media is a perfect platform for the public to share opinions, thoughts, and views on any topic in a manner that significantly affects their opinions and decisions. Many companies analyze the information available on social media platforms to collect the opinions of their customers and conduct market research. Additionally, social media has started attracting researchers from several fields, including sociology, marketing, finance, and computer science [24].

6.1. Twitter

Twitter is a social media platform where users can share their opinions, follow others, and comment on their opinions. In recent years, several researchers have focused on Twitter. With over 140 million tweets posted per day, Twitter serves as a valuable pool of data for many researchers [165]. Studies on topics ranging from the prediction of a movie’s box office results to changes in the stock market are based on Twitter data. Nisar and Yeung [166] collected a sample of 60,000 tweets made over a six-day period before, during, and after the local elections in the United Kingdom to investigate the relationship between their content and the changes in the London FTSE100 index. Similarly, many other researchers use the information available on Twitter to make stock market predictions [167,168,169,170,171,172,173,174,175]. Öztürk and Ayvaz [163] studied Turkish and English tweets to evaluate their sentiments towards the Syrian refugee crisis and found that Turkish tweets are remarkably different from English tweets. A study of the Arabic Twitter feed was conducted by Alkhatib et al. [176] with the objective of offering a novel framework for events and incidents management in smart cities. Gupta et al. [177] presented a research framework for examining cybersecurity attitudes, behavior, and their relationship by applying sentiment analysis and text mining techniques to tweets in order to gauge people’s cybersecurity actions based on what they say in their texts.

Regarding tourist sentiment analysis, Philander and Zhong [178] expounded on the application of tourist sentiment series from Twitter data for building low-cost and real-time measures of hospitality customer attitudes/perceptions.

Twitter data has also been studied by researchers from various fields to analyze (a) the Twitter usage behaviors of journalists [179] and cancer patients [180], (b) the sentiments of political tweets during the 2012 U.S. presidential election [181], (c) the effects of Twitter on brand management [182] and a given smartphone brand’s supply chain management [183], (d) the opinions held by people on the issue of terrorism [184], (e) the social, economic, environmental, and cultural factors pertaining to the sustainable care of both the environment and public health which most concern Twitter users [185], and (f) the tweets posted by academic libraries [186].

6.2. Facebook

Facebook is an American social media platform whose parent company is considered to be one of the biggest technology companies, alongside Amazon, Apple, and Google. According to Social Times, Facebook has 1.59 billion monthly active users. The Pew Research Center [187] determined that Facebook is the most extensively used social media platform. Facebook allows its users to express their thoughts, views, and ideas in the form of comments, wall posts, and blogs [187].

Kim and Hastak [188] analyzed Facebook data during the 2016 Louisiana flood, when parishes in Louisiana used their Facebook accounts to share information with people affected by the disaster. They discussed the critical role played by social media in emergency plans with the aim of helping emergency agencies in creating better mitigation plans for disasters [188].

In the context of business, companies need to monitor customer-generated content, not only on their own social media pages but also on the pages of their competitors, to increase their competitive advantage. In this regard, He et al. [189] applied text mining to the Facebook and Twitter pages of three of the largest pizza chains in the U.S. pizza industry in order to help businesses utilize social media knowledge for decision-making.

Salloum et al. [190] classified the Facebook posts of Arabic newspapers through different text mining techniques. They found that the UAE is the country that shares the largest number of posts on Facebook and that videos are the most attractive part of the Facebook pages of Arabic newspapers.

Text mining on Facebook is also used to help institutions with their marketing strategies. Al-Daihani and Abrahams [191] implemented a text analysis on the Facebook posts of the academic libraries of the top 100 English-speaking universities. Their findings can be applied by academic libraries to develop their marketing, engagement, and visibility strategies.

6.3. Other Social Media Platforms

Text mining on social media is also utilized to improve transport and tourism planning. In this regard, Serna and Gasparovic [192] conducted a study on transportation modes using TripAdvisor comments and proposed a dashboard platform with graphical items for analyzing this data. The dashboard is intended to facilitate the exploration of the results collected from social media and the discussion of their effect on tourism [192]. Furthermore, Sezgen et al. [193] investigated the primary drivers of customer satisfaction and dissatisfaction for both full-service and low-cost carriers and for economy and premium class cabins using TripAdvisor passenger reviews for 50 airlines. Text mining on social media platforms has also been used to automatically rank different brands and make recommendations. Suresh et al. [194] applied opinion mining to real-life reviews from Yelp, using the reviews given by restaurant customers to build a recommendation list. Saha and Santra [195] applied a similar idea to textual feedback from Zomato.

The existing literature on text mining on social media has predominantly discussed English texts and semantics [196], since most of the available packages have been developed for English-speaking users. However, several studies have focused on Chinese social media and semantics. Liu et al. [197] applied opinion classification to a discussion forum related to the Chinese stock market, namely the East Money forum, to predict stock volatilities.

Chen et al. [198] focused on Sina Weibo, a Chinese social media platform, to predict stock market volatilities using a deep recurrent neural network.

A study by Liu et al. [199] sought to assess social media effects in the big data era. They used Chinese platforms, such as Hexun and Sina Weibo, and considered the stock index from 77 corporate companies in Shanghai and Shenzhen. The experimental results highlighted the positive relationship between the trading volumes/financial turnover ratios and the media activities [199].

The work of Zhang et al. [200] centers on Xueqiu, a Chinese platform that is similar to Twitter but aimed specifically at investors. In their study, they classified the posts by polarity using a naive Bayes classifier and predicted stock price movements by using SVM and the perceptron network.

Recently, Pejic-Bach et al. [201] applied text mining on publicly accessible job advertisements on LinkedIn—one of the most influential social media networks for business—and developed a profile of Industry 4.0 job advertisements.

7. Published Articles

The enormous number of scientific publications provides an extremely valuable resource for researchers; however, its exponential growth represents a major challenge, particularly because a literature review is an essential component of almost any research project. Text mining has enhanced the review of academic literature, and the number of papers published using this technique has grown over the years. Text mining techniques can identify, group, and classify the key themes of a particular academic domain and highlight the recurrence and popularity of topics over a period of time.

In business, management, and information technology contexts, Moro et al. [202] applied text mining to 219 articles published between 2002 and 2013 to detect terms and topics pertaining to business intelligence in the banking literature. They used the Bayesian topic model LDA and a dictionary of terms to group articles into several relevant topics. A similar study by Amado et al. [203], based on the study of Moro et al. [202], presented a text mining-based literature analysis of 1560 articles from the 2010–2015 period with the objective of identifying the primary trends on big data in marketing. Furthermore, Moro et al. [204] summarized the literature collected on ethnic marketing in the period 2005–2015 using LDA.

Cortez et al. [205] analyzed 488 research articles published in a specific journal over a 17-year period in the domain of expert systems. The authors adopted LDA and followed a methodology similar to that applied by Moro et al. [202] and by Moro and Rita [206] for branding strategies on social media platforms in the hospitality and tourism field. Guerreiro et al. [207] used the Correlated Topic Model (CTM), a topic modeling algorithm based on LDA, to analyze 246 articles published in 40 different journals between 1988 and 2013 on the subject of cause-related marketing (CRM). The study revealed the most discussed topics on CRM. Loureiro et al. [208] explored a text mining approach using LDA to conduct an exhaustive analysis of 150 articles on virtual reality in 115 marketing-related journals indexed in Web of Science. Galati and Bigliardi [209] implemented text mining methodologies to conduct a comprehensive literature review of Industry 4.0, identify the main overarching themes discussed in the past, and track their evolution over time.

Literature review articles based on text mining have also recently emerged in the field of operations management, providing a framework for identifying the predominant topics and terms in the field. Guan et al. [210] used latent semantic analysis (LSA) to identify the core areas of production research based on the abstracts of all articles published in a specific journal since its inception and revealed how the focus on topics has evolved over time. Demeter et al. [211] applied two text mining tools to 566 papers in 12 special issues of a specific journal between 1994 and 2016 to provide a comprehensive review of the entire field of inventory research.

Literature reviews within several other domains have also benefited from text mining. Grubert [212] investigated the Life Cycle Assessment (LCA) literature by applying unsupervised topic modeling to more than 8200 environment-related LCA journal article titles and abstracts published between 1995 and 2014. Yang et al. [213] mined 1000 abstracts from Google Scholar search results on the technology infrastructure of solar forecasting, classified the concepts of solar forecasting based on the full texts of 249 papers from Science Direct, and also undertook keyword analysis and topic modeling on six handpicked papers on emerging technologies related to the subject. Moro et al. [214] performed text mining over the whole textual contents of papers, excluding only the references and authors’ affiliations, published in a tourism-related journal from 1996 to 2016. In the field of agriculture, Contiero et al. [215] used text mining to analyze the abstracts of 130 peer-reviewed papers published between 1970 and 2017 that deal with pain in pig production and its relation to pig welfare.

Text mining was further employed as a tool in literature review-based studies in public health and medical sciences for various key themes, such as the adolescent substance and depression [216], cognitive rehabilitation and enhancement through neurostimulation [217], the protein factors related to the different cancer types [218], and diseases and syndromes in neurology [219].

Text mining methods are also employed to extract metadata from published articles. Kayal et al. [220] developed a method to automatically extract funding information from scientific articles. Yousif et al. [221] developed a model based on deep learning to extract the purpose of a citation to an article. Coupling this information with the number of citations and the applications of the articles' results gives a good measure for evaluating the efficiency of the funding.

However, text mining comes under the microscope of copyright, contracts, and licenses. Invoking the fundamental principles of copyright in the context of new technologies, Sag [222] explains that copying expressive works for non-expressive purposes should not be considered infringement and should instead be treated as fair use. In his article, Sag deals with the current U.S. legal framework and the feasibility of adapting it to text data mining processes, especially in the aftermath of the decisions in Authors Guild v. HathiTrust and Authors Guild Inc. v. Google. Recently, the European Parliament voted in favor of a copyright exception (Directive (EU) 2019/790) on text and data mining for research purposes, as well as for individuals and institutions with legal access to protected works [223].

8. Meeting Transcripts

Meeting transcripts are often too long for their core content to be read and analyzed in full; a framework that can automatically extract keywords from them is therefore instrumental. Towards this end, Sheeba and Vivekanandan [224] proposed a model in which keywords and key phrases are extracted from meeting transcripts. They claimed that the difficulty of this work is tied to the occurrence of synonyms, homonyms, hyponyms, and polysemy in the transcripts. Keywords were extracted using MaxEnt and SVM classifiers, and bigram and trigram keywords were extracted through an N-gram-based approach.
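The N-gram step mentioned above can be illustrated with a minimal sketch. It only generates and counts bigram or trigram candidates; the MaxEnt and SVM classification stages are not reproduced. The stopword list, the stopword filter, and the function names are assumptions made for this illustration and are not taken from [224].

```python
from collections import Counter

def ngrams(tokens, n):
    """Return all contiguous n-token sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def candidate_phrases(utterances, n, stopwords=frozenset()):
    """Count n-grams across utterances, skipping any that start or end with a stopword."""
    counts = Counter()
    for utterance in utterances:
        tokens = utterance.lower().split()
        for gram in ngrams(tokens, n):
            if gram[0] in stopwords or gram[-1] in stopwords:
                continue
            counts[gram] += 1
    return counts

# Hypothetical toy transcript and stopword list, for illustration only.
STOPWORDS = frozenset({"the", "a", "to", "of", "we", "is", "and", "for"})
transcript = [
    "we need to fix the release schedule",
    "the release schedule is blocked",
    "release schedule and budget review",
]
bigrams = candidate_phrases(transcript, 2, STOPWORDS)
```

In a full pipeline, the counted candidates would be passed to a classifier as features rather than being used as keywords directly.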

The style and level of detail of meeting transcripts differ from those of written text. Liu et al. [225] listed the differences that could negatively impact a keyword extraction system, such as low lexical density, poorly formed structure, and the varied speaking styles and word usage of multiple participants.

Liu et al. [225] extended the previous work of [226] by proposing a supervised framework for the extraction of keywords from meeting transcripts based on various features, such as decision-making sentence features, speech-related features, and summary features, that reflect the meeting transcripts more efficiently. The authors conducted experiments using the ICSI meeting corpus for both human transcripts and different automatic speech recognition (ASR) outputs, and they showed that the proposed method outperforms TF-IDF weighting and a predominant state-of-the-art phrase extraction system.
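For reference, the TF-IDF weighting used as a baseline above can be sketched as follows. This is one common variant (raw term frequency multiplied by the logarithm of the inverse document frequency); the exact variant used in [225] is not stated here, so the formula, the whitespace tokenization, and the function names are assumptions.

```python
import math
from collections import Counter

def tfidf(documents):
    """Return one {term: weight} dict per document, using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights

def top_keywords(doc_weights, k):
    """Return the k highest-weighted terms, breaking ties alphabetically."""
    ranked = sorted(doc_weights.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:k]]

# Hypothetical toy segments, for illustration only.
segments = ["budget budget meeting", "schedule meeting", "meeting notes"]
weights = tfidf(segments)
```

A term that occurs in every segment, such as "meeting" here, receives a weight of zero, which is why TF-IDF tends to favor words that are distinctive for one segment.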

Song et al. [227] published two articles on the extraction of keywords from meeting transcripts. The authors proposed a just-in-time keyword extraction method that considers two factors distinguishing their work from that of others: (1) the temporal history of preceding utterances, which gives more importance to recent utterances, and (2) topic relevance, which considers only the preceding utterances that are relevant to the current one. Their method was applied to two datasets, one in Korean (the National Assembly transcripts) and one in English (the ICSI meeting corpus). The results indicated that including these factors can enhance keyword extraction. Furthermore, in a more recent study [228], they added a participant factor to their graph-based keyword extraction method.
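The two factors can be illustrated with a simplified sketch. It is not the graph-based method of [227]: it merely weights the words of each preceding utterance by an exponential recency decay and by its cosine similarity to the current utterance, and drops utterances below a relevance threshold. The decay rate, the threshold, and all names are assumptions made for this illustration.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def score_keywords(history, current, decay=0.5, min_relevance=0.1):
    """Score words from the current utterance plus recent, relevant history."""
    current_bag = Counter(current.lower().split())
    scores = Counter(current_bag)  # the current utterance gets full weight
    for age, utterance in enumerate(reversed(history), start=1):
        bag = Counter(utterance.lower().split())
        relevance = cosine(bag, current_bag)
        if relevance < min_relevance:
            continue  # topic relevance: skip unrelated utterances
        weight = (decay ** age) * relevance  # temporal history: older counts less
        for term, count in bag.items():
            scores[term] += weight * count
    return scores

# Hypothetical toy dialogue, for illustration only.
history = ["lunch menu today", "budget cuts planned"]
scores = score_keywords(history, "budget review")
```

In this toy dialogue the unrelated utterance about lunch contributes nothing, while the recent budget utterance raises the score of "budget" above that of "review".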

Xie and Liu [229] applied a framework to the ICSI meeting corpus to summarize meeting transcripts. In this framework, only the noteworthy sentences are selected to form the summary, and supervised classification is used to decide whether a sentence should be included. Moreover, various sampling methods were implemented to avoid the problem of imbalanced data.
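Because summary sentences are far rarer than non-summary sentences, the training data for such a classifier is imbalanced. The sketch below shows random oversampling, one widely used sampling method; it is an assumption chosen for illustration, and the specific sampling methods used in [229] are not reproduced here.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Duplicate minority-class samples at random until every class matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        # Draw extra copies with replacement from the existing class members.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for sample in items + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels

# Hypothetical toy data: label 1 marks a summary-worthy sentence.
sentences = ["s1", "s2", "s3", "s4", "s5"]
labels = [0, 0, 0, 0, 1]
balanced_x, balanced_y = random_oversample(sentences, labels)
```

Oversampling should be applied to the training split only, so that duplicated sentences do not leak into the evaluation data.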

Sharp and Chibelushi [230] presented an algorithm for the text classification of meeting transcripts. This study focused on the analysis of spoken meeting transcripts of discussions on software development, with the aim of determining the topics discussed in these meetings and thereby extracting the decisions made and issues discussed. The authors suggested an algorithm suited to segmenting spoken meeting transcripts, which combines semantically complex lexical relations with speech cue phrases to build lexical chains for determining topic boundaries. They argue that the results can help project managers avoid rework.

9. Knowledge Extraction

As the number of digital documents on almost any topic has grown exponentially, automated knowledge extraction methods have become popular in many sectors, from science to marketing and business. Many text mining techniques deal with extracting the knowledge that authors represent in their texts, e.g., extracting arguments [43,44,45,46] and extracting opinions [13,14,15,16]. Knowledge extraction is usually concerned with finding and extracting the key elements from textual data (e.g., articles, short notes, tweets, blogs, etc.) to uncover hidden knowledge or build new hypotheses. For instance, a set of articles by an author (or group of authors) contains knowledge about writing style, reasoning methodology, etc. [231,232,233]. As another example, a set of comments on a problem may contain information about different aspects of the issue and possible (proposed) solutions [234]. Extracting such knowledge requires modeling the relational structure of textual data, i.e., the relations between words, paragraphs, and other fragments of text. Networks are a powerful tool that has been used to represent the relational structure of textual data in many knowledge extraction applications.

The idea of using networks to represent the structure of a given text (or set of texts) has been a practical one in literary analysis. For instance, Amancio et al. [231] used a complex network to quantify characteristics of a text (e.g., intermittency, burstiness, and word co-occurrence) for authorship attribution. Although the method successfully classified eight authors from the nineteenth century, they concluded that the accuracy of the results may depend on the text database, the features extracted from the network, and the attribution algorithm. They suggest that different algorithms and features should be tested before the method is applied in practice. Amancio [233] employed the same idea and combined it with fuzzy classification and traditional stylometry methods to classify texts based on authors’ writing style. The results show an improvement in authorship attribution and genre identification when a hybrid algorithm is used to classify texts. Although Amancio et al. [231] shed light on authorship attribution using networks and made automated author attribution conceivable, their study did not take structural changes into account. It is very common for authors to change style over time due to changes in socio-economic characteristics, personal circumstances, etc. Amancio et al. [232] employed a similar idea of using networks to detect and model literary movements through time. They used books published between 1590 and 1922 to trace literary movements across five centuries.
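A word co-occurrence network of the kind mentioned above can be sketched in a few lines. The sketch links words that appear within a fixed window of each other and reports node degree as a single example feature; the window size and the choice of feature are assumptions, and the intermittency and burstiness measures of [231] are not computed.

```python
from collections import defaultdict

def cooccurrence_network(tokens, window=1):
    """Build an undirected weighted graph linking words at most `window` positions apart."""
    graph = defaultdict(lambda: defaultdict(int))
    for i, word in enumerate(tokens):
        for other in tokens[i + 1:i + 1 + window]:
            if other == word:
                continue  # ignore self-loops
            graph[word][other] += 1
            graph[other][word] += 1
    return graph

def degrees(graph):
    """Number of distinct neighbors per word, a simple network feature."""
    return {word: len(neighbors) for word, neighbors in graph.items()}

# Hypothetical toy text, for illustration only.
tokens = "the cat sat on the mat".split()
network = cooccurrence_network(tokens)
node_degrees = degrees(network)
```

Feature vectors built from such measurements, computed per text, could then be passed to any standard classifier for attribution experiments.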

Nuzzo et al. [235] developed a set of tools to discover new relations between genes, and between genes and diseases, which can be used to build more plausible hypotheses on gene-disease associations. To find new candidate relations, they applied an algorithm with the following steps to the abstracts of published articles from the PubMed database:

- Extract concepts on terms describing genes and diseases from abstracts.

- Derive gene-disease annotations.

- Use similarity metrics, which measure the terms shared between genes, to quantify the relevance between genes and identify possible relations.

- Summarize the resulting annotation network as a graph.

The method can identify new possible gene-disease relations and build plausible hypotheses, as well as extract the existing knowledge from the abstracts. Although the method is effective, it cannot easily be used for other applications, since it relies on an existing structured knowledge base (e.g., the Unified Medical Language System) to extract concepts. In many applications, such a knowledge base either does not exist or is very limited.
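The similarity and graph steps listed above can be sketched under simplifying assumptions. The sketch assumes that each gene has already been annotated with a set of terms, uses the Jaccard index as the similarity metric, and keeps an edge when the similarity reaches a threshold. The metric, the threshold, and the gene names are hypothetical choices for illustration and are not taken from [235].

```python
from itertools import combinations

def jaccard(a, b):
    """Share of terms common to both sets, relative to all terms in either set."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def similarity_graph(annotations, threshold=0.3):
    """Return {(gene1, gene2): similarity} for gene pairs at or above the threshold."""
    edges = {}
    for g1, g2 in combinations(sorted(annotations), 2):
        similarity = jaccard(annotations[g1], annotations[g2])
        if similarity >= threshold:
            edges[(g1, g2)] = similarity
    return edges

# Hypothetical toy annotations, not real gene-disease data.
annotations = {
    "GENE_A": {"inflammation", "diabetes", "insulin"},
    "GENE_B": {"diabetes", "insulin", "obesity"},
    "GENE_C": {"retina", "vision"},
}
edges = similarity_graph(annotations)
```

In this toy example only GENE_A and GENE_B are linked, because they share two of four distinct terms; a linked pair is a candidate relation to be examined, not a confirmed finding.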