Abstract

Mid-air collision is one of the top safety risks in general aviation. This study describes and experimentally assesses multimodal Augmented Reality (AR) applications for training traffic procedures in accordance with Visual Flight Rules (VFR). AR has the potential to complement conventional flight instruction by bridging the gap between theory and practice, and by relieving students of the time and performance pressure associated with limited simulator time. However, it is critical to assess the impact of AR in the specific domain and to identify any potential negative learning transfer. Multimodal AR applications were developed to address various areas of training: guidance and feedback for the correct scanning pattern, estimation of whether encountered traffic is on a collision course, and application of the relevant rules. The AR applications also provided performance feedback for collision detection, avoidance and priority decisions. The experimental assessment was conducted with 59 trainees (28 women, 31 men) assigned to an experimental group (AR training) and a control group (simulator training). The results of tests without AR in the flight simulator show that the group that trained with AR reached levels of performance similar to those of the control group. There was no negative training effect of AR on trainees’ performance, workload, situational awareness, emotion or motivation. After training, the tasks were perceived as less challenging, the accuracy of collision detection improved, and the trainees reported less intense negative emotions and fear of failure. Furthermore, a scanning pattern test in AR showed that the AR training group performed the scanning pattern significantly better than the control group. In addition, there was a significant gender effect on emotion, motivation and preferences for AR features, but not on performance. Women liked the voice interaction with AR and the compass hologram more than men. Men liked the traffic holograms and the AR projection field more than women. These results are important because they provide experimental evidence for the benefits of multimodal AR applications that could be used as a complement to flight simulator training.

1. Introduction

The term Augmented Reality (AR) first appeared in 1992 in a paper by Caudell and Mizell, who were working at Boeing. AR was considered to enable cost reductions and to improve efficiency by replacing physical assembly guides, templates, wiring lists, etc. with an AR system [1]. In the following years, AR systems were implemented and tested in multiple areas such as healthcare, construction, gaming, and education [2]. Various studies show that, especially for educational purposes, AR can improve the user’s performance and learning experience [3]. AR features investigated include the augmentation of written information such as in the magic book [4], the facilitation of collaboration [5], the provision of stereo-sound assisted guidance [6] and remote guidance [7]. Benefits of AR-supported training have been reported across a multitude of domains ranging from visual art [8] to science laboratories [9,10], technical training [11], and aviation training [12,13,14]. Research on pilot training indicates that AR has the potential to improve both the teaching of contents and the conditions of learning in ab initio [15,16] and advanced type rating courses [17,18]. A study on conventional advanced pilot training showed that time pressure can increase the perceived difficulty of the course for some trainees, especially since advanced pilot training requires travelling, lasts several weeks and is associated with high costs and performance expectations [17]. AR-supported pilot training is expected to enable a more learner-centered, immersive, inclusive and sustainable pilot education [17].

Gaming concepts, especially the features of and user interactions with computer games, are an interesting area of research for AR games designed for teaching and learning. Immersion is an intense experience that has been studied extensively in relation to computer games and AR learning games. Immersion is considered a state of physical, mental and emotional involvement in a given context [19]. Immersion has been associated with the game narrative and features that involve challenge and skills, curiosity, control, concentration, comprehension, familiarity and empathy [20]. A qualitative investigation of gamers’ experiences identified three levels of immersion that develop as the user is involved with a game: engagement, engrossment and total immersion [21]. An engaged user is interested in the game and wants to continue using it, but to reach the level of engrossment, “the gamers’ emotions are directly affected by the game” [21]. Reaching the highest level of total immersion requires “presence”, which is defined as the feeling of being in the game world [21]. For most of the participants, empathy and atmosphere contribute to total immersion. Atmosphere can be created by graphics and sounds that are relevant to the actions and location of the game characters and require the use of attention [21]. As shown in [21], total immersion seems to be facilitated by the multimodal interaction with the game and engagement of visual, auditory and mental attention. This finding was also confirmed by research [22] showing that multimedia features and game-based challenges contribute to gamers’ immersion. In addition to presence, the experience of flow [23] was associated with the highest level of total immersion [24]. Research shows a potential for AR science games to increase students’ interest and to facilitate the experience of flow during learning, manifested in stronger intensity of engagement, a feeling of discovery and the desire for better performance [25]. Interestingly, a study with middle school students [25] found that neither gender nor interest in science significantly predicted the experience of flow when using an AR game for learning.

Preferences for gaming concepts in relation to flight training have been reported in a study with 60 aircraft pilots (12 women and 48 men) [26]. The majority of female and male pilots considered the following game features satisfying: achieving a target to finish tasks, receiving feedback for correct actions and receiving points for successfully finishing a task [26]. Answering questions during the game and collecting assets or information to proceed were slightly more popular among women than among men. In contrast, including a narrative in the game was preferred slightly more by men than by women. Less popular gaming features were setting a time limit to finish tasks and solving puzzles to proceed [26]. For the purpose of this study, a number of these gaming features were designed into the AR applications for collision avoidance training, such as achieving a target to finish tasks, answering questions, receiving feedback and receiving points for successfully finishing a task [26]. In this study, the effects of AR-supported training are assessed in terms of performance, immersion, emotion, motivation and perceived workload.

Emotions influence the learning process [27], and emotional regulation also plays a role in the preparation for motor behavior and sport performance [28]. Besides practice, motivational factors such as fear of failure, probability of success, interest, and challenge can also influence learning outcomes [29,30,31]. Practical training for managing critical flight maneuvers significantly improved pilots’ performance and positive emotions in the simulator [32] and in real flight [33]. Simulator training also reduced the intensity of trainees’ negative emotions [32]. In addition, high learning performance in ab initio student pilots was associated with less intense negative emotions [34]. Beyond the practical training itself, fear of failure was also shown to be affected by support given to students in the form of coaching [35]. In this study, trainees’ emotions and motivation are assessed in addition to learning performance and AR immersion.

1.1. Collision Avoidance Training

Preventing mid-air collisions is one of the top safety priorities in general aviation because of the high risk they pose [36]. In Visual Flight Rules (VFR) flight, the pilots are responsible for see-and-avoid, which is taught mainly theoretically during the course on aviation law. In addition to the risks identified in practice from incidents and accidents [36], research shows that pilots encounter various problems with the detection of traffic, with the estimation of conflicts and with the application of the rules-of-the-air for collision avoidance [37,38,39,40]. A system-theoretic analysis of pilot training for VFR flight [41] recommended introducing specific elements of practical training and proficiency examination related to the detection, estimation and management of traffic conflicts.

The estimation of collision parameters involves anticipative cognitive processes that have as a prerequisite the formation and improvement of a mental model through practice [42]. Anticipation is seen as the highest level of situational awareness, beyond the perception and understanding of the key situational elements [43]. Anticipation means that pilots cannot wait for a collision to occur in order to perceive it, but they have to detect potentially conflicting traffic and estimate the time-to-collision and relative distance. The estimation mechanisms can be refined by practice and feedback. Research on learning to estimate such collision parameters has been successfully conducted in conventional flight simulators [39] and in a network of flight simulators which reproduced congested traffic scenarios at an airport [40,44]. Research also shows that particular scanning patterns of Air Traffic Controllers (ATCOs) such as a larger spatial distribution of visual gaze are associated with better response accuracy in the detection of accelerating aircraft on a two-dimensional display with mixed traffic [45], but individual characteristics such as experience also play a significant role [46]. Furthermore, immersion of ATCOs in a three-dimensional Virtual Reality (VR) display led to improved collision detection and analytical skill with increased speed and better accuracy compared to two-dimensional displays [47].

Simulator training is usually conducted in aviation for scenarios that are too dangerous for practice in real flight. However, AR features bring new opportunities for pilot training by using multimodal interactions and by making trainees’ gaze point visible to the instructor [48]. Furthermore, an analysis of flight instruction methods for collision detection and avoidance [49] suggested that AR learning applications could add value in particular to the teaching of correct scanning patterns. At the same time, using the HoloLens in an experimental setting for simulating relative motion in AR requires careful handling of the device, especially when trying to achieve a standardized procedure for all participants [50]. The effective detection of other traffic in VFR flight requires particular “block” scanning techniques [51]. “Traffic can only be detected when the eye is not moving, so the viewing area (wind-shield) is divided into segments. The pilot methodically scans by stopping his eye movement in each block in sequential order” ([51], p. 13). In the side-to-side method of scanning, the pilot starts at the far left of the visual field and proceeds to the right, pausing for a second in each 10° block to focus their eyes [51]. In the front-to-side method of scanning, the pilot starts, for example, in the center block, moves to the far right, back to the center and then to the far left, focusing for a second in each block. In addition to traffic detection, the trainee has to estimate whether the encountered traffic is on a collision course or not, and to apply the rules for priority and collision avoidance [49]. In this study, the latter training elements were implemented in a second AR application. For the purpose of this research, a multimodal training system consisting of an AR application and a light aircraft flight simulator was designed to guide the trainee in learning the scanning pattern and applying it in simulated flight situations. The multimodality of this system was characterized by several input modalities (the haptic button of the flight simulator and the voice, gaze and gesture recognition of the HoloLens) and several output modalities (audio, holograms and visual displays).
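The front-to-side block scanning method described above can be sketched as a sequence of gaze directions. The following is a minimal illustration and not the implementation used in the study: the function name, the sign convention (right positive) and the choice to visit every block again on the way back to the center are assumptions. The 10° block size is taken from the description above, and the ±110° range is the field covered by the moving ball described in Section 2.2.

```python
def front_to_side_scan(max_angle_deg=110, block_deg=10):
    """Return block-center azimuths in degrees (right positive, left negative)
    for one front-to-side scan: center -> far right -> center -> far left.

    Assumption: every block is visited again on the way back to the center.
    """
    if max_angle_deg % block_deg != 0:
        raise ValueError("max_angle_deg must be a multiple of block_deg")
    to_right = list(range(0, max_angle_deg + 1, block_deg))
    back_to_center = list(range(max_angle_deg - block_deg, -1, -block_deg))
    to_left = list(range(-block_deg, -max_angle_deg - 1, -block_deg))
    return to_right + back_to_center + to_left


sequence = front_to_side_scan()
# With a pause of about one second per block, the number of stops
# approximates the duration of one full scan in seconds.
print(len(sequence), sequence[:3], sequence[-1])
```

Under these assumptions, one complete scan consists of 34 stops.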

1.2. Gender and Flight Training

Female pilots represent less than 10% of the pilot population worldwide [52]. Due to the current pilot shortage, many airlines are interested in attracting talented women and in rethinking the training of the next generation of pilots [53]. Gender differences in flight training have been described in various studies [40,54,55,56,57]. Gender differences related to flight training are attributed to different experiences and starting conditions [54]. In the general population, women tend to perform better in verbal tasks and men tend to perform better in visuospatial tasks [58]. Interestingly, research shows that gender differences can vanish after training when the learning methods are appropriately designed. Thus, trainees of both genders can reach similar levels of performance despite initial gender differences. Research on gender-sensitive pilot training [32] showed that trainees from both gender groups reached similar levels of performance in the post-test, despite gender differences in the pre-test. Thus, an appropriate, inclusive method of instruction can support trainees in reaching similar levels of performance with the same amount of training. Research on the potential of AR to improve flight instruction shows that, despite many similarities, there are also gender-specific needs and preferences regarding AR training applications in ab initio [15] and advanced pilot courses [17]. In this study, the AR applications for collision avoidance training were designed in a gender-sensitive manner by implementing features that match various gender preferences and by including a diverse team of experts in the design [59]. Furthermore, the effects of the applications are assessed in a gender-sensitive manner with female and male samples divided into experimental and control groups.

1.3. Research Questions

The purpose of this study is to investigate the effect of the AR application for learning traffic procedures (e.g., collision avoidance) on the learning performance, situational awareness, emotion and motivation of the trainees in a gender-sensitive manner with an experimental and a control group. In addition, the effect of the AR scanning application on trainees’ scanning performance will be evaluated. The AR applications will be assessed in terms of features, immersion and gender preferences.

2. Materials and Methods

2.1. Participants

The experiment was conducted with 59 volunteers recruited by university announcements. Twenty-eight women with a mean age of 23.43 years (standard error 0.88) volunteered for the experiment and were randomly assigned to the experimental (N = 14) or the control group (N = 14). Thirty-one male volunteers with a mean age of 23.03 years (standard error 0.59) were assigned to the experimental (N = 15) and the control group (N = 16). Informed consent was obtained from all subjects involved in the study, and they were offered a compensation of EUR 50 for participation. The study was conducted in accordance with the ethical guidelines of the Declaration of Helsinki and the General Data Protection Regulation of the European Union.

2.2. Equipment

For the AR experiment, a Microsoft HoloLens was used, which is a standalone AR device that does not require any additional hardware. It has a binocular display using liquid crystal on silicon technology with a resolution of 1268 × 720 and 60 Hz per eye. The field of view is 30° horizontally and 17° vertically. The device has built-in speakers, a microphone, a camera and WiFi. It has a battery life of about three hours, weighs 579 g and gives the user six degrees of freedom [60].

The implemented AR HoloLens applications covered three content areas:

- Moving ball guidance and assessment of the scanning pattern

- Simulation of traffic with an additional quiz to assess the collision detection accuracy, the avoidance maneuver, and the identification of the right-of-way.

- Spatial orientation exercises and assessment

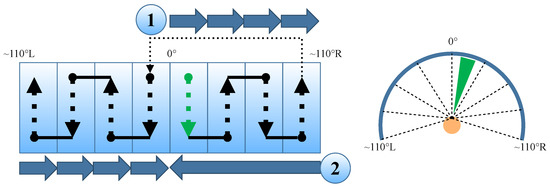

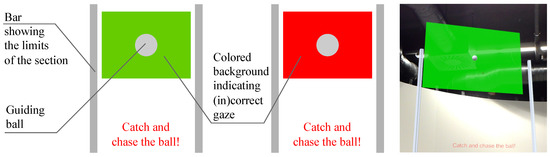

To make the applications more engaging for the trainees, a number of gamification features were used, such as rating user actions with a score, providing feedback, using friendly colors and texts, and using visually attractive 3D models. With the AR application for scanning pattern training (see Figure 1), the user was introduced to the specific front-to-side block scanning pattern for VFR. The trainees thus received a demonstration of the correct scanning procedure that pilots should use in VFR flight to search for traffic in the surrounding area. The application used a ball hologram that moved at the appropriate speed through the user’s surrounding visual field (±110° to the right and left) and showed the correct viewing directions, starting in the middle and moving to the right (marked with 1 in Figure 1), then back to the middle and to the left. In addition, the direction of movement was described by a voice output. The application indicated correct gazes (directed at the ball) with a green background around the ball (see Figure 2) and incorrect gazes with a red background. Due to the limited projection field of the HoloLens, the trainees also had to use head movements to control their gaze. During this procedure, the application measured the time of correct and incorrect gazes to evaluate the user’s performance and provided a score as an output, which was calculated for each attempt as the ratio of the correct gaze time to the duration of the entire scanning procedure (Equation (1)) and displayed as a percentage.
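Based on the definition given in Section 2.5 (ratio between the duration of the user’s gaze at the virtual ball and the duration of the entire scanning pattern procedure), the scanning score for one attempt can be sketched as follows. The symbols are notation assumed here: $t_{\text{correct}}$ is the accumulated time with the gaze on the ball, $t_{\text{incorrect}}$ the accumulated time with the gaze off the ball, and $T$ the duration of the procedure. Equating $T$ with the sum of both gaze times is also an assumption.

```latex
S_{\text{scan}} = \frac{t_{\text{correct}}}{T} \times 100\,\%,
\qquad T = t_{\text{correct}} + t_{\text{incorrect}}
```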

Figure 1. Augmented Reality (AR) moving ball scheme for the scanning pattern.

Figure 2. AR moving ball guidance for the scanning pattern in the application.

The second AR application simulated the traffic encounters. The user performed the scanning pattern to detect an approaching aircraft. Predefined encounter scenarios were used with aircraft approaching from different directions in level flight, climbing or descending. Additionally, the approach was announced via audio output using the typical phraseology for traffic information of the flight information service. As soon as the user had detected the aircraft and was able to decide whether a collision would happen or not, he/she had to press a button. Subsequently, a quiz started and asked the user whether the traffic was on a collision course or not. If the aircraft was on a collision course, the user was asked who had the right-of-way and what the correct avoidance maneuver was. The user received feedback as text (correct or incorrect) and in the form of a game score. The game score (Equation (2)) represents the percentage of correct answers per scenario. The sum of the points was normalized, i.e., scaled to a value between 0% and 100% depending on the number of possible scores in the scenario, because whether the right-of-way and avoidance maneuver scores exist is determined by the scenario (collision or no-collision). Therefore, the normalization term represents the number of possible scores per scenario.
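The normalization of the game score described above can be illustrated with a short sketch. This is not the code of the AR application. The function and parameter names are hypothetical, and it is assumed that a no-collision scenario has one possible score (collision detection) while a collision scenario has three (collision detection, right-of-way and avoidance maneuver).

```python
def game_score(is_collision_scenario, collision_correct,
               right_of_way_correct=False, maneuver_correct=False):
    """Return the game score of one scenario as a percentage (0 to 100).

    Assumption: the right-of-way and avoidance maneuver answers only count
    in collision scenarios, so the number of possible scores is 3 there
    and 1 in no-collision scenarios.
    """
    points = int(collision_correct)
    possible = 1
    if is_collision_scenario:
        points += int(right_of_way_correct) + int(maneuver_correct)
        possible = 3
    return 100.0 * points / possible


# No-collision scenario answered correctly: full score.
print(game_score(False, True))
# Collision scenario with two of three correct answers.
print(round(game_score(True, True, True, False), 1))
```

Dividing by the number of possible scores keeps collision and no-collision scenarios comparable on the same 0% to 100% scale.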

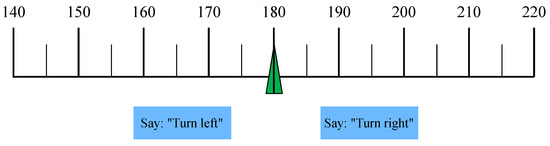

After each traffic encounter, the user conducted a spatial orientation exercise with the AR application. The user saw a compass-strip hologram that showed the current heading (see Figure 3). Then he/she received a voice command to turn to a new heading (e.g., “Turn heading 090”) and was asked which direction, left or right, was shorter for turning to the new heading. The user responded by saying left or right. The application used speech recognition as input for the quiz questions and gave immediate visual feedback for correct or wrong answers. Finally, the application presented the trainees’ scores as feedback.
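The correct answer to the heading exercise follows from modular arithmetic on compass headings. The sketch below illustrates the reasoning under stated assumptions: the function name is hypothetical, headings are given in degrees, and the return values for identical and exactly opposite headings are choices made here rather than behavior reported for the application.

```python
def shorter_turn(current_heading, target_heading):
    """Return 'left' or 'right' for the shorter turn to the target heading.

    Headings are in degrees; 360 and 0 denote the same direction (north).
    Returns 'none' if no turn is needed and 'either' for a 180 degree turn.
    """
    # Clockwise (right-turn) angle from current to target, in 0..359.
    clockwise = (target_heading - current_heading) % 360
    if clockwise == 0:
        return "none"
    if clockwise == 180:
        return "either"
    return "right" if clockwise < 180 else "left"


# Flying heading 360 and told "Turn heading 090": a right turn is shorter.
print(shorter_turn(360, 90))
# Flying heading 010 and told to turn to 350: a left turn is shorter.
print(shorter_turn(10, 350))
```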

Figure 3. AR heading exercise.

Flight Simulator

The flight simulator was a generic, fixed-base aircraft simulator with a full-size light aircraft cabin equipped with a glass cockpit and genuine cockpit controls. A cylindrical projection screen with a diameter of 7 m provided a horizontal viewing angle of 190 degrees and a vertical viewing angle of 40 degrees. Thus, the projection screen of the simulator provided a visual scenery with realistic peripheral vision stimulation. The controller station contained a data logger, making it possible to set the flight scenarios and to save the data. The flight simulator was connected to the AR application. The simulator flew straight and level with the autopilot engaged and was mainly used to generate the traffic data for the scenarios shown in AR. Furthermore, the participants used a button in the flight simulator cockpit to indicate that they were able to decide whether a collision would happen or not. The input signal of the button was sent to the AR application, where it was used for the control flow of the application. The communication between the flight simulator and the AR application was implemented with User Datagram Protocol (UDP) messages.
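How a button press could travel from the simulator to the AR application over UDP is sketched below. The message format used in the study is not specified, so the JSON payload, its field names and all function names are hypothetical and serve only to illustrate the principle of sending one self-contained datagram per event.

```python
import json
import socket


def encode_button_event(scenario_id, sim_time_s):
    """Serialize a decision-button event as UTF-8 JSON (hypothetical format)."""
    message = {"type": "decision_button", "scenario": scenario_id, "t": sim_time_s}
    return json.dumps(message).encode("utf-8")


def decode_event(datagram):
    """Parse a datagram produced by encode_button_event."""
    return json.loads(datagram.decode("utf-8"))


def send_event(payload, host, port):
    """Send one datagram; UDP gives no delivery guarantee or ordering."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

On the receiving side, a socket bound to the agreed port would call `recvfrom` and pass the received bytes to `decode_event`. Because UDP is connectionless, a lost datagram is not retransmitted, which is usually acceptable for frequently repeated traffic data but may call for an acknowledgement for one-off events such as a button press.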

2.3. Procedure

Initially, both groups received a written briefing and a familiarization session with the flight simulator. Afterwards, both groups attended a pre-test without AR in the flight simulator and conducted eight collision detection and avoidance maneuvers. Next, the experimental group also received a familiarization with the HoloLens. The experimental group used the AR application for scanning training at least three times, and more often if they did not reach an accuracy of 70%. This was followed by training with the AR application (eight scenarios) and without the AR application in the simulator (eight scenarios). Each exercise consisted of one scanning pattern training, one collision detection exercise and one orientation exercise. Finally, both groups conducted a post-test without AR in the flight simulator (eight scenarios) followed by a scanning test with AR. The control group used conventional written and graphical instruction for scanning training, and they attended the training sessions (16 scenarios) and orientation exercises without AR in the flight simulator. At the end, the control group received a familiarization with the HoloLens and conducted the scanning test with AR. Table 1 gives an overview of the procedure. The same traffic scenarios were used for the experimental and control group. The participants received feedback during training (experimental group by HoloLens, control group by an application on a laptop), but not during tests. The compass orientation exercise was included to increase the workload of the exercises and to bring more variety to the training. Each block of traffic scenarios (training and test) contained four collision and four non-collision scenarios presented in a random order. A scenario was considered a collision when the closest point of approach was smaller than 50 m. In the training scenarios, in two out of four collision scenarios nobody had the right-of-way (head-on approach), in one the other traffic had priority, and in one the participant him-/herself had the right-of-way. In the test scenarios, in two out of four collision scenarios nobody had the right-of-way and in the other two the other traffic had the right-of-way. In the simulator, not only the traffic scenarios but also the scenery (landscape) was changed to reduce the recognition effect. Questionnaires were filled in by participants of both groups before and after the tests and after the training.
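The collision criterion based on the closest point of approach can be illustrated for two aircraft flying with constant velocity. The sketch below is not the scenario generator used in the study. The function names, the local Cartesian coordinates in meters and the constant-velocity assumption are introduced here; only the 50 m threshold is taken from the procedure described above.

```python
import math

COLLISION_THRESHOLD_M = 50.0  # threshold stated in the procedure


def closest_point_of_approach(p_own, v_own, p_tfc, v_tfc):
    """Return (time in s, distance in m) of the closest point of approach.

    Positions are (x, y, z) in meters and velocities in m/s. Both aircraft
    are assumed to keep a constant velocity; only future times count.
    """
    r = [b - a for a, b in zip(p_own, p_tfc)]  # relative position
    v = [b - a for a, b in zip(v_own, v_tfc)]  # relative velocity
    v_sq = sum(c * c for c in v)
    if v_sq == 0.0:
        t_cpa = 0.0  # no relative motion: the distance never changes
    else:
        t_cpa = max(0.0, -sum(rc * vc for rc, vc in zip(r, v)) / v_sq)
    d_cpa = math.sqrt(sum((rc + vc * t_cpa) ** 2 for rc, vc in zip(r, v)))
    return t_cpa, d_cpa


def is_collision_scenario(p_own, v_own, p_tfc, v_tfc):
    """Apply the 50 m criterion to the closest point of approach."""
    _, d_cpa = closest_point_of_approach(p_own, v_own, p_tfc, v_tfc)
    return d_cpa < COLLISION_THRESHOLD_M


# Head-on encounter at the same altitude, 5 km apart, 50 m/s each.
print(closest_point_of_approach((0, 0, 0), (50, 0, 0), (5000, 0, 0), (-50, 0, 0)))
```

In the head-on example, the relative speed is 100 m/s, so the aircraft meet after 50 s with zero separation. A lateral offset of 200 m would leave the time unchanged but raise the minimum distance above the threshold.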

Table 1. Procedure for the experimental and control group.

2.4. Independent Variables

Effects of training with the AR applications were assessed in a pre-test and post-test design with an experimental and a control group. Gender subgroups were created within the experimental and the control groups. Thus, independent variables were the test (pre-test vs. post-test), the group treatment (experimental vs. control group), and the gender group (female vs. male). The control groups did not use the AR applications, but instead they used conventional training means.

2.5. Dependent Measures

The scanning performance was calculated as the ratio between the duration of user’s gaze at the virtual ball, and the duration of the entire scanning pattern procedure (Equation (1)). Thus, the scanning accuracy scores vary between 0 and 1. The collision detection accuracy was assessed during each test by asking the trainee for each scenario if the traffic encounter was on collision course or not. Correct answers scored one and incorrect answers scored zero points. Thus, the maximum collision detection accuracy (MCDA) (Equation (3)) total per pre-test and post-test, calculated as the sum of individual scenario scores could be 8.

Furthermore, the selection of the collision avoidance maneuver was assessed during each test by asking the trainee for each collision scenario what avoidance maneuver was required in accordance with the law. Correct answers scored one and incorrect answers scored zero points. Thus, the maximum total for the selection of the collision avoidance maneuver (MCAS) (Equation (4)) per pre-test and post-test, calculated as the sum of individual collision scenario scores could be 4.

The identification of the right-of-way was assessed during each test by asking the trainee for each collision scenario which aircraft had the right-of-way in accordance with the law. Correct answers scored one and incorrect answers scored zero points. Thus, the maximum total for the selection of the right-of-way (MRWS) (Equation (5)) per pre-test and post-test, calculated as the sum of individual collision scenario scores could be 4.
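The three performance totals described above are sums of binary scenario scores and can be written compactly as follows. The notation is assumed here and may differ from the original Equations (3) to (5): $d_i$, $a_j$ and $r_j$ denote the scores for collision detection, avoidance maneuver selection and right-of-way identification, $i$ runs over the eight scenarios of a test and $j$ over its four collision scenarios.

```latex
\mathrm{MCDA} = \sum_{i=1}^{8} d_i, \qquad
\mathrm{MCAS} = \sum_{j=1}^{4} a_j, \qquad
\mathrm{MRWS} = \sum_{j=1}^{4} r_j, \qquad
d_i,\, a_j,\, r_j \in \{0, 1\}
```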

The trainees self-rated their subjective situation awareness after each test using the Situation Awareness Rating Technique (SART) [61] with item scales ranging from 1 (low) to 7 (high). Subjective workload was assessed after each test using the Task Load Index (NASA–TLX) [62] that contains six items: mental demand, physical demand, and temporal demand of the task, effort, performance, and frustration. The NASA-TLX scales ranged from 1 (very low) to 7 (very high). For the total NASA-TLX score the performance scale was inverted. Emotion was assessed after each test in terms of arousal intensity and valence (positive vs negative emotion) using the Positive and Negative Affect Schedule (PANAS) [63]. In PANAS the item scales ranged from 1 (very slightly or not at all) to 6 (extremely). Motivation was self-assessed by the trainees after each test using the Questionnaire on Current Motivation (QCM) [29] that consists of 18 items measuring four factors: challenge, interest, probability of success and anxiety/fear of failure. Each QCM item had a scale ranging from 1 (disagree) to 7 (agree). The AR applications were assessed by the trainees after using them. The gesture interaction, voice interaction, traffic holograms, compass hologram, the quiz and the projection field were assessed on a scale ranging from 1 (very poor interaction) to 5 (very good interaction). The comfort and trust in the AR applications were rated from 1 (very low) to 5 (very high). After using the AR applications, the trainees assessed their interaction using the Augmented Reality Immersion (ARI) questionnaire [24] with 42 items grouped in three scales: AR engagement, AR engrossment and AR total immersion. Each ARI item was self-rated on a scale ranging from 1 (totally disagree) to 7 (totally agree).
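The inversion of the performance scale for the total NASA-TLX score can be illustrated with a short sketch. It assumes that the total is the unweighted sum of the six item ratings on the 1 to 7 scale, which the description above does not state explicitly; the item keys and the function name are hypothetical.

```python
TLX_ITEMS = (
    "mental_demand", "physical_demand", "temporal_demand",
    "effort", "performance", "frustration",
)


def nasa_tlx_total(ratings, scale_min=1, scale_max=7):
    """Return the summed NASA-TLX score with the performance item inverted.

    Assumption: the total is an unweighted sum. Inverting maps a rating x
    to scale_min + scale_max - x, so that higher values consistently
    indicate higher workload.
    """
    total = 0
    for item in TLX_ITEMS:
        value = ratings[item]
        if not scale_min <= value <= scale_max:
            raise ValueError(f"{item} rating {value} is outside the scale")
        if item == "performance":
            value = scale_min + scale_max - value
        total += value
    return total


example = {"mental_demand": 5, "physical_demand": 2, "temporal_demand": 4,
           "effort": 5, "performance": 6, "frustration": 3}
# Performance 6 is inverted to 2, giving 5 + 2 + 4 + 5 + 2 + 3.
print(nasa_tlx_total(example))
```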

2.6. Data Analysis

For the collision avoidance application, data analysis was conducted using the repeated measures analysis of variance with one within-subjects factor (test) and two between-subjects factors (group treatment and gender). One-way analyses of variance were used to calculate differences between the gender groups in the assessment of the AR immersion and in the appreciation of the AR features. For the scanning application a one-way analysis of variance was used to calculate differences in the scanning performance between the experimental and control group, and between the gender groups in the AR post-test. The Bonferroni correction was applied to pairwise comparisons. Alpha was set at 0.05.

3. Results

3.1. Collision Detection and Avoidance Training

Main training effects, independent of the type of training, were assessed by comparing the post-test with the pre-test. Descriptive data are presented in Table 2. The training significantly improved trainees’ collision detection accuracy [F(1,45) = 4.93, p < 0.03, ηp² = 0.10] and significantly decreased the intensity of their negative emotion [F(1,45) = 22.32, p < 0.0001, ηp² = 0.33] and their fear of failure as a motivational factor [F(1,45) = 18.96, p < 0.0001, ηp² = 0.30]. In addition, the tasks were estimated as significantly less challenging after the training [F(1,45) = 28.33, p < 0.0001, ηp² = 0.39]. Differences between post-test and pre-test in trainees’ selection of the avoidance maneuver and identification of the right-of-way, subjective situation awareness, workload, interest and success probability did not reach statistical significance.
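The effect sizes reported with the F statistics above are numerically consistent with partial eta squared, which can be recovered from an F value and its degrees of freedom. The sketch below assumes that convention; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values of the main training effects, each with df = (1, 45).
for f_value in (4.93, 22.32, 18.96, 28.33):
    print(f_value, round(partial_eta_squared(f_value, 1, 45), 2))
```

Rounded to two decimals, the four values are 0.10, 0.33, 0.30 and 0.39, matching the effect sizes reported for collision detection accuracy, negative emotion, fear of failure and challenge.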

Table 2. Descriptive data of trainees’ performance, situational awareness, workload, emotion and motivation in post-test and pre-test (SE: Standard Error).

3.2. Effects of the Type of Training

The effect of the type of training was assessed in terms of differences between the experimental group that trained with the AR applications and the control group that trained only in the flight simulator (Table 3). The main factor type of training did not have a significant effect on trainees’ collision detection accuracy, workload, situational awareness, or positive or negative emotion. The motivational variables challenge, interest, success probability, and fear of failure were also not significantly affected by the type of training.

Table 3. Descriptive data of trainees’ performance, workload, situational awareness, emotion and motivation in the experimental and control group.

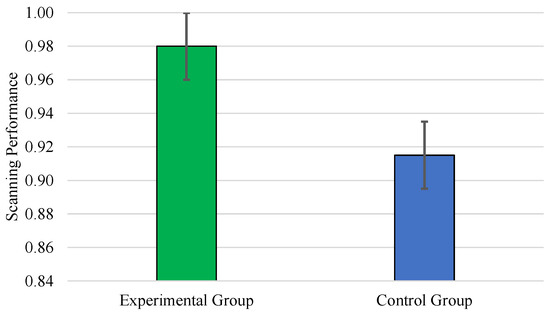

3.3. AR Scanning Training

As illustrated in Figure 4, the scanning performance in post-test was significantly affected by the type of training [F(1,37) = 6.11, p < 0.02, ηp² = 0.14]. The experimental group (Mean = 0.98, SE = 0.02) performed better than the control group (Mean = 0.92, SE = 0.02). The gender effect [F(1,37) = 2.79, p < 0.10, ηp² = 0.07] and the interaction term gender and group [F(1,37) = 1.10, p < 0.30, ηp² = 0.03] did not reach statistical significance.

Figure 4. Scanning performance of the AR and conventional training groups in AR post-test. Error bars represent standard errors.

3.4. Gender Effects

There was a significant effect of the main factor gender on trainees’ negative emotion [F(1,45) = 7.28, p < 0.01, ηp² = 0.14] and fear of failure as a motivational factor [F(1,45) = 6.95, p < 0.01, ηp² = 0.13]. Women (Mean = 13.63, SE = 0.58) reported stronger negative emotions than men (Mean = 11.48, SE = 0.55) during tests. Women (Mean = 9.52, SE = 0.62) also reported stronger fear of failure than men (Mean = 7.27, SE = 0.59) during tests. The interaction term gender and trial was significant for the probability of success [F(1,45) = 7.37, p < 0.009, ηp² = 0.14], showing that women (Mean = 7.80, SE = 0.42) estimated their probability of success in post-test lower than men (Mean = 9.14, SE = 0.39) despite similar levels in pre-test for women (Mean = 8.78, SE = 0.44) and men (Mean = 8.74, SE = 0.42).

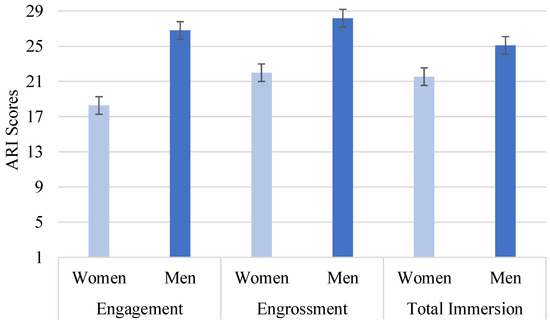

3.5. Assessment of the AR Application and the Subjective AR Experience

Within the experimental group that trained with the AR applications, gender differences in AR immersion and in the appreciation of various features of the AR applications were explored. As Figure 5 shows, men scored higher than women in AR engagement, engrossment and total immersion. However, the gender effect was statistically significant only for engagement with AR [F(1,19) = 6.36, p < 0.02, ηp² = 0.25]. The appreciation of various features of the AR applications in female and male trainees is presented in Table 4. The statistical analyses show that women liked the voice interaction with the AR applications [F(1,19) = 6.01, p < 0.02, ηp² = 0.24] and the compass hologram [F(1,19) = 4.36, p < 0.05, ηp² = 0.19] significantly more than men. Men liked the traffic holograms used in the application [F(1,19) = 15.73, p < 0.0001, ηp² = 0.45] and the projection field of the AR device [F(1,19) = 5.24, p < 0.03, ηp² = 0.22] significantly more than women. No significant gender differences were obtained for the gesture interaction with the AR applications, the quiz, or comfort and trust in AR.

Figure 5. Mean scores of the ARI subscales in female and male trainees from the experimental group that used the AR applications. Error bars represent standard errors.

Table 4. Appreciation of various features of the AR applications in female and male trainees.

4. Discussion

In this study, two specific multimodal AR applications that have been developed for flight training purposes were assessed. One AR application was used for training to estimate whether encountered traffic is on a collision course, and to apply the rules for priority and collision avoidance. A second AR application was used to guide and assess the trainee’s visual “block” scanning technique, which is specific to VFR flight. The effects of the multimodal AR applications were compared to training in the flight simulator and theoretical instruction. This AR-supported training is seen as an add-on to the theoretical instruction on aviation law and the use of traffic advisory systems [64,65]. Although many studies claim that AR has the potential to improve both the teaching of aviation contents and the conditions of flight training [12,13,14,15,16,17,18,26], to our knowledge this is the first controlled experiment that provides statistical evidence on the training and gender effects.

4.1. Effects of the Traffic Detection and Collision Avoidance Training

The results of this study show significant benefits of practical training on collision detection, whether trainees practiced with the AR application or in the flight simulator. To establish this, differences between the post-test and the pre-test, both conducted in the flight simulator without AR, were assessed. The practical training significantly improved trainees’ collision detection accuracy, that is, their estimation of whether an encountered aircraft is on a collision course. However, trainees’ selection of the avoidance maneuver and identification of the right-of-way did not improve significantly from pre-test to post-test, indicating that more research is necessary to determine the amount of training required to improve these skills. Other significant training effects were decreases in the intensity of trainees’ negative emotions and fear of failure. The collision detection and avoidance tasks were also rated as significantly less challenging after practical training, while interest and perceived success probability remained constant. Emotions and motivational factors have been shown to influence learning outcomes and performance in various domains [27,28,29], including flight training [32,33,34,40]. Thus, improvements in the trainees’ emotional state and motivation indicate a positive training effect. Differences between post-test and pre-test in trainees’ subjective workload and situational awareness did not reach statistical significance. This suggests that maintaining situational awareness in traffic is associated with a certain amount of workload and requires effort that may not decrease with practice. Overall, practical training benefited collision detection regardless of whether it took place with the AR application or in the flight simulator.

When the experimental and the control group were compared in a non-AR environment, no specific effects of the AR training were identified. The results did not show significant effects of AR training on trainees’ collision detection and avoidance performance, situational awareness, workload, emotion or motivation in a conventional flight simulation environment. The effect of AR training depends on the developed scenario and its implementation, and the rather simple scenario used in this study did not produce a significant effect. More research is needed to clarify whether AR training with a more elaborate scenario could yield a measurable benefit. In this study, the AR training group successfully transferred the skills to the simulation environment that was not AR-supported, and no negative effect of training with AR was observed. The group that trained with AR and the group that trained in the flight simulator achieved similar collision detection accuracy and similar application of the collision avoidance and right-of-way rules in the flight simulator when not supported by AR. Subjective workload, situational awareness, positive and negative emotion, and motivation were also similar in the group that trained with the AR application and the control group that trained in the conventional flight simulator.

Nevertheless, significant effects of the AR application for guiding and assessing the trainees’ visual “block” scanning technique were found. In VFR flight, the amplitude and speed of eye movements and the order of the blocks are critical [51], and the AR application had a significant training benefit on trainees’ scanning performance in post-test. The experimental group performed the scanning for traffic significantly better than the control group. Research shows that AR features bring new opportunities for pilot training by making trainees’ gaze point visible to the instructor [48]. In this study, the scanning application also used objective data to assess the speed and range of eye movements required for proper scanning.

4.2. Gender Diversity and AR Immersion

The results did not show significant gender differences in performance, perceived workload, situational awareness, positive emotion, interest and challenge related to the collision avoidance tests. However, there were significant gender differences in negative emotion and motivation. Although the practical training significantly decreased the intensity of negative emotions and fear of failure of all trainees, the gender-specific analysis shows that the decrease was larger in men than in women. Women also estimated the probability of success in post-test lower than men. Flight training organizations traditionally focus on performance and workload, but to make this domain more inclusive it is important to understand differences in subjective experience and drives when new training methods are explored [59]. Thus, gender differences in the experience of AR and in the appreciation of various features of the AR applications were further investigated within the experimental group that trained with the AR applications.

Trainees’ mental and emotional involvement in the AR training was assessed in terms of immersion [19,24]. Immersion has been associated with features that involve, among others, challenge, skills, curiosity, control, concentration and comprehension [20]. The results show that men scored significantly higher than women in AR engagement, meaning that they were more interested in the application and wanted to continue using it [21,24] more than women. However, no significant gender differences were found for engrossment and total immersion [21,24], meaning that the trainees’ positive emotions were affected by the AR applications in a comparable manner. Total immersion seems to be facilitated by the multimodal interaction with the application and the engagement of visual, auditory and mental attention [21,22]. Although no significant gender differences were found for total immersion, gender differences were found in the appreciation of various AR features. Men liked the traffic holograms used in the application and the projection field of the AR device significantly more than women. Women liked the voice interaction with the AR applications and the compass hologram providing orientation cues significantly more than men. Considering findings from the general population indicating that women perform better than men in verbal tasks [58], the women in this study may have experienced the voice interaction as easier and more supportive for their task performance. Although in the general population women did not perform better than men in visuospatial tasks [58], the women in this study appreciated the compass hologram, which may have been a compensatory aid. In fact, the compass is a mandatory and essential spatial orientation and navigation aid for VFR flight. No significant gender differences were obtained for gesture interaction with the AR applications and with the quiz. In this case it is important to support the trainees by building on their strengths and providing support to overcome their limitations. Thus, the multimodality of the AR applications, which facilitates immersion [21,22], can also address various use preferences and needs.

These findings are in line with previous research on the potential of AR to improve flight instruction, which showed many similarities but also gender-specific needs and preferences regarding AR training [15,17]. Addressing gender-specific preferences for AR-supported training is important because flight training is a domain where women are underrepresented [52] and thus their needs and preferences are not well known. Furthermore, there is a bias towards considering men’s needs and preferences as the sufficient standard [59], thus missing opportunities for gendered innovations. This topic is becoming more important because of the current changes in the industry and the enhanced interest in attracting talented women and rethinking the training of the next generation of pilots [53]. Thus, gender differences in flight training need to be investigated [40,54,55,56,57] and addressed by appropriate, inclusive methods of flight instruction [32]. Besides fulfilling business needs in terms of staffing and performance, by addressing gender-specific needs and preferences the aviation industry can also become more socially sustainable [17].

5. Conclusions

This study provides experimental evidence for the benefits and limitations of AR-supported training and its potential for reducing the risk of mid-air collisions in VFR flight. The results show that the interactive and multimodal AR application for learning the VFR traffic scanning technique significantly improved trainees’ performance as compared to the use of traditional written and graphical materials for instruction. Gender differences were found for AR engagement, but not for AR engrossment, total immersion, performance, workload, situational awareness, positive emotion, interest and challenge related to the collision detection and avoidance tasks. Although the practical training significantly decreased the intensity of negative emotions and fear of failure of all trainees, the gender-specific analysis shows that the decrease was larger in men than in women. Training with the AR application for collision detection and avoidance did not differ significantly from training in the simulator in terms of performance and workload. Also, trainees’ selection of the avoidance maneuver and identification of the right-of-way did not significantly improve; thus, more research is needed to address these issues. In addition, familiarization with the assessment criteria and with the collision detection and avoidance tasks in a dynamic environment decreased the intensity of trainees’ negative emotions and fear of failure. Based on this experimental evidence, AR-supported training could be used as a complement to conventional training to practice and provide objective feedback on trainees’ scanning and collision estimation accuracy.

Author Contributions

Conceptualization, B.M., R.B., W.V. and I.V.K.; methodology, B.M., R.B., W.V., H.S. and I.V.K.; software, H.S. and R.B.; investigation, B.M. and R.B.; resources, R.B. and W.V.; data curation, B.M.; writing—original draft preparation, B.M., W.V., H.S., R.B. and I.V.K.; project administration, R.B., B.M. and W.V.; funding acquisition, R.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation, and Technology and the Austrian Research Promotion Agency, FEMtech Program “Talente”, grant number 866702.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank all the volunteers involved in the study for their time, interest, and participation in the experiments. Furthermore, the authors gratefully acknowledge all colleagues involved in the set-up phase, and especially the experiment supervisors and administrators for their commitment and professional work.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Caudell, T.; Mizell, D. Augmented reality: An application of heads-up display technology to manual manufacturing processes. In Proceedings of the Twenty-Fifth Hawaii International Conference on System Sciences, Kauai, HI, USA, 7–10 January 1992; Volume 2, pp. 659–669.

2. Garzón, J. An Overview of Twenty-Five Years of Augmented Reality in Education. Multimodal Technol. Interact. 2021, 5, 37.

3. Wu, H.K.; Lee, S.W.Y.; Chang, H.Y.; Liang, J.C. Current status, opportunities and challenges of augmented reality in education. Comput. Educ. 2013, 62, 41–49.

4. Billinghurst, M.; Kato, H.; Poupyrev, I. The magicbook-moving seamlessly between reality and virtuality. IEEE Comput. Graph. Appl. 2001, 21, 6–8.

5. Billinghurst, M.; Kato, H. Collaborative augmented reality. Commun. ACM 2002, 45, 64–70.

6. Feng, S.; He, X.; He, W.; Billinghurst, M. Can you hear it? Stereo sound-assisted guidance in augmented reality assembly. Virtual Real. 2022, 1–11.

7. Huang, W.; Wakefield, M.; Rasmussen, T.A.; Kim, S.; Billinghurst, M. A review on communication cues for augmented reality based remote guidance. J. Multimodal User Interfaces 2022, 16, 239–256.

8. Bower, M.; Howe, C.; McCredie, N.; Robinson, A.; Grover, D. Augmented reality in Education—Cases, places, and potentials. In Proceedings of the 2013 IEEE 63rd Annual Conference International Council for Education Media (ICEM), Singapore, 1–4 October 2013; pp. 1–11.

9. Lasica, I.E.; Katzis, K.; Meletiou-Mavrotheris, M.; Dimopoulos, C. Augmented reality in laboratory-based education: Could it change the way students decide about their future studies? In Proceedings of the 2017 IEEE Global Engineering Education Conference (EDUCON), Athens, Greece, 25–28 April 2017; pp. 1473–1476.

10. Vergara, D.; Extremera, J.; Rubio, M.P.; Dávila, L.P. The proliferation of virtual laboratories in educational fields. ADCAIJ Adv. Distrib. Comput. Artif. Intell. J. 2020, 9, 85–97.

11. Vergara, D.; Rubio, M.P.; Lorenzo, M. New approach for the teaching of concrete compression tests in large groups of engineering students. J. Prof. Issues Eng. Educ. Pract. 2017, 143, 05016009.

12. Brown, L. The Next Generation Classroom: Transforming Aviation Training with Augmented Reality. In Proceedings of the National Training Aircraft Symposium (NTAS), Daytona Beach, FL, USA, 14–16 August 2017.

13. Brown, L. Holographic Micro-simulations to Enhance Aviation Training with Mixed Reality. In Proceedings of the National Training Aircraft Symposium (NTAS), Daytona Beach, FL, USA, 15 August 2018.

14. Brown, L. Augmented Reality in International Pilot Training to Meet Training Demands; Instructional Development Grants, Western Michigan University: Kalamazoo, MI, USA, 2019.

15. Moesl, B.; Schaffernak, H.; Vorraber, W.; Braunstingl, R.; Herrele, T.; Koglbauer, I.V. A Research Agenda for Implementing Augmented Reality in Ab Initio Pilot Training. Aviat. Psychol. Appl. Hum. Factors 2021, 11, 118–126.

16. Schaffernak, H.; Moesl, B.; Vorraber, W.; Braunstingl, R.; Herrele, T.; Koglbauer, I. Design and Evaluation of an Augmented Reality Application for Landing Training. In Human Interaction, Emerging Technologies and Future Applications IV; Advances in Intelligent Systems and Computing; Ahram, T., Taiar, R., Groff, F., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 1378, pp. 107–114.

17. Moesl, B.; Schaffernak, H.; Vorraber, W.; Holy, M.; Herrele, T.; Braunstingl, R.; Koglbauer, I.V. Towards a More Socially Sustainable Advanced Pilot Training by Integrating Wearable Augmented Reality Devices. Sustainability 2022, 14, 2220.

18. Schaffernak, H.; Moesl, B.; Vorraber, W.; Holy, M.; Herzog, E.M.; Novak, R.; Koglbauer, I.V. Novel Mixed Reality Use Cases for Pilot Training. Educ. Sci. 2022, 12, 345.

19. Brooks, K. There Is Nothing Virtual about Immersion: Narrative Immersion for VR and Other Interfaces; Motorola Labs/Human Interface Labs. 2003. Available online: http://desres18.netornot.at/md/there-is-nothing-virtual-about-immersion/ (accessed on 1 November 2022).

20. Qin, H.; Patrick Rau, P.L.; Salvendy, G. Measuring player immersion in the computer game narrative. Int. J. Hum.-Comput. Interact. 2009, 25, 107–133.

21. Brown, E.; Cairns, P. A Grounded Investigation of Game Immersion. In Proceedings of the CHI ’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; Association for Computing Machinery: New York, NY, USA, 2004; pp. 1297–1300.

22. Ermi, L.; Mäyrä, F. Fundamental Components of the Gameplay Experience: Analysing Immersion. In Proceedings of the Digital Games Research Conference 2005, Changing Views: Worlds in Play, Vancouver, BC, Canada, 16–20 June 2005; pp. 16–20.

23. Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 1990.

24. Georgiou, Y.; Kyza, E.A. The development and validation of the ARI questionnaire: An instrument for measuring immersion in location-based augmented reality settings. Int. J. Hum.-Comput. Stud. 2017, 98, 24–37.

25. Bressler, D.; Bodzin, A. A mixed methods assessment of students’ flow experiences during a mobile augmented reality science game. J. Comput. Assist. Learn. 2013, 29, 505–517.

26. Schaffernak, H.; Moesl, B.; Vorraber, W.; Koglbauer, I.V. Potential Augmented Reality Application Areas for Pilot Education: An Exploratory Study. Educ. Sci. 2020, 10, 86.

27. Schutz, P.A.; Pekrun, R. Emotion in Education—A Volume in Educational Psychology; Elsevier Inc.: Amsterdam, The Netherlands, 2007.

28. Eccles, D.W.; Ward, P.; Woodman, T.; Janelle, C.M.; Scanff, C.L.; Ehrlinger, J.; Castanier, C.; Coombes, S.A. Where’s the Emotion? How Sport Psychology Can Inform Research on Emotion in Human Factors. Hum. Factors 2011, 53, 180–202.

29. Rheinberg, F.; Vollmeyer, R.; Burns, B.D. QCM: A questionnaire to assess current motivation in learning situations. Diagnostica 2001, 47, 57–66.

30. Vollmeyer, R.; Rheinberg, F. Motivation and metacognition when learning a complex system. Eur. J. Psychol. Educ. 1999, 14, 541–554.

31. Vollmeyer, R.; Rheinberg, F. Does motivation affect performance via persistence? Learn. Instr. 2000, 10, 293–309.

32. Bauer, S.; Braunstingl, R.; Riesel, M.; Koglbauer, I. Improving the method for upset recovery training of ab initio student pilots in simulated and real flight. In Proceedings of the 33rd Conference of the European Association for Aviation Psychology, Dubrovnik, Croatia, 24–28 September 2019; Schwarz, M., Lasry, J., Schnücker, G.N., Becherstorfer, H., Eds.; 2019; pp. 167–179.

33. Koglbauer, I.; Kallus, K.W.; Braunstingl, R.; Boucsein, W. Recovery Training in Simulator Improves Performance and Psychophysiological State of Pilots During Simulated and Real Visual Flight Rules Flight. Int. J. Aviat. Psychol. 2011, 21, 307–324.

34. Braunstingl, R.; Baciu, C.; Koglbauer, I. Learning Style, Motivation, Emotional State And Workload of High Performers. In Proceedings of the ERD 2018 Social & Behavioural Sciences, Cluj-Napoca, Romania, 6–7 July 2018; pp. 374–381.

35. Koglbauer, I. Evaluation of A Coaching Program with Ab Initio Student Pilots. In Proceedings of the ERD 2017 Social & Behavioural Sciences, Cluj-Napoca, Romania, 6–7 July 2018; pp. 663–669.

36. European Aviation Safety Agency. The European Plan for Aviation Safety EPAS 2019–2023; European Aviation Safety Agency: Cologne, Germany, 2018.

37. Haberkorn, T.; Koglbauer, I.; Braunstingl, R.; Prehofer, B. Requirements for Future Collision Avoidance Systems in Visual Flight: A Human-Centered Approach. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 583–594.

38. Koglbauer, I. Gender differences in time perception. In The Cambridge Handbook of Applied Perception Research, 1st ed.; Cambridge University Press: Cambridge, UK, 2015; pp. 1004–1028.

39. Koglbauer, I. Simulator Training Improves the Estimation of Collision Parameters and the Performance of Student Pilots. Procedia-Soc. Behav. Sci. 2015, 209, 261–267.

40. Koglbauer, I.; Braunstingl, R. Ab initio pilot training for traffic separation and visual airport procedures in a naturalistic flight simulation environment. Transp. Res. Part F Traffic Psychol. Behav. 2018, 58, 1–10.

41. Koglbauer, I.; Leveson, N. System-Theoretic Analysis of Air Vehicle Separation in Visual Flight. In Proceedings of the 32nd Conference of the European Association for Aviation Psychology, Cascais, Portugal, 26–30 September 2016; Schwarz, M., Harfmann, J., Eds.; 2017; pp. 117–131.

42. Koglbauer, I.; Braunstingl, R. Anticipation-Based Methods for Pilot Training and Aviation Systems Engineering. In Aviation Psychology: Applied Methods and Techniques; Koglbauer, I., Biede-Straussberger, S., Eds.; Hogrefe Verlag GmbH & Co. KG: Göttingen, Germany, 2021; pp. 51–68.

43. Endsley, M. Theoretical underpinnings of situation awareness: A critical review. In Situation Awareness Analysis and Measurement; Endsley, M., Garland, D., Eds.; Lawrence Erlbaum Associates Inc.: Mahwah, NJ, USA, 2000; pp. 3–28.

44. Koglbauer, I. Training for Prediction and Management of Complex and Dynamic Flight Situations. Procedia-Soc. Behav. Sci. 2015, 209, 268–276.

45. Lanini-Maggi, S.; Ruginski, I.T.; Shipley, T.F.; Hurter, C.; Duchowski, A.T.; Briesemeister, B.B.; Lee, J.; Fabrikant, S.I. Assessing how visual search entropy and engagement predict performance in a multiple-objects tracking air traffic control task. Comput. Hum. Behav. Rep. 2021, 4, 100127.

46. Maggi, S.; Fabrikant, S.I.; Imbert, J.P.; Hurter, C. How do display design and user characteristics matter in animations? An empirical study with air traffic control displays. Cartogr. Int. J. Geogr. Inf. Geovisualization 2016, 51, 25–37.

47. Lee, Y.; Marks, S.; Connor, A.M. An Evaluation of the Effectiveness of Virtual Reality in Air Traffic Control; Association for Computing Machinery: New York, NY, USA, 2020.

48. Vlasblom, J.; Van der Pal, J.; Sewnath, G. Making the invisible visible—Increasing pilot training effectiveness by visualizing scan patterns of trainees through AR. In Proceedings of the International Training Technology Exhibition & Conference (IT2EC), Bangkok, Thailand, 1–2 March 2019; pp. 26–28.

49. Braunstingl, R.; Baciu, C.; Koglbauer, I. A review of instruction methods for collision detection and avoidance. In Proceedings of the ERD 2018 Social & Behavioural Sciences, Cluj-Napoca, Romania, 6–7 July 2018; pp. 382–388.

50. Keil, J.; Edler, D.; Dickmann, F. Preparing the HoloLens for user studies: An augmented reality interface for the spatial adjustment of holographic objects in 3D indoor environments. KN-J. Cartogr. Geogr. Inf. 2019, 69, 205–215.

51. European General Aviation Safety Team. Collision Avoidance; European General Aviation Safety Team: Cologne, Germany, 2010.

52. Mitchell, J.; Kristovics, A.; Vermeulen, L.; Wilson, J.; Martinussen, M. How Pink is the Sky? A Cross-national Study of the Gendered Occupation of Pilot. Employ. Relations Rec. 2005, 5, 43–60.

53. Woods, B. How Airlines Plan to Create a New Generation of Pilots Amid Fears of Decade-Long Cockpit Crisis. 2022. Available online: https://www.cnbc.com/2022/11/11/how-airlines-plan-to-create-new-generation-of-pilots-at-time-of-crisis.html (accessed on 16 December 2022).

54. Hamilton, P. The Teaching Women to Fly Research Project. In Absent Aviators, Gender Issues in Aviation; Bridges, D., Neal-Smith, J., Mills, A., Eds.; Ashgate Publishing, Ltd.: Surrey, UK, 2014; pp. 313–331.

55. Sitler, R. Gender differences in learning to fly. In Tapping Diverse Talents in Aviation: Culture, Gender, and Diversity; Turney, M.A., Ed.; Ashgate: Aldershot, UK, 2004; pp. 77–90.

56. Turney, M.A.; Bishop, J.C.; Karp, M.R.; Niemczyk, M.; Sitler, R.L.; Green, M.F. National survey results: Retention of women in collegiate aviation. J. Air Transp. 2002, 7, 1–24.

57. Turney, M.; Henley, I.; Niemczyk, M.; McCurry, W. Inclusive versus exclusive strategies in aviation training. In Tapping Diverse Talents in Aviation: Culture, Gender, and Diversity; Turney, M.A., Ed.; Ashgate: Aldershot, UK, 2004; pp. 185–196.

58. Neubauer, A.C.; Grabner, R.H.; Fink, A.; Neuper, C. Intelligence and neural efficiency: Further evidence of the influence of task content and sex on the brain–IQ relationship. Cogn. Brain Res. 2005, 25, 217–225.

59. Koglbauer, I.V. Forschungsmethoden in der Verbindung Gender und Technik: Research Methods Linking Gender and Technology. Psychol. Österreich 2017, 37, 354–359.

60. Microsoft. HoloLens-Hardware (1. Generation). Available online: https://learn.microsoft.com/de-de/hololens/hololens1-hardware (accessed on 16 November 2022).

61. Taylor, R. Situation Awareness Rating Technique (SART): The development of a tool for aircrew systems design. In Proceedings of the Situational Awareness in Aerospace Operations (AGARD-CP-478), Neuilly-Sur-Seine, France; NATO-AGARD, Ed. 1990; pp. 3.1–3.17. Available online: https://ext.eurocontrol.int/ehp/?q=node/1608 (accessed on 1 November 2012).

62. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

63. Watson, D.; Clark, L.A.; Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Personal. Soc. Psychol. 1988, 54, 1063–1070.

64. Santel, C.; Klingauf, U. A review of low-cost collision alerting systems and their human-machine interfaces. Tech. Soar. 2012, 36, 31–39.

65. Haberkorn, T.; Koglbauer, I.; Braunstingl, R. Traffic Displays for Visual Flight Indicating Track and Priority Cues. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 755–766.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).