Comparing Interaction Techniques to Help Blind People Explore Maps on Small Tactile Devices

Abstract

1. Introduction

2. Materials and Methods

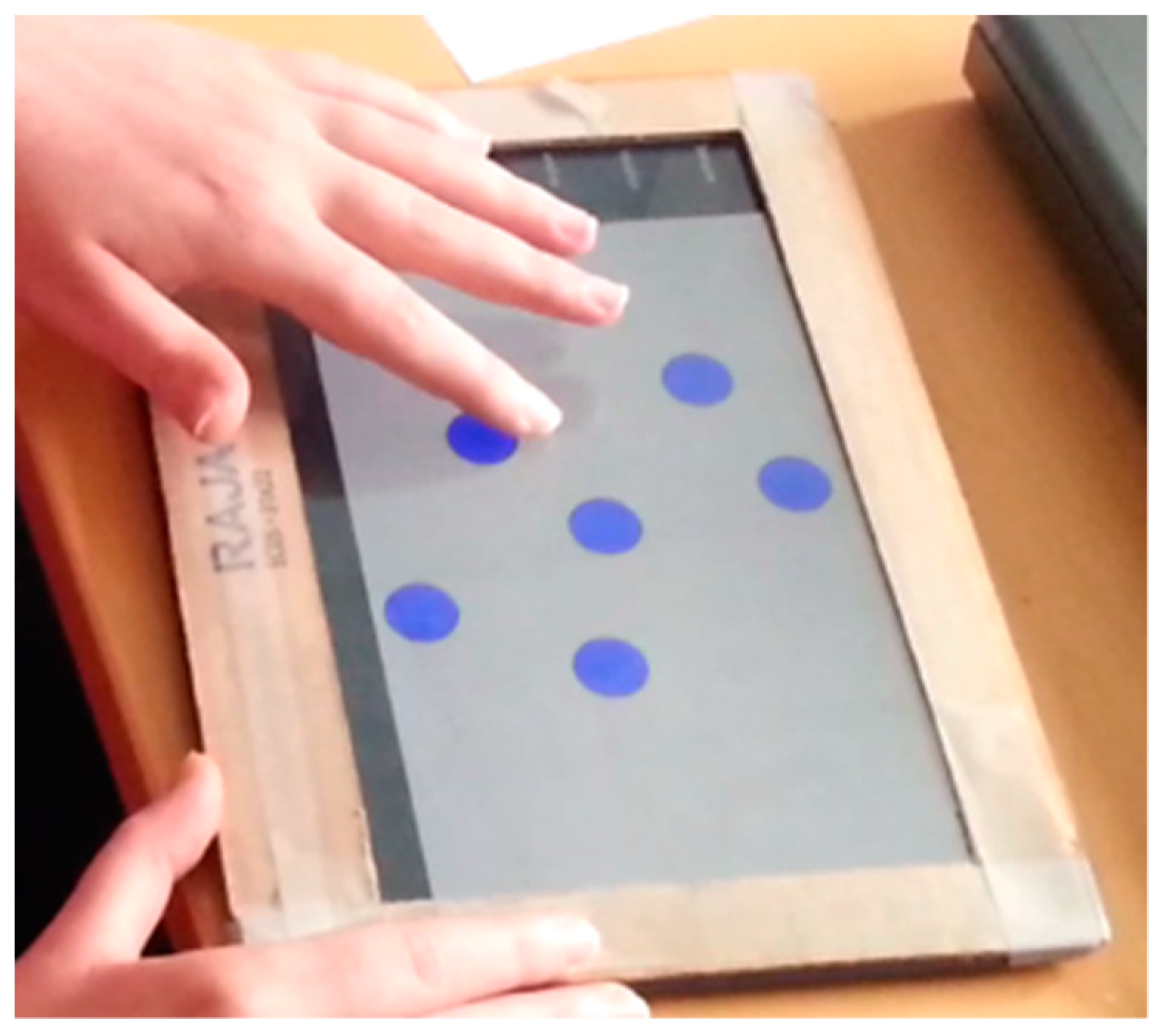

2.1. Prototype

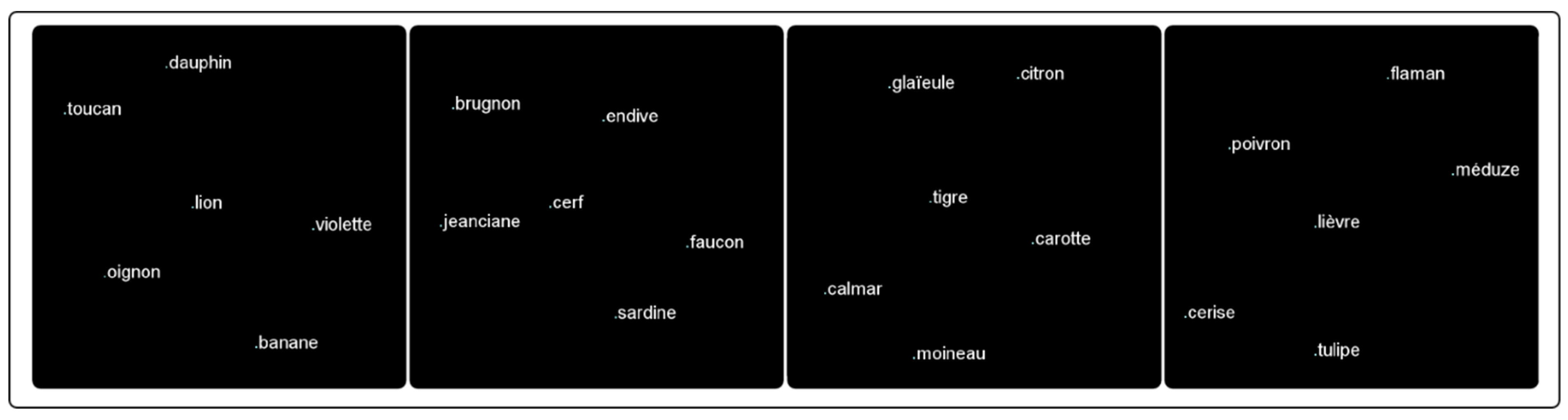

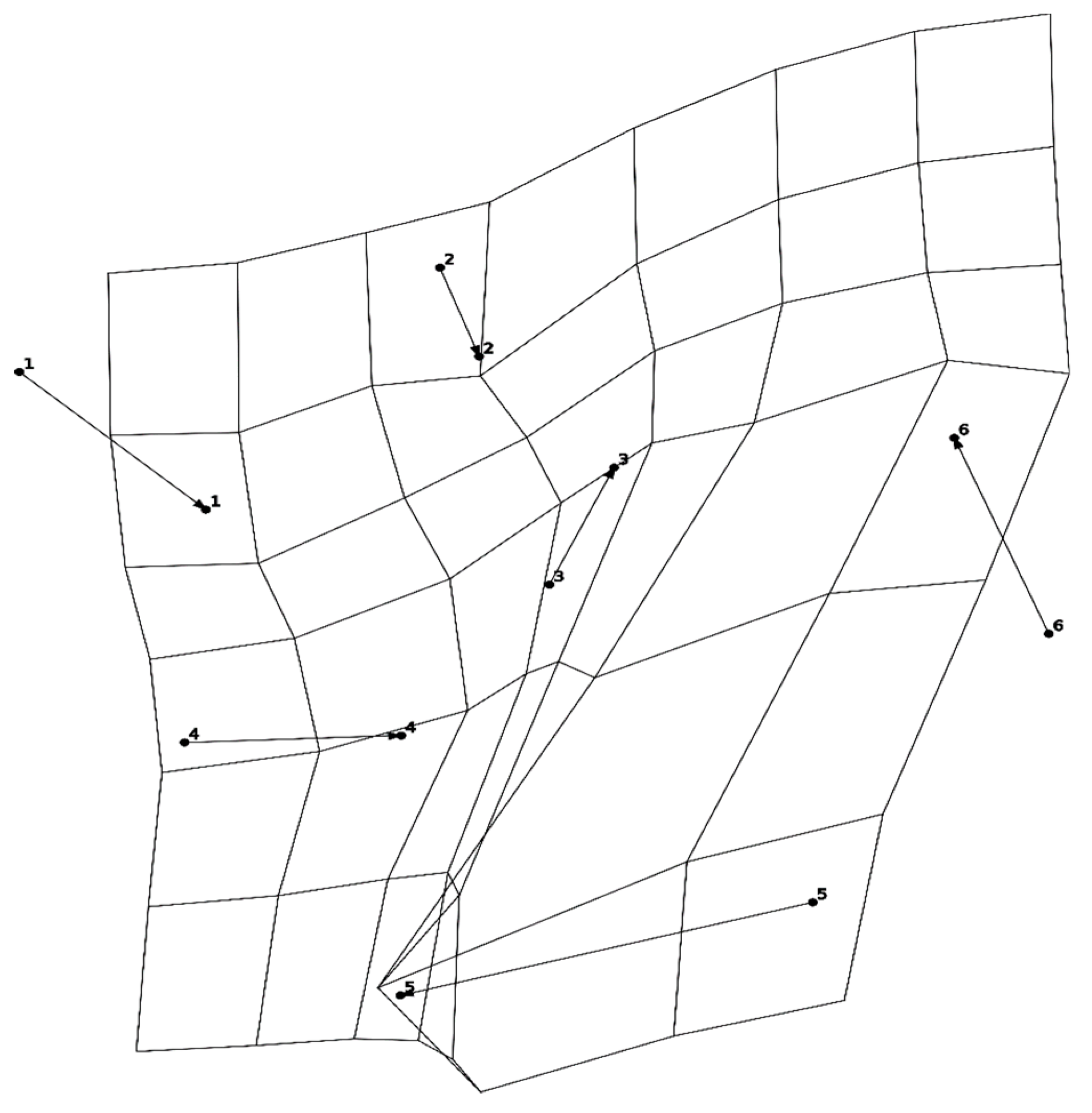

2.2. Maps

2.3. Interaction Techniques

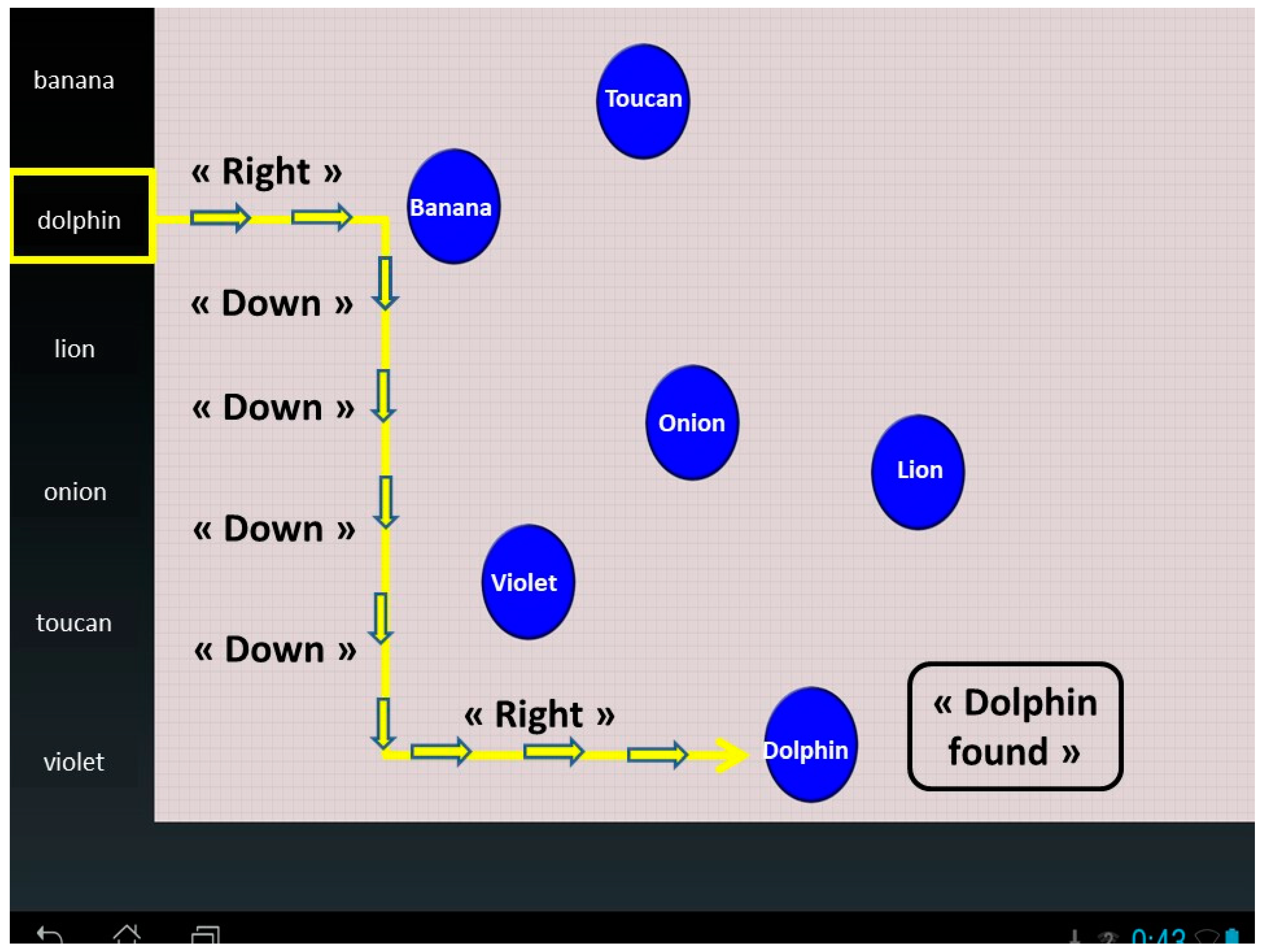

2.3.1. Direct Guidance (DIG)

2.3.2. Edge Projection (EDP)

2.3.3. Grid Layout (LAY)

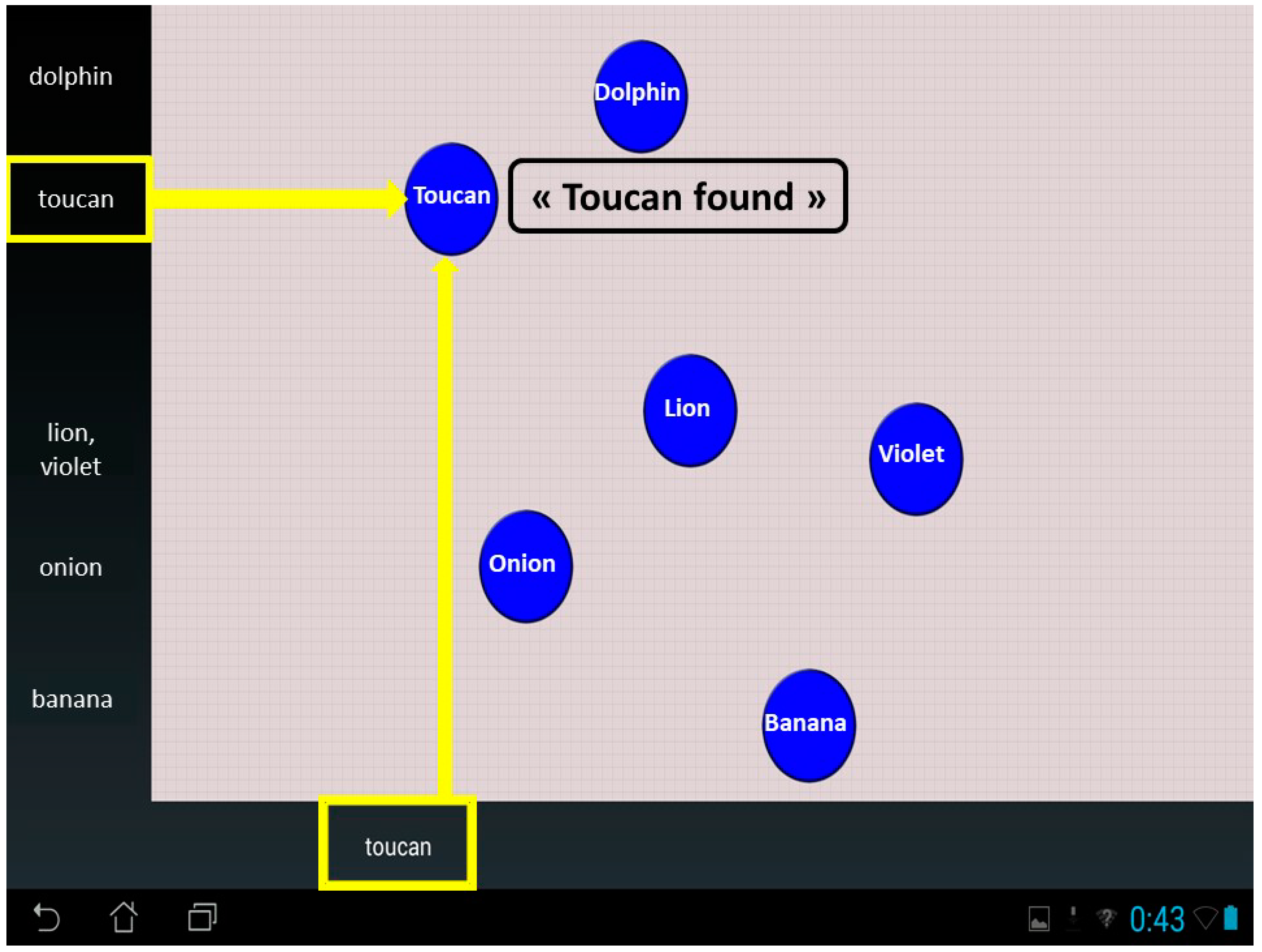

2.3.4. Control (Screen-Reader like Implementation)

2.4. Participants

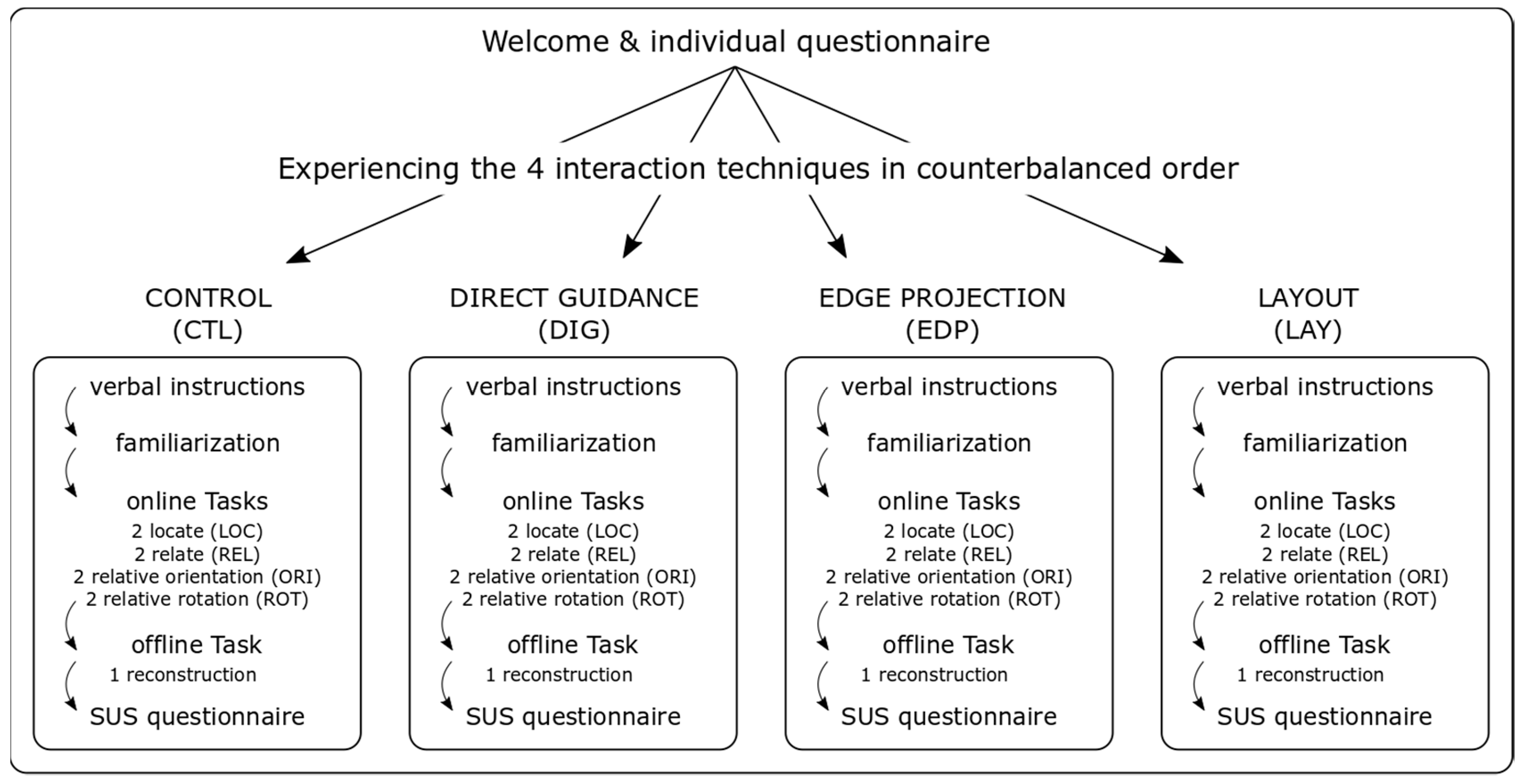

2.5. Procedure

2.5.1. Online Tasks

- Locate (LOC). The participant had to locate a target as quickly as possible. This task was also used by Kane et al. [19]. The task was considered complete once the participant found the target element. Response time and finger path were recorded.

- Relate (REL). The experimenter named three targets (e.g., A, B, and C). After exploration, the participant had to indicate whether the distance between A and B was longer or shorter than the distance between A and C. Response time, correctness of the answers, and finger path were recorded.

- Relative orientation (ORI). Using the clock face system, the participant had to determine the direction towards a target as if standing at the center of the screen and facing north. The clock face system is a metaphor for indicating directions: the user is virtually placed in the middle of an analogue clock and is always facing 12:00. A direction directly to the right, for example, is indicated as 15:00 (3 o'clock). This task has also been used by Giraud et al. [15]. Response time, precision (error in direction), and finger path were recorded.

- Relative orientation with a rotation (ROT). This task was similar to the previous one, except that the participant had to imagine facing a direction other than north and therefore had to perform a mental rotation to find the answer. This task is of interest because people with visual impairments commonly have difficulty performing mental rotations [24]. As with the previous task, response time, precision (error in direction), and finger path were recorded.
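The geometry behind the REL, ORI, and ROT tasks can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the coordinate convention (x growing east, y growing north), and the rounding to the nearest hour are all assumptions made for illustration, and hours are expressed on a 12-hour dial (3 rather than 15:00 for "right").

```python
import math

def is_ab_longer_than_ac(a, b, c):
    """REL task: True if the A-B distance exceeds the A-C distance.

    Points are (x, y) tuples. Hypothetical helper, not from the paper.
    """
    return math.dist(a, b) > math.dist(a, c)

def clock_direction(origin, target, facing_deg=0.0):
    """ORI/ROT tasks: clock-face hour (1-12) of `target` seen from `origin`.

    Assumed convention: x grows east, y grows north. Touchscreen
    coordinates usually have y growing downward and would need flipping.
    facing_deg is the imagined heading, clockwise from north
    (0 = north for ORI, any other value for ROT).
    """
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    # Compass bearing of the target, measured clockwise from north.
    bearing = math.degrees(math.atan2(dx, dy)) % 360.0
    # Rotate into the user's imagined frame of reference.
    relative = (bearing - facing_deg) % 360.0
    # Each hour on the dial covers 30 degrees; hour 0 is written as 12.
    hour = round(relative / 30.0) % 12
    return 12 if hour == 0 else hour

def direction_error_hours(answer_hour, true_hour):
    """Smallest distance between two hours on the dial (0 to 6)."""
    diff = abs(answer_hour - true_hour) % 12
    return min(diff, 12 - diff)

center = (0.0, 0.0)
east_target = (1.0, 0.0)
print(clock_direction(center, east_target))                   # facing north
print(clock_direction(center, east_target, facing_deg=90.0))  # facing east
```

In this sketch the ROT task differs from ORI only by a non-zero `facing_deg`, which makes explicit that the extra difficulty lies in the mental rotation rather than in the map geometry.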

2.5.2. Offline Tasks

2.6. Variables and Statistics

3. Results

3.1. Online Tasks

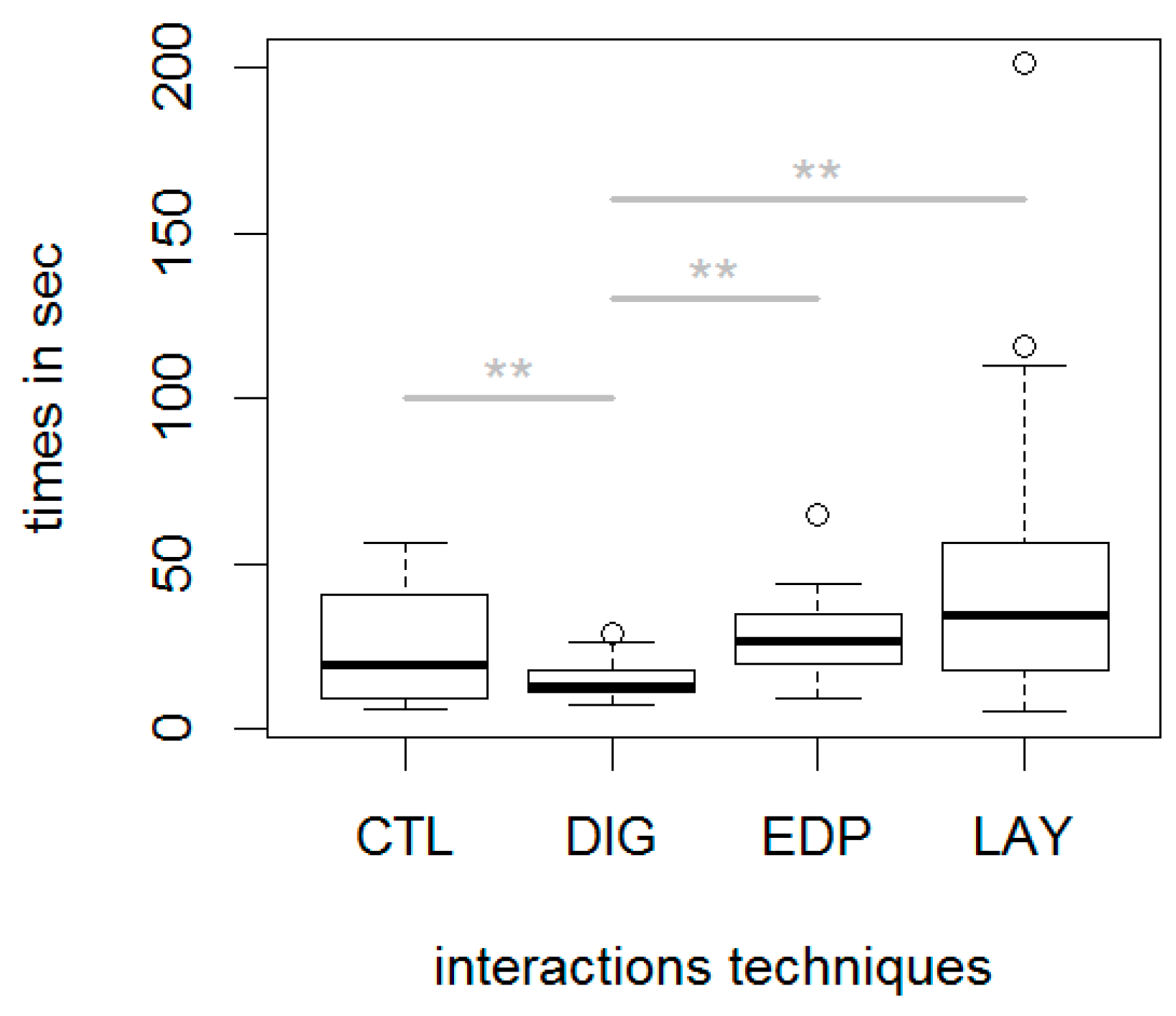

3.1.1. Location Task (LOC) Efficiency

3.1.2. Relate Task (REL) Efficiency

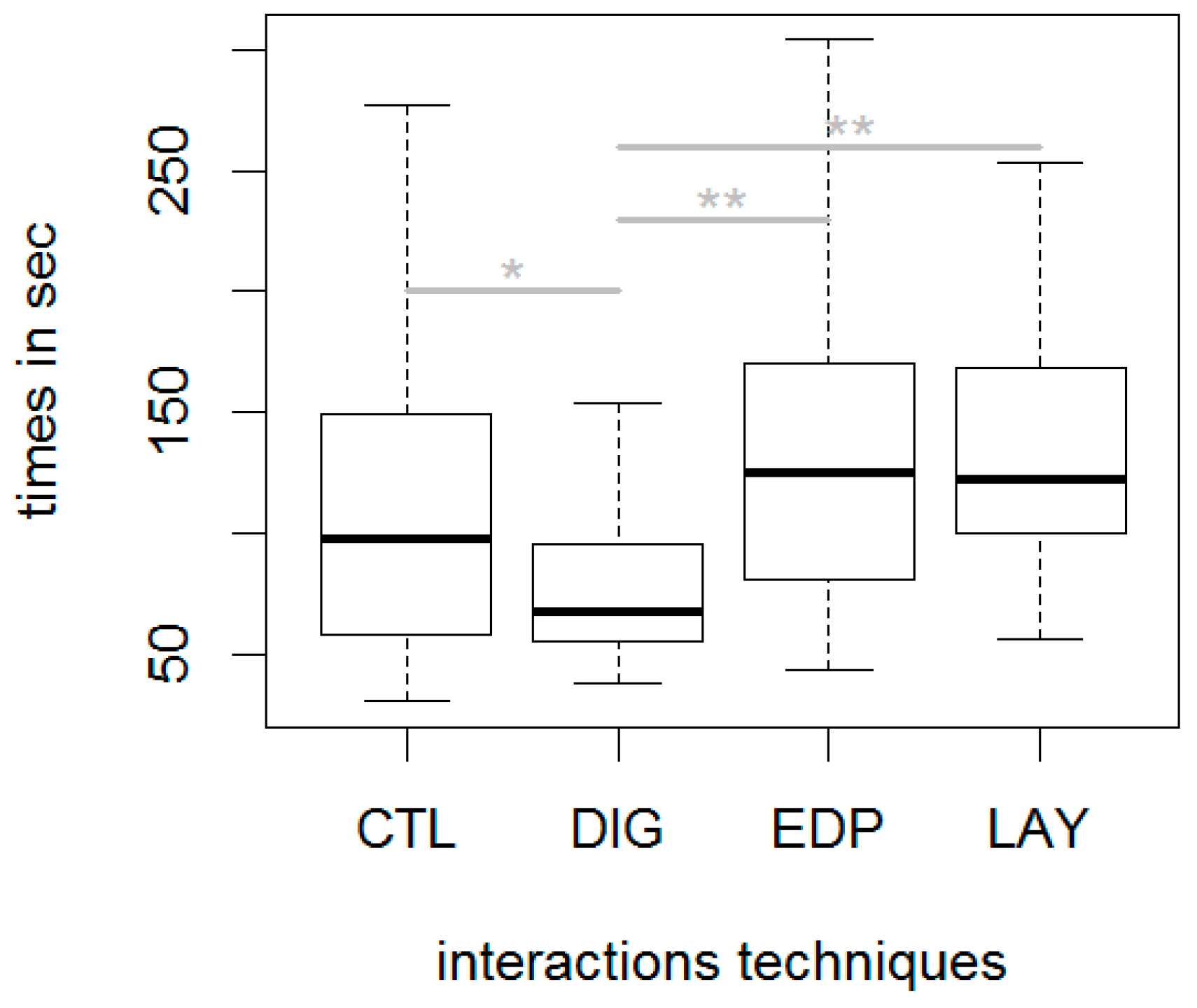

3.1.3. Relative Orientation Task (ORI) Efficiency

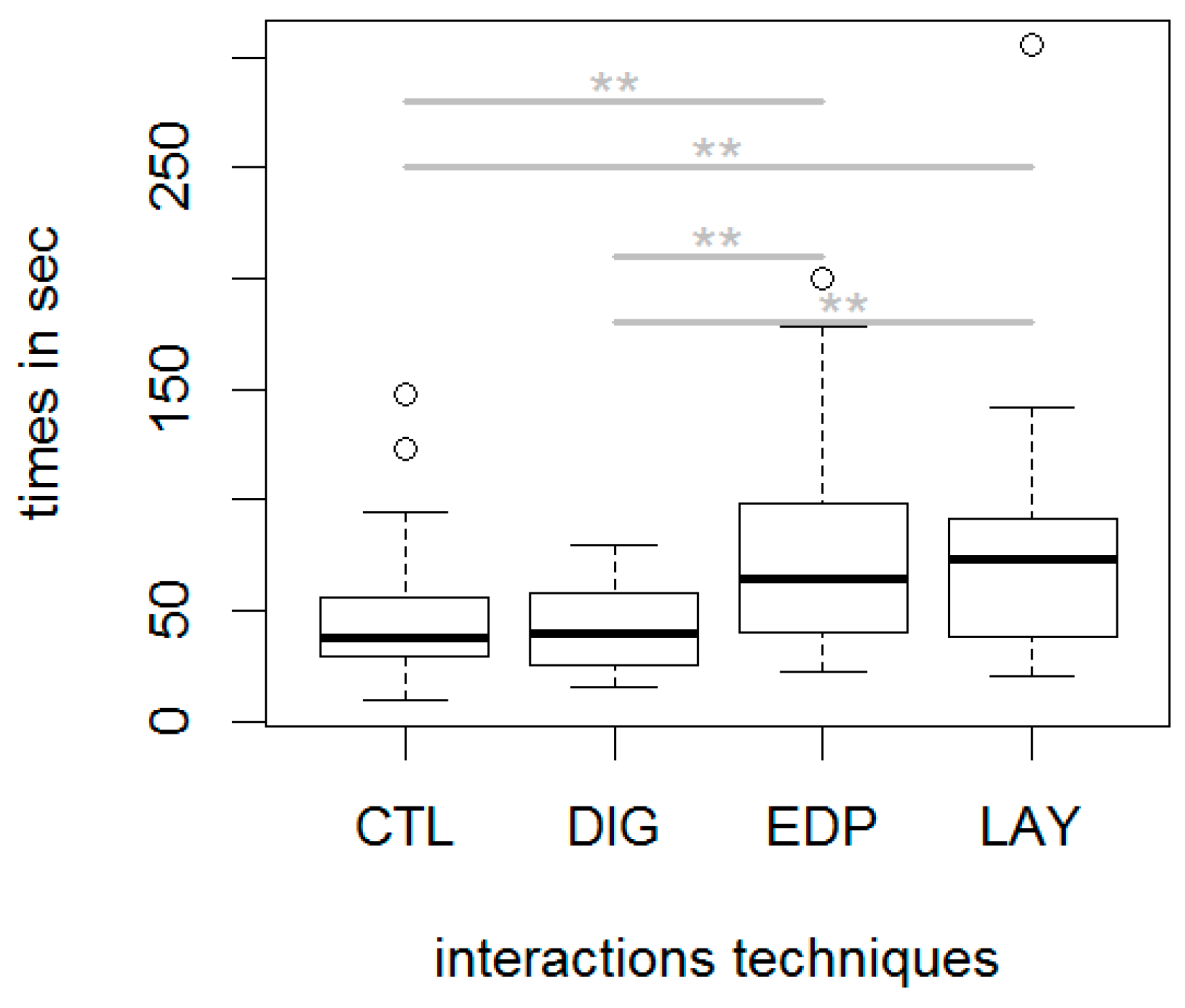

3.1.4. Relative Orientation Task with Mental Rotation (ROT) Efficiency

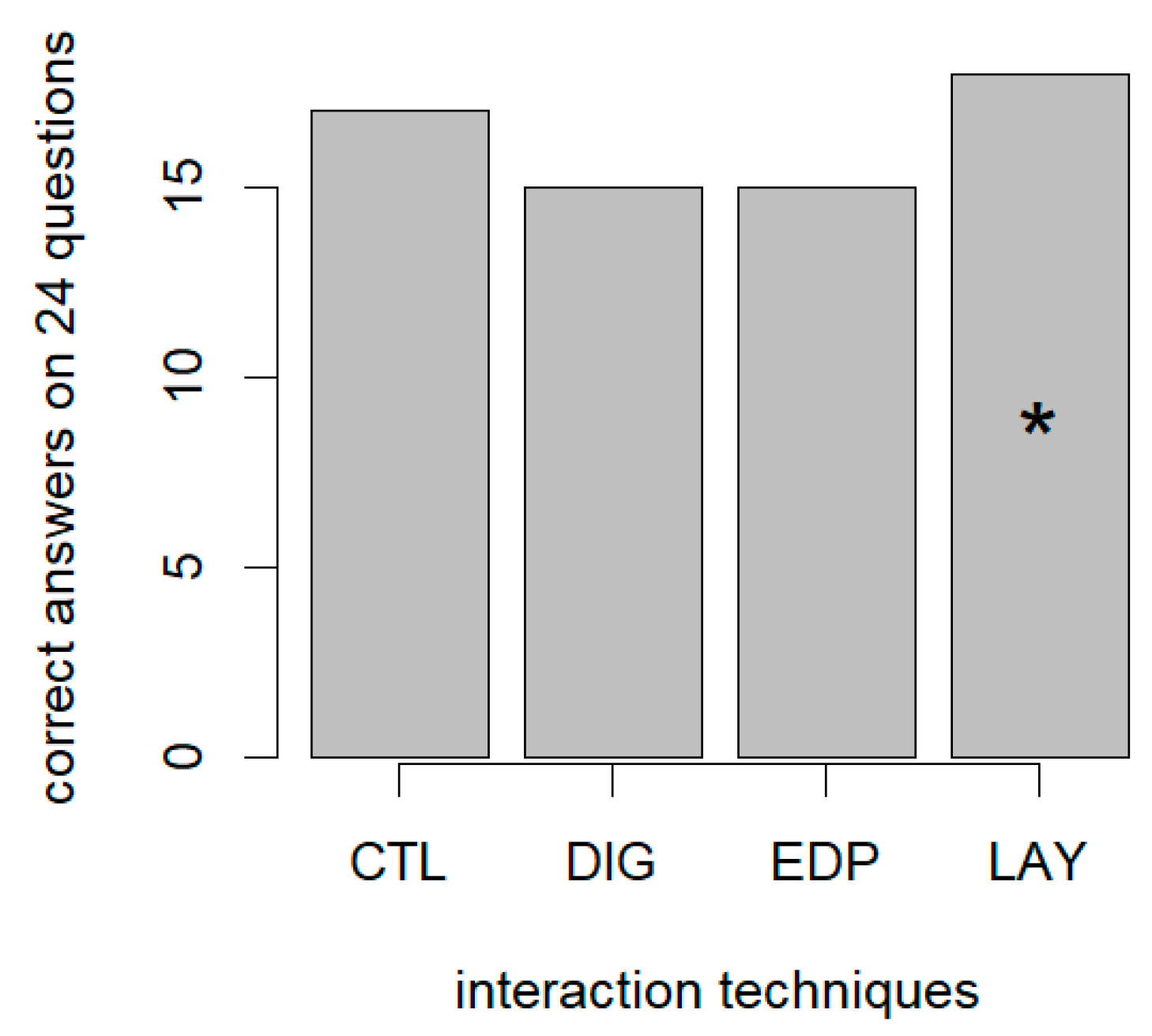

3.1.5. Effectiveness of the Different Tasks

3.1.6. Synthesis for the Online Tasks

3.2. Offline Task

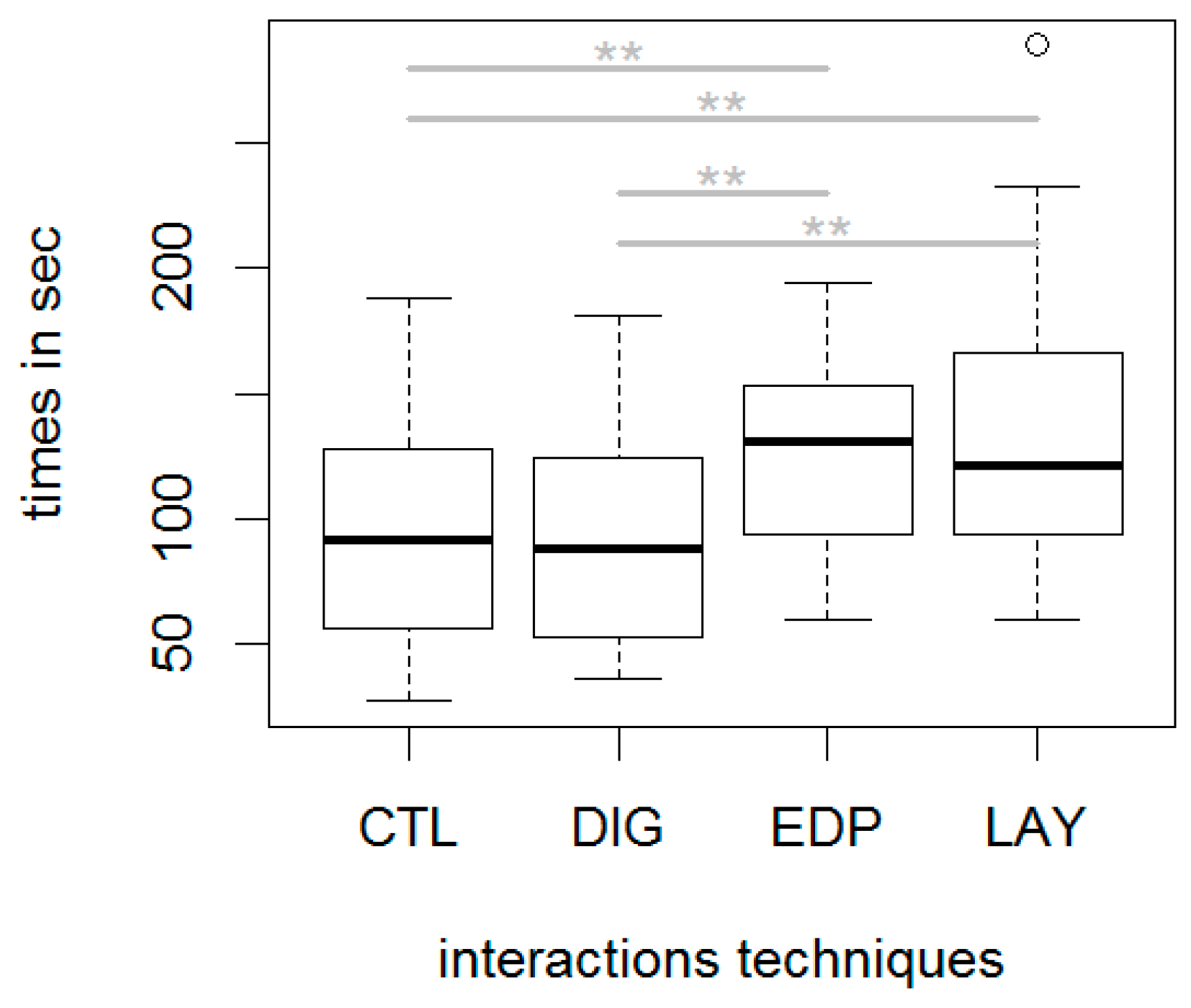

3.2.1. Exploration Time Observed before the Offline Task

3.2.2. Exploration Distance Observed before the Offline Task

3.2.3. Back-and-Forth Strategy

3.2.4. Cyclic Strategy

3.2.5. Point of Reference Strategy

3.2.6. Visual Status Influence on Observed Exploration Strategies

3.2.7. Reconstruction Similarity

3.2.8. Synthesis for the Offline Task

3.3. User Satisfaction

4. Discussion

4.1. Discussion about Online Tasks

4.1.1. Response Times

4.1.2. Spatial Skills

4.2. Discussion about Offline Tasks

4.2.1. The Layout Technique Requires Shorter Exploratory Movements

4.2.2. The Layout Technique Requires Less Cognitive Exploratory Strategies

4.2.3. The Layout Technique is not Quicker

4.3. Discussion about the Influence of Visual Status on Tactile Exploration and Spatial Memorization

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. WHO. Visual Impairment and Blindness Fact Sheet N° 282; World Health Organization: Geneva, Switzerland, 2018.
2. Froehlich, J.E.; Brock, A.M.; Caspi, A.; Guerreiro, J.; Hara, K.; Kirkham, R.; Schöning, J.; Tannert, B. Grand challenges in accessible maps. Interactions 2019, 26, 78–81.
3. Gorlewicz, J.L.; Tennison, J.L.; Palani, H.P.; Giudice, N.A. The graphical access challenge for people with visual impairments: Positions and pathways forward. In Interactive Multimedia; Cvetković, D., Ed.; IntechOpen: London, UK, 2018.
4. Tatham, A.F. The design of tactile maps: Theoretical and practical considerations. In Proceedings of International Cartographic Association: Mapping the Nations; International Cartographic Association: Bournemouth, UK, 1991; pp. 157–166.
5. Ungar, S. Cognitive mapping without visual experience. In Cognitive Mapping: Past Present and Future; Kitchin, R., Freundschuh, S., Eds.; Routledge: Oxon, UK, 2000; pp. 221–248.
6. Hampson, P.J.; Daly, C.M. Individual variation in tactile map reading skills: Some guidelines for research. J. Vis. Impair. Blind. 1989, 83, 505–509.
7. Hill, E.W.; Rieser, J.J. How persons with visual impairments explore novel spaces: Strategies of good and poor performers. J. Vis. Impair. Blind. 1993, 87, 8–15.
8. Gaunet, F.; Thinus-Blanc, C. Early-blind subjects' spatial abilities in the locomotor space: Exploratory strategies and reaction-to-change performance. Perception 1996, 25, 967–981.
9. Golledge, R.G.; Klatzky, R.L.; Loomis, J.M. Cognitive mapping and wayfinding by adults without vision. In The Construction of Cognitive Maps; Springer: Berlin, Germany, 1996; pp. 215–246.
10. Tellevik, J.M. Influence of spatial exploration patterns on cognitive mapping by blindfolded sighted persons. J. Vis. Impair. Blind. 1992, 86, 221–224.
11. Guerreiro, T.; Montague, K.; Guerreiro, J.; Nunes, R.; Nicolau, H.; Gonçalves, D. Blind people interacting with large touch surfaces: Strategies for one-handed and two-handed exploration. In Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces, Madeira, Portugal, 15–18 November 2015; ACM: New York, NY, USA, 2015; pp. 25–34.
12. Ducasse, J.; Brock, A.M.; Jouffrais, C. Accessible interactive maps for visually impaired users. In Mobility in Visually Impaired People: Fundamentals and ICT Assistive Technologies; Pissaloux, E., Velasquez, R., Eds.; Springer: Berlin, Germany, 2018; pp. 537–584.
13. Brock, A.M.; Truillet, P.; Oriola, B.; Picard, D.; Jouffrais, C. Interactivity improves usability of geographic maps for visually impaired people. Hum. Comput. Interact. 2015, 30, 156–194.
14. Simonnet, M.; Vieilledent, S.; Jacobson, R.D.; Tisseau, J. Comparing tactile maps and haptic digital representations of a maritime environment. J. Vis. Impair. Blind. 2011, 105, 222–234.
15. Giraud, S.; Brock, A.M.; Macé, M.J.M.; Jouffrais, C. Map learning with a 3D printed interactive small-scale model: Improvement of space and text memorization in visually impaired students. Front. Psychol. 2017, 8, 10.
16. Zeng, L.; Weber, G. ATMap: Annotated tactile maps for the visually impaired. In COST 2102 International Training School, Cognitive Behavioural Systems, LNCS; Springer: Berlin, Germany, 2012; Volume 7403, pp. 290–298.
17. Siegel, A.W.; White, S. The development of spatial representations of large-scale environments. Adv. Child Dev. Behav. 1975, 10, 9–55.
18. New, B.; Pallier, C.; Ferrand, L.; Matos, R. Une base de données lexicales du français contemporain sur internet: LEXIQUE. Annee Psychol. 2001, 101, 447–462.
19. Kane, S.K.; Morris, M.R.; Perkins, A.Z.; Wigdor, D.; Ladner, R.E.; Wobbrock, J.O. Access overlays: Improving non-visual access to large touch screens for blind users. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology (UIST '11); ACM: New York, NY, USA, 2011; pp. 273–282.
20. Kane, S.K.; Wobbrock, J.O.; Ladner, R.E. Usable gestures for blind people: Understanding preference and performance. In Proceedings of the 2011 Annual Conference on Human Factors in Computing Systems (CHI '11), Vancouver, BC, Canada, 7–12 May 2011; ACM: New York, NY, USA, 2011; pp. 413–422.
21. Bardot, S.; Serrano, M.; Jouffrais, C. From tactile to virtual: Using a smartwatch to improve spatial map exploration for visually impaired users. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '16), Florence, Italy, 6–9 September 2016; ACM: New York, NY, USA, 2016; pp. 100–111.
22. Hegarty, M.; Richardson, A.E.; Montello, D.R.; Lovelace, K.; Subbiah, I. Development of a self-report measure of environmental spatial ability. Intelligence 2002, 30, 425–447.
23. Albouys-Perrois, J.; Laviole, J.; Briant, C.; Brock, A. Towards a multisensory augmented reality map for blind and low vision people: A participatory design approach. In Proceedings of the CHI '18 Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018.
24. Thinus-Blanc, C.; Gaunet, F. Representation of space in blind persons: Vision as a spatial sense? Psychol. Bull. 1997, 121, 20–42.
25. Brooke, J. SUS: A 'quick and dirty' usability scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, B.A., McClelland, I.L., Eds.; Taylor & Francis: London, UK, 1996; pp. 189–194.
26. Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Int. J. Hum. Comput. Interact. 2008, 24, 574–594.
27. Friedman, A.; Kohler, B. Bidimensional regression: Assessing the configural similarity and accuracy of cognitive maps and other two-dimensional data sets. Psychol. Methods 2003, 8, 468–491.
28. Cattaneo, Z.; Vecchi, T. Blind Vision: The Neuroscience of Visual Impairment; MIT Press: Cambridge, MA, USA, 2011.

| Category | Name (French) | Translation (English) | Frequency | Syllables | Map Nr |
|---|---|---|---|---|---|
| Water Animals | Dauphin | Dolphin | 1.76 | 2 | 1 |
| | Méduse | Jellyfish | 0.66 | 2 | 2 |
| | Sardine | Sardine | 0.84 | 2 | 3 |
| | Calmar | Squid | 0.7 | 2 | 4 |
| Birds | Toucan | Toucan | 0.03 | 2 | 1 |
| | Flamand | Flamingo | 0.39 | 2 | 2 |
| | Faucon | Falcon | 4.07 | 2 | 3 |
| | Moineau | Sparrow | 2.92 | 2 | 4 |
| Mammals | Lion | Lion | 14.58 | 1 | 1 |
| | Lièvre | Hare | 3.36 | 1 | 2 |
| | Cerf | Deer | 6.17 | 1 | 3 |
| | Tigre | Tiger | 11.14 | 1 | 4 |
| Flowers | Violette | Violet | 0.77 | 2 | 1 |
| | Tulipe | Tulip | 1.53 | 2 | 2 |
| | Gentiane | Gentian | 0.14 | 2 | 3 |
| | Glaïeul | Gladiolus | 0.01 | 2 | 4 |
| Vegetables | Oignon | Onion | 4.35 | 2 | 1 |
| | Poivron | Bell Pepper | 0.51 | 2 | 2 |
| | Endive | Chicory | 0.03 | 2 | 3 |
| | Carotte | Carrot | 2.45 | 2 | 4 |
| Fruits | Banane | Banana | 6.09 | 2 | 1 |
| | Cerise | Cherry | 2.75 | 2 | 2 |
| | Brugnon | Nectarine | 0.14 | 2 | 3 |
| | Citron | Lemon | 8.1 | 2 | 4 |

| Participant | B1 | B2 | B3 | B4 | B5 | B6 |
|---|---|---|---|---|---|---|
| Gender | F | F | M | F | M | F |
| Age | 43 | 41 | 61 | 22 | 56 | 61 |
| Education | University | College | High School | College | Primary education | University |
| Profession | Music Teacher | Secretary | Retired (formerly piano tuner) | Secretary | Furniture maker | Administrative officer |
| Onset of Blindness | Birth | Birth | Birth | Birth | 18 years | 15 years |
| Braille Reading Expertise | 5 | 4 | 4 | 5 | 4 | 4 |
| Braille Hands | Two sequential | Two parallel | Two sequential | Left hand | Left hand | Two parallel |
| Tactile Graphics Ease | 2 | 1 | 4 | 1 | 4 | 4 |
| Dexterity | Right | Right | Right | Right | Right | Right |
| Phone | Android | iPhone | iPhone | Button-based | Android | Button-based |
| Screen Reader | NVDA, Talkback | Jaws, VoiceOver | Jaws, VoiceOver | Jaws, NVDA | NVDA, Jaws, Talkback | Jaws, NVDA |
| Interactive Technology Ease | 5 | 4 | 4 | 4 | 4 | 4 |
| SBSOD | 4.33 | 4.73 | 5.33 | 1.86 | 5.26 | 5.46 |

| Online Tasks Measures | LOC | REL | ORI | ROT |
|---|---|---|---|---|
| Time | DIG | DIG | DIG | DIG |
| Distance Estimation | Not measured | LAY | No difference | No difference |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Simonnet, M.; Brock, A.M.; Serpa, A.; Oriola, B.; Jouffrais, C. Comparing Interaction Techniques to Help Blind People Explore Maps on Small Tactile Devices. Multimodal Technol. Interact. 2019, 3, 27. https://doi.org/10.3390/mti3020027
