Virtual Reality in Cartography: Immersive 3D Visualization of the Arctic Clyde Inlet (Canada) Using Digital Elevation Models and Bathymetric Data

Abstract

1. Introduction

2. Related Work

3. Methodology

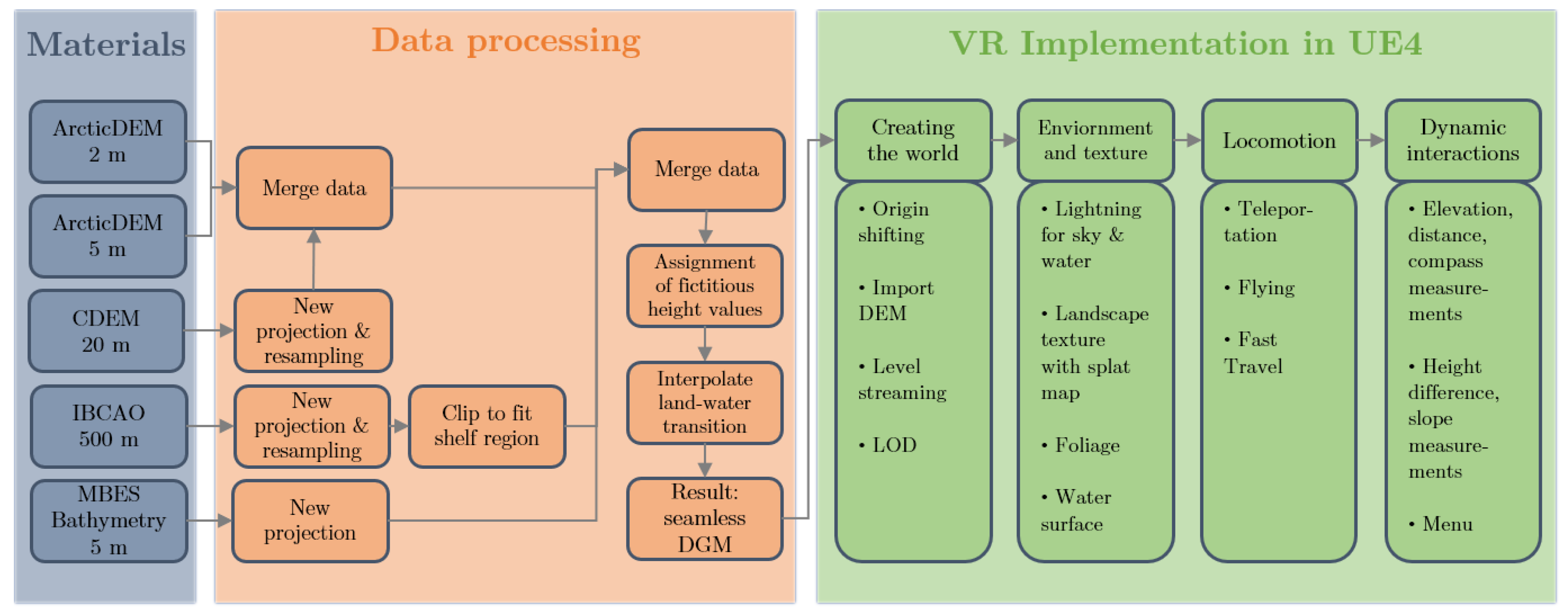

3.1. Project Workflow

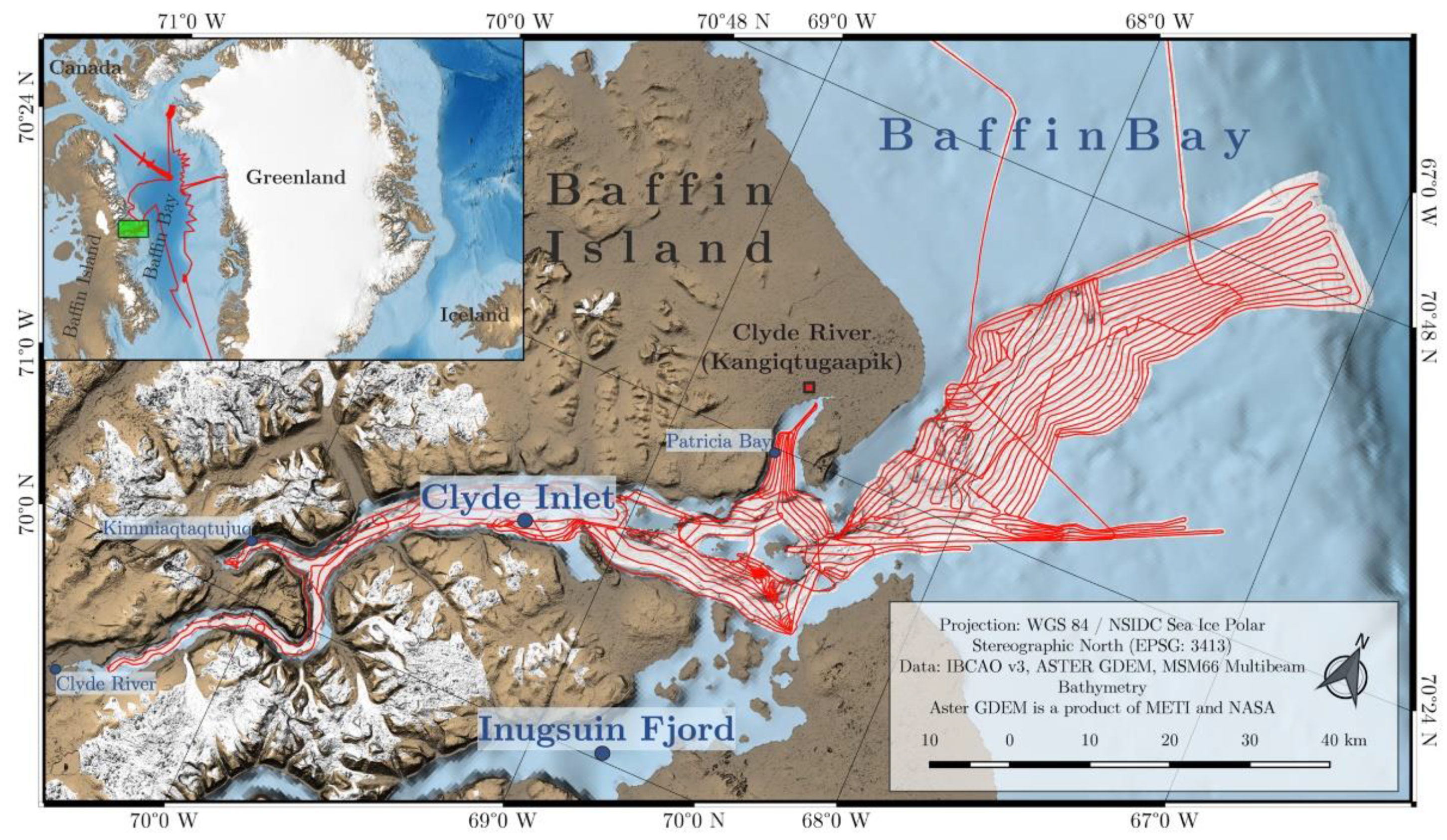

3.2. Area of Investigation and Materials

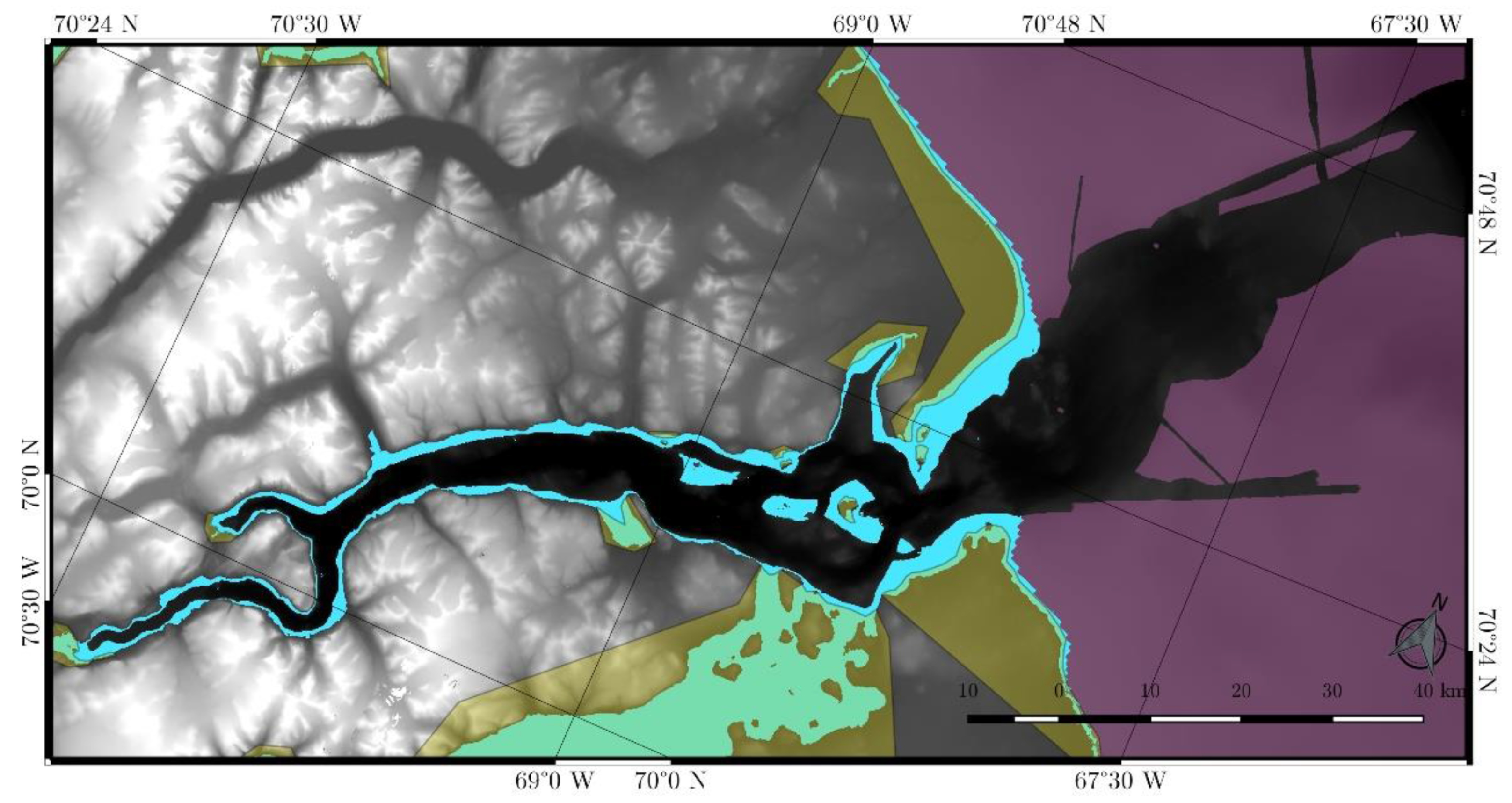

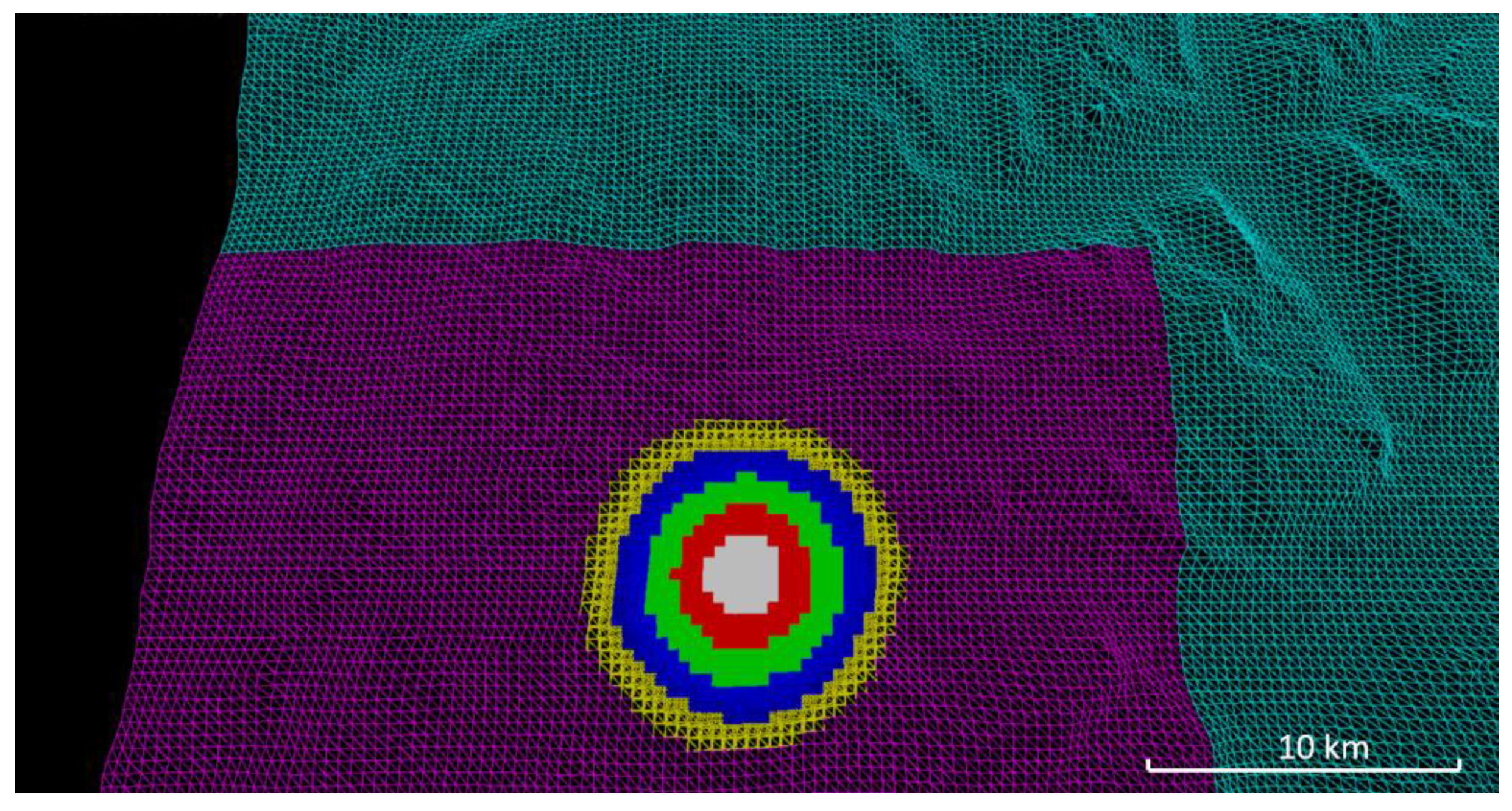

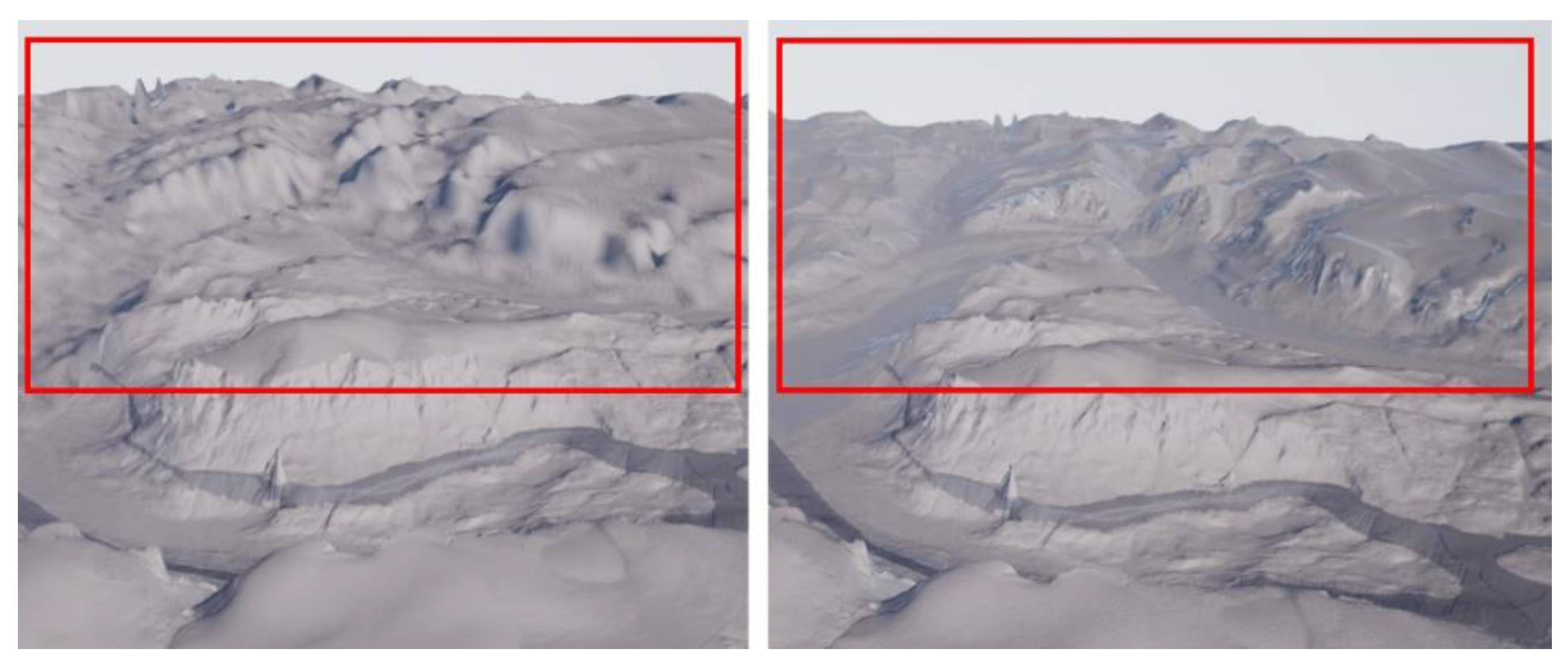

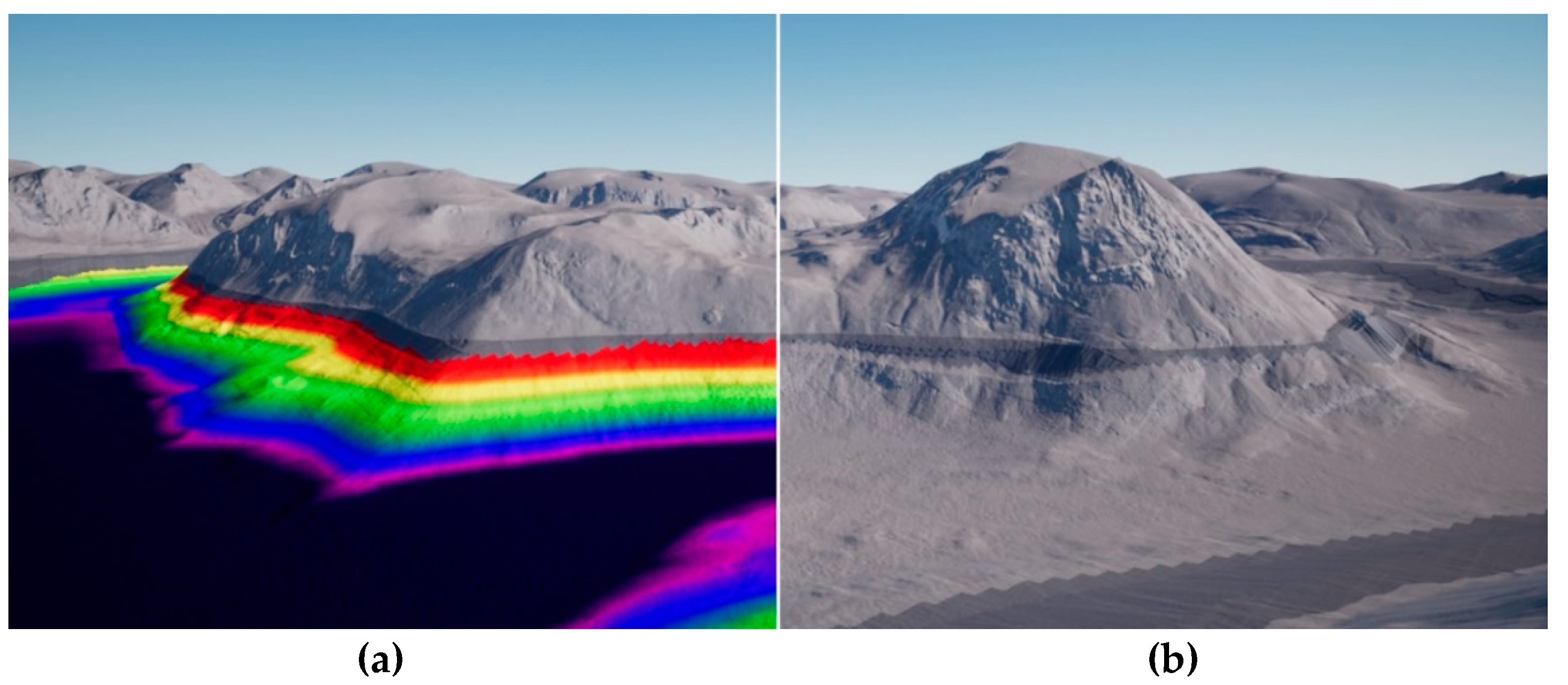

3.3. Data Processing and Generation of a Coherent Digital Elevation Model

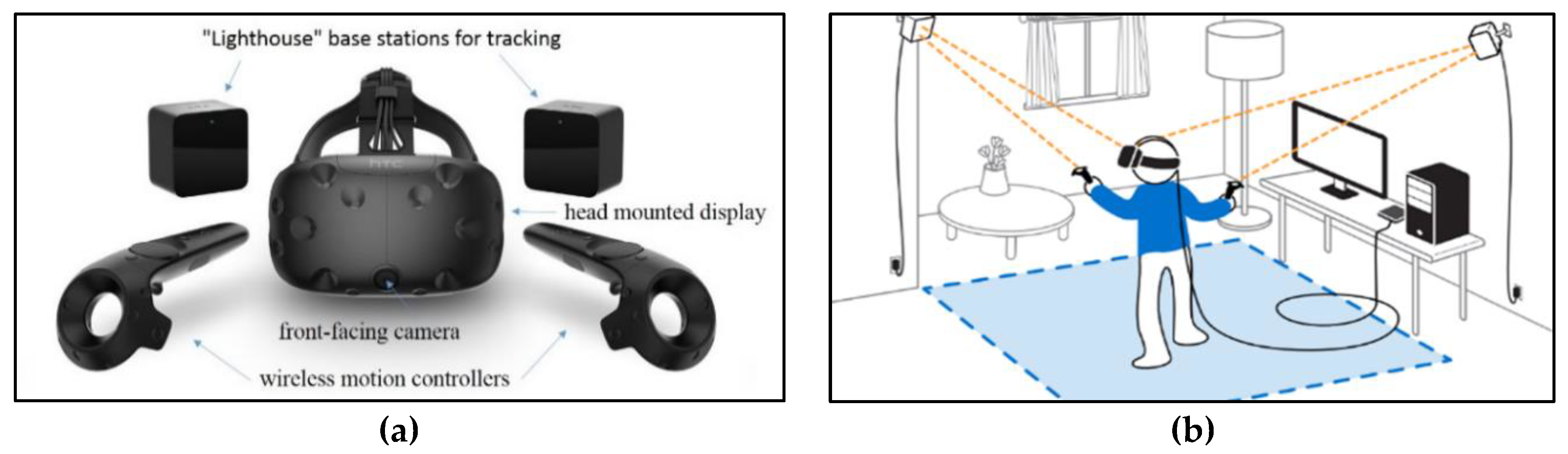

3.4. Unreal Engine and the HTC Vive VR System

3.5. Implementation into Virtual Reality

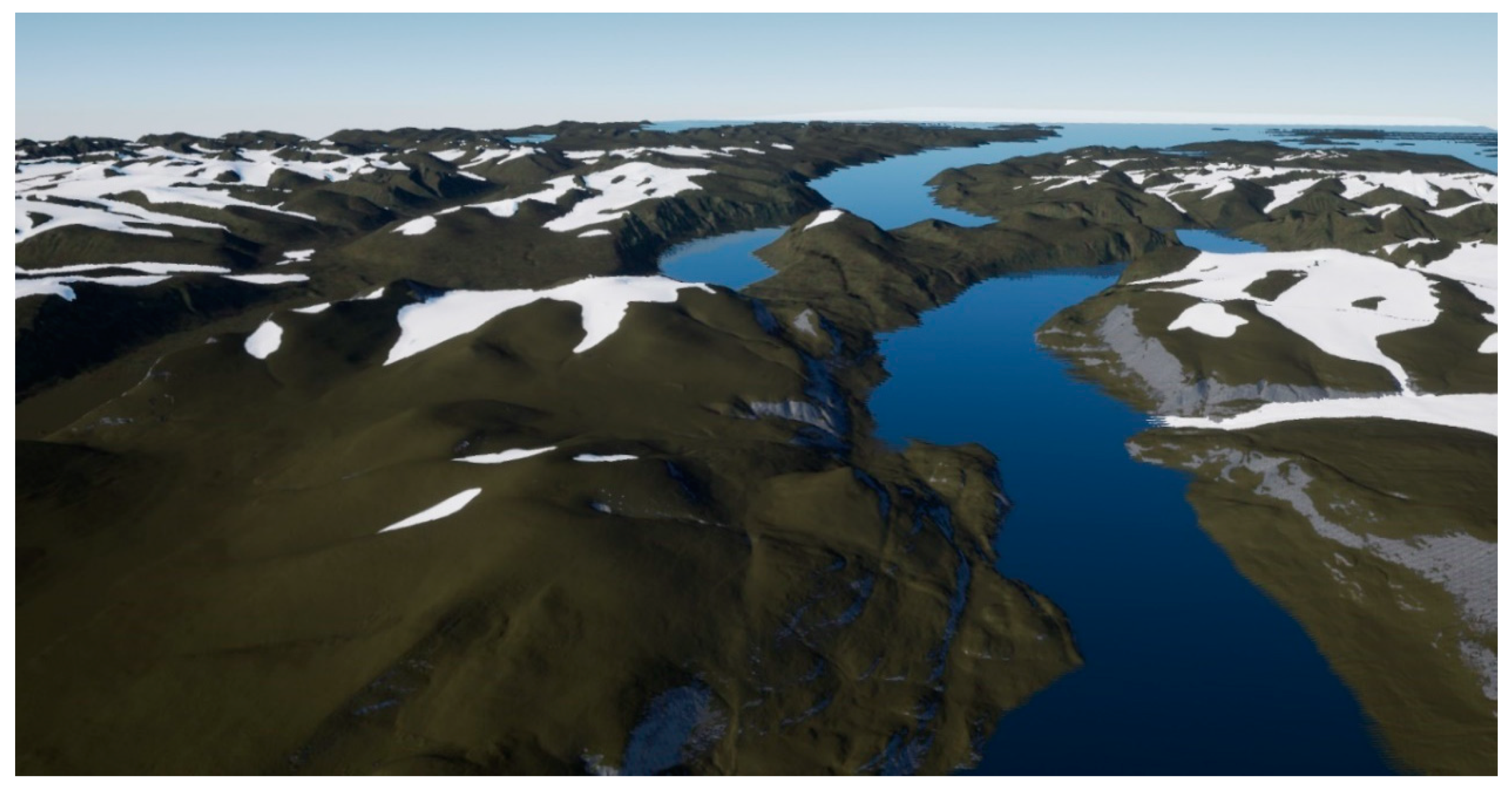

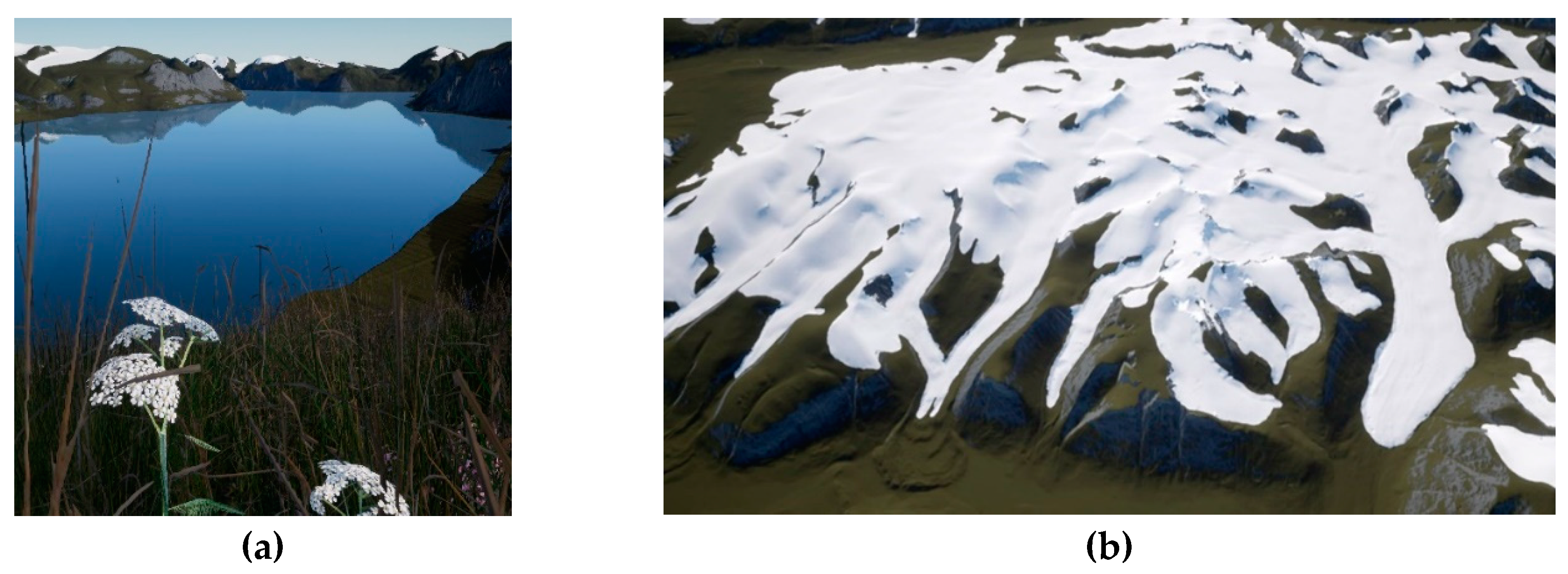

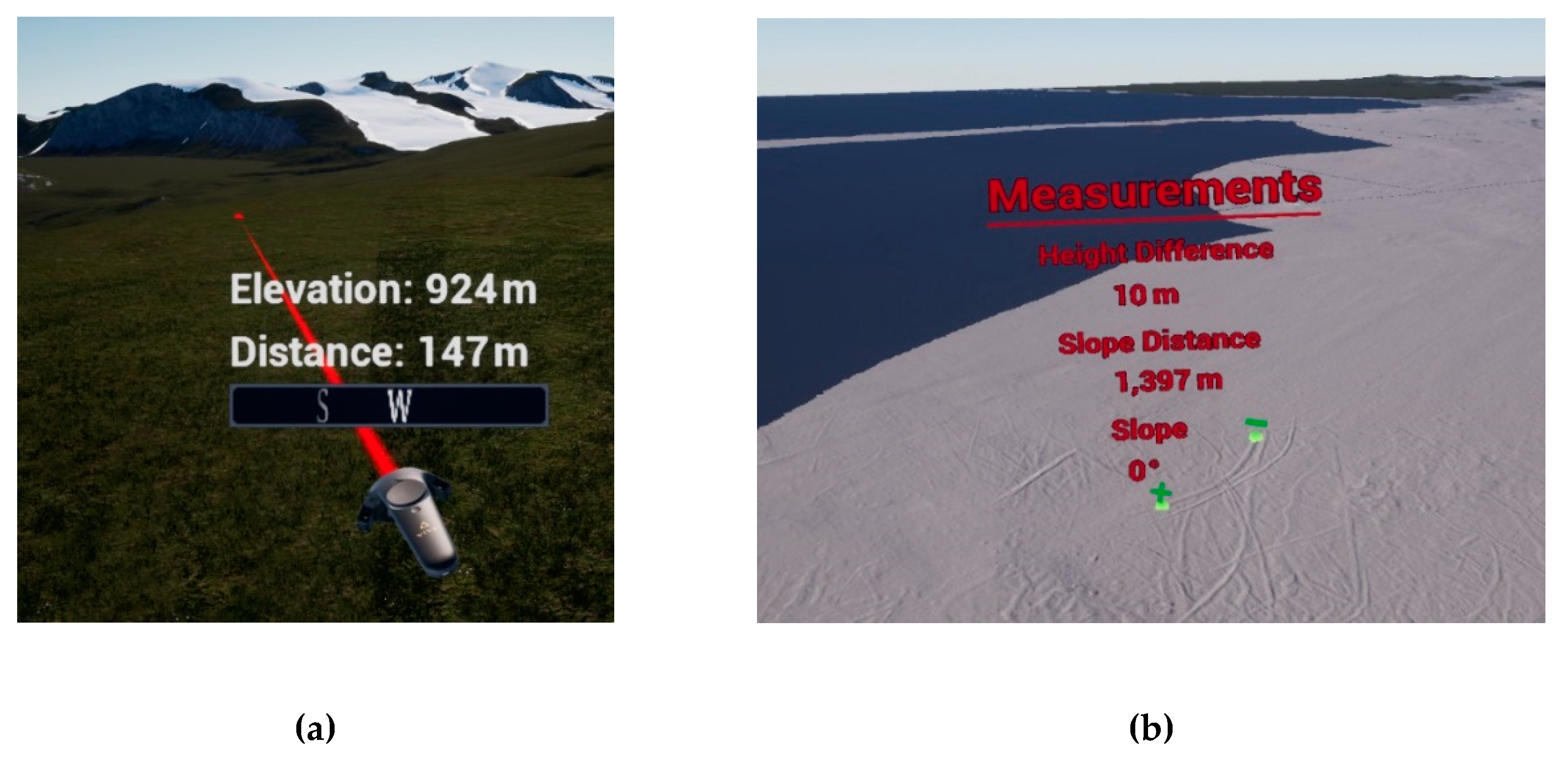

4. Results

5. Usability and Utility Assessment

6. Conclusions and Outlook

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Imhof, E. Kartographische Geländedarstellung; Walter de Gruyter: Berlin, Germany, 1965.

2. Zanini, M. Dreidimensionale synthetische Landschaften: Wissensbasierte Dreidimensionale Rekonstruktion und Visualisierung Raumbezogener Informationen. Available online: http://www.igp-data.ethz.ch/berichte/Blaue_Berichte_PDF/66.pdf (accessed on 9 January 2019).

3. Virtual Reality. Available online: https://www.dictionary.com/browse/virtual-reality (accessed on 5 December 2018).

4. Dörner, R.; Broll, W.; Grimm, P.; Jung, B. Virtual und Augmented Reality (VR/AR): Grundlagen und Methoden der Virtuellen und Augmentierten Realität; Springer: Berlin, Germany, 2014.

5. Freina, L.; Ott, M. A Literature Review on Immersive Virtual Reality in Education: State of The Art and Perspectives. eLearning & Software for Education. Available online: https://www.researchgate.net/publication/280566372_A_Literature_Review_on_Immersive_Virtual_Reality_in_Education_State_Of_The_Art_and_Perspectives (accessed on 9 January 2019).

6. Portman, M.E.; Natapov, A.; Fisher-Gewirtzman, D. To go where no man has gone before: Virtual reality in architecture, landscape architecture and environmental planning. Comput. Environ. Urban Syst. 2015, 54, 376–384.

7. Jerald, J. The VR Book: Human-Centered Design for Virtual Reality; Association for Computing Machinery and Morgan & Claypool: New York, NY, USA, 2016.

8. McCaffrey, M. Unreal Engine VR Cookbook; Addison-Wesley Professional: Boston, MA, USA, 2017.

9. Fuchs, P. Virtual Reality Headsets – A Theoretical and Pragmatic Approach; CRC Press: London, UK, 2017.

10. Gutiérrez, M.A.; Vexo, F.; Thalmann, D. Stepping into Virtual Reality; Springer: London, UK, 2008.

11. Kersten, T.; Tschirschwitz, F.; Deggim, S. Development of a Virtual Museum including a 4D Presentation of Building History in Virtual Reality. Inter. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 361–367.

12. Tschirschwitz, F.; Richerzhagen, C.; Przybilla, H.-J.; Kersten, T. Duisburg 1566–Transferring a Historic 3D City Model from Google Earth into a Virtual Reality Application. PFG J. Photogramm. Remote Sens. Geoinf. Sci. 2019, 87, 1–10.

13. Deggim, S.; Kersten, T.; Tschirschwitz, F.; Hinrichsen, N. Segeberg 1600–Reconstructing a Historic Town for Virtual Reality Visualisation as an Immersive Experience. Inter. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 87–94.

14. Kersten, T.; Büyüksalih, G.; Tschirschwitz, F.; Kan, T.; Deggim, S.; Kaya, Y.; Baskaraca, A.P. The Selimiye Mosque of Edirne, Turkey – An Immersive and Interactive Virtual Reality Experience using HTC Vive. Inter. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017.

15. Kersten, T.; Tschirschwitz, F.; Lindstaedt, M.; Deggim, S. The historic wooden model of Solomon’s Temple: 3D recording, modelling and immersive virtual reality visualisation. J. Cult. Herit. Manag. Sustain. Dev. 2018, 8, 448–464.

16. Mat, R.C.; Shariff, A.R.M.; Zulkifli, A.N.; Rahim, M.S.M.; Mahayudin, M.H. Using Game Engine for 3D Terrain Visualisation of GIS Data: A Review. Available online: https://pdfs.semanticscholar.org/3c03/9566fdf573dad91058c9bfab98f98a1c7e22.pdf (accessed on 9 January 2019).

17. Herwig, A.; Paar, P. Game Engines: Tools for Landscape Visualization and Planning? Available online: https://www.researchgate.net/profile/Philip_Paar/publication/268212905_Game_Engines_Tools_for_Landscape_Visualization_and_Planning/links/5464ab2b0cf2cb7e9dab8bc5.pdf (accessed on 9 January 2019).

18. Thöny, M.; Billeter, M.; Pajarola, R. Vision paper: The future of scientific terrain visualization. In Proceedings of the 23rd SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 3–6 November 2015.

19. Mat, R.C.; Mahayudin, M.H. Using Game Engine for Online 3D Terrain Visualization with Oil Palm Tree Data. J. Telecommun. Electron. Comput. Eng. 2018, 10, 93–97.

20. Wang, J.; Wu, F.; Wang, J. The Study of Virtual Reality Scene Making in Digital Station Management Application System Based on Unity3D. In Proceedings of the 2015 International Conference on Electrical, Computer Engineering and Electronics, Jinan, China, 29–31 May 2015.

21. Tsai, F.; Chiu, H.C. Adaptive Level of Detail for Large Terrain Visualization. Available online: http://www.isprs.org/proceedings/xxxvii/congress/4_pdf/103.pdf (accessed on 9 January 2019).

22. Jakobsson, M.; Mayer, L.; Coakley, B.; Dowdeswell, J.A.; Forbes, S.; Fridman, B.; Hodnesdal, H.; Noormets, R.; Pedersen, R.; Rebesco, M.; et al. International Bathymetric Chart of the Arctic Ocean (IBCAO) Version 3.0. Encyclo. Mar. Geosci. 2014.

23. ArcticDEM. Harvard Dataverse 2018. Available online: https://dataverse.harvard.edu/dataverse.xhtml?alias=pgc (accessed on 9 January 2019).

24. Government of Canada. Canadian Digital Elevation Model. Natural Resources Canada 2018. Available online: https://open.canada.ca/data/en/dataset/7f245e4d-76c2-4caa-951a-45d1d2051333 (accessed on 19 December 2018).

25. Lütjens, M. Immersive Virtual Reality Visualisation of the Arctic Clyde Inlet on Baffin Island (Canada) by Combining Bathymetric and Terrestrial Terrain Data. Master Thesis, HafenCity University, Hamburg, Germany, 2018.

26. O’Flanagan, J. Game Engine Analysis and Comparison. Available online: https://www.gamesparks.com/blog/game-engine-analysis-and-comparison/ (accessed on 27 January 2019).

27. Lawson, E. Game Engine Analysis. Available online: https://www.gamesparks.com/blog/game-engine-analysis/ (accessed on 27 January 2019).

28. Painter, L. Hands on with HTC Vive Virtual Reality Headset. 2015. Available online: http://www.pcadvisor.co.uk/feature/gadget/hands-on-with-htc-vive-virtual-reality-headset-experience-2015-3631768/ (accessed on 28 January 2019).

29. HTC Corporation. Vive PRE User Guide. Available online: http://www.htc.com/managed-assets/shared/desktop/vive/Vive_PRE_User_Guide.pdf (accessed on 6 December 2018).

30. Epic Games, Inc. World Composition User Guide. Available online: https://docs.unrealengine.com/en-us/Engine/LevelStreaming/WorldBrowser (accessed on 3 December 2018).

31. Den, L. World Machine to UE4 using World Composition. Epic Games, Inc., 2015. Available online: https://wiki.unrealengine.com/World_Machine_to_UE4_using_World_Composition (accessed on 4 December 2018).

32. RGI Consortium. Randolph Glacier Inventory – A Dataset of Global Glacier Outlines: Version 6.0. Available online: https://www.glims.org/RGI/00_rgi60_TechnicalNote.pdf (accessed on 9 January 2019).

33. HTC Corporation. SPECS & DETAILS. Available online: https://www.vive.com/uk/product/#vive-spec (accessed on 15 December 2018).

34. Lurton, X.; Lamarche, G.; Brown, C.; Lucieer, V.; Rice, G.; Schimel, A.; Weber, T. Backscatter Measurements by Seafloor-Mapping Sonars. Guidelines and Recommendations. 2015. Available online: http://geohab.org/wpcontent/uploads/2013/02/BWSG-REPORT-MAY2015.pdf (accessed on 5 December 2018).

| Data type | Source | Resolution |
|---|---|---|
| Bathymetric | Collected during cruise MSM66 in 2017 in collaboration with the Alfred Wegener Institute (AWI) | 5 m |
| Bathymetric | International Bathymetric Chart of the Arctic Ocean (IBCAO) [22] | 500 m |
| Terrestrial | ArcticDEM [23] | 2 m & 5 m |
| Terrestrial | Canadian Digital Elevation Model (CDEM) [24] | ~20 m |
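The sources in the table differ in grid spacing by more than two orders of magnitude (2 m for ArcticDEM versus 500 m for IBCAO), so they have to be brought onto a common grid before they can form one continuous surface. The Python sketch below illustrates one generic way to do this, a priority mosaic in which every cell takes its value from the finest-resolution source that has data there. It is an assumption for illustration, not necessarily the processing chain used in this work; the names `Source`, `upsample_nearest` and `priority_mosaic` are hypothetical, and `None` is assumed to mark no-data cells (for example, land cells in a sonar grid).

```python
from dataclasses import dataclass
from typing import List, Optional

# Elevation grid in metres; None marks "no data" (assumed convention).
Grid = List[List[Optional[float]]]


@dataclass
class Source:
    """Hypothetical container for one elevation source."""
    name: str            # illustrative label, e.g. "MSM66 multibeam"
    resolution_m: float  # nominal grid spacing of the original data
    grid: Grid           # values already resampled to the common target grid


def upsample_nearest(grid: Grid, factor: int) -> Grid:
    """Crude nearest-neighbour upsampling: repeat each cell factor x factor times."""
    out: Grid = []
    for row in grid:
        wide = [v for v in row for _ in range(factor)]
        for _ in range(factor):
            out.append(list(wide))
    return out


def priority_mosaic(sources: List[Source]) -> Grid:
    """For each cell, keep the value of the finest-resolution source that has data."""
    if not sources:
        raise ValueError("need at least one source")
    ordered = sorted(sources, key=lambda s: s.resolution_m)  # finest first
    rows, cols = len(ordered[0].grid), len(ordered[0].grid[0])
    for s in ordered:
        if len(s.grid) != rows or any(len(r) != cols for r in s.grid):
            raise ValueError(f"{s.name}: grid shape differs from the target grid")
    merged: Grid = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for s in ordered:
                value = s.grid[r][c]
                if value is not None:
                    merged[r][c] = value
                    break  # finer source found; ignore coarser ones
    return merged


if __name__ == "__main__":
    # Toy 2 x 2 target grid. A factor of 2 keeps the example small; matching
    # a 500 m cell to a 5 m grid would need a factor of 500 / 5 = 100.
    coarse = Source("coarse bathymetry", 500.0, upsample_nearest([[-300.0]], 2))
    fine = Source("fine multibeam", 5.0, [[-312.5, None], [None, -298.0]])
    print(priority_mosaic([coarse, fine]))  # [[-312.5, -300.0], [-300.0, -298.0]]
```

Nearest-neighbour replication keeps the coarse values unchanged but leaves visible steps along the 500 m cell edges and at the boundary between fine and coarse data; in practice a smoother interpolation (such as bilinear) and some blending along such seams would likely be preferable.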

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lütjens, M.; Kersten, T.P.; Dorschel, B.; Tschirschwitz, F. Virtual Reality in Cartography: Immersive 3D Visualization of the Arctic Clyde Inlet (Canada) Using Digital Elevation Models and Bathymetric Data. Multimodal Technol. Interact. 2019, 3, 9. https://doi.org/10.3390/mti3010009
