1. Introduction: An Affordable World

In 1966, the psychologist James Gibson introduced a new concept [1], “affordances”, to define an action-centric perspective on the visual perception of the external environment. His best-known definition is taken from his seminal 1979 book, The Ecological Approach to Visual Perception [2]: “The affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill. The verb to afford is found in the dictionary, the noun affordance is not. I have made it up. I mean by it something that refers to both the environment and the animal in a way that no existing term does. It implies the complementarity of the animal and the environment”.

As it became a fundamental concept over the following decades, a vast literature about its meaning has been produced [3,4,5,6]. It is beyond the scope and aims of this paper to discuss in detail the very notion of affordance; however, we do explain in which context we apply it, and what the special value of our model is. Specifically, here we investigate how the concept of affordances, which was originally formalised in the context of visual perception of action-related properties of objects and spaces, can relate to emotions, and how it could be used in Human-Robot Interaction (henceforth, HRI) scenarios. Indeed, the use of affordances in HRI can facilitate social and functional interactions between humans and robots or, more generally, between humans and machines (see for example [7,8]). Moreover, emotions are a fundamental cognitive and social aspect of human life [9,10] and their value has also been considered of the utmost importance for the design of artificial cognitive architectures [11].

Through previous research on emotional modelling [12,13,14] we have contributed to the design of a multimodal model for the use of emotional affordances in HRI [15,16]. Most of the HRI literature that includes emotional affordances is biased in two important respects: first, it maintains an over-simplistic emotional mapping based on Ekman’s six emotions [17,18], and second, it defends an egocentric spatial imagery, which involves a self-to-object representational system. Most current studies on robotics and emotions are based on very basic aspects of emotional cues, mainly visual ones (and on a one-to-one direct connection with Ekman’s thesis). Instead, here we want to consider the temporal nature of emotional modulations as well as the multidimensional elucidators of emotional values. At least two works are worth mentioning in this regard: Breazeal’s emotional model for the robot Kismet [19] and the emotional model used for the robot WE-4 [20]. Both rely on a model based on three dimensions; while those are interesting approaches, we go beyond that architecture, extending it to the concepts of affordances and learning.

We have previously discussed some of the issues related to emotions [21,22], and with this paper we extend those discussions and provide a new way to consider emotional affordances in HRI. In our previous research we studied how to identify a fundamental tree of basic emotions and how this mapping can be transformed into a dynamical semantic model, which includes temporal variations and a “logic of transitions” (that is, a way to model mood changes that look realistic for average humans). The increased complexity of such a model, together with the affordance approach, has helped us to define the parameters that must be considered for complex HRI modelling.

In the present manuscript, we propose a viable model for implementation into HRI. We implement the case of 2-way affordances:

In order to make the implementation generic and adaptable, the proposed model encapsulates a library that can be customised through robot-specific and scenario-specific tunable parameters. These parameters have different initializations depending on the situation, and are also adapted online based on the feedback that the robot receives during its interaction with the environment and with the human users.

The rest of the manuscript is structured as follows: in Section 2, we introduce the background and the concepts related to affordances; in Section 3 we describe the model, and we then show some examples of numerical simulation in Section 4. In Section 5 we discuss possible application scenarios, and in Section 6 we summarise and conclude the paper.

2. Background

In the following subsections we motivate our attempt to integrate the concepts of emotions and affordances for a successful HRI, and we outline the fundamental aspects that characterise our model.

2.1. The Grounded Nature of Emotional Syntax

Not only do emotions lie at the basis of any informational-cognitive event, but they also become a mechanism to manage internal data as well as to share information with other agents. In that sense, emotions are embodied [23] and are the result of a natural evolutionary process that has increased survival ratios among those entities which use them, because of the greater adaptive skills they make possible [24,25,26]. This embodied nature of emotions also affects the way in which we perceive and construct our emotional experiences [27].

Notably, Darwin [28] was the first to think about the expression of emotions in humans and animals from an evolutionary and naturalistic perspective. The idea is simple: the arousal of emotions provides information to other beings about our internal states and helps to synchronise possible joint actions. Indeed, people locate and identify health states thanks to emotional awareness [29]. People with autism or alexithymia, for example, are poor at identifying not only others’ emotions but also their own, and as a consequence they are not able to identify their own health problems [30,31]. Thus, introducing a functional perspective, emotions serve as identifiers and labellers of informational states in individual as well as social contexts. For that reason, emotions are cues for understanding the state and intentions of an agent. Until now, most roboticists and engineers have followed what we will call the “Skinnerian approach to emotions”. Due to the complexity of understanding the nature of emotions and the myriad of divergent emotional models (and, obviously, the impossibility at this historical point of having truly emotional machines [11]), most experts have restricted their interest to the observable external aspects of emotions. Despite the utility of such an over-simplistic approach for HRI or HCI, this Skinnerian approach blinds systems to the grounded causes that connect emotional states and at the same time manage their temporal syntax. The emotional dysfunction of our intelligent systems is deep, because it is necessary not only to capture basic external emotional signals, but also to connect them in order to create a map of the grounded nature that produces them. Our empathy is not only a moral value but also a sensorimotor mechanism to copy actions and learn by imitation [32,33].

2.2. Affordances and Emotions

Although this paper does not aim to revisit the long debates on the meaning of ‘affordance’ (see [6], and [34] for a recent survey on affordance-related studies in different disciplines), let us consider a general definition: an affordance is a relation between an informational event (objects, environments, even virtual or imaginary things) and an organism which, through the related stimuli and their heuristic processing, affords the opportunity for that organism to perform an action. Put simply, it is the availability of possible uses of an object to an entity, as a cord affords pulling. Following Gibson’s original usage [3], affordances are resources that an animal uses for some purpose. Thus, it is the animal that perceives something as an affordance, something ‘useful for’ or ‘interesting to’, and the affordance has an ecological nature. Although the performer of the experience creates the use, it is also true that the correlated nature of the object/environment together with the expectations, skills and intentionality of the user (both actual and perceived properties) is what makes the emergence of affordances possible.

Notably, emotions set the context for action selection, hierarchically ordered following biological priorities [35,36]. In this sense, as stated in [37], emotions lie at every core goal of a living entity. Emotions are then not just external behavioural actions that need to be scanned and interpreted for the secondary purpose of analysing human actions. Such a view would justify an over-simplistic Skinnerian approach to emotional expression (while the semantic values that produce emotional expression would remain hidden). In order to avoid such bias in our models (as a result of embodied and/or social neglect), we need to give emotions a more fundamental role: to be the basic indicators of the deep architecture and motivations that regulate human activities. Emotions ground and regulate any kind of informational interaction with the environment. Consequently, any approach to cognitive systems must consider them not only because of their plain behaviourist outcome, but also for their role in the design of action responses.

Therefore, from a functional perspective, we can say that emotions are affordances, or that the process of feeling emotions is related to that of perceiving affordances, since both processes modulate the immediate sensing and prepare the agent for some possible future actions (e.g., the visual and/or olfactory perception of a ripe fruit generates a joyful feeling, and suggests the action of eating, but also the production of a smile). The evolutionary role of such affordances is obvious: the cry of a newborn gives instructions of care to her parents, and the fear reaction to a threat activates fight-or-flight individual and collective responses.

2.3. Emotional Affordances of Objects

Objects hold emotional values, thanks to a transfer process carried out by human agents [38]. During this process humans even add and transfer anthropomorphic evaluations to objects (and to robots) [39,40,41]. For this reason, any serious emotional affordance library should include not only human bodily aspects related to emotional activities, but also a way to connect emotional values to the actions through which humans tag those objects with emotional values. Therefore, our model includes (see Figure 1 and Figure 2) the capture and combined evaluation of objects and emotional expressions, using a global multimodal approach, which is related to an allocentric analysis of human agency. This is very useful: imagine a care robot providing support to an elderly woman with dementia; she can react unexpectedly with fear towards an object that was not previously considered dangerous, for example a pillow. The ability of the robot to identify a neutral object as being considered harmful by the patient is key to the correct management of the patient’s emotional equilibrium. This transfer of emotional affordances is, then, a basic mechanism that most of the time remains invisible to us because it is shared automatically by a community of users.

2.4. Physical Affordances of Emotions

This is the classic approach to emotional analysis: to consider faces and other related basic bodily expressions of emotions [28,42,43]. Regardless of the debates about the universality or cultural dependence of emotion elicitation, the basic consideration with respect to these approaches is to understand how they capture external emotional expressions and connect them by linear models to emotional states. In our model we use them as well, but as part of complex behavioural and dynamical contexts. A Skinnerian approach to emotions (one-to-one direct relationships between expressions and emotional descriptions) does not provide the best model for a complete HRI process: the robot must be able to understand the natural temporal and emotional transitions between moods and how the emotional states follow a coherent and even predictable dynamical evolution. There are fundamental biochemical mechanisms that explain these modulatory processes, such as neuromodulatory ones [44]: in our robotic model we do not need a detailed representation of the physical mechanisms that make emotions possible in humans, but we do include the dynamical analysis of emotional variations to support natural and effective HRI.

2.5. Allocentric Affordances

Although it is true that an affordance is processed by an agent inside an ecological niche, it has been a common bias in the HRI literature to focus only on an egocentric (subject-object) spatial analysis, mainly because of the restrictions of laboratory experiments. Real human actions are performed in rich environments, which involve object-to-object spatial relationships as well, and therefore present the need to encode information about the location of one object or its parts with respect to other objects (i.e., allocentric spatial analysis). This is clear when we analyse cultural psychology studies in which the visualization patterns of different communities were investigated [45,46]. It is in this enriched social scenario that emotional affordances are processed, and because of it we can understand how HRI can be manipulated in order to obtain agents’ responses (which are not purely automated) [47]. We use the “allocentric” definition following the studies on the anthropology of cognition, which have shown that humans think following cultural patterns that include individualistic vs. collective views [48,49,50]. This can also be defined as a community-minded process and offers interesting tools when we try to explain spatial processing. It is not just an automated process, but relies on linguistic anchors and cultural metaphors on space and time, and it even affects visual cognition.

2.6. Multimodal Binding

Consequently, our ontological approach includes (See

Figure 1 and

Figure 2) an affordance phase in which the perceptual stimuli related to either objects or emotions are processed through separate streams, that are then merged together in the appraisal phase. Both streams are intrinsically multimodal, i.e., they include visual, auditory and tactile information. For the objects, we consider a multimodal classification process, that can be realized by extending classic visual object recognition and classification approaches [

51] with additional sensory inputs (e.g., speech/sounds, tactile) processed by multimodal machine learning techniques [

52]. For the emotions, beyond the over-simplistic Ekman’s model, we suggest a more precise Plutchik’s wheel [

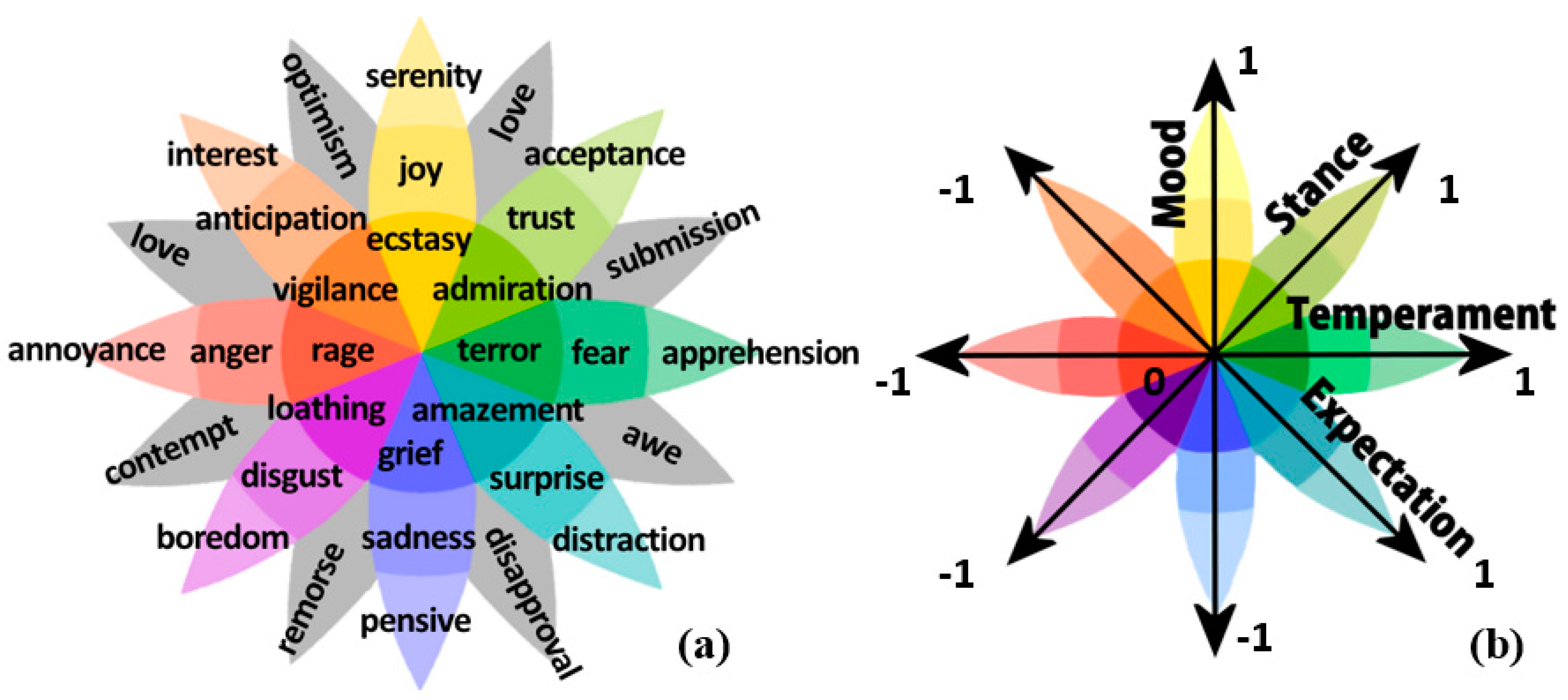

53], that enables a more comprehensive mapping in which emotions are also classified by intensity. Thanks to an allocentric perspective, both input streams can be combined (in the appraisal phase) to obtain reliable information about the emotional value of the currently perceived context.

2.7. Dynamic Processing and Libraries

A fundamental aspect of our model is the dynamical nature of the libraries, which must combine departing emotions with the related concluding actions. Combining parametric emotion recognition with the value of the object inside a taxonomy of recognised objects provides a dyadic affordance appraisal mechanism: first, the output performance module is able to connect variable and dynamic emotional states inside a holistic framework in which the answer is also emotionally valued; second, the motor tasks can be calculated according to possible aims.

2.8. A Computational Model for Effective Emotional Binding in HRI

Therefore, summarising our main contributions, the allocentric, multimodal, and dynamic nature of our model makes a real emotional binding between robots and humans possible, because enriching the variables and the mechanisms that rule them (i.e., emotional affordances) makes it possible to define a richer model for the capture of human interactions that can be shared with robotic devices. Social robots that interact with humans (who in turn are also socially bonded with other humans) need to understand not only the emotional data from human beings but also how to connect them with the affordability of their available set of objects and the expected value of their related final actions. The arousal phase is also part of the planning of the expected outcome to be performed, because emotional processes imply not only the immediate physical response but also the evaluation of the elicitation mechanisms that explain and attribute meaning to agents’ actions. The term arousal here refers to the triggering process, rather than to a “level of activation” of a certain emotional state, as it is sometimes used in other models [19].

The main difference between our approach and most previous emotional HRI systems is that our model provides a multimodal and allocentric (holistic) way to capture emotional affordances. This allows a robot, for example, to understand not only the utility of a tool, but also how this tool is perceived (both emotionally and functionally) by one user, with mappings that can be dynamically adapted through time. A medical pediatric robotic assistant should be able to make diagnoses as well as to provide emotional support to patients; these patients can be scared by the absence of their parents, the disgusting taste of medicines, or the fear of needles, to name some examples, and such a robot should be able to understand and assist humans throughout this process of bodily and spiritual (psychological) care. There are ways to help children accept such treatments without causing unnecessary pain. Similar global approaches could be applied to terminal care robots or elderly care robots: robots that can understand the emotional value of things and events, besides simply scanning bodily affordances.

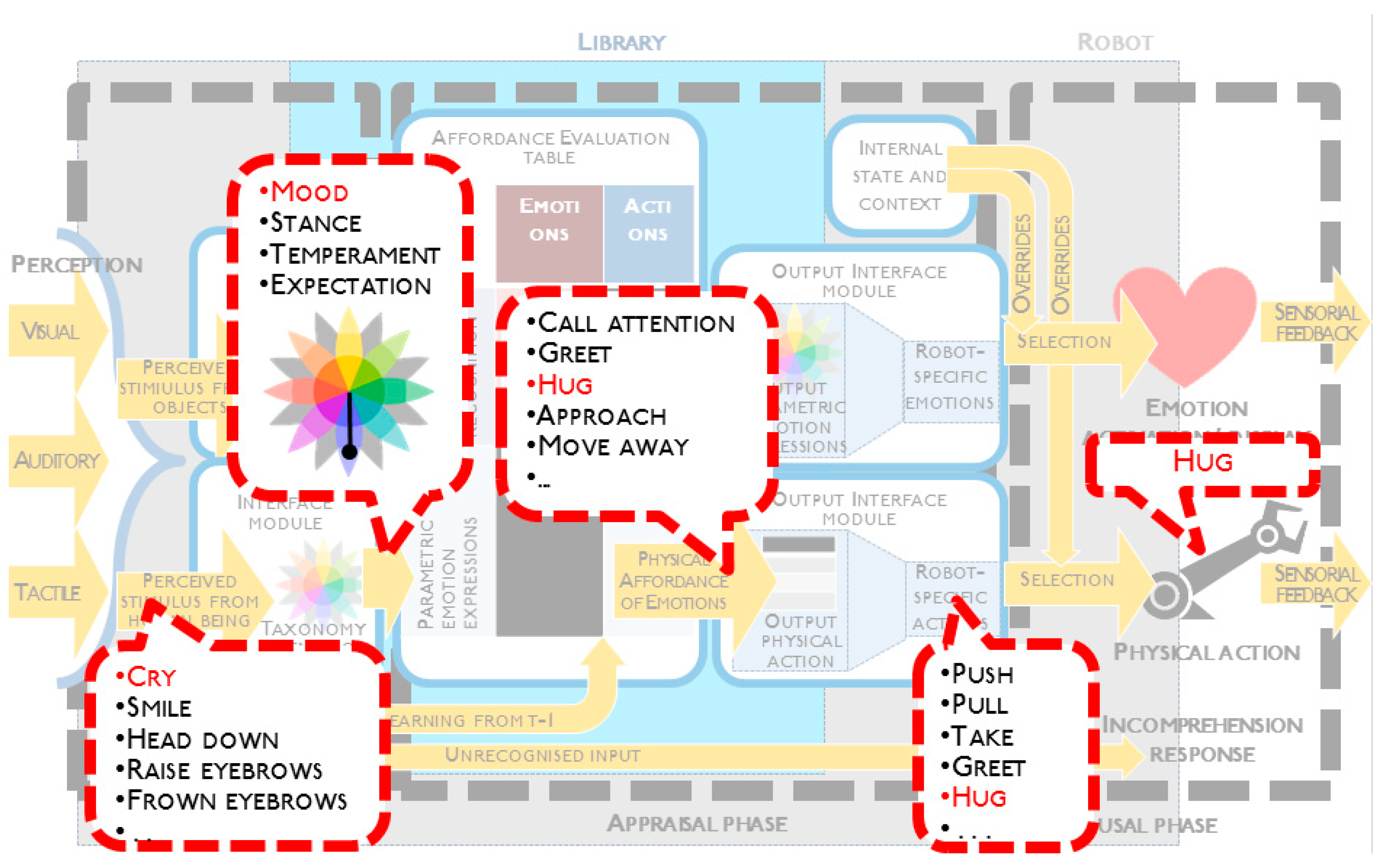

3. AAA Model

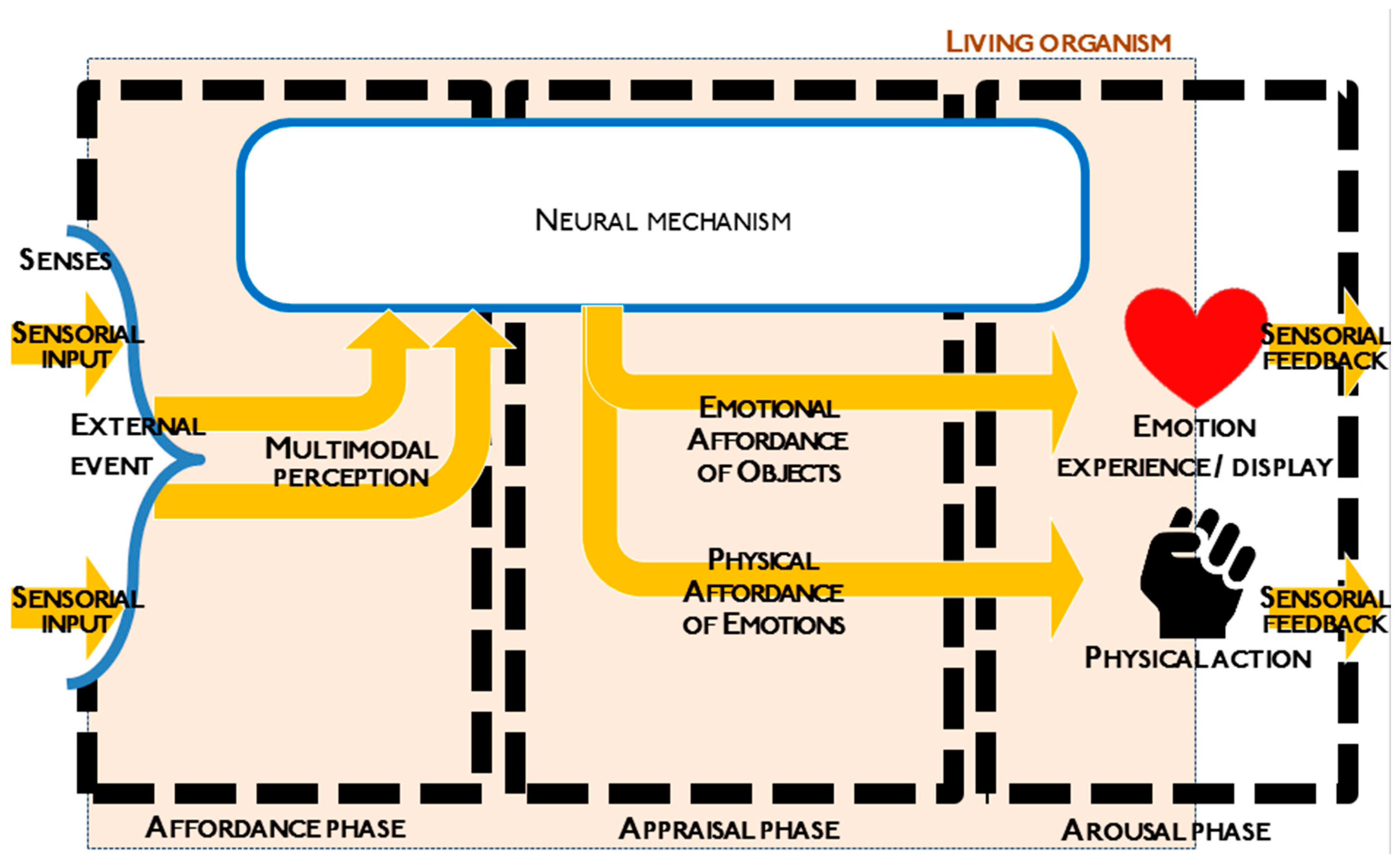

We introduce the AAA (Affordance-Appraisal-Arousal) model, which generalises several mental processes happening in humans, and translates them to the case of robots. These three phases happen sequentially in the process that goes from perception to behavioural response. In Figure 1, the perceived sensorial feedback goes through a neural mechanism that generates affordance perception. Since the detailed description of the neural mechanisms that produce affordance perception in the living organism’s brain is not in the scope of this research, we leave it as a black box, of which we know the inputs (some multimodal perception) and the possible outputs (an emotion display or a physical action, suggested by the affordance mechanism).

In Figure 1, the boundaries of the human or of the living organism are marked by the pink box. Outside it, we can observe the external events and the feedback to those events. The diagram is divided into three phases, namely Affordance, Appraisal and Arousal.

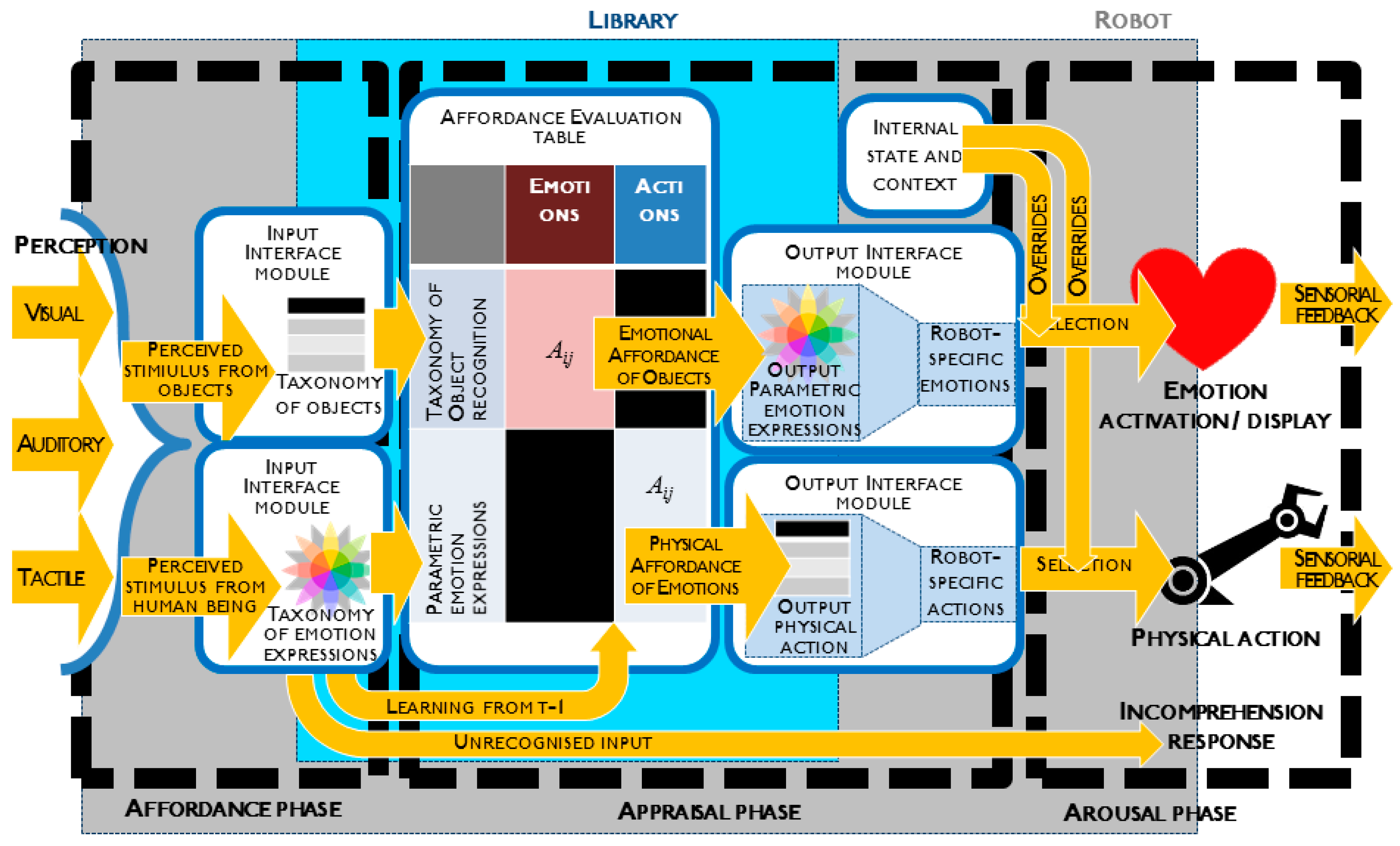

For robots, we apply the same structure, except that the area marked by the light blue box in Figure 2 consists of the components of the library, which interface with the robot (in dark grey).

In the following subsections, we describe the content of the modules shown in Figure 2.

3.1. Perception

Robots typically sense the environment through different sensory channels, most commonly visual, auditory and tactile. The visual channel is the one most commonly associated with affordances [54,55]. In our model, we examine the generic case of a multimodal input, without entering deeply into the analysis of problems such as symbol emergence [56], grounding [57] and anchoring [58]. These problems refer to the processes by which symbols (such as words) are created in the first place (emergence), acquire meaning (grounding), and are then attached to specific entities perceived in the world (anchoring), and to how this is done by a consciousness. Obviously, there is not a single conscious robot, and our mechanistic understanding of human consciousness is still not even partially complete, but robots can acquire or create meaning using predefined heuristics and (large) sets of data. Here, we assume that a generic robot is able to perceive the environment and classify a certain number of stimuli. Our intention is to justify a more ambitious ontological classification, in which we divide these stimuli into two broad categories: objects and humans. In the latter case, we focus specifically on human emotion expressions. This combination provides a richer framework that makes it possible not only to analyze existing emotions but also to create semantic connections between animate and inanimate entities, imitating a fundamental human skill. This allows the robot to manage personal semantic meanings that work in different linguistic or expressive contexts.

3.2. Input Interfaces

The possible inputs that the robot can recognise must be interfaced with the taxonomies in the library. This mapping is left here as a stub (in software development it is a piece of code used to stand in for some other programming functionality), containing some simple mapping of inputs associated with the library counterpart. The mapping can be customised. There are two interfaces: one for the objects, one for human expressions.

In the former case, the mapping is made by simple correspondence operated by the function f on the raw input. For example:

“cup” -> “cup” (direct correspondence)

“mug” -> “cup” (when the library taxonomy only has a similar entry)

“wrench” -> null (when the library taxonomy does not have such entry)

null -> “spoon” (when the robot does not have the ability to recognise an object, such as a spoon, that is in the library)

The class memberships to each label, in the form of an array, get copied when there is a correspondence, or set to zero in case of null correspondence. The resulting data structure is also an array, which contains the class memberships to the labels of the objects available in the library. Of this vector, only the element with the maximum value is kept, and the rest are set to 0 (Equation (1)), resulting in the filtered input (Equation (2)).
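As an illustration, the object interface described above can be sketched in a few lines of Python. This is a minimal sketch, not the library’s implementation: the names `LIBRARY_LABELS`, `LABEL_MAP` and `filter_objects` are hypothetical, and the rule for two robot labels that map to the same library entry (keep the larger membership) is an assumption that the text does not specify.

```python
from typing import Dict, List, Optional

# Hypothetical library taxonomy (labels taken from the examples in the text).
LIBRARY_LABELS: List[str] = ["cup", "spoon"]

# Hypothetical, customisable mapping from robot labels to library labels.
# None marks a robot label that has no counterpart in the library.
LABEL_MAP: Dict[str, Optional[str]] = {
    "cup": "cup",    # direct correspondence
    "mug": "cup",    # the library only has a similar entry
    "wrench": None,  # the library has no such entry
}


def filter_objects(raw: Dict[str, float]) -> List[float]:
    """Map raw class memberships onto the library labels and keep only the maximum."""
    mapped = [0.0] * len(LIBRARY_LABELS)
    for label, membership in raw.items():
        target = LABEL_MAP.get(label)
        if target is None:
            continue  # null correspondence: the membership is dropped
        idx = LIBRARY_LABELS.index(target)
        # Assumption: when several robot labels share one entry, keep the largest.
        mapped[idx] = max(mapped[idx], membership)
    # Keep only the highest value; every other element is set to zero.
    best = max(range(len(mapped)), key=lambda k: mapped[k])
    return [value if k == best else 0.0 for k, value in enumerate(mapped)]


filtered = filter_objects({"mug": 0.7, "wrench": 0.9, "cup": 0.2})
print(filtered)
```

In this sketch, “spoon” always stays at zero because the robot has no label that maps to it, which corresponds to the last of the four mapping examples.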

In the case of human expressions, the process is slightly more complicated, as it involves the use of an emotional model to parameterise the emotional content. For this purpose, we use Plutchik’s Wheel of Emotions [22], shown in Figure 3a. Plutchik’s model is particularly convenient because it features emotions that are specular: anger is the opposite of fear; disgust is the opposite of trust, and so on. In previous research [59], each of these emotion pairs was referenced by an axis. By using 4 axes (one for each pair of leaves of Plutchik’s Wheel, as in Figure 3b), the emotion can be parameterised. The four axes are called Mood, Stance, Temperament and Expectation, consistently with [59].

The interface for human emotion expressions therefore contains a mapping p between the raw input expressions and 4 parameters, each one bounded between −1 and 1 (Equation (3)).

The mapping does not convert all the inputs into emotions: it is executed only on the input with the maximum value among those which have a correspondence in the library.
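The expression interface can be sketched in the same spirit. The snippet below is only an illustration under stated assumptions: the table `EXPRESSION_MAP`, its numeric values and the sign convention of each axis are placeholders (which emotion pair sits on which axis follows [59] and is not reproduced here), and `parameterise` is a hypothetical name for the mapping p.

```python
from typing import Dict, Optional, Tuple

# (mood, stance, temperament, expectation), each bounded between -1 and 1.
Params = Tuple[float, float, float, float]

# Hypothetical mapping p from expression labels to the four parameters.
# The numbers and sign conventions are placeholders, not values from the paper.
EXPRESSION_MAP: Dict[str, Params] = {
    "smile": (0.6, 0.2, 0.0, 0.0),
    "cry": (-0.8, 0.0, 0.0, 0.0),
    "frown eyebrows": (0.0, -0.2, 0.5, 0.0),
}


def clamp(value: float) -> float:
    """Bound a parameter between -1 and 1."""
    return max(-1.0, min(1.0, value))


def parameterise(raw: Dict[str, float]) -> Optional[Params]:
    """Apply p only to the strongest input that has a correspondence in the library."""
    known = {label: m for label, m in raw.items() if label in EXPRESSION_MAP}
    if not known:
        return None  # nothing matches the library
    strongest = max(known, key=lambda label: known[label])
    mood, stance, temperament, expectation = EXPRESSION_MAP[strongest]
    return (clamp(mood), clamp(stance), clamp(temperament), clamp(expectation))


params = parameterise({"cry": 0.8, "smile": 0.1, "wrath": 0.95})
print(params)
```

Here “wrath” has the highest raw membership but no entry in the hypothetical table, so the mapping is executed on “cry” instead.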

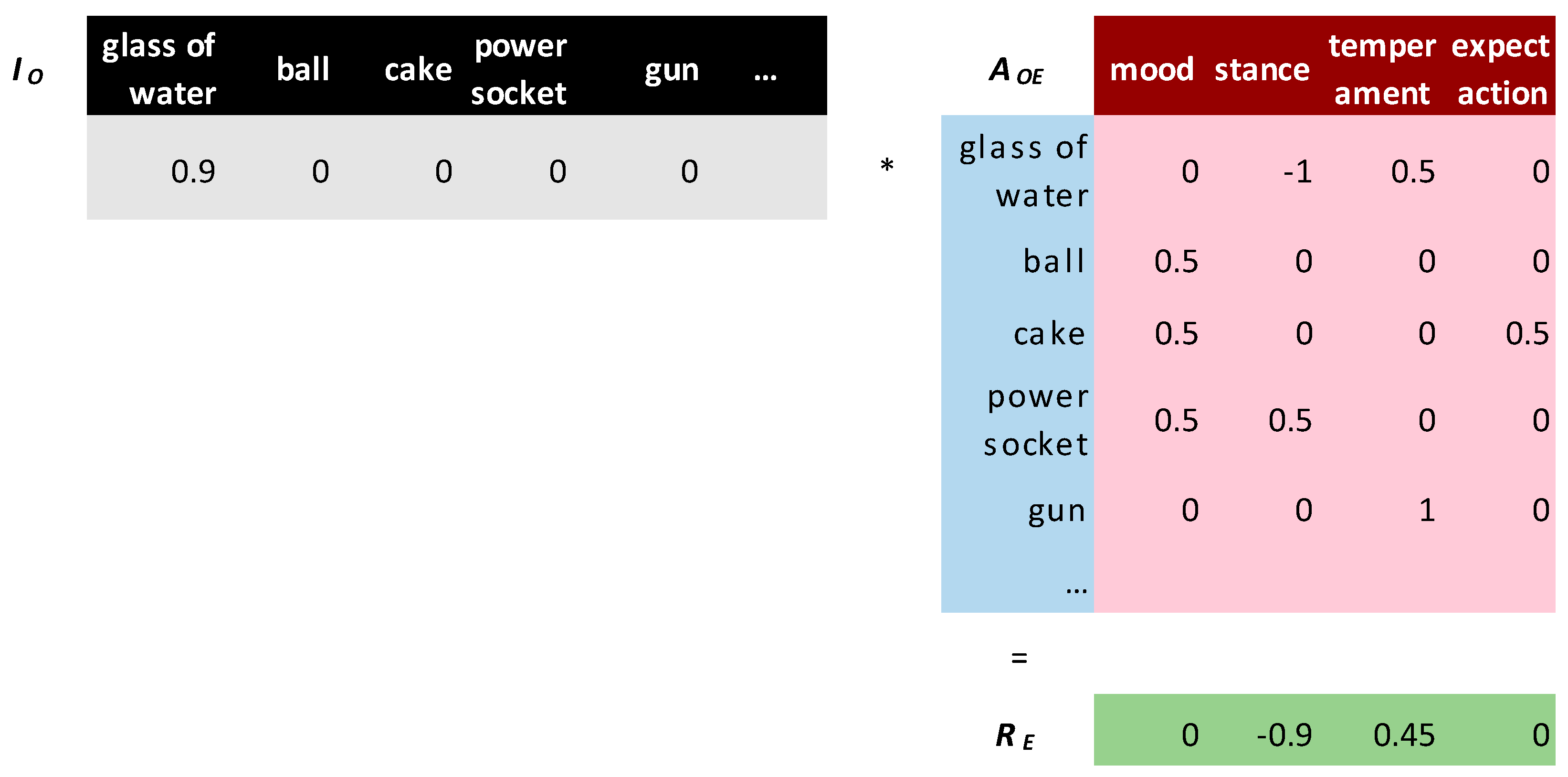

3.3. Affordance Evaluation Table

In Table 1, we examine the four partitions of the Affordance Evaluation Table, case by case.

Case A: from an input consisting of the perception of an object, the emotional affordance selects a suggested emotion as the product of the input vector and a mapping matrix, where the input is the row vector of the n object recognition class memberships; the mapping is an n × 4 matrix; and the output is a row array of the 4 Plutchik parameters. This approach is similar to the sensing personality table made for the robot WE-4 [20], which contained a multimodal input of stimuli and their association with positive, neutral or negative activation, pleasantness and certainty.
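Numerically, Case A amounts to a row vector multiplied by an n × 4 table. The following sketch shows the shape of that computation only: the object labels are borrowed from the sample operation in Section 4, while the table values and the names `OBJECT_TO_EMOTION` and `vec_mat` are hypothetical.

```python
from typing import List


def vec_mat(vec: List[float], mat: List[List[float]]) -> List[float]:
    """Multiply a row vector (length n) by an n x k matrix, giving a row vector (length k)."""
    columns = len(mat[0])
    return [sum(v * row[c] for v, row in zip(vec, mat)) for c in range(columns)]


OBJECT_LABELS = ["glass of water", "ball", "gun"]

# Hypothetical n x 4 table: one row per object, one column per parameter
# (mood, stance, temperament, expectation). The values are illustrative only.
OBJECT_TO_EMOTION = [
    [0.2, 0.1, 0.0, 0.0],      # glass of water
    [0.5, 0.0, 0.0, 0.25],     # ball
    [-0.5, -0.25, -0.75, 0.0], # gun
]

# Filtered input: only the strongest object keeps a non-zero membership.
filtered_input = [0.0, 0.8, 0.0]

suggested_emotion = vec_mat(filtered_input, OBJECT_TO_EMOTION)
print(suggested_emotion)
```

Because the filtered input has a single non-zero element, the output is simply the corresponding row of the table scaled by the class membership.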

Case B: perceiving the affordance of objects is a topic that has been investigated in numerous previous studies [6]. Here we focus only on emotional affordances, an aspect that has so far been overlooked in the literature.

Case C: when an emotion expression triggers an emotion in the other party, it is a case of empathy [60,61]. Empathy is the capacity to understand what another person is feeling, as is captured by popular wisdom with expressions like “put oneself in someone’s shoes”. It also encodes basic sensorimotor processes of learning, and it has been widely studied in the Human-Robot Interaction literature [47,62]. For this reason, we do not add this part to our affordance model.

Case D: from an input consisting of the perception of a human emotion expression, the emotional affordance selects a suggested action as the product of the input vector and a mapping matrix, where the input is the row vector of the 4 components of Plutchik’s parametric model; the mapping is a 4 × m matrix; and the output is a row array of the m output action class memberships.

3.3.1. Learning

At any given time T, the tables A get updated at each input. The values of A affected are the ones related to the latest (T − 1) emotion expression or action executed by the robot. We define the valence v of the human reaction, categorised according to the following formula:

where l is the emotion in each leaf of Plutchik’s model. By default, a positive valence is associated with Happiness and Trust. A negative valence is associated with Sadness, Disgust, Anger and Fear. The term valence is intended in this strict sense of characterising the positiveness or negativeness of each emotion, and is not related to a single parameter.

The resulting v acts, together with the learning rate α (bounded between 0 and 1), as a modifier of the element of A related to j, given that the robot performed the jth action or emotion expression subsequently to the input i.

This equation is inspired by the method used in [63], which was successfully applied on two robots adapting to different contexts. The additional use of the sign functions and of the absolute value of the previous element of A makes sure that, through a single equation, the results can span between 1 and −1 in any of the four cases:

Positive and positive valence of human reaction (positive )

Positive and negative valence of human reaction (negative )

Negative and positive valence of human reaction (positive )

Negative and negative valence of human reaction (negative )

The resulting affordance table evaluation will be boosted or reduced, according to the human reaction, and bounded between 1 and −1. A human encouraging an affordance will strengthen its value (bounded at 1), while discouraging an affordance will not make its value converge to 0, but rather change sign. This design decision is motivated by the fact that our implementation of Plutchik’s Wheel is parametric, and passing beyond 0 will prompt the robot to explore a different affordance (related to the opposite emotion) rather than just inhibiting that affordance.

The value of the learning rate α will be critical to determine in how many trials the robot will change its behaviour, down to a single trial when α is 1 and the valence is also maximum.
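To make the described behaviour concrete, the sketch below shows one possible update rule with the properties listed above: the entry stays between −1 and 1, encouragement strengthens it, discouragement drives it through 0 to the opposite sign, and a single trial is enough when α is 1 and the valence is maximal. It is explicitly not the paper’s equation; the rule, the function names, the treatment of sign(0) as +1 and the neutral valence of Surprise and Anticipation are all assumptions.

```python
def sign(x: float) -> float:
    """Sign function; assumption: sign(0) = +1 so that a zero entry can still move."""
    return 1.0 if x >= 0 else -1.0


# Default valence of each leaf, as stated in the text.
# Assumption: Surprise and Anticipation are treated as neutral (0).
LEAF_VALENCE = {
    "happiness": 1, "trust": 1,
    "sadness": -1, "disgust": -1, "anger": -1, "fear": -1,
    "surprise": 0, "anticipation": 0,
}


def update_entry(a_prev: float, valence: float, alpha: float) -> float:
    """Move one entry of A towards +/-1 according to the valence of the human reaction.

    a_prev  -- previous value of the entry, in [-1, 1]
    valence -- valence of the reaction, in [-1, 1] (sign = direction, size = intensity)
    alpha   -- learning rate, in [0, 1]
    """
    if valence == 0:
        return a_prev  # neutral reaction: nothing to learn
    # Encouragement keeps the current sign; discouragement targets the opposite one.
    target = sign(a_prev) * sign(valence)
    step = alpha * abs(valence)
    # Convex combination of a_prev and target, hence always inside [-1, 1].
    return a_prev + step * (target - a_prev)


print(update_entry(0.5, 1.0, 0.5))   # encouraged positive entry grows
print(update_entry(0.5, -1.0, 1.0))  # discouraged entry flips sign in one trial
```

With a smaller α the same discouraged entry crosses 0 only after several trials, which is the role the text assigns to the learning rate.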

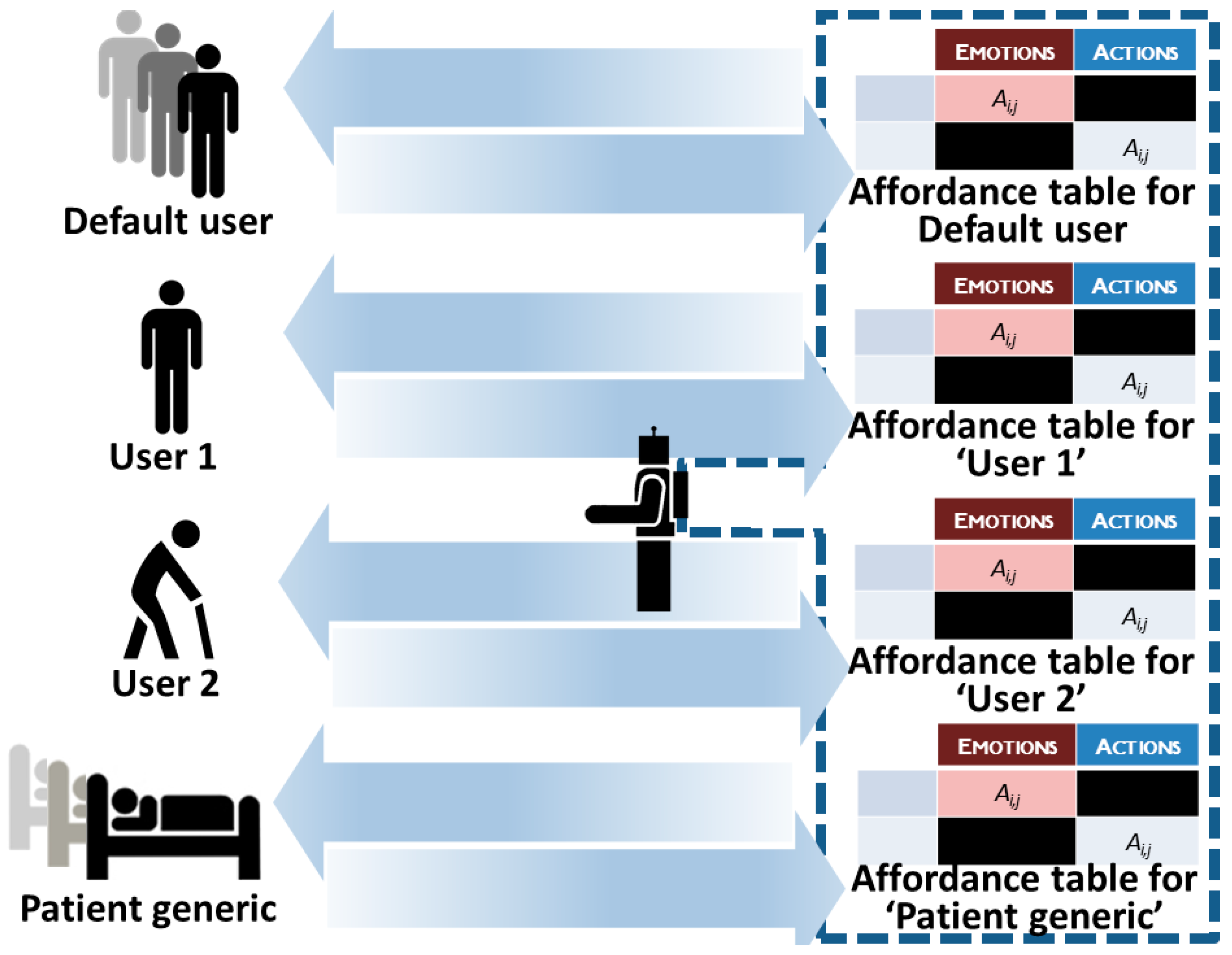

3.3.2. Customization

One possibility offered by this library is to customise the robot’s affordance behaviour for different users or groups of users. This will make the affordance mechanism flexible and applicable to a range of scenarios and users, each with different preferences (Figure 4).

3.4. Output Interfaces

The output interfaces work in a similar way to the input ones. Their role is to convert the output of the affordance evaluation table to the specific robot. There are two interfaces: one for the output emotions, one for the output actions.

The emotions obtained in the previous step are a vector of 4 values, which can be positive or negative. They are converted into a vector of memberships to an extensive list of all the emotions present in Plutchik’s model (function e in Equation (8)), and subsequently undergo a process of filtering (function f in Equation (8)), in order to match the robot’s specific emotion capabilities. For example, if the robot is based on Ekman’s standard 6 emotions, there will be a specific Plutchik-to-Ekman conversion process. We do not define the implementation of such a conversion; however, it can be as straightforward as a single correspondence between each primary emotion in Plutchik’s model and one of Ekman’s 6 emotions.
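The two steps (expansion e, then filtering f) can be illustrated with a simplified sketch. It expands the 4 parameters only to the eight primary emotions, ignoring the intensity levels of Plutchik’s model, and then keeps the emotions that a hypothetical robot can display. The pairing of emotions with axes and signs in `AXES`, the robot table `ROBOT_MAP` and the function names are assumptions made for the example.

```python
from typing import Dict, List, Sequence, Tuple

# Assumption: (positive pole, negative pole) of each axis, in the order
# Mood, Stance, Temperament, Expectation. The pairing is illustrative only.
AXES: List[Tuple[str, str]] = [
    ("joy", "sadness"),
    ("trust", "disgust"),
    ("anger", "fear"),
    ("anticipation", "surprise"),
]

# Hypothetical robot that can only display four emotions,
# with a one-to-one correspondence from Plutchik's primary emotions.
ROBOT_MAP: Dict[str, str] = {
    "joy": "happiness",
    "sadness": "sadness",
    "anger": "anger",
    "fear": "fear",
}


def expand(params: Sequence[float]) -> Dict[str, float]:
    """Simplified function e: turn 4 signed parameters into 8 non-negative memberships."""
    memberships: Dict[str, float] = {}
    for value, (positive, negative) in zip(params, AXES):
        memberships[positive] = max(value, 0.0)
        memberships[negative] = max(-value, 0.0)
    return memberships


def filter_to_robot(memberships: Dict[str, float]) -> Dict[str, float]:
    """Simplified function f: keep only the emotions the robot is able to display."""
    return {ROBOT_MAP[name]: m for name, m in memberships.items() if name in ROBOT_MAP}


output = filter_to_robot(expand((-0.1, -0.9, -0.4, 0.0)))
print(max(output, key=lambda name: output[name]))
```

In this example the strongest membership before filtering belongs to disgust, which the hypothetical robot cannot display, so the strongest remaining suggestion is fear.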

The suggested output of emotions will be the result of these operations:

On the other hand, the m output actions will feature positive or negative values, due to the previous matrix product involving the emotional parameters. For this reason, the first step consists in setting to zero all the negative values, as specified in the activation function a of Equation (9):

Each membership of an action j among the m in the library will be filtered in the same way as for the emotions, in order to match the robot’s action capabilities.
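The action branch can be sketched end to end as follows: the 4 × m product of Case D, the activation a that zeroes negative values, and a filter that keeps only the actions available on the robot. The action labels, the table values and all function names are hypothetical and serve only to show the order of the operations.

```python
from typing import List, Set


def vec_mat(vec: List[float], mat: List[List[float]]) -> List[float]:
    """Multiply a row vector (length 4) by a 4 x m matrix."""
    return [sum(v * row[c] for v, row in zip(vec, mat)) for c in range(len(mat[0]))]


def activation(values: List[float]) -> List[float]:
    """Activation a: set every negative membership to zero."""
    return [max(v, 0.0) for v in values]


def filter_actions(values: List[float], labels: List[str], available: Set[str]) -> List[float]:
    """Zero the memberships of the actions the robot cannot perform."""
    return [v if label in available else 0.0 for v, label in zip(values, labels)]


# Hypothetical action library (m = 3) and 4 x m table; values are illustrative.
ACTION_LABELS = ["hug", "offer tissue", "step back"]
EMOTION_TO_ACTION = [
    [-0.5, -1.0, 0.5],  # mood
    [0.5, 0.0, -0.5],   # stance
    [0.0, 0.0, 1.0],    # temperament
    [0.0, 0.5, 0.0],    # expectation
]

emotion_params = [-0.8, 0.0, 0.0, 0.0]       # e.g., a crying face
robot_actions = {"offer tissue", "step back"}  # this robot cannot hug

raw_actions = vec_mat(emotion_params, EMOTION_TO_ACTION)
suggested_actions = filter_actions(activation(raw_actions), ACTION_LABELS, robot_actions)
print(suggested_actions)
```

The negative membership of “step back” is removed by the activation, and “hug” is removed by the filter, leaving “offer tissue” as the only suggested action.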

The suggested output of actions will be the result of these operations:

3.5. Internal State and Context

The internal state of the robot, and its degree of understanding of the context, are specific to the robot implementation. This part, necessary for reasoning, may contain its own emotional model and other cognitive constructs. Our affordance model is intended to work in parallel to robot reasoning and provide faster feedback. The implementation of the robot can decide to let the result of the affordance reach the arousal phase, or to use that data for other calculations, or to completely override it.

3.6. Arousal

This part is the output of the model. It represents the execution of the emotion expression (as a consequence of the affordance of an object) or the execution of an action (as a consequence of the affordance of a human emotion expression). An additional action or emotion expression can be triggered in the case of incomprehension.

Generally speaking, the aroused emotion or action is determined by the maximum value of the vector produced by one of the output interfaces. These outputs can be overridden by the robot’s internal state, which is not part of the library. As the arousal is robot-specific, in this paper we do not focus on its implementation.

3.7. Case of Incomprehension

An affordance of incomprehension is triggered in any of the following cases:

when the raw input gets classified as “unknown” (either unknown object or unknown human expression)

when each class membership value of the raw input (objects or human expressions) is below a user-defined threshold

when each value of the filtered input (objects or human expressions) is below a user-defined threshold (possibly as a result of a missing match)

Comprehension is a very important aspect of communication: according to Poggi et al. [64,65], the performative (namely, the actual action of interaction) of a communication act is defined by six parameters, one of which is the certainty of the communication content. In humans, the degree of certainty also influences facial cues [20,65]. In robots, this is sometimes neglected, as affective communication only takes standard emotions into consideration: one of the few exceptions was KOBIAN-R [59], featuring a facial expression with corrugated eyebrows specifically for the case of incomprehension.

In our proposed architecture, when incomprehension happens, the whole process of affordance does not take place, and the suggested affordance is just “incomprehension”: this might then generate a related emotion expression or responsive action, depending on the specific robot implementation.
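The three triggering conditions can be combined into a single check, sketched below. The function name, the argument layout and the default threshold are hypothetical; the text only states that the threshold is user-defined.

```python
from typing import Sequence

UNKNOWN = "unknown"


def is_incomprehension(
    raw_label: str,
    raw_memberships: Sequence[float],
    filtered_memberships: Sequence[float],
    threshold: float = 0.3,  # user-defined; this default is only a placeholder
) -> bool:
    """Return True when the affordance process should be replaced by 'incomprehension'."""
    # Case 1: the raw input was classified as unknown.
    if raw_label == UNKNOWN:
        return True
    # Case 2: every raw class membership is below the threshold.
    if all(m < threshold for m in raw_memberships):
        return True
    # Case 3: every filtered membership is below the threshold,
    # e.g., because the strongest raw label has no match in the library.
    if all(m < threshold for m in filtered_memberships):
        return True
    return False


print(is_incomprehension("wrench", [0.9, 0.1], [0.0, 0.0]))
```

Note that in this sketch an empty membership vector also counts as incomprehension, because `all` over an empty sequence is true.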

4. Sample Operation

In this section we show some examples of how affordance perception and behaviour generation can be produced through the AAA model, by using the stub of the implementation described in the previous section, with simulated sample data.

4.1. Emotional Affordance of Objects

Let us suppose that a robot is surrounded by several objects. Through the combination of visual, auditory and tactile sensing, it can produce a raw input vector consisting of class memberships, which may also include some noise. The components of this vector refer to the labels {‘white ball’, ‘plate’, ‘pen’, ‘glass of water’, ‘box’, …}.

In the input interface, the filtering process results in a new vector whose components refer to the labels available in the library {‘glass of water’, ‘ball’, ‘cake’, ‘power socket’, ‘gun’, …}. In some cases (glass of water) there was a direct correspondence; in other cases (ball) there was a correspondence with a similar entry; in all the cases with 0s (cake, power socket, gun) there was no correspondence.

The next step consists in processing the affordance evaluation table, as in Figure 5.

The resulting vector has 4 components. In its extended form, the vector assigns a value to each of the labels {‘ecstasy’, ‘joy’, ‘serenity’, ‘admiration’, ‘trust’, ‘acceptance’, ‘terror’, ‘fear’, ‘apprehension’,…}. The maximum value corresponds to ‘loathing’ (the most intense form of disgust, with 0.9).

Let us now suppose that our robot can only display happiness, sadness, anger and fear. The filtering process will eliminate the values referring to disgust or loathing (unless specified by the user in a customised output interface), and just keep the value related to fear.

The filtered vector is the final output of the library. The robot can decide to follow the suggested emotional affordance of fear and arouse an expressive behaviour, or to override it.

Figure 6 summarises the whole process.
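The three steps of this example (input filtering, evaluation against the affordance table, output filtering) can be sketched as follows. This is a minimal sketch under several assumptions: the function names, the `aliases` mapping used to match similar entries, the weighted-sum evaluation, and all numerical values are illustrative and are not taken from the library or from the figures.

```python
from typing import Dict, List, Set


def filter_input(raw: Dict[str, float],
                 aliases: Dict[str, str],
                 library_labels: List[str]) -> Dict[str, float]:
    """Project raw class memberships onto the labels known to the library."""
    filtered = {label: 0.0 for label in library_labels}
    for raw_label, value in raw.items():
        # A raw label may match a similar library entry ('white ball' -> 'ball').
        target = aliases.get(raw_label, raw_label)
        if target in filtered:
            filtered[target] = max(filtered[target], value)
    return filtered


def evaluate(filtered: Dict[str, float],
             table: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Assumed evaluation: weighted sum of table rows (vector-matrix product)."""
    scores: Dict[str, float] = {}
    for obj, membership in filtered.items():
        for emotion, weight in table.get(obj, {}).items():
            scores[emotion] = scores.get(emotion, 0.0) + membership * weight
    return scores


def filter_output(scores: Dict[str, float],
                  displayable: Set[str]) -> Dict[str, float]:
    """Keep only the emotions that the robot is able to display."""
    return {e: v for e, v in scores.items() if e in displayable}


# Illustrative data only.
raw = {"white ball": 0.7, "plate": 0.2, "pen": 0.1, "glass of water": 0.9, "box": 0.3}
library = ["glass of water", "ball", "cake", "power socket", "gun"]
table = {
    "glass of water": {"loathing": 1.0, "fear": 0.5},
    "ball": {"joy": 0.4},
}

filtered = filter_input(raw, {"white ball": "ball"}, library)
scores = evaluate(filtered, table)
output = filter_output(scores, {"happiness", "sadness", "anger", "fear"})
strongest = max(scores, key=scores.get)
```

With these illustrative values, ‘loathing’ is the strongest entry before output filtering, and only ‘fear’ survives it, which mirrors the qualitative behaviour described in this example.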

4.2. Physical Affordance of Emotions

In this second example, the robot identifies a crying human face. The vector of raw noisy input sample data contains class memberships that refer to the labels {‘cry’, ‘smile’, ‘head down’, ‘raise eyebrows’, ‘frown eyebrows’, ‘wrath’, …}.

The filtering process eliminates the entries that are not found in the library (i.e., ‘wrath’), and the maximum entry of the resulting data is selected (cry, 0.8).

The mapping p evaluates the emotion of the current input parametrically. The resulting for ‘cry’ is .

Figure 7 illustrates the product between the parametric evaluation of the input and the affordance evaluation table. The resulting vector contains the affordance of actions. The actions contained in the library are mostly social actions, as they are supposed to be pertinent responses to a human emotion expression.

However, the robot might not have all of these social actions implemented. The robot in our case can only perform the following actions: {‘push’, ‘pull’, ‘take’, ‘greet’, ‘approach’, ‘hug’}. The filtering process results in a vector restricted to these actions. The whole process is summarised in Figure 8.
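The steps of this second example can be sketched in the same spirit. The sketch below makes strong simplifying assumptions: the parametric evaluation produced by the mapping p is reduced to a single scalar mood value, the affordance table is reduced to one weight per action, and every number except the membership of ‘cry’ (0.8) is invented for illustration. The ‘comfort’ action is likewise hypothetical.

```python
from typing import Dict, Set, Tuple


def select_expression(raw: Dict[str, float],
                      library: Set[str]) -> Tuple[str, float]:
    """Drop entries unknown to the library, then select the maximum entry."""
    known = {label: value for label, value in raw.items() if label in library}
    if not known:
        raise ValueError("no raw entry matches the library")
    label = max(known, key=known.get)
    return label, known[label]


def action_affordances(mood: float, table: Dict[str, float]) -> Dict[str, float]:
    """Assumed product between a scalar mood and one table weight per action."""
    return {action: mood * weight for action, weight in table.items()}


def filter_actions(scores: Dict[str, float],
                   available: Set[str]) -> Dict[str, float]:
    """Keep only the actions that the robot has implemented."""
    return {a: s for a, s in scores.items() if a in available}


raw = {"cry": 0.8, "smile": 0.05, "head down": 0.3,
       "raise eyebrows": 0.1, "frown eyebrows": 0.2, "wrath": 0.4}
library = {"cry", "smile", "head down", "raise eyebrows", "frown eyebrows"}

# Hypothetical parametric mapping p: expression label -> mood value.
mood_of = {"cry": -0.6}
# Hypothetical affordance weights for the social actions in the library.
table = {"hug": -0.9, "comfort": -0.7, "greet": 0.2, "approach": -0.4}

label, membership = select_expression(raw, library)
scores = action_affordances(mood_of[label], table)
feasible = filter_actions(scores, {"push", "pull", "take", "greet", "approach", "hug"})
best_action = max(feasible, key=feasible.get)
```

With these illustrative weights, the hug has the highest remaining value after the unavailable ‘comfort’ action is filtered out.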

4.3. Learning Example

Let us suppose that the robot performs the hug suggested by the affordance in the process we have just described. Subsequently, the human reacts. Now, at time T, the robot recognises another input, which is an expression of anger.

Before processing further a new affordance, the affordance evaluation table is updated according to the formula in Equation (7): . Anger has a valence of . . is also negative ().

Let us define the learning rate α as 0.4. In the present case, . This update will result in a lower likelihood of the robot to perform the action of hugging in response to a crying human expression.

In a hypothetical next round in which the robot faces the same stimulus, the robot may or may not perform a hug, depending on whether there are other greater values in the affordances table. Supposing that is still the winning value that gets the hug action selected, we perform one additional step: .

What happens now is that for the same, negative input value of mood ( for ‘cry’), the matrix calculation will produce a negative value and the hug will never be selected. Conversely, for positive mood value there is a chance that the robot will explore the action of hug.

This mechanism also works in the other case we discussed, the emotional affordance of objects: when the affordance value changes sign, the opposite emotion is explored towards the same object.
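The qualitative behaviour described in this learning example can be reproduced with a simple additive update. The rule below is an assumption made for this sketch and is not a reproduction of Equation (7): the table entry is shifted by the learning rate multiplied by the valence of the feedback and by the mood of the stimulus. Only the learning rate of 0.4 comes from the text; the other values are illustrative.

```python
ALPHA = 0.4  # learning rate, as in the example


def update_entry(entry: float, mood: float,
                 feedback_valence: float, alpha: float) -> float:
    """Assumed update rule (NOT Equation (7)): additive shift of the entry."""
    return entry + alpha * feedback_valence * mood


def affordance_value(entry: float, mood: float) -> float:
    """Assumed scalar version of the matrix calculation."""
    return mood * entry


mood_cry = -0.6        # illustrative negative mood for 'cry'
valence_anger = -0.8   # illustrative negative valence for the angry reaction
hug_entry = -0.3       # illustrative initial table entry for 'hug'

score_before = affordance_value(hug_entry, mood_cry)

# First negative feedback: the hug becomes less likely but is still positive.
hug_entry = update_entry(hug_entry, mood_cry, valence_anger, ALPHA)
score_after_first = affordance_value(hug_entry, mood_cry)

# Second negative feedback: the table entry changes sign.
hug_entry = update_entry(hug_entry, mood_cry, valence_anger, ALPHA)
score_after_second = affordance_value(hug_entry, mood_cry)

# For a positive mood, the same entry now yields a positive value.
score_positive_mood = affordance_value(hug_entry, 0.6)
```

Under this assumed rule, two rounds of negative feedback are enough to flip the sign of the entry, so the hug is no longer suggested for a negative mood while it can still be explored for a positive one.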

5. Sample Scenarios of Application

Our affordance libraries allow us to consider real scenarios in which such mechanisms would help researchers to implement multimodal and functional HRI. For brevity, we present only two cases, which summarise and encapsulate the vast range of possibilities that our approach enables: elderly care and service robots at home.

5.1. Elderly Care

In the first of our sample scenarios we consider a relevant problem: care of (impaired) elderly people by robots [66,67,68]. In this scenario, our libraries could be easily adapted to the specific sensory or cognitive needs of elderly people: loss of hearing, diminished vision, sensorimotor difficulties, memory loss, and loss of expressivity, among others, something that explains why older people report fewer negative emotional experiences and greater emotional control [69,70,71]. Hence, gerontechnologies [72] must still be adapted to robotic assistants [73], and the key is to know which affordance thresholds should be modified in order to offer a good service. Consider a possible case: an old man suffering from Alzheimer’s disease. He feels uncomfortable and disoriented, and the robot observes an abnormal and repetitive pattern of movement, which it interprets as a sign of trouble; the robot therefore offers help to the human. The same patient is feeling pain but, because of his limited capacity for self-report, pain assessment relies in large part on observational methods. We rely on the following affordances to allow our robot to evaluate the pain situation [74]:

Facial expressions: slight frown; sad, frightened face, grimacing, wrinkled forehead, closed or tightened eyes, any distorted expression, rapid blinking.

Verbalizations, vocalizations: sighing, moaning, groaning, grunting, chanting, calling out, noisy breathing, asking for help, verbally abusive.

Body movements: rigid, tense body posture, guarding, fidgeting, increased pacing, rocking, restricted movement, gait or mobility changes.

Changes in interpersonal interactions: aggressive, combative, resisting care, decreased social interactions, socially inappropriate, disruptive, withdrawn.

Changes in activity patterns or routines: refusing food, appetite change, increase in rest periods, sleep, rest pattern changes, sudden cessation of common routines, increased wandering.

Mental status changes: crying or tears, increased confusion, irritability or distress.

Given the detection from video cameras and voice recording systems, our library could combine data from those specific affordances in order to detect specific requirements previously requested by medical services. All these facial, vocal, and bodily expressions are fundamental clues for good monitoring of the patient. In this case, the robot could detect simple pain affordances and offer a response to the patient, or even notify the medical personnel. By establishing adequate correlations between medication intake and expected reactions over time, the robot could help to monitor and even to adapt these prescriptions.

5.2. Service Robot at Home

The second scenario is related to service robots that could be placed in our homes. From anthropomorphic to non-anthropomorphic robots, these machines will need to interact closely with humans, who are fundamentally emotional [75]. Consider a possible scenario: a robot pet [76]. The user comes back home too tired to play: consequently, she is annoyed when the robot tries to play with her. The robot detects this mood and avoids activity, although it follows the user through the house as a silent companion. At some point the user addresses the robot and speaks to it, apologising and asking for direct contact. The robot detects her tone of voice and combines it with visual cues that show a pleading attitude: therefore, the robot approaches the user and interacts with her.

The most salient affordances in this scenario would be those related to the robot’s monitoring of the humans living in that space. The robot must obviously be able to respond to the voice commands of humans, but it should also anticipate possible needs according to the context. Imagine the following scenario: the human user is alone, taking a shower. The robot evaluates the potentially dangerous situation and decides to be there in case it is requested to perform any action. Once there, the human asks the robot for a towel, and it provides a new one. At that point the human looks angry, and the robot evaluates the possible cause: perhaps the human needs a specific kind of towel and some mistake has happened. The robot asks the human whether the size of the towel is wrong and whether a bigger or smaller one is needed. The human answers affirmatively: a bigger one. After using it, the human slips and falls. The robot checks the emotional signals and finds an unconscious attitude (closed eyes, no movement); at the same time, the bodily analysis shows a lack of movement that is unusual in such a context. The robot also monitors heart rate and skin conductance, decides that the human is in danger, and calls the medical services. While the ambulance is on its way, the human starts to moan, and the robot offers a basic touch response to calm the human while using a confident and quiet tone of voice to explain, with the minimal amount of information, that a human medical doctor has been called and will arrive in a few minutes. On the doctor’s arrival, the robot provides a basic report of the events and explains the emotional responses of the human at every moment of the report. Both cases show how the monitoring of emotions (or of their absence), using facial, bodily and vocal data together with contextual evaluations, makes better support for the human possible.

6. Discussion and Conclusions

In our implementation of the affordance emotional system, we followed three fundamental requirements:

● Generality:

We aim to make this model widespread and usable by different robots. For this reason, we mainly focus our description on the inputs/outputs and interconnections of the various sub-modules of the architecture, and we do not elaborate on the content of each sub-module, which will largely depend on the specific application scenario.

● Low complexity:

The architecture performs simple operations, because this is supposed to give a fast response that imitates the neural process of affordance perception occurring in the human mind. For this reason, it does not propose complex calculations that would be more appropriate for the reasoning component of a robot’s mind.

● Adaptability:

In affordances, learning and adaptation are fundamental components [4,77,78]. Notably, the dynamical nature of our model, which is also multimodal, captures this aspect and allows the emotional responses of the robot to be adapted based on the feedback received through repeated interactions with different individual users or groups of users. Indeed, social life as a whole is based on emotional interactions, both verbal and non-verbal, a fundamental aspect of HRI that our model covers in detail.

As mentioned earlier, a few emotional affordance models have been proposed for HRI purposes. First, all existing models are based on very basic multimodal approaches to affordances. Secondly, these models do not take into account contextual and allocentric aspects of human emotional dimensions (including objects, scenarios, and emotions). Thirdly, these models cannot be transferred between different robotic morphologies/designs. Fourth, only our model contemplates a user’s dynamical variation across time according to a complex mapping of emotional syntax. Finally, our approach is more complete, using a broad range of emotional variations (Plutchik’s model), beyond the classic approaches based on Ekman’s 6 basic emotions; this makes it possible to obtain a more accurate monitoring, understanding and evaluation of emotional affordances for HRI purposes.

Future work. We plan to obtain a more accurate monitoring, understanding and evaluation of emotional affordances for HRI purposes. Our intention is to use the libraries in different morphological robotic systems, checking the adaptability of our libraries to the data integration made using several general designs. The most important focus of the experiments will be on the adaptation process, in order to demonstrate and evaluate the effectiveness of learning in an empirical manner.