Catch My Drift: Elevating Situation Awareness for Highly Automated Driving with an Explanatory Windshield Display User Interface †

Abstract

1. Introduction

2. Background

2.1. Driving Automation Levels, Technology Acceptance and Situation Awareness

- Perception of the data and elements of the environment. In our case, this includes monitoring current car status information and the surrounding traffic situation, including the detection of other traffic participants, road conditions and infrastructure.

- Comprehension of the meaning and significance of the situation. This would correspond to the driver’s understanding of the current traffic rules (e.g., who has right of way, which road signs apply, etc.) and the situation on the road. This includes interpreting and evaluating the actions of other traffic participants.

- Projection of future events or actions. In the driving scenario, this includes predicting the behavior of the other traffic participants and also the driving decisions of the vehicle. Drivers need to deduce imminent actions by projecting their knowledge from Stages 1 and 2 into an upcoming time frame. High awareness in this stage should reduce confusion when an AC needs to make sudden reactive maneuvers.

2.2. User Interfaces for Automated Cars

3. Design of the Prototype

3.1. Human-Centered Design Approach

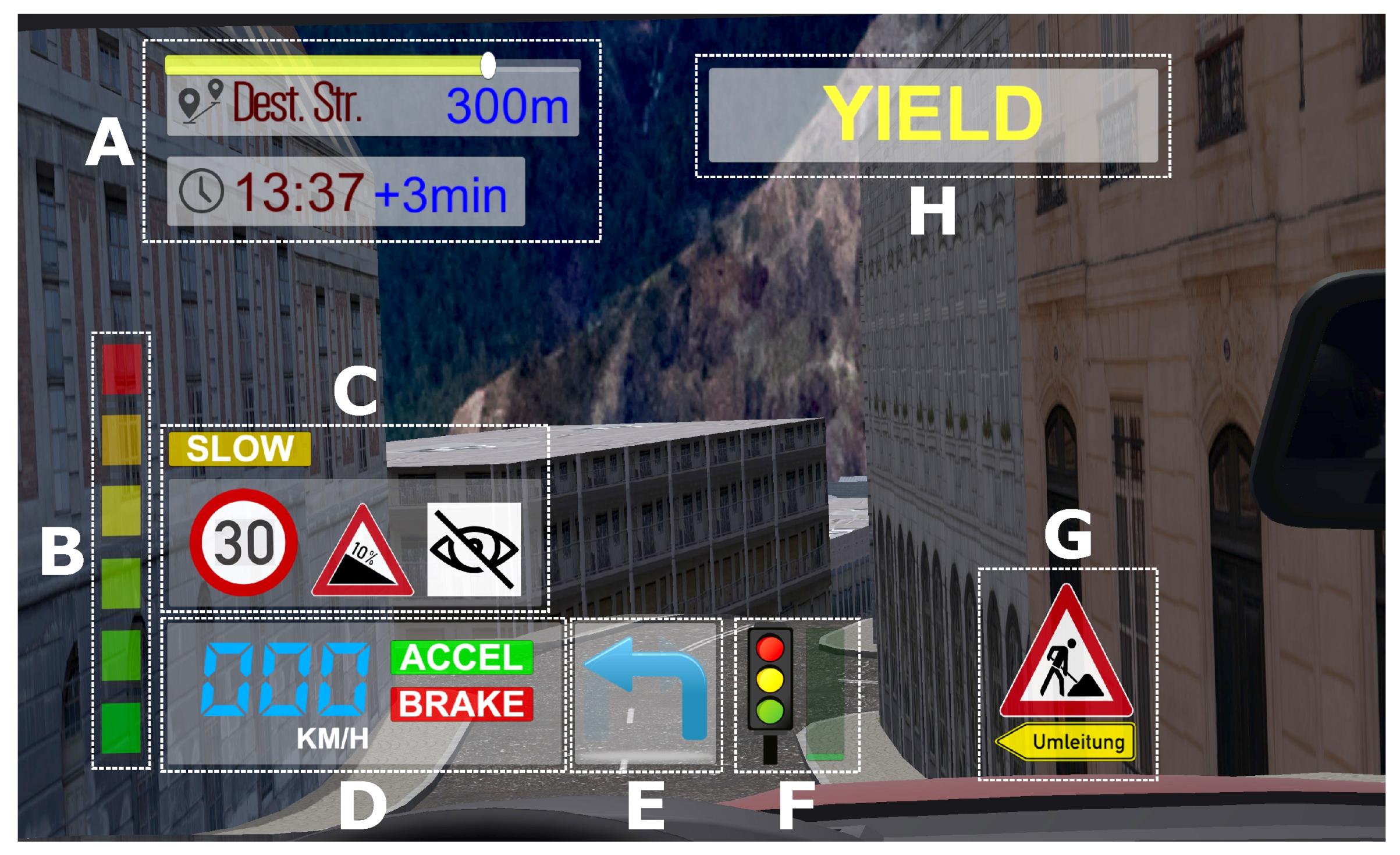

3.2. Prototype of an Explanatory Windshield Display Interface for Automated Cars

- A: Trip info panel with destination, remaining distance, time to arrival and a progress indicator.

- B: Automation confidence bar similar to [37], alerting the driver to the current system reliability level: the more the bar extends upwards, the less predictable the situation is for the automation system.

- C: Traffic regulations and info panel showing dynamic content depending on the environment and situation, including detected road signs and other factors. Examples shown in Figure 2: 30 kph speed limit; steep grade ahead; impaired visibility; car going slow due to potential hazards.

- D: Primary driving panel with speedometer and binary indicators for imminent acceleration or braking.

- E: Navigation panel showing the next step in the planned route, i.e., upcoming turns.

- F: Traffic light panel showing a remaining time indicator which only becomes visible when approaching traffic lights. It gives the driver a heads-up as to when the drive continues. This feature assumes availability of infrastructure data, e.g., via vehicle-to-infrastructure (V2I) communication.

- G: High-priority panel showing rare occurrences when needed. It blinks briefly on appearance and is accompanied by an alert sound to guide driver attention. Example content includes construction or accident sites, detours and approaching emergency vehicles.

- H: Traffic priority panel indicating the right of way for an upcoming intersection. It gives drivers a heads-up as to how the AC will handle an intersection. YIELD is shown on approach if the AC plans to decelerate and wait. DRIVE is shown when the AC assumes right of way, either already on approaching an intersection or after yielding.
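The display rule described for the traffic priority panel (H) can be sketched as a small decision function. This is only an illustrative sketch: the function name, its parameters, and the assumption that the panel is hidden when no intersection is being approached are hypothetical and not taken from the prototype's implementation.

```python
from enum import Enum
from typing import Optional


class PriorityLabel(Enum):
    YIELD = "YIELD"
    DRIVE = "DRIVE"


def priority_label(approaching_intersection: bool,
                   plans_to_wait: bool,
                   finished_yielding: bool = False) -> Optional[PriorityLabel]:
    """Return the label for panel H, or None if the panel is hidden.

    Assumption: the panel is hidden when no intersection is upcoming.
    """
    if not approaching_intersection:
        return None
    # YIELD while the car plans to decelerate and wait.
    if plans_to_wait and not finished_yielding:
        return PriorityLabel.YIELD
    # DRIVE when the car assumes right of way, either directly on
    # approach or once it has finished yielding.
    return PriorityLabel.DRIVE
```

For example, `priority_label(True, True)` yields `PriorityLabel.YIELD`, and the same call with `finished_yielding=True` yields `PriorityLabel.DRIVE`.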

4. Methods

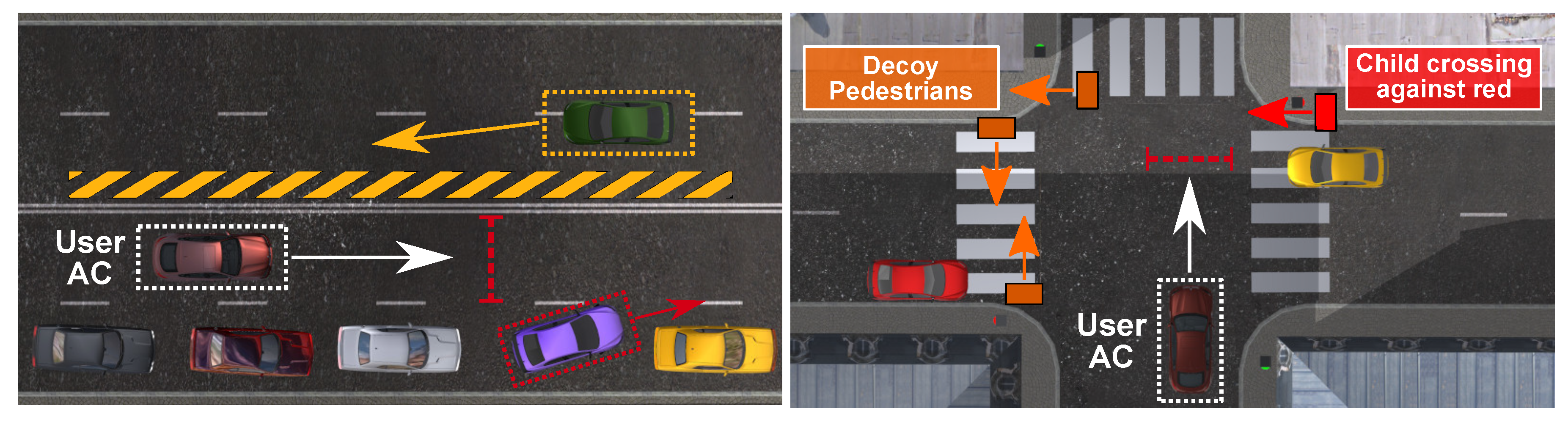

4.1. Urban Driving Situations

4.2. User Study

4.2.1. Participants and Apparatus

4.2.2. Experimental Design

- Visibility (VIS): High (i.e., clear weather) vs. Low (i.e., fog with maximum viewing distance of approximately 50 m; see Table 1 (c) for a visual example)
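Combined with the WSD factor (On vs. Off) that appears in the abbreviations and result tables, the visibility factor gives four study conditions. A minimal sketch of enumerating them follows; the names `VIS_LEVELS`, `WSD_LEVELS` and `study_conditions` are illustrative, and the sketch makes no claim about the presentation order or counterbalancing used in the study.

```python
from itertools import product

# Levels of the two independent variables as named in the paper.
VIS_LEVELS = ("High", "Low")
WSD_LEVELS = ("On", "Off")


def study_conditions():
    """Return all VIS x WSD combinations as dictionaries."""
    return [{"VIS": vis, "WSD": wsd}
            for vis, wsd in product(VIS_LEVELS, WSD_LEVELS)]


if __name__ == "__main__":
    for condition in study_conditions():
        print(condition)
```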

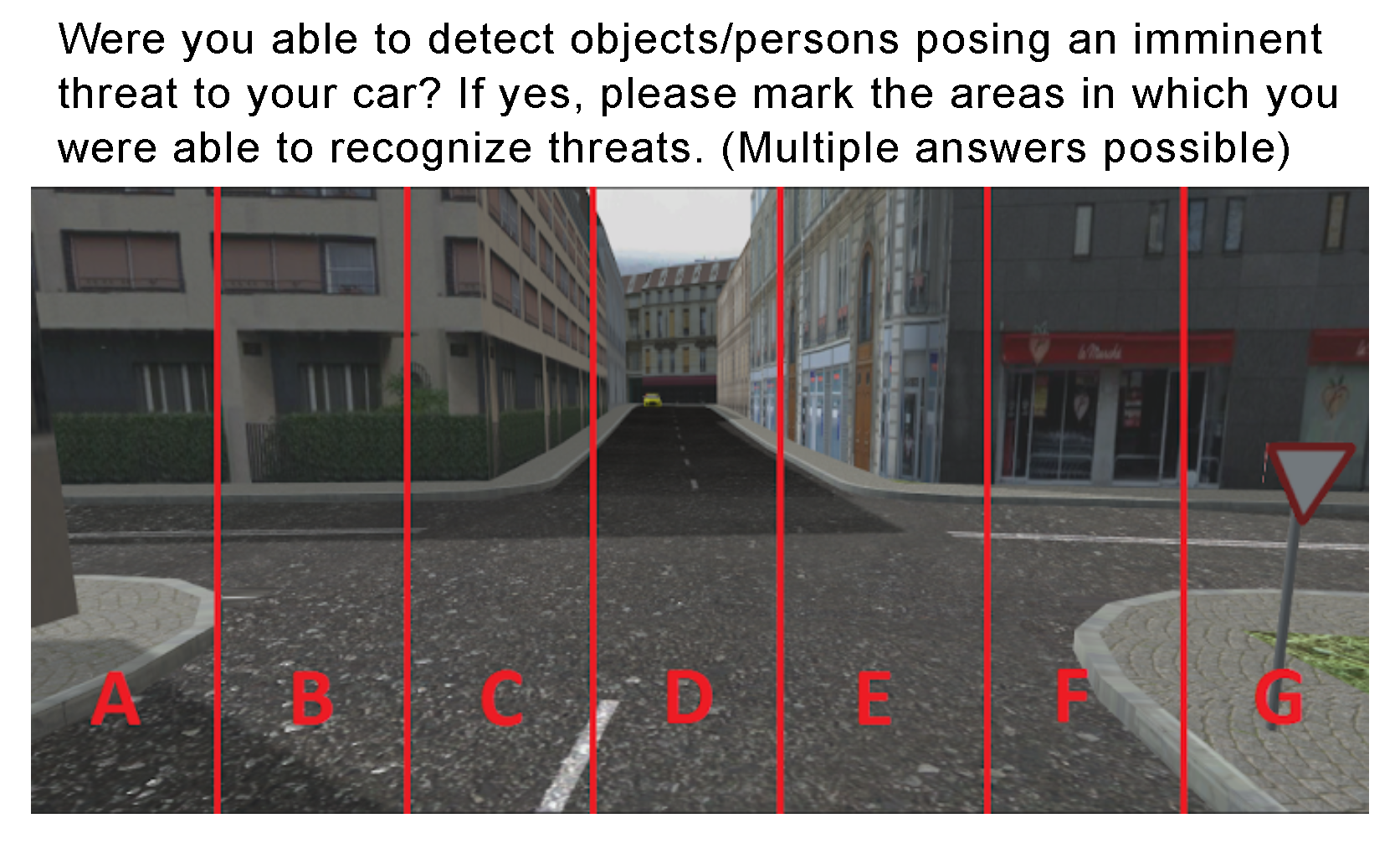

4.2.3. Data Collection

- Perception:

- How many pedestrians could you recognize in the scene?

- From which directions are other cars entering the intersection you just reached?

- Comprehension:

- Why did your vehicle just decelerate considerably?

- What is the reason for the unplanned detour your vehicle is taking?

- Projection:

- What do you expect your vehicle to do next?

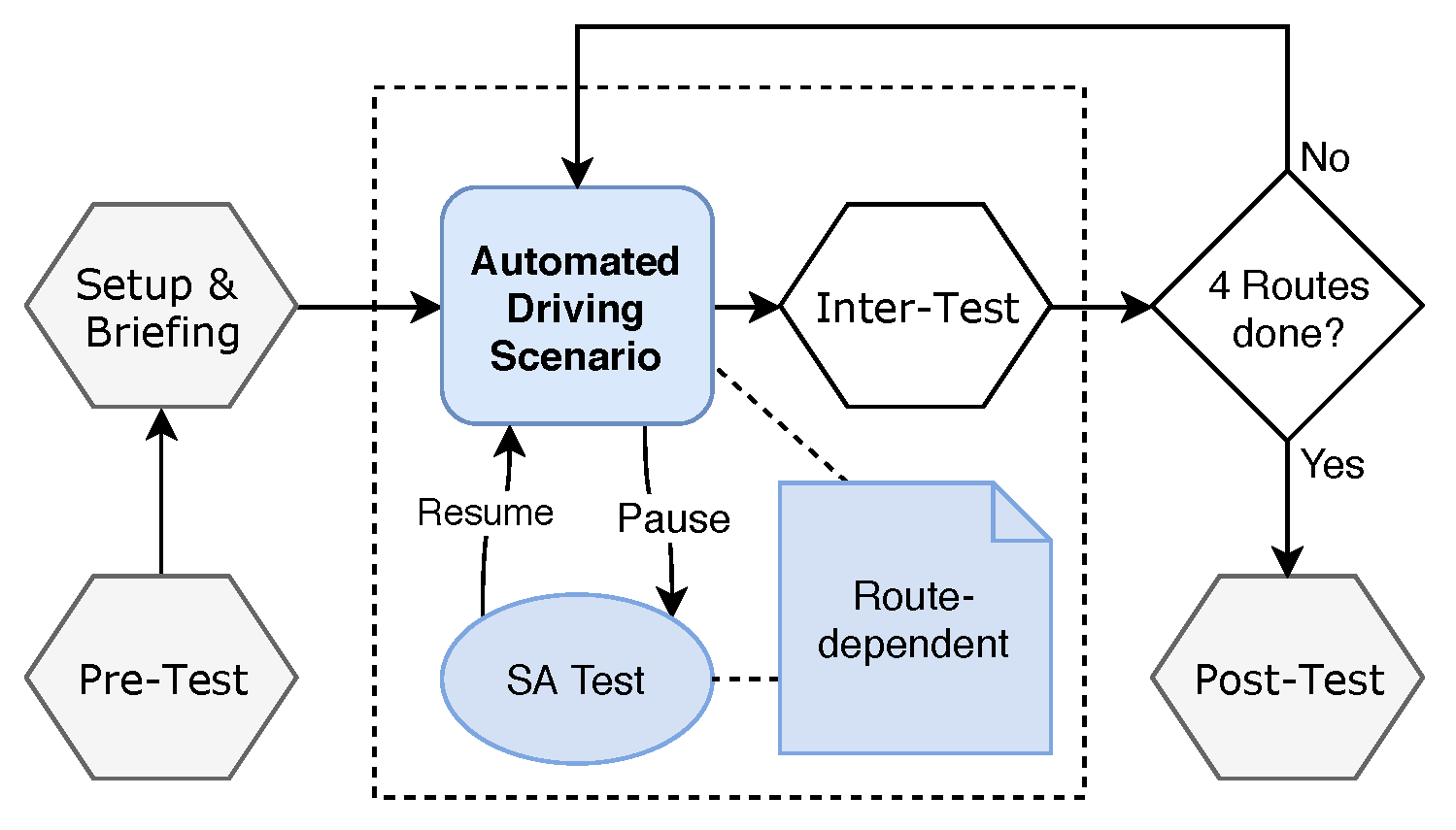

4.2.4. Procedure

- A calibration of the virtual driver position to the actual head position of the participant. This is to ensure that each participant has a correct perspective with full visibility of all virtual WSD elements and a similar initial view of the virtual scene, independent of body or sitting height.

- An explanation of the WSD interface elements and their meaning to the participants, both for the screen-registered and world-registered features.

- A test drive of approximately five minutes in the simulator, allowing participants to get used to the driving simulation, the head-tracking, the urban environment with traffic objects and the virtual WSD. The examiner only proceeded after each WSD UI element had been experienced.

5. Results

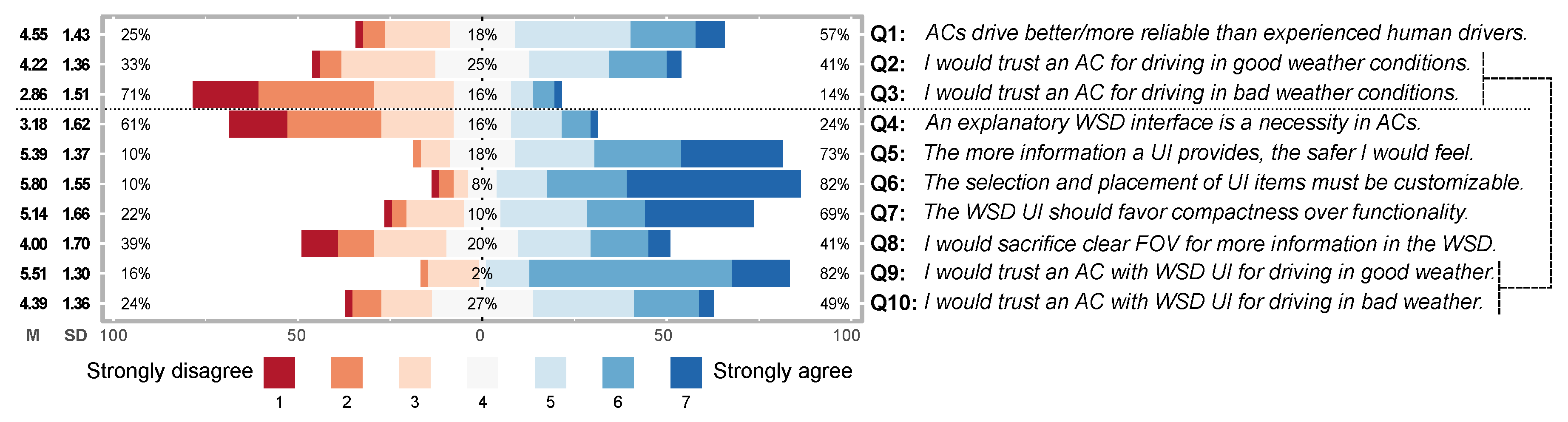

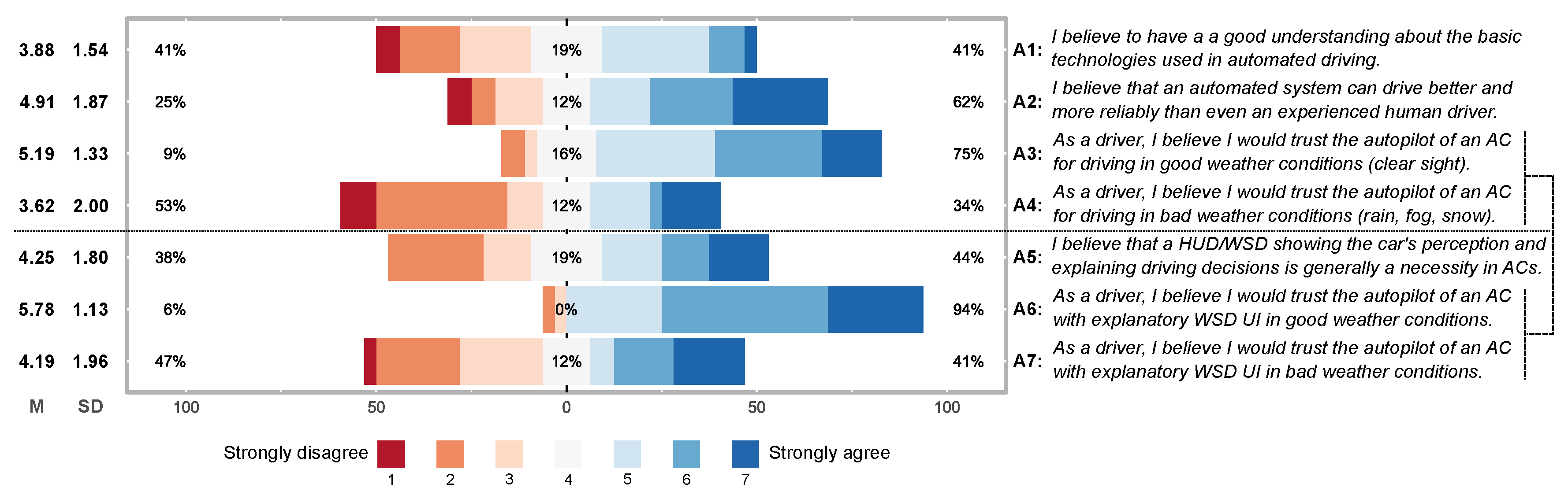

5.1. Pre-Study Opinions

- A3 vs. Q2: trust in ACs without UI in clear weather (ES: medium),

- A5 vs. Q4: necessity of an explanatory UI in ACs (ES: medium).

- A3: ES: medium,

- A5: ES: large.

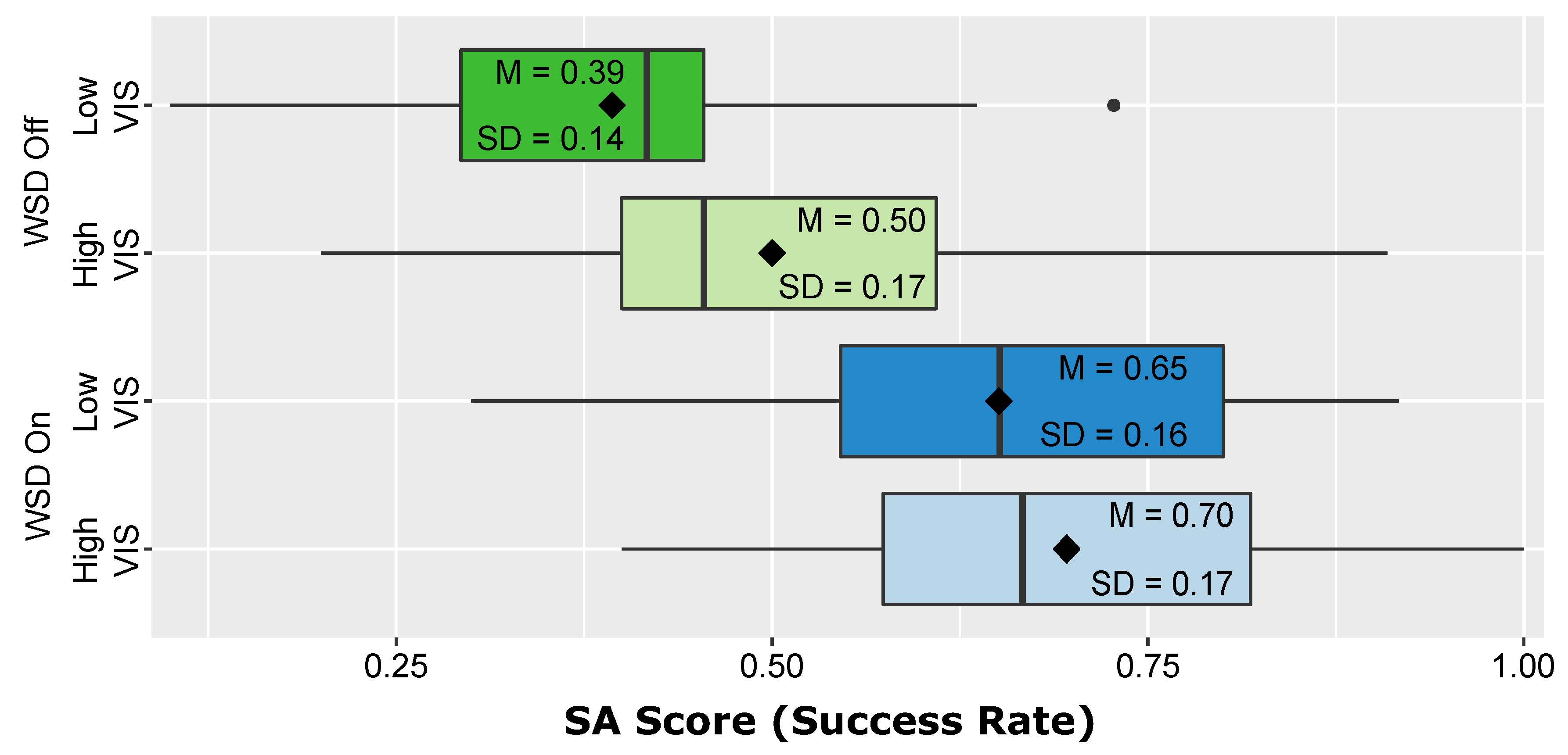

5.2. Driver SA Test Scores

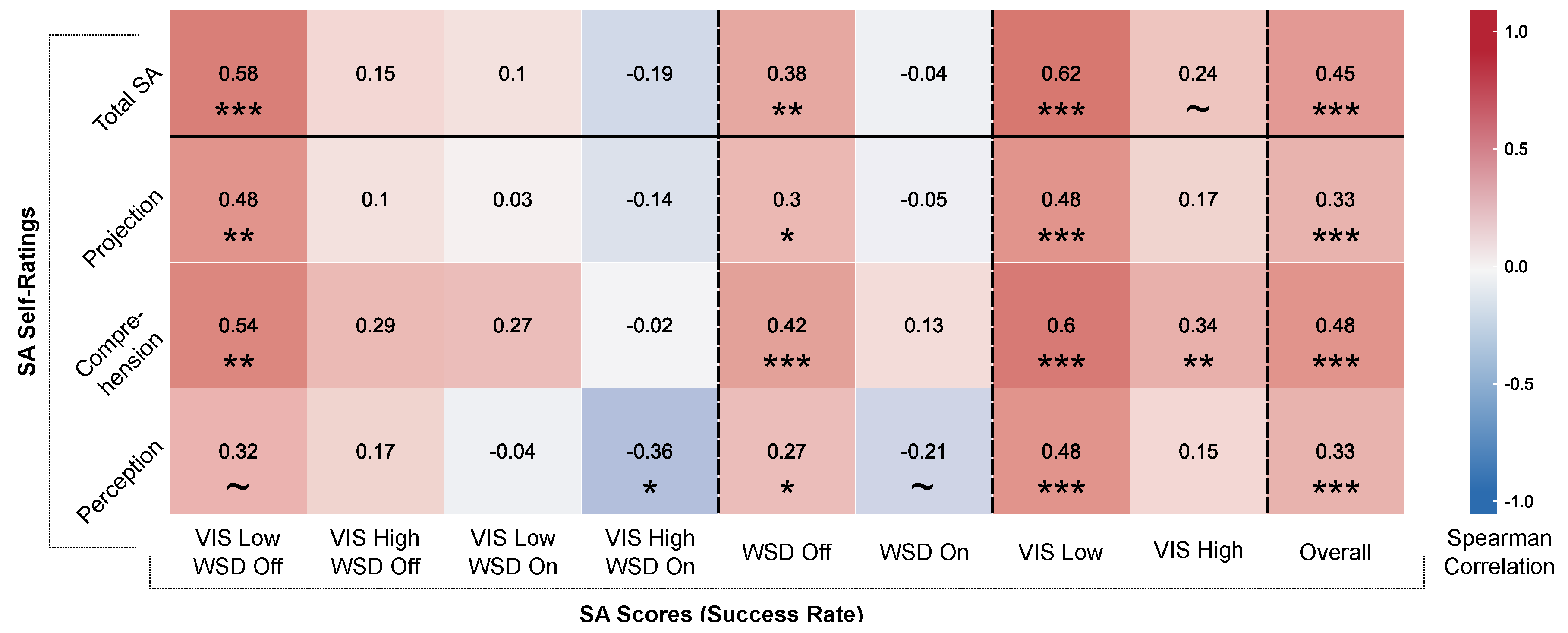

5.3. Driver SA Self-Ratings

- Perception: I felt always able to perceive/detect relevant elements and events in the environment.

- Comprehension: I could always comprehend how my vehicle acted and reacted in traffic situations.

- Projection: I could always predict how my vehicle was going to behave in traffic situations.

5.4. Correlation between Scores and Self-Ratings

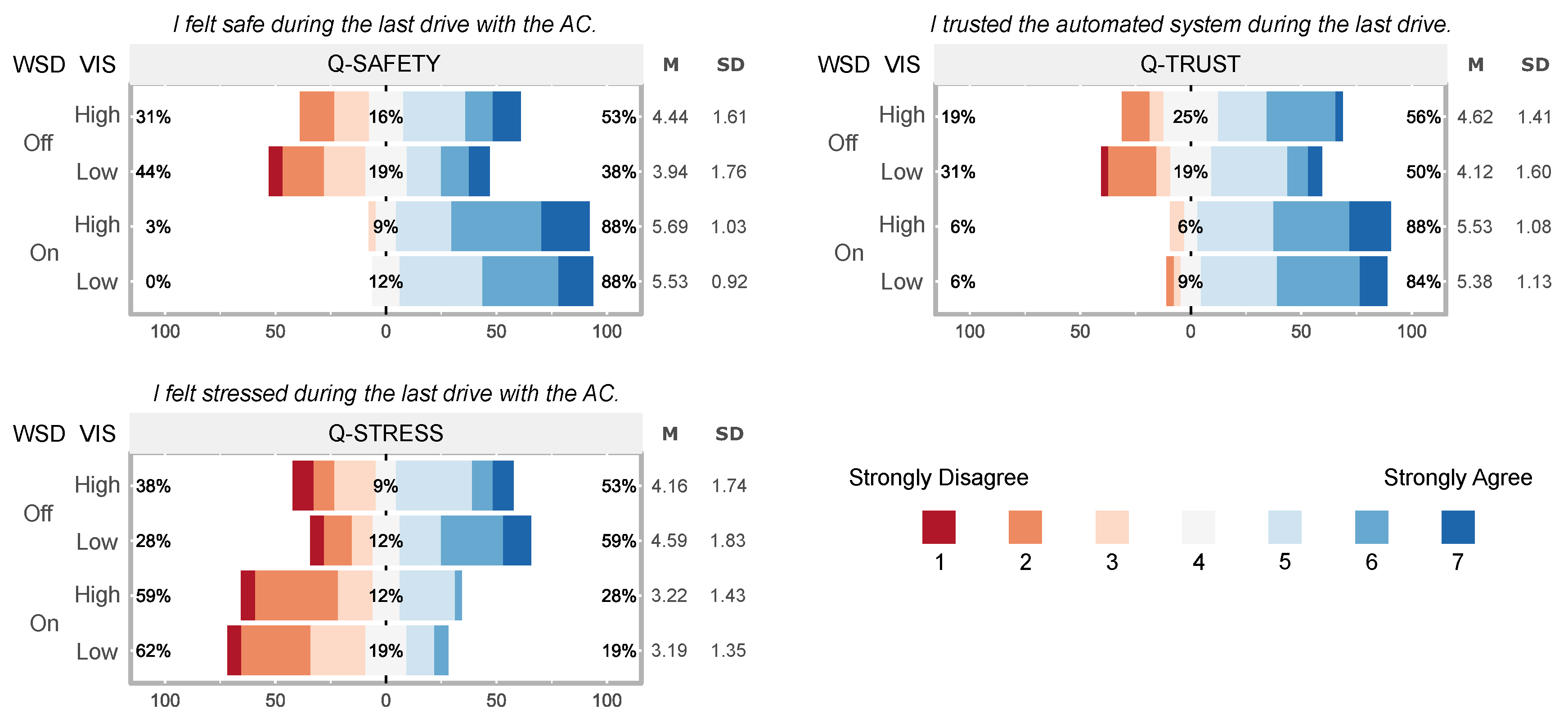

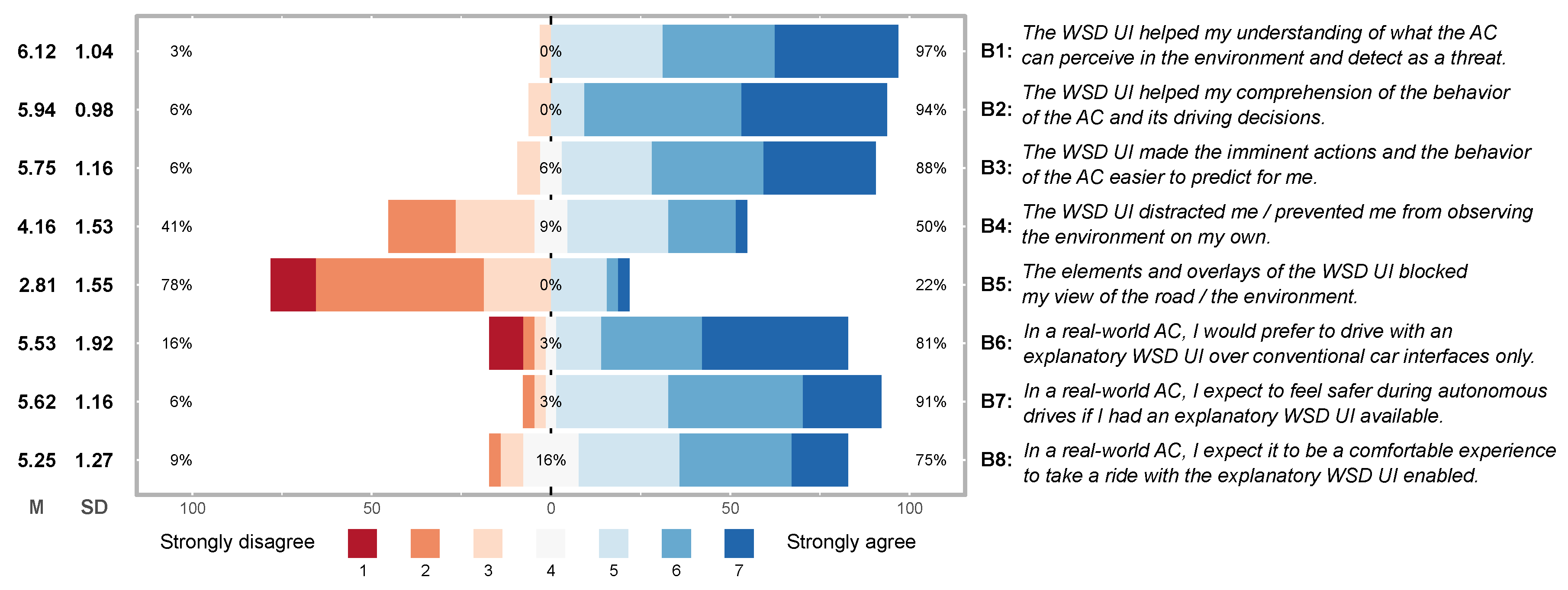

5.5. User Experience Ratings

- feeling of safety: under high (ES: medium) and under low (ES: large) visibility,

- feeling of stress: under high (ES: medium) and under low (ES: medium) visibility,

- trust in the car: under high (ES: medium) and under low (ES: large) visibility.

6. Discussion

6.1. Limitations

7. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AC | Automated Cars (here: SAE level 4–5 [21]) |
| AD | Autonomous Driving |
| AR | Augmented Reality |
| CAVE | Cave Automatic Virtual Environment |
| CTAM | Car Technology Acceptance Model |
| ERTRAC | European Road Transport Research Advisory Council |
| ES | Effect Size |
| FOV | Field of View |
| HUD | Head-Up Display |
| MIP | Mood Induction Procedure |
| MR | Mixed Reality |
| NASA-TLX | NASA Task Load Index |
| RSME | Rating Scale Mental Effort |
| SA | Situation Awareness |
| SAE | Society of Automotive Engineers |
| TAM | Technology Acceptance Model |
| TOR | Take-Over Request |
| UEQ | User Experience Questionnaire |
| UI | User Interface |
| UTAUT | Unified Theory of Acceptance and Use of Technology |
| UX | User Experience |
| V2I | Vehicle to Infrastructure |
| VIS | Visibility (here: independent variable in study) |
| WSD | Windshield Display (also used as independent variable in study) |

References

- ERTRAC Working Group Connectivity and Automated Driving. Automated Driving Roadmap. 2017. Available online: http://www.ertrac.org/uploads/images/ERTRAC_Automated_Driving_2017.pdf (accessed on 5 May 2018).

- Kyriakidis, M.; de Winter, J.C.; Stanton, N.; Bellet, T.; van Arem, B.; Brookhuis, K.; Martens, M.H.; Bengler, K.; Andersson, J.; Merat, N.; et al. A human factors perspective on automated driving. Theor. Issues Ergon. Sci. 2017, 1–27.

- Shladover, S.E. The Truth about “Self-Driving” Cars. Sci. Am. 2016, 314, 52–57.

- Smith, A.; Anderson, M. Automation in Everyday Life. 2017. Available online: http://assets.pewresearch.org/wp-content/uploads/sites/14/2017/10/03151500/PI_2017.10.04_Automation_FINAL.pdf (accessed on 5 May 2018).

- UK Autodrive. Public Attitudes Survey, Executive Summary. 2017. Available online: http://www.ukautodrive.com/wp-content/uploads/2017/08/Executive-Summary-FINAL.pdf (accessed on 5 May 2018).

- DETECON International GmbH. Autonomes Fahren: Wenn das Lenkrad zur Sonderausstattung wird. 2016. Available online: http://www.detecon.com/sites/default/files/gladbach_-_studie_autonomes_fahren_final.pdf (accessed on 5 May 2018).

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Osswald, S.; Wurhofer, D.; Trösterer, S.; Beck, E.; Tscheligi, M. Predicting Information Technology Usage in the Car: Towards a Car Technology Acceptance Model. In Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Portsmouth, NH, USA, 17–19 October 2012; ACM: New York, NY, USA, 2012; pp. 51–58.

- Endsley, M.R. Design and Evaluation for Situation Awareness Enhancement. Proc. Hum. Factors Soc. Annu. Meet. 1988, 32, 97–101.

- Kyriakidis, M.; Happee, R.; de Winter, J. Public opinion on automated driving: Results of an international questionnaire among 5000 respondents. Transp. Res. Part F Traffic Psychol. Behav. 2015, 32, 127–140.

- Bansal, P.; Kockelman, K.M.; Singh, A. Assessing public opinions of and interest in new vehicle technologies: An Austin perspective. Transp. Res. Part C Emerg. Technol. 2016, 67, 1–14.

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709–734.

- Kaur, K.; Rampersad, G. Trust in driverless cars: Investigating key factors influencing the adoption of driverless cars. J. Eng. Technol. Manag. 2018, 48, 87–96.

- Merritt, S.M.; Heimbaugh, H.; LaChapell, J.; Lee, D. I Trust It, but I Don’t Know Why: Effects of Implicit Attitudes Toward Automation on Trust in an Automated System. Hum. Factors 2013, 55, 520–534.

- Parasuraman, R.; Riley, V. Humans and Automation: Use, Misuse, Disuse, Abuse. Hum. Factors 1997, 39, 230–253.

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

- Merritt, S.M.; Lee, D.; Unnerstall, J.L.; Huber, K. Are Well-Calibrated Users Effective Users? Associations Between Calibration of Trust and Performance on an Automation-Aided Task. Hum. Factors 2015, 57, 34–47.

- Wintersberger, P.; von Sawitzky, T.; Frison, A.K.; Riener, A. Traffic Augmentation As a Means to Increase Trust in Automated Driving Systems. In Proceedings of the 12th Biannual Conference on Italian SIGCHI Chapter, Cagliari, Italy, 18–20 September 2017; ACM: New York, NY, USA, 2017; pp. 17:1–17:7.

- Lindemann, P.; Lee, T.Y.; Rigoll, G. An Explanatory Windshield Display Interface with Augmented Reality Elements for Urban Autonomous Driving. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Munich, Germany, 16–20 October 2018.

- Lindemann, P.; Lee, T.Y.; Rigoll, G. Supporting Driver Situation Awareness for Autonomous Urban Driving with an Augmented-Reality Windshield Display. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), 2018 International Workshop on Comfort Intelligence with AR for Autonomous Vehicle 2018, Munich, Germany, 16–20 October 2018.

- SAE International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. Stand. J3016 2016.

- Bazilinskyy, P.; Kyriakidis, M.; de Winter, J. An International Crowdsourcing Study into People’s Statements on Fully Automated Driving. Procedia Manuf. 2015, 3, 2534–2542.

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Endsley, M.R. Measurement of Situation Awareness in Dynamic Systems. Hum. Factors 1995, 37, 65–84.

- Endsley, M.R. Theoretical underpinnings of situation awareness: a critical review. In Situation Awareness Analysis and Measurement; Endsley, M.R., Garland, D.J., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2000.

- McKnight, D.; Chervany, N. Trust and distrust definitions: One bite at a time. In Lecture Notes in Computer Science; Springer: Berlin, Germany, 2001; Volume 2246, pp. 27–54.

- Lee, J.; Kim, N.; Imm, C.; Kim, B.; Yi, K.; Kim, J. A Question of Trust: An Ethnographic Study of Automated Cars on Real Roads. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; ACM: New York, NY, USA, 2016; pp. 201–208.

- Rogers, M.; Zhang, Y.; Kaber, D.; Liang, Y.; Gangakhedkar, S. The Effects of Visual and Cognitive Distraction on Driver Situation Awareness. In Engineering Psychology and Cognitive Ergonomics; Harris, D., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 186–195.

- Tufano, D.R. Automotive HUDs: The Overlooked Safety Issues. Hum. Factors 1997, 39, 303–311.

- De Winter, J.C.; Happee, R.; Martens, M.H.; Stanton, N.A. Effects of adaptive cruise control and highly automated driving on workload and situation awareness: A review of the empirical evidence. Transp. Res. Part F Traffic Psychol. Behav. 2014, 27, 196–217.

- Paxion, J.; Galy, E.; Berthelon, C. Mental workload and driving. Front. Psychol. 2014, 5.

- Zijlstra, F. Efficiency in Work Behavior. A Design Approach for Modern Tools. Ph.D. Thesis, Delft University of Technology, Delft, The Netherlands, 1993.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

- Pauzie, A. A method to assess the driver mental workload: The driving activity load index (DALI). IET Intell. Transp. Syst. 2008, 2, 315–322.

- Bazilinskyy, P.; de Winter, J. Auditory interfaces in automated driving: an international survey. PeerJ Comput. Sci. 2015, 1, e13.

- Bazilinskyy, P.; Petermeijer, S.; Petrovych, V.; Dodou, D.; de Winter, J. Take-over requests in highly automated driving: A crowdsourcing survey on auditory, vibrotactile, and visual displays. Transp. Res. Part F Traffic Psychol. Behav. 2018, 56, 82–98.

- Filip, G.; Meng, X.; Burnett, G.; Harvey, C. Designing and calibrating trust through situational awareness of the vehicle (SAV) feedback. In Proceedings of the IET International Conference on Intelligent and Connected Vehicles (ICV 2016), Chongqing, China, 22–23 September 2016; Institution of Engineering and Technology: Stevenage, UK, 2016; pp. 1–6.

- Dzindolet, M.T.; Peterson, S.A.; Pomranky, R.A.; Pierce, L.G.; Beck, H.P. The role of trust in automation reliance. Int. J. Hum.-Comput. Stud. 2003, 58, 697–718.

- Helldin, T.; Falkman, G.; Riveiro, M.; Davidsson, S. Presenting System Uncertainty in Automotive UIs for Supporting Trust Calibration in Autonomous Driving. In Proceedings of the 5th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Eindhoven, The Netherlands, 28–30 October 2013; ACM: New York, NY, USA, 2013; pp. 210–217.

- Johns, M.; Mok, B.; Sirkin, D.M.; Gowda, N.M.; Smith, C.A.; Talamonti, W.J., Jr.; Ju, W. Exploring Shared Control in Automated Driving. In Proceedings of the Eleventh ACM/IEEE International Conference on Human Robot Interaction, Christchurch, New Zealand, 7–10 March 2016; IEEE Press: Piscataway, NJ, USA, 2016; pp. 91–98.

- Wintersberger, P.; Riener, A. Trust in Technology as a Safety Aspect in Highly Automated Driving. i-com 2016, 15, 297–310.

- Häuslschmid, R.; von Bülow, M.; Pfleging, B.; Butz, A. Supporting Trust in Autonomous Driving. In Proceedings of the 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; ACM: New York, NY, USA, 2017; pp. 319–329.

- Lee, C.; Rincon, G.A.; Meyer, G.; Höllerer, T.; Bowman, D.A. The Effects of Visual Realism on Search Tasks in Mixed Reality Simulation. IEEE Trans. Vis. Comput. Gr. 2013, 19, 547–556.

- Ren, D.; Goldschwendt, T.; Chang, Y.; Höllerer, T. Evaluating wide-field-of-view augmented reality with mixed reality simulation. In Proceedings of the 2016 IEEE Virtual Reality (VR), Greenville, SC, USA, 19–23 March 2016; pp. 93–102.

- Cruz-Neira, C.; Sandin, D.J.; DeFanti, T.A.; Kenyon, R.V.; Hart, J.C. The CAVE: Audio Visual Experience Automatic Virtual Environment. Commun. ACM 1992, 35, 64–72.

- Gabbard, J.L.; Fitch, G.M.; Kim, H. Behind the Glass: Driver Challenges and Opportunities for AR Automotive Applications. Proc. IEEE 2014, 102, 124–136.

- Häuslschmid, R.; Shou, Y.; O’Donovan, J.; Burnett, G.; Butz, A. First Steps Towards a View Management Concept for Large-sized Head-up Displays with Continuous Depth. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; ACM: New York, NY, USA, 2016; pp. 1–8.

- Diels, C.; Bos, J.E. User Interface Considerations to Prevent Self-driving Carsickness. In Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Nottingham, UK, 1–3 September 2015; ACM: New York, NY, USA, 2015; pp. 14–19.

- Lee, J.; McGehee, D.; Brown, T.; Nakamoto, J. Driver sensitivity to brake pulse duration and magnitude. Ergonomics 2007, 50, 828–836.

- Endsley, M.R. Direct measurement of situation awareness: Validity and use of SAGAT. Situat. Aware. Anal. Meas. 2000, 10, 147–173.

- Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. In HCI and Usability for Education and Work; Holzinger, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988.

- Rosenthal, J.A. Qualitative Descriptors of Strength of Association and Effect Size. J. Soc. Serv. Res. 1996, 21, 37–59.

- Kučera, D.; Haviger, J. Using Mood Induction Procedures in Psychological Research. Procedia-Soc. Behav. Sci. 2012, 69, 31–40.

- Jeon, M.; Yim, J.B.; Walker, B.N. An Angry Driver is Not the Same As a Fearful Driver: Effects of Specific Negative Emotions on Risk Perception, Driving Performance, and Workload. In Proceedings of the 3rd International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Salzburg, Austria, 30 November–2 December 2011; ACM: New York, NY, USA, 2011; pp. 137–142.

| Visual Example | AR Element Description |

|---|---|

| (a) Threat and warning markers |

| Colored object outlines warning drivers of potential hazards on or beside the road. Warnings for possible threats are given as yellow markers. Direct threats in the vehicle’s path are marked in red. In case of an imminent threat, other display elements are temporarily hidden to guide attention to the position of the hazard. | |

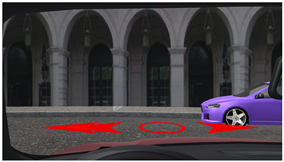

| (b) Oncoming traffic indicators |

| Superimposed on the road when the automated car approaches an intersection. Red arrows indicate from where oncoming traffic is detected. Their current distance is roughly indicated by the amount of arrowheads (up to 3). It allows drivers to notice approaching cars without leaning or head-turning. | |

| (c) Brake and stopping bar |

| A stopping bar is superimposed on the road whenever it comes to a situation in which the vehicle must brake hard due to unforeseen circumstances. It indicates where the car is expected to stop completely. It may reassure drivers by giving a point of reference for safe stopping but could also facilitate grasping the cause of the situation. | |

| (d) Moving object markers |

| Rotating cubic markers are overlayed above moving objects when relevant to driving decisions. For example, dangerous drivers ignoring the right of way may be highlighted by a red cube. Yellow is used for unusual behavior (e.g., in picture (c) above). A car classified as safe (e.g., yielding) might be marked in green to reassure the driver. | |

| (e) Road sign overlay |

| Yield, priority or stop road signs in front of intersections are superimposed on a large scale onto the road surface. For the yield/stop signs, the position of the overlay also marks where the car may need to stop. Priority signs might reassure drivers in cases where the automated car is not waiting to yield. |
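The color rule for the threat and warning markers in (a) can be illustrated with a short sketch. The names `ThreatLevel` and `threat_marker` are hypothetical, and the sketch assumes that an imminent threat is also drawn in red, which the description does not state explicitly.

```python
from enum import Enum


class ThreatLevel(Enum):
    POSSIBLE = 1   # potential hazard on or beside the road
    DIRECT = 2     # threat in the vehicle's path
    IMMINENT = 3   # threat requiring the driver's immediate attention


def threat_marker(level: ThreatLevel) -> dict:
    """Map a threat level to marker color and display behavior."""
    # Possible threats are yellow; direct threats are red.
    # Assumption: imminent threats reuse the red marker.
    color = "yellow" if level is ThreatLevel.POSSIBLE else "red"
    # Other display elements are hidden only for imminent threats.
    hide_other_elements = level is ThreatLevel.IMMINENT
    return {"color": color, "hide_other_elements": hide_other_elements}
```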

| Basic Situation Description | Properties and Variations |
|---|---|
| Cross-traffic in intersections | Speed violations by other cars, obstructed view |
| Oncoming traffic (intersections & straights) | Dangerous overtaking by other cars, obstructed view |
| Rule-ignoring traffic | Cars ignoring priority or traffic lights |
| Standing traffic hazards | Car pulling out of parking spot, obstructed pedestrians |
| Unplanned detours | Construction site, accident site |
| Imminent head-on collision | Crossing children or dogs, thrown balls |
| Forced braking | Unusual preceding car behavior, obstacles |
| Debris on road | Fallen road signs, balls, car parts |

| Fixed factor | Compared levels | Mean (SD), first level | Mean (SD), second level | p | Z | g* | ES |
|---|---|---|---|---|---|---|---|
| VIS High | WSD On vs. Off | 0.70 (0.17) | 0.50 (0.17) | <0.001 | 3.53 | 0.74 | Medium |
| VIS Low | WSD On vs. Off | 0.65 (0.16) | 0.39 (0.14) | <0.001 | 4.55 | 1.13 | Large |
| WSD On | VIS High vs. Low | 0.70 (0.17) | 0.65 (0.16) | 0.172 | 1.37 | 0.21 | Small |
| WSD Off | VIS High vs. Low | 0.50 (0.17) | 0.39 (0.14) | 0.022 | 2.29 | 0.43 | Small |

| n = 32 | VIS | Mean (SD), WSD On | Mean (SD), WSD Off | p | Z | g | ES |
|---|---|---|---|---|---|---|---|
| Perception | High | 5.25 (1.16) | 4.16 (1.39) | 0.001 | 3.23 | 0.54 | Medium |
| Perception | Low | 4.75 (1.08) | 3.25 (1.44) | <0.001 | 4.73 | 1.24 | Large |
| Comprehension | High | 5.53 (1.16) | 4.50 (1.59) | 0.007 | 2.69 | 0.49 | Small |
| Comprehension | Low | 5.56 (1.24) | 3.78 (1.52) | <0.001 | 4.40 | 1.10 | Large |
| Projection | High | 4.97 (1.23) | 4.03 (1.51) | 0.008 | 2.63 | 0.48 | Small |
| Projection | Low | 5.06 (1.13) | 3.25 (1.46) | <0.001 | 4.22 | 1.03 | Large |
| Total SA (1–19) | High | 13.75 (2.99) | 10.69 (3.87) | 0.002 | 3.12 | 0.59 | Medium |
| Total SA (1–19) | Low | 13.38 (2.80) | 8.28 (3.64) | <0.001 | 4.89 | 1.49 | Very Large |

| n = 32 | WSD | Compared VIS levels | p | Z | g | ES |
|---|---|---|---|---|---|---|
| Perception | On | High vs. Low | 0.022 | 2.29 | 0.38 | Small |
| Perception | Off | High vs. Low | 0.004 | 2.86 | 0.53 | Medium |
| Comprehension | On | High vs. Low | 0.845 | 0.20 | 0.02 | Trivial |
| Comprehension | Off | High vs. Low | 0.016 | 2.41 | 0.46 | Small |
| Projection | On | High vs. Low | 0.521 | 0.64 | 0.08 | Trivial |
| Projection | Off | High vs. Low | 0.006 | 2.74 | 0.53 | Medium |
| Total SA (1–19) | On | High vs. Low | 0.390 | 0.86 | 0.13 | Trivial |
| Total SA (1–19) | Off | High vs. Low | 0.002 | 3.04 | 0.61 | Medium |
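The verbal ES labels reported with the g values are consistent with cut-offs of 0.2, 0.5, 0.8 and 1.3 on the absolute effect size. The sketch below encodes that mapping. The thresholds are inferred from the reported values and are an assumption, not a statement of the authors' exact procedure; `effect_size_label` is a hypothetical helper name.

```python
def effect_size_label(g: float) -> str:
    """Map an effect size g to a qualitative label.

    Thresholds (0.2, 0.5, 0.8, 1.3) are assumptions inferred from
    the labels reported alongside the g values in the tables.
    """
    magnitude = abs(g)
    if magnitude < 0.2:
        return "Trivial"
    if magnitude < 0.5:
        return "Small"
    if magnitude < 0.8:
        return "Medium"
    if magnitude < 1.3:
        return "Large"
    return "Very Large"
```

Applied to the reported values, `effect_size_label(0.49)` gives "Small", `effect_size_label(0.54)` gives "Medium" and `effect_size_label(1.49)` gives "Very Large", matching the tabulated labels.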

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lindemann, P.; Lee, T.-Y.; Rigoll, G. Catch My Drift: Elevating Situation Awareness for Highly Automated Driving with an Explanatory Windshield Display User Interface. Multimodal Technol. Interact. 2018, 2, 71. https://doi.org/10.3390/mti2040071