1. Introduction

As people interact daily with their surroundings, environmental settings can affect their psychological states through both active and passive environmental perception [1]. Urban populations have seen a rise in the prevalence of mental health disorders [2]. Among other factors, this is believed to be linked to the perceived disconnection from nature in the expanding built environment [3]. Humans have an innate desire to seek out natural stimuli that evoke an immediate, active, affective response in our brains and neuroendocrine systems [4]. The presence of these natural elements in and around the environments we live in is therefore associated with better mental wellbeing [5,6,7]. An abundance of research has indicated that one major component of nature, greenspace, lowers the risk of psychosocial and psychological stress [2,8,9] as well as stress-related disorders like depression and anxiety [10,11,12,13]. Urban greenspace has also been shown to contribute to residents’ happiness [14]. Greater integration of natural elements such as greenspace into our growing urban environment therefore offers a promising way to promote mental health for urban dwellers worldwide [15,16].

Much of the previous research on urban environmental perception and mental health has used aerial imagery to quantify environmental properties. For example, the Normalized Difference Vegetation Index (NDVI), derived from aerial imagery, has been widely used to quantify environmental properties pertaining to greenspace [17,18,19,20,21,22,23,24]. The spatial resolution of the aerial imagery used ranges from 0.5 m to 30 m [17,18,21,24,25,26]. A resolution of 30 m has been found effective in categorizing greenness on a national scale when studying its impact on depression and anxiety [18]. With a fine resolution of 0.5 m, micro-scale studies have been conducted to investigate the dynamic environmental settings pertaining to individuals’ environmental perceptions and psychological states [27,28]. When correlating the attributes of mental wellbeing with the prevalence of greenspace, these studies used buffer zones around either postal code addresses or individuals’ whereabouts [21,27,28,29]. Most studies identified positive associations between the amount of greenspace and the presence of attributes of positive mental wellbeing, regardless of the choice of buffer size [21,28].
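
As a point of reference for the index discussed above, NDVI is conventionally computed per pixel as (NIR − Red) / (NIR + Red). The following is a minimal sketch only; the function names and the small reflectance patch are hypothetical and are not taken from any of the cited studies.

```python
def ndvi(nir, red):
    """Return NDVI = (NIR - Red) / (NIR + Red) for one pixel (0.0 if both bands are zero)."""
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


def mean_ndvi(nir_band, red_band):
    """Average per-pixel NDVI over two equally shaped 2-D lists of reflectance values."""
    values = [
        ndvi(n, r)
        for nir_row, red_row in zip(nir_band, red_band)
        for n, r in zip(nir_row, red_row)
    ]
    return sum(values) / len(values)


# Hypothetical 2 x 2 patch: the top row is vegetated, the bottom row is not.
nir_patch = [[0.6, 0.5], [0.3, 0.2]]
red_patch = [[0.2, 0.1], [0.3, 0.2]]
patch_ndvi = mean_ndvi(nir_patch, red_patch)
```

Averaging over a patch in this way illustrates why a single NDVI value cannot distinguish, for example, trees from lawn: both raise the same number.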

There has also been an increasing trend in recent years toward using street view imagery to measure the level of greenery at eye level. A variety of street view sources, including Google Street View (GSV), Tencent Online Map (TOM), and Baidu Maps (BM) [17,19,22,23,24,26], have been used to derive environmental elements through image segmentation. Studies have commonly calculated a Green View Index (GVI), Panoramic View Green Index (PVGI), or Blue View Index (BVI), or a combination of these. The environmental elements segmented from street view images vary but often include vegetation, grass, trees, water, buildings, roads, and sky [30,31,32,33], from which the indices are calculated. Studies have shown that individuals’ emotional states lean more positively toward a street landscape when the GVI is above a certain threshold [19,30,32,33,34]. The level of greenery derived from street view images, however, has been found to be affected by factors such as image segmentation methods and data collection seasons [31,32]. Results relating to the impact of identifiable greenspace on individuals’ mental wellbeing were also inconsistent. Some studies identified a strong positive correlation between high GVI and BVI values and good mental wellbeing [17,23,24,25]. Other studies only found correlations between high GVI and BVI values and a lack of negative effects [18,19,20,22,35].
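
To make the view indices concrete, a GVI or BVI can be thought of as the share of pixels in a segmented street view image that carry a green or blue label. The sketch below assumes a label mask stored as a list of rows of class names; the label names, the grouping of classes into "green" and "blue", and the function name are illustrative assumptions, and individual studies define these indices in different ways.

```python
def view_index(label_mask, target_labels):
    """Fraction of pixels in a segmentation mask whose label is in target_labels."""
    total = sum(len(row) for row in label_mask)
    hits = sum(1 for row in label_mask for label in row if label in target_labels)
    return hits / total if total else 0.0


# Hypothetical 3 x 4 segmentation result for one street view image.
mask = [
    ["sky", "sky", "tree", "tree"],
    ["building", "tree", "tree", "grass"],
    ["road", "road", "water", "grass"],
]

gvi = view_index(mask, {"tree", "grass", "vegetation"})  # green share of the view
bvi = view_index(mask, {"water"})                        # blue share of the view
```

The same function can report a single element (for example, only `"tree"`), which is the kind of element-level measure that a compound index hides.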

Several studies have used both aerial and street view imagery to identify environmental elements related to mental wellbeing. For example, one study derived NDVI from aerial imagery within a 1 km buffer of the centroid of the dissemination area, while deriving GVI from GSV to measure the active living environment of Ottawa [22]. The study evaluated the two sets of environmental properties separately. It found that NDVI was not associated with residents’ participation in recreational activities, while GVI was positively associated with such participation during the summer. Another study combined NDVI derived from aerial imagery and GVI derived from Baidu Maps on several Chinese university campuses [24] to study the correlation between greenspace exposure and mental health. Its results demonstrate a negative correlation between greenspace exposure on campuses and the level of mental health issues among university students. A third study derived NDVI from aerial imagery within a 50 m buffer of the GSV latitude/longitude coordinates, while deriving GVI from GSV to create a GVI/NDVI ratio to capture the vertical dimension of greenspace [23]. This study found that using both NDVI and GVI captures more characteristics of the street view greenspace environment than assessments based on either measure alone [23].

There is consistent agreement that aerial and street view imagery capture different aspects of the urban environment’s properties [18,22,23,24] and that a combination of both may offer a more comprehensive characterization of humans’ perception of the environment [23]. Such a combination, however, is challenged by the inconsistent correlations between environmental properties derived from aerial and street view images across studies. For example, there are conflicting results from studies in Amsterdam [18], Beijing [36], and Singapore [37] when correlating the GVIs derived from aerial and street view images. The buffer sizes applied to aerial images have also been found to play inconsistent roles in these studies [18].

At the same time, studies examining the associations between mental health and environmental perception continue to grow rapidly, and the most popular approach is still to use either aerial or street view images. Among the most recent studies, aerial images have been used either to capture people’s dynamic activity space at the micro-scale [28] or to catalog urban greenspace at a large scale to develop a comprehensive typology representing various environmental settings [38,39]. Street view images, on the other hand, have been used to capture residents’ perceived environments at sampling locations where such images are available in various cities around the world [40,41,42,43]. In other words, aerial images have the advantage of capturing environments without fixed viewing points and at a large scale, while street view images characterize environments only at sampled locations because they cannot cover the entire study area.

In summary, there has been an abundance of studies examining the relationships between urban environmental perception and people’s psychological wellbeing, and valuable findings have been made both empirically and theoretically. Imagery, both aerial and street view, has been used as essential data for quantifying environmental properties. We recognize several common issues when reviewing these previous studies. First, the prevalent use of NDVI or GVI compounds all forms of greenspace into a single number that does not account for the specific environmental elements that act as visual stimuli triggering psychological responses [18,19,44]. According to prevalent theories on how the environment affects our psychological responses [4,45,46], it is the specific visual stimuli in the environment, and not the overall greenness, that either have a restorative effect or trigger active affect and function in the pathway to stress reduction, promoting happiness and positive mental health. It is thus necessary to capture the specific visual elements in environments when examining their relation to mental health. Second, most studies have focused only on greenspace and the natural elements in the environment. There is a shortage of studies examining the impact of negative environmental stimuli and artificial environmental elements such as buildings and cars. Third, when using aerial imagery to derive environmental properties, there is still the question of what buffer size provides the most information on how an individual is affected by their environment [22,29,30,47]. Lastly, as mentioned above, there has been an increasing use of street view images in recent years, as they capture environmental properties at eye level and are thus believed to directly reflect people’s perceived environment. This gives them an advantage over aerial images, which do not directly reflect how humans perceive their surrounding environment. The disadvantage, however, is that street view images do not fully cover the landscape and can only provide sample-based measures of people’s perceived environment. This limits their use both in micro-scale studies, where humans’ activity space is dynamic and continuous over space, and in large-scale studies, where full coverage of a city or country is desired. It is thus worth combining the two types of images in future studies on environmental perception. However, research is still scarce, and results conflict with regard to how the environmental properties derived from aerial and street view images correspond and contrast with each other.

The current study was designed to address the above issues and provide further insights into how the two ways of deriving environmental properties can supplement each other in capturing not only objective environmental attributes but also those relevant to humans’ subjective perceptions. We compared the environmental properties derived from aerial and street view images at two study sites. Two different image segmentation approaches were implemented for processing street view images, and different buffer sizes were employed for processing high-resolution aerial imagery. Our goal was to examine not only the overall greenness level but also specific environmental stimuli, both positive and negative, in people’s immediate settings with both aerial and street view imagery, and to determine potential correspondences. Rather than using compound indices such as NDVI and GVI, we identified individual elements of both the natural and built environments. Our specific hypotheses were as follows: (1) The amount of greenness and individual environmental elements derived from street view versus aerial images may be quite different at the same locations. (2) For some environmental elements, the two might be in greater concordance than for others. (3) The correspondence might be better in some environmental settings than in others. (4) There may exist a buffer size with which the two are more in agreement and thus both could be used together, either to derive composite indices or as compensating factors.

The results can be useful in future studies for a more accurate and comprehensive account of our perceived environment using available data sources in the forms of both aerial and street view images. This can benefit diverse fields, including public and private urban planning for environmental designs promoting psychological wellbeing, integrative therapeutic landscape architecture, as well as recreational environmental and tourism development.

2. Materials and Methods

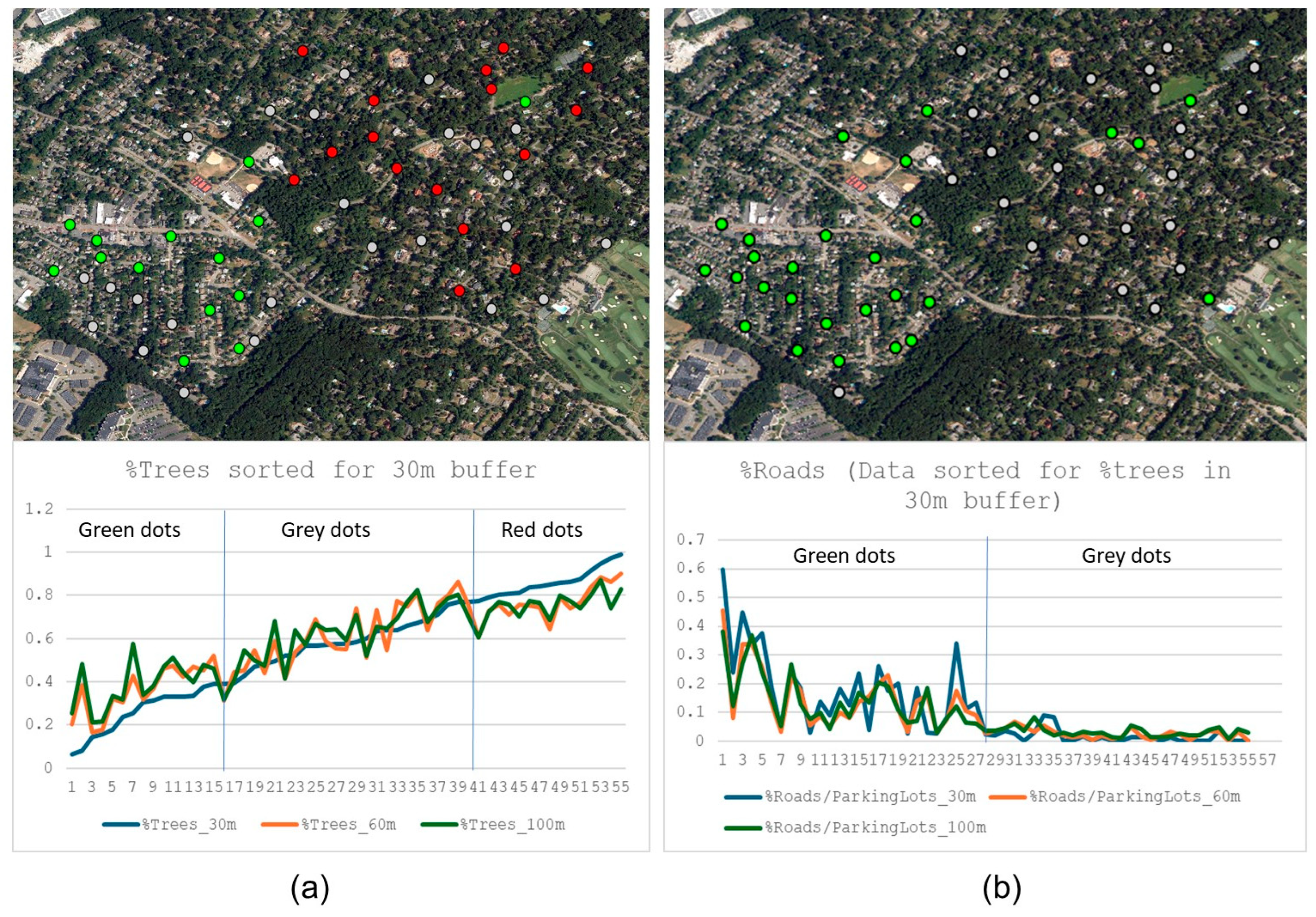

To derive environmental properties from aerial and street view images representing different environmental settings, we selected two study sites in the state of New Jersey in the United States. Both sites are three square kilometers in size. Study site 1 is located in a town with an average household income similar to the median household income of the state of New Jersey. It contains mostly residential neighborhoods. The northeast portion is marked by widely spaced, higher-value homes with abundant greenness in the environment. The southwest portion has clustered lower-value homes with notably less greenness. Study site 2 has a similar average household income but greater diversity in its environment. The west side of this site is a nature reserve where forest is the main land cover. The east side, in contrast, is a busy downtown district with dense development. Residential neighborhoods lie in between, with moderate housing density and sporadic greenness.

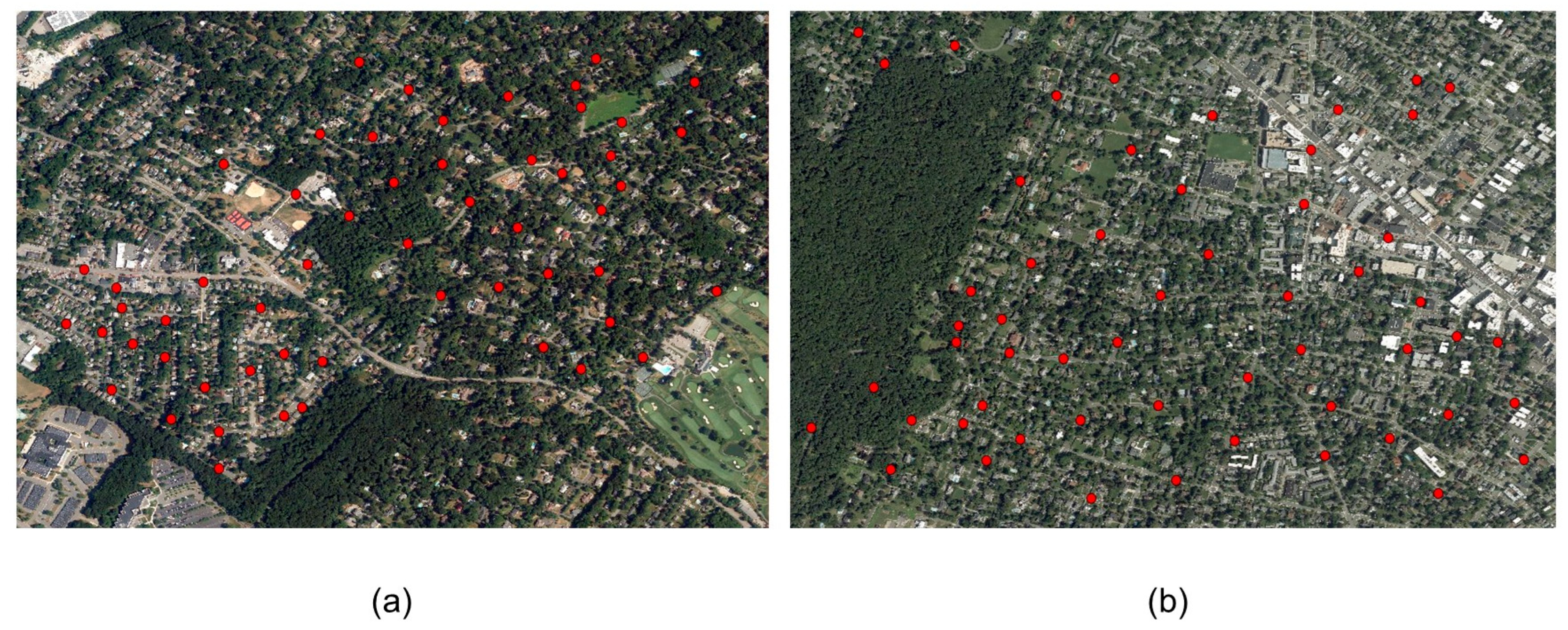

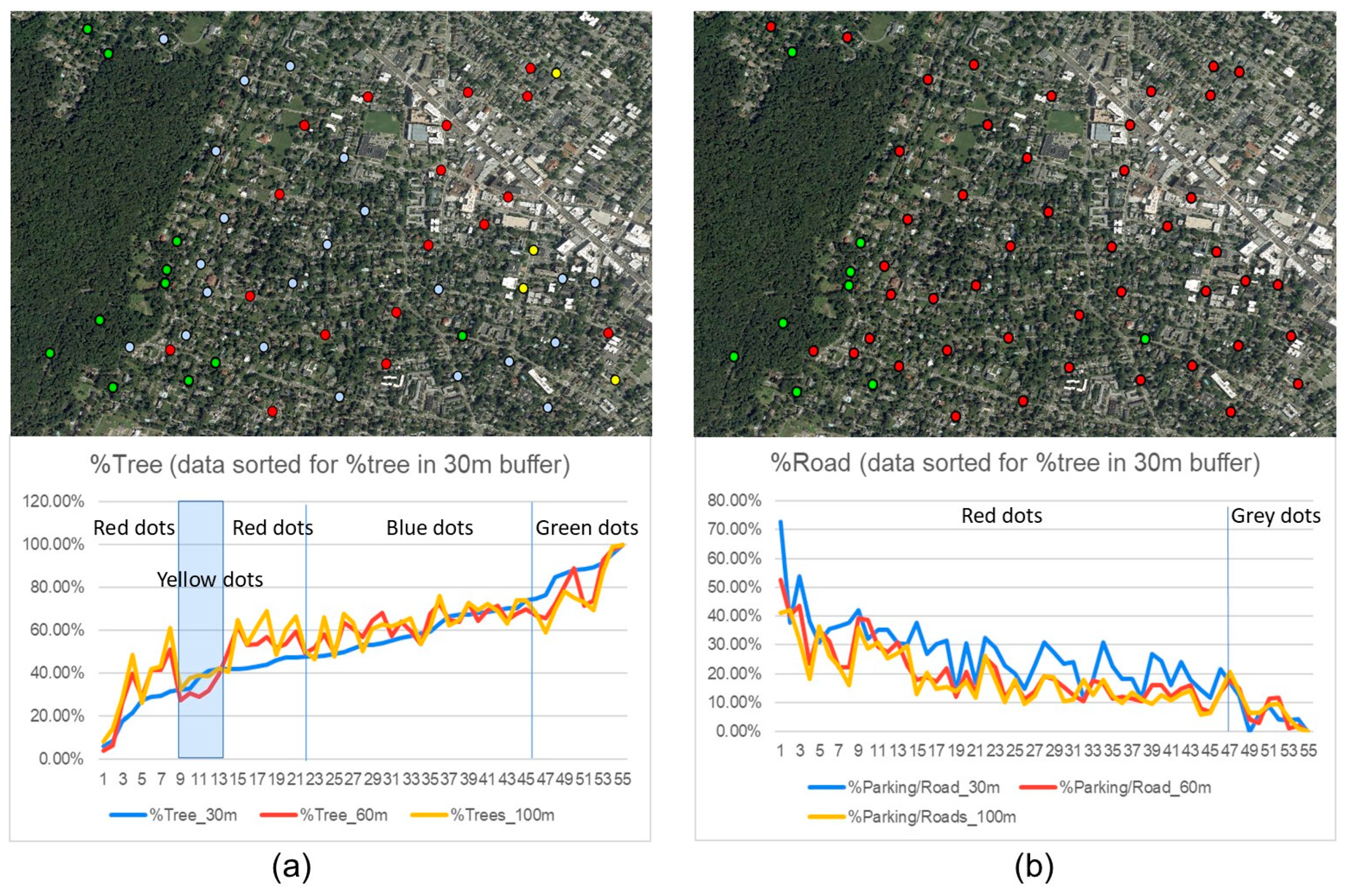

Figure 1a shows the aerial imagery of site 1, and Figure 1b that of site 2.

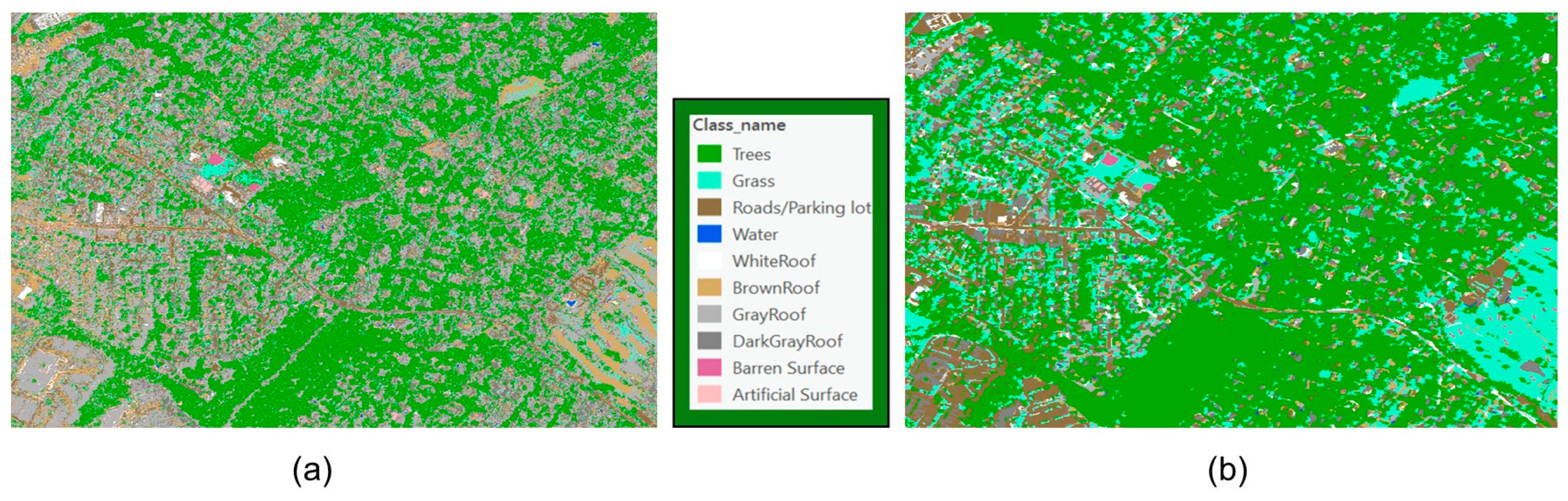

Aerial imagery with a 30 cm spatial resolution was obtained from the USA NAIP multispectral digital orthophoto database for both study sites. Supervised classification was used, following a previous fine-scale study [27], to identify a set of environmental elements comparable to those identified from street view images. Both pixel-based and object-based image classification were tested using ArcGIS Pro 3.0. Training samples were digitized as polygons to capture varied instances of the major environmental elements present in the two study sites, including trees, grass/lawn, roads, rooftops, barren land, water, and artificial surfaces. Rooftops had subclasses for different colors. The Maximum Likelihood Classification (MLC) method was first used, following previous studies [27,28]. The Support Vector Machine (SVM) classifier built into ArcGIS Pro 3.0 was also tested. While MLC assumes normally distributed class signatures and assigns each pixel to the class with the highest probability, SVM is a non-parametric classifier that seeks an optimal separating hyperplane maximizing the margin between classes in an N-dimensional space, making it more robust to non-normal distributions and complex datasets. A range of settings for spectral detail, spatial detail, and minimum segment size was also tested. The resulting maps were visually inspected in detail to choose, for each site, an optimal classified map that required only minor manual re-classification.
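
The idea behind maximum likelihood classification can be illustrated with a small, self-contained sketch: fit a Gaussian to the training pixels of each class, then assign a new pixel to the class under which it is most likely. This is not the ArcGIS Pro implementation. For brevity it assumes independent bands (a diagonal covariance), whereas standard MLC uses a full covariance matrix per class, and the training values and class names below are hypothetical.

```python
import math


def fit_gaussians(samples):
    """Map each class name to per-band (means, variances) estimated from its training pixels."""
    stats = {}
    for name, pixels in samples.items():
        n = len(pixels)
        bands = list(zip(*pixels))
        means = [sum(band) / n for band in bands]
        # A small floor keeps the variance strictly positive.
        variances = [
            max(sum((v - m) ** 2 for v in band) / n, 1e-6)
            for band, m in zip(bands, means)
        ]
        stats[name] = (means, variances)
    return stats


def log_likelihood(pixel, means, variances):
    """Log of the Gaussian density of a pixel, assuming independent bands."""
    return sum(
        -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        for v, m, var in zip(pixel, means, variances)
    )


def classify(pixel, stats):
    """Return the class whose fitted Gaussian gives the pixel the highest likelihood."""
    return max(stats, key=lambda name: log_likelihood(pixel, *stats[name]))


# Hypothetical (red, green, near-infrared) training pixels for two classes.
training = {
    "trees": [(40, 90, 200), (45, 95, 210), (38, 85, 190)],
    "roads": [(120, 120, 60), (130, 125, 70), (125, 118, 65)],
}
stats = fit_gaussians(training)
label = classify((42, 92, 205), stats)
```

The reliance on a fitted normal distribution is what makes this approach sensitive to classes with multimodal signatures, such as rooftops of different colors.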

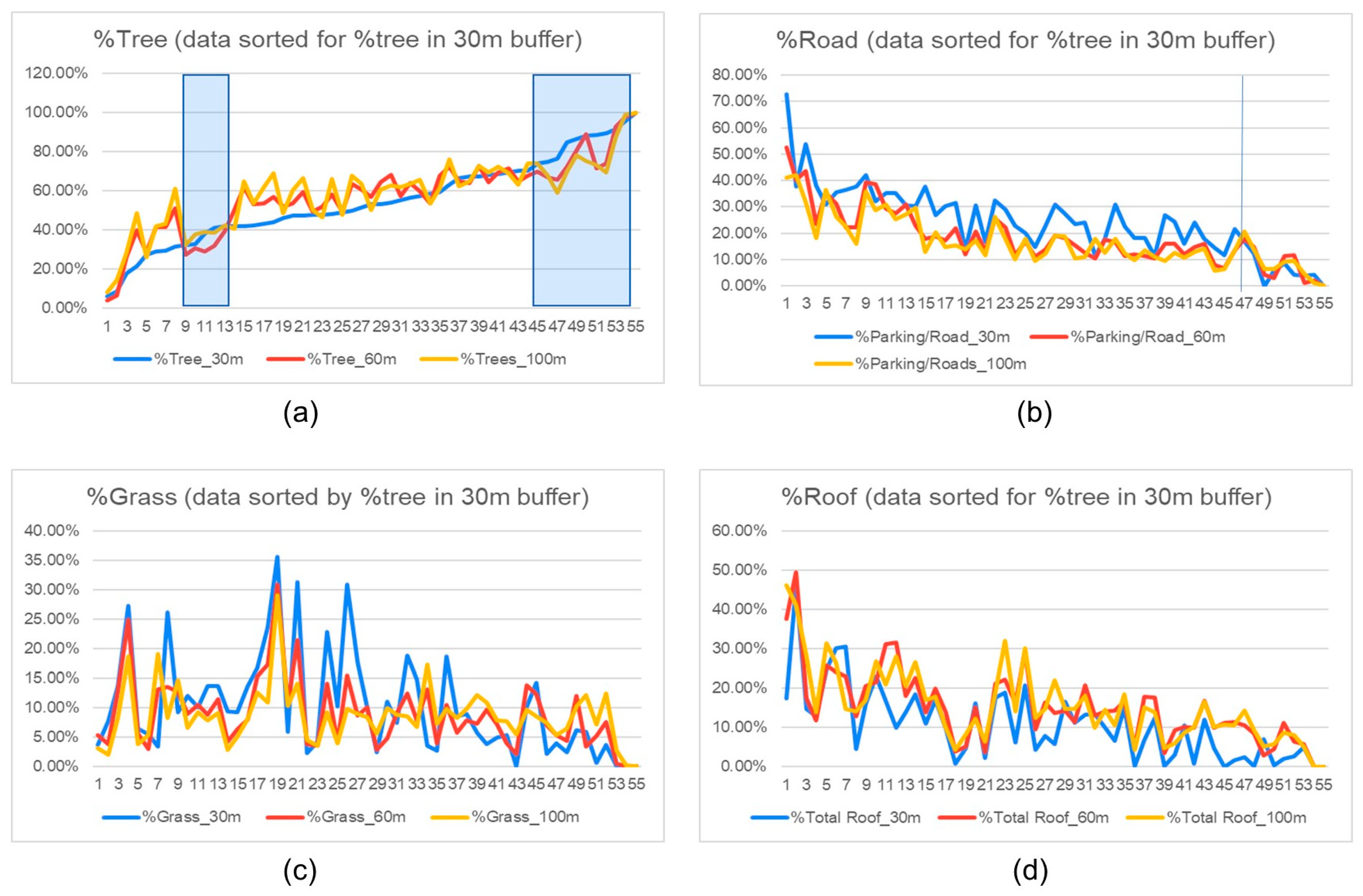

After manual re-classification, the final classified images were used to derive environmental properties within fixed-distance buffers around the locations where GSV images were randomly sampled in the two study sites. Buffer sizes of 30 m, 60 m, and 100 m were tested. The 30 m buffer was chosen based on previous fine-scale studies [27,28] of the effect of environmental properties on humans’ psychological states. The 100 m buffer was chosen because a variety of previous studies commonly used buffer sizes of 100 m or more [18,19,20,21,22,25,29,35]. The 60 m buffer, double the 30 m size, lies between the two. The percentage of the buffer area covered by each of the major environmental elements was calculated following the previous fine-scale studies [27,28].
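
The buffer calculation described above can be sketched on a classified raster as follows. This is an illustrative simplification, not the GIS workflow used in the study: it assumes a raster stored as a list of rows of class names, counts a cell when its centre lies within the buffer radius, and uses a hypothetical 10 m cell size so that the example stays small.

```python
import math
from collections import Counter


def buffer_percentages(class_raster, center_row, center_col, radius_m, cell_size_m):
    """Percent of each class among cells whose centres lie within radius_m of the centre cell."""
    counts = Counter()
    reach = int(math.ceil(radius_m / cell_size_m))
    row_start = max(0, center_row - reach)
    row_stop = min(len(class_raster), center_row + reach + 1)
    for row in range(row_start, row_stop):
        col_start = max(0, center_col - reach)
        col_stop = min(len(class_raster[row]), center_col + reach + 1)
        for col in range(col_start, col_stop):
            distance = math.hypot(row - center_row, col - center_col) * cell_size_m
            if distance <= radius_m:
                counts[class_raster[row][col]] += 1
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


# Hypothetical 5 x 5 classified raster with 10 m cells.
raster = [
    ["tree", "tree", "tree", "road", "roof"],
    ["tree", "tree", "tree", "road", "roof"],
    ["grass", "grass", "tree", "road", "roof"],
    ["grass", "grass", "grass", "road", "roof"],
    ["grass", "grass", "grass", "road", "roof"],
]
shares = buffer_percentages(raster, center_row=2, center_col=2, radius_m=10, cell_size_m=10)
```

Calling the function again with a larger `radius_m` shows how the class shares around the same point shift as the buffer grows, which is the effect the three buffer sizes are meant to probe.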

A total of 110 locations were randomly sampled from the two study sites (55 in each) and street view panoramas were downloaded from the GSV repository.

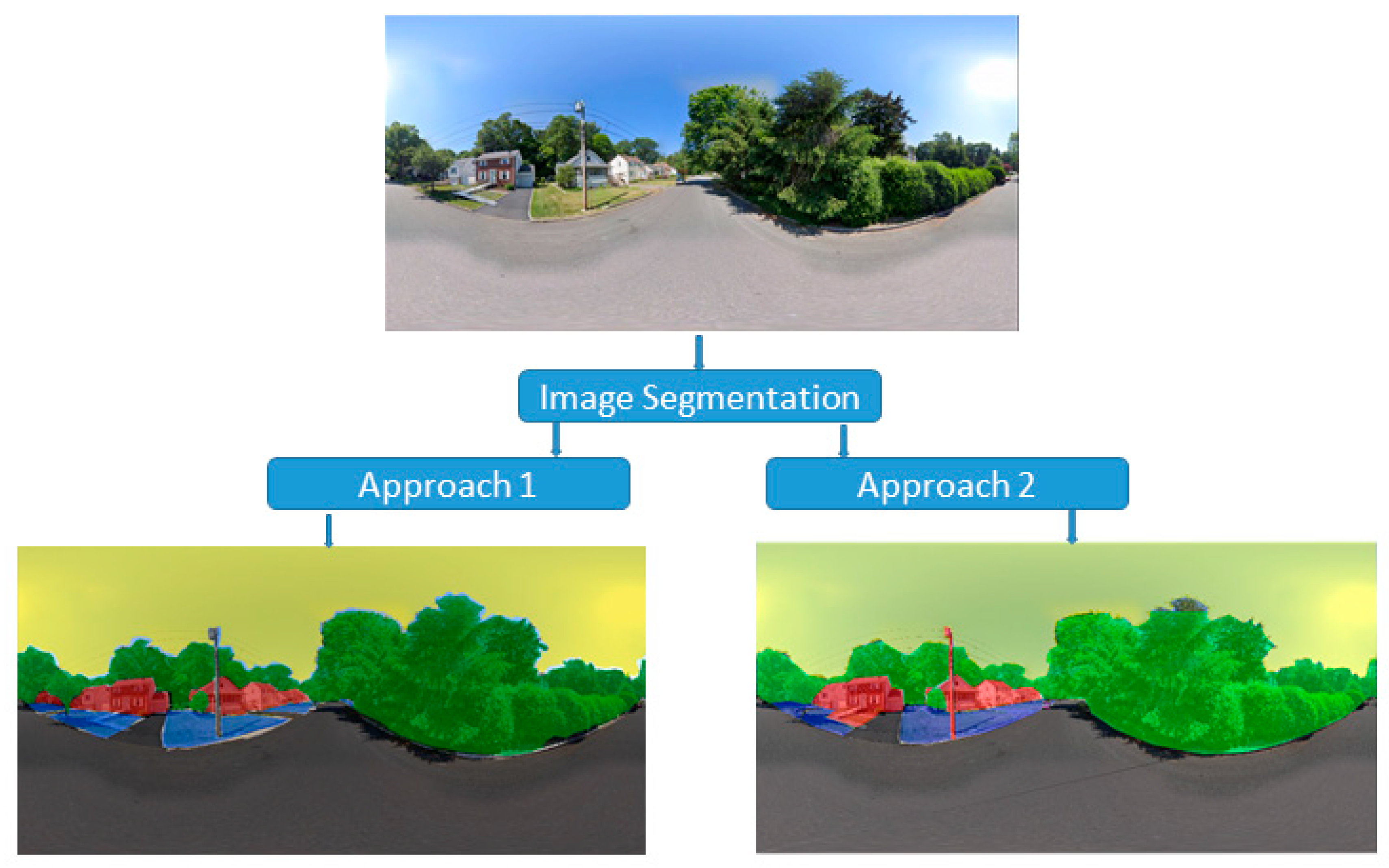

Figure 1 also shows the GSV locations. Two image segmentation approaches were employed to identify the specific environmental elements corresponding to those classified from aerial images. The first approach used a pre-trained DeepLabV3 model [48] with the ResNet-101 backbone [49]. DeepLab is a deep learning-based semantic segmentation model that uses atrous convolutions to capture multi-scale contextual information without greatly reducing spatial resolution. DeepLabV3 also uses an improved Atrous Spatial Pyramid Pooling (ASPP) module to consider objects at different scales and segment them with much improved accuracy. Equation (1) describes the atrous convolution algorithm [48]. With two-dimensional signals, for each location i on the output y and a filter w, atrous convolution is applied over the input feature map x as

$$y[i] = \sum_{k} x[i + r \cdot k]\, w[k] \tag{1}$$

where the atrous rate r corresponds to the stride with which the input signal is sampled, equivalent to convolving the input x with up-sampled filters produced by inserting r − 1 zeros between two consecutive filter values along each spatial dimension.
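
The atrous convolution described above, y[i] = Σ_k x[i + r·k] w[k], can be illustrated with a short sketch. For readability it works on a one-dimensional signal rather than a two-dimensional feature map, and it only evaluates positions where the dilated filter fits entirely inside the input; both are simplifying assumptions, and the function names are hypothetical.

```python
def atrous_conv1d(x, w, rate):
    """Compute y[i] = sum_k x[i + rate*k] * w[k] wherever the dilated filter fits in x."""
    span = rate * (len(w) - 1)
    return [
        sum(x[i + rate * k] * w[k] for k in range(len(w)))
        for i in range(len(x) - span)
    ]


def upsample_filter(w, rate):
    """Insert rate - 1 zeros between consecutive filter values."""
    out = []
    for k, value in enumerate(w):
        out.append(value)
        if k < len(w) - 1:
            out.extend([0] * (rate - 1))
    return out


signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 0, -1]

# Rate 2 samples the input at every second position under the filter.
dilated = atrous_conv1d(signal, kernel, rate=2)

# The same result comes from an ordinary (rate 1) pass with the zero-padded filter.
equivalent = atrous_conv1d(signal, upsample_filter(kernel, 2), rate=1)
```

The two results match, which is the equivalence with up-sampled filters stated in the text: the receptive field widens without adding filter weights.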

The ResNet-101 backbone adopts a 101-layer deep convolutional neural network (CNN) known for its strong feature extraction capabilities by using “skip connections” to overcome the vanishing gradient problem [49]. The Microsoft COCO dataset [50] was used for training the model. It is a large-scale image dataset used for training and benchmarking computer vision models for object detection, segmentation, and captioning tasks. It comprises over 330,000 images containing about 1.5 million object instances across 80 common object categories. The dataset includes detailed annotations for object categories, bounding boxes, pixel-level segmentation masks, and multiple captions per image, making it a widely used dataset for large-scale object detection and segmentation applications.

The second approach used the PaddleSeg image segmentation toolkit version 2.9 [51] with the PP-LiteSeg model for semantic segmentation [52]. PaddleSeg is a high-efficiency, open-source toolkit for image segmentation based on Baidu’s PaddlePaddle deep learning framework [51]. It supports a wide range of segmentation capabilities, including semantic segmentation. The toolkit has a modular structure, supporting various mainstream segmentation network architectures, among which PP-LiteSeg is a convolutional neural network incorporating a lightweight encoder–decoder structure in order to optimize both speed and accuracy. It does not rely on a specific pre-trained backbone. The key modules in PP-LiteSeg, namely the Flexible and Lightweight Decoder (FLD), the Short-Term Dense Concatenate (STDC) encoder, the Unified Attention Fusion Module (UAFM), and the Simple Pyramid Pooling Module (SPPM), are designed to enhance segmentation performance while reducing computational cost. These modules make the model more efficient than traditional deep CNNs such as ResNet. We used the Cityscapes [53] and Mapillary Vistas [54] datasets as training data to segment environmental elements. The Cityscapes dataset contains 5000 images with fine annotations and 20,000 more images with coarse annotations from fifty cities, primarily in Germany. The Mapillary Vistas dataset contains 25,000 manually annotated images from around the world, featuring diverse conditions and geographic locations. Both are widely used for street scenes, the former particularly for urban street scenes.

5. Conclusions

As studies continue to investigate the relationships between environmental settings and humans’ mental wellbeing using aerial and/or street view images, it is necessary to examine how the environmental properties derived from these two types of images correlate with each other, so that they can be used as compensating factors or combined into composite indices. Our hypotheses were as follows: (1) The amount of greenness and individual environmental elements derived from the two types of images may be different. (2) Some environmental elements might show greater concordance between the two than others. (3) The agreement might be greater in some environmental settings. (4) There may exist a buffer size with which the two are in closer agreement and thus both could be used together.

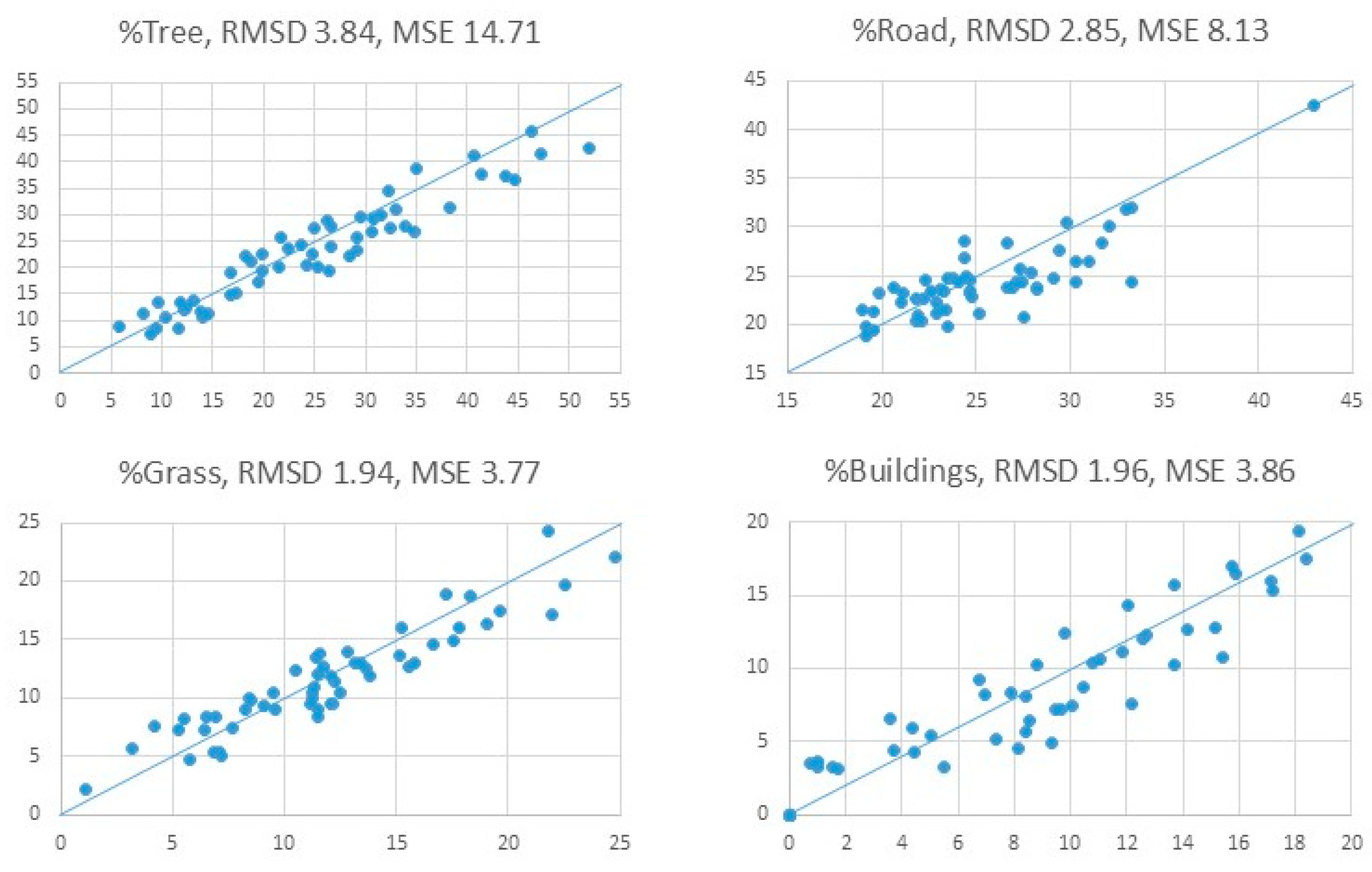

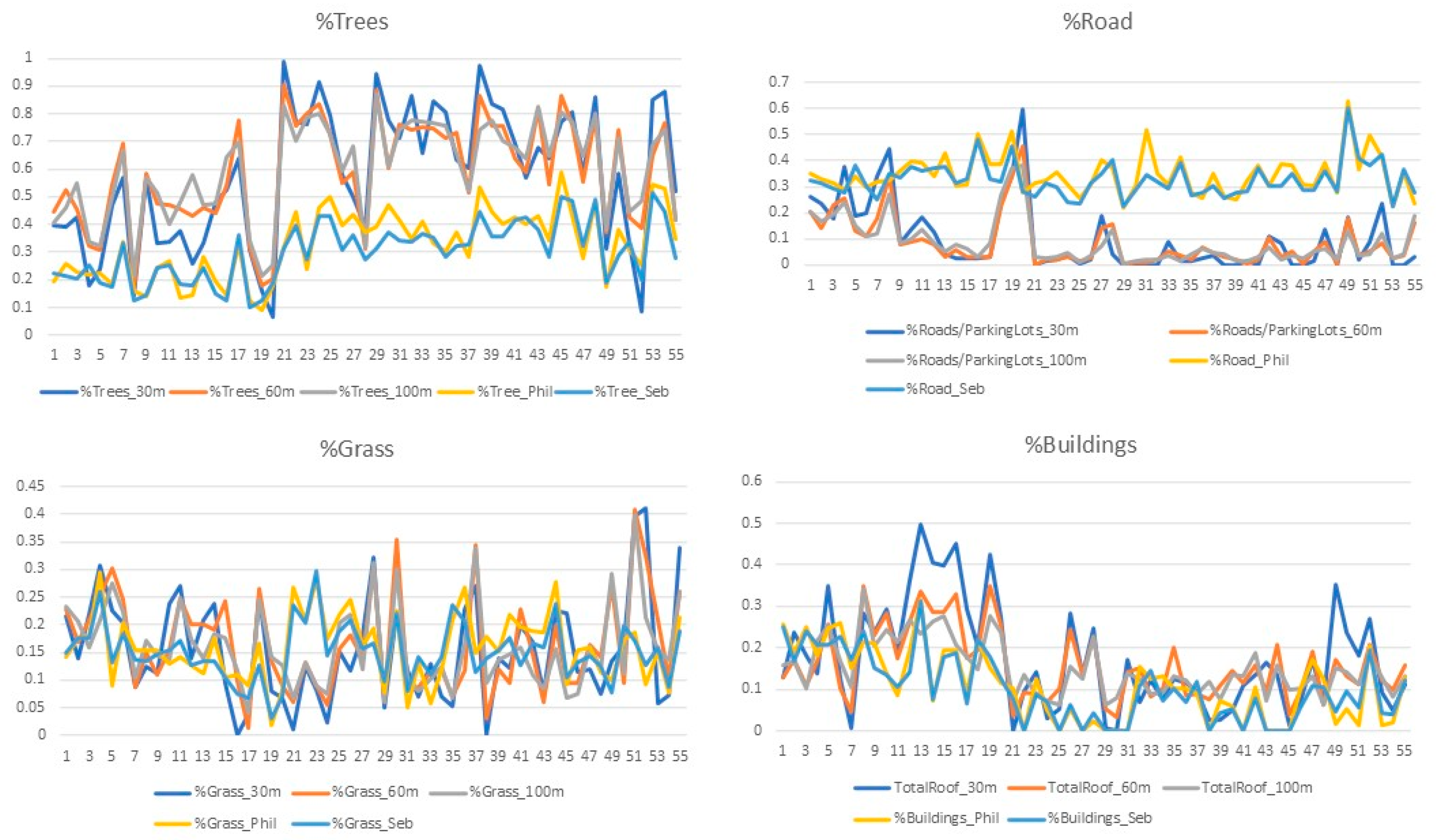

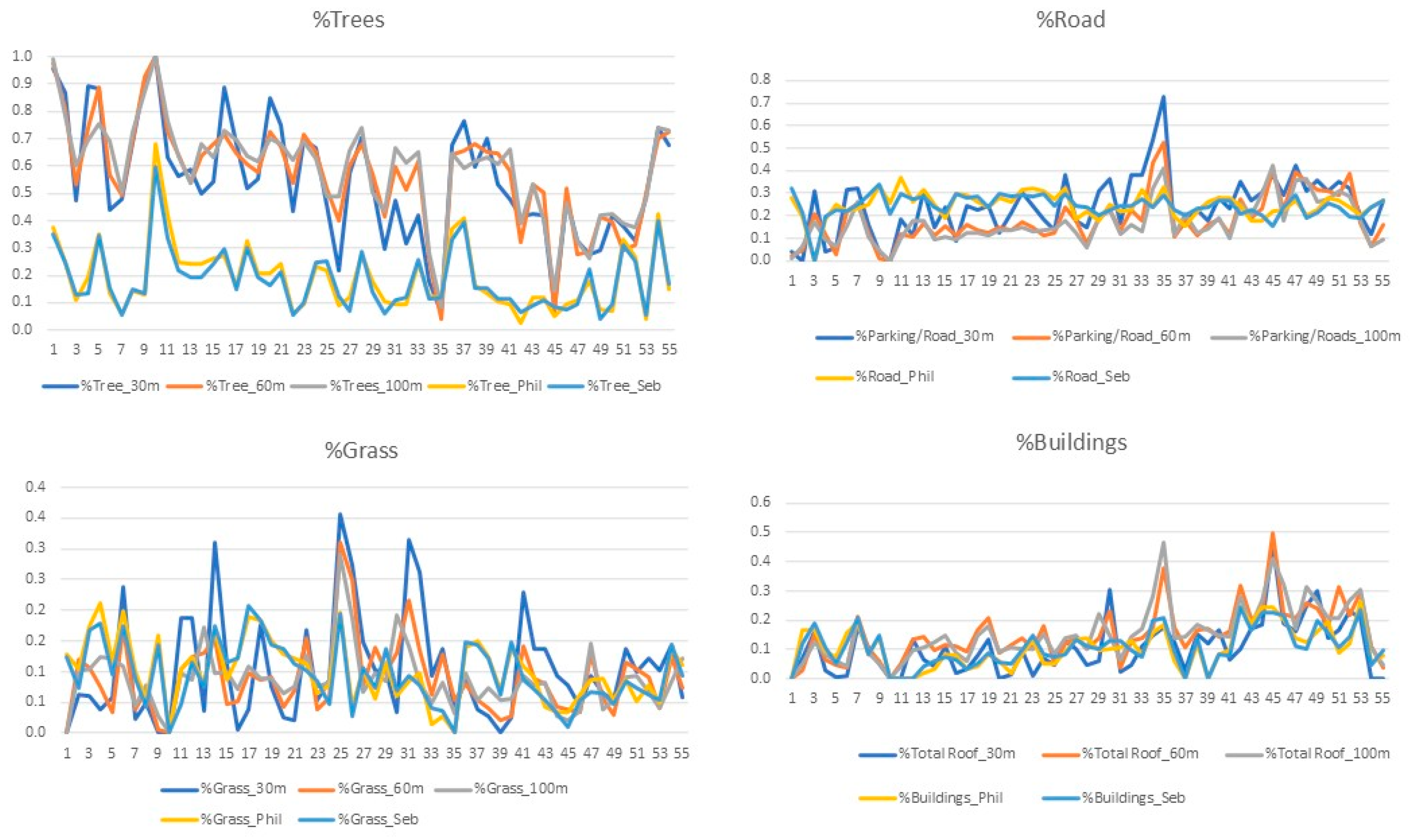

First, our experiments showed that among the four major environmental elements examined, the tree coverage calculated from aerial images is always higher than that derived from street view images, regardless of buffer size. The opposite holds for roads. This confirms our first hypothesis. Second, among the different environmental elements, trees show the greatest concordance between the aerial and street views. Buildings agree moderately, while grass and roads do not. This confirms our second hypothesis. Third, in residential neighborhoods with abundant greenness (study site 1), the coverage of trees corresponds better than in largely developed environments with limited greenness (study site 2). Buildings show the opposite pattern. This confirms our third hypothesis. Lastly, among the three buffer sizes tested for aerial images, trees are in greatest concordance with street view images when the 30 m buffer is used. Low-rise buildings and grass agree better with the larger buffer sizes (60 m and 100 m), especially in relatively open environments. Roads agree better with larger buffers in green environments, but with smaller buffers in less green environments. This indicates that no single buffer size is optimal for all environmental elements and environmental settings, which does not support our fourth hypothesis. The choice of buffer size when combining environmental properties derived from aerial and street view images should consider both the environmental elements involved and the heterogeneity of environmental settings.

The current study is limited in scale, as our two study sites are both located in the state of New Jersey in the United States. Although the two sites have different types of neighborhoods, their differences do not capture a wide range of environmental settings. Future studies could include more environmental types. Furthermore, our image segmentation approaches were based on well-established algorithms and existing training datasets. Limited by the scale of our study, we did not use a customized training dataset. Future studies at larger scales could collect a set of street view images to be annotated manually for such purposes. Our study also found that the large areas of sky and roads in GSV images affect their concordance with aerial images. In future studies, preprocessing strategies could be considered before segmenting the GSV images, such as truncating the images to leave out the top and bottom quarters (or another portion, to be determined experimentally) to better match a person’s visual field.
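
The truncation idea suggested above could be prototyped along the following lines. This is a sketch of a possible future preprocessing step, not something applied in the current study; the function name, the representation of an image as a list of pixel rows, and the default fractions of one quarter are assumptions made for illustration.

```python
def crop_vertical(image_rows, top_fraction=0.25, bottom_fraction=0.25):
    """Drop the given fractions of rows from the top and bottom of an image."""
    height = len(image_rows)
    top = int(height * top_fraction)
    bottom = height - int(height * bottom_fraction)
    return image_rows[top:bottom]


# Hypothetical 8-row label mask: sky at the top, road at the bottom.
mask = [
    ["sky"] * 4,
    ["sky"] * 4,
    ["tree", "tree", "building", "sky"],
    ["tree", "tree", "building", "building"],
    ["grass", "tree", "building", "building"],
    ["grass", "grass", "road", "road"],
    ["road"] * 4,
    ["road"] * 4,
]
cropped = crop_vertical(mask)
```

Keeping the fractions as parameters makes it straightforward to test other portions, as the text proposes, before recomputing any view-based measures on the cropped result.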