1. Introduction

Structural health monitoring (SHM) encompasses methods for assessing the condition and integrity of civil infrastructure through continuous or periodic measurement of structural responses. The fundamental objective is to detect, localize, and characterize damage at the earliest possible stage to enable timely maintenance interventions and prevent catastrophic failures. Reliable and continuous SHM is critical for ensuring the safety and serviceability of bridge infrastructure. Bridge SHM integrates sensing, data acquisition, and analytical models to monitor structural responses under operational and environmental loads. Traditional vibration-based methods extract modal parameters (such as natural frequencies, damping ratios, and mode shapes) to infer changes in stiffness and detect damage [1]. Building on the statistical pattern recognition paradigm developed over the past three decades, researchers have advanced from traditional modal analysis to machine learning and probabilistic methods that improve reliability and enable population-based SHM [2]. However, data-driven approaches are typically developed using laboratory or simulated data and do not easily transfer to in-service structures. This limitation stems from the scarcity of labeled damage data in the field and from domain shift introduced by operational conditions such as temperature variation, traffic loading, and material aging [3]. Addressing these challenges is a necessary step toward developing deployable, real-time systems for damage detection.

Originally developed for speech and audio processing, cepstral analysis has been effectively extended to SHM applications, offering reliable damage-sensitive features that remain largely unaffected by variations in operational or environmental conditions. Balsamo et al. first introduced Mel-Frequency Cepstral Coefficients (MFCCs) for SHM purposes, showing they were more robust and efficient than traditional autoregressive features for damage detection [4]. Morgantini et al. later linked power cepstral coefficients to modal properties, employing Principal Component Analysis to improve robustness [5]. The utility of these features grows when combined with machine learning. For instance, Tronci et al. used cepstral coefficients and applied transfer learning with a neural network to classify damage despite data scarcity [6]. These studies confirm the diagnostic power of cepstral analysis in SHM.

Early applications of artificial neural networks for SHM employed vibration-based features, such as modal parameters or statistical properties of train-induced responses, to estimate damage location and severity and to develop novelty-detection indices [7]. However, these shallow architectures and simplified features limited the ability to exploit temporal information in vibration signals. These limitations motivated the adoption of deep sequence models, particularly Long Short-Term Memory (LSTM) networks, which can directly process raw or minimally processed time-series data and capture long-range dependencies in structural responses [8]. LSTMs have been used in classification tasks to identify multiple damage states on a full-scale bridge, outperforming convolutional neural networks (CNNs) in scenarios with non-localized damage [9]. In another study, researchers combined LSTMs with CNNs to enhance spatial feature learning and improve damage localization [10]. Recent studies have shown that adding attention mechanisms [11] to sequence models can enhance feature extraction and improve damage discrimination in vibration-based SHM [12]. Sun et al. reviewed recent advances in bridge SHM enabled by big data and artificial intelligence, outlining how modern sensing systems and large-scale data infrastructures have transformed SHM from traditional feature-based approaches to intelligent, data-driven monitoring. Machine learning and deep learning models (such as CNNs, LSTMs, and autoencoders) are shown to improve damage detection and pattern recognition, while integration with cloud computing and digital twins enhances real-time assessment and predictive maintenance. The review emphasizes persistent challenges in data quality, interpretability, and cross-domain generalization, suggesting that hybrid, physics-informed, and transfer learning frameworks are critical to advancing intelligent SHM for large-scale bridge systems [13]. Similarly, Zinno et al. provided a comprehensive review of AI-based SHM of bridges, categorizing algorithms such as SVM, random forest, CNN, and LSTM within a unified pattern-recognition framework and highlighting their integration with IoT and big-data platforms for real-time bridge monitoring [14]. In a broader context, Yodo et al. advocate for condition-based monitoring as a key strategy for resilient infrastructure management, highlighting how AI- and ML-driven approaches, including ANN, SVM, and LSTM algorithms, enable early fault detection and predictive maintenance across complex energy systems [15].

A persistent challenge for data-driven SHM is the domain-shift problem [16]. Models trained on simulated or laboratory data often perform poorly when applied to real bridges because of differences in structural properties and operating conditions. Yano et al. demonstrated that feature-based domain adaptation can align hand-crafted features between source and target domains and validated this approach on multiple in-service bridges, including the Z24 benchmark, providing a crucial real-world proof-of-concept for transfer learning in SHM [17]. Bao et al. addressed the same problem at the model level by pre-training a convolutional network on a Finite Element dataset and fine-tuning it with only 1% of real data from a laboratory structure, achieving over 98% accuracy [18]. Pan et al. pre-trained a convolutional network on a large anomaly database from one bridge and fine-tuned it for a second bridge, improving the F1-score by 60% with very little target data and demonstrating the efficiency of model-based transfer for large populations of structures [19]. Giglioni et al. developed a domain adaptation framework for population-based SHM that transfers damage information between bridges using vibration-based features. By aligning feature distributions from labeled and unlabeled structures through Normal Condition Alignment and Joint Domain Adaptation, the method enables accurate cross-bridge damage detection and classification. The study demonstrated that domain adaptation can overcome data scarcity and distribution shifts, supporting network-level bridge monitoring [20].

Despite recent advances in feature engineering and deep learning for SHM, several fundamental challenges remain unresolved. First, most data-driven approaches depend on extensive labeled data from each monitored structure for training or calibration, a requirement that is impractical given the scarcity of damage observations in operational infrastructure. Second, although transfer learning methods have shown promise in adapting models across domains, they typically require target-domain data for fine-tuning, limiting their applicability to structures where no damage history exists. Third, while LSTMs have demonstrated strong classification performance on laboratory datasets, their effectiveness in strict zero-shot settings on full-scale bridges remains largely unexplored. Finally, existing frameworks rarely integrate real-time processing capability with cross-domain generalization; these requirements are essential for deployable systems but are typically addressed in isolation.

This work directly addresses these limitations by proposing a framework that operates without requiring any calibration data from the target structure. The approach combines damage-sensitive cepstral features with a streaming LSTM architecture that preserves temporal dependencies through state carry-over, enabling low-latency inference suitable for continuous monitoring. By training on laboratory datasets and applying probabilistic scoring through Probabilistic Linear Discriminant Analysis (PLDA), the framework performs both damage classification and novelty detection in a true zero-shot setting. Validation on the full-scale Z24 Bridge benchmark demonstrates that this integration of robust feature representation with streaming sequence modeling provides a practical pathway toward deployable SHM systems capable of transferring knowledge across structures without retraining.

The remainder of the paper is organized as follows. Section 2 outlines the proposed methodology. Section 3 introduces the experimental datasets and evaluation framework. Section 4 reports the results, highlighting both laboratory performance and zero-shot application to the Z24 Bridge. Finally, Section 5 concludes with key findings and future research directions.

2. Methodology

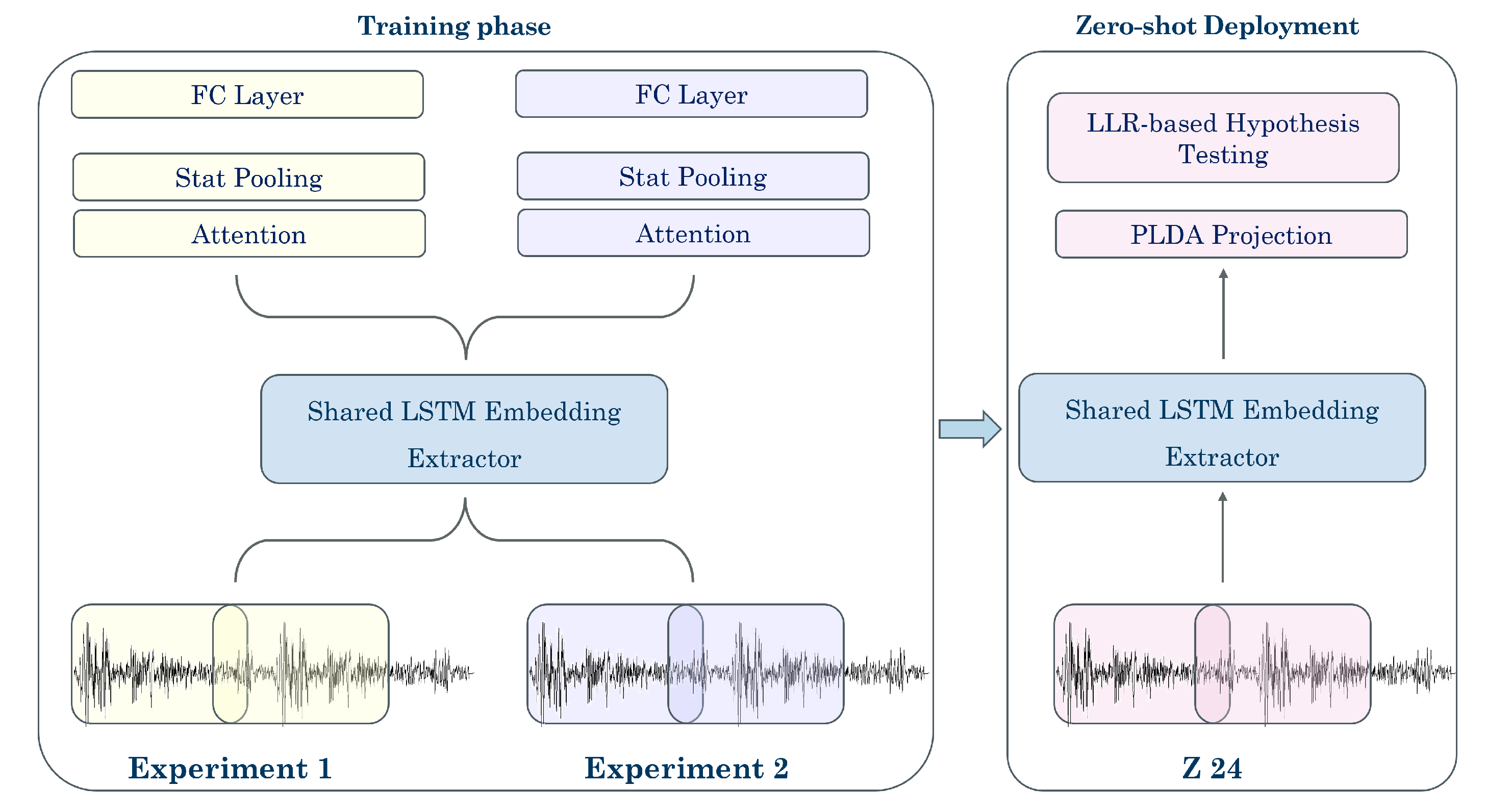

The proposed framework consists of three main stages, summarized below and illustrated in Figure 1. First, signal pre-processing converts raw acceleration time histories into damage-sensitive cepstral coefficients through windowing, frequency-domain transformation, and the discrete cosine transform. Second, LSTM encoding with attention processes the resulting sequence of cepstral feature vectors using a stacked LSTM network that preserves temporal dependencies through state carry-over between overlapping windows. A multi-head attention mechanism emphasizes the most informative portions of the response, and statistical pooling aggregates sequence information into a fixed-length embedding. Third, PLDA-based classification and novelty detection project these embeddings into a latent space where damage states are represented as Gaussian distributions, enabling both supervised classification and zero-shot hypothesis testing without retraining. This modular design allows the LSTM encoder to be trained on laboratory data and subsequently applied directly to unseen structures through the PLDA back-end.

The proposed framework begins by transforming raw acceleration signals into a sequence of damage-sensitive cepstral coefficients [21]. All acceleration signals are first resampled to a uniform sampling rate of 100 Hz. The resampled time histories are then divided into overlapping windows of 400 samples (4.0 s) with 60% overlap (240 samples), yielding a hop length of 1.6 s between consecutive frames. Each window is pre-emphasized with a coefficient of 0.97, multiplied by a Hamming window, and converted to the frequency domain via Fast Fourier Transform. A triangular filter bank is applied, log energies are computed, and an inverse discrete cosine transform produces 20 cepstral coefficients per window, with the first coefficient replaced by log energy. This representation reduces sensitivity to environmental variation while retaining information related to structural dynamics. More details on the derivation of the cepstral coefficients can be found in [22].
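The feature-extraction steps above can be sketched with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the authors' implementation. The following values come from the text: the 100 Hz target rate, 400-sample windows, 160-sample hop (60% overlap), 0.97 pre-emphasis, Hamming window, and 20 coefficients with the first replaced by log energy. The following are assumptions: mel spacing of the triangular filters, 26 filters, a 512-point FFT, an orthonormal DCT-II, the 1e-10 floor inside the logarithms, and all function names.

```python
import numpy as np
from math import gcd
from scipy.signal import resample_poly
from scipy.fft import dct


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def triangular_filterbank(n_filters, n_fft, fs):
    """Triangular filters over the rfft bins (mel spacing is an assumption)."""
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2.0), n_filters + 2))
    bins = np.floor((n_fft + 1) * edges / fs).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        lo, mid, hi = bins[m - 1], bins[m], bins[m + 1]
        for k in range(lo, mid):  # rising edge
            fb[m - 1, k] = (k - lo) / max(mid - lo, 1)
        for k in range(mid, hi):  # falling edge, peak value 1 at k = mid
            fb[m - 1, k] = (hi - k) / max(hi - mid, 1)
    return fb


def cepstral_features(x, fs_in, fs=100, win=400, hop=160, n_ceps=20,
                      n_filters=26, n_fft=512, preemph=0.97):
    """Return an (n_windows, n_ceps) array for one acceleration channel."""
    g = gcd(int(fs), int(fs_in))
    x = resample_poly(np.asarray(x, dtype=float), int(fs) // g, int(fs_in) // g)
    if len(x) < win:
        raise ValueError("record shorter than one analysis window")
    n_windows = 1 + (len(x) - win) // hop  # trailing partial window is dropped
    fb = triangular_filterbank(n_filters, n_fft, fs)
    hamming = np.hamming(win)
    feats = np.empty((n_windows, n_ceps))
    for i in range(n_windows):
        frame = x[i * hop: i * hop + win]
        frame = np.append(frame[0], frame[1:] - preemph * frame[:-1])  # pre-emphasis
        power = np.abs(np.fft.rfft(frame * hamming, n_fft)) ** 2 / n_fft
        log_energies = np.log(fb @ power + 1e-10)
        ceps = dct(log_energies, type=2, norm="ortho")[:n_ceps]
        ceps[0] = np.log(power.sum() + 1e-10)  # replace c0 with log frame energy
        feats[i] = ceps
    return feats
```

For example, a 10 s record sampled at 1600 Hz is resampled to 1000 samples and yields four windows of 20 coefficients each. Dropping the trailing partial window, rather than zero-padding it, is a design choice the text does not specify.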

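Having established this feature representation, the next stage of the framework employs a deep stacked LSTM network with $L$ layers to model temporal dependencies. At each layer $\ell \in \{1, \dots, L\}$, a single cell with shared parameters $\theta_\ell$ processes all windows. At window step $w$, the first layer receives the cepstral vector of the current window, $\mathbf{x}_w$, and the previous state $(\mathbf{h}_{w-1}^{(1)}, \mathbf{c}_{w-1}^{(1)})$ to produce its new state. This hidden state is passed (1) upward to the next layer at the same step and (2) forward to the next window step $w+1$. State propagation across layers and time is defined by

$$\left(\mathbf{h}_w^{(\ell)}, \mathbf{c}_w^{(\ell)}\right) = \mathrm{LSTM}_{\theta_\ell}\!\left(\mathbf{h}_w^{(\ell-1)}, \left(\mathbf{h}_{w-1}^{(\ell)}, \mathbf{c}_{w-1}^{(\ell)}\right)\right), \qquad \ell = 1, \dots, L,$$

with $\mathbf{h}_w^{(0)} = \mathbf{x}_w$ and zero initial states $\mathbf{h}_0^{(\ell)} = \mathbf{c}_0^{(\ell)} = \mathbf{0}$. Overlap between windows mitigates boundary artifacts, while state carry-over preserves continuity in the stream. The sequence of hidden states from the final layer, $\{\mathbf{h}_w^{(L)}\}$, represents a high-level abstraction of the temporal dynamics.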
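The layer-and-time recursion described above can be illustrated with a small NumPy sketch. The following are illustrative assumptions: a hidden size of 32, three layers, uniform random initialization, and the class names. The study itself used PyTorch, and the gate order (input, forget, candidate, output) mirrors PyTorch's convention. The usage lines check the property that state carry-over provides. Feeding a record in two chunks without resetting the state reproduces a single pass. Resetting between chunks does not.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LSTMCell:
    """One LSTM cell; its parameters theta_l are shared by all windows."""

    def __init__(self, n_in, n_hidden, rng):
        s = 1.0 / np.sqrt(n_hidden)
        self.W = rng.uniform(-s, s, size=(4 * n_hidden, n_in + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.n_hidden = n_hidden

    def __call__(self, x, h, c):
        z = self.W @ np.concatenate([x, h]) + self.b
        i, f, g, o = np.split(z, 4)  # input, forget, candidate, output gates
        c_new = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h_new = sigmoid(o) * np.tanh(c_new)
        return h_new, c_new


class StreamingStackedLSTM:
    def __init__(self, n_in=20, n_hidden=32, n_layers=3, seed=0):
        rng = np.random.default_rng(seed)
        self.cells = [LSTMCell(n_in if layer == 0 else n_hidden, n_hidden, rng)
                      for layer in range(n_layers)]
        self.reset()

    def reset(self):
        """Zero initial states h_0 = c_0 = 0 for every layer."""
        self.h = [np.zeros(cell.n_hidden) for cell in self.cells]
        self.c = [np.zeros(cell.n_hidden) for cell in self.cells]

    def step(self, x_w):
        """Process one window's cepstral vector; the state persists across calls."""
        inp = x_w  # h_w^(0) = x_w
        for layer, cell in enumerate(self.cells):
            self.h[layer], self.c[layer] = cell(inp, self.h[layer], self.c[layer])
            inp = self.h[layer]  # passed upward to the next layer
        return inp  # top-layer state h_w^(L)


X = np.random.default_rng(1).standard_normal((12, 20))  # 12 windows x 20 coefficients
model = StreamingStackedLSTM()
single_pass = np.stack([model.step(x) for x in X])
model.reset()
chunked = np.stack([model.step(x) for x in X[:6]] + [model.step(x) for x in X[6:]])
model.reset()
restarted = np.stack([model.step(x) for x in X[6:]])  # state NOT carried over
```

Because the state lives in the model object rather than in a batch of fixed length, each new window can be scored as soon as it arrives. This is what makes the encoder suitable for streaming.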

After the LSTM encoder, the sequence of hidden states is processed by a multi-head self-attention mechanism. Instead of averaging the states or taking the last one, this module learns to assign different weights to different time steps, effectively highlighting the most informative portions of the signal. Multiple attention heads operate in parallel so that each head can focus on a different temporal pattern (e.g., sudden transients or slower trends). Their outputs are concatenated to form a context-aware sequence representation, which is then converted into a fixed-length embedding by computing summary statistics across time. In this work, both the element-wise mean and standard deviation are computed to capture first- and second-order information about the signal. This pooled vector is fed into a fully connected output layer, which produces raw class scores and, after a softmax transformation, damage-state probabilities for each time window. The streaming LSTM architecture is shown schematically in Figure 2.
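The attention, pooling, and output stages can be sketched in NumPy. The sketch uses scaled dot-product self-attention split across heads, followed by mean and standard-deviation pooling and a softmax classifier. The following are assumptions for illustration: the dimensions, the random projection matrices (learned in the actual model), and the omission of the usual output projection after head concatenation.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multihead_self_attention(H, Wq, Wk, Wv, n_heads):
    """H: (T, d) top-layer LSTM states; returns the (T, d) context-aware sequence."""
    T, d = H.shape
    dh = d // n_heads
    Q, K, V = H @ Wq, H @ Wk, H @ Wv
    heads = []
    for k in range(n_heads):
        s = slice(k * dh, (k + 1) * dh)
        A = softmax(Q[:, s] @ K[:, s].T / np.sqrt(dh))  # (T, T) weights over time steps
        heads.append(A @ V[:, s])
    return np.concatenate(heads, axis=1)  # heads concatenated along features


def stats_pool(Z):
    """Fixed-length embedding: element-wise mean and std over time, shape (2d,)."""
    return np.concatenate([Z.mean(axis=0), Z.std(axis=0)])


rng = np.random.default_rng(0)
T, d, n_heads, n_classes = 15, 32, 4, 4
H = rng.standard_normal((T, d))
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
Z = multihead_self_attention(H, Wq, Wk, Wv, n_heads)
embedding = stats_pool(Z)
W_out = 0.1 * rng.standard_normal((n_classes, 2 * d))
probs = softmax(W_out @ embedding)  # damage-state probabilities
```

Pooling both the mean and the standard deviation doubles the embedding width (here 64 for 32-dimensional states). In exchange, the embedding records how variable the response is over the record, not only its average level.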

Because the available experimental datasets represent very different structures and damage scenarios, the framework uses a multi-task learning (MTL) approach. Each dataset (NEESR and LANL) is treated as a separate but related task. The deep recurrent layers of the streaming LSTM act as a shared backbone that learns general patterns of structural damage, while task-specific heads (attention, pooling, and classifier layers) model the distinct patterns of each experiment. For a set of $N$ tasks with datasets $\{\mathcal{D}_k\}_{k=1}^{N}$, the total training loss is

$$\mathcal{L}_{\text{total}} = \sum_{k=1}^{N} \lambda_k \, \mathcal{L}_k,$$

where $\mathcal{L}_k$ is the categorical cross-entropy for task $k$ and $\lambda_k$ weights the contribution of each task. To balance the influence of tasks with potentially different numbers of classes, the weight for each task is set inversely proportional to its number of output classes:

$$\lambda_k = \frac{C_{\neg k}}{C_k + C_{\neg k}}, \qquad C_{\neg k} = \sum_{j \neq k} C_j,$$

where $C_k$ denotes the number of classes in task $k$ and $C_{\neg k}$ represents the sum of classes in all the other tasks. For the present study, both NEESR (Task A) and LANL (Task B) had 4 damage states, yielding $\lambda_A = \lambda_B = 4/(4+4) = 0.5$. This weighting scheme ensured that tasks with more classes did not dominate the gradient updates during training, allowing the shared backbone to learn equally from both experimental datasets.
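A minimal sketch of the weighted multi-task loss follows. It assumes the task weight has the form λ_k = C_{¬k} / (C_k + C_{¬k}), where C_{¬k} is the total class count of all other tasks. This form is a reconstruction consistent with the description and with the 0.5 weights reported for two 4-class tasks. For two tasks it coincides with normalized inverse-class-count weighting. For more than two tasks these weights no longer sum to one. The function names are hypothetical.

```python
import numpy as np


def task_weights(n_classes):
    """lambda_k = C_{-k} / (C_k + C_{-k}), with C_{-k} the class count of all other tasks."""
    C = np.asarray(n_classes, dtype=float)
    C_other = C.sum() - C
    return C_other / (C + C_other)


def cross_entropy(logits, labels):
    """Mean categorical cross-entropy from raw scores (log-softmax for stability)."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_p[np.arange(len(labels)), labels].mean()


def multitask_loss(task_batches, n_classes):
    """task_batches: list of (logits, labels) pairs, one per task-specific head."""
    lam = task_weights(n_classes)
    return sum(w * cross_entropy(lg, y) for w, (lg, y) in zip(lam, task_batches))


weights = task_weights([4, 4])  # NEESR (Task A) and LANL (Task B)
print(weights)                  # [0.5 0.5]
```

With unequal heads, for instance 2 and 6 classes, the same formula gives weights of 0.75 and 0.25. This down-weights the task whose cross-entropy is naturally larger because it spreads probability over more classes.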

Once the multi-task backbone is trained, it is frozen and used only as a feature extractor. Each incoming window from the unseen Z24 Bridge is mapped to an embedding $\mathbf{e}$ at the output of the shared LSTM encoder. Because these raw embeddings may still carry both damage information and nuisance variation from their original domain, they are projected into the latent space learned by a Probabilistic Linear Discriminant Analysis (PLDA) [23] model fitted on the laboratory datasets. PLDA explicitly models between-class (damage) and within-class (nuisance/domain) variability, creating a representation in which damage states form well-separated Gaussian clusters and domain effects are down-weighted. This projection means that new embeddings from an unseen structure are expressed in the same coordinates as the laboratory embeddings, making class likelihoods directly comparable without retraining. Membership of each projected embedding in class $c$ is evaluated by a log-likelihood ratio (LLR),

$$\mathrm{LLR}_c(\mathbf{e}) = \log \frac{p(\mathbf{e} \mid c)}{p(\mathbf{e} \mid \neg c)},$$

where $\neg c$ denotes the hypothesis that $\mathbf{e}$ originates from a different class. The predicted label is $\hat{y} = \arg\max_c \mathrm{LLR}_c(\mathbf{e})$. Once class membership scores are obtained, the same generative model can be used to test whether a sample belongs to any known class at all. This extends the scoring step naturally into novelty detection:

$$\mathcal{H}_0: \mathbf{e} \text{ belongs to a known class}, \qquad \mathcal{H}_1: \mathbf{e} \text{ belongs to no known class (novelty)}.$$

A decision is made by thresholding the log-likelihood ratio:

$$\Lambda(\mathbf{e}) = \log \frac{p(\mathbf{e} \mid \mathcal{H}_0)}{p(\mathbf{e} \mid \mathcal{H}_1)}, \qquad \text{decide } \mathcal{H}_0 \text{ if } \Lambda(\mathbf{e}) \geq \tau, \text{ otherwise } \mathcal{H}_1.$$

The threshold $\tau$ is chosen on held-out validation data to set the desired trade-off between false alarms and missed detections, and it can be adjusted for different operating points in practice. Note that the score reported here is the inverse LLR, $-\Lambda(\mathbf{e})$ (the log of the reciprocal likelihood ratio), so higher scores indicate greater deviation from the known class. Finally, to reflect realistic deployment, sensor-level scores are aggregated rather than evaluated independently. Log-likelihood ratios from individual channels are summed either across all sensors or within predefined groups, producing a single joint score per configuration. This mirrors how SHM systems are typically operated, where decisions are based on the collective evidence from multiple sensors.
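The scoring chain above (class LLRs, argmax labelling, inverse-LLR novelty scores, and summation over sensors) can be sketched with a simplified PLDA. This is not necessarily the PLDA variant used in the study. The sketch assumes the common two-covariance formulation: an embedding equals a global mean, plus a class offset with between-class covariance B, plus a residual with within-class covariance W. It also assumes simple moment estimates instead of EM training, and SciPy's `multivariate_normal.logpdf` for the Gaussian densities. The class and function names are hypothetical. Each LLR compares the predictive density of an embedding under "same latent class as this enrollment set" against "a new, unseen class".

```python
import numpy as np
from scipy.stats import multivariate_normal


class TwoCovPLDA:
    """Two-covariance PLDA: e = m + y + eps, y ~ N(0, B) shared within a class,
    eps ~ N(0, W) within-class (nuisance) variation. Moment estimates, no EM."""

    def fit(self, E, labels):
        classes = np.unique(labels)
        means = np.stack([E[labels == c].mean(axis=0) for c in classes])
        resid = np.concatenate([E[labels == c] - mu for c, mu in zip(classes, means)])
        ridge = 1e-6 * np.eye(E.shape[1])  # keeps both covariances invertible
        self.m = E.mean(axis=0)
        self.W = np.cov(resid, rowvar=False) + ridge
        self.B = np.cov(means, rowvar=False) + ridge
        return self

    def llr(self, e, enroll):
        """log p(e | same latent class as `enroll`) - log p(e | new class)."""
        n = len(enroll)
        W_inv = np.linalg.inv(self.W)
        post_cov = np.linalg.inv(np.linalg.inv(self.B) + n * W_inv)
        post_mean = post_cov @ (n * W_inv @ (enroll.mean(axis=0) - self.m))
        same = multivariate_normal.logpdf(e, self.m + post_mean, post_cov + self.W)
        diff = multivariate_normal.logpdf(e, self.m, self.B + self.W)
        return same - diff


def classify(plda, E, enroll_sets):
    """Predicted label = argmax_c LLR_c for each row of E."""
    scores = np.array([plda.llr(E, S) for S in enroll_sets])
    return scores.argmax(axis=0), scores


def novelty_score(plda, channel_embeddings, healthy_refs):
    """Inverse (negated) LLR against the healthy reference, summed over sensors.
    A sample is flagged as novel when this score exceeds a chosen threshold."""
    return -sum(plda.llr(e, ref) for e, ref in zip(channel_embeddings, healthy_refs))
```

Summing per-channel LLRs treats the channels as conditionally independent evidence. This is a simplifying assumption, since neighbouring accelerometers on the same span are usually correlated.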

Figure 1 illustrates the overall workflow. During training, sequences from multiple experimental datasets are fed to a shared LSTM backbone that learns generalizable features of structural response, while task-specific heads model dataset-dependent variations. In the zero-shot phase, the trained extractor is frozen and applied to the Z24 Bridge, generating embeddings that are projected into the latent space learned from the laboratory datasets. PLDA scoring is then used to assign class-membership likelihoods and perform novelty detection. By decoupling feature extraction from downstream scoring, the framework can apply a single trained model to unseen structures without additional calibration or retraining.

3. Experimental Data

The available datasets for training, validation, and evaluation are as follows. The first laboratory dataset is the NEESR reinforced-concrete column tests [24], in which scaled bridge columns instrumented with embedded “smart aggregates” are subjected to cyclic and seismic loading; broadband random excitation after each loading stage yields high-frequency time histories (20 kHz) from 9–12 sensors at different elevations. The second laboratory dataset is the LANL Bookshelf experiment [25], a three-story aluminum frame excited by band-limited white noise, with progressive connection damage simulated by loosening bolts and responses measured by 24 accelerometers at 1600 Hz for 5.12 s records. These two datasets provide complementary structural types, materials, and damage mechanisms for learning generalizable features.

The NEESR dataset contains multiple progressive damage experiments for both hysteresis-induced damage and earthquake-induced damage. To balance classification granularity with sufficient training samples per class and to focus the model on learning primary damage mechanisms rather than fine-grained severity distinctions, the progressive damage states are grouped into four classes based on damage severity: Hysteresis Low Severity, Hysteresis High Severity, Earthquake Low Severity, and Earthquake High Severity. This grouping was determined through engineering judgment. While this grouping facilitates learning of the primary damage mechanisms, the boundaries between severity categories represent discretization of continuous damage progression and may introduce some ambiguity in classification.

The laboratory datasets were deliberately chosen to capture a wide range of structural characteristics that affect how damage develops and can be detected. They cover different materials (concrete and aluminum), structural configurations (columns and frames), and scales (from small components to full-scale elements), so the model is exposed to diverse dynamic behaviors and failure patterns. The damage scenarios include bolt loosening, hysteretic cracking, and earthquake-induced deterioration, covering both connection-related and material-related forms of damage. The datasets also differ in excitation type, including ambient, harmonic, impulse, and seismic inputs. Because no single dataset includes all damage types, training on them jointly within the multi-task learning framework encourages features that are broadly damage-sensitive rather than dataset-specific.

The unseen Z24 Bridge benchmark [26, 27] serves as the validation dataset. This pre-stressed concrete box-girder bridge in Switzerland was instrumented before its demolition to create a unique, large-scale dataset. The Progressive Damage Test (PDT) documents 17 systematically induced damage states, including pier settlements, spalling of concrete, tendon ruptures, and hinge failures, measured by a grid of accelerometers across the spans and piers. The damage states are summarized in Table 1. Because of data acquisition channel limits, the data for each damage case were recorded sequentially in nine distinct “setups”; the sensor placements are shown in Figure 3. The full Z24 PDT dataset contains 263 accelerometer channels distributed across these nine setups. In this work, 124 accelerometers were used, comprising all the sensors available across the measurement setups that provided consistent coverage throughout the damage progression test. This subset included 134 span-mounted sensors distributed across the three bridge spans (100-series, 200-series, and 300-series designations) and 15 pier-mounted sensors at both the Koppigen and Utzenstorf piers (400-series and 500-series). The selected sensors provided comprehensive spatial coverage to capture global structural behavior across all damage scenarios. All measurements were sampled at 100 Hz for 81.92 s per record, ensuring sufficient frequency content and duration for damage-sensitive feature extraction.
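Combining the record lengths with the windowing parameters of Section 2 (100 Hz, 400-sample windows, 160-sample hop) shows how many feature vectors each record contributes. The sketch assumes that trailing partial windows are discarded rather than zero-padded, which the text does not specify. The helper name is hypothetical.

```python
def windows_per_record(duration_s, fs=100, win=400, hop=160):
    """Number of complete analysis windows in one record after resampling to fs."""
    n = round(duration_s * fs)
    return 0 if n < win else 1 + (n - win) // hop


z24 = windows_per_record(81.92)  # 8192 samples per Z24 record
lanl = windows_per_record(5.12)  # 512 samples after resampling a LANL record to 100 Hz
print(z24, lanl)  # 49 1
```

Under this assumption, each Z24 record provides a sequence of 49 windows for the streaming encoder. A 5.12 s LANL record provides only a single window, so its temporal context comes from within that 4 s window.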

Performance on the laboratory datasets (NEESR and LANL) is assessed using standard classification metrics (accuracy, precision, recall, F1, confusion matrix). For the zero-shot evaluation on Z24, two complementary metrics are reported: (i) the log-likelihood ratio (LLR) from the PLDA model to quantify differences between damage states and the healthy baseline, and (ii) the Equal Error Rate (EER) for the binary task of distinguishing any damage from the healthy state. This protocol tests both classification performance on the laboratory datasets and the model’s effectiveness when applied to an unseen, full-scale bridge without retraining.
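The laboratory classification metrics can be computed from true and predicted labels with a few lines of NumPy. This sketch reports per-class (one-vs-rest) precision, recall, and F1 and uses rows for true classes and columns for predictions. Whether the study reports per-class or macro-averaged values is not stated, so any averaging is left to the caller. The function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def classification_metrics(y_true, y_pred, n_classes):
    cm = confusion_matrix(y_true, y_pred, n_classes)
    tp = np.diag(cm).astype(float)
    pred_totals, true_totals = cm.sum(axis=0), cm.sum(axis=1)
    zeros = np.zeros(n_classes)
    precision = np.divide(tp, pred_totals, out=zeros.copy(), where=pred_totals > 0)
    recall = np.divide(tp, true_totals, out=zeros.copy(), where=true_totals > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {"accuracy": tp.sum() / cm.sum(), "precision": precision,
            "recall": recall, "f1": f1, "confusion": cm}


m = classification_metrics([0, 0, 1, 1, 2, 2, 3, 3], [0, 0, 1, 0, 2, 2, 3, 3], n_classes=4)
print(m["accuracy"])  # 0.875
```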

4. Results and Discussion

The results are organized to reflect the progression from controlled experiments to real-world evaluation. Performance on the laboratory datasets, NEESR and LANL, is first presented to establish a reference for the stacked LSTM model, which is subsequently applied to the Z24 Bridge dataset in a zero-shot setting, with analyses covering LLR progression tracking and Detection Error Trade-off behavior.

4.1. Supervised Learning Performance on Laboratory Datasets

All the models were implemented in PyTorch v2.2.2 with CUDA 12.1 support and trained on a workstation equipped with an Intel Core i9-13900F CPU, an NVIDIA GeForce RTX 4090 GPU, and 64 GB of system memory. Training of the multi-task LSTM backbone on the laboratory datasets (NEESR and LANL) was performed on the CPU and required approximately 4 h for 100 epochs with a batch size of 32. The PLDA back-end was also trained on the CPU and completed in near real time. End-to-end evaluation of the complete test set required approximately 2–3 min. The full pipeline, from raw acceleration signals to damage classification, therefore remained computationally efficient and practical for continuous monitoring applications.

Performance on the NEESR (Task A) and LANL (Task B) datasets was first evaluated to establish a baseline for the stacked LSTM architecture. The model achieved high classification accuracy on both tasks (Table 2), demonstrating its ability to learn discriminative features across very different structural systems. In the NEESR columns, which involved hysteresis- and earthquake-induced damage, the LSTM achieved 98.3% accuracy with perfect separation between the two main damage mechanisms. Minor errors were confined to distinctions within the same mechanism (e.g., different hysteresis severities). This was expected, given that the NEESR damage labels were grouped into discrete severity categories through engineering judgment (see Section 3); the boundaries between these categories represent discretization of continuous damage progression, and progressive damage states near these boundaries may exhibit overlapping response characteristics. Importantly, the model successfully distinguished between fundamentally different damage mechanisms (hysteresis vs. earthquake), with confusion limited to severity levels within the same mechanism. In the LANL Bookshelf experiment, which required discrimination among three progressive bolt-loosening levels and an undamaged state, the LSTM achieved 96.2% accuracy. The primary confusion occurred between the undamaged condition and moderate loosening, indicating overlapping response patterns at low damage levels, but the severe damage states remained well separated from the healthy state. A detailed breakdown of these misclassifications for both tasks is shown in the confusion matrices of Figure 4. Overall, the results demonstrate that the LSTM learns highly discriminative and robust embeddings on the laboratory datasets, providing a strong basis for subsequent PLDA scoring and zero-shot evaluation on the Z24 Bridge.

The reported metrics in Table 2 represent single-model performance after training with the specified hyperparameters. While multiple random initializations and cross-validation would provide confidence intervals on these metrics, the confusion matrices in Figure 4 demonstrate that the classification errors followed interpretable patterns (confusion primarily within damage severity levels of the same mechanism rather than between different mechanisms), suggesting that the model learned robust damage-sensitive features. Future work should include systematic statistical validation across multiple training runs to fully characterize performance variability.

To validate the discriminative power of the learned embeddings on the laboratory datasets, a PLDA model was fitted on the training embeddings produced by the stacked LSTM backbone. The embeddings were standardized, projected into a low-dimensional latent space, and then scored on a completely held-out test set that was not used in training either the LSTM or the PLDA model. PLDA scoring achieved an overall classification accuracy of 96.69%. Consistent with the classification performance, these results show that the LSTM backbone yields embeddings that project cleanly into the PLDA latent space, providing a reliable basis for subsequent zero-shot evaluation on the Z24 Bridge.

4.2. Application to Full-Scale Bridge Benchmark

To validate the framework on a real structure under strict holdout conditions, the trained model was applied to the Z24 Bridge dataset, which was never used during model development. After multi-task training on the LANL and NEESR datasets, the stacked LSTM backbone was frozen. The PLDA model was fitted exclusively on embeddings from these two experimental datasets. Embeddings from the raw acceleration records of the Z24 Bridge were then generated using the frozen LSTM and projected into this pre-existing PLDA latent space. Because neither the LSTM nor the PLDA model was exposed to any Z24 data during training, this evaluation represents a realistic deployment scenario in which class likelihoods and novelty scores are computed directly from the projection.

For each Z24 damage scenario, PLDA scoring was applied to produce LLRs relative to the undamaged baseline. In practical SHM deployments, undamaged data from a structure are typically available and can serve as a reference. Deviations from this baseline indicate novelty and, therefore, potential damage. By comparing the LLRs of different damage scenarios to a chosen threshold, each state can be diagnosed as either consistent with the healthy baseline (Label 0) or as a novel/damaged condition. Higher LLRs correspond to greater statistical deviation from the healthy state and, thus, a higher likelihood or severity of damage. This allows the same PLDA mechanism to be used for both class scoring and novelty detection.

Figure 5 shows that the LSTM captured the general trend of progressive deterioration: low scores for early minor interventions, a marked increase at major events such as the concrete hinge failure (Label 11), and the highest scores at the most severe tendon rupture states and concrete hinge failures. Some variability remained in the early stages, but the overall trajectory reflects increasing damage severity as the test campaign progressed. Importantly, no damage scenario was misclassified as undamaged, which is a key requirement for SHM.

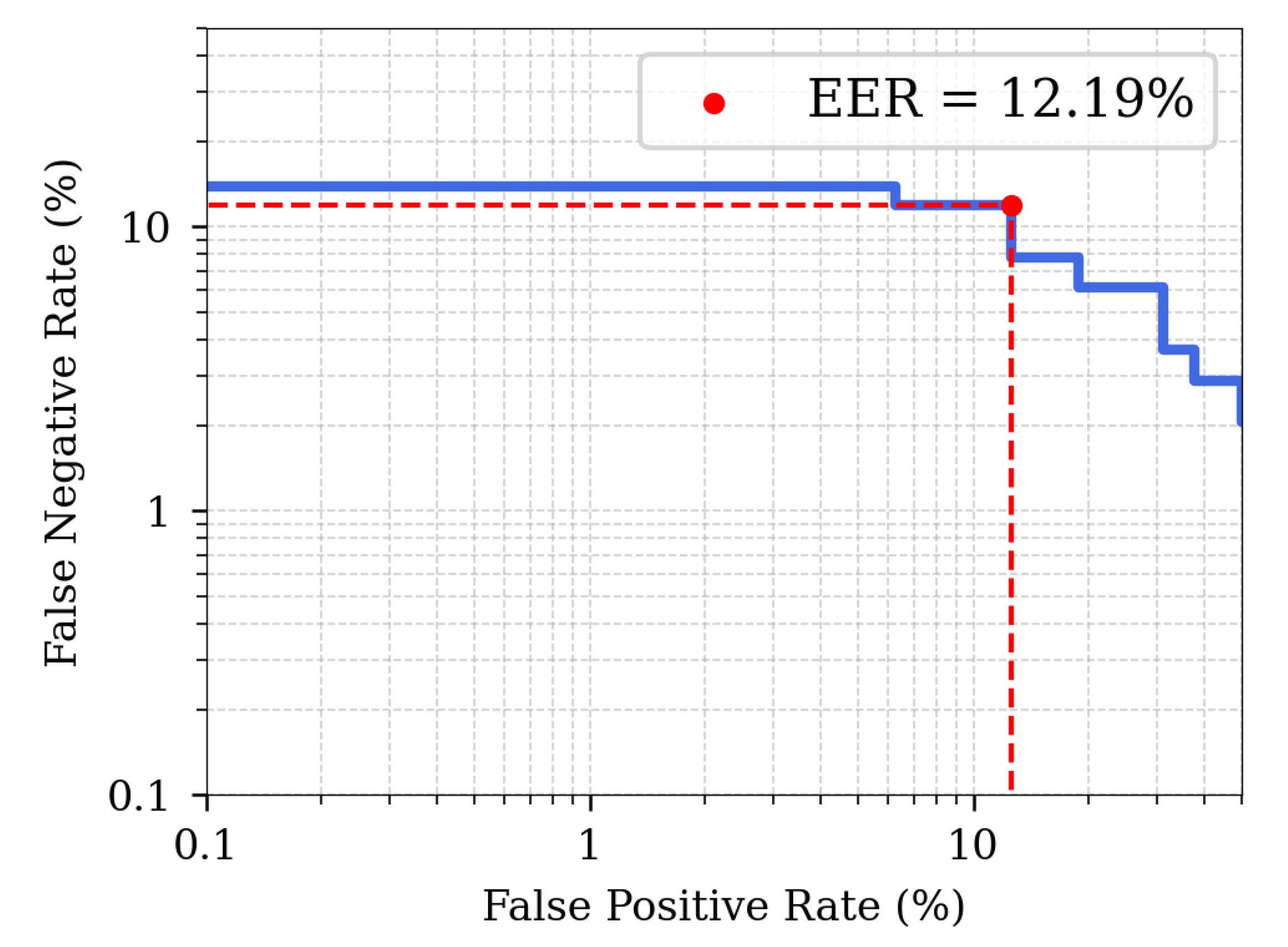

Beyond tracking progression with LLRs, it is also crucial to assess how well the framework can distinguish a healthy structure from any form of damage in a single decision step. Accordingly, the framework was evaluated on the binary task of separating the undamaged condition from all damaged states, directly measuring its ability to detect damage, a primary objective in SHM. A Detection Error Trade-off (DET) curve was generated by varying the decision threshold $\tau$ on the PLDA scores and plotting the False Negative Rate (FNR) against the False Positive Rate (FPR).

Figure 6 illustrates the trade-off between missed detections and false alarms. The Equal Error Rate (EER), the point where FNR equals FPR, provides a single summary metric of performance. For the LSTM model, the EER was 12.2%. Because both error rates equal 12.2% at this operating point, healthy and damaged states are each correctly identified approximately 87.8% of the time in this zero-shot setting.
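A DET curve and its EER can be computed by sweeping the threshold over all observed scores, as in the following minimal sketch. It uses the conventional definitions, with damage as the positive class: FPR is the fraction of healthy samples flagged as damaged, and FNR is the fraction of damaged samples missed. The inverse-LLR convention described in the text swaps which quantity is called FNR and which FPR. The EER, where the two rates coincide, is unaffected by that relabelling. The sketch picks the closest grid point without interpolation, and the function names are hypothetical.

```python
import numpy as np


def det_curve(scores, labels):
    """FPR and FNR for every threshold tau; a sample is declared damaged if score > tau.
    labels: 1 = damaged (positive), 0 = healthy; higher score = more deviation."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    taus = np.concatenate([[-np.inf], np.unique(scores)])
    healthy, damaged = scores[labels == 0], scores[labels == 1]
    fpr = np.array([np.mean(healthy > t) for t in taus])   # false alarms
    fnr = np.array([np.mean(damaged <= t) for t in taus])  # missed damage
    return taus, fpr, fnr


def equal_error_rate(scores, labels):
    """EER at the threshold where FPR and FNR are closest (no interpolation)."""
    taus, fpr, fnr = det_curve(scores, labels)
    i = np.argmin(np.abs(fpr - fnr))
    return 0.5 * (fpr[i] + fnr[i]), taus[i]


scores = [0.1, 0.2, 0.3, 0.4, 0.35, 0.5, 0.6, 0.7]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
eer, tau = equal_error_rate(scores, labels)
print(eer, tau)  # 0.25 0.35
```

In this toy example, one healthy sample (0.4) scores above one damaged sample (0.35). At the threshold 0.35, exactly one of four samples in each class is misclassified, giving an EER of 0.25 and a correct-identification rate of 1 − EER = 75% for both classes.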

According to the hypothesis test defined in Section 2, $\mathcal{H}_0$ corresponds to the healthy baseline and $\mathcal{H}_1$ to damaged conditions. Because inverse LLRs are used here, the hypotheses are reversed relative to the standard formulation: FNR now represents incorrectly classifying a healthy state as damaged, while FPR represents incorrectly classifying a damaged state as healthy. Since missing a true damage event (a high FPR under this reversed definition) is typically more critical than issuing a false alarm, maintaining a low FPR is generally preferred in safety-critical monitoring.

These behaviors can be better understood by examining the extremes of the DET curve. When the FNR was driven close to zero (around 2% at its minimum in the plot), the FPR rose to approximately 40–50%, showing that reducing false alarms too aggressively comes at the cost of failing to correctly identify damage, a dangerous outcome for SHM. Conversely, when the FPR approached zero (around 0.1%), the FNR increased to about 13–14%, meaning that if one prioritizes eliminating false identification of damaged states as healthy (critical for safety), the system must tolerate roughly 13–14% false alarms, a trade-off that may be acceptable in practice. Between these extremes lies the EER, representing a balanced operating point.

Overall, the results demonstrate that the LSTM backbone, trained solely on laboratory data, generates embeddings that can be directly evaluated on the Z24 Bridge without any structure-specific retraining. The LLR analysis captures the progression of damage, while the DET curve quantifies binary detection performance, confirming that the framework successfully enables zero-shot structural health monitoring in realistic conditions.

These results were obtained under a strict zero-shot protocol: no data from the Z24 Bridge (neither baseline nor damaged states) were used during model training or calibration. The LSTM backbone and PLDA model were trained exclusively on laboratory datasets (NEESR and LANL) and then applied to Z24 for the first time at inference. This setting differs fundamentally from traditional SHM approaches, which require baseline data from the target structure to establish reference conditions for damage detection. Consequently, direct quantitative comparison with conventional vibration-based methods or supervised learning approaches is not meaningful, as those methods cannot operate without target-structure data. The framework’s ability to achieve 87.8% detection accuracy without any Z24-specific training demonstrates the viability of zero-shot structural health monitoring, addressing a deployment scenario that traditional methods cannot handle.

5. Conclusions

This study introduces a real-time, generalizable framework for vibration-based structural health monitoring that combines streaming neural networks with probabilistic modeling to bridge the gap between controlled laboratory experiments and in-service infrastructure. By transforming raw acceleration data into damage-sensitive cepstral features and processing them through a stacked LSTM, the framework learns discriminative embeddings from diverse experimental datasets and transfers this knowledge directly to an unseen full-scale bridge without retraining. The integration of Probabilistic Linear Discriminant Analysis enables interpretable classification and robust hypothesis testing, providing a principled way to quantify deviations from a healthy baseline and to detect damage in a zero-shot setting.

On the laboratory datasets (NEESR and LANL), the stacked LSTM achieved high classification accuracies of 98.3% and 96.2%, respectively, and produced well-structured embeddings with clear separation between damage classes. When applied to the unseen Z24 Bridge, the same trained model successfully captured the progression of damage through log-likelihood ratios, showing low scores for early minor interventions, a marked increase at major events such as the concrete hinge failure, and the highest scores at the most severe tendon rupture states. In the binary damage-versus-undamaged test, the Detection Error Trade-off curve yielded an Equal Error Rate of 12.2%, corresponding to approximately 87.8% correct identification in this strict zero-shot scenario. These results confirm that the proposed methodology can extract damage-sensitive features from laboratory data and apply them to real-world infrastructure without retraining.

In summary, these results demonstrate three key outcomes of this study: (1) the proposed multi-task PLDA framework can learn generalizable damage-sensitive representations from heterogeneous laboratory datasets; (2) the learned embeddings can be directly applied to a full-scale bridge without any retraining or calibration; and (3) the framework provides interpretable probabilistic scores that remain effective under strict zero-shot conditions. Together, these findings confirm the feasibility of using knowledge learned from controlled experiments to detect and quantify damage in operational structures.

Collectively, these findings demonstrate that the framework can simultaneously handle streaming input data and generalize across domains, two requirements rarely addressed together in prior SHM work. Although the present study focused on a single full-scale bridge as the test case, the methodology is broadly applicable and extensible. Future work will be directed toward two main extensions. First, more expressive backbone networks, including attention-based and hybrid architectures, will be examined to better capture long-term temporal dependencies across experiments. Second, probabilistic variants of the PLDA model and alternative generative scoring approaches will be investigated to incorporate uncertainty quantification and enable continuous monitoring applications. These extensions are expected to further enhance the generalization and interpretability of cross-experiment damage detection frameworks. The results presented here establish a promising path toward practical, output-only SHM systems capable of delivering actionable insights for infrastructure resilience in real time.