1. Introduction

For years now, the primary sector has benefited from the ever-increasing development of technology: robotics [1,2], the Internet of Things (IoT) [3,4], and remote sensing [5] are established technologies in agriculture, which is increasingly taking on the meaning of Smart Agriculture. However, in many countries the agricultural sector suffers from a shortage of labor due to population aging, rural–urban migration, and a lack of interest among younger generations in agricultural careers.

Several factors call for new solutions for training operators in the agricultural sector: the high cost of training skilled operators; the low flexibility of training conditions, such as their strong dependence on seasonality; the need to train culturally diverse, non-homogeneous personnel; and the delayed feedback on results. New solutions can address these issues by bringing cutting-edge technologies, such as virtual reality (VR) and haptics, into the primary sector, yielding a training platform that is available throughout the year, highly flexible, and free of safety risks.

This work is part of the POC NODES “DEMETRA” multidisciplinary research project for technological innovation in the agro-industry sector. The project aims to evaluate the reliability of reality-based productions and to provide optimal digital tools for training agricultural operators. DEMETRA aims to develop a platform for training agricultural operators in a virtual environment, leveraging human-in-the-loop techniques and offering innovative levels of immersiveness. As a proof of concept (PoC) of the related technologies, this work deals with the design of a virtual training environment dedicated to pruning. Compared with existing simulators in the agricultural sector, DEMETRA’s platform features highly detailed and realistic 3D reconstructions of trees, which enhance the immersiveness of the simulator, let operators engage in complex agricultural scenarios with true-to-life interactions, and significantly improve the verisimilitude of the training experience.

This paper addresses the complex task of creating parametric digital twins of real trees and the advanced techniques used to accurately simulate the agricultural environment for operator training. It also describes the methods employed to simulate interactions with the trees, as well as the VR and haptic techniques used to enhance the immersive experience. Finally, it presents an assessment of the sense of presence (SoP) perceived by the user. Since the interaction between human and virtual environment plays a pivotal role in enhancing perceived immersion, the learning effect, and realism during training [6], an SoP assessment is crucial when developing interactive systems such as VR-based training platforms. In this study, the level of presence felt within the virtual environment was measured through the Igroup Presence Questionnaire (IPQ) [7].

The digital reconstruction of greenery still offers wide margins for investigation and in-depth analysis owing to the organic and complex nature of arboreal elements. The objective of the experiments is to validate the creation of a dynamic VR scenario as faithful as possible to real vegetation, which is then exploited through multisensory tools (such as haptic gloves and vocal instructions) to allow maximum immersion with minimal response times.

The simulation consists of cutting the model of a grafted hazelnut tree, reconstructed through an integrated laser scanner and photogrammetric survey, followed by the parameterization of the individual branches and the insertion of the model into a suitable virtual environment. Unlike the most common laser scanner acquisitions of static objects, this project addresses the long-standing challenge of surveying subjects affected by micro-movements caused by air displacement. The high precision of laser tools, integrated with the reconstruction functions of software such as SpeedTree [8], has recently enabled an alternative workflow that converts imperfect photogrammetric models into realistic, detailed reconstructions; in virtual training, such reconstructions can improve the understanding of techniques, immersion, involvement, and training accuracy, among other qualities.

This study is therefore a first step in an ongoing experimentation aimed at testing high-fidelity simulations and optimizing virtual training processes. The activity has an interdisciplinary character, spanning graphic topology and simulation, and relies on collaboration between researchers and agronomists to innovate specialist work.

2. State of the Art

Radhakrishnan analyzed 78 studies in the field of industrial training to assess the effectiveness of immersive virtual reality (IVR) training solutions [9,10,11]. The study shows that most IVR training solutions are implemented in the manufacturing and healthcare fields, and almost half of them teach operators procedural skills rather than perceptual-motor or decision-making skills. Notably, none of the analyzed studies was developed in the primary industry, which highlights the need to incorporate these technologies into the primary sector. However, the agro-industry is not entirely new to VR applications: early developments such as FARMTASIA [12] and AgriVillage [13] have already introduced virtual training programs for agricultural operators. Yet most of the solutions presented in the literature offer low realism and immersiveness because they rely on screens and manual controllers instead of head-mounted displays (HMDs) and haptic interfaces, technologies that could enhance the user experience and the transfer of skills to real life. Buttussi compared learner performance in a task under three conditions: high-fidelity IVR, medium-fidelity IVR, and desktop VR. High fidelity was shown to significantly enhance learner engagement and sense of presence compared with medium-fidelity IVR and desktop VR, indicating its superior effectiveness for training [14]. This highlights the importance of reconstructing environments with high fidelity through advanced 3D modeling techniques to maximize the benefits of IVR in training scenarios.

To date, high-quality instrumental digital reconstruction procedures are mainly practiced in professional or R&I sectors, often for large works in engineering, architecture [15], or archaeology [16]. Attempts to survey the natural landscape [17,18] using laser scanners or ground cameras have always left the problem of detailed vegetation reconstruction unsolved [19]. Current scanning tools cannot acquire stable data on organic elements subject to wind movement, which makes this task one of the most sought-after objectives of current research on the representation of greenery.

A promising alternative to manual modeling, which would be excessively demanding and imprecise when segmenting complex datasets, is AI-based shape segmentation [20]. In recent years, this methodology has been developed and implemented to support various professions [21], including vegetation studies [22,23], but to date it is not sufficiently complete or transferable to industrial applications.

For these reasons, the most valid alternative for immersive simulation purposes appears to be a multidisciplinary approach that combines scanning, semi-automatic post-production, and model parameterization [24]. This procedure has already been tested in other areas of botany [25] but apparently never for creating virtual training or other industry-oriented applications.

Once a faithful simulation environment has been created, a means of guiding the operator who is training on the simulator must be developed. For this purpose, marker-based motion capture systems are among the most structured and widespread techniques for reproducing human movement, both in professional settings and in the entertainment industry [26]. In many studies, motion capture technologies have been used to improve social presence and communication effectiveness [27], providing many insights for designing more natural VR interactions [28]. It has been shown that the absence of avatars reduces engagement and degrades communication quality [29]. For these reasons, a virtual instructor is considered a suitable option for an agricultural simulator as well. Creating the virtual instructor (i.e., a non-player character, NPC) required recording the spatial movements of an expert botanist, which was achieved using optoelectronic technology. To complement this system, the literature indicates haptic gloves as the most suitable control/navigation system for reproducing manual tasks based on hand sensoriality [30].

3. Materials and Methods

The use of advanced digital technologies, such as scanning and photogrammetry, can generate vast amounts of three-dimensional data, making it difficult to extract an archetypal representation of vegetation. Transforming the point cloud into simplified geometric models requires advanced knowledge of agronomy and botany, as well as targeted methodologies for efficient and meaningful digitization procedures.

In this sense, parametric processes for the predictive development of tree branching enable highly realistic and efficient simulations, offering a significant advantage in representing green spaces for planning and management. This approach combines integrated surveys, such as photogrammetry and ultra-high-resolution laser scanning, with reality-based parametric remodeling. For the hazelnut tree considered in this work, the workflow was structured to ensure maximum fidelity to reality, enabling an accurate analysis and a level of verisimilitude that gives botanical operators maximum immersion during cutting training. Preliminary tests and checks were first carried out in the field to gather the knowledge on which to structure the actual survey, which was then conducted in the season best suited to the evolution of the tree, so as to capture the detailed structure of the canopy and branches.

Following the digital reconstruction of a faithful replica of the hazelnut tree, the aim is to insert it into a multisensory simulation for training botanical operators in pruning and cutting branches. This action, which must be taught with care and awareness in the simulation, was agreed upon and supervised by experts in general arboriculture and tree cultivation: a virtual trainer demonstrates the technique to the user, who must then repeat it. During the demonstration, the trainer explains how the servo-controlled shear works and shows how to approach the plant with caution; which branches to select (i.e., the thinner ones that grow with a twisted direction toward the inside and would hinder the correct expansion of the plant); how to bend down and extend the arm to reach distant branches; at what height to make the cut (i.e., at the last internodes, highlighted by blooms and changes in thickness); how to stand up; and finally how to put the tools back. Because training takes place in a safe simulated environment, the user can make multiple attempts without harming themselves or the plants, which brings clear benefits to the introductory phases of this type of work.

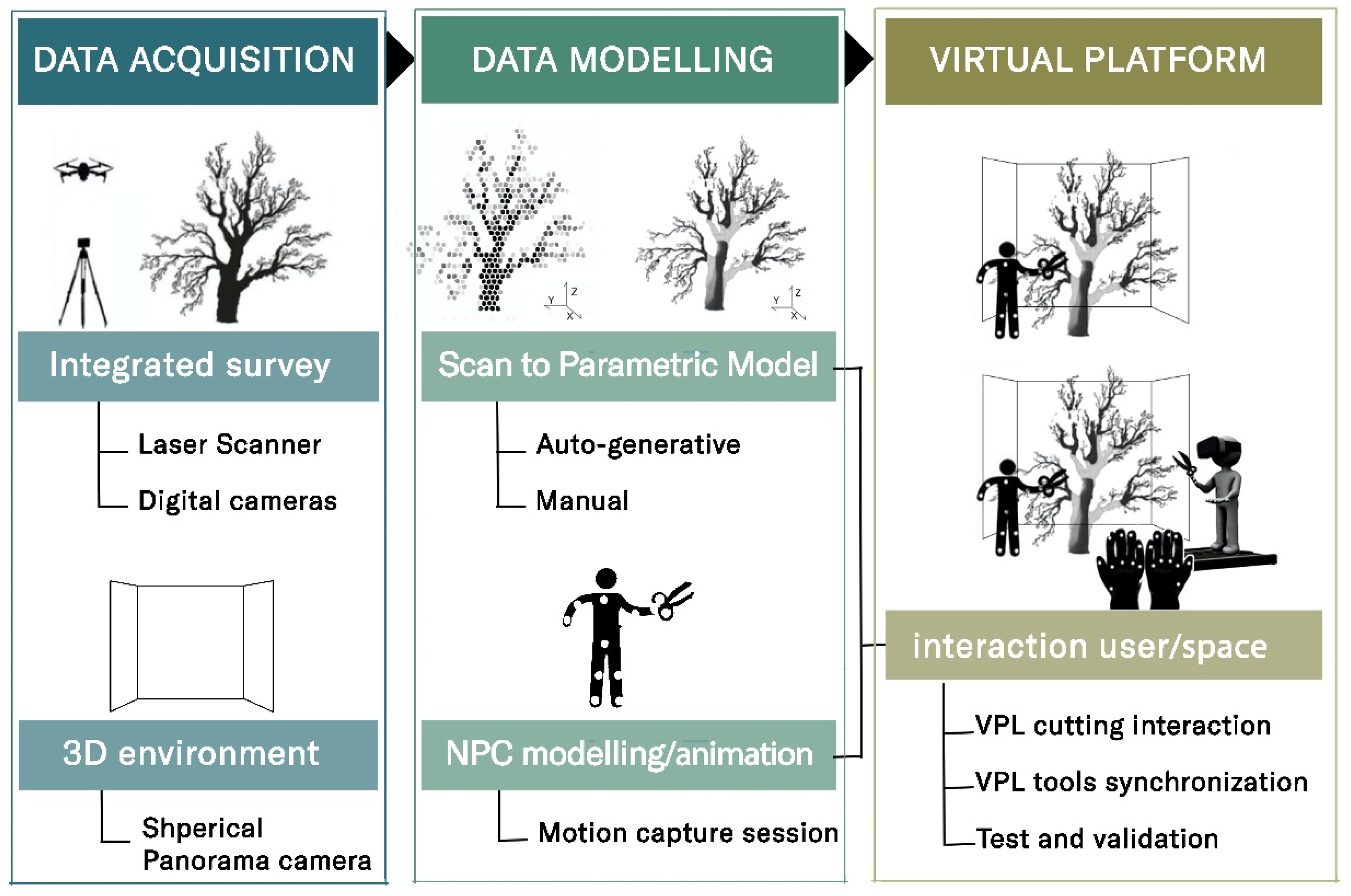

The complete workflow up to the simulation, schematized in Figure 1, therefore has a varied, interdisciplinary nature:

- Data acquisition campaign with an integrated survey;
- Scan-to-model and full texturing process, with parameterization of the model and completion of the missing parts;
- Insertion of the parametric model into the graphics engine that simulates the collisions;
- Spherical panorama shooting for the HDRI (high dynamic range image) and environmental composition;
- Creation of the motion capture suit and recording session;
- NPC modeling and animation;
- Integration of the haptic gloves into the virtual environment and development of the related logic;
- Visual Programming Language (VPL) programming of branch cutting interactions;
- VPL programming of the synchronization between instruments (i.e., virtual shear and haptic gloves);
- Tests and validation.

The structured method of study thus investigates the potential of scan-to-BIM procedures for developing informative digital twins of plants. Specifically, the research explores innovative methodological protocols, designed as PoCs that can be replicated in other contexts, aiming at an effective representation of greenery through parameterized prototypes. The study then explores the interaction between 3D models, photogrammetric meshes, and point clouds within game engine platforms, aiming to create a single BIM-based (building information modeling) virtual scenario composed of assets that are highly efficient in terms of metric accuracy, visualization, and information. To further enhance this immersive experience, the research also presents a VR experiment based on visual scripting, designed to develop virtual tools for querying the information associated with parametric models in real time. The investigations and experimental applications in this work aim to demonstrate the potential impact of a multidisciplinary workflow designed for the large-scale use of informed and testable models.

4. Development

4.1. Survey Campaign Experiments

The surveyed hazelnut tree is part of a plantation of several specimens on the campus of the Università Cattolica del Sacro Cuore in Piacenza. Two acquisition sessions were carried out at different stages of its growth, at the beginning of October and at the end of January. The latter provided the cleanest data thanks to winter leaf fall and is the most important part of the project, since pruning is carried out in wintertime (i.e., January and February).

The irregular shape of the arboreal architecture, the ascending branching, and the presence of a low and dense canopy led to the early exclusion of a single-sensor acquisition method, in favor of a multi-resolution documentation approach suitable for describing the heterogeneous morphology of the vegetation [31]. In particular, various environmental risk factors were identified at the outset of the survey and addressed through diversified methodologies, integrating range-based and image-based acquisitions.

To ensure maximum data integration during post-processing, control points were placed around the survey area on both vertical and horizontal planes. The initial processing results provided a representative database of the vegetation system, characterized by high metric accuracy but only an approximate morphological representation. The cause of this outcome lies, on the one hand, in an excess of acquired data, which generated excessive noise around the canopy, and, on the other hand, in a general discontinuity of the point cloud due to areas shadowed by the foliage.

Additionally, some issues related to canopy reproduction were identified, caused by the micro-movement of smaller shoots and the geometry of the leaves themselves, which are too thin and therefore complex to document.

With the aim of minimizing errors in the digital duplicate and addressing the identified challenges, an additional acquisition campaign was conducted on completely leafless trees. The second survey was carried out by applying new control points directly on the trees, thus optimizing the processing of photogrammetric datasets and laser scans.

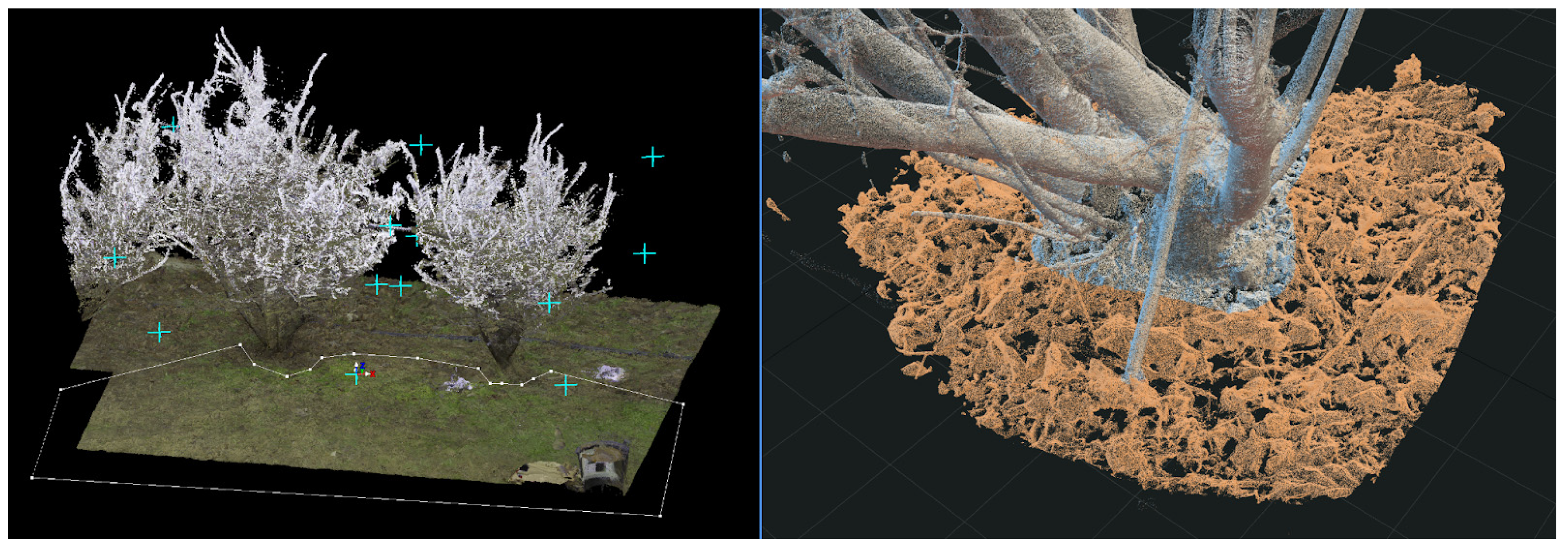

The lidar acquisition was carried out with a Leica RTC360 laser scanner (Figure 2), while a Nikon D5600 was used for the photographic sets. As expected, the branches most exposed to wind were not acquired correctly; they were subsequently reconstructed in post-production with the SpeedTree software. In total, six photographic sets of 300 photos each and seven lidar scan stations were acquired in the final survey campaign.

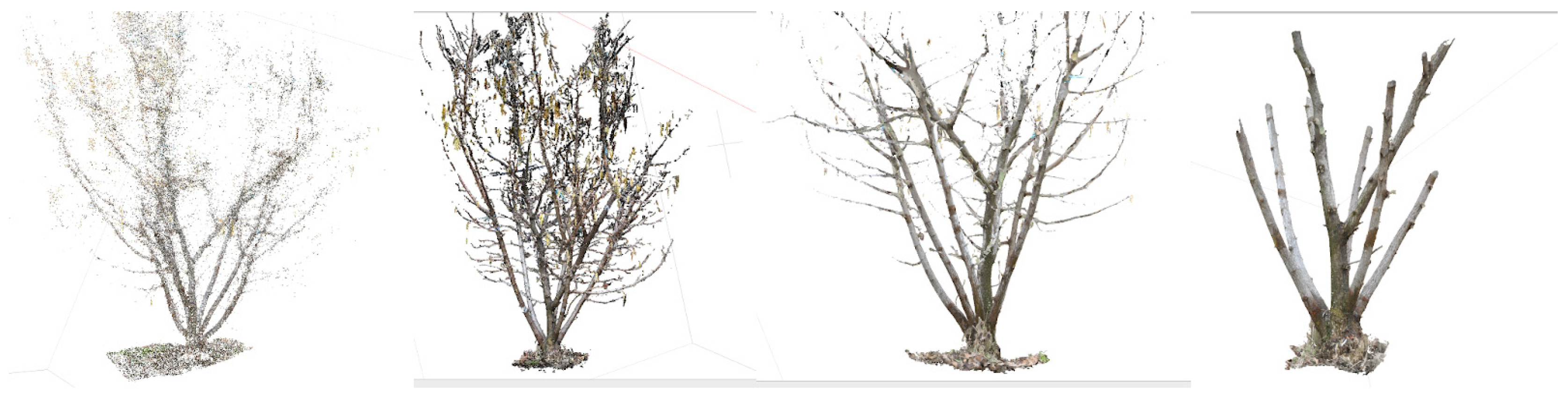

The acquired range-based and image-based data were processed with dedicated software: Leica Cyclone for the laser scanner data (Figure 3) and Agisoft Metashape for 3D photogrammetry (Figure 4). The laser scan positions were aligned with manual verification of homologous points [32]. Their accuracy was checked with CloudCompare (the alignment error was below 3 mm for most of the branches; Figure 5), and the aligned scans were then used as the basis for georeferencing the photogrammetric model. For the latter, 200,000 points were aligned for each set, and the final dense cloud counted around 30 million points, from which a 5-million-polygon mesh model was obtained. The dense cloud was first cleaned automatically with a color filter to remove movement noise, after which the remaining imperfections were cleaned manually. Two versions were then extracted from the most accurate model among those of the various sets: a complete digital twin (to be used later as a reference) and a clean model of the trunks only (for subsequent parameterization).
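The alignment check reported above can be illustrated with a cloud-to-cloud nearest-neighbor distance, the basic idea behind comparing two registered scans. The sketch below is a brute-force, pure-Python illustration on toy coordinates in millimetres; the function names, the toy clouds, and the use of 3 mm as a per-point tolerance are assumptions for illustration, not the processing actually run in CloudCompare for this study.

```python
import math

def nearest_distance(p, cloud):
    """Distance from point p to its nearest neighbour in cloud (brute force)."""
    return min(math.dist(p, q) for q in cloud)

def fraction_within(compared, reference, tol_mm=3.0):
    """Fraction of points in `compared` lying within tol_mm of `reference`."""
    hits = sum(1 for p in compared if nearest_distance(p, reference) <= tol_mm)
    return hits / len(compared)

# Hypothetical toy clouds in millimetres: scan_b is scan_a with small offsets.
scan_a = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 100.0, 0.0), (0.0, 0.0, 100.0)]
scan_b = [(1.0, 0.0, 0.0), (100.0, 2.0, 0.0), (0.0, 100.0, 5.0), (0.0, 0.0, 101.0)]

print(fraction_within(scan_b, scan_a))  # 3 of 4 points within 3 mm -> 0.75
```

Brute force is quadratic in the number of points; for clouds of tens of millions of points, real tools rely on spatial indexes such as octrees or k-d trees.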

After obtaining the best static model, it must be transformed into a parametric model (i.e., a model whose characteristics, such as branch length, radius, number of branches, leaves, and many others, can be controlled). This transformation allows live branch cutting and physics-based interactions, and it also solves the problem that the smallest branches cannot be scanned, which would leave gaps if the survey model were used directly.
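To make the notion of a parametric model concrete, the sketch below represents a branch by a handful of controllable parameters and regenerates its children from them, so that changing one parameter rebuilds the geometry. The class, its field names, and the constant scaling rule are hypothetical and do not describe SpeedTree's internal representation.

```python
from dataclasses import dataclass, field

@dataclass
class ParametricBranch:
    """Hypothetical parametric branch: children are derived from parameters."""
    length: float             # branch length in metres
    base_radius: float        # radius at the branch base in metres
    n_children: int = 0       # number of child branches to generate
    child_scale: float = 0.6  # child length/radius as a fraction of the parent's
    children: list = field(default_factory=list)

    def grow(self, depth: int) -> None:
        """Regenerate child branches recursively down to `depth` levels."""
        self.children = []
        if depth <= 0:
            return
        for _ in range(self.n_children):
            child = ParametricBranch(
                length=self.length * self.child_scale,
                base_radius=self.base_radius * self.child_scale,
                n_children=self.n_children,
                child_scale=self.child_scale,
            )
            child.grow(depth - 1)
            self.children.append(child)

    def count(self) -> int:
        """Total number of branches in this sub-tree, including itself."""
        return 1 + sum(c.count() for c in self.children)

trunk = ParametricBranch(length=2.0, base_radius=0.05, n_children=3)
trunk.grow(depth=2)
print(trunk.count())  # 1 + 3 + 9 = 13
trunk.n_children = 2  # change one parameter...
trunk.grow(depth=2)   # ...and regenerate the sub-tree
print(trunk.count())  # 1 + 2 + 4 = 7
```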

The parameterization of a discrete model can be carried out in various ways. The construction of the three-dimensional model required a series of experiments to determine which of the processing options found in the literature [23,33] best suited the case study, allowing the creation of a model with precise geometric and dimensional characteristics. Different modeling approaches were evaluated and compared, ranging from the most automatic (such as retopology or AI shape detection) to more manual ones: semi-automatic geometric modeling (reverse modeling and meshes from 3D scanning), manual modeling (low-poly meshes based on the point cloud), and generative modeling (semi-automatic, AI, deep learning). In this case study, the more manual approach was preferred to maintain a level of detail and verisimilitude not achievable otherwise. The SpeedTree software offers a series of functions that read the shape and color of the bases of the shoots to develop their continuations, and it optionally allows the exact position and direction of individual branches to be specified, generating a fully controllable digital twin.

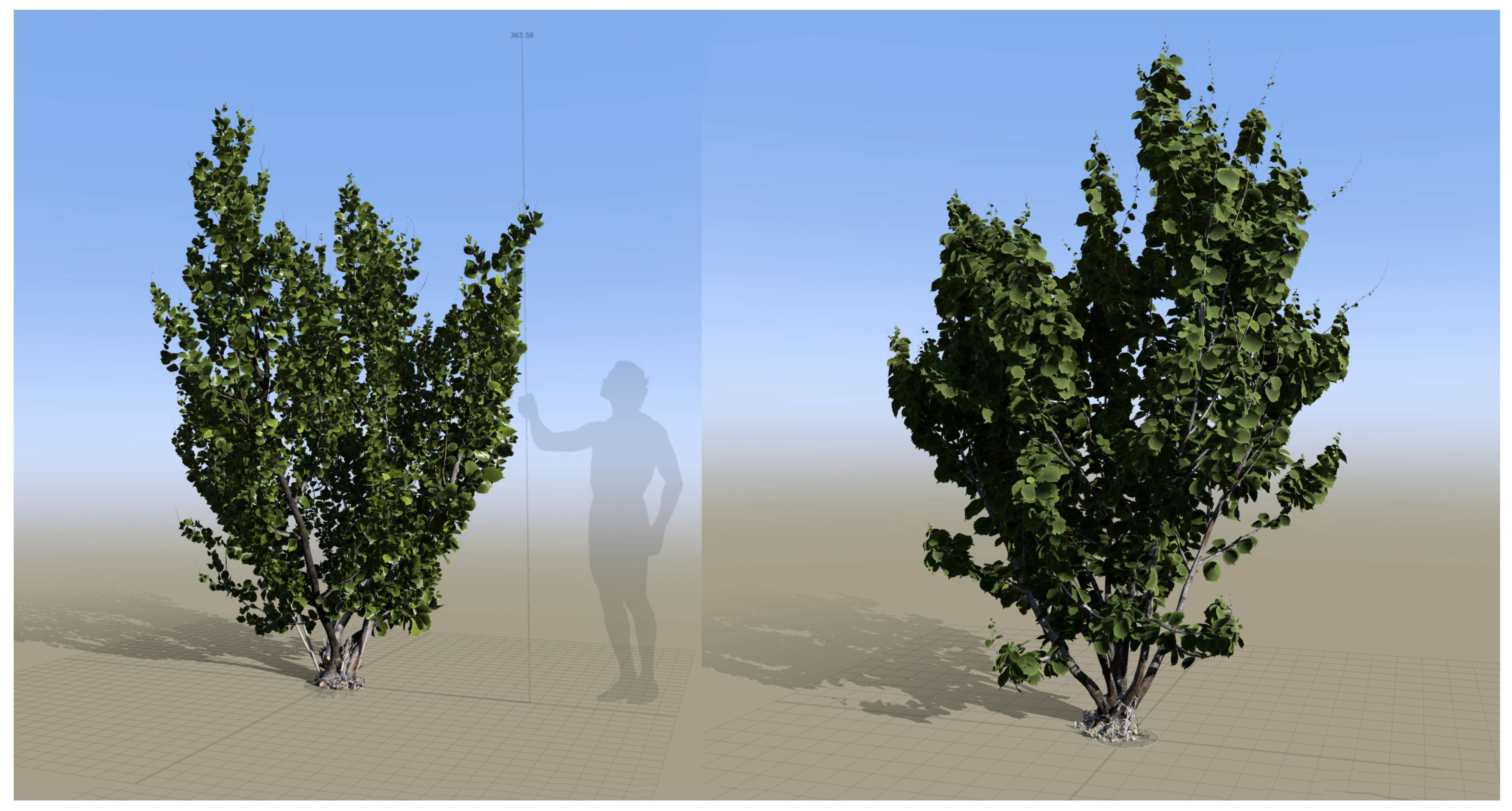

This phase (Figure 6) was therefore divided into: assisted reading of the cylindrical sections of each branch; extraction of the textures from the cylindrical mapping and their conversion into seamless textures; clearance (cleaning of the mesh edges); extension of the branches; shaping (torsion and orientation of the branches); manual tracing of the positions of the original branches; and placement of the leaves.

The process took approximately 20 min per branch for branches up to medium size, followed by multi-step branching of the main segments with appropriate parameters for twisting, internodes, welding angle, and the forces acting on them (such as gravity and wind). The leaves and inflorescences were reproduced through a short photogrammetric campaign on real leaves, carried out indoors on approximately 10 varieties to make the foliage heterogeneous (Figure 7). The final model (Figure 8) has approximately 500,000 polygons, of which approximately 200,000 belong to leaves, and the textures were arranged in a 2048 × 2048-pixel format for each branch, a format light enough not to burden the subsequent simulation.
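As a rough check on why a 2048 × 2048 texture per branch is considered light, the sketch below estimates uncompressed texture memory. It assumes 8-bit RGBA (4 bytes per pixel) and an optional full mipmap chain; these are illustrative assumptions, and game engines usually apply block compression, which reduces these figures considerably.

```python
def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4,
                  mipmaps: bool = True) -> int:
    """Uncompressed size of a texture, optionally including its full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total

base = texture_bytes(2048, 2048, mipmaps=False)
full = texture_bytes(2048, 2048)
print(base)  # 16777216 bytes = 16 MiB
print(full)  # 22369620 bytes, about 21.3 MiB
```

The full mip chain adds roughly one third to the base level, since each level holds a quarter of the pixels of the previous one.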

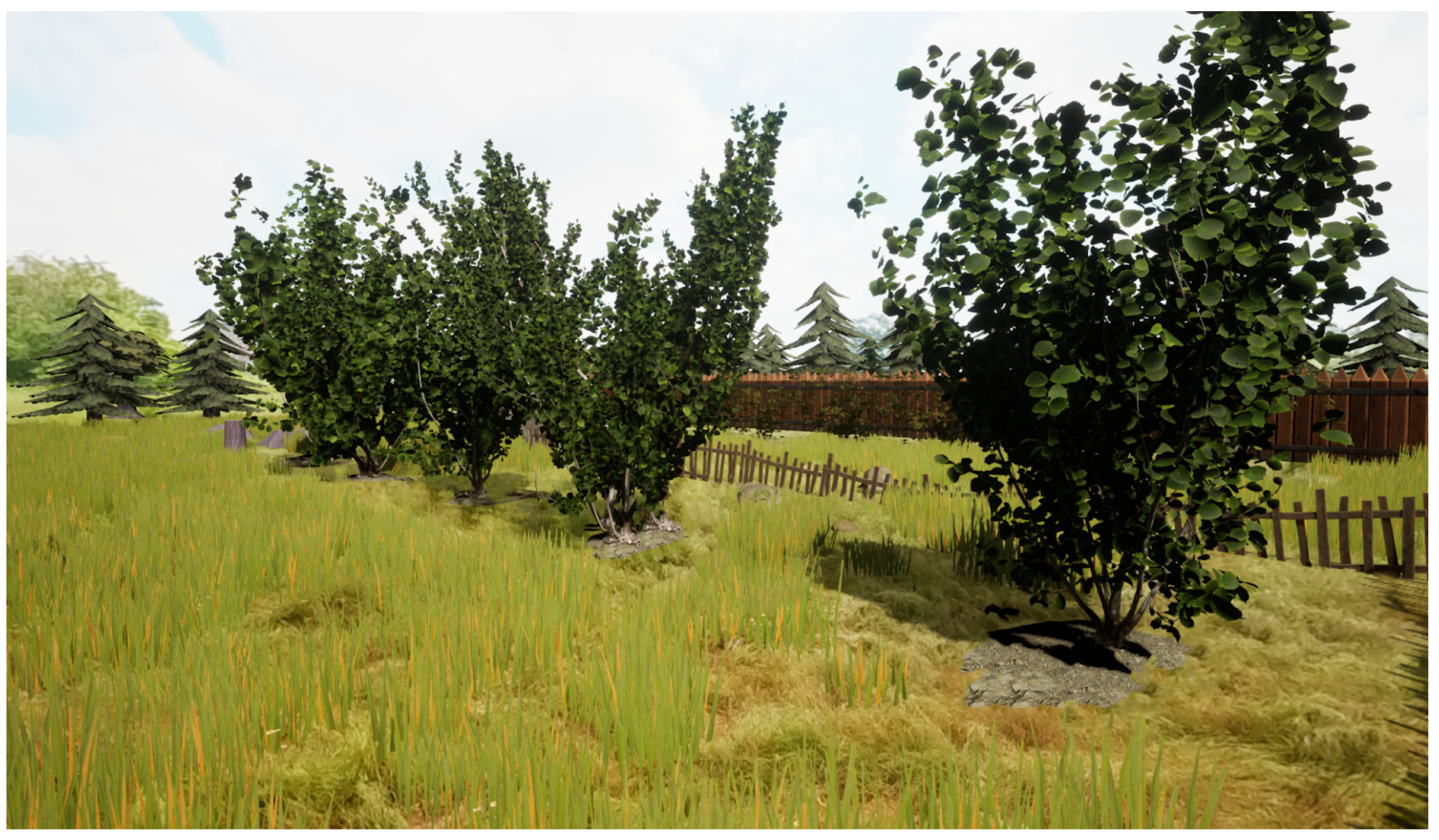

The virtual environment in which the user practices gives the training a professional and convincing context while keeping the computational load low (Figure 9). A spherical panorama of a green area in the urban area of Pavia was acquired with an Insta360 camera, automatically processed, and exported in HDR, which allowed the simulated space in Unreal Engine to be wrapped in an HDRI backdrop.

Finally, sounds and furnishings consistent with the natural setting were inserted to recreate the illusion of an agricultural operation, and rendering settings such as post-process volumes and temporal super-resolution anti-aliasing were applied to improve performance and image quality.

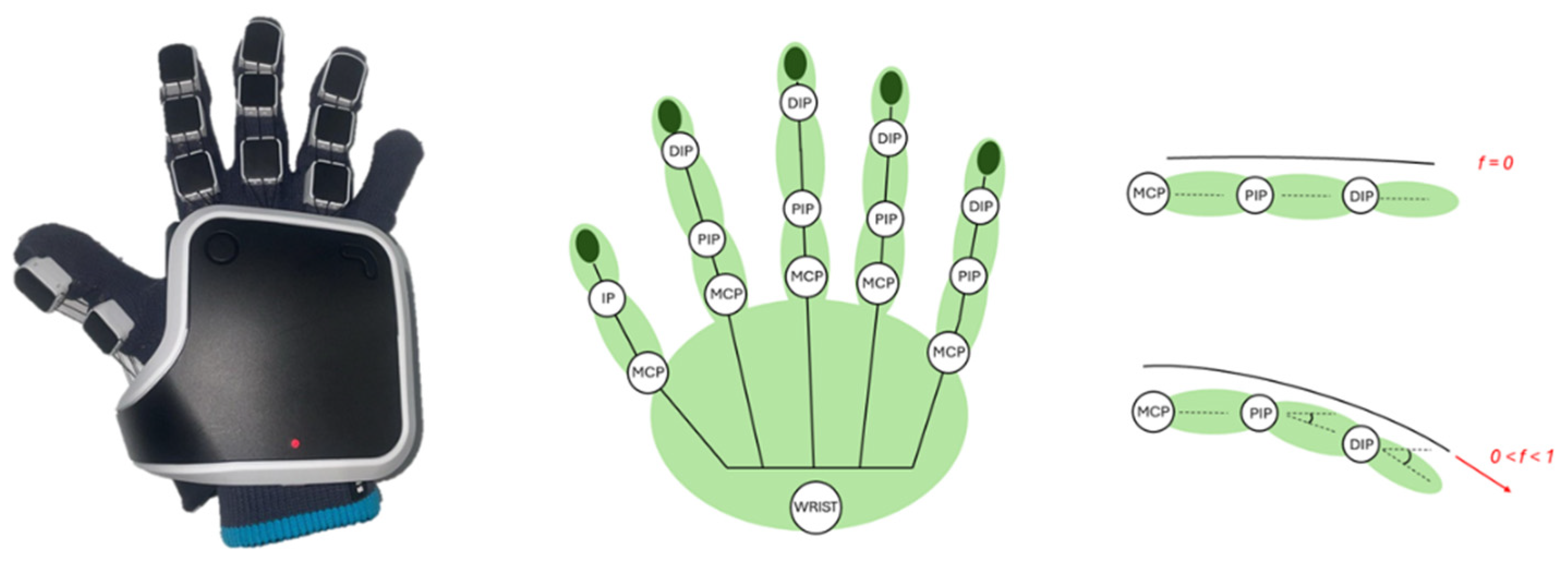

4.2. Haptic Interactions Within the Virtual Environment

The user starts the simulation in the middle of the virtual environment and can explore it using the teleport function or by walking within an empty room, thanks to the headset’s built-in tracking. Once the user reaches the virtual instructor, the teaching demonstration starts; when it ends, the user can grab the pruning tool and start cutting the branches of the chosen tree, following the instructor’s suggestions. The haptic interface used to develop the application is the SenseGlove Nova 2 haptic glove. This device reproduces the movements of the operator’s fingers within the virtual environment, allowing them to actuate the virtual pruning shears, and provides force feedback and/or vibrotactile feedback to the user. The device works over a wireless (Bluetooth) connection, and it is programmed within Unreal Engine 5.1 using the plugin provided by the manufacturer.

To reproduce the movements of the user’s fingers within the virtual environment, the flexion data of each finger must be extracted. The Nova 2 haptic glove is equipped with a system of three cables for each finger, wound around pulleys inside the device’s case. The extension of the cables therefore depends on the flexion of the fingers, which is expressed as a normalized flexion value ranging between 0 and 1.

To reproduce the movement of the fingers, the normalized flexion value was used to compute the rotation value of each finger’s joints. As each finger has three joints (two in the case of the thumb), the normalized flexion value alone is not sufficient to define their rotation values, but considering the natural closing gesture of the hand, certain relationships between the joints and the normalized flexion can be imposed, thus defining an unambiguous correspondence.

As previously introduced, the thumb has two joints, the metacarpophalangeal (MCP) and the interphalangeal (IP) joints, while the other four fingers have three joints: the metacarpophalangeal (MCP), proximal interphalangeal (PIP), and distal interphalangeal (DIP) joints (Figure 10).

Given that each joint has its own range of motion, the normalized flexion (between 0 and 1) was linked to the rotation angle of the MCP joint of each finger, which typically ranges between 0 and 90°. The following equations from the literature [34] were used to calculate the rotation values of the finger joints to be applied to the avatar’s hand in the virtual environment:

Here, f represents the normalized flexion of each finger, while MCP, PIP, and DIP denote the rotation angles of the respective joints.
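Purely as an illustration of how a single normalized flexion value can drive several joints, and not as a restatement of the equations from [34], the sketch below maps f to MCP = 90°·f, consistent with the 0 to 90° MCP range stated above, and then applies two assumed couplings: PIP proportional to MCP with a hypothetical ratio, and DIP ≈ (2/3)·PIP, a widely used approximation in hand animation. The function name and the `pip_ratio` parameter are hypothetical; the thumb (MCP and IP only) is not covered.

```python
def finger_joint_angles(f: float, pip_ratio: float = 1.0) -> dict:
    """Map normalized flexion f in [0, 1] to joint angles in degrees.

    Illustrative assumptions (not the equations used in this work):
      MCP = 90 * f            (MCP range 0..90 degrees)
      PIP = pip_ratio * MCP   (hypothetical linear coupling)
      DIP = 2/3 * PIP         (common DIP/PIP approximation)
    """
    f = min(max(f, 0.0), 1.0)  # clamp noisy sensor readings to [0, 1]
    mcp = 90.0 * f
    pip = pip_ratio * mcp
    dip = pip * 2.0 / 3.0
    return {"MCP": mcp, "PIP": pip, "DIP": dip}

print(finger_joint_angles(0.5))  # {'MCP': 45.0, 'PIP': 45.0, 'DIP': 30.0}
```

Tying all joints to one scalar reflects the choice described above: the glove provides one flexion value per finger, so any per-joint distribution must be imposed through fixed relationships.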

The user interacts with the virtual environment using the Meta Quest 3 headset controllers and the SenseGlove Nova 2 haptic gloves. Hand movement within the virtual environment is driven by the headset’s controllers, while finger movement is managed through the haptic gloves, as explained above.

The logic managing the interaction between the user’s hand and the virtual shear is based on the use of two Boolean variables: isGrabbing and isOverlapped. The variable isOverlapped describes the relative position between the hand and the shear, and it is true if the avatar’s hand is inside a collision box built around the shear, false otherwise. The variable isGrabbing describes the state of the fingers of the hand responsible for grabbing the shear. The value of the variable depends on the mean normalized flexion of the three fingers responsible for grabbing (thumb, middle finger, ring finger), so the variable is true if the mean value exceeds a certain threshold, false otherwise.
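The two Boolean variables can be sketched as follows. The axis-aligned collision box (a simplification of a box oriented with the shear), the grab threshold of 0.6, and the function names are assumptions for illustration; in the application these checks are implemented in Unreal Engine Blueprints.

```python
from statistics import mean

GRAB_THRESHOLD = 0.6  # hypothetical threshold on mean normalized flexion

def is_overlapped(hand_pos, box_min, box_max) -> bool:
    """True if the hand position lies inside the axis-aligned box around the shear."""
    return all(lo <= p <= hi for p, lo, hi in zip(hand_pos, box_min, box_max))

def is_grabbing(flexion: dict) -> bool:
    """True if the mean flexion of thumb, middle, and ring fingers exceeds the threshold."""
    return mean(flexion[k] for k in ("thumb", "middle", "ring")) > GRAB_THRESHOLD

flex = {"thumb": 0.7, "index": 0.1, "middle": 0.8, "ring": 0.75, "pinky": 0.2}
print(is_grabbing(flex))  # True (mean is about 0.75)
print(is_overlapped((0.1, 0.0, 0.9), (0.0, -0.1, 0.8), (0.2, 0.1, 1.0)))  # True
```

The index finger is deliberately excluded from the grab average, since it remains free to operate the trigger.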

The shear is initially placed on the avatar’s right hip. A third variable, isEquipped, describes whether the shear is equipped. isEquipped becomes true as soon as isGrabbing switches from false to true while isOverlapped is true; at that moment, the shear is attached to the avatar’s hand. If the user releases the grip, isGrabbing becomes false, isEquipped is reset to false, and the shear returns to its initial location.
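The equip logic amounts to a small per-frame state machine triggered by the rising edge of isGrabbing. The class and method names below are hypothetical, and returning "hand" or "hip" stands in for attaching the shear to the hand or returning it to its initial location.

```python
class ShearState:
    """Hypothetical equip logic: equip on a grab rising edge while overlapped."""

    def __init__(self):
        self.is_equipped = False
        self._was_grabbing = False  # previous frame's isGrabbing value

    def update(self, is_grabbing: bool, is_overlapped: bool) -> str:
        """Advance one frame and return where the shear is: 'hand' or 'hip'."""
        rising_edge = is_grabbing and not self._was_grabbing
        if rising_edge and is_overlapped:
            self.is_equipped = True    # attach the shear to the avatar's hand
        elif not is_grabbing:
            self.is_equipped = False   # grip released: shear returns to the hip
        self._was_grabbing = is_grabbing
        return "hand" if self.is_equipped else "hip"

s = ShearState()
frames = [(False, True), (True, True), (True, False), (False, False)]
print([s.update(g, o) for g, o in frames])  # ['hip', 'hand', 'hand', 'hip']
```

Requiring a rising edge means that a hand that is already closed cannot pick up the shear simply by moving into the collision box; the user has to open and close the grip again.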

This grabbing logic is accompanied by vibration and force feedback to make the experience more realistic and immersive. Specifically, force feedback on the three fingers responsible for grasping simulates the stiffness of the tool by blocking their movement, while vibrotactile feedback on the fingertips and palm reproduces the sensation of contact at the beginning of the grasp. The shear is actuated by the index finger, which is not subjected to force feedback. The normalized flexion value of the index finger controls the actuation of the shear trigger once a limit value has been exceeded. Once the trigger is actuated, the shear blade closes following an imposed law of motion, and, if the action cuts a branch, vibrotactile feedback is delivered to the user’s palm to reproduce the vibration of the tool caused by the cut.
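The trigger and blade logic can be sketched as a threshold on the index finger’s normalized flexion followed by a blade closure along a prescribed motion profile. The threshold, the closing time, the maximum opening angle, and the cosine profile (zero velocity at start and end) are all assumptions; the imposed law of motion actually used in the application is not specified here.

```python
import math

TRIGGER_THRESHOLD = 0.5   # hypothetical index-flexion limit for actuation
OPEN_ANGLE_DEG = 30.0     # hypothetical blade opening angle
CLOSE_TIME_S = 0.25       # hypothetical closing duration in seconds

def trigger_pressed(index_flexion: float) -> bool:
    """The shear trigger fires once index flexion exceeds the limit value."""
    return index_flexion > TRIGGER_THRESHOLD

def blade_angle(t: float) -> float:
    """Blade opening (degrees) at time t after actuation, cosine profile.

    Starts at OPEN_ANGLE_DEG with zero velocity and reaches 0 (closed)
    at CLOSE_TIME_S, also with zero velocity.
    """
    s = min(max(t / CLOSE_TIME_S, 0.0), 1.0)  # normalized time in [0, 1]
    return OPEN_ANGLE_DEG * 0.5 * (1.0 + math.cos(math.pi * s))

print(trigger_pressed(0.7))  # True
print(blade_angle(0.0), blade_angle(CLOSE_TIME_S))  # 30.0 0.0
```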

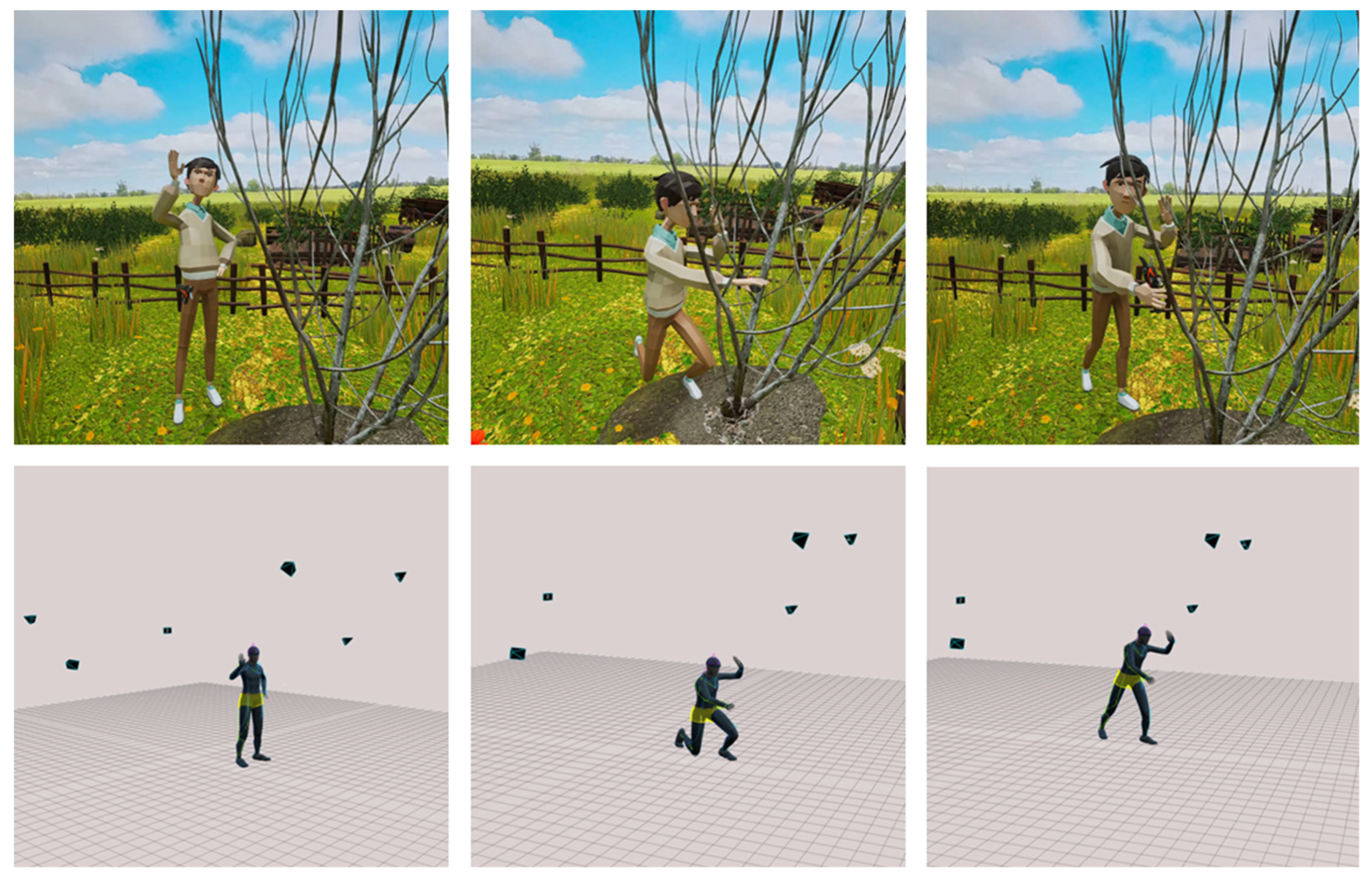

4.3. Cutting Tree Branches Logic

The virtual model of the leafless tree, once imported into Unreal Engine 5.1, was further processed to allow interaction between the user and the branches and to enable the cutting of branches with the virtual pruning shears.

The virtual tree, selected among the various models developed, was divided into several meshes, one for each branch, and as many procedural meshes were generated as there are branches in this subdivision.

A procedural mesh is a three-dimensional data structure representing the shape of an object that is generated on demand by an algorithm rather than created manually and stored in a static file. This type of mesh is particularly useful for objects that must be created dynamically and modified during a simulation. Using the ‘Copy static mesh to procedural’ command, each procedural mesh is assigned the mesh of the corresponding branch. Dependencies between branches were then set up through parent/child relationships, with each branch defined as a child of the branch to which it is attached. These dependencies are useful for the cutting methodology, as they allow all branches attached to the cut branch to be identified instantly, so that their physics can be simulated and they can fall to the ground together (Figure 11).
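The parent/child dependencies can be sketched as a simple tree in which collecting every branch attached to a cut branch is a depth-first traversal. The branch names and the child-to-parent dictionary are hypothetical; in the application this information is held by the branch objects in Unreal Engine.

```python
from collections import defaultdict

def build_children(parent_of: dict) -> dict:
    """Invert a child -> parent map into parent -> [children]."""
    children = defaultdict(list)
    for child, parent in parent_of.items():
        if parent is not None:
            children[parent].append(child)
    return children

def descendants(branch: str, children: dict) -> list:
    """All branches attached (directly or indirectly) to `branch`, depth-first."""
    result, stack = [], list(children.get(branch, []))
    while stack:
        b = stack.pop()
        result.append(b)
        stack.extend(children.get(b, []))
    return result

# Hypothetical hierarchy: each branch maps to the branch it is attached to.
parent_of = {"trunk": None, "B1": "trunk", "B2": "trunk",
             "B1a": "B1", "B1b": "B1", "B1a_i": "B1a"}
children = build_children(parent_of)
print(sorted(descendants("B1", children)))  # ['B1a', 'B1a_i', 'B1b']
```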

Once the cutting command is executed through the haptic gloves, the shortest distance is computed between each branch and a specific point between the shear blades, named ‘CuttingPoint’. The branch with the shortest distance is designated ‘BranchToCut’. An additional check ensures that this distance is below a given threshold, to prevent branches from being cut accidentally when the shears are operated away from the tree.
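The selection of BranchToCut can be sketched as a nearest-neighbor query with a distance cutoff. In this sketch each branch is approximated by a single straight segment from base to tip, which simplifies the actual branch geometry, and the branch names and the 5 cm threshold are hypothetical.

```python
import math

def point_segment_distance(p, a, b) -> float:
    """Shortest distance from point p to segment ab (3D tuples)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    denom = sum(c * c for c in ab)
    t = 0.0 if denom == 0 else sum(x * y for x, y in zip(ap, ab)) / denom
    t = min(max(t, 0.0), 1.0)  # clamp the projection onto the segment
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)

def branch_to_cut(cutting_point, branches: dict, max_dist: float):
    """Return the nearest branch name, or None if it is farther than max_dist."""
    name, d = min(((n, point_segment_distance(cutting_point, *seg))
                   for n, seg in branches.items()), key=lambda item: item[1])
    return name if d <= max_dist else None

# Hypothetical branches approximated as straight segments (base, tip), in metres.
branches = {"B1": ((0.0, 0.0, 1.0), (0.5, 0.0, 1.5)),
            "B2": ((0.0, 0.0, 1.0), (-0.5, 0.0, 1.6))}
print(branch_to_cut((0.25, 0.02, 1.25), branches, max_dist=0.05))  # B1
print(branch_to_cut((2.0, 2.0, 2.0), branches, max_dist=0.05))     # None
```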

Once the branch to be cut is identified, the Blueprint function ‘Slice Procedural Mesh’ is used to make the cut. This function takes as input a point on the cutting plane and the normal of that plane, which together define the plane that intersects the mesh. The CuttingPoint of the shears is used as the point, while the plane normal corresponds to the vector starting from the CuttingPoint and orthogonal to the blades. The orientation of the normal is chosen so that the new procedural mesh created by the function corresponds to the cut part of the branch, which must then fall.

To allow the cut part of the branch and all branches attached to it to fall to the ground, all branches defined as children of BranchToCut and lying above the cutting plane are attached to the new mesh generated by the cut through a physics constraint that locks all degrees of freedom. The physical simulation of the cut branch and of all branches attached to it then makes the entire block fall to the ground with a high level of realism.
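Choosing which children to attach to the falling piece reduces to a signed-distance test against the cutting plane defined by CuttingPoint and its normal, with the normal oriented toward the part that falls. This sketch tests only the base point of each child branch, which is a simplifying assumption; the branch names and coordinates are hypothetical.

```python
def signed_distance(p, plane_point, plane_normal) -> float:
    """Signed distance of p from the plane (positive on the normal's side).

    plane_normal is assumed to be a unit vector.
    """
    return sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, plane_normal))

def branches_to_attach(children_bases: dict, cutting_point, normal) -> list:
    """Children of BranchToCut whose base lies on the falling (positive) side."""
    return [name for name, base in children_bases.items()
            if signed_distance(base, cutting_point, normal) > 0.0]

# Hypothetical child branch bases of BranchToCut; cut plane at z = 1.2, normal up.
children_bases = {"C1": (0.1, 0.0, 1.1), "C2": (0.2, 0.0, 1.35), "C3": (0.3, 0.0, 1.5)}
print(branches_to_attach(children_bases, (0.0, 0.0, 1.2), (0.0, 0.0, 1.0)))  # ['C2', 'C3']
```

Branches attached further down the hierarchy to C2 or C3 would follow them through the parent/child relationships described earlier.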

This methodology makes it possible to cut any branch of the tree, whether a single branch or a branch with several children, with realistic feedback in the generation of the cut surface and the consequent fall of the cut part. Furthermore, this cutting logic allows branches that have already been cut to be cut again any number of times, placing no limits on further refinements and operations.

4.4. User Avatar and Virtual Instructor

The simulated environment was completed by inserting the user’s avatar and a virtual instructor of pruning techniques (the NPC), together with background and explanation audio assets. To enhance immersiveness and the sense of presence perceived by the user, it was decided to represent the avatar’s whole upper body. Since only position data from the HMD and controllers were available, the Unreal Engine plugin Mimic Pro [35] was used to simulate upper-body movement. The Mimic Pro plugin uses a control rig to provide stable, realistic full-body estimation that does not impede the player’s movement and has minimal impact on performance. Its inverse kinematics (IK) solver uses the position of the hands, taken from the HMD controllers, and the position of the head, taken from the HMD sensors, to reconstruct upper-body movement realistically. Full-body IK was applied to a highly realistic human skeletal mesh created with the MetaHuman plugin [36], which allows highly realistic digital human characters to be created, animated, and used.
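As a generic illustration of how IK recovers an intermediate joint from end positions (this is not the Mimic Pro solver), the sketch below solves the classic two-bone case, upper arm and forearm, with the law of cosines: given the shoulder-to-hand distance, it returns the interior elbow angle. The bone lengths in the example are hypothetical.

```python
import math

def elbow_angle_deg(upper_len: float, fore_len: float, shoulder_to_hand: float) -> float:
    """Interior elbow angle (degrees) for a two-bone arm, via the law of cosines.

    180 means a fully straight arm. The target distance is clamped to the
    reachable range, so out-of-reach targets yield a straight arm.
    """
    d = min(max(shoulder_to_hand, abs(upper_len - fore_len)), upper_len + fore_len)
    cos_elbow = (upper_len**2 + fore_len**2 - d**2) / (2 * upper_len * fore_len)
    cos_elbow = min(max(cos_elbow, -1.0), 1.0)  # guard against rounding
    return math.degrees(math.acos(cos_elbow))

print(round(elbow_angle_deg(0.3, 0.4, 0.5), 6))   # 90.0 (3-4-5 triangle)
print(round(elbow_angle_deg(0.5, 0.25, 1.0), 6))  # 180.0 (target out of reach)
```

A full upper-body solver also has to choose the elbow’s swivel around the shoulder-hand axis, which distance alone does not determine; that is where heuristics such as those in a control rig come in.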

The NPC, on the other hand, required a pre-recorded animation and voice, which are activated when the learner approaches. To achieve maximum coherence, expert project partners from the Università Cattolica del Sacro Cuore in Piacenza were consulted and recorded. The movements to be taught were recorded with a motion capture system based on six OptiTrack Prime 13W [37] high-speed cameras (Figure 12) with infrared detection, tracking 25 reflective markers on the upper body and 17 on the legs to calculate the 3D position of the body in real time.

The motion capture recording allowed for precise documentation of the movements necessary for pruning instruction. These movements, synchronized with an audio recording of guidance and instructions, were performed in a controlled environment without direct reference to a tree, instead mimicking the gestures required for pruning.

The recorded data were saved in the Motive software and streamed in real time to a skeletal mesh in Unreal Engine via the OptiTrack Live-Link plugin. This plugin not only enabled live streaming of the animation but also facilitated the rigging needed to map the recorded movement data onto the corresponding bones of the virtual character’s skeleton.

Specifically, the rigging process ensured that the skeletal mesh in Unreal Engine correctly interpreted the motion capture data, preserving realistic joint movement and anatomical fidelity. Once transferred to Unreal Engine, the animation was recorded as an FBX file. This file was then processed to correct artifacts that may have been introduced during the motion capture session, such as unwanted vibrations or unnatural movements. After cleanup, the animation was reimported into Unreal Engine and further adjusted to align it with one of the hazelnut trees in the virtual scene, so that the instructional sequence takes place in direct proximity to the tree, enhancing the realism and effectiveness of the training session.
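The paper does not state which tool or filter was used to remove vibrations from the FBX animation. As an illustration only, jitter on a single animation channel (for example, one Euler-angle curve sampled per frame) can be reduced with a centred moving average, as in the sketch below. Quaternion rotation tracks would need a rotation-aware filter instead.

```python
def smooth_curve(values, window=5):
    """Centred moving average over one animation channel.

    The window shrinks near the ends of the clip so that the output has the
    same number of frames as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    out = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        segment = values[lo:hi]
        out.append(sum(segment) / len(segment))
    return out


# Hypothetical jittery elbow-angle curve (degrees per frame).
noisy = [40.0, 43.0, 39.0, 44.0, 40.0, 45.0, 41.0]
print(smooth_curve(noisy, window=3))
```

Larger windows remove more jitter but also flatten fast intentional gestures, so the window size is a trade-off to tune per clip.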

Figure 13 shows a comparison between the pose of the virtual instructor within the virtual environment and the raw skeletons acquired with the OptiTrack motion capture system.

Furthermore, a pair of pruning shears was added to the NPC’s hand, and the shears were made able to interact with the environment. The behavior of the plant during the NPC tutorial is not baked into the recorded NPC animation; instead, it is controlled through special branches that extend the standard branches of the tree with a collision check triggered exclusively by the NPC’s shears. When a collision with the shears is detected during the animation, the branch detaches from the tree and falls to the ground, simulating a natural cut.
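The filtering logic of these tutorial branches can be sketched in an engine-agnostic way. The example below assumes that the NPC's shears are identified by a tag; the tag name `NPCShears` and the classes are hypothetical. In Unreal Engine the equivalent check would typically sit in an overlap event handler (for instance one bound to `OnComponentBeginOverlap`) and use `AActor::ActorHasTag`.

```python
from dataclasses import dataclass, field

NPC_SHEARS_TAG = "NPCShears"  # hypothetical tag carried only by the NPC's shears


@dataclass
class Actor:
    name: str
    tags: set = field(default_factory=set)


@dataclass
class TutorialBranch:
    name: str
    attached: bool = True
    falling: bool = False

    def on_overlap(self, other: Actor) -> bool:
        """Detach only when the overlapping actor is the NPC's shears.

        Returns True if this overlap caused the cut, False otherwise.
        """
        if not self.attached or NPC_SHEARS_TAG not in other.tags:
            return False
        self.attached = False
        self.falling = True  # hand the branch over to physics so it drops
        return True


branch = TutorialBranch("tutorial_branch_01")
branch.on_overlap(Actor("player_shears", {"PlayerShears"}))  # ignored
branch.on_overlap(Actor("npc_shears", {NPC_SHEARS_TAG}))     # cut
print(branch)
```

Keeping the cut behavior on the branch rather than in the animation means the same recorded clip can be reused while the tree responds only at the moments of contact.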

4.5. User Presence Assessment

To measure the level of presence perceived by users within the virtual environment, the IPQ [7] was administered. The questionnaire comprises 14 items rated on a 7-point Likert scale and is divided into three subscales plus one general item (G). The subscales address spatial presence (SP), the sensation of being physically present in the virtual environment; realism (REAL), the subjective experience of realism; and involvement (INV), the degree of attention devoted to the virtual environment. A group of 30 volunteers (9 females and 21 males) aged between 22 and 44 years (M = 28.83, SD = 5.00) was recruited from the university community using a convenience sampling approach [38]. The participants, who had little or no prior experience with VR and haptic technologies, were asked to complete the virtual pruning training session. The training comprised an initial instructional segment led by the virtual instructor, followed by a hands-on phase in which users cut tree branches according to the given instructions, using a haptic glove together with a VR headset and controllers. Immediately after the training session, the participants completed the IPQ.

Table 1 shows the questions of the IPQ with the items grouped by category.

5. Results and Discussion

The first test was carried out in a laboratory environment, running the simulation as a 1.32 GB executable file on a Windows 11 system equipped with an Intel Core i9-K processor and an NVIDIA RTX 4090 GPU; no glitches or slowdowns were observed in a subjective assessment. The cutting operation behaved according to the expected parameters; the frame rate was set at 90 fps, the resolution (as per the headset settings) was 2064 × 2208, and the engine reported no bugs. The simulation therefore meets the relevant performance standards and allows agricultural operators to be trained in complete safety and with maximum immersion, while providing curated and always updatable theoretical knowledge.
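The reported settings translate into a concrete per-frame budget, which helps explain why a high-end GPU was used. The arithmetic below uses only the figures given in the text; whether 2064 × 2208 refers to each eye or to the whole display is not stated, so the pixel rate should be doubled if it is per eye.

```python
FPS = 90                     # target frame rate reported in the text
WIDTH, HEIGHT = 2064, 2208   # resolution reported in the text

frame_budget_ms = 1000.0 / FPS              # time available to render one frame
pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS

print(f"Frame budget: {frame_budget_ms:.2f} ms")      # Frame budget: 11.11 ms
print(f"Pixels per frame: {pixels_per_frame:,}")      # Pixels per frame: 4,557,312
print(f"Pixels per second: {pixels_per_second:,}")    # Pixels per second: 410,158,080
```

Any frame that takes longer than about 11 ms to simulate and render is dropped or reprojected by the headset runtime, which users typically perceive as stutter.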

The immersive simulator was subsequently presented to early adopters and at an agro-industry trade fair (EIMA 2024), where users responded positively to the immersiveness of the pruning action.

Building a three-dimensional model, especially for simulating vegetation, requires careful selection of the processing methods that best meet the specific needs of the case study. A series of experiments was conducted to evaluate and compare different modeling approaches: semi-automatic geometric modeling (such as reverse modeling and mesh generation from 3D scanning), manual modeling (low-poly meshes based on point clouds), and generative modeling (using AI and deep learning techniques). Among these, manual modeling was preferred in this case study to ensure a level of detail and realism that would be difficult to achieve with automated approaches. The selected software (SpeedTree 9.5.2) was used to develop fully controllable digital duplicates of the hazelnut tree. However, while these methods ensure a high level of detail and realism, they are rather costly in terms of time, required expertise, and replicability on other plant specimens.

Manual modeling, for example, requires significant effort and technical knowledge, not only to create the three-dimensional model but also to optimize its textures and geometries.

The accurate reproduction of finger movements and the manipulation of the virtual pruning shears with force feedback were achieved by translating the finger flexion data from the haptic gloves into joint rotations within the virtual environment. Overall, this approach demonstrates the importance of combining different modeling and interaction technologies to create immersive, detailed simulations for practical applications such as virtual training or environmental simulation. The combination of manual modeling, generative techniques, and haptic feedback yields a highly controllable, engaging experience that stands out for its realism and attention to detail.
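Figure 10 describes a model that computes the finger joint angles from the normalized flexion value f provided by the glove. The paper does not report the mapping itself, so the sketch below assumes a simple linear one: hypothetical maximum angles for the MCP and PIP joints, and the DIP joint coupled to the PIP joint with the commonly used 2/3 ratio.

```python
# Hypothetical flexion limits in degrees; not values reported in the paper.
MAX_MCP_DEG = 90.0
MAX_PIP_DEG = 100.0
DIP_PER_PIP = 2.0 / 3.0  # common approximation coupling DIP to PIP flexion


def finger_joint_angles(f):
    """Map a normalized flexion value f in [0, 1] to joint angles in degrees."""
    f = min(max(f, 0.0), 1.0)  # glove readings may slightly overshoot the range
    pip = f * MAX_PIP_DEG
    return {"MCP": f * MAX_MCP_DEG, "PIP": pip, "DIP": DIP_PER_PIP * pip}


for f in (0.0, 0.5, 1.0):
    print(f, finger_joint_angles(f))
```

A linear mapping is the simplest choice; a per-user calibration of the open and closed hand poses, or a non-linear curve per joint, would follow the real finger kinematics more closely.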

5.1. User Virtual Environment Interaction

To transfer real-life skills through VR-based training, it is fundamental to ensure high levels of embodiment and presence for the user. To this end, every aspect of this study was designed to maximize immersion and realism, using cutting-edge technologies such as haptic interfaces to make the training experience more effective. A key component of the system is the step-by-step virtual instructor, positioned directly at the tree, which provides precise guidance without time constraints, language barriers, or variability in teaching approaches. The haptic gloves further enhance realism by providing tactile feedback that closely mimics real-world pruning interactions. This narrows the gap between virtual training and fieldwork, so that the skills acquired in the simulation remain applicable in real-world scenarios.

Additionally, the realistic simulation of branch-cutting mechanics, including the dynamic behavior of falling branches, together with the photorealistic representation of branches and their interaction with the environment, improves the sense of immersion and strengthens user engagement and learning retention. Preliminary evaluations indicate that this technology has significant potential to improve skill acquisition and training efficiency in agriculture, offering a scalable, highly immersive solution and a valuable tool for operator education. Future developments may include using the motion capture system to analyze the operator’s movements during training, enabling real-time correction of improper techniques and postures. Moreover, the data collected from the various sensors could support in-depth ergonomic studies, made possible by markerless, AI-based human pose tracking systems [39], thereby optimizing training strategies to improve both efficiency and user comfort.

5.2. Sense of Presence Assessment Results

The collected data were preprocessed: the responses were converted to a 0–6 scale, and the reversed items (REAL1, SP2, and INV3) were recoded to match the direction of the other items.
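The recoding step can be written as a single function. The sketch assumes that the raw answers were stored as integers from 1 to 7 (the paper does not state the raw coding); a score is shifted to 0–6 and, for the three reversed items, mirrored so that higher values always mean more presence.

```python
REVERSED_ITEMS = {"REAL1", "SP2", "INV3"}  # reversed items listed in the text


def rescore(item, raw):
    """Convert a raw 1-7 answer to 0-6, mirroring reversed items."""
    if not 1 <= raw <= 7:
        raise ValueError(f"{item}: answer {raw} is outside the 1-7 scale")
    score = raw - 1
    return 6 - score if item in REVERSED_ITEMS else score


print(rescore("G", 6), rescore("SP2", 6))
```

If the raw data were instead coded from -3 to +3, the shift would be `raw + 3` with the same mirroring for reversed items.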

A first descriptive analysis of the collected data was conducted, computing the mean, standard deviation, median, and range of each item across all 30 participants.
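The per-item statistics can be computed with Python's standard `statistics` module. The sketch assumes the sample standard deviation (n - 1 denominator), which the paper does not specify; the example responses are made up for illustration and are not the study data.

```python
import statistics


def describe(scores):
    """Descriptive statistics for one item's rescored (0-6) responses."""
    return {
        "mean": statistics.mean(scores),
        "sd": statistics.stdev(scores),  # sample SD (n - 1); an assumption
        "median": statistics.median(scores),
        "range": (min(scores), max(scores)),
    }


def describe_items(responses_by_item):
    """Apply describe() to every item, e.g. {"G": [...], "SP1": [...], ...}."""
    return {item: describe(values) for item, values in responses_by_item.items()}


# Made-up responses for two items (not the study data).
print(describe_items({"G": [3, 4, 5, 5, 6], "REAL4": [0, 1, 1, 2, 3]}))
```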

Table 2 shows the results obtained in this analysis.

The general item G, measuring the “sense of being there”, has a relatively high mean (4.73) and median (5.00), indicating generally positive responses, while its range (3–6) shows that responses were concentrated in the upper half of the scale. All SP items have high mean scores (4.10 to 4.67), indicating generally positive spatial presence, with relatively consistent responses (standard deviation between 0.88 and 1.57). The INV category shows moderate means; item INV3 has the lowest mean (3.53) and is among the most variable items, with a standard deviation of 1.57. The ranges obtained for these items also reveal a wider spread of opinions. The realism category shows the lowest means, particularly for item REAL4 (1.37). The low medians of REAL3 and REAL4 (1.00 and 2.00, respectively) suggest that many participants gave very low ratings, indicating that this aspect was perceived less favorably.

Interestingly, the items with the highest variability (INV3 and SP2) are two of the three reversed items in the questionnaire. This could suggest that their reverse wording contributed to the increased variability: participants may have found these items harder to interpret, leading to a wider distribution of scores.

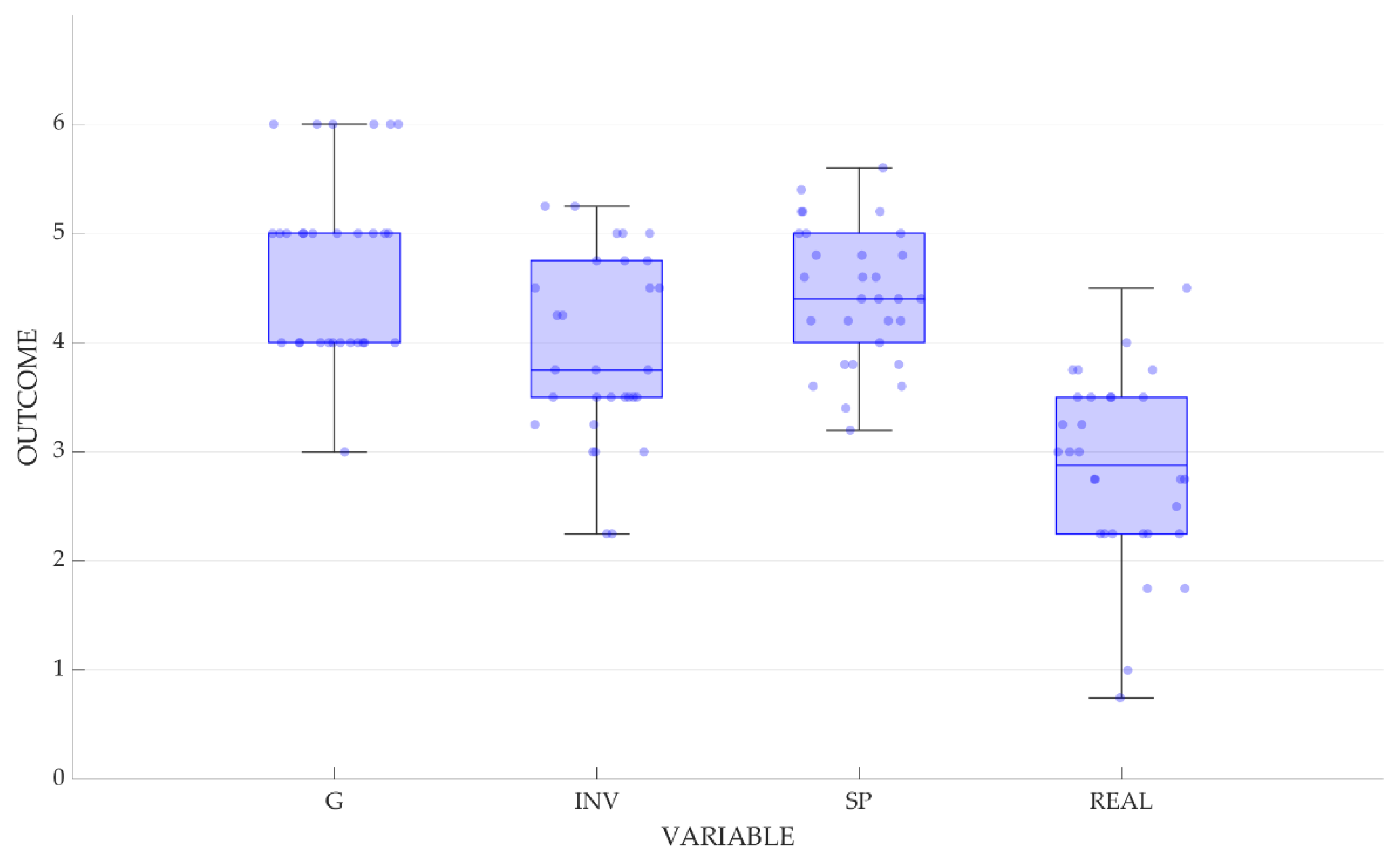

To gain deeper insight into participants’ experience, the average score of each category (G, INV, SP, and REAL) was computed for each participant, yielding four aggregated scores per participant.

Figure 14 shows a boxplot visualization of these data to compare central tendency, variability, and potential outliers across the four categories.
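The aggregation and the quantities drawn in a boxplot can be sketched as follows. The item-to-subscale mapping follows the IPQ structure (one G item, five SP, four INV, and four REAL items, 14 in total); the quartile method (linear interpolation, `method="inclusive"`) and the Tukey 1.5 × IQR whisker rule are assumptions matching common plotting defaults, not settings reported in the paper.

```python
import statistics

# IPQ item-to-subscale mapping (14 items: G + 5 SP + 4 INV + 4 REAL).
SUBSCALES = {
    "G": ["G"],
    "SP": ["SP1", "SP2", "SP3", "SP4", "SP5"],
    "INV": ["INV1", "INV2", "INV3", "INV4"],
    "REAL": ["REAL1", "REAL2", "REAL3", "REAL4"],
}


def category_means(responses):
    """Average one participant's rescored (0-6) items per category."""
    return {cat: statistics.mean(responses[item] for item in items)
            for cat, items in SUBSCALES.items()}


def box_stats(values):
    """Quartiles, Tukey 1.5*IQR whiskers, and outliers for one category."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in values if low <= v <= high]
    return {
        "q1": q1, "median": median, "q3": q3,
        "whiskers": (min(inside), max(inside)),
        "outliers": sorted(v for v in values if v < low or v > high),
    }
```

In use, `category_means` is applied to each of the 30 participants, and `box_stats` is then applied to the 30 values of each category to obtain one box per category.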

The spatial presence (SP = 4.44) and “being there” (G = 4.73) categories showed the highest and most consistent scores, suggesting a clear and convincing perception of the surrounding environment. Perceived involvement was relatively high (INV = 3.92) but had a slightly lower median and greater variability, indicating differences in the degree of immersion among participants. Finally, realism received the lowest scores (REAL = 2.83), indicating a weaker perception of realism than of the other dimensions.

Although effort was made to model the plant faithfully, as shown by the low deviation values obtained in the comparison with the real plants, the deliberate introduction of purely virtual elements, such as the instructor, and of some components needed to build the virtual environment may have reduced the realism perceived by users. Nevertheless, spatial presence and involvement scores are high, underlining the immersiveness of the virtual environment. Overall, the experience helps users immerse themselves in the pruning activity, thanks in part to the high fidelity of the plant reconstruction and the stimuli delivered through the haptic interfaces.

5.3. Potential File Exchanges with Predictive Software

During the research, preliminary studies were also carried out on methods for interaction and bidirectional data exchange between the virtual training scenario developed in this work and a predictive tree growth model, i.e., a digital hazelnut model used in botany to simulate the state of the plant over time [40,41].

At present, however, the two models differ considerably in quality:

- Predictive growth models rely on a strong abstraction of the tree shape and reduce the key elements of the hazelnut to simple geometries: cylinders, spheres, and surfaces.

- The survey model, on the other hand, is a faithful, detailed reconstruction aimed at providing maximum immersion to the agricultural operators who will use it.

Both models can be exported in OBJ format, but their polygon counts differ by orders of magnitude: hundreds for the former and hundreds of thousands for the latter. A first exchange was therefore attempted by importing the predictive model into the realistic simulation, cutting it with the haptic gloves, and returning it to the growth simulation software. This direction of exchange was successful, whereas the opposite direction (sending the detailed model to the growth simulation) was not feasible because of the limited compatibility between the graphics engines.
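Comparing the two models starts with counting their geometry. The sketch below reads Wavefront OBJ text and counts vertices, faces, and the triangles obtained by fan-triangulating each face (an n-sided face gives n - 2 triangles). It is a minimal illustration: it ignores line continuations and does not validate indices.

```python
def obj_polygon_stats(obj_text):
    """Count vertices, faces, and triangles in Wavefront OBJ text."""
    vertices = faces = triangles = 0
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":            # geometric vertex ("vn"/"vt" are skipped)
            vertices += 1
        elif parts[0] == "f":          # face with one index group per corner
            corners = len(parts) - 1
            faces += 1
            triangles += max(corners - 2, 0)
    return {"vertices": vertices, "faces": faces, "triangles": triangles}


toy_mesh = """# toy mesh: one quad and one triangle
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
vn 0 0 1
f 1 2 3 4
f 1 2 3
"""
print(obj_polygon_stats(toy_mesh))
```

For real files the text would be read with `open(path).read()`; the triangle count is the figure most comparable across engines, since renderers triangulate quads and n-gons internally.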

Possible resolution strategies were then analyzed. Starting from the high-resolution model of the core version, scripts can be applied to reduce the complexity of the model while preserving some of its properties. The process begins by separating all non-continuous geometries so that distinct parts of the model are treated independently. The resulting elements are then categorized into three main groups (leaves, branches, and the main trunk), since each requires a different simplification approach; naming conventions based on vertex count label each component accordingly.

To handle the excessive number of leaves (which are absent from the winter tree on which pruning is performed), the script applies a controlled reduction, selecting a specified percentage of leaf objects and eliminating them. This reduces the number of leaves from approximately 20,000 to around 200, making the model much lighter and easier to manage. Similarly, the branches are restructured so that their overall inclination is preserved while unnecessary vertices are removed: by isolating the outermost edge loops and eliminating the inner vertices, each branch is simplified into a more streamlined form.

With these optimization steps, the script can reduce the total polygon count to one hundredth of the original. This makes the model significantly more efficient for rendering, exporting, or real-time applications while retaining its fundamental visual characteristics.
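The three steps described above can be sketched on an abstract representation of the mesh. The paper does not say which modeling tool runs the script or which vertex-count thresholds are used, so the thresholds and data classes below are hypothetical. The leaf step keeps a fraction of the leaves (equivalent to deleting the complementary percentage), and a branch is represented as its ordered list of edge loops, of which only the two end loops are kept, so the axis from base to tip, and hence the branch inclination, is unchanged.

```python
import random
from dataclasses import dataclass

# Hypothetical vertex-count thresholds; the paper does not report its values.
LEAF_MAX_VERTICES = 50
BRANCH_MAX_VERTICES = 5_000


@dataclass
class Part:
    name: str
    vertex_count: int
    category: str = ""


def classify(parts):
    """Label each separated part as leaf, branch, or trunk by vertex count."""
    for p in parts:
        if p.vertex_count <= LEAF_MAX_VERTICES:
            p.category = "leaf"
        elif p.vertex_count <= BRANCH_MAX_VERTICES:
            p.category = "branch"
        else:
            p.category = "trunk"
        p.name = f"{p.category}_{p.name}"  # naming convention for later steps
    return parts


def thin_leaves(parts, keep_fraction, seed=0):
    """Keep a random fraction of the leaves and every non-leaf part."""
    rng = random.Random(seed)
    leaves = [p for p in parts if p.category == "leaf"]
    others = [p for p in parts if p.category != "leaf"]
    return others + rng.sample(leaves, round(len(leaves) * keep_fraction))


def simplify_branch(loops):
    """Keep only the first and last edge loops; drop the inner vertices."""
    return loops if len(loops) <= 2 else [loops[0], loops[-1]]


def branch_axis(loops):
    """Vector from the centroid of the first loop to that of the last loop."""
    def centroid(loop):
        return tuple(sum(v[i] for v in loop) / len(loop) for i in range(3))
    a, b = centroid(loops[0]), centroid(loops[-1])
    return tuple(b[i] - a[i] for i in range(3))


parts = classify([Part(str(i), 4) for i in range(20_000)] + [Part("stem", 120_000)])
kept = thin_leaves(parts, keep_fraction=0.01)
print(sum(p.category == "leaf" for p in kept), "leaves kept")
```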

Although this meets the intended objectives, two issues remain open. First, most growth simulation software treats the leaves as solid objects, which are therefore already deleted at the first OBJ export to the cutting simulation. Second, the main stem, which is the part of the mesh with the highest vertex density, has irregular edge loops and mesh directions because it is composed of multiple branches. The scripts described cannot fully solve these problems, which will be the subject of further research aimed at finding innovative solutions with the available technologies.

6. Conclusions

In conclusion, the development of a parametrically generated simulation has enabled the creation of an innovative model for highly realistic virtual pruning training. The resulting model is dynamic and interactive, allowing cuts and modifications in real time within the simulation. Parametric modeling adds significant value by enabling adaptive design solutions: such models facilitate the analysis of tree development over time, supporting maintenance strategies, environmental impact assessments, and biodiversity conservation. By integrating parametric tools, researchers and professionals can make data-driven decisions, ensuring resilient and efficiently managed green spaces. This research serves as a starting point for further exploration of topics and methodologies related to the parametric modeling of surveyed data, interactive virtual access to informational metadata, and model-user interoperability.

This model could make a significant contribution to precision agriculture and plant research, as it combines high-resolution data from multiple sources into a cohesive, comprehensive representation. Such an integrated approach enables applications ranging from agricultural optimization to environmental monitoring. Likewise, the model-based simulation proved sufficiently functional and responsive to successfully test a pruning training prototype with agricultural workers. Simulating pruning in a virtual environment provides a safe and cost-effective way to train individuals in the appropriate techniques without the risk of damaging real trees. This is particularly important in educational and vocational training contexts, where the ability to practice repeatedly and learn from mistakes without real-world consequences can significantly improve learning outcomes. Furthermore, a training platform built in a virtual environment, and therefore available throughout the calendar year, would decouple the learning path of an agricultural activity from its seasonality, with clear benefits in the cost and speed of training operators. The presented workflow can be extended to other interdisciplinary research involving the creation of digital twins for monitoring agricultural management, as well as to agricultural operations on different plant species or other difficult-to-reconstruct specimens belonging to the third sector. Similarly, simulation with such rapid and complete sensory feedback tools can support any professional or industrial field not yet served by immersive technologies.

Author Contributions

Conceptualization, M.C., F.P. and H.G.; methodology, M.C., F.P., H.G., A.M. and D.F.; software, A.M. and D.F.; validation, M.C. and F.P.; formal analysis, A.M. and D.F.; investigation, A.M., D.F. and M.C.; resources, M.C., F.P. and H.G.; data curation, A.M. and D.F.; writing—original draft preparation, A.M. and D.F.; writing—review and editing, M.C. and F.P.; visualization, A.M. and D.F.; supervision, M.C. and F.P.; project administration, M.C.; funding acquisition, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is part of the project NODES which has received funding from the MUR—M4C2 1.5 of PNRR funded by the European Union—NextGenerationEU (Grant agreement no. ECS00000036).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

We would like to thank the Università Cattolica del Sacro Cuore, Department of Sustainable Plant Production Sciences (DIPRO.VE.S), for making available the fields where the surveys were carried out, and Sergio Tombesi, scientific director of “DIGINUT—Development of digital twin tools for decision support in the hazelnut production chain”, a project complementary to DEMETRA, for his valuable support. We also extend our gratitude to Pellenc Italia S.R.L. for providing the 3D model of the pruning shears and for allowing us to present the application at EIMA 2024.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bechar, A.; Vigneault, C. Agricultural Robots for Field Operations: Concepts and Components. Biosyst. Eng. 2016, 149, 94–111.

- Rodenburg, J. Robotic Milking: Technology, Farm Design, and Effects on Work Flow. J. Dairy Sci. 2017, 100, 7729–7738.

- Liu, W.; Shao, X.-F.; Wu, C.-H.; Qiao, P. A Systematic Literature Review on Applications of Information and Communication Technologies and Blockchain Technologies for Precision Agriculture Development. J. Clean. Prod. 2021, 298, 126763.

- Akhigbe, B.I.; Munir, K.; Akinadé, O.O.; Àkànbí, L.A.; Oyedele, L.O. IoT Technologies for Livestock Management: A Review of Present Status, Opportunities, and Future Trends. Big Data Cogn. Comput. 2021, 5, 10.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote Sensing for Agricultural Applications: A Meta-Review. Remote Sens. Environ. 2020, 236, 111402.

- Choi, I.; Culbertson, H.; Miller, M.R.; Olwal, A.; Follmer, S. Grabity: A Wearable Haptic Interface for Simulating Weight and Grasping in Virtual Reality. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, Quebec City, QC, Canada, 22–25 October 2017.

- Igroup Presence Questionnaire (IPQ) Overview|Igroup.Org—Project Consortium. Available online: https://www.igroup.org/pq/ipq/index.php (accessed on 20 February 2025).

- SpeedTree—3D Vegetation Modeling and Middleware. Available online: https://store.speedtree.com/ (accessed on 20 February 2025).

- Radhakrishnan, U.; Konstantinos, K.; Chinello, F. A Systematic Review of Immersive Virtual Reality for Industrial Skills Training. Behav. Inf. Technol. 2021, 40, 1310–1339.

- Thach, N.; Hung, N. Research and Design a Lifeboat Virtual Reality Simulation System for Maritime Safety Training in Vietnam. J. Mar. Sci. Technol. 2024, 32, 4.

- Heibel, B.; Anderson, R.; Swafford, M.; Borges, B. Integrating Virtual Reality Technology into Beginning Welder Training Sequences. J. Agric. Educ. 2024, 65, 210–225.

- Cheung, K.; Jong, M.; Lee, F.; Lee, J.; Luk, E.; Shang, J.; Wong, M. FARMTASIA: An Online Game-Based Learning Environment Based on the VISOLE Pedagogy. Virtual Real. 2008, 12, 17–25.

- Yongyuth, P.; Prada, R.; Nakasone, A.; Prendinger, H. AgriVillage: 3D Multi-Language Internet Game for Fostering Agriculture Environmental Awareness. In Proceedings of the International Conference on Management of Emergent Digital EcoSystems, MEDES’10, Bangkok, Thailand, 26–29 October 2010; pp. 145–152.

- Buttussi, F.; Chittaro, L. Effects of Different Types of Virtual Reality Display on Presence and Learning in a Safety Training Scenario. IEEE Trans. Vis. Comput. Graph. 2017, 24, 1063–1076.

- Parrinello, S.; Miceli, A.; Galasso, F. From Digital Survey to Serious Game. A Process of Knowledge for the Ark of Mastino II. Disegnarecon 2021, 14, 17.1–17.22.

- Barber, D.; Dallas, R.; Mills, J. Laser Scanning for Architectural Conservation. J. Archit. Conserv. 2006, 12, 35–52.

- Fonstad, M.; Dietrich, J.; Courville, B.; Jensen, J.; Carbonneau, P.E. Topographic Structure from Motion. In Proceedings of the AGU Fall Meeting Abstracts, February 2011; p. 5. Available online: https://onlinelibrary.wiley.com/doi/10.1002/esp.3366 (accessed on 7 February 2025).

- Bangen, S.; Wheaton, J.; Bouwes, N.; Bouwes, B.; Jordan, C. A Methodological Intercomparison of Topographic Survey Techniques for Characterizing Wadeable Streams and Rivers. Geomorphology 2014, 206, 343–361.

- Berra, E.; Peppa, M.V. Advances and Challenges of UAV SFM MVS Photogrammetry and Remote Sensing: Short Review. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLII-3/W12-2020, 267–272.

- Muhar, A. Three-Dimensional Modelling and Visualisation of Vegetation for Landscape Simulation. Landsc. Urban Plan. 2001, 54, 5–17.

- Wu, J.; Zhang, C.; Xue, T.; Freeman, B.; Tenenbaum, J. Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling. In Proceedings of the Advances in Neural Information Processing Systems; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

- Digumarti, S.T.; Nieto, J.; Cadena, C.; Siegwart, R.; Beardsley, P. Automatic Segmentation of Tree Structure From Point Cloud Data. IEEE Robot. Autom. Lett. 2018, 3, 3043–3050.

- Liu, Y.; Guo, J.; Benes, B.; Deussen, O.; Zhang, X.; Huang, H. TreePartNet: Neural Decomposition of Point Clouds for 3D Tree Reconstruction. ACM Trans. Graph. 2021, 40, 1–16.

- Oxman, R. Thinking Difference: Theories and Models of Parametric Design Thinking. Des. Stud. 2017, 52, 4–39.

- Favorskaya, M.N.; Jain, L.C. Handbook on Advances in Remote Sensing and Geographic Information Systems: Paradigms and Applications in Forest Landscape Modeling; Intelligent Systems Reference Library; Springer: Dordrecht, The Netherlands, 2017; Volume 122, ISBN 9783319523064.

- Nogueira, P.A. Motion Capture Fundamentals A Critical and Comparative Analysis on Real-World Applications. 2012. Available online: https://www.semanticscholar.org/paper/Motion-Capture-Fundamentals-A-Critical-and-Analysis-Nogueira/051361b591b4f7d981d5a31f67ee353104c86119 (accessed on 20 February 2025).

- Aseeri, S.; Marin, S.; Landers, R.; Interrante, V.; Rosenberg, E. Embodied Realistic Avatar System with Body Motions and Facial Expressions for Communication in Virtual Reality Applications. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 581–582.

- Buck, L.; Chakraborty, S.; Bodenheimer, B. The Impact of Embodiment and Avatar Sizing on Personal Space in Immersive Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2022, 28, 2102–2113.

- Smith, H.J.; Neff, M. Communication Behavior in Embodied Virtual Reality. In CHI ’18, Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–12.

- Perret, J.; Vander Poorten, E. Touching Virtual Reality: A Review of Haptic Gloves. In Proceedings of the ACTUATOR 2018; 16th International Conference on New Actuators, Bremen, Germany, 25–27 June 2018.

- Verim, Ö.; Sen, O. Application of Reverse Engineering Method on Agricultural Machinery Parts. Int. Adv. Res. Eng. J. 2023, 7, 35–40.

- La Placa, S.; Doria, E. Digital Documentation and Fast Census for Monitoring the University’s Built Heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, XLVIII-2/W4-2024, 271–278.

- Okura, F. 3D Modeling and Reconstruction of Plants and Trees: A Cross-Cutting Review across Computer Graphics, Vision, and Plant Phenotyping. Breed Sci. 2022, 72, 31–47.

- Park, Y.; Lee, J.; Bae, J. Development of a Wearable Sensing Glove for Measuring the Motion of Fingers Using Linear Potentiometers and Flexible Wires. IEEE Trans. Ind. Inform. 2015, 11, 198–206.

- Mimic Pro—VR Body IK System for Unreal Engine. Available online: https://jakeplayable.gumroad.com/l/MimicPro (accessed on 20 February 2025).

- MetaHuman|Realistic Person Creator—Unreal Engine. Available online: https://www.unrealengine.com/en-US/metahuman (accessed on 20 February 2025).

- OptiTrack—Motion Capture Systems. Available online: https://www.optitrack.com/ (accessed on 20 February 2025).

- Robinson, O.C. Sampling in Interview-Based Qualitative Research: A Theoretical and Practical Guide. Qual. Res. Psychol. 2014, 11, 25–41.

- Giulietti, N.; Todesca, D.; Carnevale, M.; Giberti, H. A Real-Time Human Pose Measurement System for Human-In-The-Loop Dynamic Simulators. IEEE Access 2025, 13, 24954–24969.

- Lei, X.; Chang, M.; Lu, Y.; Zhao, T. A review on growth modelling and visualization for virtual trees. Sci. Silvae Sin. 2006, 42, 123–131.

- Jinasena, K.; Sonnadara, U. A Dynamic Simulation Model for Tree Development. In Proceedings of the International Conference on Mathematical Modeling, Colombo, Sri Lanka, 10–21 March 2014.

Figure 1.

Research diagram of the workflow stages.

Figure 2.

Use of the laser scanner and photographic campaign.

Figure 3.

Laser scanner data cleaning process. The branch tips, colored white, are affected by excessive noise and are therefore unreliable data.

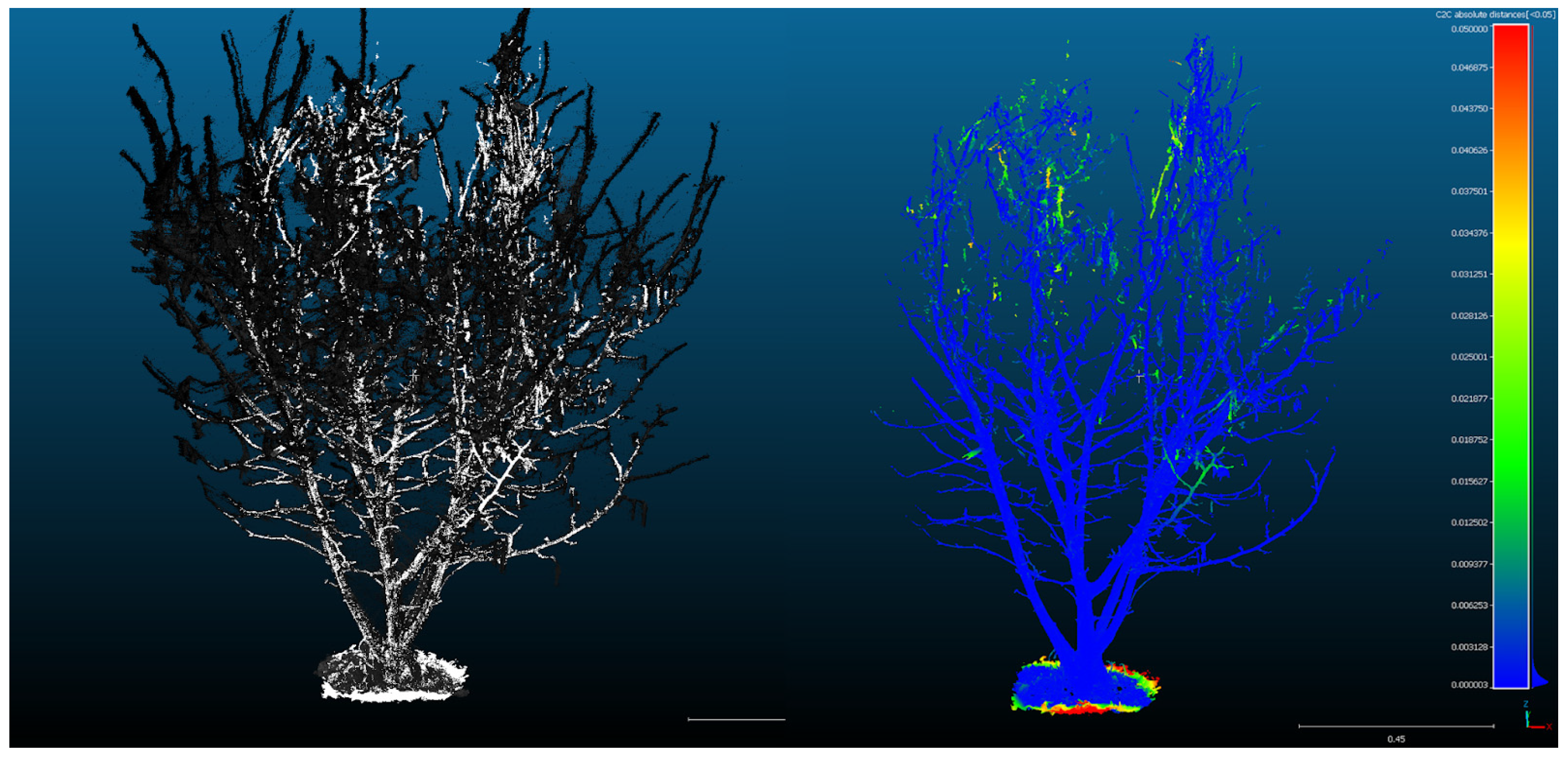

Figure 4.

Evolution of photogrammetric data. Sparse point cloud, dense cloud, textured 3D model, clean and trimmed 3D model for import into SpeedTree.

Figure 5.

Comparison of the laser scanner point cloud with the photogrammetric point cloud in the CloudCompare software. The deviation stays below 3 mm over the whole surface, indicating highly reliable data in terms of geometric precision.

Figure 6.

Generation process in SpeedTree. Starting from the photogrammetric data, the trunks are further cut and replaced with parametrized branches, initially straight; the texture extracted from the first model is then applied, and the branches are oriented along the directions of the real ones (taken from the more complete laser scanner cloud) until the original conformation is reproduced.

Figure 7.

Assignment of low-resolution meshes to the leaves of a hazel tree atlas; placement of the leaves and activation of collisions on the final model.

Figure 8.

Final version of the two surveyed trees reproduced in the parametric software: grafted (on the right) and non-grafted (on the left).

Figure 9.

View of the virtual environment in Unreal Engine.

Figure 10.

On the left: SenseGlove Nova 2 used in the simulation. On the right: hand joint model used for finger movement reconstruction, where f is the normalized flexion provided by the haptic gloves, and it depends on the finger flexion. The proposed model computes the finger’s joint angles starting from the f value.

Figure 11.

Simulation of branch cutting within VR.

Figure 12.

First tests of instrument calibration and recording of the virtual instructor’s animation.

Figure 13.

Comparison between the poses of the virtual instructor in the simulation, after post-processing of the motion capture animation, and the raw skeleton recordings in the Motive software before post-processing.

Figure 14.

Boxplots for the four categories (G, INV, SP, and REAL), providing an overview of how participants responded to each dimension.
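
Each box summarizes a distribution through its quartiles. The sketch below computes the statistics behind one box, assuming Tukey's convention of whiskers that extend to the most extreme scores within 1.5 × IQR of the box (the default whisker length in Matplotlib's `boxplot`) and the `"inclusive"` quartile method of Python's `statistics.quantiles`. The paper does not state which conventions were used, and the scores are hypothetical 0–6 responses.

```python
import statistics

def boxplot_stats(scores: list[float]) -> dict[str, object]:
    """Quartiles, Tukey whiskers (1.5 x IQR) and outliers for one boxplot."""
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [s for s in scores if low_fence <= s <= high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        "outliers": [s for s in scores if s < low_fence or s > high_fence],
    }

# Hypothetical responses to one item on the 0-6 scale (not the study's data).
scores = [0, 3, 4, 4, 5, 5, 5, 5, 6, 6]
print(boxplot_stats(scores))
```

With a narrow interquartile range, as here, a single extreme answer such as 0 is drawn as an outlier rather than stretching the whisker.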

Table 1.

The IGroup Presence Questionnaire (IPQ).

| Variable | Item | Question |
|---|---|---|
| G | G | In the computer generated world I had a sense of “being there”. |
| SP | SP1 | Somehow I felt that the virtual world surrounded me. |
| | SP2 | I felt like I was just perceiving pictures. |
| | SP3 | I did not feel present in the virtual space. |
| | SP4 | I had a sense of acting in the virtual space, rather than operating something from outside. |
| | SP5 | I felt present in the virtual space. |
| INV | INV1 | How aware were you of the real world surrounding while navigating in the virtual world? (i.e., sounds, room temperature, other people, etc.)? |
| | INV2 | I was not aware of my real environment. |
| | INV3 | I still paid attention to the real environment. |
| | INV4 | I was completely captivated by the virtual world. |
| REAL | REAL1 | How much did your experience in the virtual environment seem consistent with your real world experience? |
| | REAL2 | How much did your experience in the virtual environment seem consistent with your real world experience? |
| | REAL3 | How real did the virtual world seem to you? |
| | REAL4 | The virtual world seemed more realistic than the real world. |

Table 2.

Mean, standard deviation, median, and range of each item across the whole sample.

| Variable | Item | Mean | Standard Deviation | Median | Range |
|---|---|---|---|---|---|
| G | G | 4.73 | 0.83 | 5.00 | 3–6 |
| SP | SP1 | 4.67 | 0.88 | 5.00 | 3–6 |
| | SP2 | 4.40 | 1.57 | 5.00 | 0–6 |
| | SP3 | 4.50 | 1.48 | 5.00 | 1–6 |
| | SP4 | 4.10 | 1.45 | 4.50 | 1–6 |
| | SP5 | 4.57 | 1.17 | 5.00 | 1–6 |
| INV | INV1 | 3.77 | 1.41 | 4.00 | 1–6 |
| | INV2 | 3.70 | 1.47 | 4.00 | 0–6 |
| | INV3 | 3.53 | 1.57 | 3.50 | 0–6 |
| | INV4 | 4.67 | 0.88 | 5.00 | 2–6 |
| REAL | REAL1 | 3.53 | 1.41 | 4.00 | 0–6 |
| | REAL2 | 4.03 | 1.33 | 4.00 | 1–6 |
| | REAL3 | 2.40 | 1.48 | 2.00 | 0–5 |
| | REAL4 | 1.37 | 1.22 | 1.00 | 0–4 |
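
The four statistics in the table above can be obtained per item from the raw scores with Python's standard `statistics` module, as in the minimal sketch below. It assumes the sample (n − 1) standard deviation, which the table does not specify, and the responses and the helper name `describe_item` are hypothetical, not the study's data.

```python
import statistics

def describe_item(scores: list[int]) -> dict[str, object]:
    """Mean, sample standard deviation, median and range of one questionnaire item."""
    return {
        "mean": round(statistics.mean(scores), 2),
        "sd": round(statistics.stdev(scores), 2),  # sample SD (n - 1 denominator), assumed
        "median": statistics.median(scores),
        "range": (min(scores), max(scores)),
    }

# Hypothetical 0-6 responses for two items (not the study's data).
responses = {
    "G": [4, 5, 5, 6, 3, 5],
    "REAL4": [0, 1, 2, 1, 4, 0],
}
for item, item_scores in responses.items():
    print(item, describe_item(item_scores))
```

Reporting the median and range alongside the mean is useful for ordinal 0–6 items, where a few extreme answers can shift the mean noticeably.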

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).