The Assistant Personal Robot Project: From the APR-01 to the APR-02 Mobile Robot Prototypes

Abstract

1. Introduction

2. Background

2.1. The Assistant Personal Robot (APR) Project

2.2. Reference Assistant and Companion Mobile Robots

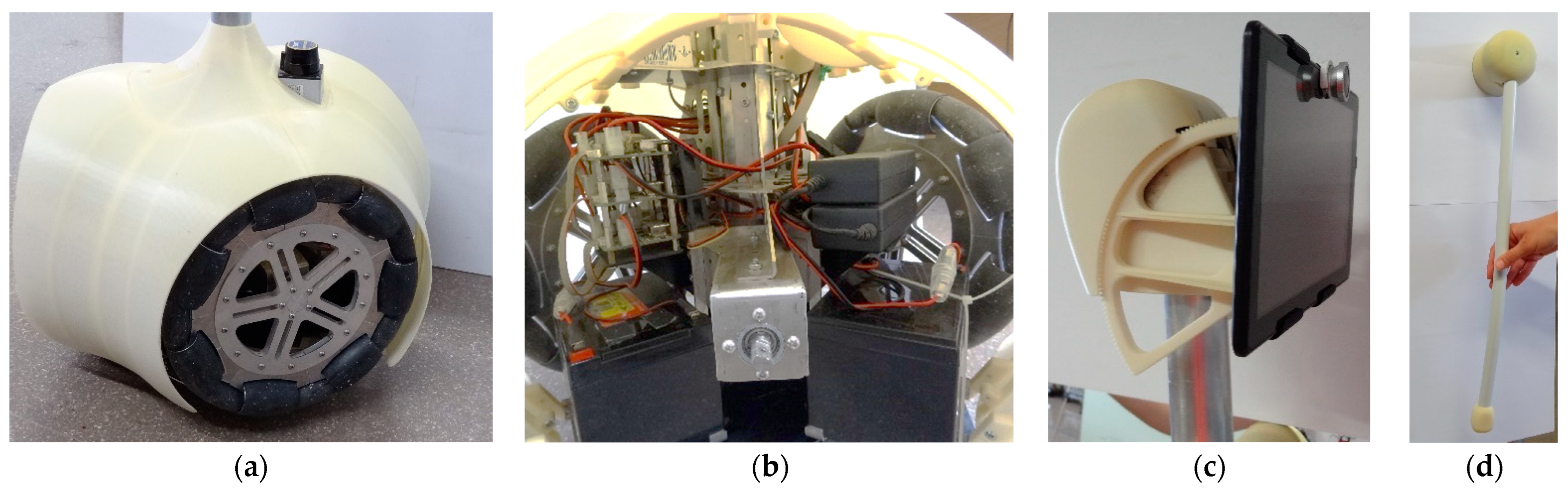

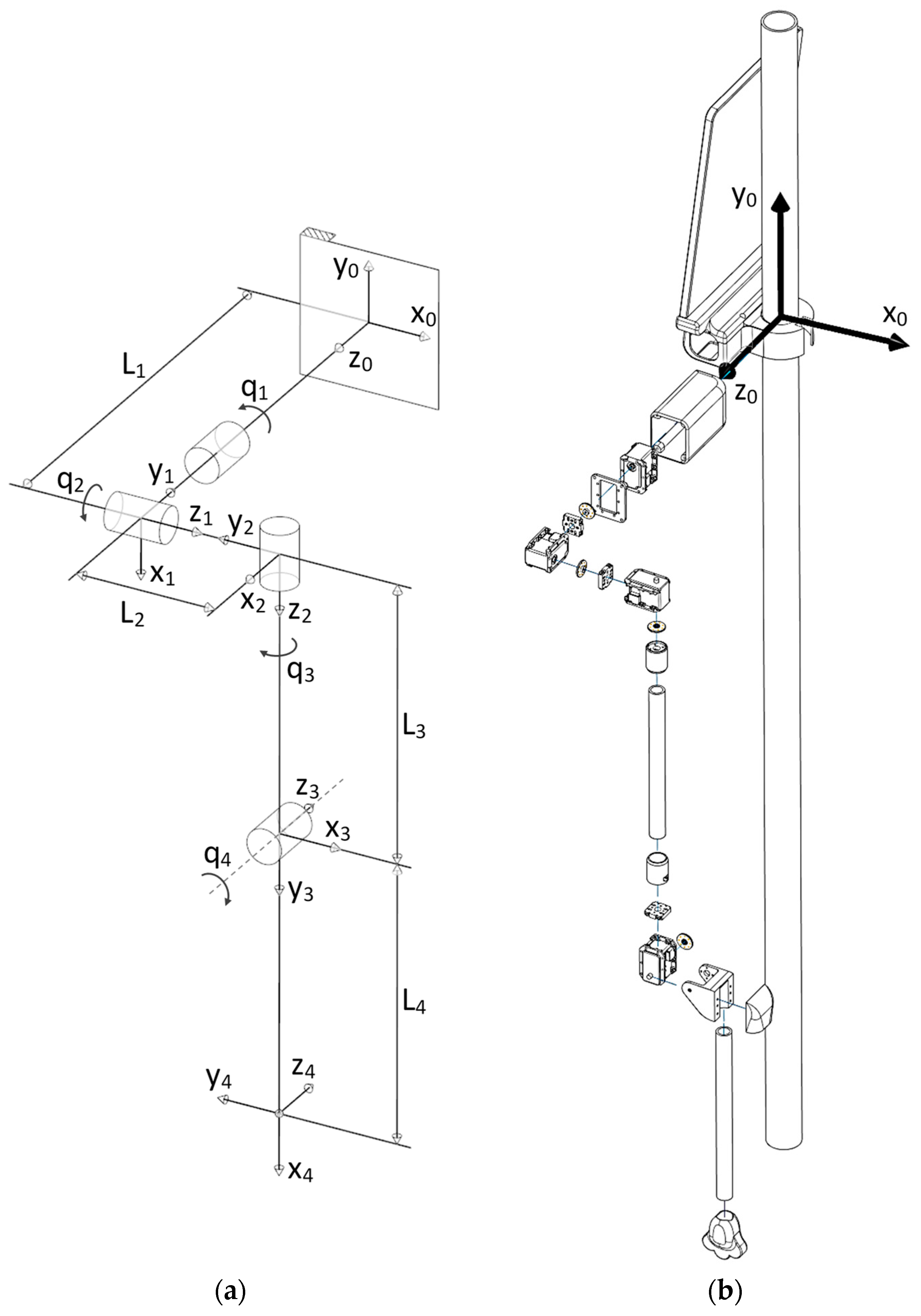

2.3. Initial APR-01 Mobile Robot Prototype

2.4. Basic APR-02 Prototype Evolution

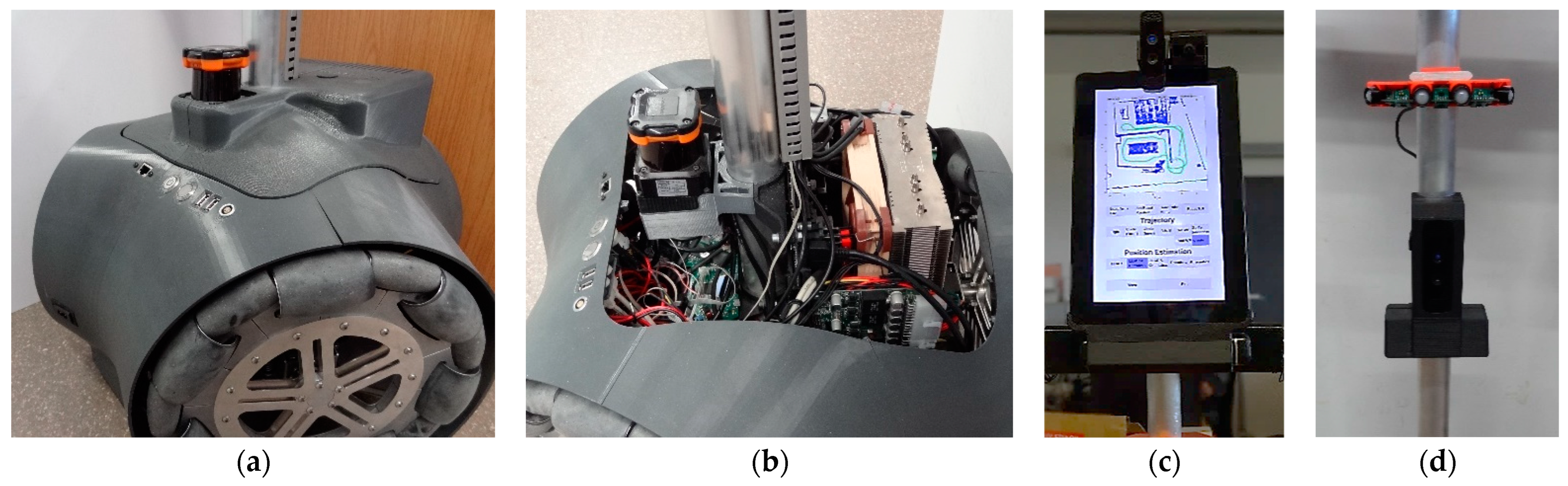

2.5. The Uncanny Valley Theory

2.6. Selection of New Anthropomorphic Improvements for the APR-02

3. Selection of Mobile Robot Improvements

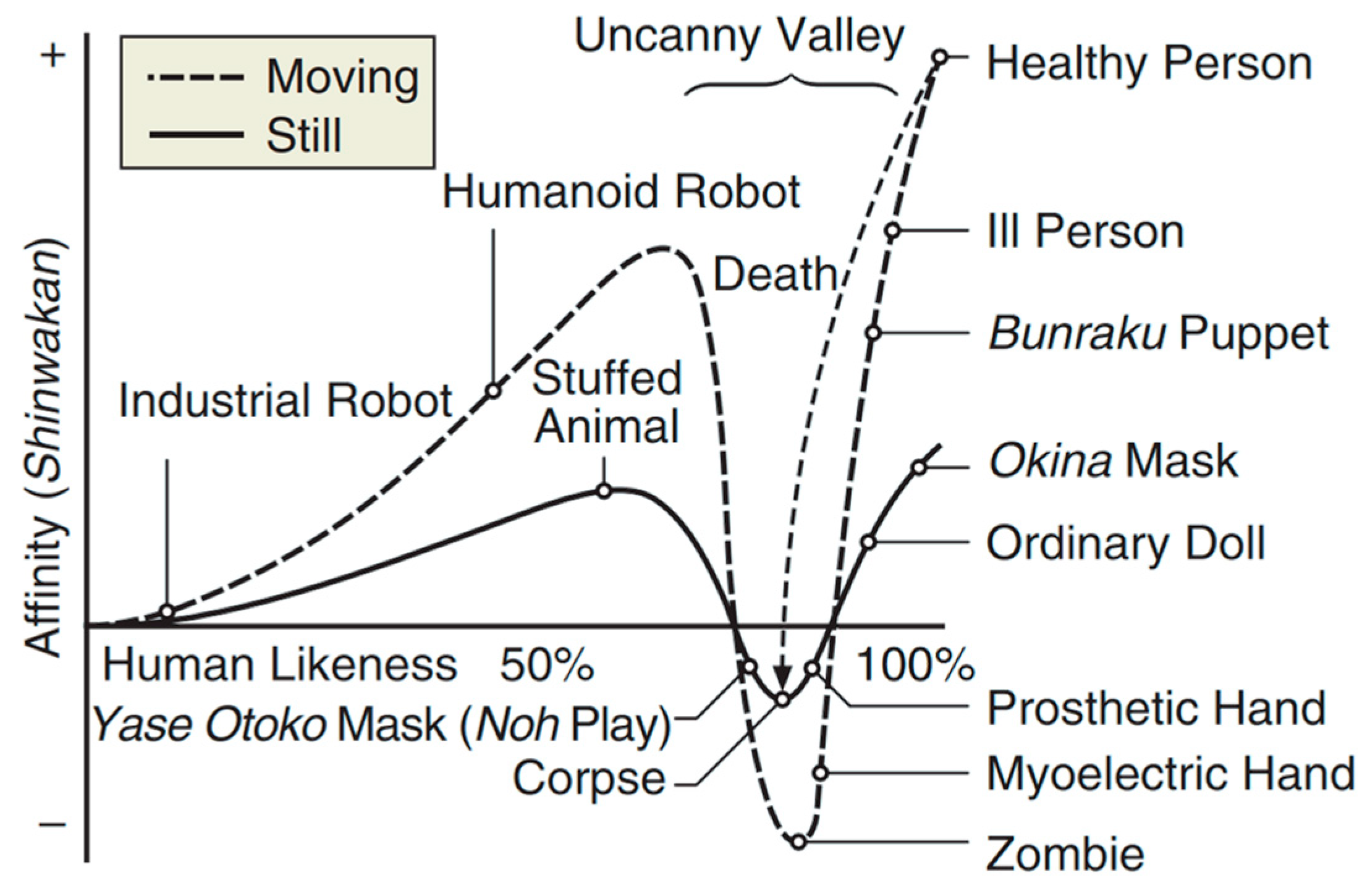

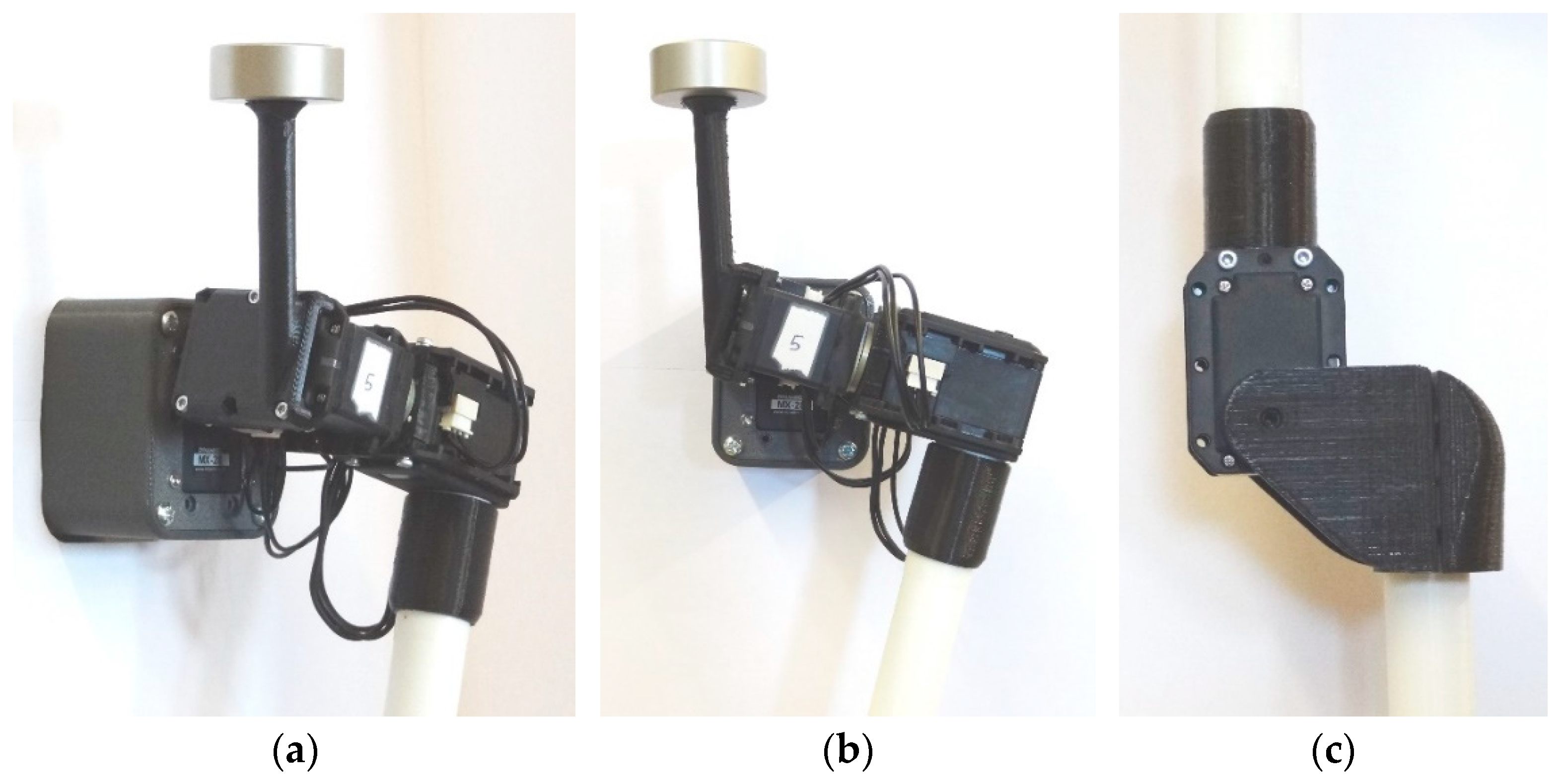

3.1. Selection of the Robot Arm

3.2. Selection of the Forearm and Hand

3.3. Selection of the Robot Face

3.4. Selection of the Neutral Facial Expression

3.5. Selection of the Mouth Animation to Generate Sounds

3.6. Selection of the Proximity Feedback Expressed with the Mouth

3.7. Selection of the Gaze Feedback

3.8. Selection of Arm Gestures

Algorithm 1. Pseudo-code used to animate the arms.

    activate_arm_servos();
    [gesture_index, random_increment] = initialize_variables();
    while gesture_activated do
        gesture_index = gesture_index + 1;
        [servo_angles] = get_gesture(gesture_index, random_increment);
        set_arm_angles(servo_angles);
        if gesture_index > limit then
            gesture_index = 1;
        end
    end
    deactivate_arm_servos();
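The loop of Algorithm 1 can be turned into a small runnable sketch. Everything that is not named in the algorithm is an assumption: the three keyframes in `GESTURES`, the ±5° range of the random increment, the `steps` argument that stands in for the `gesture_activated` flag, and the omission of the servo activation and deactivation calls. One deliberate difference is that the index is wrapped back to 1 before the gesture lookup, so `get_gesture` is never called with an index above `limit`.

```python
import random
from typing import Callable, List

# Hypothetical keyframes: each entry holds the arm servo angles (degrees) of one
# gesture step. These values are illustrative, not the APR-02 gesture table.
GESTURES: List[List[float]] = [
    [0.0, 10.0, 20.0],
    [5.0, 15.0, 25.0],
    [10.0, 20.0, 30.0],
]


def get_gesture(gesture_index: int, random_increment: float) -> List[float]:
    """Servo angles for a 1-based gesture index, shifted by a random increment."""
    return [angle + random_increment for angle in GESTURES[gesture_index - 1]]


def animate_arms(steps: int,
                 set_arm_angles: Callable[[List[float]], None],
                 limit: int = len(GESTURES),
                 seed: int = 0) -> None:
    """Cycle through the gesture keyframes in the spirit of Algorithm 1.

    `steps` replaces the `while gesture_activated` condition so the loop ends.
    """
    rng = random.Random(seed)
    # initialize_variables(): start before the first keyframe and draw one
    # random offset (hypothetical +/-5 degree range) for this activation.
    gesture_index = 0
    random_increment = rng.uniform(-5.0, 5.0)
    for _ in range(steps):
        gesture_index = gesture_index + 1
        if gesture_index > limit:  # wrap first, so the index stays in 1..limit
            gesture_index = 1
        set_arm_angles(get_gesture(gesture_index, random_increment))


if __name__ == "__main__":
    animate_arms(5, print)
```

For example, `animate_arms(5, print)` prints one list of angles per step and returns to the first keyframe on the fourth step.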

3.9. Selection of the Motion Planning Strategy

3.10. Selection of the Nominal Translational Velocity

4. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166.

2. Busch, B.; Maeda, G.; Mollard, Y.; Demangeat, M.; Lopes, M. Postural optimization for an ergonomic human-robot interaction. In Proceedings of the International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 1–8.

3. Young, J.E.; Hawkins, R.; Sharlin, E.; Igarashi, T. Toward Acceptable Domestic Robots: Applying Insights from Social Psychology. Int. J. Soc. Robot. 2009, 1, 95–108.

4. Saldien, J.; Goris, K.; Vanderborght, B.; Vanderfaeillie, J.; Lefeber, D. Expressing Emotions with the Social Robot Probo. Int. J. Soc. Robot. 2010, 2, 377–389.

5. Conti, D.; Di Nuovo, S.; Buono, S.; Di Nuovo, A. Robots in Education and Care of Children with Developmental Disabilities: A Study on Acceptance by Experienced and Future Professionals. Int. J. Soc. Robot. 2017, 9, 51–62.

6. Casas, J.A.; Céspedes, N.; Cifuentes, C.A.; Gutierrez, L.F.; Rincón-Roncancio, M.; Múnera, M. Expectation vs. reality: Attitudes towards a socially assistive robot in cardiac rehabilitation. Appl. Sci. 2019, 9, 4651.

7. Rossi, S.; Santangelo, G.; Staffa, M.; Varrasi, S.; Conti, D.; Di Nuovo, A. Psychometric Evaluation Supported by a Social Robot: Personality Factors and Technology Acceptance. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018.

8. Wada, K.; Shibata, T.; Saito, T.; Tanie, K. Effects of robot-assisted activity for elderly people and nurses at a day service center. Proc. IEEE 2004, 92, 1780–1788.

9. Kozima, H.; Michalowski, M.P.; Nakagawa, C. Keepon: A playful robot for research, therapy, and entertainment. Int. J. Soc. Robot. 2009, 1, 3–18.

10. Palacín, J.; Clotet, E.; Martínez, D.; Martínez, D.; Moreno, J. Extending the Application of an Assistant Personal Robot as a Walk-Helper Tool. Robotics 2019, 8, 27.

11. Lin, C.; Šabanović, S.; Dombrowski, L.; Miller, A.D.; Brady, E.; MacDorman, K.F. Parental Acceptance of Children’s Storytelling Robots: A Projection of the Uncanny Valley of AI. Front. Robot. AI 2021, 8, 579993.

12. De Graaf, M.M.A.; Allouch, S.B. Exploring influencing variables for the acceptance of social robots. Robot. Auton. Syst. 2013, 61, 1476–1486.

13. Kanda, T.; Miyashita, T.; Osada, T.; Haikawa, Y.; Ishiguro, H. Analysis of Humanoid Appearances in Human–Robot Interaction. IEEE Trans. Robot. 2008, 24, 725–735.

14. Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social robots for education: A review. Sci. Robot. 2018, 3, eaat5954.

15. Murphy, J.; Gretzel, U.; Pesonen, J. Marketing robot services in hospitality and tourism: The role of anthropomorphism. J. Travel Tour. Mark. 2019, 36, 784–795.

16. Duffy, B.R. Anthropomorphism and the social robot. Robot. Auton. Syst. 2003, 42, 177–190.

17. Gasteiger, N.; Ahn, H.S.; Gasteiger, C.; Lee, C.; Lim, J.; Fok, C.; Macdonald, B.A.; Kim, G.H.; Broadbent, E. Robot-Delivered Cognitive Stimulation Games for Older Adults: Usability and Acceptability Evaluation. ACM Trans. Hum. Robot. Interact. 2021, 10, 1–18.

18. Elazzazi, M.; Jawad, L.; Hilfi, M.; Pandya, A. A Natural Language Interface for an Autonomous Camera Control System on the da Vinci Surgical Robot. Robotics 2022, 11, 40.

19. Guillén Ruiz, S.; Calderita, L.V.; Hidalgo-Paniagua, A.; Bandera Rubio, J.P. Measuring Smoothness as a Factor for Efficient and Socially Accepted Robot Motion. Sensors 2020, 20, 6822.

20. Martín, A.; Pulido, J.C.; González, J.C.; García-Olaya, A.; Suárez, C. A Framework for User Adaptation and Profiling for Social Robotics in Rehabilitation. Sensors 2020, 20, 4792.

21. Ribeiro, T.; Gonçalves, F.; Garcia, I.S.; Lopes, G.; Ribeiro, A.F. CHARMIE: A Collaborative Healthcare and Home Service and Assistant Robot for Elderly Care. Appl. Sci. 2021, 11, 7248.

22. Calderita, L.V.; Vega, A.; Barroso-Ramírez, S.; Bustos, P.; Núñez, P. Designing a Cyber-Physical System for Ambient Assisted Living: A Use-Case Analysis for Social Robot Navigation in Caregiving Centers. Sensors 2020, 20, 4005.

23. Muthugala, M.A.V.J.; Jayasekara, A.G.B.P. MIRob: An intelligent service robot that learns from interactive discussions while handling uncertain information in user instructions. In Proceedings of the Moratuwa Engineering Research Conference (MERCon), Moratuwa, Sri Lanka, 5–6 April 2016; pp. 397–402.

24. Garcia-Salguero, M.; Gonzalez-Jimenez, J.; Moreno, F.A. Human 3D Pose Estimation with a Tilting Camera for Social Mobile Robot Interaction. Sensors 2019, 19, 4943.

25. Pathi, S.K.; Kiselev, A.; Kristoffersson, A.; Repsilber, D.; Loutfi, A. A Novel Method for Estimating Distances from a Robot to Humans Using Egocentric RGB Camera. Sensors 2019, 19, 3142.

26. Hellou, M.; Gasteiger, N.; Lim, J.Y.; Jang, M.; Ahn, H.S. Personalization and Localization in Human-Robot Interaction: A Review of Technical Methods. Robotics 2021, 10, 120.

27. Holland, J.; Kingston, L.; McCarthy, C.; Armstrong, E.; O’Dwyer, P.; Merz, F.; McConnell, M. Service Robots in the Healthcare Sector. Robotics 2021, 10, 47.

28. Penteridis, L.; D’Onofrio, G.; Sancarlo, D.; Giuliani, F.; Ricciardi, F.; Cavallo, F.; Greco, A.; Trochidis, I.; Gkiokas, A. Robotic and Sensor Technologies for Mobility in Older People. Rejuvenation Res. 2017, 20, 401–410.

29. Clotet, E.; Martínez, D.; Moreno, J.; Tresanchez, M.; Palacín, J. Assistant Personal Robot (APR): Conception and Application of a Tele-Operated Assisted Living Robot. Sensors 2016, 16, 610.

30. Moreno, J.; Clotet, E.; Lupiañez, R.; Tresanchez, M.; Martínez, D.; Pallejà, T.; Casanovas, J.; Palacín, J. Design, Implementation and Validation of the Three-Wheel Holonomic Motion System of the Assistant Personal Robot (APR). Sensors 2016, 16, 1658.

31. Palacín, J.; Martínez, D.; Rubies, E.; Clotet, E. Suboptimal Omnidirectional Wheel Design and Implementation. Sensors 2021, 21, 865.

32. Palacín, J.; Rubies, E.; Clotet, E. Systematic Odometry Error Evaluation and Correction in a Human-Sized Three-Wheeled Omnidirectional Mobile Robot Using Flower-Shaped Calibration Trajectories. Appl. Sci. 2022, 12, 2606.

33. Ishiguro, H.; Ono, T.; Imai, M.; Maeda, T.; Kanda, T.; Nakatsu, R. Robovie: An interactive humanoid robot. Ind. Robot. Int. J. 2001, 28, 498–503.

34. Cavallo, F.; Aquilano, M.; Bonaccorsi, M.; Limosani, R.; Manzi, A.; Carrozza, M.C.; Dario, P. On the design, development and experimentation of the ASTRO assistive robot integrated in smart environments. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013.

35. Iwata, H.; Sugano, S. Design of Human Symbiotic Robot TWENDY-ONE. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009.

36. Mukai, T.; Hirano, S.; Nakashima, H.; Kato, Y.; Sakaida, Y.; Guo, S.; Hosoe, S. Development of a Nursing-Care Assistant Robot RIBA That Can Lift a Human in its Arms. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010.

37. Lunenburg, J.; Van Den Dries, S.; Elfring, J.; Janssen, R.; Sandee, J.; van de Molengraft, M. Tech United Eindhoven Team Description 2013. In Proceedings of the RoboCup International Symposium, Eindhoven, The Netherlands, 1 July 2013.

38. Kittmann, R.; Fröhlich, T.; Schäfer, J.; Reiser, U.; Weißhardt, F.; Haug, A. Let me Introduce Myself: I am Care-O-bot 4, a Gentleman Robot. In Proceedings of the Mensch und Computer 2015, Stuttgart, Germany, 6–9 September 2015.

39. Palacín, J.; Martínez, D. Improving the Angular Velocity Measured with a Low-Cost Magnetic Rotary Encoder Attached to a Brushed DC Motor by Compensating Magnet and Hall-Effect Sensor Misalignments. Sensors 2021, 21, 4763.

40. Palacín, J.; Rubies, E.; Clotet, E.; Martínez, D. Evaluation of the Path-Tracking Accuracy of a Three-Wheeled Omnidirectional Mobile Robot Designed as a Personal Assistant. Sensors 2021, 21, 7216.

41. Rubies, E.; Palacín, J. Design and FDM/FFF Implementation of a Compact Omnidirectional Wheel for a Mobile Robot and Assessment of ABS and PLA Printing Materials. Robotics 2020, 9, 43.

42. Moreno, J.; Clotet, E.; Tresanchez, M.; Martínez, D.; Casanovas, J.; Palacín, J. Measurement of Vibrations in Two Tower-Typed Assistant Personal Robot Implementations with and without a Passive Suspension System. Sensors 2017, 17, 1122.

43. Gardecki, A.; Podpora, M. Experience from the operation of the Pepper humanoid robots. In Proceedings of the Progress in Applied Electrical Engineering (PAEE), Koscielisko, Poland, 25–30 June 2017; pp. 1–6.

44. Cooper, S.; Di Fava, A.; Vivas, C.; Marchionni, L.; Ferro, F. ARI: The Social Assistive Robot and Companion. In Proceedings of the 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020.

45. Kompaï Robots. Available online: http://kompairobotics.com/robot-kompai/ (accessed on 27 May 2022).

46. Zenbo Robots. Available online: https://zenbo.asus.com/ (accessed on 27 May 2022).

47. Weiss, A.; Hannibal, G. What makes people accept or reject companion robots? In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference (PETRA ’18), Corfu, Greece, 26–29 June 2018.

48. Qian, J.; Zi, B.; Wang, D.; Ma, Y.; Zhang, D. The Design and Development of an Omni-Directional Mobile Robot Oriented to an Intelligent Manufacturing System. Sensors 2017, 17, 2073.

49. Pages, J.; Marchionni, L.; Ferro, F. Tiago: The modular robot that adapts to different research needs. In Proceedings of the International workshop on robot modularity, IROS, Daejeon, Korea, 9–14 October 2016.

50. Yamaguchi, U.; Saito, F.; Ikeda, K.; Yamamoto, T. HSR, Human Support Robot as Research and Development Platform. In Proceedings of the 6th International Conference on Advanced Mechatronics (ICAM2015), Tokyo, Japan, 5–8 December 2015.

51. Jiang, C.; Hirano, S.; Mukai, T.; Nakashima, H.; Matsuo, K.; Zhang, D.; Honarvar, H.; Suzuki, T.; Ikeura, R.; Hosoe, S. Development of High-functionality Nursing-care Assistant Robot ROBEAR for Patient-transfer and Standing Assistance. In Proceedings of the JSME Conference on Robotics and Mechatronics, Kyoto, Japan, 17–19 May 2015.

52. Hendrich, N.; Bistry, H.; Zhang, J. Architecture and Software Design for a Service Robot in an Elderly-Care Scenario. Engineering 2015, 1, 27–35.

53. Yamazaki, K.; Ueda, R.; Nozawa, S.; Kojima, M.; Okada, K.; Matsumoto, K.; Ishikawa, M.; Shimoyama, I.; Inaba, M. Home-Assistant Robot for an Aging Society. Proc. IEEE 2012, 100, 2429–2441.

54. Ammi, M.; Demulier, V.; Caillou, S.; Gaffary, Y.; Tsalamlal, Y.; Martin, J.-C.; Tapus, A. Haptic Human-Robot Affective Interaction in a Handshaking Social Protocol. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI ’15), Portland, OR, USA, 2–5 March 2015.

55. Tapus, A.; Tapus, C.; Mataric, M. Long term learning and online robot behavior adaptation for individuals with physical and cognitive impairments. Springer Tracts Adv. Robot. 2010, 62, 389–398.

56. Bohren, J.; Rusu, R.B.; Jones, E.G.; Marder-Eppstein, E.; Pantofaru, C.; Wise, M.; Mösenlechner, L.; Meeussen, W.; Holzer, S. Towards Autonomous Robotic Butlers: Lessons Learned with the PR2. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

57. Kume, Y.; Kawakami, H. Development of Power-Motion Assist Technology for Transfer Assist Robot. Matsushita Tech. J. 2008, 54, 50–52. (In Japanese)

58. Zhang, Z.; Cao, Q.; Zhang, L.; Lo, C. A CORBA-based cooperative mobile robot system. Ind. Robot. Int. J. 2009, 36, 36–44.

59. Kanda, S.; Murase, Y.; Sawasaki, N.; Asada, T. Development of the service robot “enon”. J. Robot. Soc. Jpn. 2006, 24, 288–291. (In Japanese)

60. Palacín, J.; Martínez, D.; Rubies, E.; Clotet, E. Mobile Robot Self-Localization with 2D Push-Broom LIDAR in a 2D Map. Sensors 2020, 20, 2500.

61. Mori, M. The Uncanny Valley. Energy 1970, 7, 33–35. (In Japanese)

62. Mori, M.; MacDorman, K.F.; Kageki, N. The Uncanny Valley [From the Field]. IEEE Robot. Autom. Mag. 2012, 19, 98–100.

63. Bartneck, C.; Kanda, T.; Ishiguro, H.; Hagita, N. My Robotic Doppelgänger–A Critical Look at the Uncanny Valley. In Proceedings of the International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009.

64. Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81.

65. Weiss, A.; Bartneck, C. Meta analysis of the usage of the Godspeed Questionnaire Series. In Proceedings of the 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015.

66. Ortiz-Torres, G.; Castillo, P.; Reyes-Reyes, J. An Actuator Fault Tolerant Control for VTOL vehicles using Fault Estimation Observers: Practical validation. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; pp. 1054–1062.

67. Denavit, J.; Hartenberg, R.S. A kinematic notation for lower-pair mechanisms based on matrices. J. Appl. Mech. 1955, 22, 215–221.

68. Pérez Vidal, A.F.; Rumbo Morales, J.Y.; Ortiz Torres, G.; Sorcia Vázquez, F.D.J.; Cruz Rojas, A.; Brizuela Mendoza, J.A.; Rodríguez Cerda, J.C. Soft Exoskeletons: Development, Requirements, and Challenges of the Last Decade. Actuators 2021, 10, 166.

69. Nikafrooz, N.; Leonessa, A. A Single-Actuated, Cable-Driven, and Self-Contained Robotic Hand Designed for Adaptive Grasps. Robotics 2021, 10, 109.

70. Bateson, M.; Callow, L.; Holmes, J.R.; Redmond Roche, M.L.; Nettle, D. Do Images of ‘Watching Eyes’ Induce Behaviour That Is More Pro-Social or More Normative? A Field Experiment on Littering. PLoS ONE 2013, 8, e82055.

71. Metahuman Creator. Available online: https://www.unrealengine.com/en-US/digital-humans (accessed on 16 February 2021).

72. Unreal Engine. Available online: https://www.unrealengine.com (accessed on 16 February 2021).

73. Epic Games. Available online: https://www.epicgames.com/site/en-US/home (accessed on 16 February 2021).

74. Palacín, J.; Martínez, D.; Clotet, E.; Pallejà, T.; Burgués, J.; Fonollosa, J.; Pardo, A.; Marco, S. Application of an Array of Metal-Oxide Semiconductor Gas Sensors in an Assistant Personal Robot for Early Gas Leak Detection. Sensors 2019, 19, 1957.

75. Cole, J. About Face; MIT Press: Cambridge, MA, USA, 1998.

76. Mehrabian, A. Communication without words. In Communication Theory, 2nd ed.; Mortensen, C.D., Ed.; Routledge: Oxfordshire, UK, 2008.

77. DePaulo, B.M. Nonverbal behavior and self-presentation. Psychol. Bull. 1992, 111, 203–243.

78. Hall, E.T. The Hidden Dimension; Doubleday Company: Chicago, IL, USA, 1966.

79. Walters, M.L.; Dautenhahn, K.; te Boekhorst, R.; Koay, K.L.; Kaouri, C.; Woods, S.; Nehaniv, C.; Lee, D.; Werry, I. The influence of subjects’ personality traits on personal spatial zones in a human–robot interaction experiment. In Proceedings of the IEEE international workshop on robot and human interactive communication, Nashville, TN, USA, 13–15 August 2005; pp. 347–352.

80. Saunderson, S.; Nejat, G. How Robots Influence Humans: A Survey of Nonverbal Communication in Social Human-Robot Interaction. Int. J. Soc. Robot. 2019, 11, 575–608.

81. Gonsior, B.; Sosnowski, S.; Mayer, C.; Blume, J.; Radig, B.; Wollherr, D.; Kühnlenz, K. Improving aspects of empathy and subjective performance for HRI through mirroring facial expressions. In Proceedings of the IEEE international workshop on robot and human interactive communication, Atlanta, GA, USA, 31 July–3 August 2011; pp. 350–356.

82. Cook, M. Gaze and mutual gaze in social encounters. Am. Sci. 1977, 65, 328–333.

83. Mazur, A.; Rosa, E.; Faupel, M.; Heller, J.; Leen, R.; Thurman, B. Physiological aspects of communication via mutual gaze. Am. J. Sociol. 1980, 86, 50–74.

84. Babel, F.; Kraus, J.; Miller, L.; Kraus, M.; Wagner, N.; Minker, W.; Baumann, M. Small Talk with a Robot? The Impact of Dialog Content, Talk Initiative, and Gaze Behavior of a Social Robot on Trust, Acceptance, and Proximity. Int. J. Soc. Robot. 2021, 13, 1485–1498.

85. Belkaid, M.; Kompatsiari, K.; De Tommaso, D.; Zablith, I.; Wykowska, A. Mutual gaze with a robot affects human neural activity and delays decision-making processes. Sci. Robot. 2021, 6, eabc5044.

86. Chidambaram, V.; Chiang, Y.-H.; Mutlu, B. Designing persuasive robots: How robots might persuade people using vocal and nonverbal cues. In Proceedings of the ACM/IEEE international conference human–robot interaction, Boston, MA, USA, 5–8 March 2012; pp. 293–300.

87. Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I.

88. Montaño-Serrano, V.M.; Jacinto-Villegas, J.M.; Vilchis-González, A.H.; Portillo-Rodríguez, O. Artificial Vision Algorithms for Socially Assistive Robot Applications: A Review of the Literature. Sensors 2021, 21, 5728.

89. Rubies, E.; Palacín, J.; Clotet, E. Enhancing the Sense of Attention from an Assistance Mobile Robot by Improving Eye-Gaze Contact from Its Iconic Face Displayed on a Flat Screen. Sensors 2022, 22, 4282.

90. Aly, A.; Tapus, A. Towards an intelligent system for generating an adapted verbal and nonverbal combined behavior in human–robot interaction. Auton. Robot. 2016, 40, 193–209.

91. Cao, Z.; Bryant, D.; Molteno, T.C.A.; Fox, C.; Parry, M. V-Spline: An Adaptive Smoothing Spline for Trajectory Reconstruction. Sensors 2021, 21, 3215.

92. Shi, D.; Collins, E.G., Jr.; Goldiez, B.; Donate, A.; Liu, X.; Dunlap, D. Human-aware robot motion planning with velocity constraints. In Proceedings of the International symposium on collaborative technologies and systems, Irvine, CA, USA, 19–23 May 2008; pp. 490–497.

93. Tsui, K.M.; Desai, M.; Yanco, H.A. Considering the Bystander’s perspective for indirect human–robot interaction. In Proceedings of the 5th ACM/IEEE International Conference on Human Robot Interaction, Osaka, Japan, 2–5 March 2010; pp. 129–130.

94. Butler, J.T.; Agah, A. Psychological effects of behavior patterns of a mobile personal robot. Auton. Robot. 2001, 10, 185–202.

95. Charisi, V.; Davison, D.; Reidsma, D.; Evers, V. Evaluation methods for user-centered child-robot interaction. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 545–550.

96. Luo, L.; Ogawa, K.; Ishiguro, H. Identifying Personality Dimensions for Engineering Robot Personalities in Significant Quantities with Small User Groups. Robotics 2022, 11, 28.

97. Dey, A.K.; Abowd, G.D.; Salber, D. A conceptual framework and a toolkit for supporting the rapid prototyping of context-aware applications. Hum.-Comput. Interact. 2001, 16, 97–166.

98. Rosa, J.H.; Barbosa, J.L.V.; Kich, M.; Brito, L. A Multi-Temporal Context-aware System for Competences Management. Int. J. Artif. Intell. Educ. 2015, 25, 455–492.

99. Da Rosa, J.H.; Barbosa, J.L.; Ribeiro, G.D. ORACON: An adaptive model for context prediction. Expert Syst. Appl. 2016, 45, 56–70.

100. Bricon-Souf, N.; Newman, C.R. Context awareness in health care: A review. Int. J. Med. Inf. 2007, 76, 2–12.

| Year | Robot Name | Omnidirectional Mobility | Autonomous | Articulated Arms | Articulated Hands | Handle a Person | Face | Animated Face | Gaze Feedback |
|---|---|---|---|---|---|---|---|---|---|
|  | APR-02 (This paper) | 3W | Yes | Yes | No | No, walk-tool | Iconic | Yes | Yes |
| 2020 | ARI [44] C | No | Yes | Yes | Yes | No | Iconic | Eyes | Yes |
| 2019 | Kompaï-3 [45] C | No | Yes | No | No | No, walk-tool | Iconic | No | No |
| 2018 | Zenbo Junior [46] C | No | Yes | No | No | No | Iconic | Yes | Yes |
| 2018 | BUDDY [47] | No | Yes | No | No | No | Iconic | Yes | No |
| 2017 | Pepper [43] C | 3W | Yes | Yes | Yes | No | Iconic | Yes | No |
| 2017 | Zenbo [46] C | No | Yes | No | No | No | Iconic | Yes | Yes |
| 2017 | RedwallBot-1 [48] | 4W | Yes | No | No | No | No | No | No |
| 2016 | TIAGo [49] C | No/4W | Yes | Yes | Yes | No | No | No | No |
| 2016 | APR-01 [29] | 3W | Yes | Long stick | No | No, walk-tool | No | No | No |
| 2016 | Kompaï-2 [45] C | No | Yes | No | No | No, walk-tool | Iconic | Eyes | No |
| 2015 | HSR [50] | Yes A | Yes | Yes | Yes | No | No | No | No |
| 2015 | Care-O-bot 4 [38] C | 3W | Yes | Yes | Yes | No | Iconic | Eyes | Yes |
| 2015 | ROBEAR [51] | No | Yes | Yes | No | Yes | Zoomorphic | No | No |
| 2013 | ASTRO [34] | No | Yes | No | No | No, walk-tool | Iconic | No | No |
| 2013 | Amigo [37] | 4W | Yes | Yes | Yes | No | No | No | No |
| 2013 | Robot-Era [52] | No | Yes | Yes | Yes | No | Iconic | No | No |
| 2012 | Robot Maid [53] | No | Yes | Yes | Yes | No | Iconic | No | No |
| 2012 | Meka [54] | Yes A | Yes | Yes | Yes | No | Iconic | Yes | Yes |
| 2010 | RIBA [36] | 4W | Yes | Yes | No | Yes | Zoomorphic | No | No |
| 2010 | Bandit-II [55] | No | Yes | Yes | Yes | No | Mechanistic | Yes | No |
| 2010 | PR2 [56] | Yes A | Yes | Yes | Yes | No | No | No | No |
| 2009 | Kompaï-1 [45] C | No | Yes | No | No | No, walk-tool | Iconic | No | No |
| 2009 | Twendy-One [35] | 4W | Yes | Yes | Yes | Partially | Zoomorphic | No | No |
| 2008 | TAR [57] | No | No | Yes | No | Yes | No | No | No |
| 2007 | SMARTPAL [58] | Yes B | Yes | Yes | Yes | No | No | No | No |
| 2005 | Enon [59] C | No | Yes | Yes | No | No | Iconic | No | No |
| 2001 | Robovie [33] | No | Yes | Yes | No | No | Mechanistic | No | No |

| Robot Arm Alternatives | |

|---|---|

| Rigid | Articulated |

|  |

| 5 votes | 71 votes (93%) |

| Link | ||||

|---|---|---|---|---|

| 1 | ||||

| 2 | ||||

| 3 | ||||

| 4 |
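Link tables of this kind are usually expressed in the Denavit-Hartenberg notation cited in the references, where each link contributes one homogeneous transform built from four parameters (θ, d, a, α). A minimal sketch, assuming the classic DH convention and a table that lists those four parameters per link; the numbers in the usage example are illustrative placeholders, not the APR-02 arm parameters.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def dh_transform(theta: float, d: float, a: float, alpha: float) -> Matrix:
    """Homogeneous transform of one link in the classic Denavit-Hartenberg convention."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(
        links: Sequence[Tuple[float, float, float, float]]) -> Tuple[float, float, float]:
    """Chain the link transforms (theta, d, a, alpha) and return the end position."""
    T: Matrix = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, d, a, alpha in links:
        T = matmul(T, dh_transform(theta, d, a, alpha))
    return T[0][3], T[1][3], T[2][3]


if __name__ == "__main__":
    # Illustrative 4-link chain in metres and radians (NOT the APR-02 values).
    arm = [
        (math.radians(30), 0.0, 0.0, math.radians(90)),
        (math.radians(-45), 0.0, 0.25, 0.0),
        (math.radians(60), 0.0, 0.20, 0.0),
        (0.0, 0.0, 0.10, 0.0),
    ]
    print(forward_kinematics(arm))
```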

| Robot Forearm and Hand Alternatives | |||||

|---|---|---|---|---|---|

| Original | Flexible gripper | Iconic | Fixed (skin) | Fixed (white) | Articulated |

|  |  |  |  |  |

| 0 votes | 11 votes | 9 votes | 0 votes | 65 votes (86%) | 0 votes |

| Robot Role | Robot Face Alternatives | | | | |
|---|---|---|---|---|---|
| | Information | HAL | Iconic | Robot | Synthetic human |
| Guide | 9 votes | 4 votes | 46 votes (60%) | 4 votes | 13 votes |
| Assistant | 2 votes | 2 votes | 46 votes (60%) | 6 votes | 20 votes |
| Security | 2 votes | 65 votes (85%) | 0 votes | 9 votes | 0 votes |
| Average votes | 6% | 31% | 40% | 8% | 14% |
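The "Average votes" row can be reproduced by pooling the three roles: the votes for each face alternative are summed over Guide, Assistant and Security and divided by all 228 votes cast (76 per role, so this equals the mean of the per-role shares). A minimal sketch, assuming this pooling is how the row was obtained; it matches all five reported values after rounding to whole percentages.

```python
from typing import Dict, List

# Vote counts per role, in the column order of the face table.
FACE_OPTIONS = ["Information", "HAL", "Iconic", "Robot", "Synthetic human"]
VOTES: Dict[str, List[int]] = {
    "Guide": [9, 4, 46, 4, 13],
    "Assistant": [2, 2, 46, 6, 20],
    "Security": [2, 65, 0, 9, 0],
}


def average_share(votes: Dict[str, List[int]]) -> List[float]:
    """Percentage of all votes (over every role) received by each alternative."""
    n_options = len(next(iter(votes.values())))
    column_totals = [sum(row[i] for row in votes.values()) for i in range(n_options)]
    grand_total = sum(column_totals)
    return [100.0 * total / grand_total for total in column_totals]


if __name__ == "__main__":
    for option, share in zip(FACE_OPTIONS, average_share(VOTES)):
        print(f"{option}: {share:.0f}%")
```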

| Neutral Facial Expression Alternatives | |||

|---|---|---|---|

| Plain | Slightly smiley | Short | Short smiley |

|  |  |  |

| 0 votes | 61 votes (91%) | 1 vote | 5 votes |

| Sound | Face Mouth Animation Alternatives | | |
|---|---|---|---|
| | Wave-line | Rectangular | Rounded |
| Beeps | 67 votes (100%) | 0 votes | 0 votes |
| Speech | 3 votes | 6 votes | 58 votes (87%) |
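For the rounded mouth chosen for speech, a common way to drive the animation is to make the mouth opening follow the short-term loudness (RMS amplitude) of the audio being played. A minimal sketch, assuming mono samples normalized to [-1, 1], a face redrawn at 25 frames per second and a hypothetical `gain`; it illustrates the idea and is not necessarily how the APR-02 synchronizes its mouth with speech or beeps.

```python
import math
from typing import List, Sequence


def mouth_openings(samples: Sequence[float], sample_rate: int,
                   fps: int = 25, gain: float = 2.0) -> List[float]:
    """Return one mouth-opening value in [0, 1] per displayed frame.

    Each frame covers sample_rate // fps audio samples; the opening is the RMS
    amplitude of those samples scaled by a (hypothetical) gain and clipped to 1.
    """
    frame_len = max(1, sample_rate // fps)
    openings: List[float] = []
    for start in range(0, len(samples), frame_len):
        chunk = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in chunk) / len(chunk))
        openings.append(min(1.0, gain * rms))
    return openings


if __name__ == "__main__":
    rate = 16000
    # 0.2 s of silence followed by 0.2 s of a 220 Hz tone at a quarter of full scale
    audio = [0.0] * (rate // 5) + [
        0.25 * math.sin(2 * math.pi * 220 * n / rate) for n in range(rate // 5)
    ]
    print([round(x, 2) for x in mouth_openings(audio, rate)])
```

Clipping at 1 keeps loud passages from drawing a mouth larger than the face allows; a real implementation would probably also smooth the values between frames.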

| Proximity Feedback Alternatives | |||

|---|---|---|---|

| Mouth gestures | None | ||

|  |  |  |

| 49 votes (94%) | 3 votes | ||
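Proximity feedback needs a rule that turns the measured distance to a person into a facial cue. A minimal sketch, assuming the commonly quoted metric approximations of Hall's proxemic zones (about 0.45 m, 1.2 m and 3.6 m) and a purely hypothetical assignment of mouth gestures to zones; the gesture names are placeholders, not the APR-02's actual expressions.

```python
from typing import Dict, List, Tuple

# Approximate upper bounds (metres) of Hall's proxemic zones.
HALL_ZONES: List[Tuple[float, str]] = [
    (0.45, "intimate"),
    (1.2, "personal"),
    (3.6, "social"),
]

# Hypothetical mapping from zone to a mouth gesture of the iconic face.
MOUTH_FOR_ZONE: Dict[str, str] = {
    "intimate": "surprised",
    "personal": "wide smile",
    "social": "slight smile",
    "public": "neutral",
}


def proxemic_zone(distance_m: float) -> str:
    """Classify a person's distance from the robot into a proxemic zone."""
    for upper_bound, zone in HALL_ZONES:
        if distance_m < upper_bound:
            return zone
    return "public"


def mouth_feedback(distance_m: float) -> str:
    """Mouth gesture to display for a person at the given distance."""
    return MOUTH_FOR_ZONE[proxemic_zone(distance_m)]


if __name__ == "__main__":
    for d in (0.3, 1.0, 2.5, 5.0):
        print(d, proxemic_zone(d), mouth_feedback(d))
```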

| Gaze Feedback Alternatives | |||

|---|---|---|---|

| Eye-gaze feedback | None | ||

|  |  |  |

| 49 votes (94%) | 3 votes | ||
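Eye-gaze feedback can be sketched as moving the pupils of the on-screen face toward the person detected by the camera (for example with a Viola-Jones face detector, which the reference list includes; detection itself is not shown). A minimal sketch, assuming an unmirrored camera image, a camera and screen that face the same direction, and a hypothetical maximum pupil travel of 20 screen pixels.

```python
from typing import Tuple


def _clamp(x: float, low: float = -1.0, high: float = 1.0) -> float:
    return max(low, min(high, x))


def pupil_offset(face_center_px: Tuple[float, float],
                 image_size_px: Tuple[int, int],
                 max_offset_px: float = 20.0) -> Tuple[float, float]:
    """Pupil displacement (screen pixels) that points the eyes at a detected face."""
    u, v = face_center_px
    width, height = image_size_px
    # Normalize the face position to [-1, 1], with (0, 0) at the image centre.
    nx = _clamp(2.0 * u / width - 1.0)
    ny = _clamp(2.0 * v / height - 1.0)
    # A person on the right of the (unmirrored) image stands on the robot's right,
    # which a viewer sees as the left of the screen, so x is negated.
    # Image rows and screen rows both grow downward, so y keeps its sign.
    return -nx * max_offset_px, ny * max_offset_px


if __name__ == "__main__":
    print(pupil_offset((480, 120), (640, 480)))
```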

| Arm Gesture Alternatives | |||

|---|---|---|---|

| Random arm gestures | None | ||

|  |  |  |

| 39 votes (90%) | 4 votes | ||

| Alternative Motion Planning Strategies | |

|---|---|

| Straight path | Smooth path (spline interpolated) |

|  |

| 0 votes | 43 votes (100%) |
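The preferred smooth path can be produced by interpolating a spline through the planned waypoints instead of joining them with straight segments. The type of spline is not specified in this section, so this sketch swaps in a uniform Catmull-Rom spline, chosen because it passes exactly through every waypoint; the waypoints and the sampling density are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _catmull_rom_1d(a: float, b: float, c: float, d: float, t: float) -> float:
    """Uniform Catmull-Rom blend of one coordinate between b (t=0) and c (t=1)."""
    return 0.5 * (2.0 * b
                  + (-a + c) * t
                  + (2.0 * a - 5.0 * b + 4.0 * c - d) * t * t
                  + (-a + 3.0 * b - 3.0 * c + d) * t * t * t)


def smooth_path(waypoints: Sequence[Point], samples_per_segment: int = 10) -> List[Point]:
    """Sample a Catmull-Rom spline that passes through all waypoints."""
    if len(waypoints) < 2:
        return list(waypoints)
    # Repeat the end points so the curve starts and ends on the first/last waypoint.
    pts = [waypoints[0], *waypoints, waypoints[-1]]
    path: List[Point] = []
    for i in range(1, len(pts) - 2):
        p0, p1, p2, p3 = pts[i - 1], pts[i], pts[i + 1], pts[i + 2]
        for k in range(samples_per_segment):
            t = k / samples_per_segment
            path.append((_catmull_rom_1d(p0[0], p1[0], p2[0], p3[0], t),
                         _catmull_rom_1d(p0[1], p1[1], p2[1], p3[1], t)))
    path.append(waypoints[-1])
    return path


if __name__ == "__main__":
    # Illustrative waypoints in metres around a corner.
    for x, y in smooth_path([(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (2.0, 1.0)], 4):
        print(f"{x:.3f} {y:.3f}")
```

Unlike the straight path, the heading of the sampled curve changes gradually at each waypoint, which is the property the smooth alternative is meant to provide.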

| Translational Nominal Velocity Alternatives | |

|---|---|

| Low (0.15 m/s) | Medium (0.3 m/s) |

|  |

| 28 votes (65%) | 15 votes |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Palacín, J.; Rubies, E.; Clotet, E. The Assistant Personal Robot Project: From the APR-01 to the APR-02 Mobile Robot Prototypes. Designs 2022, 6, 66. https://doi.org/10.3390/designs6040066
