Abstract

This paper describes the evolution of the Assistant Personal Robot (APR) project developed at the Robotics Laboratory of the University of Lleida, Spain. It covers the first APR-01 prototype, the basic hardware improvements, the specific anthropomorphic improvements, and the preference surveys conducted with engineering students from the same university in order to maximize the perceived affinity with the final APR-02 mobile robot prototype. The anthropomorphic improvements covered the design of the arms, the implementation of the arm and symbolic hand, the selection of a face for the mobile robot, the selection of a neutral facial expression, the selection of an animation for the mouth, the application of proximity feedback, the application of gaze feedback, the use of arm gestures, the selection of the motion planning strategy, and the selection of the nominal translational velocity. The final conclusion is that conducting preference surveys during the implementation of the APR-02 prototype has greatly influenced its evolution and has contributed to increasing the perceived affinity and social acceptability of the prototype, which is now ready for the development of assistance applications in dynamic workspaces.

1. Introduction

Social robots are expected to gradually appear in our everyday life, interacting and communicating with people either at home, work, or during leisure time. The common assumption in the design of social robots is that humans prefer to interact with machines in the same way as they would interact with other humans [1]. The research on social robots comprises two main concepts [2,3]: social acceptability and practical acceptability. Social acceptability refers to how robots are perceived by society, whereas practical acceptability refers to how people perceive the use of a specific robot during or after interacting with this robot in their working environment.

The ideal social robot was defined by Saldien et al. [4] as a robot able to communicate with people through verbal and nonverbal signals, engaging users on the cognitive and on the emotional level. The development of communicative capabilities enables promising applications of social robots, such as the education and care of children with disabilities [5], social assistance in cardiac rehabilitation [6], psychological assessment of patients using robots as diagnostic tools [7], human care [8,9,10], or education as entertainment [11].

The variables influencing the acceptance of social robots have been widely explored in different areas and scenarios. De Graaf et al. [12] conducted an extensive literature review and concluded that the most important variables when evaluating the acceptability of social robots are usefulness, adaptability, enjoyment, sociability, companionship, and perceived behavioral control. In the case of humanoid social robots, the research is focused on the adaptation of the robot appearance and behaviors in order to improve the acceptance by the user [13]. A recent literature review on how the appearance and behavior of a robot affect its application in education can be found in Belpaeme et al. [14]. Other specific works such as Murphy et al. [15] concluded that anthropomorphism (attribution of physical or behavioral human characteristics to a robot [16]) was a critical factor in accepting and rationalizing robot actions and services in hospitality and tourism applications. Alternatively, Gasteiger et al. [17] explored the social acceptability and the practical usability of a cognitive game robot used by older adults during a long-term study. The conclusion was that the social acceptability of a robot can be improved through culturalization, for example, adapting the language and pronunciation of the robot to the culture of the user.

At this moment, the applied research in social and service robots is focused on the improvement of the interaction with the robot. For example, Elazzazi et al. [18] developed a natural language interface for a voice-activated autonomous camera system used during robotic laparoscopic procedures in order to control the camera with a higher level of abstraction. Guillén et al. [19] analyzed the trajectory of a mobile robot and concluded that the development of smoother trajectories increases the acceptability of a mobile robot. Martín et al. [20] proposed the development of a complete inclusive framework for the application of social robotics in rehabilitation with the aim to increase the benefits of the therapies. Ribeiro et al. [21] proposed a complete and exhaustive collaborative healthcare and home service based on an assistant robot. Calderita et al. [22] proposed a combined cyber–physical system to integrate an assistive social robot in a physical or cognitive rehabilitation process. Muthugala et al. [23] presented an intelligent service robot that can acquire knowledge during an interaction and handle the uncertain information in the user instructions. Focused on sensor improvements, Garcia-Salguero et al. [24] investigated the application of a tilting fish-eye camera located in the head of a social robot for human 3D pose estimation and Pathi et al. [25] proposed the use of an egocentric RGB camera to estimate the distance between the robot and the user during an interaction. Finally, Hellou et al. [26] analyzed different robots and reviewed the models used to adapt the robot to its environment, and Holland et al. [27] reviewed the advances in service robots in the healthcare sector, highlighting the benefits of robots during the COVID-19 pandemic.

This paper describes the evolution of the Assistant Personal Robot (APR) project from the initial APR-01 mobile robot prototype implementation to the improved APR-02 design. This paper details the inspiration of the initial design, the basic hardware improvements, the specific anthropomorphic improvements, and the preference surveys conducted with university engineering students during this prototype implementation in order to maximize the perceived affinity and social acceptability of the evolved mobile robot prototype.

Although there are many works in the literature focused on the evaluation of social robotic systems as a whole [28], there are no similar scientific papers describing all the internal stages of the evolution of a prototype design. In this case, the APR concept was initially proposed as a tool to evaluate teleoperation and omnidirectional motion system performances [29,30]. At a later stage, the improved APR-02 prototype was proposed as an autonomous mobile robot with the objective to increase the anthropomorphism and affinity with the robot while continuing the improvement of the performances of its three-wheeled omnidirectional motion system [31,32].

2. Background

2.1. The Assistant Personal Robot (APR) Project

The concept and project of the Assistant Personal Robot (APR) were proposed and developed at the University of Lleida (Lleida, Spain) in order to create and experiment with assistant and companion mobile robots. The first prototype created within this concept was the APR-01 mobile robot prototype (Figure 1-left), first presented by Clotet et al. [29] in 2016 in order to evaluate the implementation of teleoperation services and the performance of a custom omnidirectional motion system. The evolved APR-02 mobile robot prototype (Figure 1-right) was proposed with the objective to increase the anthropomorphism and affinity with the robot, to operate autonomously, and to optimize the performance of its three-wheeled omnidirectional motion system [31,32]. This paper summarizes the internal process followed to evolve from the initial APR-01 implementation to the APR-02 mobile robot prototype.

Figure 1.

Mobile robots developed in the APR project: initial APR-01 prototype (left) and new APR-02 prototype (right).

2.2. Reference Assistant and Companion Mobile Robots

Table 1 shows the list of wheeled mobile robots that were used as a reference for the APR concept in order to create the APR-01 and APR-02 mobile robot prototypes. The table also highlights the models that have been commercialized. The APR-01 was designed as a teleoperation tool and its development was focused on providing omnidirectional motion. The most common motion system in the mobile robot designs that inspired the APR-01 mobile robot design (from Robovie [33], 2001 up to ASTRO [34], 2013) used conventional wheels, while the most promising mobility was provided by Twendy-One [35], RIBA [36], and Amigo [37], which used an omnidirectional motion system based on four omnidirectional wheels (4W). However, the use of four omnidirectional wheels has the disadvantage that the practical implementation of some omnidirectional angular and straight displacements requires wheel slippage, a factor that complicates the odometry and the control of the mobile robot. In order to avoid this problem, the motion system of the APR-01 mobile robot prototype was initially designed to use a three-wheeled (3W) omnidirectional motion system, presented in 2016 [29]. This proposal is consistent with the omnidirectional system included in the Care-O-bot 4 [38], presented in 2015. The motion performance achieved with the three-wheeled omnidirectional motion system implemented in the APR mobile robot has been evaluated and improved in [32,39,40,41,42] with very successful results, but since then only the commercial robot Pepper [43] has implemented a similar three-wheeled (3W) omnidirectional motion system.
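As an illustration of why a three-wheeled layout can avoid the slippage issue, the following minimal sketch computes the wheel speeds required for a desired planar velocity using the standard kinematic model of a three-wheeled omnidirectional platform. With three wheels, the three wheel speeds map one-to-one to the three components of the planar motion (two translations and one rotation), so no redundant constraint has to be resolved by slipping. The wheel placement (`WHEEL_ANGLES_DEG`) and the wheel distance (`WHEEL_DISTANCE_M`) are hypothetical values chosen for the example and are not taken from the APR design.

```python
import math

# Hypothetical geometry, assumed for illustration only (not the APR values).
WHEEL_ANGLES_DEG = (60.0, 180.0, 300.0)  # angular position of each wheel around the base
WHEEL_DISTANCE_M = 0.20                  # distance from the base center to each wheel


def wheel_speeds(vx, vy, omega):
    """Return the tangential speed (m/s) of each wheel for a body velocity.

    vx, vy: translational velocity of the robot in its own frame (m/s).
    omega:  angular velocity of the robot (rad/s).
    Each wheel is assumed to drive along the tangent of the circle on which
    the wheels are placed.
    """
    speeds = []
    for angle_deg in WHEEL_ANGLES_DEG:
        a = math.radians(angle_deg)
        speeds.append(-math.sin(a) * vx + math.cos(a) * vy + WHEEL_DISTANCE_M * omega)
    return speeds


# Pure rotation: all wheels turn at the same tangential speed.
print(wheel_speeds(0.0, 0.0, 1.0))
# Straight displacement without rotation: the wheel speeds sum to zero.
print(wheel_speeds(0.3, 0.0, 0.0))
```

Under these assumptions, any combination of translation and rotation is obtained by simply adding the contributions of each velocity component for each wheel.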

Table 1.

List of wheeled assistant or companion mobile robots used as references during the evolution of the APR mobile robots.

The information shown in Table 1 highlights the application of the mobile robots Kompaï-1 [45], ASTRO [34], and Kompaï-2 [45] as walking assistants, and the APR-01 was originally designed with a stick-arm articulated at the shoulder in order to be able to operate as a walking assistant tool. This capability to operate as a walking assistant has been maintained in the APR-02 design [10] and it has also been included in other mobile robots such as Kompaï-3 [45]. Table 1 also highlights the capacity to handle a person, implemented in TAR [57], RIBA [36], and ROBEAR [51], but this capacity was discarded because of the reduced base and weight of the APR mobile robot concept.

With regard to the height of the robot, most of the assistance robots presented in Table 1 have heights between 1.0 m and 1.50 m; for example, Robovie [33] is 1.20 m, Enon [59] is 1.30 m, and Twendy-One [35] is 1.46 m. The robots that are able to handle a person are bigger and slightly taller: RIBA [36] is 1.40 m and ROBEAR [51] is 1.50 m. Some robots also have variable height: Amigo [37] can be 1.0–1.35 m and HSR [50] can be 1.0–1.35 m. Based on the results published from the application of these robots, the APR-01 mobile robot was designed with a height of 1.60 m in order to encourage face-to-face videoconference interaction with standing people.

The design of the APR-01 was focused on telecontrol and consequently did not include an articulated hand, because the accurate control of an articulated hand requires a precise vision system and huge computational resources. In this direction, Table 1 shows that only 13 of the 26 robots listed were originally designed with articulated hands.

Finally, other features that Table 1 highlights and that have been considered for inclusion in the design of the evolved APR-02 prototype are the use of a characteristic animated face and the implementation of gaze feedback during the interaction with the mobile robot. This gaze feedback feature was only included in Meka [54] and Care-O-bot 4 [38] before the development of the APR-01, and more recently in Zenbo [46], Zenbo Junior [46], and ARI [44].

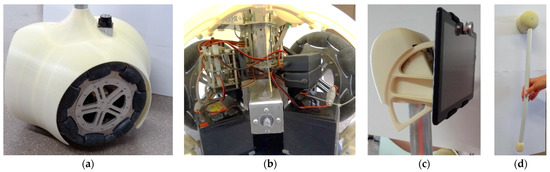

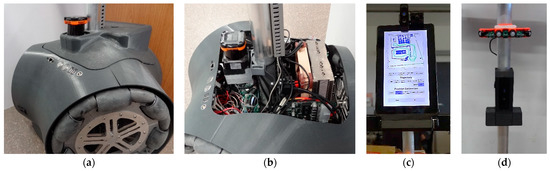

2.3. Initial APR-01 Mobile Robot Prototype

The APR-01 mobile robot prototype (Figure 1-left) was first described in Clotet et al. [29] and was originally conceived as a research testbench for telepresence services, omnidirectional motion systems, sensors, controllers, motors, omnidirectional wheels, batteries, and structural materials. The APR-01 was a tall (1.60 m) and medium-weight (33 kg) mobile robot. Its slim profile was defined by a thin aluminum tube that connected the base and the head of the robot. The structure of the base of the robot that internally supported the three motors and three lead batteries was also made of aluminum (Figure 2b). The cover of the base and other visible parts of the robot were made with additive manufacturing in ABS (Figure 2a) using a Dimension SST 1200es printer from Stratasys (Edina, MN, USA). The omnidirectional motion of the mobile robot was achieved with the use of three optimized omnidirectional wheels [30] also made of aluminum (Figure 2a), although the free rollers of the wheels were covered with polyolefin heat shrink tubing.

Figure 2.

Close view of the APR-01 mobile robot prototype: (a) detail of the base of the robot containing the omnidirectional wheels, the characteristic ABS cover, and a 2D low-cost LIDAR; (b) detail of the interior of the base containing three lead batteries, the DC motors of the wheels, the battery chargers, and the main electronic board; (c) detail of the tablet located at the top of the robot and of the panoramic lens; and (d) detail of the arm used in walk-helper applications.

The APR-01 prototype was created to evaluate the development of telepresence services based on the communication capabilities of an Android© tablet that was the main control unit of the robot, placed on the head of the robot (Figure 2c) in order to use its screen to display usage information. The control software included a custom server videoconference application that was able to show the face of the teleoperator that remotely controlled the mobile robot [29]. Additionally, the rear camera of the tablet (that was over the screen of the tablet, showing what was in front of the robot) had a panoramic lens attached (Figure 2c) in order to double the limited field of view of the rear cameras included in the tablets and to offer this scenario information to the operator of the mobile robot. The tablet with the panoramic camera could be oriented to view the ground in front of the robot or the area in front of the robot (Figure 2c).

The tablet was connected with only a USB wire to an electronic control board that was in charge of controlling the three DC motors of the wheels, an additional small DC motor that controlled the angular orientation of the tablet, and two additional DC motors that controlled the rotation of the shoulders of the mobile robot. The USB wire provided power to the tablet and provided communication control with the electronic board of the robot. This configuration allowed the tablet to continue operating using its internal lithium batteries even when the lead batteries of the mobile robot were exhausted.

The mobile robot was designed to allow remote control by registered remote operators (emergency services, family member, doctor, etc.) that could take control of the robot using an Android© smartphone, an Android© tablet, or a PC. The custom client videoconference application provided access to the images gathered from the frontal and rear cameras of the tablet embedded in the robot. The custom client application also provided control of the motion of the mobile robot and of the orientation of the head by using an onscreen joystick in the client Android© application and by using a physical joystick on a PC [29].

The design of the APR-01 mobile robot included a basic or low-cost LIDAR URG-04 from Hokuyo (Figure 2a) with a distance range of 4 m that was controlled by a high-priority agent that was able to stop the robot in case of detecting an obstacle in the current planned trajectory. Finally, the arms of the mobile robot had one degree of freedom and a long heavy stick (Figure 2d) made of aluminum designed to mimic the natural motion of the arms of a person while walking and to provide a holding stick when the robot was used as a walking assistant tool.
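The safety behavior described above can be sketched as a simple geometric test on each LIDAR scan. The following example is only a hedged illustration of the idea and not the agent implemented in the APR-01: the function name `obstacle_in_path`, the rectangular corridor model, and the default values of `half_width_m` and `stop_distance_m` are all assumptions introduced for this example.

```python
import math


def obstacle_in_path(scan, heading_rad, half_width_m=0.30, stop_distance_m=1.0):
    """Return True if any LIDAR return lies inside a corridor along the heading.

    scan: iterable of (angle_rad, range_m) pairs expressed in the robot frame.
    heading_rad: direction of the planned displacement in the robot frame.
    half_width_m, stop_distance_m: hypothetical corridor dimensions.
    """
    for angle, rng in scan:
        # Skip invalid readings (no return, zero range, or NaN).
        if rng <= 0.0 or math.isinf(rng) or math.isnan(rng):
            continue
        rel = angle - heading_rad
        along = rng * math.cos(rel)    # distance along the planned direction
        across = rng * math.sin(rel)   # lateral offset from the planned direction
        if 0.0 < along <= stop_distance_m and abs(across) <= half_width_m:
            return True
    return False


# A return 0.5 m straight ahead blocks a forward displacement.
print(obstacle_in_path([(0.0, 0.5)], heading_rad=0.0))
# The same return is ignored when it is beyond the stop distance.
print(obstacle_in_path([(0.0, 2.0)], heading_rad=0.0))
```

In a sketch like this, a high-priority loop would evaluate the test for every new scan and command zero velocity to the motors whenever it returns True.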

2.4. Basic APR-02 Prototype Evolution

The initial APR-01 mobile robot prototype design was proposed to be operated remotely as a telepresence service and to experiment with a three-wheeled omnidirectional motion system. Considering the information described in Table 1 and the good results obtained with the omnidirectional motion system, the natural evolution of this mobile robot design concept was the inclusion of a new control system with autonomous operation capabilities as well as the inclusion of anthropomorphic characteristics in the mobile robot design. The basic APR-02 prototype evolution (Figure 3) is also a tall (1.760 m) and medium-weight (30 kg) mobile robot defined by a thin aluminum tube that connects the base and the head (screen) of the robot.

Figure 3.

Close view of the APR-02 mobile robot prototype: (a) detail of the base of the robot containing the omnidirectional wheels, the characteristic cover, and a 2D Precision LIDAR; (b) detail of the interior of the base containing an onboard PC above three lithium batteries; (c) detail of the panoramic tactile screen of the robot which has a small black structure on its top to hold and hide an RGB-D and an RGB panoramic camera; and (d) detail of the central black structure that holds and hides two RGB-D cameras and of the orange structure that supports PIR sensors and warning lights.

The development of the new APR-02 prototype design as an autonomous mobile robot is based on the use of an improved 2D LIDAR (Figure 3a) and on the use of a PC as the main control board (Figure 3b). The LIDAR used is the UTM-30LX from Hokuyo, which provides an indoor 2D measurement range of 30 m, a 270° scan range, a scan time of 25 ms, and a 0.25° angular resolution. This LIDAR can be tilted down in order to detect obstacles or holes on the ground [60]. The most relevant advantage of this LIDAR is its operation with a 12 V DC supply, which in practice allows it to be powered directly from 12 V lead acid or lithium batteries (13.4 V at full charge) without using a DC/DC converter. The disadvantages of this LIDAR are its current consumption, which ranges from 0.7 to 1.0 A, and the lack of an internal power-off or standby state, so the power applied from the batteries is controlled with a CMOS transistor operating as a power switch. The PC used in the APR-02 mobile robot is equipped with an Intel Core i7-6700K processor, 16 GB of DDR4 RAM (2 × 8 GB modules in dual channel), and an SSD. This PC provides sensor connectivity by means of 12 USB ports. In addition to the PC, there is a motion control board that provides enhanced control of the angular velocity of the omnidirectional wheels of the robot [39]. Additionally, the Android© tablet used in the head of the APR-01 was replaced with a 12″ tactile screen monitor (Figure 3c) in order to display graphical information. The upper part of the monitor has a support structure that holds and hides an RGB-D camera (Creative 3D Senz, Jurong East, Singapore) and an RGB panoramic camera (Ailipu Technology, Shenzhen, China). The RGB-D camera provides depth information of people in front of the mobile robot, while the panoramic camera provides valuable information covering almost 180° around the robot. The mobile robot also has two additional RGB-D cameras hidden in a central support structure (Figure 3d), one pointed to the ground in front of the robot and one pointed to the front in order to be able to detect children interacting with the robot. There is also an orange support structure with warning lights and six passive infrared (PIR) sensors covering 360° around the robot for security and surveillance applications.
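The LIDAR figures quoted above can be turned into derived quantities that are useful when sizing the processing and power budget. The short sketch below is only an arithmetic illustration based on the values stated in the text (270° scan range, 0.25° step, 25 ms per scan, 12 V supply, 0.7 to 1.0 A); the variable names are introduced here for the example, and the nominal 12 V is assumed for the power estimate.

```python
# Values quoted in the text for the 2D LIDAR.
SCAN_RANGE_DEG = 270.0
ANGULAR_STEP_DEG = 0.25
SCAN_PERIOD_S = 0.025
SUPPLY_VOLTAGE_V = 12.0          # nominal battery voltage, assumed for the estimate
CURRENT_RANGE_A = (0.7, 1.0)

# Number of distance measurements per scan (both end points included).
beams_per_scan = int(round(SCAN_RANGE_DEG / ANGULAR_STEP_DEG)) + 1

# Number of complete scans delivered per second.
scans_per_second = 1.0 / SCAN_PERIOD_S

# Approximate electrical power drawn from the batteries (P = V * I).
power_range_w = tuple(SUPPLY_VOLTAGE_V * current for current in CURRENT_RANGE_A)

print(beams_per_scan)                        # 1081 measurements per scan
print(round(scans_per_second))               # 40 scans per second
print([round(p, 1) for p in power_range_w])  # [8.4, 12.0] watts
```

Under these assumptions the sensor delivers roughly 43,000 range measurements per second and draws between 8.4 and 12 W, which helps explain why the supply is switched off externally when the LIDAR is not needed.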

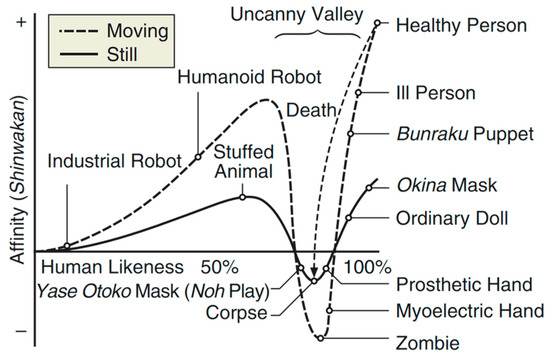

2.5. The Uncanny Valley Theory

The incorporation of anthropomorphic characteristics in a mobile robot prototype is a huge and challenging task. In order to graphically illustrate this difficulty, Mori [61] proposed in 1970 the Uncanny Valley theory (Figure 4) (originally published in Japanese and updated by Mori et al. [62] in 2012), suggesting that there is a relationship between the human likeness of a robot or entity and its perceived affinity. The existence of the Uncanny Valley has lately been questioned by several authors such as Bartneck et al. [63], who concluded that the Uncanny Valley hypothesis should no longer be used to guide the development of highly realistic androids because “anthropomorphism and likeability may be multi-dimensional constructs that cannot be projected into a two-dimensional space”. However, the APR design concept is far from being considered a highly realistic robot, so the incorporation of anthropomorphic improvements during the prototype development of the APR-02 design has been made by considering the opinion of university engineering students in order to maximize the affinity with the robot while avoiding the Uncanny Valley effect.

Figure 4.

Graph depicting the Uncanny Valley, introduced by Masahiro Mori in 1970 [61], translated and redrawn by Karl F. MacDorman and Norri Kageki in consultation with Mori [62]. Courtesy of Karl F. MacDorman.

The Uncanny Valley theory [61,62] (Figure 4) proposes the existence of a characteristic valley in the relationship between the human likeness of a robot or entity (puppet, mask, toy, etc.) and the perceived Shinwakan, a Japanese word that embraces concepts such as affinity, agreeability, familiarity, and comfort level. According to this theory, the perceived affinity increases with the human likeness of a robot or entity until reaching a local maximum, and then enters a valley, named the Uncanny Valley by Mori [61]. This abrupt decrease from empathy to revulsion appears when human-like entities such as robots fail to attain a lifelike appearance, while the maximum affinity is provided by a healthy person.

2.6. Selection of New Anthropomorphic Improvements for the APR-02

New anthropomorphic improvements to be applied in the evolved APR-02 mobile robot design were selected by conducting short-scale preference surveys with university engineering students during prototype development. The objective of these short-scale surveys was to maximize the perceived affinity and social acceptability of the robot without entering into the Uncanny Valley during the prototype development.

Please note that at this early prototype development stage, the evaluation of the social acceptability of a robot for the selection of improvement alternatives does not require long interaction times, because the concept of social acceptability [2] is based on how robots are perceived. The factors evaluated are the realism of the behavior and the perceived appearance [12].

The selection of the alternative improvements to be implemented in the APR-02 prototype was performed by conducting surveys with groups of engineering students who participated in outreach robotic activities organized periodically at the University of Lleida, Spain, from 2016 to 2020. The activities consisted of a 30 to 45 min presentation of the APR mobile robots followed by a live 5 to 10 min demonstration featuring some current and planned mobile robot improvements. The demonstration was followed by a 5 min break that fostered informal conversations, and then by a final voluntary survey in which the remaining students were asked for their preferences in relation to the planned mobile robot improvements. The total number of participating engineering students was 347 in a total of 20 groups of 7 to 25 students ranging in age from 18 to 21, with an average age of approximately 20 years and an approximate gender distribution of 70% men and 30% women. The normal development of the outreach robotic activities was delayed by the COVID-19 outbreak.

The short surveys consisted of asking the students to express their preference or affinity for one of the proposed improvement alternatives by voting for only one of them using the quick and immediate show-of-hands voting method. The use of questionnaires [64] was discarded because they are designed to test specific quality criteria instead of the attitude towards a robot [65]. Additionally, the non-controversial and non-personal nature of the surveys conducted did not require safeguarding the anonymity of the participants during the voting procedure. The numerical results of each voting survey were registered without additional traces. The majority results of each collective survey were used to select the alternative to be implemented in the APR-02 with the expectation of maximizing the affinity with the robot while avoiding the Uncanny Valley during this prototype development.

Please note that conducting short-scale surveys for the selection of alternative design implementations is a common technique used during the prototype development of mobile robots, although this procedure and the intermediate results obtained are not usually reported.

3. Selection of Mobile Robot Improvements

This section summarizes the planned mobile robot improvements, the preference surveys conducted, and the alternatives finally selected to be implemented in the APR-02 mobile robot prototype. The objective was to maximize the affinity and social acceptance of the mobile robot while optimizing the efforts and resources invested in this evolution. The anthropomorphic improvements proposed include the design of the arms, the implementation of the arm and symbolic hand, the selection of a face for the mobile robot, the selection of a neutral facial expression, the selection of an animation for the mouth, the application of proximity feedback, the application of gaze feedback, the use of arm gestures, the selection of the motion planning strategy, and the selection of the nominal translational velocity.

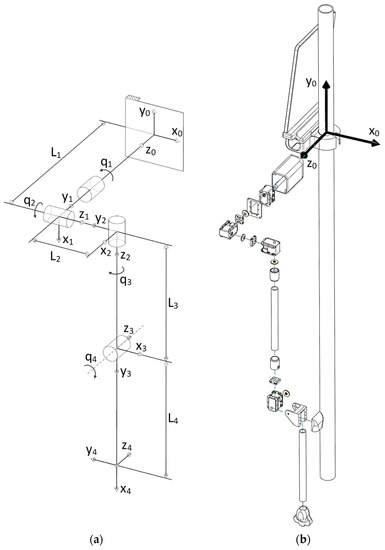

3.1. Selection of the Robot Arm

The first selection of mobile robot improvement to be applied in the APR-02 mobile robot was the implementation of the robot arm. Table 2 shows the two alternatives considered: rigid, representing the original rigid arm implemented in the APR-01 prototype, with one degree of freedom in the shoulder, and articulated, a proposal representing an arm with four degrees of freedom able to mimic basic human gestures.

Table 2.

Alternatives considered to implement the robot arm and votes received.

Table 2 also shows the votes received by each alternative: the rigid alternative had 5 votes and the articulated alternative had 71 votes, so the vast majority of the participants selected the incorporation of an articulated arm in the design of the APR-02 mobile robot. This articulated alternative was recently implemented using digital bus servos operating in a closed loop in order to be robust in terms of fault-tolerant control (FTC) [66] while allowing precise arm movements.
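The majority rule applied to each survey can be written in a few lines. The sketch below uses the votes reported above for the robot arm; the function name `select_by_majority` is introduced here only for illustration.

```python
def select_by_majority(votes):
    """Return the most voted alternative and its share of the total votes."""
    total = sum(votes.values())
    winner = max(votes, key=votes.get)
    return winner, votes[winner] / total


# Votes reported in the text for the two arm alternatives.
arm_votes = {"rigid": 5, "articulated": 71}
winner, share = select_by_majority(arm_votes)
print(f"{winner}: {share:.1%}")  # articulated: 93.4%
```

With 71 of the 76 votes cast, the articulated alternative received approximately 93.4% of the votes in this survey.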

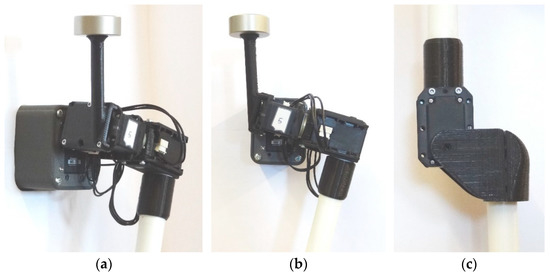

Figure 5a shows the simplified kinematic representation of the left arm of the robot (seen from its back), and Figure 5b shows an exploded view of the left arm assembly. Each articulated arm of the mobile robot has four degrees of freedom (DOF): three in the shoulder and one in the elbow, whereas the hand is fixed.

Figure 5.

Representation of the left articulated arm of the mobile robot (seen from its back): (a) simplified kinematic representation and (b) exploded view of the parts of the arm.

Figure 6 shows the images of the implementation of the arm based on Dynamixel AX-12 digital servomotors. The motor in link 1 (motor 1, driving joint 1) is housed inside a part that is fixed to the central structure of the robot, whereas the motors in links 2, 3, and 4 (motors 2, 3, and 4, driving joints 2, 3, and 4) are visible. Figure 6a shows a partial view of the piece that holds motor 1, which is visible through a lateral aperture of the holding piece. A 3D printed piece is used to fix motor 2 (labelled as “5” in the images) to the shaft of motor 1. Figure 6b shows a lateral view of the robot shoulder, with motor 1 partially visible in the background and motors 2 and 3 in the foreground. Again, 3D printed parts are used to fix motor 3 to the shaft of motor 2, and also to fix the robot arm to the shaft of motor 3. Figure 6c shows a lateral view of the elbow of the robot, in which the forearm is fixed to the shaft of motor 4. As shown in Figure 6a, the arm mechanism includes a counterweight of 0.1 kg to reduce the torque that the motor in link 1 has to deliver when the arm is extended and pointing forward. Finally, Table 3 describes the standard Denavit–Hartenberg (DH) [67] parameters for each link of the left articulated arm of the robot that are used to control the motion of the arm. The right articulated arm operates symmetrically.

Figure 6.

Detail of the implementation of the arm: (a) partial view of the structure that holds motor 1, with motors 2 and 3 attached; (b) lateral view of the robot shoulder, with motor 1 in the background and motors 2 and 3 in the foreground; and (c) lateral view of the robot elbow, with motor 4.

Table 3.

Denavit–Hartenberg parameters for each link of the left articulated arm of the robot.
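To illustrate how standard DH parameters are typically used to compute the pose of the hand from the joint angles, the following minimal sketch chains the standard DH homogeneous transform of each link. It is a generic example and not the control code of the APR-02: the rows in `PLACEHOLDER_DH` are hypothetical values and do not reproduce Table 3, and the function names are introduced only for this example.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Homogeneous transform of one link using the standard DH convention."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(joint_angles, dh_rows):
    """Chain the link transforms and return the 4x4 pose of the last frame.

    dh_rows: one (theta_offset, d, a, alpha) tuple per link.
    """
    pose = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, (theta_offset, d, a, alpha) in zip(joint_angles, dh_rows):
        pose = matmul4(pose, dh_transform(theta + theta_offset, d, a, alpha))
    return pose


# Hypothetical placeholder rows (theta_offset, d, a, alpha); NOT the values of Table 3.
PLACEHOLDER_DH = [
    (0.0, 0.0, 0.0, math.pi / 2),           # shoulder joint 1
    (math.pi / 2, 0.0, 0.0, math.pi / 2),   # shoulder joint 2
    (0.0, 0.25, 0.0, -math.pi / 2),         # shoulder joint 3 and upper arm
    (0.0, 0.0, 0.25, 0.0),                  # elbow and forearm
]

pose = forward_kinematics([0.0, 0.0, 0.0, 0.0], PLACEHOLDER_DH)
hand_position = [row[3] for row in pose[:3]]
print(hand_position)
```

The last column of the returned matrix gives the position of the hand frame, and the upper-left 3 × 3 block gives its orientation; replacing the placeholder rows with the actual parameters of Table 3 would give the corresponding pose of the robot hand.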

3.2. Selection of the Forearm and Hand

The second selection of mobile robot improvements was focused on the implementation of the arm, forearm, and hand in the APR-02 mobile robot. The alternatives considered represented feasible color implementations for the arm and forearm and different non-motorized hand implementations. The implementation of a motorized hand [68,69] will be addressed in future works tailored to specific practical applications. Table 4 shows the six alternatives considered to implement the arm, forearm, and hand: original, the original arm implemented in the APR-01 mobile robot using aluminum tubes with a white plastic cover and a symbolic compact hand 3D printed using green acrylonitrile butadiene styrene (ABS); flexible gripper, a proposal that replaces the original handle with a gripper (designed to hold empty slim cans by friction) 3D printed using blue flexible thermoplastic polyurethane (TPU); iconic, a proposal that includes three circular fingers in the original handle 3D printed using a flexible red TPU; fixed (skin), a simplified representation of a complete human hand with fixed fingers 3D printed using black polylactic acid (PLA) and an arm and forearm 3D printed using skin-colored PLA; fixed (white), a similar implementation with the arm and forearm 3D printed using white PLA; and articulated, a complete representation of an articulated hand 3D printed using black TPU that can be manually adjusted to reproduce different hand gestures and with a forearm 3D printed using skin-colored PLA.

Table 4.

Alternatives considered to implement the forearm, arm and hand and votes received.

Table 4 shows the votes received by each alternative. The majority results of this survey selected the fixed (white) alternative based on the use of white arm and forearm sections 3D printed using white PLA and a fixed hand 3D printed using black PLA. Please note that the images presented in Table 4 are not able to reproduce the visual impression of a live observation of the proposed alternatives. The results of this survey agree with the Uncanny Valley theory in the sense that the use of human-like skin colors in the materials used to implement the arms does not automatically increase the affinity or social acceptability of the alternative. In this case, the uniform color obtained with FDM/FFF 3D printing was not able to replicate the effect of human skin (see Table 4).

3.3. Selection of the Robot Face

The APR-02 mobile robot prototype was designed to include a bigger 12″ vertical tactile screen in order to combine the display of information and the display of a representative face. The next selection was focused on the face or information to be displayed on the screen of the APR-02 mobile robot prototype. As a reference, Duffy [16] classified head constructions for robots according to their anthropomorphism as human-like, iconic, and abstract. The human-like category includes designs that are as close as possible to a human head; iconic refers to designs with a minimum set of features that are still expressive, such as the ones used in comics; and abstract corresponds to mechanistic functional designs, with minimal resemblance to humans. Duffy [16] concluded that robotic head designs should be rather mechanistic and iconic in order to avoid the Uncanny Valley effect and successfully implement robotic facial expressions.

Table 5 shows the five alternatives considered to implement the face of the mobile robot: information, HAL, iconic, robot, and synthetic human. These alternatives have been evaluated considering three feasible mobile robot applications: guide, assistant, and security. Table 5-information consists of using the screen of the mobile robot to represent figures, maps, messages, and some buttons for interaction. Table 5-HAL represents a framed circular red light freely inspired by the physical representation of the HAL 9000 computer with fictional artificial intelligence appearing in the film “2001: A Space Odyssey” directed by Stanley Kubrick in 1968. Table 5-iconic is a basic representation of a neutral humanoid face with big eyes and a long line as a mouth. The use of big eyes is inspired by Bateson et al. [70], who concluded that the use of big eyes in images tends to induce more pro-social behavior. In this case, the eyes, eyelashes, and mouth of this iconic face have been created using simple lines, rectangles, and circles that can be easily animated to reproduce emotions and facial gestures. Table 5-robot presents an artistic 2D humanoid robot face with male facial features, a representation that allows the future development of basic animations and gesticulations by modifying the projection of the image on the screen. Finally, Table 5-synthetic human presents a photorealistic artificial human with female facial features created with Metahuman Creator [71], a cloud-streamer application for 3D and real-time digital human creation using the game engine Unreal Engine [72] developed by Epic Games [73]. At this development stage, this face is only a static photorealistic image of an unreal woman available as a featured example in the demonstration package of Metahuman Creator. 
This framework allows the development of sophisticated photorealistic 3D animations and gesticulations of male and female genders, although this development may require an implementation effort comparable to the development of a computer game. The use of male and female facial features in the robot and in the photorealistic face were selected as random alternatives for these two implementations.

Table 5.

Alternatives considered to implement the face of the mobile robot in different applications and votes received.

Table 5 also shows the votes for each alternative. The most voted alternatives in this survey were the iconic face for the mobile robot operating as a guide or as a personal assistant, and the HAL face for the mobile robot performing a security application. These results agree with Duffy [16] and the Uncanny Valley theory in the sense that a simpler iconic representation of the face is preferred over a complex photorealistic implementation. These results also agree with Bartneck et al. [64], who stated that negative impressions caused by robots might be avoided by matching the appearance of the robot with its real abilities. Finally, the tailored use of different faces depending on the application also agrees with Kanda et al. [13], who concluded that the interpretation of the appearance of a mobile robot is based on subjective impressions that may change depending on the circumstances or application. Since this survey, the iconic face has been the one mainly used in the new applications of the APR-02 mobile robot [10], and the HAL and information faces have been used specifically in security applications [74].

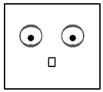

3.4. Selection of the Neutral Facial Expression

It is known that the human face plays a very important role in the expression of character, emotion, and identity [75], and that 55% of affective information is transferred by facial expression [76]. The next selection of human-like improvements was focused on the selection of a neutral facial expression for the iconic face to represent a neutral emotion that must encourage interaction with the mobile robot. Table 6 shows the four alternatives considered to implement the neutral facial expression: plain, slightly smiley, short, and short smiley. The plain expression uses a simple horizontal line to represent the mouth, the slightly smiley expression introduces a slight curvature in the long line of the mouth, the short expression uses a short horizontal line as a mouth, and the short smiley introduces a slight curvature in the short mouth.

Table 6.

Alternatives considered to represent a neutral facial expression and votes received.

Table 6 includes the votes received by each alternative. The results of this survey selected by a vast majority the use of the slightly smiley face to represent a neutral facial expression or neutral emotion that encourages interaction. This result agrees with the conclusion of De Paulo et al. [77], who stated that people like expressive people more than passive people, although Kanda et al. [13] concluded that the interpretation of the appearance of a mobile robot is based on subjective impressions that may change depending on the circumstances or specific application.

3.5. Selection of the Mouth Animation to Generate Sounds

The next selection was focused on the mouth animation displayed on the iconic face of the mobile robot when generating beeps and speech. The generation of different beeps in a mobile robot is very effective to express short common salutations, provide acceptance feedback, and enhance the effect of warning messages, especially in the cases in which a spoken response from the user is not expected or processed by the mobile robot.

Table 7 shows two frames for each of the alternative animations considered: wave-line, rectangular, and rounded. The case of no animation in the mouth was not considered. The wave-line animation implements a random sequence of two oval-shaped sine curves created using two frequencies and two amplitudes; the rectangular animation is a random sequence of four rectangles with two widths and two heights; and the rounded animation is a random sequence of two asymmetric oval shapes with two different widths and heights.

Table 7.

Alternatives considered to implement the animation of the mouth and votes received.

The results of the survey are shown in Table 7. The participants selected by a vast majority the use of wave-line for the generation of beeps, and rounded for the generation of speech. These results suggest that participants associate an unusual beep sound with an unusual animation, and speech with a human-like animation.

3.6. Selection of the Proximity Feedback Expressed with the Mouth

A human–robot interaction can be a new experience in which the human often has no clear reference about the correct proximity to the mobile robot, so it is quite normal for a person interacting with a robot to have doubts about it. The next selection was focused on providing visual feedback about the distance of the user in front of the mobile robot. Table 8 shows the two alternatives considered: mouth gestures and none. Table 8-none provides no proximity feedback, with the face of the robot showing the neutral expression. Table 8-mouth gestures provides visual proximity feedback by subtle changes in the mouth of the iconic face depending on the distance between the mobile robot and the user: the face has a neutral expression when the user is far, it is smiley when the user is detected and is in an optimal distance range, and it is sad or unhappy when the user is too close to the mobile robot. The distance thresholds applied to classify the distances are based on the scientific literature and some practical experimentation. In 1966, Hall [78] classified social distances into four zones: public (>3.65 m), social (1.21–3.65 m), personal (0.45–1.21 m), and intimate (0–0.45 m). Walters et al. [79] analyzed these distances in some human–robot interaction experiments and concluded that up to 40% of the participants approached the robot at less than 0.45 m, which is the intimate (or threatening) social distance. In the APR project, the diameter of the base of the mobile robot (Ø = 0.54 m) was used as a reference to propose three interaction zones: public, personal, and intimate. In the public zone (distance > 1.20 m), the mobile robot is able to detect the user but assumes that there is no planned interaction, so it shows the habitual neutral facial expression. In the personal zone (distance between 0.60 and 1.20 m), the mobile robot visually acknowledges the detection of the user by changing its mouth expression from neutral to smiley. 
Finally, the intimate zone (distance < 0.60 m) is considered too close and potentially dangerous, so the mobile robot visually responds by changing its mouth expression to sad or unhappy.
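The three interaction zones described above can be summarized as a simple distance classifier. The following Python fragment is only a minimal sketch under stated assumptions: the function and constant names are hypothetical, and the treatment of the exact boundary values (0.60 m and 1.20 m are assigned to the personal zone) is an assumption, since the text does not specify it.

```python
# Zone boundaries reported for the APR project, in metres.
INTIMATE_LIMIT_M = 0.60
PERSONAL_LIMIT_M = 1.20

# Mouth expression displayed on the iconic face in each zone.
MOUTH_BY_ZONE = {
    "public": "neutral",
    "personal": "smiley",
    "intimate": "sad",
}


def classify_zone(distance_m):
    """Map a user distance to one of the three interaction zones."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < INTIMATE_LIMIT_M:
        return "intimate"
    # Assumption: both boundary values belong to the personal zone.
    if distance_m <= PERSONAL_LIMIT_M:
        return "personal"
    return "public"


def mouth_expression(distance_m):
    """Return the mouth expression for a user at the given distance."""
    return MOUTH_BY_ZONE[classify_zone(distance_m)]
```

In such a sketch, the distance passed to `mouth_expression` would be the closest user distance estimated from the onboard sensors, and the returned label would select the mouth drawn on the screen.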

Table 8.

Alternatives considered to implement the proximity feedback and votes received.

Table 8 shows the votes for each alternative. The use of mouth gestures to provide proximity feedback to the user was selected by a vast majority. These results agree with Saunderson et al. [80], who concluded that the facial expression of a robot influences several factors such as empathy, likeability, perceived intelligence, and acceptance. Specifically, Gonsior et al. [81] found that when a robot shows social expressions in response to the expression of the user, participants rate user acceptance, likeability, and perceived intelligence higher than when the robot shows a neutral face.

The development of this proximity feedback in the mobile robot APR-02 has been implemented as a reflex action based on the distance information provided by the onboard LIDAR and RGB-D cameras. The frontal onboard LIDAR is always active when the mobile robot is in operation and the two frontal RGB-D cameras [25] are active depending on the application or expected interaction with the mobile robot.

3.7. Selection of the Gaze Feedback

During a social interaction between people, eye-gaze occurs automatically. Eye-gaze behavior by itself can be used to express interpersonal intent and dominance, create social bonds, and even manipulate [82,83]. Saunderson et al. [80] stated that the appropriate use of eye-gaze in a robot has the potential to support or accomplish numerous objectives in human–robot interaction. The next selection of mobile robot improvements was focused on the implementation of gaze feedback with the iconic face displayed on the mobile robot.

Table 9 shows the two alternatives considered: eye-gaze feedback and none. Table 9-none does not provide feedback, so the gaze of the robot is fixed to the front. Table 9-eye-gaze feedback provides visual gaze feedback consisting of following the user with the eyes of the robot.

Table 9.

Alternatives considered to implement the eye-gaze feedback and votes received.

The results of this survey are shown in Table 9. Participants selected by a vast majority the use of eye-gaze feedback to express that the user has the attention of the mobile robot. This result agrees with the conclusion of Babel et al. [84], who stated that users preferred eye-gaze feedback in some dialog initiatives such as small talk. Specifically, Belkaid et al. [85] investigated the influence of the gaze of a humanoid robot on the way people strategically reason in social decision-making, concluding that robot gaze acts as a strong social signal for humans, affecting response times, decision threshold, neural synchronization, choice strategies, and sensitivity to outcomes. Finally, Chidambaram et al. [86] demonstrated that the levels of persuasion of a robot were higher when implementing eye-gaze during the interaction with a human.

The gaze feedback in the mobile robot APR-02 has also been implemented as a reflex action based on the distance information provided by the onboard LIDAR and RGB-D cameras. In this case, the LIDAR provides long-range planar information on the location of the legs of potential users, and this information can be used to gaze at moving objects around the mobile robot. Additionally, the images provided by the frontal RGB-D cameras are analyzed using the Viola and Jones procedure [87] in order to detect the face and the relative height of a user in front of the mobile robot and to precisely guide its gaze. However, the continuous application of this algorithm in a battery-operated mobile robot consumes a lot of energy, so it is executed discontinuously until the first detection of a face. In this direction, Montaño et al. [88] presented a recent literature review (2010–2021) focused on the suitability of the application of computer vision algorithms in the development of assistive robotic applications. Finally, the ongoing improvement of the APR-02 mobile robot since this study includes new realistic and precise eye-gaze feedback focused on enhancing the sense of attention from the mobile robot [89].
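As an illustration of how a planar LIDAR detection could guide the gaze, the following Python fragment converts a target position into a normalized horizontal pupil offset. This is only a sketch and not the implementation used in the APR-02: the function name, the reference frame (x forward, y to the left of the robot), and the 45° saturation angle are all assumptions introduced for the example.

```python
import math

# Assumed angle at which the pupils reach the edge of the eyes.
MAX_GAZE_ANGLE_DEG = 45.0


def gaze_offset(target_x_m, target_y_m):
    """Return a horizontal pupil offset in [-1, 1] for a planar target.

    Assumed robot frame: x points forward and y points to the left,
    so a positive offset means looking towards the robot's left.
    """
    angle_deg = math.degrees(math.atan2(target_y_m, target_x_m))
    # Saturate the gaze when the target is outside the assumed range.
    clamped = max(-MAX_GAZE_ANGLE_DEG, min(MAX_GAZE_ANGLE_DEG, angle_deg))
    return clamped / MAX_GAZE_ANGLE_DEG
```

A user standing directly in front of the robot produces an offset of zero, whereas a user detected at the side saturates the offset at the edge of the eye.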

3.8. Selection of Arm Gestures

The implementation of a practical application with the mobile robot APR-02 may require giving convincing advice to people in emergency situations. The next selection was focused on the automatic generation of arm gestures in order to complement and increase the persuasive effect of spoken messages. Table 10 shows the two alternatives considered: random arm gestures and none. Table 10-random arm gestures refers to the use of random (or cyclic pseudo-random) extroverted arm gestures while the mobile robot provides spoken messages. Table 10-none refers to the absence of gestures while providing spoken messages.

Table 10.

Alternatives considered to implement the arm gestures and votes received.

Table 10 shows the results of this survey. The use of random arm gestures was selected by a vast majority. The result of this experiment agrees with the reviews of Saunderson et al. [80] and Chidambaram et al. [86], which concluded that a robot is more persuasive when it also uses arm gestures. Algorithm 1 shows the implementation of these random gestures: arm gesticulation is based on a combination of a limited sequence of primary predefined movements with a small random offset proposed to increase arm motion variability. Additionally, a fixed sequence of arm movements tailored to implement planned warning messages is also registered [74], while the Denavit–Hartenberg parameters listed in Table 3 allow the graphical simulation of the arms and the estimation of the angles of the servo motors (servo_angles) in order to implement specific gesticulations. Although limited, this rough gesticulation is very convincing and increases the perceived anthropomorphism of the APR-02 mobile robot. Finally, the implementation of natural and precise arm gestures and the possible synchronization of the arms and the mouth will be addressed in future works [90].

Algorithm 1. Pseudo-code used to animate the arms.

    activate_arm_servos();
    [gesture_index, random_increment] = initialize_variables();
    while gesture_activated do
        gesture_index = gesture_index + 1;
        [servo_angles] = get_gesture(gesture_index, random_increment);
        set_arm_angles(servo_angles);
        if gesture_index > limit then
            gesture_index = 1;
        end
    end
    deactivate_arm_servos();
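The loop of Algorithm 1 can be sketched in Python as follows. This is a hedged illustration and not the code running on the robot: the gesture table `PRIMARY_GESTURES`, the three-servo layout, the ±5° offset range, and the choice of drawing a new offset at every step are assumptions, and the hardware calls that activate and deactivate the servos are omitted.

```python
import random

# Hypothetical primary gestures: one tuple of servo angles (degrees) per pose.
PRIMARY_GESTURES = [
    (0.0, 10.0, 20.0),
    (30.0, 45.0, 10.0),
    (60.0, 20.0, 40.0),
]


def get_gesture(gesture_index, random_increment):
    """Return the servo angles of a primary gesture plus a small offset."""
    base = PRIMARY_GESTURES[gesture_index]
    return [angle + random_increment for angle in base]


def animate_arms(steps, set_arm_angles, max_offset_deg=5.0, rng=None):
    """Cycle through the primary gestures for a fixed number of steps.

    `set_arm_angles` is a callback that receives the list of servo angles;
    the fixed step count stands in for `while gesture_activated`.
    """
    rng = rng or random.Random()
    gesture_index = 0
    for _ in range(steps):
        # Assumption: a new small offset is drawn for every gesture.
        random_increment = rng.uniform(-max_offset_deg, max_offset_deg)
        set_arm_angles(get_gesture(gesture_index, random_increment))
        # Wrap around once the last primary gesture has been used.
        gesture_index = (gesture_index + 1) % len(PRIMARY_GESTURES)
```

For example, `animate_arms(4, print)` prints four sets of servo angles, repeating the first primary gesture on the fourth step with a different offset.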

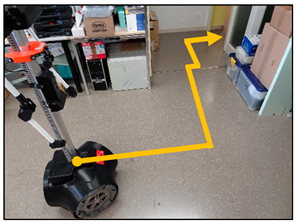

3.9. Selection of the Motion Planning Strategy

One of the factors that may heavily influence the social acceptance of a mobile robot is the perception of its motion [19]. The next selection was focused on the motion strategy implemented in the mobile robot. Table 11 shows the two alternatives considered: straight path and smooth path. Table 11-straight path represents the motion implemented originally in the mobile robot APR-01, which was composed of straight displacements and rotations. Table 11-smooth path represents a spline-based interpolation [91] of a planned trajectory. The details of the implementation of this smooth path procedure, which is based on the information gathered from the LIDAR and on the full motion capabilities of an omnidirectional mobile robot, are available in [40].
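The difference between both strategies can be illustrated with a generic spline that passes through the planned waypoints. The following Python sketch uses a uniform Catmull-Rom spline, which is an assumption chosen here for simplicity and is not necessarily the interpolation applied in [40,91]; the function names and the sampling density are also hypothetical.

```python
def catmull_rom_point(p0, p1, p2, p3, t):
    """Evaluate a uniform Catmull-Rom segment between p1 and p2, t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (c - a) * t
               + (2 * a - 5 * b + 4 * c - d) * t2
               + (3 * b - a - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def smooth_path(waypoints, samples_per_segment=10):
    """Interpolate planar waypoints with a curve that passes through them."""
    if len(waypoints) < 2:
        return list(waypoints)
    # Duplicate the end points so the first and last segments are defined.
    padded = [waypoints[0], *waypoints, waypoints[-1]]
    path = []
    for i in range(len(padded) - 3):
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            path.append(catmull_rom_point(*padded[i:i + 4], t))
    path.append(tuple(waypoints[-1]))
    return path
```

With the waypoints `[(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]`, a straight path requires a stop and a rotation at the corner, whereas the interpolated path rounds the corner while still visiting the three waypoints.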

Table 11.

Alternatives considered to implement the motion planning strategy and votes received.

Table 11 shows that the smooth path was selected by a vast majority in this survey. This result agrees with Guillén et al. [19], who concluded that mobile robots following smooth paths are more socially accepted. In general, the omnidirectional motion performance of the APR-02 was perceived as fascinating by the participants in the demonstrations.

3.10. Selection of the Nominal Translational Velocity

The last selection was focused on the nominal translational velocity of the mobile robot. The perception of the velocity of motion of a mobile robot is another factor that influences the social acceptance of a mobile robot operating in a shared space with humans [19]. The omnidirectional motion system of the mobile robot APR-02 was designed to be able to reproduce the speed of a person walking fast (up to 1.3 m/s). Table 12 shows the two translational velocities considered in the last selection: low (0.15 m/s) and medium (0.30 m/s), which is approximately half the speed of a person walking normally. The use of a faster velocity in a crowded environment is not considered adequate and was not evaluated. In this case, the demonstration of the application of these two translational velocities in the mobile robot APR-02 was performed in the same conventional classroom where the outreach activity took place. Table 12 shows images of this empty classroom. The arrow in the images shown in Table 12 depicts the starting point of the trajectory, from which the mobile robot moves along the corridor to the end of the classroom, leaves the room through the door, explores the hall in front of the classroom, and returns to the starting point moving along the corridor. The demonstrations were performed first with the low translational velocity and then with the medium translational velocity.

Table 12.

Alternatives considered to define the translational nominal velocity and votes received.

Table 12 shows that the low nominal translational velocity was selected by a majority in this survey. The result of this survey was probably biased by the fact that this was the first contact or experience of the students with a human-sized mobile robot moving autonomously in a classroom. During the first displacement at low velocity, the participants made positive spontaneous comments, but during the second displacement at medium velocity the spontaneous comments expressed surprise and most of the participants moved from their seats to get a better view of the displacement of the mobile robot in the small area of the corridor (maybe expecting a crash). Therefore, the result of this survey may have been biased by the level of confidence in the mobile robot. The medium nominal translational velocity has recently been used in some practical applications of the mobile robot APR-02 [10,29,74].

The result of this survey is in accordance with the conclusions of Shi et al. [92], who studied the perceived safety levels associated with robot distance and velocity, finding that participants felt safer when a robot approached them at slow speeds. Other authors assessed the translational velocity from different points of view. For instance, Tsui et al. [93] explored the perceived politeness of and trust in a robot with different passing behaviors: stopping, slowing down, maintaining velocity, and speeding up. When the robot passed participants, it was perceived as more polite and more trustworthy with the stopping behavior, even though the participants stated that the robot should move like humans: keeping a constant speed for passing, and slowing down or stopping only if it is justified. Alternatively, Butler et al. [94] found that participants felt more comfortable when the robot slowed down upon approach and made trajectory adjustments to avoid people while moving, rather than stopping and adjusting course before continuing. These approaching alternatives will be analyzed in future works.

4. Conclusions and Future Works

This paper describes the evolution of the Assistant Personal Robot (APR) project developed at the Robotics Laboratory of the University of Lleida, from the APR-01 to the APR-02 mobile robot prototypes. This paper describes the first APR-01 prototype developed, the basic hardware improvement, the specific anthropomorphic improvements, and the preference surveys conducted with engineering students from the same university in order to maximize the affinity with the final APR-02 mobile robot prototype as a way to avoid the Uncanny Valley effect and optimize the efforts and resources invested in this evolution. The alternatives surveyed have covered the implementation of different anthropomorphic improvements of the design of the arms, the implementation of the arm and of the symbolic hand, the selection of a face for the mobile robot, the selection of a neutral facial expression, the selection of an animation for the mouth, the application of proximity feedback, the application of gaze feedback, the use of arm gestures, the selection of the motion planning strategy, and the selection of the nominal translational velocity.

The final conclusion is that the development of preference surveys during the implementation of the APR-02 prototype has greatly influenced its evolution and has contributed to increase the perceived affinity and social acceptability of the prototype, which is now ready to develop assistance applications in dynamic workspaces.

The surveys conducted to select the new improvements incorporated in the mobile robot APR-02 prototype have some limitations. On the one hand, participants in the surveys were motivated science and technology university students who represented a limited and biased part of society, although their impressions and expectations are a valuable source of information in this prototype stage development. On the other hand, social acceptability is a culture-specific concept [17,95], so results are biased by the regional cultural background of the participants in the surveys.

Subject to funding, future works with the APR-02 mobile robot will evaluate the implementation of robot personalities (imitating human behavior) [96] and the practical acceptability of the design improvements when developing specific applications. Future works will also focus on overcoming the current limitations of the mobile robot, through the incorporation of a versatile motorized hand [21], the synchronization of the animation of the mouth and the movements of the arms, and the analysis of different motion strategies in the presence of people. Finally, future works will explore the benefits of applying context prediction [97] and the possibility of exploiting context histories [98] for recording the data generated during long-term use of the robot as a way to enhance the services provided. Specifically, comparing predicted contexts with context histories can allow better prediction of motion trajectories, detection of irregularities, and enhancement of the interaction with the user based on past experiences. Additionally, the development of the concept of context-awareness [99] will enable making decisions based on the profile and present context of the users in order to foster the development of new services and applications that shift repetitive activities to robots [100].

Author Contributions

Investigation, J.P., E.R. and E.C.; software, E.C.; writing—original draft, E.R.; writing—review and editing, J.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Accessibility Chair promoted by Indra, Adecco Foundation and the University of Lleida Foundation from 2006 to 2018. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166.

- Busch, B.; Maeda, G.; Mollard, Y.; Demangeat, M.; Lopes, M. Postural optimization for an ergonomic human-robot interaction. In Proceedings of the International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 1–8.

- Young, J.E.; Hawkins, R.; Sharlin, E.; Igarashi, T. Toward Acceptable Domestic Robots: Applying Insights from Social Psychology. Int. J. Soc. Robot. 2009, 1, 95–108.

- Saldien, J.; Goris, K.; Vanderborght, B.; Vanderfaeillie, J.; Lefeber, D. Expressing Emotions with the Social Robot Probo. Int. J. Soc. Robot. 2010, 2, 377–389.

- Conti, D.; Di Nuovo, S.; Buono, S.; Di Nuovo, A. Robots in Education and Care of Children with Developmental Disabilities: A Study on Acceptance by Experienced and Future Professionals. Int. J. Soc. Robot. 2017, 9, 51–62.

- Casas, J.A.; Céspedes, N.; Cifuentes, C.A.; Gutierrez, L.F.; Rincón-Roncancio, M.; Múnera, M. Expectation vs. reality: Attitudes towards a socially assistive robot in cardiac rehabilitation. Appl. Sci. 2019, 9, 4651.

- Rossi, S.; Santangelo, G.; Staffa, M.; Varrasi, S.; Conti, D.; Di Nuovo, A. Psychometric Evaluation Supported by a Social Robot: Personality Factors and Technology Acceptance. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018.

- Wada, K.; Shibata, T.; Saito, T.; Tanie, K. Effects of robot-assisted activity for elderly people and nurses at a day service center. Proc. IEEE 2004, 92, 1780–1788.

- Kozima, H.; Michalowski, M.P.; Nakagawa, C. Keepon: A playful robot for research, therapy, and entertainment. Int. J. Soc. Robot. 2009, 1, 3–18.

- Palacín, J.; Clotet, E.; Martínez, D.; Martínez, D.; Moreno, J. Extending the Application of an Assistant Personal Robot as a Walk-Helper Tool. Robotics 2019, 8, 27.

- Lin, C.; Šabanović, S.; Dombrowski, L.; Miller, A.D.; Brady, E.; MacDorman, K.F. Parental Acceptance of Children’s Storytelling Robots: A Projection of the Uncanny Valley of AI. Front. Robot. AI 2021, 8, 579993.

- De Graaf, M.M.A.; Allouch, S.B. Exploring influencing variables for the acceptance of social robots. Robot. Auton. Syst. 2013, 61, 1476–1486.

- Kanda, T.; Miyashita, T.; Osada, T.; Haikawa, Y.; Ishiguro, H. Analysis of Humanoid Appearances in Human–Robot Interaction. IEEE Trans. Robot. 2008, 24, 725–735.

- Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social robots for education: A review. Sci. Robot. 2018, 3, eaat5954.

- Murphy, J.; Gretzel, U.; Pesonen, J. Marketing robot services in hospitality and tourism: The role of anthropomorphism. J. Travel Tour. Mark. 2019, 36, 784–795.

- Duffy, B.R. Anthropomorphism and the social robot. Robot. Auton. Syst. 2003, 42, 177–190.

- Gasteiger, N.; Ahn, H.S.; Gasteiger, C.; Lee, C.; Lim, J.; Fok, C.; Macdonald, B.A.; Kim, G.H.; Broadbent, E. Robot-Delivered Cognitive Stimulation Games for Older Adults: Usability and Acceptability Evaluation. ACM Trans. Hum. Robot. Interact. 2021, 10, 1–18.

- Elazzazi, M.; Jawad, L.; Hilfi, M.; Pandya, A. A Natural Language Interface for an Autonomous Camera Control System on the da Vinci Surgical Robot. Robotics 2022, 11, 40.

- Guillén Ruiz, S.; Calderita, L.V.; Hidalgo-Paniagua, A.; Bandera Rubio, J.P. Measuring Smoothness as a Factor for Efficient and Socially Accepted Robot Motion. Sensors 2020, 20, 6822.

- Martín, A.; Pulido, J.C.; González, J.C.; García-Olaya, A.; Suárez, C. A Framework for User Adaptation and Profiling for Social Robotics in Rehabilitation. Sensors 2020, 20, 4792.

- Ribeiro, T.; Gonçalves, F.; Garcia, I.S.; Lopes, G.; Ribeiro, A.F. CHARMIE: A Collaborative Healthcare and Home Service and Assistant Robot for Elderly Care. Appl. Sci. 2021, 11, 7248.

- Calderita, L.V.; Vega, A.; Barroso-Ramírez, S.; Bustos, P.; Núñez, P. Designing a Cyber-Physical System for Ambient Assisted Living: A Use-Case Analysis for Social Robot Navigation in Caregiving Centers. Sensors 2020, 20, 4005.

- Muthugala, M.A.V.J.; Jayasekara, A.G.B.P. MIRob: An intelligent service robot that learns from interactive discussions while handling uncertain information in user instructions. In Proceedings of the Moratuwa Engineering Research Conference (MERCon), Moratuwa, Sri Lanka, 5–6 April 2016; pp. 397–402.

- Garcia-Salguero, M.; Gonzalez-Jimenez, J.; Moreno, F.A. Human 3D Pose Estimation with a Tilting Camera for Social Mobile Robot Interaction. Sensors 2019, 19, 4943.

- Pathi, S.K.; Kiselev, A.; Kristoffersson, A.; Repsilber, D.; Loutfi, A. A Novel Method for Estimating Distances from a Robot to Humans Using Egocentric RGB Camera. Sensors 2019, 19, 3142.

- Hellou, M.; Gasteiger, N.; Lim, J.Y.; Jang, M.; Ahn, H.S. Personalization and Localization in Human-Robot Interaction: A Review of Technical Methods. Robotics 2021, 10, 120.

- Holland, J.; Kingston, L.; McCarthy, C.; Armstrong, E.; O’Dwyer, P.; Merz, F.; McConnell, M. Service Robots in the Healthcare Sector. Robotics 2021, 10, 47.

- Penteridis, L.; D’Onofrio, G.; Sancarlo, D.; Giuliani, F.; Ricciardi, F.; Cavallo, F.; Greco, A.; Trochidis, I.; Gkiokas, A. Robotic and Sensor Technologies for Mobility in Older People. Rejuvenation Res. 2017, 20, 401–410.

- Clotet, E.; Martínez, D.; Moreno, J.; Tresanchez, M.; Palacín, J. Assistant Personal Robot (APR): Conception and Application of a Tele-Operated Assisted Living Robot. Sensors 2016, 16, 610. [Google Scholar] [CrossRef] [Green Version]

- Moreno, J.; Clotet, E.; Lupiañez, R.; Tresanchez, M.; Martínez, D.; Pallejà, T.; Casanovas, J.; Palacín, J. Design, Implementation and Validation of the Three-Wheel Holonomic Motion System of the Assistant Personal Robot (APR). Sensors 2016, 16, 1658. [Google Scholar] [CrossRef] [Green Version]

- Palacín, J.; Martínez, D.; Rubies, E.; Clotet, E. Suboptimal Omnidirectional Wheel Design and Implementation. Sensors 2021, 21, 865. [Google Scholar] [CrossRef]

- Palacín, J.; Rubies, E.; Clotet, E. Systematic Odometry Error Evaluation and Correction in a Human-Sized Three-Wheeled Omnidirectional Mobile Robot Using Flower-Shaped Calibration Trajectories. Appl. Sci. 2022, 12, 2606. [Google Scholar] [CrossRef]

- Ishiguro, H.; Ono, T.; Imai, M.; Maeda, T.; Kanda, T.; Nakatsu, R. Robovie: An interactive humanoid robot. Ind. Robot. Int. J. 2001, 28, 498–503. [Google Scholar] [CrossRef]

- Cavallo, F.; Aquilano, M.; Bonaccorsi, M.; Limosani, R.; Manzi, A.; Carrozza, M.C.; Dario, P. On the design, development and experimentation of the ASTRO assistive robot integrated in smart environments. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013. [Google Scholar]

- Iwata, H.; Sugano, S. Design of Human Symbiotic Robot TWENDY-ONE. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009.

- Mukai, T.; Hirano, S.; Nakashima, H.; Kato, Y.; Sakaida, Y.; Guo, S.; Hosoe, S. Development of a Nursing-Care Assistant Robot RIBA That Can Lift a Human in its Arms. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010.

- Lunenburg, J.; Van Den Dries, S.; Elfring, J.; Janssen, R.; Sandee, J.; van de Molengraft, M. Tech United Eindhoven Team Description 2013. In Proceedings of the RoboCup International Symposium, Eindhoven, The Netherlands, 1 July 2013.

- Kittmann, R.; Fröhlich, T.; Schäfer, J.; Reiser, U.; Weißhardt, F.; Haug, A. Let me Introduce Myself: I am Care-O-bot 4, a Gentleman Robot. In Proceedings of the Mensch und Computer 2015, Stuttgart, Germany, 6–9 September 2015.

- Palacín, J.; Martínez, D. Improving the Angular Velocity Measured with a Low-Cost Magnetic Rotary Encoder Attached to a Brushed DC Motor by Compensating Magnet and Hall-Effect Sensor Misalignments. Sensors 2021, 21, 4763.

- Palacín, J.; Rubies, E.; Clotet, E.; Martínez, D. Evaluation of the Path-Tracking Accuracy of a Three-Wheeled Omnidirectional Mobile Robot Designed as a Personal Assistant. Sensors 2021, 21, 7216.

- Rubies, E.; Palacín, J. Design and FDM/FFF Implementation of a Compact Omnidirectional Wheel for a Mobile Robot and Assessment of ABS and PLA Printing Materials. Robotics 2020, 9, 43.

- Moreno, J.; Clotet, E.; Tresanchez, M.; Martínez, D.; Casanovas, J.; Palacín, J. Measurement of Vibrations in Two Tower-Typed Assistant Personal Robot Implementations with and without a Passive Suspension System. Sensors 2017, 17, 1122.

- Gardecki, A.; Podpora, M. Experience from the operation of the Pepper humanoid robots. In Proceedings of the Progress in Applied Electrical Engineering (PAEE), Koscielisko, Poland, 25–30 June 2017; pp. 1–6.

- Cooper, S.; Di Fava, A.; Vivas, C.; Marchionni, L.; Ferro, F. ARI: The Social Assistive Robot and Companion. In Proceedings of the 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020.

- Kompaï Robots. Available online: http://kompairobotics.com/robot-kompai/ (accessed on 27 May 2022).

- Zenbo Robots. Available online: https://zenbo.asus.com/ (accessed on 27 May 2022).

- Weiss, A.; Hannibal, G. What makes people accept or reject companion robots? In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference (PETRA ’18), Corfu, Greece, 26–29 June 2018.

- Qian, J.; Zi, B.; Wang, D.; Ma, Y.; Zhang, D. The Design and Development of an Omni-Directional Mobile Robot Oriented to an Intelligent Manufacturing System. Sensors 2017, 17, 2073.

- Pages, J.; Marchionni, L.; Ferro, F. Tiago: The modular robot that adapts to different research needs. In Proceedings of the International workshop on robot modularity, IROS, Daejeon, Korea, 9–14 October 2016.

- Yamaguchi, U.; Saito, F.; Ikeda, K.; Yamamoto, T. HSR, Human Support Robot as Research and Development Platform. In Proceedings of the 6th International Conference on Advanced Mechatronics (ICAM2015), Tokyo, Japan, 5–8 December 2015.

- Jiang, C.; Hirano, S.; Mukai, T.; Nakashima, H.; Matsuo, K.; Zhang, D.; Honarvar, H.; Suzuki, T.; Ikeura, R.; Hosoe, S. Development of High-functionality Nursing-care Assistant Robot ROBEAR for Patient-transfer and Standing Assistance. In Proceedings of the JSME Conference on Robotics and Mechatronics, Kyoto, Japan, 17–19 May 2015.

- Hendrich, N.; Bistry, H.; Zhang, J. Architecture and Software Design for a Service Robot in an Elderly-Care Scenario. Engineering 2015, 1, 27–35.

- Yamazaki, K.; Ueda, R.; Nozawa, S.; Kojima, M.; Okada, K.; Matsumoto, K.; Ishikawa, M.; Shimoyama, I.; Inaba, M. Home-Assistant Robot for an Aging Society. Proc. IEEE 2012, 100, 2429–2441.

- Ammi, M.; Demulier, V.; Caillou, S.; Gaffary, Y.; Tsalamlal, Y.; Martin, J.-C.; Tapus, A. Haptic Human-Robot Affective Interaction in a Handshaking Social Protocol. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI ’15), Portland, OR, USA, 2–5 March 2015.

- Tapus, A.; Tapus, C.; Mataric, M. Long term learning and online robot behavior adaptation for individuals with physical and cognitive impairments. Springer Tracts Adv. Robot. 2010, 62, 389–398.

- Bohren, J.; Rusu, R.B.; Jones, E.G.; Marder-Eppstein, E.; Pantofaru, C.; Wise, M.; Mösenlechner, L.; Meeussen, W.; Holzer, S. Towards Autonomous Robotic Butlers: Lessons Learned with the PR2. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

- Kume, Y.; Kawakami, H. Development of Power-Motion Assist Technology for Transfer Assist Robot. Matsushita Tech. J. 2008, 54, 50–52. (In Japanese)

- Zhang, Z.; Cao, Q.; Zhang, L.; Lo, C. A CORBA-based cooperative mobile robot system. Ind. Robot. Int. J. 2009, 36, 36–44.

- Kanda, S.; Murase, Y.; Sawasaki, N.; Asada, T. Development of the service robot “enon”. J. Robot. Soc. Jpn. 2006, 24, 288–291. (In Japanese)

- Palacín, J.; Martínez, D.; Rubies, E.; Clotet, E. Mobile Robot Self-Localization with 2D Push-Broom LIDAR in a 2D Map. Sensors 2020, 20, 2500.

- Mori, M. The Uncanny Valley. Energy 1970, 7, 33–35. (In Japanese)

- Mori, M.; MacDorman, K.F.; Kageki, N. The Uncanny Valley [From the Field]. IEEE Robot. Autom. Mag. 2012, 19, 98–100.

- Bartneck, C.; Kanda, T.; Ishiguro, H.; Hagita, N. My Robotic Doppelgänger–A Critical Look at the Uncanny Valley. In Proceedings of the International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009.

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81.

- Weiss, A.; Bartneck, C. Meta analysis of the usage of the Godspeed Questionnaire Series. In Proceedings of the 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015.

- Ortiz-Torres, G.; Castillo, P.; Reyes-Reyes, J. An Actuator Fault Tolerant Control for VTOL vehicles using Fault Estimation Observers: Practical validation. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; pp. 1054–1062.

- Denavit, J.; Hartenberg, R.S. A kinematic notation for lower-pair mechanisms based on matrices. J. Appl. Mech. 1955, 22, 215–221.

- Pérez Vidal, A.F.; Rumbo Morales, J.Y.; Ortiz Torres, G.; Sorcia Vázquez, F.D.J.; Cruz Rojas, A.; Brizuela Mendoza, J.A.; Rodríguez Cerda, J.C. Soft Exoskeletons: Development, Requirements, and Challenges of the Last Decade. Actuators 2021, 10, 166.

- Nikafrooz, N.; Leonessa, A. A Single-Actuated, Cable-Driven, and Self-Contained Robotic Hand Designed for Adaptive Grasps. Robotics 2021, 10, 109.

- Bateson, M.; Callow, L.; Holmes, J.R.; Redmond Roche, M.L.; Nettle, D. Do Images of ‘Watching Eyes’ Induce Behaviour That Is More Pro-Social or More Normative? A Field Experiment on Littering. PLoS ONE 2013, 8, e82055.

- Metahuman Creator. Available online: https://www.unrealengine.com/en-US/digital-humans (accessed on 16 February 2021).

- Unreal Engine. Available online: https://www.unrealengine.com (accessed on 16 February 2021).

- Epic Games. Available online: https://www.epicgames.com/site/en-US/home (accessed on 16 February 2021).

- Palacín, J.; Martínez, D.; Clotet, E.; Pallejà, T.; Burgués, J.; Fonollosa, J.; Pardo, A.; Marco, S. Application of an Array of Metal-Oxide Semiconductor Gas Sensors in an Assistant Personal Robot for Early Gas Leak Detection. Sensors 2019, 19, 1957.

- Cole, J. About Face; MIT Press: Cambridge, MA, USA, 1998.

- Mehrabian, A. Communication without words. In Communication Theory, 2nd ed.; Mortensen, C.D., Ed.; Routledge: Oxfordshire, UK, 2008.

- DePaulo, B.M. Nonverbal behavior and self-presentation. Psychol. Bull. 1992, 111, 203–243.

- Hall, E.T. The Hidden Dimension; Doubleday Company: Chicago, IL, USA, 1966.

- Walters, M.L.; Dautenhahn, K.; te Boekhorst, R.; Koay, K.L.; Kaouri, C.; Woods, S.; Nehaniv, C.; Lee, D.; Werry, I. The influence of subjects’ personality traits on personal spatial zones in a human–robot interaction experiment. In Proceedings of the IEEE international workshop on robot and human interactive communication, Nashville, TN, USA, 13–15 August 2005; pp. 347–352.

- Saunderson, S.; Nejat, G. How Robots Influence Humans: A Survey of Nonverbal Communication in Social Human-Robot Interaction. Int. J. Soc. Robot. 2019, 11, 575–608.

- Gonsior, B.; Sosnowski, S.; Mayer, C.; Blume, J.; Radig, B.; Wollherr, D.; Kühnlenz, K. Improving aspects of empathy and subjective performance for HRI through mirroring facial expressions. In Proceedings of the IEEE international workshop on robot and human interactive communication, Atlanta, GA, USA, 31 July–3 August 2011; pp. 350–356.

- Cook, M. Gaze and mutual gaze in social encounters. Am. Sci. 1977, 65, 328–333.

- Mazur, A.; Rosa, E.; Faupel, M.; Heller, J.; Leen, R.; Thurman, B. Physiological aspects of communication via mutual gaze. Am. J. Sociol. 1980, 86, 50–74.

- Babel, F.; Kraus, J.; Miller, L.; Kraus, M.; Wagner, N.; Minker, W.; Baumann, M. Small Talk with a Robot? The Impact of Dialog Content, Talk Initiative, and Gaze Behavior of a Social Robot on Trust, Acceptance, and Proximity. Int. J. Soc. Robot. 2021, 13, 1485–1498.

- Belkaid, M.; Kompatsiari, K.; De Tommaso, D.; Zablith, I.; Wykowska, A. Mutual gaze with a robot affects human neural activity and delays decision-making processes. Sci. Robot. 2021, 6, eabc5044.

- Chidambaram, V.; Chiang, Y.-H.; Mutlu, B. Designing persuasive robots: How robots might persuade people using vocal and nonverbal cues. In Proceedings of the ACM/IEEE international conference human–robot interaction, Boston, MA, USA, 5–8 March 2012; pp. 293–300.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I.

- Montaño-Serrano, V.M.; Jacinto-Villegas, J.M.; Vilchis-González, A.H.; Portillo-Rodríguez, O. Artificial Vision Algorithms for Socially Assistive Robot Applications: A Review of the Literature. Sensors 2021, 21, 5728.

- Rubies, E.; Palacín, J.; Clotet, E. Enhancing the Sense of Attention from an Assistance Mobile Robot by Improving Eye-Gaze Contact from Its Iconic Face Displayed on a Flat Screen. Sensors 2022, 22, 4282.

- Aly, A.; Tapus, A. Towards an intelligent system for generating an adapted verbal and nonverbal combined behavior in human–robot interaction. Auton. Robot. 2016, 40, 193–209.

- Cao, Z.; Bryant, D.; Molteno, T.C.A.; Fox, C.; Parry, M. V-Spline: An Adaptive Smoothing Spline for Trajectory Reconstruction. Sensors 2021, 21, 3215.

- Shi, D.; Collins, E.G., Jr.; Goldiez, B.; Donate, A.; Liu, X.; Dunlap, D. Human-aware robot motion planning with velocity constraints. In Proceedings of the International symposium on collaborative technologies and systems, Irvine, CA, USA, 19–23 May 2008; pp. 490–497.

- Tsui, K.M.; Desai, M.; Yanco, H.A. Considering the Bystander’s perspective for indirect human–robot interaction. In Proceedings of the 5th ACM/IEEE International Conference on Human Robot Interaction, Osaka, Japan, 2–5 March 2010; pp. 129–130.

- Butler, J.T.; Agah, A. Psychological effects of behavior patterns of a mobile personal robot. Auton. Robot. 2001, 10, 185–202.

- Charisi, V.; Davison, D.; Reidsma, D.; Evers, V. Evaluation methods for user-centered child-robot interaction. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 545–550.

- Luo, L.; Ogawa, K.; Ishiguro, H. Identifying Personality Dimensions for Engineering Robot Personalities in Significant Quantities with Small User Groups. Robotics 2022, 11, 28.

- Dey, A.K.; Abowd, G.D.; Salber, D. A conceptual framework and a toolkit for supporting the rapid prototyping of context-aware applications. Hum.-Comput. Interact. 2001, 16, 97–166.

- Rosa, J.H.; Barbosa, J.L.V.; Kich, M.; Brito, L. A Multi-Temporal Context-aware System for Competences Management. Int. J. Artif. Intell. Educ. 2015, 25, 455–492.