Mimicking Facial Expressions Facilitates Working Memory for Stimuli in Emotion-Congruent Colours

Abstract

1. Introduction

2. Experiment 1

2.1. Method

2.1.1. Transparency and Openness

2.1.2. Participants

2.1.3. Materials and Stimuli

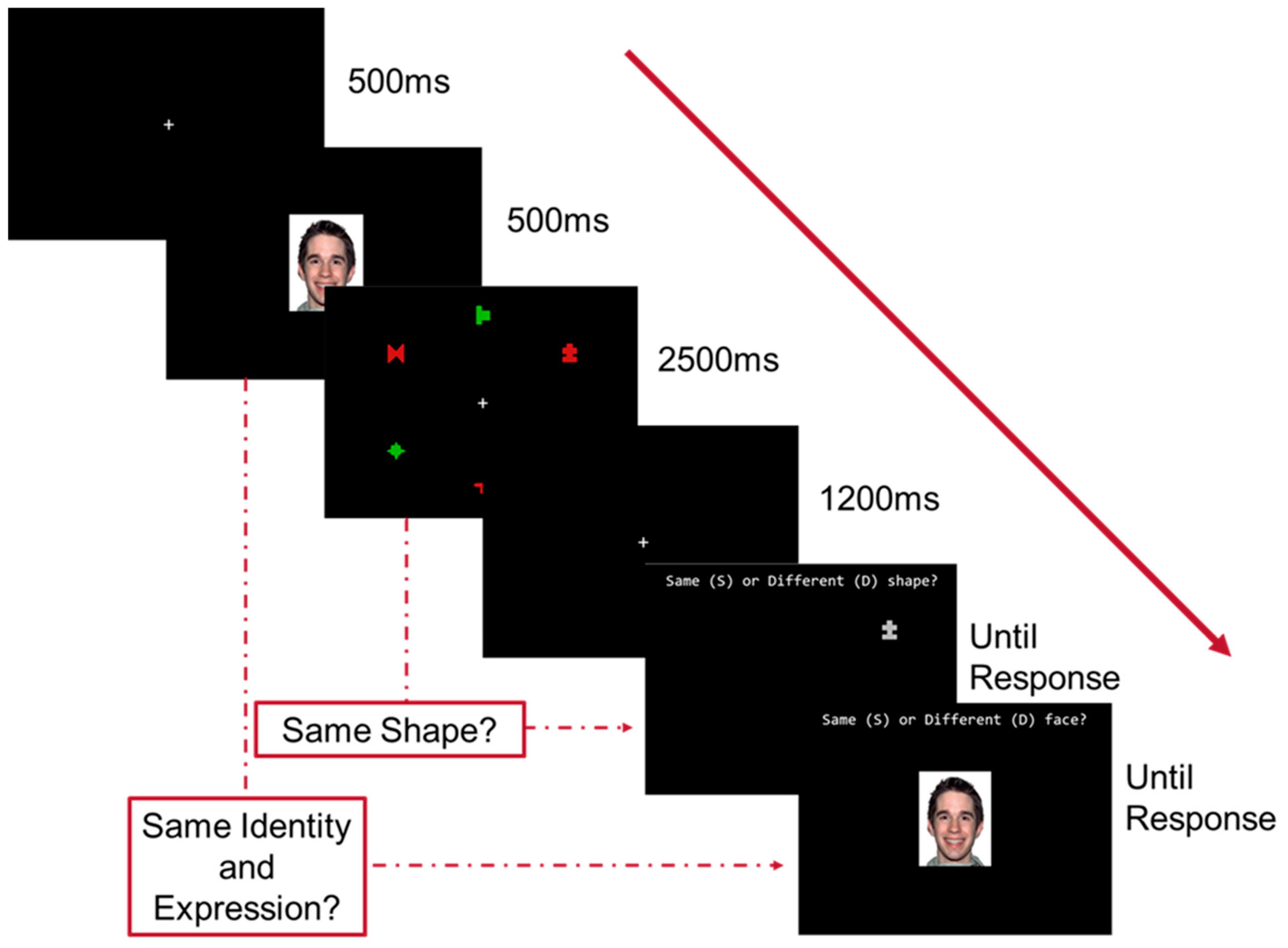

2.1.4. Design and Procedure

2.1.5. Statistical Analysis

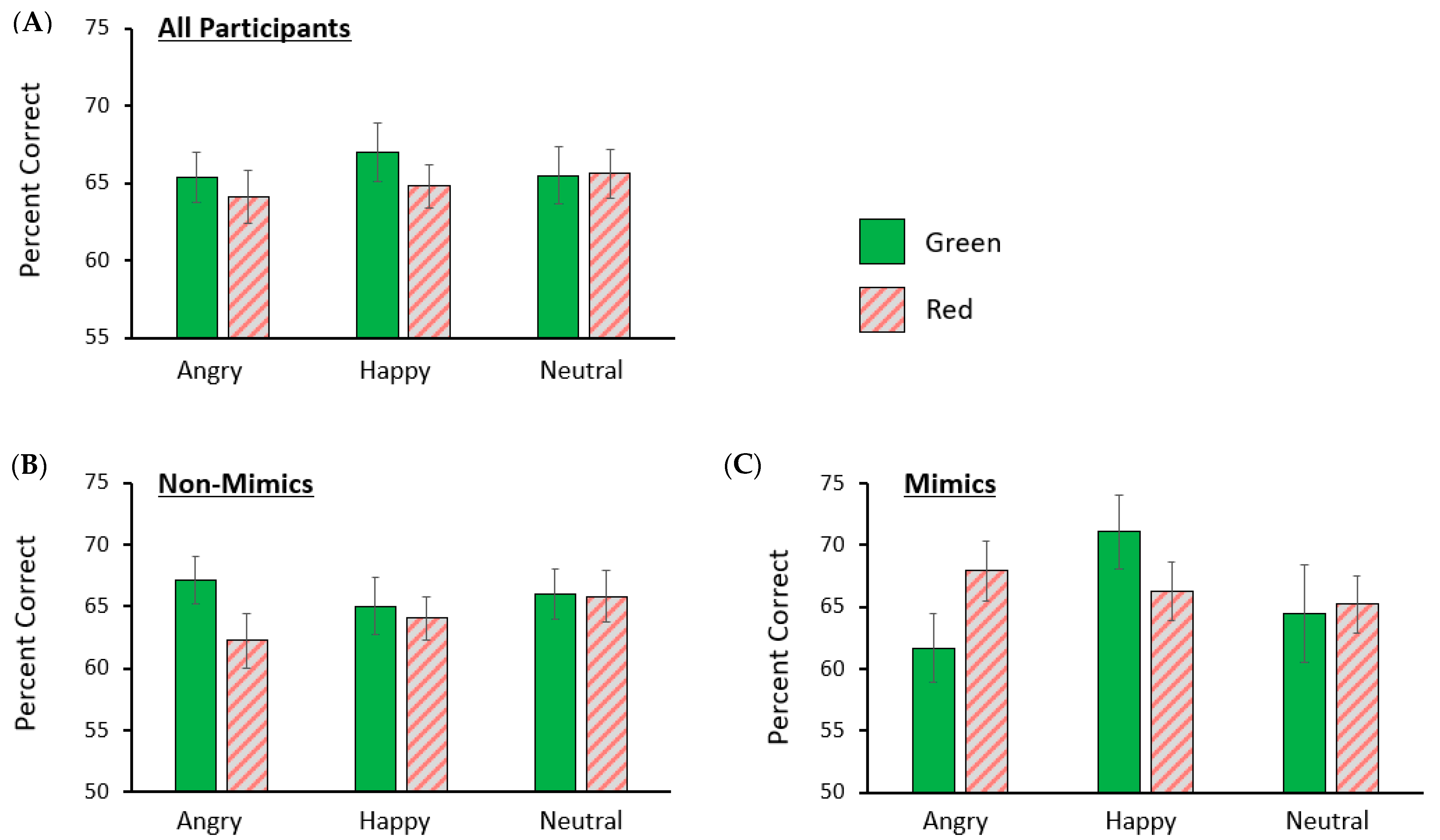

2.2. Results

Post hoc Analysis

2.3. Discussion

3. Experiment 2

3.1. Method

3.1.1. Participants

3.1.2. Design and Procedure

3.2. Results

3.3. Discussion

4. General Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sivananthan, T.; Most, S.B.; Curby, K.M. Mimicking Facial Expressions Facilitates Working Memory for Stimuli in Emotion-Congruent Colours. Vision 2024, 8, 4. https://doi.org/10.3390/vision8010004
