Capacity and Allocation across Sensory and Short-Term Memories

Abstract

1. Introduction

2. Materials and Methods

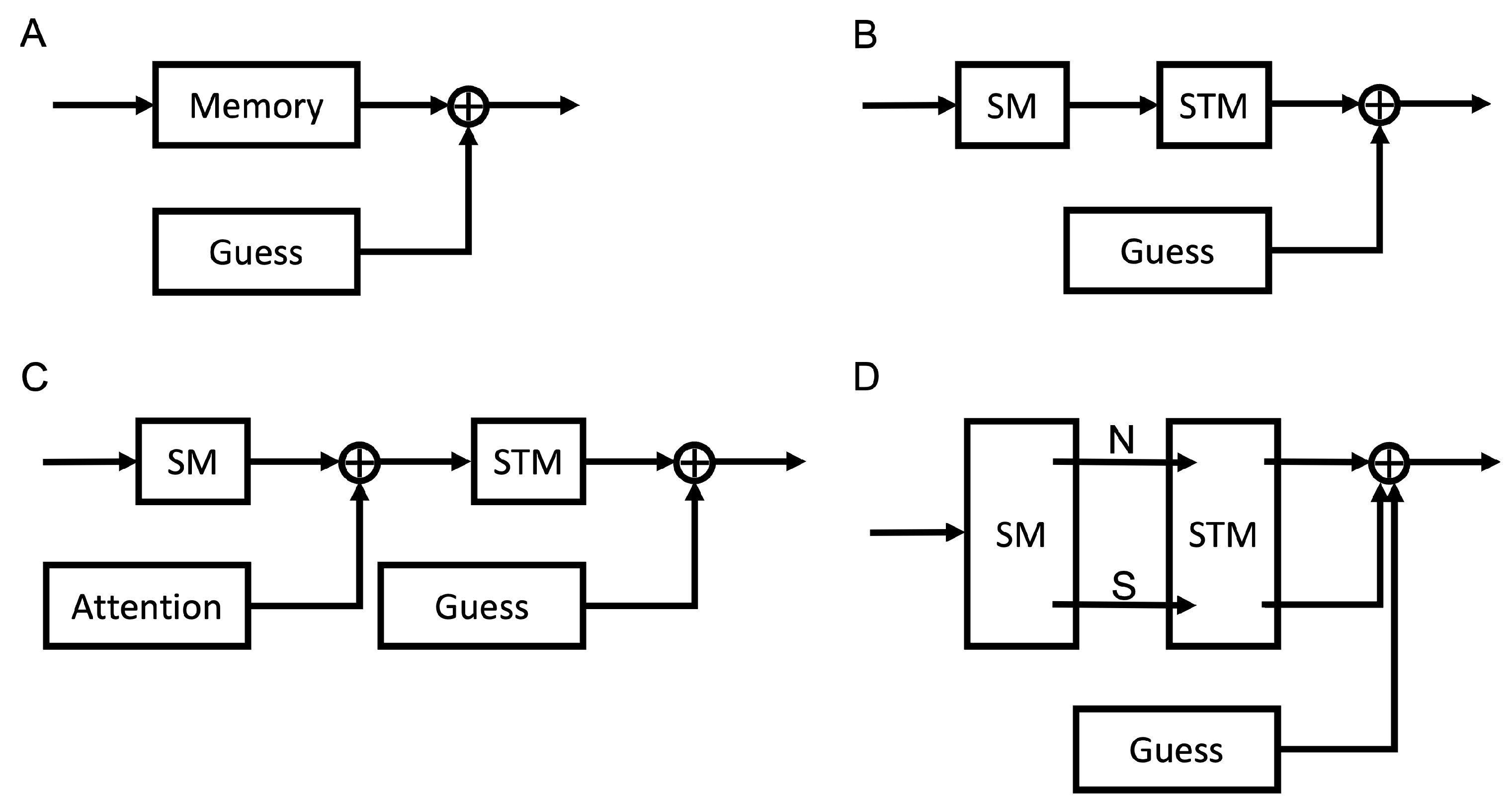

2.1. Models

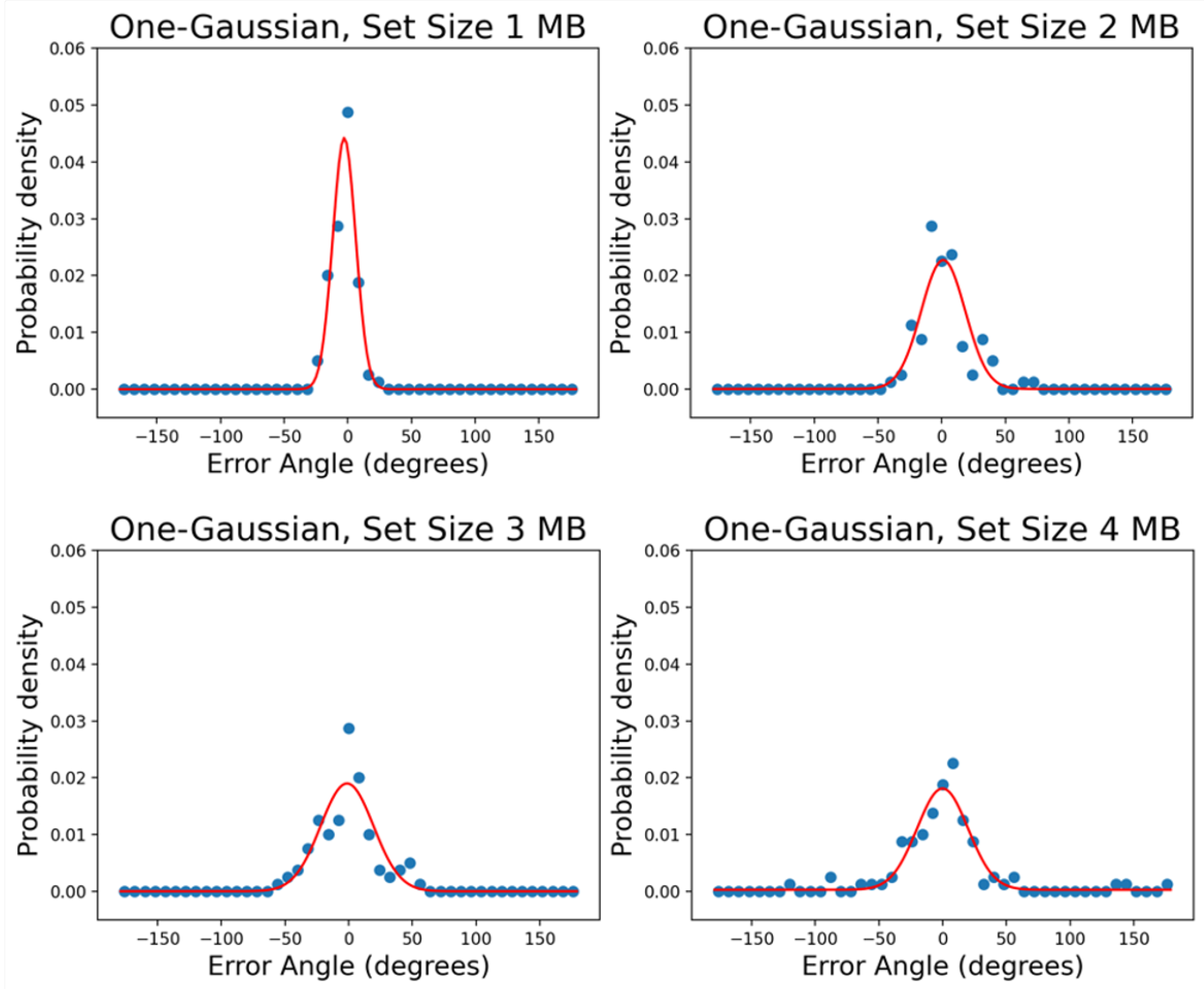

2.1.1. One Gaussian + Uniform Mixture Model

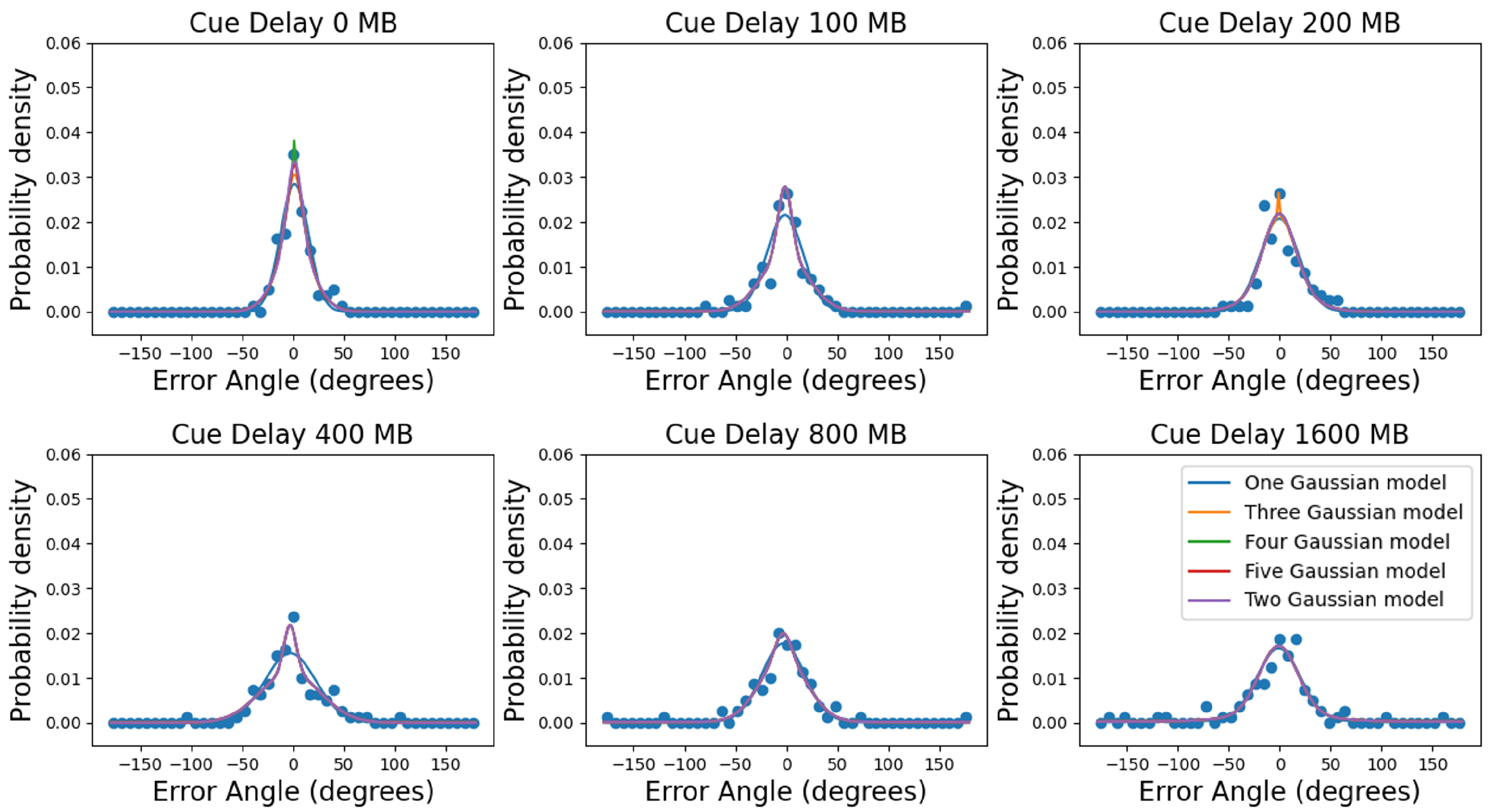

2.1.2. Multiple Gaussians Models

3. Results

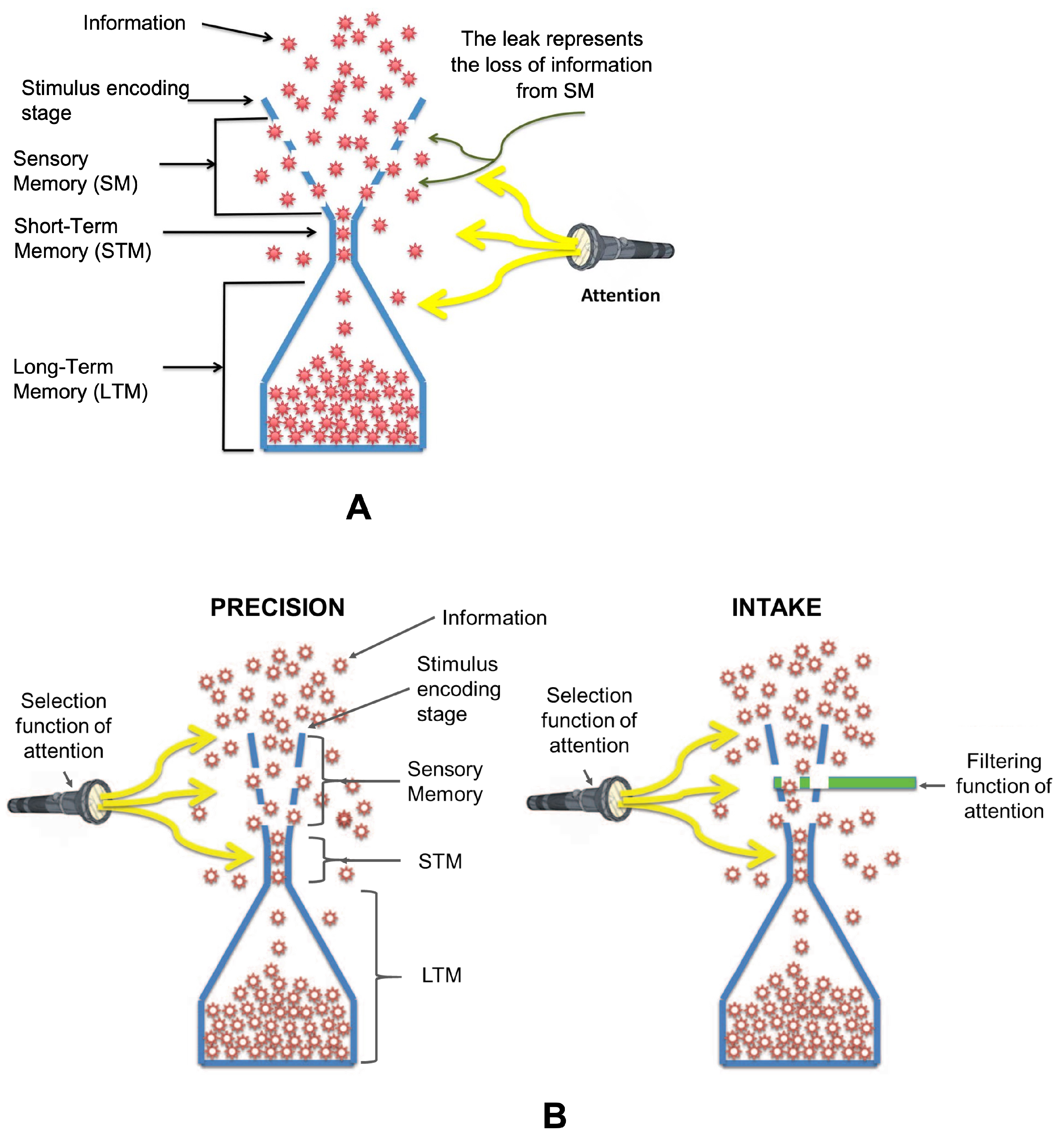

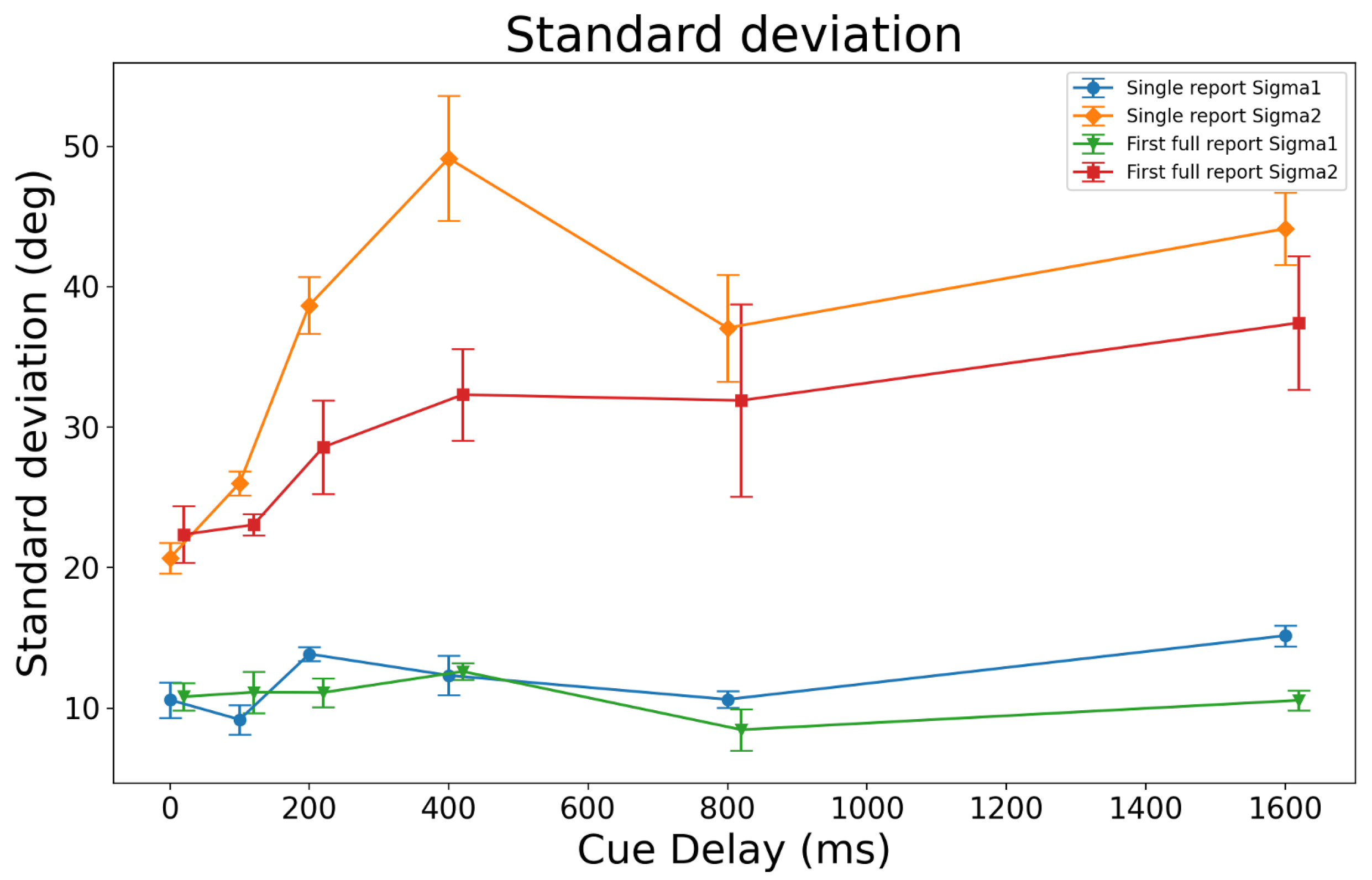

3.1. Testing the Leaky Flask Model on the New Dataset

3.2. Multiple Gaussians Models

4. Discussion

4.1. Confirmation of the Leaky Flask Model

4.2. A Few Gaussians Are Adequate to Accurately Model the Data for Recall of Direction of Motion

4.3. Modeling Different Configurations of Storage and Recall

4.4. Limitations of the Current Study

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations


Appendix A. Fitting Methods

References

- Averbach, E.; Coriell, A.S. Short-term memory in vision. Bell Syst. Tech. J. 1961, 40, 309–328.

- Coltheart, M. Iconic memory. Philos. Trans. R. Soc. London. Ser. B Biol. Sci. 1983, 302, 283–294.

- Haber, R.N. The impending demise of the icon: A critique of the concept of iconic storage in visual information processing. Behav. Brain Sci. 1983, 6, 1–11.

- Sperling, G. The information available in brief visual presentations. Psychol. Monogr. Gen. Appl. 1960, 74, 1–29.

- Alvarez, G.A.; Cavanagh, P. The capacity of visual short-term memory is set both by visual information load and by number of objects. Psychol. Sci. 2004, 15, 106–111.

- Baddeley, A. Working Memory; Oxford University Press: Oxford, UK, 1986.

- Baddeley, A.; Logie, R.H. Models of Working Memory; Cambridge University Press: Cambridge, UK, 1999; pp. 28–61.

- Bays, P.M.; Husain, M. Dynamic shifts of limited working memory resources in human vision. Science 2008, 321, 851–854.

- Cowan, N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behav. Brain Sci. 2001, 24, 87–114.

- Cowan, N. Working Memory Capacity; Psychology Press: East Sussex, UK, 2005.

- Cowan, N. The magical mystery four: How is working memory capacity limited, and why? Curr. Dir. Psychol. Sci. 2010, 19, 51–57.

- Fukuda, K.; Awh, E.; Vogel, E.K. Discrete capacity limits in visual working memory. Curr. Opin. Neurobiol. 2010, 20, 177–182.

- Luck, S.J.; Vogel, E.K. The capacity of visual working memory for features and conjunctions. Nature 1997, 390, 279–281.

- Pasternak, T.; Greenlee, M.W. Working memory in primate sensory systems. Nat. Rev. Neurosci. 2005, 6, 97–107.

- Rensink, R.A. The dynamic representation of scenes. Vis. Cogn. 2000, 7, 17–42.

- Vogel, E.K.; Woodman, G.F.; Luck, S.J. Storage of features, conjunctions, and objects in visual working memory. J. Exp. Psychol. Hum. Percept. Perform. 2001, 27, 92–114.

- Wilken, P.; Ma, W.J. A detection theory account of change detection. J. Vis. 2004, 4, 1120–1135.

- Zhang, W.; Luck, S.J. Discrete fixed-resolution representations in visual working memory. Nature 2008, 453, 233–235.

- Baddeley, A. Working Memory, Thought, and Action; Oxford University Press: Oxford, UK, 2007.

- Marois, R.; Ivanoff, J. Capacity limits of information processing in the brain. Trends Cogn. Sci. 2005, 9, 296–305.

- Öğmen, H.; Ekiz, O.; Huynh, D.; Bedell, H.E.; Tripathy, S.P. Bottlenecks of motion processing during a visual glance: The leaky flask model. PLoS ONE 2013, 8, e83671.

- Tripathy, S.P.; Öğmen, H. Sensory memory is allocated exclusively to the current event segment. Front. Psychol. 2018, 9, 1435.

- Bays, P.M.; Catalao, R.F.; Husain, M. The precision of visual working memory is set by allocation of a shared resource. J. Vis. 2009, 9, 1–11.

- Luck, S.J.; Vogel, E.K. Visual working memory capacity: From psychophysics and neurobiology to individual differences. Trends Cogn. Sci. 2013, 17, 391–400.

- Zhang, W.; Luck, S.J. The number and quality of representations in working memory. Psychol. Sci. 2011, 22, 1434–1441.

- Fougnie, D.; Suchow, J.W.; Alvarez, G.A. Variability in the quality of visual working memory. Nat. Commun. 2012, 3, 1229.

- Machizawa, M.G.; Goh, C.C.W.; Driver, J. Human visual short-term memory precision can be varied at will when the number of retained items is low. Psychol. Sci. 2012, 23, 554–559.

- Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956, 63, 81–97.

- Pashler, H. Familiarity and visual change detection. Percept. Psychophys. 1988, 44, 369–378.

- Ma, W.J.; Husain, M.; Bays, P.M. Changing concepts of working memory. Nat. Neurosci. 2014, 17, 347–356.

- Palmer, J. Attentional limits on the perception and memory of visual information. J. Exp. Psychol. Hum. Percept. Perform. 1990, 16, 332–350.

- Gorgoraptis, N.; Catalao, R.F.; Bays, P.M.; Husain, M. Dynamic updating of working memory resources for visual objects. J. Neurosci. 2011, 31, 8502–8511.

- van den Berg, R.; Shin, H.; Chou, W.C.; George, R.; Ma, W.J. Variability in encoding precision accounts for visual short-term memory limitations. Proc. Natl. Acad. Sci. USA 2012, 109, 8780–8785.

- van den Berg, R.; Awh, E.; Ma, W.J. Factorial comparison of working memory models. Psychol. Rev. 2014, 121, 124–149.

- Emrich, S.M.; Lockhart, H.A.; Al-Aidroos, N. Attention mediates the flexible allocation of visual working memory resources. J. Exp. Psychol. Hum. Percept. Perform. 2017, 43, 1454–1465.

- Huynh, D.; Tripathy, S.P.; Bedell, H.E.; Öğmen, H. Stream specificity and asymmetries in feature binding and content-addressable access in visual encoding and memory. J. Vis. 2015, 15, 14.

- Huynh, D.; Tripathy, S.P.; Bedell, H.E.; Öğmen, H. The reference frame for encoding and retention of motion depends on stimulus set size. Atten. Percept. Psychophys. 2017, 79, 888–910.

- Pylyshyn, Z.W.; Storm, R.W. Tracking multiple independent targets: Evidence for a parallel tracking mechanism. Spat. Vis. 1988, 3, 179–197.

- Tripathy, S.P.; Narasimhan, S.; Barrett, B.T. On the effective number of tracked trajectories in normal human vision. J. Vis. 2007, 7, 2.

- Tripathy, S.P.; Barrett, B.T. Gross misperceptions in the perceived trajectories of moving dots. Perception 2003, 32, 1403–1408.

- Tripathy, S.P.; Barrett, B.T. Severe loss of positional information when detecting deviations in multiple trajectories. J. Vis. 2004, 4, 4.

- Tripathy, S.P.; Howard, C.J. Multiple trajectory tracking. Scholarpedia 2012, 7, 11287.

- Shooner, C.; Tripathy, S.P.; Bedell, H.E.; Öğmen, H. High-capacity, transient retention of direction-of-motion information for multiple moving objects. J. Vis. 2010, 10, 8.

- Fougnie, D.; Asplund, C.L.; Marois, R. What are the units of storage in visual working memory? J. Vis. 2010, 10, 27.

- Atkinson, R.C.; Shiffrin, R.M. Human memory: A proposed system and its control processes. Psychol. Learn. Motiv. 1968, 2, 89–195.

- Atkinson, R.C.; Shiffrin, R.M. The control of short-term memory. Sci. Am. 1971, 225, 82–91.

- Lu, Z.L.; Dosher, B.A. External noise distinguishes mechanisms of attention. Neurobiol. Atten. 2005, 38, 448–453.

- Chen, Y.; Martinez-Conde, S.; Macknik, S.L.; Bereshpolova, Y.; Swadlow, H.A.; Alonso, J.M. Task difficulty modulates the activity of specific neuronal populations in primary visual cortex. Nat. Neurosci. 2008, 11, 974–982.

- Dolcos, F.; Miller, B.; Kragel, P.; Jha, A.; McCarthy, G. Regional brain differences in the effect of distraction during the delay interval of a working memory task. Brain Res. 2007, 1152, 171–181.

- Gazzaley, A.; Nobre, A.C. Top-down modulation: Bridging selective attention and working memory. Trends Cogn. Sci. 2012, 16, 129–135.

- Huang, J.; Sekuler, R. Attention protects the fidelity of visual memory: Behavioral and electrophysiological evidence. J. Neurosci. 2010, 30, 13461–13471.

- Makovski, T.; Jiang, Y.V. Distributing versus focusing attention in visual short-term memory. Psychon. Bull. Rev. 2007, 14, 1072–1078.

- Polk, T.A.; Drake, R.M.; Jonides, J.J.; Smith, M.R.; Smith, E.E. Attention enhances the neural processing of relevant features and suppresses the processing of irrelevant features in humans: A functional magnetic resonance imaging study of the Stroop task. J. Neurosci. 2008, 28, 13786–13792.

- Reynolds, J.H.; Chelazzi, L. Attentional modulation of visual processing. Annu. Rev. Neurosci. 2004, 27, 611–647.

- Sreenivasan, K.K.; Jha, A.P. Selective attention supports working memory maintenance by modulating perceptual processing of distractors. J. Cogn. Neurosci. 2007, 19, 32–41.

- Tombu, M.N.; Asplund, C.L.; Dux, P.E.; Godwin, D.; Martin, J.W.; Marois, R. A unified attentional bottleneck in the human brain. Proc. Natl. Acad. Sci. USA 2011, 108, 13426–13431.

- Yoon, J.H.; Curtis, C.E.; D’Esposito, M. Differential effects of distraction during working memory on delay-period activity in the prefrontal cortex and the visual association cortex. NeuroImage 2006, 29, 1117–1126.

- Cutzu, F.; Tsotsos, J.K. The selective tuning model of attention: Psychophysical evidence for a suppressive annulus around an attended item. Vis. Res. 2003, 43, 205–219.

- Yoo, S.A.; Tsotsos, J.K.; Fallah, M. The attentional suppressive surround: Eccentricity, location-based and feature-based effects and interactions. Front. Neurosci. 2018, 12, 1–14.

- He, S.; Cavanagh, P.; Intriligator, J. Attentional resolution and the locus of visual awareness. Nature 1996, 383, 334–337.

- Intriligator, J.; Cavanagh, P. The spatial resolution of visual attention. Cogn. Psychol. 2001, 43, 171–216.

- Gegenfurtner, K.R.; Sperling, G. Information transfer in iconic memory experiments. J. Exp. Psychol. Hum. Percept. Perform. 1993, 19, 845–866.

- Breitmeyer, B.; Öğmen, H. Visual Masking: Time Slices Through Conscious and Unconscious Vision; Oxford University Press: Oxford, UK, 2006.

- Kurby, C.A.; Zacks, J.M. Segmentation in the perception and memory of events. Trends Cogn. Sci. 2008, 12, 72–79.

- Zacks, J.M.; Swallow, K.M. Event segmentation. Curr. Dir. Psychol. Sci. 2007, 16, 80–84.

- Zokaei, N.; Gorgoraptis, N.; Bahrami, B.; Bays, P.M.; Husain, M. Precision of working memory for visual motion sequences and transparent motion surfaces. J. Vis. 2011, 11, 1–18.

- Baddeley, A.D.; Hitch, G. Working memory. Psychol. Learn. Motiv.-Adv. Res. Theory 1974, 8, 47–89.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Newville, M.; Stensitzki, T.; Allen, D.B.; Ingargiola, A. LMFIT: Non-Linear Least-Squares Minimization and Curve-Fitting for Python; Zenodo: Geneva, Switzerland, 2014.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, S.; Tripathy, S.P.; Öğmen, H. Capacity and Allocation across Sensory and Short-Term Memories. Vision 2022, 6, 15. https://doi.org/10.3390/vision6010015