Seeing Beyond Salience and Guidance: The Role of Bias and Decision in Visual Search

Abstract

1. Perception and Attention in Visual Search: A Brief Summary

1.1. Visual Salience

1.2. Attentional Guidance and Control Settings

1.3. Eye Movements in Visual Search

2. Biases and Strategy

2.1. Optimality vs. Stochasticity

2.2. Oculomotor Biases

2.3. Decision Biases

2.4. Stopping Rules

3. Search Strategy and Individual Differences

4. Conclusions

- The focus of our experiments and analyses should not only be to explain average patterns, but also to account for variance. The large sources of variance, relative to smaller ones, will be the more powerful predictors of search performance. If we can understand and control these, the smaller sources of variance will be easier to tackle. Individual differences and variability within conditions should not be hidden away in averaged data, but made a central part of our models and theories.

- A related suggestion is to be cautious in interpreting measures of central tendency, such as means and medians. Given the large range of individual differences we have observed in most of our own search data, and the spurious conclusions we could have reached had we relied on average patterns alone, we think it is important to consider carefully whether a measure of central tendency is, in fact, a good representation of a particular set of data. That is, is the mean (or median) pattern similar to most of the trial-level and individual-level results? If a particular summary statistic is not an accurate or adequate representation of most of the data, do not report it. Instead, show the full range of results so other researchers can understand how variable a given behaviour is within and between individuals. This variance does not indicate a failed manipulation or “noisy data”; rather, it contains essential information, without which we cannot fully understand visual search performance.

- Based on observations of independent sources of variance across different tasks [84,89], it is clearly important to address directly the question of how confidently we can apply conclusions from one search task to related and unrelated tasks and contexts. For example, we often assume that visual primitives like line segments and Gabor patches will scale up to more complex scenes and objects or that basic phenomena like attentional capture or inhibition of return will be easy to observe in real-world situations. In fact, it is difficult to find straightforward instances in the literature where these basic effects have clearly generalized from the laboratory to more complex real-world situations. It is important to note that the context-specificity of a given effect is not an indication that it is trivial or unimportant. Instead, it is an important source of data for understanding the constraints and boundary conditions for patterns of results that can be reliably produced in the laboratory. Directly measuring how particular manipulations and interventions affect search in a variety of situations can be a fruitful source of insight into these effects.

- We all have a tendency to stay within the bounds of familiar theories and models. Looking outside the vision and attention literature can lead to many useful new ideas and explanations, especially in visual search, which is a rich and complex task. Our understanding can be enriched by insights and models from other fields such as decision-making, learning, human factors and individual differences.
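One way to act on the second suggestion above is to check how many individuals actually resemble the group average before reporting it. The following is a minimal Python sketch, not a method from this article: the scores are invented for illustration, and the function name `fraction_near` and the tolerance of 0.2 are assumptions chosen only to make the point.

```python
from statistics import mean, median


def fraction_near(values, centre, tolerance):
    """Return the share of observations lying within `tolerance` of `centre`."""
    return sum(abs(v - centre) <= tolerance for v in values) / len(values)


# Hypothetical per-participant scores (made up for illustration), e.g. the
# proportion of trials on which each participant used a particular strategy.
scores = [0.05, 0.10, 0.12, 0.15, 0.85, 0.88, 0.90, 0.95]

group_mean = mean(scores)      # close to 0.5
group_median = median(scores)  # close to 0.5

# How many participants does each summary statistic actually describe?
near_mean = fraction_near(scores, group_mean, tolerance=0.2)
near_median = fraction_near(scores, group_median, tolerance=0.2)

print(f"mean = {group_mean:.2f}, share of participants near it = {near_mean:.2f}")
print(f"median = {group_median:.2f}, share of participants near it = {near_median:.2f}")
```

With these made-up scores, the mean and median both sit at about 0.5, yet no participant falls within 0.2 of either value. In a case like this, a plot of all individual values would be more informative than the summary statistic.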

Author Contributions

Funding

Conflicts of Interest

References

- Palmer, J.; Verghese, P.; Pavel, M. The psychophysics of visual search. Vis. Res. 2000, 40, 1227–1268.

- Eckstein, M.P.; Thomas, J.P.; Palmer, J.; Shimozaki, S.S. A signal detection model predicts the effects of set size on visual search accuracy for feature, conjunction, triple conjunction, and disjunction displays. Percept. Psychophys. 2000, 62, 425–451.

- Parr, T.; Friston, K.J. Attention or salience? Curr. Opin. Psychol. 2019, 29, 1–5.

- Anderson, B.A. Neurobiology of value-driven attention. Curr. Opin. Psychol. 2018.

- Geng, J.J.; Witkowski, P. Template-to-distractor distinctiveness regulates visual search efficiency. Curr. Opin. Psychol. 2019, 29, 119–125.

- Theeuwes, J. Goal-Driven, Stimulus-Driven and History-Driven selection. Curr. Opin. Psychol. 2019, 29, 97–101.

- Wolfe, J.M.; Utochkin, I.S. What is a preattentive feature? Curr. Opin. Psychol. 2019, 29, 19–26.

- Gaspelin, N.; Luck, S.J. Inhibition as a potential resolution to the attentional capture debate. Curr. Opin. Psychol. 2019.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Itti, L.; Koch, C. A saliency-based search mechanism for overt and covert shifts of visual attention. Vis. Res. 2000, 40, 1489–1506.

- Bylinskii, Z.; Judd, T.; Oliva, A.; Torralba, A.; Durand, F. What do different evaluation metrics tell us about saliency models? arXiv 2016, arXiv:1604.03605.

- Bylinskii, Z.; Judd, T.; Borji, A.; Itti, L.; Durand, F.; Oliva, A.; Torralba, A. MIT Saliency Benchmark. Available online: http://saliency.mit.edu/ (accessed on 10 September 2019).

- Kong, P.; Mancas, M.; Thuon, N.; Kheang, S.; Gosselin, B. Do Deep-Learning Saliency Models Really Model Saliency? In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2331–2335.

- Kümmerer, M.; Wallis, T.S.; Gatys, L.A.; Bethge, M. Understanding low- and high-level contributions to fixation prediction. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4799–4808.

- Walther, D.; Koch, C. Modeling attention to salient proto-objects. Neural Netw. 2006, 19, 1395–1407.

- Nuthmann, A.; Henderson, J.M. Object-based attentional selection in scene viewing. J. Vis. 2010, 10, 20.

- Cadieu, C.F.; Hong, H.; Yamins, D.L.; Pinto, N.; Ardila, D.; Solomon, E.A.; Majaj, N.J.; DiCarlo, J.J. Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput. Biol. 2014, 10, e1003963.

- Wolfe, J.M.; Cave, K.R.; Franzel, S.L. Guided search: An alternative to the feature integration model for visual search. J. Exp. Psychol. Hum. Percept. Perform. 1989, 15, 419.

- Wolfe, J.; Cain, M.; Ehinger, K.; Drew, T. Guided Search 5.0: Meeting the challenge of hybrid search and multiple-target foraging. J. Vis. 2015, 15, 1106.

- Wolfe, J.M.; Gray, W. Guided search 4.0. In Integrated Models of Cognitive Systems; Oxford University Press: New York, NY, USA, 2007; pp. 99–119.

- Treisman, A.M.; Gelade, G. A feature-integration theory of attention. Cogn. Psychol. 1980, 12, 97–136.

- Remington, R.W.; Johnston, J.C.; Yantis, S. Involuntary attentional capture by abrupt onsets. Percept. Psychophys. 1992, 51, 279–290.

- Corbetta, M.; Shulman, G.L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002, 3, 201.

- Bacon, W.F.; Egeth, H.E. Overriding stimulus-driven attentional capture. Percept. Psychophys. 1994, 55, 485–496.

- Duncan, J.; Humphreys, G.W. Visual search and stimulus similarity. Psychol. Rev. 1989, 96, 433.

- Lavie, N. Distracted and confused?: Selective attention under load. Trends Cogn. Sci. 2005, 9, 75–82.

- Folk, C.L.; Remington, R.W.; Johnston, J.C. Involuntary covert orienting is contingent on attentional control settings. J. Exp. Psychol. Hum. Percept. Perform. 1992, 18, 1030.

- von Mühlenen, A.; Lleras, A. No-onset looming motion guides spatial attention. J. Exp. Psychol. Hum. Percept. Perform. 2007, 33, 1297.

- Cosman, J.D.; Vecera, S.P. Attentional capture under high perceptual load. Psychon. Bull. Rev. 2010, 17, 815–820.

- Di Lollo, V.; Enns, J.T.; Rensink, R.A. Competition for consciousness among visual events: The psychophysics of reentrant visual processes. J. Exp. Psychol. Gen. 2000, 129, 481.

- Awh, E.; Belopolsky, A.V.; Theeuwes, J. Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends Cogn. Sci. 2012, 16, 437–443.

- Anderson, B.A.; Laurent, P.A.; Yantis, S. Value-driven attentional capture. Proc. Natl. Acad. Sci. USA 2011, 108, 10367–10371.

- Hulleman, J.; Olivers, C.N. On the brink: The demise of the item in visual search moves closer. Behav. Brain Sci. 2017, 40.

- Zelinsky, G.J. A theory of eye movements during target acquisition. Psychol. Rev. 2008, 115, 787.

- Findlay, J.M. Global visual processing for saccadic eye movements. Vis. Res. 1982, 22, 1033–1045.

- Zelinsky, G.J.; Sheinberg, D.L. Eye movements during parallel–serial visual search. J. Exp. Psychol. Hum. Percept. Perform. 1997, 23, 244.

- Klein, R.; Farrell, M. Search performance without eye movements. Percept. Psychophys. 1989, 46, 476–482.

- Boot, W.R.; Kramer, A.F.; Becic, E.; Wiegmann, D.A.; Kubose, T. Detecting transient changes in dynamic displays: The more you look, the less you see. Hum. Factors 2006, 48, 759–773.

- Najemnik, J.; Geisler, W.S. Optimal eye movement strategies in visual search. Nature 2005, 434, 387.

- Najemnik, J.; Geisler, W.S. Eye movement statistics in humans are consistent with an optimal search strategy. J. Vis. 2008, 8, 4.

- Hoppe, D.; Rothkopf, C.A. Multi-step planning of eye movements in visual search. Sci. Rep. 2019, 9, 144.

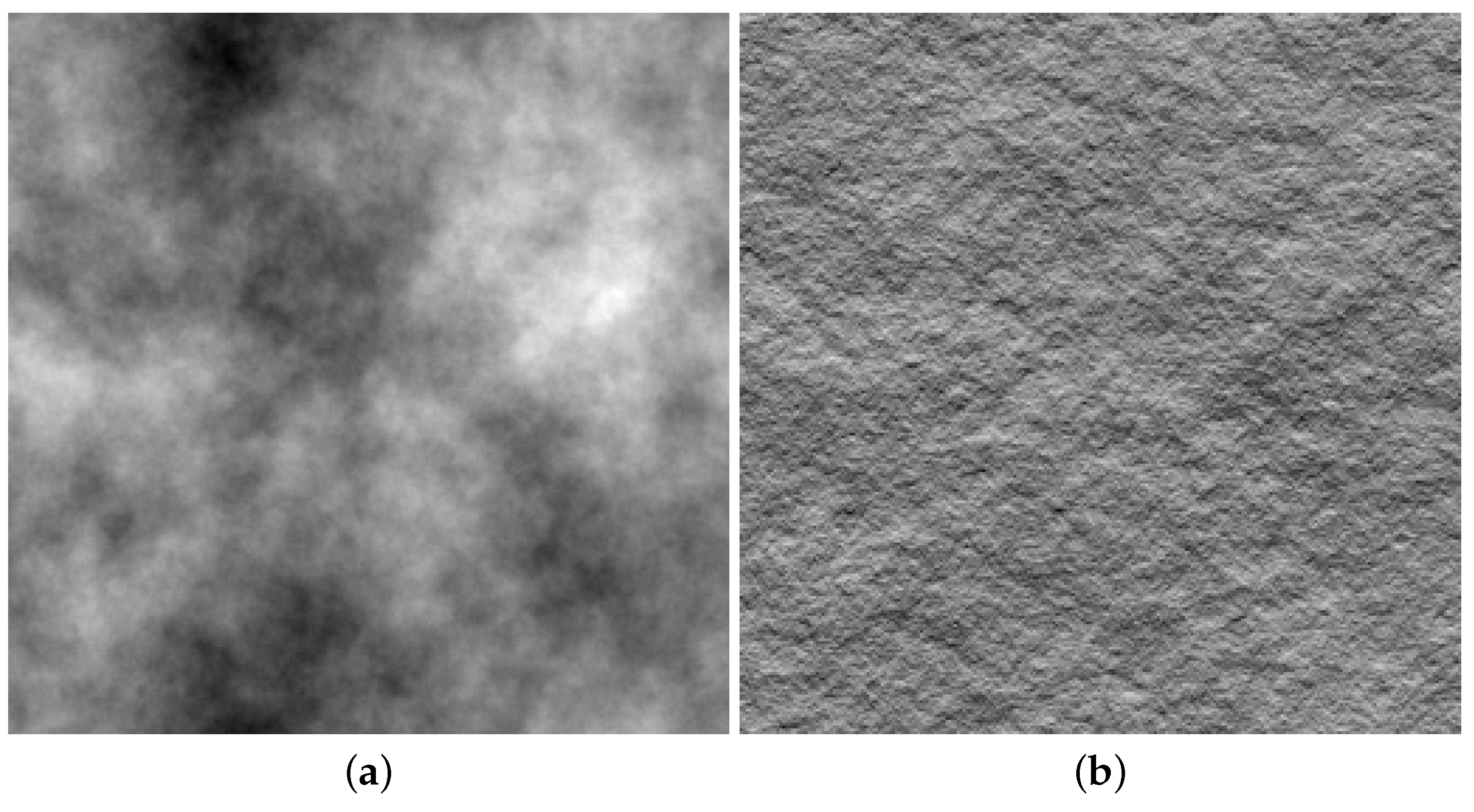

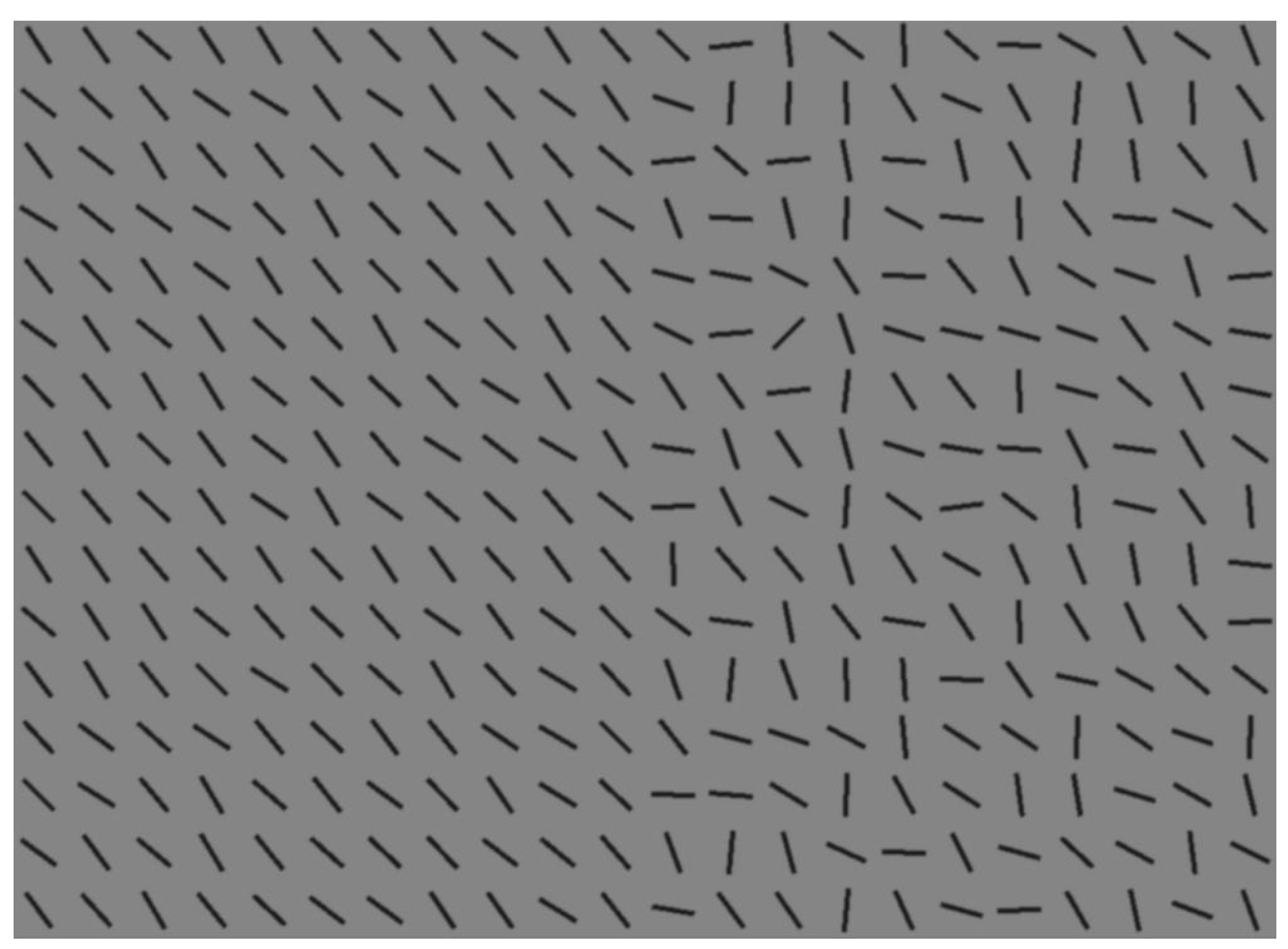

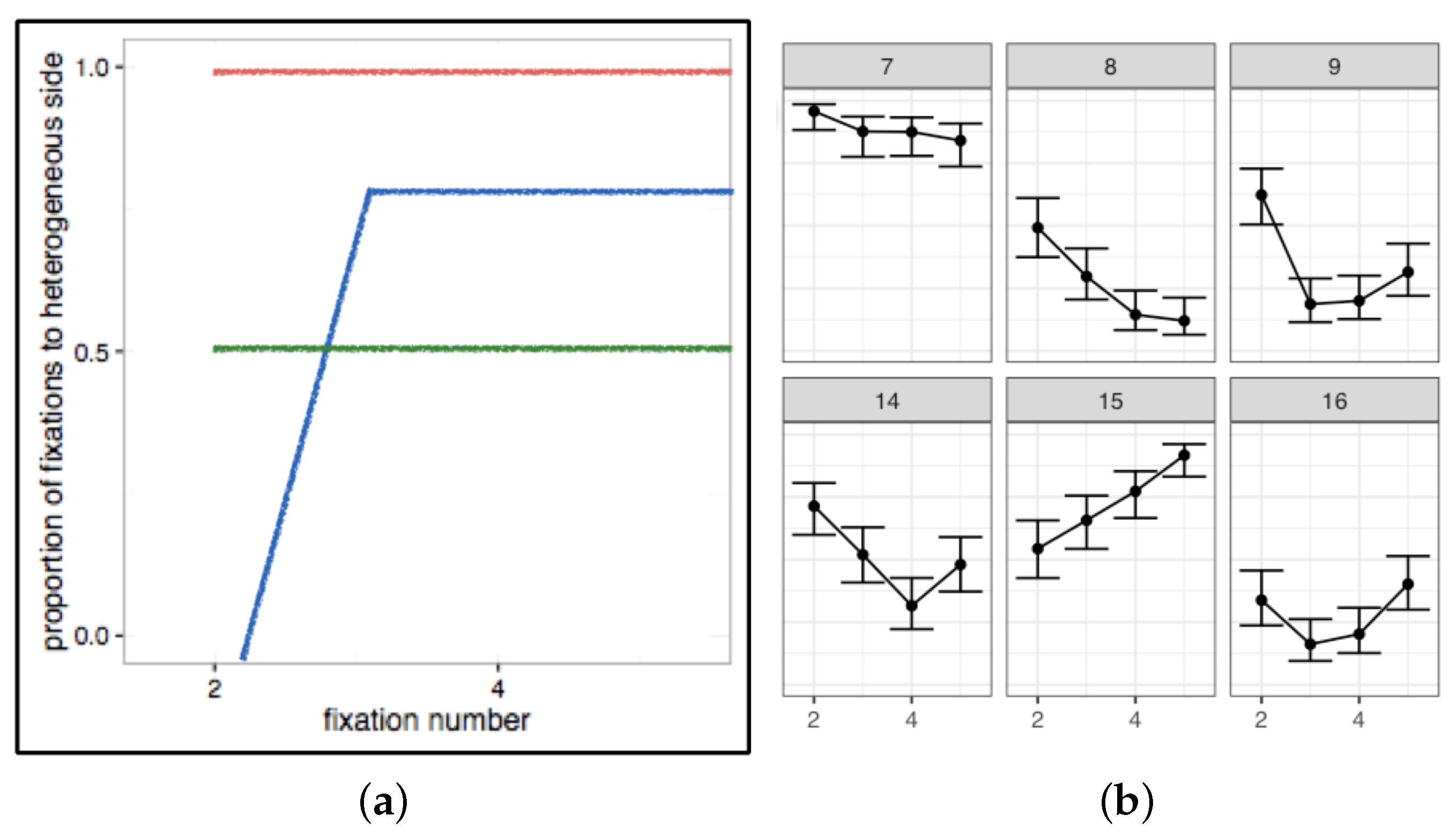

- Clarke, A.D.; Green, P.; Chantler, M.J.; Hunt, A.R. Human search for a target on a textured background is consistent with a stochastic model. J. Vis. 2016, 16, 4.

- Boccignone, G.; Ferraro, M. Modelling gaze shift as a constrained random walk. Phys. A Stat. Mech. Its Appl. 2004, 331, 207–218.

- Tatler, B.W. The central fixation bias in scene viewing: Selecting an optimal viewing position independently of motor biases and image feature distributions. J. Vis. 2007, 7, 1–17.

- Clarke, A.D.; Tatler, B.W. Deriving an appropriate baseline for describing fixation behaviour. Vis. Res. 2014, 102, 41–51.

- Tatler, B.W.; Vincent, B.T. The prominence of behavioural biases in eye guidance. Vis. Cognit. 2009, 17, 1029–1054.

- Gilchrist, I.D.; Harvey, M. Evidence for a systematic component within scan paths in visual search. Vis. Cognit. 2006, 14, 704–715.

- Parkhurst, D.; Law, K.; Niebur, E. Modeling the role of salience in the allocation of overt visual attention. Vis. Res. 2002, 42, 107–123.

- Zelinsky, G.J. Using eye saccades to assess the selectivity of search movements. Vis. Res. 1996, 36, 2177–2187.

- Nuthmann, A.; Matthias, E. Time course of pseudoneglect in scene viewing. Cortex 2014, 52, 113–119.

- Foulsham, T.; Gray, A.; Nasiopoulos, E.; Kingstone, A. Leftward biases in picture scanning and line bisection: A gaze-contingent window study. Vis. Res. 2013, 78, 14–25.

- Over, E.; Hooge, I.; Vlaskamp, B.; Erkelens, C. Coarse-to-fine eye movement strategy in visual search. Vis. Res. 2007, 47, 2272–2280.

- Klein, R.M. Inhibition of return. Trends Cogn. Sci. 2000, 4, 138–147.

- Sumner, P. Inhibition versus attentional momentum in cortical and collicular mechanisms of IOR. Cognit. Neuropsychol. 2006, 23, 1035–1048.

- MacInnes, W.J.; Hunt, A.R.; Hilchey, M.D.; Klein, R.M. Driving forces in free visual search: An ethology. Atten. Percept. Psychophys. 2014, 76, 280–295.

- Clarke, A.D.; Stainer, M.J.; Tatler, B.W.; Hunt, A.R. The saccadic flow baseline: Accounting for image-independent biases in fixation behaviour. J. Vis. 2017, 17, 12.

- Dickinson, A. Actions and habits: The development of behavioural autonomy. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 1985, 308, 67–78.

- Morvan, C.; Maloney, L.T. Human visual search does not maximize the post-saccadic probability of identifying targets. PLoS Comput. Biol. 2012, 8, e1002342.

- Clarke, A.D.; Hunt, A.R. Failure of intuition when choosing whether to invest in a single goal or split resources between two goals. Psychol. Sci. 2016, 27, 64–74.

- Hunt, A.R.; von Mühlenen, A.; Kingstone, A. The time course of attentional and oculomotor capture reveals a common cause. J. Exp. Psychol. Hum. Percept. Perform. 2007, 33, 271.

- Kowler, E. Eye Movements and Their Role in Visual and Cognitive Processes; Elsevier: Amsterdam, The Netherlands, 1990.

- Platt, M.L.; Glimcher, P.W. Neural correlates of decision variables in parietal cortex. Nature 1999, 400, 233.

- Schall, J.D. Neural basis of deciding, choosing and acting. Nat. Rev. Neurosci. 2001, 2, 33.

- DeMiguel, V.; Garlappi, L.; Uppal, R. How inefficient are simple asset allocation strategies. Rev. Financ. Stud. 2009, 22, 1915–1953.

- Maier, N.R. The behaviour mechanisms concerned with problem solving. Psychol. Rev. 1940, 47, 43.

- Krechevsky, I. Brain mechanisms and “hypotheses”. J. Comp. Psychol. 1935, 19, 425.

- Krechevsky, I. Brain mechanisms and variability: II. Variability where no learning is involved. J. Comp. Psychol. 1937, 23, 139.

- Dukewich, K.R.; Klein, R.M. Finding the target in search tasks using detection, localization, and identification responses. Can. J. Exp. Psychol. Can. Psychol. Exp. 2009, 63, 1.

- Wolfe, J.M.; Palmer, E.M.; Horowitz, T.S. Reaction time distributions constrain models of visual search. Vis. Res. 2010, 50, 1304–1311.

- Tatler, B.W.; Brockmole, J.R.; Carpenter, R.H. LATEST: A model of saccadic decisions in space and time. Psychol. Rev. 2017, 124, 267.

- Charnov, E.L. Optimal foraging, the marginal value theorem. Theor. Popul. Biol. 1976, 9, 129–136.

- McNair, J.N. Optimal giving-up times and the marginal value theorem. Am. Nat. 1982, 119, 511–529.

- Smith, A.D.; Hood, B.M.; Gilchrist, I.D. Visual search and foraging compared in a large-scale search task. Cogn. Process. 2008, 9, 121–126.

- Gilchrist, I.D.; North, A.; Hood, B. Is visual search really like foraging? Perception 2001, 30, 1459–1464.

- Wolfe, J.M. When is it time to move to the next raspberry bush? Foraging rules in human visual search. J. Vis. 2013, 13, 10.

- Chun, M.M.; Wolfe, J.M. Just say no: How are visual searches terminated when there is no target present? Cogn. Psychol. 1996, 30, 39–78.

- Hedge, C.; Powell, G.; Sumner, P. The reliability paradox: Why robust cognitive tasks do not produce reliable individual differences. Behav. Res. Methods 2018, 50, 1166–1186.

- Nowakowska, A.; Clarke, A.D.; Hunt, A.R. Human visual search behaviour is far from ideal. Proc. R. Soc. B 2017, 284, 20162767.

- Irons, J.L.; Leber, A.B. Choosing attentional control settings in a dynamically changing environment. Atten. Percept. Psychophys. 2016, 78, 2031–2048.

- Irons, J.L.; Leber, A.B. Characterizing individual variation in the strategic use of attentional control. J. Exp. Psychol. Hum. Percept. Perform. 2018, 44, 1637.

- Jóhannesson, Ó.I.; Thornton, I.M.; Smith, I.J.; Chetverikov, A.; Kristjánsson, Á. Visual foraging with fingers and eye gaze. i-Perception 2016, 7, 2041669516637279.

- Kristjánsson, Á.; Jóhannesson, Ó.I.; Thornton, I.M. Common attentional constraints in visual foraging. PLoS ONE 2014, 9, e100752.

- Araujo, C.; Kowler, E.; Pavel, M. Eye movements during visual search: The costs of choosing the optimal path. Vis. Res. 2001, 41, 3613–3625.

- Clarke, A.; Irons, J.; James, W.; Leber, A.B.; Hunt, A.R. Stable individual differences in strategies within, but not between, visual search tasks. PsyArXiv 2019.

- Nowakowska, A.; Clarke, A.D.; Sahraie, A.; Hunt, A.R. Inefficient search strategies in simulated hemianopia. J. Exp. Psychol. Hum. Percept. Perform. 2016, 42, 1858.

- Nowakowska, A.; Clarke, A.D.; Sahraie, A.; Hunt, A.R. Practice-related changes in eye movement strategy in healthy adults with simulated hemianopia. Neuropsychologia 2019, 128, 232–240.

- Zihl, J. Oculomotor scanning performance in subjects with homonymous visual field disorders. Vis. Impair. Res. 1999, 1, 23–31.

- Tant, M.; Cornelissen, F.W.; Kooijman, A.C.; Brouwer, W.H. Hemianopic visual field defects elicit hemianopic scanning. Vis. Res. 2002, 42, 1339–1348.

- Cooper, L.A. Individual differences in visual comparison processes. Percept. Psychophys. 1976, 19, 433–444.

- Russell, R.; Duchaine, B.; Nakayama, K. Super-recognizers: People with extraordinary face recognition ability. Psychon. Bull. Rev. 2009, 16, 252–257.

- Zhu, Q.; Song, Y.; Hu, S.; Li, X.; Tian, M.; Zhen, Z.; Dong, Q.; Kanwisher, N.; Liu, J. Heritability of the specific cognitive ability of face perception. Curr. Biol. 2010, 20, 137–142.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Clarke, A.D.F.; Nowakowska, A.; Hunt, A.R. Seeing Beyond Salience and Guidance: The Role of Bias and Decision in Visual Search. Vision 2019, 3, 46. https://doi.org/10.3390/vision3030046
