MDSCNet: A Lightweight Radar Image-Based Model for Multi-Action Classification in Elderly Healthcare

Abstract

1. Introduction

- Previous studies do not use data recorded with real nursing-care beds. In this study, we record radar data from 15 subjects using an actual electric nursing-care bed.

- Previous studies often focus only on binary classification problems such as fall detection, whereas real applications require the recognition of more postures. Based on practical nursing scenarios, this study performs a multiclass classification of five actions: lying down, long-sitting, standing, exiting, and falling.

- Real applications require lightweight models that can be deployed on edge devices. Guided by extensive ablation experiments, we develop a lightweight network, MDSCNet, with a size of only 0.29 MB, which is far smaller than the baseline models while achieving comparable accuracy. To ensure the reliability of the results, all baseline comparisons and ablation experiments are evaluated using leave-one-subject-out (LOSO) cross-validation.
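
As a rough illustration of the LOSO protocol mentioned in the last contribution, the sketch below builds one fold per subject so that no subject appears in both the training and test split. The function name `loso_folds`, the integer subject IDs, and the number of samples per subject are assumptions made for this example; only the count of 15 subjects comes from the text.

```python
from typing import Iterator, List, Sequence, Tuple


def loso_folds(subject_ids: Sequence[int]) -> Iterator[Tuple[int, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subject_ids)):
        # Every sample of the held-out subject goes to the test split,
        # all remaining samples go to the training split.
        train_idx = [i for i, s in enumerate(subject_ids) if s != held_out]
        test_idx = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train_idx, test_idx


# Hypothetical layout: 15 subjects with 4 samples each (the per-subject count is made up).
subject_ids = [subject for subject in range(1, 16) for _ in range(4)]
folds = list(loso_folds(subject_ids))

for held_out, train_idx, test_idx in folds[:2]:
    print(f"held-out subject {held_out}: {len(train_idx)} train, {len(test_idx)} test")
```

With 15 subjects this gives 15 train/test rounds. A per-class score would then typically be aggregated over the rounds, although the exact aggregation used in the paper is not stated in this section.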

2. Methods

3. Results

3.1. Experimental Environment

3.2. Dataset and Implementation Details

3.3. Evaluation Metrics

3.4. Ablation Study

3.5. Experimental Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| MDSCNet | Multi-Depthwise-Separable Convolution Network |

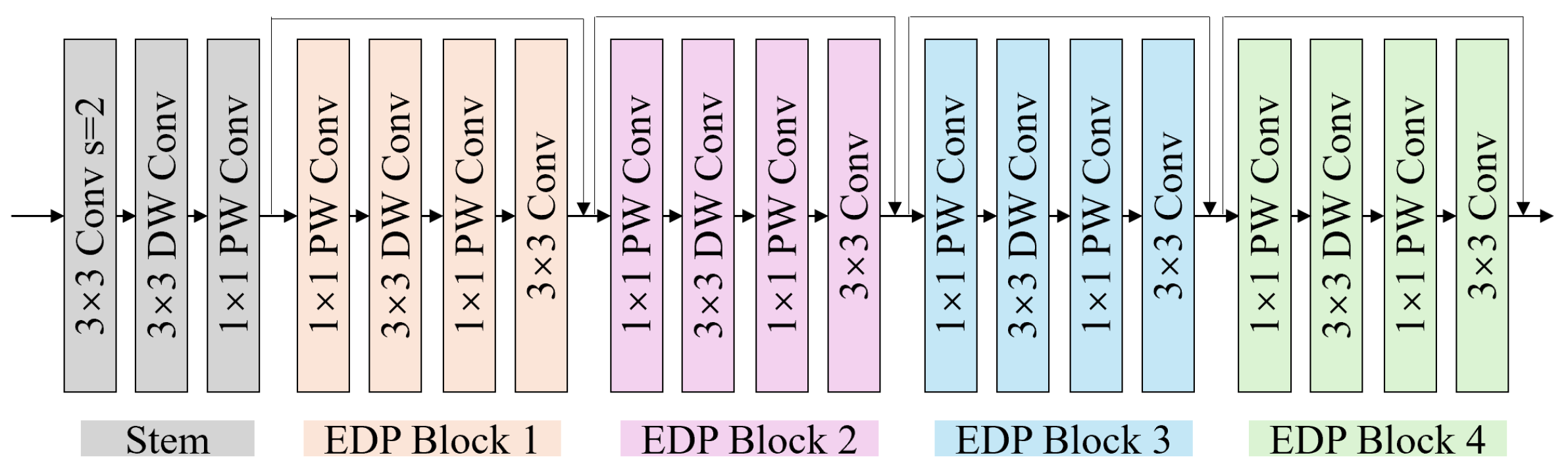

| EDP Block | Expansion–Depthwise–Projection Block |

| CNN | Convolutional Neural Network |

| TP | True Positive |

| TN | True Negative |

| FP | False Positive |

| FN | False Negatve |
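
To make the TP, TN, FP, and FN abbreviations concrete for a five-class problem, the sketch below derives them one-vs-rest from a confusion matrix and computes precision, recall, and F1 with their standard definitions. The confusion matrix values are invented for illustration, the helper names are hypothetical, and which metrics the paper actually reports is not stated in this section.

```python
from typing import Dict, List

CLASSES = ["lying", "long-sitting", "standing", "exiting", "falling"]


def one_vs_rest_counts(confusion: List[List[int]], k: int) -> Dict[str, int]:
    """Counts for class k, where confusion[i][j] = samples of true class i predicted as j."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp                  # true class k, predicted as another class
    fp = sum(row[k] for row in confusion) - tp   # another class, predicted as k
    tn = total - tp - fn - fp
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


def precision_recall_f1(c: Dict[str, int]) -> Dict[str, float]:
    """Standard definitions; returns 0.0 when a denominator is zero."""
    precision = c["TP"] / (c["TP"] + c["FP"]) if c["TP"] + c["FP"] else 0.0
    recall = c["TP"] / (c["TP"] + c["FN"]) if c["TP"] + c["FN"] else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Toy confusion matrix (illustrative numbers only, not results from the paper).
toy_confusion = [
    [8, 1, 0, 1, 0],
    [1, 7, 1, 0, 1],
    [0, 1, 8, 1, 0],
    [1, 0, 1, 7, 1],
    [0, 1, 0, 1, 8],
]

falling = CLASSES.index("falling")
falling_counts = one_vs_rest_counts(toy_confusion, falling)
falling_scores = precision_recall_f1(falling_counts)
print(falling_counts, falling_scores)
```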


| Blocks | Residual | Lying | Sitting | Standing | Exiting | Falling | Average |
|---|---|---|---|---|---|---|---|
| 2 | ✓ | 83.21 | 73.33 | 76.99 | 81.73 | 67.21 | 76.49 |
| 3 | ✓ | 82.22 | 74.61 | 78.62 | 79.56 | 70.52 | 77.10 |
| 4 | ✓ | 85.97 | 74.85 | 80.44 | 77.04 | 71.95 | 78.05 |
| 5 | ✓ | 87.21 | 77.52 | 80.25 | 75.11 | 72.10 | 78.44 |
| 6 | ✓ | 87.01 | 78.35 | 80.79 | 77.14 | 72.20 | 79.10 |
| 7 | ✓ | 86.42 | 76.53 | 78.81 | 76.05 | 72.15 | 77.99 |
| 2 | × | 86.66 | 71.06 | 75.80 | 82.62 | 66.37 | 76.50 |
| 3 | × | 85.93 | 72.13 | 77.23 | 79.90 | 69.68 | 76.97 |
| 4 | × | 85.88 | 74.16 | 80.49 | 77.28 | 70.62 | 77.69 |
| 5 | × | 86.12 | 73.31 | 79.46 | 76.00 | 72.15 | 77.41 |
| 6 | × | 85.68 | 79.00 | 79.36 | 76.35 | 72.59 | 78.59 |
| 7 | × | 87.45 | 75.05 | 80.54 | 76.10 | 71.21 | 78.07 |
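
The ablation table can be read programmatically, as in the sketch below. It assumes that the Average column is the unweighted mean of the five per-class columns, which the reported rows match to within rounding, and it then picks the configuration with the highest reported average. The variable names and the 0.011 tolerance are choices made for this example.

```python
from statistics import mean

# (blocks, residual) -> (per-class scores in table order, reported average)
ablation = {
    (2, True): ([83.21, 73.33, 76.99, 81.73, 67.21], 76.49),
    (3, True): ([82.22, 74.61, 78.62, 79.56, 70.52], 77.10),
    (4, True): ([85.97, 74.85, 80.44, 77.04, 71.95], 78.05),
    (5, True): ([87.21, 77.52, 80.25, 75.11, 72.10], 78.44),
    (6, True): ([87.01, 78.35, 80.79, 77.14, 72.20], 79.10),
    (7, True): ([86.42, 76.53, 78.81, 76.05, 72.15], 77.99),
    (2, False): ([86.66, 71.06, 75.80, 82.62, 66.37], 76.50),
    (3, False): ([85.93, 72.13, 77.23, 79.90, 69.68], 76.97),
    (4, False): ([85.88, 74.16, 80.49, 77.28, 70.62], 77.69),
    (5, False): ([86.12, 73.31, 79.46, 76.00, 72.15], 77.41),
    (6, False): ([85.68, 79.00, 79.36, 76.35, 72.59], 78.59),
    (7, False): ([87.45, 75.05, 80.54, 76.10, 71.21], 78.07),
}

# Largest gap between the recomputed mean and the reported Average column.
max_gap = max(abs(mean(scores) - reported) for scores, reported in ablation.values())
consistent = max_gap < 0.011  # tolerance for two-decimal rounding in the table

# Configuration with the highest reported average.
best_config = max(ablation, key=lambda cfg: ablation[cfg][1])
print(consistent, best_config)
```

Under that assumption, the best row is the six-block variant with residual connections, whose values match the MDSCNet row in the model comparison table.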

| Model | Lying | Sitting | Standing | Exiting | Falling | Average | Model Size (MB) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| ResNet18 | 84.63 | 76.98 | 80.25 | 77.33 | 75.70 | 78.98 | 42.70 | 1.80 |
| ResNeXt50 | 83.50 | 75.59 | 78.72 | 75.56 | 76.10 | 77.89 | 88.00 | 8.50 |
| MobileNetV3-Small | 86.81 | 77.87 | 84.64 | 72.99 | 77.43 | 79.95 | 5.93 | 0.13 |
| MobileNetV3-Large | 87.25 | 79.05 | 84.20 | 74.52 | 77.43 | 80.49 | 16.20 | 0.44 |
| ShuffleNetV2 | 87.70 | 75.00 | 81.04 | 75.01 | 75.26 | 78.80 | 5.45 | 0.29 |
| ViT | 64.97 | 58.70 | 63.46 | 78.96 | 52.05 | 63.63 | 327.00 | 35.13 |
| MDSCNet (proposed) | 87.01 | 78.35 | 80.79 | 77.14 | 72.20 | 79.10 | 0.29 | 0.40 |
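
The size and accuracy trade-off in the comparison table can be summarized with a few lines of arithmetic. The sketch below copies the Average, Model Size, and GFLOPs columns from the table and computes, for each baseline, how many times larger it is than MDSCNet and how its average score differs. The dictionary layout and variable names are assumptions for this example.

```python
# model -> (reported average, model size in MB, GFLOPs), copied from the table
models = {
    "ResNet18": (78.98, 42.70, 1.80),
    "ResNeXt50": (77.89, 88.00, 8.50),
    "MobileNetV3-Small": (79.95, 5.93, 0.13),
    "MobileNetV3-Large": (80.49, 16.20, 0.44),
    "ShuffleNetV2": (78.80, 5.45, 0.29),
    "ViT": (63.63, 327.00, 35.13),
    "MDSCNet": (79.10, 0.29, 0.40),
}

ref_avg, ref_size, _ = models["MDSCNet"]

# For each baseline: size relative to MDSCNet and difference in average score.
comparison = {
    name: {
        "size_ratio": size / ref_size,
        "avg_gap": avg - ref_avg,
    }
    for name, (avg, size, _) in models.items()
    if name != "MDSCNet"
}

smallest = min(models, key=lambda name: models[name][1])

for name, row in sorted(comparison.items(), key=lambda kv: kv[1]["size_ratio"]):
    print(f"{name}: {row['size_ratio']:.1f}x larger, average gap {row['avg_gap']:+.2f}")
```

On these numbers, even the smallest baseline (ShuffleNetV2) is roughly 19 times larger than MDSCNet, while the best baseline average (MobileNetV3-Large) is about 1.4 points higher. The GFLOPs column shows that a smaller file size does not by itself imply the lowest compute cost.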

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kong, X.; Saho, K.; Takebayashi, A. MDSCNet: A Lightweight Radar Image-Based Model for Multi-Action Classification in Elderly Healthcare. Inventions 2025, 10, 98. https://doi.org/10.3390/inventions10060098
