Automatic Fish Age Determination across Different Otolith Image Labs Using Domain Adaptation

Abstract

1. Introduction

2. Materials and Methods

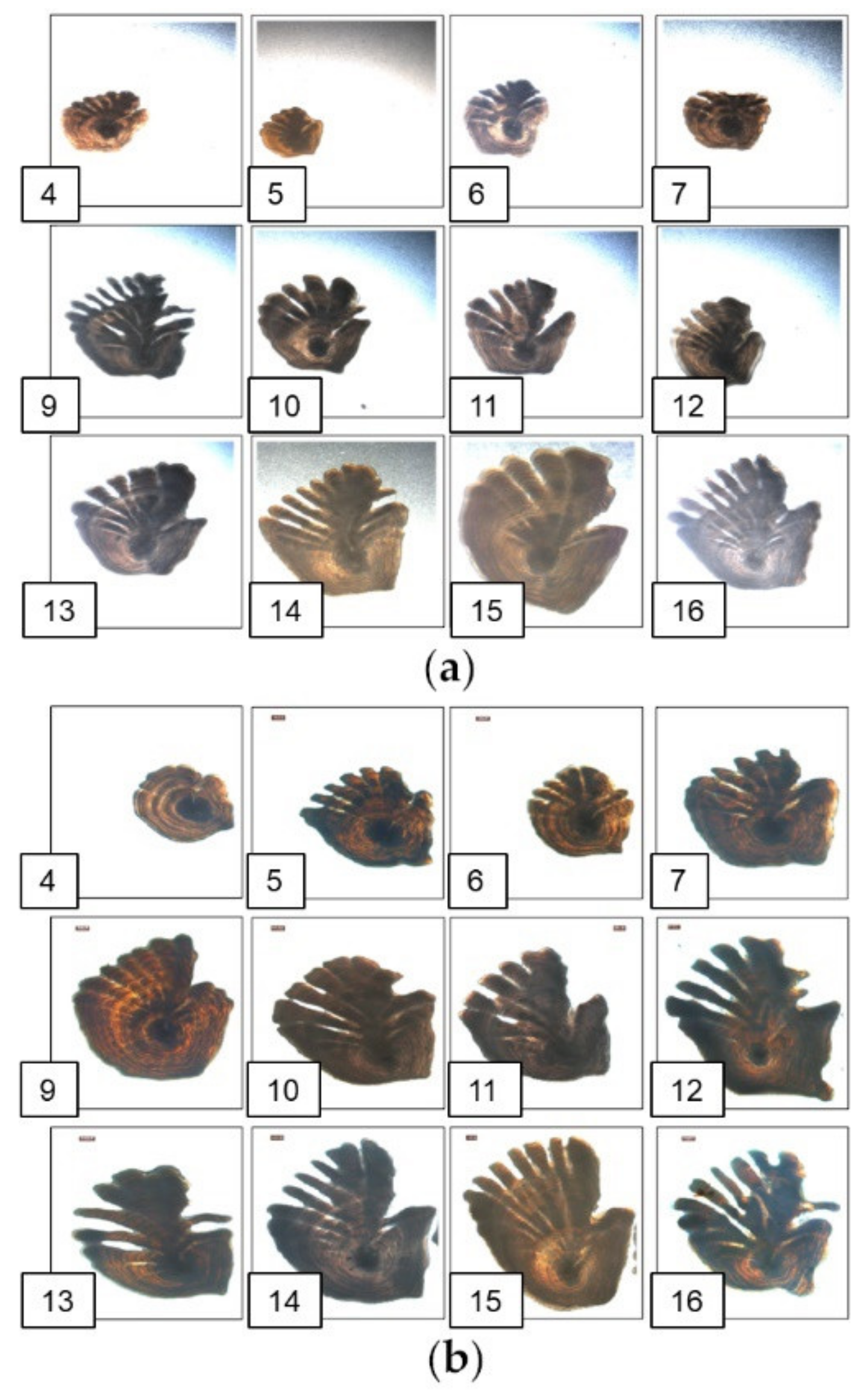

2.1. Data

2.2. UDA for Age Classification

2.2.1. Adversarial Generative Adaptation

2.2.2. Adversarial Discriminative Adaptation

2.2.3. Self-Supervised Adaptation

2.2.4. Implementation Details

2.3. Other Considered Classifiers

2.4. Performance Measurement
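
The results tables of this article report two metrics per experiment: RMSE (years) and CV (%). As a minimal sketch of how such metrics could be computed from read ages and predicted ages, the Python below uses only the standard library. The function names `rmse` and `mean_cv_percent` are hypothetical, and the CV definition used here (the per-fish coefficient of variation between the read age and the predicted age, averaged over fish) is an assumption borrowed from common age-reading practice; it is not confirmed by the text above.

```python
import math
from statistics import mean, stdev


def rmse(read_ages, predicted_ages):
    """Root mean squared error, in the same unit as the ages (years)."""
    if len(read_ages) != len(predicted_ages) or not read_ages:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - r) ** 2 for r, p in zip(read_ages, predicted_ages)]
    return math.sqrt(mean(squared_errors))


def mean_cv_percent(read_ages, predicted_ages):
    """Mean coefficient of variation in percent (assumed definition).

    Each fish is treated as having two age readings: the expert read age
    and the model prediction. The CV of that pair is
    100 * sample standard deviation / mean, and the result is the
    average over all fish. Pairs whose mean is zero are skipped because
    the CV is undefined there.
    """
    if len(read_ages) != len(predicted_ages):
        raise ValueError("inputs must be of equal length")
    cvs = []
    for r, p in zip(read_ages, predicted_ages):
        pair_mean = (r + p) / 2
        if pair_mean == 0:
            continue
        cvs.append(100 * stdev([r, p]) / pair_mean)
    if not cvs:
        raise ValueError("no pair with a non-zero mean age")
    return mean(cvs)


# Illustrative values only; these are not data from the study.
example_read = [4, 6, 10]
example_pred = [4, 8, 9]
example_rmse = rmse(example_read, example_pred)
example_cv = mean_cv_percent(example_read, example_pred)
```

Lower values are better for both metrics. RMSE penalizes large errors in absolute years, whereas the CV scales each disagreement by the age of the fish, so a one-year error counts for more on a young fish than on an old one.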

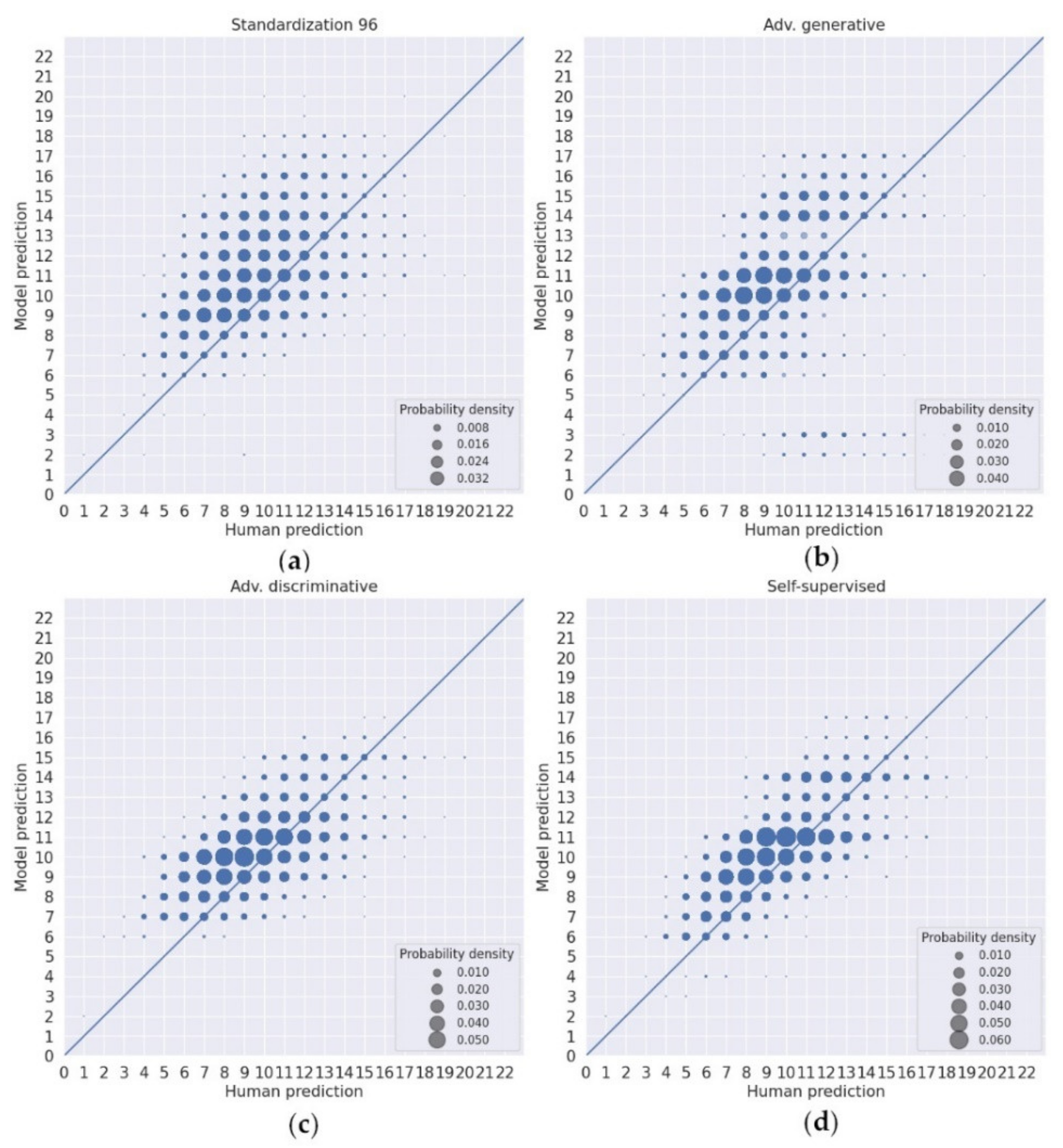

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Experiment | Training Labels | Training Images | Test Images | Resolution | Image Preprocessing | Considered as DA |
|---|---|---|---|---|---|---|
| Norwegian bound 224 | Nor. | Nor. | Nor. | 224 | No | No |
| Norwegian bound 96 | Nor. | Nor. | Nor. | 96 | No | No |
| Lower performance 224 | Nor. | Nor. | Ice. | 224 | No | No |
| Lower performance 96 | Nor. | Nor. | Ice. | 96 | No | No |
| Higher performance 224 | Ice. | Ice. | Ice. | 224 | No | No |
| Higher performance 96 | Ice. | Ice. | Ice. | 96 | No | No |
| Standardization 224 | Nor. | Nor. | Ice. | 224 | Yes | Yes |
| Standardization 96 | Nor. | Nor. | Ice. | 96 | Yes | Yes |
| Adv. generative (CoGAN) | Nor. | Nor. and Ice. | Ice. | 224 | No | Yes |
| Adv. discriminative (CDAN) | Nor. | Nor. and Ice. | Ice. | 224 | No | Yes |
| Self-supervised (SimCLR) | Nor. | Nor. and Ice. | Ice. | 96 | No | Yes |

| Experiment | RMSE (Years) | CV (%) |
|---|---|---|
| Norwegian bound 224 | | |
| Norwegian bound 96 | | |
| Lower performance 224 | | |
| Lower performance 96 | | |
| Higher performance 224 | | |
| Higher performance 96 | | |

| Experiment | RMSE (Years) | CV (%) |
|---|---|---|
| Standardization 224 | | |
| Standardization 96 | | |
| Adv. generative (CoGAN) | | |
| Adv. discriminative (CDAN) | | |
| Self-supervised (SimCLR) | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ordoñez, A.; Eikvil, L.; Salberg, A.-B.; Harbitz, A.; Elvarsson, B.Þ. Automatic Fish Age Determination across Different Otolith Image Labs Using Domain Adaptation. Fishes 2022, 7, 71. https://doi.org/10.3390/fishes7020071