Predicting the Body Weight of Tilapia Fingerlings from Images Using Computer Vision

Abstract

1. Introduction

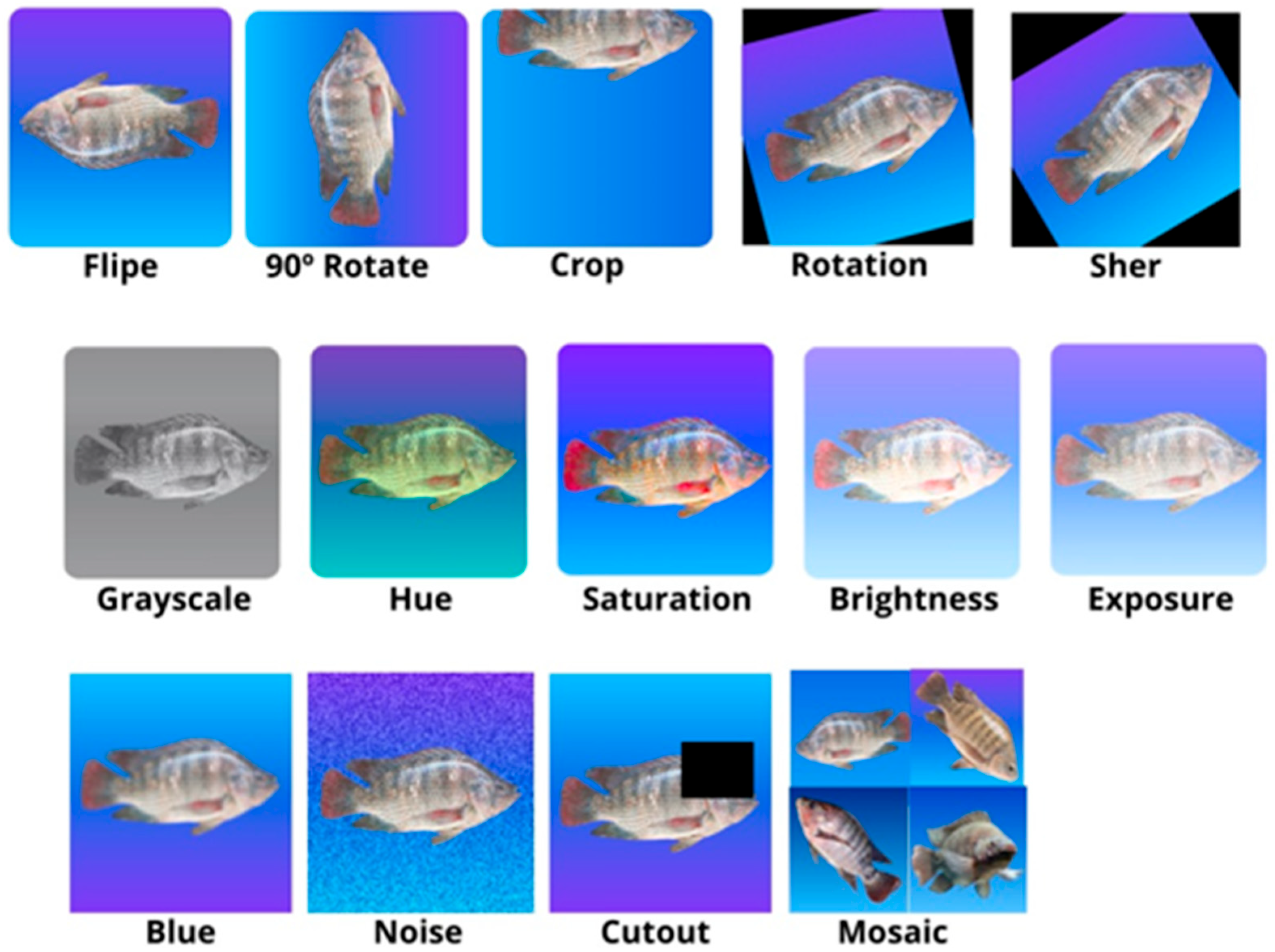

2. Materials and Methods

2.1. Data Collection

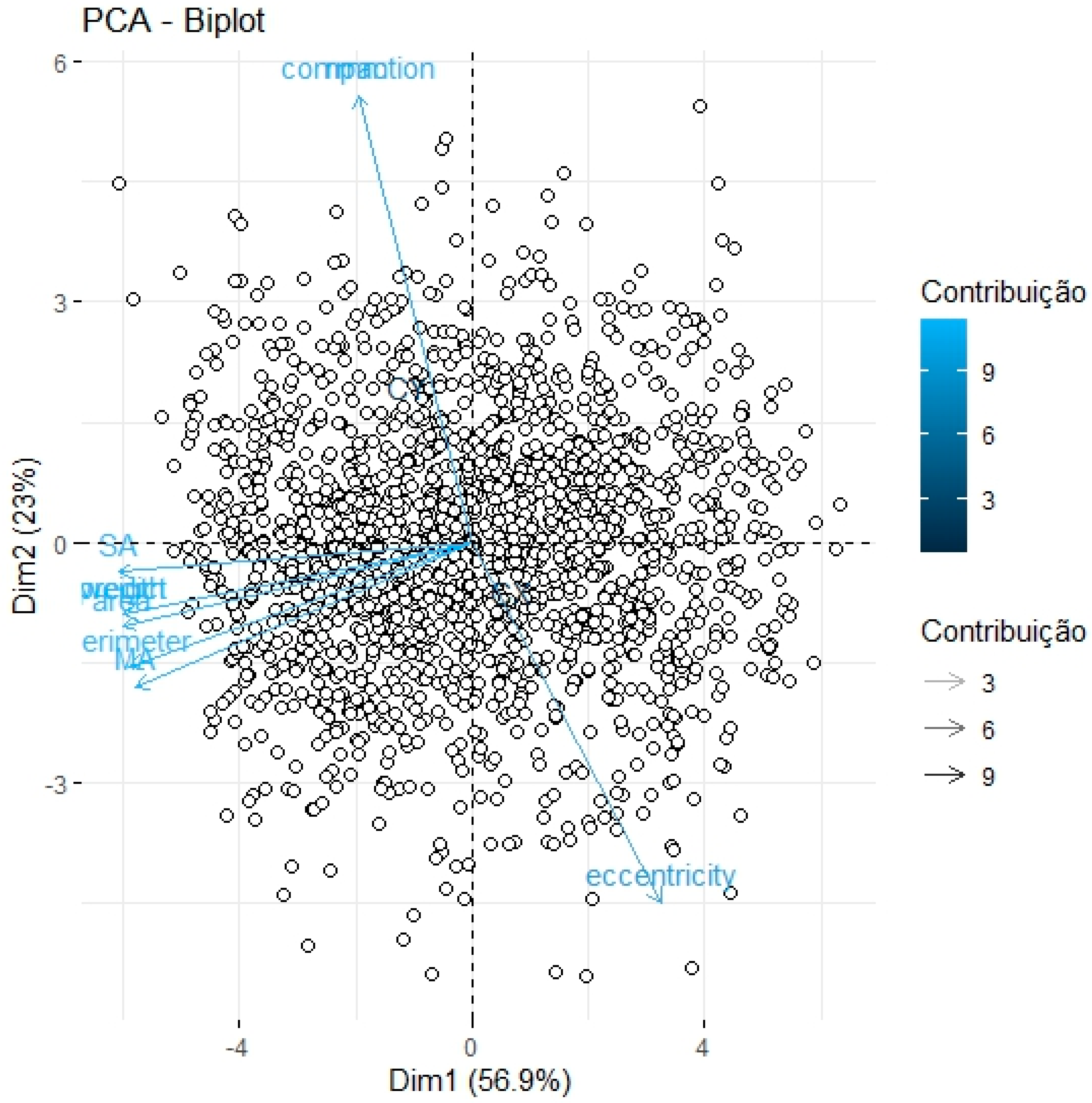

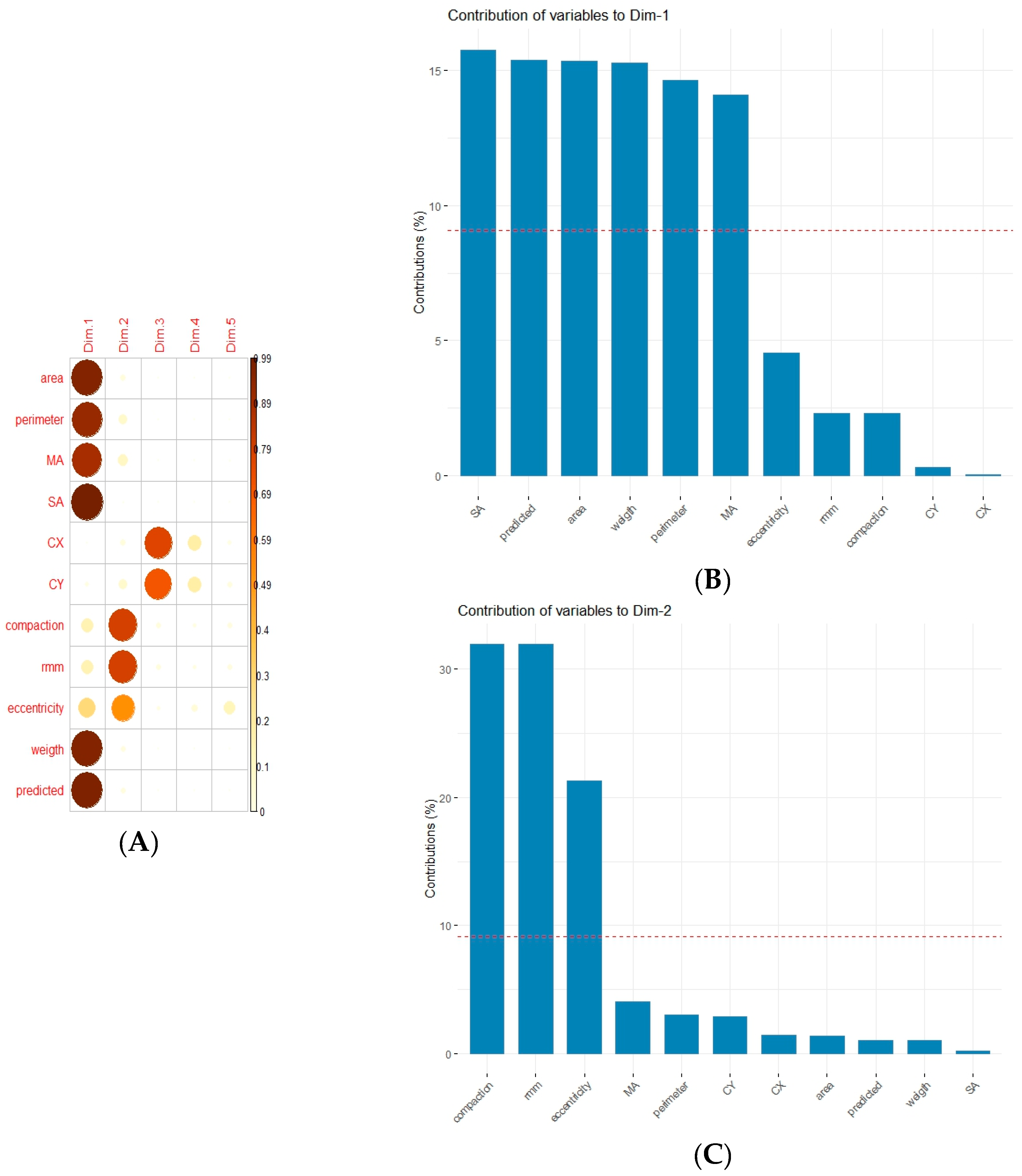

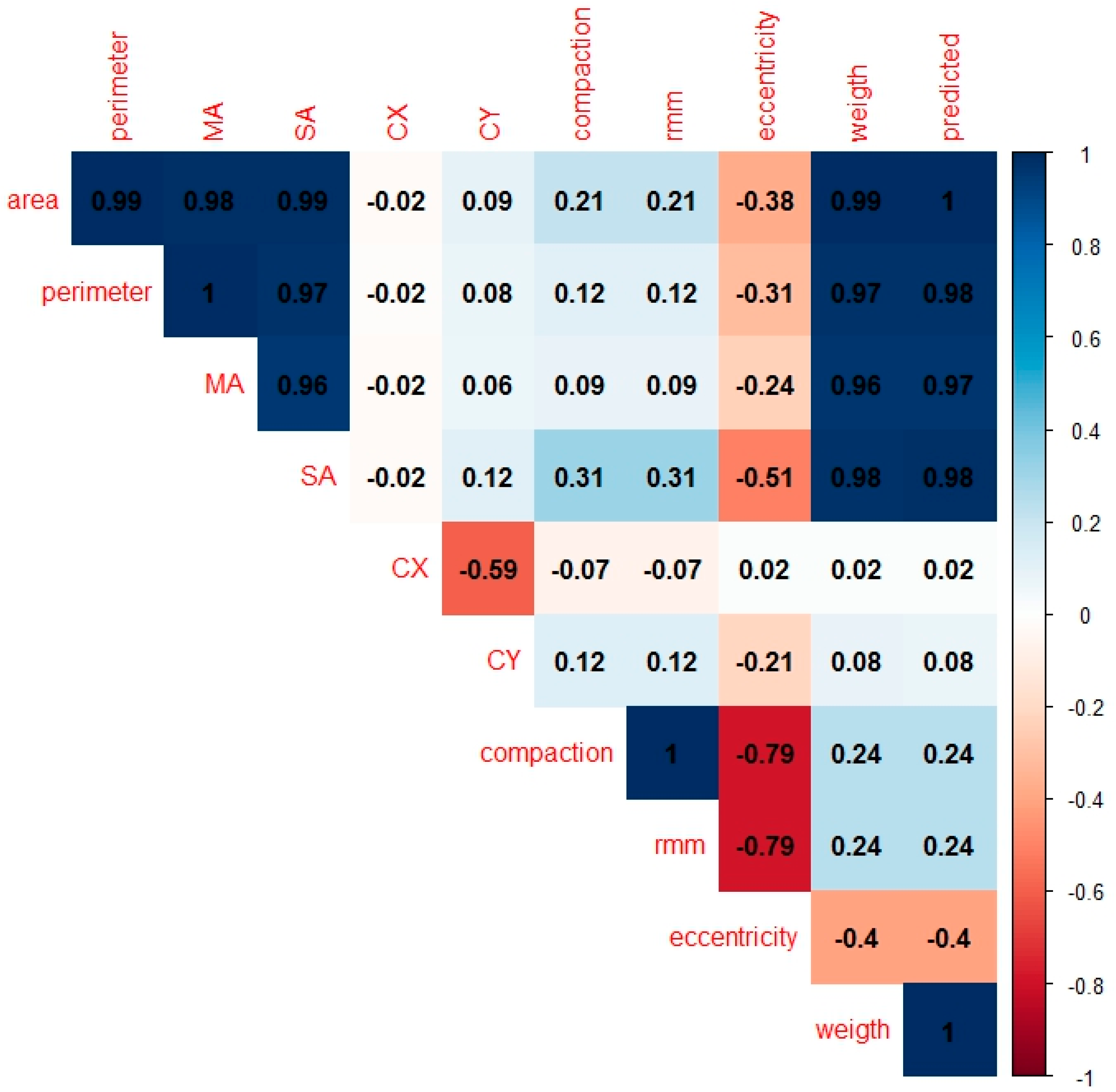

2.2. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SA | Minor Axis |
| CEUA | Ethics Committee on the Use of Animals |
| CX | X Centroid |
| CY | Y Centroid |
| JPG | Joint Photographic Experts Group |
| LCD | Liquid Crystal Display |
| LI | Lower Limit |
| LS | Upper Limit |
| MA | Major Axis |
| mAP | Mean Average Precision |
| RMM | Major-Minor Axis Ratio |
| OpenCV | Open Source Computer Vision Library |
| RMSE | Root Mean Square Error |
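Several of the abbreviations above (Area, CX, CY, MA, SA, RMM) name shape descriptors measured on the fish silhouette. As a hedged illustration of what they measure, the sketch below computes them from a binary segmentation mask using image moments. The function name `shape_descriptors`, the list-of-lists mask format, and the equivalent-ellipse definition of MA and SA (the convention used by scikit-image's `regionprops`) are assumptions; the article's own OpenCV pipeline may define the axes differently, for example through ellipse fitting.

```python
import math


def shape_descriptors(mask):
    """Hypothetical helper: Area, CX, CY, MA, SA and RMM of a binary mask.

    mask: list of rows, each a list of 0/1 values (1 = fish pixel).
    x is the column index and y the row index, as in OpenCV image coordinates.
    """
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    area = len(pixels)  # Area = number of foreground pixels
    if area == 0:
        raise ValueError("mask contains no foreground pixels")

    # Centroid: first-order moments divided by the area
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area

    # Second-order central moments normalised by area (pixel covariance)
    mu20 = sum((x - cx) ** 2 for x, _ in pixels) / area
    mu02 = sum((y - cy) ** 2 for _, y in pixels) / area
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels) / area

    # Eigenvalues of the 2x2 covariance matrix [[mu20, mu11], [mu11, mu02]]
    half_diff = math.sqrt(((mu20 - mu02) / 2) ** 2 + mu11 ** 2)
    lam1 = (mu20 + mu02) / 2 + half_diff
    lam2 = (mu20 + mu02) / 2 - half_diff

    # Axis lengths of the ellipse with the same second moments (assumed definition)
    ma = 4 * math.sqrt(lam1)
    sa = 4 * math.sqrt(max(lam2, 0.0))
    rmm = ma / sa if sa > 0 else float("inf")
    return {"Area": area, "CX": cx, "CY": cy, "MA": ma, "SA": sa, "RMM": rmm}
```

For an elongated silhouette, MA exceeds SA and RMM is greater than 1; a rounder body gives an RMM closer to 1.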


| Models | Parameter | Estimate | Standard Error | LI | LS | R² |
|---|---|---|---|---|---|---|
| Stepwise Model | | | | | | |
| Obtained | Intercept | 17.760 | 1.702 | 14.432 | 21.103 | 0.99 |
| | Area | 0.00075 | 0.000011 | 0.000732928 | 0.000774872 | |
| | MA | −0.0848 | 0.002712 | −0.090146016 | −0.079514584 | |
| | SA | −0.1083 | 0.007221 | −0.122491848 | −0.094184352 | |
| | CX | 0.00345 | 0.000248 | 0.002962736 | 0.003936464 | |
| Validation | Intercept | 23.567 | 4.200 | 15.335 | 31.799 | 0.99 |
| | Area | 0.0008 | 0.000026 | 0.000749658 | 0.000853342 | |
| | MA | −0.0974 | 0.006705 | −0.1105229 | −0.0842393 | |
| | SA | −0.1281 | 0.01735 | −0.16209 | −0.094078 | |
| | CX | 0.00436 | 0.0006 | 0.003184896 | 0.005536504 | |
| Final Model | | | | | | |
| Obtained | Intercept | −28.83 | 0.5742 | −29.53 | −27.7 | 0.99 |
| | Area | 0.000462 | 0.00001243 | 0.00045937 | 0.00046427 | |
| | CX | 0.004234 | 0.000387 | 0.0036287 | 0.004839 | |
| Validation | Intercept | −28.86 | 0.6946 | −29.81 | −27.09 | 0.99 |
| | Area | 0.000462 | 0.00001514 | 0.00045976 | 0.0004657 | |
| | CX | 0.003917 | 0.0003739 | 0.003183755 | 0.004650813 | |
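To make the final model concrete, the sketch below evaluates the two-predictor equation fitted on the Obtained data set, Weight = −28.83 + 0.000462·Area + 0.004234·CX, and adds an RMSE helper for comparing predicted weights with scale-measured ones. The names `predict_weight` and `rmse` are hypothetical. The table does not state units, so Area and CX are assumed to be measured in pixels on the same image setup used for fitting, and weight is assumed to be in grams.

```python
import math

# Final-model coefficients (Obtained data set) copied from the table above
FINAL_MODEL = {"Intercept": -28.83, "Area": 0.000462, "CX": 0.004234}
# Same model refitted on the Validation data set, for comparison
VALIDATION_MODEL = {"Intercept": -28.86, "Area": 0.000462, "CX": 0.003917}


def predict_weight(area, cx, coef=FINAL_MODEL):
    """Linear prediction of body weight from Area and CX (units assumed, see text)."""
    return coef["Intercept"] + coef["Area"] * area + coef["CX"] * cx


def rmse(observed, predicted):
    """Root mean square error between two equal-length sequences."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))
```

Because the intercept is negative, the equation returns negative weights for small silhouettes, so it should only be applied to fish whose Area and CX fall within the range used to fit it.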

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lima, L.d.C.; Costa, A.C.; França, H.F.d.C.; Souza, A.S.; Melo, G.A.F.d.; Vitorino, B.M.; Kretschmer, V.d.V.; Marcionilio, S.M.L.d.O.; Reis Neto, R.V.; Viadanna, P.H.; et al. Predicting the Body Weight of Tilapia Fingerlings from Images Using Computer Vision. Fishes 2025, 10, 371. https://doi.org/10.3390/fishes10080371


Chicago/Turabian Style: Lima, Lessandro do Carmo, Adriano Carvalho Costa, Heyde Francielle do Carmo França, Alene Santos Souza, Gidélia Araújo Ferreira de Melo, Brenno Muller Vitorino, Vitória de Vasconcelos Kretschmer, Suzana Maria Loures de Oliveira Marcionilio, Rafael Vilhena Reis Neto, Pedro Henrique Viadanna, et al. 2025. "Predicting the Body Weight of Tilapia Fingerlings from Images Using Computer Vision." Fishes 10, no. 8: 371. https://doi.org/10.3390/fishes10080371

APA Style: Lima, L. d. C., Costa, A. C., França, H. F. d. C., Souza, A. S., Melo, G. A. F. d., Vitorino, B. M., Kretschmer, V. d. V., Marcionilio, S. M. L. d. O., Reis Neto, R. V., Viadanna, P. H., Lattanzi, G. R., Silva, L. M. d., & Costa, K. A. d. P. (2025). Predicting the Body Weight of Tilapia Fingerlings from Images Using Computer Vision. Fishes, 10(8), 371. https://doi.org/10.3390/fishes10080371