YOLO-DFAM-Based Onboard Intelligent Sorting System for Portunus trituberculatus

Abstract

1. Introduction

- We propose YOLO-DFAM, an enhanced YOLOv11n-based model that integrates a spatial-channel dual-attention gating mechanism to improve feature discrimination under complex marine conditions.

- We introduce an attentional scale sequence fusion (ASF-YOLO) module that enhances multi-scale feature aggregation, enabling accurate detection of targets of varying size such as Portunus trituberculatus.

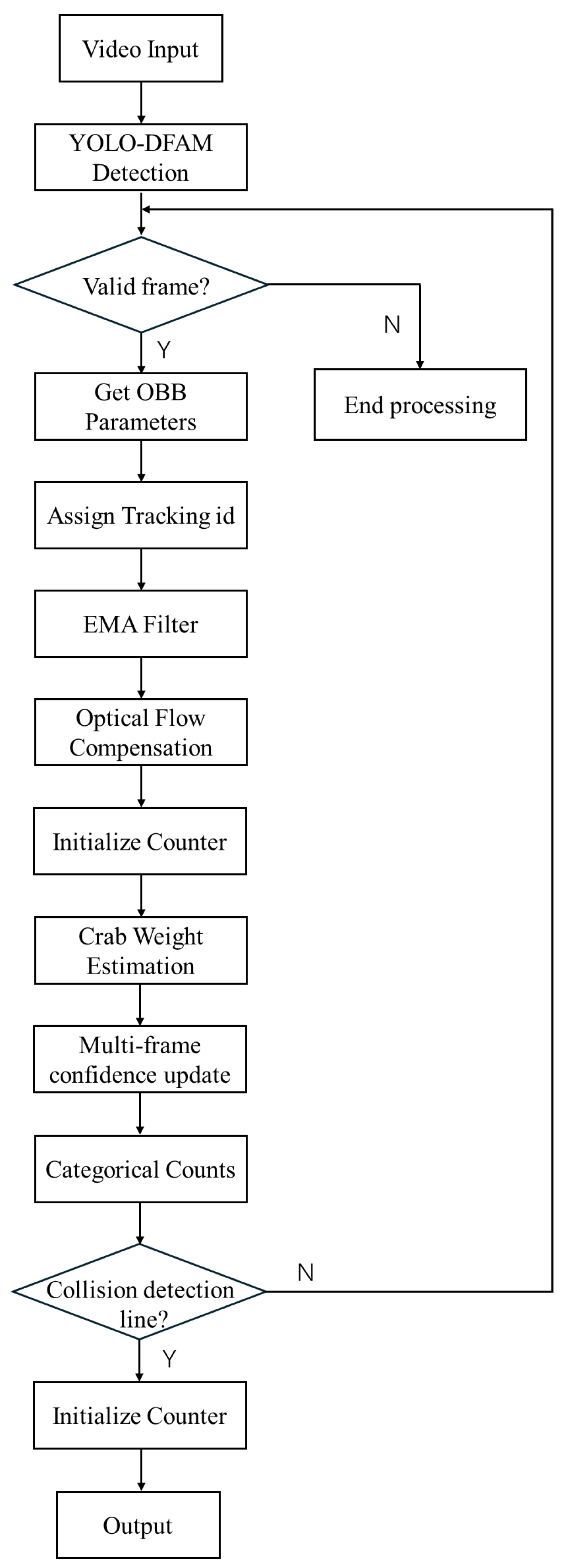

- We develop a real-time tracking pipeline based on ByteTrack, augmented by dynamic scale calibration, EMA filtering, and optical flow compensation, which significantly improves tracking robustness during vessel motion.

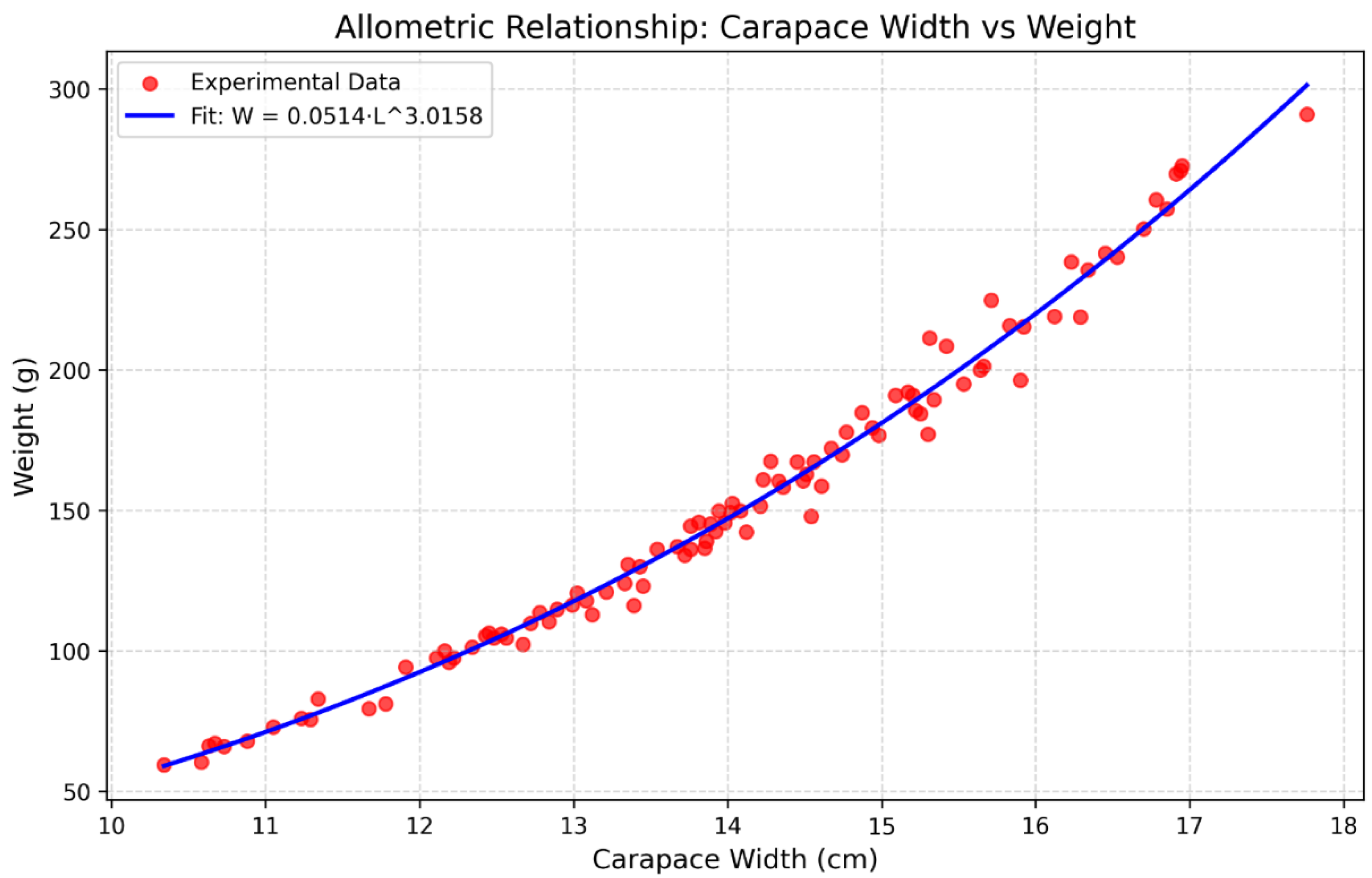

- We design a lightweight region-specific allometric weight estimation model (R² = 0.9856) that achieves a mean relative prediction error of 4.7% using only 12 spline-interpolation calibration sets.

- The proposed model outperforms state-of-the-art detectors (YOLOv8n, RetinaNet-CSL, Rotated Faster R-CNN) in precision (by at least 2.8 percentage points) while maintaining real-time inference (180.3 FPS at 7.2 GFLOPs), offering a deployable onboard solution for intelligent fishery monitoring.
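To make the allometric weight model in the contributions more concrete, the following is a minimal sketch that assumes the common power-law form W = a · CW^b (weight W, carapace width CW) fitted by least squares on log-transformed data. The function names, the coefficient values, and the sample data are hypothetical illustrations, not the authors' fitted model or measurements.

```python
import math


def fit_allometric(widths_cm, weights_g):
    """Fit W = a * CW**b by ordinary least squares in log-log space.

    Returns (a, b, r2), where r2 is computed on the log-transformed data.
    """
    xs = [math.log(w) for w in widths_cm]
    ys = [math.log(w) for w in weights_g]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # allometric exponent
    log_a = mean_y - b * mean_x        # log of the scaling coefficient
    ss_res = sum((y - (log_a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    r2 = 1.0 - ss_res / ss_tot
    return math.exp(log_a), b, r2


def predict_weight(width_cm, a, b):
    """Predict weight from carapace width using the fitted power law."""
    return a * width_cm ** b


# Synthetic (made-up) data generated from W = 0.05 * CW**3.
widths = [8.0, 10.0, 12.0, 14.0, 16.0]
weights = [0.05 * w ** 3 for w in widths]
a, b, r2 = fit_allometric(widths, weights)
```

Fitting in log space keeps the estimation linear and dependency-free; a region-specific model would repeat the fit on samples from each fishing region.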

2. Data and Methodology

2.1. Data Sources

2.2. Production of Datasets

2.3. Research Methodology

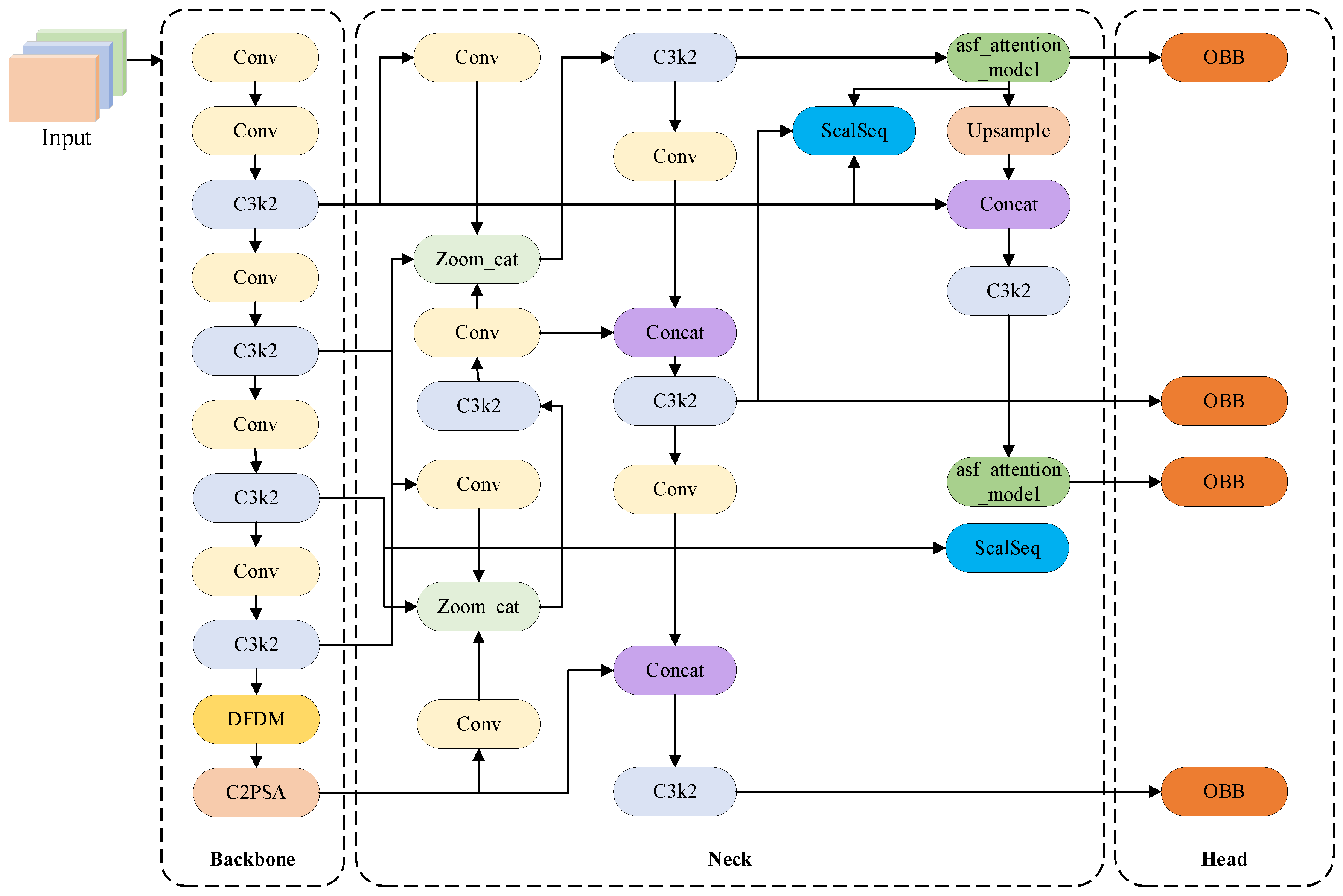

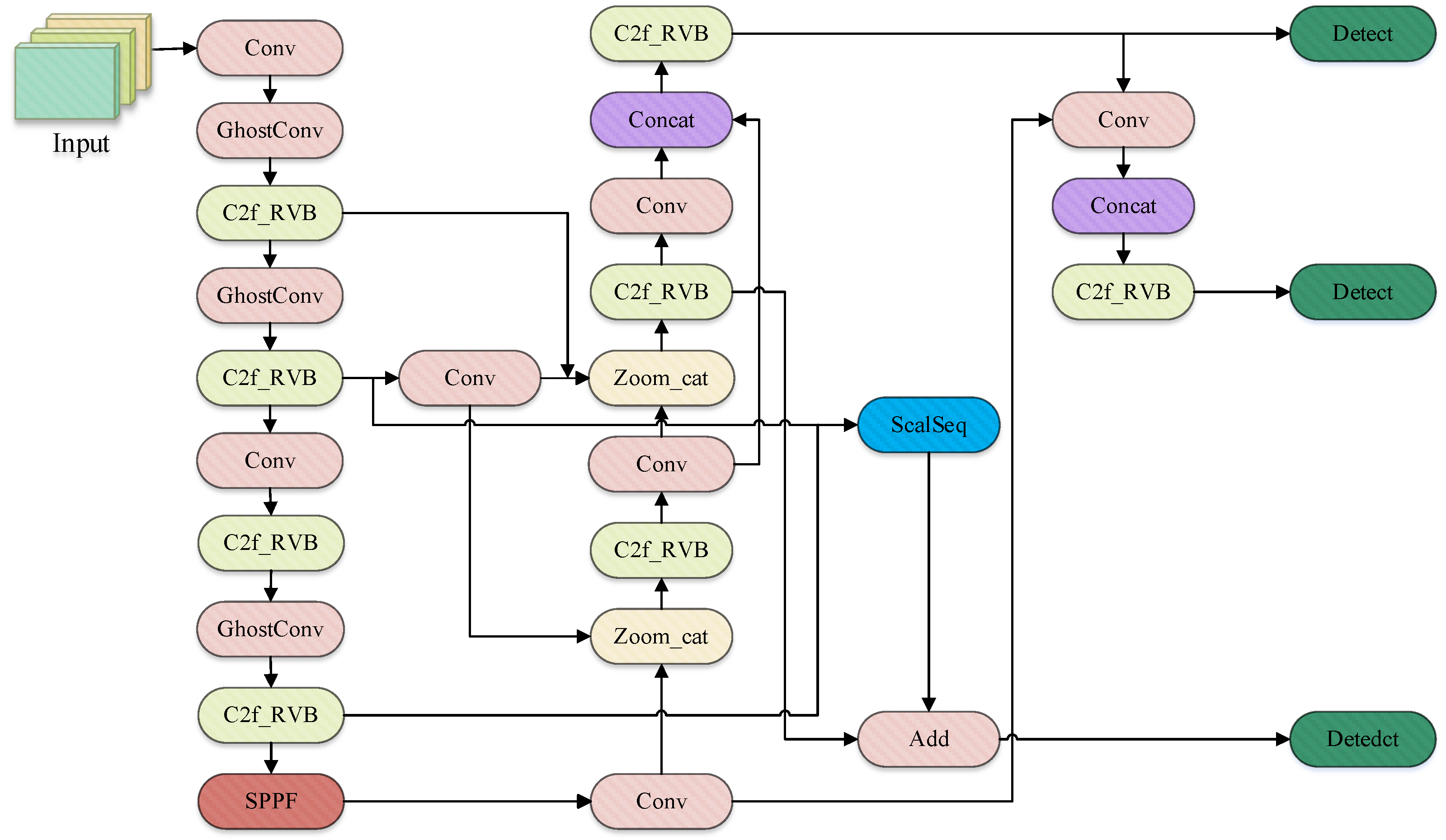

2.4. The YOLOv11 Network Model and Its Improvements

2.4.1. YOLOv11 Improvement Method

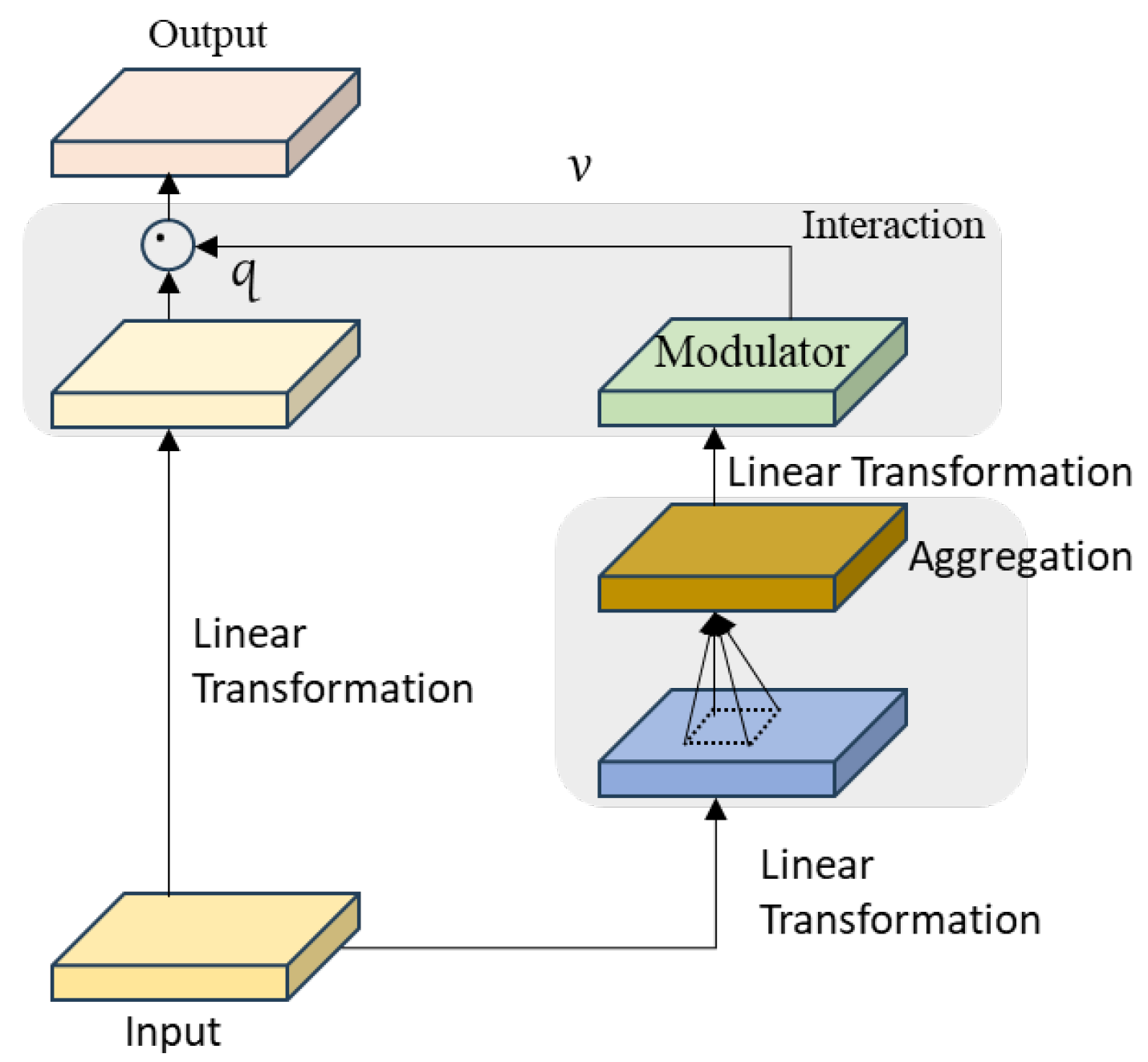

2.4.2. DualFocus Dynamic Modulation (DFDM)

2.4.3. ASF-YOLO

3. Experimental Methods and Results

3.1. Experimental Environment

3.2. Experimental Evaluation Indicators

3.3. Model Training Results and Analysis

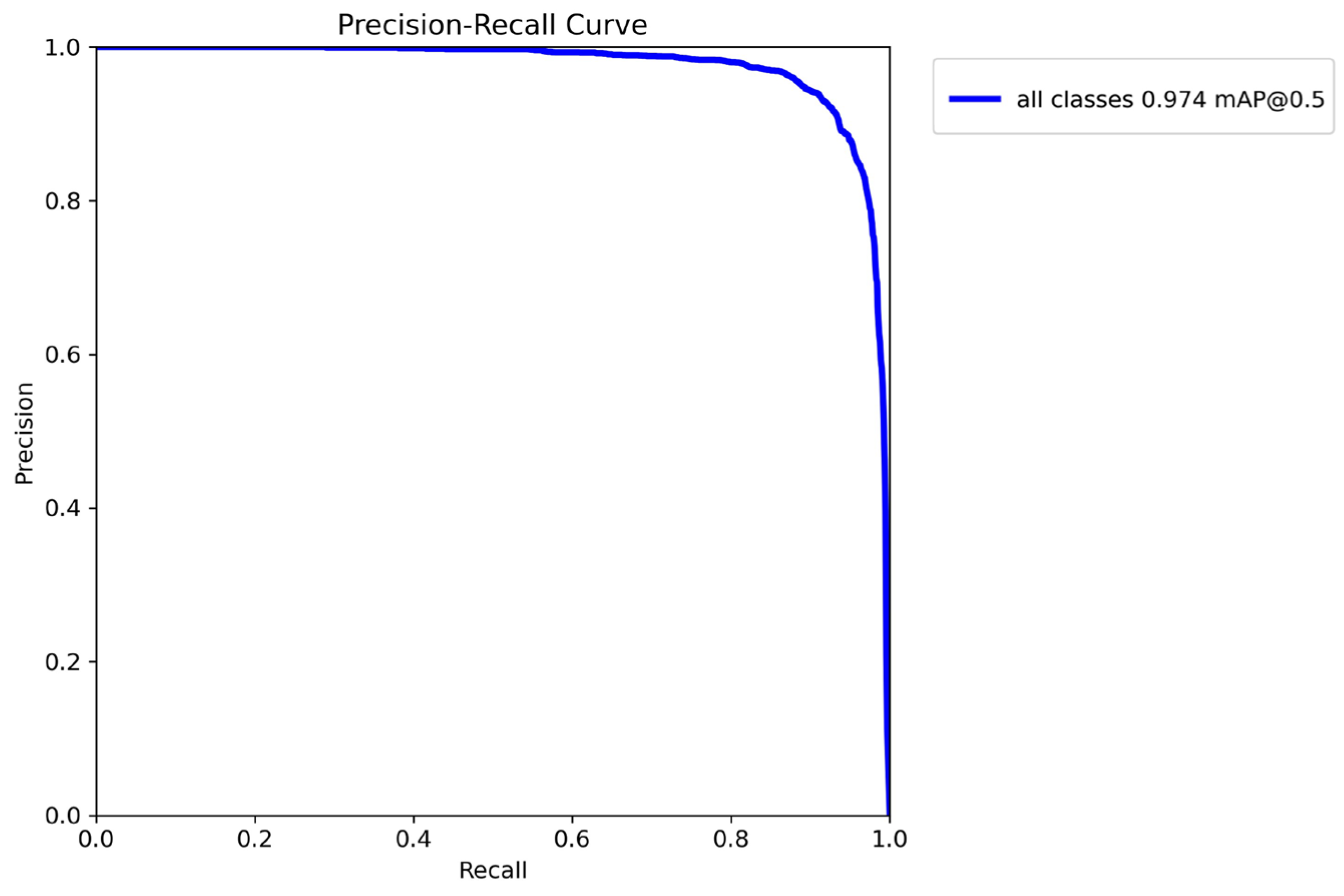

3.3.1. YOLO-DFAM Model

3.3.2. Ablation Experiment

3.3.3. Detection Performance of Different Models

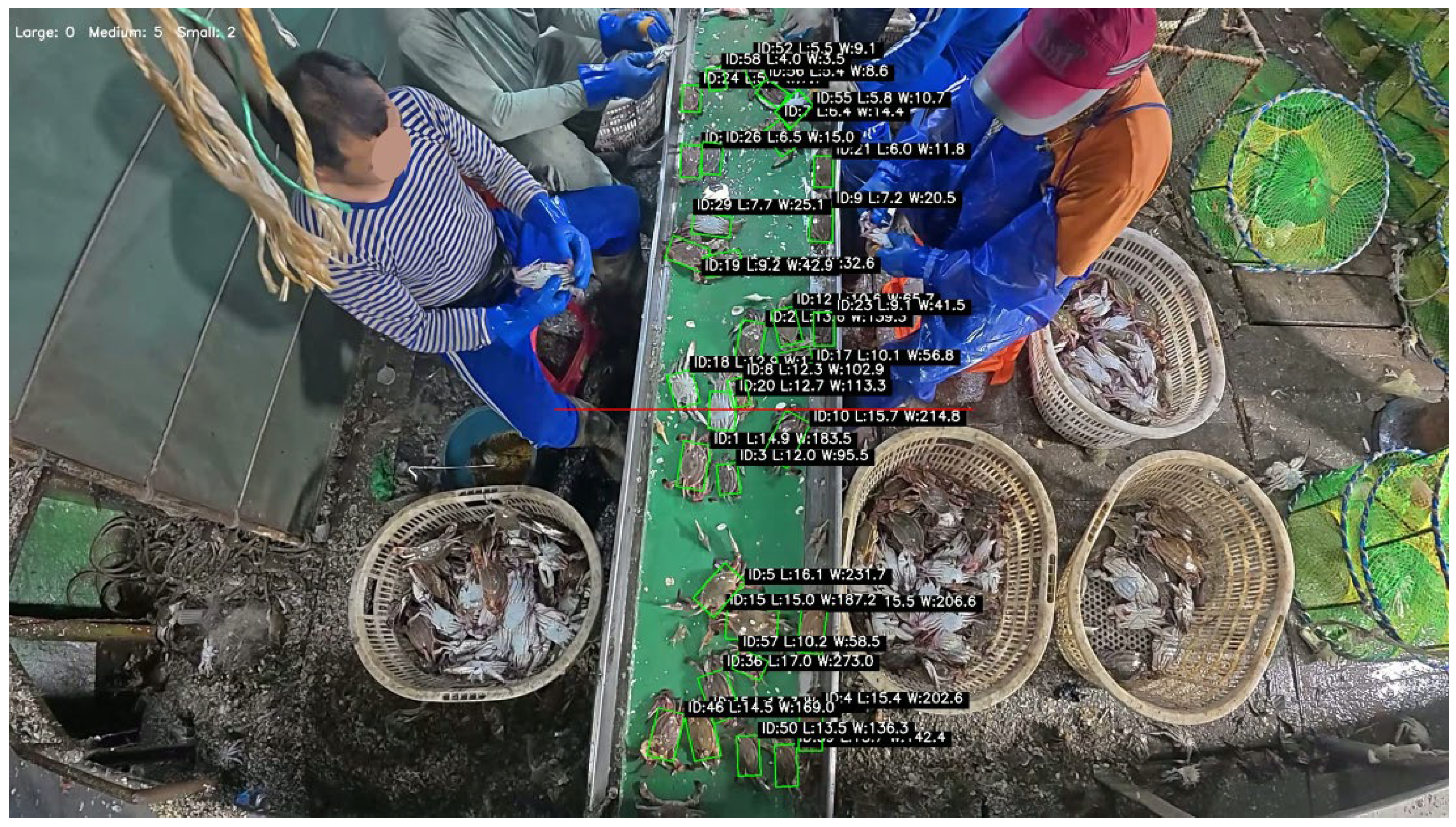

4. Portunus trituberculatus Detection and Tracking Counting

4.1. Portunus trituberculatus Detection

4.2. Precision Biomass Estimation System for Swimming Crab Grading: Integrating Allometric Modeling, Dynamic Calibration and Multi-Modal Verification

4.2.1. Nonlinear Allometric Modeling for Precision Crab Biomass Estimation in Coastal Aquaculture

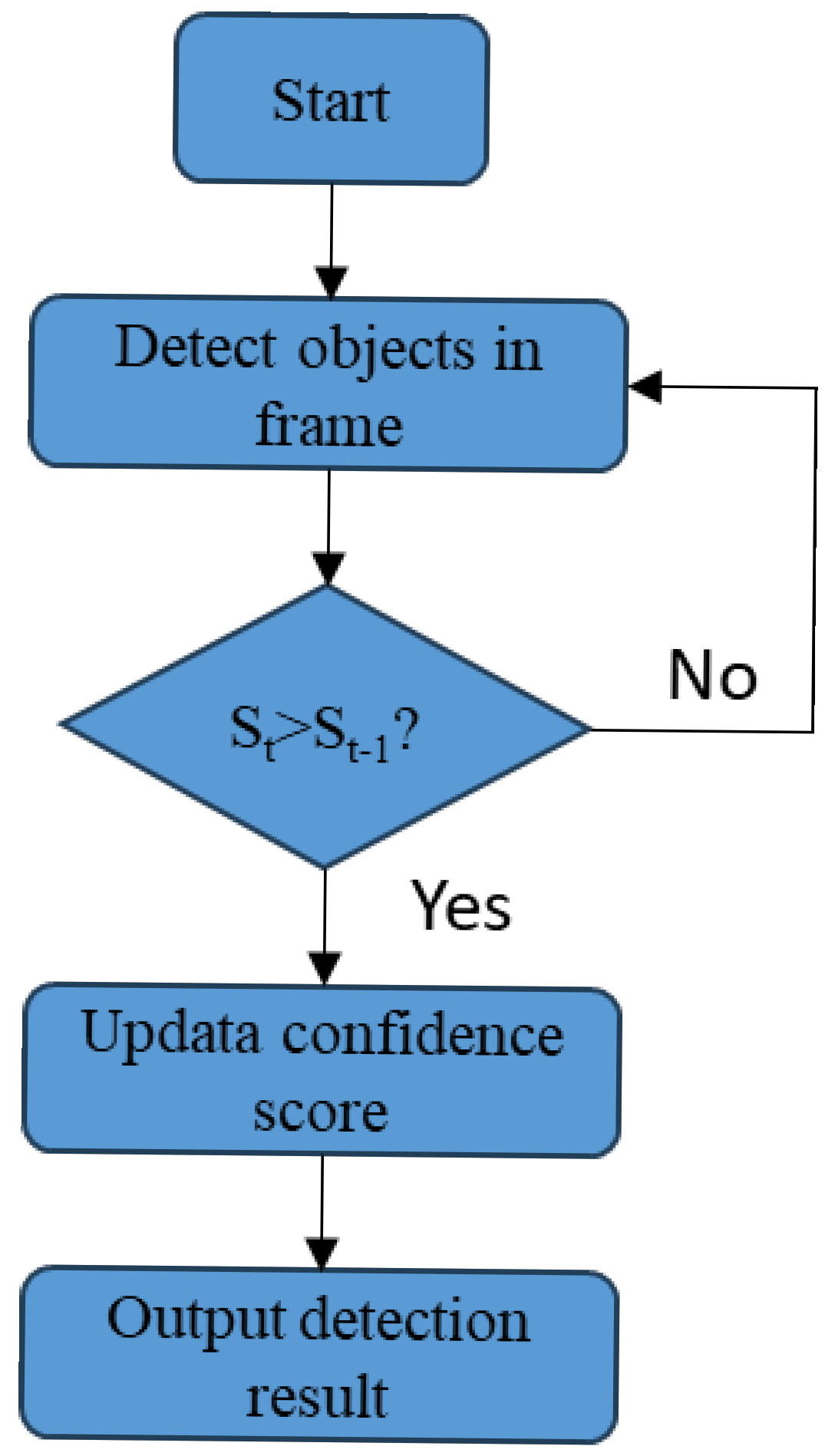

4.2.2. Real-Time Biomass Calculation Architecture: Spatial Scaling Correction and Multi-Frame Confidence Fusion

- (1) Real-time spatial scale reconstruction utilizing visual sensors

- (2) Comprehensive measurement method for crab classification with multi-frame confidence dynamic optimization
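The two steps listed above can be illustrated with a small sketch. It assumes that the cm-per-pixel scale varies with image row and is known at a few calibration rows, and that per-frame width estimates are combined by a confidence-weighted mean. Piecewise-linear interpolation is used here as a simpler stand-in for the spline interpolation named in the paper; all names, thresholds, and calibration values are hypothetical.

```python
from bisect import bisect_right


def scale_at(row_px, calib):
    """Return the cm-per-pixel scale at an image row.

    calib is a list of (row_px, cm_per_px) pairs sorted by row.
    Values are linearly interpolated and clamped outside the calibrated range.
    """
    rows = [r for r, _ in calib]
    if row_px <= rows[0]:
        return calib[0][1]
    if row_px >= rows[-1]:
        return calib[-1][1]
    i = bisect_right(rows, row_px)
    (r0, s0), (r1, s1) = calib[i - 1], calib[i]
    t = (row_px - r0) / (r1 - r0)
    return s0 + t * (s1 - s0)


def fuse_width(observations, calib, min_conf=0.5):
    """Confidence-weighted mean carapace width (cm) across frames.

    observations is a list of (width_px, row_px, confidence) for one track.
    Detections below min_conf are ignored; returns None if none remain.
    """
    num = 0.0
    den = 0.0
    for width_px, row_px, conf in observations:
        if conf < min_conf:
            continue
        num += conf * width_px * scale_at(row_px, calib)
        den += conf
    return num / den if den > 0 else None


# Hypothetical calibration: scale shrinks toward the bottom of the image.
calib = [(0, 0.05), (400, 0.04), (800, 0.03)]
track = [(300, 200, 0.9), (340, 400, 0.6), (100, 600, 0.2)]
fused_cm = fuse_width(track, calib)
```

Weighting by detection confidence lets sharp, unoccluded frames dominate the estimate, while the low-confidence third observation is discarded entirely.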

4.2.3. Validation of Performance for the Co-Optimization of Dynamic Scaling and EMA Filtering in Crab Sorting Weight Estimate

4.3. Rotational Smoothing and Local Motion Compensation Together Enhance Target Tracking Stability in Aquatic Sorting Environments

5. Discussion

5.1. The Effect of Different Interpolation Algorithms on Dynamic Scale Construction

5.2. Impact Analysis of Enhanced Detection Accuracy of Portunus trituberculatus on Yield Estimation and Fisheries Resource Management

5.3. Multidimensional Collaborative Enhancement Strategies for High-Density Portunus trituberculatus Sorting and Detection

5.4. Prospects for Enhancement and Research Outlook

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Module | FocalModulation | ASF-P2 | DFDM | P/% | R/% | mAP@50/% | mAP@50–95/% | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| N1 | | | | 92.3 ± 0.13 | 94.1 ± 0.05 | 97.8 ± 0.08 | 74.1 ± 0.02 | 6.6 |
| N2 | √ | | | 93.6 | 94.0 | 97.7 | 74.3 | 6.6 |
| N3 | | √ | | 93.0 | 93.8 | 97.9 | 75.0 | 7.1 |
| N4 | | | √ | 94.1 | 92.9 | 97.9 | 74.3 | 6.6 |
| N5 | √ | √ | | 93.8 | 93.2 | 97.7 | 74.2 | 7.2 |
| N6 | | √ | √ | 94.6 ± 0.11 | 93.4 ± 0.03 | 98.0 ± 0.06 | 74.4 ± 0.03 | 7.2 |

| Models | P/% | R/% | mAP@50/% | mAP@50–95/% | GFLOPs | Detection speed (FPS) |
|---|---|---|---|---|---|---|
| Rotated Faster R-CNN | 88.3 | 87.0 | 90.6 | 85.0 | 68.4 | 8.7 |
| RetinaNet (CSL-Based) | 88.5 | 87.2 | 90.7 | 85.3 | 81.5 | 272.2 |
| YOLOv5n-obb | 89.6 | 88.7 | 95.7 | 70.6 | 6.0 | 203.0 |
| YOLOv6n-obb | 88.7 | 88.5 | 94.8 | 68.0 | 11.6 | 105.4 |
| YOLOv8n-obb | 91.8 | 91.6 | 96.9 | 72.7 | 7.1 | 193.2 |
| YOLOv10n-obb | 91.9 | 91.7 | 96.7 | 73.1 | 6.8 | 201.5 |
| YOLOv11n-obb | 92.3 | 94.1 | 97.8 | 74.1 | 6.6 | 198.6 |
| YOLO-DFAM | 94.6 | 93.4 | 98.0 | 74.4 | 7.2 | 180.3 |
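As a quick arithmetic check, the precision values in the comparison table can be used to recompute the 2.8-point precision gain stated in the Introduction for the three detectors named there. The snippet below only restates table values; the variable names are illustrative.

```python
# Precision (P/%) values copied from the model comparison table.
precision = {
    "Rotated Faster R-CNN": 88.3,
    "RetinaNet (CSL-Based)": 88.5,
    "YOLOv8n-obb": 91.8,
    "YOLO-DFAM": 94.6,
}

ours = precision["YOLO-DFAM"]

# Gain of YOLO-DFAM over each baseline, in percentage points.
gains = {
    name: round(ours - p, 1)
    for name, p in precision.items()
    if name != "YOLO-DFAM"
}

smallest_gain = min(gains.values())
for name, gain in gains.items():
    print(f"{name}: +{gain} points")
```

The smallest gain is the one over YOLOv8n-obb, so the 2.8-point figure is a lower bound across the three detectors listed in the Introduction.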

| Metric | Sample Size (N) | Carapace Width MAE | Mean Relative Error (Weight Prediction) | Max Relative Error (Weight Prediction) | Min Relative Error (Weight Prediction) | 95% Confidence Interval |
|---|---|---|---|---|---|---|
| Value | 100 | 0.18 cm | 4.7% | 5.4% | 1.2% | [3.8%, 5.6%] |

| Improvement Strategies | Same Time Effect | Clarification |

|---|---|---|

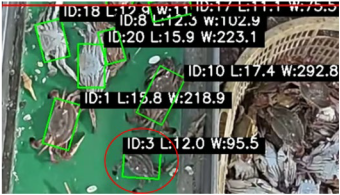

| Unchanged |  | The preliminary tracking data indicated that smaller Portunus trituberculatus specimens inside the red-circled regions were not monitored, and the carapace width and weight measurements linked to other identifiers were inconsistent. |

| Dynamic confidence thresholding, a multi-frame confidence update mechanism, and local optical flow compensation were introduced. |  | Following the enhancements, instances of missed detections and discontinuities in trajectories were rectified. Nonetheless, angular deviation and irregular variations in detection box dimensions were not addressed. |

| An exponential weighted moving average (EMA) filtering mechanism was introduced. |  | The erratic fluctuations in detection box size were resolved, but when Portunus trituberculatus rotates, a delay in the adjustment of the detection angle occurs. |

| Continuous frame smoothing technology was introduced. |  | The delay in detection-angle adjustment during Portunus trituberculatus rotation has been resolved. |
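The EMA filtering and angle smoothing described in the table can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: the class name and the smoothing factor `alpha` are hypothetical, and the box angle is assumed to be in radians with a period of π, as is common for oriented bounding boxes.

```python
import math


class BoxSmoother:
    """EMA smoothing of oriented-box width, height, and angle for one track."""

    def __init__(self, alpha=0.3, period=math.pi):
        self.alpha = alpha      # weight given to the newest observation
        self.period = period    # angle period (assumed pi for oriented boxes)
        self.state = None       # (width, height, angle) after smoothing

    def update(self, width, height, angle):
        if self.state is None:
            self.state = (width, height, angle % self.period)
            return self.state
        sw, sh, sa = self.state
        a = self.alpha
        # Plain EMA on the box dimensions.
        sw = a * width + (1 - a) * sw
        sh = a * height + (1 - a) * sh
        # Shortest signed angular difference, so the filter does not
        # swing the long way round when the angle wraps.
        half = self.period / 2
        diff = (angle - sa + half) % self.period - half
        sa = (sa + a * diff) % self.period
        self.state = (sw, sh, sa)
        return self.state


smoother = BoxSmoother(alpha=0.5)
smoother.update(100.0, 50.0, 0.2)
w, h, theta = smoother.update(120.0, 50.0, math.pi - 0.1)
```

In the second update the new angle is only 0.3 rad away from the previous one across the wrap point, so the smoothed angle moves to 0.05 rad rather than jumping toward the middle of the range as a naive average would. A larger `alpha` reduces lag during rotation at the cost of more jitter.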

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, P.; Zhang, S.; Zheng, H.; Fan, X.; Shi, Y.; Wu, Z.; Zhang, H. YOLO-DFAM-Based Onboard Intelligent Sorting System for Portunus trituberculatus. Fishes 2025, 10, 364. https://doi.org/10.3390/fishes10080364
