An Inexpensive 3D Camera System Based on a Completely Synchronized Stereo Camera, Open-Source Software, and a Raspberry Pi for Accurate Fish Size, Position, and Swimming Speed

Abstract

1. Introduction

2. Materials and Methods

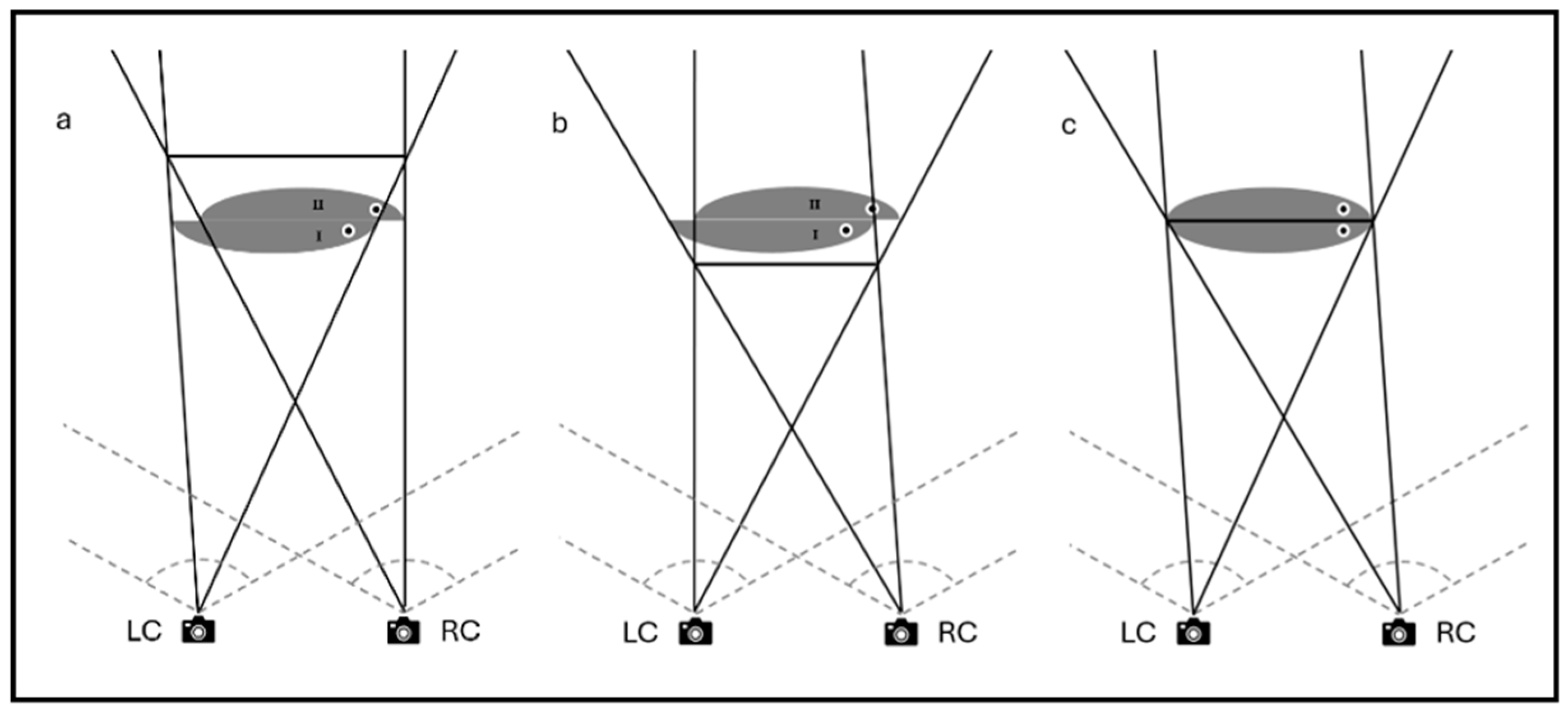

2.1. Principles of 3D Visualization and Calibration

2.2. Camera Setup

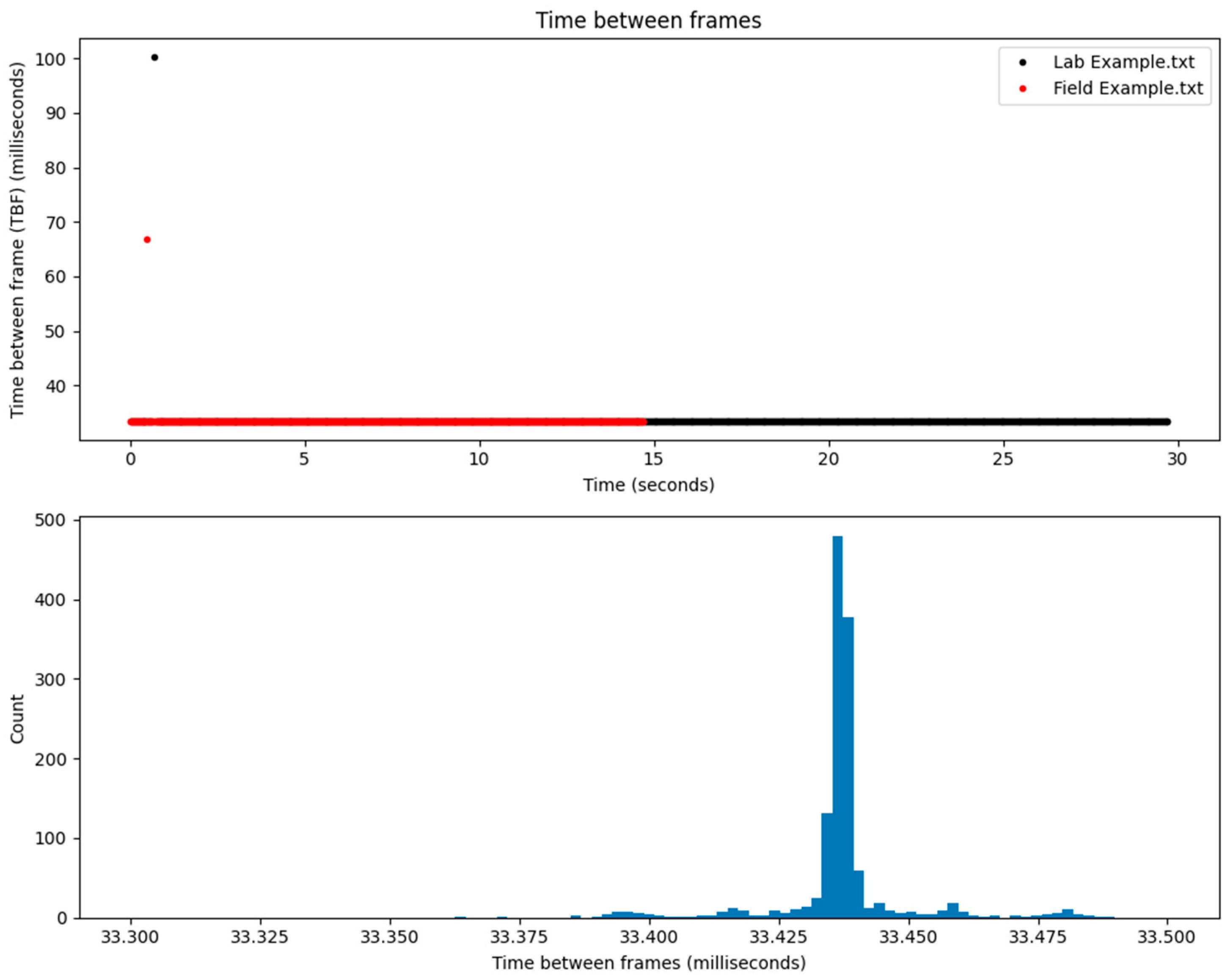

2.3. Footage Capturing and Processing

2.4. Calibration in VidSync

3. Results

3.1. Measurement Tests

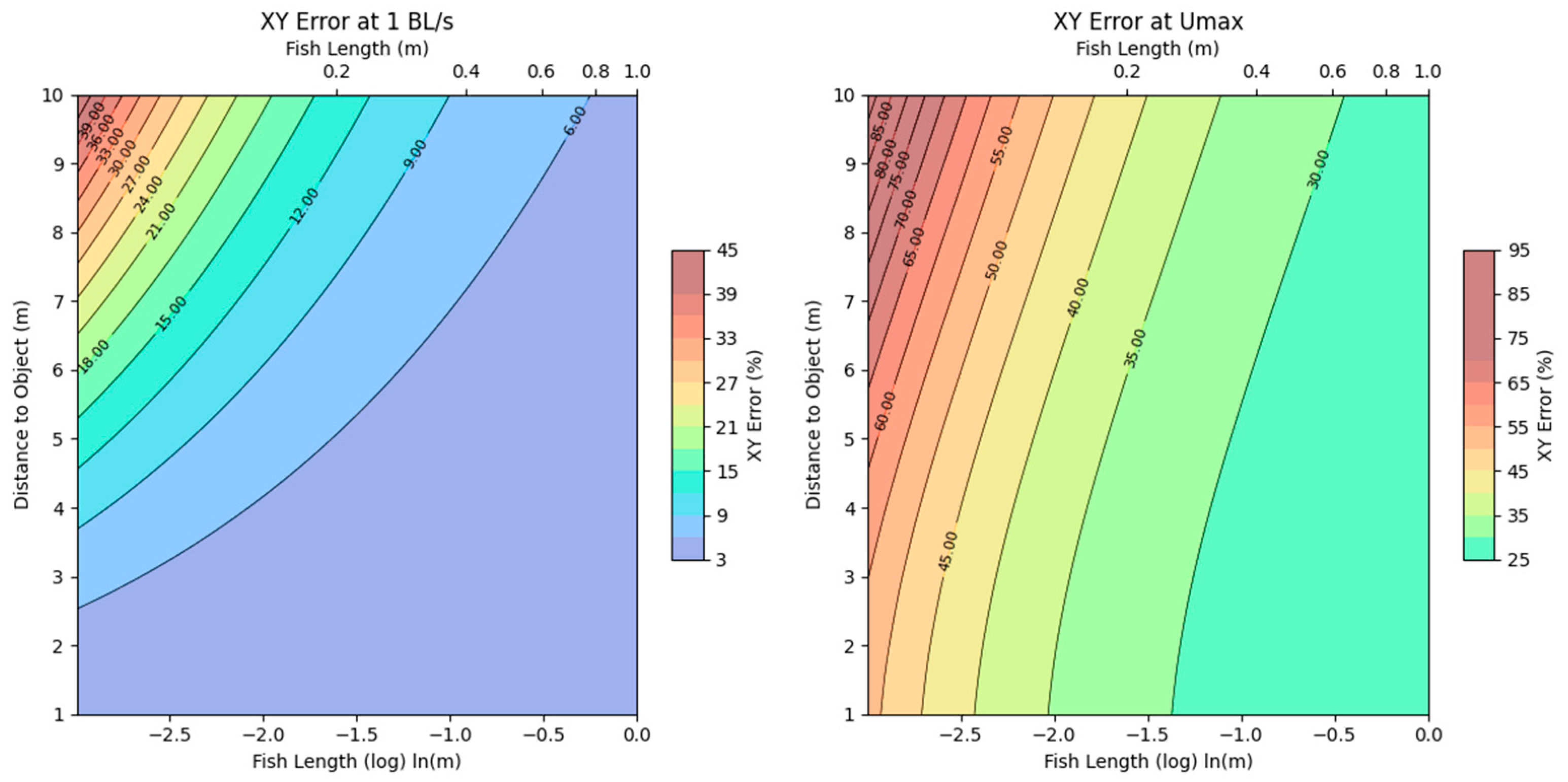

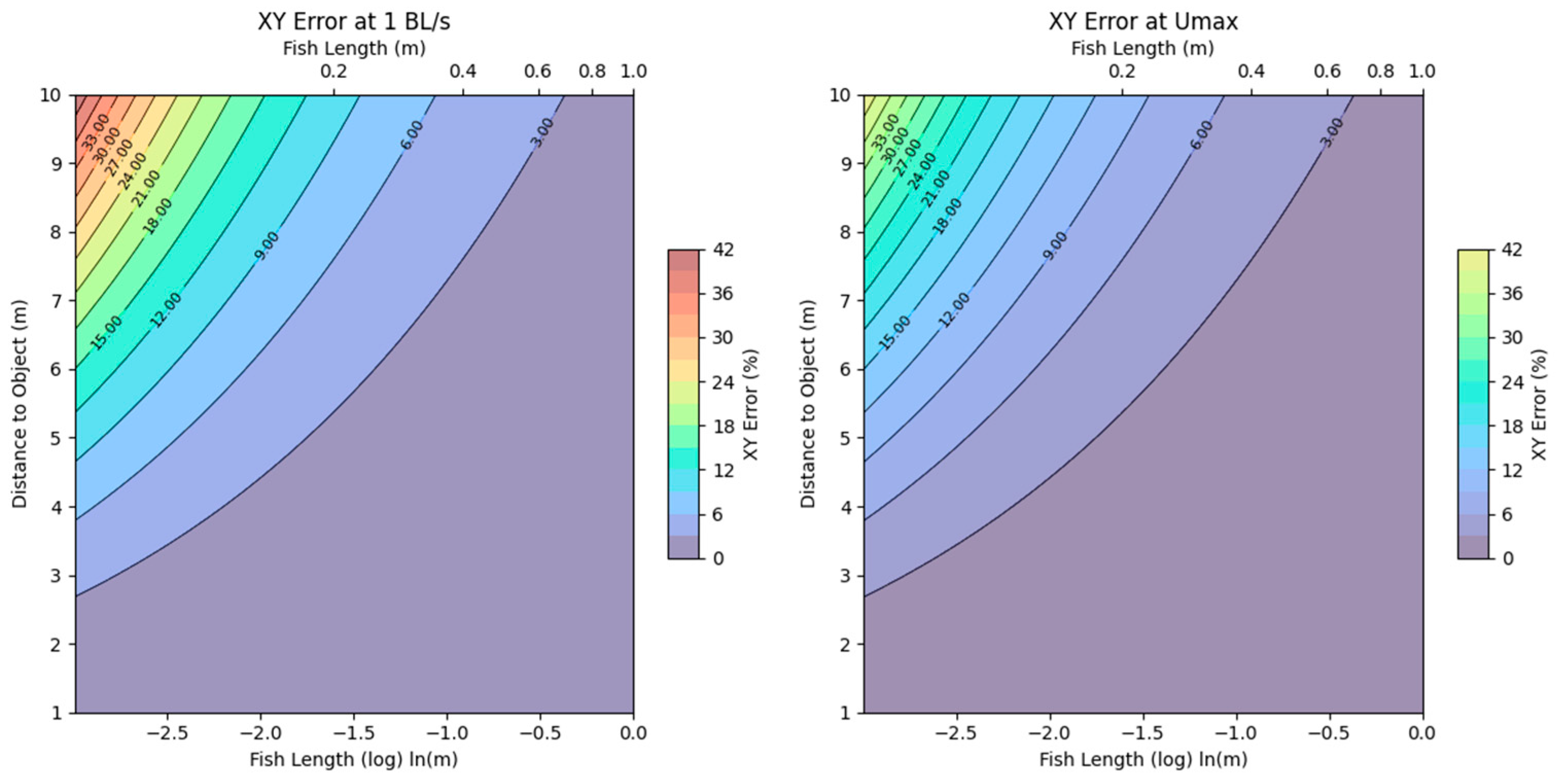

3.2. Camera Error Estimations

4. Discussion

4.1. Improved Accuracy and Cost-Effectiveness

4.2. Remote Access and Control

4.3. Usability

4.4. Future Development and Perspectives

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mittún, Ó.a.F.; Andersen, L.E.J.; Svendsen, M.B.S.; Steffensen, J.F. An Inexpensive 3D Camera System Based on a Completely Synchronized Stereo Camera, Open-Source Software, and a Raspberry Pi for Accurate Fish Size, Position, and Swimming Speed. Fishes 2025, 10, 139. https://doi.org/10.3390/fishes10040139
