Sciences of Observation

Abstract

1. Introduction

- What kinds of systems exchange energy for information?

- How does this exchange work?

- What kinds of information do such systems exchange energy for?

- How is this information stored?

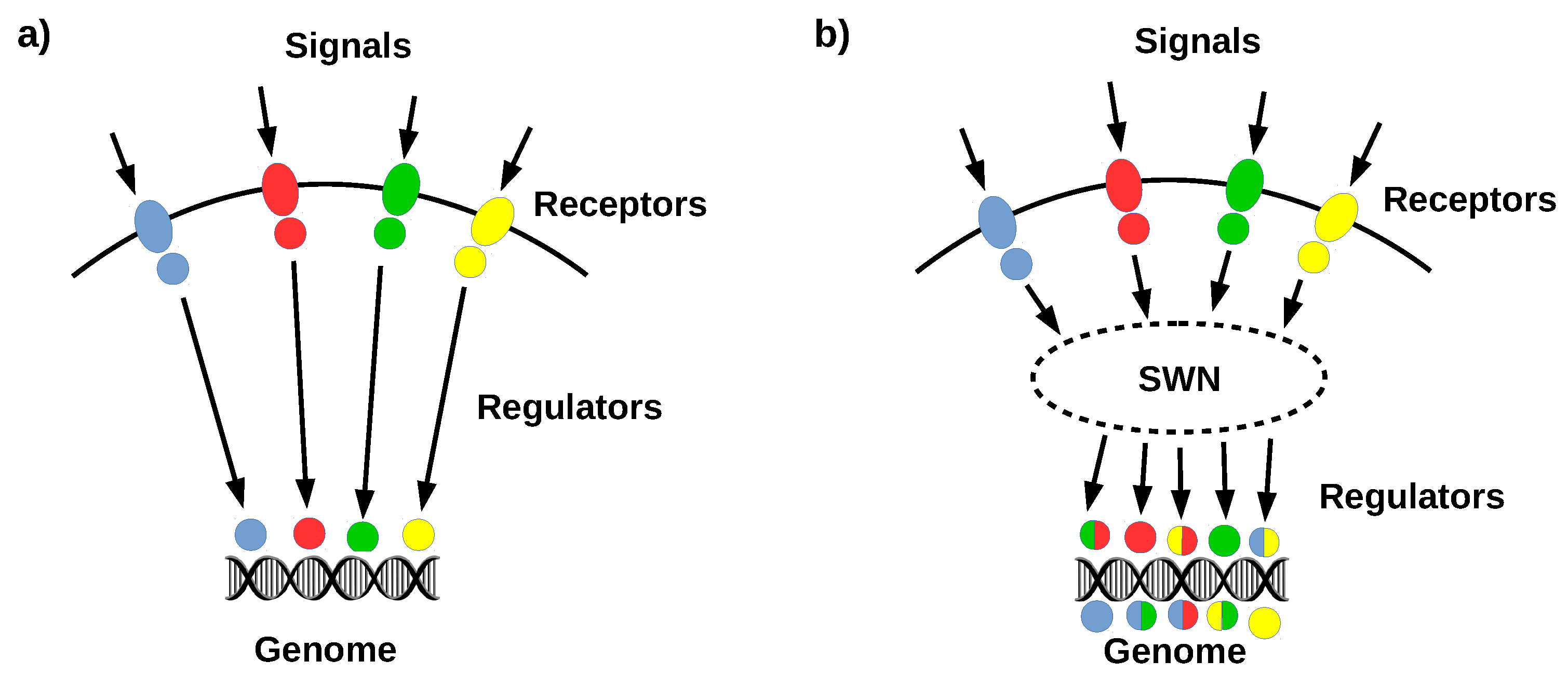

- What is it used for?

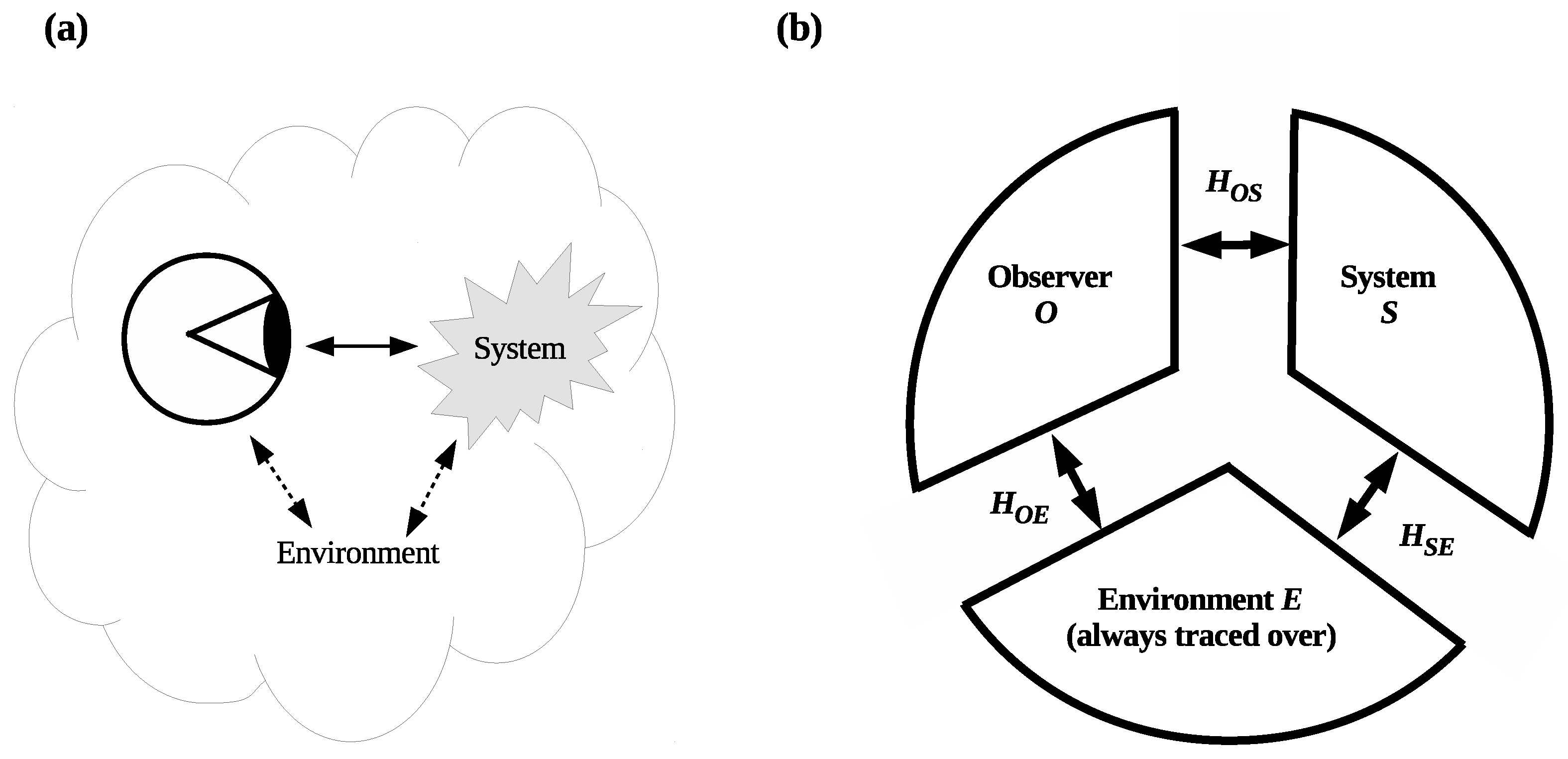

2. What Is an Observer?

Here are some words which, however legitimate and necessary in application, have no place in a formulation with any pretension to physical precision: system, apparatus, environment, microscopic, macroscopic, reversible, irreversible, observable, information, measurement.

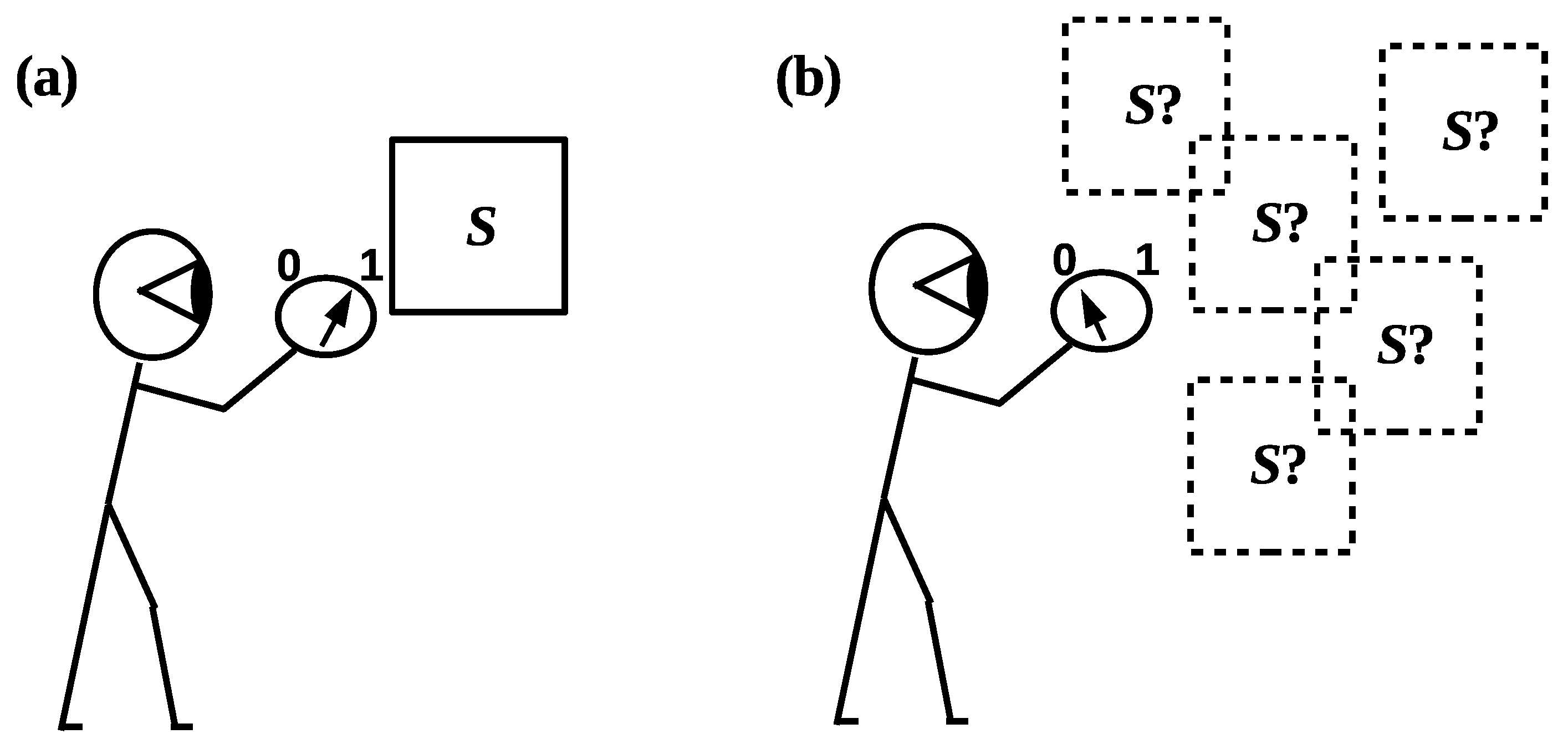

3. What Is Observed?

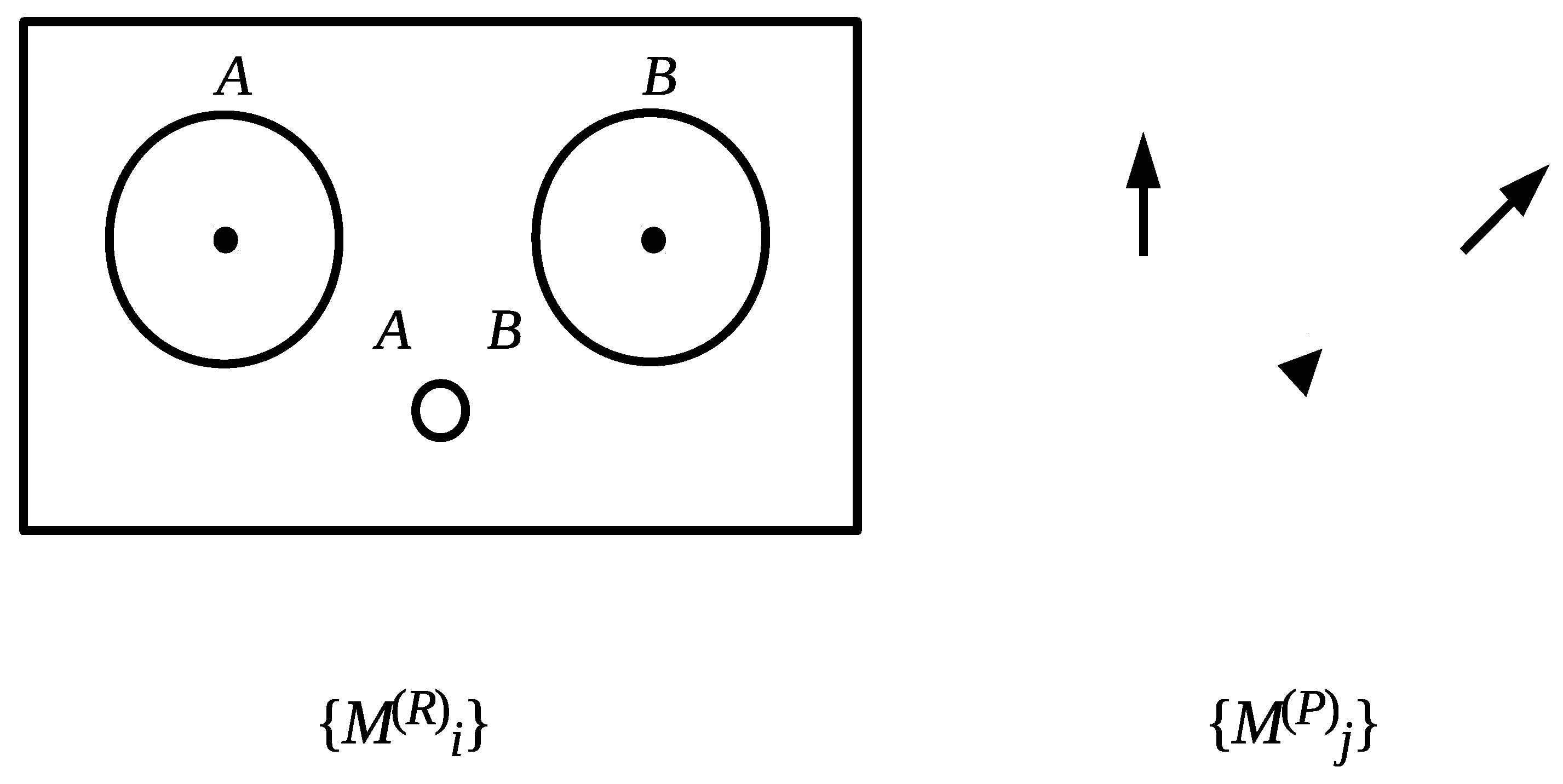

4. What Information Is Collected?

- “Context-free” observers that waste their observational overhead.

- “Context-sensitive” observers that use (at least some of) their observational overhead.

5. What Is Memory?

6. What Is Observation for?

What exactly qualifies some physical systems to play the role of ‘measurer’? Was the wavefunction of the world waiting to jump for thousands of millions of years until a single-celled living creature appeared? Or did it have to wait a little longer, for some better qualified system … with a PhD?

For any observer, the goal of observation is to continue to observe.

7. A Spectrum of Observers

8. An Observer-Based Ontology

9. Conclusions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial intelligence |
| CA | Conscious agent |
| FAAP | For all practical purposes |
| GOFAI | Good old fashioned AI |
| IGUS | Information gathering and using system |
| ITP | Interface theory of perception |
| LoT | Language of Thought |
| QBism | Quantum Bayesianism |
| SETI | Search for extra-terrestrial intelligence |

References

- Landsman, N.P. Between classical and quantum. In Handbook of the Philosophy of Science: Philosophy of Physics; Butterfield, J., Earman, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2007; pp. 417–553. [Google Scholar]

- Schlosshauer, M. Decoherence and the Quantum to Classical Transition; Springer: Berlin, Germany, 2007. [Google Scholar]

- Ashby, W.R. Introduction to Cybernetics; Chapman and Hall: London, UK, 1956. [Google Scholar]

- Weiss, P. One plus one does not equal two. In The Neurosciences: A Study Program; Gardner, C., Quarton, G.C., Melnechuk, T., Schmitt, F.O., Eds.; Rockefeller University Press: New York, NY, USA, 1967; pp. 801–821. [Google Scholar]

- Von Foerster, H. Objects: Tokens for (eigen-) behaviors. ASC Cybern. Forum 1976, 8, 91–96. [Google Scholar]

- Rosen, R. On information and complexity. In Complexity, Language, and Life: Mathematical Approaches; Casti, J.L., Karlqvist, A., Eds.; Springer: Berlin, Germany, 1986; pp. 174–196. [Google Scholar]

- Rössler, O.E. Endophysics. In Real Brains—Artificial Minds; Casti, J., Karlquist, A., Eds.; North-Holland: New York, NY, USA, 1987; pp. 25–46. [Google Scholar]

- Kampis, G. Explicit epistemology. Revue de la Pensee d’Aujourd’hui 1996, 24, 264–275. [Google Scholar]

- Pattee, H.H. The physics of symbols: Bridging the epistemic cut. Biosystems 2001, 60, 5–21. [Google Scholar] [CrossRef]

- Dodig Crnkovic, G. Information, computation, cognition. Agency-based hierarchies of levels. In Fundamental Issues of Artificial Intelligence; Múller, V.C., Ed.; Springer: Berlin, Germany, 2016; pp. 141–159. [Google Scholar]

- Dodig Crnkovic, G. Nature as a network of morphological infocomputational processes for cognitive agents. Eur. Phys. J. Spec. Top. 2017, 226, 181–195. [Google Scholar] [CrossRef]

- Boltzmann, L. Lectures on Gas Theory; Dover Press: New York, NY, USA, 1995. First published in 1896. [Google Scholar]

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 1961, 5, 183–195. [Google Scholar] [CrossRef]

- Landauer, R. Information is a physical entity. Physical A 1999, 263, 63–67. [Google Scholar] [CrossRef]

- Moore, E.F. Gedankenexperiments on sequential machines. In Autonoma Studies; Shannon, C.W., McCarthy, J., Eds.; Princeton University Press: Princeton, NJ, USA, 1956; pp. 129–155. [Google Scholar]

- Fields, C. Building the observer into the system: Toward a realistic description of human interaction with the world. Systems 2016, 4, 32. [Google Scholar] [CrossRef]

- Koenderink, J. The all-seeing eye. Perception 2014, 43, 1–6. [Google Scholar] [CrossRef] [PubMed]

- Bohr, N. Atomic Physics and Human Knowledge; Wiley: New York, NY, USA, 1958. [Google Scholar]

- von Neumann, J. The Mathematical Foundations of Quantum Mechanics; Princeton University Press: Princeton, NJ, USA, 1955. [Google Scholar]

- Zeh, D. On the interpretation of measurement in quantum theory. Found. Phys. 1970, 1, 69–76. [Google Scholar] [CrossRef]

- Zeh, D. Toward a quantum theory of observation. Found. Phys. 1973, 3, 109–116. [Google Scholar] [CrossRef]

- Zurek, W.H. Pointer basis of the quantum apparatus: Into what mixture does the wave packet collapse? Phys. Rev. D 1981, 24, 1516–1525. [Google Scholar] [CrossRef]

- Zurek, W.H. Environment-induced superselection rules. Phys. Rev. D 1982, 26, 1862–1880. [Google Scholar] [CrossRef]

- Joos, E.; Zeh, D. The emergence of classical properties through interaction with the environment. Z. Phys. B Condens. Matter 1985, 59, 223–243. [Google Scholar] [CrossRef]

- Gisin, N. Non-realism: Deep thought or a soft option? Found. Phys. 2012, 42, 80–85. [Google Scholar] [CrossRef]

- Hofer-Szabó, G. How human and nature shake hands: The role of no-conspiracy in physical theories. Stud. Hist. Philos. Mod. Phys. 2017, 57, 89–97. [Google Scholar] [CrossRef]

- Gell-Mann, M.; Hartle, J.B. Quantum mechanics in the light of quantum cosmology. In Complexity, Entropy, and the Physics of Information; Zurek, W., Ed.; CRC Press: Boca Raton, FL, USA, 1989; pp. 425–458. [Google Scholar]

- Ollivier, H.; Poulin, D.; Zurek, W.H. Environment as a witness: Selective proliferation of information and emergence of objectivity in a quantum universe. Phys. Rev. A 2005, 72, 042113. [Google Scholar] [CrossRef]

- Blume-Kohout, R.; Zurek, W.H. Quantum Darwinism: Entanglement, branches, and the emergent classicality of redundantly stored quantum information. Phys. Rev. A 2006, 73, 062310. [Google Scholar] [CrossRef]

- Zurek, W.H. Quantum Darwinism. Nat. Phys. 2009, 5, 181–188. [Google Scholar] [CrossRef]

- Bohr, N. The quantum postulate and the recent development of atomic theory. Nature 1928, 121, 580–590. [Google Scholar] [CrossRef]

- Wheeler, J.A. Information, physics, quantum: The search for links. In Complexity, Entropy, and the Physics of Information; Zurek, W., Ed.; CRC Press: Boca Raton, FL, USA, 1989; pp. 3–28. [Google Scholar]

- Rovelli, C. Relational quantum mechanics. Int. J. Theory Phys. 1996, 35, 1637–1678. [Google Scholar] [CrossRef]

- Fuchs, C. QBism, the perimeter of Quantum Bayesianism. arXiv, 2010; arXiv:1003.5201v1. [Google Scholar]

- Zanardi, P. Virtual quantum subsystems. Phys. Rev. Lett. 2001, 87, 077901. [Google Scholar] [CrossRef] [PubMed]

- Zanardi, P.; Lidar, D.A.; Lloyd, S. Quantum tensor product structures are observable-induced. Phys. Rev. Lett. 2004, 92, 060402. [Google Scholar] [CrossRef] [PubMed]

- Dugić, M.; Jeknić, J. What is “system”: Some decoherence-theory arguments. Int. J. Theory Phys. 2006, 45, 2249–2259. [Google Scholar] [CrossRef]

- Dugić, M.; Jeknić-Dugić, J. What is “system”: The information-theoretic arguments. Int. J. Theory Phys. 2008, 47, 805–813. [Google Scholar] [CrossRef]

- De la Torre, A.C.; Goyeneche, D.; Leitao, L. Entanglement for all quantum states. Eur. J. Phys. 2010, 31, 325–332. [Google Scholar] [CrossRef]

- Harshman, N.L.; Ranade, K.S. Observables can be tailored to change the entanglement of any pure state. Phys. Rev. A 2011, 84, 012303. [Google Scholar] [CrossRef]

- Thirring, W.; Bertlmann, R.A.; Köhler, P.; Narnhofer, H. Entanglement or separability: The choice of how to factorize the algebra of a density matrix. Eur. Phys. J. D 2011, 64, 181–196. [Google Scholar] [CrossRef]

- Dugić, M.; Jeknić-Dugić, J. Parallel decoherence in composite quantum systems. Pramana 2012, 79, 199–209. [Google Scholar] [CrossRef]

- Fields, C. A model-theoretic interpretation of environment-induced superselection. Int. J. Gen. Syst. 2012, 41, 847–859. [Google Scholar] [CrossRef]

- Hensen, B.; Bernien, H.; Dreau, A.E.; Reiserer, A.; Kalb, N.; Blok, M.S.; Ruitenberg, J.; Vermeulen, R.F.L.; Schouten, R.N.; Abellán, C.; et al. Loophole-free Bell inequality violation using electron spins separated by 1.3 kilometres. Nature 2015, 526, 682–686. [Google Scholar] [CrossRef] [PubMed]

- Giustina, M.; Versteegh, M.A.M.; Wengerowsky, S.; Handsteiner, J.; Hochrainer, A.; Phelan, K.; Steinlechner, F.; Kofler, J.; Larsson, J.-Å.; Abellán, C.; et al. A significant-loophole-free test of Bell’s theorem with entangled photons. Phys. Rev. Lett. 2015, 115, 250401. [Google Scholar] [CrossRef] [PubMed]

- Shalm, L.K.; Meyer-Scott, E.; Christensen, B.G.; Bierhorst, P.; Wayne, M.A.; Stevens, M.J.; Gerrits, T.; Glancy, S.; Hamel, D.R.; Allman, M.S.; et al. A strong loophole-free test of local realism. Phys. Rev. Lett. 2015, 115, 250402. [Google Scholar] [CrossRef] [PubMed]

- Peres, A. Unperformed experiments have no results. Am. J. Phys. 1978, 46, 745–747. [Google Scholar] [CrossRef]

- Jennings, D.; Leifer, M. No return to classical reality. Contemp. Phys. 2016, 57, 60–82. [Google Scholar] [CrossRef]

- Zurek, W.H. Decoherence, einselection, and the quantum origins of the classical. Rev. Mod. Phys. 2003, 75, 715–775. [Google Scholar] [CrossRef]

- Bell, J.S. Against measurement. Phys. World 1990, 3, 33–41. [Google Scholar] [CrossRef]

- Clifton, R.; Bub, J.; Halvorson, H. Characterizing quantum theory in terms of information-theoretic constraints. Found. Phys. 2003, 33, 1561–1591. [Google Scholar] [CrossRef]

- Bub, J. Why the quantum? Stud. Hist. Philos. Mod. Phys. 2004, 35, 241–266. [Google Scholar] [CrossRef]

- Lee, J.-W. Quantum mechanics emerges from information theory applied to causal horizons. Found. Phys. 2011, 41, 744–753. [Google Scholar] [CrossRef]

- Fuchs, C. Quantum mechanics as quantum information (and only a little more). arXiv, 2002; arXiv:quant-ph/0205039v1. [Google Scholar]

- Chiribella, G.; D’Ariano, G.M.; Perinotti, P. Informational derivation of quantum theory. Phys. Rev. A 2011, arXiv:1011.6451v384, 012311. [Google Scholar] [CrossRef]

- Chiribella, G.; D’Ariano, G.M.; Perinotti, P. Quantum theory, namely the pure and reversible theory of information. Entropy 2012, 14, 1877–1893. [Google Scholar] [CrossRef]

- Rau, J. Measurement-based quantum foundations. Found. Phys. 2011, 41, 380–388. [Google Scholar] [CrossRef]

- Leifer, M.S.; Spekkens, R.W. Formulating quantum theory as a causally neutral theory of Bayesian inference. arXiv, 2011; arXiv:1107.5849v1. [Google Scholar]

- D’Ariano, G.M. Physics without physics: The power of information-theoretical principles. Int. J. Theory Phys. 2017, 56, 97–128. [Google Scholar] [CrossRef]

- Goyal, P. Information physics—Towards a new conception of physical reality. Information 2012, 3, 567–594. [Google Scholar] [CrossRef]

- Fields, C.; Hoffman, D.D.; Prakash, C.; Prentner, R. Eigenforms, interfaces and holographic encoding: Toward an evolutionary account of objects and spacetime. Construct. Found. 2017, 12, 265–291. [Google Scholar]

- Zurek, W.H. Decoherence, einselection and the existential interpretation (the rough guide). Philos. Trans. R. Soc. A 1998, 356, 1793–1821. [Google Scholar]

- Zurek, W.H. Probabilities from entanglement, Born’s rule pk=|ψk|2 from envariance. Phys. Rev. A 2005, 71, 052105. [Google Scholar] [CrossRef]

- Tegmark, M. Importance of quantum decoherence in brain processes. Phys. Rev. E 2000, 61, 4194–4206. [Google Scholar] [CrossRef]

- Tegmark, M. How unitary cosmology generalizes thermodynamics and solves the inflationary entropy problem. Phys. Rev. D 2012, 85, 123517. [Google Scholar] [CrossRef]

- Fields, C. If physics is an information science, what is an observer? Information 2012, 3, 92–123. [Google Scholar] [CrossRef]

- Bronfenbrenner, U. Toward an experimental ecology of human development. Am. Psychol. 1977, 32, 513–531. [Google Scholar] [CrossRef]

- Barwise, J.; Seligman, J. Information Flow: The Logic of Distributed Systems; (Cambridge Tracts in Theoretical Computer Science 44); Cambridge University Press: Cambridge, UK, 1997. [Google Scholar]

- Scholl, B.J. Object persistence in philosophy and psychology. Mind Lang. 2007, 22, 563–591. [Google Scholar] [CrossRef]

- Martin, A. The representation of object concepts in the brain. Annu. Rev. Psychol. 2007, 58, 25–45. [Google Scholar] [CrossRef] [PubMed]

- Fields, C. The very same thing: Extending the object token concept to incorporate causal constraints on individual identity. Adv. Cognit. Psychol. 2012, 8, 234–247. [Google Scholar] [CrossRef]

- Keifer, M.; Pulvermüller, F. Conceptual representations in mind and brain: Theoretical developments, current evidence, and future directions. Cortex 2012, 48, 805–825. [Google Scholar] [CrossRef] [PubMed]

- Fields, C. Visual re-identification of individual objects: A core problem for organisms and AI. Cognit. Process. 2016, 17, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Baillargeon, R. Innate ideas revisited: For a principle of persistence in infants’ physical reasoning. Perspect. Psychol. Sci. 2008, 3, 2–13. [Google Scholar] [CrossRef] [PubMed]

- Rakison, D.H.; Yermoleva, Y. Infant categorization. WIRES Cognit. Sci. 2010, 1, 894–905. [Google Scholar] [CrossRef] [PubMed]

- Polanyi, M. Life’s irreducible structure. Science 1968, 160, 1308–1312. [Google Scholar] [CrossRef] [PubMed]

- Bateson, G. Steps to an Ecology of Mind: Collected Essays in Anthropology, Psychiatry, Evolution, and Epistemology; Jason Aronson: Northvale, NJ, USA, 1972. [Google Scholar]

- McCarthy, J.; Hayes, P.J. Some philosophical problems from the standpoint of artificial intelligence. In Machine Intelligence; Michie, D., Meltzer, B., Eds.; Edinburgh University Press: Edinburgh, UK, 1969; Volume 4, pp. 463–502. [Google Scholar]

- Fodor, J.A. Modules, frames, fridgeons, sleeping dogs and the music of the spheres. In The Robot’s Dilemma: The Frame Problem in Artificial Intelligence; Pylyshyn, Z., Ed.; Ablex: Norwood, NJ, USA, 1987; pp. 139–149. [Google Scholar]

- Fields, C. How humans solve the frame problem. J. Exp. Theory Artif. Intell. 2013, 25, 441–456. [Google Scholar] [CrossRef]

- Lyubova, N.; Ivaldi, S.; Filliat, D. From passive to interactive object learning and recognition through self-identification on a humanoid robot. Auton. Robots 2016, 40, 33–57. [Google Scholar] [CrossRef]

- Sowa, J.F. Semantic networks. In Encyclopedia of Cognitive Science; Nadel, L., Ed.; John Wiley: New York, NY, USA, 2006. [Google Scholar]

- Hampton, J.A. Categories, prototypes and exemplars. In Routledge Handbook of Semantics; Reimer, N., Ed.; Routledge: Abington, UK, 2016; Chapter 7. [Google Scholar]

- Johnson, S.P.; Hannon, E.E. Perceptual development. In Handbook of Child Psychology and Developmental Science, Volume 2: Cognitive Processes; Liben, L.S., Müller, U., Eds.; Wiley: Hoboken, NJ, USA, 2016; pp. 63–112. [Google Scholar]

- Clarke, A.; Tyler, L.K. Understanding what we see: How we derive meaning from vision. Trends Cognit. Sci. 2015, 19, 677–687. [Google Scholar] [CrossRef] [PubMed]

- Waxman, S.R.; Leddon, E.M. Early word learning and conceptual development: Everything had a name, and each name gave birth to a new thought. In The Wiley-Blackwell Handbook of Childhood Cognitive Development; Goswami, U., Ed.; Wiley-Blackwell: Malden, MA, USA, 2002; pp. 180–208. [Google Scholar]

- Pinker, S. Language as an adaptation to the cognitive niche. In Language Evolution; Christiansen, M.H., Kirby, S., Eds.; Oxford University Press: Oxford, UK, 2003; pp. 16–37. [Google Scholar]

- Ashby, F.G.; Maddox, W.T. Human category learning 2.0. Ann. N. Y. Acad. Sci. 2011, 1224, 147–161. [Google Scholar] [CrossRef] [PubMed]

- Bargh, J.A.; Ferguson, M.J. Beyond behaviorism: On the automaticity of higher mental processes. Psychol. Bull. 2000, 126, 925–945. [Google Scholar] [CrossRef] [PubMed]

- Ulrich, L.E.; Koonin, E.V.; Zhulin, I.B. One-component systems dominate signal transduction in prokaryotes. Trends Microbiol. 2005, 13, 52–56. [Google Scholar] [CrossRef] [PubMed]

- Ashby, M.K. Survey of the number of two-component response regulator genes in the complete and annotated genome sequences of prokaryotes. FEMS Microbiol. Lett. 2004, 231, 277–281. [Google Scholar] [CrossRef]

- Wadhams, G.H.; Armitage, J.P. Making sense of it all: Bacterial chemotaxis. Nat. Rev. Mol. Cell Biol. 2004, 5, 1024–1037. [Google Scholar] [CrossRef] [PubMed]

- Schuster, M.; Sexton, D.J.; Diggle, S.P.; Greenberg, E.P. Acyl-Homoserine Lactone quorum sensing: From evolution to application. Annu. Rev. Microbiol. 2013, 67, 43–63. [Google Scholar] [CrossRef] [PubMed]

- Logan, C.Y.; Nuss, R. The Wnt signalling pathway in development and disease. Annu. Rev. Cell. Dev. Biol. 2004, 20, 781–810. [Google Scholar] [CrossRef] [PubMed]

- Wagner, E.F.; Nebreda, A.R. Signal integration by JNK and p38 MAPK pathways in cancer development. Nat. Rev. Cancer 2009, 9, 537–549. [Google Scholar] [CrossRef] [PubMed]

- Agrawal, H. Extreme self-organization in networks constructed from gene expression data. Phys. Rev. Lett. 2002, 89, 268702. [Google Scholar] [CrossRef] [PubMed]

- Barabási, A.-L.; Oltvai, Z.N. Network biology: Understanding the cell’s functional organization. Nat. Rev. Genet. 2004, 5, 101–114. [Google Scholar] [CrossRef] [PubMed]

- Watts, D.J.; Strogatz, S.H. Collective dynamics of ‘small-world’ networks. Nature 1998, 393, 440–442. [Google Scholar] [CrossRef] [PubMed]

- Dzhafarov, E.N.; Kujala, J.V. Context-content systems of random variables: The Contextuality-by-Default theory. J. Math. Psychol. 2016, 74, 11–33. [Google Scholar] [CrossRef]

- Dzhafarov, E.N.; Kon, M. On universality of classical probability with contextually labeled random variables. J. Math. Psychol. 2016, 85, 17–24. [Google Scholar] [CrossRef]

- Pothos, E.M.; Busemeyer, J.R. Can quantum probability provide a new direction for cognitive modeling? Behav. Brain Sci. 2013, 36, 255–327. [Google Scholar] [CrossRef] [PubMed]

- Aerts, D.; Sozzo, S.; Veloz, T. Quantum structure in cognition and the foundations of human reasoning. Int. J. Theory Phys. 2015, 54, 4557–4569. [Google Scholar] [CrossRef]

- Bruza, P.D.; Kitto, K.; Ramm, B.J.; Sitbon, L. A probabilistic framework for analysing the compositionality ofconceptual combinations. J. Math. Psychol. 2015, 67, 26–38. [Google Scholar] [CrossRef]

- Emery, N.J. Cognitive ornithology: The evolution of avian intelligence. Philos. Trans. R. Soc. B 2006, 361, 23–43. [Google Scholar] [CrossRef] [PubMed]

- Godfrey-Smith, P. Other Minds: The Octopus, the Sea, and the Deep Origins of Consciousness; Farrar, Straus and Giroux: New York, NY, USA, 2016. [Google Scholar]

- Etienne, A.S.; Jeffery, K.J. Path integration in mammals. Hippocampus 2004, 14, 180–192. [Google Scholar] [CrossRef] [PubMed]

- Wiltschko, R.; Wiltschko, W. Avian navigation: From historical to modern concepts. Anim. Behav. 2003, 65, 257–272. [Google Scholar] [CrossRef]

- Klein, S.B. What memory is. WIRES Interdiscip. Rev. Cognit. Sci. 2015, 1, 1–38. [Google Scholar]

- Eichenbaum, H. Still searching for the engram. Learn. Behav. 2016, 44, 209–222. [Google Scholar] [CrossRef] [PubMed]

- Newell, A. Physical Symbol Systems. Cognit. Sci. 1980, 4, 135–183. [Google Scholar] [CrossRef]

- Markman, A.B.; Dietrich, E. In defense of representation. Cognit. Psychol. 2000, 40, 138–171. [Google Scholar] [CrossRef] [PubMed]

- Gibson, J.J. The Ecological Approach to Visual Perception; Houghton Mifflin: Boston, MA, USA, 1979. [Google Scholar]

- Dennett, D. Intentional systems. J. Philos. 1971, 68, 87–106. [Google Scholar] [CrossRef]

- Anderson, M.L. Embodied cognition: A field guide. Artif. Intell. 2003, 149, 91–130. [Google Scholar] [CrossRef]

- Froese, T.; Ziemke, T. Enactive artificial intelligence: Investigating the systemic organization of life and mind. Artif. Intell. 2009, 173, 466–500. [Google Scholar] [CrossRef]

- Chemero, A. Radical embodied cognitive science. Rev. Gen. Psychol. 2013, 17, 145–150. [Google Scholar] [CrossRef]

- Fodor, J. The Language of Thought; Harvard University Press: Cambridge, MA, USA, 1975. [Google Scholar]

- Dehaene, S.; Naccache, L. Towards a cognitive neuroscience of consciousness: Basic evidence and a workspace framework. Cognition 2001, 79, 1–37. [Google Scholar] [CrossRef]

- Baars, B.J. Global workspace theory of consciousness: Toward a cognitive neuroscience of human experience. Prog. Brain Res. 2005, 150, 45–53. [Google Scholar] [PubMed]

- Sporns, O.; Honey, C.J. Small worlds inside big brains. Proc. Natl. Acad. Sci. USA 2006, 111, 15220–15225. [Google Scholar] [CrossRef] [PubMed]

- Shanahan, M. The brain’s connective core and its role in animal cognition. Philos. Trans. R. Soc. B 2012, 367, 2704–2714. [Google Scholar] [CrossRef] [PubMed]

- Baars, B.J.; Franklin, S.; Ramsoy, T.Z. Global workspace dynamics: Cortical “binding and propagation’’ enables conscious contents. Front. Psychol. 2013, 4, 200. [Google Scholar] [CrossRef] [PubMed]

- Dehaene, S.; Charles, L.; King, J.-R.; Marti, S. Toward a computational theory of conscious processing. Curr. Opin. Neurobiol. 2014, 25, 76–84. [Google Scholar] [CrossRef] [PubMed]

- Ball, G.; Alijabar, P.; Zebari, S.; Tusor, N.; Arichi, T.; Merchant, N.; Robinson, E.C.; Ogundipe, E.; Ruekert, D.; Edwards, A.D.; et al. Rich-club organization of the newborn human brain. Proc. Natl. Acad. Sci. USA 2014, 111, 7456–7461. [Google Scholar] [CrossRef] [PubMed]

- Gao, W.; Alcauter, S.; Smith, J.K.; Gilmore, J.H.; Lin, W. Development of human brain cortical network architecture during infancy. Brain Struct. Funct. 2015, 220, 1173–1186. [Google Scholar] [CrossRef] [PubMed]

- Huang, H.; Shu, N.; Mishra, V.; Jeon, T.; Chalak, L.; Wang, Z.J.; Rollins, N.; Gong, G.; Cheng, H.; Peng, Y.; et al. Development of human brain structural networks through infancy and childhood. Cereb. Cortex 2015, 25, 1389–1404. [Google Scholar] [CrossRef] [PubMed]

- Fields, C.; Glazebrook, J.F. Disrupted development and imbalanced function in the global neuronal workspace: A positive-feedback mechanism for the emergence of ASD in early infancy. Cognit. Neurodyn. 2017, 11, 1–21. [Google Scholar] [CrossRef] [PubMed]

- Chater, N. The Mind Is Flat; Allen lane: London, UK, 2018. [Google Scholar]

- Friston, K.J.; Kiebel, S. Predictive coding under the free-energy principle. Philos. Trans. R. Soc. B 2009, 364, 1211–1221. [Google Scholar] [CrossRef] [PubMed]

- Friston, K.J. The free-energy principle: A unified brain theory? Nat. Rev. Neurosci. 2010, 11, 127–138. [Google Scholar] [CrossRef] [PubMed]

- Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 2013, 36, 181–204. [Google Scholar] [PubMed]

- Hohwy, J. The self-evidencing brain. Noûs 2016, 50, 259–285. [Google Scholar] [CrossRef]

- Spratling, M.W. Predictive coding as a model of cognition. Cognit. Process. 2016, 17, 279–305. [Google Scholar] [CrossRef] [PubMed]

- Bastos, A.M.; Usrey, W.M.; Adams, R.A.; Mangun, G.R.; Fries, P.; Friston, K.J. Canonical microcircuits for predictive coding. Neuron 2012, 76, 695–711. [Google Scholar] [CrossRef] [PubMed]

- Adams, R.A.; Friston, K.J.; Bastos, A.M. Active inference, predictive coding and cortical architecture. In Recent Advances in the Modular Organization of the Cortex; Casanova, M.F., Opris, I., Eds.; Springer: Berlin, Germany, 2015; pp. 97–121. [Google Scholar]

- Spratling, M.W. A hierarchical predictive coding model of object recognition in natural images. Cognit. Comput. 2017, 9, 151–167. [Google Scholar] [CrossRef] [PubMed]

- Shipp, S.; Adams, R.A.; Friston, K.J. Reflections on agranular architecture: Predictive coding in the motor cortex. Trends Neurosci. 2013, 36, 706–716. [Google Scholar] [CrossRef] [PubMed]

- Seth, A.K. Interoceptive inference, emotion, and the embodied self. Trends Cognit. Sci. 2013, 17, 565–573. [Google Scholar] [CrossRef] [PubMed]

- Harold, F.M. Molecules into cells: Specifying spatial architecture. Microbiol. Mol. Biol. Rev. 2005, 69, 544–564. [Google Scholar] [CrossRef] [PubMed]

- Laland, K.N.; Uller, T.; Feldman, M.W.; Sterelny, K.; Müller, G.B.; Moczek, A.; Jablonka, E.; Odling-Smee, J. The extended evolutionary synthesis: Its structure, assumptions and predictions. Proc. R. Soc. B 2015, 282, 20151019. [Google Scholar] [CrossRef] [PubMed]

- Pezzulo, G.; Levin, M. Top-down models in biology: Explanation and control of complex living systems above the molecular level. J. R. Soc. Interface 2016, 13, 20160555. [Google Scholar] [CrossRef] [PubMed]

- Fields, C.; Levin, M. Multiscale memory and bioelectric error correction in the cytoplasm- cytoskeleton- membrane system. WIRES Syst. Biol. Med. 2018, 10, e1410. [Google Scholar] [CrossRef] [PubMed]

- Kull, K.; Emmeche, C.; Hoffmeyer, J. Why biosemiotics? An introduction to our view on the biology of life itself. In Towards a Semiotic Biology: Life Is the Action of Signs; Emmeche, C., Kull, K., Eds.; Imperial College Press: London, UK, 2011; pp. 1–21. [Google Scholar]

- Barbieri, M. A short history of biosemiotics. Biosemiotics 2009, 2, 221–245. [Google Scholar] [CrossRef]

- Friston, K.; Levin, M.; Sengupta, B.; Pezzulo, G. Knowing one’s place: A free-energy approach to pattern regulation. J. R. Soc. Interface 2015, 12, 20141383. [Google Scholar] [CrossRef] [PubMed]

- Schwabe, L.; Nader, K.; Pruessner, J.C. Reconsolidation of human memory: Brain mechanisms and clinical relevance. Biol. Psychiatry 2014, 76, 274–280. [Google Scholar] [CrossRef] [PubMed]

- Clark, A.; Chalmers, D. The extended mind. Analysis 1998, 58, 7–19. [Google Scholar] [CrossRef]

- Kull, K.; Deacon, T.; Emmeche, C.; Hoffmeyer, J.; Stjernfelt, F. Theses on biosemiotics: Prolegomena to a theoretical biology. In Towards a Semiotic Biology: Life Is the Action of Signs; Emmeche, C., Kull, K., Eds.; Imperial College Press: London, UK, 2011; pp. 25–41. [Google Scholar]

- Roederer, J. Information and Its Role in Nature; Springer: Berlin, Germany, 2005. [Google Scholar]

- Roederer, J. Pragmatic information in biology and physics. Philos. Trans. R. Soc. A 2016, 374, 20150152. [Google Scholar] [CrossRef] [PubMed]

- Gu, Z.-C.; Wen, X.-G. Emergence of helicity ±2 modes (gravitons) from qubit models. Nucl. Phys. B 2012, 863, 90–129. [Google Scholar] [CrossRef]

- Swingle, B. Entanglement renormalization and holography. Phys. Rev. D 2012, 86, 065007. [Google Scholar] [CrossRef]

- Dowker, F. Introduction to causal sets and their phenomenology. Class. Quantum Gravity 2013, 45, 1651–1667. [Google Scholar] [CrossRef]

- Pastawski, F.; Yoshida, B.; Harlow, D.; Preskill, J. Holographic quantum error-correcting codes: Toy models for the bulk/boundary correspondence. J. High Energy Phys. 2015, 2015, 149. [Google Scholar] [CrossRef]

- Tegmark, M. Consciousness as a state of matter. Chaos Solitons Fractals 2015, 76, 238–270. [Google Scholar] [CrossRef]

- Hooft, G. The Cellular Automaton Interpretation of Quantum Mechanics; Springer: Heidelberg, Germany, 2016. [Google Scholar]

- Han, M.; Hung, L.-Y. Loop quantum gravity, exact holographic mapping, and holographic entanglement entropy. Phys. Rev. D 2017, 95, 024011. [Google Scholar] [CrossRef]

- Setty, Y.; Mayo, A.E.; Surette, M.G.; Alon, U. Detailed map of a cis-regulatory input function. Proc. Natl. Acad. Sci. USA 2003, 100, 7702–7707. [Google Scholar] [CrossRef] [PubMed]

- Dennett, D. From Bacteria to Bach and Back; Norton: New York, NY, USA, 2017. [Google Scholar]

- Simons, J.S.; Henson, R.N.A.; Gilbert, S.J.; Fletcher, P.C. Separable forms of reality monitoring supported by anterior prefrontal cortex. J. Cognit. Neurosci. 2008, 20, 447–457. [Google Scholar] [CrossRef] [PubMed]

- Picard, F. Ecstatic epileptic seizures: A glimpse into the multiple roles of the insula. Front. Behav. Neurosci. 2016, 10, 21. [Google Scholar]

- Debruyne, H.; Portzky, M.; Van den Eynde, F.; Audenaert, K. Cotard’s syndrome: A review. Curr. Psychiatry Rep. 2009, 11, 197–202. [Google Scholar] [CrossRef] [PubMed]

- Kahneman, D. Thinking, Fast and Slow; Farrar, Straus and Giroux: New York, NY, USA, 2011. [Google Scholar]

- Bargh, J.A.; Schwader, K.L.; Hailey, S.E.; Dyer, R.L.; Boothby, E.J. Automaticity in social-cognitive processes. Trends Cognit. Sci. 2012, 16, 593–605. [Google Scholar] [CrossRef] [PubMed]

- Heider, F.; Simmel, M. An experimental study of apparent behavior. Am. J. Psychol. 1944, 57, 243–259. [Google Scholar] [CrossRef]

- Scholl, B.J.; Tremoulet, P. Perceptual causality and animacy. Trends Cognit. Sci. 2000, 4, 299–309. [Google Scholar] [CrossRef]

- Scholl, B.J.; Gao, T. Perceiving animacy and intentionality: Visual processing or higher-level judgment. In Social Perception: Detection and Interpretation of Animacy, Agency and Intention; Rutherford, M.D., Kuhlmeier, V.A., Eds.; MIT Press: Cambridge, MA, USA, 2013; pp. 197–230. [Google Scholar]

- Fields, C. Motion, identity and the bias toward agency. Front. Hum. Neurosci. 2014, 8, 597. [Google Scholar] [CrossRef] [PubMed]

- Blackmore, S. Dangerous memes; or, What the Pandorans let loose. In Cosmos & Culture: Culture Evolution in a Cosmic Context; Dick, S.J., Lupisdella, M.L., Eds.; NASA: Washington, DC, USA, 2009; pp. 297–317. [Google Scholar]

- Patton, C.M.; Wheeler, J.A. Is physics legislated by cosmogony? In Quantum Gravity; Isham, C.J., Penrose, R., Sciama, D.W., Eds.; Clarendon: Oxford, UK, 1975; pp. 538–605. [Google Scholar]

- Conway, J.; Kochen, S. The free will theorem. Found. Phys. 2006, 36, 1441–1473. [Google Scholar] [CrossRef]

- Horsman, C.; Stepney, S.; Wagner, R.C.; Kendon, V. When does a physical system compute? Proc. R. Soc. A 2014, 470, 20140182. [Google Scholar] [CrossRef] [PubMed]

- Oudeyer, P.-Y.; Kaplan, F. What is intrinsic motivation? A typology of computational approaches. Front. Neurorobot. 2007, 1, 6. [Google Scholar] [CrossRef] [PubMed]

- Baldassarre, G.; Mirolli, M. (Eds.) Intrinsically Motivated Learning in Natural and Artificial Systems; Springer: Berlin, Germany, 2013. [Google Scholar]

- Cangelosi, A.; Schlesinger, M. Developmental Robotics: From Babies to Robots; MIT Press: Cambridge, MA, USA, 2015. [Google Scholar]

- Adams, A.; Zenil, H.; Davies, P.C.W.; Walker, S.I. Formal definitions of unbounded evolution and innovation reveal universal mechanisms for open-ended evolution in dynamical systems. Nat. Rep. 2017, 7, 997. [Google Scholar] [CrossRef] [PubMed]

- Pattee, H.H. Cell psychology. Cognit. Brain Theory 1982, 5, 325–341. [Google Scholar]

- Stewart, J. Cognition = Life: Implications for higher-level cognition. Behav. Process. 1996, 35, 311–326. [Google Scholar] [CrossRef]

- di Primio, F.; Müller, B.F.; Lengeler, J.W. Minimal cognition in unicellular organisms. In From Animals to Animats; Meyer, J.A., Berthoz, A., Floreano, D., Roitblat, H.L., Wilson, S.W., Eds.; International Society for Adaptive Behavior: Honolulu, HI, USA, 2000; pp. 3–12. [Google Scholar]

- Baluška, F.; Levin, M. On having no head: Cognition throughout biological systems. Front. Psychol. 2016, 7, 902. [Google Scholar] [CrossRef] [PubMed]

- Cook, N.D. The neuron-level phenomena underlying cognition and consciousness: Synaptic activity and the action potential. Neuroscience 2008, 153, 556–570. [Google Scholar] [CrossRef] [PubMed]

- Gagliano, M.; Renton, M.; Depczynski, M.; Mancuso, S. Experience teaches plants to learn faster and forget slower in environments where it matters. Oecologia 2014, 175, 63–72.

- Heil, M.; Karban, R. Explaining evolution of plant communication by airborne signals. Trends Ecol. Evolut. 2010, 25, 137–144.

- Rasmann, S.; Turlings, T.C.J. Root signals that mediate mutualistic interactions in the rhizosphere. Curr. Opin. Plant Biol. 2016, 32, 62–68.

- Hilker, M.; Schwachtje, J.; Baier, M.; Balazadeh, S.; Bäurle, I.; Geiselhardt, S.; Hincha, D.K.; Kunze, R.; Mueller-Roeber, B.; Rillig, M.C.; et al. Priming and memory of stress responses in organisms lacking a nervous system. Biol. Rev. 2016, 91, 1118–1133.

- Panksepp, J. Affective consciousness: Core emotional feelings in animals and humans. Conscious. Cognit. 2005, 14, 30–80.

- Rochat, P. Primordial sense of embodied self-unity. In Early Development of Body Representations; Slaughter, V., Brownell, C.A., Eds.; Cambridge University Press: Cambridge, UK, 2012; pp. 3–18.

- Queller, D.C.; Strassmann, J.E. Beyond society: The evolution of organismality. Philos. Trans. R. Soc. B 2009, 364, 3143–3155.

- Strassmann, J.E.; Queller, D.C. The social organism: Congresses, parties and committees. Evolution 2010, 64, 605–616.

- Fields, C.; Levin, M. Are planaria individuals? What regenerative biology is telling us about the nature of multicellularity. Evolut. Biol. 2018, 45, 237–247.

- Müller, G.B. Evo-devo: Extending the evolutionary synthesis. Nat. Rev. Genet. 2007, 8, 943–949.

- Carroll, S.B. Evo-devo and an expanding evolutionary synthesis: A genetic theory of morphological evolution. Cell 2008, 134, 25–36.

- Bjorklund, D.F.; Ellis, B.J. Children, childhood, and development in evolutionary perspective. Dev. Rev. 2014, 34, 225–264.

- Gentner, D. Why we’re so smart. In Language and Mind: Advances in the Study of Language and Thought; Gentner, D., Goldin-Meadow, S., Eds.; MIT Press: Cambridge, MA, USA, 2003; pp. 195–235.

- Dietrich, E.S. Analogical insight: Toward unifying categorization and analogy. Cognit. Process. 2010, 11, 331–345.

- Fields, C. Metaphorical motion in mathematical reasoning: Further evidence for pre-motor implementation of structure mapping in abstract domains. Cognit. Process. 2013, 14, 217–229.

- Sellars, W. Philosophy and the scientific image of man. In Frontiers of Science and Philosophy; Colodny, R., Ed.; University of Pittsburgh Press: Pittsburgh, PA, USA, 1962; pp. 35–78.

- Fields, C. Quantum Darwinism requires an extra-theoretical assumption of encoding redundancy. Int. J. Theor. Phys. 2010, 49, 2523–2527.

- ’t Hooft, G. Dimensional reduction in quantum gravity. In Salamfestschrift; Ali, A., Ellis, J., Randjbar-Daemi, S., Eds.; World Scientific: Singapore, 1993; pp. 284–296.

- Hoffman, D.D.; Singh, M.; Prakash, C. The interface theory of perception. Psychon. Bull. Rev. 2015, 22, 1480–1506.

- Hoffman, D.D. The interface theory of perception. Curr. Dir. Psychol. Sci. 2016, 25, 157–161.

- Mark, J.T.; Marion, B.B.; Hoffman, D.D. Natural selection and veridical perceptions. J. Theor. Biol. 2010, 266, 504–515.

- Prakash, C. On invention of structure in the world: Interfaces and conscious agents. Found. Sci. 2018, in press.

- Hoffman, D.D.; Prakash, C. Objects of consciousness. Front. Psychol. 2014, 5, 577.

- Fields, C.; Hoffman, D.D.; Prakash, C.; Singh, M. Conscious agent networks: Formal analysis and application to cognition. Cognit. Syst. Res. 2018, 47, 186–213.

- Carter, B. Anthropic principle in cosmology. In Current Issues in Cosmology; Pecker, J.-C., Narlikar, J.V., Eds.; Cambridge University Press: Cambridge, UK, 2006; pp. 173–179.

- von Uexküll, J. A Stroll through the Worlds of Animals and Men. In Instinctive Behavior; Schiller, C., Ed.; International Universities Press: New York, NY, USA, 1957; pp. 5–80.

- Strawson, G. Consciousness and Its Place in Nature: Does Physicalism Entail Panpsychism? Imprint Academic: Exeter, UK, 2006.

- Wiener, N. Cybernetics; MIT Press: Cambridge, MA, USA, 1948.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fields, C. Sciences of Observation. Philosophies 2018, 3, 29. https://doi.org/10.3390/philosophies3040029