Abstract

This app project aimed to remotely deliver diagnoses and disease-progression information to COVID-19 patients to help minimize risk during this and future pandemics. Data collected from chest computed tomography (CT) scans of COVID-19-infected patients were shared through the app. In this article, we focus on image preprocessing techniques to identify and highlight areas with ground-glass opacity (GGO) and pulmonary infiltrates (PIs) in CT image sequences of COVID-19 cases. Convolutional neural networks (CNNs) were used to classify the disease progression of pneumonia. Each GGO and PI pattern was highlighted with saliency map fusion, and the resulting map was used to train and test a three-class CNN classification scheme. In addition to being shared with patients, this information was shared through the application between the respiratory triage/radiologist and the COVID-19 multidisciplinary teams so that the severity of the disease could be understood through CT and medical diagnosis. The three-class, disease-level COVID-19 classification achieved a macro-precision of 94.89% in two-fold cross-validation. Both the segmentation and classification results were comparable to those of a medical specialist.

1. Introduction

COVID-19 is an infectious disease with continuously emerging variants [1,2]. Real-time polymerase chain reaction (RT-PCR) is the principal technique used for the early diagnosis of COVID-19 infection [3,4]. Thereafter, chest computed tomography (CT) assumes an important role in assessing the extent of the lung damage in patients with moderate-to-severe COVID-19 pneumonic disease [4].

CT is one of the main techniques used to assess the severity of a pneumonic infection [5,6,7,8,9,10]. This approach allows patients to be stratified into risk categories, provides prognosis estimates, and facilitates informed medical decision making [10]. The most common CT finding associated with clinical severity is the extent of pulmonary involvement and pneumonia [5,6,8,9,11,12]. On CT images, severe COVID-19 manifests as ground-glass opacity (GGO) and pulmonary infiltrates (PIs) in the peripheral subpleural region; these findings usually appear within 14 days of exposure to the virus [13].

During the pandemic, CT-based evaluation became increasingly relevant for treating critically ill patients with COVID-19. CT demonstrated findings classified as common in COVID-19 pneumonia, according to the recommendations of the Radiological Society of North America (RSNA) [14]. CT also plays a fundamental role in intensive care units in detecting and monitoring life-threatening complications related to COVID-19. The use of CT improves prognosis and survival predictions in patients with COVID-19 [15].

Deep learning can be used to perform a quantitative and rigorous analysis of the infected volume on CT images [16,17]. Deep learning enables computers to perform tasks that traditionally required human judgment [18,19], and representative deep learning applications against the COVID-19 pandemic have been surveyed in [20]. The authors of [21,22,23,24,25,26] proposed different artificial intelligence techniques to detect and diagnose COVID-19 from CT images, which is also the aim of the present study.

Mobiny et al. [21] presented a deep learning technique called DECAPS (detail-oriented capsule networks) to classify COVID-19 from CT images. Amyar et al. [22] proposed a deep learning model to segment and identify COVID-19-infected regions in CT images. Polsinelli et al. [23] used a SqueezeNet-based model to classify COVID-19 CT images. Li et al. [24] extracted features with a 50-layer neural network (COVNet) to classify COVID-19 on CT images. Konar et al. [25] used a semi-supervised shallow learning method assisted by a parallel quantum-inspired self-supervised network (PQIS-Net) for COVID-19 classification from CT images. Wu et al. [26] proposed a JCS (joint classification and segmentation) system to classify and diagnose COVID-19 using CT images.

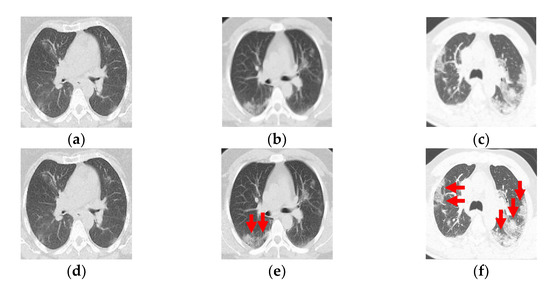

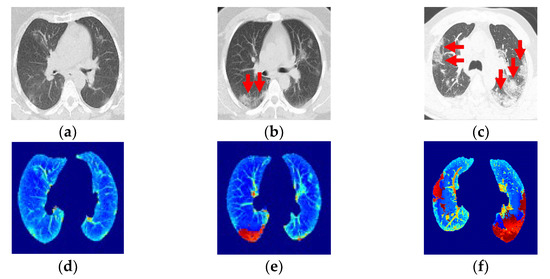

This research builds on those works by using image indicators and classification to categorize the damage and the progression of pneumonia over the pulmonary parenchyma (PP) in patients with COVID-19 (Figure 1a–c). In this paper, we present a competitive method for identifying the progression of pneumonia that combines saliency-based highlighting of GGO and PI pattern areas with a deep learning technique for three-class classification (Figure 1d–f).

Figure 1.

(a–c) Computed tomography from a patient with COVID-19. (d–f) Ground-glass opacity identification (red arrow marking) for three-class damage progression classification: (d) Class 1, (e) Class 2, and (f) Class 3.

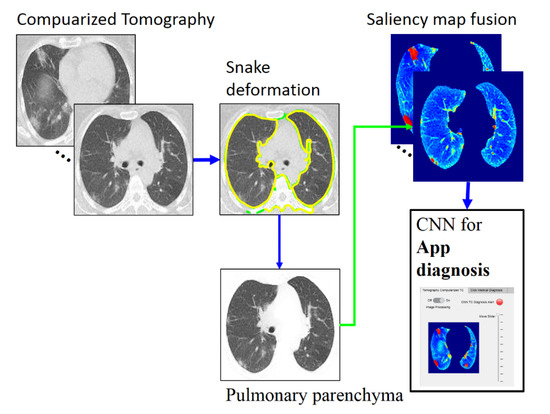

The paper is organized as follows. Section 2 describes the proposed saliency-based preprocessing of GGO and PI regions and the three-class deep learning classification model for CT images (Figure 2). Section 3 presents the experimental results obtained in two-fold cross-validation. Section 4 discusses the results, and Section 5 concludes the paper.

Figure 2.

Overall method description, https://drive.google.com/drive/folders/1Q41lGFpawPvYmPZ8SNK3sgsuvjs4kB45?usp=sharing (accessed on 3 March 2022).

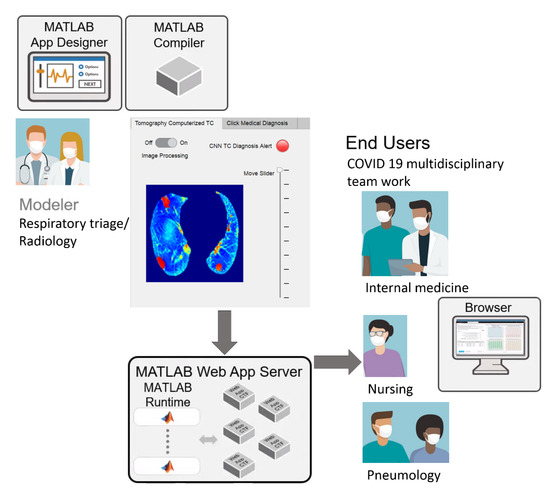

In this work, we developed a web application in MATLAB (MathWorks, Natick, MA, USA) designed to optimize workflows shared between doctors. Image processing and artificial intelligence were used to classify and diagnose the severity level of COVID-19. This system is intended to speed the hospitalization of high-risk patients while also eliminating the passing of documents from hand to hand.

2. Materials and Methods

Figure 2 depicts the phases of the proposed classification method, which are detailed in the following subsections. The proposed method is accomplished in three stages. The first is PP image segmentation using an active contour model (ACM) [27] automatically initialized via a Poisson inverse gradient (PIG) [28], based on our previous work [29]. The second is the application of saliency maps to highlight the GGOs and PIs. Finally, CNN-based three-class disease classification is performed using the web app in MATLAB. The CT images used in the present analysis were obtained from 54 RT-PCR-confirmed COVID-19 patients with a “typical” disease presentation (GGO, PI, interlobular thickening, crazy-paving pattern, consolidation, reverse halo sign, and double reverse halo sign) [30].

2.1. Pulmonary Parenchymal Identification by Poisson Inverse Gradient

The CT images were converted to BMP files for image processing and data analysis in MATLAB. Based on our previous work [29], we used an active contour model (ACM) [27], in which an energy function E is minimized to identify the contours of the PP (Figure 3a). The ACM was initialized automatically via the Poisson inverse gradient (PIG) [28]. The PIG method estimates the energy field E such that the negative gradient of E is the vector field closest to the force field F in the L2-norm sense, and the contour is then obtained by minimizing the snake energy:

$$E_{snake} = \int_0^1 \left[ E_{int}\big(v(s)\big) + E_{ext}\big(v(s)\big) \right] ds$$

where $v(s) = (x(s), y(s))$ is the parametric contour, and $E_{int}$ and $E_{ext}$ are its internal energy and the external energy derived from the image, respectively; the external force field is computed with the vector field convolution (VFC) method. The internal energy is defined as:

$$E_{int} = \frac{1}{2}\left( \alpha \lvert v_s \rvert^2 + \beta \lvert v_{ss} \rvert^2 \right)$$

where $v_s$ and $v_{ss}$ are the first and second derivatives of $v(s)$, respectively, and α and β are weighting parameters that control the elasticity and rigidity of the contour, respectively. For our experiments, we used an implementation [28] with α = 0.5 and β = 0.1.
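To make the internal energy term concrete, the following minimal Python sketch evaluates a discrete version of the standard snake internal energy, ½(α|v_s|² + β|v_ss|²), for a closed contour sampled at N points, using central finite differences and the α = 0.5 and β = 0.1 values quoted above. The function name `internal_energy`, the unit parameter spacing, and the finite-difference scheme are illustrative assumptions; this is not the implementation of [28].

```python
import numpy as np


def internal_energy(contour: np.ndarray, alpha: float = 0.5, beta: float = 0.1) -> float:
    """Discrete snake internal energy of a closed contour.

    contour: (N, 2) array of ordered (x, y) points; the last point is assumed
    to connect back to the first. Unit spacing in the parameter s is assumed.
    """
    nxt = np.roll(contour, -1, axis=0)   # v(s + 1)
    prv = np.roll(contour, 1, axis=0)    # v(s - 1)
    v_s = (nxt - prv) / 2.0              # central first derivative (elasticity term)
    v_ss = nxt - 2.0 * contour + prv     # second derivative (rigidity term)
    # 1/2 (alpha |v_s|^2 + beta |v_ss|^2) at each point, summed along the contour
    per_point = 0.5 * (alpha * np.sum(v_s**2, axis=1) + beta * np.sum(v_ss**2, axis=1))
    return float(per_point.sum())


# Usage: a smooth circle versus a wiggly contour of similar size
theta = np.linspace(0.0, 2.0 * np.pi, 100, endpoint=False)
circle = np.stack([10 * np.cos(theta), 10 * np.sin(theta)], axis=1)
radius = 10 + 2 * np.sin(8 * theta)
wiggly = np.stack([radius * np.cos(theta), radius * np.sin(theta)], axis=1)
print(internal_energy(circle), internal_energy(wiggly))
```

The wiggly contour scores a higher internal energy than the smooth circle, which is why minimizing this term favors smooth, compact boundaries.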

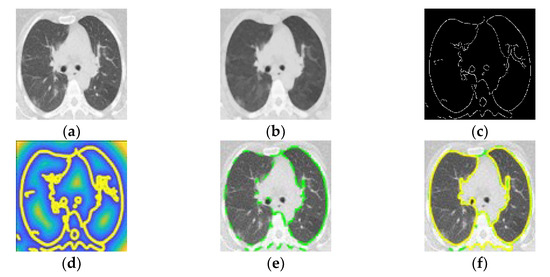

Figure 3.

(a) Computed tomography of a patient with COVID-19. (b) Smoothed image. (c) Optimized edge map B. (d) External energy of the VFC field superposed with isolines. (e) PIG initialization. (f) Final snake deformation.

The edge force is the gradient of the image’s edge map B. Eext was calculated from the optimized edge map B (Figure 3c), obtained by applying a Canny edge detector [31] to the smoothed image (Figure 3b). The external energy represents the image’s edge information and is defined as:

$$E_{ext} = -\left\lvert \nabla \left[ G_\sigma(x, y) * I(x, y) \right] \right\rvert^2$$

where $G_\sigma$ and $I$ are the Gaussian function and the image, respectively, $*$ denotes convolution, and $\nabla$ is the gradient operator. Applying the VFC algorithm (Figure 3d) yielded a smooth contour for the inner cross-section within 40 iterations (initial iteration in Figure 3e and final iteration in Figure 3f). Finally, the inner cross-sectional area of the PP was calculated by integrating over the region enclosed by the detected contour, yielding the segmented PP area (Figure 4a).
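As a sketch of the external energy term, assuming the standard snake form E_ext = −|∇(G_σ * I)|², the following Python function smooths the image with a Gaussian and returns the negative squared gradient magnitude at every pixel. The function name and the default σ = 2.0 are assumptions (the text does not state σ), and the sketch omits the Canny edge map B and the VFC step described above.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def external_energy(image: np.ndarray, sigma: float = 2.0) -> np.ndarray:
    """Return E_ext = -|grad(G_sigma * I)|^2 evaluated at every pixel."""
    smoothed = gaussian_filter(image.astype(float), sigma=sigma)  # G_sigma * I
    gy, gx = np.gradient(smoothed)  # derivatives along rows (y) and columns (x)
    return -(gx**2 + gy**2)         # most negative where edges are strongest


# Usage: a synthetic vertical step edge between columns 31 and 32
step = np.zeros((64, 64))
step[:, 32:] = 1.0
energy = external_energy(step)
print(int(np.argmin(energy[32])))
```

On the step edge, the energy is most negative along the boundary, so a contour descending this energy is attracted to edges such as the PP border.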

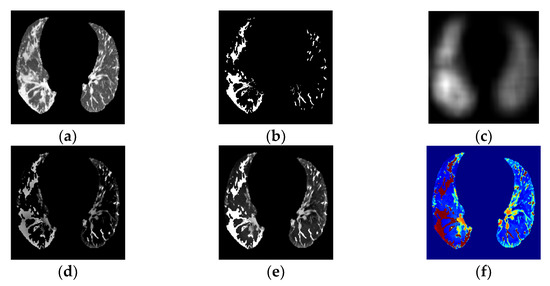

Figure 4.

(a) PP area image. (b) GGO–PI image. (c) Base fused image. (d) Detail fused image. (e) Fused image. (f) Transformed RGBjet fused image.

2.2. Ground-Glass Opacity and Pulmonary Infiltrates Highlighted by Saliency Fusion

This subsection describes a segmentation and fusion process. An initial PP area identification (Figure 4a) and GGO–PI candidate-region selection (Figure 4b), based on the application described in our previous work [29], were followed by saliency fusion.

To identify ground-glass opacity (GGO) and pulmonary infiltrates (PIs) in the segmented PP areas, we used an efficient technique based on over-segmentation of the CT image by mean shift and superpixel SLIC (simple linear iterative clustering). First, watershed segmentation was applied to the mean-shift clusters. Then, within the zones identified by PP segmentation, each watershed cluster was classified as GGO–PI or not by a tree random forest (TRF), using descriptors of its position, grey intensity, gradient entropy, second-order texture, Euclidean distance to the border of the PI zone, and global saliency.

The objective was to isolate or highlight all regions that might be GGOs and PIs and to fuse these regions with the PP area so that they stand out in the saliency-based identification.

The next step was PP and GGO–PI image fusion. Image fusion integrates relevant, complementary, and redundant visual information from multiple sources into a single composite image [32]. From the PP (Figure 4a) and GGO–PI (Figure 4b) images, first, the base fused image (Figure 4c) was extracted by combining the mean-filtered versions of both images; second, the detail fused image (Figure 4d) was obtained by combining the median filter and the weight maps of visual saliency from both images. Last, the saliency fused image (Figure 4e) was obtained by combining the base and detail fused images.
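The base/detail procedure above resembles generic two-scale saliency-based image fusion. The following Python sketch shows one such scheme under stated assumptions: a large box (mean) filter defines the base layers, the residuals are the detail layers, visual saliency is taken as the absolute difference between small mean- and median-filtered images, and normalized saliency weights combine the details. The function name, filter sizes, and this saliency definition are illustrative assumptions, not the parameters used in this work.

```python
import numpy as np
from scipy.ndimage import median_filter, uniform_filter


def two_scale_saliency_fusion(img_a, img_b, base_size=31, small_size=3, eps=1e-12):
    """Fuse two same-size grayscale images (e.g., a PP map and a GGO-PI map)."""
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    # Base layers: large mean (box) filter; detail layers: what the base misses
    base_a, base_b = uniform_filter(a, base_size), uniform_filter(b, base_size)
    detail_a, detail_b = a - base_a, b - base_b
    # Visual saliency: |mean-filtered - median-filtered|, a local-contrast cue
    sal_a = np.abs(uniform_filter(a, small_size) - median_filter(a, small_size))
    sal_b = np.abs(uniform_filter(b, small_size) - median_filter(b, small_size))
    # Per-pixel weight maps that sum to 1 (eps avoids 0/0 in flat regions)
    w_a = (sal_a + eps) / (sal_a + sal_b + 2 * eps)
    w_b = 1.0 - w_a
    fused_base = 0.5 * (base_a + base_b)            # base fused image
    fused_detail = w_a * detail_a + w_b * detail_b  # detail fused image
    return fused_base + fused_detail                # saliency fused image
```

Averaging the base layers preserves the overall intensity of both inputs, while the saliency weights let whichever image has more local contrast (for example, the GGO–PI candidate map) dominate the detail layer.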

The principal contribution in this section is the saliency fusion approach for the GGOs and PIs highlighted in the PP on CT images.

The visual saliency process was used to extract the most relevant information within the PP area, to highlight the identified GGO–PI regions, and to obtain the final fused image using a weight-map approach (Figure 4e). This method highlighted the pertinent information in both images and combined it into a final transformed RGBjet fused image (Figure 4f) that was fed to the CNN.

2.3. Convolutional Neural Network Classification for Telemedicine App

The resulting fused images (Figure 5d–f) allowed us to diagnose the evolution of COVID-19 and grade the damage caused by the infection using a CNN. The RSNA Expert Consensus Statement proposes four categories for the standardized reporting of COVID-19-related chest CT findings [33]. In addition, the prevalence of the typical COVID-19 CT pattern did not differ statistically over the course of the two-year pandemic, and this new type of pneumonia tends to present with “typical” radiological features in most patients [30].

Figure 5.

(a–c) Ground-glass opacity identification (red arrow marking) for three-class damage progression classification: (a) Early phase. (b) Progression phase. (c) Peak phase. (d) Class 1, early phase. (e) Class 2, progression phase. (f) Class 3, peak phase.

This section presents the application of a CNN to categorize clinical CT images into three categories based on the relationship between CT infection findings, symptoms, and symptom evolution [34,35,36]. The three evolutionary phases starting from the onset of symptoms are early, progression, and peak.

Class 1, the early phase (Figure 5a, 0 to 4 days): A slightly predominant GGO pattern; in 50% of patients, CT may appear normal during the first two days.

Class 2, the progression phase (Figure 5b, 5 to 8 days): Infection with GGO and PIs rapidly spreads and becomes bilateral. Patterns in the infected regions may appear as cobblestones and consolidations.

Class 3, the peak phase (Figure 5c, 9 to 14 days): GGO regions change to predominantly consolidation and some cobblestone regions.

The images were categorized into these three classes, with Class 3 being the final and most critical phase, in which the disease reaches its peak.

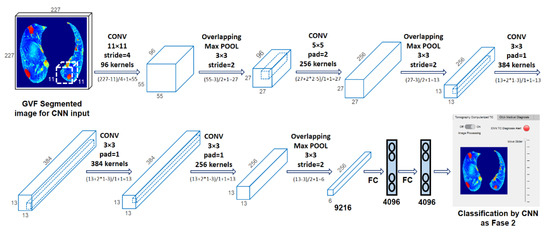

A CNN, as originally proposed by Yann LeCun et al. [37], is a deep learning neural network model built on three key architectural ideas (local receptive fields, weight sharing, and spatial subsampling), and it consists of three main types of layers: spatial convolution layers (CONV l), subsampling pooling layers (MaxPool l), and fully connected layers (FC l).

We used a CNN architecture from previous studies that processes three-channel (RGB) images: AlexNet [38]. A CNN is well suited to feature extraction because it is hierarchical (with multiple layers for greater compactness and efficiency) and relatively robust to variations in position, size, luminance, rotation, pose angle, noise, and distortion. Many comprehensive reviews of CNNs are available in the literature [39,40].

The proposed method uses the CNN to extract GGO–PI classification features from the saliency fused image. Our main contributions are generating the input images and capturing the relevant GGO–PI information used to train and feed the CNN.

CNNs generally operate on thousands of standard-size input images that require prior adaptation. In this phase, we replaced this adaptation process with the transformed RGBjet fused image, one of which was generated for each input CT image. The images were first resized to 227 × 227 × 3, the input size of AlexNet [41]. These RGB images (227 × 227 pixels × three color channels) were fed to the CNN (Figure 6), which was designed to extract 1043 high-level feature vectors by passing them through the layers of the AlexNet architecture [41]. The output size of each layer follows

$$O = \frac{I - K + 2P}{S} + 1 \quad \text{(CONV layers)}, \qquad O = \frac{I - P_s}{S} + 1 \quad \text{(MaxPool layers)},$$

where O is the output image size, I is the input image size, K is the width of the convolution kernels (filters), S is the stride (pixel step) of the operation, P is the zero padding, and $P_s$ is the MaxPool filter size; the number of kernels N sets the depth (number of channels) of a CONV layer’s output.
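A minimal Python sketch of the input preparation step follows, assuming the fused image is first normalized to [0, 1], resized bilinearly to 227 × 227, and then mapped through a piecewise-linear approximation of the jet colormap to obtain a 227 × 227 × 3 array. The function name, the order of resizing and color mapping, and the jet approximation are assumptions; MATLAB’s own `jet` colormap and resizing functions may differ slightly in detail.

```python
import numpy as np
from scipy.ndimage import zoom


def to_rgb_jet(fused: np.ndarray, size: int = 227) -> np.ndarray:
    """Map a 2-D fused image to a size x size x 3 jet-coloured array in [0, 1]."""
    img = np.asarray(fused, dtype=float)
    lo, hi = img.min(), img.max()
    # Normalize intensities to [0, 1]; a constant image maps to all zeros
    img = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)
    # Bilinear (order=1) resize of the grayscale image to size x size
    img = zoom(img, (size / img.shape[0], size / img.shape[1]), order=1)
    img = np.clip(img, 0.0, 1.0)
    # Piecewise-linear jet approximation: dark blue -> cyan -> yellow -> dark red
    r = np.clip(1.5 - np.abs(4.0 * img - 3.0), 0.0, 1.0)
    g = np.clip(1.5 - np.abs(4.0 * img - 2.0), 0.0, 1.0)
    b = np.clip(1.5 - np.abs(4.0 * img - 1.0), 0.0, 1.0)
    return np.stack([r, g, b], axis=-1)


# Usage: a 420 x 280 (width x height) slice becomes a 227 x 227 x 3 CNN input
rgb = to_rgb_jet(np.random.default_rng(0).random((280, 420)))
print(rgb.shape)
```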

Figure 6.

Convolutional neural network [41], https://drive.google.com/drive/folders/1Q41lGFpawPvYmPZ8SNK3sgsuvjs4kB45?usp=sharing (accessed on 3 March 2022).

Figure 6 shows the evolution of the 227 × 227 × 3 input image through the different layers of the CNN. After CONV 1 (96 11 × 11 filters), the size changed to 55 × 55 × 96, which became 27 × 27 × 96 after MaxPool 1 (3 × 3 pooling). After CONV 2 (256 5 × 5 filters), the size changed to 27 × 27 × 256, and then MaxPool 2 (3 × 3 pooling) changed it to 13 × 13 × 256. CONV 3 (384 3 × 3 filters) transformed it to 13 × 13 × 384, CONV 4 (384 3 × 3 filters) preserved the size, and CONV 5 (256 3 × 3 filters) changed it to 13 × 13 × 256. Finally, MaxPool 3 (3 × 3 pooling) reduced the size to 6 × 6 × 256. This volume was fed to FC 1, transforming it into a 4096 × 1 vector. These vectors contained the features extracted by the CNN for classification.
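The layer-by-layer sizes above can be checked with the output-size formulas O = (I − K + 2P)/S + 1 and O = (I − Ps)/S + 1. The Python sketch below traces the feature-map shapes through the five CONV and three MaxPool layers; the strides and paddings (stride 4 for CONV 1, padding 2 for CONV 2, padding 1 for CONV 3 to CONV 5, and 3 × 3 pooling with stride 2) are taken from the standard AlexNet configuration and are assumptions here, since the text does not list them.

```python
def conv_out(i: int, k: int, s: int = 1, p: int = 0) -> int:
    """CONV output size: O = (I - K + 2P) / S + 1 (integer division)."""
    return (i - k + 2 * p) // s + 1


def pool_out(i: int, ps: int, s: int) -> int:
    """MaxPool output size without padding: O = (I - Ps) / S + 1."""
    return (i - ps) // s + 1


# (layer, kind, kernel or pool size, stride, padding, output channels)
ALEXNET = [
    ("CONV 1", "conv", 11, 4, 0, 96),
    ("MaxPool 1", "pool", 3, 2, 0, None),
    ("CONV 2", "conv", 5, 1, 2, 256),
    ("MaxPool 2", "pool", 3, 2, 0, None),
    ("CONV 3", "conv", 3, 1, 1, 384),
    ("CONV 4", "conv", 3, 1, 1, 384),
    ("CONV 5", "conv", 3, 1, 1, 256),
    ("MaxPool 3", "pool", 3, 2, 0, None),
]


def trace_shapes(size: int = 227, channels: int = 3):
    """Return (layer, height, width, channels) after each layer."""
    shapes = []
    for name, kind, k, s, p, n in ALEXNET:
        if kind == "conv":
            size, channels = conv_out(size, k, s, p), n  # N kernels set the depth
        else:
            size = pool_out(size, k, s)                  # pooling keeps the depth
        shapes.append((name, size, size, channels))
    return shapes


for name, h, w, c in trace_shapes():
    print(f"{name}: {h} x {w} x {c}")
```

The final 6 × 6 × 256 volume flattens to 9216 values, which FC 1 maps to the 4096 × 1 feature vector.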

We used two-fold cross-validation, in which we randomly shuffled the dataset into two sets, d0 and d1, so that both sets were the same size. We first trained on d0 and validated on d1, followed by training on d1 and validating on d0.
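The split described above can be sketched in Python as follows; the function name `two_fold_splits` and the fixed random seed are illustrative assumptions.

```python
import numpy as np


def two_fold_splits(n_samples: int, seed: int = 0):
    """Shuffle indices once and split them into two equal-size folds d0 and d1.

    Returns [(train, val), (train, val)]: first train on d0 and validate on d1,
    then train on d1 and validate on d0.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    half = n_samples // 2
    d0, d1 = idx[:half], idx[half:]
    return [(d0, d1), (d1, d0)]


# Usage with the 540 images of the dataset
for fold, (train_idx, val_idx) in enumerate(two_fold_splits(540)):
    print(fold, len(train_idx), len(val_idx))
```

Because each patient contributes 10 images, a variant that shuffles patient IDs rather than image indices would keep all slices of a patient in the same fold.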

The image processing results, CNN classification, and medical diagnosis on the hospital admission sheet were included in the MATLAB web app, facilitating streamlined workflow sharing. In this work, telemedicine was used as a virtual care platform that allowed for communication between respiratory triage/radiology and the COVID-19 multidisciplinary teams (Figure 7). It could be an essential medical instrument for containing the spread of COVID-19 and managing the care for COVID-19 patients in need of beds and further hospitalization.

Figure 7.

Schematic of streamlined telemedicine workflows, https://drive.google.com/drive/folders/1Q41lGFpawPvYmPZ8SNK3sgsuvjs4kB45?usp=sharing (accessed on 3 March 2022).

Hospital admissions are reserved for patients with urgent needs based on their symptoms. A patient arrives at the first-contact physician (emergency physician or general physician) in respiratory triage, who, upon finding evidence of pulmonary compromise or respiratory distress, requests a CT from the radiology service. The radiologist assesses the CT image and, for cases with lung or lower-respiratory-tract involvement, refers the patient through the “referral sheet” to the second level of care. At this hospital, the CT is acquired with a Philips Brilliance 16-slice helical CT scanner (Koninklijke Philips N.V., Eindhoven, The Netherlands).

Through the web app, the CT is sent with the CNN diagnosis and the referral sheet instead of being transferred by hand. The dated referral sheet includes the patient’s identifying information, personal history, current condition, established treatments, laboratory and imaging reports, and the diagnosis or reason for referral. Telemedicine describes a virtual care platform that enables remote diagnosis using telecommunication technology and, as such, represents an indispensable medical instrument in reducing COVID-19 spread [42,43].
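One way to represent the digitized referral sheet is a simple record that bundles the fields listed above with the CT images and the CNN class. The Python sketch below is hypothetical: the class name, field names, and the class-to-phase helper are illustrative and are not taken from the MATLAB app.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional

# Hypothetical mapping from the CNN class index to the disease phase
PHASES = {1: "early", 2: "progression", 3: "peak"}


@dataclass
class ReferralSheet:
    """Hypothetical digital referral sheet sent with the CT through the web app."""
    issued_on: date                      # the sheet is dated
    patient_id: str                      # identifying information
    personal_history: str
    current_condition: str
    established_treatments: List[str] = field(default_factory=list)
    lab_and_imaging_reports: List[str] = field(default_factory=list)
    referral_reason: str = ""            # diagnosis or reason for referral
    ct_image_files: List[str] = field(default_factory=list)
    cnn_class: Optional[int] = None      # 1, 2, or 3 once the CNN has run

    def cnn_phase(self) -> str:
        """Translate the CNN class into the early/progression/peak phase name."""
        return PHASES.get(self.cnn_class, "not classified")
```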

3. Experimental Results

3.1. Dataset

The dataset included 540 BMP images (420 × 280 pixels) from 54 patients (10 CT images each). The ground-truth data (i.e., the identification of the PP and its degree of alteration) were generated by a medical specialist based on the GGO–PI localization.

3.2. Quantitative and Qualitative Evaluation of PP and GGO–PI Identification

To assess the segmentation quality, we compared each segmented PP region with the corresponding region in the ground truth. This comparison was quantified using the Zijdenbos similarity index [44],

$$ZSI = \frac{2\,\lvert A_1 \cap A_2 \rvert}{\lvert A_1 \rvert + \lvert A_2 \rvert},$$

where $A_1$ and $A_2$ are the compared regions (both binary masks). A ZSI value greater than 0.75 was considered to represent excellent agreement [44]. The PP identification results had a ZSI of 0.9484 ± 0.0030, which indicates that the obtained segmentation agreed with the ground truth and that the proposed segmentation approach can obtain results similar to those obtained manually by a medical expert. For GGO–PI identification, the method achieved 96% precision and 96% recall in the two-fold cross-validation.
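A minimal Python sketch of the ZSI for two binary masks follows; the function name and the convention of returning 1.0 when both masks are empty are assumptions. The ZSI is numerically the same quantity as the Dice coefficient.

```python
import numpy as np


def zijdenbos_similarity(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """ZSI = 2|A1 ∩ A2| / (|A1| + |A2|) for two binary masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = int(a.sum() + b.sum())
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * int(np.logical_and(a, b).sum()) / total


# Usage: two 4-pixel regions that share 2 pixels
seg = np.zeros((4, 4), dtype=bool)
seg[0, :] = True
truth = np.zeros((4, 4), dtype=bool)
truth[0:2, 0:2] = True
print(zijdenbos_similarity(seg, truth))  # 2 * 2 / (4 + 4)
```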

3.3. Quantitative Indicators for CNN Diagnosis

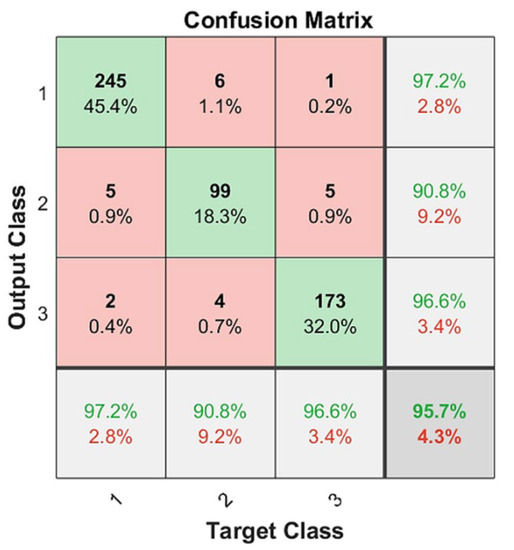

To quantitatively assess the three-class classification results and the performance of the CNN technique, several quality indicators were obtained. We used final (external) quality indicators to evaluate the final classification results and to enable comparison with other works. For multiclass problems, precision, recall, and F1 score must be computed in a specific way. Let TP, FP, and FN be the numbers of true positives, false positives, and false negatives, respectively. To calculate these metrics in a multiclass problem, the problem is treated as a set of binary (“one vs. all”) problems. We then defined the precision or positive predictive value (PPV), the sensitivity, recall, or true positive rate (TPR), and the F1 score as

$$PPV = \frac{TP}{TP + FP}, \qquad TPR = \frac{TP}{P} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \cdot PPV \cdot TPR}{PPV + TPR},$$

where P is the number of positive cases.

In this case, each metric is calculated per class, and the final metric is the average of the per-class metrics; the three metrics were therefore calculated once for each of the three classes (Table 1). From the confusion matrix results (Figure 8), we determined TP, FP, and FN for each class by treating it as a “one vs. all” problem. Let P1 = 252 be the number of Class 1 cases in the dataset, P2 = 109 the number of Class 2 cases, and P3 = 179 the number of Class 3 cases. After conducting the same process for all classes, we collated and summed these values in Table 1 and calculated the precision, recall, and F1 score for each class.

Table 1.

Metrics for quantitative indicators from the two-fold cross-validation.

Figure 8.

CNN classification results on two-fold cross-validation: green as correctly classified instances and red as incorrectly classified instances, https://drive.google.com/drive/folders/1Q41lGFpawPvYmPZ8SNK3sgsuvjs4kB45?usp=sharing (accessed on 3 March 2022).

Based on Table 1, the macro-averaged precision, recall, and F1 score were calculated as the averages of the per-class values:

$$PPV_{macro} = \frac{PPV_1 + PPV_2 + PPV_3}{3}, \qquad TPR_{macro} = \frac{TPR_1 + TPR_2 + TPR_3}{3}, \qquad F1_{macro} = \frac{F1_1 + F1_2 + F1_3}{3}.$$

These results are presented in Table 2.
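The one-vs-all and macro-averaged computation can be sketched in Python from a confusion matrix. The function name is hypothetical, and the 3 × 3 matrix in the usage example is an illustrative toy input, not the confusion matrix of Figure 8; for the dataset above, the row sums of the confusion matrix pooled over both folds would equal P1 = 252, P2 = 109, and P3 = 179. A class that is never predicted would give 0/0 for its precision, which this sketch does not guard against.

```python
import numpy as np


def one_vs_all_metrics(cm) -> dict:
    """Per-class and macro-averaged precision (PPV), recall (TPR), and F1.

    cm[i, j] = number of images whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but belonging to another class
    fn = cm.sum(axis=1) - tp  # belonging to class k but predicted as another class
    ppv = tp / (tp + fp)
    tpr = tp / (tp + fn)      # tp + fn = P_k, the number of class-k cases
    f1 = 2 * ppv * tpr / (ppv + tpr)
    return {
        "per_class": {"PPV": ppv, "TPR": tpr, "F1": f1},
        "macro": {"PPV": ppv.mean(), "TPR": tpr.mean(), "F1": f1.mean()},
    }


# Usage with a toy 3-class confusion matrix (illustrative numbers only)
toy_cm = np.array([[8, 2, 0],
                   [1, 3, 1],
                   [0, 1, 4]])
metrics = one_vs_all_metrics(toy_cm)
print(metrics["macro"])
```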

Table 2.

Quantitative results on two-fold cross-validation.

Macro-averages are preferable for this three-class CT diagnosis problem because the dataset is class-imbalanced (252, 109, and 179 images per class) and macro-averaging weights every class equally regardless of its size, so performance on the smaller classes is not masked by that on the largest one. This matters clinically because Class 3, which contains the patients in the most critical condition, is the most important class.

4. Discussion

Table 3 presents the best quality indicators for the state-of-the-art COVID-19 diagnosis classification methods.

Table 3.

Comparison of COVID-19 diagnosis classification methods (%).

Most deep learning publications on COVID-19 concentrate on binary COVID-19 versus non-COVID-19 classification, a task that RT-PCR results can already perform.

In this work, CT was used to guide patient hospitalization based on the progress of the disease (early, progression, or peak). However, our study was limited by the small size of the training and test sets, which therefore may not represent the entire patient population.

We compared the results of our work with published methods based on deep learning developments [21,22,23,24,25,26]. Although the methods and datasets (training and testing) used here and elsewhere differ, we compare the respective reported results in Table 3. The methods in [25,26] achieved better results than ours, but only [26] classified patients into three classes, and neither employed two-fold cross-validation.

Despite our relatively small dataset, we outperformed radiologists on a high-quality subset of test data. More importantly, a respiratory triage/radiologist and a COVID-19 multidisciplinary team used our model to review and revise their clinical decisions, which suggests the value of this promising clinical visualization instrument.

In addition, unlike most other studies on the diagnosis of COVID-19 abnormalities, the proposed deep learning classifier performs three-class classification. To some extent, these comparisons and our classification results support the validity of the studied CNN approach: for a dataset containing 540 COVID-19 images (252 early phase, 109 progression phase, and 179 peak phase), we obtained values of PPV = 0.9489, TPR = 0.9043, and F1 = 0.9259 using two-fold cross-validation, which is more stringent than the validation used in other similar “state-of-the-art” studies.

5. Conclusions

The identification of the PP in CT images is generally accepted as a prerequisite for segmenting lung regions and extracting features for candidate infection-region detection or COVID-19 classification (healthy or infected). In this study, we proposed a method based on PIG to identify and segment the PP in CT images in practical situations using a MATLAB app. We obtained PP identification results with a ZSI of 0.9483 ± 0.0031 and, for GGO and PI identification, segmentation results comparable to those obtained by a medical specialist on a representative dataset, with a precision of 96% and a recall of 96% in two-fold cross-validation.

To classify the CT images for disease diagnosis in COVID-19 patients, our main contributions were the novel use of over-segmentation and a GGO-highlighting saliency fusion technique for the precise CNN classification of segmented regions into three classes (early, progression, and peak). We obtained classification results with a macro-precision of 94.89% in two-fold cross-validation. We thoroughly analyzed reported works on infection-region detection and COVID-19 classification and showed why the model presented here represents the next logical step in automated CT image analysis (an application for telemedicine). We discussed our results while assessing the novelty and quality of our achievements in the absence of a systematic framework for objectively comparing them.

Furthermore, we presented an app based on the MATLAB platform. This interface includes CT and image processing analysis, and it presents the GGO–PI regions highlighted within the identified PP by saliency map fusion, together with the CNN classification results (early, progression, or peak phase). In addition, the referral sheet was digitized and no longer needs to be passed from hand to hand.

Author Contributions

Conceptualization, methodology, formal analysis, investigation, writing–original draft preparation, writing–review and editing, visualization, supervision, and project administration, S.T.-M. and F.W.; software and resources, S.T.-M.; validation and data curation, F.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data and materials are available for research purposes.

Acknowledgments

This work was partially supported by Internal Grant “ITSLerdo #001-POSG-2020”. This study was partially supported by Internal Grant no. 312304 from the F0005-2020-1 CONACYT Support for Scientific Research Projects, Technological Development, and Innovation in Health in the Face of Contingency by COVID-19.

Conflicts of Interest

The authors declare no conflict of interest.

References

- World Health Organization. Statement on the Second Meeting of the International Health Regulations (2005) Emergency Committee Regarding the Outbreak of Novel Coronavirus (2019-nCoV). Available online: https://www.who.int/news-room/detail/30-01-2020-statement-on-the-second-meeting-of-the-international-health-regulations-(2005)-emergency-committee-regarding-the-outbreak-of-novel-coronavirus-(2019-ncov) (accessed on 30 January 2020).

- World Health Organization. WHO Director-General’s Opening Remarks at the Media Briefing on COVID-19. Available online: https://www.who.int/dg/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020 (accessed on 11 March 2020).

- Stogiannos, N.; Fotopoulos, D.; Woznitza, N.; Malamateniou, C. COVID-19 in the radiology department: What radiographers need to know. Radiography 2020, 26, 254–263. [Google Scholar] [CrossRef]

- Wong, H.Y.F.; Lam, H.Y.S.; Fong, A.H.-T.; Leung, S.T.; Chin, T.W.-Y.; Lo, C.S.Y. Frequency and Distribution of Chest Ra-diographic Findings in COVID-19 Positive Patients. Radiology 2020, 296, E72–E78. [Google Scholar] [CrossRef] [Green Version]

- Zhang, N.; Xu, X.; Zhou, L.-Y.; Chen, G.; Li, Y.; Yin, H. Clinical characteristics and chest CT imaging features of critically ill COVID-19 patients. Eur. Radiol. 2020, 30, 6151–6160. [Google Scholar] [CrossRef]

- Xie, X.; Zhong, Z.; Zhao, W.; Zheng, C.; Wang, F.; Liu, J. Chest CT for Typical Coronavirus Disease 2019 (COVID-19) Pneumonia: Relationship to Negative RT-PCR Testing. Radiology 2020, 296, E41–E45. [Google Scholar] [CrossRef] [Green Version]

- Zhao, W.; Zhong, Z.; Xie, X.; Yu, Q.; Liu, J. Relation Between Chest CT Findings and Clinical Conditions of Coronavirus Disease (COVID-19) Pneumonia: A Multicenter Study. AJR Am. J. Roentgenol. 2020, 214, 1072–1077. [Google Scholar] [CrossRef]

- Hani, C.; Trieu, N.H.; Saab, I.; Dangeard, S.; Bennani, S.; Chassagnon, G. COVID-19 pneumonia: A review of typical CT findings and differential diagnosis. Diagn. Interv. Imaging 2020, 101, 263–268. [Google Scholar] [CrossRef]

- Liu, K.-C.; Xu, P.; Lv, W.-F.; Qiu, X.-H.; Yao, J.-L.; Gu, J.-F. CT manifestations of coronavirus disease-2019: A retrospective analysis of 73 cases by disease severity. Eur. J. Radiol. 2020, 126, 108941. [Google Scholar] [CrossRef]

- Luo, N.; Zhang, H.; Zhou, Y.; Kong, Z.; Sun, W.; Huang, N. Utility of chest CT in diagnosis of COVID-19 pneumonia. Diagn. Interv. Radiol. 2020, 26, 437–442. [Google Scholar] [CrossRef]

- Chung, M.; Bernheim, A.; Mei, X.; Zhang, N.; Huang, M.; Zeng, X. CT Imaging Features of 2019 Novel Coronavirus (2019-nCoV). Radiology 2020, 295, 202–207. [Google Scholar] [CrossRef] [Green Version]

- Wang, Y.-C.; Luo, H.; Liu, S.; Huang, S.; Zhou, Z.; Yu, Q. Dynamic evolution of COVID-19 on chest computed tomography: Experience from Jiangsu Province of China. Eur. Radiol. 2020, 30, 6194–6203. [Google Scholar] [CrossRef]

- Guan, W.; Ni, Z.; Hu, Y.; Liang, W.; Ou, C.; He, J. Clinical Characteristics of Coronavirus Disease 2019 in China. N. Engl. J. Med. 2020, 382, 1708–1720. [Google Scholar] [CrossRef] [PubMed]

- Farina, D.; Rondi, P.; Botturi, E.; Renzulli, M.; Borghesi, A.; Guelfi, D.; Ravanelli, M. Gastrointestinal: Bowel ischemia in a suspected coronavirus disease (COVID-19) patient. J. Gastroenterol. Hepatol. 2021, 36, 41. [Google Scholar] [CrossRef] [PubMed]

- Brandi, N.; Ciccarese, F.; Rimondi, M.R.; Balacchi, C.; Modolon, C.; Sportoletti, C.; Golfieri, R. An Imaging Overview of COVID-19 ARDS in ICU Patients and Its Complications: A Pictorial Review. Diagnostics 2022, 12, 846. [Google Scholar] [CrossRef] [PubMed]

- Yang, Q.; Liu, Q.; Xu, H.; Lu, H.; Liu, S.; Li, H. Imaging of coronavirus disease 2019: A Chinese expert consensus statement. Eur. J. Radiol. 2020, 127, 109008. [Google Scholar] [CrossRef]

- Li, M. Chest CT features and their role in COVID-19. Radiol. Infect. Dis. 2020, 7, 51–54. [Google Scholar] [CrossRef]

- Greff, K.; Srivastava, R.; Jan, K.; Steunebrink, B. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232. [Google Scholar] [CrossRef] [Green Version]

- Skalic, M.; Jiménez, J.; Sabbadin, D.; De, G. Shape-based generative modeling for de novo drug design. J. Chem. Info. Model. 2019, 59, 1205–1214. [Google Scholar] [CrossRef]

- Pham, Q.V.; Nguyen, D.C.; Huynh-The, T.; Hwang, W.J.; Pathirana, P.N. Artificial intelligence (AI) and big data for coro-navirus (COVID-19) pandemic: A survey on the state-of-the-arts. IEEE Access 2020, 8, 130820–130839. [Google Scholar] [CrossRef]

- Mobiny, A.; Cicalese, P.A.; Zare, S.; Yuan, P.; Abavisani, M.; Wu, C.C.; Van Nguyen, H. Radiologist-level COVID-19 detection using CT scans with detail-oriented capsule networks. arXiv 2020, arXiv:2004.07407. [Google Scholar] [CrossRef]

- Amyar, A.; Modzelewski, R.; Li, H.; Ruan, S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation. Comput. Biol. Med. 2020, 126, 104037. [Google Scholar] [CrossRef]

- Polsinelli, M.; Cinque, L.; Placidi, G. A light CNN for detecting COVID-19 from CT scans of the chest. Pattern Recognit. Lett. 2020, 140, 95–100. [Google Scholar] [CrossRef] [PubMed]

- Li, L.; Qin, L.; Xu, Z.; Yin, Y.; Wang, X.; Kong, B.; Xia, J. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: Evaluation of the diagnostic accuracy. Radiology 2020, 296, E65–E71. [Google Scholar] [CrossRef] [PubMed]

- Konar, D.; Panigrahi, B.K.; Bhattacharyya, S.; Dey, N.; Jiang, R. Auto-diagnosis of COVID-19 using lung CT images with semi-supervised shallow learning network. IEEE Access 2021, 9, 28716–28728.

- Wu, Y.H.; Gao, S.H.; Mei, J.; Xu, J.; Fan, D.P.; Zhang, R.G.; Cheng, M.M. JCS: An explainable COVID-19 diagnosis system by joint classification and segmentation. IEEE Trans. Image Process. 2021, 30, 3113–3126.

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

- Li, B.; Acton, S.T. Automatic active model initialization via Poisson inverse gradient. IEEE Trans. Image Process. 2008, 17, 1406–1420.

- Tello-Mijares, S.; Woo, L. Computed tomography image processing analysis in COVID-19 patient follow-up assessment. J. Healthc. Eng. 2021, 2021, 8869372.

- Balacchi, C.; Brandi, N.; Ciccarese, F.; Coppola, F.; Lucidi, V.; Bartalena, L.; Golfieri, R. Comparing the first and the second waves of COVID-19 in Italy: Differences in epidemiological features and CT findings using a semi-quantitative score. Emerg. Radiol. 2021, 28, 1055–1061.

- Canny, J.F. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

- Tello-Mijares, S.; Bescós, J. Region-based multifocus image fusion for the precise acquisition of Pap smear images. J. Biomed. Opt. 2018, 23, 056005.

- De Jaegere, T.M.; Krdzalic, J.; Fasen, B.A.; Kwee, R.M. COVID-19 CT Investigators South-East Netherlands (CISEN) study group. Radiological Society of North America chest CT classification system for reporting COVID-19 pneumonia: Interobserver variability and correlation with RT-PCR. Radiol. Cardiothorac. Imaging 2020, 2, e200213.

- Wu, J.; Wu, X.; Zeng, W.; Guo, D.; Fang, Z.; Chen, L. Chest CT Findings in Patients with Coronavirus Disease 2019 and Its Relationship with Clinical Features. Invest. Radiol. 2020, 55, 257–261.

- Wang, Y.; Dong, C.; Hu, Y.; Li, C.; Ren, Q.; Zhang, X. Temporal Changes of CT Findings in 90 Patients with COVID-19 Pneumonia: A Longitudinal Study. Radiology 2020, 296, E55–E64.

- Prokop, M.; Van Everdingen, W.; Van Rees Vellinga, T.; Quarles Van Ufford, J.; Stöger, L.; Beenen, L. CO-RADS—A categorical CT assessment scheme for patients with suspected COVID-19: Definition and evaluation. Radiology 2020, 296, E97–E104.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Ajit, A.; Acharya, K.; Samanta, A. A review of convolutional neural networks. In Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, 24–25 February 2020; pp. 1–5.

- Vargas-Hákim, G.A.; Mezura-Montes, E.; Acosta-Mesa, H.G. A Review on Convolutional Neural Networks Encodings for Neuroevolution. IEEE Trans. Evol. Comput. 2022, 26, 12–27.

- Heravi, E.J.; Aghdam, H.H.; Puig, D. Classification of Foods Using Spatial Pyramid Convolutional Neural Network. In Frontiers in Artificial Intelligence and Applications; IOS Press: Amsterdam, The Netherlands, 2016; pp. 163–168.

- Greenhalgh, T.; Wherton, J.; Shaw, S.; Morrison, C. Video consultations for COVID-19. BMJ 2020, 368, m998.

- Ohannessian, R.; Duong, T.A.; Odone, A. Global Telemedicine Implementation and Integration within Health Systems to Fight the COVID-19 Pandemic: A Call to Action. JMIR Public Health Surveill. 2020, 6, e18810.

- Zijdenbos, A.; Dawant, B.; Margolin, R.; Palmer, A. Morphometric analysis of white matter lesions in MR images: Method and validation. IEEE Trans. Med. Imag. 1994, 13, 716–724.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).