Abstract

This paper presents a hybrid grey wolf optimizer combined with a clonal selection algorithm (pGWO-CSA) to overcome the disadvantages of the standard grey wolf optimizer (GWO), such as slow convergence, low accuracy on single-peak functions, and a tendency to fall into local optima on multi-peak functions and complex problems. The modifications in pGWO-CSA fall into three aspects. Firstly, a nonlinear function is used instead of a linear function to adjust the iterative attenuation of the convergence factor, so as to balance exploitation and exploration automatically. Secondly, the position-updating strategy is redesigned so that the optimal α wolf is not affected by the β and δ wolves, which have poorer fitness, and the second-best β wolf is not affected by the δ wolf, which has lower fitness. Finally, the cloning and super-mutation of the clonal selection algorithm (CSA) are introduced into GWO to enhance its ability to jump out of local optima. In the experimental part, 15 benchmark functions are selected for function optimization tasks to further reveal the performance of pGWO-CSA. According to the statistical analysis of the experimental data, pGWO-CSA is superior to the classical swarm intelligence algorithms, GWO, and the related GWO variants considered. Furthermore, to verify the applicability of the algorithm, it was applied to the robot path-planning problem and obtained excellent results.

1. Introduction

A metaheuristic algorithm is an improvement of a heuristic algorithm that combines randomized algorithms with local search to carry out optimization tasks. Metaheuristic optimization has seen several recent developments. Jiang X et al. proposed optimal pathfinding with a beetle antennae search algorithm using ant colony optimization initialization and different searching strategies [1]. Khan A H et al. proposed BAS-ADAM, an ADAM-based approach to improving the performance of the beetle antennae search optimizer [2]. Ye et al. proposed a modified multi-objective cuckoo search mechanism and applied it to the obstacle avoidance problem of multiple uncrewed aerial vehicles (UAVs), seeking a safe route by optimizing the coordinated formation control of the UAVs so that the horizontal airspeed, yaw angle, altitude, and altitude rate converge to the expected level within a given time, for inverse kinematics and optimization [3]. Khan et al. proposed using the social behavior of beetles to establish a computational model for operational management [4]. As one of the latest metaheuristic algorithms, the grey wolf optimizer (GWO) is widely employed to settle real industrial issues because it maintains a balance between exploitation and exploration through dynamic parameters and has a strong ability to explore the rugged search space of a problem [5,6]. Its applications include the selection problem [7,8,9], privacy protection issue [10], adaptive weight problem [11], smart home scheduling problem [12], prediction problem [13,14,15], classification problem [16], and optimization problem [17,18,19,20].

Although theoretical analysis and industrial applications of GWO have produced fruitful results, some shortcomings still hinder its further development, such as slow convergence and low accuracy on single-peak functions and a tendency to fall into local optima on multi-peak functions and complex problems. Recently, various GWO variants have been investigated to overcome these shortcomings. Mittal et al. proposed a modified GWO (mGWO) to address the balance between exploitation and exploration in GWO. Its main contribution is to use an exponential function instead of a linear function to adjust the iterative attenuation of parameter a. Experimental results showed that mGWO improves the effectiveness, efficiency, and stability of GWO [21]. Saxena et al. proposed β-GWO, which improves the exploitation and exploration of GWO by embedding a β-chaotic sequence into parameter a through a normalized mapping method. Experimental results demonstrated that β-GWO has good exploitation and exploration performance [22]. Long et al. proposed the exploration-enhanced GWO (EEGWO) to overcome GWO’s weakness of good exploitation but poor exploration, based on two modifications. First, a random individual is used to guide the search for new candidate individuals. Second, a nonlinear control parameter strategy is introduced to obtain a good exploitation effect while avoiding poor exploration. Experimental results illustrated that the proposed EEGWO algorithm significantly improves the performance of GWO [23]. Gupta and Deep proposed RW-GWO to improve the search capability of GWO. Its main contribution is an improved method based on a random walk. Experimental results showed that RW-GWO provides better leadership in the search for prey [24]. Teng et al. proposed PSO_GWO to address the slow convergence and low accuracy of the grey wolf optimization algorithm. Its main contribution has three aspects: firstly, a Tent chaotic sequence is used to initialize individual positions; secondly, a nonlinear control parameter is used; finally, particle swarm optimization (PSO) is combined with GWO. Experimental results showed that PSO_GWO searches for the global optimal solution more effectively and is more robust [25]. Gupta et al. proposed SC-GWO to address the balance between exploitation and exploration. Its main contribution is the combination of the sine and cosine algorithm (SCA) with GWO. Experimental results showed that SC-GWO is robust to problem scalability [26]. To improve the performance of GWO on complex and multimodal functions, Yu et al. proposed an opposition-based learning grey wolf optimizer (OGWO). Without increasing the computational complexity, the algorithm integrates opposition-based learning into GWO in the form of a jump rate, which helps the algorithm jump out of local optima [27]. To improve the iterative convergence speed of GWO, Zhang et al. modified the algorithm flow of GWO and proposed two dynamic GWOs (DGWO1 and DGWO2) [28].

Although these GWO variants improve the convergence speed and accuracy on single-peak functions and the ability to jump out of local optima on multi-peak functions and complex problems, they still suffer from slow convergence, low accuracy, and a tendency to fall into local optima on some complex problems. To overcome these shortcomings, this paper proposes an improved GWO that is combined with a clonal selection algorithm (CSA) to improve the convergence speed, the accuracy, and the ability to jump out of local optima of the standard GWO. The proposed algorithm is called pGWO-CSA. Its core improvements are as follows:

Firstly, a nonlinear function is used instead of a linear function for adjusting the iterative attenuation of the convergence factor to balance exploitation and exploration automatically.

Secondly, the pGWO-CSA adopts a new position updating strategy, and the position updating of α wolf is no longer affected by the wolves β and δ with poor fitness. The position updating of the β wolf is no longer affected by the low fitness value of the δ wolf.

Finally, the pGWO-CSA combines GWO with CSA and introduces the cloning and super-mutation of the CSA into GWO to improve GWO’s ability to jump out of local optimum.

In the experimental part, 15 benchmark functions are selected for function optimization tasks to further reveal the performance of pGWO-CSA. Firstly, pGWO-CSA is compared with other swarm intelligence algorithms: particle swarm optimization (PSO) [29], differential evolution (DE) [30], and the firefly algorithm (FA) [31]. Then pGWO-CSA is compared with GWO [5] and its variants OGWO [27], DGWO1, and DGWO2 [28]. According to the statistical analysis of the experimental data, pGWO-CSA is superior to these classical swarm intelligence algorithms, GWO, and the related variants.

The remaining sections are organized as follows. Section 2 introduces GWO and CSA, Section 3 describes the improvements in pGWO-CSA and the reasons for them in detail, Section 4 presents the experimental tests, Section 5 addresses the robot path-planning problem, and Section 6 summarizes the paper.

2. GWO and CSA

This section mainly introduces the relevant concepts and algorithm ideas of GWO and CSA to provide theoretical support for subsequent improvement research.

2.1. GWO

GWO is a swarm intelligence algorithm proposed by Mirjalili et al. in 2014, which is inspired by the hunting behavior of grey wolves [5]. In nature, grey wolves like to live in packs and have a very strict social hierarchy. There are four types of wolves in the pack, ranked from highest to lowest in the social hierarchy: the α wolf, β wolf, δ wolf, and ω wolf. The GWO is also based on the social hierarchy of grey wolves and their hunting behavior, and its specific mathematical model is as follows.

(1) Surround the prey

In the process of hunting, in order to surround the prey, it is necessary to calculate the distance between the current grey wolf and the prey and then update the position according to the distance. The behavior of grey wolves rounding up prey is defined as follows:

$$X(t+1) = X_p(t) - A \cdot D \tag{1}$$

and

$$D = \left| C \cdot X_p(t) - X(t) \right| \tag{2}$$

where Formula (1) is the updating formula of the grey wolf’s position, and Formula (2) is the calculation formula of the distance between the grey wolf individual and the prey. Variable $t$ is the current iteration number, and $X_p(t)$ and $X(t)$ are the current position vectors of the prey and the grey wolf at iteration $t$, respectively. $A$ and $C$ are coefficient vectors calculated by Formula (3) and Formula (4), respectively.

$$A = 2a \cdot r_1 - a \tag{3}$$

$$C = 2 \cdot r_2 \tag{4}$$

and

$$a = 2 - \frac{2t}{T_{max}} \tag{5}$$

where $a$ is the convergence factor, which linearly decreases from 2 to 0 as the number of iterations increases. $r_1$ and $r_2$ are random vectors in [0, 1]. Formula (5) is the calculation formula of $a$, and $T_{max}$ indicates the maximum number of iterations.
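As an illustrative sketch of the encircling step, the snippet below applies Formulas (1)–(5) to a single wolf, coordinate by coordinate. The function names `linear_a` and `encircle`, the sample positions, and the fixed random seed are assumptions made for illustration and are not taken from the paper.

```python
import random


def linear_a(t, t_max):
    """Formula (5): convergence factor decreasing linearly from 2 to 0."""
    return 2.0 - 2.0 * t / t_max


def encircle(x, x_prey, a, rng):
    """One encircling update of a single wolf, following Formulas (1)-(4)."""
    new_x = []
    for xi, pi in zip(x, x_prey):
        r1, r2 = rng.random(), rng.random()
        A = 2.0 * a * r1 - a      # Formula (3): lies in [-a, a]
        C = 2.0 * r2              # Formula (4): lies in [0, 2]
        D = abs(C * pi - xi)      # Formula (2): distance to the prey
        new_x.append(pi - A * D)  # Formula (1): new position
    return new_x


rng = random.Random(0)            # fixed seed, only for reproducibility
wolf = [4.0, -3.0]                # hypothetical current position
prey = [1.0, 1.0]                 # hypothetical prey position
moved = encircle(wolf, prey, linear_a(t=499, t_max=500), rng)
```

When $a$ reaches 0 the coefficient $A$ vanishes, so the wolf lands exactly on the prey position; larger values of $a$ leave room for steps that overshoot or move away from it.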

(2) Hunting

In an abstract search space, the position of the optimal solution is uncertain. In order to simulate the hunting behavior of grey wolves, the α, β, and δ wolves are assumed to have a better understanding of the potential location of the prey. The α wolf is regarded as the optimal solution, the β wolf as the suboptimal solution, and the δ wolf as the third optimal solution. The other grey wolves update their positions based on the α, β, and δ wolves, and the calculation formulas are as follows:

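$$D_\alpha = \left| C_1 \cdot X_\alpha - X \right|, \quad D_\beta = \left| C_2 \cdot X_\beta - X \right|, \quad D_\delta = \left| C_3 \cdot X_\delta - X \right| \tag{6}$$

$$X_1 = X_\alpha - A_1 \cdot D_\alpha, \quad X_2 = X_\beta - A_2 \cdot D_\beta, \quad X_3 = X_\delta - A_3 \cdot D_\delta \tag{7}$$

and

$$X(t+1) = \frac{X_1 + X_2 + X_3}{3} \tag{8}$$

where $D_\alpha$ represents the distance between the current grey wolf and the α wolf; $D_\beta$ represents the distance between the current grey wolf and the β wolf; $D_\delta$ represents the distance between the current grey wolf and the δ wolf; and $X_\alpha$, $X_\beta$, and $X_\delta$ represent the position vectors of the α wolf, β wolf, and δ wolf, respectively. $X$ is the current position of the grey wolf. $C_1$, $C_2$, and $C_3$ are random vectors, calculated by Formula (4). $A_1$, $A_2$, and $A_3$ are determined by Formula (3). Formula (7) represents the step length and direction of grey wolf individuals toward the α, β, and δ wolves, and Formula (8) is the position-updating formula of grey wolf individuals.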
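The hunting step described above can be sketched as follows, assuming a minimization problem in which the three wolves with the lowest objective values act as α, β, and δ. The helper names `hunt_step` and `sphere`, the population size, and the search range are illustrative assumptions rather than details from the paper.

```python
import random


def hunt_step(wolves, fitness, a, rng):
    """Move every wolf toward the three best wolves, per Formulas (6)-(8)."""
    ranked = sorted(wolves, key=fitness)      # minimization: best first
    leaders = ranked[:3]                      # alpha, beta, delta
    new_wolves = []
    for x in wolves:
        new_x = []
        for j, xj in enumerate(x):
            candidates = []
            for leader in leaders:
                A = 2.0 * a * rng.random() - a        # Formula (3)
                C = 2.0 * rng.random()                # Formula (4)
                D = abs(C * leader[j] - xj)           # Formula (6)
                candidates.append(leader[j] - A * D)  # Formula (7)
            new_x.append(sum(candidates) / 3.0)       # Formula (8)
        new_wolves.append(new_x)
    return new_wolves


def sphere(x):
    """Hypothetical test objective: sum of squares."""
    return sum(v * v for v in x)


rng = random.Random(1)
pack = [[rng.uniform(-10.0, 10.0) for _ in range(2)] for _ in range(6)]
pack = hunt_step(pack, sphere, a=1.0, rng=rng)
```

Because Formula (8) averages the three candidate positions with equal weight, every wolf, including the current α wolf, is pulled toward β and δ as well. Section 3.2 revisits exactly this property.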

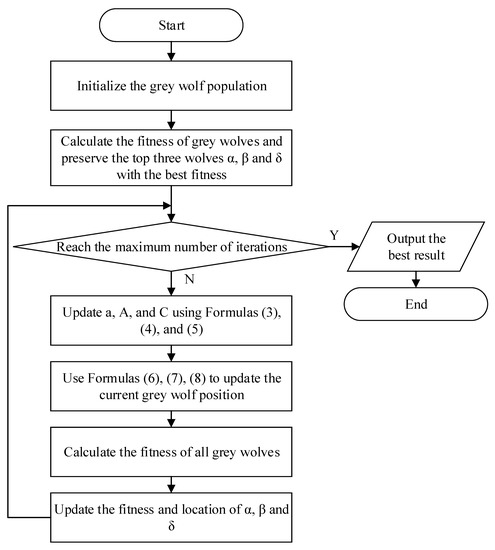

According to the description above, the algorithm flow chart of GWO is shown in Figure 1.

Figure 1. Flow chart of GWO algorithm.

2.2. CSA

The CSA was proposed by De Castro and Von Zuben in 2002 according to the clonal selection theory [32]. The CSA simulates the mechanism of immunological multiplication, mutation, and selection and is widely used in many problems.

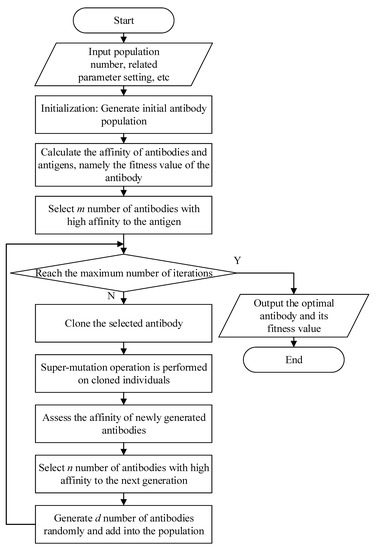

For convenience of model design, the principle of the biological immune system is simplified. All substances that do not belong to the body itself are regarded as antigens. When the immune system is stimulated by antigens, antibodies are produced that bind specifically to the antigens. The stronger the association between antigen and antibody, the higher the affinity. The antibodies with high antigen affinity are then selected to multiply and differentiate, bind to the antigens, increase their antigen affinity through super-mutation, and finally eliminate the antigens. In addition, some of the antibodies are converted into memory cells in order to respond quickly to the same or similar antigens in the future. In the CSA, the problem to be solved is regarded as the antigen, and a solution to the problem is regarded as an antibody. At the same time, the receptor-editing mechanism is adopted to avoid falling into a local optimum. The flow chart of CSA is shown in Figure 2.

Figure 2. Flow chart of CSA.
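A minimal sketch of one clonal selection generation is given below to make the select, clone, mutate, and reselect cycle concrete. It is not the CSA of [32]: the Gaussian mutation, the rank-based mutation strength, the fixed number of clones, and the names `csa_step` and `sphere` are all assumptions, and memory cells and receptor editing are omitted.

```python
import random


def csa_step(antibodies, affinity, n_select, n_clones, rng, scale=1.0):
    """One generation: select, clone, super-mutate, reselect (minimization)."""
    ranked = sorted(antibodies, key=affinity)
    survivors = ranked[:n_select]             # antibodies with the best affinity
    pool = list(survivors)                    # parents stay in the pool
    for rank, ab in enumerate(survivors):
        # Assumption: worse-ranked antibodies are mutated more strongly.
        step = scale * (rank + 1) / n_select
        for _ in range(n_clones):
            pool.append([v + rng.gauss(0.0, step) for v in ab])
    pool.sort(key=affinity)
    return pool[:len(antibodies)]             # keep the population size fixed


def sphere(x):
    """Hypothetical antigen: minimize the sum of squares."""
    return sum(v * v for v in x)


rng = random.Random(2)
population = [[rng.uniform(-5.0, 5.0) for _ in range(2)] for _ in range(10)]
for _ in range(20):
    population = csa_step(population, sphere, n_select=5, n_clones=3, rng=rng)
```

Keeping the selected parents in the pool means the best affinity found so far can never get worse from one generation to the next, which is the property GWO borrows when a mutated clone only replaces a wolf if it is better.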

The above is the introduction of the GWO and CSA. The proposed algorithm in this paper is also inspired by GWO and CSA. The pGWO-CSA proposed in this paper is introduced in detail in Section 3 below.

3. The Proposed pGWO-CSA

To improve the convergence speed and accuracy on single-peak functions and the ability to jump out of local optima on multi-peak functions and complex problems, pGWO-CSA makes three modifications. Firstly, a nonlinear function is used instead of a linear function to adjust the iterative attenuation of the convergence factor, so as to balance exploitation and exploration automatically. Secondly, pGWO-CSA adopts a new position-updating strategy in which different rules are used for the α wolf, the β wolf, and the other wolves, so that the position updates of the α wolf and β wolf are not affected by wolves with lower fitness. Finally, pGWO-CSA combines GWO with CSA and introduces the cloning and super-mutation of the CSA into GWO.

The detailed improvement strategy is as follows.

3.1. Replace Linear Function with Nonlinear Function

In GWO, $a$ decreases from 2 to 0 as the number of iterations increases, and the range of $A$ decreases as $a$ decreases. According to Formulas (6) and (7), when $|A| < 1$, the next position of the grey wolf can be anywhere between the current position and the prey, and the grey wolf approaches the prey guided by α, β, and δ. When $|A| > 1$, the grey wolf moves away from the current α, β, and δ wolves and searches for the optimal global value. Therefore, when $|A| < 1$, grey wolves approach their prey for exploitation. When $|A| > 1$, grey wolves move away from their prey for exploration. In the original GWO, the parameter $a$ linearly decreases from 2 to 0, with half of the iterations devoted to exploitation and half to exploration. In order to balance exploitation and exploration, pGWO-CSA adopts a nonlinear function instead of a linear function to adjust the iterative attenuation of parameter $a$ so as to enhance the exploration ability of the grey wolf at the early stage of iteration. In pGWO-CSA, parameter $a$ is calculated by Formula (9).

where variable $t$ is the current iteration number, $T_{max}$ is the maximum iteration number, and $u$ is a coefficient whose value in this paper is 2.

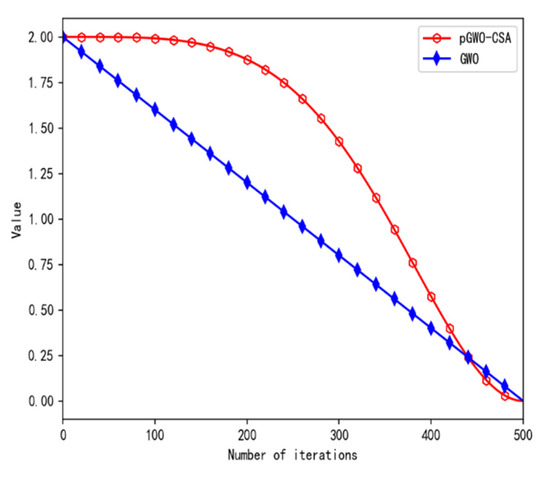

Iterative curves of parameter $a$ in the original GWO and pGWO-CSA are shown in Figure 3.

Figure 3. Iterative curve of parameter $a$.

As can be seen from Figure 3, the convergence factor in pGWO-CSA decays slowly in the early stage, which improves the global search ability, and decays rapidly in the later stage, which accelerates the search and balances the global exploration and local exploitation abilities of the algorithm.
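To make the difference between the two schedules concrete, the sketch below compares the linear factor of the original GWO with one nonlinear schedule that matches the description above (it starts at 2, ends at 0, decays slowly early and quickly late, and has coefficient u = 2). The power-law form in `nonlinear_a` is an assumption chosen to fit that description and is not necessarily the paper's Formula (9).

```python
def linear_a(t, t_max):
    """Original GWO: a decreases linearly from 2 to 0."""
    return 2.0 - 2.0 * t / t_max


def nonlinear_a(t, t_max, u=2.0):
    """ASSUMED nonlinear schedule: slow decay early, fast decay late."""
    return 2.0 - 2.0 * (t / t_max) ** u


t_max = 500
# (iteration, linear a, assumed nonlinear a) at a few checkpoints
table = [(t, linear_a(t, t_max), nonlinear_a(t, t_max))
         for t in (0, 125, 250, 375, 500)]
```

Under this assumed form with u = 2, the factor stays above 1 until t/T_max reaches about 0.71, compared with 0.5 for the linear schedule, so values with |A| > 1 remain possible for a larger share of the run.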

3.2. Improve the Grey Wolf Position Updating Strategy

In the original grey wolf algorithm, the positions of all grey wolves in each iteration are updated by Formulas (6)–(8). In the position-updating strategy, the position updating of the α wolf is affected by the β wolf and δ wolf with poor fitness. The position updating of the β wolf is affected by the δ wolf with poor fitness.

Therefore, a new location updating strategy is proposed in this paper. In each iteration, the fitness of grey wolves is calculated, and the top three wolves α, β, and δ with the best fitness are saved and recorded. The specific location update formula is as follows.

where $X_1$, $X_2$, and $X_3$ are determined by Formula (7), and $X(t)$ represents the pre-update position. On this basis, if the current α wolf and β wolf are close to the optimal solution, they have a greater probability of moving to a position with better fitness, so as to better guide the other wolves to hunt the prey and find the optimal solution. If the α wolf and β wolf are in a local optimum, the other wolves still update their positions according to Formulas (6)–(8), so the algorithm does not become trapped in that local optimum. Therefore, the proposed method improves the exploitation capability without impairing the exploration capability.
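One way to read this strategy is sketched below: the α wolf moves using only the candidate derived from itself, the β wolf averages the candidates derived from α and β, and all other wolves use Formula (8). This rule is an assumption inferred from the prose, not a transcription of Formula (10), and any comparison with the pre-update position is left out. The names `leader_candidates` and `rank_aware_update` are hypothetical.

```python
import random


def leader_candidates(x, leaders, a, rng):
    """X1, X2, X3 of Formula (7) for one wolf, coordinate by coordinate."""
    cands = []
    for leader in leaders:
        pos = []
        for xj, lj in zip(x, leader):
            A = 2.0 * a * rng.random() - a          # Formula (3)
            C = 2.0 * rng.random()                  # Formula (4)
            pos.append(lj - A * abs(C * lj - xj))   # Formulas (6) and (7)
        cands.append(pos)
    return cands


def rank_aware_update(x, role, leaders, a, rng):
    """ASSUMED rule: alpha uses X1, beta uses X1 and X2, others use all three."""
    x1, x2, x3 = leader_candidates(x, leaders, a, rng)
    if role == "alpha":
        used = [x1]
    elif role == "beta":
        used = [x1, x2]
    else:
        used = [x1, x2, x3]                         # Formula (8)
    return [sum(vals) / len(used) for vals in zip(*used)]


rng = random.Random(3)
leaders = [[0.0, 0.0], [2.0, 2.0], [4.0, 4.0]]      # hypothetical alpha, beta, delta
new_alpha = rank_aware_update(leaders[0], "alpha", leaders, a=0.5, rng=rng)
```

Under this reading, the better a wolf ranks, the fewer lower-ranked wolves influence its move, while the rest of the pack keeps the original three-leader average.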

3.3. Combine GWO with CSA

GWO is combined with CSA by introducing the cloning and super-mutation of the CSA into GWO, which improves the exploitation and exploration ability of GWO. For each grey wolf, a super-mutation coefficient (Sc) and a random number (r3) are introduced. A wolf with good fitness has a small super-mutation coefficient and therefore a small probability of mutation, while a wolf with poor fitness has a large probability of mutation. If the super-mutation coefficient Sc of the current grey wolf is greater than the random number r3, the current grey wolf is cloned, and the clone is then mutated through Formulas (6)–(8). If the mutated clone has better fitness, it replaces the current grey wolf. The specific calculation formulas are as follows.

and

where the super-mutation coefficient Sc is computed from the fitness of the current grey wolf, the fitness of the best wolf, and the fitness of the worst wolf; r3 is a random number in [0, 1]; the updated position is determined by Formula (10); and the retained position is the better of the current position and the position obtained by varying it through Formulas (6)–(8).
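The clone-and-mutate rule can be sketched as follows for a minimization problem. The normalization used for Sc (0 for the best wolf, 1 for the worst) is an assumption that matches the description above but may differ from the paper's formula, and `mutate` is a hypothetical callable standing in for the variation through Formulas (6)–(8).

```python
import random


def super_mutation_coeff(f, f_best, f_worst):
    """ASSUMED normalization: 0 for the best wolf, 1 for the worst."""
    if f_worst == f_best:
        return 0.0
    return (f - f_best) / (f_worst - f_best)


def clone_and_mutate(wolves, fitness, mutate, rng):
    """Clone wolves whose Sc exceeds r3; keep a clone only if it is better."""
    scores = [fitness(w) for w in wolves]
    f_best, f_worst = min(scores), max(scores)
    result = []
    for wolf, f in zip(wolves, scores):
        sc = super_mutation_coeff(f, f_best, f_worst)
        r3 = rng.random()
        if sc > r3:
            clone = mutate(list(wolf))     # clone first, then vary the clone
            if fitness(clone) < f:         # greedy replacement
                wolf = clone
        result.append(wolf)
    return result


def sphere(x):
    return sum(v * v for v in x)


def halve(x):
    """Hypothetical stand-in for the mutation through Formulas (6)-(8)."""
    return [0.5 * v for v in x]


pack = clone_and_mutate([[1.0, 0.0], [4.0, 0.0]], sphere, halve, random.Random(0))
```

With this normalization the best wolf is never mutated and the worst wolf always is, so the extra fitness evaluations are spent where the population is weakest.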

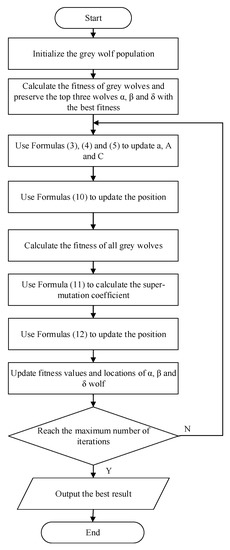

3.4. Algorithm Flow Chart of pGWO-CSA

According to the improvement idea mentioned above, the algorithm flow chart of pGWO-CSA is shown in Figure 4.

Figure 4. Flow chart of pGWO-CSA.

3.5. Time Complexity Analysis of the Algorithm

Assuming that the population size is N, the dimension of objective function F is Dim, and the number of iterations is T, the time complexity of the pGWO-CSA algorithm can be calculated as follows.

First, the time complexity required to initialize the grey wolf population is O(N × Dim), the time complexity required to calculate the fitness of all grey wolves is O(N × F (Dim)), and the time complexity required to preserve the location of the best three wolves is O(3 × Dim).

Then, in each iteration, the time complexity required to update the positions of all grey wolves is O(N × Dim), the time complexity required to update a, A, and C is O(1), and the time complexity required to calculate the fitness of all grey wolves is O(N × F(Dim)). The time complexity of cloning and super-mutation is O(N1 × Dim), where N1 ≤ N is the number of wolves meeting the mutation condition, and the time complexity of updating the fitness and location of α, β, and δ is O(3 × Dim). Over T iterations, the iterative part therefore costs O(T × N × Dim) + O(T) + O(T × N × F(Dim)) + O(T × N1 × Dim) + O(T × 3 × Dim).

In the worst case, N1 = N, so the time complexity of the whole algorithm is O(N × Dim) + O(N × F(Dim)) + O(3 × Dim) + O(T × N × Dim) + O(T) + O(T × N × F(Dim)) + O(T × N × Dim) + O(T × 3 × Dim) ≈ O(T × N × (Dim + F(Dim))).
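As a rough worked example, the terms of the worst-case expression can be evaluated for the settings used in the experiments (N = 30, T = 500, Dim = 30). Treating every O(·) term as a count with unit constant is a simplification, and the function name `cost_terms` is hypothetical.

```python
def cost_terms(n, dim, t):
    """Evaluate the terms of the worst-case expression (N1 = N)."""
    arithmetic = (
        n * dim          # initialize the population
        + 3 * dim        # store alpha, beta, delta
        + t * n * dim    # position updates
        + t              # update a, A, C
        + t * n * dim    # cloning and super-mutation, worst case
        + t * 3 * dim    # refresh alpha, beta, delta
    )
    fitness_calls = n + t * n   # each call costs F(Dim)
    return arithmetic, fitness_calls


arithmetic, fitness_calls = cost_terms(n=30, dim=30, t=500)
```

For these settings the two T × N × Dim terms account for 900,000 of the 946,490 counted operations, and the fitness is evaluated 15,030 times, which is why the total simplifies to O(T × N × (Dim + F(Dim))).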

4. Experimental Test

In this section, 15 benchmark functions, F1–F15, are selected to test the performance of pGWO-CSA. Table 1 describes these benchmark functions in detail. Section 4.1 compares pGWO-CSA with other swarm intelligence algorithms, and Section 4.2 compares it with GWO and its variants.

Table 1. Detailed description of the test functions F1–F15.

Among these benchmark functions, the first seven, F1–F7, are simpler, while the last eight, F8–F15, are more complex. Each benchmark function is tested in 30 and 50 dimensions. The population size of all algorithms is set to 30, the maximum number of iterations of all algorithms is set to 500, and all experimental data are measured on the same computer to ensure a fair comparison between different algorithms. To reduce the influence of randomness, each algorithm is run 30 times on each test function, and the mean, standard deviation, minimum, and maximum of the results are recorded.
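The evaluation protocol can be sketched as a small harness that repeats an optimizer over independent runs and reports the four statistics. The optimizer here is a placeholder random search, not pGWO-CSA; the `sphere` objective, the search range, the seeding scheme, and the use of the sample standard deviation are assumptions.

```python
import random
import statistics


def sphere(x):
    """Hypothetical objective: sum of squares."""
    return sum(v * v for v in x)


def random_search(objective, dim, pop_size, max_iter, rng):
    """Placeholder optimizer (NOT pGWO-CSA): best of uniformly sampled points."""
    best = float("inf")
    for _ in range(max_iter):
        for _ in range(pop_size):
            x = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
            best = min(best, objective(x))
    return best


def summarise(optimiser, objective, dim, runs=30, pop_size=30,
              max_iter=500, seed=0):
    """Run the optimizer `runs` times and report mean, std, min, and max."""
    results = [
        optimiser(objective, dim, pop_size, max_iter, random.Random(seed + r))
        for r in range(runs)
    ]
    return {
        "mean": statistics.mean(results),
        "std": statistics.stdev(results),   # sample standard deviation (assumed)
        "min": min(results),
        "max": max(results),
    }


# Reduced sizes so the demonstration runs quickly.
demo = summarise(random_search, sphere, dim=5, runs=30, pop_size=10, max_iter=50)
```

Giving every run its own seeded generator keeps the 30 runs independent while making the whole table reproducible.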

4.1. Compare with Other Swarm Intelligence Algorithms

In the comparison between pGWO-CSA and other swarm intelligence algorithms, PSO [29], DE [30], and FA [31] are selected to compare with pGWO-CSA. For each algorithm, the function optimization task is performed on the test functions F1–F15 in 30 dimensions and 50 dimensions, and the mean, standard deviation, and minimum and maximum values of the running results are recorded. The main parameters of the PSO, DE, FA, and pGWO-CSA are shown in Table 2.

Table 2. Main parameters of the four algorithms.

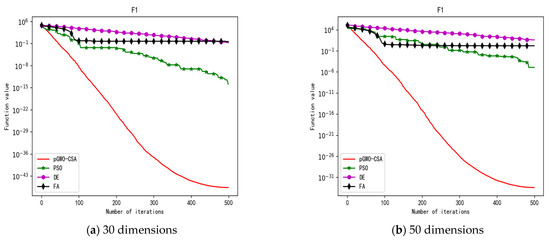

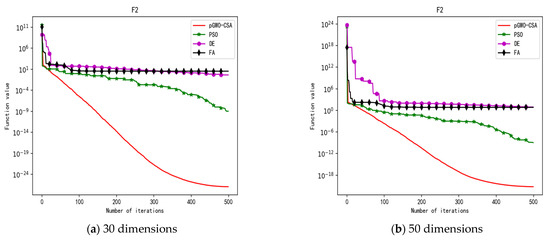

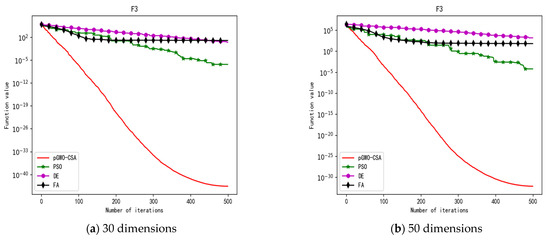

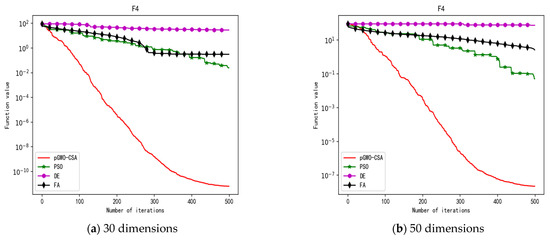

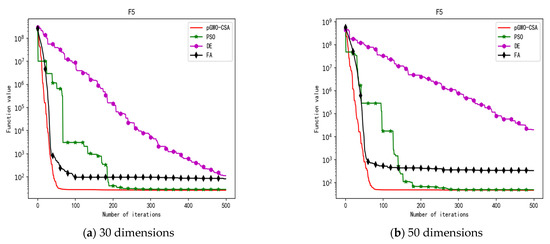

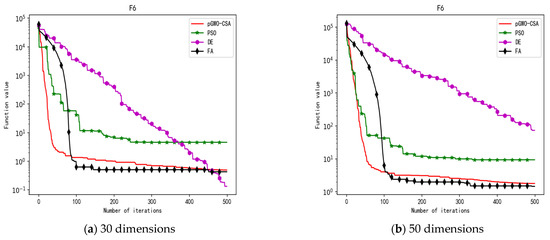

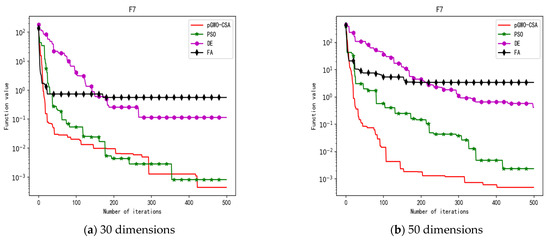

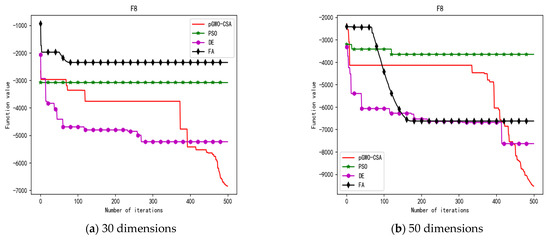

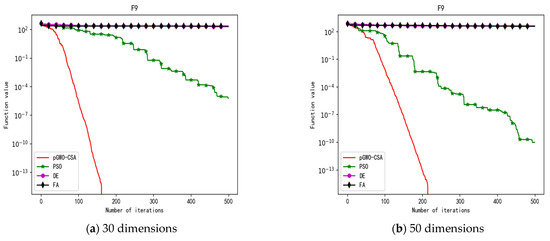

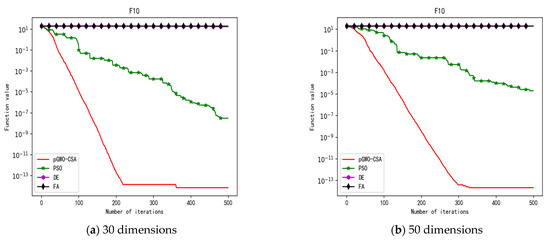

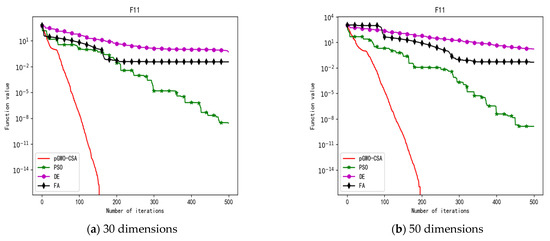

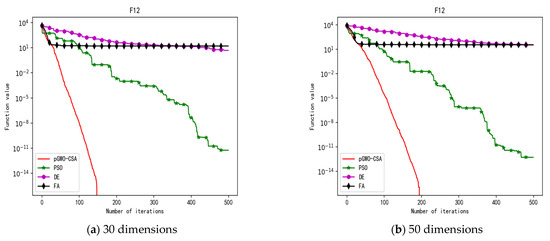

In 30 dimensions, the test results of these four algorithms on test functions F1–F15 are shown in Table 3. The corresponding convergence curves are shown in Figures 5a–19a.

Table 3. The experimental results under 30 dimensions.

Figure 5.

Convergence curve of test function F1.

Figure 6.

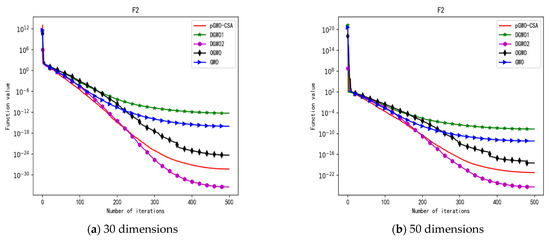

Convergence curve of test function F2.

Figure 7.

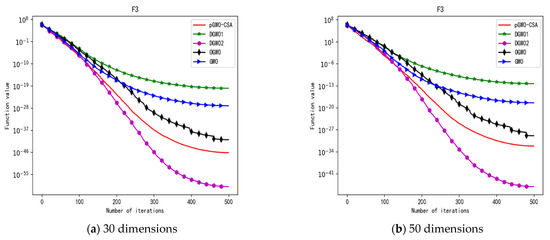

Convergence curve of test function F3.

Figure 8.

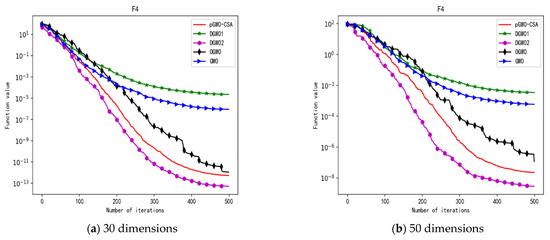

Convergence curve of test function F4.

Figure 9.

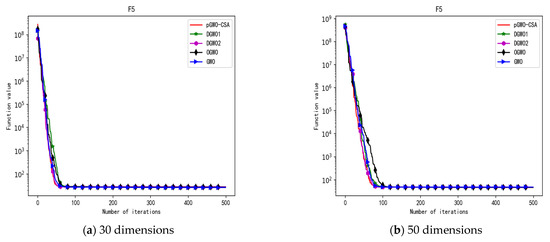

Convergence curve of test function F5.

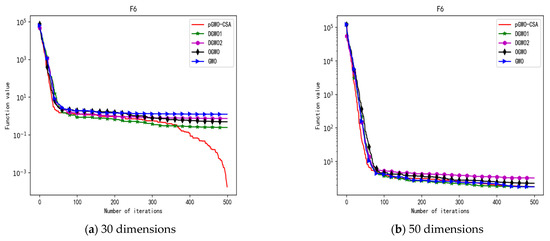

Figure 10.

Convergence curve of test function F6.

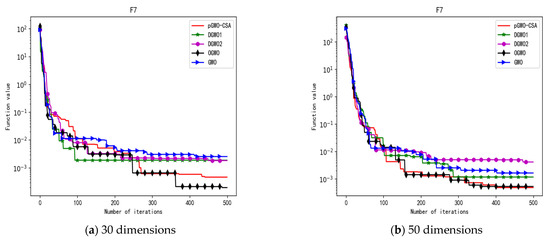

Figure 11.

Convergence curve of test function F7.

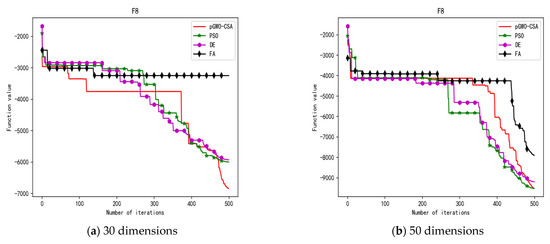

Figure 12.

Convergence curve of test function F8.

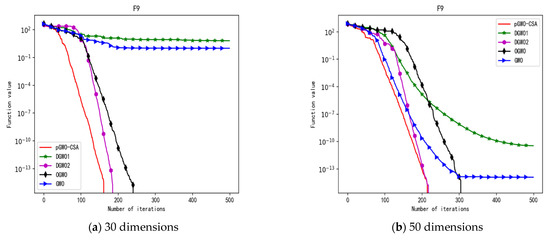

Figure 13.

Convergence curve of test function F9.

Figure 14.

Convergence curve of test function F10.

Figure 15.

Convergence curve of test function F11.

Figure 16.

Convergence curve of test function F12.

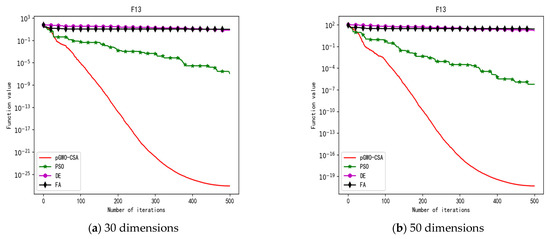

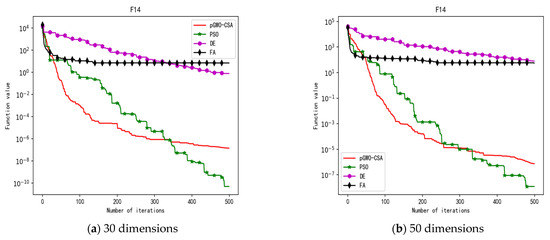

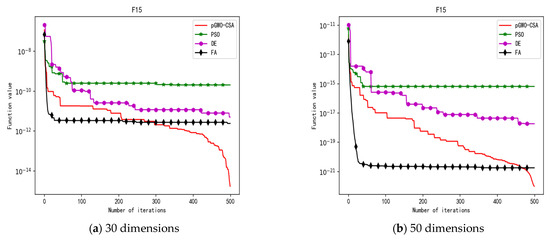

Figure 17.

Convergence curve of test function F13.

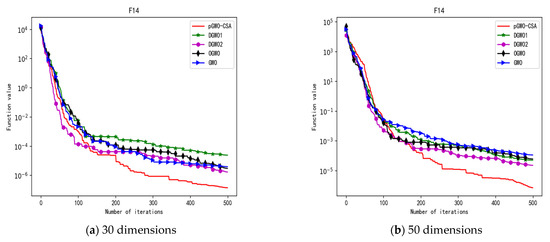

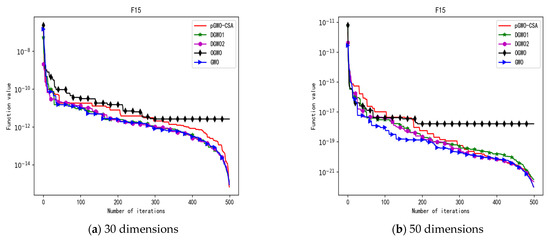

Figure 18.

Convergence curve of test function F14.

Figure 19.

Convergence curve of test function F15.

In terms of the mean, ranked by the number of optimal values, pGWO-CSA ranked first with 13 optimal values, PSO and DE tied for second place with one optimal value each, and FA ranked fourth with zero optimal values. pGWO-CSA outperformed PSO in 14 out of 15 test functions, with PSO better only on F14. It outperformed DE in 14 out of 15 test functions, with DE better only on F6. It outperformed FA in all 15 test functions.

In terms of the standard deviation, ranked by the number of optimal values, pGWO-CSA ranked first with 11 optimal values, PSO ranked second with three, FA ranked third with one, and DE ranked fourth with zero. pGWO-CSA outperformed PSO in 11 out of 15 test functions, with PSO better on F5, F6, F8, and F14. It outperformed DE in 13 out of 15 test functions, with DE better on F6 and F8. It outperformed FA in 14 out of 15 test functions, with FA better only on F6.

In terms of the minimum, ranked by the number of optimal values, pGWO-CSA ranked first with 13 optimal values, PSO ranked second with four, and DE and FA tied for third place with zero. pGWO-CSA outperformed PSO in 11 out of 15 test functions, and PSO outperformed pGWO-CSA on F7 and F14. On F9 and F12, the minimum values of pGWO-CSA and PSO are the same, and both algorithms find the minimum values of the functions. pGWO-CSA outperformed DE and FA in all 15 test functions.

In terms of the maximum, ranked by the number of optimal values, pGWO-CSA ranked first with 12 optimal values, DE ranked second with two, PSO ranked third with one, and FA ranked fourth with zero. pGWO-CSA outperformed PSO in 14 out of 15 test functions, with PSO better only on F14. It outperformed DE in 13 out of 15 test functions, with DE better on F6 and F8. It outperformed FA in 14 out of 15 test functions, with FA better only on F6.

In addition, pGWO-CSA found the theoretical optimal values on test functions F9, F11, and F12. On test functions F1, F2, F3, F4, F10, F11, F13, and F15, pGWO-CSA is superior to PSO, DE, and FA in terms of the mean, standard deviation, minimum, and maximum. Although PSO outperformed pGWO-CSA in all four statistics on test function F14, pGWO-CSA still outperformed DE and FA on that function.
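The ranking by number of optimal values used in this comparison can be sketched as a simple count in which every algorithm that reaches the best value on a function is credited, so ties are counted for each tied algorithm. The numbers below are hypothetical and are not taken from Table 3; the function name `count_optimal` is an assumption.

```python
def count_optimal(results):
    """results[function][algorithm] -> value, where smaller is better."""
    counts = {}
    for per_algorithm in results.values():
        best = min(per_algorithm.values())
        for algorithm, value in per_algorithm.items():
            counts.setdefault(algorithm, 0)
            if value == best:             # ties credit every tied algorithm
                counts[algorithm] += 1
    return counts


# Hypothetical values for three functions, NOT taken from Table 3.
demo = {
    "Fa": {"pGWO-CSA": 0.0, "PSO": 0.0, "DE": 1.2, "FA": 3.4},
    "Fb": {"pGWO-CSA": 1e-9, "PSO": 2e-3, "DE": 5e-2, "FA": 0.7},
    "Fc": {"pGWO-CSA": 4.1, "PSO": 2.5, "DE": 6.0, "FA": 9.9},
}
ranking = sorted(count_optimal(demo).items(), key=lambda kv: -kv[1])
```

Counting ties this way appears to be why the optimal-value counts for the minimum can add up to more than 15: the two functions on which pGWO-CSA and PSO share the same minimum are credited to both.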

In 50 dimensions, the test results of these four algorithms on test functions F1–F15 are shown in Table 4. The corresponding convergence curves are shown in Figures 5b–19b.

Table 4. The experimental results under 50 dimensions.

In terms of the mean, ranked by the number of optimal values, pGWO-CSA ranked first with 13 optimal values, PSO and FA tied for second place with one optimal value each, and DE ranked fourth with zero optimal values. pGWO-CSA outperformed PSO in 14 out of 15 test functions, with PSO better only on F14. It outperformed DE in all 15 test functions. It outperformed FA in 14 out of 15 test functions, with FA better only on F6.

In terms of the standard deviation, ranked by the number of optimal values, pGWO-CSA ranked first with 11 optimal values, PSO ranked second with three, FA ranked third with one, and DE ranked fourth with zero. pGWO-CSA outperformed PSO in 11 out of 15 test functions, with PSO better on F5, F6, F8, and F14. It outperformed DE in 14 out of 15 test functions, with DE better only on F8. It outperformed FA in 14 out of 15 test functions, with FA better only on F6.

In terms of the minimum, ranked by the number of optimal values, pGWO-CSA ranked first with 11 optimal values, PSO ranked second with four, FA ranked third with two, and DE ranked fourth with zero. pGWO-CSA outperformed PSO in 11 out of 15 test functions, and PSO outperformed pGWO-CSA on F7 and F14. On F9 and F12, the minimum values of pGWO-CSA and PSO are the same, and both algorithms find the minimum values of the functions. pGWO-CSA outperformed DE in all 15 test functions. It outperformed FA in 13 out of 15 test functions, with FA better on F6 and F8.

In terms of the maximum, ranked by the number of optimal values, pGWO-CSA ranked first with 12 optimal values, and PSO, DE, and FA tied for second place with one optimal value each. pGWO-CSA outperformed PSO in 14 out of 15 test functions, with PSO better only on F14. It outperformed DE in 14 out of 15 test functions, with DE better only on F8. It outperformed FA in 14 out of 15 test functions, with FA better only on F6.

In addition, pGWO-CSA found the theoretical optimal values on test functions F9, F11, and F12. On test functions F1, F2, F3, F4, F9, F10, F13, and F15, pGWO-CSA is superior to PSO, DE, and FA in terms of the mean, standard deviation, minimum, and maximum. Although pGWO-CSA is not as good as FA on test function F6 or as PSO on test function F14, it still outperformed the other two swarm intelligence algorithms on each of these functions.

Based on the above data and analysis, pGWO-CSA has faster convergence, higher accuracy, and a better ability to jump out of local optima than the other swarm intelligence algorithms in both 30 and 50 dimensions. To further verify its performance, pGWO-CSA is next compared with GWO and its variants.

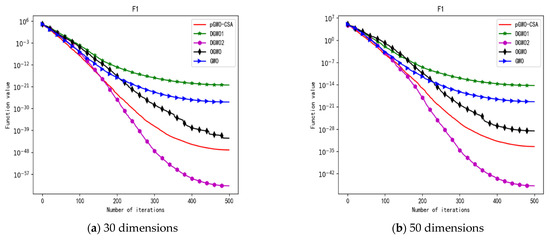

4.2. Compare with GWO and Its Variants

In order to further verify the performance of pGWO-CSA, pGWO-CSA is compared with GWO [5] and its variants OGWO [27], DGWO1, and DGWO2 [28] on the test functions F1–F15 in 30 dimensions and 50 dimensions. The main parameters of pGWO-CSA, GWO, OGWO, DGWO1, and DGWO2 are shown in Table 5.

Table 5. Main parameters of the five algorithms.

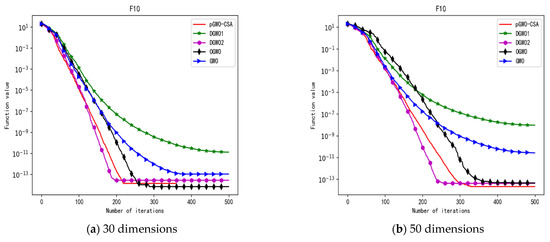

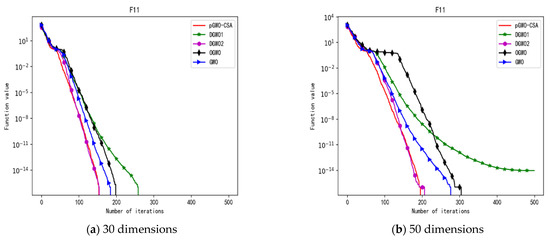

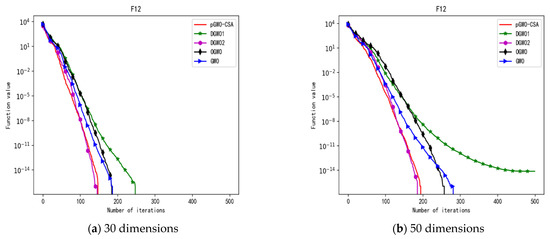

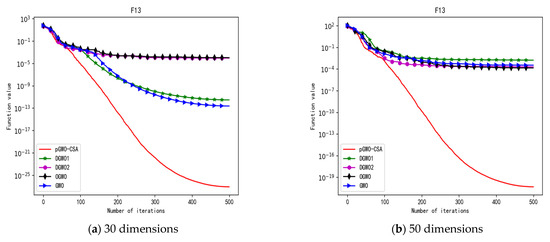

In 30 dimensions, the test results of these five algorithms on test functions F1–F15 are shown in Table 6, with the optimal values highlighted in bold. The corresponding convergence curves are shown in Figures 20a–34a.

Table 6. The experimental results under 30 dimensions.

Figure 20. Convergence curve of test function F1.

Figure 21. Convergence curve of test function F2.

Figure 22. Convergence curve of test function F3.

Figure 23. Convergence curve of test function F4.

Figure 24. Convergence curve of test function F5.

Figure 25. Convergence curve of test function F6.

Figure 26. Convergence curve of test function F7.

Figure 27. Convergence curve of test function F8.

Figure 28. Convergence curve of test function F9.

Figure 29. Convergence curve of test function F10.

Figure 30. Convergence curve of test function F11.

Figure 31. Convergence curve of test function F12.

Figure 32. Convergence curve of test function F13.

Figure 33. Convergence curve of test function F14.

Figure 34. Convergence curve of test function F15.

In 50 dimensions, the test results of these five algorithms on test functions F1–F15 are shown in Table 7. The corresponding convergence curves are shown in Figures 20b–34b.

Table 7. The experimental results under 50 dimensions.

For the 30-dimensional results in Table 6, the algorithms are first ranked by the number of optimal values they obtain for the mean. pGWO-CSA ranked first with nine optimal values, DGWO2 ranked second with six, and OGWO ranked third with two; DGWO1 and GWO tied for fourth place with one optimal value each. Compared with GWO, pGWO-CSA performed better in 14 out of 15 test functions. Compared with OGWO, pGWO-CSA performed better in 13 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test function F7. Compared with DGWO1, pGWO-CSA performed better in 14 out of 15 test functions. Compared with DGWO2, pGWO-CSA performed better in 9 out of 15 test functions, and DGWO2 outperformed pGWO-CSA only in test functions F1, F2, F3, F4, and F14.

In terms of the standard deviation, ranked by the number of optimal values, pGWO-CSA and DGWO2 tied for first place with seven optimal values each. DGWO1 and OGWO tied for third place with two optimal values each, and GWO ranked fifth with one. Compared with GWO, pGWO-CSA performed better in 11 out of 15 test functions, and GWO outperformed pGWO-CSA only in test functions F7 and F15. Compared with OGWO, pGWO-CSA performed better in 13 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test function F7. Compared with DGWO1, pGWO-CSA performed better in 13 out of 15 test functions, and DGWO1 outperformed pGWO-CSA only in test function F15. Compared with DGWO2, pGWO-CSA performed better in 7 out of 15 test functions, and DGWO2 outperformed pGWO-CSA in test functions F1, F2, F3, F4, F6, F7, and F14.

In terms of the minimum, ranked by the number of optimal values, pGWO-CSA and DGWO2 tied for first place with eight optimal values each. OGWO ranked third with six, and DGWO1 and GWO tied for fourth place with three optimal values each. Compared with GWO, pGWO-CSA performed better in 12 out of 15 test functions. Compared with OGWO, pGWO-CSA performed better in 8 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test functions F4, F7, and F8. Compared with DGWO1, pGWO-CSA performed better in 12 out of 15 test functions. Compared with DGWO2, pGWO-CSA performed better in 7 out of 15 test functions, and DGWO2 outperformed pGWO-CSA only in test functions F1, F2, F3, F4, and F13.

In terms of the maximum, ranked by the number of optimal values, pGWO-CSA and DGWO2 tied for first place with seven optimal values each. OGWO ranked third with three, DGWO1 ranked fourth with two, and GWO ranked fifth with one. Compared with GWO, pGWO-CSA performed better in 12 out of 15 test functions, and GWO outperformed pGWO-CSA only in test functions F7 and F15. Compared with OGWO, pGWO-CSA performed better in 11 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test functions F6 and F7. Compared with DGWO1, pGWO-CSA performed better in 12 out of 15 test functions, and DGWO1 outperformed pGWO-CSA only in test functions F6 and F15. Compared with DGWO2, pGWO-CSA performed better in 8 out of 15 test functions, and DGWO2 outperformed pGWO-CSA only in test functions F1, F2, F3, F4, F6, and F14.

In addition, pGWO-CSA can find the theoretical optimal values on the test functions F9, F11, and F12. On the test functions F5, F9, F10, F11, and F12, pGWO-CSA achieves the best value among the five algorithms in terms of the mean, standard deviation, minimum, and maximum. Although DGWO2 outperformed pGWO-CSA on the first four test functions, F1, F2, F3, and F4, pGWO-CSA still outperformed the other three algorithms on them.
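The rank-by-count comparison used in this analysis can be sketched in a few lines of Python. This is an illustrative sketch only: the function names `count_best` and `head_to_head` and all numeric values are hypothetical and are not taken from Table 6 or Table 7, and lower values are assumed to be better. Because every tied algorithm is credited with an optimal value, the per-algorithm counts can sum to more than the number of test functions.

```python
from collections import Counter


def count_best(results):
    """Count, per algorithm, the functions on which it attains the best (lowest) value.

    `results` maps a function name to {algorithm name: statistic}.
    Ties credit every tied algorithm.
    """
    wins = Counter()
    for per_algo in results.values():
        best = min(per_algo.values())
        for algo, value in per_algo.items():
            if value == best:
                wins[algo] += 1
    return wins


def head_to_head(results, a, b):
    """Return (a_better, b_better, ties) over all functions; lower is better."""
    a_better = b_better = ties = 0
    for per_algo in results.values():
        if per_algo[a] < per_algo[b]:
            a_better += 1
        elif per_algo[a] > per_algo[b]:
            b_better += 1
        else:
            ties += 1
    return a_better, b_better, ties


# Hypothetical mean values for three functions and three algorithms
# (made up for illustration, NOT the paper's data).
hypothetical_results = {
    "F1": {"pGWO-CSA": 1e-60, "GWO": 1e-30, "DGWO2": 1e-80},
    "F9": {"pGWO-CSA": 0.0, "GWO": 2.5, "DGWO2": 0.0},
    "F10": {"pGWO-CSA": 4.4e-16, "GWO": 1.0e-14, "DGWO2": 4.0e-15},
}

wins = count_best(hypothetical_results)
for algo, n in wins.most_common():
    print(f"{algo}: {n} optimal value(s)")
print(head_to_head(hypothetical_results, "pGWO-CSA", "DGWO2"))
```

The same two helpers apply unchanged to the standard deviation, minimum, and maximum columns, since each is a lower-is-better statistic under the minimization assumption.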

For the 50-dimensional results in Table 7, the algorithms are again ranked by the number of optimal values they obtain for the mean. pGWO-CSA ranked first with eight optimal values, DGWO2 ranked second with six, and OGWO ranked third with three; DGWO1 and GWO tied for fourth place with one optimal value each. Compared with GWO, pGWO-CSA performed better in 14 out of 15 test functions. Compared with OGWO, pGWO-CSA performed better in 11 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test functions F3, F7, and F10. Compared with DGWO1, pGWO-CSA performed better in 14 out of 15 test functions. Compared with DGWO2, pGWO-CSA performed better in 9 out of 15 test functions, and DGWO2 outperformed pGWO-CSA only in test functions F1, F2, F3, F4, and F14.

In terms of the standard deviation, ranked by the number of optimal values, pGWO-CSA ranked first with eight optimal values. DGWO2 ranked second with seven, OGWO third with two, GWO fourth with one, and DGWO1 fifth with zero. Compared with GWO, pGWO-CSA performed better in 13 out of 15 test functions, and GWO outperformed pGWO-CSA only in test function F7. Compared with OGWO, pGWO-CSA performed better in 11 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test functions F3, F7, and F14. Compared with DGWO1, pGWO-CSA performed better in all 15 test functions. Compared with DGWO2, pGWO-CSA performed better in 7 out of 15 test functions, and DGWO2 outperformed pGWO-CSA in test functions F1, F2, F3, F4, F7, F10, and F14.

In terms of the minimum, ranked by the number of optimal values, DGWO2 ranked first with eight optimal values. pGWO-CSA and OGWO tied for second place with six optimal values each, GWO ranked fourth with two, and DGWO1 ranked fifth with one. Compared with GWO, pGWO-CSA performed better in 13 out of 15 test functions. Compared with OGWO, pGWO-CSA performed better in 6 out of 15 test functions, and OGWO outperformed pGWO-CSA in test functions F1, F3, F4, F7, F10, and F13. Compared with DGWO1, pGWO-CSA performed better in 14 out of 15 test functions, and DGWO1 outperformed pGWO-CSA only in test function F6. Compared with DGWO2, pGWO-CSA performed better in 6 out of 15 test functions, and DGWO2 outperformed pGWO-CSA in test functions F1, F2, F3, F4, F13, and F14.

In terms of the maximum, ranked by the number of optimal values, DGWO2 ranked first with eight optimal values. pGWO-CSA ranked second with seven, OGWO third with three, GWO fourth with one, and DGWO1 fifth with zero. Compared with GWO, pGWO-CSA performed better in 12 out of 15 test functions, and GWO outperformed pGWO-CSA only in test functions F7 and F14. Compared with OGWO, pGWO-CSA performed better in 10 out of 15 test functions, and OGWO outperformed pGWO-CSA only in test functions F4, F5, F7, and F14. Compared with DGWO1, pGWO-CSA performed better in 13 out of 15 test functions, and DGWO1 outperformed pGWO-CSA only in test function F15. Compared with DGWO2, pGWO-CSA performed better in 6 out of 15 test functions, and DGWO2 outperformed pGWO-CSA in test functions F1, F2, F3, F4, F5, F7, F14, and F15.

In addition, pGWO-CSA can find the theoretical optimal values on the test functions F9, F11, and F12. On the test functions F8, F9, F11, and F12, pGWO-CSA achieves the best value among the five algorithms in terms of the mean, standard deviation, minimum, and maximum. Table 6 and Table 7 show that DGWO2 performs better than pGWO-CSA on the first four test functions, F1–F4, in both 30 and 50 dimensions. As can be seen from Table 1, these four test functions are simple single-peak functions, indicating that DGWO2 performs better than pGWO-CSA on simple single-peak functions. However, compared with the other three algorithms, pGWO-CSA still performs better on the test functions F1–F4.

By comparison, pGWO-CSA performs better on the last eight test functions, F8–F15, than on the first seven, F1–F7, in both 30 and 50 dimensions. This shows that pGWO-CSA performs better on more complex functions, which is largely due to its super-mutation operation, which helps it jump out of the local optimum.

Based on the above data and analysis, pGWO-CSA has faster convergence speed, higher accuracy, and better ability to jump out of the local optimum compared with GWO and its variants in either 30 or 50 dimensions. In order to further reveal the performance of pGWO-CSA, the Wilcoxon test is performed in Section 4.3 based on the experimental data in Section 4.1 and Section 4.2.

4.3. Wilcoxon Test

To further reveal the performance of pGWO-CSA, the Wilcoxon test is conducted on the mean of the 30 running results of each algorithm, using the experimental data in Section 4.1 and Section 4.2. The statistical results are shown in Table 8. In the Wilcoxon test, ‘+’ means that the proposed algorithm is inferior to the selected algorithm, ‘−’ means that the proposed algorithm is superior to the selected algorithm, and ‘=’ means that the two algorithms obtain the same result.

Table 8.

The results of the Wilcoxon test.

It can be seen from Table 8 that the number of ‘+’ marks for each algorithm is small, indicating that the seven algorithms compared with pGWO-CSA outperform it on only a few test functions. The number of ‘−’ marks for each algorithm exceeds 15, indicating that pGWO-CSA outperforms the other algorithms on most test functions. The results show that pGWO-CSA is superior to the other swarm intelligence algorithms, GWO, and its variants.
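As a rough illustration of how such marks and the underlying signed-rank sums could be computed, the following sketch uses only the Python standard library. It is one plausible reading of the procedure, not the authors' code: the helper names `sign_marks` and `signed_rank_sums` and the sample values are hypothetical, minimization is assumed (so a larger mean for the proposed algorithm is marked ‘+’, following the convention stated above), the ASCII character '-' stands in for ‘−’, and the p-value computation is omitted.

```python
def sign_marks(proposed, other):
    """Mark each paired mean: '+' proposed is worse, '-' proposed is better, '=' equal.

    Assumes minimization, so a larger value is worse.
    """
    marks = []
    for p, o in zip(proposed, other):
        if p == o:
            marks.append("=")
        elif p > o:
            marks.append("+")
        else:
            marks.append("-")
    return marks


def signed_rank_sums(a, b):
    """Return (w_plus, w_minus), the Wilcoxon signed-rank sums for paired samples.

    Zero differences are dropped; tied absolute differences share the average rank.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of equal absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Hypothetical paired means on four test functions (NOT the paper's data).
proposed_means = [1.0, 0.0, 3.0, 2.0]
other_means = [2.0, 0.0, 1.0, 5.0]

print(sign_marks(proposed_means, other_means))
print(signed_rank_sums(proposed_means, other_means))
```

Under the minimization assumption, a `w_minus` that is much larger than `w_plus` points in the same direction as a large count of ‘−’ marks: the proposed algorithm tends to obtain the lower value.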

5. Robot Path-Planning Problem

With the development of artificial intelligence, robots have been widely used in various fields [33,34,35]. Among them, robot path planning is an important research problem. To further verify the applicability and superiority of the proposed algorithm, it is applied to the robot path-planning problem.

5.1. Robot Path-Planning Problem Description

The robot path-planning problem mainly includes two aspects: environment modeling and the evaluation function. Environment modeling transforms the environmental information of the robot into a form that can be recognized and expressed by a computer. The evaluation function measures the path quality and serves as the objective function to be optimized by the algorithm.

5.1.1. Environment Modeling

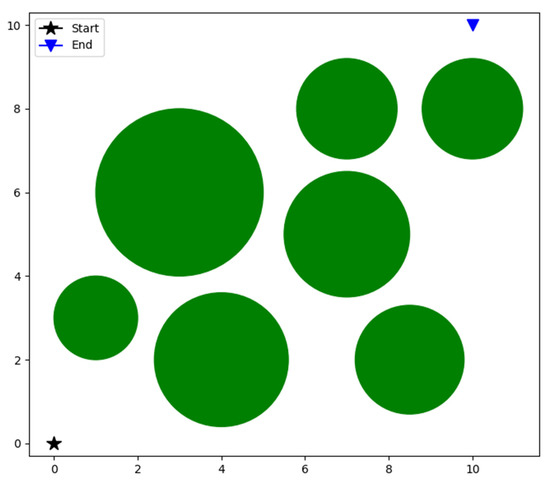

The environment model of the robot path-planning problem is shown in Figure 35. The starting point is located at (0,0) and marked with a black star, the endpoint is located at (10,10) and marked with a blue triangle, and the obstacles are marked with green circles. The mathematical expression of the obstacles is shown in Formula (13).

$$(x - a)^2 + (y - b)^2 = r^2 \tag{13}$$

where a and b represent the center coordinates of the obstacle, and r represents the radius of the circle.

Figure 35.

Environment modeling.

5.1.2. Evaluation Function

Suppose the robot finds a sequence of path points from start to end, (x1, y1), (x2, y2), …, (xn, yn), where the coordinate of path point i is (xi, yi). A complete path formed by connecting these path points is a feasible solution to the robot path-planning problem. To reduce the optimization dimension of the problem and smooth the path curve, the spline interpolation method is used to construct the path curve. To evaluate the quality of the path, this paper considers both the length of the path and the risk of the path. The evaluation function is shown in Formula (14).

where w1 and w2 are weight parameters with w1 + w2 = 1.0. The length term represents the fitness value of the length of the path, which is calculated by Formula (15); the risk term represents the fitness value of the risk of the path, which is calculated by Formula (16).

where n is the total number of path points, and (xi, yi) represents the coordinate of path point i.

where c is the penalty coefficient, k is the total number of obstacles, (ai, bi) is the coordinate of the center of obstacle i, and ri is its radius.

According to Formulas (14)–(16), a small length fitness value means that the path is short, and a small risk fitness value means that the risk of the path is low. Therefore, the smaller the value of the evaluation function, the higher the quality of the path.
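The structure of such an evaluation function, a weighted sum of a length term and a risk term, can be sketched as follows. This is a minimal sketch under stated assumptions rather than the paper's Formulas (14)–(16): the penalty form `c * (r - d)` for points inside an obstacle, the default values of `w1`, `w2`, and `c`, the obstacle position, and the function names are all hypothetical, and the spline interpolation step is omitted, so the path is treated as a polyline through the given points.

```python
import math


def path_length(points):
    """Sum of Euclidean distances between consecutive path points."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )


def path_risk(points, obstacles, c=100.0):
    """Penalty for path points that fall inside circular obstacles (a, b, r).

    Assumed penalty form: c times the penetration depth (r - d).
    """
    risk = 0.0
    for x, y in points:
        for a, b, r in obstacles:
            d = math.hypot(x - a, y - b)
            if d < r:
                risk += c * (r - d)
    return risk


def evaluate(points, obstacles, w1=0.5, w2=0.5, c=100.0):
    """Weighted sum of length and risk; smaller is better. Requires w1 + w2 = 1."""
    assert abs(w1 + w2 - 1.0) < 1e-12
    return w1 * path_length(points) + w2 * path_risk(points, obstacles, c)


# Hypothetical scene: one obstacle of radius 1 centered at (5, 5).
obstacles = [(5.0, 5.0, 1.0)]
straight = [(0.0, 0.0), (5.0, 5.0), (10.0, 10.0)]  # passes through the obstacle
detour = [(0.0, 0.0), (3.0, 6.0), (10.0, 10.0)]    # slightly longer, collision-free

print(evaluate(straight, obstacles))
print(evaluate(detour, obstacles))
```

In this sketch the detour scores lower than the straight path because the risk penalty outweighs the small increase in length. Only the sampled points are checked against the obstacles, so a real implementation would evaluate the densely interpolated curve instead of the raw path points.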

5.2. The Experimental Results

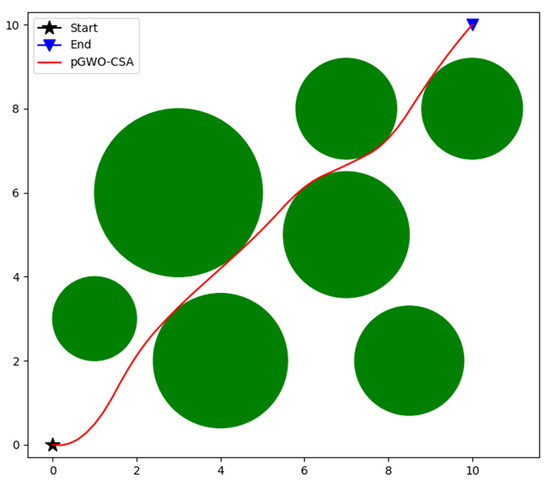

To verify the applicability and superiority of pGWO-CSA in robot path-planning problems, pGWO-CSA is compared with PSO, DE, FA, GWO, and the GWO variants. The parameters of all algorithms are exactly the same as in Section 4. To reduce the effect of randomness, each algorithm is run 10 times, and the minimum, maximum, and mean of the results are recorded. The path planned by pGWO-CSA is shown in Figure 36, and the experimental results of all algorithms are shown in Table 9.

Figure 36.

The path of pGWO-CSA.

Table 9.

Experimental results.

According to the experimental data, pGWO-CSA achieves the best minimum, maximum, and mean among all algorithms, which further verifies its applicability and superiority.

6. Conclusions

To address the defects of GWO, such as low convergence accuracy and premature convergence when dealing with complex problems, this paper proposes pGWO-CSA. Firstly, pGWO-CSA uses a nonlinear function instead of a linear function to adjust the iterative attenuation of the convergence factor to balance exploitation and exploration. Secondly, pGWO-CSA improves GWO’s position-updating strategy. Finally, pGWO-CSA is combined with the CSA. Together, these modifications improve the convergence speed, precision, and ability to jump out of the local optimum. The experimental results show that pGWO-CSA has obvious accuracy advantages. Compared with GWO and its variants participating in the experiment, pGWO-CSA shows good stability in both 30 and 50 dimensions and is suitable for the optimization of complex and variable problems. Finally, the proposed algorithm is applied to the robot path-planning problem, which further verifies its applicability and superiority.

Author Contributions

Conceptualization, Y.O. and P.Y.; Methodology, Y.O.; Software, Y.O.; Validation, Y.O.; Formal analysis, P.Y. and L.M.; Investigation, P.Y.; Resources, L.M.; Data curation, P.Y.; Writing—original draft, Y.O.; Writing—review & editing, Y.O., P.Y. and L.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 62266019 and 62066016) and the Research Foundation of Education Bureau of Hunan Province, China (No. 21C0383).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Jiang, X.; Lin, Z.; He, T.; Ma, X.; Ma, S.; Li, S. Optimal path finding with beetle antennae search algorithm by using ant colony optimization initialization and different searching strategies. IEEE Access 2020, 8, 15459–15471.

2. Khan, A.H.; Cao, X.; Li, S.; Katsikis, V.N.; Liao, L. BAS-ADAM: An ADAM based approach to improve the performance of beetle antennae search optimizer. IEEE/CAA J. Autom. Sin. 2020, 7, 461–471.

3. Ye, S.-Q.; Zhou, K.-Q.; Zhang, C.-X.; Zain, A.M.; Ou, Y. An Improved Multi-Objective Cuckoo Search Approach by Exploring the Balance between Development and Exploration. Electronics 2022, 11, 704.

4. Khan, A.H.; Cao, X.; Li, S.; Luo, C. Using social behavior of beetles to establish a computational model for operational management. IEEE Trans. Comput. Soc. Syst. 2020, 7, 492–502.

5. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

6. Makhadmeh, S.N.; Khader, A.T.; Al-Betar, M.A.; Naim, S. Multi-objective power scheduling problem in smart homes using grey wolf optimiser. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 3643–3667.

7. Zawbaa, H.M.; Emary, E.; Grosan, C.; Snasel, V. Large-dimensionality small-instance set feature selection: A hybrid bio-inspired heuristic approach. Swarm Evol. Comput. 2018, 42, 29–42.

8. Daniel, E. Optimum wavelet-based homomorphic medical image fusion using hybrid genetic–grey wolf optimization algorithm. IEEE Sens. J. 2018, 18, 6804–6811.

9. Alomoush, A.A.; Alsewari, A.A.; Alamri, H.S.; Aloufi, K.; Zamli, K.Z. Hybrid harmony search algorithm with grey wolf optimizer and modified opposition-based learning. IEEE Access 2019, 7, 68764–68785.

10. Zhang, S.; Mao, X.; Choo, K.-K.R.; Peng, T.; Wang, G. A trajectory privacy-preserving scheme based on a dual-K mechanism for continuous location-based services. Inf. Sci. 2020, 527, 406–419.

11. Kaur, A.; Sharma, S.; Mishra, A. An Efficient opposition based Grey Wolf optimizer for weight adaptation in cooperative spectrum sensing. Wirel. Pers. Commun. 2021, 118, 2345–2364.

12. Makhadmeh, S.N.; Khader, A.T.; Al-Betar, M.A.; Naim, S.; Abasi, A.K.; Alyasseri, Z.A.A. A novel hybrid grey wolf optimizer with min-conflict algorithm for power scheduling problem in a smart home. Swarm Evol. Comput. 2021, 60, 100793.

13. Jeyafzam, F.; Vaziri, B.; Suraki, M.Y.; Hosseinabadi, A.A.R.; Slowik, A. Improvement of grey wolf optimizer with adaptive middle filter to adjust support vector machine parameters to predict diabetes complications. Neural Comput. Appl. 2021, 33, 15205–15228.

14. Zhou, J.; Huang, S.; Wang, M.; Qiu, Y. Performance evaluation of hybrid GA–SVM and GWO–SVM models to predict earthquake-induced liquefaction potential of soil: A multi-dataset investigation. Eng. Comput. 2021, 38, 4197–4215.

15. Khalilpourazari, S.; Doulabi, H.H.; Çiftçioğlu, A.Ö.; Weber, G.-W. Gradient-based grey wolf optimizer with Gaussian walk: Application in modelling and prediction of the COVID-19 pandemic. Expert Syst. Appl. 2021, 177, 114920.

16. Subudhi, U.; Dash, S. Detection and classification of power quality disturbances using GWO ELM. J. Ind. Inf. Integr. 2021, 22, 100204.

17. Long, W.; Liang, X.; Cai, S.; Jiao, J.; Zhang, W. A modified augmented Lagrangian with improved grey wolf optimization to constrained optimization problems. Neural Comput. Appl. 2017, 28, 421–438.

18. Tikhamarine, Y.; Souag-Gamane, D.; Ahmed, A.N.; Kisi, O.; El-Shafie, A. Improving artificial intelligence models accuracy for monthly streamflow forecasting using grey Wolf optimization (GWO) algorithm. J. Hydrol. 2020, 582, 124435.

19. Shabeerkhan, S.; Padma, A. A novel GWO optimized pruning technique for inexact circuit design. Microprocess. Microsyst. 2020, 73, 102975.

20. Chen, X.; Yi, Z.; Zhou, Y.; Guo, P.; Farkoush, S.G.; Niroumandi, H. Artificial neural network modeling and optimization of the solid oxide fuel cell parameters using grey wolf optimizer. Energy Rep. 2021, 7, 3449–3459.

21. Mittal, N.; Singh, U.; Sohi, B.S. Modified grey wolf optimizer for global engineering optimization. Appl. Comput. Intell. Soft Comput. 2016, 2016, 7950348.

22. Saxena, A.; Kumar, R.; Das, S. β-chaotic map enabled grey wolf optimizer. Appl. Soft Comput. 2019, 75, 84–105.

23. Long, W.; Jiao, J.; Liang, X.; Tang, M. An exploration-enhanced grey wolf optimizer to solve high-dimensional numerical optimization. Eng. Appl. Artif. Intell. 2018, 68, 63–80.

24. Gupta, S.; Deep, K. A novel random walk grey wolf optimizer. Swarm Evol. Comput. 2019, 44, 101–112.

25. Teng, Z.; Lv, J.; Guo, L. An improved hybrid grey wolf optimization algorithm. Soft Comput. 2019, 23, 6617–6631.

26. Gupta, S.; Deep, K.; Moayedi, H.; Foong, L.K.; Assad, A. Sine cosine grey wolf optimizer to solve engineering design problems. Eng. Comput. 2021, 37, 3123–3149.

27. Yu, X.B.; Xu, W.Y.; Li, C.L. Opposition-based learning grey wolf optimizer for global optimization. Knowl.-Based Syst. 2021, 226, 107139.

28. Zhang, X.; Zhang, Y.; Ming, Z. Improved dynamic grey wolf optimizer. Front. Inf. Technol. Electron. Eng. 2021, 22, 877–890.

29. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks. IEEE 1995, 4, 1942–1948.

30. Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

31. Yang, X.S. Nature-Inspired Metaheuristic Algorithms; Luniver Press: Beckington, UK, 2010.

32. De Castro, L.N.; Von Zuben, F.J. Learning and optimization using the clonal selection principle. IEEE Trans. Evol. Comput. 2002, 6, 239–251.

33. Liu, H.-G.; Liu, D.-F.; Zhang, K.; Meng, F.-G.; Yang, A.-C.; Zhang, J.-G. Clinical Application of a Neurosurgical Robot in Intracranial Ommaya Reservoir Implantation. Front. Neurorobotics 2021, 15, 28.

34. Jin, Z.; Liu, L.; Gong, D.; Li, L. Target Recognition of Industrial Robots Using Machine Vision in 5G Environment. Front. Neurorobotics 2021, 15, 624466.

35. Cheng, X.; Li, J.; Zheng, C.; Zhang, J.; Zhao, M. An Improved PSO-GWO Algorithm With Chaos and Adaptive Inertial Weight for Robot Path Planning. Front. Neurorobotics 2021, 15, 770361.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).