Soft Robotic Glove with Sensing and Force Feedback for Rehabilitation in Virtual Reality

Abstract

1. Introduction

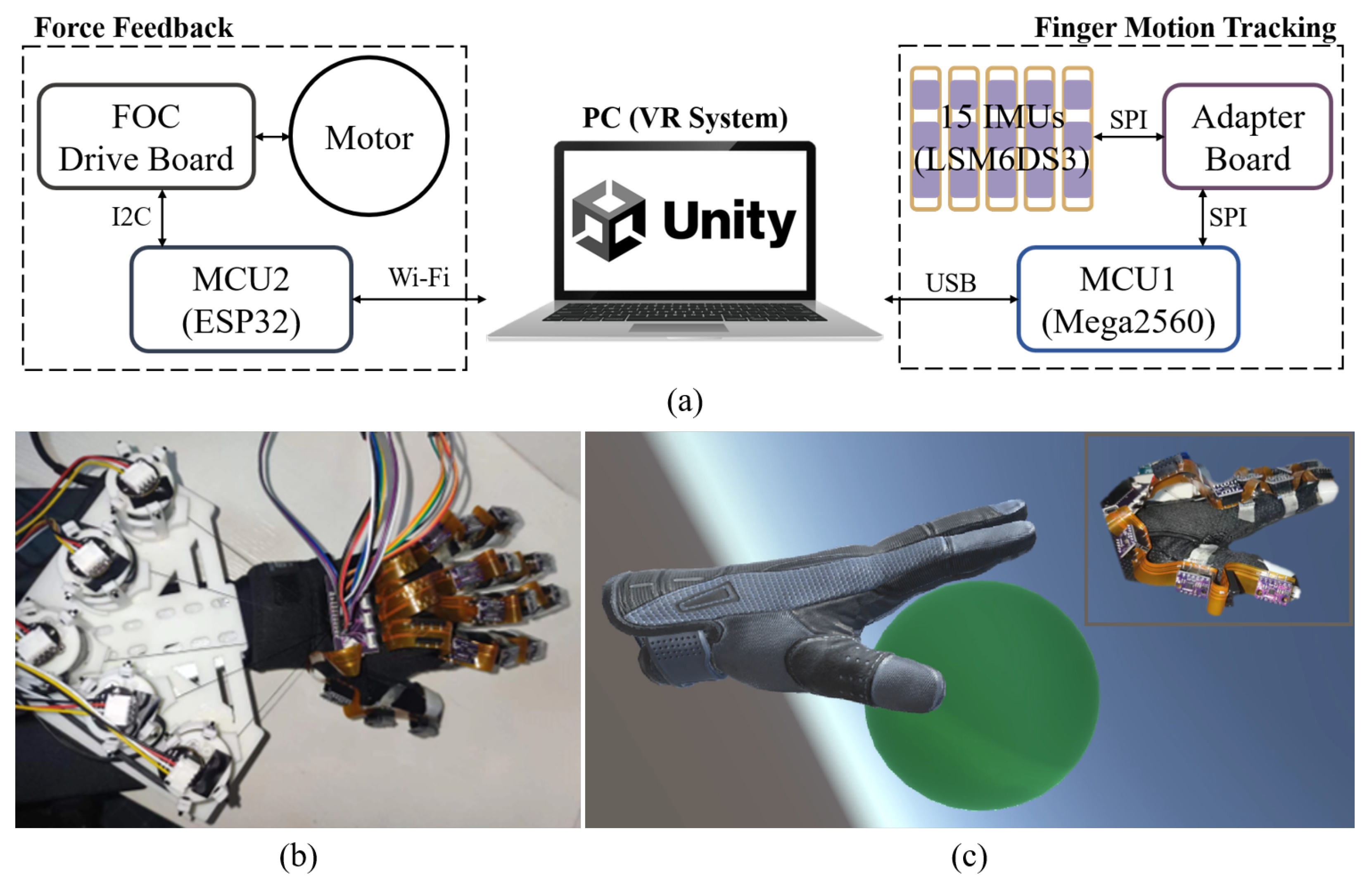

2. Hardware Design

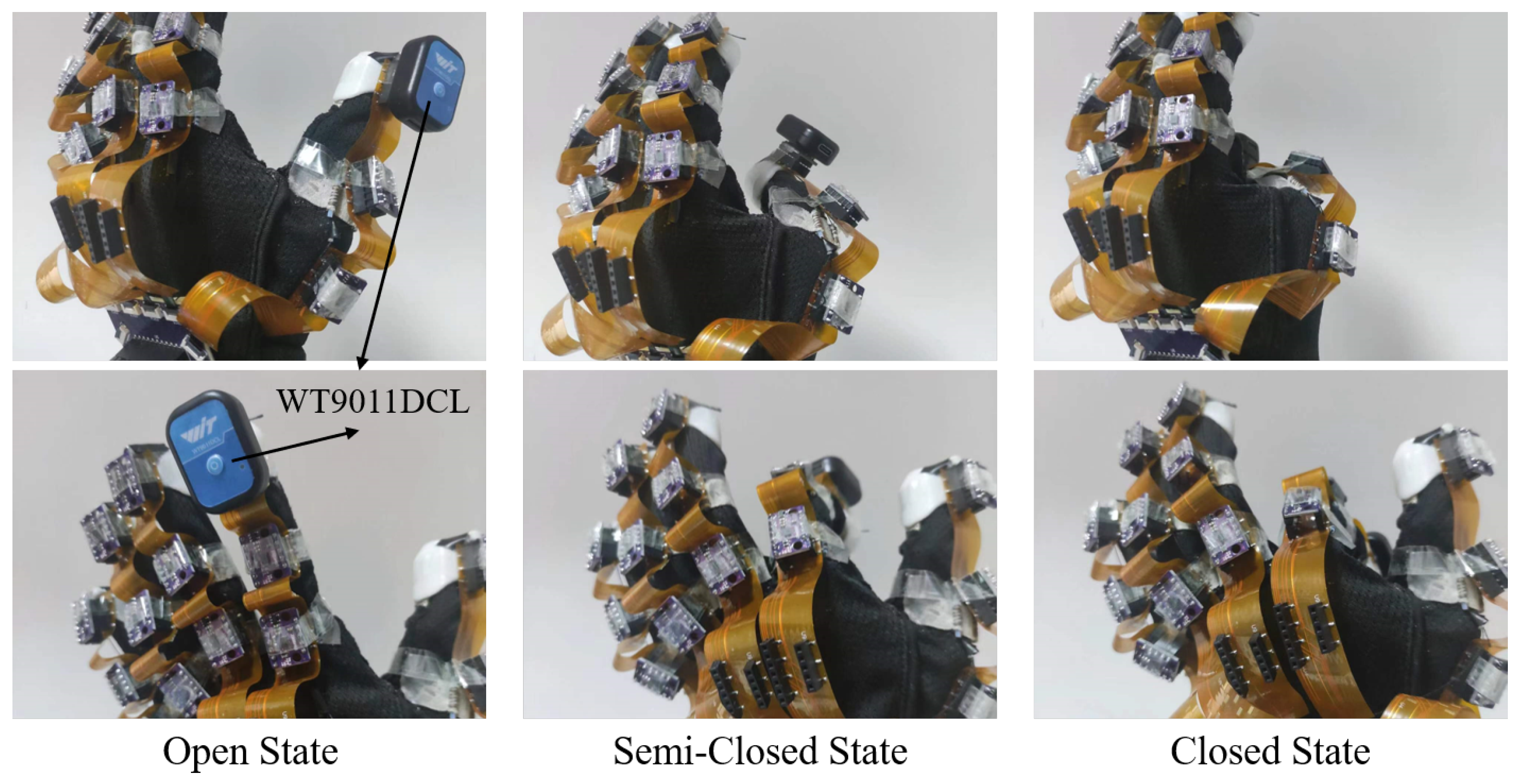

2.1. System Overview

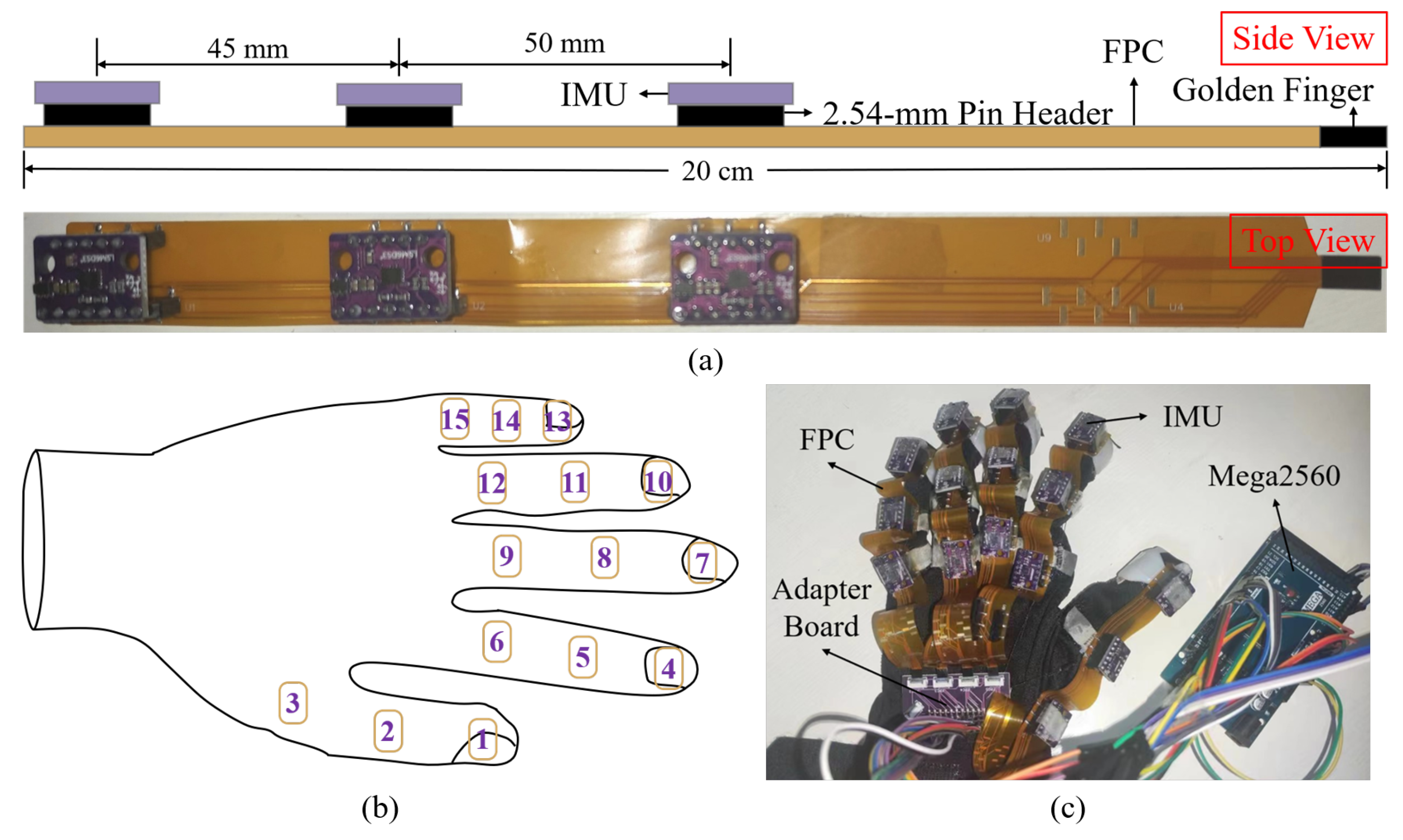

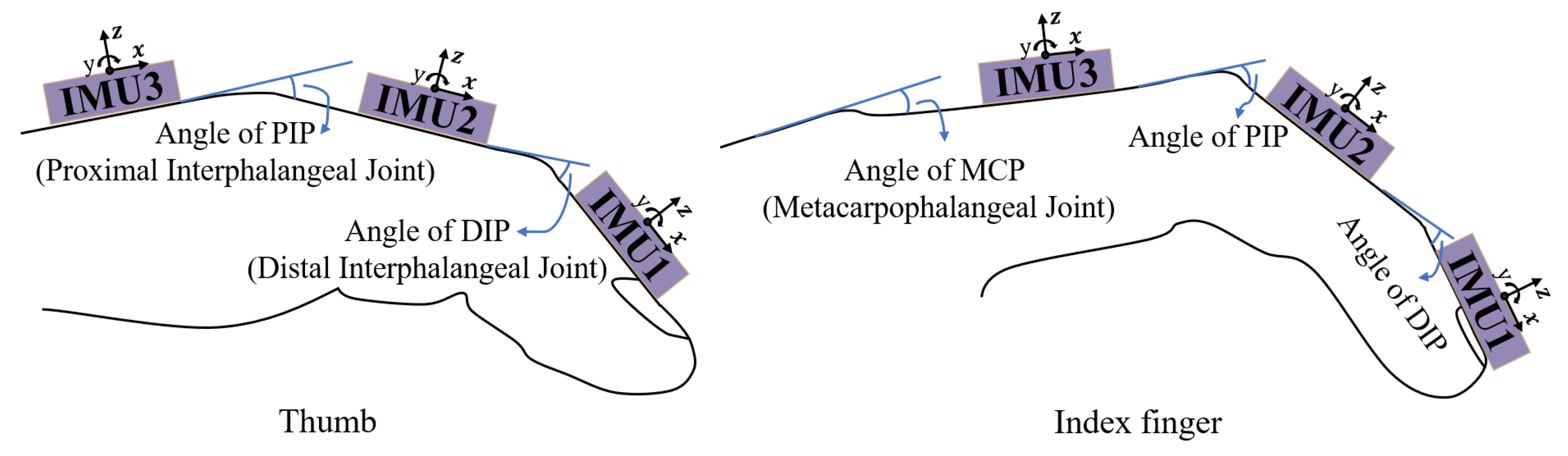

2.2. Hardware for Hand Simulation Based on Finger Motion Tracking

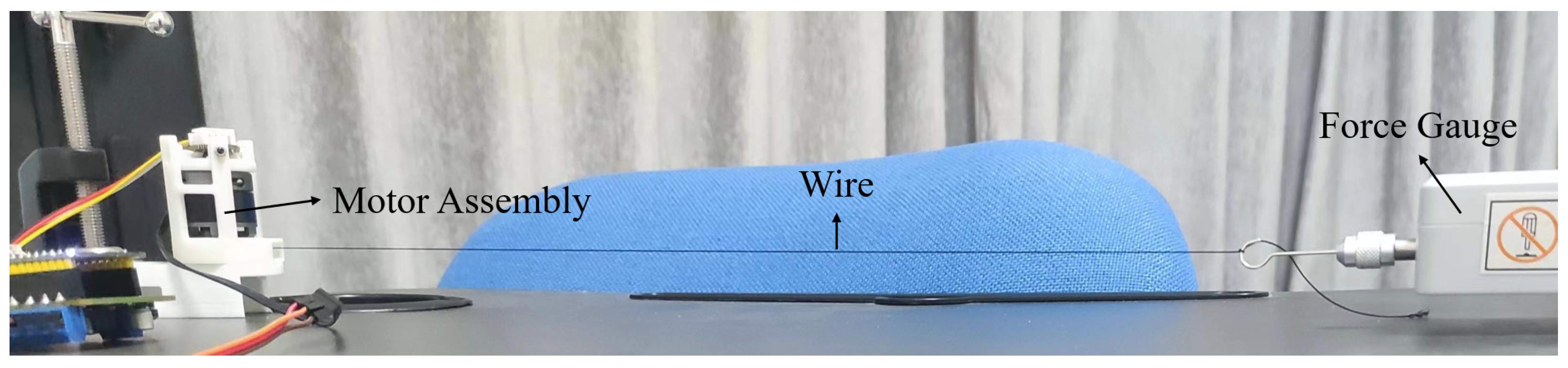

2.3. Hardware for Force Feedback

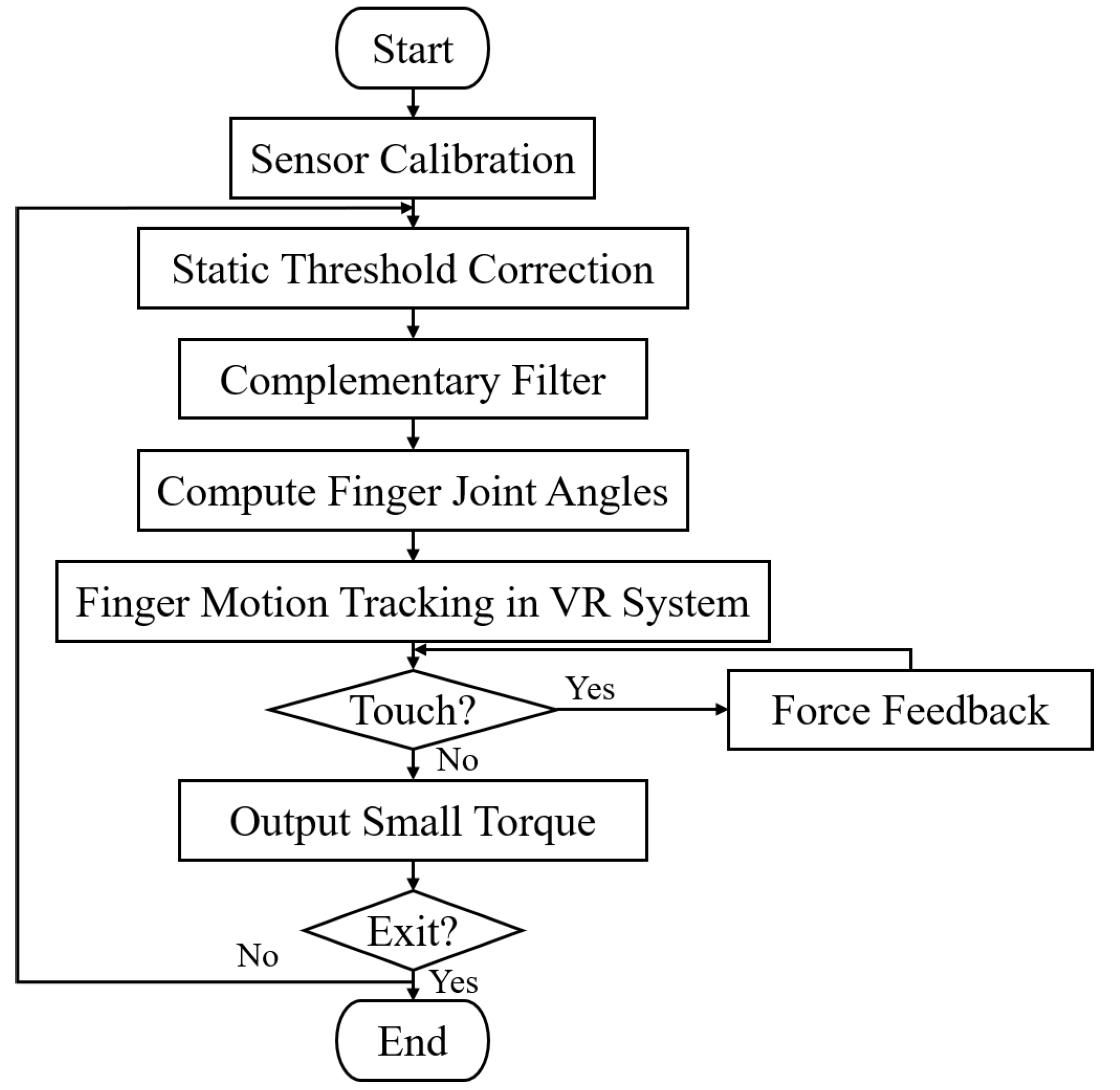

3. Software and Control

3.1. Execution Flowchart of Software Program

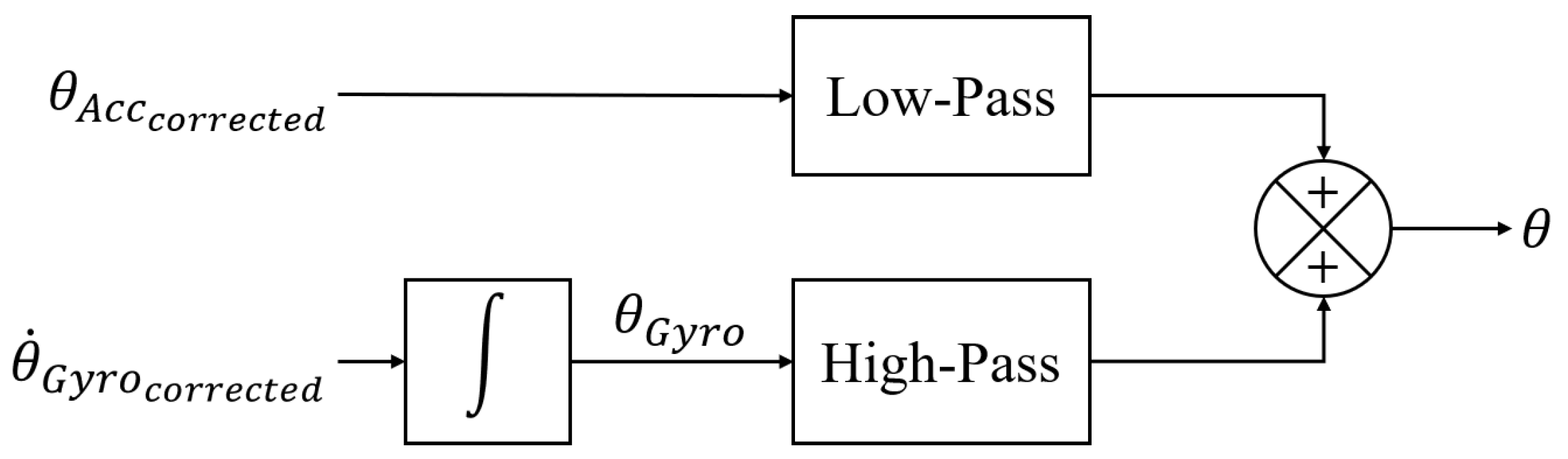

3.2. Attitude Angle Calculation Algorithm

3.2.1. Sensor Calibration

3.2.2. Static Threshold Correction

3.2.3. Complementary Filter

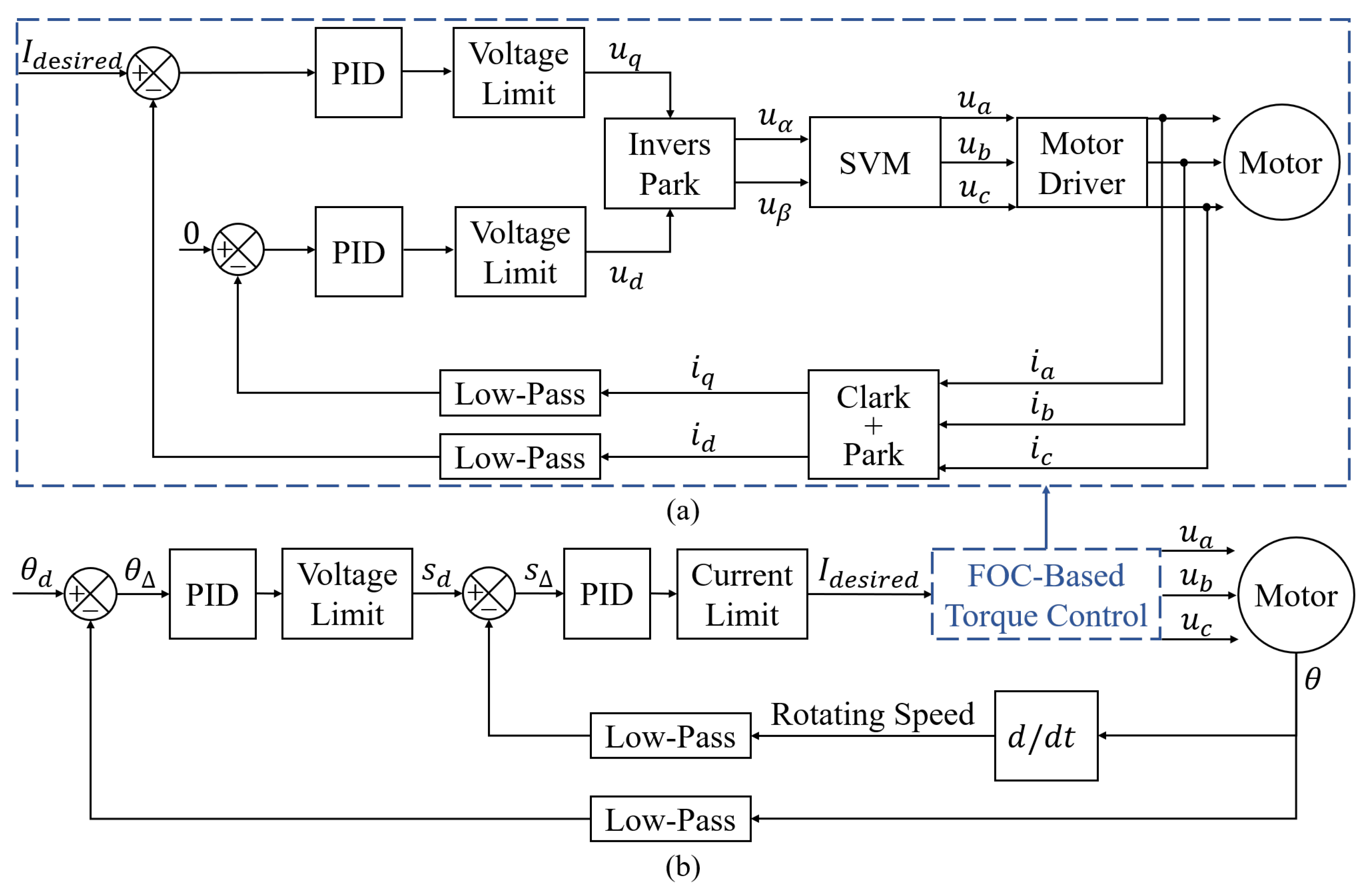
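This subsection names the complementary filter without further detail. The sketch below shows a generic first-order complementary filter for a single attitude angle. It is not the authors' implementation, and several choices in it are assumptions for illustration only: the blending weight `alpha = 0.98`, the 100 Hz sample period, the accelerometer axis and sign convention, and the function names `accel_tilt_deg` and `complementary_filter`. The filter integrates the gyroscope rate for short-term accuracy and blends in the accelerometer-derived tilt to cancel long-term gyro drift.

```python
import math


def accel_tilt_deg(ax: float, ay: float, az: float) -> float:
    """Tilt angle (degrees) of a segment from a static accelerometer sample.

    Hypothetical convention: the sensor x axis points along the finger segment.
    The sign depends on how the IMU is actually mounted.
    """
    return math.degrees(math.atan2(-ax, math.hypot(ay, az)))


def complementary_filter(prev_angle_deg: float, gyro_rate_dps: float,
                         accel_angle_deg: float, dt: float,
                         alpha: float = 0.98) -> float:
    """One update step of a first-order complementary filter.

    The integrated gyro angle is weighted by alpha (trusted at high frequency).
    The accelerometer angle is weighted by 1 - alpha (trusted at low frequency,
    which bounds gyro drift). alpha = 0.98 is an illustrative value only.
    """
    gyro_angle = prev_angle_deg + gyro_rate_dps * dt
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle_deg


# Usage: a segment held still at 30 degrees for 10 s at an assumed 100 Hz rate.
# The gyro has a constant 0.5 deg/s bias; the accelerometer reports the true tilt.
dt = 0.01
angle = 0.0
for _ in range(1000):
    angle = complementary_filter(angle, gyro_rate_dps=0.5,
                                 accel_angle_deg=30.0, dt=dt)
print(f"filtered angle: {angle:.3f} deg")
```

Integrating the biased gyro alone would drift by 0.5 °/s × 10 s = 5° over this interval. The filter instead settles at a bounded offset of α·b·dt/(1 − α) = 0.245° above the true angle, where b is the gyro bias. A larger α suppresses accelerometer noise more strongly, but it increases this residual offset and slows convergence.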

3.3. Force Feedback Control Algorithm

4. Experimental Validation and Application

4.1. Experimental Validation of Computing Static Attitude Angles

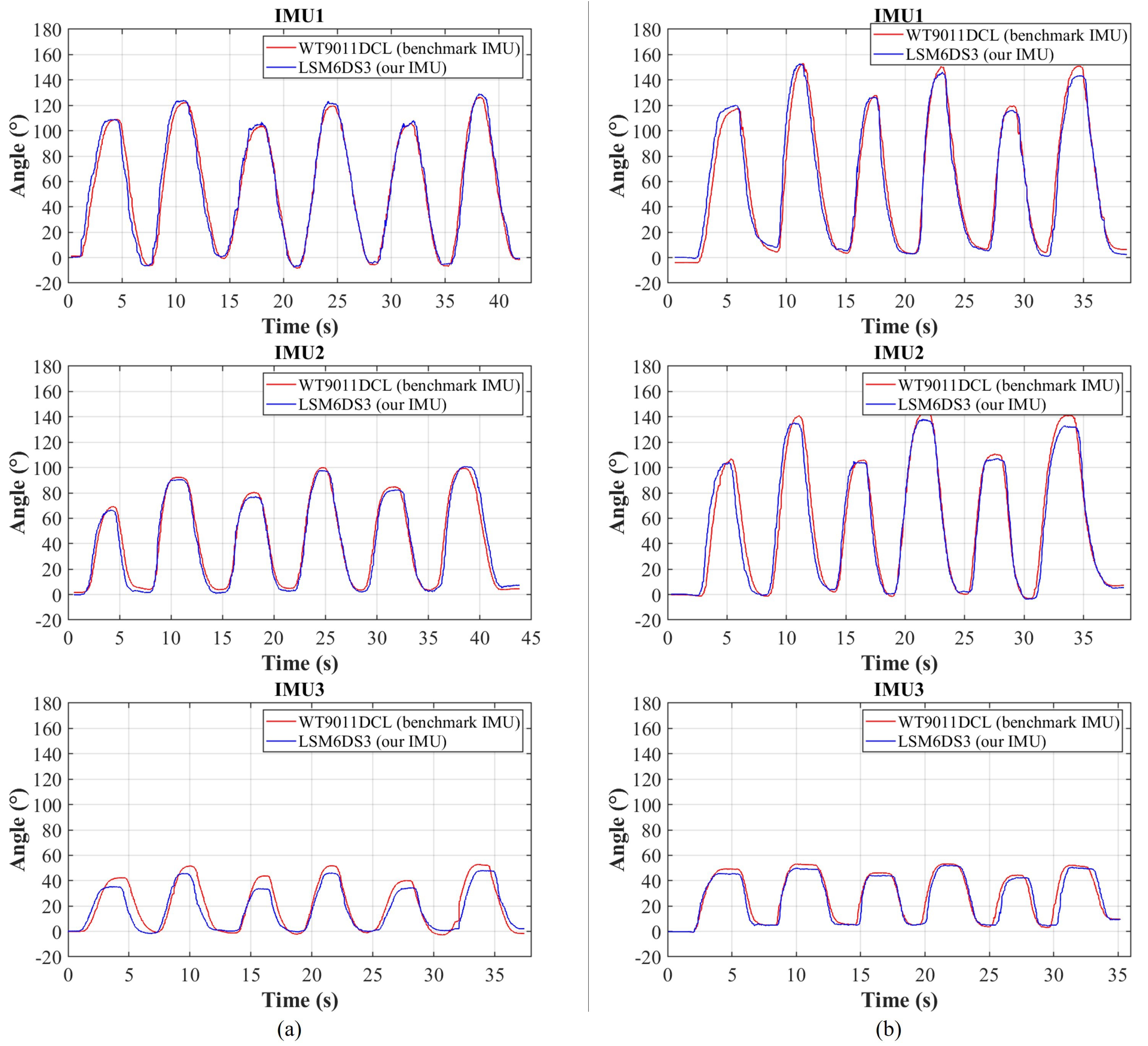

4.2. Experimental Validation of Computing Dynamic Attitude Angles

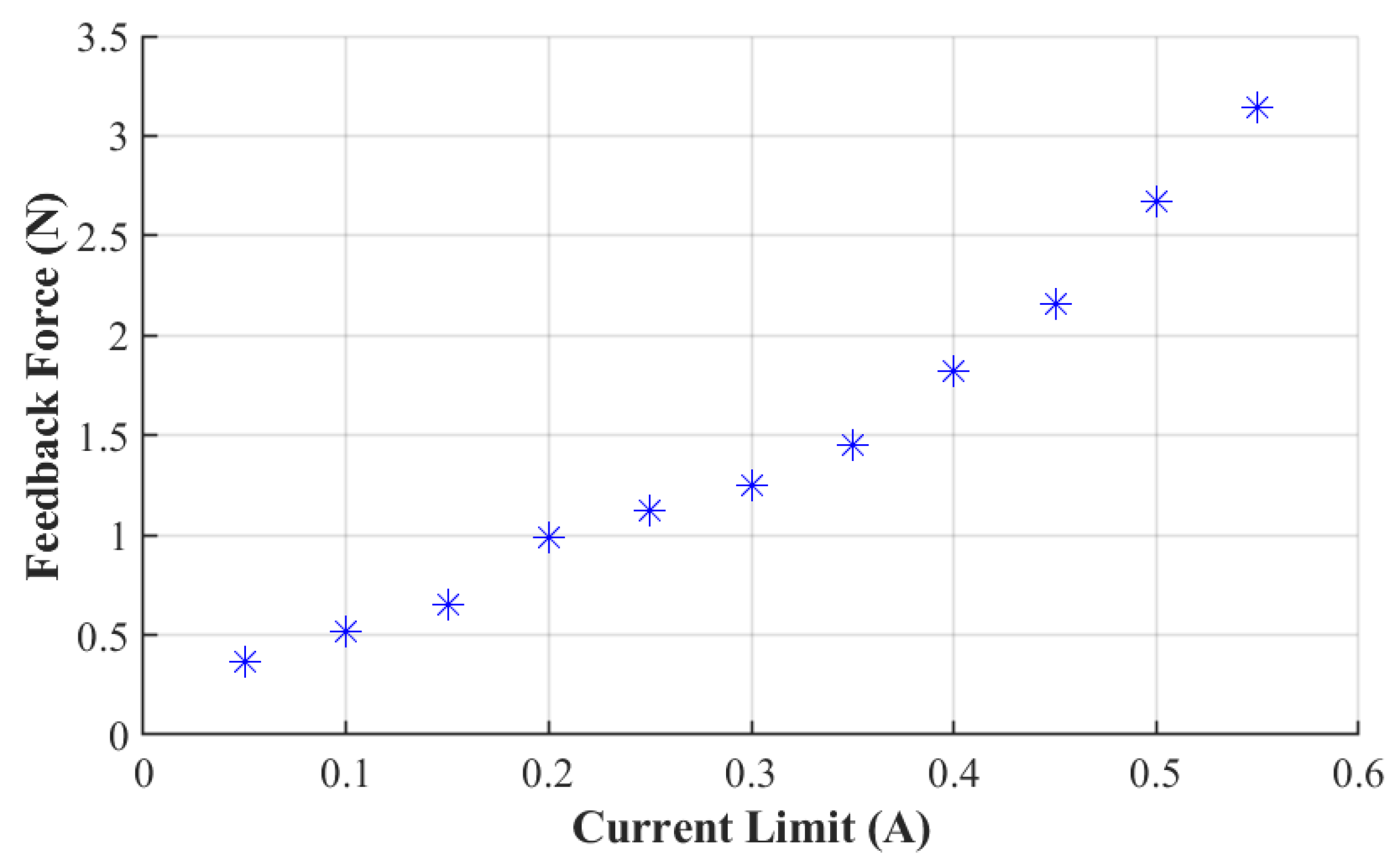

4.3. Test of Feedback Force versus Current Limit

4.4. Real-Time VR Scene Application

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| | Open State | Semi-Closed State | Closed State |
|---|---|---|---|
| MAE (°) | 0.3243 | 1.1090 | 2.6092 |

| | Thumb IMU1 | Thumb IMU2 | Thumb IMU3 | Index Finger IMU1 | Index Finger IMU2 | Index Finger IMU3 |
|---|---|---|---|---|---|---|
| Mean Error (°) | 0.8415 | −1.1802 | −2.9858 | −0.8697 | −1.9731 | −1.7348 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, F.; Chen, J.; Ye, G.; Dong, S.; Gao, Z.; Zhou, Y. Soft Robotic Glove with Sensing and Force Feedback for Rehabilitation in Virtual Reality. Biomimetics 2023, 8, 83. https://doi.org/10.3390/biomimetics8010083