AI-Driven Control Strategies for Biomimetic Robotics: Trends, Challenges, and Future Directions

Abstract

1. Introduction

2. Biomimetics

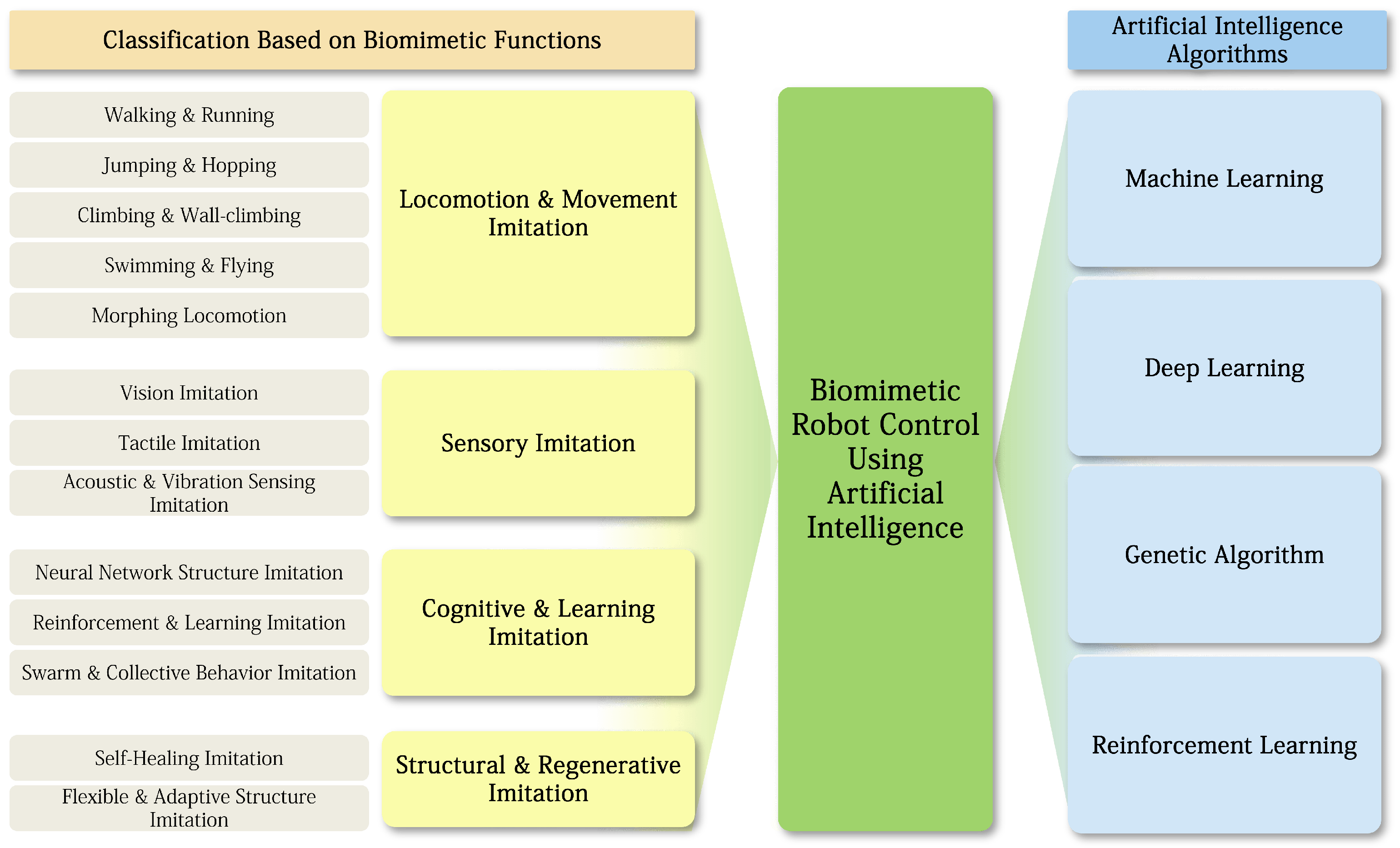

2.1. Locomotion Imitation

2.1.1. Walking and Running Imitation

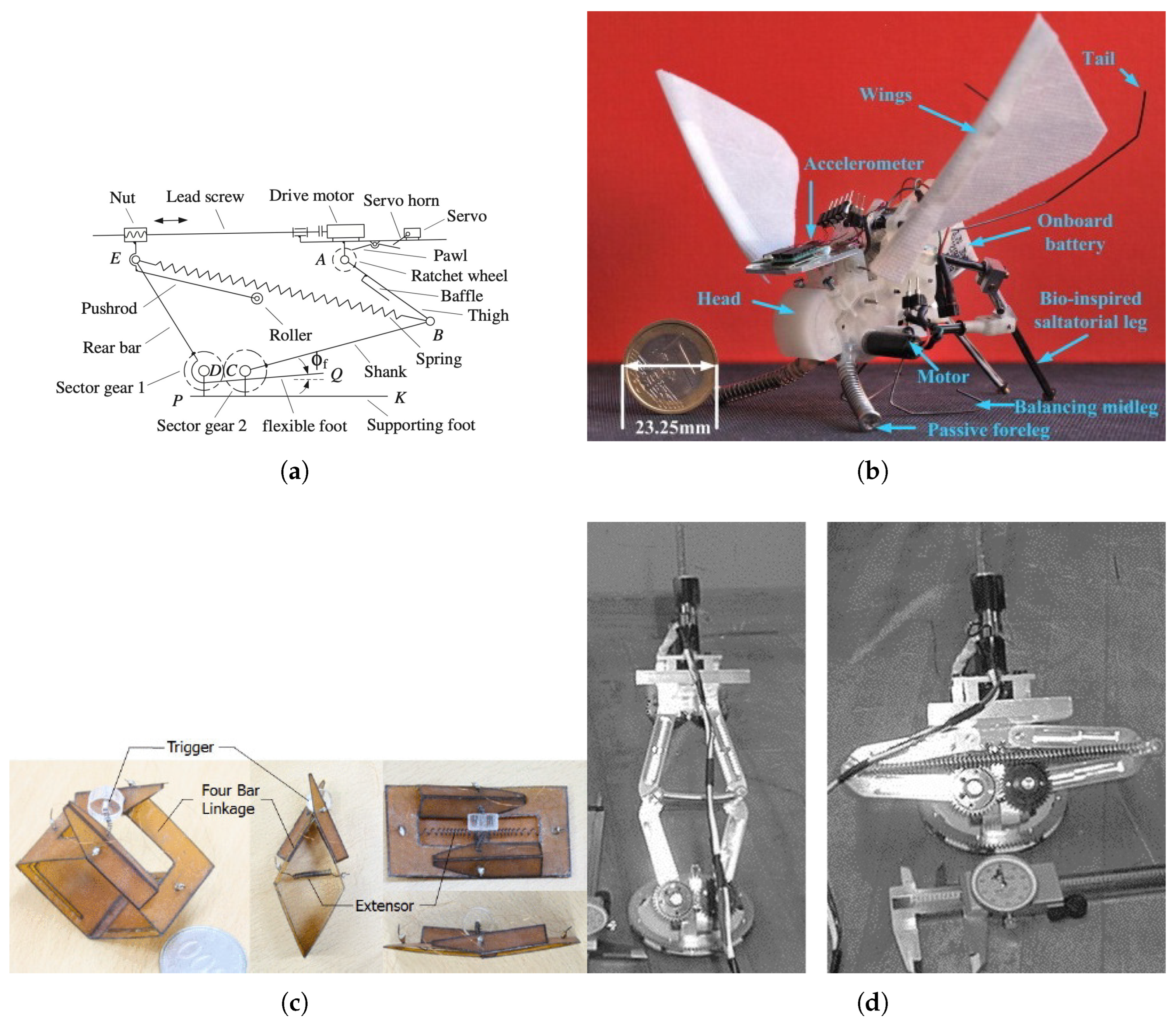

2.1.2. Jumping and Landing Imitation

2.1.3. Climbing and Wall-Climbing Imitation

2.1.4. Swimming and Flying Imitation in Fluid Environments

2.1.5. Morphing Locomotion

2.2. Sensory Imitation

2.2.1. Vision Imitation

2.2.2. Tactile Imitation

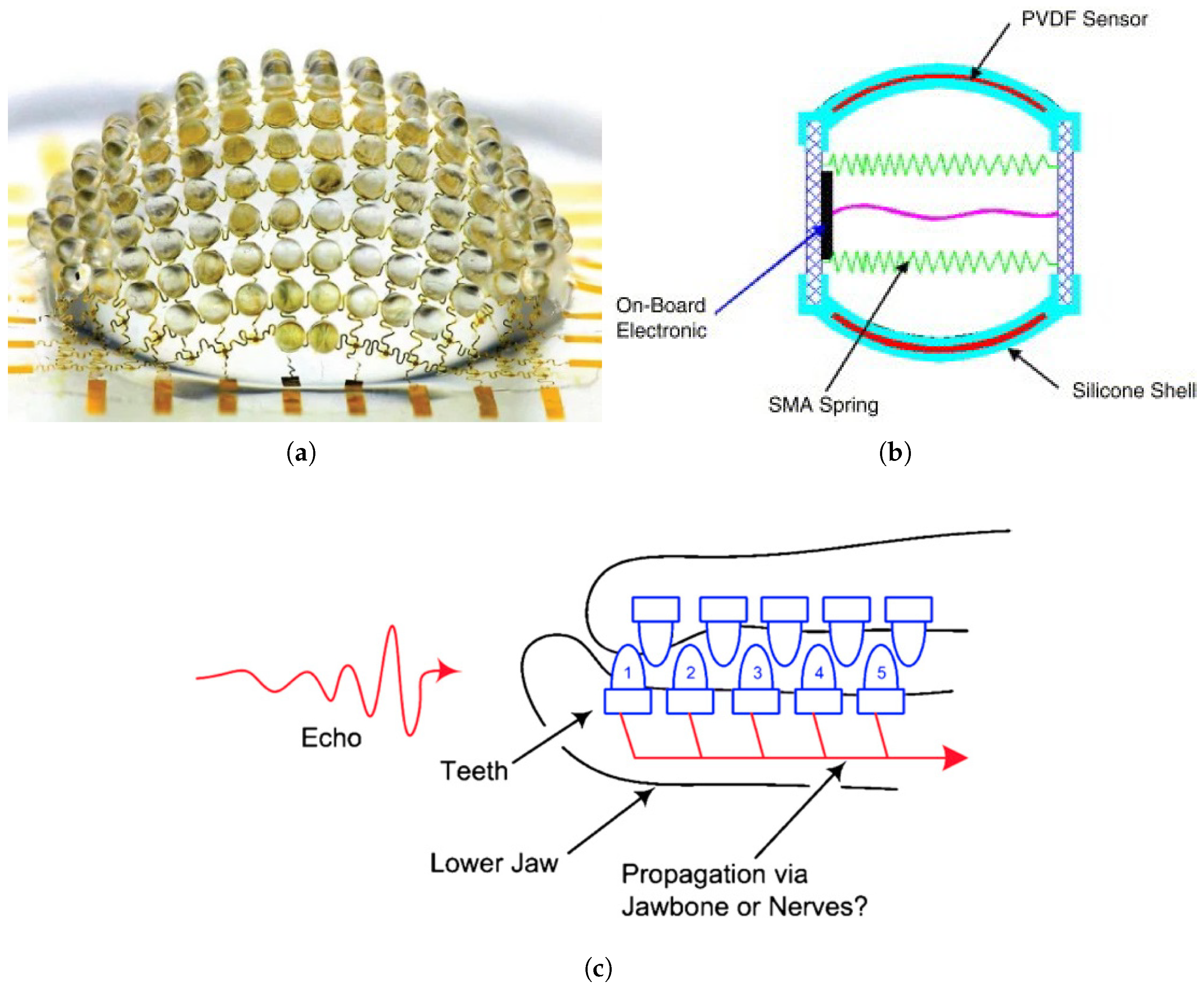

2.2.3. Acoustic and Vibration Sensing Imitation

2.2.4. Proprioceptive Sensing Imitation

2.3. Cognitive and Intelligence Imitation

2.3.1. Neural Network Imitation

2.3.2. Learning Imitation

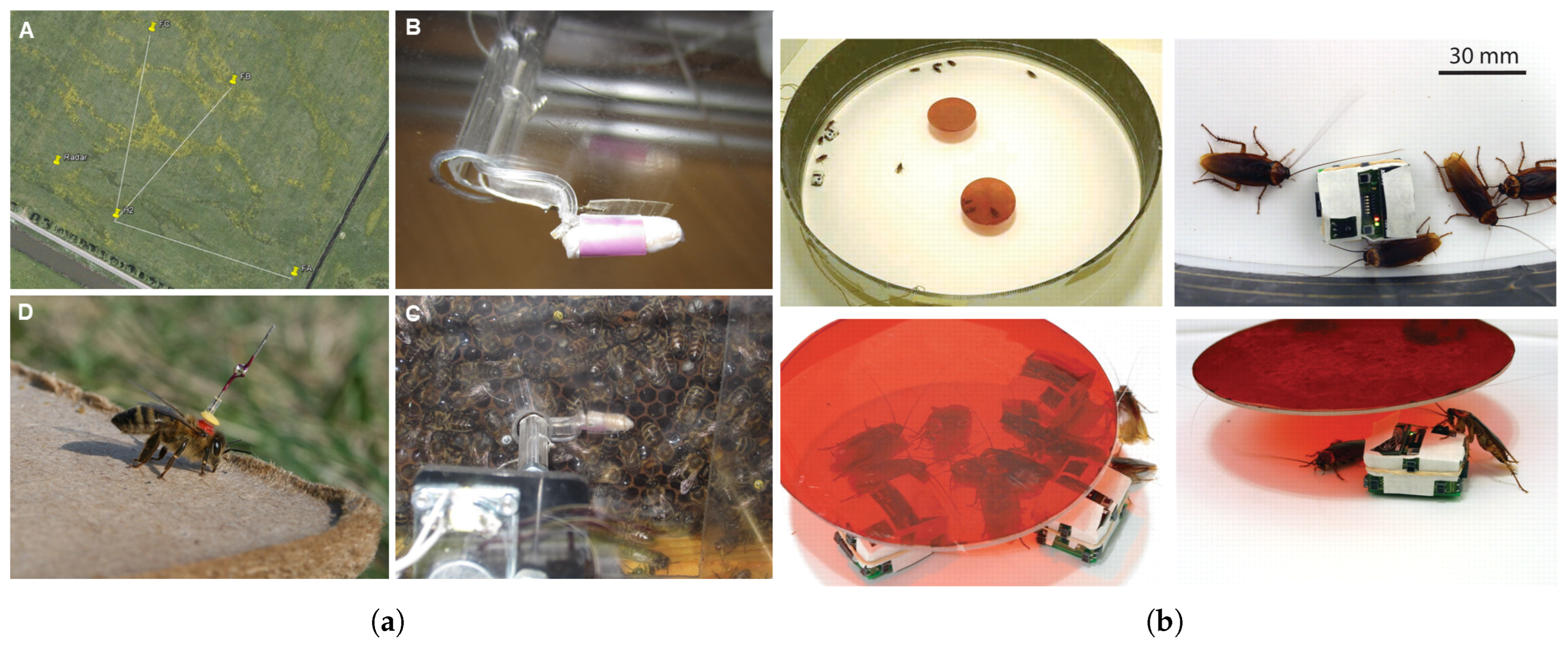

2.3.3. Swarm and Collective Behavior Imitation

2.4. Structure and Restoration Imitation

2.4.1. Self-Healing Imitation

2.4.2. Flexible and Adaptive Structure Imitation

3. Artificial Intelligence

3.1. Reinforcement Learning–Based Control Methods

3.2. Deep Learning–Based Control Methods

3.3. Genetic Algorithm–Based Control Methods

| Algorithm Type | Representative Algorithms | Key Strengths | Weaknesses | Application in Control Methods | Ref. |
|---|---|---|---|---|---|
| Machine Learning | KNN, SVM, Decision Tree, Random Forest, ANN | - Simple structure (KNN) - Strong classification performance in high dimensional space (SVM) - High accuracy, provides feature importance (RF) - Able to learn complex relationships (NN) | - Increased computational cost with large datasets (KNN) - High training time and memory requirements (SVM) - Prone to overfitting (RF) - Requires careful tuning and large datasets (NN) | Environment perception and prediction, data-driven state classification, sensor data-based fundamental decision-making | [48,63,64,65] |
| Deep Learning | CNN, RNN, LSTM, GRU, Transformer, CLIP, BLIP-2 | - Strong for image and local pattern extraction, easy parallelization (CNN) - Superior in handling time-series/sequential data (RNN) - Learns long-term dependencies and bidirectional context (LSTM/GRU/BiLSTM) - Stable for time-series inputs (TCN) - Excellent for parallel processing and context understanding; scalable for large text/multimodal data (Transformer) - Universal image-language integration (Vision Transformer/CLIP/BLIP-2) | - Limited past information reflection, risk of overfitting (CNN) - Gradient vanishing with long sequences, difficult to parallelize (RNN) - Increased complexity and tuning burden (LSTM/GRU) - Sensitive to network design (TCN) - High computation/memory burden, prone to overfitting (Transformer, large multimodal models) | Vision-based control, navigation, voice command processing, vision-based behavior generation | [59,60,66,67,68,69,70,71,72,73] |
| Genetic Algorithms | GA, NEAT, HyperNEAT, GP-based NEAT | - No need for differentiation (GA) - Simultaneous optimization of network structure and weights, supports incremental complexity (NEAT) - Efficient evolution for large scale neural networks (HyperNEAT) - Rapid evolution, efficient architecture search (RBF-NEAT) | - High repetitive computation, slow convergence (GA) - Large computational cost for large networks (NEAT) - Complex indirect encoding design (HyperNEAT) - Requires tuning, burden of hybridization (RBF-NEAT) | Co-evolution of robot morphology and control strategy, neural network architecture optimization, PID/ANFIS tuning | [61,62,74,75,76,77,78,79] |
| Reinforcement Learning | DQN, PPO, TRPO, A3C, SAC, TD3 | - Effective in discrete state spaces, supports experience replay (Q-learning/DQN) - Efficient parallel training and high sample efficiency (A2C/A3C) - Stable convergence with policy-based methods, suitable for continuous control (TRPO/PPO) - Off-policy; excels in continuous/high-dimensional control, good exploration-exploitation balance (SAC/TD3/DDPG) - High energy efficiency, fast learning with SNN integration (DRL + SNN) | - Limited in continuous/complex environments (Q-learning/DQN) - Requires reward design and parameter tuning (A2C/A3C) - High computational load, sensitive to parameter setting (TRPO/PPO) - Performance heavily affected by environment/reward design, tuning difficulty (SAC/TD3/DDPG) - Hardware deployment complexity (DRL + SNN) | Autonomous locomotion, underwater/aerial robot path optimization, robotic manipulation control | [54,57,58,64,80,81,82,83,84,85] |
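The table lists PID tuning as one application of genetic algorithms and notes that a GA needs no gradient of the objective. The following is a minimal sketch of that idea, not the method of any cited work. The first-order plant, the gain bounds, the cost function (integrated absolute error), and every function name here are illustrative assumptions.

```python
import random

# Assumed search bounds for (kp, ki, kd); chosen only for illustration.
BOUNDS = [(0.0, 20.0), (0.0, 20.0), (0.0, 0.5)]


def simulate_pid(kp, ki, kd, steps=200, dt=0.01):
    """Integrated absolute error of a PID loop tracking a unit step.

    The plant is a toy first-order system dx/dt = -x + u (an assumption).
    """
    x = 0.0
    integral = 0.0
    prev_err = 1.0  # error at t = 0, so the first derivative term is zero
    cost = 0.0
    for _ in range(steps):
        err = 1.0 - x
        integral += err * dt
        deriv = (err - prev_err) / dt
        u = kp * err + ki * integral + kd * deriv
        u = max(-10.0, min(10.0, u))  # actuator saturation
        x += (-x + u) * dt            # explicit Euler step of the plant
        prev_err = err
        cost += abs(err) * dt
    return cost


def mutate(ind, rng, rate=0.3):
    """Gaussian mutation per gene, clipped back into BOUNDS."""
    child = []
    for gene, (lo, hi) in zip(ind, BOUNDS):
        if rng.random() < rate:
            gene += rng.gauss(0.0, 0.1 * (hi - lo))
        child.append(min(hi, max(lo, gene)))
    return child


def crossover(a, b, rng):
    """Uniform crossover: each gene comes from either parent."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def tournament(pop, costs, rng, k=3):
    """Return the lowest-cost individual among k random picks."""
    picks = rng.sample(range(len(pop)), k)
    return pop[min(picks, key=lambda i: costs[i])]


def ga_tune_pid(pop_size=20, generations=15, seed=0):
    """Evolve PID gains; returns (best_gains, best_cost_per_generation)."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        costs = [simulate_pid(*ind) for ind in pop]
        best = min(range(pop_size), key=lambda i: costs[i])
        history.append(costs[best])
        next_pop = [pop[best]]  # elitism: the best individual survives unchanged
        while len(next_pop) < pop_size:
            p1 = tournament(pop, costs, rng)
            p2 = tournament(pop, costs, rng)
            next_pop.append(mutate(crossover(p1, p2, rng), rng))
        pop = next_pop
    costs = [simulate_pid(*ind) for ind in pop]
    best = min(range(pop_size), key=lambda i: costs[i])
    history.append(costs[best])
    return pop[best], history


if __name__ == "__main__":
    gains, history = ga_tune_pid()
    print("best gains (kp, ki, kd):", gains)
    print("best cost per generation:", history)
```

Because the fitness is obtained purely by simulation, the same loop would work for a non-differentiable or black-box plant. The price is the repeated evaluation that the table lists as a weakness: every individual is simulated in every generation.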

4. AI-Based Control of Biomimetic Robots

4.1. AI for the Control of Locomotion-Mimicking Biomimetic Robots

4.1.1. Walking and Running Imitation

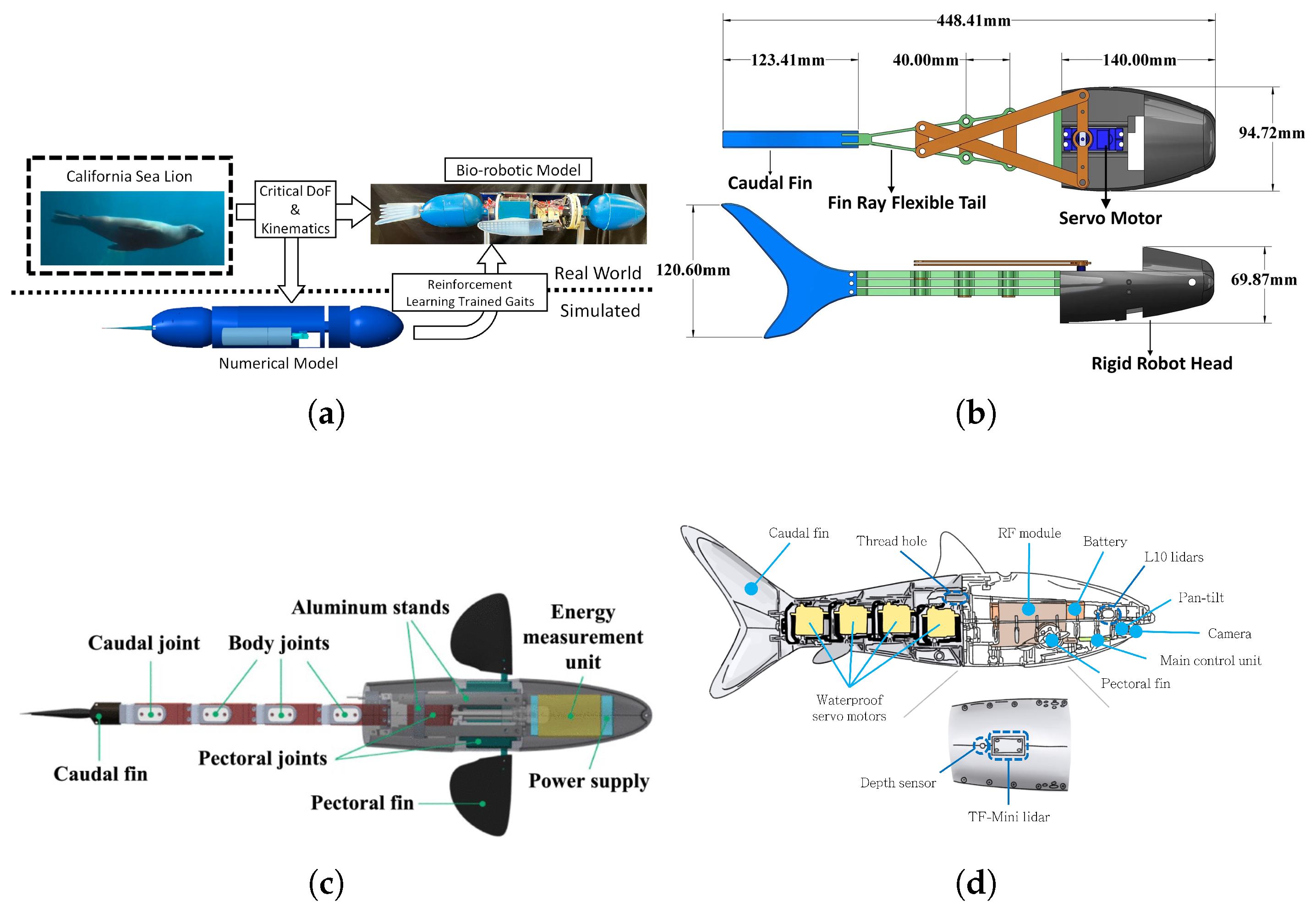

4.1.2. Swimming and Underwater Locomotion Imitation

4.1.3. Morphing Locomotion Imitation

4.2. AI for the Control of Sensory-Mimicking Biomimetic Robots

4.2.1. Vision Imitation

4.2.2. Tactile Imitation

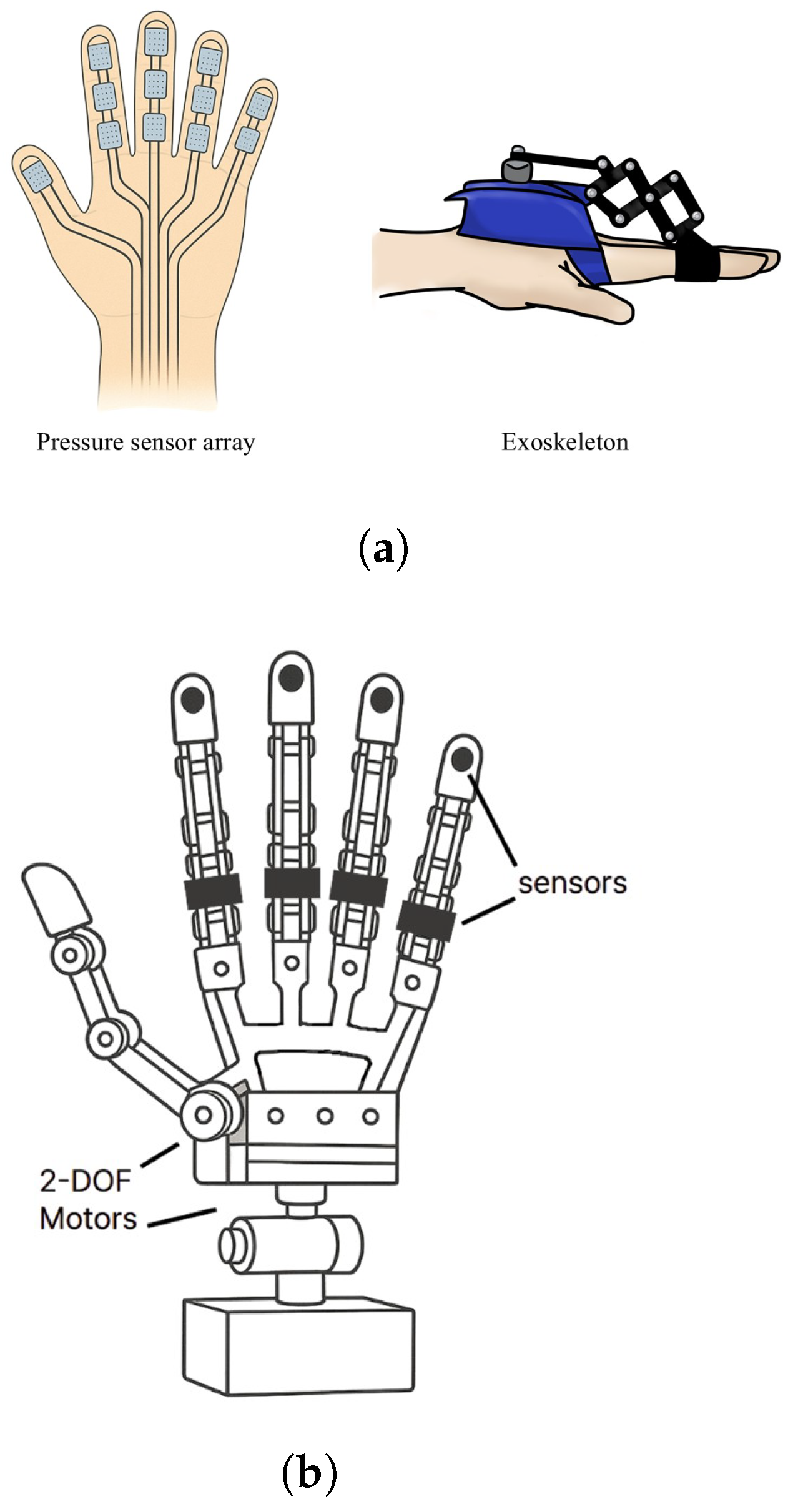

4.2.3. Acoustic and Vibration Sensing Imitation

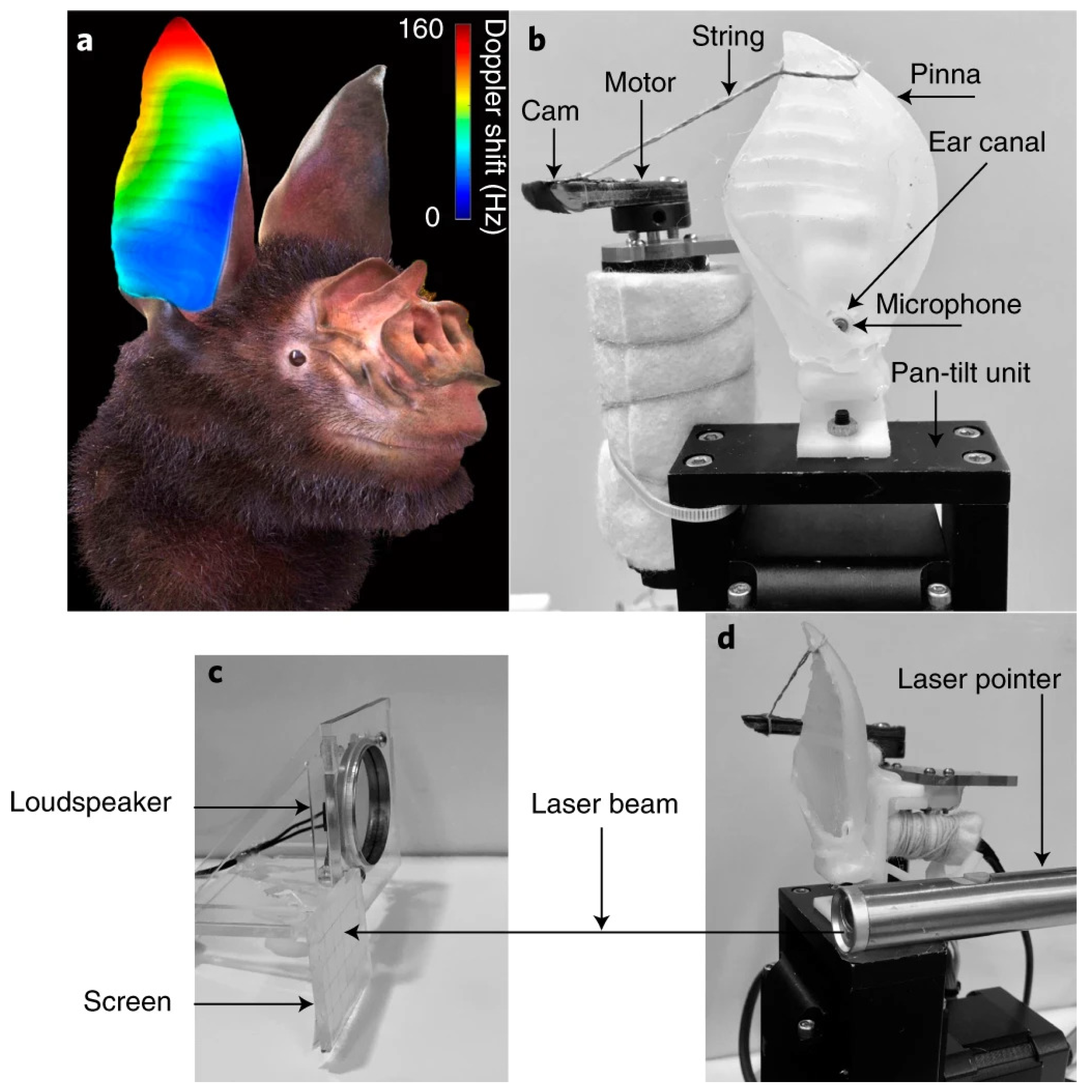

4.3. AI for the Control of Cognitive and Intelligence-Mimicking Robots

4.3.1. Adaptive Neural Imitation

4.3.2. Swarm Behavior Imitation

4.4. AI for the Control of Structural and Regenerative Biomimetic Robots

4.4.1. Self-Healing Imitation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| DOF | Degree-of-Freedom |

| ACN | Adaptive Coordination Network |

| AFC | Active Flow Control |

| AHHS | Artificial Homeostatic Hormone System |

| AI | Artificial Intelligence |

| ANFIS | Adaptive Neuro-Fuzzy Inference System |

| ANFIS-GA | ANFIS-Genetic Algorithm |

| ANN | Artificial Neural Network |

| AP-MEM | biologically inspired muscle stimulation model |

| BCF | Body / Caudal Fin |

| BLIP | Bootstrapping Language-Image Pre-training |

| BMAI | Brain-Morphic Artificial Intelligence |

| BREAD | Biomimetic Research for Energy-efficient AI Designs |

| BSNN | Basic Spiking Neural Network |

| CGR | Climbing Gecko Robot |

| CNN | Convolutional Neural Network |

| CPPN | Compositional Pattern Producing Network |

| CPG | Central Pattern Generator |

| DL | Deep Learning |

| DLQR | Discrete-time Linear Quadratic Regulator |

| DOAJ | Directory of Open Access Journals |

| DQN | Deep Q-Network |

| DS-GMR | Dynamical-System Gaussian Mixture Regression |

| DSP | Digital Signal Processing |

| DRL | Deep Reinforcement Learning |

| EA | Evolutionary Algorithm |

| EMG | Electromyography |

| ETFE | Ethylene-Tetrafluoroethylene |

| FEM | Finite Element Method |

| FPGA | Field-Programmable Gate Array |

| FSR | Force-Sensing Resistor |

| FUM | Ferdowsi University of Mashhad |

| GA | Genetic Algorithm |

| GMM | Gaussian Mixture Model |

| GMR | Gaussian Mixture Regression |

| GPS | Global Positioning System |

| GRN | Gene Regulatory Network |

| GRU | Gated Recurrent Unit |

| HMAX | Hierarchical Model and X |

| HMM | Hidden Markov Model |

| I4.0 | Industry 4.0 |

| IMU | Inertial Measurement Unit |

| LIF | Leaky-Integrate-and-Fire |

| LSTM | Long Short-Term Memory |

| LVPS | Low-Voltage Power Supply |

| MDP | Markov Decision Process |

| MF | Membership Function |

| ML | Machine Learning |

| MLP | Multilayer Perceptron |

| MPC | Model Predictive Control |

| MPF | Muscle-Physiology-Inspired Fin |

| NMPC | Non-linear Model Predictive Control |

| NN | Neural Network |

| PID | Proportional-Integral-Derivative |

| PPO | Proximal Policy Optimization |

| PPC | Particle Swarm Optimization |

| PSO | Particle Swarm Optimization |

| PVDF | Poly-Vinylidene Fluoride |

| RBF-NEAT | Radial-Basis-Function NEAT |

| RF | Random Forest |

| RGR | Rigid Gecko Robot |

| RL | Reinforcement Learning |

| RMSE | Root Mean Square Error |

| RNN | Recurrent Neural Network |

| RRT | Rapidly-exploring Random Tree |

| SAC | Soft Actor-Critic |

| SMA | Shape-Memory Alloy |

| SNN | Spiking Neural Network |

| STIFF-FLOP | STIFFness-controllable Flexible and Learnable manipulator for surgical OPerations |

| STOMP | Stochastic Trajectory Optimization for Motion Planning |

| SVM | Support Vector Machine |

| TCN | Temporal Convolutional Network |

| TORCS | The Open Racing Car Simulator |

| TRPO | Trust-Region Policy Optimization |

| UKF | Unscented Kalman Filter |

| UUV | Unmanned Underwater Vehicle |

| VGG | Visual Geometry Group |

| VMP | Velocity-based Movement Primitive |

References

- Song, Y.M.; Xie, Y.; Malyarchuk, V.; Xiao, J.; Jung, I.; Choi, K.J.; Liu, Z.; Park, H.; Lu, C.; Kim, R.H.; et al. Digital cameras with designs inspired by the arthropod eye. Nature 2013, 497, 95–99.

- El-Hussieny, H. Real-time deep learning-based model predictive control of a 3-DOF biped robot leg. Sci. Rep. 2024, 14, 16243.

- Cai, Y.; Bi, S.; Zheng, L. Design Optimization of a Bionic Fish with Multi-Joint Fin Rays. Adv. Robot. 2012, 26, 177–196.

- Malekzadeh, M.S.; Calinon, S.; Bruno, D.; Caldwell, D.G. Learning by imitation with the STIFF-FLOP surgical robot: A biomimetic approach inspired by octopus movements. Robot. Biomimetics 2014, 1, 13.

- Yan, S.; Wu, Z.; Wang, J.; Huang, Y.; Tan, M.; Yu, J. Real-World Learning Control for Autonomous Exploration of a Biomimetic Robotic Shark. IEEE Trans. Ind. Electron. 2022, 70, 3966–3974.

- Halloy, J.; Sempo, G.; Caprari, G.; Rivault, C.; Asadpour, M.; Tâche, F.; Saïd, I.; Durier, V.; Canonge, S.; Amé, J.M.; et al. Social Integration of Robots into Groups of Cockroaches to Control Self-Organized Choices. Science 2007, 318, 1155–1158.

- Schramm, L.; Jin, Y.; Sendhoff, B. Emerged Coupling of Motor Control and Morphological Development in Evolution of Multi-cellular Animats. In Advances in Artificial Life; Springer: Berlin/Heidelberg, Germany, 2011.

- Guo, H.; Meng, Y.; Jin, Y. A cellular mechanism for multi-robot construction via evolutionary multi-objective optimization of a gene regulatory network. Biosystems 2009, 98, 193–203.

- Lee, J.; Sitte, J. Morphogenetic Evolvable Hardware Controllers for Robot Walking. In Proceedings of the 2nd International Symposium on Autonomous Minirobots for Research and Edutainment, Brisbane, Australia, 18–20 February 2003; Centre for IT Innovation, QUT: Brisbane, Australia, 2003; pp. 53–62.

- Xu, Z.; Yang, S.X.; Gadsden, S.A. Enhanced Bioinspired Backstepping Control for a Mobile Robot With Unscented Kalman Filter. IEEE Access 2020, 8, 125899–125908.

- Chai, H.; Ge, W.; Yang, F.; Yang, G. Autonomous jumping robot with regulable trajectory and flexible feet. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2014, 228, 2820–2832.

- Li, F.; Liu, W.; Fu, X.; Bonsignori, G.; Scarfogliero, U.; Stefanini, C.; Dario, P. Jumping like an insect: Design and dynamic optimization of a jumping mini robot based on bio-mimetic inspiration. Mechatronics 2012, 22, 167–176.

- Burrows, M. Froghopper insects leap to new heights. Nature 2003, 424, 509.

- Nguyen, Q.V.; Park, H.C. Design and Demonstration of a Locust-Like Jumping Mechanism for Small-Scale Robots. J. Bionic Eng. 2012, 9, 271–281.

- Koh, J.S.; Jung, S.P.; Noh, M.; Kim, S.W.; Cho, K.J. Flea inspired catapult mechanism with active energy storage and release for small scale jumping robot. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 26–31.

- Fiorini, P.; Burdick, J. The Development of Hopping Capabilities for Small Robots. Auton. Robot. 2003, 14, 239–254.

- Menon, C.; Sitti, M. A Biomimetic Climbing Robot Based on the Gecko. J. Bionic Eng. 2006, 3, 115–125.

- Hawkes, E.W.; Ulmen, J.; Esparza, N.; Cutkosky, M.R. Scaling walls: Applying dry adhesives to the real world. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 5100–5106.

- Andrada, E.; Mämpel, J.; Schmidt, A.; Fischer, M.; Karguth, A.; Witte, H. From biomechanics of rats’ inclined locomotion to a climbing robot. Int. J. Des. Nat. Ecodynamics 2013, 8, 192–212.

- Ji, A.; Zhao, Z.; Manoonpong, P.; Wang, W.; Chen, G.; Dai, Z. A Bio-inspired Climbing Robot with Flexible Pads and Claws. J. Bionic Eng. 2018, 15, 368–378.

- Ajanic, E.; Paolini, A.; Coster, C.; Floreano, D.; Johansson, C. Robotic Avian Wing Explains Aerodynamic Advantages of Wing Folding and Stroke Tilting in Flapping Flight. Adv. Intell. Syst. 2022, 5, 2200148.

- Ajanic, E.; Feroskhan, M.; Mintchev, S.; Noca, F.; Floreano, D. Bio-Inspired Synergistic Wing and Tail Morphing Extends Flight Capabilities of Drones. arXiv 2020.

- Li, T.; Li, G.; Liang, Y.; Cheng, T.; Dai, J.; Yang, X.; Liu, B.; Zeng, Z.; Huang, Z.; Luo, Y.; et al. Fast-moving soft electronic fish. Sci. Adv. 2017, 3, e1602045.

- Georgiades, C.; Nahon, M.; Buehler, M. Simulation of an underwater hexapod robot. Ocean Eng. 2009, 36, 39–47.

- Behrens, M.R.; Ruder, W.C. Smart Magnetic Microrobots Learn to Swim with Deep Reinforcement Learning. Adv. Intell. Syst. 2022, 4, 2200023.

- Wang, R.; Wang, S.; Wang, Y.; Cheng, L.; Tan, M. Development and Motion Control of Biomimetic Underwater Robots: A Survey. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 833–844.

- Schmickl, T. Major Feedbacks that Support Artificial Evolution in Multi-Modular Robotics. In Proceedings of the International Workshop Exploring New Horizons in Evolutionary Design of Robots at IROS’09, St. Louis, MO, USA, 11 October 2009.

- Liu, W.; Menciassi, A.; Scapellato, S.; Dario, P.; Chen, Y. A biomimetic sensor for a crawling minirobot. Robot. Auton. Syst. 2006, 54, 513–528.

- Tao, K.; Yu, J.; Zhang, J.; Bao, A.; Hu, H.; Ye, T.; Ding, Q.; Wang, Y.; Lin, H.; Wu, J.; et al. Deep-Learning Enabled Active Biomimetic Multifunctional Hydrogel Electronic Skin. ACS Nano 2023, 17, 16160–16173.

- Liu, W.; Li, F.; Stefanini, C.; Chen, D.; Dario, P. Biomimetic flexible/compliant sensors for a soft-body lamprey-like robot. Robot. Auton. Syst. 2010, 58, 1138–1148.

- Dobbins, P. Dolphin sonar—modelling a new receiver concept. Bioinspir. Biomim. 2007, 2, 19–29.

- Cianchetti, M.; Calisti, M.; Margheri, L.; Kuba, M.; Laschi, C. Bioinspired locomotion and grasping in water: The soft eight-arm OCTOPUS robot. Bioinspir. Biomim. 2015, 10, 035003.

- Wensing, P.M.; Wang, A.; Seok, S.; Otten, D.; Lang, J.; Kim, S. Proprioceptive actuator design in the MIT Cheetah: Impact mitigation and high-bandwidth physical interaction for dynamic legged robots. IEEE Trans. Robot. 2017, 33, 509–522.

- Lloyd, J.; Lepora, N.F. Goal-driven robotic pushing using tactile and proprioceptive feedback. IEEE Trans. Robot. 2021, 38, 1201–1212.

- Liu, X.; Han, X.; Hong, W.; Wan, F.; Song, C. Proprioceptive learning with soft polyhedral networks. Int. J. Robot. Res. 2024, 43, 1916–1935.

- Krichmar, J.L.; Severa, W.; Khan, M.S.; Olds, J.L. Making BREAD: Biomimetic Strategies for Artificial Intelligence Now and in the Future. Front. Neurosci. 2019, 13, 666.

- Levi, T.; Nanami, T.; Tange, A.; Aihara, K.; Kohno, T. Development and Applications of Biomimetic Neuronal Networks Toward BrainMorphic Artificial Intelligence. IEEE Trans. Circuits Syst. II Express Briefs 2018, 65, 577–581.

- Grossberg, S. Contour Enhancement, Short Term Memory, and Constancies in Reverberating Neural Networks. Stud. Appl. Math. 1973, 52, 213–257.

- Landgraf, T.; Bierbach, D.; Kirbach, A.; Cusing, R.; Oertel, M.; Lehmann, K.; Greggers, U.; Menzel, R.; Rojas, R. Dancing Honey bee Robot Elicits Dance-Following and Recruits Foragers. arXiv 2018.

- Hamann, H.; Worn, H.; Crailsheim, K.; Schmickl, T. Spatial macroscopic models of a bio-inspired robotic swarm algorithm. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 1415–1420.

- Guo, H.; Jin, Y.; Meng, Y. A morphogenetic framework for self-organized multirobot pattern formation and boundary coverage. ACM Trans. Auton. Adapt. Syst. 2012, 7, 1–23.

- Jin, Y.; Guo, H.; Meng, Y. A Hierarchical Gene Regulatory Network for Adaptive Multirobot Pattern Formation. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2012, 42, 805–816.

- Blackiston, D.; Lederer, E.; Kriegman, S.; Garnier, S.; Bongard, J.; Levin, M. A cellular platform for the development of synthetic living machines. Sci. Robot. 2021, 6, eabf1571.

- Schaffer, S.; Pamu, H.H.; Webster-Wood, V.A. Hitting the Gym: Reinforcement Learning Control of Exercise-Strengthened Biohybrid Robots in Simulation. arXiv 2024.

- Kriegman, S.; Blackiston, D.; Levin, M.; Bongard, J. A scalable pipeline for designing reconfigurable organisms. Proc. Natl. Acad. Sci. USA 2020, 117, 1853–1859.

- Kriegman, S.; Blackiston, D.; Levin, M.; Bongard, J. Kinematic self-replication in reconfigurable organisms. Proc. Natl. Acad. Sci. USA 2021, 118, e2112672118.

- Cocho-Bermejo, A.; Vogiatzaki, M. Phenotype Variability Mimicking as a Process for the Test and Optimization of Dynamic Facade Systems. Biomimetics 2022, 7, 85.

- Woschank, M.; Rauch, E.; Zsifkovits, H. A Review of Further Directions for Artificial Intelligence, Machine Learning, and Deep Learning in Smart Logistics. Sustainability 2020, 12, 3760.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv 2013.

- Schulman, J.; Levine, S.; Moritz, P.; Jordan, M.I.; Abbeel, P. Trust Region Policy Optimization. arXiv 2017.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.P.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. arXiv 2016.

- He, X.; Yuan, C.; Zhou, W.; Yang, R.; Held, D.; Wang, X. Visual Manipulation with Legs. arXiv 2024.

- Mock, J.W.; Muknahallipatna, S.S. Sim-to-Real: A Performance Comparison of PPO, TD3, and SAC Reinforcement Learning Algorithms for Quadruped Walking Gait Generation. J. Intell. Learn. Syst. Appl. 2024, 16, 23–43.

- Zanatta, L.; Barchi, F.; Manoni, S.; Tolu, S.; Bartolini, A.; Acquaviva, A. Exploring spiking neural networks for deep reinforcement learning in robotic tasks. Sci. Rep. 2024, 14, 30648.

- Liu, R.; Nageotte, F.; Zanne, P.; de Mathelin, M.; Dresp-Langley, B. Deep Reinforcement Learning for the Control of Robotic Manipulation: A Focussed Mini-Review. Robotics 2021, 10, 22.

- Vignon, C.; Rabault, J.; Vinuesa, R. Recent advances in applying deep reinforcement learning for flow control: Perspectives and future directions. Phys. Fluids 2023, 35, 031301.

- Esteso, A.; Peidro, D.; Mula, J.; Díaz-Madroñero, M. Reinforcement learning applied to production planning and control. Int. J. Prod. Res. 2023, 61, 5772–5789.

- Bojarski, M.; Testa, D.D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to End Learning for Self-Driving Cars. arXiv 2016.

- Chen, C.; Seff, A.; Kornhauser, A.; Xiao, J. DeepDriving: Learning Affordance for Direct Perception in Autonomous Driving. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2722–2730.

- Selma, B.; Chouraqui, S.; Selma, B. A Genetic Algorithm-Based Neuro-Fuzzy Controller for Unmanned Aerial Vehicle Control. Int. J. Appl. Metaheuristic Comput. 2022, 13, 1–23.

- Torres-Salinas, H.; Rodríguez-Reséndiz, J.; Cruz-Miguel, E.E.; Ángeles Hurtado, L.A. Fuzzy Logic and Genetic-Based Algorithm for a Servo Control System. Micromachines 2022, 13, 586.

- AhmedK, A.; Aljahdali, S.; Naimatullah Hussain, S. Comparative Prediction Performance with Support Vector Machine and Random Forest Classification Techniques. Int. J. Comput. Appl. 2013, 69, 12–16.

- Li, Y. Deep Reinforcement Learning: An Overview. arXiv 2018.

- Boateng, E.Y.; Otoo, J.; Abaye, D.A. Basic Tenets of Classification Algorithms K-Nearest-Neighbor, Support Vector Machine, Random Forest and Neural Network: A Review. J. Data Anal. Inf. Process. 2020, 8, 341–357.

- Perumal, T.; Mustapha, N.; Mohamed, R.; Shiri, F.M. A Comprehensive Overview and Comparative Analysis on Deep Learning Models. J. Artif. Intell. 2024, 6, 301–360.

- Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; Dauphin, Y.N. Convolutional Sequence to Sequence Learning. arXiv 2017.

- Yin, W.; Kann, K.; Yu, M.; Schütze, H. Comparative Study of CNN and RNN for Natural Language Processing. arXiv 2017.

- Selvin, S.; Vinayakumar, R.; Gopalakrishnan, E.A.; Menon, V.K.; Soman, K.P. Stock price prediction using LSTM, RNN and CNN-sliding window model. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 1643–1647.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2016.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv 2021.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. arXiv 2023.

- De Figueiredo, R.; Toso, B.; Schmith, J. Auto-Tuning PID Controller Based on Genetic Algorithm. In Disturbance Rejection Control; Shamsuzzoha, M., Raja, G.L., Eds.; IntechOpen: Rijeka, Croatia, 2023.

- Tsoy, Y.; Spitsyn, V. Using genetic algorithm with adaptive mutation mechanism for neural networks design and training. In Proceedings of the 9th Russian-Korean International Symposium on Science and Technology, 2005. KORUS 2005, Novosibirsk, Russia, 26 June–2 July 2005; pp. 709–714.

- Tsoy, Y.; Spitsyn, V. Digital Images Enhancement with Use of Evolving Neural Networks. In Parallel Problem Solving from Nature—PPSN IX; Runarsson, T.P., Beyer, H.G., Burke, E., Merelo-Guervós, J.J., Whitley, L.D., Yao, X., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 593–602.

- Buk, Z.; Koutník, J.; Šnorek, M. NEAT in HyperNEAT Substituted with Genetic Programming. In Adaptive and Natural Computing Algorithms; Kolehmainen, M., Toivanen, P., Beliczynski, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 243–252.

- Alfaham, A.; Van Raemdonck, S.; Mercelis, S. Genetic NEAT-Based Method for Multi-Class Classification. In Proceedings of the 2024 7th International Conference on Algorithms, Computing and Artificial Intelligence (ACAI), Guangzhou, China, 20–22 December 2024; pp. 1–5.

- Mohabeer, H.; Soyjaudah, K.M.S. A Hybrid Genetic Algorithm and Radial Basis Function NEAT. In Neural Networks and Artificial Intelligence; Springer: Cham, Switzerland, 2014.

- Tan, Z.; Karaköse, M. Comparative Evaluation for Effectiveness Analysis of Policy Based Deep Reinforcement Learning Approaches. Int. J. Comput. Inf. Technol. 2021, 10, 130–139.

- Rio, A.d.; Jimenez, D.; Serrano, J. Comparative Analysis of A3C and PPO Algorithms in Reinforcement Learning: A Survey on General Environments. IEEE Access 2024, 12, 146795–146806.

- Majid, A.Y.; Saaybi, S.; Francois-Lavet, V.; Prasad, R.V.; Verhoeven, C. Deep Reinforcement Learning Versus Evolution Strategies: A Comparative Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 11939–11957.

- Fuente, N.D.L.; Guerra, D.A.V. A Comparative Study of Deep Reinforcement Learning Models: DQN vs. PPO vs A2C. arXiv 2024.

- Ejaz, M.M.; Tang, T.B.; Lu, C.K. Autonomous Visual Navigation using Deep Reinforcement Learning: An Overview. In Proceedings of the 2019 IEEE Student Conference on Research and Development (SCOReD), Bandar Seri Iskandar, Malaysia, 15–17 October 2019; pp. 294–299.

- Tan, Z.; Karakose, M. Comparative Study for Deep Reinforcement Learning with CNN, RNN, and LSTM in Autonomous Navigation. In Proceedings of the 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), Sakheer, Bahrain, 26–27 October 2020; pp. 1–5.

- Foroutannia, A.; Akbarzadeh-T, M.R.; Akbarzadeh, A. A deep learning strategy for EMG-based joint position prediction in hip exoskeleton assistive robots. Biomed. Signal Process. Control. 2022, 75, 103557.

- Lee, J.; Hwangbo, J.; Wellhausen, L.; Koltun, V.; Hutter, M. Learning quadrupedal locomotion over challenging terrain. Sci. Robot. 2020, 5, eabc5986.

- Bhatt, A.; Palenicek, D.; Belousov, B.; Argus, M.; Amiranashvili, A.; Brox, T.; Peters, J. CrossQ: Batch normalization in deep reinforcement learning for greater sample efficiency and simplicity. arXiv 2019.

- Bohlinger, N.; Kinzel, J.; Palenicek, D.; Antczak, L.; Peters, J. Gait in Eight: Efficient On-Robot Learning for Omnidirectional Quadruped Locomotion. arXiv 2025.

- Drago, A.; Kadapa, S.; Marcouiller, N.; Kwatny, H.G.; Tangorra, J.L. Using Reinforcement Learning to Develop a Novel Gait for a Bio-Robotic California Sea Lion. Biomimetics 2024, 9, 522.

- Youssef, S.M.; Soliman, M.; Saleh, M.A.; Elsayed, A.H.; Radwan, A.G. Design and control of soft biomimetic pangasius fish robot using fin ray effect and reinforcement learning. Sci. Rep. 2022, 12, 21861.

- Yan, S.; Wu, Z.; Wang, J.; Tan, M.; Yu, J. Efficient Cooperative Structured Control for a Multijoint Biomimetic Robotic Fish. IEEE/ASME Trans. Mechatronics 2020, 26, 2506–2516.

- Wang, Q.; Hong, Z.; Zhong, Y. Learn to swim: Online motion control of an underactuated robotic eel based on deep reinforcement learning. Biomim. Intell. Robot. 2022, 2, 100066.

- Min, S.; Won, J.; Lee, S.; Park, J.; Lee, J. SoftCon: Simulation and control of soft-bodied animals with biomimetic actuators. ACM Trans. Graph. 2019, 38, 208.

- Qiao, H.; Li, Y.; Tang, T.; Wang, P. Introducing Memory and Association Mechanism Into a Biologically Inspired Visual Model. IEEE Trans. Cybern. 2014, 44, 1485–1496.

- Nakada, M.; Zhou, T.; Chen, H.; Weiss, T.; Terzopoulos, D. Deep learning of biomimetic sensorimotor control for biomechanical human animation. ACM Trans. Graph. 2018, 37, 56.

- Balachandar, P.; Michmizos, K.P. A Spiking Neural Network Emulating the Structure of the Oculomotor System Requires No Learning to Control a Biomimetic Robotic Head. arXiv 2020.

- Youssef, I.; Mutlu, M.; Bayat, B.; Crespi, A.; Hauser, S.; Conradt, J.; Bernardino, A.; Ijspeert, A. A Neuro-Inspired Computational Model for a Visually Guided Robotic Lamprey Using Frame and Event Based Cameras. IEEE Robot. Autom. Lett. 2020, 5, 2395–2402.

- Dweiri, Y.M.; AlAjlouni, M.M.; Ayoub, J.R.; Al-Zeer, A.Y.; Hejazi, A.H. Biomimetic Grasp Control of Robotic Hands Using Deep Learning. In Proceedings of the 2023 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 22–24 May 2023; pp. 1–6.

- Gomez, G.; Hotz, P.E. Evolutionary synthesis of grasping through self-exploratory movements of a robotic hand. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 3418–3425.

- Yin, X.; Müller, R. Integration of deep learning and soft robotics for a biomimetic approach to nonlinear sensing. Nat. Mach. Intell. 2021, 3, 507–512.

- Yang, S.; Meng, M. Neural network approaches to dynamic collision-free trajectory generation. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2001, 31, 302–318.

- Shimoda, S.; Kimura, H. Biomimetic Approach to Tacit Learning Based on Compound Control. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2009, 40, 77–90.

- Zeng, C.; Su, H.; Li, Y.; Guo, J.; Yang, C. An Approach for Robotic Leaning Inspired by Biomimetic Adaptive Control. IEEE Trans. Ind. Inform. 2021, 18, 1479–1488.

- Papaspyros, V.; Theraulaz, G.; Sire, C.; Mondada, F. Quantifying the biomimicry gap in biohybrid robot-fish pairs. Bioinspir. Biomim. 2024, 19, 046020.

- Zhang, H.; Liu, L. Intelligent Control of Swarm Robotics Employing Biomimetic Deep Learning. Machines 2021, 9, 236.

| Functional Area | Sub-Functional Area | Control Methods and Structural Characteristics | Applied AI Algorithms | Control Characteristics and Implementation Method | Ref. |
|---|---|---|---|---|---|
| Locomotion imitation | Human gait imitation | Predictive torque-based control | CNN + BiLSTM | Multi-sensor fusion, prediction-angle-based impedance control, network embedded | [86] |
| | 3-DOF bipedal robot locomotion | DNN-prediction embedded MPC | DNN | Real-time torque optimization, Lyapunov stability, NMPC/PID performance comparison | [2] |
| | Multi-legged locomotion (evolutionary local control) | Evolutionary circuit-based distributed control | EL | Parallel operation, sensor-based local control, structural/contextual adaptation | [9] |
| | Quadrupedal locomotion | Direct control using reinforcement learning policy | Deep RL | Adaptation to various terrains in real environments, rapid learning, fast policy transfer | [87] |
| | Quadrupedal locomotion (high-efficiency off-policy RL) | CrossQ off-policy policy learning | CrossQ (off-policy RL) | Rapid acquisition of walking policy within 8 minutes, fast convergence in real environment | [88,89] |
| | Sea lion swimming robot | Predictive trajectory-based roll/pitch/yaw control, biological motion benchmarking | SAC | Performance comparison with biological trajectories, offline training and policy transfer | [90] |
| | Eel-like soft robot | Online reinforcement learning-based propulsion control | SAC | Simulation-to-reality policy transfer, improved energy efficiency and straightness | [93] |
| | Helical propulsion microrobot | 4D continuous control + policy function approximation | SAC | Real-world experiments, policy-to-function approximation, simultaneous improvement of speed and reproducibility | [25] |
| | Soft-bodied robot inspired by mollusks | FEM-based fluid–structure interactive propulsion control + PPO | PPO + AP-MEM | Composite rewards (target speed/angle), superior learning/performance to previous MEM | [94] |
| | Pangasius hybrid robot | PPO-based tail gait control | PPO, A2C, DQN | PPO superiority in stability and performance, inherent nonlinear characteristics | [91] |
| | Multi-jointed fish robot | CPG-based rhythmic control + DDPG closed-loop correction, improved energy efficiency and generalization | Hopfield MLP + DDPG | Cooperative control, experimentally validated energy savings and improved tracking performance | [92] |
| | Continuum robot (STIFF-FLOP) | DS-GMR-based trajectory generalization, self-compensating iterative learning | DS-GMR (GMM + GMR) | Trajectory generalization + self-compensation, more than twofold improvement in imitation through repetition | [4] |
| | Modular self-assembling robot | AHHS-based evolutionary structural control | AHHS + EA | Rapid speed recovery and strategy switching with increasing modules, structural diversity ensured | [27] |
| Sensory imitation | Vision (cortical imitation) | HMAX hierarchical structure + associative memory control | HMAX + Memory/Association | Few-shot recognition, simultaneous improvement in efficiency and accuracy via patch reduction | [95] |
| | Visuo-motor integration | Visual-muscular integrated DNN control | DNN | High-dimensional input integration, spontaneous/reflexive actions, autonomous control of complex motions | [96] |
| | Visual tracking (oculomotor) | SNN-based anatomical circuit control | SNN + Hebbian reward | Improvement in pre/post-training performance and convergence time, real-time servo motor integration | [97] |
| | Vision-CPG (Central Pattern Generator) coupling | LIF SNN-based vision-priority and CPG-coupled control | LIF SNN | Visual stimuli linked to motion, tenfold increase in response speed over frame-based, reduced error | [98] |
| | Pressure-based grip | R-CNN-based pressure time series control | R-CNN (CNN + RNN) | Real-time sensor-control loop, improved grip precision for medical/industrial applications | [99] |
| | Self-exploratory grip strategy | Evolutionary neural network-based adaptive control | Evolutionary algorithm-based neural network | Self-adaptive control, self-learning based on pressure feedback | [100] |
| | Auditory/vibration (bat ear-inspired) | CNN-based Doppler acoustic control | CNN | High-precision direction sensing with single microphone/frequency, real-time dynamic control | [101] |
| Cognition and intelligence imitation | Neural dynamic path generation | Neural field-based path generation | Neural field model | Unsupervised real-time path planning/obstacle avoidance, Lyapunov-based stability verification | [102] |
| | Implicit learning | Distributed neural network-based threshold/weight self-adjustment | Extended Hebbian neural network | Complex motion/balance/generation without external rewards, improved energy efficiency | [103] |
| | Human-like muscle-compliant motion | DMP + impedance adjustment, compliant control with dynamic parameter update | HI-CMP (DMP + impedance) | Insertion/cutting tasks, reduced tracking error/force ripple, prevention of surface damage | [104] |
| | Fish school interaction imitation | DLI-based acceleration distribution prediction + PID tracking | DLI (DNN) + PID | Social distance/alignment behavior clustering in both real and simulated environments | [105] |
| | Asymmetric swarm DNN (Deep Neural Network) | ACN + LDN, LVPS-based single neighbor interaction | DNN (ACN + LDN) + LVPS | Biological plausibility + computational efficiency, implementation of alignment/cohesion/counter-milling | [106] |
| Structural and Regenerative imitation | Muscle-use reinforcement (self-reinforcement) | PPO-based distributed muscle activation control | PPO | PyElastica + Cosserat physical model, adaptive agent, curriculum learning effects | [44] |
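
Several rows above rely on a central pattern generator (CPG) for rhythmic control, for example the multi-jointed fish robot and the vision-CPG coupling entries. As a rough illustration of the idea only, the following minimal sketch integrates a chain of coupled phase oscillators that settles into a traveling wave along the joints. It is not the Hopfield-based CPG of [92] or the LIF SNN of [98]; the function name `simulate_cpg` and all parameter values (joint count, frequency, phase lag, coupling gain, amplitude) are assumptions chosen for illustration.

```python
import math


def simulate_cpg(n_joints=4, freq_hz=1.0, phase_lag=math.pi / 2,
                 coupling=5.0, amplitude=0.3, dt=0.001, duration=5.0):
    """Integrate a chain of coupled phase oscillators with forward Euler.

    Returns the final (unwrapped) phases and the joint-angle trajectory,
    one list of n_joints angles in radians per time step.
    All default values are hypothetical, not taken from any cited work.
    """
    omega = 2.0 * math.pi * freq_hz
    # All joints start in phase; the coupling must create the body wave.
    phases = [0.0] * n_joints
    trajectory = []
    for _ in range(round(duration / dt)):
        new_phases = []
        for i, theta in enumerate(phases):
            dtheta = omega
            # Nearest-neighbour coupling: each joint is pulled toward
            # lagging its anterior neighbour by phase_lag ...
            if i > 0:
                dtheta += coupling * math.sin(phases[i - 1] - theta - phase_lag)
            # ... and toward leading its posterior neighbour by phase_lag.
            if i < n_joints - 1:
                dtheta += coupling * math.sin(phases[i + 1] - theta + phase_lag)
            new_phases.append(theta + dt * dtheta)
        phases = new_phases
        # Map each oscillator phase to a joint-angle command.
        trajectory.append([amplitude * math.sin(p) for p in phases])
    return phases, trajectory


if __name__ == "__main__":
    final_phases, _ = simulate_cpg()
    lags = [final_phases[i - 1] - final_phases[i]
            for i in range(1, len(final_phases))]
    print("steady-state phase lags (rad):", [round(lag, 3) for lag in lags])
```

In a learning-based scheme such as the CPG + DDPG row, a policy would typically adjust parameters like the frequency, amplitude, or phase lag of a generator of this kind rather than command joint angles directly; that division of labor is stated here as a general pattern, not as the specific design of the cited study.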

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jung, H.; Park, S.; Joe, S.; Woo, S.; Choi, W.; Bae, W. AI-Driven Control Strategies for Biomimetic Robotics: Trends, Challenges, and Future Directions. Biomimetics 2025, 10, 460. https://doi.org/10.3390/biomimetics10070460
