Research on Motion Transfer Method from Human Arm to Bionic Robot Arm Based on PSO-RF Algorithm

Abstract

1. Introduction

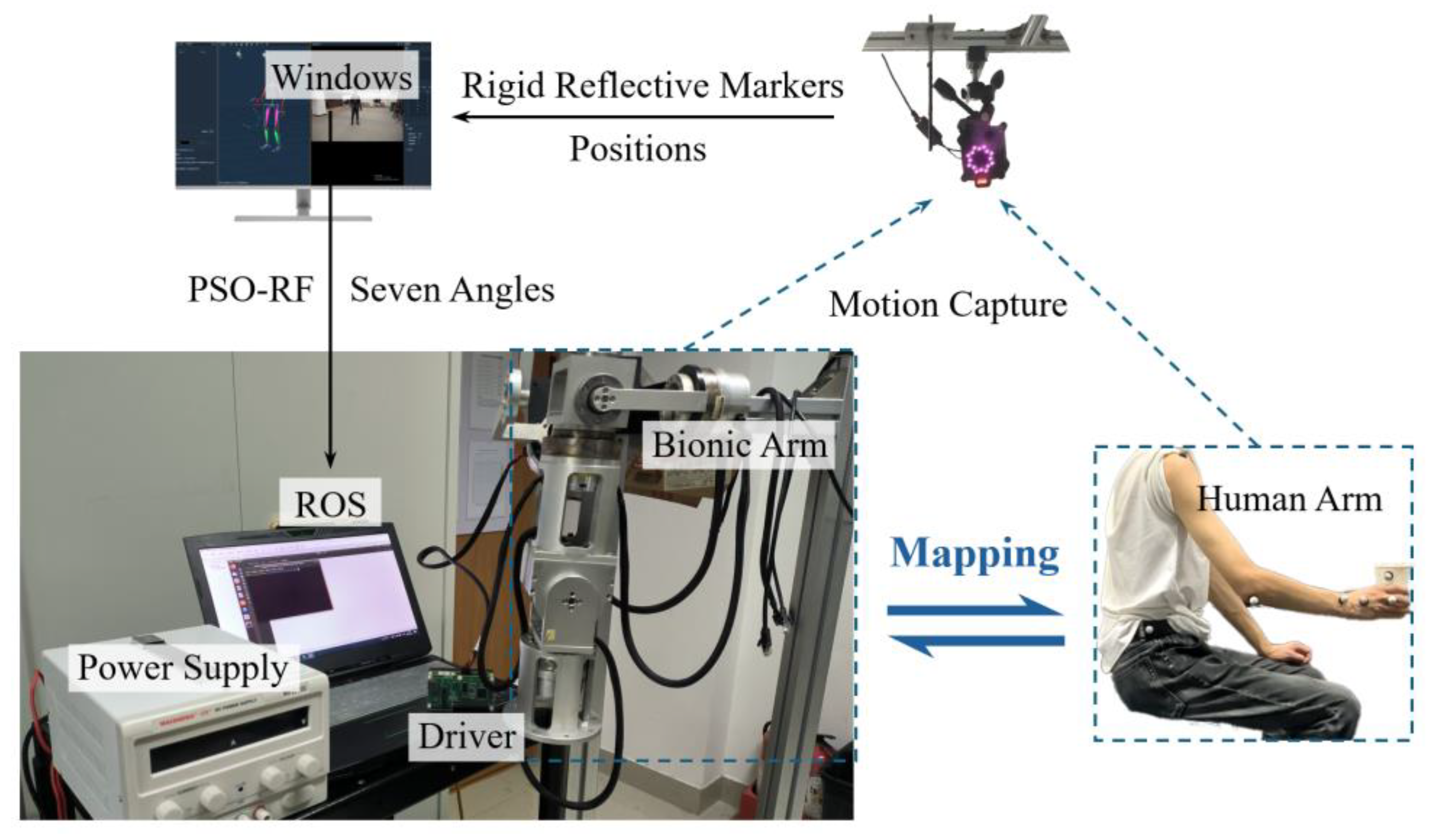

2. Motion Capture Solution

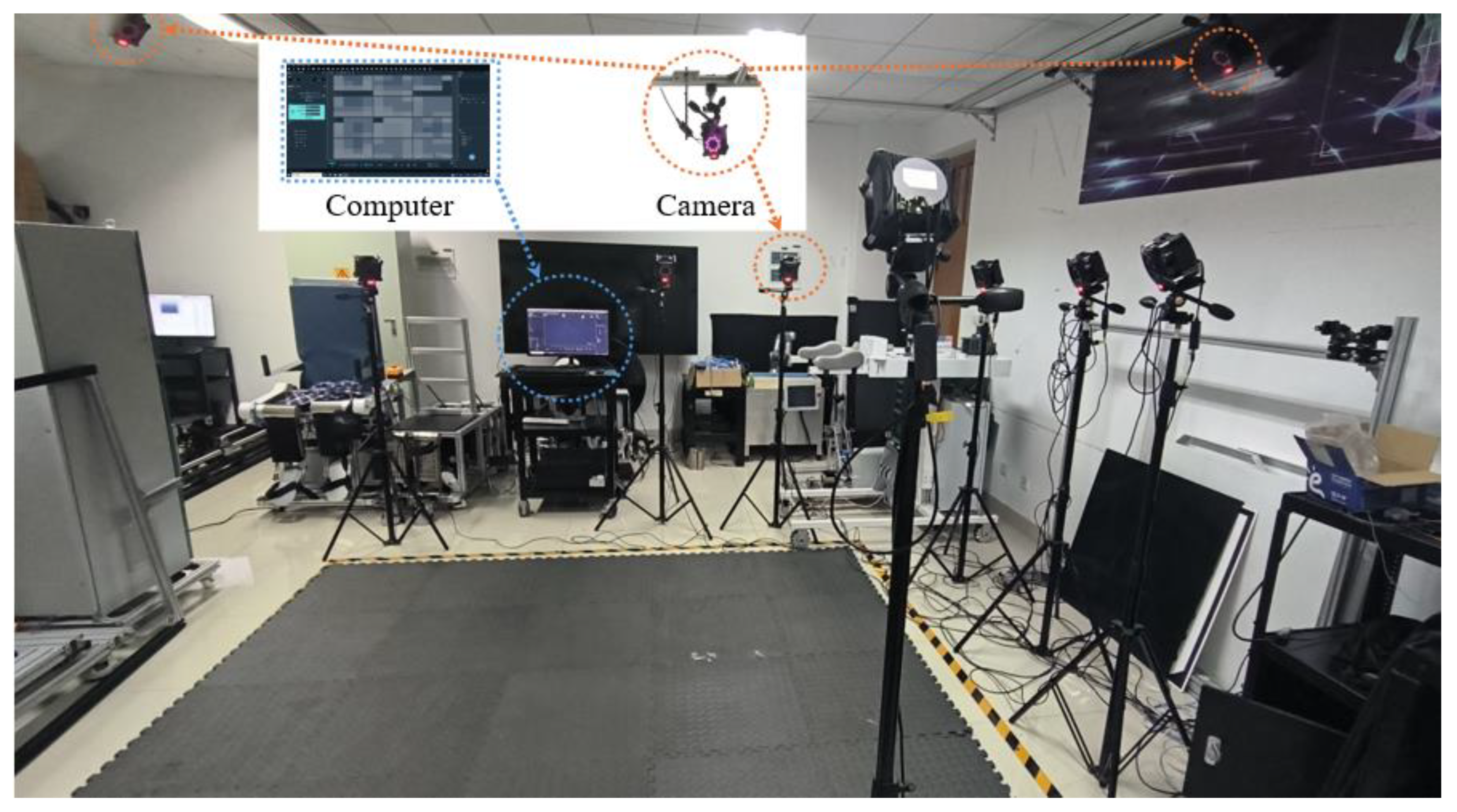

2.1. Arrangement of the Mocap System

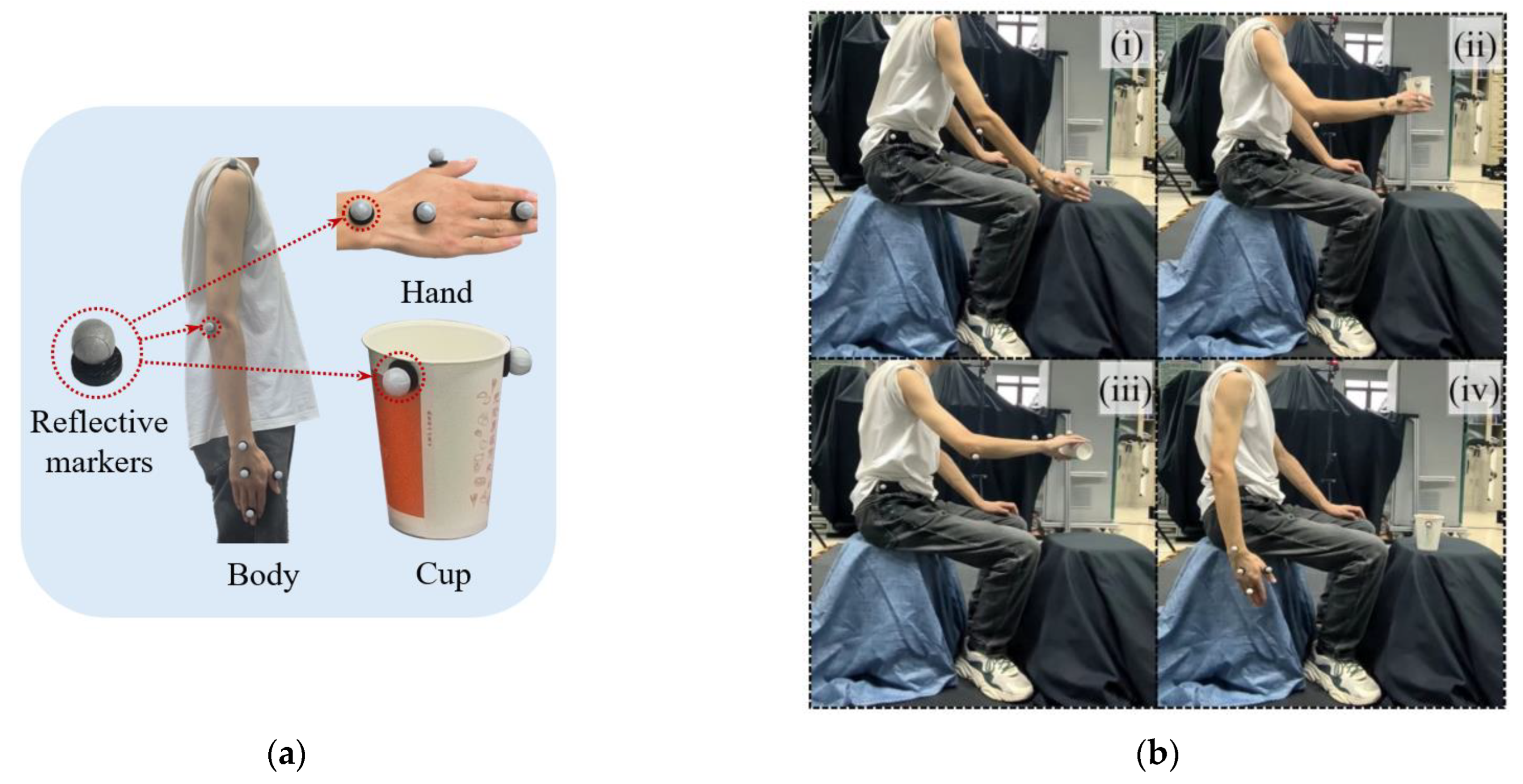

2.2. Motion Capture Recognition

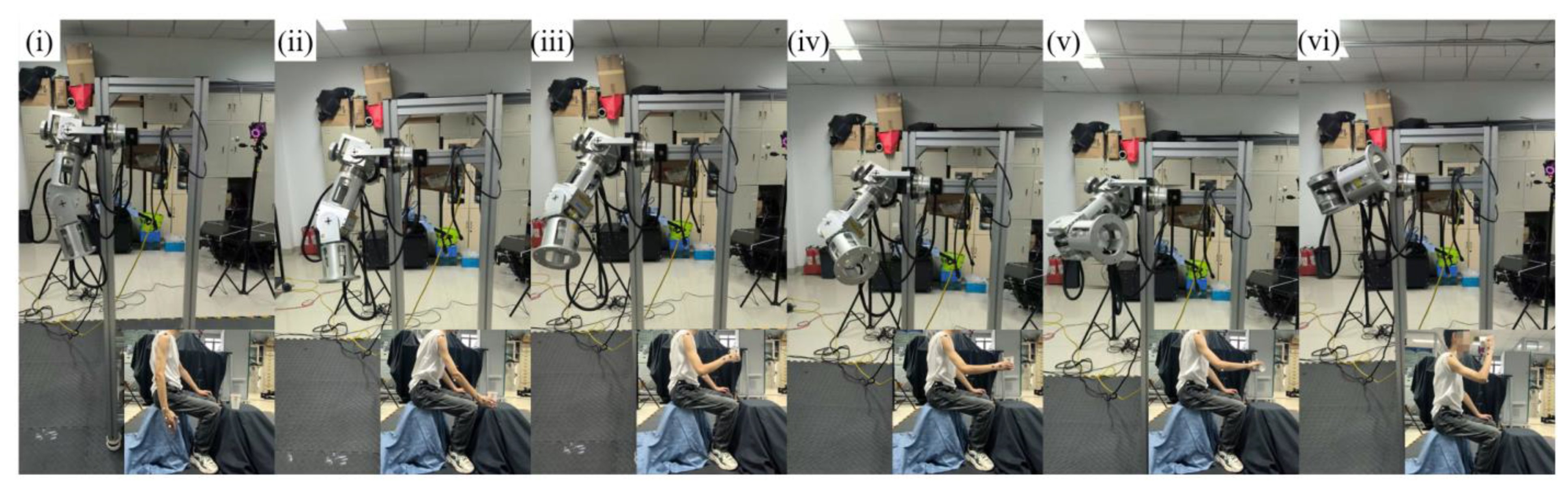

- (i) Vertically place the hand beside the cup.

- (ii) Extend the thumb, grip the cup, and lift it to a height of one palm.

- (iii) Tilt the wrist 90 degrees and maintain this position for 2 s, then rotate the wrist 90 degrees in the opposite direction to return it to the neutral position and lower the hand.

- (iv) Straighten the palm and return to the initial position.

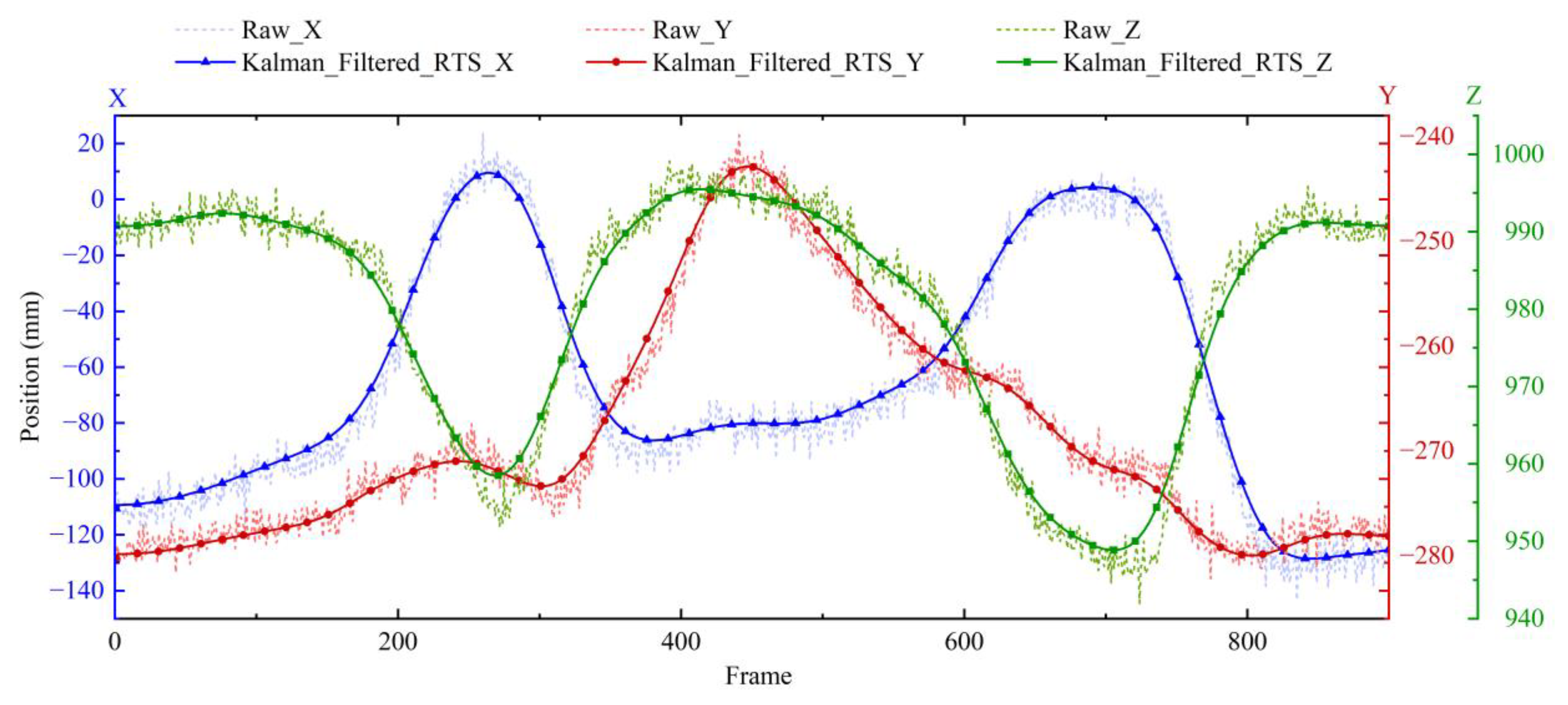

2.3. Coordinate Trajectory Processing

- (i) Prediction Step:

- (ii) Prediction Step:
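As an illustration of the kind of trajectory filtering this section refers to, the sketch below runs a scalar Kalman filter forward (prediction and update at each sample) and then a Rauch-Tung-Striebel (RTS) backward pass over a single coordinate of a marker trajectory. It is a minimal sketch under stated assumptions, not the authors' implementation: the random-walk motion model, the noise variances `q` and `r`, the initial variance `p0`, and the function name `rts_smooth` are all hypothetical choices made for the example.

```python
def rts_smooth(zs, q=0.01, r=1.0, p0=1.0):
    """Smooth a 1-D measurement sequence.

    Assumed model (hypothetical, for illustration only):
        x_k = x_{k-1} + w_k,  w_k ~ N(0, q)   (random walk)
        z_k = x_k + v_k,      v_k ~ N(0, r)   (noisy measurement)
    """
    if not zs:
        return []

    x, p = zs[0], p0                      # initial state estimate and variance
    x_pred, p_pred, x_filt, p_filt = [], [], [], []

    # Forward pass: Kalman filter
    for z in zs:
        # Prediction step: propagate the state and inflate the variance
        xp, pp = x, p + q
        # Update step: blend the prediction with the new measurement
        k = pp / (pp + r)                 # Kalman gain
        x = xp + k * (z - xp)
        p = (1.0 - k) * pp
        x_pred.append(xp)
        p_pred.append(pp)
        x_filt.append(x)
        p_filt.append(p)

    # Backward pass: RTS smoother, starting from the last filtered estimate
    xs = x_filt[:]
    for i in range(len(zs) - 2, -1, -1):
        c = p_filt[i] / p_pred[i + 1]     # smoother gain
        xs[i] = x_filt[i] + c * (xs[i + 1] - x_pred[i + 1])
    return xs


if __name__ == "__main__":
    noisy = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
    print(rts_smooth(noisy))
```

Under these assumptions, each x, y, or z coordinate of a marker would be smoothed independently. A larger ratio of `r` to `q` gives a flatter output.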

3. Methodology

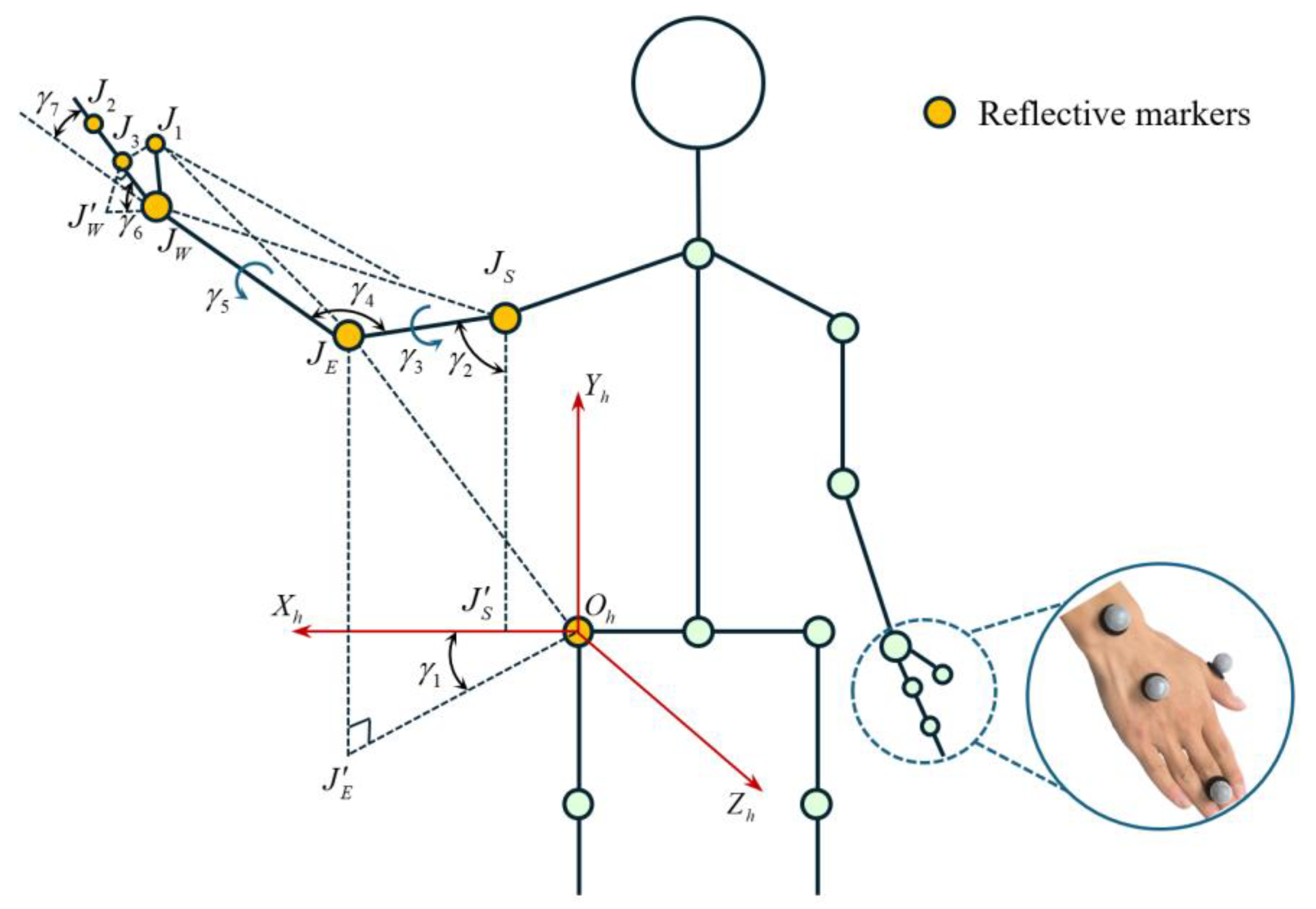

3.1. Calculation of the Human Arm Model Joint Angles

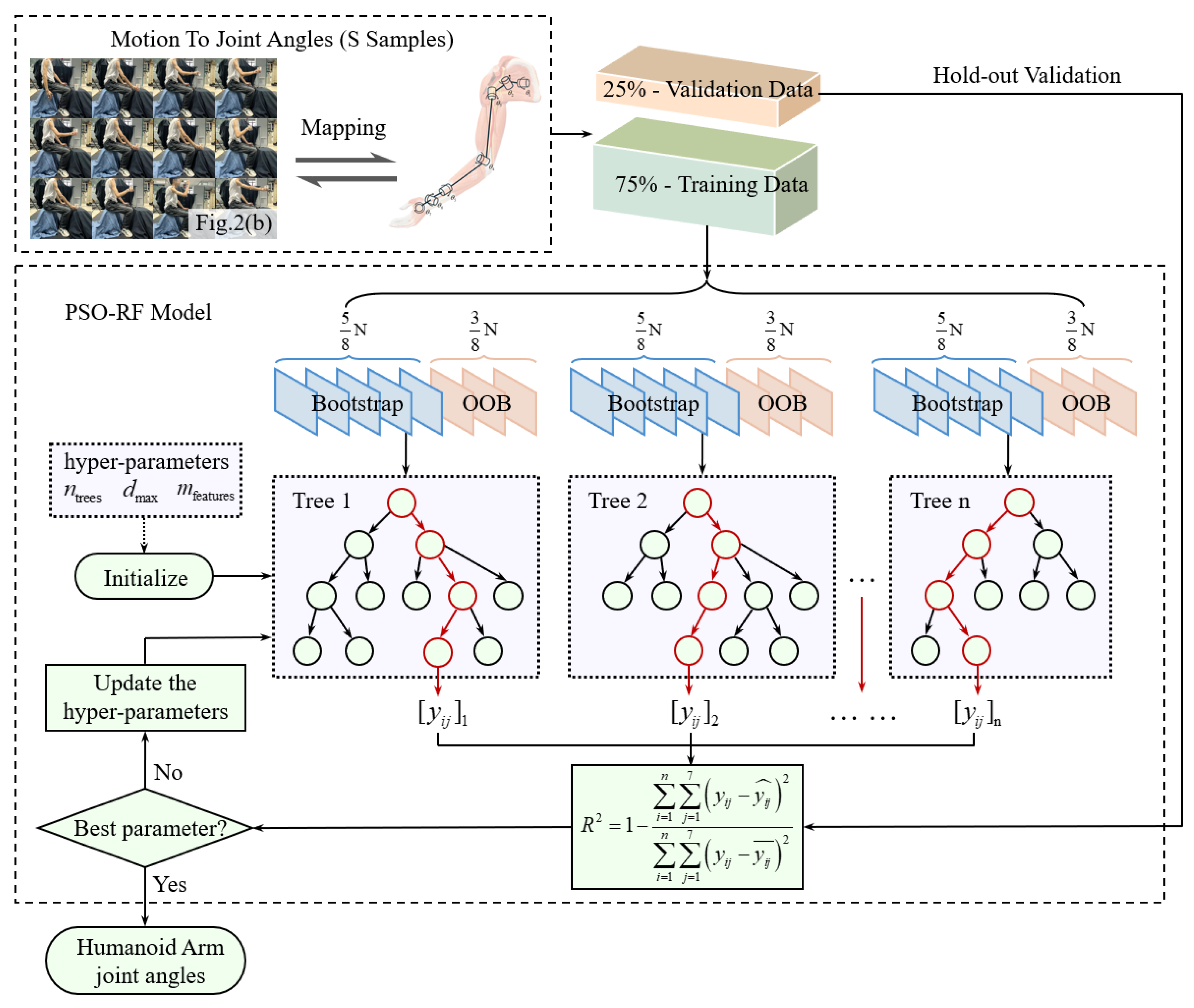

3.2. PSO-RF Algorithm

- Database establishment:

- Initial:

- Update:

| Algorithm 1. The pseudocode of the PSO-RF algorithm. |
|---|
| Pseudocode: PSO-RF algorithm for joint angles prediction (python) |
| # Particle initialization |
| NP, t_max, e, w, c1, c2 = 50, 150, 0.005, 0.7, 2.05, 2.05  # PSO |
| rf_para = {'n_trees': [50, 200], 'd_max': [5, 30], 'm_features': [0.2, 0.8]}  # RF search ranges |
| keys = ['n_trees', 'd_max', 'm_features'] |
| # Swarm optimization |
| particles = [{'pos': [randint(*rf_para['n_trees']), randint(*rf_para['d_max']), uniform(*rf_para['m_features'])], 'vel': [0, 0, 0], 'pbest': [], 'pfit': -inf} for _ in range(NP)] |
| gbest = {'pos': [], 'fit': -inf}; prev_fit = -inf |
| for t in range(t_max): |
|     for p in particles: |
|         rf = RandomForest(n_trees=round(p['pos'][0]), d_max=round(p['pos'][1]), m_features=p['pos'][2]) |
|         X_train, X_val, y_train, y_val = split(S, 0.75) |
|         rf.fit(X_train, y_train)  # Train RF model |
|         y_pred = rf.predict(X_val) |
|         R2 = 1 - sum((y_val - y_pred)**2) / sum((y_val - mean(y_val))**2)  # R2 fitness |
|         # Update bests |
|         if R2 > p['pfit']: p['pfit'], p['pbest'] = R2, list(p['pos']) |
|         if R2 > gbest['fit']: gbest['fit'], gbest['pos'] = R2, list(p['pos']) |
|     # Update particle dynamics |
|     for p in particles: |
|         for d in range(3): |
|             r1, r2 = random(), random() |
|             p['vel'][d] = w*p['vel'][d] + c1*r1*(p['pbest'][d] - p['pos'][d]) + c2*r2*(gbest['pos'][d] - p['pos'][d]) |
|             p['pos'][d] = clamp(p['pos'][d] + p['vel'][d], rf_para[keys[d]]) |
|     if abs(gbest['fit'] - prev_fit) < e: break |
|     prev_fit = gbest['fit'] |
| return gbest['pos'] |
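The loop in Algorithm 1 can be sketched in plain Python as follows. This is a minimal sketch, not the authors' implementation. The swarm settings (50 particles, 150 iterations, tolerance 0.005, w = 0.7, c1 = c2 = 2.05) and the three search ranges are taken from Algorithm 1. The names `pso_search`, `decode`, `r2_score`, and `toy_fitness` are hypothetical, and `toy_fitness` is a made-up smooth surrogate that stands in for the validation R² of a trained random forest, so that the example runs with the standard library alone.

```python
import random

# Search ranges from Algorithm 1: n_trees, d_max, m_features
BOUNDS = [(50, 200), (5, 30), (0.2, 0.8)]


def r2_score(y_true, y_pred):
    """Coefficient of determination, the fitness used in Algorithm 1."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean_y) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


def decode(pos):
    """Map a continuous particle position to RF hyperparameters."""
    return int(round(pos[0])), int(round(pos[1])), float(pos[2])


def toy_fitness(pos):
    """Hypothetical stand-in for 'train RF, return validation R2'."""
    n_trees, d_max, m_features = decode(pos)
    return (-((n_trees - 120) / 150) ** 2
            - ((d_max - 12) / 25) ** 2
            - (m_features - 0.5) ** 2)


def pso_search(fitness, bounds, n_particles=50, t_max=150, eps=0.005,
               w=0.7, c1=2.05, c2=2.05, seed=0):
    rng = random.Random(seed)
    particles = []
    for _ in range(n_particles):
        pos = [rng.uniform(lo, hi) for lo, hi in bounds]
        particles.append({"pos": pos, "vel": [0.0] * len(bounds),
                          "pbest": pos[:], "pfit": float("-inf")})
    gbest_pos, gbest_fit, prev_fit = None, float("-inf"), float("-inf")

    for _ in range(t_max):
        # Evaluate every particle and update personal and global bests
        for p in particles:
            fit = fitness(p["pos"])
            if fit > p["pfit"]:
                p["pfit"], p["pbest"] = fit, p["pos"][:]
            if fit > gbest_fit:
                gbest_fit, gbest_pos = fit, p["pos"][:]
        # Update velocities and positions, clamped to the search ranges
        for p in particles:
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                p["vel"][d] = (w * p["vel"][d]
                               + c1 * r1 * (p["pbest"][d] - p["pos"][d])
                               + c2 * r2 * (gbest_pos[d] - p["pos"][d]))
                p["pos"][d] = max(lo, min(hi, p["pos"][d] + p["vel"][d]))
        # Stop once the global best improves by less than eps
        if abs(gbest_fit - prev_fit) < eps:
            break
        prev_fit = gbest_fit
    return gbest_pos, gbest_fit


if __name__ == "__main__":
    best_pos, best_fit = pso_search(toy_fitness, BOUNDS)
    print(decode(best_pos), best_fit)
```

To use the sketch with a real model, `toy_fitness` would be replaced by a function that trains a random forest with the decoded hyperparameters on the 75% training split and returns `r2_score` on the held-out 25%. Fixing that split once, outside the fitness function, keeps the fitness values comparable across particles.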

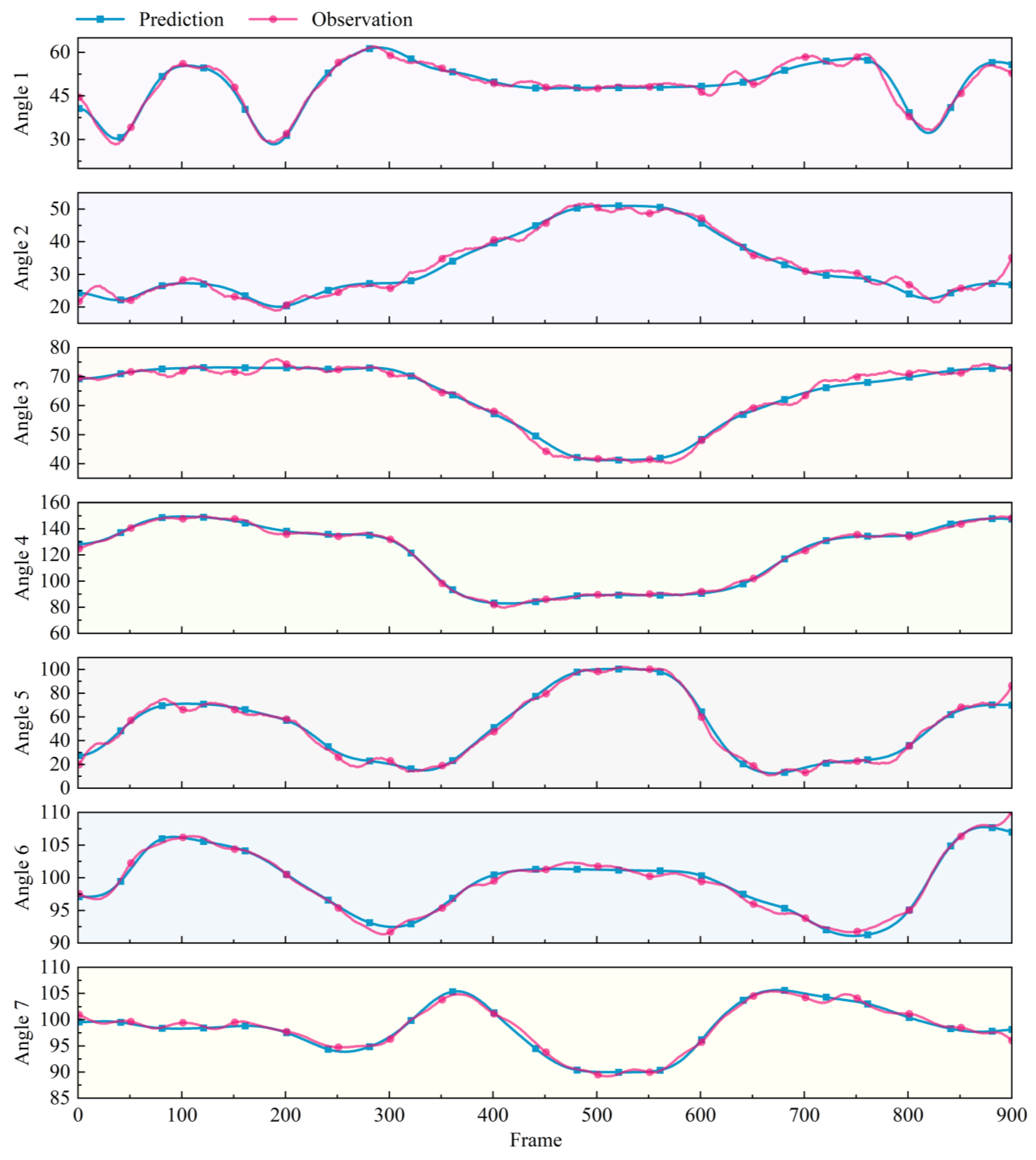

3.3. Analysis of Joint Angles Prediction Results

4. Experiment

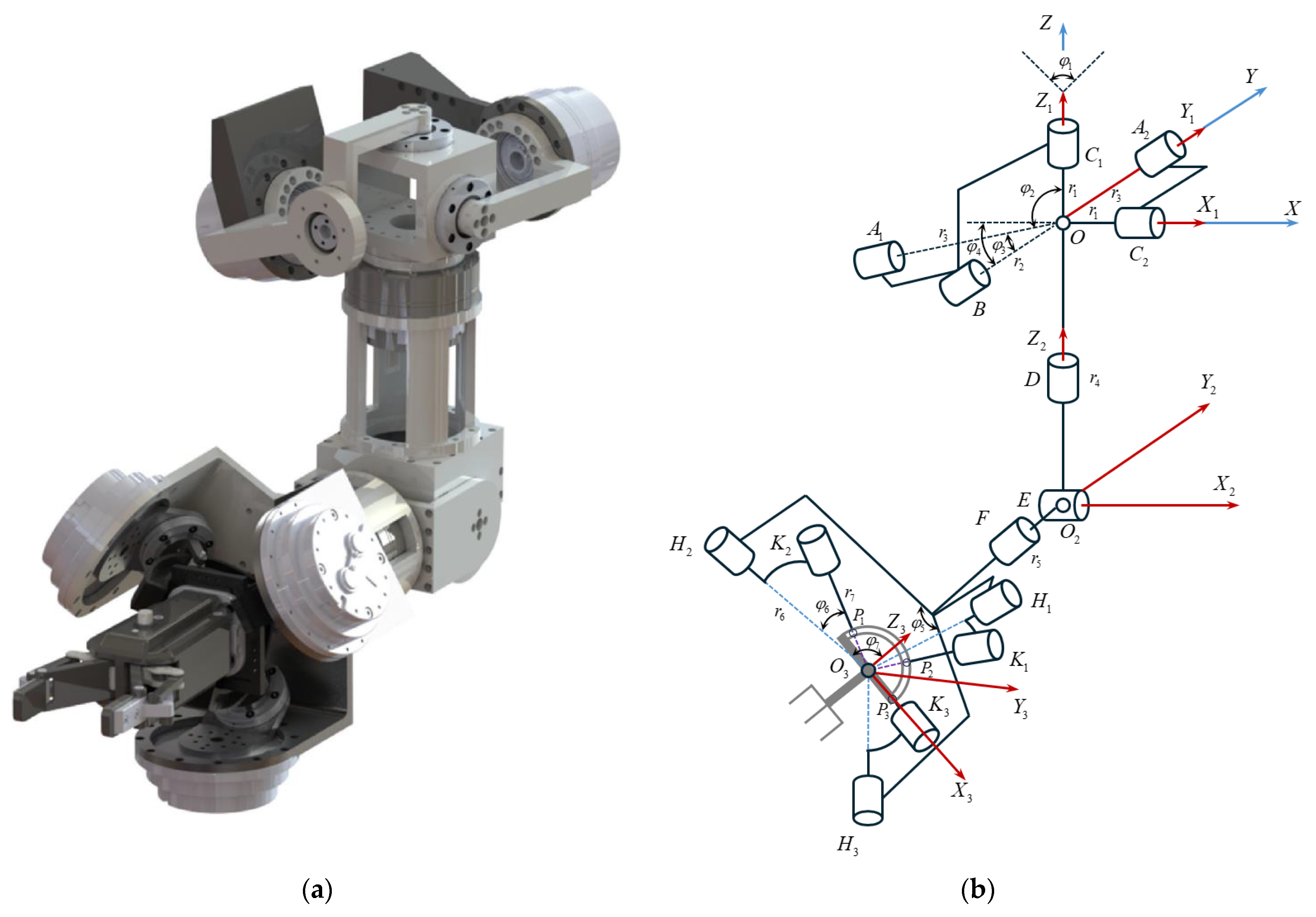

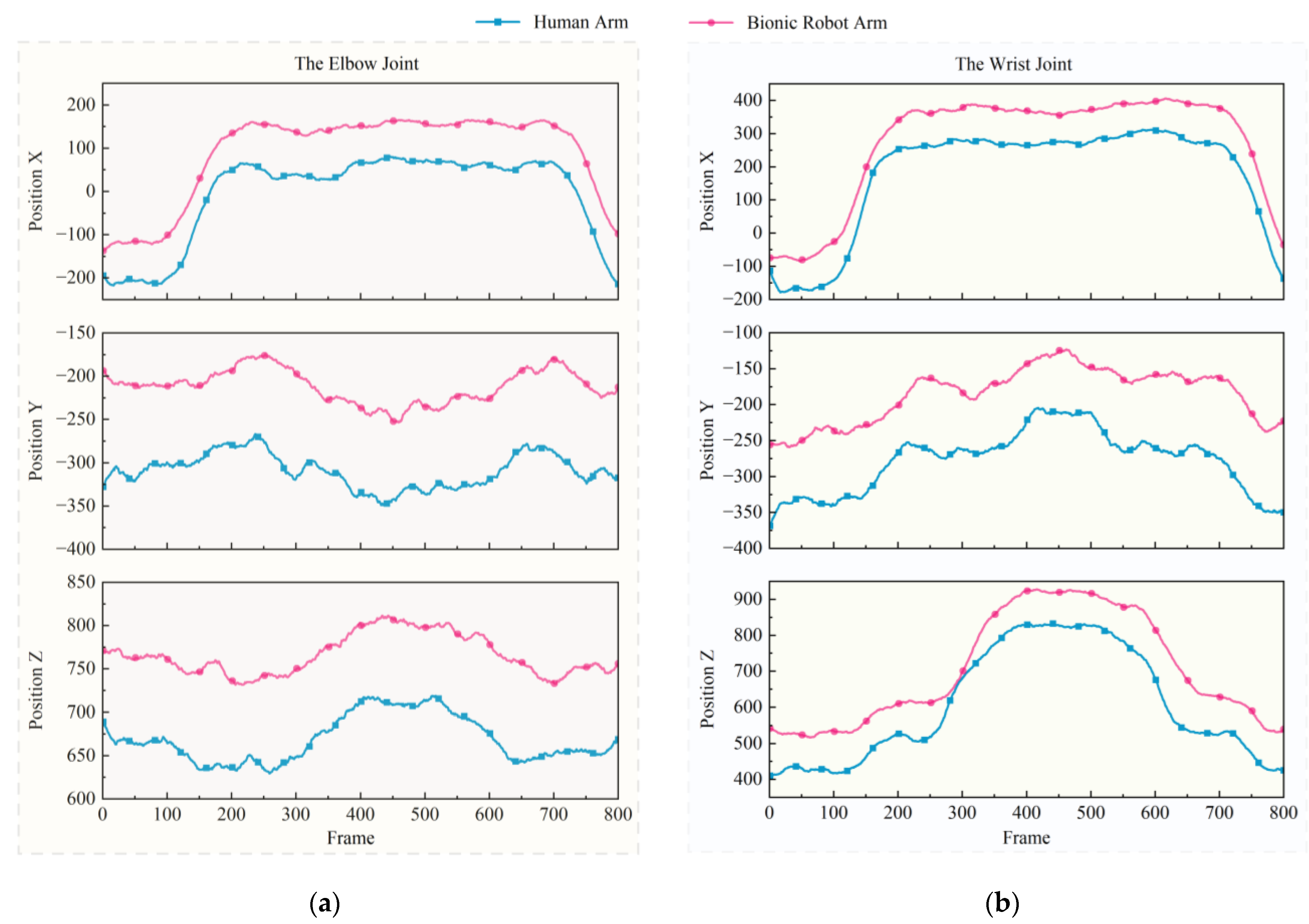

4.1. Joint Mapping Analysis

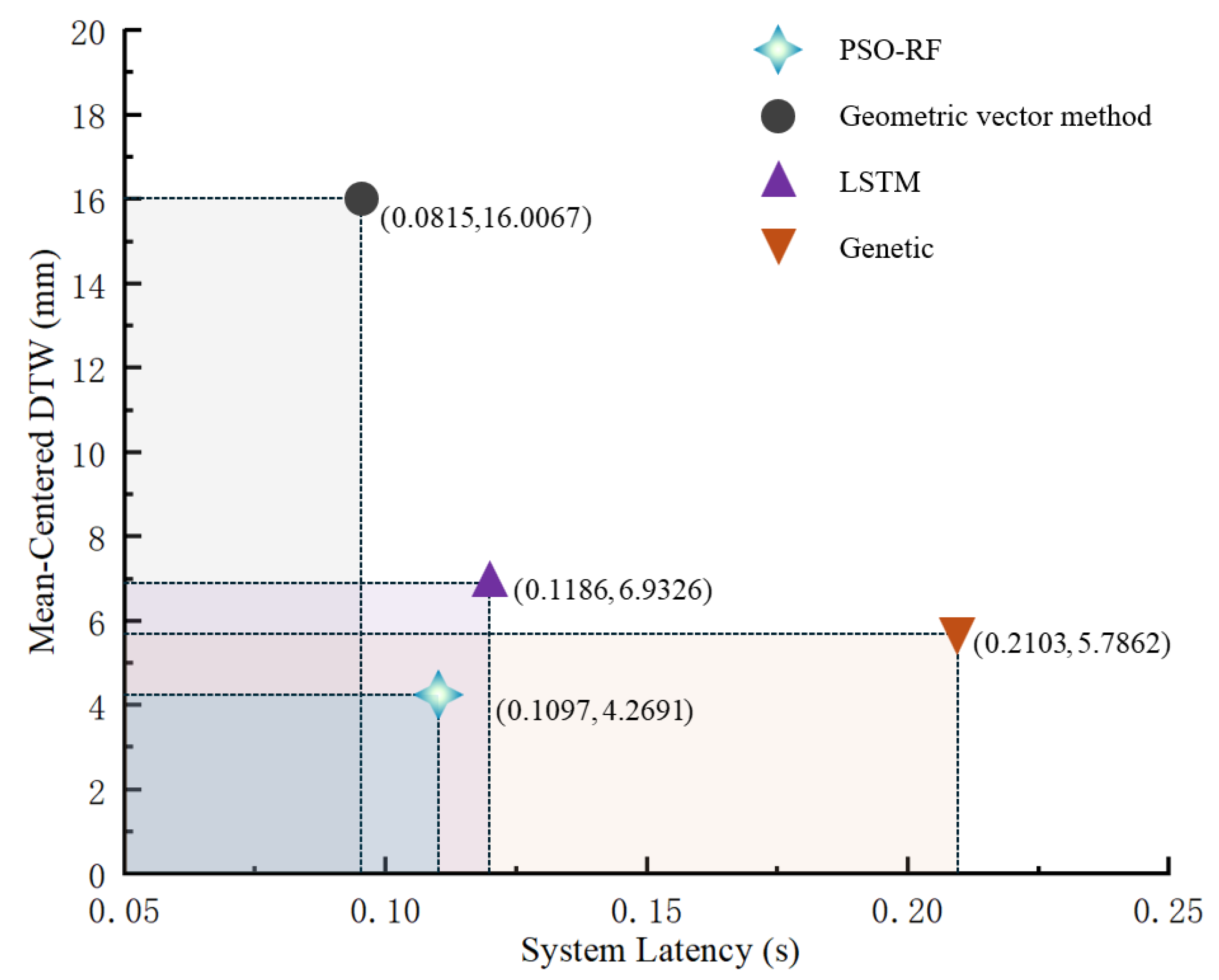

4.2. Arm–Bionic Robot Arm Motion Transfer Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DMPs | Dynamic Movement Primitives |
| EMG | Electromyography |
| HAMPs | Human Arm Motion Patterns |
| Mocap | Motion Capture |
| RTS | Rauch–Tung–Striebel |
| RF | Random Forest |
| PSO | Particle Swarm Optimization |
| DTW | Dynamic Time Warping |


| Motion | Shoulder | Elbow | Wrist |
|---|---|---|---|
| Drink water | Flexion/Extension | Flexion/Extension | Radial deviation/Ulnar deviation |
| | Internal/External Rotation | | Dorsiflexion/Palmar flexion |
| Pour water | Flexion/Extension | Flexion/Extension | Radial deviation/Ulnar deviation |
| | | | Pronation/Supination |
| Draw a circle | Flexion/Extension | Flexion/Extension | Radial deviation/Ulnar deviation |
| | Adduction/Abduction | | Dorsiflexion/Palmar flexion |
| Take the cup | Flexion/Extension | Flexion/Extension | Radial deviation/Ulnar deviation |
| Move the cup | Flexion/Extension | Flexion/Extension | Radial deviation/Ulnar deviation |
| | Adduction/Abduction | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, Y.; Zhang, H.; Zheng, G.; Hong, Y.; Wei, Z.; Sun, P. Research on Motion Transfer Method from Human Arm to Bionic Robot Arm Based on PSO-RF Algorithm. Biomimetics 2025, 10, 392. https://doi.org/10.3390/biomimetics10060392
