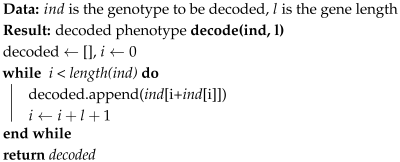

2.1. System Flexibility

System flexibility refers to a system’s ability (i) to easily adapt from being good at one task to being good at a related task and (ii) to cope with a diversity of related tasks. The paper [4] introduces a general formalism for system flexibility that allows both aspects (i) and (ii) to be defined rigorously. In this paper, we focus on aspect (i). In preparation for the benchmark defined in Section 3, we introduce some terminology and cost notions in this section. We follow [3], which has studied an evolutionary algorithm from the viewpoint of system flexibility for the pole balancing problem.

We denote the system configuration space by $X$ and a concrete system configuration by $x \in X$. As our application example we consider an orthogonal cutting process and the problem of adapting process parameters when a material change occurs. A cutting machine with the cutting tool forms a system that can perform the cutting process. The input of the cutting process is a workpiece of some metal, and the output is the workpiece with the desired amount of material removed. Here, the system configuration space is given by the vector space of all possible values for the process parameters.

We further consider the system to be equipped with an evolutionary algorithm $A$ that the system uses to optimize process parameters. Since evolutionary algorithm $A$ and how it operates on system configuration space $X$ are the main concerns in this paper, we formally denote the system by a tuple $(X, A)$. In our example, the task $T$ that the system has to perform, for a specific type of metal, is to optimally cut the workpiece according to some feasibility criteria and multiple objectives. For our purposes, we can consider this to be equivalent to $A$ solving the associated multi-objective optimization problem. The cost in terms of objective function evaluations to solve this problem from scratch is denoted by $c(T)$.

To measure the ability of evolutionary algorithm $A$ to adapt process parameters when a material change occurs, we consider a task context of $n$ multi-objective optimization problems $T_1, \dots, T_n$, each associated with a different type of metal. For each pair $(T_i, T_j)$, where $i \neq j$, the adaption cost $c(T_j \mid T_i)$ denotes the cost in terms of objective function evaluations to solve $T_j$ given that the algorithm has previously solved $T_i$. To measure the adaption capability of $A$ with regard to the task context, we can consider the worst-case adaption cost given by

$$\max_{i \neq j} c(T_j \mid T_i),$$

the average-case adaption cost given by

$$\frac{1}{n(n-1)} \sum_{i \neq j} c(T_j \mid T_i),$$

or the best-case adaption cost given by

$$\min_{i \neq j} c(T_j \mid T_i).$$

The lower the adaption cost, the better the considered algorithm is at exploiting information gained on a previous optimization task for a new optimization task. As reference values, we also consider the cost to solve the optimization task from scratch in the worst, average, or best case.
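The three aggregate costs can be illustrated with a minimal Python sketch. It assumes the adaption costs have already been measured and stored in a square table whose entry in row i and column j is the number of objective function evaluations needed to solve task j after task i; the function name `adaption_cost_summary` and all numbers are hypothetical and serve only as an illustration.

```python
from itertools import permutations


def adaption_cost_summary(cost):
    """Aggregate pairwise adaption costs over a task context.

    cost[i][j] is the (assumed, pre-measured) number of objective function
    evaluations needed to solve task j after task i has been solved.
    Diagonal entries are ignored because only pairs with i != j count.
    """
    n = len(cost)
    # All ordered pairs (i, j) with i != j.
    pairs = [cost[i][j] for i, j in permutations(range(n), 2)]
    return {
        "worst": max(pairs),
        "average": sum(pairs) / len(pairs),
        "best": min(pairs),
    }


# Hypothetical evaluation counts for a task context of n = 3 metals.
cost = [
    [0, 400, 900],
    [350, 0, 700],
    [800, 650, 0],
]
summary = adaption_cost_summary(cost)
print(summary)
```

The same aggregation applies unchanged to any other cost measure, as long as it is recorded per ordered pair of tasks.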

Note that the above definitions are not specific to evolutionary algorithms but can be used for any optimization algorithm. We only have to provide measures for the cost of solving a task from scratch and for the adaption cost that are suitable for the considered optimization algorithm. The specific cost measures considered in this paper are discussed in Section 5.

2.2. Orthogonal Metal Cutting and the Extended Oxley Model

Orthogonal metal cutting is a machining operation where the cutting edge of the tool is perpendicular to the direction of relative motion between the tool and the workpiece surface. The process involves the removal of material from the workpiece by the cutting tool in a series of small, discrete steps, as the tool chips away at the workpiece material through plastic deformation. The process is known for producing high-quality, precise cuts but is also challenging to optimize due to the complex interactions among the cutting tool, the workpiece material, and the machining environment.

Predictive models are extensively developed and used in the process planning phase in order to enhance the product quality and to optimize the process parameters with respect to tool life, surface finish, part accuracy and beyond. Predictive models are divided into analytical models, which describe an idealized underlying physics; empirical models, which are derived from experimental observations; and numerical models, such as the finite element method (FEM), which take into account the precise multiphysical phenomena involved in the cutting process [11]. Due to the high costs involved in experimentally determining the empirical models and the high computational power required for numerical methods, they are seldom used in an industrial context. In contrast, analytical models such as [12] are fast and assist in developing practical tools for the industry, albeit with the drawback of not capturing the multidimensional physics. Recent advancements in analytical models have, however, made their predictions more realistic. Due to its simplicity and negligible numerical costs, the analytical model initially proposed by [12], together with the extension from [5], is used in this study. The work of [5] was implemented as a Python package and made available on GitHub (https://github.com/pantale/OxleyPython, accessed on 30 September 2024) by the authors [13].

Oxley’s theory exploits the slip line field theory coupled with thermal phenomena to predict the cutting forces, temperatures, and stresses and strains in the workpiece. The flow stress $\sigma$ in the workpiece, which depends on the magnitude of plastic deformation $\varepsilon$, rate of plastic deformation $\dot{\varepsilon}$, and current temperature $T$ of the material, plays a central role in the prediction model. To account for a wide range of materials, a Johnson–Cook material flow rule (Equation (4)) that multiplicatively accounts for each of the influencing phenomena is used. A material is then fully defined by the following material parameters: plastic hardening parameters $A$, $B$, and $n$; plastic deformation rate sensitivity parameters $C$ and $\dot{\varepsilon}_0$; thermal softening exponent $m$; and the material’s melting point $T_m$:

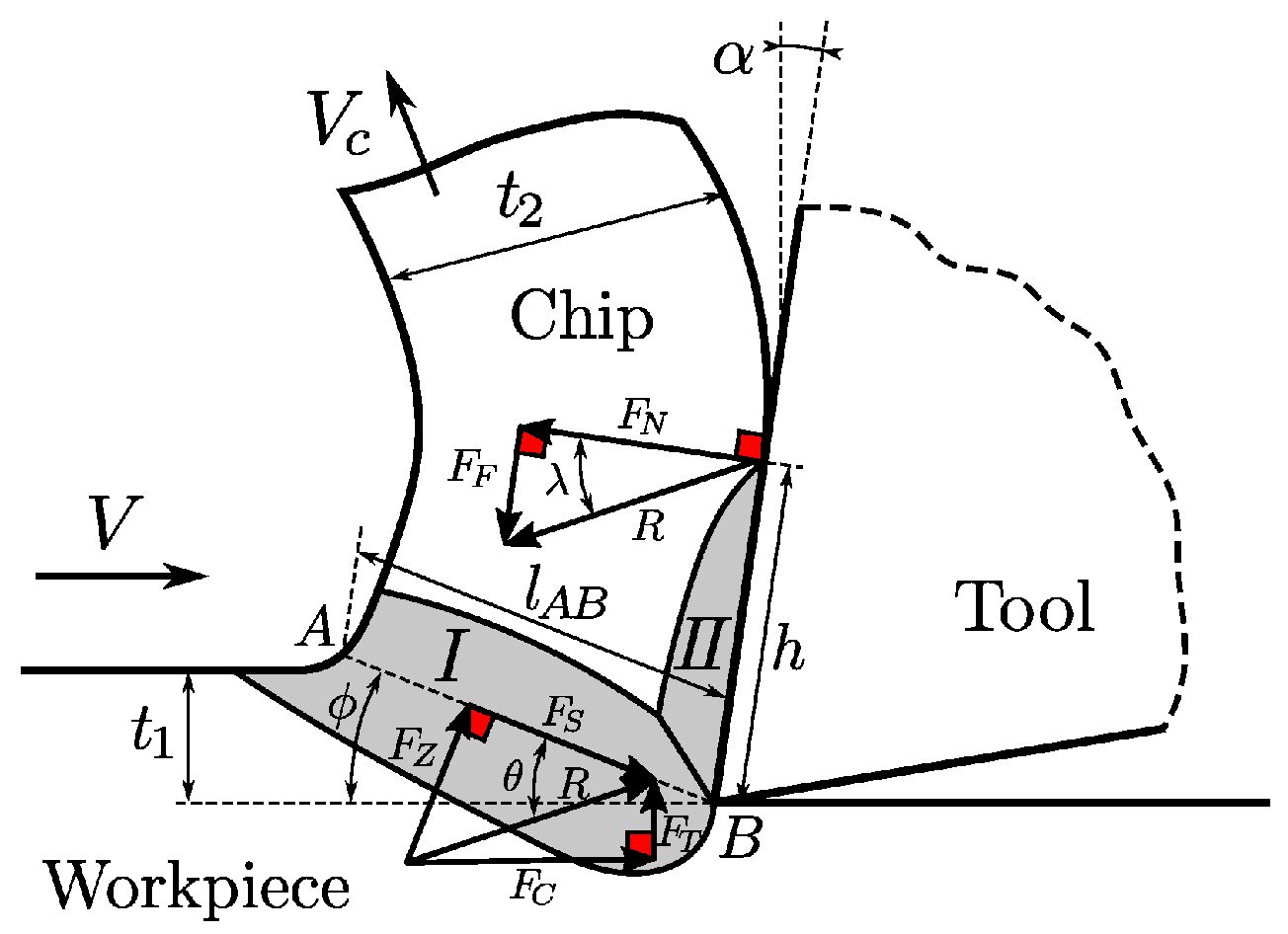
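As a rough illustration of the multiplicative structure described above, the following Python sketch evaluates a flow stress under the assumption that Equation (4) takes the standard Johnson–Cook form, i.e., a hardening term times a rate term times a thermal softening term. The reference temperature `temp_ref`, the clamping of the homologous temperature, the function name, and all parameter values are assumptions made for this sketch and are not taken from the paper or from the OxleyPython package.

```python
import math


def johnson_cook_flow_stress(strain, strain_rate, temp,
                             A, B, n, C, strain_rate_ref, m,
                             temp_melt, temp_ref=293.15):
    """Flow stress under the standard Johnson-Cook law (assumed form).

    strain_rate and strain_rate_ref must be positive. temp_ref is an
    assumed reference (room) temperature in the same unit as temp.
    """
    hardening = A + B * strain ** n
    rate = 1.0 + C * math.log(strain_rate / strain_rate_ref)
    # Homologous temperature, clamped to [0, 1] so the power stays real
    # below temp_ref and the stress vanishes at the melting point.
    homologous = (temp - temp_ref) / (temp_melt - temp_ref)
    homologous = min(max(homologous, 0.0), 1.0)
    softening = 1.0 - homologous ** m
    return hardening * rate * softening


# Hypothetical material parameters (illustrative only, stresses in MPa,
# temperatures in K).
params = dict(A=500.0, B=300.0, n=0.25, C=0.02,
              strain_rate_ref=1.0, m=1.0, temp_melt=1800.0)

sigma_ref = johnson_cook_flow_stress(0.0, 1.0, 293.15, **params)
sigma_hot = johnson_cook_flow_stress(0.5, 1.0e3, 900.0, **params)
sigma_melt = johnson_cook_flow_stress(0.5, 1.0e3, 1800.0, **params)
```

At zero plastic strain, reference strain rate, and reference temperature, the expression reduces to the parameter $A$, which is a quick sanity check for any implementation of this law.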

Figure 1 describes the analytical orthogonal cutting model. The material in the vicinity of the tool tip is divided into a primary shear zone (I) of length $l_{AB}$, where the material experiences compressive forces and initiates the plastic deformation along the line AB leading to chip formation, and a secondary shear zone (II), where further plastic deformation is induced due to the friction of the chip and tool contact. The workpiece is fed at a velocity of $V$ against the tool to remove a layer of thickness $t_1$, resulting in a chip of thickness $t_2$ at a velocity of $V_c$. The primary task of Oxley’s theory is to identify three internal variables that depend on the shear angle $\phi$, the ratio of $l_{AB}$ to the thickness of the primary shear zone, and the ratio of chip thickness $t_2$ to the thickness of the secondary zone, by solving a system of three nonlinear equations. The cutting force $F_c$, the advancing force $F_t$, and the rise in temperature in the individual zones are then computed from the internal variables. Readers are referred to [13] for a detailed description of the algorithm. We stress once more that, due to the simplicity of the model, one clearly has to expect a gap between the extended Oxley model and realistic simulations. Nevertheless, a benchmark based on the extended Oxley model can provide valuable insights for adapting process parameters in manufacturing contexts: optimization methods that already need many objective function evaluations to adapt parameters for the Oxley model do not need to be considered in more realistic settings.

The benchmark in Section 3 is an implementation of the formalism for the orthogonal cutting process and the problem of adapting process parameters when a material change occurs, based on the extended Oxley model. In the formulation of Section 2.1, system configuration space $X$ is given by the vector space of all possible values for the process parameters tool speed $V$, tool rake angle $\alpha$, and the cutting depth in one step $t_1$ (see Figure 1).

2.3. NSGA-II: Non-Dominated Sorting Genetic Algorithm II

Many real-world problems require the optimization of two or more objectives at the same time, resulting in the class of multi-objective optimization problems [10,14]. Different objectives are often contradictory. Therefore, instead of searching for one solution that optimizes all objectives, multi-objective optimization methods search for a set of non-dominated solutions, the Pareto front.

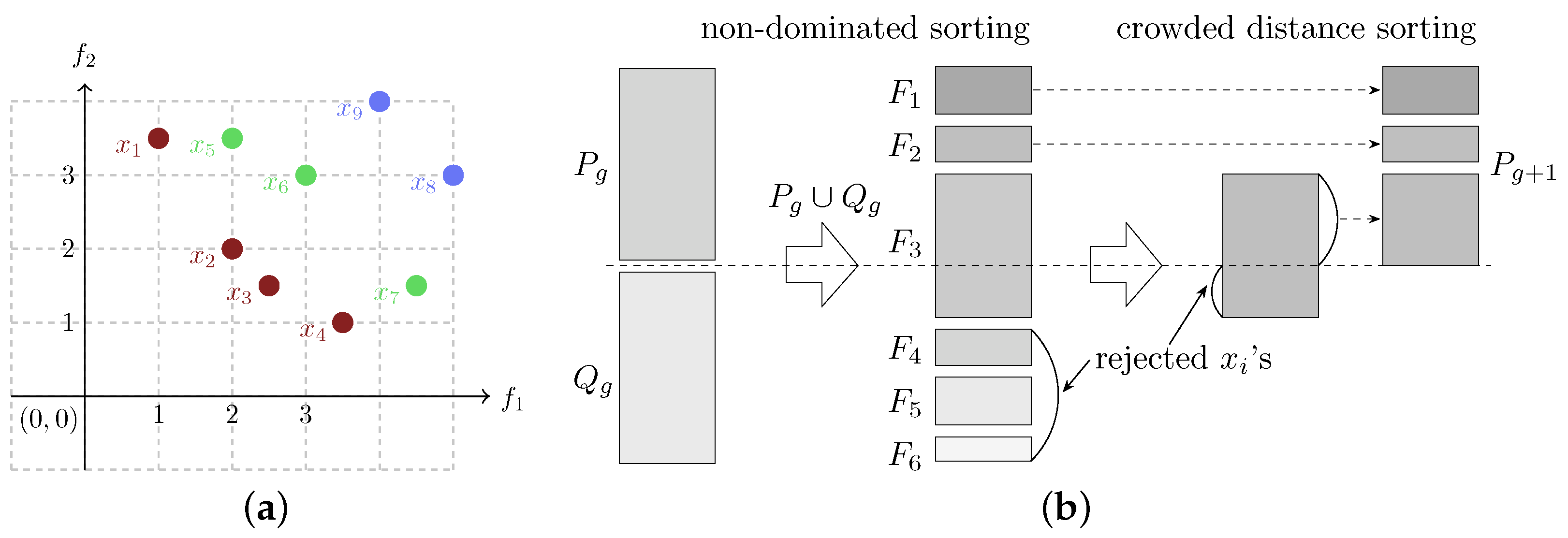

Definition 1. Given a set of solutions $X$, a Pareto front $P$ is the set of non-dominated solutions from $X$. A solution $x \in X$ is non-dominated if and only if, for all $y \in X$ with $y \neq x$, $x$ is better than $y$ in at least one objective.

The Pareto front is thus the set of trade-off solutions whose objectives cannot be improved without negatively impacting one or more of the other objectives. Given an optimized Pareto front, the decision-making process for choosing the solution to use will depend on each specific application.

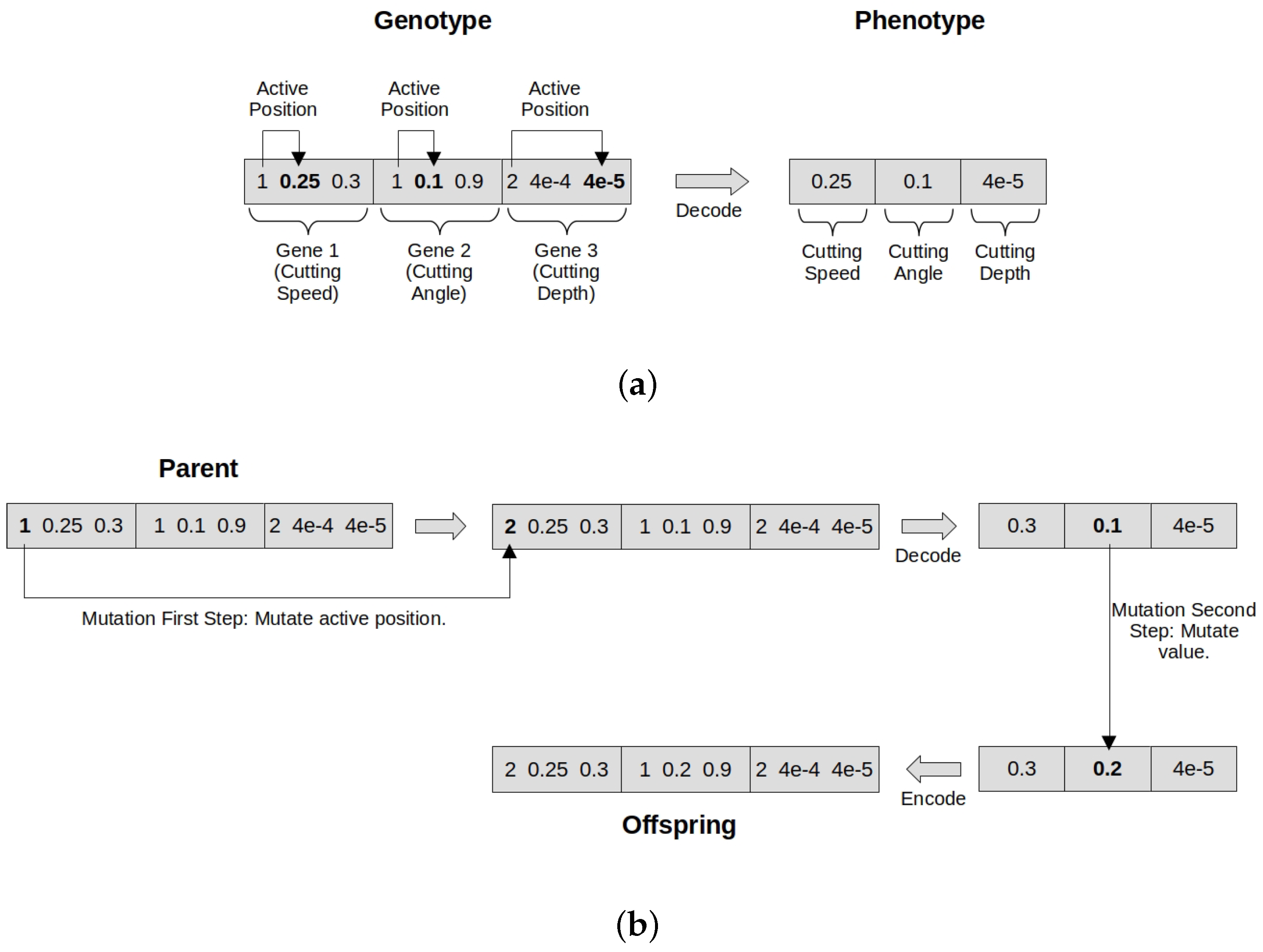

As evolutionary algorithms work with populations of solutions, they are often employed for this class of problems [10,14]. Among them, the Non-Dominated Sorting Genetic Algorithm II (NSGA-II), proposed by [15], is a popular multi-objective optimization algorithm. The NSGA-II algorithm has an overall functionality similar to that of a standard genetic algorithm. An initial population of candidate solutions (individuals) is randomly initialized. Individual chromosomes are represented as a vector of either integers or floats. In each generation, individuals are evaluated and assigned a fitness score. Tournaments are performed to select individuals based on this score, and the selected individuals are subject to the crossover and mutation operators until a new population is formed. The search continues for a maximum number of generations or until a desired solution is found. The difference in NSGA-II lies mainly in the selection mechanism, which is supported by two other mechanisms: non-dominated sorting and crowding sorting.

Definition 2. The non-dominated sorting of a population $P$ corresponds to sorting each individual into a non-domination class. The first non-domination class $F_1$ is composed of the non-dominated individuals of $P$, the second non-domination class $F_2$ is composed of the non-dominated individuals of $P$ without the individuals in $F_1$, and so on, until no individual is left in $P$.

Figure 2a illustrates the concepts of Pareto front and non-domination classes.
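The peeling procedure behind non-dominated sorting can be sketched in a few lines of Python. The sketch assumes that all objectives are minimized and uses the common Pareto dominance convention (no worse in every objective and strictly better in at least one); it is the simple repeated-filtering variant rather than the fast non-dominated sort of Deb et al. [15], and the function names and example points are hypothetical.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (minimization assumed)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def non_dominated_sort(objectives):
    """Split individuals into non-domination classes.

    Returns a list of classes; each class is a list of indices into
    `objectives`. The first class is the Pareto front of the population.
    """
    remaining = set(range(len(objectives)))
    classes = []
    while remaining:
        # Individuals not dominated by any other remaining individual.
        front = [
            i for i in sorted(remaining)
            if not any(dominates(objectives[j], objectives[i])
                       for j in remaining if j != i)
        ]
        classes.append(front)
        remaining -= set(front)
    return classes


# Hypothetical population with two objectives to be minimized.
points = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
classes = non_dominated_sort(points)
print(classes)
```

This variant re-scans the remaining individuals for every class, which is adequate for small populations; the bookkeeping in [15] avoids the repeated comparisons.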

Definition 3. The crowded sorting of a non-domination class is the sorting of individuals in descending order of the crowding distance. The crowding distance of an individual is the volume in the objective space around it that is not covered by any other solution, calculated as the perimeter of the cuboid that has the nearest neighbors in the objective space as vertices.

Definition 4. Given two individuals $i$ and $j$, with non-domination classes $r_i$ and $r_j$ and crowding distances $d_i$ and $d_j$, respectively, the crowded comparison operator $\prec_n$ defines the partial order $i \prec_n j$ if and only if ($r_i < r_j$) or ($r_i = r_j$ and $d_i > d_j$).
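The crowding distance and the crowded comparison can be sketched as follows. The sketch follows the usual formulation from Deb et al. [15], in which the distance is accumulated per objective from the two nearest neighbors and normalized by the objective range, and boundary individuals receive an infinite distance; the function names and example values are hypothetical, and the input class is assumed to be non-empty.

```python
def crowding_distances(front):
    """Crowding distance of each objective vector in one non-domination class."""
    n = len(front)
    dist = [0.0] * n
    for k in range(len(front[0])):
        # Sort individuals along objective k.
        order = sorted(range(n), key=lambda i: front[i][k])
        lo, hi = front[order[0]][k], front[order[-1]][k]
        # Boundary individuals are always preferred.
        dist[order[0]] = dist[order[-1]] = float("inf")
        if hi == lo:
            continue  # objective k does not separate the individuals
        for pos in range(1, n - 1):
            i = order[pos]
            gap = front[order[pos + 1]][k] - front[order[pos - 1]][k]
            dist[i] += gap / (hi - lo)
    return dist


def crowded_less(rank_a, dist_a, rank_b, dist_b):
    """True if a is preferred over b: lower class, or same class and less crowded."""
    return rank_a < rank_b or (rank_a == rank_b and dist_a > dist_b)


# Hypothetical non-domination class with two objectives.
front = [(1.0, 5.0), (2.0, 3.0), (4.0, 1.0)]
dist = crowding_distances(front)
print(dist)
```

In a binary tournament, `crowded_less` would decide which of two randomly drawn individuals is kept as a parent.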

By considering both non-dominated sorting and crowded sorting in the crowded comparison operator, NSGA-II balances elitism and preservation of diversity of individuals inside a Pareto front. More detailed explanations of these metrics can be found in Deb et al. [15]. With these definitions, the NSGA-II algorithm differs from a standard genetic algorithm in the following aspects: (1) The crowded comparison operator is used when comparing individuals for selection in the tournament. (2) Given a population $P$, at each generation, a set $Q$ of offspring is generated via selection and application of genetic operators. The union of $P$ and $Q$ is then sorted into non-domination classes, and each class is internally sorted according to the crowding distances. The non-domination classes are then added to the next population, in order, until the population size is reached. If adding the next non-domination class would exceed the population size, only as many of its individuals as still fit are chosen, in decreasing order of crowding distance. The process is illustrated in Figure 2b. Algorithm 1 summarizes the optimization procedure of NSGA-II.
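The survival step in aspect (2) can be sketched in Python as below. The sketch assumes that the non-domination classes of the combined parent and offspring population and the crowding distance of every individual have already been computed; the function name `select_next_population`, the index-based representation, and the example values are hypothetical.

```python
def select_next_population(classes, crowding, pop_size):
    """Fill the next population class by class.

    classes:  non-domination classes as lists of individual indices,
              ordered from best to worst class (assumed precomputed).
    crowding: mapping from individual index to crowding distance
              (assumed precomputed within each class).
    """
    survivors = []
    for members in classes:
        if len(survivors) + len(members) <= pop_size:
            # The whole class fits.
            survivors.extend(members)
        else:
            # Only part of the class fits: keep the least crowded individuals.
            free = pop_size - len(survivors)
            ranked = sorted(members, key=lambda i: crowding[i], reverse=True)
            survivors.extend(ranked[:free])
            break
    return survivors


# Hypothetical combined population of 7 individuals, next population of 5.
classes = [[0, 1, 2], [3, 4, 5], [6]]
crowding = {0: float("inf"), 1: 2.0, 2: float("inf"),
            3: float("inf"), 4: 0.4, 5: float("inf"), 6: float("inf")}
next_pop = select_next_population(classes, crowding, pop_size=5)
print(next_pop)
```

Because the parents take part in the sorting together with the offspring, good individuals survive across generations, which is the elitism referred to above.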

| Algorithm 1: Pseudocode for NSGA-II. |

![Biomimetics 10 00663 i001 Biomimetics 10 00663 i001]() |